Abstract

Image-guided treatment adaptation is a game changer in oncological particle therapy (PT), especially for younger patients. The purpose of this study is to present a cycle generative adversarial network (CycleGAN)-based method for synthetic computed tomography (sCT) generation from cone beam CT (CBCT) towards adaptive PT (APT) of paediatric patients. Firstly, 44 CBCTs of 15 young pelvic patients were pre-processed to reduce ring artefacts and rigidly registered to same-day CT scans (i.e., verification CT scans, vCT scans), and then input to the CycleGAN network (employing either Res-Net or U-Net generators) to synthesise sCT. In particular, 36 and 8 volumes were used for training and testing, respectively. Image quality was evaluated qualitatively and quantitatively using the structural similarity index metric (SSIM) and the peak signal-to-noise ratio (PSNR) between registered CBCT (rCBCT) and vCT and between sCT and vCT to evaluate the improvements brought by CycleGAN. Despite limitations due to the sub-optimal input image quality and the small field of view (FOV), the quality of sCT was found to be overall satisfactory from a quantitative and qualitative perspective. Our findings indicate that CycleGAN is a promising approach for producing sCT scans with acceptable CT-like image texture in paediatric settings, even when CBCT scans with a narrow FOV are employed.

1. Introduction

Besides biological advantages, compared to conventional photon radiotherapy, particle therapy (PT) offers better conformity to the tumour target volume due to its sharp and steep dose gradients [1,2,3]. This feature makes particles the perfect candidate for paediatric patients with a long life expectancy, as they allow us to reduce long-term radiation-induced side effects. However, if on the one hand the enhanced conformal dose distribution resulting from the ballistic selectivity of particles is beneficial for the sparing of surrounding organs at risk (OARs), on the other hand it makes PT more susceptible to anatomical changes [4]. These are likely to occur as a combination of tumour shrinkage, weight loss, organ filling variation and displacement changes throughout the course of the treatment [5]. In particular, the pelvic and abdominal regions are highly prone to inter-fractional anatomical changes, due to the presence of organs with variable degrees of filling, including the gastrointestinal tract, bowel, rectum and bladder [6,7,8,9]. These variations can lead to reduced tumour coverage and/or healthy tissues overdosing, possibly undermining the oncological and toxicity outcomes of treatment [8,9].

To preserve the benefits of PT amid anatomical changes, the most common approaches include anatomical robust optimisation and plan adaptation [10]. The former considers non-rigid anatomical variations during the optimisation process, at the cost of an increased integral dose to healthy tissues [10]. Regarding the latter approach, to mitigate the possible detrimental effect of patient tissue changes on plan dosimetry, repeat computed tomography (CT) scans, also known as verification CT scans (vCT scans), are acquired during the course of treatment to recompute the plan on the current patient anatomy and trigger replanning if dosimetric objectives are no longer met [3,4]. In particular, it is estimated that about one third of PT patients will require at least one replanning during the course of their treatment [1]. However, the acquisition of vCT scans requires a strong clinical motivation, as they increase workload, in terms of room occupancy and staff time, and expose the patient to additional imaging doses [11,12]. Limiting the imaging dose is particularly important in sensitive patients such as paediatric patients with a good prognosis and life expectancy, to reduce additional complications to OARs as well as the risk of developing secondary malignancies [3,13].

Acquisition of vCT scans is not the only way to obtain updated information on a patient’s current anatomy: the establishment of in-room, pre-treatment volumetric imaging techniques for setup correction and image guidance has fostered the interest of the scientific community in their use for online dose calculation. Magnetic resonance imaging (MRI) guidance has gained popularity in photon radiotherapy, while in PT it is still in its infancy and limited to pre-clinical research settings, due to technical challenges related to the interaction of MRI with protons [11,14]. CT on rails is also promising, but increases the time and complexity of the treatment and constitutes an additional cost for the centre [15]. In contrast, cone beam CT (CBCT) is far more widely available owing to its lower cost and compactness [16], and is routinely used worldwide for the daily verification and correction of the patient position. Since it is a volumetric imaging modality, it provides updated daily information on the patient’s anatomy, shortly before radiation delivery and in the treatment position [8].

Although CBCT imaging has the potential to enable treatment adaptation to the observed anatomical variations, its inherently lower image quality and contrast-to-noise ratio compared to CT, and the consequent poor Hounsfield unit (HU) accuracy, prevent its direct use for dose recalculation purposes in adaptive pathways [3,8,15,17,18,19]. In particular, the most relevant artefacts generally affecting CBCT images are beam hardening, aliasing, ring artefacts and the partial volume effect, as well as others arising from noise and scatter [20,21]. These can be prevented by means of pre-processing hardware solutions (e.g., bowtie filters, anti-scatter grids) or corrected via software post-processing methods either in the projection domain (i.e., on planar raw images, before reconstruction) or in the image domain (e.g., on the 3D volume, after reconstruction) [22,23]. Post-processing methods in the image domain are the most common way to compensate for CBCT deficiencies and enable CBCT-based adaptive PT (APT) workflows [2], and can be roughly divided into two categories: analytical (also known as conventional) and data-driven, i.e., deep learning (DL)-based, methods [22,24]. Amongst analytical methods, the most established technique to generate CT-like CBCT images, also denoted as synthetic CT (sCT), is deformable image registration (DIR) to planning CT (pCT) [8], alone [25,26] or in combination with other approaches, including HU mapping, histogram matching, multilevel thresholding and empirical look-up table calibration [27,28].

Due to the recent unprecedented rise in artificial intelligence (AI) applications in healthcare and radiation oncology [29], DL-based methods have gained increasing popularity as a valuable tool for medical image synthesis, including CBCT to CT translation [8,11,22]. According to several authors, e.g., Dayarathana and colleagues, DL-based models can be broadly divided into supervised and unsupervised groups in terms of the learning method [30]. In computer vision, there is a large variety of models that are particularly utilised for image reconstruction and image synthesis [31]. Thanks to the recent development and advancements of generative adversarial networks (GANs) [32], it is feasible to synthesise images from input noise by simultaneously training two adversarial networks, a generator and a discriminator, as previously achieved by [33]. Another application of generative models that has attracted attention in recent years is image-to-image translation, that is generating synthetic images by translating an input image from a source domain to a target domain [34]. In this application, the model learns how to transfer style and underlying representations of the target domain to the content of the input image [34]. In the case of paired data availability, models such as pix2pix are employed to learn the mapping from one domain to the other in a supervised manner [35]. Unsupervised models, on the other hand, are advantageous when paired data (i.e., training data and ground truth) are not available [36]. In the context of medical image synthesis, several DL-based architectures for enhancing CBCT image quality were reported [37]. Among them, cycle consistency GAN (CycleGAN) has gained popularity as it allows us to use unpaired data for training, which is particularly important when CBCT and CT image pairs with completely matching anatomy are not available [17,23,24,38,39,40,41,42]. 
Despite the indisputable advances in the field, relevant open challenges remain that prevent the routine use of DL and CycleGANs for image translation tasks in clinical settings and APT workflows [8].

In this work, we propose and evaluate a hybrid analytical and DL-based framework for CBCT to CT image synthesis tailored to a population of paediatric patients and young adults, towards the implementation of APT pathways. In particular, this study focuses on an analytical ring artefact correction method followed by the generation of sCT scans based on 2D CycleGAN architectures featuring Res-Net and U-Net generators. The validity of the generated sCT scans is assessed in terms of qualitative and quantitative image quality, reserving dosimetric evaluations for future investigations. To the best of our knowledge, this study represents the first attempt to generate and evaluate DL-based sCT from narrow field-of-view (FOV) CBCT in a population of paediatric patients treated with either protons or carbon ions.

2. Materials and Methods

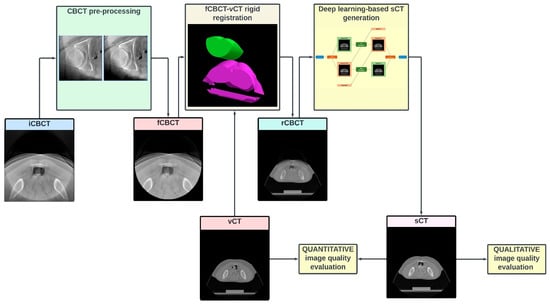

The flowchart in Figure 1 describes the workflow of the study.

Figure 1.

Study flowchart. Each CBCT volume (input CBCT, “iCBCT”) is pre-processed to (1) attenuate the ring artefact, (2) exclude the pixels outside the intrinsic CBCT circular field of view (FOV) and (3) intensity-match the CT grayscale. The pre-processed volume (filtered CBCT, fCBCT) is then resampled to match the geometrical CT scanning conditions and is rigidly registered (MATLAB intensity-based image registration) to same-day CT, i.e., verification CT (vCT), to obtain the CBCT registered on CT (rCBCT). After most cranial and caudal slices without CBCT are removed, and greyscale values are rescaled to a [–1, 1] range, the volume is inputted to the network to finally obtain the synthetic CT (sCT). Finally, image quality is assessed qualitatively and quantitatively.

2.1. Patients Dataset

This retrospective study included a consecutive cohort of paediatric (age < 18 years old, yo) and young adult (18 ≤ age ≤ 30) patients treated between December 2021 and December 2023 at the National Centre for Oncological Hadrontherapy (CNAO) in Pavia, Italy. To be eligible for the study, the patients had to meet the following inclusion criteria: (1) age < 30 yo at time of treatment; (2) completion of a full PT course in CNAO; (3) treated for tumours within the pelvic or abdominal district; (4) imaged with in-room CBCT; (5) availability of clinical and imaging data (i.e., pCT and at least one vCT); (6) written informed consent for use of data for clinical research and educational purposes. Treatments with either protons or carbon ions were allowed. The study was approved by the local Ethical Committee “Comitato Etico Territoriale Lombardia 6” under notification number 0052020/23, prot. CNAO OSS 57 2023.

2.2. CBCT at CNAO

CNAO is a synchrotron-based facility able to deliver PT with fixed beam lines of protons and carbon ions species [43,44]. The Centre is equipped with three treatment rooms, two of them featuring custom, developed in house, robotic systems for the acquisition, in a near-treatment position, of planar X-ray projections for patient position verification based on bony landmarks and subsequent volumetric CBCT scans for qualitatively evaluating anatomical consistency with one of the pCT scans [45]. In one room (i.e., Room 1), it is possible to acquire either full-fan (FF) or half-fan (HF) CBCT [46], while in the other one (i.e., Room 2), only FF acquisition is enabled, limiting the field of view (FOV) to 220 mm (Figure 2). For the present study, only CBCT data from Room 2 were considered, as the system in Room 1 is in a pre-clinical phase. All data were entirely collected by our own research group at CNAO.

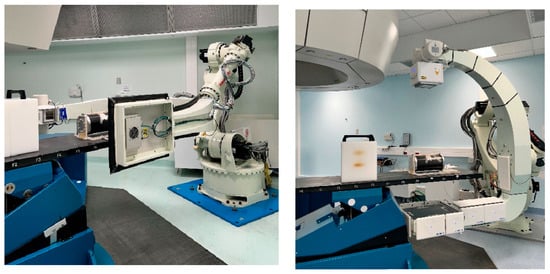

Figure 2.

Robotic CBCT devices in CNAO Rooms 2 (left) and 1 (right). The former allows us to acquire exclusively full-fan (FF) (i.e., small FOV) CBCT scans, while the latter also enables half-fan (HF) (i.e., large FOV) acquisitions.

2.3. Imaging Data

The CT scans were acquired using a Siemens Somatom Sensation Open Bore scanner (Siemens, Germany), with an image size of 512 × 512 × (94–107), a voxel spacing of 0.9765 × 0.9765 × 2 mm³ and a greyscale range of [0, 4095]. The CBCT scans were acquired using a Varian A-277 X-ray tube and a Varian PaxScan 4030D flat-panel detector (Varian: Las Vegas, NV, USA) installed on the C-arm of the robotic imaging system [45]. About 600 projection images were acquired during a 220° gantry rotation around the patient and used to reconstruct the 3D volume by means of the Feldkamp–Davis–Kress (FDK) algorithm [45]. The image size was 220 × 220 × 220, the voxel spacing was 1 × 1 × 1 mm³, and the greyscale range was [−1024, 1023]. For each patient, all available CT scans and CBCT scans acquired at most one day apart were considered. If more than one CBCT scan was acquired in the same treatment session, only the most recent one was selected. This time constraint was deemed necessary to limit anatomical variability, thus enabling us to bypass DIR and perform a reliable and consistent CBCT-CT rigid registration (RR), as proposed by Rossi et al. on the same CBCT system [47]. As a matter of fact, DIR might introduce uncertainties and require proper validation, especially when the CBCT volume is flawed and has a narrow FOV [47].

2.4. Pre-Processing

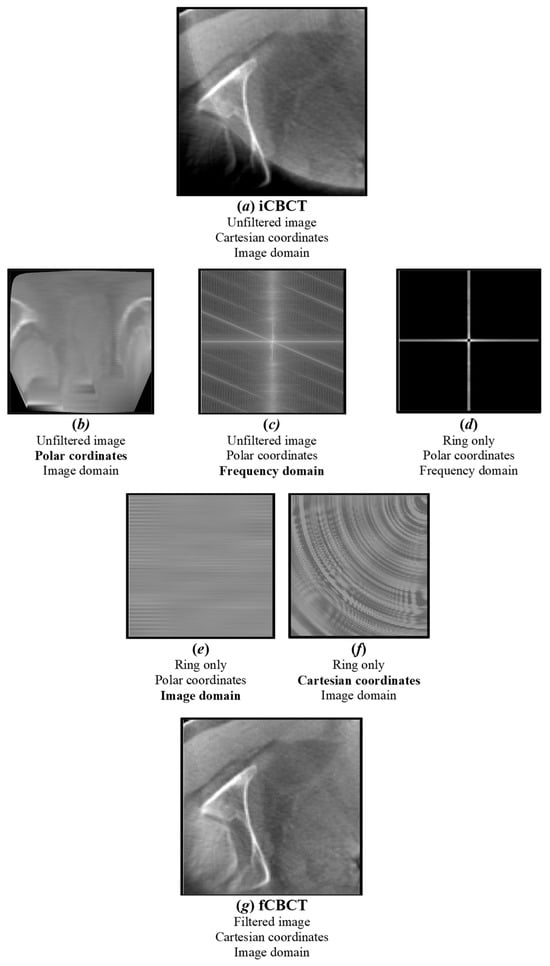

All images were pre-processed using MATLAB R2023b (The MathWorks Inc., Natick, MA, USA) before being presented to the DL network. First of all, all CBCT images were pre-aligned to the CT coordinate system to obtain input CBCT (iCBCT). After this, a ring artefact attenuation method was applied by transforming the images from Cartesian to polar coordinates and by isolating the characteristic signature of the ring in the frequency domain by means of discrete Fourier transform (DFT) (Figure 3), following these steps:

- Each CBCT slice is converted from Cartesian to polar coordinates using interpolation [48]. In Cartesian coordinates, the artefact manifests itself as a series of concentric rings centred on the reconstructed image centre. In polar coordinates, instead, it is converted into parallel horizontal stripes [49].

- The DFT of the CBCT in polar coordinates is computed: horizontal stripes appear as a vertical band in the middle of the whole spectrum, i.e., their high-frequency information is located at the centre position of the image [49].

- Low-pass filtering in the frequency domain is used to remove artefacts while the high-frequency details of the image are preserved [49].

- The ring’s frequencies are converted back from the frequency domain to the image domain (in polar coordinates), where they appear as a series of parallel stripes. An anisotropic Gaussian filter is also applied to smooth the stripes in the horizontal direction only.

- The ring in polar coordinates is converted back to Cartesian coordinates, clamping its values to the [−10, 10] range so as not to significantly affect the original CBCT grey-level distribution.

- The ring in Cartesian coordinates is subtracted from the original image to obtain a new image in which the ring artefact is significantly reduced.

Figure 3.

Ring artefact reduction pipeline. The original image (a) (iCBCT) is converted from Cartesian to polar coordinates (b) and its DFT is computed (c). A notch filter is applied to isolate the major ring’s frequencies (d), which are converted back to the image domain (e) and Cartesian coordinates (f). The output filtered image (g) (fCBCT) is obtained by subtracting the ring from the original image.
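The steps listed above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the MATLAB implementation used in the study: nearest-neighbour resampling stands in for interpolation, the function names and the frequency mask parameters (`band`, `min_radial_freq`) are hypothetical, and the anisotropic Gaussian smoothing is omitted because the default mask keeps only components that are constant along the angular direction. Only the clamp to ±10 grey levels is taken from the text.

```python
import numpy as np

def to_polar(img, n_r, n_theta):
    """Resample a square slice onto an (r, theta) grid (nearest neighbour)."""
    c = (img.shape[0] - 1) / 2.0                       # reconstructed image centre
    r = np.arange(n_r)[:, None]
    t = np.linspace(0.0, 2.0 * np.pi, n_theta, endpoint=False)[None, :]
    yy = np.clip(np.rint(c + r * np.sin(t)).astype(int), 0, img.shape[0] - 1)
    xx = np.clip(np.rint(c + r * np.cos(t)).astype(int), 0, img.shape[1] - 1)
    return img[yy, xx]                                 # rows = radius, cols = angle

def to_cartesian(polar, shape):
    """Map an (r, theta) image back onto the Cartesian pixel grid."""
    n_r, n_theta = polar.shape
    c = (shape[0] - 1) / 2.0
    y, x = np.indices(shape)
    ri = np.rint(np.hypot(y - c, x - c)).astype(int)
    t = np.mod(np.arctan2(y - c, x - c), 2.0 * np.pi)
    ti = np.rint(t / (2.0 * np.pi) * n_theta).astype(int) % n_theta
    out = np.zeros(shape)
    inside = ri < n_r                                  # pixels beyond the sampled radius stay 0
    out[inside] = polar[ri[inside], ti[inside]]
    return out

def reduce_rings(img, band=0, min_radial_freq=3, clamp=10.0, n_theta=360):
    """Estimate the ring pattern of one slice and subtract it (assumed parameters)."""
    img = np.asarray(img, dtype=float)
    n_r = img.shape[0] // 2
    polar = to_polar(img, n_r, n_theta)                # rings become horizontal stripes
    spec = np.fft.fftshift(np.fft.fft2(polar))         # stripes -> vertical band at the centre
    cr, ct = n_r // 2, n_theta // 2                    # zero-frequency position after fftshift
    mask = np.zeros(spec.shape, dtype=bool)
    mask[:, ct - band:ct + band + 1] = True            # keep the central vertical band
    mask[cr - min_radial_freq:cr + min_radial_freq + 1, :] = False  # leave slow radial trends
    stripes = np.real(np.fft.ifft2(np.fft.ifftshift(spec * mask)))
    stripes = np.clip(stripes, -clamp, clamp)          # limit the change to +/- 10 grey levels
    ring = to_cartesian(stripes, img.shape)
    return img - ring, ring
```

Excluding the lowest radial frequencies from the mask is a design choice of this sketch: it keeps the slowly varying radial profile of the anatomy out of the ring estimate, at the price of leaving very broad rings uncorrected.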

At the end of this pipeline, a filtered CBCT (fCBCT) is obtained. Before CBCT-CT registration, the CBCT greyscale was shifted to approximate that of the CT, that is, [0, 4095]. This was achieved by shifting all CBCT values in the positive direction by the magnitude of their minimum value, so that the minima of CBCT and CT coincide and are equal to 0. Even though CycleGAN does not require one-to-one registered voxel information, before feeding the data to the network, the CBCT imaging data were resampled and rigidly registered to the CT, as proposed in [24], to obtain the registered CBCT (rCBCT) volume. The voxel spacing and image size of the pre-processed data were consequently unified to those of the CT, that is, 0.9765 × 0.9765 × 2 mm³ and 512 × 512 × (94–107), respectively. As the scan range of the CT was, in general, longer than that of the CBCT, to balance the anatomic extent in the longitudinal direction, CT slices beyond the CBCT FOV were removed, as seen in [17]. Additionally, as the two most cranial and caudal CBCT slices were often truncated, they were removed systematically for all volumes, as also proposed by [8]. For the sake of computational efficiency and faster convergence, all images were then normalised to the [−1, 1] range, as suggested by [50]. This kind of normalisation preserves the original image contrast [50].
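The grey-level shift and the final normalisation can be illustrated as follows. This is a sketch only: the text does not state whether the [−1, 1] mapping uses the fixed CT range or per-volume extrema, so the fixed [0, 4095] range is an assumption here, and the function names are hypothetical.

```python
import numpy as np

CT_MIN, CT_MAX = 0.0, 4095.0   # CT grey-level range reported in the text

def shift_to_zero_min(cbct):
    """Shift CBCT grey levels so that the volume minimum becomes 0."""
    return cbct - cbct.min()

def normalise(vol, lo=CT_MIN, hi=CT_MAX):
    """Linearly map [lo, hi] onto [-1, 1] (assumed fixed range)."""
    return 2.0 * (vol - lo) / (hi - lo) - 1.0

def denormalise(vol, lo=CT_MIN, hi=CT_MAX):
    """Inverse of normalise, to bring network output back to grey levels."""
    return (vol + 1.0) * (hi - lo) / 2.0 + lo
```

Because the mapping is linear, relative grey-level differences are preserved, which is consistent with the statement that the normalisation does not alter image contrast.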

2.5. CycleGAN

A CT synthesis problem under a DL framework was formulated in this study. CycleGAN has been selected as it allows us to perform an image-to-image translation task using unpaired data [8,23,24,38,39,40,42]. This choice was mandatory in this dataset as a proper ground truth was not available, as CBCT scans and CT scans, even if acquired one day apart at most, still presented a certain degree of anatomical variation.

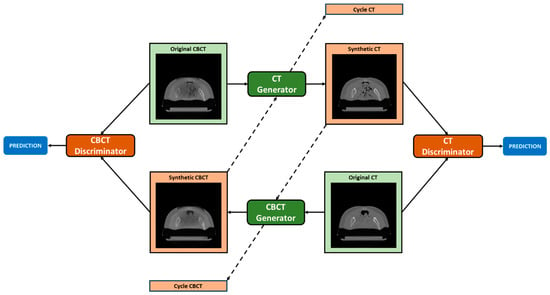

The particular design of CycleGAN, which consists of two generators and two discriminators, enables bidirectional translation between the CBCT and CT domains. Each generator is trained to generate images of a specific domain while fooling the discriminator of the corresponding domain, which is responsible for distinguishing fake (i.e., synthetic) from real images in an adversarial manner. The assumption is that the generator, by learning the mapping from the source to the target domain, becomes capable of generating images that are similar to target domain images and whose distribution is ideally identical to the empirical distribution of the target domain data. In particular, the CT generator is responsible for generating pseudo-CT images (i.e., sCT) from CBCT images, and the CT discriminator is employed to distinguish the sCT scans from real CT scans (i.e., vCT scans) in a binary fashion, i.e., 1 = real, 0 = fake. The consistency of image translation from one domain to the other is established by the cycle consistency concept proposed by [51]. Cycle consistency implies that if an input image is translated to the other domain and then translated back to the original domain, the output should ideally be identical to the initial input. For instance, an original CBCT is given to the CT generator, and the resulting sCT is then translated back to the CBCT domain through the CBCT generator; this prevents the two learned mappings (CBCT to CT and vice versa) from contradicting each other [51]. To keep the HU values of the generated image as close as possible to those of the target domain, identity mapping is also incorporated. Accordingly, when the CT generator takes CT images as input, they are expected to remain unchanged after going through the generator. The overall network structure is depicted in Figure 4.

Figure 4.

CycleGAN topology. The CT generator converts original CBCT into pseudo-CT (Synthetic CT) and pseudo-CBCT back to CT (Cycle CT). The CBCT generator converts original CT into pseudo-CBCT (Synthetic CBCT) and pseudo-CT back to CBCT (Cycle CBCT). CBCT and CT discriminators are employed to judge the authenticity of generated pseudo-CBCT and pseudo-CT images. The loss obtained from the discriminators is used to update the weights of both the generator and discriminator networks.

To achieve the above-mentioned goals (matching distributions, contradiction prevention and HU preservation), the objective comprises the following losses. The first two terms (Equations (1) and (2)) are adversarial losses applied to the mapping functions from CBCT to CT and vice versa, respectively. This is a min-max competition in the sense that the generator tries to minimise this term by generating images that are as similar as possible to those of the target domain, while the discriminator aims to maximise it by distinguishing fake from real images. Additionally, a cycle consistency loss (Equation (3)) is employed to ensure mappings are bijective, allowing for the reconstruction of original images from translated versions. Finally, an identity loss (Equation (4)) is added to emphasise HU preservation between vCT and sCT, and between CBCT and synthetic CBCT. The full objective (L, Equation (5)) combines the adversarial losses, the cycle consistency loss and the identity loss, with parameters α and β controlling their relative importance. In the equations, G is the CT generator, F is the CBCT generator, D_CT is the CT discriminator, D_CBCT is the CBCT discriminator, and E is the expected value.

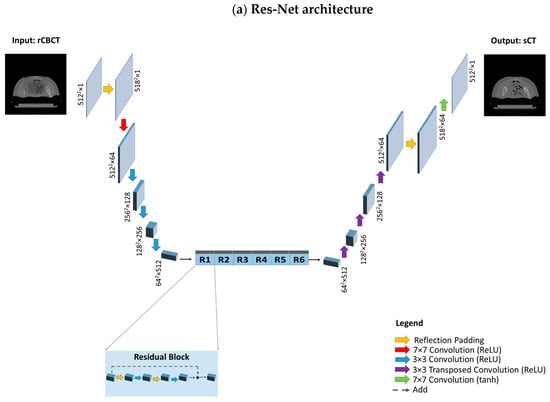
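To make the structure of the objective concrete, the sketch below evaluates the four terms for arbitrary generator and discriminator callables. It assumes the log-likelihood form of the adversarial loss and L1 norms for the cycle and identity terms, as in the original CycleGAN formulation; that α weights the cycle term and β the identity term, and the default values 10 and 5, are assumptions of this sketch and are not taken from the study.

```python
import numpy as np

EPS = 1e-7  # avoids log(0)

def adversarial_loss(disc, real, fake):
    """E[log D(real)] + E[log(1 - D(fake))]: D maximises it, the generator minimises it."""
    return (np.mean(np.log(disc(real) + EPS))
            + np.mean(np.log(1.0 - disc(fake) + EPS)))

def l1(a, b):
    """Mean absolute difference between two image batches."""
    return np.mean(np.abs(a - b))

def cyclegan_objective(G, F, D_ct, D_cbct, cbct, ct, alpha=10.0, beta=5.0):
    """Full objective for one batch; G: CBCT -> CT, F: CT -> CBCT."""
    sct, scbct = G(cbct), F(ct)                      # synthetic CT and synthetic CBCT
    adv_ct = adversarial_loss(D_ct, ct, sct)         # CBCT -> CT mapping
    adv_cbct = adversarial_loss(D_cbct, cbct, scbct) # CT -> CBCT mapping
    cyc = l1(F(sct), cbct) + l1(G(scbct), ct)        # cycle consistency
    idt = l1(G(ct), ct) + l1(F(cbct), cbct)          # identity mapping
    return adv_ct + adv_cbct + alpha * cyc + beta * idt
```

In an actual training loop the generators and discriminators are updated in alternation on this quantity; the sketch only shows how the terms are assembled.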

Two different architectures that are commonly utilised for image reconstruction were investigated for generators, namely Res-Net and U-Net, as previously performed by [8,52]. In the former, the encoding part is composed of 2D convolution layers (kernel size 3 × 3, stride 2) followed by instance normalisation and ReLU as the activation function. It features symmetric downsampling and upsampling layers holding 6 residual blocks in between; each residual block is composed of 2D convolutional blocks (kernel size 3 × 3, stride 1), instance normalisation and reflection padding (1, 1). The decoder part of the network consists of 2D transpose convolution layers to restore the input shape of 512 × 512 × 1, zero padding, instance normalisation, and ReLU as the activation function. In the last layer, a convolution (kernel size 7 × 7, stride 1) is applied. Regarding U-Net, skip connections concatenated from corresponding encoding layers help to preserve the spatial information lost in the contracting path, which facilitates locating features more accurately within the decoding path. Both discriminators have a similar structure and are composed of 2D convolutional blocks (kernel size 4 × 4, stride 2), zero padding, instance normalisation, and Leaky ReLU. Res-Net and U-Net architectures are depicted in Figure 5.

Figure 5.

Model architectures. (a) Res-Net architecture, composed of a downsampling path, a stack of residual blocks and an upsampling path. The downsampling path reduces the spatial dimension from 512² to 64² through convolution layers, while increasing the depth from 1 to 512. Each residual block, consisting of reflection padding and convolution layers, provides as output the sum between the input activation map and the output of the last convolution layer. The upsampling path is composed of transposed convolution layers which increase the spatial dimension to restore the initial size (from 64² to 512²), while reducing the depth from 512 to 1. (b) The U-Net architecture is composed of a downsampling and an upsampling path; however, instead of residual blocks, there exist skip connections that directly transfer information by concatenating the activation maps from the downsampling path to the corresponding activation map with the same spatial dimension and depth in the upsampling path.
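The spatial sizes quoted in the caption follow from standard convolution arithmetic, as the short calculation below shows. The padding of 1 and output padding of 1 are assumptions (equivalent to "same"-style padding), and the three stride-2 stages are inferred from the 512 to 64 reduction rather than stated in the text.

```python
def conv_out(n, kernel, stride, pad):
    """Output size of a strided convolution along one spatial axis."""
    return (n + 2 * pad - kernel) // stride + 1

def tconv_out(n, kernel, stride, pad, out_pad):
    """Output size of a transposed convolution along one spatial axis."""
    return (n - 1) * stride - 2 * pad + kernel + out_pad

# Downsampling path: three 3 x 3, stride-2 convolutions (assumed padding 1).
down = [512]
for _ in range(3):
    down.append(conv_out(down[-1], kernel=3, stride=2, pad=1))

# Upsampling path: three 3 x 3, stride-2 transposed convolutions.
up = [down[-1]]
for _ in range(3):
    up.append(tconv_out(up[-1], kernel=3, stride=2, pad=1, out_pad=1))

print(down)  # [512, 256, 128, 64]
print(up)    # [64, 128, 256, 512]
```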

Regarding the training configuration, the model was implemented on a dedicated workstation using Python 3.10.11, TensorFlow 2.10.0 and an NVIDIA RTX A4000 graphical processing unit (GPU) (NVIDIA, Santa Clara, CA, USA). A batch size of 2 was used for U-Net and 1 for Res-Net. The training time per epoch was slightly over 25 min for U-Net and 105 min for Res-Net. The Adam optimizer was used for optimisation and a random normal initializer was used to initialise the network weights. The model was trained on 2D images with a size of 512 × 512 × 1.

2.6. Evaluation Metrics

In the context of APT, the quality of generated sCT images is commonly assessed either using image or dose similarity metrics [37]. In this work, the goodness of the generated sCT images is evaluated using image metrics only, as dosimetric evaluations will be the object of future investigations.

Among image similarity metrics, structural similarity (SSIM) and the peak signal-to-noise ratio (PSNR) were selected, being sufficiently robust towards anatomical variations between a reference image (i.e., vCT) and an examined image (i.e., sCT), which are likely to occur in the dataset herein considered.

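SSIM quantifies the similarity between a pair of images as a combination of luminance (l), contrast (c) and structure (s) [53,54]:

$$\mathrm{SSIM}(x, y) = [l(x, y)]^{\alpha} \cdot [c(x, y)]^{\beta} \cdot [s(x, y)]^{\gamma}$$

where

$$l(x, y) = \frac{2\mu_x\mu_y + C_1}{\mu_x^2 + \mu_y^2 + C_1}, \qquad c(x, y) = \frac{2\sigma_x\sigma_y + C_2}{\sigma_x^2 + \sigma_y^2 + C_2}, \qquad s(x, y) = \frac{\sigma_{xy} + C_3}{\sigma_x\sigma_y + C_3}$$

Here, α, β and γ are positive constants, μx and μy are the local means, σx and σy are the standard deviations and σxy is the cross-covariance for images x and y, while C1, C2 and C3 are small constants that stabilise the divisions. SSIM varies between 0 and 1, with higher values corresponding to higher image similarity [24].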

The PSNR is computed as the ratio between the maximum signal power (Pmax) and the noise affecting the quality of representation (root mean square error, RMSE), and is expressed in decibels (dB) [37].
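As an illustration of how the two metrics behave, the sketch below computes a global (single-window) SSIM with α = β = γ = 1 and a PSNR in the usual form 20·log10(Pmax/RMSE). Both choices are assumptions of this sketch: the study does not report its window size or constants, and library implementations such as `skimage.metrics.structural_similarity` average SSIM over local windows instead. To restrict the analysis to a region such as the CBCT FOV, the masked voxels (e.g., `ref[mask]`, `img[mask]`) can be passed in place of the full images.

```python
import numpy as np

def psnr(ref, img, p_max):
    """Peak signal-to-noise ratio in dB, assuming PSNR = 20*log10(p_max / RMSE)."""
    rmse = np.sqrt(np.mean((ref - img) ** 2))
    return 20.0 * np.log10(p_max / rmse)

def global_ssim(x, y, data_range, k1=0.01, k2=0.03):
    """SSIM over the whole image as one window (alpha = beta = gamma = 1)."""
    c1 = (k1 * data_range) ** 2          # stabilising constants, conventional defaults
    c2 = (k2 * data_range) ** 2
    c3 = c2 / 2.0
    mx, my = x.mean(), y.mean()
    sx, sy = x.std(), y.std()
    sxy = np.mean((x - mx) * (y - my))   # cross-covariance
    lum = (2 * mx * my + c1) / (mx ** 2 + my ** 2 + c1)
    con = (2 * sx * sy + c2) / (sx ** 2 + sy ** 2 + c2)
    struct = (sxy + c3) / (sx * sy + c3)
    return lum * con * struct
```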

The sCT image quality was also assessed qualitatively by the visual inspection of the output image. The following image characteristics were investigated: image texture and contrast, the fidelity to the original anatomy and the presence of artefacts.

3. Results

3.1. Patients’ Data

Out of the 501 screened patients (<30 yo as of 31st December 2023), 21 (12 paediatric patients and 9 young adults, median age 17 yo) met the inclusion criteria and were included in the study. Nine were treated with protons, the remainder with carbon ions, with different treatment schedules. For all of them, pCT scans and vCT scans were exported in the DICOM format from the RayStation 11B (RaySearch Laboratories AB, Stockholm, Sweden) treatment planning system (TPS) and anonymized, while CBCT volumes and the related metadata were collected from the dedicated hospital repository and anonymized.

3.2. Training–Testing Split

Of the 55 same-day CBCT scans collected from the 21 patients, 44 (15 patients) were included in the study, and were divided into training (36 volumes, 3820 slices, 10 patients) and testing (8 volumes, 812 slices, 5 patients) sets. As a general rule, patients with more eligible CBCT scans available were included in the training set, to provide more data to the model to better learn the diverse features and representations while still guaranteeing a sufficient number of unseen test cases from the rest of the patients. Only 5 of the 44 volumes were produced with CT scans made at a one-day distance with respect to the CBCT; the remainder were acquired exactly on the same day. Eleven volumes (6 patients) were discarded due either to CT import issues, registration failure, or major image quality problems (Table 1 and Figure S1).

Table 1.

Summary of patients’ characteristics, including clinical, treatment and imaging data as well as training configuration information.

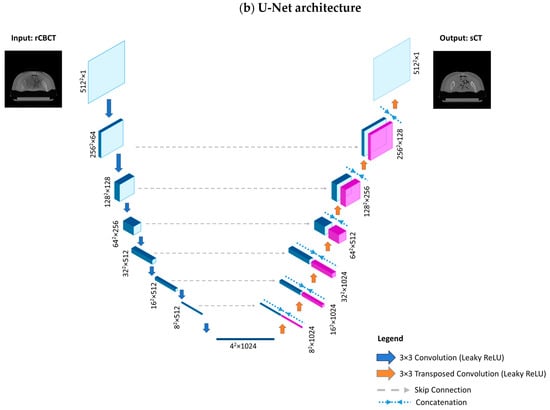

3.3. Pre-Processing Outcomes

Despite some residuals in the centre of the image, the ring artefact was strongly reduced while contrast and details remained almost unaltered (Figure 6). Registration was acceptable except for three cases, which were excluded from the analysis.

Figure 6.

Pre-processing outcome. CBCT before (a) and after (b) the ring artefact reduction procedure. The magnification shown in the two bottom figures further highlights how the stripes typical of the ring artefact (c) are strongly reduced in (d) while maintaining an acceptable image contrast.

3.4. Qualitative Evaluation

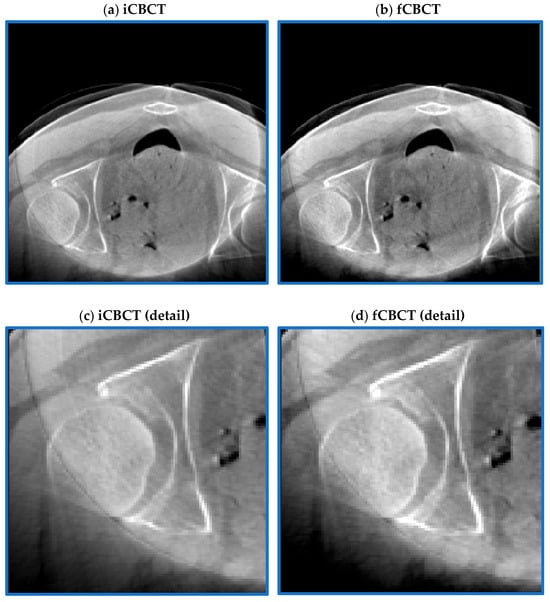

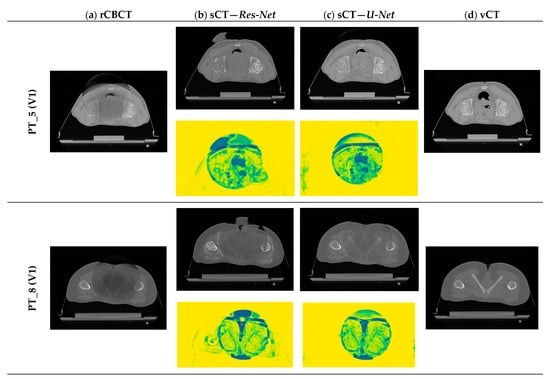

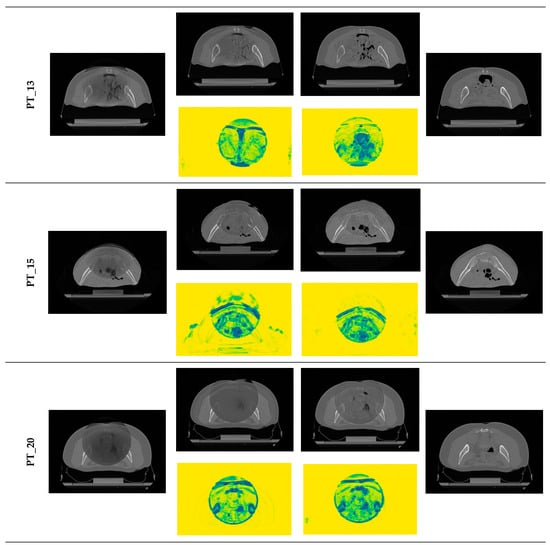

Qualitative evaluation is of paramount importance in exploratory studies assessing the performance of a network performing image-to-image translation tasks, in order to detect at a glance evident major issues beyond numerical results, as shown in [55]. To visually evaluate the performance of the proposed sCT generation infrastructure, some representative sCT slices and similarity maps of all testing patients (one volume per patient) are shown in Figure 7, for both Res-Net and U-Net.

Figure 7.

Examples of generated sCT scans. Examples of one representative slice for one sCT volume of all testing patients, generated from rCBCT (column a) using both Res-Net (column b) and U-Net (column c). Similarity maps (yellow background) are also shown: the degree of modification is given by the colour scale: yellow = 1 (i.e., no change, highest similarity), dark blue = 0 (i.e., highest discrepancy).

For both architectures, although CBCT and CT pairs were acquired on the same day or at most one day apart, some degree of anatomical variation was observed for all patients. Regarding image quality, the sCT image texture clearly approximates that of the vCT better than the original CBCT does. In particular, soft tissue contrast is enhanced, air pockets are crisper and bones are sharper. High-frequency artefacts, such as streaks, were not removed completely but were significantly suppressed. CBCT FOV edges appear attenuated but remain visible. As hypothesized, the similarity maps, which depict the residual between the input rCBCT and the sCT, show that most changes (dark dots) introduced by the network occur within the CBCT FOV and involve air and bones. It also emerges that the quality of the sCT depends on the quality of the input CBCT or pre-processing. In the examples in Figure 7, it can be appreciated that for patients 5 and 13, the better rCBCT appearance is reflected in better sCT image quality. Slight intensity variations between adjacent slices occur as a consequence of the 2D implementation of the network, which synthesises the sCT not as a 3D volume but as a series of consecutive 2D slices (Figure 8).

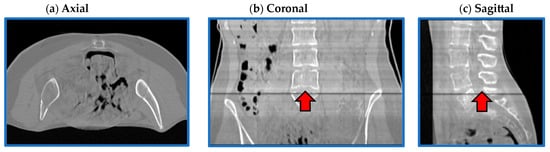

Figure 8.

Flaws in coronal and sagittal views. Axial (a), coronal (b) and sagittal (c) views from one representative sCT (PT_13). Image intensity appears uniform in the axial plane, while it slightly varies in the coronal and sagittal views. In (b,c), it is evident that the intensity of one slice was significantly different to the adjacent ones.

Finally, when comparing Res-Net with U-Net, it is evident that the latter provides a better delineation of the air–tissue interface as well as better soft tissue contrast in this patient cohort.

3.5. Quantitative Evaluation

The SSIM and PSNR values used to quantify image quality for both Res-Net and U-Net are reported in Table 2. Results are reported as the median (across slices) and interquartile range for all patients and volumes. A visual representation is also provided in Figures S2 and S3. SSIM and PSNR values were computed for all rCBCT-vCT and sCT-vCT pairs, to assess the degree of improvement brought by the CycleGAN. The analysis focused exclusively on the area enclosed by the original CBCT, in order to exclude the CT values outside it, which are expected to be unaffected by the network. A Wilcoxon signed-rank test was performed to assess whether any difference between input and output images in terms of SSIM and PSNR was statistically significant (p-value < 0.05). This non-parametric test was employed as it does not require a normal distribution of the two populations. The statistically significant differences between the distributions of SSIM and PSNR before and after CycleGAN processing, for all patients but one, confirm the improvements brought by the network. For patient 8, no statistically significant difference was observed in the SSIM values for U-Net.
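The paired comparison described above can be sketched as follows. This is a self-contained illustration using the normal approximation of the Wilcoxon signed-rank statistic without tie or continuity corrections, which is an assumption of this sketch; `scipy.stats.wilcoxon` is a library alternative, and the study does not state which implementation was used. The inputs are per-slice metric values before (rCBCT vs. vCT) and after (sCT vs. vCT) CycleGAN processing.

```python
import math
import numpy as np

def wilcoxon_signed_rank(before, after):
    """Two-sided Wilcoxon signed-rank test (normal approximation).

    Returns the sum of positive ranks and the p-value.
    """
    d = np.asarray(after, dtype=float) - np.asarray(before, dtype=float)
    d = d[d != 0]                                   # zero differences are discarded
    n = d.size
    if n == 0:
        return 0.0, 1.0
    a = np.abs(d)
    ranks = np.empty(n)
    ranks[np.argsort(a)] = np.arange(1, n + 1)      # rank the absolute differences
    for v in np.unique(a):                          # average ranks over ties
        tie = a == v
        ranks[tie] = ranks[tie].mean()
    w_plus = ranks[d > 0].sum()
    mean = n * (n + 1) / 4.0
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24.0)
    z = (w_plus - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2.0))          # two-sided p-value
    return w_plus, p
```

A p-value below 0.05 for a given patient would then be read, as in the text, as a statistically significant change in the metric after CycleGAN processing.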

Table 2.

Image quality quantitative evaluation.

4. Discussion

In this work, we have proposed and evaluated a CycleGAN-based approach to translate daily acquired CBCT scans into sCT scans in a cohort of paediatric patients and young adults treated with proton or carbon ion therapy. Although the clinical validity for dose calculation purposes has not yet been proven, the satisfactory results in terms of qualitative and quantitative image quality suggest the potential usefulness of these sCT scans in APT pathways.

In particular, two generator architectures have been investigated, with one of them (i.e., U-Net) outperforming the other (i.e., Res-Net) in the considered dataset, with the described pre-processing and with the chosen hyperparameter configuration. Overall, sCT scans obtained from U-Net contain more details and, from an anatomical point of view, the images are more realistic. In addition, this network leaves the regions of the input image that correspond to real CT values almost unchanged, indicating that it succeeds in fulfilling the identity mapping objective. On the other hand, Res-Net shows some deficiencies in effectively reproducing the air–body interface, soft tissues and bony anatomy. It was also evident from the results that the quality of the generated sCT is highly dependent on the quality of the input images and pre-processing. Specifically, stronger artefacts and poorer tissue contrast arising during the acquisition phase, although attenuated, still remained visible in the sCT. Nevertheless, no evident flaws like the ones reported in [55] were observed.

This work presents several strengths that are worth acknowledging. First of all, few studies employ CBCT scans with a FOV smaller than the patient’s body in the axial plane [56]. Additionally, Rossi et al. applied deep learning algorithms to narrow-FOV truncated CBCT scans acquired with the same system but exclusively for image quality improvement [47]. Furthermore, in that study, the CT volume was cropped to the CBCT FOV, while here, the CBCT volume was embedded in the CT. Additionally, the framework was tailored for a population of paediatric patients with pelvic malignancies, which is a challenging application owing to the high inter-fraction variability caused by bowel filling conditions [8]. Indeed, this is one of the few studies on CBCT correction that includes paediatric data [3,8,17]. As a matter of fact, it is more common to use MRI to CT conversion for paediatric patients [3]. However, one comparative study on an adult head and neck cancer population demonstrated the non-inferiority of CBCT-based sCT to the MRI-based counterparts for dose calculation purposes for APT applications [11]. The choice of the paediatric population was mainly driven by two factors: the first was related to the clinical significance of the study, as younger patients are more sensitive to the imaging radiation dose and CBCT-based sCT could in theory avoid unnecessary vCT, and the second was related to technical reasons, as a smaller body is more suitable for narrow FOVs. The availability of a CBCT system fully developed in-house brings considerable flexibility and potentially groundbreaking advantages as it allows us, in principle, (1) to explore new strategies based on raw data and (2) to easily streamline the sCT generation and APT process in future implementations. Another peculiarity characterising this study is training the model on spurious CBCT images, i.e., CBCT scans embedded in CT scans.
Admittedly, most studies in the literature use pure CBCT scans as input for the networks [41,42,57,58]. However, the same studies employ large-FOV CBCT images, which easily include the whole body part considered. Furthermore, other investigations exist that incorporate CT information as input [50]. In our case, this choice was driven by the final clinical endpoint of the study, i.e., producing CT-like images to be used for evaluating anatomical changes and for dose recalculation purposes. Our results indicate that this approach was successful from several points of view. In particular, we observed that changes occurred mainly within the CBCT FOV, suggesting that the model effectively learned the mapping from the CBCT domain to the CT domain. To conclude, it is worth mentioning that, even though training was performed on CBCT images registered with and embedded in CT scans, similarity metrics were evaluated exclusively within the CBCT FOV: in this way, it was possible to establish the actual performance of the networks in mapping CBCT images into the CT domain.
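The embedding of a narrow-FOV CBCT into the same-day CT, and the check that network-induced changes concentrate inside the FOV, can be illustrated as follows. This is a minimal sketch under stated assumptions, not the study's pipeline: the FOV is assumed to be a circle centred in the axial slice, the two slices are assumed to be already rigidly registered and resampled to the same grid, and all function names are hypothetical.

```python
def circular_fov_mask(n_rows, n_cols, radius_px):
    """Boolean mask of a centred circular FOV (True inside the circle).

    A centred, circular FOV is an assumption of this sketch.
    """
    cy, cx = (n_rows - 1) / 2.0, (n_cols - 1) / 2.0
    return [[(r - cy) ** 2 + (c - cx) ** 2 <= radius_px ** 2
             for c in range(n_cols)]
            for r in range(n_rows)]


def embed_in_ct(ct_slice, cbct_slice, fov_mask):
    """Build the hybrid input: CBCT values inside the FOV, CT values outside."""
    return [[cb if m else ct for ct, cb, m in zip(ct_row, cb_row, m_row)]
            for ct_row, cb_row, m_row in zip(ct_slice, cbct_slice, fov_mask)]


def fraction_of_change_inside(inp, out, fov_mask):
    """Share of the total absolute input-to-output change that lies inside the FOV."""
    inside = outside = 0.0
    for i_row, o_row, m_row in zip(inp, out, fov_mask):
        for i, o, m in zip(i_row, o_row, m_row):
            if m:
                inside += abs(o - i)
            else:
                outside += abs(o - i)
    total = inside + outside
    return inside / total if total else 0.0
```

A value of `fraction_of_change_inside` close to 1 would correspond to the behaviour reported here, namely that the generator mostly modifies the CBCT region and leaves the surrounding CT values nearly untouched.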

Despite being innovative in nature, this study presents some limitations which are worth acknowledging. First of all, the sample size is small in consideration of the large amount of data that is typically required for training deep convolutional neural networks (DCNNs). However, the number of paediatric patients treated for abdominal or pelvic tumours is known to be small in radiation oncology departments [17], and limited sample size is a common issue in all similar studies involving this patient population (ours: 15 patients, 44 volumes; Sheikh et al. 2022 [3], Ates et al. 2023 [59]: 10 patients/10 volumes; Uh et al. 2021 [17]: 64 patients/64 volumes; Szmul et al. 2023 [8]: 64 patients/209 volumes, but in conventional radiotherapy). Another important limitation is the lack of a proper ground truth, which prevents us from performing a voxel-wise evaluation of image quality, including HU accuracy, by means of metrics such as mean absolute error (MAE) or mean square error (MSE). Qiu et al. performed DIR between pCT and CBCT to produce perfectly paired data and employ supervised approaches [22]. However, as correctly pointed out by Szmul et al., achieving accurate DIR results is challenging, especially in the pelvic region, which is characterised by substantial bowel gas changes [8]. In addition, from a DL perspective, the unavailability of paired data is not an issue if unsupervised approaches, like the one herein investigated, are employed [8]. To make up for the absence of a gold standard, future implementations of the project could use computational phantoms to ensure the perfect correspondence between rCBCT and vCT, as also suggested by Parrella et al. for MRI applications [60].
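Should a voxel-wise ground truth become available (for instance from computational phantoms), the HU accuracy metrics mentioned above could be computed along the following lines. This is an illustrative sketch only: the function name and the dictionary output are assumptions, and the mask stands for whatever evaluation region is chosen (e.g., the CBCT FOV or a specific ROI).

```python
import math


def masked_hu_errors(reference_hu, test_hu, mask):
    """MAE, MSE and RMSE (in HU, HU^2 and HU) over voxels where mask is True."""
    diffs = [t - r
             for r_row, t_row, m_row in zip(reference_hu, test_hu, mask)
             for r, t, m in zip(r_row, t_row, m_row) if m]
    if not diffs:
        raise ValueError("mask selects no voxels")
    mae = sum(abs(d) for d in diffs) / len(diffs)
    mse = sum(d * d for d in diffs) / len(diffs)
    return {"mae": mae, "mse": mse, "rmse": math.sqrt(mse)}
```

Without paired data these numbers mix synthesis errors with genuine anatomical differences between sCT and vCT, which is why they were not reported in this study.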

Another minor limitation is the occasional intensity variation between consecutive slices. This issue was not unexpected, as a 2D architecture was employed with no specific constraints enforced between adjacent slices, and it can in principle be addressed using appropriate post-processing methods. Similar issues in the cranio-caudal direction were reported in comparable studies in the literature employing 2D networks [8,24]. Overall, unlike in [24], no major defects were observed in the axial and coronal planes.
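One possible post-processing step of this kind is a slice-wise intensity harmonisation along the cranio-caudal axis. The sketch below is a hypothetical example and not a method used or validated in this study: it pulls the median intensity of each slice (computed inside the FOV) towards the running median of its neighbours and applies the resulting offset only inside the FOV. The window length and the choice of an additive offset are assumptions.

```python
import statistics


def slice_offsets(slice_medians, window=5):
    """Additive offset per slice: running median of neighbours minus own median."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    n = len(slice_medians)
    offsets = []
    for z in range(n):
        neighbours = slice_medians[max(0, z - half):min(n, z + half + 1)]
        offsets.append(statistics.median(neighbours) - slice_medians[z])
    return offsets


def harmonise_slices(volume, masks, window=5):
    """Apply the offsets inside the FOV of each axial slice.

    `volume` and `masks` are lists of 2D slices (lists of rows); every mask is
    assumed to select at least one voxel.
    """
    medians = [
        statistics.median(v for row, m_row in zip(sl, mk)
                          for v, m in zip(row, m_row) if m)
        for sl, mk in zip(volume, masks)
    ]
    offsets = slice_offsets(medians, window)
    return [[[v + off if m else v for v, m in zip(row, m_row)]
             for row, m_row in zip(sl, mk)]
            for sl, mk, off in zip(volume, masks, offsets)]
```

Because the median intensity also changes with anatomy from slice to slice, a short window and a visual check of the corrected coronal and sagittal views would be advisable before relying on such a correction.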

Overall, the proposed sCT generation pipeline can be further improved by optimising pre-processing and fine-tuning the network’s hyperparameters. This may be particularly true for the Res-Net architecture, which underperformed compared with U-Net. Our choice was to pre-select the best pre-processing parameters and network hyperparameters so that results from different architectures and configurations were more easily comparable [8].

Regarding PT_08, the decrease in performance can be ascribed to the different anatomical region considered for this patient (lower limbs) compared to that of the patients used to train the model (pelvis). This is a clear example of how the small sample size has negatively affected the generalisation capability of the model.

SSIM and PSNR values before (rCBCT vs. vCT) and after (sCT vs. vCT) CycleGAN processing indicate the image quality improvement brought about by the network for most patients. From a quantitative standpoint, excluding PT_08, the average median SSIM increased from 0.72 (rCBCT vs. vCT) to 0.78 and 0.75 (sCT vs. vCT) for Res-Net and U-Net, respectively. Similarly, the average median PSNR increased from 47.77 (rCBCT vs. vCT) to 51.51 and 50.21 for Res-Net and U-Net, respectively. Our results are comparable with those reported in the published literature. Chen and colleagues [50] reported SSIM and PSNR values increasing from 0.9079 to 0.9642 and from 26.74 to 30.75, respectively, after feeding the images to a U-Net-based model. Similarly, Liang and colleagues [41] reported SSIM and PSNR values increasing from 0.73 to 0.85 and from 25.28 to 30.65, respectively, employing CycleGAN to generate sCT from CBCT. As mentioned earlier, it was not possible to assess the absolute quality of sCT due to the absence of a true gold standard.

This study represents a preliminary investigation that paves the way to a large variety of possible future directions, including the following:

- Training and testing sets’ enlargement by including adult patients and employing data augmentation techniques—it is expected that by increasing the sample size, the performance of the network will improve;

- Validation on other anatomical areas, as previous investigations have demonstrated the inter-anatomic generalizability of DCNNs [17];

- Validation on pelvic and extra-pelvic images acquired in both FF and HF modalities using the newly developed system in CNAO Room 1, as soon as first data are available;

- Validation on publicly available data repositories to assess the performance of the network to generalise on data from different CBCT systems [61];

- Employment of computational phantoms to make up for the lack of a ground truth by properly retraining the network [21,41];

- Exploration of the 3D variants of the networks, by either decreasing the FOV and reducing computational burden or by employing powerful cloud computing services that are compliant with data protection regulation [17];

- Inclusion of the other image similarity metrics by selecting specific regions of interest (ROIs) to bypass the problems related to possible anatomical mismatch [55];

- Dosimetric validation by means of dose difference pass rates (DPRs), gamma pass rates (GPRs) and dose–volume histogram (DVH)-based parameters [62,63];

- In vivo range verification and assessment of CT number accuracy of sCT to detect failures and outliers in the generated images [64];

- Evaluation of the capability of CBCT-based sCT to trigger replanning [4,15,56];

- Theoretical feasibility of integrating sCT software into a clinical APT workflow [64,65], estimating the increase in clinical workload [66,67];

- Investigation of CycleGAN performance with different simulated CBCT imaging dose levels to assess the suitability of this method in low-dose paediatric protocols [68].

In recent years, AI applications in radiation oncology and medical physics have gained increasing popularity [11]. As stated by Spadea et al. [69], “DL-based generation of sCT has a bright future, with extensive amount of research work being done on the topic”. However, even though PT is more sensitive than photon radiotherapy to interfractional changes [70], the clinical integration of sCT into APT pathways remains confined to a few selected treatment sites and limited data [71]. A recent survey on the topic confirmed that the implementation of online daily APT is limited, with only two centres using a plan library [70]. A major obstacle is that CBCT-based APT pathways are not compatible with the fast clinical workflow and the limited clinical resources available [72]. On the other hand, it was also experimentally demonstrated that APT requires a similar amount of time to that of a standard treatment delivery [73]. In general, a more structured cooperation between clinicians, researchers and industry is warranted to convert research outcomes into concrete clinical implementations.

5. Conclusions

In the era of personalised medicine and tailored treatments, APT is deemed to be a game changer. Despite the indisputable importance of adapting the treatment plan to the anatomy of the day, especially for high-variability anatomical regions and more sensitive populations (e.g., paediatrics), online adaptation approaches still lag behind, although the interest of the scientific community is growing rapidly. In this scenario, this work represents a very preliminary attempt to improve the quality of the images obtained from non-commercial custom CBCT devices towards the generation of sCT scans for offline and online treatment adaptation in a real clinical environment. Despite the above-mentioned limitations to be addressed and future directions to be pursued, the present study adds to the current body of literature highlighting the groundbreaking potential of DCNNs in generating high-quality sCT even with narrow-FOV truncated CBCT as an input.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/s24237460/s1, Figure S1: Dataset. Figure S2: Slice-wise SSIM values before (rCBCT vs. vCT) and after (sCT vs. vCT) CycleGAN for both Res-Net (left) and U-Net (right) architectures. Median values are also reported. Figure S3: Distribution of SSIM (top) and PSNR (bottom) values before (vCT vs. rCBCT) and after (vCT vs. sCT) CycleGAN, for both architectures (Res-Net, left and U-Net, right). Median values are also reported.

Author Contributions

Conceptualization, M.P. and A.P.; methodology, M.P. and A.P.; software, M.P. and S.T.; validation, C.P. and G.B.; formal analysis, M.P., S.T. and A.P.; investigation, M.P. and A.P.; resources, E.O. and G.B.; data curation, M.P.; writing—original draft preparation, M.P. and A.P.; writing—review and editing, all authors; visualisation, all authors; supervision, S.V., M.C., C.P., E.O. and G.B.; project administration, M.P., A.P. and G.B.; funding acquisition, not applicable. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the local Ethical Committee “Comitato Etico Territoriale Lombardia 6” under notification number 0052020/23, prot. CNAO OSS 57 2023.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Restrictions apply to the dataset.

Conflicts of Interest

The authors declare no conflicts of interest.

List of Abbreviations

| Abbreviation | Meaning |
|---|---|
| AI | Artificial intelligence |
| APT | Adaptive PT |
| c | Contrast |
| C | Carbon ion |
| CBCT | Cone beam CT |
| CNAO | Centro Nazionale di Adroterapia Oncologica (National Centre for Oncological Hadrontherapy) |
| CT | Computed tomography |
| DCBCT | CBCT discriminator |
| DCT | CT discriminator |
| DCNN | Deep convolutional neural network |
| DFT | Discrete Fourier transform |
| DIR | Deformable image registration |
| DL | Deep learning |
| DPR | Dose difference pass rate |
| DVH | Dose–volume histogram |
| E | Expected value |
| F | Female |
| fCBCT | Filtered CBCT |
| FDK | Feldkamp–Davis–Kress (algorithm) |
| FF | Full-fan (CBCT) |
| FOV | Field of view |
| Fx | Fraction |
| GAN | Generative adversarial network |
| GPR | Gamma pass rate |
| GPU | Graphics processing unit |
| Gy | Gray |
| HF | Half-fan (CBCT) |
| HU | Hounsfield unit |
| iCBCT | Input CBCT |
| l | Luminance |
| L | Full objective |
| LIDENTITY | Identity loss |
| LGAN-F | Adversarial loss (F: CBCT generator) |
| LGAN-G | Adversarial loss (G: CT generator) |
| M | Male |
| MAE | Mean absolute error |
| MRI | Magnetic resonance imaging |
| MSE | Mean square error |
| OAR | Organ at risk |
| P | Proton |
| pCT | Planning CT |
| PSNR | Peak signal-to-noise ratio |
| PT | Particle therapy |
| PT_ | Patient |
| RBE | Relative biological effectiveness |
| rCBCT | CBCT registered (on CT) |
| RMSE | Root mean square error |
| RR | Rigid registration |
| s | Structure |
| SSIM | Structural similarity index metric |
| sCT | Synthetic CT |
| TPS | Treatment planning system |
| TR | Training |
| TS | Testing |
| vCT | Verification CT |
| X | Discarded |
| Yo | Years old |

References

- Stock, M.; Georg, D.; Ableitinger, A.; Zechner, A.; Utz, A.; Mumot, M.; Kragl, G.; Hopfgartner, J.; Gora, J.; Böhlen, T.; et al. The technological basis for adaptive ion beam therapy at MedAustron: Status and outlook. Z. Med. Phys. 2018, 28, 196–210.

- Thummerer, A.; Seller Oria, C.; Zaffino, P.; Meijers, A.; Guterres Marmitt, G.; Wijsman, R.; Seco, J.; Langendijk, J.A.; Knopf, A.C.; Spadea, M.F.; et al. Clinical suitability of deep learning based synthetic CTs for adaptive proton therapy of lung cancer. Med. Phys. 2021, 48, 7673–7684.

- Sheikh, K.; Liu, D.; Li, H.; Acharya, S.; Ladra, M.M.; Hrinivich, W.T. Dosimetric evaluation of cone-beam CT-based synthetic CTs in pediatric patients undergoing intensity-modulated proton therapy. J. Appl. Clin. Med. Phys. 2022, 23, e13604.

- Taasti, V.T.; Hattu, D.; Peeters, S.; van der Salm, A.; van Loon, J.; de Ruysscher, D.; Nilsson, R.; Andersson, S.; Engwall, E.; Unipan, M.; et al. Clinical evaluation of synthetic computed tomography methods in adaptive proton therapy of lung cancer patients. Phys. Imaging Radiat. Oncol. 2023, 27, 100459.

- Arai, K.; Kadoya, N.; Kato, T.; Endo, H.; Komori, S.; Abe, Y.; Nakamura, T.; Wada, H.; Kikuchi, Y.; Takai, Y.; et al. Feasibility of CBCT-based proton dose calculation using a histogram-matching algorithm in proton beam therapy. Phys. Med. 2017, 33, 68–76.

- La Fauci, F.; Augugliaro, M.; Mazzola, G.C.; Comi, S.; Pepa, M.; Zaffaroni, M.; Vincini, M.G.; Corrao, G.; Mistretta, F.A.; Luzzago, S.; et al. Dosimetric Evaluation of the Inter-Fraction Motion of Organs at Risk in SBRT for Nodal Oligometastatic Prostate Cancer. Appl. Sci. 2022, 12, 10949.

- Rosa, C.; Caravatta, L.; Di Tommaso, M.; Fasciolo, D.; Gasparini, L.; Di Guglielmo, F.C.; Augurio, A.; Vinciguerra, A.; Vecchi, C.; Genovesi, D. Cone-beam computed tomography for organ motion evaluation in locally advanced rectal cancer patients. Radiol. Med. 2021, 126, 147–154.

- Szmul, A.; Taylor, S.; Lim, P.; Cantwell, J.; Moreira, I.; Zhang, Y.; D’Souza, D.; Moinuddin, S.; Gaze, M.N.; Gains, J.; et al. Deep learning based synthetic CT from cone beam CT generation for abdominal paediatric radiotherapy. Phys. Med. Biol. 2023, 68, 105006.

- Taylor, S.; Lim, P.; Cantwell, J.; D’Souza, D.; Moinuddin, S.; Chang, Y.C.; Gaze, M.N.; Gains, J.; Veiga, C. Image guidance and interfractional anatomical variation in paediatric abdominal radiotherapy. Br. J. Radiol. 2023, 96, 20230058.

- Lalonde, A.; Bobić, M.; Winey, B.; Verburg, J.; Sharp, G.C.; Paganetti, H. Anatomic changes in head and neck intensity-modulated proton therapy: Comparison between robust optimization and online adaptation. Radiother. Oncol. 2021, 159, 39–47.

- Thummerer, A.; de Jong, B.A.; Zaffino, P.; Meijers, A.; Marmitt, G.G.; Seco, J.; Steenbakkers, R.J.H.M.; Langendijk, J.A.; Both, S.; Spadea, M.F.; et al. Comparison of the suitability of CBCT- and MR-based synthetic CTs for daily adaptive proton therapy in head and neck patients. Phys. Med. Biol. 2020, 65, 235036.

- Yao, W.; Zhang, B.; Han, D.; Polf, J.; Vedam, S.; Lasio, G.; Yi, B. Use of CBCT plus plan robustness for reducing QACT frequency in intensity-modulated proton therapy: Head-and-neck cases. Med. Phys. 2022, 49, 6794–6801.

- Dzierma, Y.; Mikulla, K.; Richter, P.; Bell, K.; Melchior, P.; Nuesken, F.; Rübe, C. Imaging dose and secondary cancer risk in image-guided radiotherapy of pediatric patients. Radiat. Oncol. 2018, 13, 168.

- Pham, T.T.; Whelan, B.; Oborn, B.M.; Delaney, G.P.; Vinod, S.; Brighi, C.; Barton, M.; Keall, P. Magnetic resonance imaging (MRI) guided proton therapy: A review of the clinical challenges, potential benefits and pathway to implementation. Radiother. Oncol. 2022, 170, 37–47.

- Stanforth, A.; Lin, L.; Beitler, J.J.; Janopaul-Naylor, J.R.; Chang, C.W.; Press, R.H.; Patel, S.A.; Zhao, J.; Eaton, B.; Schreibmann, E.E.; et al. Onboard cone-beam CT-based replan evaluation for head and neck proton therapy. J. Appl. Clin. Med. Phys. 2022, 23, e13550.

- Nesteruk, K.P.; Bobić, M.; Lalonde, A.; Winey, B.A.; Lomax, A.J.; Paganetti, H. CT-on-Rails Versus In-Room CBCT for Online Daily Adaptive Proton Therapy of Head-and-Neck Cancers. Cancers 2021, 13, 5991.

- Uh, J.; Wang, C.; Acharya, S.; Krasin, M.J.; Hua, C.H. Training a deep neural network coping with diversities in abdominal and pelvic images of children and young adults for CBCT-based adaptive proton therapy. Radiother. Oncol. 2021, 160, 250–258.

- Harms, J.; Lei, Y.; Wang, T.; McDonald, M.; Ghavidel, B.; Stokes, W.; Curran, W.J.; Zhou, J.; Liu, T.; Yang, X. Cone-beam CT-derived relative stopping power map generation via deep learning for proton radiotherapy. Med. Phys. 2020, 47, 4416–4427.

- Maspero, M.; Houweling, A.C.; Savenije, M.H.F.; van Heijst, T.C.F.; Verhoeff, J.J.C.; Kotte, A.N.T.J.; van den Berg, C.A.T. A single neural network for cone-beam computed tomography-based radiotherapy of head-and-neck, lung and breast cancer. Phys. Imaging Radiat. Oncol. 2020, 14, 24–31.

- Schulze, R.; Heil, U.; Gross, D.; Bruellmann, D.D.; Dranischnikow, E.; Schwanecke, U.; Schoemer, E. Artefacts in CBCT: A review. Dentomaxillofac Radiol. 2011, 40, 265–273.

- Neppl, S.; Kurz, C.; Köpl, D.; Yohannes, I.; Schneider, M.; Bondesson, D.; Rabe, M.; Belka, C.; Dietrich, O.; Landry, G.; et al. Measurement-based range evaluation for quality assurance of CBCT-based dose calculations in adaptive proton therapy. Med. Phys. 2021, 48, 4148–4159.

- Qiu, R.L.J.; Lei, Y.; Shelton, J.; Higgins, K.; Bradley, J.D.; Curran, W.J.; Liu, T.; Kesarwala, A.H.; Yang, X. Deep learning-based thoracic CBCT correction with histogram matching. Biomed. Phys. Eng. Express 2021, 7, 065040.

- Chang, Y.; Liang, Y.; Yang, B.; Qiu, J.; Pei, X.; Xu, X.G. Dosimetric comparison of deformable image registration and synthetic CT generation based on CBCT images for organs at risk in cervical cancer radiotherapy. Radiat. Oncol. 2023, 18, 3.

- Sun, H.; Fan, R.; Li, C.; Lu, Z.; Xie, K.; Ni, X.; Yang, J. Imaging Study of Pseudo-CT Synthesized from Cone-Beam CT Based on 3D CycleGAN in Radiotherapy. Front. Oncol. 2021, 11, 603844.

- Landry, G.; Nijhuis, R.; Dedes, G.; Handrack, J.; Thieke, C.; Janssens, G.; Orban de Xivry, J.; Reiner, M.; Kamp, F.; Wilkens, J.J.; et al. Investigating CT to CBCT image registration for head and neck proton therapy as a tool for daily dose recalculation. Med. Phys. 2015, 42, 1354–1366.

- Landry, G.; Dedes, G.; Zöllner, C.; Handrack, J.; Janssens, G.; Orban de Xivry, J.; Reiner, M.; Paganelli, C.; Riboldi, M.; Kamp, F.; et al. Phantom based evaluation of CT to CBCT image registration for proton therapy dose recalculation. Phys. Med. Biol. 2015, 60, 595–613.

- Kidar, H.S.; Azizi, H. Assessing the impact of choosing different deformable registration algorithms on cone-beam CT enhancement by histogram matching. Radiat. Oncol. 2018, 13, 217.

- Liu, B.; Lerma, F.A.; Wu, J.Z.; Yi, B.Y.; Yu, C. Tissue Density Mapping of Cone Beam CT Images for Accurate Dose Calculations. Int. J. Med. Phys. 2015, 4, 162–171.

- Landry, G.; Kurz, C.; Traverso, A. The role of artificial intelligence in radiotherapy clinical practice. BJR Open. 2023, 5, 20230030.

- Dayarathna, S.; Islam, K.T.; Uribe, S.; Yang, G.; Hayat, M.; Chen, Z. Deep learning based synthesis of MRI, CT and PET: Review and analysis. Med. Image Anal. 2024, 92, 103046.

- Bengio, Y.; Goodfellow, I.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2017; Volume 1.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

- Amyar, A.; Ruan, S.; Vera, P.; Decazes, P.; Modzelewski, R. RADIOGAN: Deep convolutional conditional generative adversarial network to generate PET images. In Proceedings of the 7th International Conference on Bioinformatics Research and Applications, Berlin, Germany, 13–15 September 2020; pp. 28–33.

- Gatys, L.A. A neural algorithm of artistic style. arXiv 2015, arXiv:1508.06576.

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

- Park, T.; Efros, A.A.; Zhang, R.; Zhu, J.Y. Contrastive learning for unpaired image-to-image translation. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020, Proceedings, Part. IX 16; Springer International Publishing: New York, NY, USA, 2020; pp. 319–345.

- Rusanov, B.; Hassan, G.M.; Reynolds, M.; Sabet, M.; Kendrick, J.; Rowshanfarzad, P.; Ebert, M. Deep learning methods for enhancing cone-beam CT image quality toward adaptive radiation therapy: A systematic review. Med. Phys. 2022, 49, 6019–6054.

- Deng, L.; Ji, Y.; Huang, S.; Yang, X.; Wang, J. Synthetic CT generation from CBCT using double-chain-CycleGAN. Comput. Biol. Med. 2023, 161, 106889.

- Yang, B.; Chang, Y.; Liang, Y.; Wang, Z.; Pei, X.; Xu, X.G.; Qiu, J. A Comparison Study Between CNN-Based Deformed Planning CT and CycleGAN-Based Synthetic CT Methods for Improving iCBCT Image Quality. Front. Oncol. 2022, 12, 896795.

- Liang, X.; Zhang, Z.; Niu, T.; Yu, S.; Wu, S.; Li, Z.; Zhang, H.; Xie, Y. Iterative image-domain ring artifact removal in cone-beam CT. Phys. Med. Biol. 2017, 62, 5276–5292.

- Liang, X.; Chen, L.; Nguyen, D.; Zhou, Z.; Gu, X.; Yang, M.; Wang, J.; Jiang, S. Generating synthesized computed tomography (CT) from cone-beam computed tomography (CBCT) using CycleGAN for adaptive radiation therapy. Phys. Med. Biol. 2019, 64, 125002.

- Rusanov, B.; Hassan, G.M.; Reynolds, M.; Sabet, M.; Rowshanfarzad, P.; Bucknell, N.; Gill, S.; Dass, J.; Ebert, M. Transformer CycleGAN with uncertainty estimation for CBCT based synthetic CT in adaptive radiotherapy. Phys. Med. Biol. 2024, 69, 035014.

- Rossi, S. The National Centre for Oncological Hadrontherapy (CNAO): Status and perspectives. Phys. Med. 2015, 31, 333–351.

- Pella, A.; Riboldi, M.; Tagaste, B.; Bianculli, D.; Desplanques, M.; Fontana, G.; Cerveri, P.; Seregni, M.; Fattori, G.; Orecchia, R.; et al. Commissioning and quality assurance of an integrated system for patient positioning and setup verification in particle therapy. Technol. Cancer Res. Treat. 2014, 13, 303–314.

- Fattori, G.; Riboldi, M.; Pella, A.; Peroni, M.; Cerveri, P.; Desplanques, M.; Fontana, G.; Tagaste, B.; Valvo, F.; Orecchia, R.; et al. Image guided particle therapy in CNAO room 2: Implementation and clinical validation. Phys. Med. 2015, 31, 9–15.

- Belotti, G.; Rossi, M.; Pella, A.; Cerveri, P.; Baroni, G. A new system for in-room image guidance in particle therapy at CNAO. Phys. Med. 2023, 114, 103162.

- Rossi, M.; Belotti, G.; Paganelli, C.; Pella, A.; Barcellini, A.; Cerveri, P.; Baroni, G. Image-based shading correction for narrow-FOV truncated pelvic CBCT with deep convolutional neural networks and transfer learning. Med. Phys. 2021, 48, 7112–7126.

- Yang, Y.; Zhang, D.; Yang, F.; Teng, M.; Du, Y.; Huang, K. Post-processing method for the removal of mixed ring artifacts in CT images. Opt. Express 2020, 28, 30362–30378.

- Huo, Q.; Li, J.; Lu, Y.; Yan, Z. Removing ring artifacts in CBCT images via L0 smoothing. Int. J. Imaging Syst. Technol. 2016, 26, 284–294.

- Chen, L.; Liang, X.; Shen, C.; Nguyen, D.; Jiang, S.; Wang, J. Synthetic CT generation from CBCT images via unsupervised deep learning. Phys. Med. Biol. 2021, 66, 115019.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Amyar, A.; Guo, R.; Cai, X.; Assana, S.; Chow, K.; Rodriguez, J.; Yankama, T.; Cirillo, J.; Pierce, P.; Goddu, B.; et al. Impact of deep learning architectures on accelerated cardiac T1 mapping using MyoMapNet. NMR Biomed. 2022, 35, e4794. [Google Scholar] [CrossRef] [PubMed] [PubMed Central]

- Sara, U.; Akter, M.; Uddin, M. Image Quality Assessment through FSIM, SSIM, MSE and PSNR—A Comparative Study. J. Comput. Commun. 2019, 7, 8–18. [Google Scholar] [CrossRef]

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612. [Google Scholar] [CrossRef] [PubMed]

- Kida, S.; Kaji, S.; Nawa, K.; Imae, T.; Nakamoto, T.; Ozaki, S.; Ohta, T.; Nozawa, Y.; Nakagawa, K. Visual enhancement of Cone-beam CT by use of CycleGAN. Med. Phys. 2020, 47, 998–1010. [Google Scholar] [CrossRef] [PubMed]

- Reiners, K.; Dagan, R.; Holtzman, A.; Bryant, C.; Andersson, S.; Nilsson, R.; Hong, L.; Johnson, P.; Zhang, Y. CBCT-Based Dose Monitoring and Adaptive Planning Triggers in Head and Neck PBS Proton Therapy. Cancers 2023, 15, 3881. [Google Scholar] [CrossRef] [PubMed] [PubMed Central]

- Liu, Y.; Lei, Y.; Wang, T.; Fu, Y.; Tang, X.; Curran, W.J.; Liu, T.; Patel, P.; Yang, X. CBCT-based synthetic CT generation using deep-attention CycleGAN for pancreatic adaptive radiotherapy. Med. Phys. 2020, 47, 2472–2483. [Google Scholar] [CrossRef] [PubMed] [PubMed Central]

- Thummerer, A.; Zaffino, P.; Meijers, A.; Marmitt, G.G.; Seco, J.; Steenbakkers, R.J.H.M.; Langendijk, J.A.; Both, S.; Spadea, M.F.; Knopf, A.C. Comparison of CBCT based synthetic CT methods suitable for proton dose calculations in adaptive proton therapy. Phys. Med. Biol. 2020, 65, 095002. [Google Scholar] [CrossRef] [PubMed]

- Ates, O.; Uh, J.; Pirlepesov, F.; Hua, C.H.; Merchant, T.E.; Krasin, M.J. Monitoring of Interfractional Proton Range Verification and Dosimetric Impact Based on Daily CBCT for Pediatric Patients with Pelvic Tumors. Cancers 2023, 15, 4200. [Google Scholar] [CrossRef] [PubMed] [PubMed Central]

- Parrella, G.; Vai, A.; Nakas, A.; Garau, N.; Meschini, G.; Camagni, F.; Molinelli, S.; Barcellini, A.; Pella, A.; Ciocca, M.; et al. Synthetic CT in Carbon Ion Radiotherapy of the Abdominal Site. Bioengineering 2023, 10, 250.

- Rossi, M.; Cerveri, P. Comparison of Supervised and Unsupervised Approaches for the Generation of Synthetic CT from Cone-Beam CT. Diagnostics 2021, 11, 1435.

- Giacometti, V.; Hounsell, A.R.; McGarry, C.K. A review of dose calculation approaches with cone beam CT in photon and proton therapy. Phys. Med. 2020, 76, 243–276.

- Thing, R.S.; Nilsson, R.; Andersson, S.; Berg, M.; Lund, M.D. Evaluation of CBCT based dose calculation in the thorax and pelvis using two generic algorithms. Phys. Med. 2022, 103, 157–165.

- Seller Oria, C.; Thummerer, A.; Free, J.; Langendijk, J.A.; Both, S.; Knopf, A.C.; Meijers, A. Range probing as a quality control tool for CBCT-based synthetic CTs: In vivo application for head and neck cancer patients. Med. Phys. 2021, 48, 4498–4505.

- Sibolt, P.; Andersson, L.M.; Calmels, L.; Sjöström, D.; Bjelkengren, U.; Geertsen, P.; Behrens, C.F. Clinical implementation of artificial intelligence-driven cone-beam computed tomography-guided online adaptive radiotherapy in the pelvic region. Phys. Imaging Radiat. Oncol. 2020, 17, 1–7.

- Kaushik, S.; Ödén, J.; Sharma, D.S.; Fredriksson, A.; Toma-Dasu, I. Generation and evaluation of anatomy-preserving virtual CT for online adaptive proton therapy. Med. Phys. 2024, 51, 1536–1546.

- Liu, H.; Schaal, D.; Curry, H.; Clark, R.; Magliari, A.; Kupelian, P.; Khuntia, D.; Beriwal, S. Review of cone beam computed tomography based online adaptive radiotherapy: Current trend and future direction. Radiat. Oncol. 2023, 18, 144.

- Chan, Y.C.I.; Li, M.; Thummerer, A.; Parodi, K.; Belka, C.; Kurz, C.; Landry, G. Minimum imaging dose for deep learning-based pelvic synthetic computed tomography generation from cone beam images. Phys. Imaging Radiat. Oncol. 2024, 30, 100569.

- Spadea, M.F.; Maspero, M.; Zaffino, P.; Seco, J. Deep learning based synthetic-CT generation in radiotherapy and PET: A review. Med. Phys. 2021, 48, 6537–6566.

- Trnkova, P.; Zhang, Y.; Toshito, T.; Heijmen, B.; Richter, C.; Aznar, M.C.; Albertini, F.; Bolsi, A.; Daartz, J.; Knopf, A.C.; et al. A survey of practice patterns for adaptive particle therapy for interfractional changes. Phys. Imaging Radiat. Oncol. 2023, 26, 100442.

- Tsai, P.; Tseng, Y.L.; Shen, B.; Ackerman, C.; Zhai, H.A.; Yu, F.; Simone, C.B., 2nd; Choi, J.I.; Lee, N.Y.; Kabarriti, R.; et al. The Applications and Pitfalls of Cone-Beam Computed Tomography-Based Synthetic Computed Tomography for Adaptive Evaluation in Pencil-Beam Scanning Proton Therapy. Cancers 2023, 15, 5101.

- Paganetti, H.; Botas, P.; Sharp, G.C.; Winey, B. Adaptive proton therapy. Phys. Med. Biol. 2021, 66, 22TR01.

- Nenoff, L.; Matter, M.; Charmillot, M.; Krier, S.; Uher, K.; Weber, D.C.; Lomax, A.J.; Albertini, F. Experimental validation of daily adaptive proton therapy. Phys. Med. Biol. 2021, 66, 205010.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).