Hybrid Space Calibrated 3D Network of Diffractive Hyperspectral Optical Imaging Sensor

Abstract

1. Introduction

2. Experimental Methods

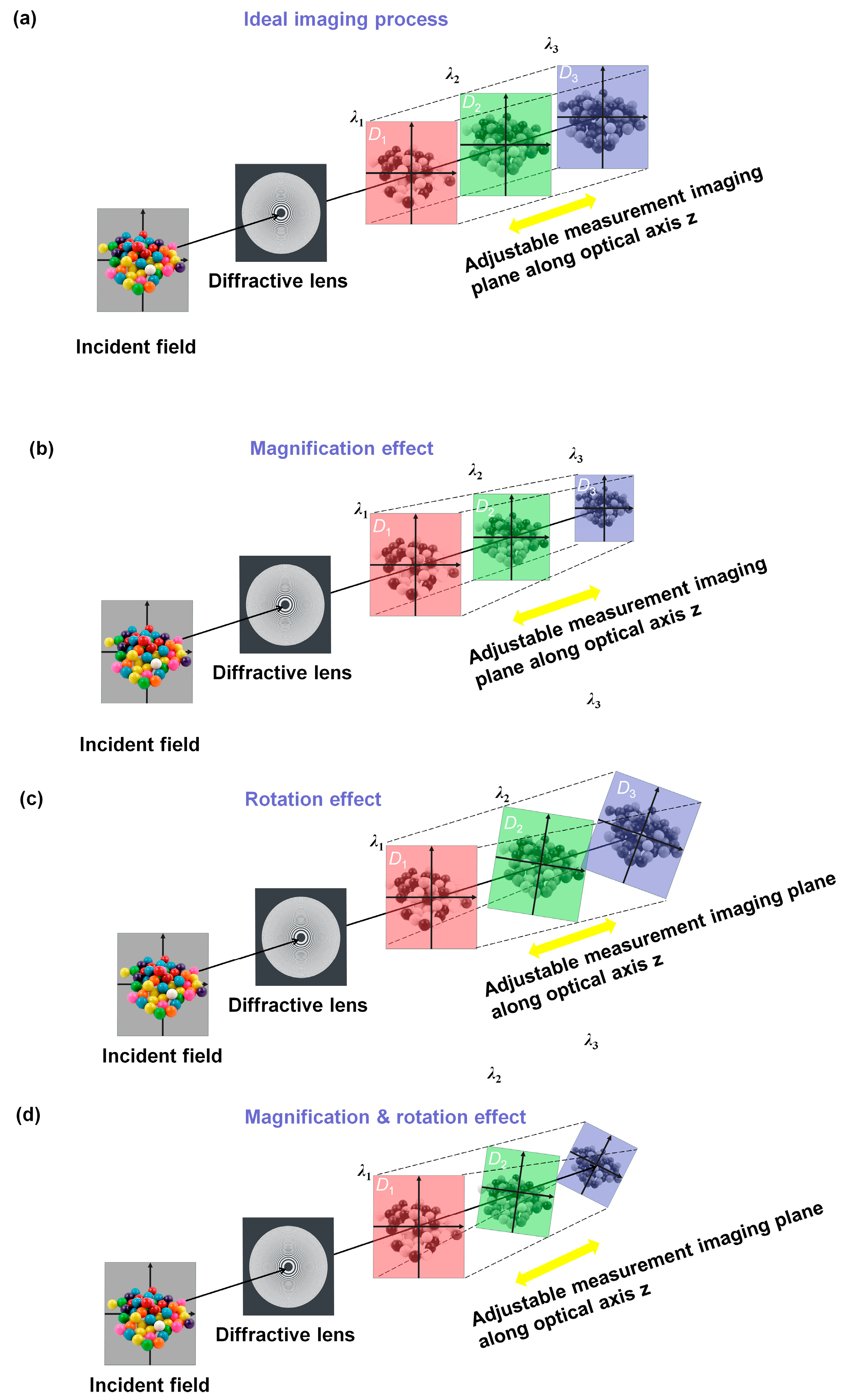

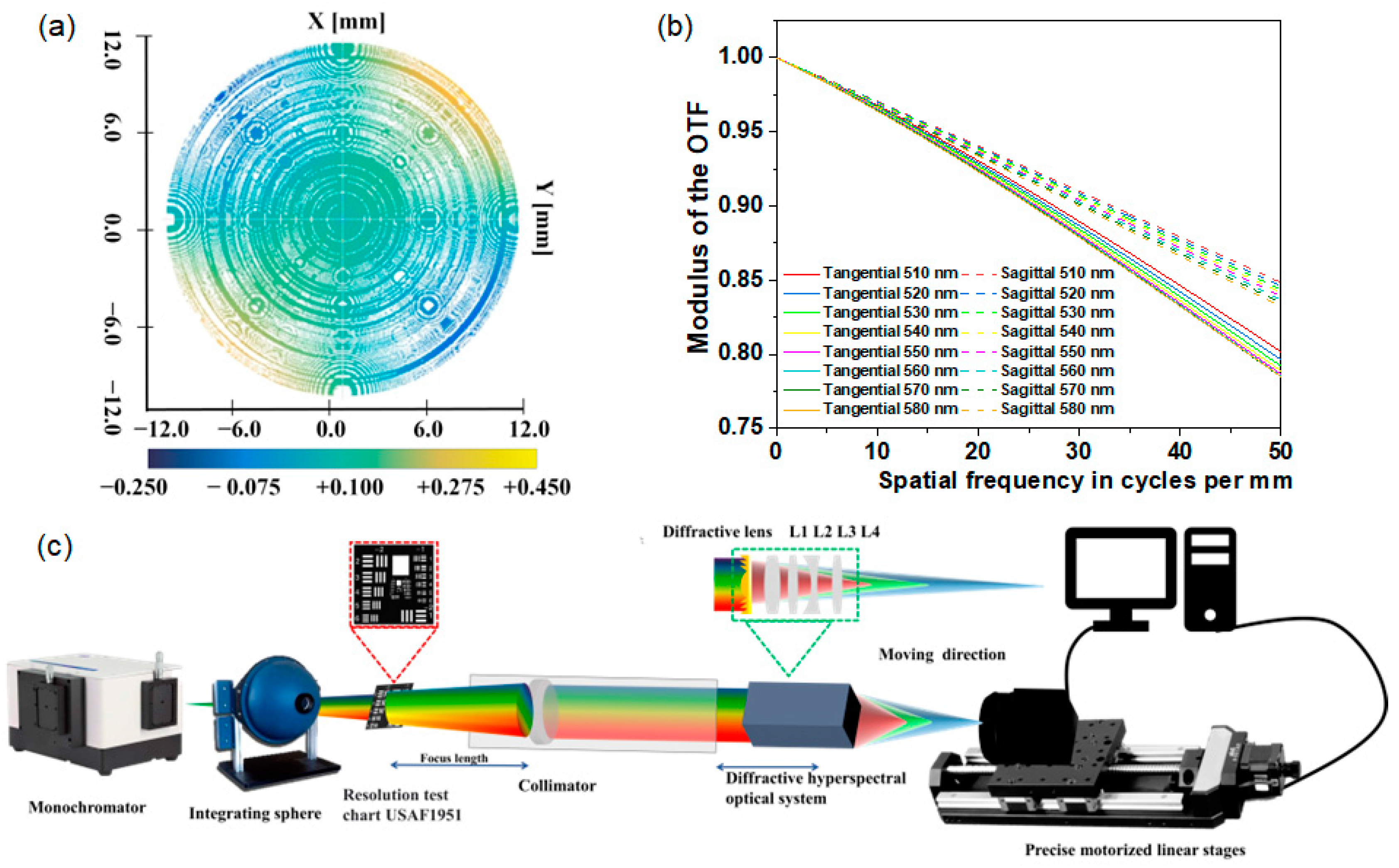

- Magnification and rotation calibration: The magnification variation α of diffractive multispectral imaging at the focal lengths of different wavelengths is calibrated by simulating the multispectral training data. In addition, the random rotation angle difference β introduced by the complex imaging process of the diffractive multispectral system (Figure 1c) is fed into the data preprocessing step to improve the robustness of the network.

- Intensity calibration: Intensity calibration is applied to the multispectral images at each wavelength so that the resulting spectral profile is close to that of the final recovered image. The intensity variation is caused by small vibrations of the light source and by transmission deviations between the wavelength channels.

- Denoising: Noise is an important factor in diffractive multispectral imaging and causes differences between simulated and measured data. It includes environmental noise, dark current, photon noise, readout noise, and analog-to-digital converter (ADC) noise. Google’s MAXIM model is used as a preprocessor to remove noise from the aliased images.

- 3D U-Net: The 3D U-Net is trained on the calibrated diffractive multispectral images to reconstruct multispectral images. The network consists of an encoding module and a decoding module based on the U-Net framework. The encoder comprises a down-sampling module and a feature extraction module, which transform the input 3D multispectral image into a multichannel 4D feature tensor. The decoder comprises an up-sampling module and an image reconstruction module, which reduce the multichannel 4D feature tensor back to a 3D multispectral image. Both the feature extraction modules and the image reconstruction modules are made up of a norm layer, a 3D convolution layer, a rectified linear unit (ReLU), and a simplified channel attention (SCA) layer. The 3D convolution layer captures 3D features, and the SCA layer adjusts the weights between adjacent spectral channels, so that the diffractive multispectral images can be reconstructed.
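
The calibration steps listed above can be illustrated with a short NumPy sketch. It is not the authors' implementation: the function names (`rescale_rotate`, `calibrate_intensity`, `preprocess`), the nearest-neighbour resampling, the rotation range `beta_max_deg`, and the choice to match each band's mean to a reference spectral profile are all assumptions made for illustration. The sketch applies a per-band magnification α and one random rotation β to a simulated cube of shape (bands, H, W), then rescales the band intensities.

```python
import numpy as np

def rescale_rotate(band, alpha, beta_deg):
    """Resample one 2D band with magnification `alpha` and rotation `beta_deg`
    about the image centre (nearest neighbour; hypothetical helper)."""
    h, w = band.shape
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    beta = np.deg2rad(beta_deg)
    yy, xx = np.mgrid[0:h, 0:w]
    dy, dx = yy - cy, xx - cx
    # Inverse mapping: for every output pixel, locate the source pixel.
    src_y = (np.cos(beta) * dy + np.sin(beta) * dx) / alpha + cy
    src_x = (-np.sin(beta) * dy + np.cos(beta) * dx) / alpha + cx
    iy = np.rint(src_y).astype(int)
    ix = np.rint(src_x).astype(int)
    valid = (iy >= 0) & (iy < h) & (ix >= 0) & (ix < w)
    out = np.zeros_like(band)          # pixels mapped from outside stay zero
    out[valid] = band[iy[valid], ix[valid]]
    return out

def calibrate_intensity(cube, reference_mean):
    """Scale each band so that its mean matches a reference spectral profile
    (assumed form of the intensity calibration)."""
    reference_mean = np.asarray(reference_mean, dtype=float)
    band_mean = cube.mean(axis=(1, 2), keepdims=True)
    gain = reference_mean[:, None, None] / np.maximum(band_mean, 1e-12)
    return cube * gain

def preprocess(cube, alphas, reference_mean, beta_max_deg=2.0, rng=None):
    """Apply per-band magnification, one random rotation, then intensity scaling."""
    rng = np.random.default_rng() if rng is None else rng
    beta = rng.uniform(-beta_max_deg, beta_max_deg)   # assumed rotation range
    warped = np.stack([rescale_rotate(b, a, beta) for b, a in zip(cube, alphas)])
    return calibrate_intensity(warped, reference_mean)
```

In this sketch, `alphas` would hold one magnification factor per wavelength channel, and drawing a new β for every training sample acts as a data augmentation that exposes the network to the rotation differences described above.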

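The layer sequence described for the feature extraction and image reconstruction modules (norm, 3D convolution, ReLU, SCA) can be sketched in plain NumPy. This is an illustration under stated assumptions rather than the network used in the paper: the norm is taken to be a normalisation over the channel axis, the convolution uses a 3×3×3 kernel with zero padding, and the SCA layer follows the commonly used form "global average pooling, then a pointwise channel mixing, then channel-wise multiplication". All function names and parameter shapes are hypothetical.

```python
import numpy as np

def channel_norm(feat, eps=1e-6):
    """Normalise across the channel axis of a (C, D, H, W) tensor (assumed norm)."""
    mu = feat.mean(axis=0, keepdims=True)
    var = feat.var(axis=0, keepdims=True)
    return (feat - mu) / np.sqrt(var + eps)

def conv3d_same(feat, kernel):
    """3D cross-correlation with zero padding.
    feat: (C_in, D, H, W); kernel: (C_out, C_in, 3, 3, 3)."""
    padded = np.pad(feat, ((0, 0), (1, 1), (1, 1), (1, 1)))
    windows = np.lib.stride_tricks.sliding_window_view(
        padded, (3, 3, 3), axis=(1, 2, 3))      # (C_in, D, H, W, 3, 3, 3)
    return np.einsum("cdhwijk,ocijk->odhw", windows, kernel)

def simplified_channel_attention(feat, weight, bias):
    """Re-weight channels by a linear map of their global averages.
    feat: (C, D, H, W); weight: (C, C); bias: (C,)."""
    pooled = feat.mean(axis=(1, 2, 3))          # global average pool -> (C,)
    scale = weight @ pooled + bias              # pointwise (1x1x1) channel mixing
    return feat * scale[:, None, None, None]

def feature_block(feat, kernel, sca_weight, sca_bias):
    """Norm -> 3D convolution -> ReLU -> SCA, in the order given in the text."""
    x = channel_norm(feat)
    x = conv3d_same(x, kernel)
    x = np.maximum(x, 0.0)                      # ReLU
    return simplified_channel_attention(x, sca_weight, sca_bias)
```

Here the depth axis D plays the role of the spectral dimension, so the 3×3×3 kernel mixes information from neighbouring wavelengths as well as neighbouring pixels. In practice such a block would be written in a deep learning framework with trainable parameters; the NumPy version only makes the data flow explicit.
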
3. Diffractive Multispectral Imaging System

4. Diffractive Multispectral Imaging Experimental Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Wavelength (nm) | PSNRmea (dB) | SSIMmea | PSNRcal (dB) | SSIMcal |
|---|---|---|---|---|
| 510.00 | 9.09 | 0.48 | 9.09 | 0.48 |
| 520.00 | 9.84 | 0.51 | 9.95 | 0.53 |
| 530.00 | 10.77 | 0.55 | 10.89 | 0.57 |
| 540.00 | 11.80 | 0.60 | 11.93 | 0.64 |
| 550.00 | 12.58 | 0.63 | 12.71 | 0.67 |
| 560.00 | 12.97 | 0.61 | 13.11 | 0.62 |
| 570.00 | 13.15 | 0.60 | 13.26 | 0.65 |
| 580.00 | 13.25 | 0.60 | 13.36 | 0.68 |
| Mean value | 11.68 | 0.57 | 11.79 | 0.61 |

| Wavelength (nm) | PSNRhyb (dB) | SSIMhyb | PSNRunr (dB) | SSIMunr |
|---|---|---|---|---|
| 510.00 | 4.03 | 0.44 | 8.90 | 0.54 |
| 520.00 | 7.87 | 0.47 | 9.64 | 0.58 |
| 530.00 | 8.66 | 0.52 | 10.44 | 0.63 |
| 540.00 | 9.08 | 0.53 | 11.19 | 0.65 |
| 550.00 | 9.08 | 0.62 | 11.85 | 0.65 |
| 560.00 | 8.70 | 0.60 | 12.41 | 0.64 |
| 570.00 | 8.19 | 0.57 | 12.84 | 0.65 |
| 580.00 | 7.97 | 0.54 | 13.00 | 0.65 |
| Mean value | 7.95 | 0.54 | 11.28 | 0.62 |
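
The tables above report PSNR and SSIM per wavelength together with a mean over the eight channels. As a rough illustration of how such per-band PSNR values and their mean can be obtained, the following sketch assumes image cubes of shape (bands, H, W) normalised to the range [0, 1], so that the peak value is 1.0. The function names and the normalisation are assumptions, not details taken from the paper. The last lines recompute the mean of the PSNRmea column from the tabulated values.

```python
import numpy as np

def psnr(reference, estimate, peak=1.0):
    """Peak signal-to-noise ratio in dB between two arrays of equal shape."""
    mse = np.mean((np.asarray(reference) - np.asarray(estimate)) ** 2)
    return float("inf") if mse == 0 else float(10.0 * np.log10(peak ** 2 / mse))

def per_band_psnr(reference_cube, estimate_cube, peak=1.0):
    """PSNR for each spectral band of two (bands, H, W) cubes."""
    return [psnr(r, e, peak) for r, e in zip(reference_cube, estimate_cube)]

# Per-wavelength PSNRmea values copied from the first table (510 nm to 580 nm).
psnr_mea = [9.09, 9.84, 10.77, 11.80, 12.58, 12.97, 13.15, 13.25]
mean_psnr_mea = float(np.mean(psnr_mea))
print(f"{mean_psnr_mea:.2f}")  # 11.68, matching the "Mean value" row
```

SSIM is more involved than PSNR because it compares local means, variances, and covariances in sliding windows, so in practice an existing library implementation is normally used rather than a hand-written one.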

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fan, H.; Li, C.; Gao, B.; Xu, H.; Chen, Y.; Zhang, X.; Li, X.; Yu, W. Hybrid Space Calibrated 3D Network of Diffractive Hyperspectral Optical Imaging Sensor. Sensors 2024, 24, 6903. https://doi.org/10.3390/s24216903