Abstract

In this paper, a deep-learning-based blind frame synchronization recognition algorithm is proposed to improve detection performance in non-cooperative communication systems. Current methods struggle to detect frames accurately under high bit error rates (BER). Our approach first applies flat-top interpolation to the binary data and converts the result into a series of grayscale images, enabling the use of image processing techniques. By incorporating a scaling factor, we then generate RGB images. Based on the matching radius, frame length, and frame synchronization code, RGB images with distinct stripe features are classified as positive samples for each category, while the remaining images are classified as negative samples. Finally, a neural network is trained on these sample sets to classify test data. Simulation results demonstrate that the proposed algorithm achieves a frame recognition probability of 100% when the BER is below 0.2. Even at a BER of 0.25, the recognition probability remains above 90%, a performance improvement of over 60% compared with traditional algorithms. This work addresses the shortcomings of existing methods under high error conditions, and the idea of converting sequences into RGB images provides a reliable solution for frame synchronization in challenging communication environments.

1. Introduction

In modern digital communication systems, frame synchronization is typically achieved by periodically inserting a predefined synchronization code into the binary data stream. Recognizing the synchronization code is a prerequisite for subsequent signal processing tasks such as error correction and information extraction. A cooperative receiver can easily locate the known synchronization code with a suitable algorithm [1,2]. In contrast, a non-cooperative receiver must first extract the synchronization code from intercepted signals with little prior information before it can recover the original binary data stream, which is considerably more complicated than in the cooperative scenario [3,4,5]. Frame synchronization recognition consists of determining the length of the synchronization code, the synchronization code itself, and the frame length, defined as the interval between adjacent synchronization codes.

Among the classical blind frame synchronization recognition algorithms [6,7,8,9,10,11,12,13], Wang et al. first used small-area detection to estimate the frame length [6]. Exploiting the characteristics of QPSK signals, Qin et al. proposed a soft-decision recognition algorithm [7] that recognizes synchronization codes better than hard decision. In 2017, He estimated the frame parameters by constructing a hierarchical model of equal frame lengths and computing ranks in small regions [8]. In 2019, Xu et al. proposed an improved frame synchronization recognition algorithm based on self-correlation, which achieves reasonable frame length estimation by introducing the peak-to-average ratio (PAR) [9]. In 2020, Shao and Lei used triple correlation filtering to identify the frame length after eliminating the redundant data of the filling matrix, calculated correlation values to extract the key fields, and finally extracted the synchronization code through dispersion analysis [10]. Chen et al. constructed matrices with varying numbers of columns and computed the first-order cumulant of these matrices. They set decision thresholds based on the frame structure to distinguish synchronization bit columns from data bit columns, thereby recognizing both the frame length and the synchronization code [12]. In 2023, Jin used high-order cumulants to detect burst signals in the time domain and then completed frame synchronization recognition by combining code domain information [13]. Some researchers have exploited the encoding features of signals to recognize frame synchronization [14,15,16]. Xia et al. achieved blind encoder identification and blind delay estimation by maximizing the average log-likelihood ratio [15]. In 2023, Feng et al. accomplished polar-coding-assisted blind frame synchronization using the soft information of frozen bits [16]. Wang et al. improved the pattern matching algorithm by removing the bad character table from the preprocessing phase and retaining only the good suffix table. In addition, because pattern matching is limited to exact matching, their algorithm introduced error tolerance, enabling fuzzy matching on bit stream data. It also modified the index shifting rules for matching, which helped avoid frame header loss caused by noise-induced bit errors and resulted in faster frame header detection and improved bit error rate performance [17]. In 2024, Wang et al. divided the bitstream evenly into multiple windows and performed a sliding XOR operation on the first two windows to obtain the Extended Synchronization Word (E-SW). They then used this E-SW in a sliding correlation with each remaining window, obtaining an E-SW for every window; together these form the E-SW set. Finally, statistical analysis of the E-SW set filtered out the codewords of the unknown synchronization word [18]. Lv et al. studied a joint frame synchronization and frequency offset estimation algorithm for tropospheric scatter communication that addresses multipath fading and the frequency offset between transmitter and receiver. They employed a partial correlation frequency domain capture algorithm based on the Fast Fourier Transform (FFT) to search for the maximum correlation value and the frequency offset index, achieving frame synchronization and frequency offset estimation simultaneously [19]. The aforementioned algorithms rely on good channel conditions and sufficient received data, which limits their applicability when the bit error rate is high and intercepted data are limited.
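Several of the baselines above, notably the first-order cumulant approach of [12], rest on one observation: when the stream is folded with the correct number of columns, each synchronization column has a mean near 0 or 1, while data columns hover around 0.5. The sketch below illustrates only that observation. The stream generator, the 0.3 deviation threshold, and all function names are hypothetical; this is not the decision rule of [12].

```python
import random

BARKER11 = [1, 1, 1, 0, 0, 0, 1, 0, 0, 1, 0]  # 11-bit Barker code as 0/1 bits

def make_noisy_stream(sync, L, frames, ber, seed=0):
    """Frames of length L (sync code + random data) through a binary symmetric channel."""
    rng = random.Random(seed)
    bits = []
    for _ in range(frames):
        bits += sync + [rng.randint(0, 1) for _ in range(L - len(sync))]
    # Flip each bit independently with probability `ber`.
    return [b ^ int(rng.random() < ber) for b in bits]

def column_means(bits, n):
    """Fold the stream into rows of n bits; return each column's mean (first-order cumulant)."""
    rows = len(bits) // n
    return [sum(bits[r * n + c] for r in range(rows)) / rows for c in range(n)]

def sync_like_columns(bits, n, threshold=0.3):
    """Columns whose mean deviates from 0.5 by more than a (hypothetical) threshold."""
    return [c for c, m in enumerate(column_means(bits, n)) if abs(m - 0.5) > threshold]

stream = make_noisy_stream(BARKER11, L=50, frames=200, ber=0.05)
print(sync_like_columns(stream, 50))  # folded at the true frame length
print(sync_like_columns(stream, 51))  # folded one column off
```

At the true frame length the 11 synchronization columns stand out; one column off, the synchronization bits are smeared across all columns and nothing exceeds the threshold. As the BER approaches 0.5 the synchronization column means also drift toward 0.5, which is why fixed thresholds become fragile.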

Convolutional Neural Networks (CNN) [20] are a type of feedforward neural network with a deep structure that includes convolutional computations. Parameter sharing of the convolutional kernels within the hidden layers and sparse connections between layers enable CNNs to learn features of data with a grid-like topology, such as image pixels and audio samples, at a smaller computational cost. This yields stable performance without additional feature engineering, making CNNs widely applicable in fields such as computer vision and natural language processing [21,22,23]. Some researchers have introduced deep learning and CNNs into frame synchronization recognition [24,25,26,27]. Li used one-dimensional data labeled with one-hot encoding to train a neural network and achieved synchronization recognition for frames of equal length [24]. Shao and Lei transformed digital sequences into images whose column number equals the frame length and then trained a neural network to recognize the frame length [25]. Shen combined a recurrent neural network (RNN) with a sliding window and periodic sampling, and implemented frame synchronization recognition under Rayleigh fading channel conditions [26]. The preprocessing in [24,26] does not convert the sequence into an image and therefore does not adequately highlight the sample features. Moreover, the positive samples in [25] are limited to the case where the matrix column number is strictly equal to the frame length. Kojima et al. proposed a CNN-based symbol timing synchronization method for pilotless OFDM systems under severe multipath fading channels. They used a supervised CNN to classify signals based on spectrograms, which contain power density information in both the time and frequency domains, and their simulation results show that this method provides more accurate synchronization [27].

The Transformer is a deep learning architecture designed for sequence data. It relies primarily on a self-attention mechanism to capture long-range dependencies and processes its input in parallel, which improves training efficiency. When applied to images, it treats an image as a sequence of patches and updates each patch by learning the global relationships across the whole sequence. It has been widely applied in fields such as image understanding, object detection, and semantic segmentation [28,29]. When frame-synchronized sequences are converted into matrices, the frame synchronization codes scattered across the frames are rearranged spatially, forming local stripe features. The width and inclination of the stripes in the resulting images depend on the number of columns of the matrix and the parameters of the frame synchronization sequence. Because CNNs are particularly adept at detecting simple local features such as edges and lines, we base our research on the CNN architecture.

Motivated by the stripes that appear when binary data containing frame synchronization codes are converted into images, and by the powerful image recognition capability of CNNs, in this paper we propose a deep-learning-based blind frame synchronization recognition method that combines classical algorithms with deep learning. The binary data are first subjected to flat-top interpolation [30]. They are then transformed into a series of grayscale images, which are combined with scaling factors to generate RGB images. We use these image samples to train a convolutional neural network and employ the trained network to recognize the frame synchronization code in new binary sequences. Simulation results show that, compared with existing algorithms, the proposed algorithm achieves better recognition performance at higher bit error rates.

2. Algorithm Description

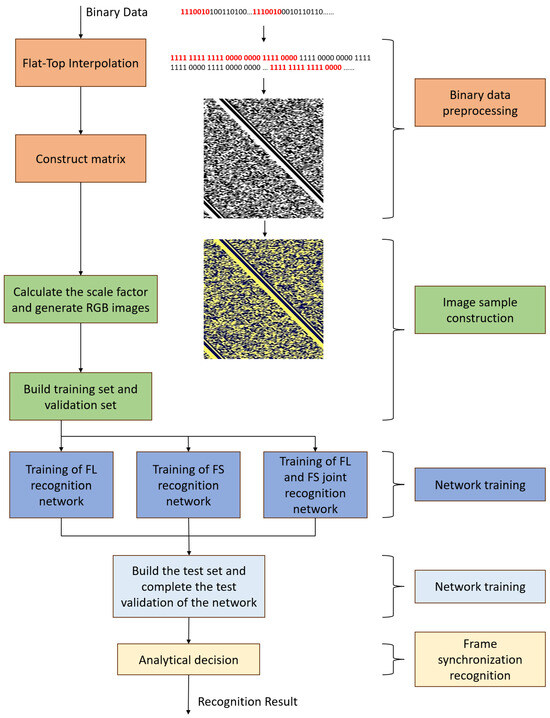

The workflow of the deep-learning-based blind frame synchronization recognition algorithm is illustrated in Figure 1. It consists of binary data preprocessing, image sample construction, recognition network training, network testing, and frame synchronization recognition. First, in the preprocessing stage, we apply flat-top interpolation to the binary data, construct a series of matrices whose numbers of columns are close to the interpolated frame length, and transform them into grayscale images. Because the synchronization codes in adjacent rows are staggered by only a small distance, the grayscale images show obvious stripes. Each grayscale image is then combined with a scaling factor determined by the matrix size to construct an RGB image, which forms one sample of the dataset. In the training stage, we label the RGB images by frame length, frame synchronization code type, or both, and train a separate recognition network for each labeling. When a binary data sequence with unknown parameters is received, we use it to construct multiple RGB images of different sizes and feed them into the trained network model, which returns a classification of the frame parameters for each image. Finally, we make the recognition decision by jointly considering the predicted categories, their counts, and their recognition probabilities.

Figure 1.

Frame synchronization code blind recognition algorithm flow based on deep learning. FL refers to Frame length and FS refers to Frame synchronization code.

2.1. Binary Data Preprocessing

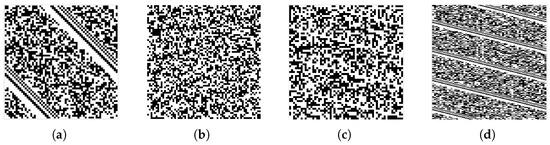

The frame length is defined as $L = S + K$, where $S$ is the length of the synchronization code and $K$ is the length of the data in one frame. We reshape a binary data sequence row by row into a matrix H of size $m \times n$. When $n = L$, the synchronization code of every frame appears in the same columns of the matrix, so the columns of H divide into synchronization code columns and data bit columns. If the matrix is then converted into a binary grayscale image, distinct vertical stripes appear at the positions of the synchronization code columns, as shown in Figure 2. The same holds when $n$ is an integer multiple of $L$.

Figure 2.

Vertical stripes when the number of matrix columns is equal to the frame length or an integer multiple of the frame length. (a) The number of columns equals the frame length. (b) The number of columns is an integer multiple of the frame length.
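To make the $n = L$ and $n = 2L$ cases concrete, here is a minimal sketch that builds an error-free frame stream, reshapes it row by row, and lists the columns that are constant down the whole matrix, i.e. the vertical stripes of Figure 2. It assumes a Barker-11 synchronization code and $K = 39$ data bits ($L = 50$), values chosen only for illustration; the helper names are hypothetical.

```python
import random

BARKER11 = [1, 1, 1, 0, 0, 0, 1, 0, 0, 1, 0]

def make_stream(sync, K, frames, seed=0):
    """Error-free stream of frames, each the sync code followed by K random data bits."""
    rng = random.Random(seed)
    bits = []
    for _ in range(frames):
        bits += sync + [rng.randint(0, 1) for _ in range(K)]
    return bits

def reshape(bits, n):
    """Row-major m x n matrix H; an incomplete last row is dropped."""
    m = len(bits) // n
    return [bits[r * n:(r + 1) * n] for r in range(m)]

def constant_columns(H):
    """Indices of columns in which every entry is identical (vertical stripes)."""
    return [c for c in range(len(H[0])) if len({row[c] for row in H}) == 1]

bits = make_stream(BARKER11, K=39, frames=100)   # S = 11, K = 39, so L = 50
print(constant_columns(reshape(bits, 50)))        # n = L: columns 0..10
print(constant_columns(reshape(bits, 100)))       # n = 2L: columns 0..10 and 50..60
```

Random data columns could in principle be constant by chance, but with 50 or more rows that probability is negligible.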

When $n$ is close to but not equal to $L$, the synchronization bits of successive frames no longer fall in the same column. Figure 3a shows the case $n < L$: each row of the matrix cannot accommodate a complete frame, so the synchronization bits of the next frame shift $L - n$ columns to the right in the following row, eventually forming oblique stripes with a negative slope. The case $n > L$ is similar, except that the synchronization bits shift $n - L$ columns to the left per row and the slope is positive. As $|n - L|$ increases, the stripes become extremely narrow, as shown in Figure 3c. To enhance the stripe features of the images, we apply flat-top interpolation with an interpolation factor of 4 to the original binary data stream. An image sample reshaped from the interpolated data is shown in Figure 3d; its more pronounced stripes facilitate subsequent processing. The data preprocessing thus consists of flat-top interpolation followed by reshaping of the interpolated data.

Figure 3.

Images when the number of matrix columns is not equal to the frame length. (a) The number of columns is close to the frame length; (b) The number of columns differs greatly from frame length; (c) Image with faint stripes visible; (d) Images generated from a sequence using flat-topped interpolation.
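The stripe geometry can be checked with index arithmetic: the frame starting at bit $jL$ lands in row $\lfloor jL/n \rfloor$ and column $jL \bmod n$. The sketch below assumes that flat-top interpolation amounts to repeating every bit `factor` times (a sample-and-hold), and uses an interpolated frame length of 200 (i.e. $4 \times 50$) purely as an example; the helper names are hypothetical.

```python
def flat_top(bits, factor=4):
    """Flat-top (sample-and-hold) interpolation: repeat every bit `factor` times."""
    return [b for b in bits for _ in range(factor)]

def sync_start_cells(L, n, frames):
    """(row, column) of each frame's first sync bit when a stream with
    frame length L is reshaped row by row into n columns."""
    return [divmod(j * L, n) for j in range(frames)]

print(flat_top([1, 0, 1], 4))          # [1, 1, 1, 1, 0, 0, 0, 0, 1, 1, 1, 1]
print(sync_start_cells(200, 196, 4))   # [(0, 0), (1, 4), (2, 8), (3, 12)]
print(sync_start_cells(200, 204, 4))   # [(0, 0), (0, 200), (1, 196), (2, 192)]
```

For $n < L$ the start moves right by $L - n = 4$ columns per row (negative slope when rows are drawn top to bottom); for $n > L$ it moves left by $n - L = 4$ per row, and a row can contain the start of more than one frame, as row 0 does here.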

When $n$ and $L$ differ greatly, as in Figure 3b, the black and white pixels appear randomly distributed and no stripes are visible. These two cases can be formalized as follows.

Definition 1.

Let $\tilde{L} = 4L$ denote the interpolated frame length. If $n$ is equal to or close to $\tilde{L}$, satisfying

$$|n - \tilde{L}| \le d, \tag{1}$$

then we say that $n$ matches $\tilde{L}$, where $d$ is the matching radius. In this case, the sample shows obvious stripes. If Equation (1) is not satisfied, we say that $n$ mismatches $\tilde{L}$, and no stripes appear in the sample.

2.2. Image Sample Construction

As defined in Section 2.1, when $n$ matches the interpolated frame length $\tilde{L}$, the constructed images are used as positive samples; when $n$ mismatches $\tilde{L}$, the resulting images are used as negative samples. The negative samples also include images constructed from randomly generated binary sequences to enhance the generalization ability of the network model.
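The labeling rule can be written as a few lines of code. This is a sketch under stated assumptions: the interpolation factor is 4, the categories are the physical frame lengths used later in Section 3, and the matching radius `d = 5` is a hypothetical value; `label_for` is an illustrative helper, not part of the original implementation.

```python
def label_for(n, frame_lengths, d, factor=4, from_random_sequence=False):
    """Label an image built from a matrix with n columns.

    Returns the category L whose interpolated length factor * L matches n,
    i.e. |n - factor * L| <= d (Equation (1)); otherwise "neg".
    Images built from purely random sequences are always negative.
    If d were large enough for windows to overlap, the first match would win.
    """
    if from_random_sequence:
        return "neg"
    for L in frame_lengths:
        if abs(n - factor * L) <= d:
            return L
    return "neg"

categories = [50, 60, 70, 80, 90]
d = 5  # hypothetical matching radius
print([label_for(n, categories, d) for n in (196, 203, 210, 238, 360)])
# [50, 50, 'neg', 60, 90]
```

With categories spaced 10 apart, the interpolated lengths are 40 apart, so any $d < 20$ keeps the matching windows disjoint.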

The neural network requires input images of a fixed size, so the grayscale images must be resized before being fed into the network. In principle, the interpolated frame length $\tilde{L}$ can be obtained from the slope of the stripes together with the number of columns $n$. However, image resizing discards $n$. To compensate for this information loss, we supplement the grayscale image with an additional parameter and use RGB images as the input of the neural network. Both the R and G channels are filled with the grayscale image, while every pixel of the B channel is filled with a scaling factor, given by

where with

where and respectively represent the minimum and the maximum in the training set.

Since the data matrix is built from a binary sequence, whose pixel values lie in {0, 1}, the scaling factor must also be mapped to [0, 1] using the following formula:

Resizing with bilinear interpolation stretches the pixel values of the R and G channels, which blurs or distorts the image, whereas the B channel is constant and therefore remains unchanged under bilinear interpolation. As a result, the frame length feature carried by the scaling factor is preserved.
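The following sketch shows why the B channel survives resizing: bilinear interpolation forms convex combinations of neighboring pixels, so a constant channel stays constant while the binary R and G channels acquire intermediate values. Several assumptions apply. The exact forms of Equations (2)–(4) are replaced by a hypothetical min-max normalization of $n$ over [160, 400]; the 224 × 224 target is assumed because it is the usual ResNet50 input size; `bilinear_resize`, `to_rgb`, and `b_value_minmax` are illustrative helpers, not library functions.

```python
import numpy as np

def bilinear_resize(img, out_h, out_w):
    """Resize a 2-D float array by bilinear interpolation (corner-aligned sampling grid)."""
    in_h, in_w = img.shape
    ys = np.linspace(0, in_h - 1, out_h)
    xs = np.linspace(0, in_w - 1, out_w)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, in_h - 1)
    x1 = np.minimum(x0 + 1, in_w - 1)
    wy = (ys - y0)[:, None]  # vertical weights, shape (out_h, 1)
    wx = (xs - x0)[None, :]  # horizontal weights, shape (1, out_w)
    top = img[np.ix_(y0, x0)] * (1 - wx) + img[np.ix_(y0, x1)] * wx
    bottom = img[np.ix_(y1, x0)] * (1 - wx) + img[np.ix_(y1, x1)] * wx
    return top * (1 - wy) + bottom * wy

def b_value_minmax(n, n_min, n_max):
    """Hypothetical stand-in for Equations (2)-(4): min-max normalize n to [0, 1]."""
    return (n - n_min) / (n_max - n_min)

def to_rgb(H, b_value):
    """R = G = binary matrix, B = constant normalized scaling value."""
    gray = np.asarray(H, dtype=float)
    return np.stack([gray, gray, np.full_like(gray, b_value)], axis=-1)

rng = np.random.default_rng(0)
H = rng.integers(0, 2, size=(40, 203))            # stand-in binary matrix, n = 203
rgb = to_rgb(H, b_value_minmax(203, 160, 400))
resized = np.stack([bilinear_resize(rgb[..., k], 224, 224) for k in range(3)], axis=-1)
```

Library resizers typically use half-pixel sampling rather than this corner-aligned grid, but the constant-channel property holds for any scheme whose weights are non-negative and sum to one.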

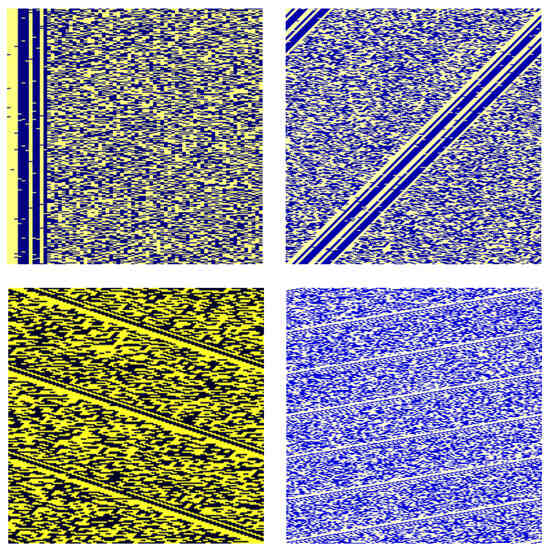

Some positive samples are shown in Figure 4. These images exhibit obvious stripes, and each row contains at most one complete frame; bit errors appear as scattered spots within the stripes. In addition, RGB images with different scaling factors differ in color.

Figure 4.

Some positive samples of the generated images.

2.3. Frame Synchronization Recognition Network Training

Let $\{L_1, L_2, \ldots, L_p\}$ denote the set of frame length categories, where $p$ is the total number of frame length categories to be recognized. For each category, we generated sufficient data frames according to the frame structure at each of several bit error rates, and then constructed positive and negative samples as described in Section 2.2. All values of $n$ that satisfy Equation (1) are used to generate positive samples, so that every type of stripe is included and the recognition ability of the network model is improved. Each RGB image among the positive samples is labeled with its corresponding frame length category. The positive and negative sample sets for each error rate are each split into training and validation sets in a 9:1 ratio. The training (validation) sets for all frame length categories and error rates together constitute the training (validation) set of the neural network.
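The per-group 9:1 split followed by pooling can be sketched as below. Integers stand in for RGB images, and the group keys (label, error rate) and sample counts are hypothetical; the point is that each (label, error rate) group is split on its own, so every group appears in both sets in the same proportion.

```python
import random

def split_9_1(samples, seed):
    """Shuffle a copy of `samples` and split it 9:1 into (train, validation)."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    cut = len(shuffled) * 9 // 10
    return shuffled[:cut], shuffled[cut:]

def build_train_val(groups, seed=0):
    """groups maps (label, ber) -> list of samples for that label and bit error rate.
    Each group is split on its own, then all pieces are pooled."""
    train, val = [], []
    for i, ((label, _ber), samples) in enumerate(groups.items()):
        t, v = split_9_1(samples, seed + i)
        train += [(s, label) for s in t]
        val += [(s, label) for s in v]
    return train, val

# Placeholder integers stand in for images; labels and error rates are hypothetical.
groups = {
    (50, 0.1): list(range(100)),
    (50, 0.2): list(range(100, 200)),
    ("neg", 0.1): list(range(200, 250)),
}
train, val = build_train_val(groups)
print(len(train), len(val))  # 225 25
```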

A CNN typically consists of multiple layers, each of which applies operations such as convolution and pooling to its input; together these layers extract the image features. The output of an image after passing through the convolutional layers can be written as

Considering the wide application of ResNet and its many variants in image classification, we use the ResNet50 network in our recognition algorithm. ResNet50 [31] introduces residual blocks, which alleviate the vanishing and exploding gradient problems in training deep neural networks. This allows much deeper networks to learn feature representations effectively, thereby improving model performance. Table 1 presents the structure of the ResNet50 model used in this paper.

Table 1.

ResNet50 Network Structure.

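The activation function and loss function used in the ResNet50 network are given in Equations (6) and (7):

$$f(x) = \max(0, x), \tag{6}$$

$$\mathcal{L} = -\sum_{i=1}^{C} y_i \log \hat{y}_i, \tag{7}$$

where Equation (6) is the ReLU function and Equation (7) is the multi-class cross-entropy loss, in which $C$ is the number of classes, $y_i$ is the one-hot ground-truth label, and $\hat{y}_i$ is the predicted softmax probability of class $i$.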
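A minimal scalar sketch of Equations (6) and (7), assuming the network's final layer produces raw logits that are turned into probabilities by a softmax. With a one-hot target, the sum in Equation (7) collapses to the single term of the true class.

```python
import math

def relu(x):
    """Equation (6): f(x) = max(0, x)."""
    return max(0.0, x)

def softmax(logits):
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, label):
    """Equation (7) with a one-hot target: -log of the true class probability."""
    return -math.log(softmax(logits)[label])

print(relu(-2.0), relu(3.0))                   # 0.0 3.0
print(cross_entropy([0.0, 0.0, 0.0, 0.0], 1))  # log(4), about 1.386
```

Uniform logits over four classes give the "no information" loss $\log 4$; confident correct predictions drive the loss toward 0.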

We feed the training set into the neural network model, which iteratively updates the network parameters with the stochastic gradient descent algorithm. Training continues until the cross-entropy loss converges, yielding a well-trained model for frame length recognition.
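ResNet50 is far too large for a short listing, so the sketch below illustrates a single SGD update with a linear softmax classifier standing in for the network, which is an assumption for illustration only. It uses the standard result that the gradient of softmax plus cross-entropy with respect to logit $c$ is $p_c - y_c$; the weights, input, and learning rate are hypothetical.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def loss(W, x, label):
    """Cross-entropy of a linear softmax classifier whose weight rows are W."""
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in W]
    return -math.log(softmax(logits)[label])

def sgd_step(W, x, label, lr):
    """One stochastic gradient descent update on a single sample.
    dLoss/dlogit_c = p_c - y_c and dlogit_c/dW[c][k] = x[k]."""
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in W]
    p = softmax(logits)
    for c, row in enumerate(W):
        g = p[c] - (1.0 if c == label else 0.0)
        for k in range(len(row)):
            row[k] -= lr * g * x[k]
    return W

W = [[0.0, 0.0] for _ in range(3)]   # 3 classes, 2 input features
x, label = [1.0, 0.5], 2
before = loss(W, x, label)           # log(3) for all-zero weights
sgd_step(W, x, label, lr=0.5)
after = loss(W, x, label)
print(before > after)                # True
```

In practice the update is applied to mini-batches and repeated over many epochs until the loss stops decreasing.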

3. Test and Analysis

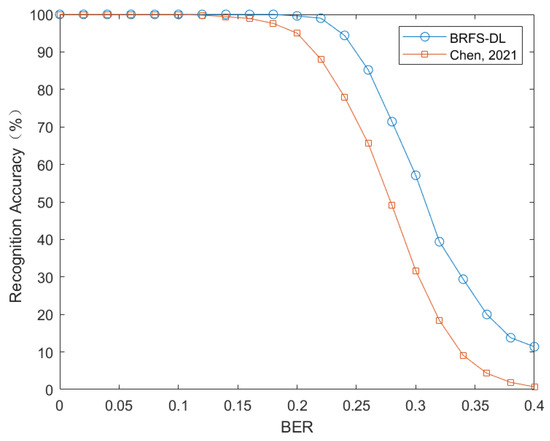

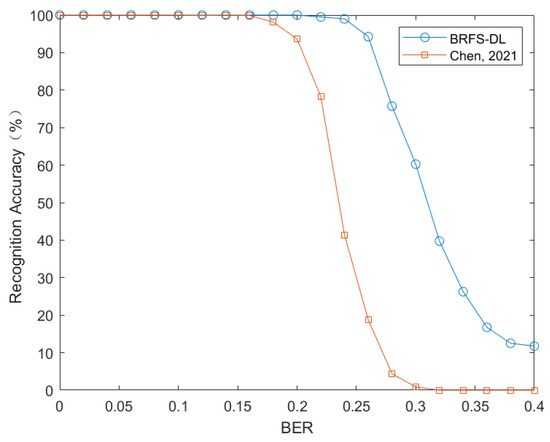

To evaluate the ability of the blind recognition algorithm for frame synchronization based on deep learning (BRFS-DL) to recognize frame lengths, the dataset is configured with five physical frame lengths, {50, 60, 70, 80, 90}, and every frame length uses the same 11-bit Barker code as the synchronization code. When constructing the matrix H, the matching radius d is fixed and the starting position of the extracted data sequence is chosen at random. Considering the interpolation factor of 4, the search range of n is set to [160, 400]. The simulation results are shown in Figure 5.

Figure 5.

Comparison of frame length recognition performance between BRFS-DL and reference [12] (Chen, 2021).
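A sketch of how one intercepted test sequence for this experiment might be generated: frames carrying the 11-bit Barker code, a random starting position, a binary symmetric channel, and flat-top interpolation by 4. The excerpt length `n_bits = 2000`, the seed, and the helper name are hypothetical, and flat-top interpolation is again assumed to mean bit repetition.

```python
import random

BARKER11 = [1, 1, 1, 0, 0, 0, 1, 0, 0, 1, 0]
FRAME_LENGTHS = [50, 60, 70, 80, 90]
FACTOR = 4                              # interpolation factor
SEARCH_RANGE = range(160, 401)          # candidate column numbers n in [160, 400]

def intercepted_sequence(L, ber, n_bits, rng):
    """Excerpt of n_bits from a Barker-11 framed stream, starting at a random
    offset, passed through a binary symmetric channel and flat-top interpolated."""
    frames = n_bits // L + 2            # enough frames to cover any start offset
    stream = []
    for _ in range(frames):
        stream += BARKER11 + [rng.randint(0, 1) for _ in range(L - len(BARKER11))]
    start = rng.randrange(L)            # random starting position
    excerpt = stream[start:start + n_bits]
    noisy = [b ^ int(rng.random() < ber) for b in excerpt]
    return [b for b in noisy for _ in range(FACTOR)]

rng = random.Random(1)
seq = intercepted_sequence(L=70, ber=0.1, n_bits=2000, rng=rng)
print(len(seq))                                           # 8000
print(all(4 * L in SEARCH_RANGE for L in FRAME_LENGTHS))  # True
```

The interpolated frame lengths 200 to 360 sit well inside [160, 400], leaving room for the matching window on either side.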

When evaluating the ability of BRFS-DL to recognize frame synchronization codes, the data set is set up with four different synchronization codes with length of {7, 11, 13, 15}. Each category has the same frame length , the number of columns of the matrix H is set to . The simulation results are shown in Figure 6.

Figure 6.

Comparison of frame synchronization code recognition performance between BRFS-DL and reference [12] (Chen, 2021).

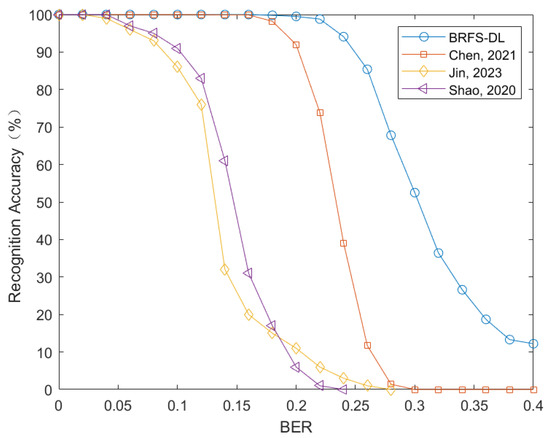

To verify whether BRFS-DL can simultaneously recognize the frame length and synchronization code, a data set is created with four different frame lengths {50, 60, 70, 80} and three different synchronization codes with length of {11, 13, 15}, totaling 12 different categories. A negative sample category is also included. The matrix H is constructed with and search range of is set to . The simulation results are shown in Figure 7.

Figure 7.

Comparison of joint recognition performance of frame length and frame synchronization code between BRFS-DL, references [12] (Chen, 2021), [13] (Jin, 2023) and [25] (Shao, 2020).

Using three network models trained on the above three datasets for recognition prediction, the error rate of the original binary data sequence is set to , , and each network was subjected to 200 random experiments for each error rate. In each experiment, the data sequence was first interpolated and then multiple matrices H were constructed using the same interpolated data sequence, with the number of columns of H increasing by within the range of n. Each matrix was transformed into an RGB image by combining its respective scaling factor, and fed into the trained network model for recognition. For every image, the recognition result includes a category and probability. We count the number of times () the sequence is recognized as being in each category () and the maximum probability () of being judged to be in that category, denoted by {}. If there is only one category satisfying , then we take () as the recognition result. If there are multiple categories satisfying , the category with the highest classification probability among them is chosen as the recognition result for this experiment.
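The post-selection step can be sketched as follows. The condition a category must satisfy to be considered is not recoverable from the text above, so this sketch substitutes a hypothetical minimum vote count `min_count`, and it also assumes that negative-class predictions cast no vote. The counting, the per-category maximum probability, and the tie-break by highest probability follow the description.

```python
from collections import defaultdict

def decide(predictions, min_count=2):
    """Aggregate per-image (category, probability) predictions into one decision."""
    counts = defaultdict(int)
    best_p = defaultdict(float)
    for category, prob in predictions:
        if category == "neg":            # assumption: negative predictions carry no vote
            continue
        counts[category] += 1
        best_p[category] = max(best_p[category], prob)
    eligible = [c for c in counts if counts[c] >= min_count]
    if not eligible:
        return None                      # no category passes the hypothetical condition
    # One eligible category is returned directly; several are resolved by max probability.
    return max(eligible, key=lambda c: best_p[c])

preds = [(50, 0.90), (50, 0.95), (60, 0.99), ("neg", 0.80), (60, 0.70)]
print(decide(preds))               # 60: both have two votes, 60 has the higher peak
print(decide(preds, min_count=3))  # None
```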

Next, we simulate our algorithm and compare it with the methods proposed in references [13,25] and with the method of reference [12], whose FPGA implementation is given in reference [32]. Reference [12] constructed matrices with varying column numbers and computed their first-order cumulants; decision thresholds set according to the frame structure distinguish synchronization bit columns from data bit columns, enabling recognition of the frame length and synchronization code. Reference [13] used high-order cumulants to detect burst signals in the time domain and then completed frame synchronization recognition by combining code domain information. Reference [25] transformed digital sequences into images with a column number equal to the frame length and then trained a neural network to recognize the frame length.

The frame length recognition results are shown in Figure 5. When the error rate is less than 0.2, BRFS-DL achieves a recognition accuracy of 100%. Furthermore, even when the error rate is as high as 0.25, the recognition accuracy of BRFS-DL remains above 90%.

Figure 6 shows the performance comparison between BRFS-DL and the algorithm in reference [12] in frame synchronization code recognition. It can be observed that when the error rate is less than 0.2, the recognition accuracy of BRFS-DL algorithm reaches 100%. In addition, even when the error rate is up to 0.25, the recognition accuracy of BRFS-DL algorithm is still above 95%.

Figure 7 compares the four methods in the joint recognition of frame length and frame synchronization code. At an error rate of 0.15, the time-code domain blind frame synchronization recognition method of reference [13] achieves a recognition probability of about 22%, and the CNN-based physical layer cutting method of reference [25] achieves about 40%, whereas our algorithm achieves 100%. Moreover, at an error rate of around 0.25, the recognition accuracy of our algorithm still exceeds 90%, while the first-order cumulant and error elimination method of reference [12] degrades rapidly and fails to recognize the frame synchronization accurately. The main reason for the good performance of BRFS-DL is that it transforms the frame length feature into a scaling factor and combines it with the sequence to create striped RGB images for neural network training. Applying flat-top interpolation to the original data and setting an appropriate matching radius d further enhance the diversity of the dataset. In contrast, the methods in references [12,13] make decisions by comparing the computed first- or higher-order cumulants against thresholds, and it is difficult to find a threshold that suits all scenarios at once. Furthermore, the method in reference [13] relies on the autocorrelation of the frame synchronization code to correct the data in the analysis matrix, which limits its applicability at high bit error rates. Reference [25] also converts sequences into images and uses neural networks for recognition. However, it requires the number of columns of the matrix to be strictly equal to the frame length when the data are reshaped, which is equivalent to setting the matching radius to 0 in our method, and it lacks flat-top interpolation. Therefore, our method improves recognition accuracy while reducing computational complexity.

In constructing the training set, we used a matching radius d and a column number increment of 1, so all matrices whose column number lies within $[\tilde{L} - d, \tilde{L} + d]$, where $\tilde{L}$ is the interpolated frame length, are used to generate positive samples. In each experiment, we generate multiple images of varying sizes from the same binary sequence. In theory, a correct recognition result can be obtained as long as one of the images has a column number within $[\tilde{L} - d, \tilde{L} + d]$. In practice, the increment $\Delta n$ of the matrix column number is set smaller than d, which guarantees that at least two positive samples appear in every experiment and gives the post-selection step more evidence for an accurate decision. The recognition accuracy naturally increases as $\Delta n$ decreases, but at the cost of higher computational complexity, so $\Delta n$ trades off computational complexity against accuracy.
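The "at least two positive samples" guarantee follows from a short argument: the first grid point inside the window $[\tilde{L} - d, \tilde{L} + d]$ lies less than $\Delta n$ from its left edge, and the next one lies less than $2\Delta n < 2d$ from that edge, so it is also inside. The sketch below checks this numerically for every interpolated frame length in [200, 360], with a hypothetical radius $d = 8$ and steps of 7 (smaller than $d$) and 20 (larger than $2d$); `candidates_in_window` is an illustrative helper.

```python
def candidates_in_window(L_tilde, d, n_start, n_stop, step):
    """Column numbers n_start, n_start + step, ..., <= n_stop that satisfy
    |n - L_tilde| <= d, i.e. the images that would be positive samples."""
    return [n for n in range(n_start, n_stop + 1, step) if abs(n - L_tilde) <= d]

d = 8  # hypothetical matching radius
# Step smaller than d: every interpolated frame length gets at least two hits.
print(min(len(candidates_in_window(L, d, 160, 400, 7)) for L in range(200, 361)))   # 2
# Step larger than 2d: some frame lengths are missed entirely.
print(min(len(candidates_in_window(L, d, 160, 400, 20)) for L in range(200, 361)))  # 0
```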

Even with the maximum , the computational complexity of our method will still be significantly higher than the traditional algorithms, due to the millions of parameters in the neural network model. For example, ResNet-50 has approximately 25.6 million parameters and a computational complexity of about 4.1 billion floating point operations (FLOPs), whereas the complexity of the algorithm in reference [12] is approximately , where n is the actual frame length. Given that blind recognition is always used in a non-cooperative communication scenario with higher BER and limited prior information, it is more reasonable to sacrifice computational complexity to achieve better recognition performance. The main purpose of this paper is to demonstrate that using neural networks for frame synchronization recognition can achieve good performance. Further we can focus on the complexity and recognition performance of the algorithm to obtain neural networks with low complexity and excellent performance.

4. Conclusions

To improve the error tolerance of classical blind frame synchronization recognition algorithms, we proposed BRFS-DL, a deep-learning-based algorithm. Inspired by the distinct stripes that appear in the images when the number of matrix columns matches the frame length, we generate positive and negative samples to train the neural network model. Moreover, we interpolate the original binary data to enhance the stripe features and incorporate a scaling factor to improve recognition accuracy. Three recognition networks are trained separately: a frame length recognition network, a frame synchronization code recognition network, and a joint frame length and frame synchronization code recognition network. Finally, the recognition networks are tested and verified on a test set. Simulation results demonstrate that BRFS-DL achieves higher recognition accuracy than the algorithm proposed in reference [12]. Even at an error rate of 0.25, its recognition accuracy still exceeds 90%, a significant improvement in error tolerance.

Nevertheless, our current algorithm cannot yet operate in a completely non-cooperative communication environment without any prior information. The trained neural network model can only recognize a limited number of predefined frame synchronization categories, indicating insufficient generalization capability. Moreover, to simplify data preprocessing, we intentionally omitted several operations that could highlight the features of the frame synchronization code, such as gradient computation and the Fourier transform, which may limit further improvement of the algorithm’s performance. In addition, we have so far experimented only with CNNs. Future work will consider architectures such as recurrent neural networks (RNNs) and Transformers, with a greater focus on enhancing the model’s generalization ability and optimizing the data preprocessing steps, so that our algorithm can be applied more effectively in practical non-cooperative communication scenarios.

Author Contributions

Conceptualization, J.W. and S.Z.; methodology, J.W.; software, J.W. and M.J.; validation, X.H., D.Q. and C.C.; formal analysis, D.Q.; investigation, M.J.; resources, M.J.; data curation, J.W.; writing—original draft preparation, J.W.; writing—review and editing, J.W. and D.Q.; visualization, M.J.; supervision, S.Z., X.H., D.Q. and C.C.; project administration, X.H.; funding acquisition, S.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Foundation of National Key Laboratory of Electromagnetic Environment: (6142403220202), the National Natural Science Foundation of China (62072360, 62172438), the key research and development plan of Shaanxi province (2021ZDLGY02-09, 2023-GHZD-44, 2023-ZDLGY-54), the National Key Laboratory Foundation (2023-JCJQ-LB-007), the Natural Science Foundation of Guangdong Province of China (2022A1515010988), Key Project on Artificial Intelligence of Xi’an Science and Technology Plan (23ZDCYJSGG0021-2022, 23ZDCYYYCJ0008, 23ZDCYJSGG0002-2023), and the Proof-of-concept fund from Hangzhou Research Institute of Xidian University (GNYZ2023QC0201, GNYZ2024QC004, GNYZ2024QC015).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Chiani, M.; Martini, M.G. Practical frame synchronization for data with unknown distribution on AWGN channels. IEEE Commun. Lett. 2005, 9, 456–458.

- Gan, H.Q.; Wang, J.; Zhou, P.; Li, S.Q. An Antijaming OFDM Synchronization Algorithm under Complicated Multipath Fading Channels. Signal Process. 2015, 31, 1461–1466.

- Zhang, Y.; Yang, X.J. Recognition Method of Concentratively Inserted Frame Synchronization. Acta Armamentarii 2013, 34, 554–560.

- Liang, Y.; Rajan, D.; Eliezer, O.E. Sequential Frame Synchronization Based on Hypothesis Testing with Unknown Channel State Information. IEEE Trans. Commun. 2015, 63, 2972–2984.

- He, X.D.; Tan, X.D.; Wu, B.Y. Wireless Clock Synchronization System Based on Long-wave Signal Sensing. In Proceedings of the 2022 41st Chinese Control Conference (CCC), Hefei, China, 25–27 July 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 3474–3478.

- Wang, D.; Xie, H.; Wang, F.H.; Huang, Z.T. Blind identification of frame synchronization codes based on first-order cumulant. Commun. Countermeas. 2013, 32, 17–20.

- Qin, J.; Huang, Z.; Liu, C.; Su, S.; Zhou, J. Novel blind recognition algorithm of frame synchronization words based on soft-decision in digital communication systems. PLoS ONE 2015, 10, e0132114.

- He, J.; Zhou, J.R. Improvement of Frame Synchronization Algorithm for Burst Data in Anti-interference Network. Radio Commun. Technol. 2017, 43, 16–20.

- Xu, Y.Y.; Zhong, Y.; Huang, Z.P. An improved blind recognition algorithm of frame parameters based on self-correlation. Information 2019, 10, 64.

- Shao, K.; Lei, Y.K. Fast Blind Recognition Algorithm of Frame Synchronization Based on Dispersion Analysis. J. Signal Process. 2020, 36, 361–372.

- Kil, Y.S.; Lee, H.; Kim, S.H.; Chang, S.H. Analysis of blind frame recognition and synchronization based on sync word periodicity. IEEE Access 2020, 8, 147516–147532.

- Chen, X.F.; Liu, N.N.; Xu, W.B. Low Complexity Blind Recognition Method for Frame Synchronization. In Proceedings of the 14th National Conference on Signal and Intelligent Information Processing and Application, Beijing, China, 11 April 2021; Volume 4.

- Jin, M.; Zhang, S.; He, X.; Quan, D.; Wei, J. Blind Recognition of Frame Synchronization in Time-Code Domain. In Proceedings of the 2023 International Conference on Ubiquitous Communication (Ucom), Xi’an, China, 7–9 July 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 144–149.

- Imad, R.; Houcke, S. On blind frame synchronization of LDPC codes. IEEE Commun. Lett. 2021, 25, 3190–3194.

- Xia, T.; Wu, H.C.; Chang, S.Y. Joint blind frame synchronization and encoder identification for LDPC codes. IEEE Commun. Lett. 2014, 18, 352–355.

- Feng, Z.; Liu, Y.; Zhang, S.; Xiao, L.; Jiang, T. Polar-Coding-Assisted Blind Frame Synchronization Based on Soft Information of Frozen Bits. IEEE Commun. Lett. 2023, 27, 2563–2567.

- Wang, Z.; Zhang, S.; Sun, H.; Gong, K.; Liu, H.; Wang, W. Fast Detection Algorithm for Equal Length Frame Signals Based on Pattern Matching. In Proceedings of the 2nd International Conference on Signal Processing, Computer Networks and Communications, Xiamen, China, 8–10 December 2023; pp. 402–407.

- Wang, Y.Q.; Hu, P.J.; Yang, J.A. Blind synchronization word recognition algorithm for non-equal length frame based on two window-sliding operations. Syst. Eng. Electron. 2024, 46, 3567–3576.

- Lv, Z.H.; Zhang, T.; Ren, W.C. Study on Joint Frame Synchronization and Frequency Bias Estimation Algorithm for Tropospheric Scattering Channels. Comput. Meas. Control. 2024, 32, 185–191+200.

- LeCun, Y.; Boser, B.; Denker, J.; Henderson, D.; Howard, R.; Hubbard, W.; Jackel, L. Handwritten digit recognition with a back-propagation network. Adv. Neural Inf. Process. Syst. 1989, 2, 396–404.

- Chen, C.; Wang, W.; Liu, Z.; Wang, Z.; Li, C.; Lu, H.; Pei, Q.; Wan, S. RLFN-VRA: Reinforcement Learning-based Flexible Numerology V2V Resource Allocation for 5G NR V2X Networks. IEEE Trans. Intell. Veh. 2024, 1–11.

- Chen, C.; Si, J.; Li, H.; Han, W.; Kumar, N.; Berretti, S.; Wan, S. A High Stability Clustering Scheme for the Internet of Vehicles. IEEE Trans. Netw. Serv. Manag. 2024, 21, 4297–4311.

- Xiao, T.; Chen, C.; Pei, Q.; Jiang, Z.; Xu, S. SFO: An adaptive task scheduling based on incentive fleet formation and metrizable resource orchestration for autonomous vehicle platooning. IEEE Trans. Mob. Comput. 2023, 23, 7695–7713.

- Li, Y.H. Research on Non-Cooperative Signal Link Layer Analysis Technology. Master’s Thesis, Wuhan University, Wuhan, China, 2020.

- Shao, K.; Lei, Y.K. Physical Frame Segmentation Method Based on Convolutional Neural Network. J. Data Acquis. Process. 2020, 35, 653–663.

- Shen, B.X. Noncooperative Signal Analysis via Deep Learning. Master’s Thesis, University of Electronic Science and Technology of China, Chengdu, China, 2022.

- Kojima, S.; Goto, Y.; Maruta, K.; Sugiura, S.; Ahn, C.J. Timing synchronization based on supervised learning of spectrogram for OFDM systems. IEEE Trans. Cogn. Commun. Netw. 2023, 9, 1141–1154.

- Ning, E.; Zhang, C.; Wang, C.; Ning, X.; Chen, H.; Bai, X. Pedestrian Re-ID based on feature consistency and contrast enhancement. Displays 2023, 79, 102467.

- Wang, C.; Wu, M.; Lam, S.K.; Ning, X.; Yu, S.; Wang, R.; Li, W.; Srikanthan, T. GPSFormer: A Global Perception and Local Structure Fitting-based Transformer for Point Cloud Understanding. arXiv 2024, arXiv:2407.13519.

- Alrubei, M.A.; Dmitrievich, P.A. An Approach for Single-Tone Frequency Estimation Using DFT Interpolation with Parzen Windowing. Kufa J. Eng. 2023, 14, 93–104.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Liu, N.N. Research and FPGA Implementation of Blind Synchronization Algorithm. Master’s Thesis, Beijing University of Posts and Telecommunications, Beijing, China, 2021.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).