Intracytoplasmic sperm injection (ICSI) is the most popular method of insemination worldwide. In the field of assisted reproductive medicine, ICSI is particularly effective for male infertility and has been widely adopted because of its high incidence rate [

1,

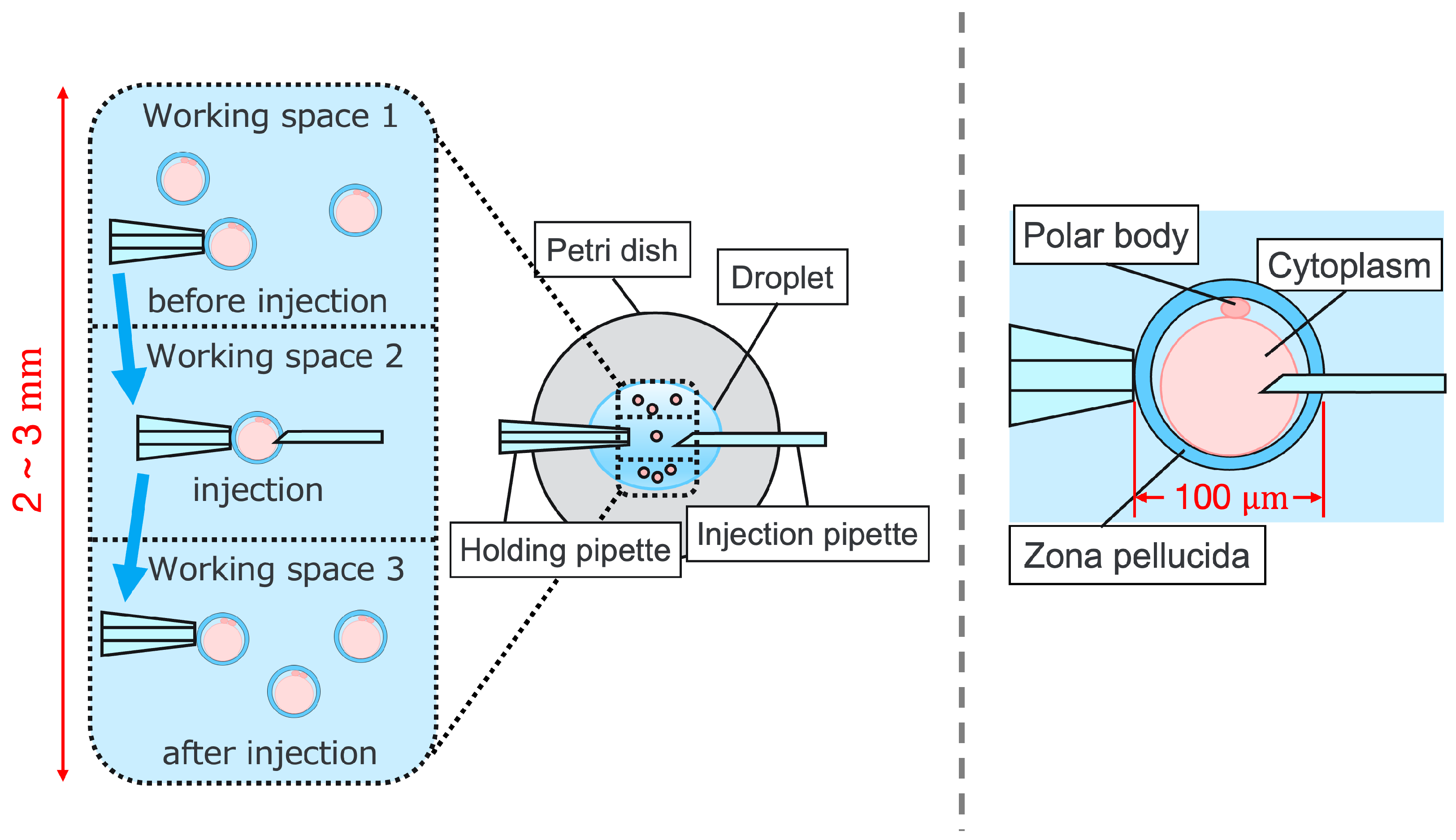

2]. However, ICSI generally requires the direct manipulation of oocytes and sperm under an optical microscope, which is a complicated and delicate operation necessitating high skill. In addition, oocytes are damaged by osmotic pressure during cell manipulation. Therefore, ICSI requires efficient manipulation in a short time. The ICSI procedure is conducted as follows. First, the pre-injected oocyte in workspace 1 in

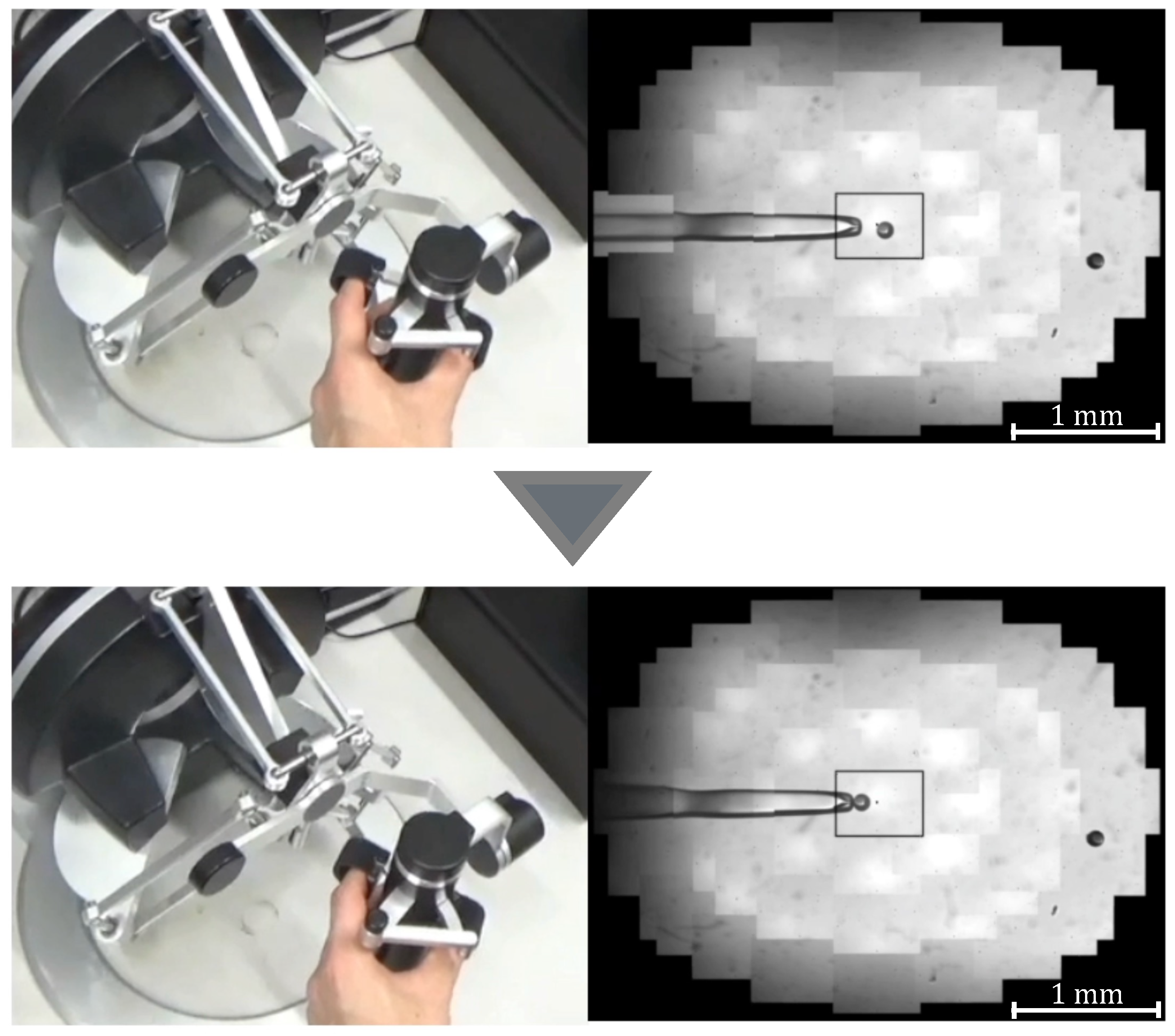

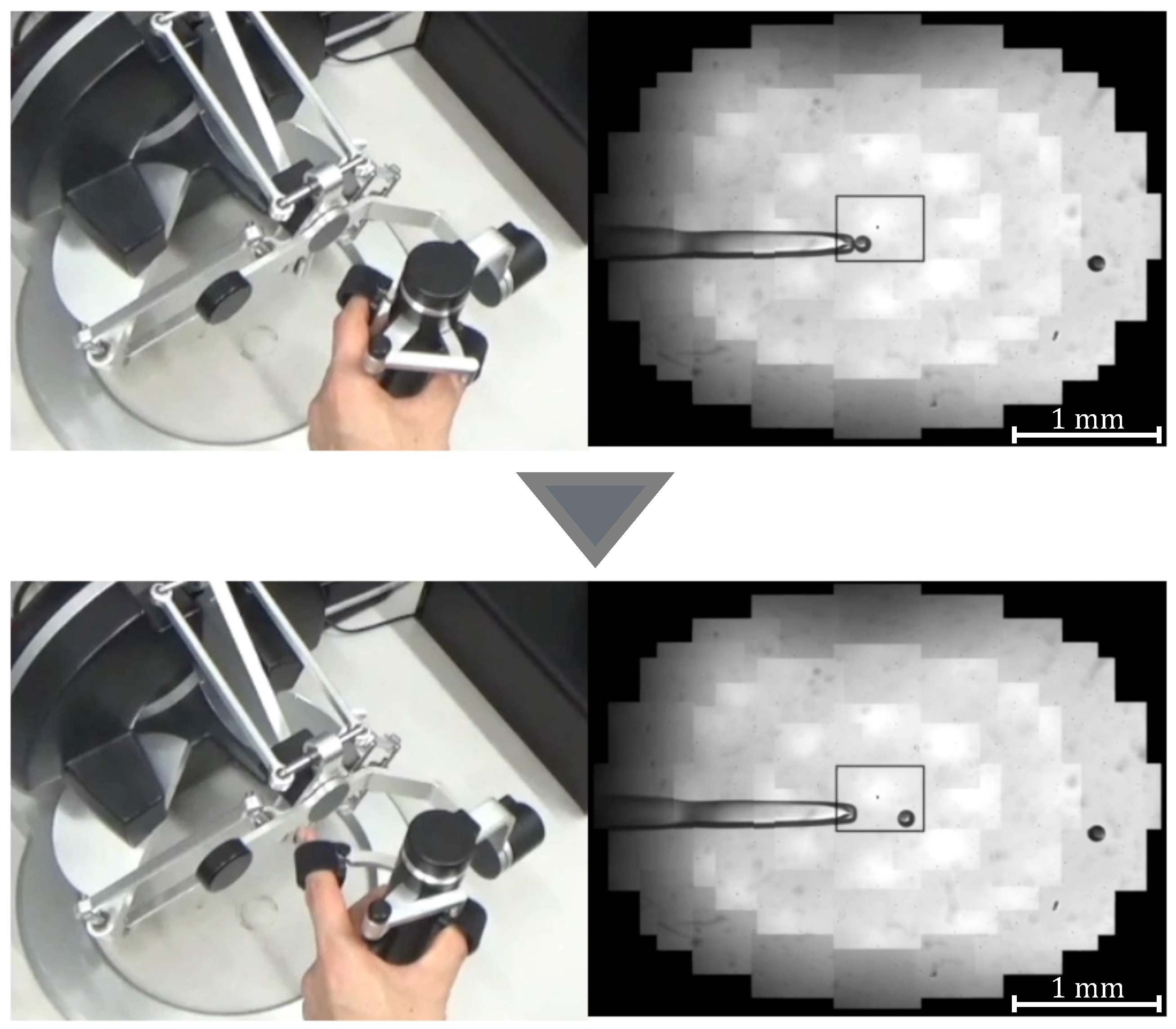

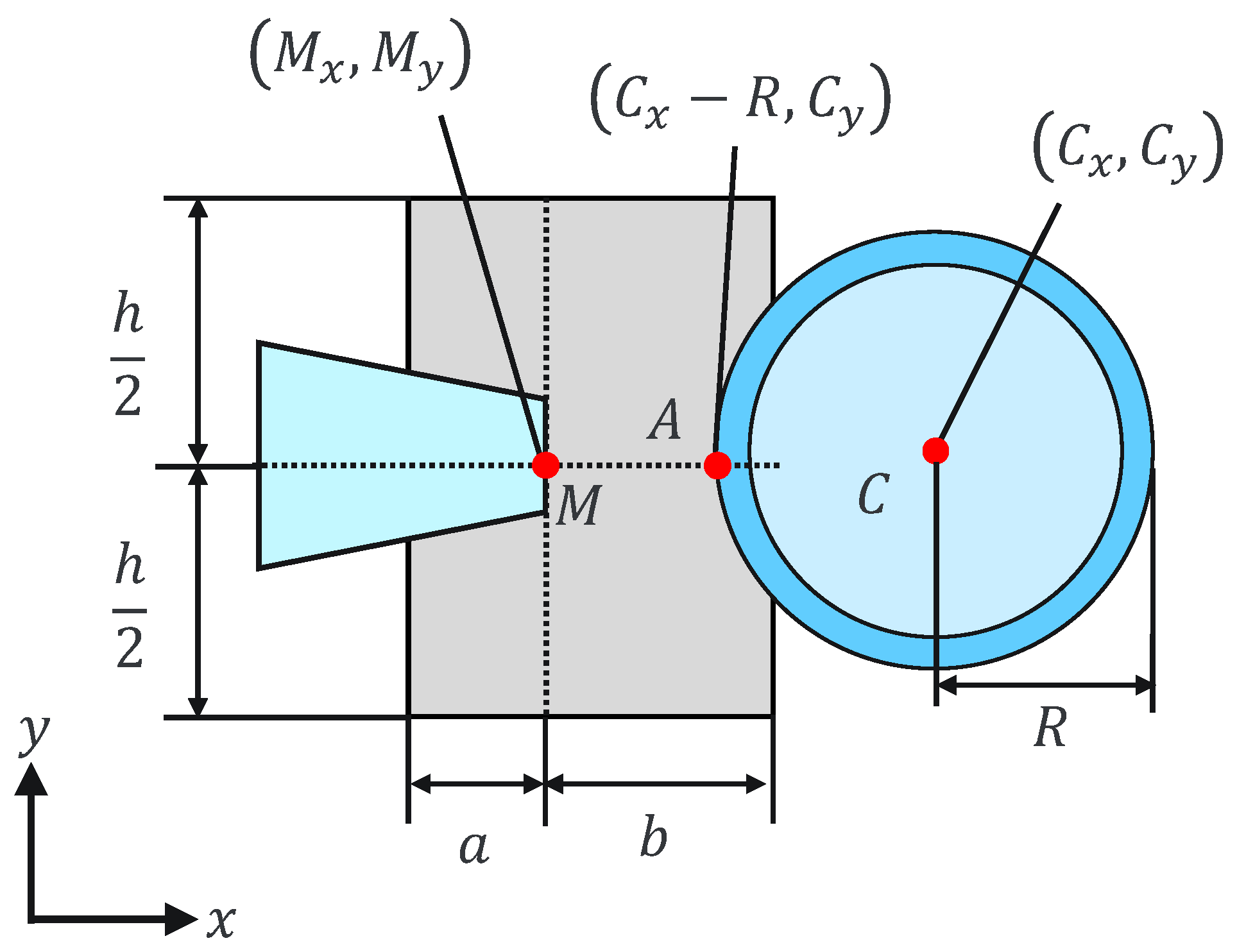

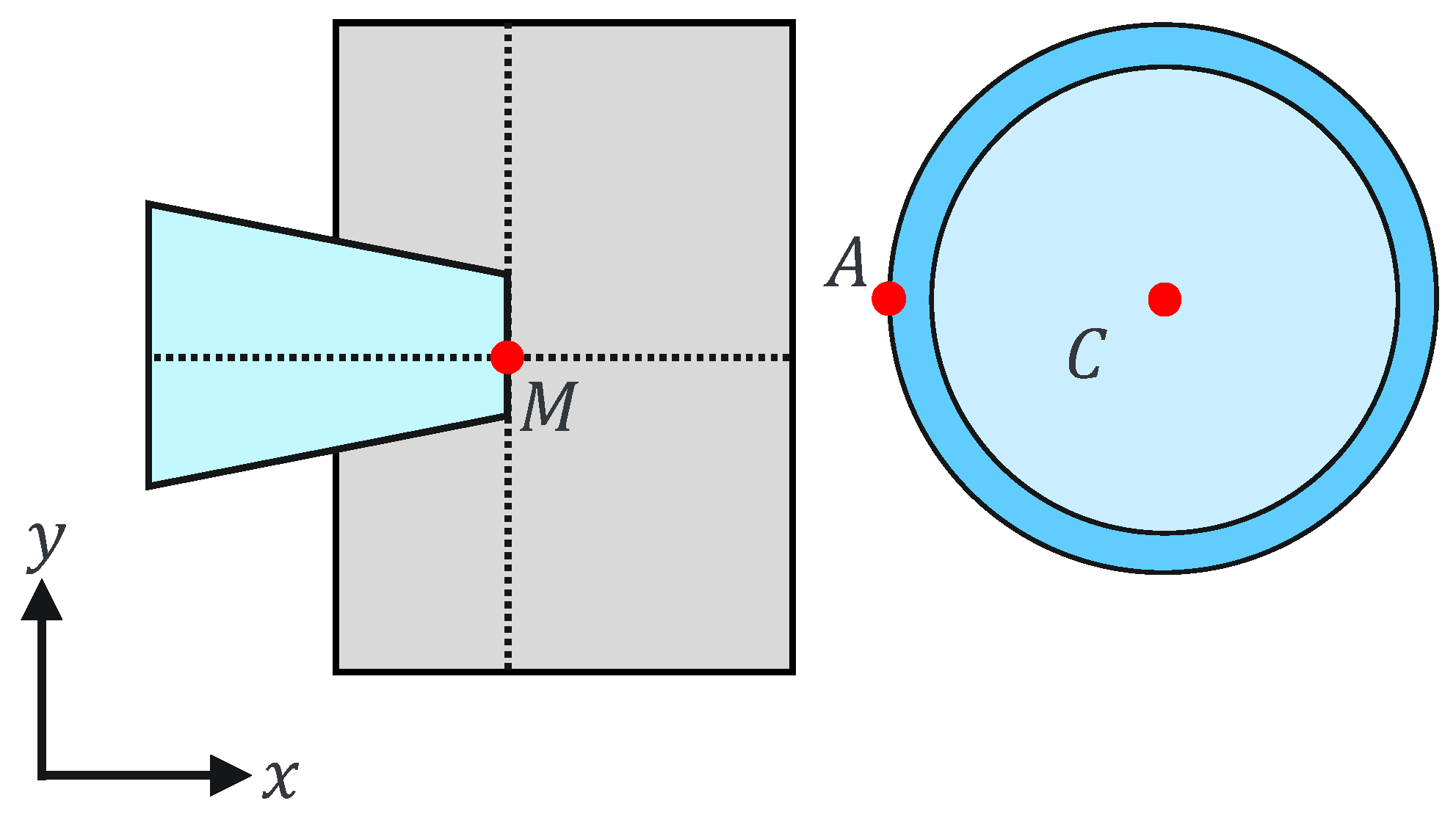

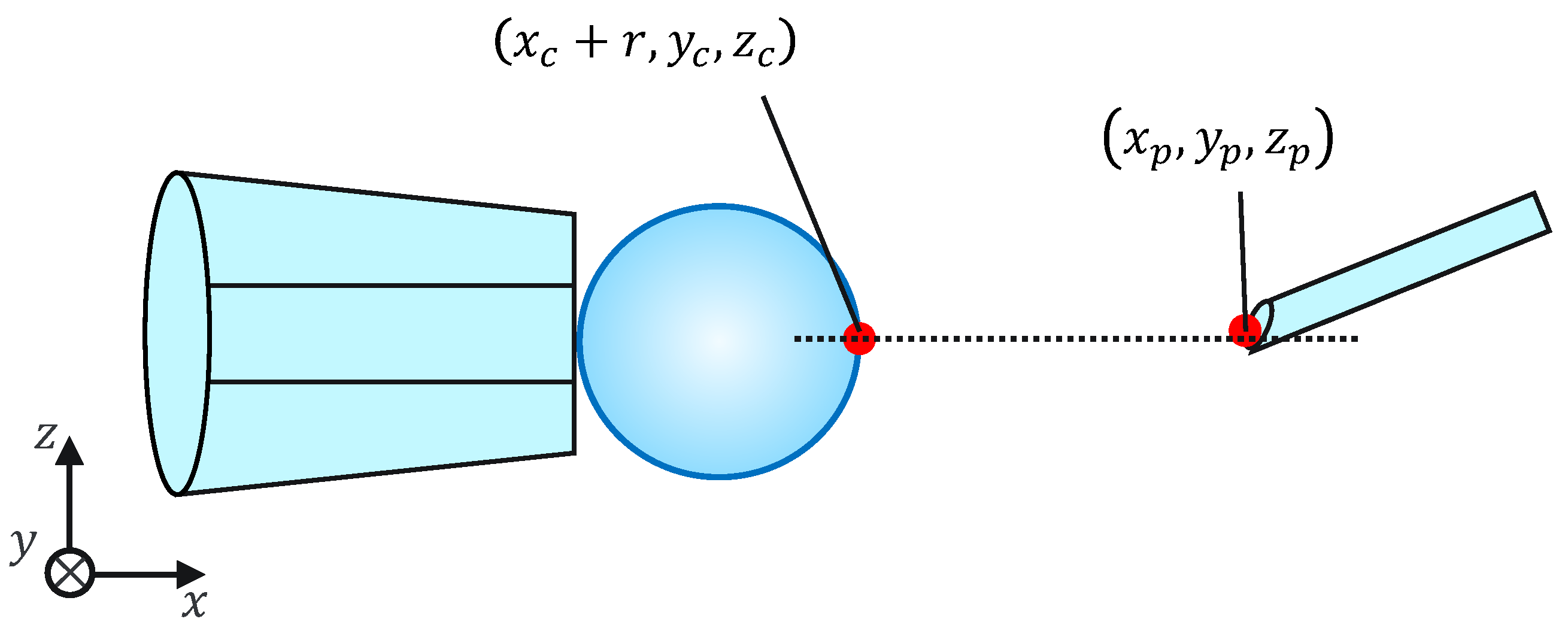

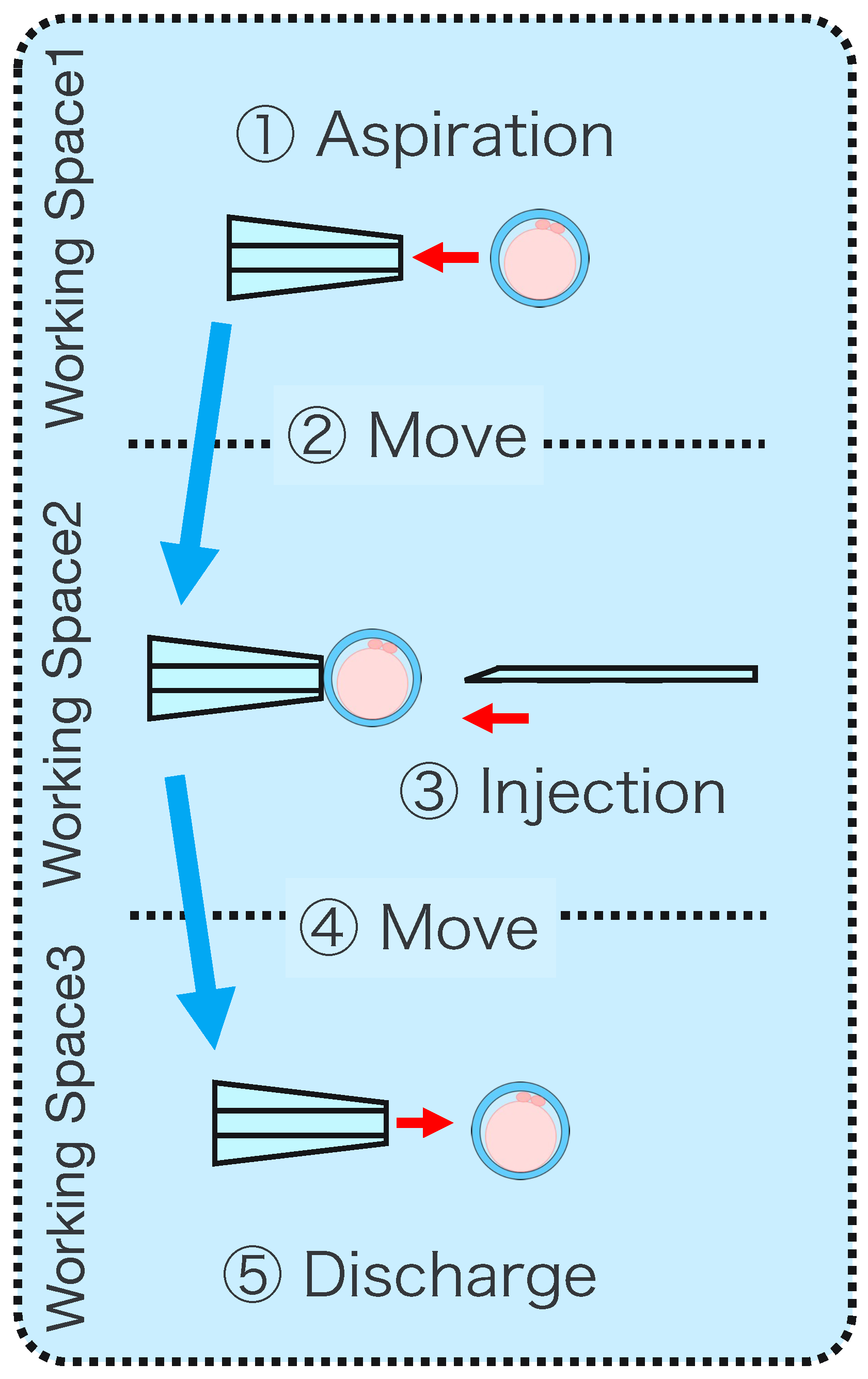

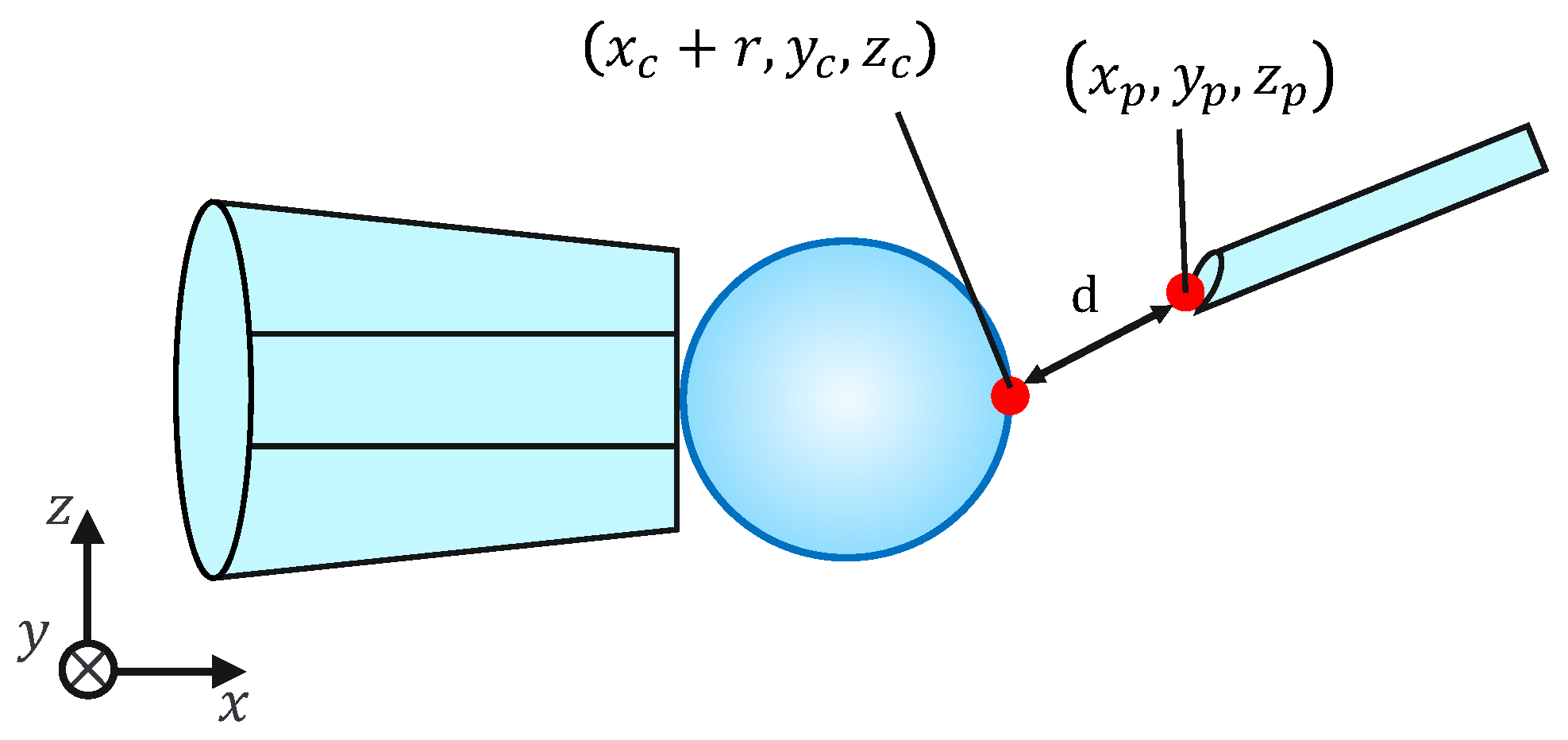

Figure 1 is aspirated using a holding pipette and moved to workspace 2. The cells are then rotated in workspace 2, the polar body is moved to the 12 or 6 o’clock directions, an injection pipette is injected into the cytoplasm of the oocyte, and the sperm are injected. The cells are rotated to avoid damage to the spindles near the polar body. High-resolution images are required to observe the microscopic polar bodies and sperm. The holding pipette is then moved to workspace 3, and the oocyte is ejected [

3,

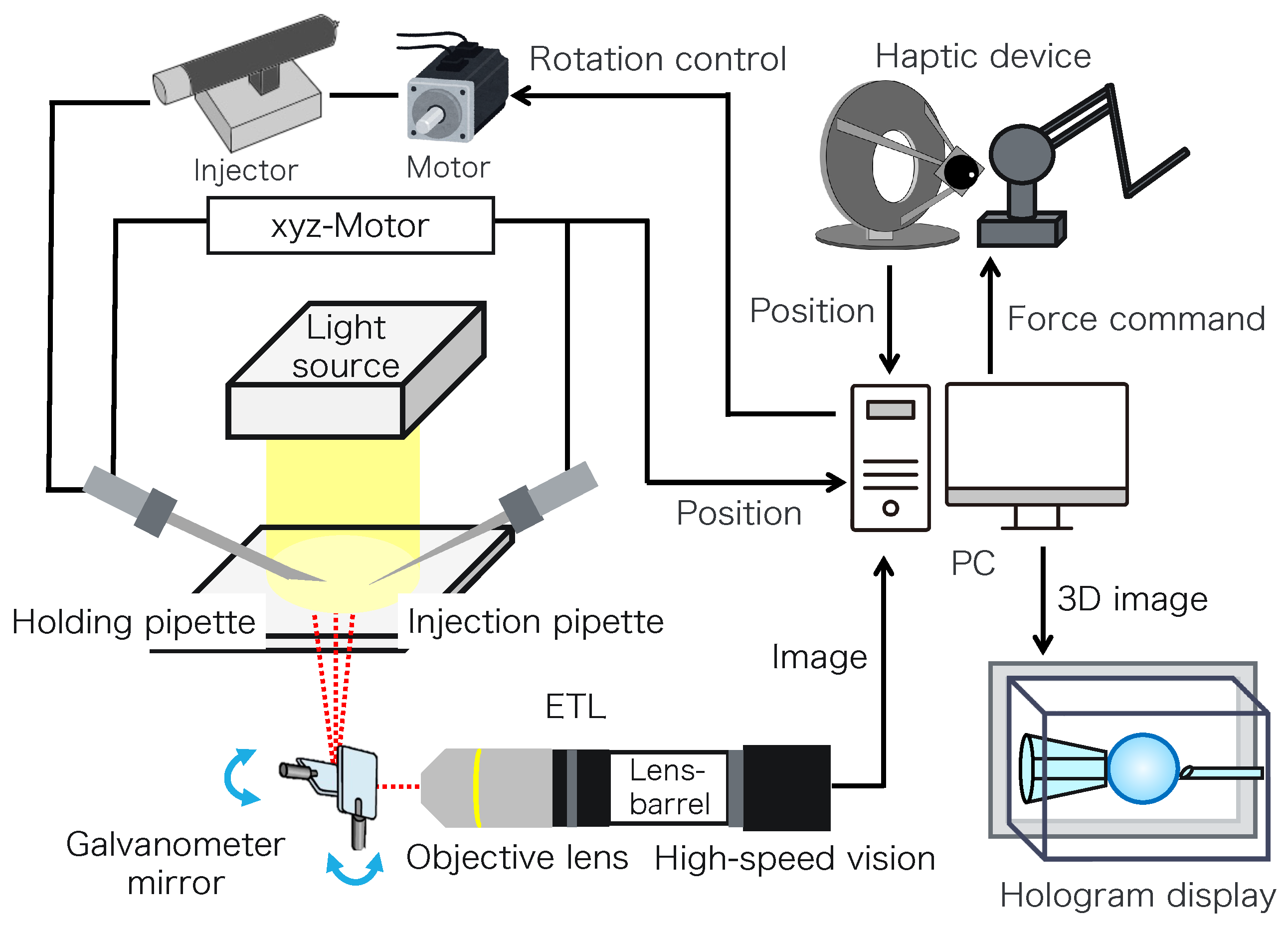

4]. The reasons why the ICSI operation is difficult and inefficient are explained from two aspects: the presenting image and the manipulation device. In terms of the presentation image, there is a lack of depth information and an inability to present a wide-area high-resolution image. The rotation and injection of cells requires high-precision work, and the focus position must be adjusted along the depth direction to observe details such as the position of the polar bodies and nuclei of the cells in the high-resolution image. In addition, because cells have individual differences, and the shapes of the cells and polar bodies differ [

5], conventional optical microscopes require the operator to change the light intensity and lenses to change the field of view according to the work stage. Observing the three-dimensional trajectories of particles can be challenging due to the limited depth of field of optical microscopes [

6]. Certain confocal laser microscopes are capable of three-dimensional (3D) measurements to acquire depth information [

7], and several inline holographic microscopes can acquire 3D information of a sample by transmitting light in a noncontact and nondestructive manner. In particular, there are many studies on 3D particle-tracking methods using digital holography [

6]. For instance, a clustering-based particle detection method for digital holography has been proposed [

8], as well as a method for determining the 3D location of multiple biological targets based on digital holography [

9]. However, confocal laser microscopy is not suitable for microinjection owing to the requirement of scanning to measure the depth information and the inability to measure moving cells. Further, inline holographic microscopes are not suitable for microinjection because the conjugate image of the transmitted zero-order light and the object light reduces the resolution of the reproduced image. Although the resolution can be improved using synthetic aperture or pixel super-resolution techniques, multiple images are required; therefore, neither microscope can be introduced to the microinjection process, which requires a spatial resolution of a few micrometers [

10,

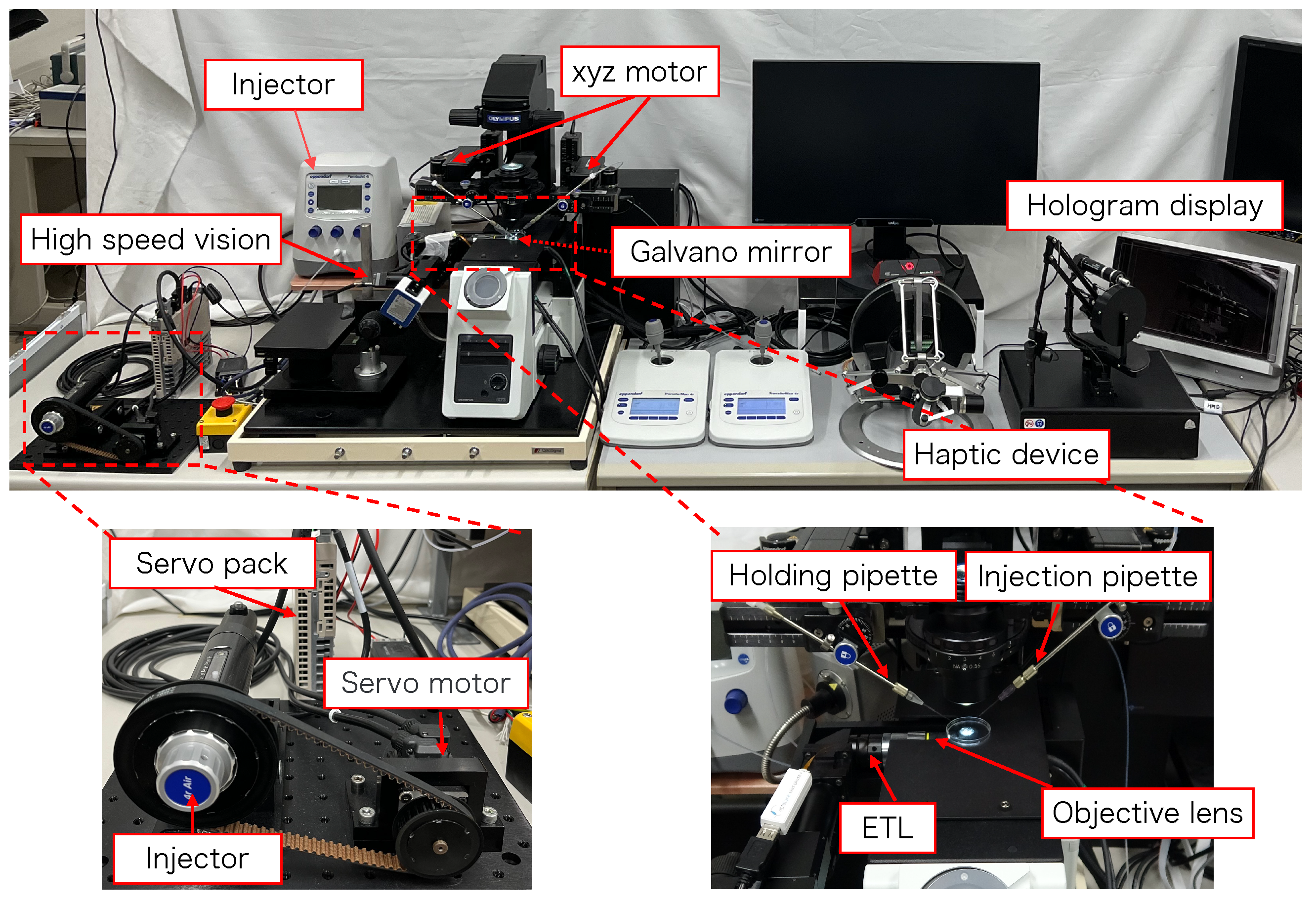

11], a field of view of 3 × 3 mm, and real-time visual feedback. Therefore, we have developed a field-expanding microscope that can capture both a wide field of view and high-resolution images using high-speed viewpoint movements with a galvano-mirror [

12,

13]. Furthermore, we have developed a microscopy system that uses a field-expanding microscope for the real-time 3D presentation of oocytes and manipulators on a holographic display [

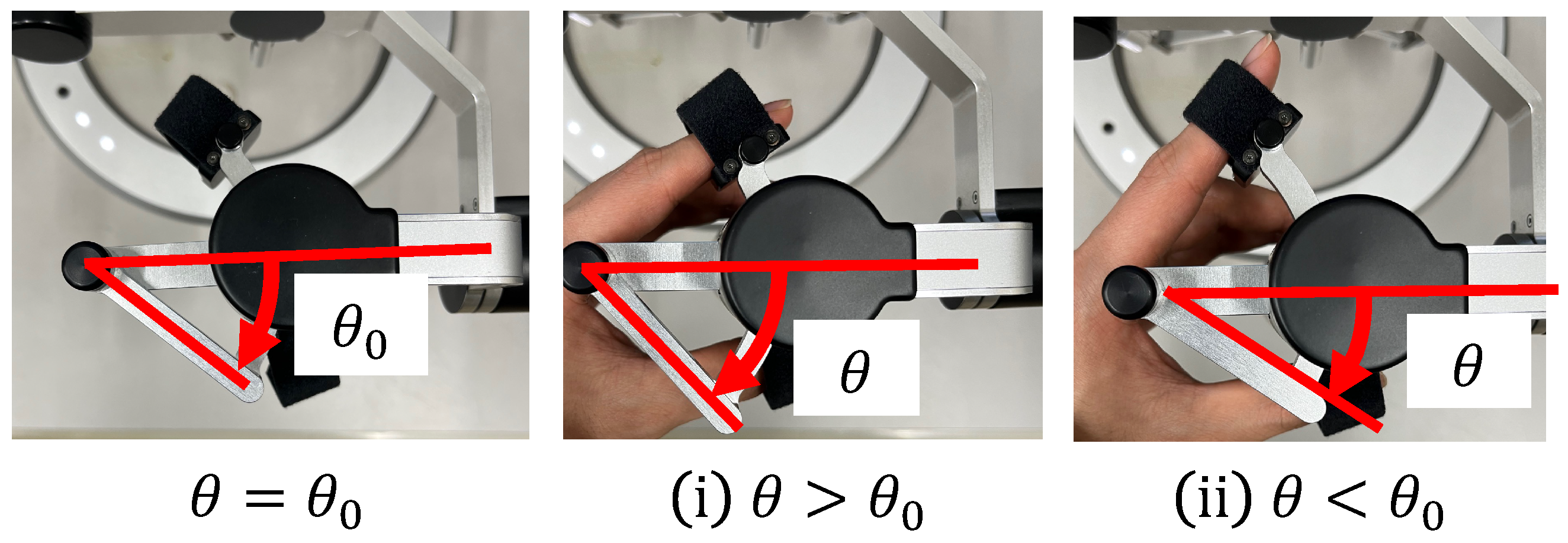

14]. However, the operating device is not intuitive because the micromanipulators used to move the pipettes and joysticks used in conventional devices do not correspond to each other in terms of the degrees of freedom (DOFs) of operation. Micromanipulators have 3-DOFs in translation, whereas joysticks have 2-DOFs in translation and 1-DOF in rotation. In addition, the manipulator must switch to an injector during the manipulation to suck and grasp the cells. Although there are examples of biomedical applications using haptic devices, haptic devices are not currently used in ICSI due to the difficulty of the real-time sensing of the three-dimensional position of the oocyte and the contact force during the ICSI process. To simplify these complicated operations, several studies have been conducted to automate cell manipulation. For example, the automation of a simple task, such as picking up and placing small objects, can be achieved by using MEMS micro-grippers with controllable plunge structures [

15]. In particular, the automation of cell rotation, which is a particularly important but difficult operation, has been attempted in several ways [

4], such as using electric [

16], magnetic [

17], sound [

18,

19], and light [

20,

21] fields. Furthermore, mechanical [

22] and fluidic contacts [

23,

24] have been used to achieve automation. However, although these noncontact rotation methods are suitable for rotating cells at a constant speed, their effects on the developmental process of oocytes have not been evaluated and are risky. Moreover, the light field also has a low power output, which may not be sufficient to rotate the oocytes (>100 μm) or may cause optical damage. Mechanical contact also has the potential for unexpected physical damage [

25] because of the difference in the rotation method from the way it is performed in an actual ICSI [

22]. In addition, research has been conducted on the automated microinjection of zebrafish as an automated microinjection method [

26,

27]. Genome editing has a low success rate and requires a large number of trials, and therefore is suitable for automation, where efficiency is more important than accuracy. However, the application of this technique to ICSI is challenging, as this method requires complex manipulations where oocytes and sperm are valuable; moreover, the success rate is an important factor. Automatic ICSI is a complex process requiring the precise control and coordination of various steps. Any error in any of these steps could result in fertilization failure or damage to the gametes. AI models and robotic systems used for automated ICSI are still in their infancy and require further data and training [

28]. There are also concerns about the potential for algorithmic bias and the difficulty of real-time error checking [

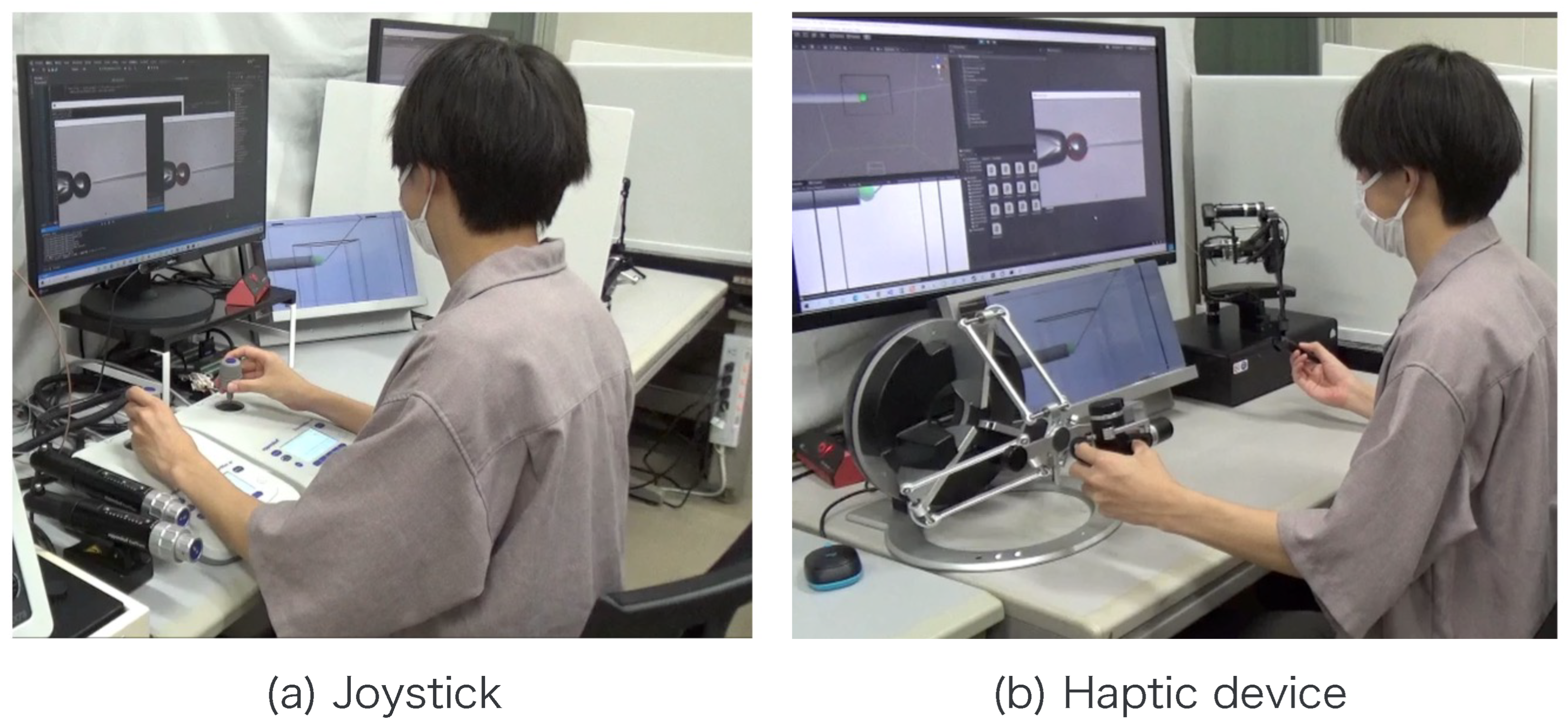

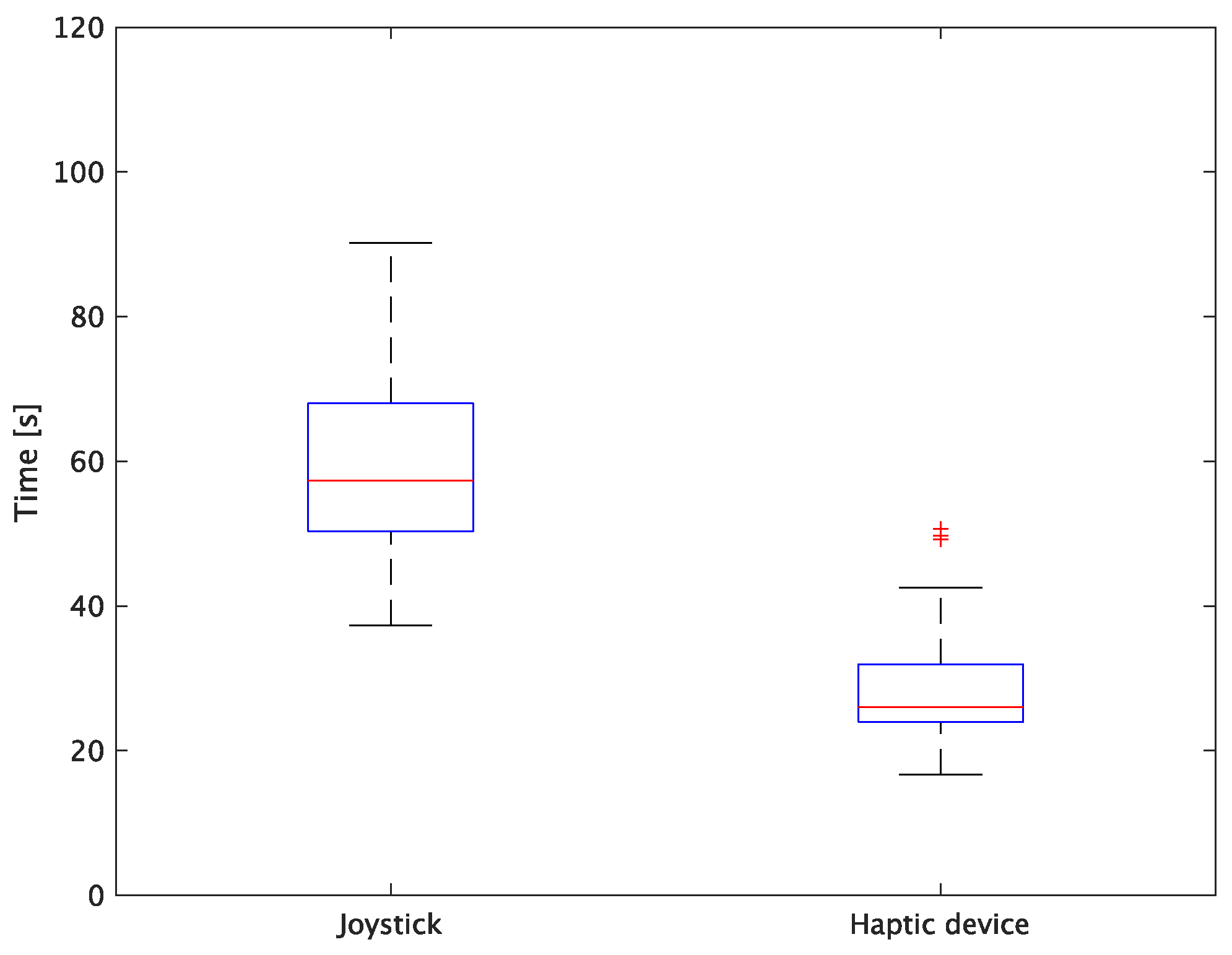

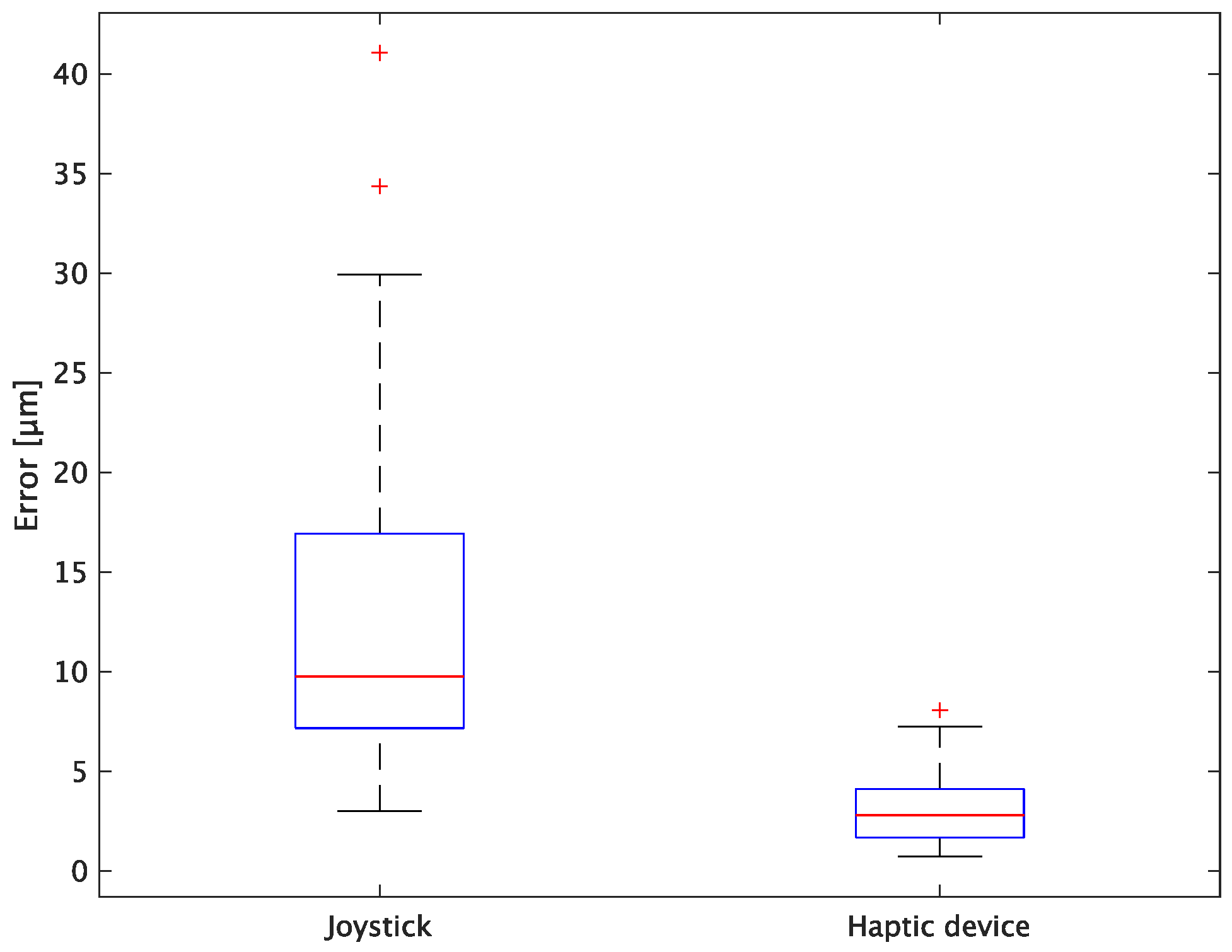

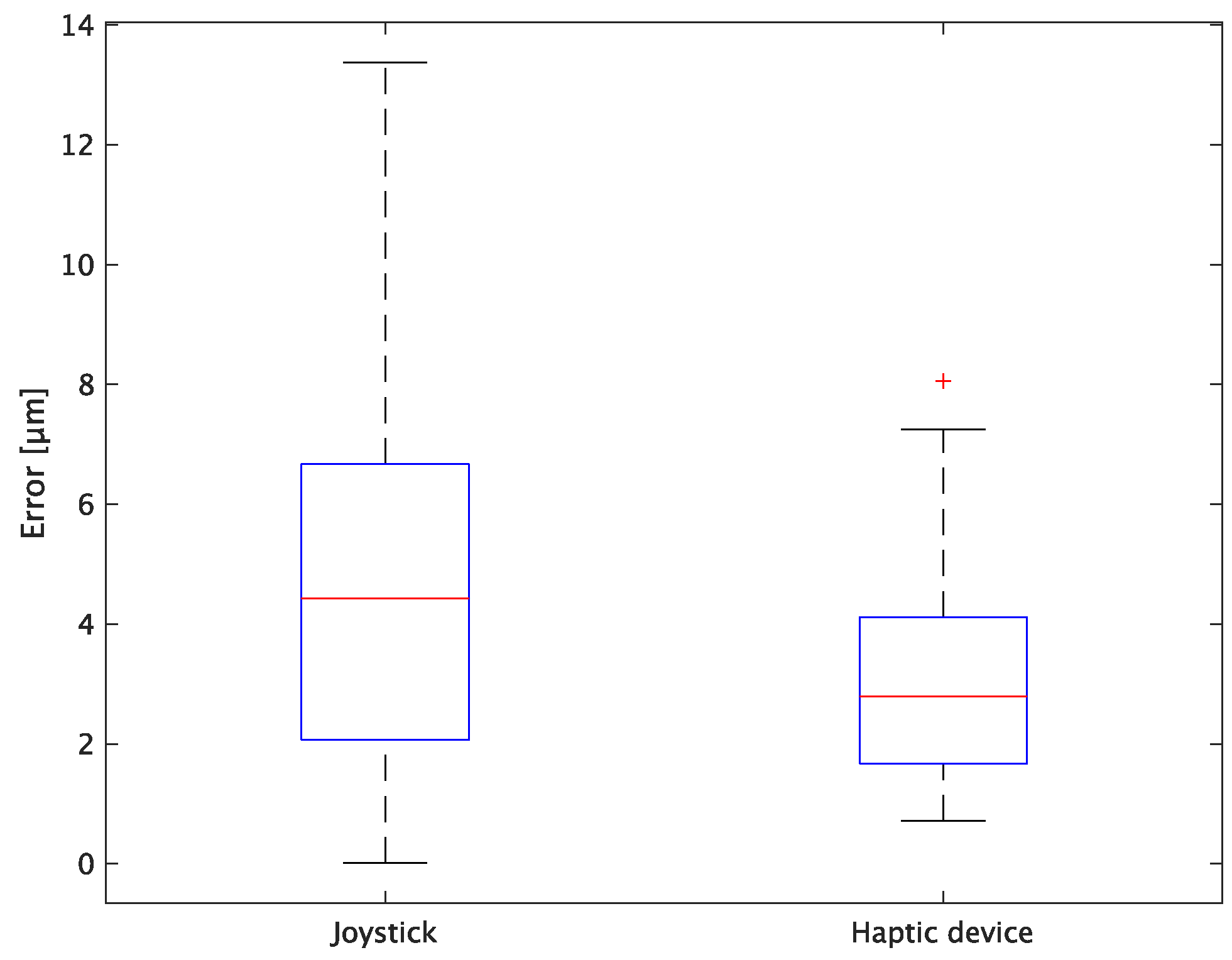

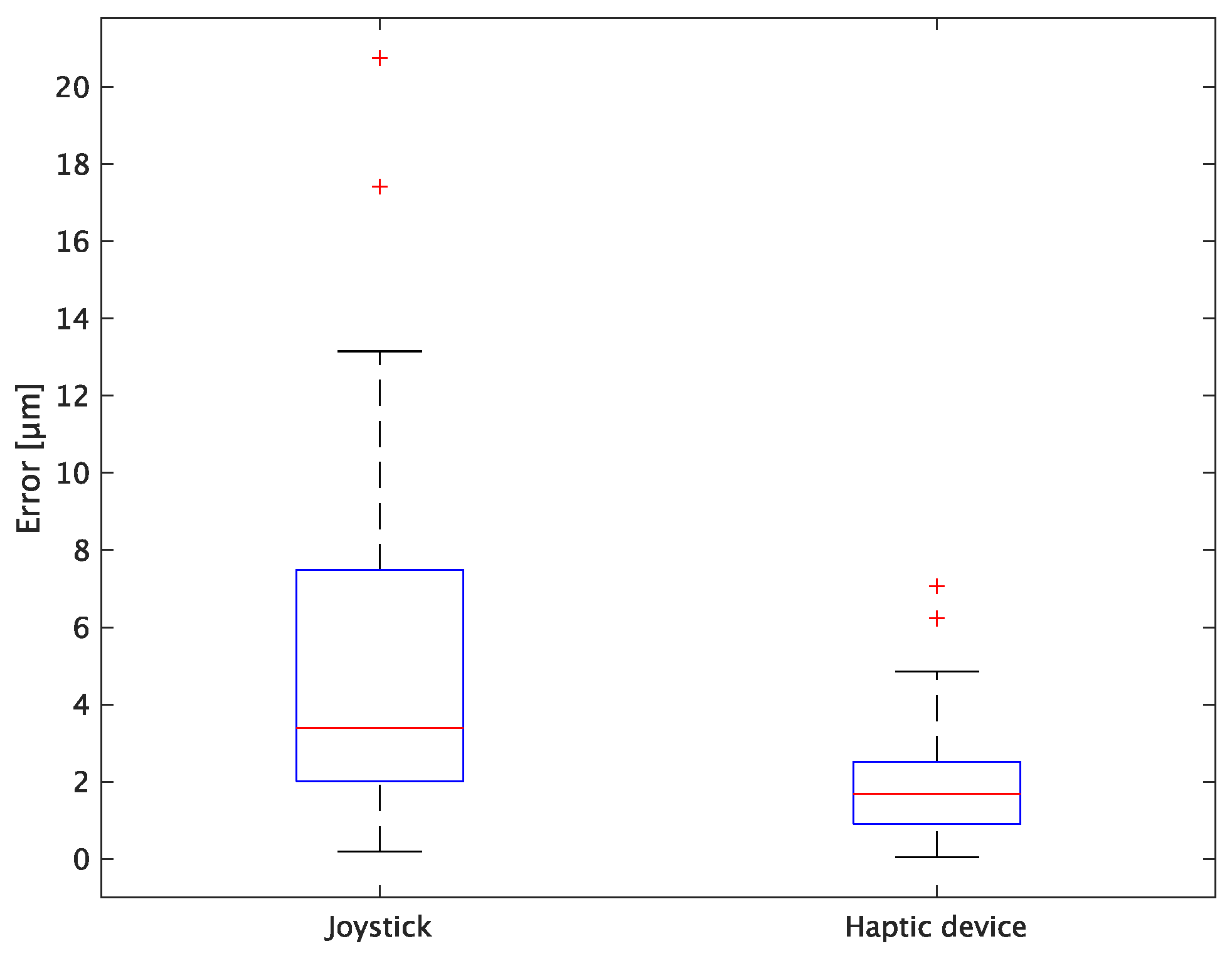

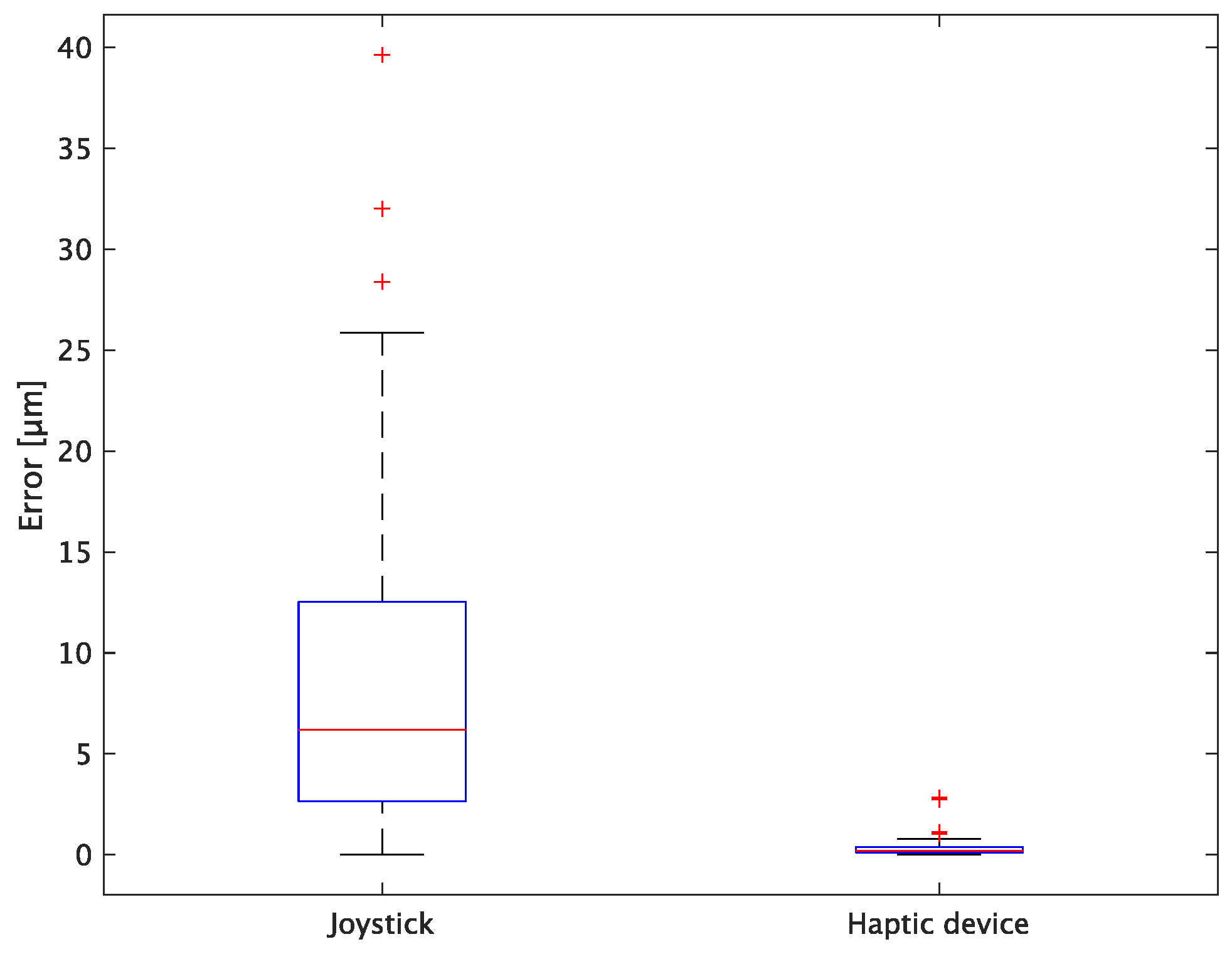

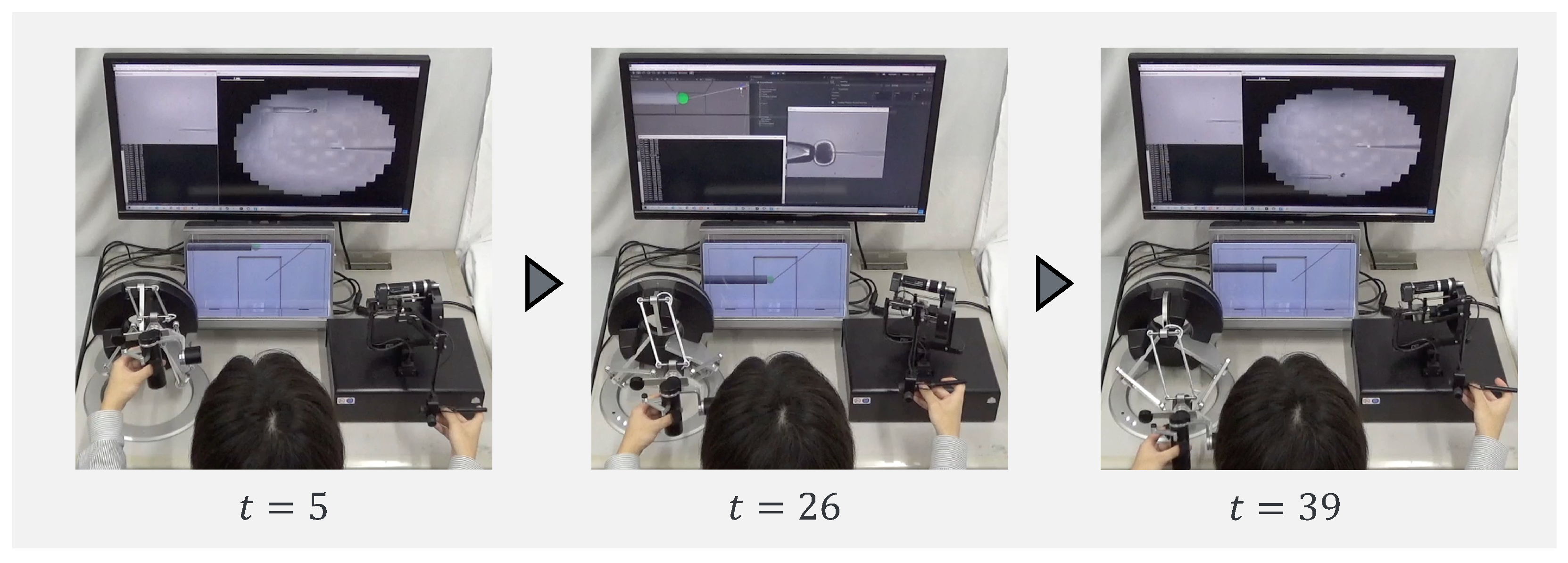

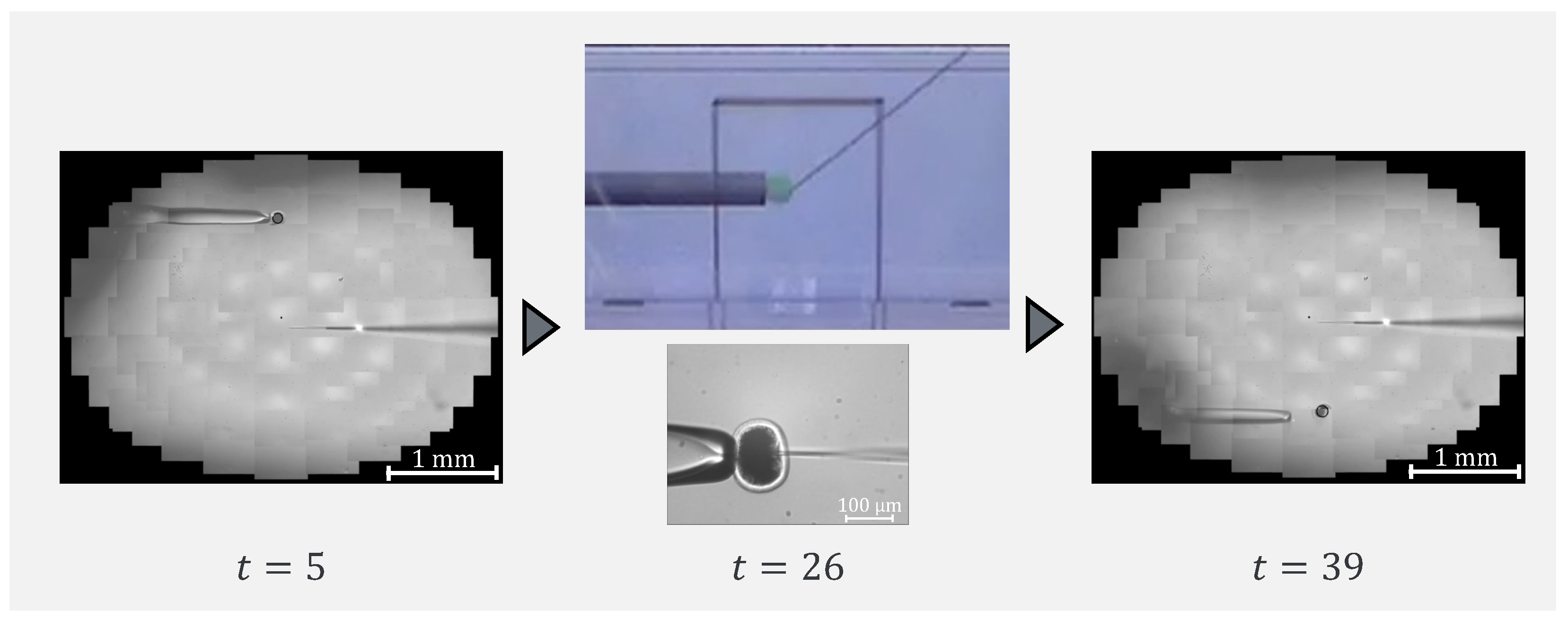

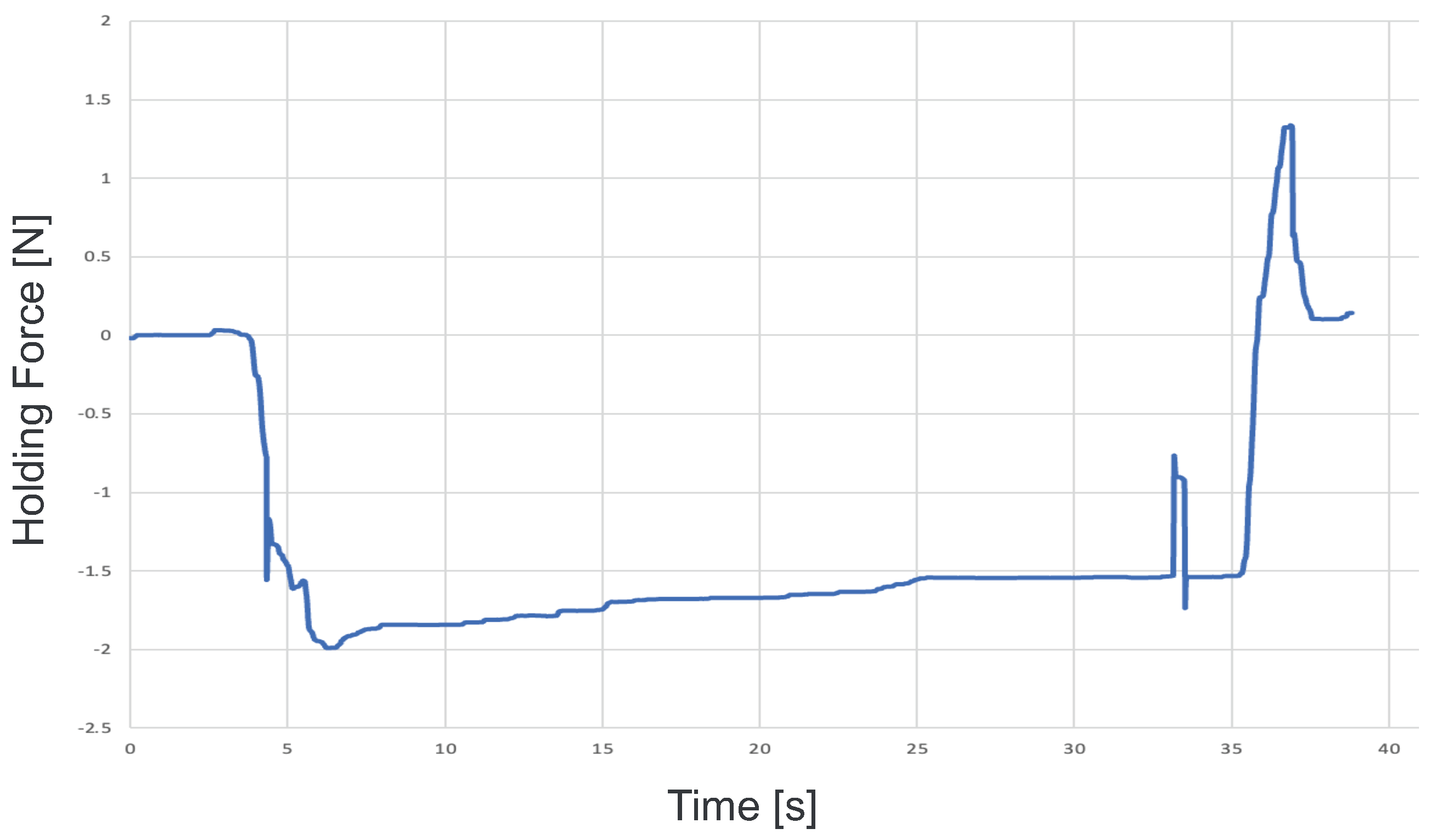

29]. All of these methods and devices differ from conventional manual ICSI, but their effect on cell development rates has not been evaluated. Therefore, we have developed a system that supports human cell manipulation and enables inexperienced operators to perform cell manipulation on par with or better than experienced operators. In this study, in addition to a microscope system that can switch between high-resolution, wide-area imaging and real-time 3D imaging according to the manipulation stage, two intuitive haptic devices that may present a sense of force are used to solve the problem of joysticks as a manipulation device. The haptic devices are used to manipulate the grasping of cells as if they were aspirating or ejecting cells; furthermore, the force sensation is presented to the operator according to the degree of suction, thereby realizing the intuitive suction and ejection of cells. In addition, an automatic position adjustment function during injection and a puncture direction fixation function are used to guide accurate injection. The effectiveness of the proposed system has been experimentally evaluated using porcine embryos to simulate ICSI.
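As a rough illustration of presenting a force sensation according to the degree of suction, the following minimal Python sketch maps a normalized suction level to a feedback force using a saturating linear law. It is only one plausible mapping under stated assumptions: the function name `suction_feedback_force`, the gain, and the force limit are hypothetical and are not taken from the system described here.

```python
def suction_feedback_force(suction_level: float,
                           gain_n: float = 2.0,
                           max_force_n: float = 1.5) -> float:
    """Return a feedback force (newtons) for a normalized suction level.

    suction_level: -1.0 (full ejection) to 1.0 (full aspiration).
    gain_n and max_force_n are hypothetical tuning values, not measured ones.
    """
    # Clamp the input so out-of-range commands cannot produce extreme forces.
    level = max(-1.0, min(1.0, suction_level))
    # Linear (spring-like) law: stronger suction gives a proportionally larger force.
    force = gain_n * level
    # Saturate the output at the assumed safe limit of the haptic device.
    return max(-max_force_n, min(max_force_n, force))


if __name__ == "__main__":
    for level in (-1.0, -0.25, 0.0, 0.5, 1.0):
        print(f"suction {level:+.2f} -> force {suction_feedback_force(level):+.2f} N")
```

The saturation step reflects a common design choice in haptic rendering, where the commanded force is bounded regardless of the input; an actual device would use limits from its own specification.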