A Multimodal Bracelet to Acquire Muscular Activity and Gyroscopic Data to Study Sensor Fusion for Intent Detection

Abstract

1. Introduction

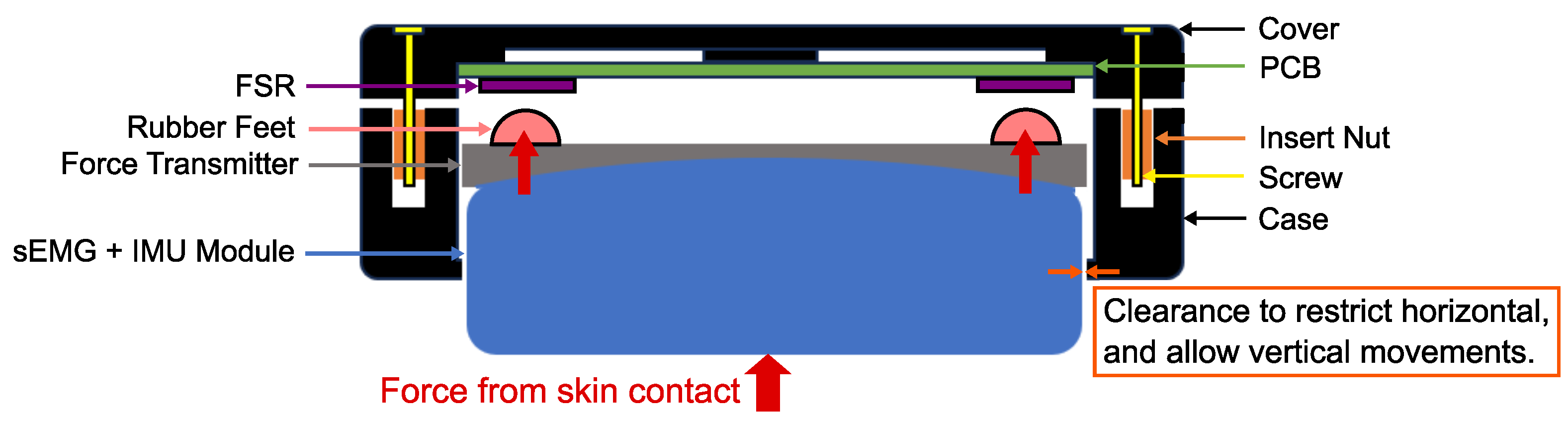

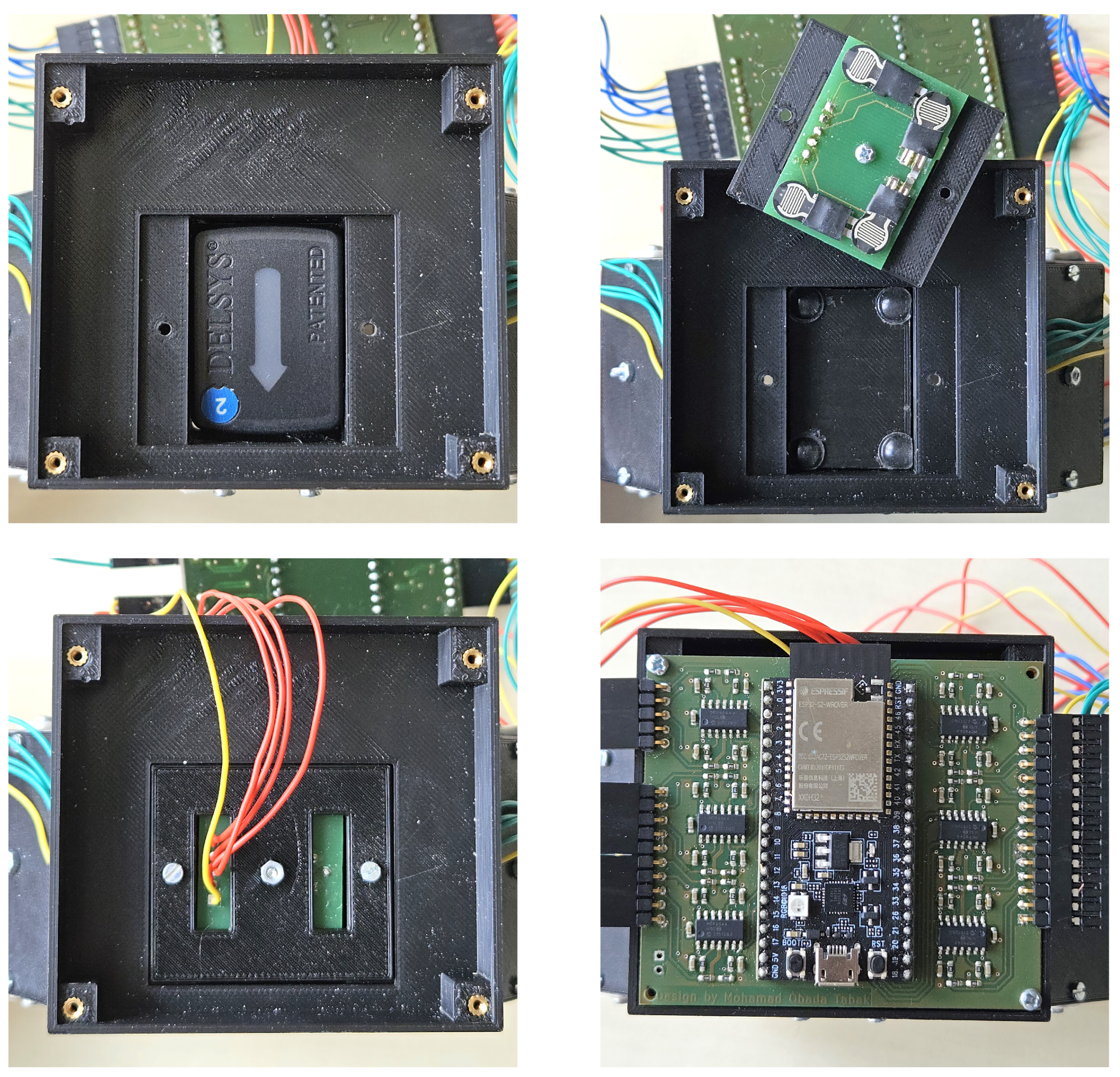

2. Design of the Multimodal Bracelet

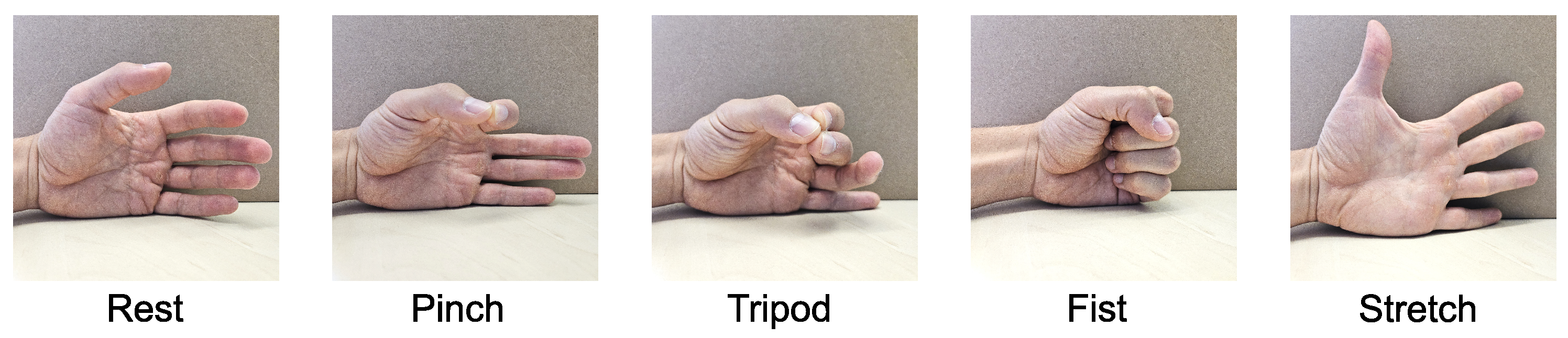

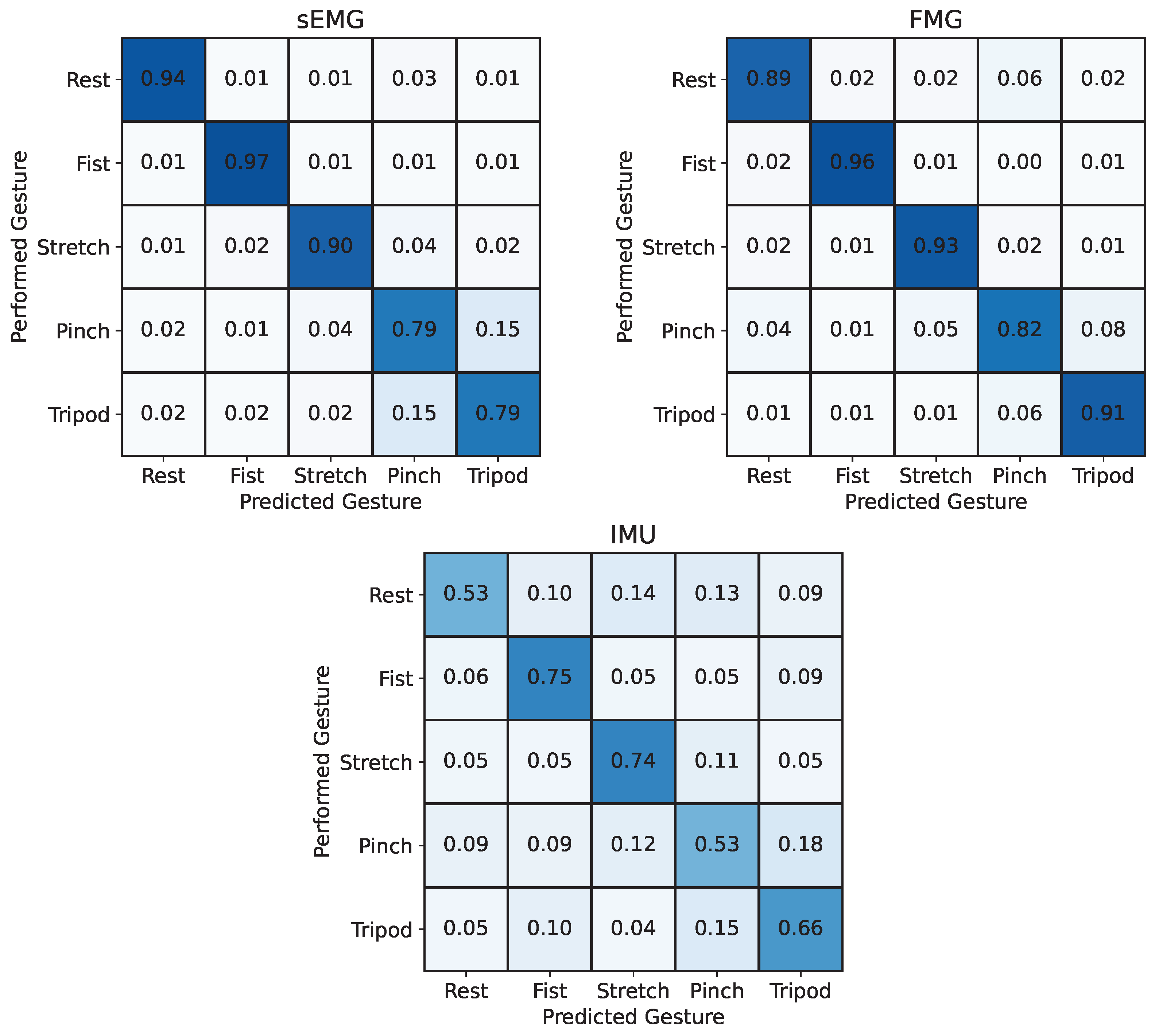

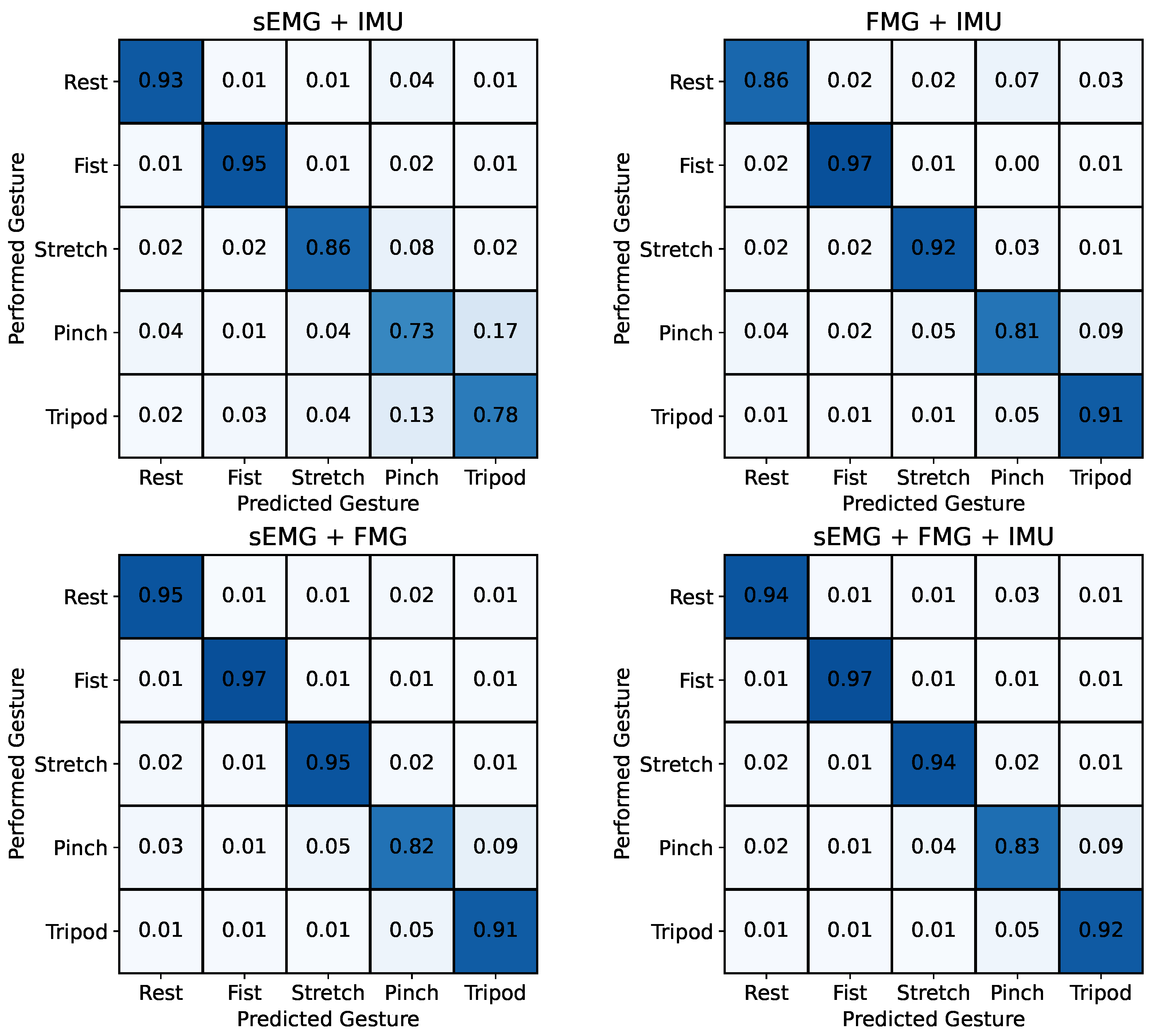

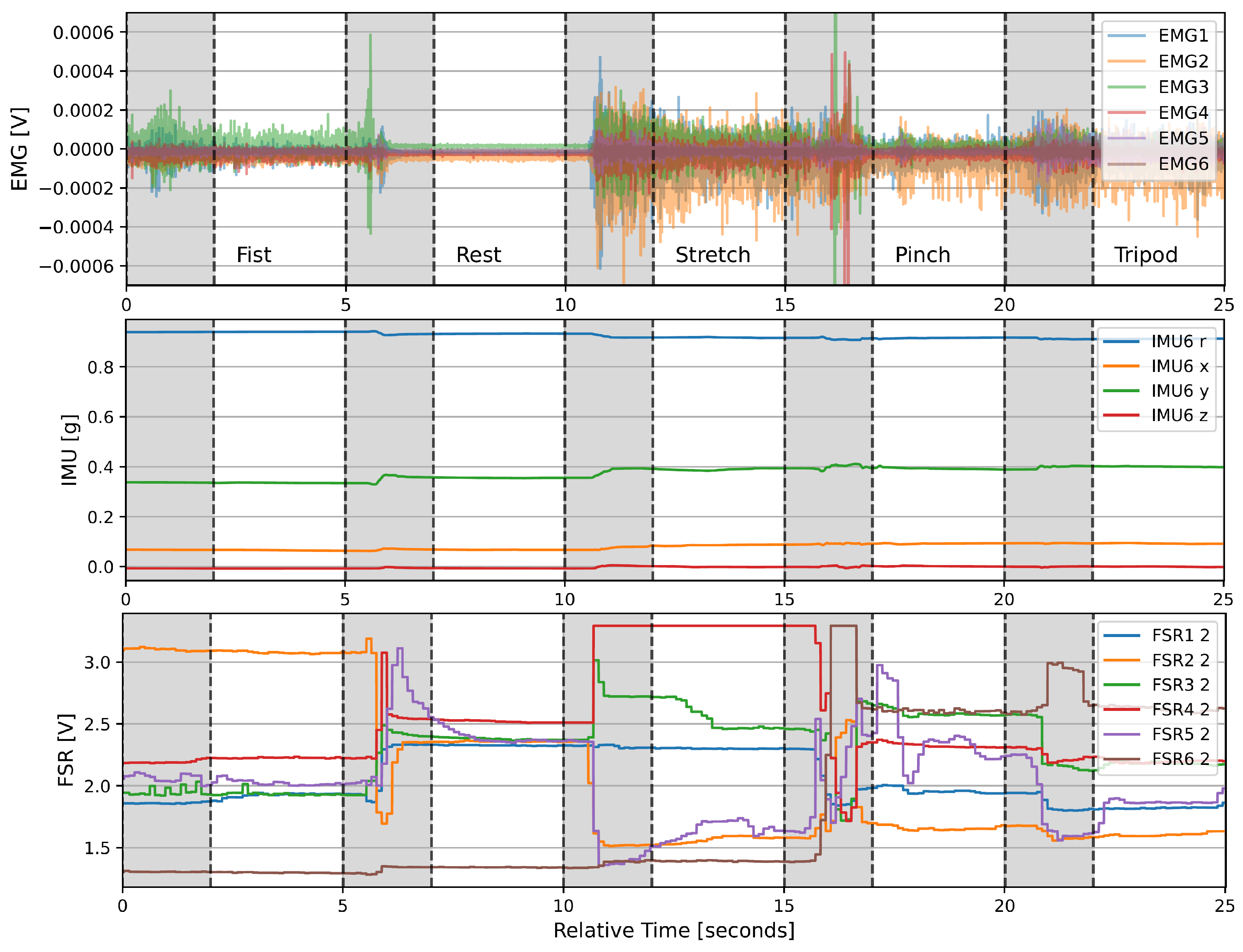

3. Functional Tests and Evaluation

- sEMG: Mean Absolute Value (MAV), Mean Absolute Value Slope (MAVS), Zero Crossings (ZCs), Slope Sign Changes (SSCs), Waveform Length (WL), and Root Mean Square (RMS);

- FMG: Mean Value (MV) and RMS;

- IMU: MV.
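The features listed above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the text here gives no formulas, window length, or thresholds, so the definitions below follow common time-domain formulations, WL is computed as the cumulative absolute sample-to-sample difference (commonly called waveform length), and the function names, the `threshold` parameter, and the `n_segments` parameter are assumptions introduced for this example.

```python
import numpy as np


def semg_features(window, threshold=0.0, n_segments=2):
    """Time-domain features for one window of a single sEMG channel.

    `threshold` (noise dead-band) and `n_segments` (used for MAVS) are
    hypothetical parameters; the paper's actual settings are not given here.
    """
    x = np.asarray(window, dtype=float)
    d = np.diff(x)  # sample-to-sample differences

    mav = np.mean(np.abs(x))          # Mean Absolute Value
    rms = np.sqrt(np.mean(x ** 2))    # Root Mean Square
    wl = np.sum(np.abs(d))            # cumulative length of the waveform

    # Zero crossings: adjacent samples with opposite signs whose
    # difference exceeds the threshold.
    zc = np.sum((x[:-1] * x[1:] < 0) & (np.abs(d) > threshold))

    # Slope sign changes: consecutive differences with opposite signs,
    # at least one of which exceeds the threshold.
    ssc = np.sum(
        (d[:-1] * d[1:] < 0)
        & ((np.abs(d[:-1]) > threshold) | (np.abs(d[1:]) > threshold))
    )

    # MAV slope: mean difference between the MAVs of adjacent segments
    # of the window (assumed segmentation).
    seg_mav = [np.mean(np.abs(s)) for s in np.array_split(x, n_segments)]
    mavs = np.mean(np.diff(seg_mav))

    return {
        "MAV": float(mav),
        "MAVS": float(mavs),
        "ZC": int(zc),
        "SSC": int(ssc),
        "WL": float(wl),
        "RMS": float(rms),
    }


def fmg_features(window):
    """Mean Value and RMS for one window of a single FMG channel."""
    x = np.asarray(window, dtype=float)
    return {"MV": float(np.mean(x)), "RMS": float(np.sqrt(np.mean(x ** 2)))}


def imu_features(window):
    """Mean Value for one window of a single IMU channel."""
    return {"MV": float(np.mean(np.asarray(window, dtype=float)))}
```

In a fusion setting, one would typically call these functions per channel and per window and concatenate the resulting values into a single feature vector for the classifier; how the windows are chosen is left open here.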

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| sEMG | Surface Electromyography |
| MMG | Mechanomyography |
| FMG | Force Myography |
| IMU | Inertial Measurement Unit |
| FSR | Force-Sensitive Resistor |
| PCB | Printed Circuit Board |
| RF | Random Forest |
| SVM | Support Vector Machines |
| KNN | K-Nearest Neighbors |

References

1. Beckerle, P.; Castellini, C.; Lenggenhager, B. Robotic interfaces for cognitive psychology and embodiment research: A research roadmap. Wiley Interdiscip. Rev. Cogn. Sci. 2019, 10, e1486.

2. Mills, K.R. The basics of electromyography. J. Neurol. Neurosurg. Psychiatry 2005, 76, ii32–ii35.

3. Vogel, J.; Castellini, C.; van der Smagt, P. EMG-based teleoperation and manipulation with the DLR LWR-III. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 672–678.

4. Artemiadis, P.K.; Kyriakopoulos, K.J. An EMG-based robot control scheme robust to time-varying EMG signal features. IEEE Trans. Inf. Technol. Biomed. 2010, 14, 582–588.

5. Rojas-Martinez, M.; Mananas, M.A.; Alonso, J.F. High-density surface EMG maps from upper-arm and forearm muscles. J. Neuroeng. Rehabil. 2012, 9, 1–17.

6. Dwivedi, A.; Lara, J.; Cheng, L.K.; Paskaranandavadivel, N.; Liarokapis, M. High-density electromyography based control of robotic devices: On the execution of dexterous manipulation tasks. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 3825–3831.

7. Artemiadis, P.K.; Kyriakopoulos, K.J. EMG-based control of a robot arm using low-dimensional embeddings. IEEE Trans. Robot. 2010, 26, 393–398.

8. Meeker, C.; Ciocarlie, M. EMG-Controlled Non-Anthropomorphic Hand Teleoperation Using a Continuous Teleoperation Subspace. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 1576–1582.

9. Dwivedi, A.; Kwon, Y.; Liarokapis, M. EMG-Based Decoding of Manipulation Motions in Virtual Reality: Towards Immersive Interfaces. In Proceedings of the 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Toronto, ON, Canada, 11–14 October 2020; pp. 3296–3303.

10. Atkins, D.J.; Heard, D.C.Y.; Donovan, W.H. Epidemiologic overview of individuals with upper-limb loss and their reported research priorities. JPO J. Prosthetics Orthot. 1996, 8, 2–11.

11. Atzori, M.; Gijsberts, A.; Castellini, C.; Caputo, B.; Hager, A.G.M.; Elsig, S.; Giatsidis, G.; Bassetto, F.; Müller, H. Electromyography data for non-invasive naturally-controlled robotic hand prostheses. Sci. Data 2014, 1, 140053.

12. Gharibo, J.S.; Naish, M.D. Multi-modal prosthesis control using sEMG, FMG and IMU sensors. In Proceedings of the 2022 44th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Glasgow, UK, 11–15 July 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 2983–2987.

13. Zhou, H.; Alici, G. Non-invasive human-machine interface (HMI) systems with hybrid on-body sensors for controlling upper-limb prosthesis: A review. IEEE Sens. J. 2022, 22, 10292–10307.

14. Jiang, S.; Gao, Q.; Liu, H.; Shull, P.B. A novel, co-located EMG-FMG-sensing wearable armband for hand gesture recognition. Sens. Actuators A Phys. 2020, 301, 111738.

15. Connan, M.; Ramírez, E.R.; Vodermayer, B.; Castellini, C. Assessment of a wearable force- and electromyography device and comparison of the related signals for myocontrol. Front. Neurorobot. 2016, 10, 17.

16. Islam, M.A.; Sundaraj, K.; Ahmad, R.B.; Ahamed, N.U. Mechanomyogram for Muscle Function Assessment: A Review. PLoS ONE 2013, 8, e58902.

17. Posatskiy, A.O.; Chau, T. The effects of motion artifact on mechanomyography: A comparative study of microphones and accelerometers. J. Electromyogr. Kinesiol. 2012, 22, 320–324.

18. Sarlabous, L.; Torres, A.; Fiz, J.A.; Morera, J.; Jané, R. Evaluation and adaptive attenuation of the cardiac vibration interference in mechanomyographic signals. In Proceedings of the 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, San Diego, CA, USA, 28 August–1 September 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 3400–3403.

19. Radmand, A.; Scheme, E.; Englehart, K. High-resolution muscle pressure mapping for upper-limb prosthetic control. In Proceedings of the MEC—Myoelectric Control Symposium, Fredericton, NB, Canada, 19–22 August 2014; pp. 193–197.

20. Cho, E.; Chen, R.; Merhi, L.K.; Xiao, Z.; Pousett, B.; Menon, C. Force myography to control robotic upper extremity prostheses: A feasibility study. Front. Bioeng. Biotechnol. 2016, 4, 18.

21. Xiao, Z.G.; Menon, C. A review of force myography research and development. Sensors 2019, 19, 4557.

22. Ravindra, V.; Castellini, C. A comparative analysis of three non-invasive human-machine interfaces for the disabled. Front. Neurorobot. 2014, 8, 24.

23. Chapman, J.; Dwivedi, A.; Liarokapis, M. A wearable, open-source, lightweight forcemyography armband: On intuitive, robust muscle-machine interfaces. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 4138–4143.

24. Barioul, R.; Gharbi, S.F.; Abbasi, M.B.; Fasih, A.; Ben-Jmeaa-Derbel, H.; Kanoun, O. Wrist Force Myography (FMG) Exploitation for Finger Signs Distinguishing. In Proceedings of the 2019 5th International Conference on Nanotechnology for Instrumentation and Measurement, NanofIM 2019, Sfax, Tunisia, 30–31 October 2019; pp. 13–16.

25. Dwivedi, A.; Groll, H.; Beckerle, P. A Systematic Review of Sensor Fusion Methods Using Peripheral Bio-Signals for Human Intention Decoding. Sensors 2022, 22, 6319.

26. Choi, Y.; Lee, S.; Sung, M.; Park, J.; Kim, S.; Choi, Y. Development of EMG-FMG Based Prosthesis with PVDF-Film Vibrational Feedback Control. IEEE Sens. J. 2021, 21, 23597–23607.

27. Chen, P.; Li, Z.; Togo, S.; Yokoi, H.; Jiang, Y. A Layered sEMG-FMG Hybrid Sensor for Hand Motion Recognition from Forearm Muscle Activities. IEEE Trans. Hum.-Mach. Syst. 2023, 53, 935–944.

28. Ke, A.; Huang, J.; Chen, L.; Gao, Z.; He, J. An ultra-sensitive modular hybrid EMG–FMG sensor with floating electrodes. Sensors 2020, 20, 4775.

29. Dwivedi, A.; Gerez, L.; Hasan, W.; Yang, C.H.; Liarokapis, M. A soft exoglove equipped with a wearable muscle-machine interface based on forcemyography and electromyography. IEEE Robot. Autom. Lett. 2019, 4, 3240–3246.

30. Ahmadizadeh, C.; Merhi, L.K.; Pousett, B.; Sangha, S.; Menon, C. Toward intuitive prosthetic control: Solving common issues using force myography, surface electromyography, and pattern recognition in a pilot case study. IEEE Robot. Autom. Mag. 2017, 24, 102–111.

31. Nowak, M.; Eiband, T.; Castellini, C. Multi-modal myocontrol: Testing combined force- and electromyography. In Proceedings of the 2017 International Conference on Rehabilitation Robotics (ICORR), London, UK, 17–20 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1364–1368.

32. Palermo, F.; Cognolato, M.; Gijsberts, A.; Caputo, B.; Müller, H.; Atzori, M. Analysis of the repeatability of grasp recognition for hand robotic prosthesis control based on sEMG data. In Proceedings of the IEEE International Conference on Rehabilitation Robotics, London, UK, 17–20 July 2017.

33. Krasoulis, A.; Vijayakumar, S.; Nazarpour, K. Effect of user practice on prosthetic finger control with an intuitive myoelectric decoder. Front. Neurosci. 2019, 13, 891.

34. Krasoulis, A.; Kyranou, I.; Erden, M.S.; Nazarpour, K.; Vijayakumar, S. Improved prosthetic hand control with concurrent use of myoelectric and inertial measurements. J. Neuroeng. Rehabil. 2017, 14, 71.

35. NinaPro. Available online: https://ninapro.hevs.ch/ (accessed on 23 September 2024).

36. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

37. Boinee, P.; De Angelis, A.; Foresti, G.L. Meta random forests. Int. J. Comput. Intell. 2005, 2, 138–147.

38. Boateng, E.Y.; Otoo, J.; Abaye, D.A. Basic tenets of classification algorithms K-nearest-neighbor, support vector machine, random forest and neural network: A review. J. Data Anal. Inf. Process. 2020, 8, 341–357.

39. Chen, R.C.; Dewi, C.; Huang, S.W.; Caraka, R.E. Selecting critical features for data classification based on machine learning methods. J. Big Data 2020, 7, 52.

40. Habib, A.B.; Ashraf, F.B.; Shakil, A. Finding Efficient Machine Learning Model for Hand Gesture Classification Using EMG Data. In Proceedings of the 2021 5th International Conference on Electrical Engineering and Information Communication Technology (ICEEICT), Dhaka, Bangladesh, 18–20 November 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–6.

41. Senturk, Z.K.; Bakay, M.S. Machine Learning-Based Hand Gesture Recognition via EMG Data. ADCAIJ Adv. Distrib. Comput. Artif. Intell. J. 2021, 10, 123–136.

42. Kaczmarek, P.; Mańkowski, T.; Tomczyński, J. putEMG—a surface electromyography hand gesture recognition dataset. Sensors 2019, 19, 3548.

43. Ovadia, D.; Segal, A.; Rabin, N. Classification of hand and wrist movements via surface electromyogram using the random convolutional kernels transform. Sci. Rep. 2024, 14, 4134.

| Year and Reference | sEMG Sensor Type | No. of sEMG Channels | FMG Sensor Type | No. of FMG Channels | IMU Type | No. of IMU Channels | Co-Located Sensor Configuration * |
|---|---|---|---|---|---|---|---|
| 2016 [15] | Ottobock MyoBock 13E200 | 10 | FSR | 10 | none | 0 | no |
| 2017 [30] | Ottobock MyoBock 13E200 | 2 | FSR | 90 | none | 0 | no |
| 2017 [31] | Ottobock MyoBock 13E200 | 10 | FSR | 10 | none | 0 | no |
| 2019 [29] | Wet silver electrodes | 3 | FSR | 5 | none | 0 | no |
| 2020 [14] | Silver foil electrodes | 8 | Barometer | 8 | none | 0 | yes |
| 2020 [28] | NeuroSky stainless steel electrodes | 4 | FSR | 4 | none | 0 | yes |
| 2021 [26] | Silver-plated yarn | 1 | FSR | 2 | none | 0 | yes |
| 2022 [12] | Convex electrodes | 5 | FSR | 5 | 9-axis | 1 | yes |
| 2023 [27] | Conductive silicon electrodes | 3 | Reflectance sensor | 3 | none | 0 | yes |
| This work | Delsys Avanti Trigno | 6 | FSR | 24 | 6-axis | 6 | yes |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Andreas, D.; Hou, Z.; Tabak, M.O.; Dwivedi, A.; Beckerle, P. A Multimodal Bracelet to Acquire Muscular Activity and Gyroscopic Data to Study Sensor Fusion for Intent Detection. Sensors 2024, 24, 6214. https://doi.org/10.3390/s24196214
