Abstract

Rotating machinery is widely used in modern industrial systems, and its health status directly affects the operation of the entire system. Timely and accurate diagnosis of rotating machinery faults is crucial for ensuring production safety, reducing economic losses, and improving efficiency. Traditional deep learning methods extract features only from the vertices of the input data and thus overlook the information contained in the relationships between vertices. This paper proposes a Legendre graph convolutional network (LGCN) integrated with a self-attention graph pooling method, referred to as SA-LGCN, and applies it to fault diagnosis of rotating machinery. The SA-LGCN model converts vibration signals from Euclidean space into graph signals in non-Euclidean space and employs a fast local spectral filter based on Legendre polynomials together with self-attention graph pooling, improving the model's stability and computational efficiency. Experiments on 10 planetary gearbox health conditions under various working conditions verify that the proposed method offers significant advantages in fault diagnosis accuracy and load adaptability.

1. Introduction

Rotating machinery plays an indispensable role in modern industrial systems and is extensively used in industries such as aerospace, automotive, and wind power generation [1]. When operating under complex and harsh conditions such as heavy loads, high temperatures, and high speeds, rotating machinery inevitably experiences various types of faults [2]. The reliable operation of these mechanical devices is crucial to production efficiency, safety, and energy utilization efficiency. Therefore, timely and accurate diagnosis of rotating machinery faults is essential for ensuring production safety, reducing economic losses, and improving efficiency. Fault diagnosis helps maintenance personnel take preventive measures before a fault develops into a failure, thereby extending equipment life, optimizing maintenance and repair plans, and reducing downtime.

However, fault diagnosis of rotating machinery faces numerous challenges in practical engineering applications, including the high dimensionality, nonlinearity, and non-stationarity of the data, the diversity of fault modes, and the weakness of early fault signals. These factors significantly increase the difficulty of diagnosis. To address these challenges, numerous fault diagnosis methods have been developed, including traditional signal processing methods, machine learning techniques, deep learning techniques, and, more recently, methods based on graph neural networks. Although these methods have improved the accuracy and efficiency of fault diagnosis, they remain limited in handling complex data structures, detecting early faults, and generalizing to new conditions. Developing more efficient and intelligent fault diagnosis methods has therefore become an important research direction in this field. In response, this paper introduces a fault diagnosis method for rotating machinery based on graph convolutional neural networks.

Benefiting from advances in deep learning theory, researchers have developed various intelligent fault diagnosis models based on deep learning. These models enable end-to-end fault diagnosis without manual feature extraction. Jia et al. [3] proposed a local connection network (LCN) constructed using a normalized sparse autoencoder (NSAE) for intelligent fault diagnosis, integrating feature extraction and fault recognition into a general-purpose learning procedure that effectively identifies the health condition of machinery. Chen et al. [4] proposed a diagnostic model combining convolutional neural networks (CNNs) and extreme learning machines (ELMs), achieving higher classification accuracy with less computation time. Jia et al. [5] designed a KMedoids clustering method based on dynamic time warping (DTW-KMedoids) to cluster multi-channel signals, which were then input into clustered blueprint separable convolutions (CBS-Conv) for end-to-end fault diagnosis of high-speed train (HST) bogies. Zhang et al. [6] proposed a method based on recurrent neural networks (RNNs) for identifying the types of rotating machinery faults. Shi et al. [7] proposed a multi-scale feature adversarial fusion network for unsupervised cross-domain fault diagnosis. Kong et al. [8] proposed a multi-task self-supervised method to mine fault diagnosis knowledge from unlabeled data. Liu et al. [9] proposed a class-incremental continual learning model based on weight space meta-representation (WSMR) for fault diagnosis of switch machine plunger pumps. Qian et al. [10] used the IJDA mechanism and the I-Softmax loss to construct a deep discriminative transfer learning network (DDTLN) for cross-machine fault transfer diagnosis.

Intelligent fault diagnosis based on deep learning has yielded numerous results. However, traditional deep learning models accept only fixed-dimensional inputs whose local elements must be ordered. Consequently, widely used models such as CNNs struggle to achieve optimal performance on non-Euclidean structured data because of their inherent structural characteristics [11]. Given the universality of graph structures, extending deep learning to graphs has attracted increasing attention, leading to the emergence of graph neural networks (GNNs) and the development of models such as GCN [12], GraphSAGE [13], and GAT [14]. These models are widely used in computer vision [15], natural language processing [16], and recommendation systems [17].

Inspired by this, researchers have started to apply GNNs to fault diagnosis. Li [18] proposed a multi-receptive field graph convolutional network (MRF-GCN) and verified its effectiveness in mechanical fault diagnosis. Chen [19] proposed a structural-analysis-based GCN fault diagnosis method that converts collected acoustic signals into association graphs and inputs them into a GCN model to diagnose rolling bearing faults. Zhao [20] designed a multi-scale deep graph convolutional network (MS-DGCN) to diagnose rotor-bearing system faults under fluctuating conditions. Yang [21] proposed a deep capsule graph convolutional network (DCGCN) to diagnose compound faults in harmonic drives. Yang [22] proposed a spatiotemporal-graph-based feature extraction method, called SuperGraph, for fault diagnosis of rotating machinery. Li [23] proposed an adaptive multi-channel heterogeneous graph neural network (AMHGNN), which achieves more accurate node classification through flexible topological structures. Chen [24] proposed a neighborhood convolutional graph neural network (NCGNN) that avoids training the model with an adjacency matrix, effectively controlling training costs and enhancing scalability. Wang [25] proposed a temporal–spatial graph neural network with an attention-aware module (A-TSGNN) for mechanical fault diagnosis. Zhang [26] proposed a Granger causality test-based graph neural network method (GCT-GNN) for bearing fault detection. Cao [27] proposed a pulse graph attention network for intelligent fault diagnosis of planetary gearboxes that extracts spatiotemporal features from gearbox signals simultaneously. Yu [28] proposed a two-stage importance-aware subgraph convolutional network (I2SGCN) based on multi-source sensors, which improves fault recognition performance under variable conditions and limited data.
Zhong [29] designed a hierarchical GCN with latent structure learning (HGCN-LSL) for industrial fault diagnosis. This algorithm organizes hierarchical networks to collaboratively improve the quality of the latent graph structure, thereby ensuring enhanced diagnostic performance.

The contributions of this paper can be summarized as follows:

- We propose a method for constructing association graphs based on vibration signals, which transforms vibration signals in Euclidean space with translation invariance into graph signals in non-Euclidean space.

- We propose a fast local spectral filter based on Legendre polynomials. Compared to traditional Chebyshev filters used in graph neural networks, it enhances the model’s stability and load adaptability.

- We propose a graph pooling method based on self-attention for fault diagnosis of rotating machinery. This method adaptively focuses on key nodes in the graph to effectively capture fault features, thereby improving the accuracy of fault diagnosis.

2. Fault Diagnosis Model Based on SA-LGCN

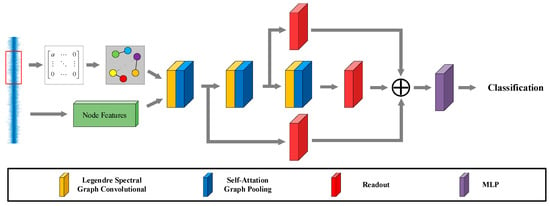

The collected vibration signals of rotating machinery include both normal and fault vibration signals. Using the k-nearest neighbor (KNN) algorithm, the collected vibration signals are converted into non-Euclidean structured data, which additionally encode the similarity relationships between signal segments. However, non-Euclidean data lack translation invariance, making traditional convolutional neural networks unsuitable. Therefore, this paper uses graph convolutional networks (GCNs) to extract spatial features from the topology of the non-Euclidean structured data, so that fault diagnosis of rotating machinery becomes a graph classification task. Because weak early fault signals in rotating machinery are difficult to distinguish from normal signals, this paper proposes a fault diagnosis model based on self-attention pooling and a Legendre graph convolutional neural network, referred to as SA-LGCN. The model comprises four main components: (1) vibration signal association graph construction; (2) Legendre graph convolution; (3) self-attention graph pooling; and (4) readout. Each component is described in detail in this section. The model is illustrated in Figure 1.

Figure 1.

SA-LGCN model.

2.1. Vibration Signal Association Graph Construction

In practice, the collected vibration signals are typically one-dimensional time-domain signals. By applying the KNN algorithm, these signals are converted into a graph, i.e., transformed from the general data domain to the graph domain. Suppose the length of the collected vibration signal is $T$. First, the original data are divided into a set of independent, non-overlapping sub-samples, each of length $l$:

$$\mathbf{X} = \{\mathbf{x}_1, \mathbf{x}_2, \dots, \mathbf{x}_n\}, \qquad n = \left\lceil \frac{T}{l} \right\rceil \tag{1}$$
where $\mathbf{X}$ represents the set of sub-samples, $n$ represents the number of sub-samples, and $\lceil \cdot \rceil$ represents the ceiling operator. Each sub-sample is regarded as a node in the graph. Next, the KNN algorithm is used to find the neighbors of each node, and an association graph is constructed from the above dataset. The number of nodes in each graph is $m$. According to the KNN algorithm, the nearest neighbors of node $\mathbf{x}_i$ can be represented as

$$\Gamma(\mathbf{x}_i) = \operatorname{KNN}(k, \mathbf{x}_i, \Psi_m) \tag{2}$$
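where $\Gamma(\mathbf{x}_i)$ represents the set of neighbors of sample $\mathbf{x}_i$, $\operatorname{KNN}(\cdot)$ represents the output of the KNN algorithm, $k$ represents the number of nearest neighbors used by the KNN algorithm, and $\Psi_m$ represents the subset of $m$ samples from which the neighbors are selected.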
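A minimal NumPy sketch of the segmentation and neighbor search described above may help. It assumes that the final, shorter segment is zero-padded to length $l$ (the text does not say how a remainder is handled) and uses a brute-force Euclidean distance search; the helper names `segment_signal` and `knn_neighbors` are hypothetical, and the random signal is only a stand-in for a vibration record.

```python
import math

import numpy as np


def segment_signal(x: np.ndarray, l: int) -> np.ndarray:
    """Split a 1-D signal of length T into n = ceil(T / l) non-overlapping sub-samples.

    Assumption: the last, shorter segment is zero-padded to length l.
    """
    T = x.shape[0]
    n = math.ceil(T / l)
    padded = np.zeros(n * l, dtype=x.dtype)
    padded[:T] = x
    return padded.reshape(n, l)  # row i is sub-sample x_i, i.e. one graph node


def knn_neighbors(nodes: np.ndarray, k: int) -> np.ndarray:
    """Indices of the k nearest neighbors (Euclidean distance) of every node."""
    diff = nodes[:, None, :] - nodes[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)
    np.fill_diagonal(dist, np.inf)  # a node is not its own neighbor
    return np.argsort(dist, axis=1)[:, :k]  # row i holds the indices in Gamma(x_i)


rng = np.random.default_rng(0)
signal = rng.standard_normal(10140)  # synthetic stand-in for a vibration record
X = segment_signal(signal, l=1024)   # ceil(10140 / 1024) = 10 sub-samples, so m = 10 nodes
neighbors = knn_neighbors(X, k=3)
print(X.shape, neighbors.shape)      # (10, 1024) (10, 3)
```

Because each graph only contains $m$ sub-samples, the pairwise distance matrix stays small, so a brute-force search is adequate in this setting.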

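Finally, weights are assigned to the edges between nodes of the constructed KNN graph. In this paper, a Gaussian kernel weighting function is chosen, defined as follows:

$$w_{ij} = \exp\left(-\frac{\|\mathbf{x}_i - \mathbf{x}_j\|^2}{2\sigma^2}\right), \qquad \mathbf{x}_j \in \Gamma(\mathbf{x}_i) \tag{3}$$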
where $w_{ij}$ represents the edge weight between nodes $\mathbf{x}_i$ and $\mathbf{x}_j$ in the KNN graph, $\|\cdot\|$ represents the Euclidean norm, and $\sigma$ represents the bandwidth of the Gaussian kernel, which controls the radial range of influence.
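The Gaussian edge weighting can be sketched as follows. The sketch assumes that the directed KNN relation is symmetrized (an edge is kept if either node is among the other's $k$ nearest neighbors), so that the result is a weighted undirected graph; the function name `gaussian_knn_adjacency`, the value of $\sigma$, and the random sub-samples are illustrative, not values from the paper.

```python
import numpy as np


def gaussian_knn_adjacency(nodes: np.ndarray, k: int, sigma: float) -> np.ndarray:
    """Weighted adjacency with w_ij = exp(-||x_i - x_j||^2 / (2 sigma^2)) on KNN edges.

    Assumption: the directed KNN relation is symmetrized, so W is symmetric (undirected graph).
    """
    m = nodes.shape[0]
    sq_dist = np.sum((nodes[:, None, :] - nodes[None, :, :]) ** 2, axis=-1)
    search = sq_dist.copy()
    np.fill_diagonal(search, np.inf)              # exclude self-loops from the search
    nbrs = np.argsort(search, axis=1)[:, :k]      # k nearest neighbors of each node
    mask = np.zeros((m, m), dtype=bool)
    mask[np.repeat(np.arange(m), k), nbrs.ravel()] = True
    mask = mask | mask.T                          # keep an edge if either endpoint selected the other
    return np.where(mask, np.exp(-sq_dist / (2.0 * sigma**2)), 0.0)


rng = np.random.default_rng(1)
nodes = rng.standard_normal((10, 1024))  # 10 sub-samples of length 1024 (random stand-ins)
W = gaussian_knn_adjacency(nodes, k=3, sigma=32.0)
```

A larger $\sigma$ flattens the weights toward 1, while a smaller $\sigma$ makes them decay quickly with distance, so $\sigma$ should be chosen relative to the typical distance between sub-samples.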

2.2. Legendre Graph Convolutional Filter

Following the above steps, one-dimensional vibration signals can be converted into weighted undirected graph data with a non-Euclidean structure. A given undirected graph is denoted as

$$\mathcal{G} = (\mathcal{V}, \mathcal{E}, \mathbf{W})$$
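where $\mathcal{V} = \{v_1, v_2, \dots, v_N\}$ represents the set of the $N$ vertices of the undirected graph $\mathcal{G}$, $\mathcal{E}$ represents the set of edges of $\mathcal{G}$, $(v_i, v_j) \in \mathcal{E}$ indicates that an edge exists between nodes $v_i$ and $v_j$, $\mathbf{W} \in \mathbb{R}^{N \times N}$ represents the weighted adjacency matrix of $\mathcal{G}$, and $w_{ij}$ represents the weight of the edge between nodes $v_i$ and $v_j$.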

The graph Laplacian matrix $\mathbf{L}$ is defined as

$$\mathbf{L} = \mathbf{D} - \mathbf{W} \tag{4}$$
where $\mathbf{D}$ represents the degree matrix of the weighted undirected graph $\mathcal{G}$. For any given vertex $v_i$, its degree is the weighted sum of all edges connected to that node:

$$d_i = \sum_{j=1}^{N} w_{ij} \tag{5}$$
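The degree matrix is $\mathbf{D} = \operatorname{diag}(d_1, d_2, \dots, d_N)$, an $N \times N$ diagonal matrix. Since the constructed graph is a weighted undirected graph, the adjacency matrix $\mathbf{W}$ is symmetric. Therefore, the Laplacian matrix $\mathbf{L}$ is a real symmetric matrix, which can be orthogonally diagonalized. In other words,

$$\mathbf{L} = \mathbf{U} \boldsymbol{\Lambda} \mathbf{U}^{\top}, \qquad \boldsymbol{\Lambda} = \operatorname{diag}(\lambda_1, \lambda_2, \dots, \lambda_N) \tag{6}$$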
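where $\lambda_l$ represents the $l$-th eigenvalue of the Laplacian matrix $\mathbf{L}$, $u_l(i)$ represents the $i$-th component of the eigenvector $\mathbf{u}_l$ corresponding to $\lambda_l$, and $\mathbf{U} = [\mathbf{u}_1, \mathbf{u}_2, \dots, \mathbf{u}_N]$ is an orthogonal matrix, i.e., $\mathbf{U}\mathbf{U}^{\top} = \mathbf{I}_N$. Therefore,

$$\mathbf{L} = \sum_{l=1}^{N} \lambda_l \, \mathbf{u}_l \mathbf{u}_l^{\top} \tag{7}$$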
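The following NumPy sketch builds $\mathbf{L} = \mathbf{D} - \mathbf{W}$ for a small random symmetric weight matrix (a stand-in for a KNN graph) and checks the orthogonal diagonalization numerically. `numpy.linalg.eigh` is used because it is intended for real symmetric matrices: it returns eigenvalues in ascending order and orthonormal eigenvectors as the columns of `U`.

```python
import numpy as np

rng = np.random.default_rng(2)
W = np.triu(rng.random((6, 6)), 1)
W = W + W.T                      # symmetric weights with zero diagonal: an undirected graph

d = W.sum(axis=1)                # degrees d_i = sum_j w_ij
D = np.diag(d)                   # degree matrix
L = D - W                        # graph Laplacian
lam, U = np.linalg.eigh(L)       # ascending eigenvalues, orthonormal eigenvectors as columns

# Rank-one expansion L = sum_l lambda_l u_l u_l^T
L_sum = sum(lam[l] * np.outer(U[:, l], U[:, l]) for l in range(6))

print(np.allclose(U @ np.diag(lam) @ U.T, L))  # L = U Lambda U^T
print(np.allclose(U.T @ U, np.eye(6)))         # U is orthogonal
```

Every row of $\mathbf{L}$ sums to zero, so the smallest eigenvalue is 0 with a constant eigenvector; all eigenvalues are non-negative, which is used later when the spectrum is rescaled.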
Next, we define the Fourier transform on the graph. The classical Fourier transform is

$$F(\omega) = \int_{-\infty}^{+\infty} f(t) \, e^{-i\omega t} \, dt \tag{8}$$
which transforms the continuous signal $f(t)$ from the time domain to the frequency domain. The basis function $e^{-i\omega t}$ is an eigenfunction of the Laplacian operator, since $\Delta e^{-i\omega t} = \frac{\partial^2}{\partial t^2} e^{-i\omega t} = -\omega^2 e^{-i\omega t}$. The Laplacian operator in continuous space corresponds to the Laplacian matrix in discrete space, and the eigenvectors of the Laplacian matrix form an orthogonal basis of $N$-dimensional space. According to reference [30], when the classical Fourier transform is extended to the graph Fourier transform, the eigenfunctions of the Laplacian operator are replaced by the eigenvectors of the graph Laplacian matrix. The specific form is

$$\hat{f}(\lambda_l) = \sum_{i=1}^{N} f(i) \, u_l(i) \tag{9}$$
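The graph Fourier transform thus converts a graph signal from the vertex domain to the spectral domain, whose basis is formed by the eigenvectors of the Laplacian matrix. Expanding it in matrix form, we obtain

$$\begin{bmatrix} \hat{f}(\lambda_1) \\ \hat{f}(\lambda_2) \\ \vdots \\ \hat{f}(\lambda_N) \end{bmatrix} = \begin{bmatrix} u_1(1) & u_1(2) & \cdots & u_1(N) \\ u_2(1) & u_2(2) & \cdots & u_2(N) \\ \vdots & \vdots & \ddots & \vdots \\ u_N(1) & u_N(2) & \cdots & u_N(N) \end{bmatrix} \begin{bmatrix} f(1) \\ f(2) \\ \vdots \\ f(N) \end{bmatrix} \tag{10}$$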
That is, the graph Fourier transform is defined as

$$\hat{\mathbf{f}} = \mathbf{U}^{\top} \mathbf{f} \tag{11}$$
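Similarly, the inverse graph Fourier transform in matrix form is given by

$$\begin{bmatrix} f(1) \\ f(2) \\ \vdots \\ f(N) \end{bmatrix} = \begin{bmatrix} u_1(1) & u_2(1) & \cdots & u_N(1) \\ u_1(2) & u_2(2) & \cdots & u_N(2) \\ \vdots & \vdots & \ddots & \vdots \\ u_1(N) & u_2(N) & \cdots & u_N(N) \end{bmatrix} \begin{bmatrix} \hat{f}(\lambda_1) \\ \hat{f}(\lambda_2) \\ \vdots \\ \hat{f}(\lambda_N) \end{bmatrix} \tag{12}$$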
That is, the inverse graph Fourier transform is defined as

$$\mathbf{f} = \mathbf{U} \hat{\mathbf{f}} \tag{13}$$
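Numerically, the graph Fourier transform and its inverse are just multiplications by $\mathbf{U}^{\top}$ and $\mathbf{U}$. The sketch below, on a small random graph used purely for illustration, checks that the component form $\hat{f}(\lambda_l) = \sum_i f(i) u_l(i)$ matches the matrix form and that the inverse transform recovers the original signal.

```python
import numpy as np

rng = np.random.default_rng(3)
W = np.triu(rng.random((5, 5)), 1)
W = W + W.T
L = np.diag(W.sum(axis=1)) - W
lam, U = np.linalg.eigh(L)   # columns of U are the eigenvectors u_l

f = rng.standard_normal(5)   # a graph signal: one value per vertex
f_hat = U.T @ f              # graph Fourier transform
f_rec = U @ f_hat            # inverse graph Fourier transform

# Component form for one frequency index: f_hat(lambda_l) = sum_i f(i) u_l(i)
l = 2
component = sum(f[i] * U[i, l] for i in range(5))
print(np.isclose(f_hat[l], component), np.allclose(f_rec, f))
```

Because $\mathbf{U}$ is orthogonal, the transform also preserves the signal energy, $\|\hat{\mathbf{f}}\| = \|\mathbf{f}\|$.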
The convolution theorem states that the Fourier transform of the convolution of two functions equals the product of their Fourier transforms. Extending this to the graph Fourier transform, we obtain

$$\widehat{(f * g)}(\lambda_l) = \hat{f}(\lambda_l) \, \hat{g}(\lambda_l) \tag{14}$$
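where $*$ represents the graph convolution operator, $\mathbf{f}$ and $\mathbf{g}$ are graph signals in the vertex domain, and $\hat{\mathbf{f}}$ and $\hat{\mathbf{g}}$ are the corresponding results after the graph Fourier transform. Similar to Equations (9) and (11), we obtain

$$\widehat{(f * g)}(\lambda_l) = \sum_{i=1}^{N} (f * g)(i) \, u_l(i) \tag{15}$$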
The corresponding matrix form is

$$\widehat{(\mathbf{f} * \mathbf{g})} = \mathbf{U}^{\top} (\mathbf{f} * \mathbf{g}) \tag{16}$$
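Assuming $\mathbf{g}$ is the convolution kernel, using Equation (14), we obtain

$$\widehat{(\mathbf{f} * \mathbf{g})} = \begin{bmatrix} \hat{g}(\lambda_1)\hat{f}(\lambda_1) \\ \vdots \\ \hat{g}(\lambda_N)\hat{f}(\lambda_N) \end{bmatrix} = \begin{bmatrix} \hat{g}(\lambda_1) & & \\ & \ddots & \\ & & \hat{g}(\lambda_N) \end{bmatrix} \hat{\mathbf{f}} = \begin{bmatrix} \hat{g}(\lambda_1) & & \\ & \ddots & \\ & & \hat{g}(\lambda_N) \end{bmatrix} \mathbf{U}^{\top} \mathbf{f} \tag{17}$$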
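where the first equality is the matrix form of Equation (14), the second uses the multiplication property of diagonal matrices, and the third uses Equation (11). Combining Equation (13) with the convolution theorem, the inverse graph Fourier transform of Equation (17) gives the graph convolution of the two signals:

$$\mathbf{f} * \mathbf{g} = \mathbf{U} \begin{bmatrix} \hat{g}(\lambda_1) & & \\ & \ddots & \\ & & \hat{g}(\lambda_N) \end{bmatrix} \mathbf{U}^{\top} \mathbf{f} = \mathbf{U} \left( (\mathbf{U}^{\top} \mathbf{g}) \odot (\mathbf{U}^{\top} \mathbf{f}) \right) \tag{18}$$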
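where $\odot$ denotes the Hadamard product. In graph convolutional neural networks, when the graph signal $\mathbf{x}$ is acted upon by the convolution kernel $g_\theta$, the above equation is also written as

$$g_\theta * \mathbf{x} = \mathbf{U} g_\theta(\boldsymbol{\Lambda}) \mathbf{U}^{\top} \mathbf{x}, \qquad g_\theta(\boldsymbol{\Lambda}) = \operatorname{diag}\left(\hat{g}_\theta(\lambda_1), \dots, \hat{g}_\theta(\lambda_N)\right) \tag{19}$$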
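A short numerical check of the spectral convolution, using a small random graph and random signals as stand-ins: the diagonal-matrix form $\mathbf{U}\operatorname{diag}(\hat{\mathbf{g}})\mathbf{U}^{\top}\mathbf{f}$ and the Hadamard form $\mathbf{U}((\mathbf{U}^{\top}\mathbf{g}) \odot (\mathbf{U}^{\top}\mathbf{f}))$ should coincide. The last lines apply a filter $g_\theta(\boldsymbol{\Lambda})$ defined directly on the eigenvalues; the heat-kernel-like response $e^{-\lambda}$ is an illustrative choice, not the paper's kernel.

```python
import numpy as np

rng = np.random.default_rng(4)
W = np.triu(rng.random((6, 6)), 1)
W = W + W.T
L = np.diag(W.sum(axis=1)) - W
lam, U = np.linalg.eigh(L)

f = rng.standard_normal(6)   # input graph signal
g = rng.standard_normal(6)   # kernel given in the vertex domain
g_hat = U.T @ g              # its spectral response

conv_diag = U @ np.diag(g_hat) @ U.T @ f      # U diag(g_hat) U^T f
conv_hadamard = U @ (g_hat * (U.T @ f))       # U ((U^T g) elementwise (U^T f))

# A filter specified directly as a function of the eigenvalues, g_theta(Lambda);
# exp(-lambda) is a low-pass response chosen only for illustration.
g_theta = np.exp(-lam)
x_filtered = U @ np.diag(g_theta) @ U.T @ f
print(np.allclose(conv_diag, conv_hadamard))
```

Every evaluation in this form needs the full eigendecomposition of $\mathbf{L}$, which costs $O(N^3)$; this is the cost that the polynomial approximation that follows is designed to avoid.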
To avoid the eigenvalue decomposition and reduce the computational complexity of graph convolution, a $K$-th order orthogonal polynomial is generally used to approximate the convolution kernel $g_\theta$. This study proposes a novel graph convolution filter that replaces the traditional Chebyshev orthogonal polynomials with Legendre orthogonal polynomials:

$$g_\theta(\boldsymbol{\Lambda}) \approx \sum_{k=0}^{K} \theta_k P_k(\tilde{\boldsymbol{\Lambda}}) \tag{20}$$
where $\theta_k$ represents the coefficient of the $k$-th order Legendre polynomial, and $P_k(\cdot)$ represents the $k$-th order Legendre polynomial. Since Legendre polynomials are defined on $[-1, 1]$, the eigenvalue diagonal matrix $\boldsymbol{\Lambda}$ must be rescaled into this interval. The procedure is the same as for the Chebyshev polynomial approximation: first, the maximum eigenvalue $\lambda_{\max}$ is obtained, e.g., by power iteration; then the eigenvalues are normalized with the transformation $\tilde{\boldsymbol{\Lambda}} = \frac{2}{\lambda_{\max}} \boldsymbol{\Lambda} - \mathbf{I}_N$, so that $\tilde{\lambda}_l \in [-1, 1]$. Next, we show that Legendre polynomials can be used to approximate the convolution kernel $g_\theta$. Substituting Equation (20) into Equation (19), we obtain

$$g_\theta * \mathbf{x} \approx \mathbf{U} \left( \sum_{k=0}^{K} \theta_k P_k(\tilde{\boldsymbol{\Lambda}}) \right) \mathbf{U}^{\top} \mathbf{x} \tag{21}$$
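As in the Chebyshev approximation, since $(\mathbf{U} \tilde{\boldsymbol{\Lambda}} \mathbf{U}^{\top})^k = \mathbf{U} \tilde{\boldsymbol{\Lambda}}^k \mathbf{U}^{\top}$, every polynomial satisfies $\mathbf{U} P_k(\tilde{\boldsymbol{\Lambda}}) \mathbf{U}^{\top} = P_k(\tilde{\mathbf{L}})$, and we derive

$$g_\theta * \mathbf{x} \approx \sum_{k=0}^{K} \theta_k P_k(\tilde{\mathbf{L}}) \, \mathbf{x} \tag{22}$$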
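where $\tilde{\mathbf{L}} = \frac{2}{\lambda_{\max}} \mathbf{L} - \mathbf{I}_N$. Because $P_k(\tilde{\mathbf{L}})\mathbf{x}$ can be computed with the three-term recurrence $(k+1) P_{k+1}(y) = (2k+1) \, y \, P_k(y) - k \, P_{k-1}(y)$, with $P_0(y) = 1$ and $P_1(y) = y$, the filter requires only $K$ (sparse) matrix-vector products and no eigendecomposition. It is also $K$-localized: a $K$-th order polynomial of $\tilde{\mathbf{L}}$ only combines nodes that are at most $K$ hops apart.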
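The sketch below shows how such a Legendre filter can be evaluated without an eigendecomposition: $\lambda_{\max}$ is estimated by power iteration, the Laplacian is rescaled to $\tilde{\mathbf{L}}$, and $\sum_k \theta_k P_k(\tilde{\mathbf{L}})\mathbf{x}$ is accumulated with the three-term Legendre recurrence. The coefficients `theta`, the random graph, and the helper names are hypothetical; the result is checked against the same filter applied in the spectral domain with `numpy.polynomial.legendre.legval`. This is a single-channel sketch, not the authors' layer, which would learn $\theta_k$ and map several input features to several output features.

```python
import numpy as np


def lambda_max_power_iteration(L, iters=500, seed=0):
    """Estimate the largest eigenvalue of a symmetric PSD matrix by power iteration."""
    v = np.random.default_rng(seed).standard_normal(L.shape[0])
    for _ in range(iters):
        v = L @ v
        v /= np.linalg.norm(v)
    return float(v @ L @ v)  # Rayleigh quotient of the approximate top eigenvector


def legendre_filter(L, x, theta, lam_max):
    """Return sum_k theta[k] * P_k(L_tilde) @ x using only matrix-vector products.

    L_tilde = 2 L / lam_max - I maps the spectrum of L from [0, lam_max] to [-1, 1].
    Legendre recurrence: (k+1) P_{k+1}(y) = (2k+1) y P_k(y) - k P_{k-1}(y), P_0 = 1, P_1 = y.
    """
    L_t = 2.0 * L / lam_max - np.eye(L.shape[0])
    p_prev, p_curr = x, L_t @ x  # P_0(L_t) x and P_1(L_t) x
    out = theta[0] * p_prev
    if len(theta) > 1:
        out = out + theta[1] * p_curr
    for k in range(1, len(theta) - 1):
        p_next = ((2 * k + 1) * (L_t @ p_curr) - k * p_prev) / (k + 1)
        out = out + theta[k + 1] * p_next
        p_prev, p_curr = p_curr, p_next
    return out


rng = np.random.default_rng(5)
W = np.triu(rng.random((8, 8)), 1)
W = W + W.T
L = np.diag(W.sum(axis=1)) - W
x = rng.standard_normal(8)
theta = np.array([0.5, -0.2, 0.3, 0.1])  # K = 3; hypothetical coefficients

lam_est = lambda_max_power_iteration(L)
y_recur = legendre_filter(L, x, theta, lam_est)

# Reference: the same polynomial filter applied in the spectral domain, U g(Lambda_tilde) U^T x
lam, U = np.linalg.eigh(L)
g = np.polynomial.legendre.legval(2.0 * lam / lam_est - 1.0, theta)
y_spec = U @ (g * (U.T @ x))
print(np.allclose(y_recur, y_spec))
```

Swapping the recurrence line for the Chebyshev one, $T_{k+1}(y) = 2yT_k(y) - T_{k-1}(y)$, would give a ChebyNet-style filter, which makes the two polynomial bases easy to compare under otherwise identical settings.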

2.3. Graph Pooling

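As with pooling in CNNs on images, pooling operations can be defined on graphs to reduce the size of the graph after a GCN layer. DiffPool [31] is a differentiable pooling method that introduces a learnable hierarchical clustering module by training an assignment matrix $\mathbf{S}^{(l)}$ at each layer:

$$\mathbf{S}^{(l)} = \operatorname{softmax}\left(\operatorname{GNN}_{l,\text{pool}}(\mathbf{A}^{(l)}, \mathbf{X}^{(l)})\right), \quad \mathbf{X}^{(l+1)} = \mathbf{S}^{(l)\top} \operatorname{GNN}_{l,\text{embed}}(\mathbf{A}^{(l)}, \mathbf{X}^{(l)}), \quad \mathbf{A}^{(l+1)} = \mathbf{S}^{(l)\top} \mathbf{A}^{(l)} \mathbf{S}^{(l)} \tag{23}$$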
where $\mathbf{X}^{(l)}$ is the node feature matrix, $\mathbf{A}^{(l)}$ is the coarsened adjacency matrix of the $l$-th layer, and $\mathbf{S}^{(l)}$ gives the probabilities that the nodes of the $l$-th layer are assigned to the coarsened nodes of the $(l+1)$-th layer.
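The following sketch applies one DiffPool-style coarsening step, with the assignment-matrix formulation above, to a sparse path graph. The two GNNs are replaced by hypothetical one-step propagations with random weights, so only the shapes and the sparsity pattern are meaningful. Because the softmax makes every entry of $\mathbf{S}$ strictly positive, $\mathbf{S}^{\top}\mathbf{A}\mathbf{S}$ is fully dense whenever $\mathbf{A}$ has at least one edge.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


rng = np.random.default_rng(6)
N, F, C = 10, 4, 3          # input nodes, feature size, clusters after pooling
A = np.zeros((N, N))
for i in range(N - 1):      # sparse path graph with 9 undirected edges
    A[i, i + 1] = A[i + 1, i] = 1.0
X = rng.standard_normal((N, F))

# Hypothetical stand-ins for GNN_pool and GNN_embed: one propagation (A + I) X, then a random linear map.
A_hat = A + np.eye(N)
S = softmax(A_hat @ X @ rng.standard_normal((F, C)), axis=1)  # N x C soft assignment matrix
Z = A_hat @ X @ rng.standard_normal((F, F))                     # N x F node embeddings

X_next = S.T @ Z            # C x F coarsened node features
A_next = S.T @ A @ S        # C x C coarsened adjacency
print(np.count_nonzero(A) / A.size, np.count_nonzero(A_next) / A_next.size)  # 0.18 1.0
```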

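Although DiffPool achieves good results, it has a significant drawback: even if the input graph is sparse, the resulting $\mathbf{A}^{(l+1)}$ is a dense matrix. Top-K pooling [32] overcomes this drawback by learning a projection vector $\mathbf{p}$, projecting the node features onto $\mathbf{p}$ to obtain an importance score for each node, and retaining the top-$k$ nodes with the highest scores. The pooled graph is calculated as follows:

$$\mathbf{y} = \frac{\mathbf{X}^{(l)} \mathbf{p}}{\|\mathbf{p}\|}, \quad \mathrm{idx} = \operatorname{top\text{-}rank}\left(\mathbf{y}, \lceil rN \rceil\right), \quad \mathbf{X}^{(l+1)} = \left(\mathbf{X}^{(l)} \odot \tanh(\mathbf{y}) \mathbf{1}^{\top}\right)_{\mathrm{idx}}, \quad \mathbf{A}^{(l+1)} = \mathbf{A}^{(l)}_{\mathrm{idx}, \mathrm{idx}} \tag{24}$$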
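where $\|\cdot\|$ is the L2 norm, $r \in (0, 1]$ represents the pooling ratio, $(\cdot)_{\mathrm{idx}}$ is an indexing operation that takes the slice at the indices $\mathrm{idx}$, and $\operatorname{top\text{-}rank}(\cdot)$ represents the Top-K ranking mechanism, which returns the indices of the $\lceil rN \rceil$ largest scores.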
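A minimal NumPy sketch of Top-K pooling on a single graph follows. The kept features are gated by a tanh of their scores so that the projection vector $\mathbf{p}$ would receive gradients during training; this gating choice, the helper name `topk_pool`, and the random inputs are assumptions for illustration. Unlike DiffPool, the pooled adjacency is an index slice of $\mathbf{A}$, so it stays as sparse as the input graph.

```python
import math

import numpy as np


def topk_pool(X, A, p, ratio):
    """Top-K pooling: score nodes by projection onto p and keep the ceil(ratio * N) best."""
    y = X @ p / np.linalg.norm(p)                 # projection score of every node
    k = math.ceil(ratio * X.shape[0])
    idx = np.argsort(-y)[:k]                      # indices of the k highest scores
    X_out = X[idx] * np.tanh(y[idx])[:, None]     # gate the kept features by their scores
    A_out = A[np.ix_(idx, idx)]                   # induced sub-graph on the kept nodes
    return X_out, A_out, idx


rng = np.random.default_rng(7)
N = 10
A = np.zeros((N, N))
for i in range(N - 1):                            # sparse path graph
    A[i, i + 1] = A[i + 1, i] = 1.0
X = rng.standard_normal((N, 4))
p = rng.standard_normal(4)                        # learnable in practice; random here
X_out, A_out, idx = topk_pool(X, A, p, ratio=0.5)
```

The score of each node depends only on its own feature vector, which is the limitation that motivates the self-attention variant described next.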

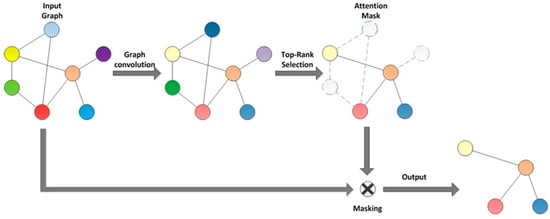

In traditional Top-K pooling, nodes are selected according to a metric computed from each node's own features (such as the projection of its feature vector), and the K most important nodes are retained. Although this method is simple and efficient, it may overlook global information in the graph structure and the interrelationships between nodes. This paper proposes a self-attention graph pooling (SAGP) strategy for fault diagnosis of rotating machinery (as shown in Figure 2). This strategy dynamically determines the importance of each node through a self-attention mechanism, improving the accuracy of node selection. This makes the pooling process better suited to the complexity and diversity of graph data, thereby enhancing the performance of graph neural networks in rotating machinery fault diagnosis. The steps of SAGP are as follows:

Figure 2.

Self-attention graph pooling.

- Node representation learning: First, Legendre graph convolution is used to update the feature representation of each node. For each node $v_i$ in the graph, its updated feature representation is $\mathbf{h}_i$.

- Attention score calculation: Calculate the attention score for each node.

- Node selection and pooling: Based on the calculated attention scores, select the most important nodes to retain while removing nodes with lower scores. This process can be accomplished by directly selecting the Top-K nodes based on their scores.

- Constructing the pooled graph: Construct the pooled graph based on the retained nodes and the edge connections from the original graph. This process may also include re-connecting edges or adjusting weights to maintain the coherence and completeness of the graph structure.
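The four steps can be sketched as below. The sketch assumes, as in SAGPool-style methods, that the attention score is produced by a one-channel graph convolution, so that each score depends on both a node's features and its neighbors; here the symmetric-normalized propagation $\hat{\mathbf{D}}^{-1/2}(\mathbf{A} + \mathbf{I})\hat{\mathbf{D}}^{-1/2}$ stands in for the paper's Legendre convolution, and `sag_pool`, `theta_att`, and the random inputs are hypothetical. Step 1 is represented by the input matrix `H`, taken to be the output of a preceding graph convolution.

```python
import math

import numpy as np


def normalized_adjacency(A):
    """Symmetric-normalized adjacency with self-loops: D^-1/2 (A + I) D^-1/2."""
    A_hat = A + np.eye(A.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    return A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def sag_pool(H, A, theta_att, ratio):
    """Self-attention graph pooling on one graph (illustrative sketch)."""
    # Step 2: attention scores from a one-channel graph convolution over H and A.
    scores = np.tanh(normalized_adjacency(A) @ H @ theta_att).ravel()
    # Step 3: keep the ceil(ratio * N) highest-scoring nodes.
    k = math.ceil(ratio * H.shape[0])
    idx = np.argsort(-scores)[:k]
    # Step 4: pooled graph = kept nodes (features scaled by their scores) + edges among them.
    H_out = H[idx] * scores[idx][:, None]
    A_out = A[np.ix_(idx, idx)]
    return H_out, A_out, idx, scores


rng = np.random.default_rng(9)
N, F = 10, 8
upper = np.triu(rng.random((N, N)) < 0.3, 1).astype(float)
A = upper + upper.T                       # random sparse undirected graph
H = rng.standard_normal((N, F))           # Step 1: node features after graph convolution
theta_att = rng.standard_normal((F, 1))   # learnable attention weights in practice
H_out, A_out, idx, scores = sag_pool(H, A, theta_att, ratio=0.5)
```

Compared with Top-K pooling, the only change is how the scores are computed: they depend on the adjacency matrix as well as on the node features, so a node's importance reflects its neighborhood.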

3. Experimental Section

To verify the effectiveness of the SA-LGCN model in rotating machinery fault diagnosis, experiments were performed using a dataset of planetary gearboxes measured in the laboratory. The experimental setup and process are described in the following subsections.

The programming language used in this study was Python 3.6, and the graph neural network algorithms were implemented with PyTorch Geometric 2.0.3. The experiments were run on a computer with a Core i7-12700 CPU @ 4.8 GHz and a 64-bit Windows operating system. To improve training speed, an RTX 3070 GPU with 8 GB of memory was employed.

3.1. Dataset Introduction

The main components of the HFXZ-I planetary gearbox fault diagnosis experimental platform are illustrated in Figure 3. The platform comprises a variable-speed drive motor, bearings, a helical gearbox, a planetary gearbox, a magnetic powder brake, a variable-frequency drive controller, and a load controller.

Figure 3.

Planetary gearbox experimental platform.

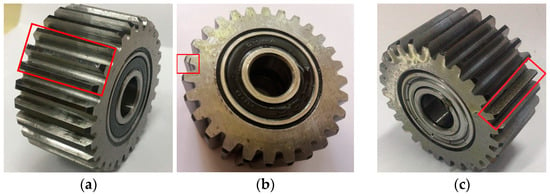

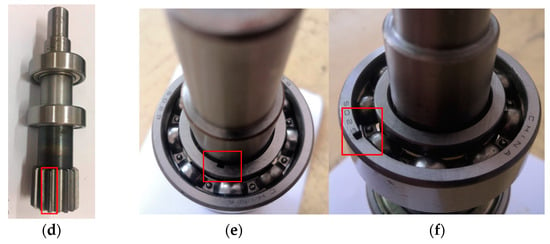

Using the planetary gearbox fault diagnosis experimental platform illustrated in Figure 3, various health conditions were simulated. The simulated faults cover common internal gearbox fault types: gear pitting, gear cracks, gear wear (levels 1–3), sun gear broken teeth (levels 1–2), inner race defects, and outer race defects. The details of these faults are illustrated in Figure 4. Vibration signals were recorded using an accelerometer mounted on the top surface of the gearbox housing, with a sampling frequency of 10,240 Hz. Continuous sampling was performed for 60 s under three motor speeds (20 Hz, 30 Hz, and 50 Hz) and two load conditions (0.3 A and 0.5 A). Experiments were conducted for each failure mode to verify the method's generality and its ability to assess failure severity. In total, 50 experiments were conducted, covering 10 health conditions (the normal condition and nine fault conditions) under 5 load and speed combinations. Because the dataset includes faults of varying severity, it also tests the method's decoupling ability and generality in feature extraction. The details of the data obtained from the experiments are presented in Table 1.

Figure 4.

Common gearbox fault types: (a) gear pitting; (b) gear cracks; (c) gear wear; (d) sun gear broken teeth; (e) inner race defects; (f) outer race defects. Fault details are shown in the red boxes in the figure.

Table 1.

Details about the dataset.

3.2. Data Preprocessing

First, the vibration signals collected under the ten conditions were normalized. Using the method described in Section 2.1, 1000 sub-samples, each with a length of 1024, were obtained, and 100 graphs, each containing 10 nodes, were constructed. The data were split into training and test sets at a ratio of 8:2.
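The bookkeeping in this step can be sketched as follows for a record long enough to yield 1000 sub-samples. The z-score normalization, the grouping of consecutive sub-samples into graphs, and the graph-level random split are assumptions, since the text does not specify them; the synthetic signal is a stand-in for real data.

```python
import numpy as np

rng = np.random.default_rng(8)
n_sub, sub_len, nodes_per_graph = 1000, 1024, 10

raw = 3.0 * rng.standard_normal(n_sub * sub_len) + 1.0        # synthetic stand-in for a vibration record
x = (raw - raw.mean()) / raw.std()                            # z-score normalization (assumed method)
sub_samples = x.reshape(n_sub, sub_len)                       # 1000 non-overlapping sub-samples
graphs = sub_samples.reshape(-1, nodes_per_graph, sub_len)    # 100 graphs of 10 nodes each

perm = rng.permutation(len(graphs))
n_train = len(graphs) * 8 // 10                               # 8:2 split at graph level (assumed)
train, test = graphs[perm[:n_train]], graphs[perm[n_train:]]
print(graphs.shape, train.shape, test.shape)  # (100, 10, 1024) (80, 10, 1024) (20, 10, 1024)
```

Splitting at the graph level, rather than at the sub-sample level, keeps all nodes of a graph in the same partition, so no test graph shares nodes with a training graph.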

3.3. Model Parameter Settings

The network structure and parameter settings of the SA-LGCN model are presented in Table 2. The hyper-parameters were set as follows: the weighted loss function was minimized with the Adam optimizer, with a momentum (first-moment decay) value of 0.9 and a batch size of 64; the input graph size was 10 × 1024 × 1024; and the model was trained for 200 iterations. The initial learning rate was set to 0.01, with a decay of 1 × 10⁻⁵ after each epoch. These hyper-parameters were selected empirically based on model performance. To ensure fairness, all comparative experiments were conducted in the same experimental environment.

Table 2.

Structure parameters and hyper-parameters setup of SA-LGCN.

3.4. Experimental Results and Analysis

To verify the effectiveness of SA-LGCN in rotating machinery fault diagnosis, multiple experiments were conducted on datasets with different loads and speeds: 20 Hz + 0.3 A, 20 Hz + 0.5 A, 30 Hz + 0.3 A, 30 Hz + 0.5 A, and 50 Hz + 0.5 A. The fault diagnosis accuracy of SA-LGCN was compared with that of five graph neural network models (ChebyNet, GCN, GAT, NCGNN, and HGCN-LSL) and one deep learning model (CNN).

The fault diagnosis accuracies of the different models on the five datasets are compared in Table 3. As shown in Table 3, the fault diagnosis accuracy of the SA-LGCN model is higher than that of the other models across the datasets, especially under high-load and high-speed conditions. This indicates that the SA-LGCN model captures the features in the vibration signals more effectively, thereby improving the accuracy of fault diagnosis. ChebyNet uses Chebyshev polynomials for spectral filtering, which improves accuracy to a certain extent but still has shortcomings in stability and computational efficiency. GCN uses a first-order approximation of the spectral filter, which restricts each layer to one-hop neighborhoods and results in the lowest accuracy under the various conditions. GAT introduces an attention mechanism, which improves fault diagnosis accuracy but increases computational complexity. CNN performs fairly well on vibration signals, but because it can only process Euclidean data, it cannot exploit the relationships between sub-samples and performs worse than the graph neural networks. Compared with two state-of-the-art graph models, the proposed model improves accuracy by 4.76% to 8.79% over NCGNN and by 5.71% to 9.88% over HGCN-LSL.

Table 3.

Accuracy of each model under five datasets.

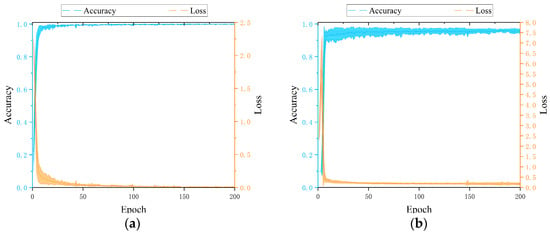

Next, the training process of the proposed model under the condition of 50 Hz + 0.5 A is visualized. The loss and accuracy of the training set are presented in Figure 5a, while the loss and accuracy of the validation set are presented in Figure 5b. From the loss and accuracy curves on the training and validation sets, it can be observed that the SA-LGCN model converges quickly during training, with accuracy rapidly increasing and loss values rapidly decreasing, indicating good convergence of the model. After about 10 epochs, the accuracy and loss values on both the training and validation sets tend to stabilize, indicating stable performance of the model on both sets without obvious overfitting or underfitting.

Figure 5.

Training process of SA-LGCN: (a) the loss and accuracy of the training set; (b) the loss and accuracy of the validation set.

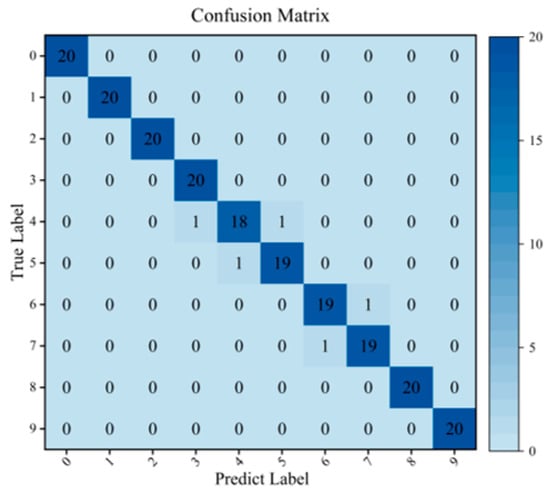

Additionally, the confusion matrix of the SA-LGCN model under the 50 Hz + 0.5 A condition is presented in Figure 6, where the X-axis and Y-axis represent the predicted labels and the true labels, respectively. The results show that the SA-LGCN model can effectively identify the normal condition and six different fault types at various locations, and it distinguishes between different fault severity levels fairly well. Of the 200 test samples, five were misclassified: two samples with label 4 were predicted as labels 3 and 5, one sample with label 5 was predicted as label 4, one sample with label 6 was predicted as label 7, and one sample with label 7 was predicted as label 6. All of these errors occur between severity levels of the same fault: labels 3, 4, and 5 correspond to three levels of gear wear, and labels 6 and 7 correspond to two levels of sun gear broken teeth, whose fault features are very similar.
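As a quick check of the figures quoted above, the five listed misclassifications out of 200 test samples correspond to an overall accuracy of 97.5%, and every error stays within one fault type. The label groupings below are taken from the text.

```python
# Misclassifications reported for the 50 Hz + 0.5 A test set, as (true label, predicted label)
errors = [(4, 3), (4, 5), (5, 4), (6, 7), (7, 6)]
n_test = 200

accuracy = (n_test - len(errors)) / n_test
print(f"overall accuracy = {accuracy:.1%}")  # overall accuracy = 97.5%

# Label groups given in the text: 3-5 are gear wear levels, 6-7 are sun gear broken-teeth levels
groups = [{3, 4, 5}, {6, 7}]
same_fault_type = all(any(t in grp and p in grp for grp in groups) for t, p in errors)
print(same_fault_type)  # True
```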

Figure 6.

Confusion matrix results on SA-LGCN.

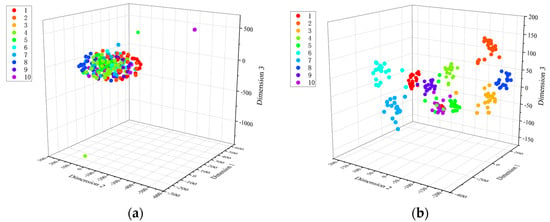

To further verify the proposed model's ability to learn discriminative features, t-SNE was used to project the features learned by the fully connected layer of SA-LGCN from the high-dimensional space into a three-dimensional space for visualization. The three-dimensional scatter plots illustrate how the sample distribution changes through the fault diagnosis process. Each point represents a graph sample, and different colors represent different health states. As illustrated in Figure 7a, the features of the original input graphs are tightly packed together, with the different health states overlapping. After applying the proposed method (Figure 7b), the samples within each category become more concentrated, while the categories become more clearly separated from one another. This indicates that the proposed method learns discriminative features for the fault diagnosis of rotating machinery.

Figure 7.

SA-LGCN feature visualization: (a) input features; (b) output features.

3.5. Ablation Study

To further verify the effectiveness of each component of the SA-LGCN model, three ablation experiments were designed in which key parts of the model were removed or replaced, and the impact on performance was observed. This approach clarifies the contribution of each component to the overall performance of the model.

3.5.1. Experimental Setup

Baseline model: The complete SA-LGCN model, including the Legendre-polynomial-based fast local spectral filter and the self-attention graph pooling method.

Ablation 1 (without Legendre filter): The Legendre-polynomial-based fast local spectral filter is removed and replaced with the traditional Chebyshev filter.

Ablation 2 (without self-attention graph pooling): Self-attention graph pooling is removed and replaced with Top-K pooling.

Ablation 3 (without Legendre filter and self-attention graph pooling): Both the Legendre-polynomial-based fast local spectral filter and self-attention graph pooling are removed and replaced with the traditional Chebyshev filter and Top-K pooling.

3.5.2. Ablation Experiment Results

Experiments were conducted on the above models using the same datasets (20 Hz + 0.3 A, 20 Hz + 0.5 A, 30 Hz + 0.3 A, 30 Hz + 0.5 A, 50 Hz + 0.5 A), and the fault diagnosis accuracy of each model was recorded. The results of the ablation experiments are presented in Table 4.

Table 4.

Accuracy of each ablation experiment.

As shown in Table 4, the accuracy of the proposed model is 2.35% to 9.70% higher than that of Ablation 1, 1.56% to 7.32% higher than that of Ablation 2, and 8.37% to 14.38% higher than that of Ablation 3. Both components therefore contribute to the model's fault diagnosis performance, and removing both causes the largest drop. Compared with the Chebyshev-based fast local spectral filter, the Legendre-polynomial-based fast local spectral filter plays an important role in improving the model's stability. Compared with Top-K pooling, graph pooling based on the self-attention mechanism focuses more effectively on key nodes, thereby improving the accuracy of fault diagnosis.

4. Conclusions

This paper proposes a Legendre graph convolutional network with a self-attention graph pooling method, applied to the fault diagnosis of rotating machinery. The proposed method offers the following advantages: (1) converting one-dimensional vibration signals into association graphs in non-Euclidean space accurately characterizes fault information, simplifying the fault diagnosis process, eliminating the need for manual intervention, and achieving end-to-end multi-source information fusion and classification; (2) the self-attention graph pooling method enhances the accuracy of node selection, making the pooling process better suited to the complexity and diversity of graph data; (3) experimental results demonstrate that the proposed method effectively identifies different severities and types of faults in rotating machinery. Under various loads and speeds, the proposed method outperforms other models in diagnostic accuracy and load adaptability.

Author Contributions

Conceptualization, J.M. and J.H.; methodology, J.M.; software, J.M.; validation, J.M., J.H. and S.L.; formal analysis, S.L.; investigation, J.L.; resources, J.M.; data curation, S.L.; writing—original draft preparation, J.M.; writing—review and editing, J.M. and L.J.; visualization, L.J.; supervision, J.H.; project administration, J.H.; funding acquisition, J.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Key R&D program of Shanxi Province (International Cooperation, 201903D421008), the Natural Science Foundation of Shanxi Province (201901D111157), and Research Project Supported by Shanxi Scholarship Council of China (2022-141). This work is also supported by the Fundamental Research Program of Shanxi Province (202203021211096) and the Shanxi Provincial Postgraduate Scientific Research Innovation Project (2023KY600).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data belong to the corresponding author and are available on request from jyhuang@nuc.edu.cn.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Lei, Y.; Yang, B.; Jiang, X.; Jia, F.; Li, N.; Nandi, A.K. Applications of machine learning to machine fault diagnosis: A review and roadmap. Mech. Syst. Signal Process. 2020, 138, 106587.

- Chen, X.; Wang, S.; Qiao, B.; Chen, Q. Basic research on machinery fault diagnostics: Past, present, and future trends. Front. Mech. Eng. 2018, 13, 264–291.

- Jia, F.; Lei, Y.; Guo, L.; Lin, J.; Xing, S. A neural network constructed by deep learning technique and its application to intelligent fault diagnosis of machines. Neurocomputing 2018, 272, 619–628.

- Chen, Z.; Gryllias, K.; Li, W. Mechanical fault diagnosis using convolutional neural networks and extreme learning machine. Mech. Syst. Signal Process. 2019, 133, 106272.

- Jia, X.; Qin, N.; Huang, D.; Zhang, Y.; Du, J. A clustered blueprint separable convolutional neural network with high precision for high-speed train bogie fault diagnosis. Neurocomputing 2022, 500, 422–433.

- Zhang, Y.; Zhou, T.; Huang, X.; Cao, L.; Zhou, Q. Fault diagnosis of rotating machinery based on recurrent neural networks. Measurement 2021, 171, 108774.

- Shi, Y.; Deng, A.; Deng, M.; Xu, M.; Liu, Y.; Ding, X. A novel multiscale feature adversarial fusion network for unsupervised cross-domain fault diagnosis. Measurement 2022, 200, 111616.

- Kong, D.; Huang, W.; Zhao, L.; Ding, J.; Wu, H.; Yang, G. Mining knowledge from unlabeled data for fault diagnosis: A multi-task self-supervised approach. Mech. Syst. Signal Process. 2024, 211, 111189.

- Liu, S.; Huang, J.; Ma, J.; Luo, J. Class-incremental continual learning model for plunger pump faults based on weight space meta-representation. Mech. Syst. Signal Process. 2023, 196, 110309.

- Qian, Q.; Qin, Y.; Luo, J.; Wang, Y.; Wu, F. Deep discriminative transfer learning network for cross-machine fault diagnosis. Mech. Syst. Signal Process. 2023, 186, 109884.

- Chen, Z.; Xu, J.; Alippi, C.; Ding, S.X.; Shardt, Y.; Peng, T.; Yang, C. Graph neural network-based fault diagnosis: A review. arXiv 2021, arXiv:2111.08185.

- Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. arXiv 2016, arXiv:1609.02907.

- Hamilton, W.; Ying, Z.; Leskovec, J. Inductive representation learning on large graphs. Adv. Neural Inf. Process. Syst. 2017, 30, 1025–1035.

- Velickovic, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903.

- Xie, G.S.; Liu, J.; Xiong, H.; Shao, L. Scale-aware graph neural network for few-shot semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 5475–5484.

- Phu, M.T.; Nguyen, T.H. Graph convolutional networks for event causality identification with rich document-level structures. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Virtual, 6–11 June 2021; pp. 3480–3490.

- Ramnath, K.; Sari, L.; Hasegawa-Johnson, M.; Yoo, C. Worldly wise (WoW)-cross-lingual knowledge fusion for fact-based visual spoken-question answering. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Virtual, 6–11 June 2021; pp. 1908–1919.

- Li, T.; Zhao, Z.; Sun, C.; Yan, R.; Chen, X. Multireceptive field graph convolutional networks for machine fault diagnosis. IEEE Trans. Ind. Electron. 2020, 68, 12739–12749.

- Chen, Z.; Xu, J.; Peng, T.; Yang, C. Graph convolutional network-based method for fault diagnosis using a hybrid of measurement and prior knowledge. IEEE Trans. Cybern. 2021, 52, 9157–9169.

- Zhao, X.; Yao, J.; Deng, W.; Ding, P.; Zhuang, J.; Liu, Z. Multiscale deep graph convolutional networks for intelligent fault diagnosis of rotor-bearing system under fluctuating working conditions. IEEE Trans. Ind. Inform. 2022, 19, 166–176.

- Yang, G.; Tao, H.; Du, R.; Zhong, Y. Compound fault diagnosis of harmonic drives using deep capsule graph convolutional network. IEEE Trans. Ind. Electron. 2022, 70, 4186–4195.

- Yang, C.; Zhou, K.; Liu, J. SuperGraph: Spatial-temporal graph-based feature extraction for rotating machinery diagnosis. IEEE Trans. Ind. Electron. 2021, 69, 4167–4176.

- Li, Y.; Jian, C.; Zang, G.; Song, C.; Yuan, X. Node classification oriented Adaptive Multichannel Heterogeneous Graph Neural Network. Knowl.-Based Syst. 2024, 292, 111618.

- Chen, J.; Li, B.; He, K. Neighborhood convolutional graph neural network. Knowl.-Based Syst. 2024, 295, 111861.

- Wang, Z.; Wu, Z.; Li, X.; Shao, H.; Han, T.; Xie, M. Attention-aware temporal–spatial graph neural network with multi-sensor information fusion for fault diagnosis. Knowl.-Based Syst. 2023, 278, 110891.

- Zhang, Z.; Wu, L. Graph neural network-based bearing fault diagnosis using Granger causality test. Expert Syst. Appl. 2024, 242, 122827.

- Cao, S.; Li, H.; Zhang, K.; Yang, C.; Xiang, W.; Sun, F. A novel spiking graph attention network for intelligent fault diagnosis of planetary gearboxes. IEEE Sens. J. 2023, 23, 13140–13154.

- Yu, Y.; He, Y.; Karimi, H.R.; Gelman, L.; Cetin, A.E. A two-stage importance-aware subgraph convolutional network based on multi-source sensors for cross-domain fault diagnosis. Neural Netw. 2024, 179, 106518.

- Zhong, K.; Han, B.; Han, M.; Chen, H. Hierarchical graph convolutional networks with latent structure learning for mechanical fault diagnosis. IEEE/ASME Trans. Mechatron. 2023, 28, 3076–3086.

- Defferrard, M.; Bresson, X.; Vandergheynst, P. Convolutional neural networks on graphs with fast localized spectral filtering. Adv. Neural Inf. Process. Syst. 2016, 29, 3844–3852.

- Ying, Z.; You, J.; Morris, C.; Ren, X.; Hamilton, W.; Leskovec, J. Hierarchical graph representation learning with differentiable pooling. Adv. Neural Inf. Process. Syst. 2018, 31.

- Gao, H.; Ji, S. Graph u-nets. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 2083–2092.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).