Syntax-Guided Content-Adaptive Transform for Image Compression

Abstract

1. Introduction

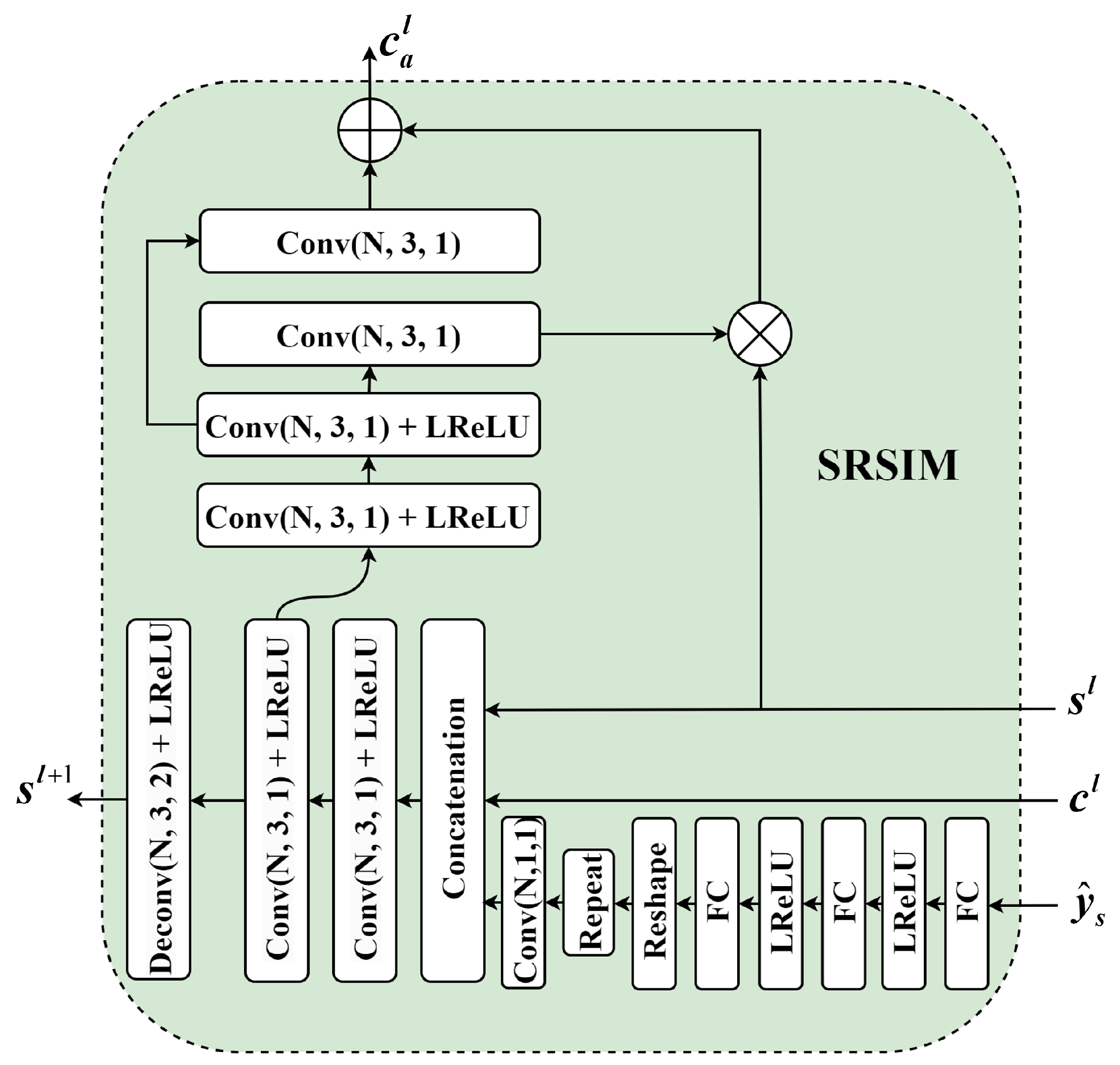

- We introduced a syntax-refined side information module in the decoder that uses both the syntax and the side information to guide the adaptive transform of content features, strengthening the decoder's nonlinear transform capability.

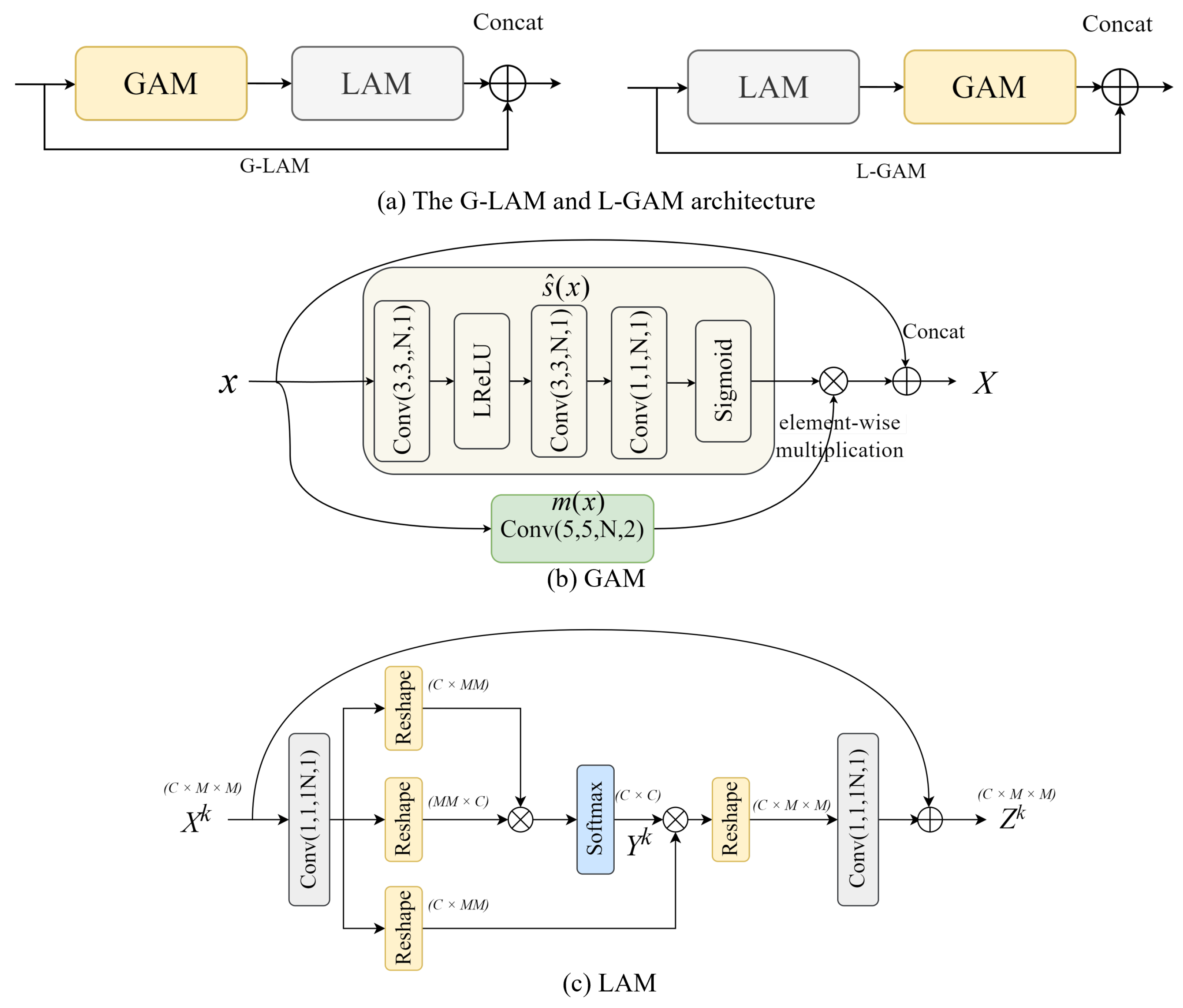

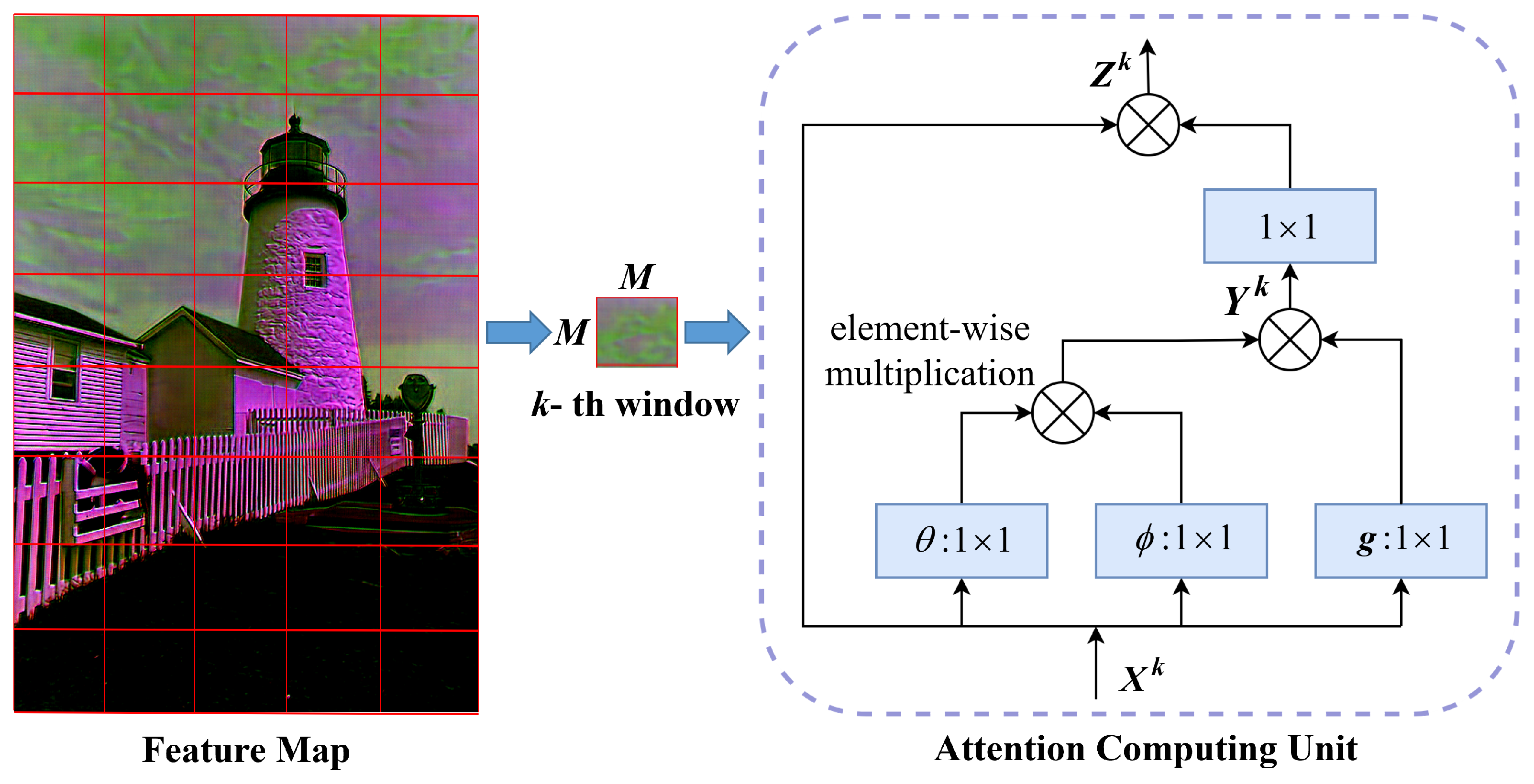

- Within the encoder and decoder, we designed distinct global-to-local and local-to-global attention modules that exploit both the global and the local redundancies within images, further improving coding performance.

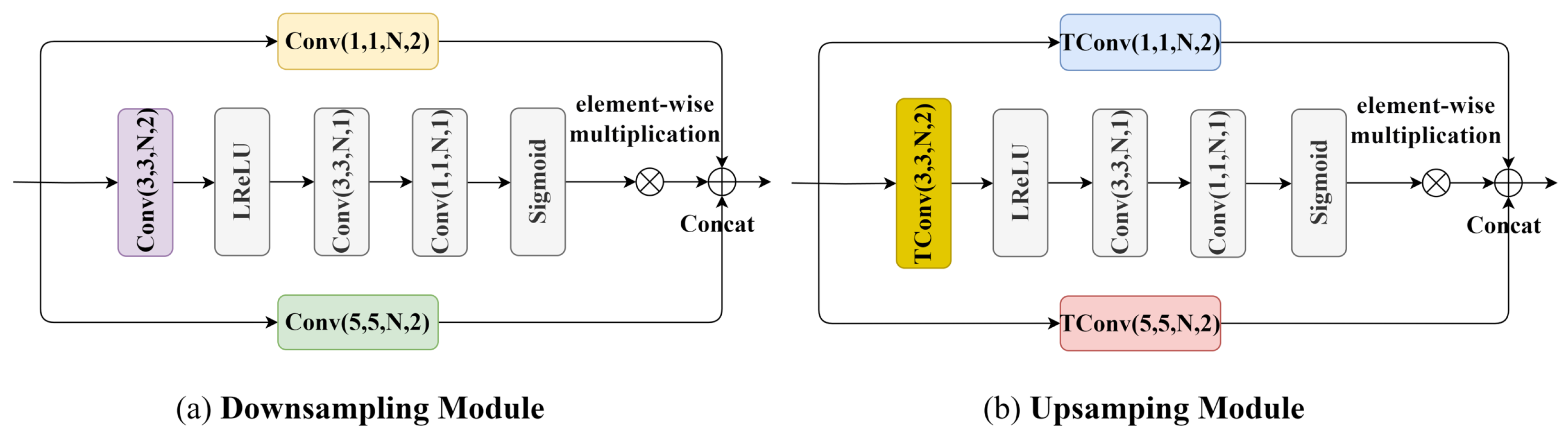

- We proposed downsampling and upsampling modules that further capture global correlations within images, improving the coding performance of the model.
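The contribution list above states that the syntax and side information guide the adaptive transform of content features. The module's internals are not described in this excerpt, so the following is only a hypothetical sketch of one common conditioning pattern: a spatially varying feature-wise affine modulation (in the spirit of FiLM). At each position, the local side-information vector is concatenated with an image-level syntax vector, a dense layer predicts a per-channel scale `gamma` and shift `beta`, and the content features are modulated as `gamma * x + beta`. All names and shapes are assumptions for illustration, not the authors' design.

```python
from typing import List

Vector = List[float]


def linear(weight: List[Vector], bias: Vector, v: Vector) -> Vector:
    """Dense layer (equivalent to a 1x1 convolution at one spatial position)."""
    return [sum(w * x for w, x in zip(row, v)) + b for row, b in zip(weight, bias)]


def guided_modulation(content: List[Vector], side: List[Vector], syntax: Vector,
                      w_gamma: List[Vector], b_gamma: Vector,
                      w_beta: List[Vector], b_beta: Vector) -> List[Vector]:
    """Scale and shift content features with parameters predicted per position.

    Hypothetical layout: content[p] holds the C content channels at position p,
    side[p] holds the side-information channels at the same position, and
    syntax is one image-level vector shared by all positions.
    """
    out = []
    for feat, local in zip(content, side):
        cond = local + syntax  # concatenate local side info with the global syntax
        gamma = linear(w_gamma, b_gamma, cond)  # per-channel scale
        beta = linear(w_beta, b_beta, cond)     # per-channel shift
        out.append([g * f + b for f, g, b in zip(feat, gamma, beta)])
    return out
```

With all `gamma` weights at zero, a `gamma` bias of one, and a zero `beta`, the modulation reduces to the identity, so the conditioning only changes the features to the extent the learned weights depend on the side information and syntax.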
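The contribution list above mentions global-to-local and local-to-global modules aimed at global and local redundancy. Their internals are not given in this excerpt, so the following is a hypothetical, parameter-free sketch of the general idea only: global self-attention lets every token attend to every other token, window attention restricts each token to its own non-overlapping window, and the two orderings chain them with residual connections. Tokens stand in for flattened feature-map positions, the query/key/value projections are identities, and all function names are illustrative rather than the authors' code.

```python
import math
from typing import List

Vector = List[float]


def _softmax(scores: Vector) -> Vector:
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: List[Vector]) -> List[Vector]:
    """Global scaled dot-product self-attention with identity Q/K/V projections."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = _softmax(scores)
        out.append([sum(w * v[i] for w, v in zip(weights, tokens)) for i in range(d)])
    return out


def window_attention(tokens: List[Vector], window: int) -> List[Vector]:
    """Local attention: tokens only attend to tokens in the same window."""
    out: List[Vector] = []
    for start in range(0, len(tokens), window):
        out.extend(self_attention(tokens[start:start + window]))
    return out


def _residual(x: List[Vector], y: List[Vector]) -> List[Vector]:
    return [[a + b for a, b in zip(u, v)] for u, v in zip(x, y)]


def global_to_local(tokens: List[Vector], window: int) -> List[Vector]:
    """Global context first, then local refinement, each with a residual path."""
    glob = _residual(tokens, self_attention(tokens))
    return _residual(glob, window_attention(glob, window))


def local_to_global(tokens: List[Vector], window: int) -> List[Vector]:
    """Local refinement first, then global context, each with a residual path."""
    loc = _residual(tokens, window_attention(tokens, window))
    return _residual(loc, self_attention(loc))
```

Window attention with `window=1` returns its input unchanged, and with a window covering the whole sequence it reduces to global attention. Learned projections, multiple heads, and 2-D windows are omitted for brevity.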

2. Materials and Methods

2.1. Datasets and Data Processing

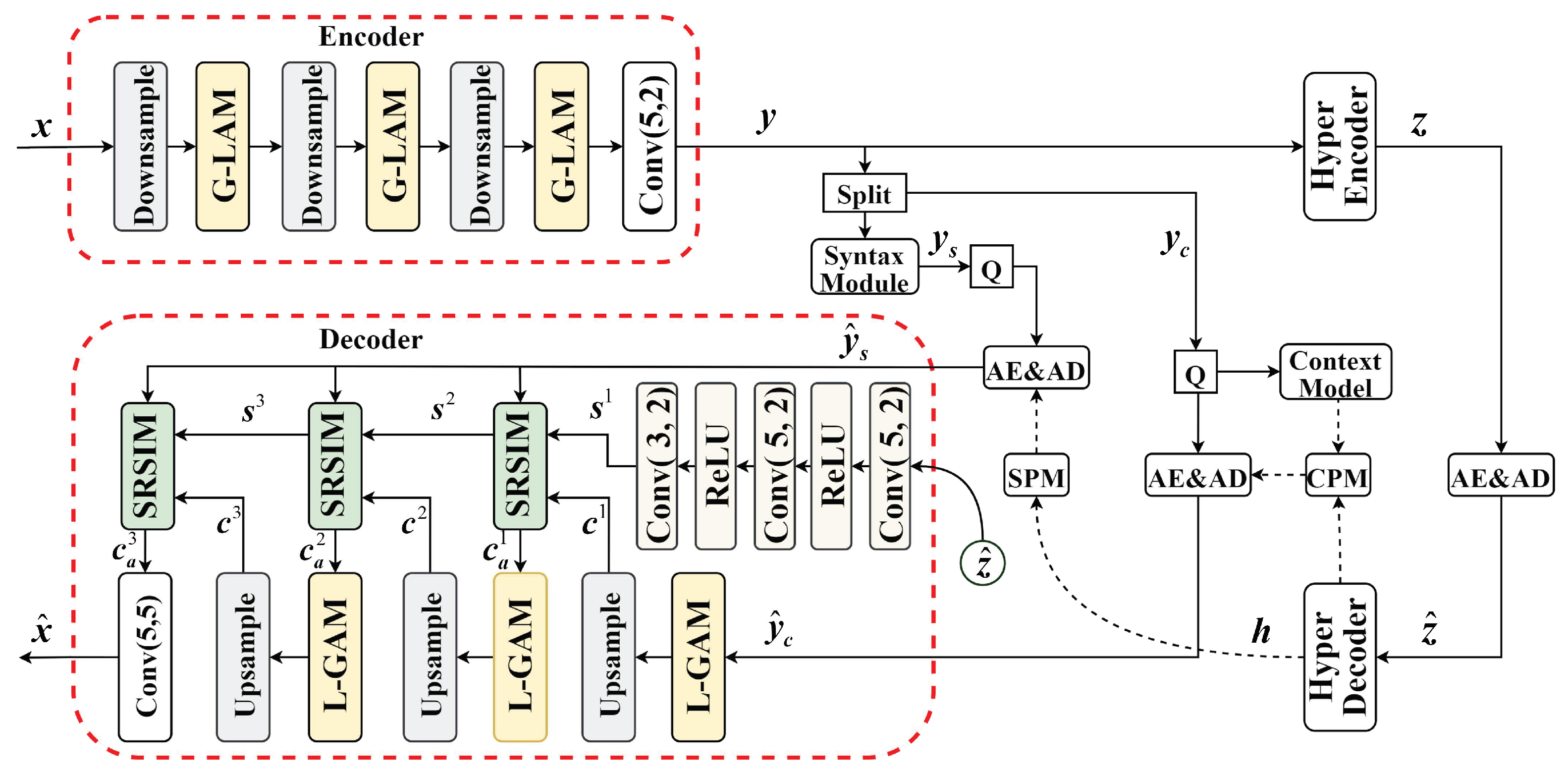

2.2. The Proposed Syntax-Guided Content-Adaptive Transform Model

2.3. Syntax-Refined Side Information Module

2.4. Global–Local and Local–Global Attention Module

2.5. Downsampling and Upsampling Modules

2.6. Loss Function

3. Results

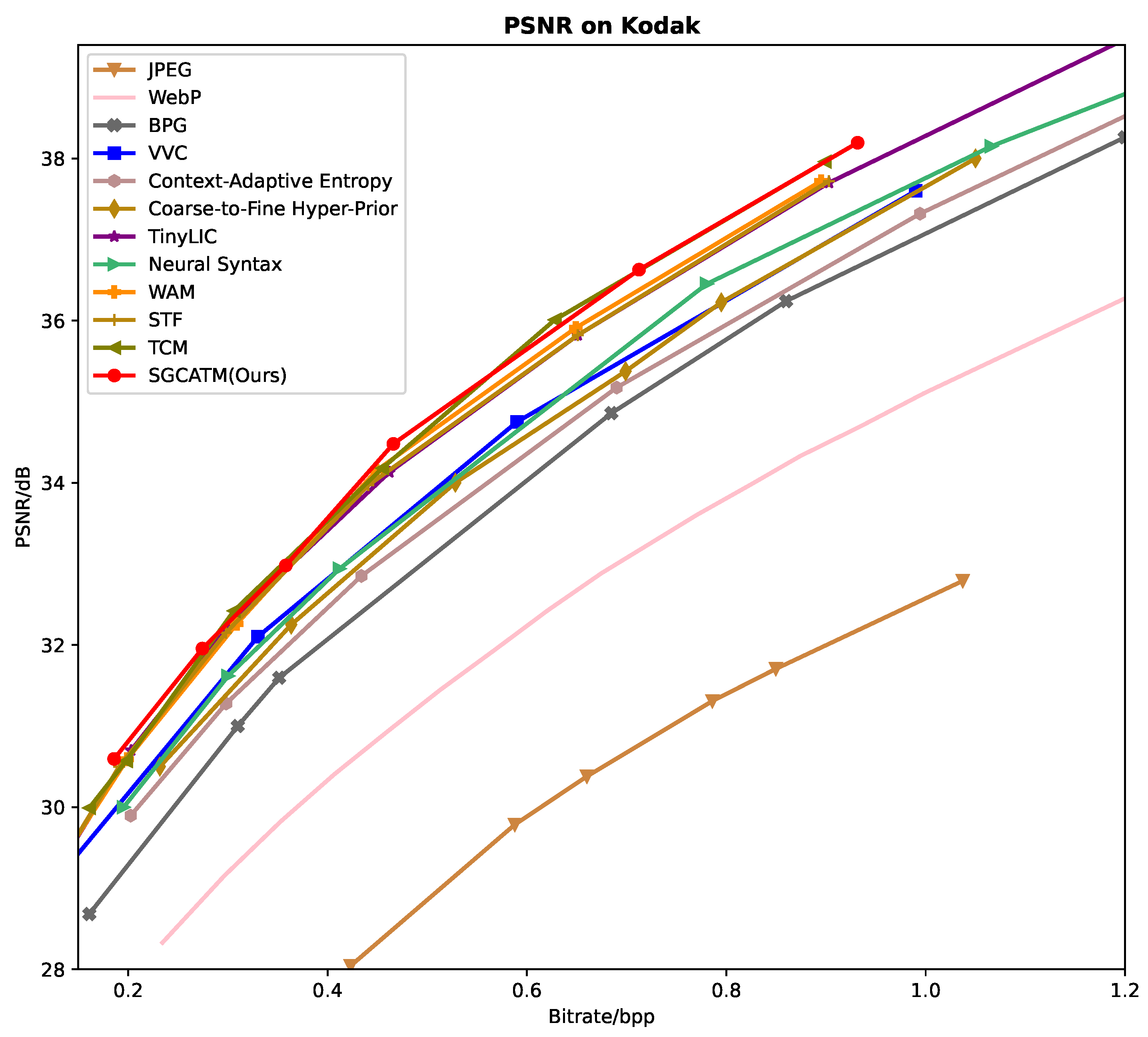

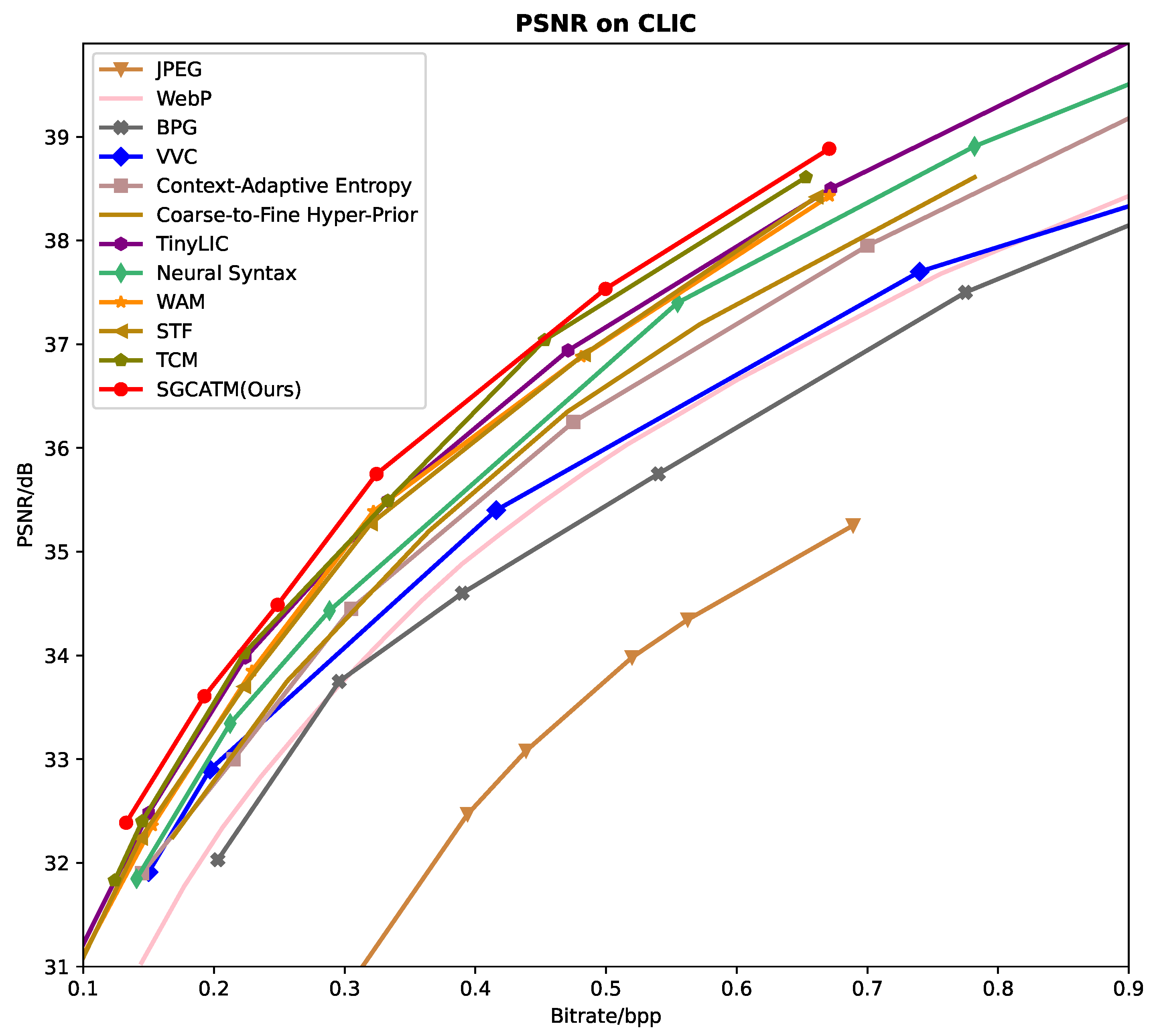

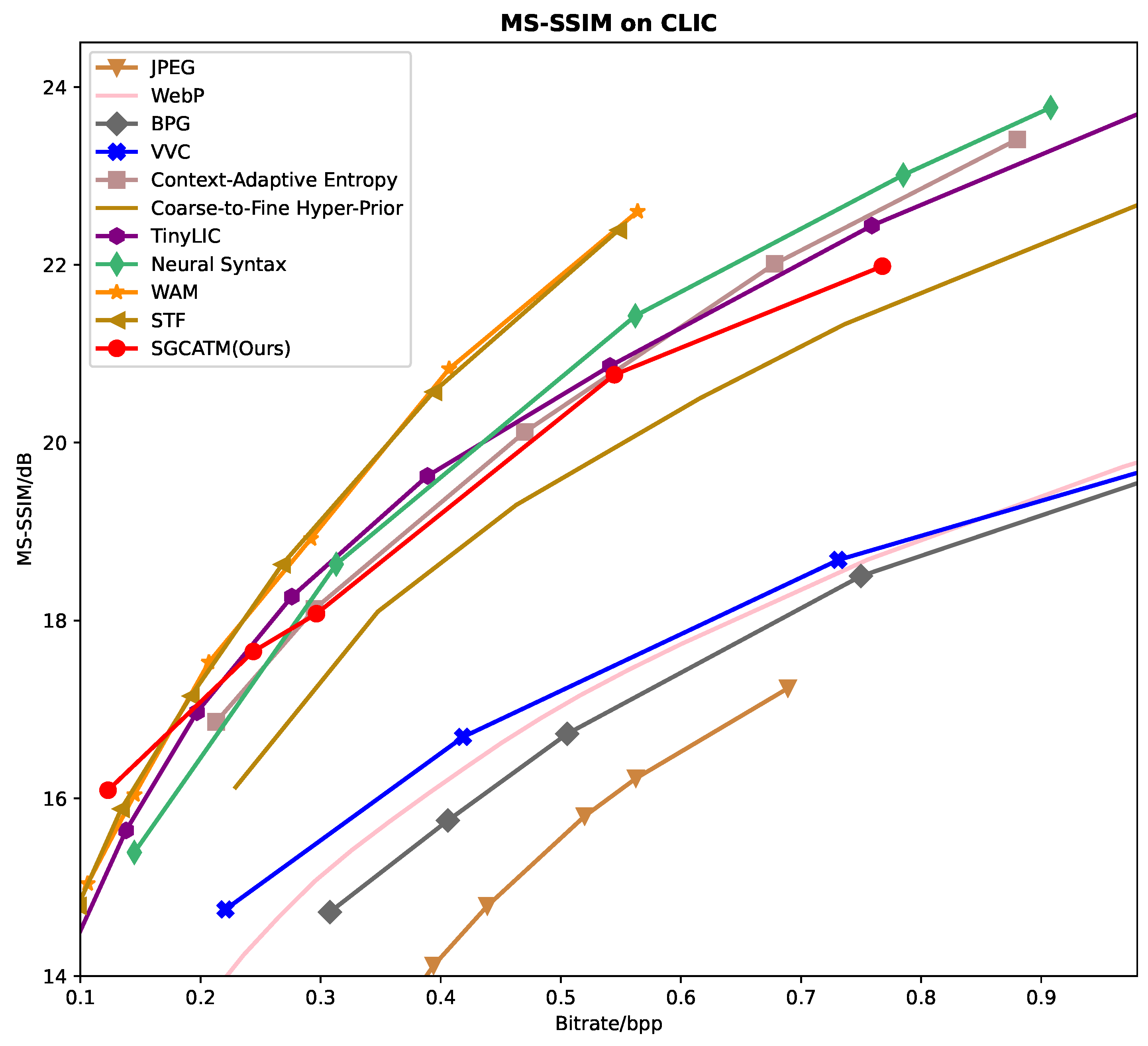

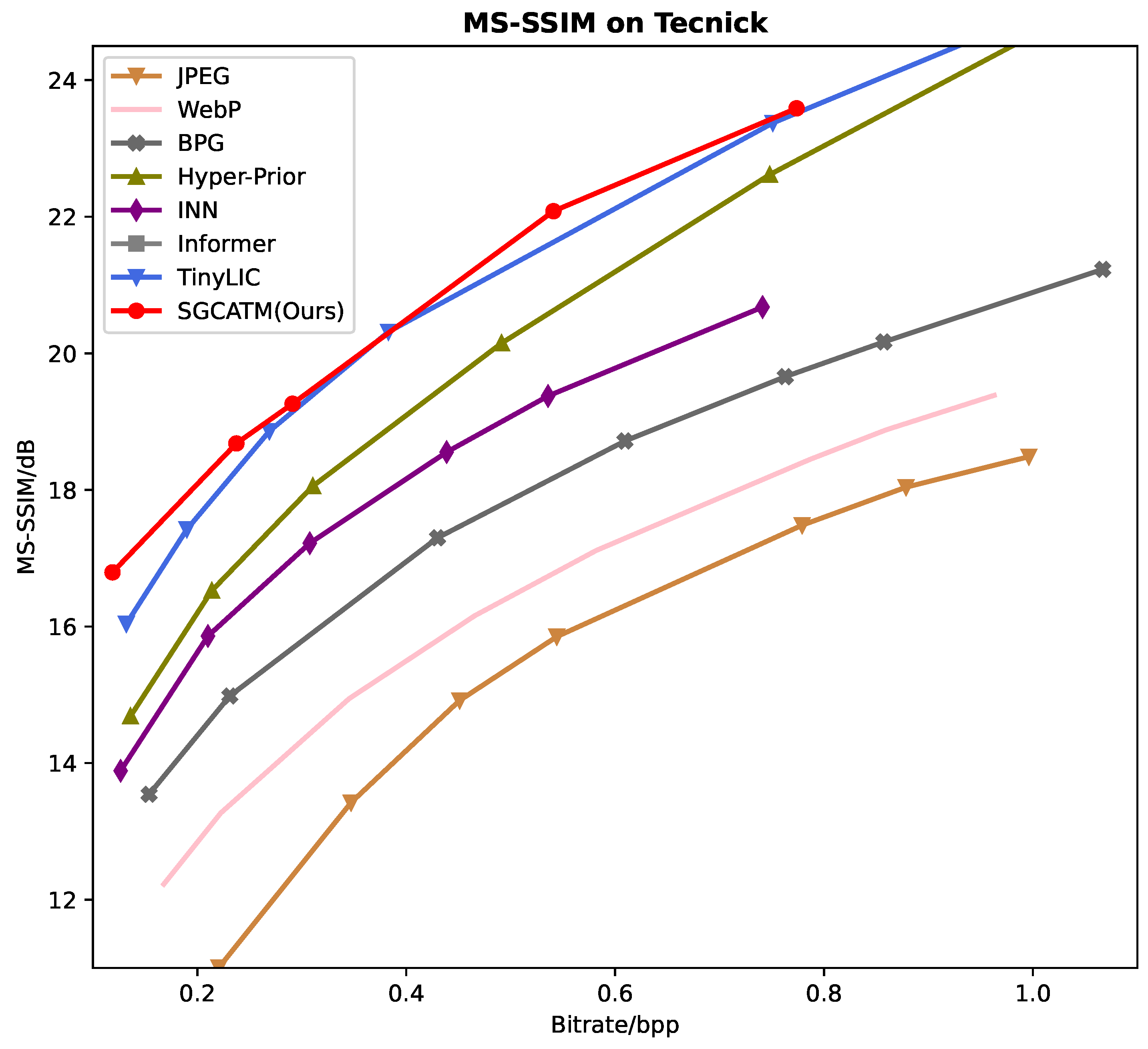

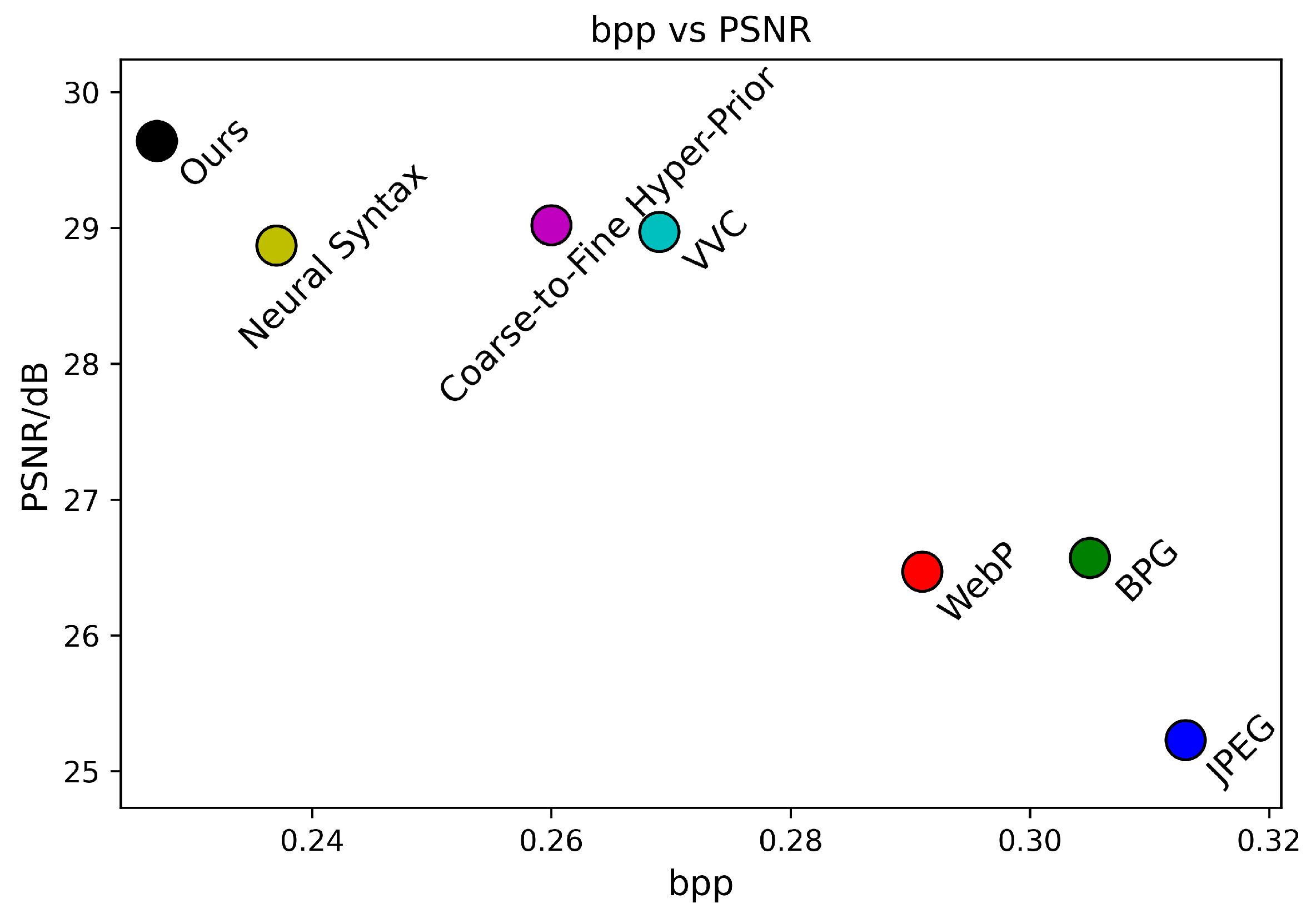

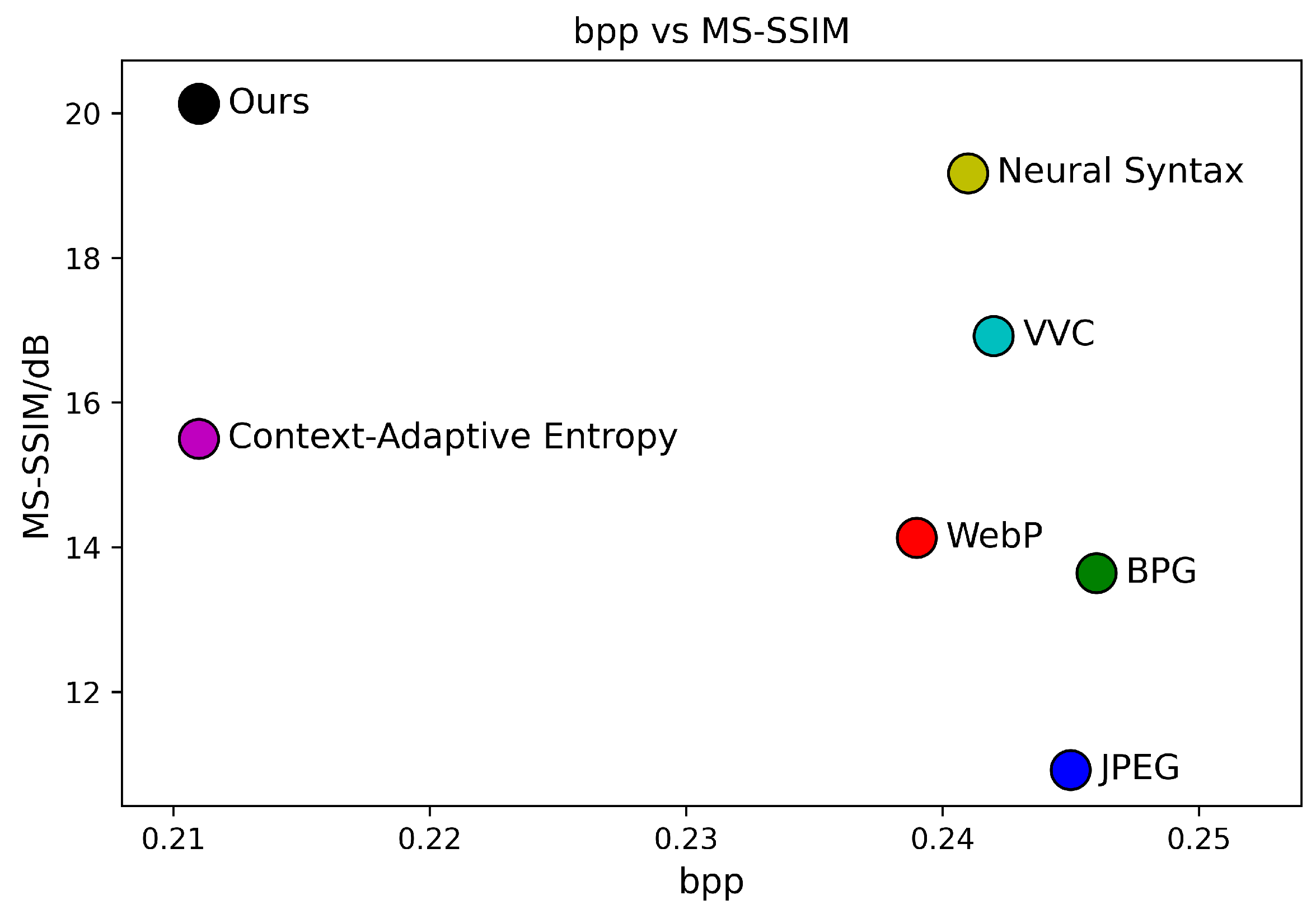

3.1. Rate-Distortion Performance

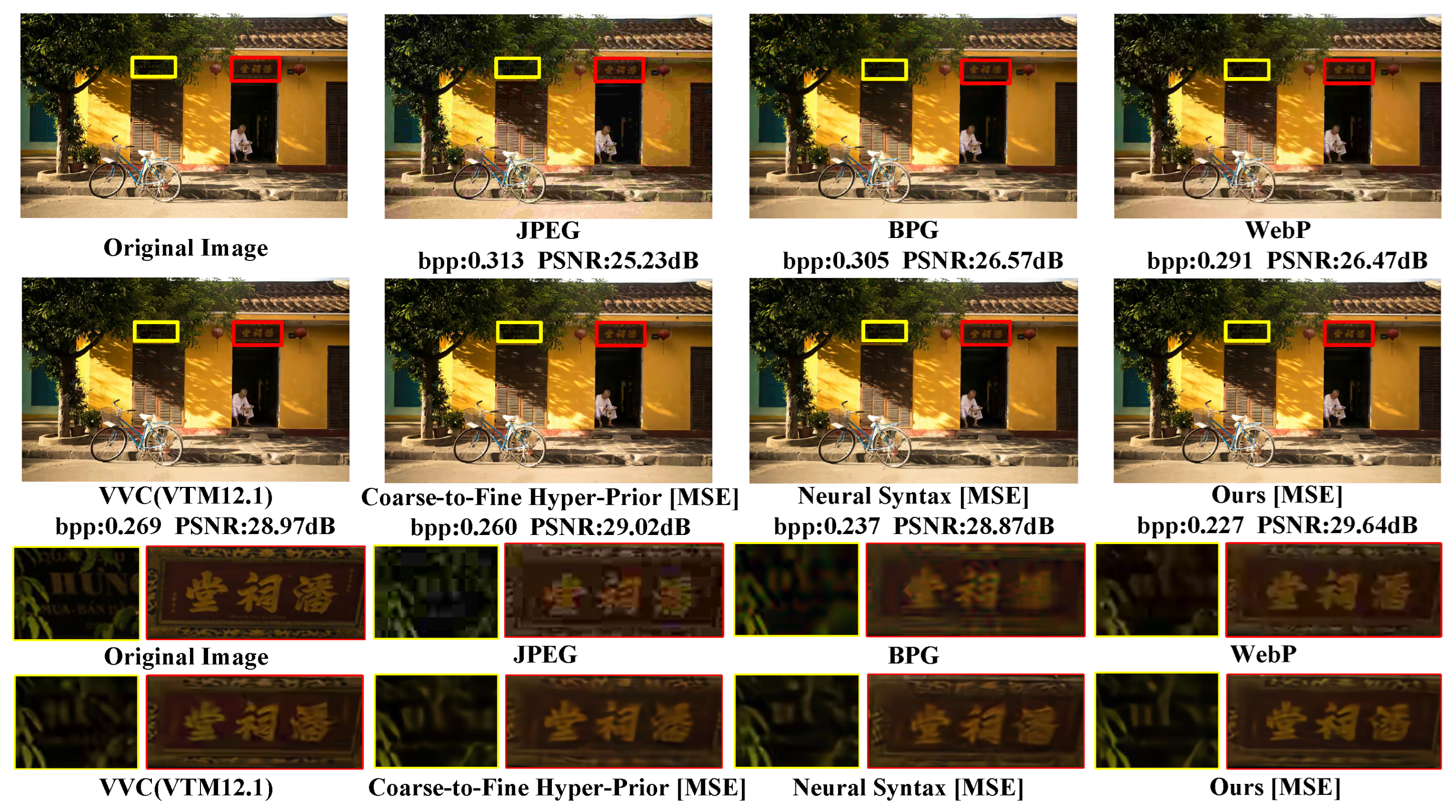

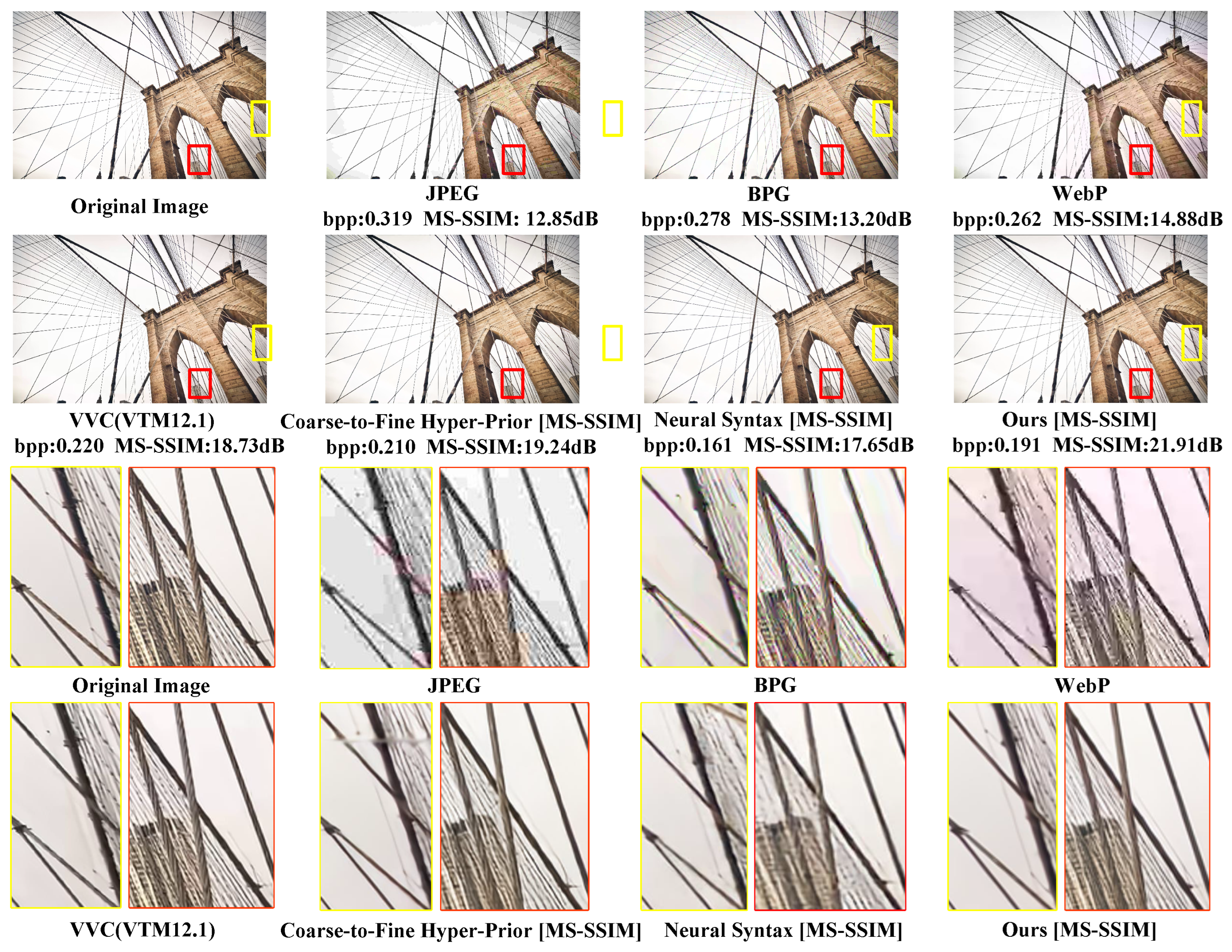

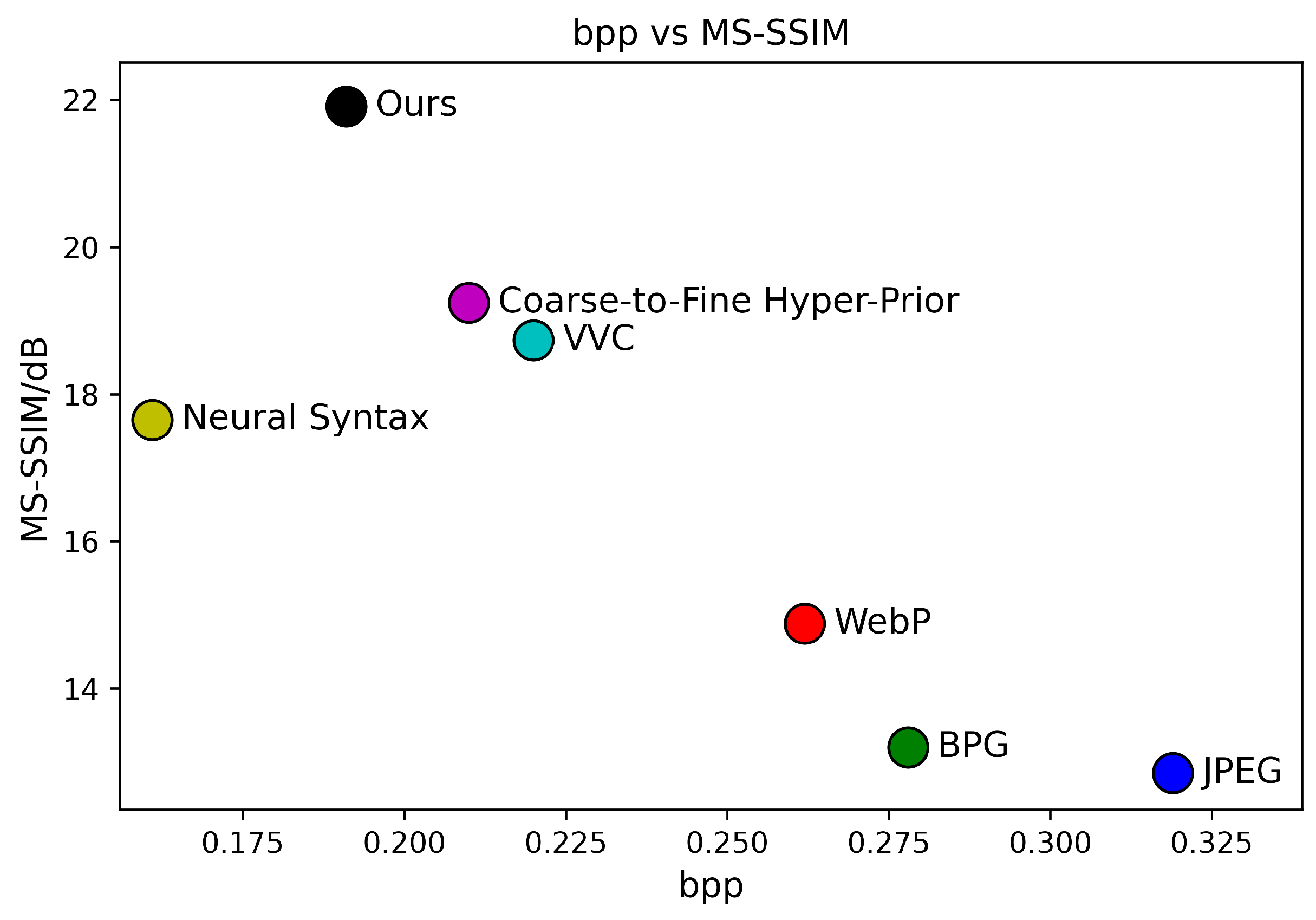

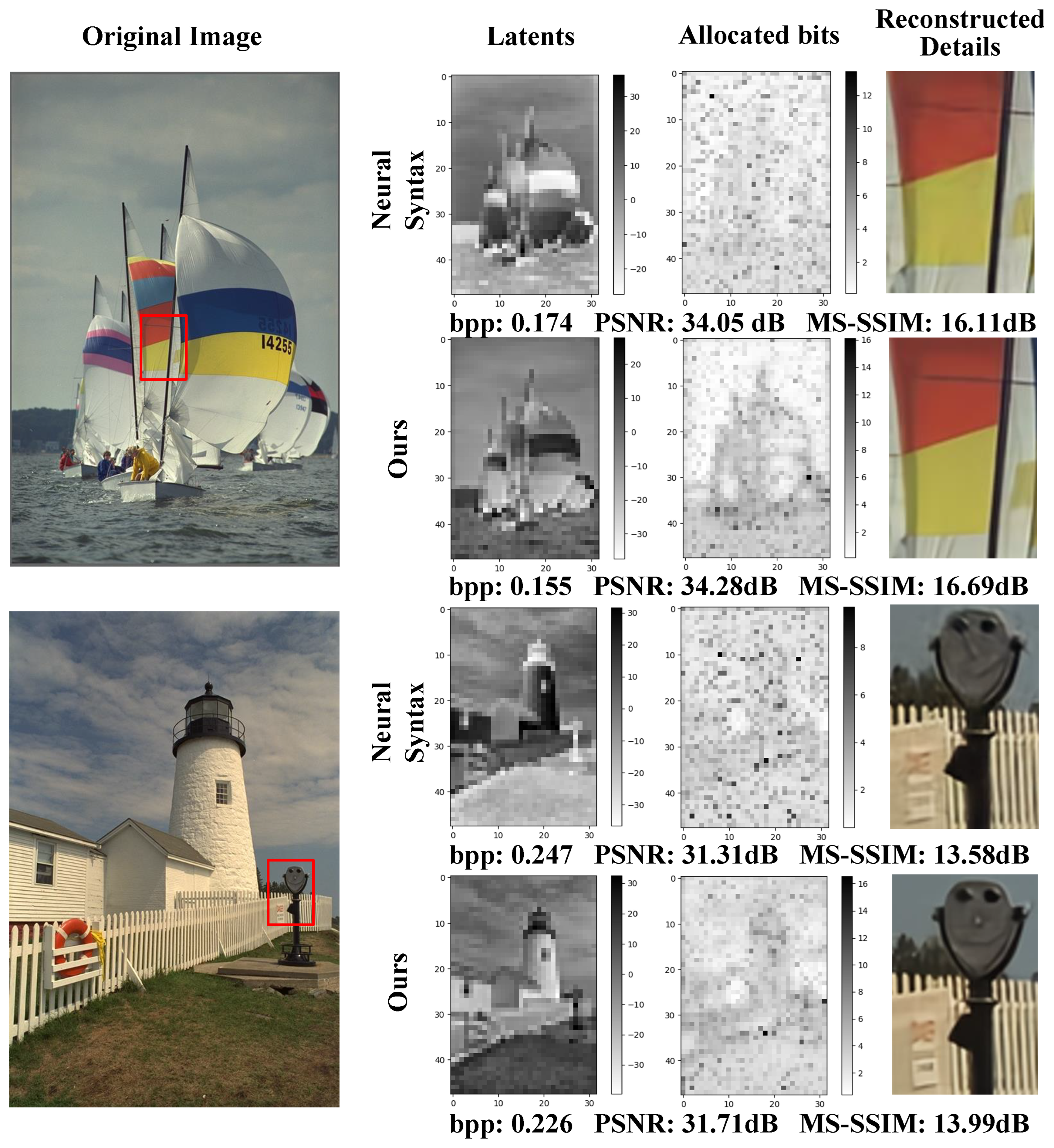

3.2. Subjective Quality Comparisons

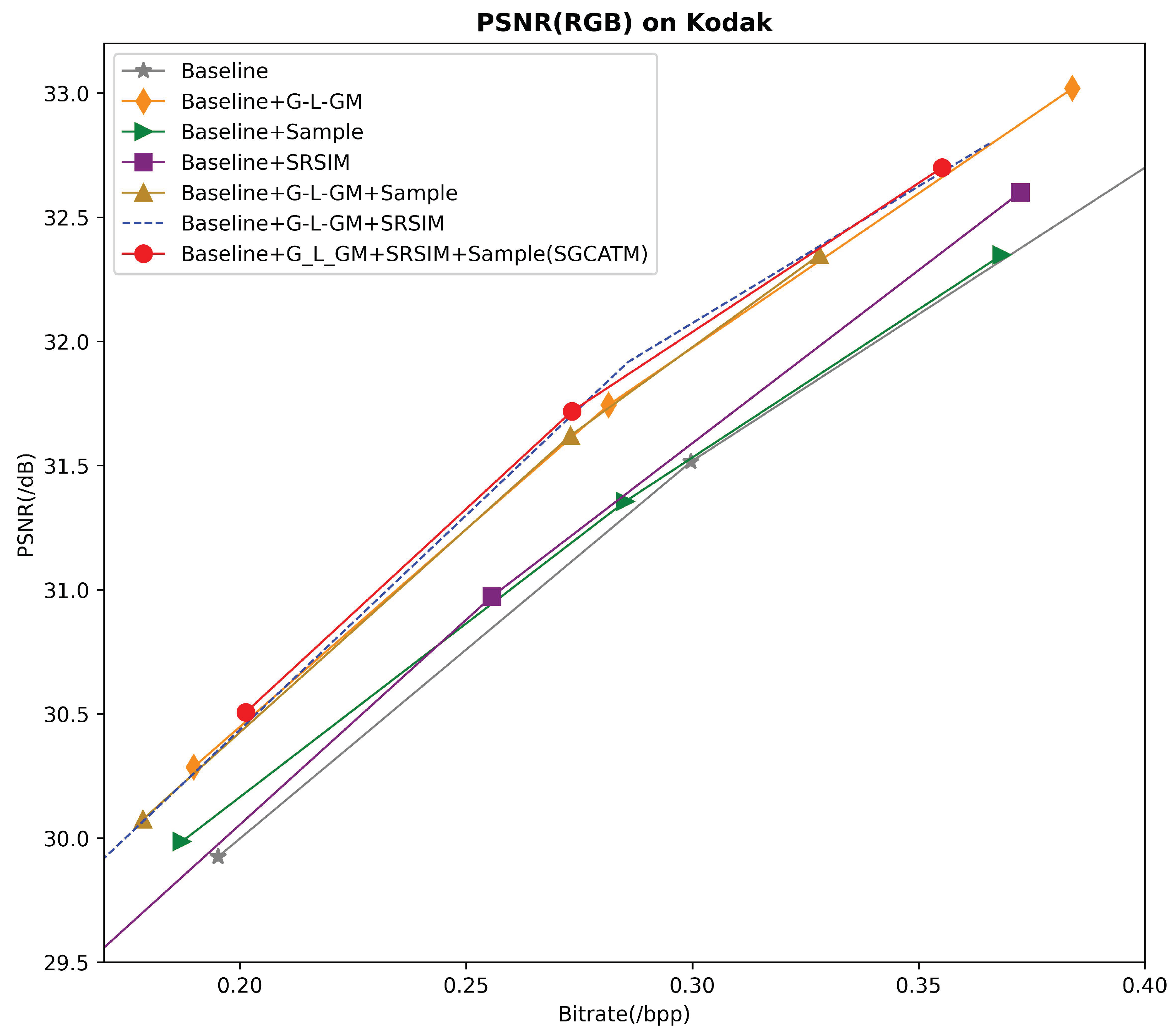

3.3. Ablation Studies

3.4. Complexity Analysis

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Kim, J.K.; Oh, K.J.; Kim, J.W.; Kim, D.W.; Seo, Y.H. Intra prediction-based hologram phase component coding using modified phase unwrapping. Appl. Sci. 2021, 11, 2194.

2. Savchenkova, E.A.; Ovchinnikov, A.S.; Rodin, V.G.; Starikov, R.S.; Evtikhiev, N.N.; Cheremkhin, P.A. Adaptive non-iterative histogram-based hologram quantization. Optik 2024, 311, 171933.

3. Zea, A.V.; Amado, A.L.V.; Tebaldi, M.; Torroba, R. Alternative representation for optimized phase compression in holographic data. OSA Contin. 2019, 2, 572–581.

4. Cheremkhin, P.; Kurbatova, E. Wavelet compression of off-axis digital holograms using real/imaginary and amplitude/phase parts. Sci. Rep. 2019, 9, 7561.

5. Xing, Y.; Kaaniche, M.; Pesquet-Popescu, B.; Dufaux, F. Adaptive nonseparable vector lifting scheme for digital holographic data compression. Appl. Opt. 2015, 54, A98–A109.

6. Belaid, S.; Hattay, J.; Machhout, M. Tele-Holography: A new concept for lossless compression and transmission of inline digital holograms. Signal Image Video Process. 2022, 16, 1659–1666.

7. Cheremkhin, P.A.; Kurbatova, E.A.; Evtikhiev, N.N.; Krasnov, V.V.; Rodin, V.G.; Starikov, R.S. Adaptive digital hologram binarization method based on local thresholding, block division and error diffusion. J. Imaging 2022, 8, 15.

8. Gonzalez, S.T.; Velez-Zea, A.; Barrera-Ramírez, J.F. High performance holographic video compression using spatio-temporal phase unwrapping. Opt. Lasers Eng. 2024, 181, 108381.

9. Kizhakkumkara Muhamad, R.; Birnbaum, T.; Blinder, D.; Schretter, C.; Schelkens, P. Binary hologram compression using context based Bayesian tree models with adaptive spatial segmentation. Opt. Express 2022, 30, 25597–25611.

10. Seeling, P. Visual user experience difference: Image compression impacts on the quality of experience in augmented binocular vision. In Proceedings of the 2016 13th IEEE Annual Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 9–12 January 2016; pp. 924–929.

11. Ohta, M.; Motokurumada, M.; Yokomichi, R.; Yamashita, K. A data compression for photo-based augmented reality system. In Proceedings of the 2013 IEEE International Symposium on Consumer Electronics (ISCE), Las Vegas, NV, USA, 11–14 January 2013; pp. 65–66.

12. Zhou, X.; Qi, C.R.; Zhou, Y.; Anguelov, D. RIDDLE: Lidar data compression with range image deep delta encoding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 17212–17221.

13. Rossinelli, D.; Fourestey, G.; Schmidt, F.; Busse, B.; Kurtcuoglu, V. High-throughput lossy-to-lossless 3D image compression. IEEE Trans. Med. Imaging 2020, 40, 607–620.

14. Wallace, G.K. The JPEG still picture compression standard. IEEE Trans. Consum. Electron. 1992, 38, xviii–xxxiv.

15. Taubman, D.S.; Marcellin, M.W.; Rabbani, M. JPEG2000: Image compression fundamentals, standards and practice. J. Electron. Imaging 2002, 11, 286–287.

16. Yee, D.; Soltaninejad, S.; Hazarika, D.; Mbuyi, G.; Barnwal, R.; Basu, A. Medical image compression based on region of interest using better portable graphics (BPG). In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 216–221.

17. Ginesu, G.; Pintus, M.; Giusto, D.D. Objective assessment of the WebP image coding algorithm. Signal Process. Image Commun. 2012, 27, 867–874.

18. Bross, B.; Wang, Y.K.; Ye, Y.; Liu, S.; Chen, J.; Sullivan, G.J.; Ohm, J.R. Overview of the versatile video coding (VVC) standard and its applications. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 3736–3764.

19. Shannon, C.E. A mathematical theory of communication. ACM SIGMOBILE Mob. Comput. Commun. Rev. 2001, 5, 3–55.

20. Sinz, F.H.; Bethge, M. What is the limit of redundancy reduction with divisive normalization? Neural Comput. 2013, 25, 2809–2814.

21. Carandini, M.; Heeger, D.J. Normalization as a canonical neural computation. Nat. Rev. Neurosci. 2012, 13, 51–62.

22. Ballé, J.; Minnen, D.; Singh, S.; Hwang, S.J.; Johnston, N. Variational image compression with a scale hyperprior. arXiv 2018, arXiv:1802.01436.

23. Minnen, D.; Ballé, J.; Toderici, G. Joint autoregressive and hierarchical priors for learned image compression. In Proceedings of the 32nd International Conference on Neural Information Processing Systems, Montréal, QC, Canada, 3–8 December 2018; pp. 10794–10803.

24. Lee, J.; Cho, S.; Beack, S.K. Context-adaptive Entropy Model for End-to-end Optimized Image Compression. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

25. Minnen, D.; Singh, S. Channel-wise autoregressive entropy models for learned image compression. In Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 25–28 October 2020; pp. 3339–3343.

26. Hu, Y.; Yang, W.; Liu, J. Coarse-to-fine hyper-prior modeling for learned image compression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11013–11020.

27. Hu, Y.; Yang, W.; Ma, Z.; Liu, J. Learning end-to-end lossy image compression: A benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 4194–4211.

28. Kim, J.H.; Heo, B.; Lee, J.S. Joint global and local hierarchical priors for learned image compression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5992–6001.

29. Ballé, J.; Laparra, V.; Simoncelli, E.P. End-to-end optimized image compression. In Proceedings of the 5th International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

30. Ballé, J.; Laparra, V.; Simoncelli, E.P. Density modeling of images using a generalized normalization transformation. In Proceedings of the 4th International Conference on Learning Representations, San Juan, Puerto Rico, 2–4 May 2016.

31. Wang, Z.; Simoncelli, E.P.; Bovik, A.C. Multiscale structural similarity for image quality assessment. In Proceedings of the Thirty-Seventh Asilomar Conference on Signals, Systems & Computers, Pacific Grove, CA, USA, 9–12 November 2003; Volume 2, pp. 1398–1402.

32. Liu, H.; Chen, T.; Shen, Q.; Ma, Z. Practical Stacked Non-local Attention Modules for Image Compression. In Proceedings of the CVPR Workshops, Long Beach, CA, USA, 16–20 June 2019.

33. Liu, H.; Chen, T.; Guo, P.; Shen, Q.; Cao, X.; Wang, Y.; Ma, Z. Non-local attention optimized deep image compression. arXiv 2019, arXiv:1904.09757.

34. Chen, T.; Liu, H.; Ma, Z.; Shen, Q.; Cao, X.; Wang, Y. End-to-end learnt image compression via non-local attention optimization and improved context modeling. IEEE Trans. Image Process. 2021, 30, 3179–3191.

35. Liu, J.; Lu, G.; Hu, Z.; Xu, D. A unified end-to-end framework for efficient deep image compression. arXiv 2020, arXiv:2002.03370.

36. Akbari, M.; Liang, J.; Han, J.; Tu, C. Learned bi-resolution image coding using generalized octave convolutions. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtually, 2–9 February 2021; Volume 35, pp. 6592–6599.

37. Ye, Z.; Li, Z.; Huang, X.; Yin, H. Joint asymmetric convolution block and local/global context optimization for learned image compression. In Proceedings of the 2021 Data Compression Conference (DCC), Snowbird, UT, USA, 23–26 March 2021; p. 381.

38. Ma, H.; Liu, D.; Xiong, R.; Wu, F. iWave: CNN-based wavelet-like transform for image compression. IEEE Trans. Multimed. 2019, 22, 1667–1679.

39. Ma, H.; Liu, D.; Yan, N.; Li, H.; Wu, F. End-to-end optimized versatile image compression with wavelet-like transform. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 1247–1263.

40. Xie, Y.; Cheng, K.L.; Chen, Q. Enhanced invertible encoding for learned image compression. In Proceedings of the 29th ACM International Conference on Multimedia, Chengdu, China, 20–24 October 2021; pp. 162–170.

41. Wang, D.; Yang, W.; Hu, Y.; Liu, J. Neural data-dependent transform for learned image compression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 17379–17388.

42. Pan, G.; Lu, G.; Hu, Z.; Xu, D. Content adaptive latents and decoder for neural image compression. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 556–573.

43. Zou, R.; Song, C.; Zhang, Z. The devil is in the details: Window-based attention for image compression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 17492–17501.

44. Liu, J.; Sun, H.; Katto, J. Learned image compression with mixed Transformer-CNN architectures. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 14388–14397.

45. Lu, M.; Chen, F.; Pu, S.; Ma, Z. High-efficiency lossy image coding through adaptive neighborhood information aggregation. arXiv 2022, arXiv:2204.11448.

46. Ruan, H.; Wang, F.; Xu, T.; Tan, Z.; Wang, Y. MIXLIC: Mixing Global and Local Context Model for Learned Image Compression. In Proceedings of the 2023 IEEE International Conference on Multimedia and Expo (ICME), Brisbane, Australia, 10–14 July 2023; pp. 684–689.

47. Agustsson, E.; Timofte, R. NTIRE 2017 challenge on single image super-resolution: Dataset and study. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 126–135.

48. Kodak, E. Kodak Lossless True Color Image Suite (PhotoCD PCD0992). 1993. Available online: http://r0k.us/graphics/kodak (accessed on 15 November 1999).

49. Toderici, G.; Shi, W.; Timofte, R.; Theis, L.; Ballé, J.; Agustsson, E.; Johnston, N.; Mentzer, F. Workshop and challenge on learned image compression (CLIC 2020). In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

50. Asuni, N.; Giachetti, A. TESTIMAGES: A Large-scale Archive for Testing Visual Devices and Basic Image Processing Algorithms. In Proceedings of the Italian Chapter Conference 2014—Smart Tools and Apps in Computer Graphics (STAG 2014), Cagliari, Italy, 22–23 September 2014; pp. 63–70.

51. Bégaint, J.; Racapé, F.; Feltman, S.; Pushparaja, A. CompressAI: A PyTorch library and evaluation platform for end-to-end compression research. arXiv 2020, arXiv:2011.03029.

52. Cheremkhin, P.A.; Lesnichii, V.V.; Petrov, N.V. Use of spectral characteristics of DSLR cameras with Bayer filter sensors. J. Phys. Conf. Ser. 2014, 536, 012021.

| Method Name | Paper Title | Published In | Highlight |
|---|---|---|---|
| NLAIC [34] | End-to-End Learnt Image Compression via Non-Local Attention Optimization and Improved Context Modeling | TIP 2021 | Embeds nonlocal network operations in the encoder–decoder and applies an attention mechanism to generate implicit masks that weight features for adaptive bit allocation. |
| Learned Bi-Resolution Image Coding [36] | Learned Bi-Resolution Image Coding using Generalized Octave Convolutions | AAAI 2021 | Introduces octave convolution to decompose the latent factors into high-resolution and low-resolution components, reducing spatial redundancy. |
| Neural Syntax [41] | Neural Data-Dependent Transform for Learned Image Compression | CVPR 2022 | Makes the first attempt to construct a neural data-dependent transform that optimizes coding efficiency for each individual image. |
| CAFT [42] | Content Adaptive Latents and Decoder for Neural Image Compression | ECCV 2022 | Introduces Content Adaptive Channel Dropping (CACD), which selects the optimal quality for each part of the data and drops unnecessary details to avoid redundancy. |
| STF&WAM [43] | The Devil Is in the Details: Window-Based Attention for Image Compression | CVPR 2022 | Introduces a more direct and effective window-based local attention block that captures both global structure and local texture. |
| TCM [44] | Learned Image Compression with Mixed Transformer–CNN Architectures | CVPR 2023 | Proposes an efficient parallel Transformer–CNN hybrid block that combines the local modeling capability of CNNs with the nonlocal modeling capability of Transformers. |

| Method | Kodak | CLIC | Tecnick |
|---|---|---|---|
| BPG [16] | 0% | 0% | 0% |
| VVC [18] | −18.1% | −13.9% | – |
| Channel-wise autoregressive [25] | −19.5% | – | −22.0% |
| Coarse-to-Fine Hyper-Prior [26] | −13.8% | −19.5% | 19.0% |
| INN [40] | −22.1% | −29.2% | −24.7% |
| TinyLIC [45] | −23.9% | −33.0% | −26.0% |
| Neural Syntax [41] | −12.9% | −25.7% | −15.2% |
| WAM [43] | −23.9% | −31.9% | −27.1% |
| TCM [44] | −26.1% | −34.4% | −27.8% |
| SGCATM (Ours) | −25.3% | −38.5% | −30.8% |
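The table lists percentages against a BPG row fixed at 0%, which suggests Bjøntegaard delta rate (BD-rate) with BPG as the anchor; this excerpt does not state the metric or the distortion measure, so that reading is an assumption. The sketch below computes BD-rate in the usual way: fit log-rate as a cubic polynomial in PSNR for each codec, integrate both fits over the PSNR interval covered by both curves, and convert the average log-rate difference into a percentage. It is pure Python, needs at least four rate-distortion points per codec, and its function names are illustrative.

```python
import math
from typing import List, Sequence, Tuple


def _solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def _fit_cubic(xs: Sequence[float], ys: Sequence[float]) -> Tuple[List[float], float, float]:
    """Least-squares cubic fit on normalized inputs t = (x - shift) / scale."""
    shift = sum(xs) / len(xs)
    scale = (max(xs) - min(xs)) / 2 or 1.0  # normalizing keeps the normal equations well conditioned
    ts = [(x - shift) / scale for x in xs]
    ata = [[sum(t ** (i + j) for t in ts) for j in range(4)] for i in range(4)]
    aty = [sum(y * t ** i for t, y in zip(ts, ys)) for i in range(4)]
    return _solve(ata, aty), shift, scale


def _integrate(fit, lo: float, hi: float) -> float:
    """Integral of the fitted cubic over x in [lo, hi], using dx = scale * dt."""
    coeffs, shift, scale = fit
    t_lo, t_hi = (lo - shift) / scale, (hi - shift) / scale
    return scale * sum(c / (i + 1) * (t_hi ** (i + 1) - t_lo ** (i + 1))
                       for i, c in enumerate(coeffs))


def bd_rate(rate_ref, psnr_ref, rate_test, psnr_test) -> float:
    """BD-rate in percent; negative means the test codec needs fewer bits."""
    fit_ref = _fit_cubic(psnr_ref, [math.log(r) for r in rate_ref])
    fit_test = _fit_cubic(psnr_test, [math.log(r) for r in rate_test])
    # Compare only over the quality range covered by both RD curves.
    lo = max(min(psnr_ref), min(psnr_test))
    hi = min(max(psnr_ref), max(psnr_test))
    avg_log_diff = (_integrate(fit_test, lo, hi) - _integrate(fit_ref, lo, hi)) / (hi - lo)
    return (math.exp(avg_log_diff) - 1.0) * 100.0
```

As a sanity check with made-up points, a test codec that needs exactly 80% of the anchor's bitrate at every PSNR yields a BD-rate of −20%, and two identical curves yield 0%.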

| Method | Parameters (M) ↓ | GMACs ↓ |
|---|---|---|
| Coarse-to-Fine Hyper-Prior [26] | 74.64 | 713.58 |
| STF [43] | 99.86 | 200.6 |
| Neural Syntax [41] | 14.7 | 203.22 |
| TCM [44] | 45.18 | 212.5 |
| SGCATM (Ours) | 34.35 | 1296.57 |
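The complexity table reports parameter counts in millions and GMACs (billions of multiply-accumulate operations). The sketch below shows how these two quantities are typically counted for plain 2-D convolutions. The layer stack and the 768 × 512 input size in the example are hypothetical, and the resolution at which the table's GMACs were measured is not stated in this excerpt. Because MACs grow with the number of output positions, the same model reports different GMACs at different input sizes, while its parameter count stays fixed.

```python
from typing import Iterable, Tuple


def conv2d_cost(c_in: int, c_out: int, k: int, h_out: int, w_out: int,
                bias: bool = True) -> Tuple[int, int]:
    """Parameter count and MACs of a single k x k 2-D convolution."""
    params = k * k * c_in * c_out + (c_out if bias else 0)
    # Every output element needs k*k*c_in multiply-accumulates.
    macs = k * k * c_in * c_out * h_out * w_out
    return params, macs


def stack_cost(layers: Iterable[Tuple[int, int, int, int]],
               height: int, width: int) -> Tuple[int, int]:
    """Sum parameters and MACs over (c_in, c_out, kernel, stride) convolutions."""
    total_params = total_macs = 0
    h, w = height, width
    for c_in, c_out, k, stride in layers:
        # Assumes 'same' padding: each layer divides H and W by its stride (rounded up).
        h, w = -(-h // stride), -(-w // stride)
        p, m = conv2d_cost(c_in, c_out, k, h, w)
        total_params += p
        total_macs += m
    return total_params, total_macs


if __name__ == "__main__":
    # Hypothetical 4-layer analysis transform evaluated on a 768 x 512 image.
    toy = [(3, 192, 5, 2), (192, 192, 5, 2), (192, 192, 5, 2), (192, 320, 5, 2)]
    params, macs = stack_cost(toy, 768, 512)
    print(f"{params / 1e6:.2f} M parameters, {macs / 1e9:.2f} GMACs")
```

Attention layers, entropy models, and transposed convolutions need their own counting rules, so this sketch only covers the convolutional part of a model.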

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shi, Y.; Ye, L.; Wang, J.; Wang, L.; Hu, H.; Yin, B.; Ling, N. Syntax-Guided Content-Adaptive Transform for Image Compression. Sensors 2024, 24, 5439. https://doi.org/10.3390/s24165439
