3.1. Image Distortion Caused by Camera Lens

Image distortion is a common issue in photography, in which the camera lens renders straight lines as curves [26]. The two most common types of lens distortion are radial and tangential distortion. Distortion results from the lens geometry and can significantly degrade image quality. The better the quality of a camera’s lens, the smaller the distortions introduced into the image. In photogrammetry especially, low distortion is an important factor in obtaining reliable measurements from images. Alternatively, distortions can be corrected with mathematical models. Even so, a lens with little distortion is preferable to correction by such models, which only approximate reality and therefore give less precise results. In photogrammetry, lens distortions are estimated through camera calibration, which involves photographing a control field. In this case, a checkerboard with 1813 (49 × 37) control points was used, as shown in Figure 2.

The calibration was performed using the Surveyor-Photogrammetry software version 6.0 [20], which, in addition to estimating the calibration parameters, also evaluates the results. Two methods were used to determine the magnitude and variation of the distortions in the cameras under review: an OpenCV function and the bundle adjustment method with additional parameters. Five photos were used to calibrate the smartphone and seven to calibrate the still camera. The still camera has a narrower sensor than the smartphone camera and therefore requires more photos to cover the entire image sensor. This way, the same checkerboard was used to calibrate both cameras.

An OpenCV function [27] was used to perform the camera calibration; it returns the intrinsic matrix (fx, fy, cx, cy) and the distortion coefficients matrix (k1, k2, k3, p1, p2). The accuracy of the process is described by the total re-projection error, i.e., the Euclidean distance between the points re-projected using the estimated intrinsic and extrinsic matrices and the measured image coordinates of the checkerboard corners. The smaller this error, the better the accuracy of the calculated parameters. The re-projection errors for all experiments are given in Table 3.
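As a minimal sketch of the error measure described above, the following computes the Euclidean distance for each observed/re-projected corner pair and summarizes the distances as a root-mean-square value. The corner coordinates are hypothetical, and using the RMS as the summary statistic is an assumption; the software used in this study may aggregate the distances differently.

```python
import math

def reprojection_errors(observed, reprojected):
    """Euclidean distance, in pixels, for each observed/re-projected corner pair."""
    return [math.hypot(u - ur, v - vr)
            for (u, v), (ur, vr) in zip(observed, reprojected)]

def rms_error(distances):
    """Root-mean-square of the per-corner distances (assumed summary statistic)."""
    return math.sqrt(sum(d * d for d in distances) / len(distances))

# Hypothetical corner coordinates in pixels (not taken from the paper).
observed = [(100.0, 200.0), (150.0, 200.0), (100.0, 250.0)]
reprojected = [(100.3, 200.4), (150.0, 199.5), (99.4, 250.8)]

errors = reprojection_errors(observed, reprojected)
rms = rms_error(errors)
print(f"per-corner errors: {errors}, RMS: {rms:.3f} px")
```

A lower RMS value means the estimated parameters reproduce the measured corner positions more closely.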

The smartphone has a slightly larger re-projection error, which indicates that its calibration parameters perform worse. For the still camera, the performance of the parameters generally appears to worsen as the focal length increases.

The intrinsic matrix and the distortion coefficients matrix resulting from the calibration are presented in Table 4 and Table 5, respectively. For the intrinsic matrix, the following are tabulated: the focal length (fx, fy), the coordinates of the primary point with respect to the upper left corner of the image (cx, cy), the estimated aspect ratio AspectRatio (fy/fx), the nominal focal length f, the calibrated focal length c in pixels and mm, and the coordinates of the primary point (xo, yo) relative to the center of the image in pixels and mm.

In Table 4, the AspectRatio is approximately 1.000 in all cases, and the calibrated focal length c deviates from the nominal focal length f by 1.085 mm to 5.341 mm for the still camera and by 0.475 mm for the smartphone. In percentage terms, the still camera’s focal length differs from the nominal value by 3.9% to 8.2%, while the smartphone’s differs by 9.5%. In any case, the results are realistic.

The coefficients k1, k2, k3 and p1, p2 obtained from the calibration process (see Table 5) can be substituted into the distortion model equations to produce distortion diagrams. These diagrams show the total distortion along pre-defined evaluation segments that start at the primary point and end at the image’s corners and mid-sides, according to the guide in Figure 3.
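The following sketch illustrates how such a distortion profile could be generated. It assumes the radial and tangential (Brown-Conrady) model in the form documented by OpenCV, applied to normalized image coordinates, and it measures the total distortion as the pixel displacement between the ideal and the distorted position. The camera parameters are hypothetical, and the function names are illustrative rather than part of any library.

```python
import math

def total_distortion_px(u, v, fx, fy, cx, cy, k1, k2, k3, p1, p2):
    """Pixel displacement caused by radial plus tangential distortion at (u, v)."""
    x, y = (u - cx) / fx, (v - cy) / fy            # normalized coordinates
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2**2 + k3 * r2**3
    x_d = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    y_d = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return math.hypot(fx * (x_d - x), fy * (y_d - y))

def profile_along_segment(end_u, end_v, steps, **cam):
    """Sample (distance from primary point, distortion) from (cx, cy) to the end point."""
    cx, cy = cam["cx"], cam["cy"]
    samples = []
    for i in range(steps + 1):
        t = i / steps
        u, v = cx + t * (end_u - cx), cy + t * (end_v - cy)
        samples.append((math.hypot(u - cx, v - cy),
                        total_distortion_px(u, v, **cam)))
    return samples

# Hypothetical parameters for a 6000 x 4000 image (not values from Table 4 or 5).
cam = dict(fx=1000.0, fy=1000.0, cx=3000.0, cy=2000.0,
           k1=-0.1, k2=0.0, k3=0.0, p1=0.0, p2=0.0)

# Half of a diagonal evaluation segment: primary point to one image corner.
profile = profile_along_segment(6000.0, 4000.0, 4, **cam)
for distance, distortion in profile:
    print(f"{distance:8.1f} px from primary point -> {distortion:8.2f} px distortion")
```

With purely radial coefficients the profile depends only on the distance from the primary point; non-zero p1 and p2 break that symmetry.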

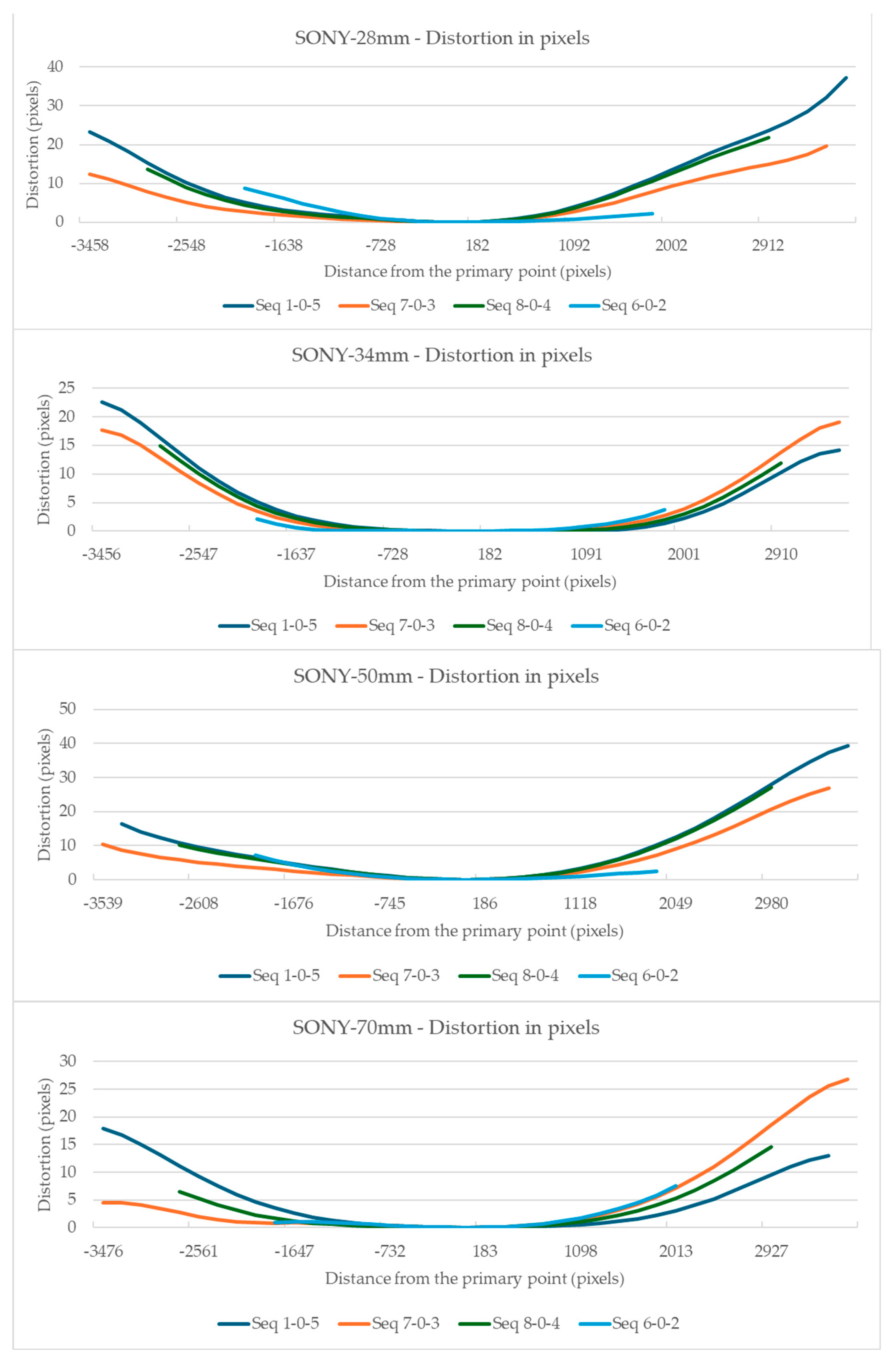

The total distortion along the evaluation segments 1-0-5, 8-0-4, 7-0-3, and 6-0-2, as a function of distance from the primary point, is shown in Figure 4 and Figure 5 for the Sony camera and the Samsung smartphone, respectively.

In the Sony camera’s case, Figure 4 shows a smooth variation in distortion relative to the primary point, at least in the central part of the graphs. No systematic symmetry can be discerned, except at the 34 mm focal length, where the distortion curves show a form of symmetry.

The total distortion of the Samsung smartphone camera (see Figure 5) shows sharp changes, with the most significant deviations occurring at the ends of the diagonal evaluation segments 7-0-3 and 1-0-5. The curves are symmetric about the primary point.

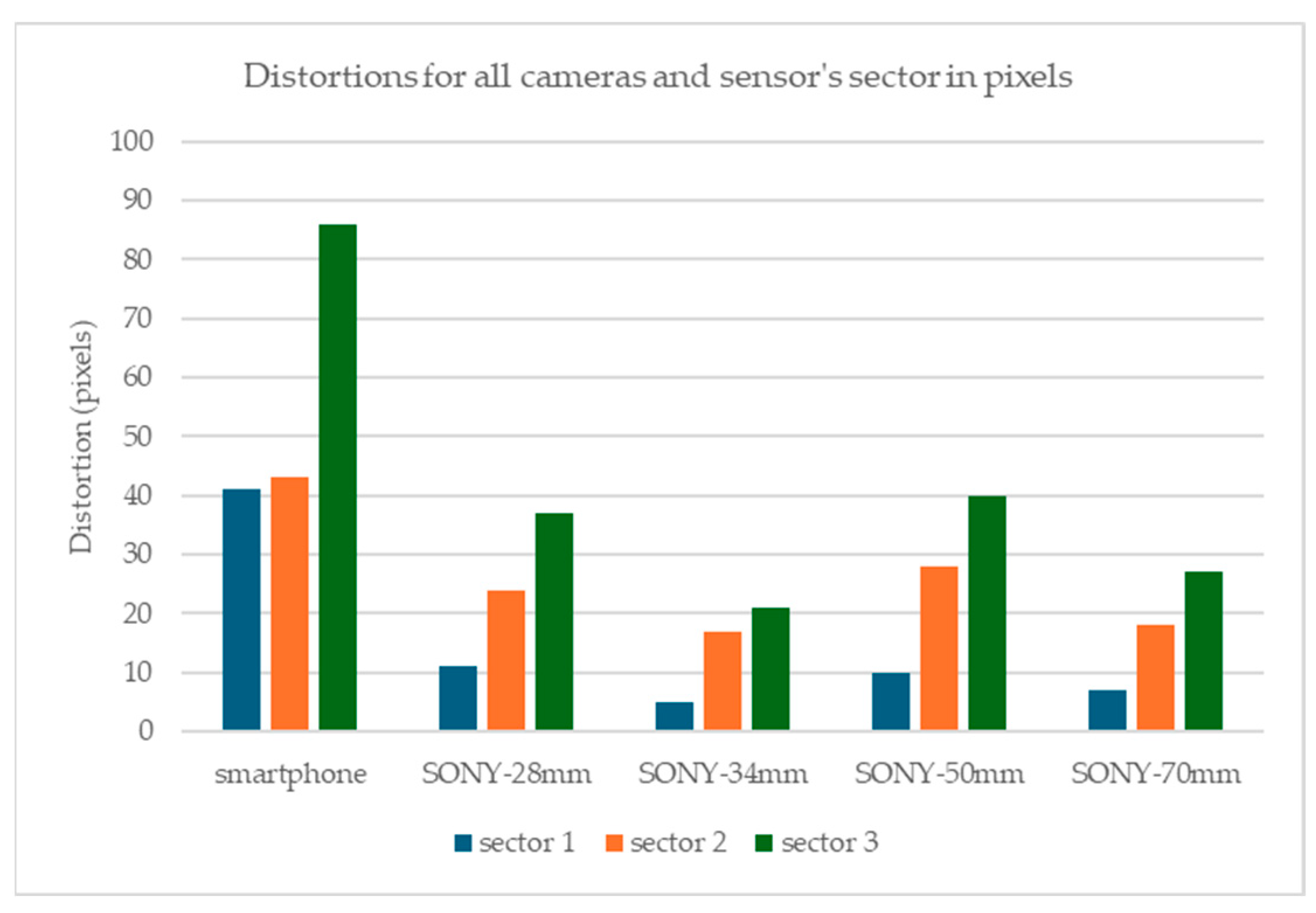

To generalize the conclusions about distortion across the sensor area, the surface of the sensor was divided into three sectors, see Figure 6. Sector 1 is the circular sector centered at the primary point, which has a radius of the sensor’s height. Sector 2 is defined by the intersection of sector 1 and another circular sector with a radius of the sensor’s width. Finally, Sector 3 is defined as the intersection of the circular sector with a radius of the sensor diagonal and that with a radius of its width.
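One way to read the sector definitions is as three concentric zones around the primary point. The sketch below encodes that reading under explicit assumptions: the three radii are taken as half the sensor height, half the width, and half the diagonal (so that the outermost circle reaches the image corners), and the primary point is placed at the image center. Both choices are interpretations for illustration; Figure 6 remains the authoritative definition.

```python
import math

def sector_of(u, v, cx, cy, width_px, height_px):
    """Return 1, 2 or 3 for the zone containing pixel (u, v), or None if outside."""
    r = math.hypot(u - cx, v - cy)
    if r <= height_px / 2.0:                            # inner circle
        return 1
    if r <= width_px / 2.0:                             # ring out to half the width
        return 2
    if r <= math.hypot(width_px, height_px) / 2.0:      # ring out to the corners
        return 3
    return None

# Example on a 6000 x 4000 image with the primary point assumed at the center.
width, height = 6000, 4000
cx, cy = width / 2.0, height / 2.0
near_center = sector_of(3100.0, 2100.0, cx, cy, width, height)
near_side = sector_of(5500.0, 2000.0, cx, cy, width, height)
near_corner = sector_of(5900.0, 3900.0, cx, cy, width, height)
print(near_center, near_side, near_corner)
```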

For these sectors, the maximum distortions observed in Figure 4 and Figure 5 for the Sony camera and the Samsung smartphone are displayed in Figure 7.

Figure 7 shows that, for the Sony camera, sector 1 has the lowest distortions, between 5 and 11 pixels; in sector 2, the distortion ranges between 17 and 28 pixels; and in sector 3, between 21 and 40 pixels. The best case is the 34 mm focal length, where the distortions are limited to 5, 17, and 21 pixels for sectors 1, 2, and 3, respectively. For the smartphone’s camera, the maximum distortion observed is 41 pixels in sector 1, 43 in sector 2, and 86 in sector 3.

To enable a direct comparison of the distortions of the two cameras and their lens combinations, all distortion measurements were normalized to the sensor size. All distortion values and distances from the primary point were divided by the number of pixels along the longest evaluation segment of each camera. The resulting values are shown in Figure 8 for all cameras along the 7-0-3 diagonal evaluation segment, and in Figure 9 for all cameras at the sector level.

Figure 8 shows that the smartphone camera has a more complex form of distortion, which increases more steeply than that of the Sony camera. The Sony camera, in contrast, presents smoother gradients with a characteristic asymmetry about the primary point. In terms of the normalized total lens distortion, the smartphone camera displays larger values, see Figure 9. In the Sony camera, the total distortion is smaller, although it is also noticeable at the edges of the images.

The alternative for camera calibration is the bundle adjustment method with additional parameters. This method provides more statistics for evaluating the outcome than the OpenCV function. The general elements of the bundle adjustment solution are the number of photos, control points used, observations, additional parameters, and degrees of freedom, see Table 6.

In Table 6, the eight additional parameters are the variables c, xo, yo, k1, k2, k3, and p1, p2. The degrees of freedom are large in both camera calibrations and differ from each other because a different number of photos was used in each case.
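As a rough illustration of why the degrees of freedom are large and depend on the number of photos, the sketch below counts observations and unknowns for a calibration with fixed control points. It assumes two observations (image x and y) per measured point, six exterior orientation unknowns per photo, the eight additional parameters listed above, and that every checkerboard point is measured on every photo. The last assumption is an upper bound, so the figures in Table 6 may be smaller.

```python
def degrees_of_freedom(n_photos, n_points_per_photo, n_additional=8):
    """Return (observations, unknowns, degrees of freedom) for the adjustment."""
    observations = 2 * n_photos * n_points_per_photo   # x and y per image point
    unknowns = 6 * n_photos + n_additional             # exterior orientation + extras
    return observations, unknowns, observations - unknowns

# 1813 checkerboard points; five photos for the smartphone, seven for the still camera.
smartphone_dof = degrees_of_freedom(5, 1813)
still_camera_dof = degrees_of_freedom(7, 1813)
print("smartphone:", smartphone_dof)
print("still camera:", still_camera_dof)
```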

In the analytical solution of the bundle adjustment with additional parameters, the residuals of the unknown parameters indicate the accuracy achieved in the results. The sigma, the estimates of the main elements of the internal orientation, and their standard deviations are displayed in Table 7.

In Table 7, the sigma is at approximately the same level for all cameras, and the standard deviations of the estimated focal length and primary point coordinates are low (1.59–5.82 pixels), except for the Sony camera at the 70 mm focal length, where they are larger (7.82–11.02 pixels). Standard deviations of this order of magnitude indicate that the camera calibration results are reliable.

The results of the single-image photogrammetry rectification of a photo with the checkerboard are utilized to evaluate the camera calibration parameters [

20]. The process is performed twice using the distorted image and the undistorted. The analytical differences between the accuracies in the two cases show the performance of the calibration at a control point level. A positive value means that the calibration parameters have a positive effect, and a negative value means that the calibration parameters do not work effectively. For the visual evaluation of the results, depending on the color gradation, see

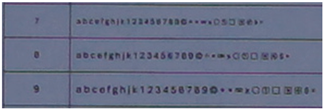

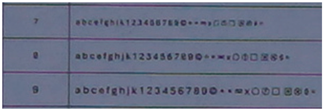

Table 8, the improvement or worsening of the results is illustrated.

Table 9 presents the evaluation images from the application of the internal orientation elements, as derived separately from the OpenCV function and the bundle adjustment method.

In the evaluation images in Table 9, the Sony camera gives a distinct result at each focal length. The 28, 50, and 70 mm cases follow a similar pattern, whereas the 34 mm case has a different form. For the Samsung smartphone, there is an evident improvement over most of the image surface, forming a concentric, symmetrical shape. Applying the distortion coefficients improves the results overall, but certain cores remain where the results are worse, as in Sony’s 28, 34, and 70 mm cases.

The overall percentage difference between the distorted and undistorted rectifications, called the “Rect” Indicator [20], indicates the overall performance of the calibration parameters. The results for each camera are detailed in Table 10.
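The exact definition of the indicator is given in [20] and is not reproduced here. As one plausible reading only, the sketch below computes per-point differences between the rectification errors of the distorted and undistorted images (positive meaning improvement, as described above) and an overall percentage improvement based on the root-mean-square error. The formula and the error values are assumptions made for illustration.

```python
import math

def rmse(errors):
    """Root-mean-square of a list of point errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))

def per_point_difference(err_distorted, err_undistorted):
    """Positive values mean the undistorted image gives a smaller error."""
    return [d - u for d, u in zip(err_distorted, err_undistorted)]

def percent_improvement(err_distorted, err_undistorted):
    """Overall change in RMSE as a percentage of the distorted-image RMSE."""
    base = rmse(err_distorted)
    return 100.0 * (base - rmse(err_undistorted)) / base

# Hypothetical control point errors in pixels (not data from the paper).
err_distorted = [4.0, 3.0, 5.0]
err_undistorted = [1.0, 3.5, 2.0]

differences = per_point_difference(err_distorted, err_undistorted)
improvement = percent_improvement(err_distorted, err_undistorted)
print(differences, f"{improvement:.1f}%")
```

In this toy case the second point gets slightly worse while the overall figure still improves, which mirrors the local "cores" of worse results noted in the evaluation images.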

Using the distortion coefficients to correct the images results in a significant improvement in all cases (see Table 10), with the smartphone camera showing the highest performance rate. The OpenCV method is more effective than the bundle adjustment method in every case, verifying and extending the findings of the study [20]. The results of the photogrammetric bundle adjustment with additional parameters follow the same trends but with smaller percentages. The same conclusions emerge, in greater detail, from the spatial distribution of the differences in the evaluation images in Table 9.

3.2. Accuracy of Geometrical Measurements Extraction

A series of images was taken with each camera to determine the accuracy of the geometric measurements that can be extracted through photogrammetry with the cameras in question. Three convergent photographs were taken with the smartphone and with the still camera at each of the nominal focal lengths of 28, 34, 50, and 70 mm.

Figure 10 shows an example of three photographs depicting the test field taken by the Sony-34 mm camera.

For photogrammetric processes to be carried out, points with known ground coordinates must be depicted in the photographs. These points are used as control points or checkpoints to determine the accuracy achieved in actual three-dimensional measurements. For this purpose, 21 points with known coordinates were used, of which 5 served as checkpoints and the rest as control points. These points are characteristic details on the face of the building, see Figure 11 for an example. To document the control points during the field survey, each point code was noted on printed close-up photographs of the object. This allows the points to be identified in the photographs during post-processing and coordinates to be assigned to them. The coordinates of the points were measured and calculated by topographic methods [28], which ensure accuracy and reliability [29]. The points were measured with a total station, in reflectorless mode, from the same location. With this technique, and given the short measurement distances, the coordinates are determined with high precision, estimated at 2 mm.

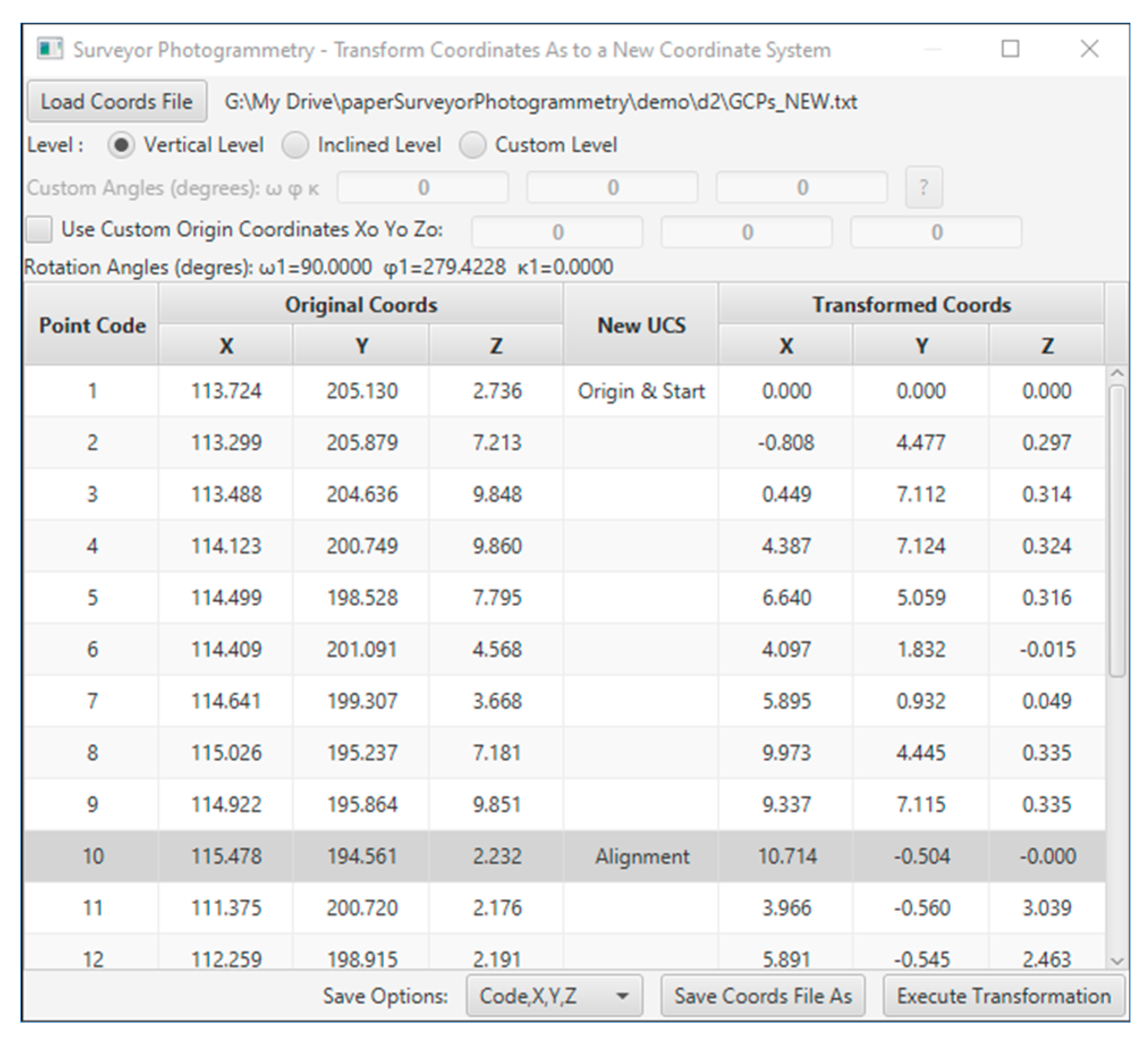

It was considered appropriate to transform the coordinates of the control points and checkpoints into a coordinate system aligned with the building’s face. The X-axis is parallel to the building facade, the Y-axis is vertical, and the Z-axis is perpendicular to the XY plane, see Figure 12.

The coordinate transformation was performed in the Surveyor-Photogrammetry software version 6.0, see Figure 13. For the transformation, it is sufficient to define the direction of the building face with at least two points, one for the origin (Origin and Start) and one for the definition of the direction (Alignment).
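A transformation of this kind can be sketched as a translation to the origin point followed by a rotation about the vertical axis, so that the new X-axis runs from the origin towards the alignment point. The sketch assumes survey coordinates given as (east, north, height) and a right-handed target system; the sign of the Z-axis (towards or away from the camera) is an assumption, and the function is illustrative, not the software's implementation.

```python
import math

def to_facade_system(point, origin, alignment):
    """Transform (east, north, height) into facade-aligned (X, Y, Z)."""
    e, n, h = point
    e0, n0, h0 = origin
    e1, n1, _ = alignment
    length = math.hypot(e1 - e0, n1 - n0)
    cos_t, sin_t = (e1 - e0) / length, (n1 - n0) / length   # facade direction
    de, dn = e - e0, n - n0
    x = de * cos_t + dn * sin_t        # along the facade
    y = h - h0                         # vertical
    z = de * sin_t - dn * cos_t        # perpendicular to the facade (X cross Y)
    return x, y, z

# Hypothetical survey coordinates in metres (not values from Table 11).
origin = (100.0, 200.0, 10.0)
alignment = (103.0, 204.0, 10.0)

aligned = to_facade_system(alignment, origin, alignment)
offset = to_facade_system((104.0, 197.0, 12.0), origin, alignment)
print(aligned, offset)
```

The alignment point lands on the X-axis at its horizontal distance from the origin, while a point in front of the facade receives a non-zero Z value.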

The ground coordinates of the control points and checkpoints in the new coordinate system are listed in Table 11. The Z coordinates range from −0.015 m to 3.039 m, a satisfactory depth range that should, in theory, yield reliable values for the external orientation of the photographs and, in turn, reliable photogrammetric intersections.

With this custom coordinate system, the accuracy of the checkpoint coordinates in actual 3D measurements can be assessed more clearly, especially when the Z-axis points in the same direction as the camera’s optical axis. The accuracy along the Z-axis is critical in photogrammetry, as measurements along this direction are the most sensitive to error.

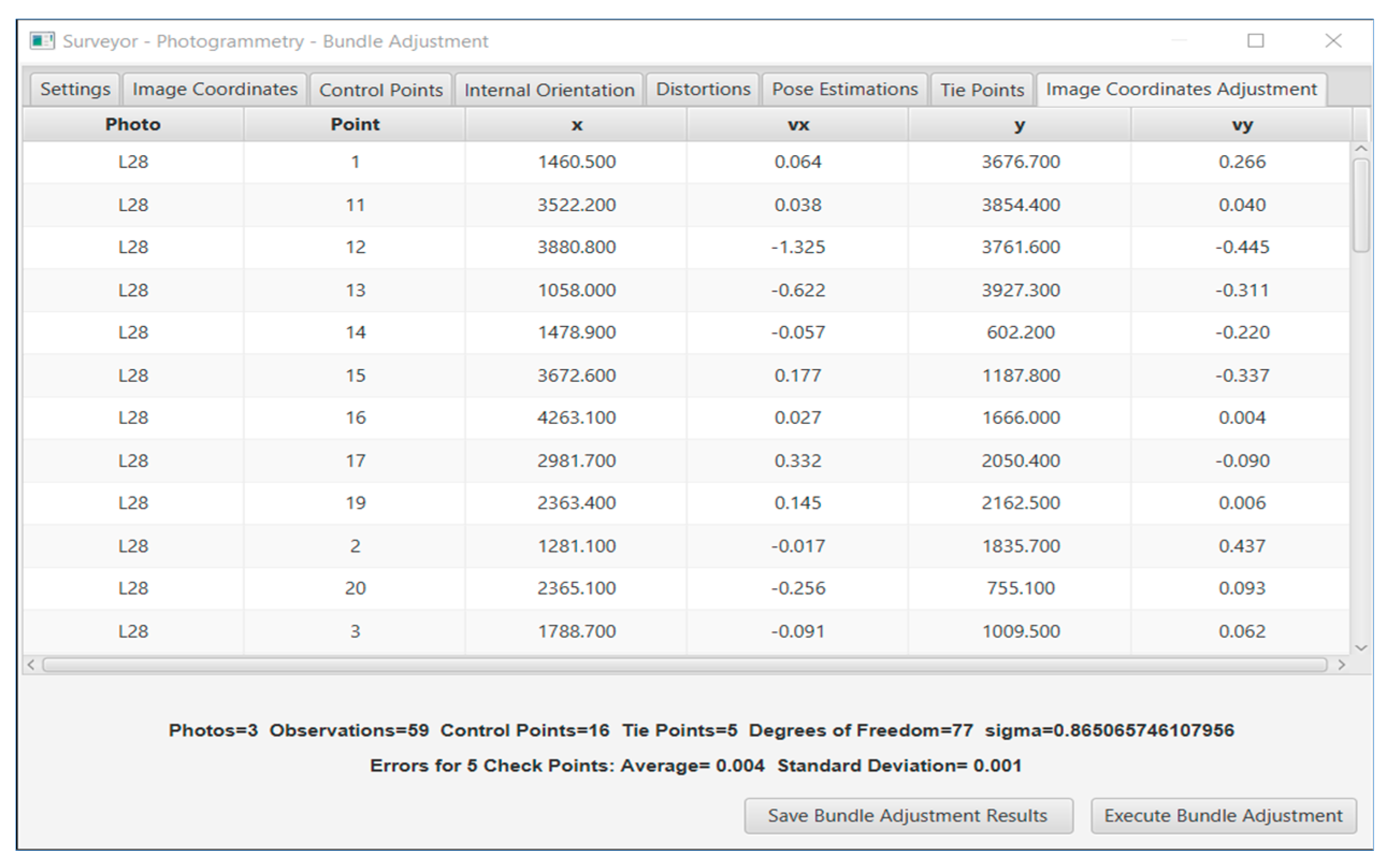

To test the accuracy that can be achieved in calculating coordinates on the object, a photogrammetric survey of a building face was conducted. Specifically, the photogrammetric bundle adjustment method with additional parameters was used in the Surveyor-Photogrammetry environment, see an instance in Figure 14. The method takes as input the image coordinates and the ground coordinates of the control points and estimates the coordinates of the checkpoints. Optionally, the internal orientation elements, i.e., the focal length, primary point coordinates, and distortion coefficients, can be estimated as well.

The bundle adjustment method was performed for the still camera and the smartphone. Two solutions were computed: in the first, the focal length and the coordinates of the primary point were included as unknown parameters; in the second, the distortion parameters k1, k2, k3, p1, p2 were added as further unknowns. The results of both cases are shown in Table 12 and Table 13, respectively.

The differences between the checkpoint coordinates calculated by the two mapping methods, i.e., photogrammetry and topography, provide an estimate of the accuracy achieved. It is evident from Table 12 and Table 13 that the still camera outperforms the smartphone camera at all focal lengths. The accuracy differences between the two cameras are 10–17 mm. These accuracies determine the scales of the mapping products that can be produced photogrammetrically with this equipment. Furthermore, accuracy improves significantly when the distortion coefficients are taken into account.
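The comparison of checkpoint coordinates can be summarized in several ways. The sketch below shows one common choice, the root-mean-square error per axis and in 3D, computed from the differences between photogrammetric and surveyed coordinates. Whether Table 12 and Table 13 report exactly this statistic is not stated, and the coordinates used here are hypothetical.

```python
import math

def checkpoint_rmse(photogrammetric, surveyed):
    """Return ((rmse_x, rmse_y, rmse_z), rmse_3d) in the units of the inputs."""
    n = len(surveyed)
    squared_sums = [0.0, 0.0, 0.0]
    for p, s in zip(photogrammetric, surveyed):
        for axis in range(3):
            squared_sums[axis] += (p[axis] - s[axis]) ** 2
    per_axis = tuple(math.sqrt(total / n) for total in squared_sums)
    rmse_3d = math.sqrt(sum(squared_sums) / n)
    return per_axis, rmse_3d

# Hypothetical checkpoint coordinates in metres (not values from the paper).
surveyed = [(1.000, 2.000, 0.500), (4.000, 1.000, 2.000)]
photogrammetric = [(1.003, 2.000, 0.496), (3.997, 1.000, 2.004)]

per_axis, rmse_3d = checkpoint_rmse(photogrammetric, surveyed)
print([f"{v * 1000:.1f} mm" for v in per_axis], f"{rmse_3d * 1000:.1f} mm")
```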

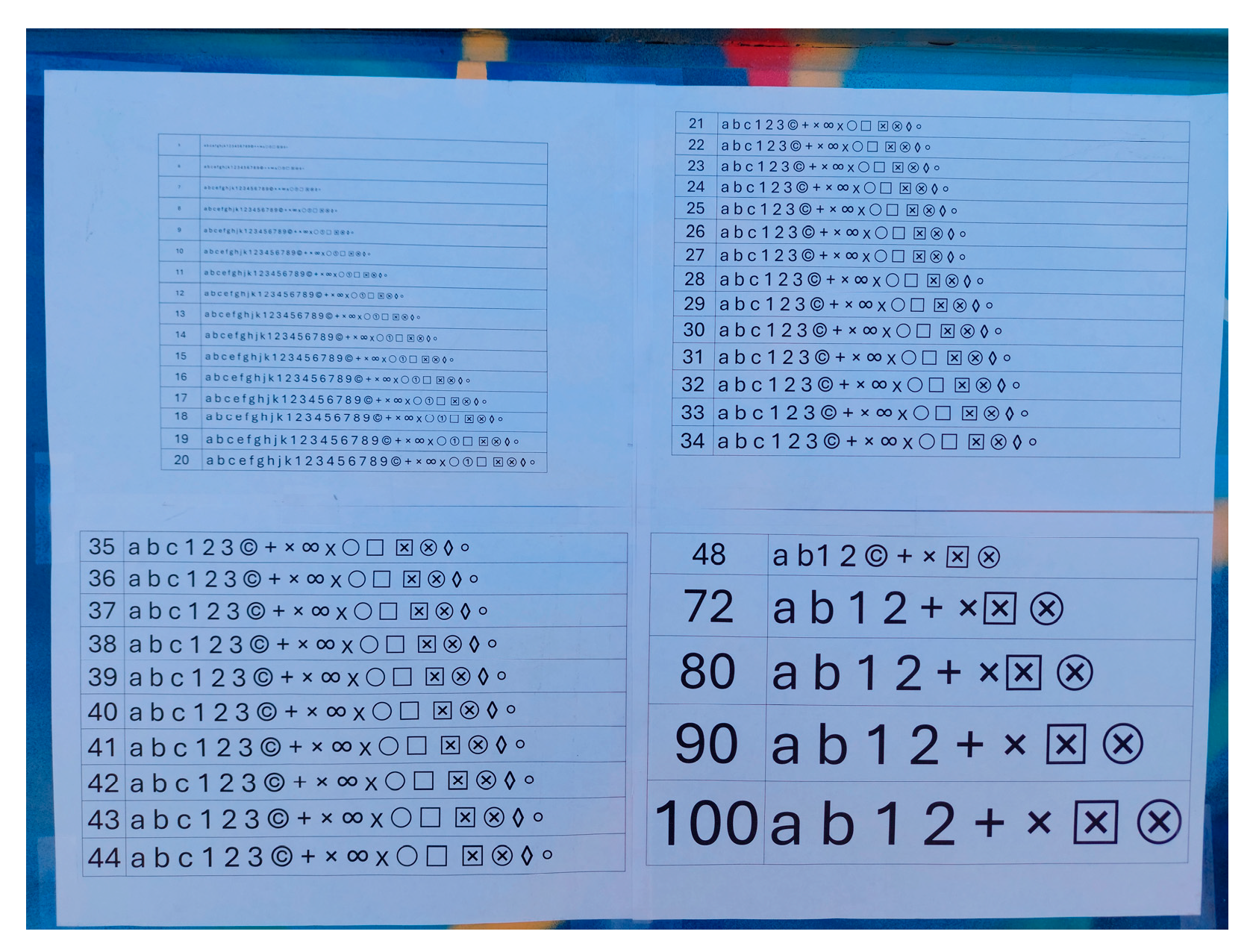

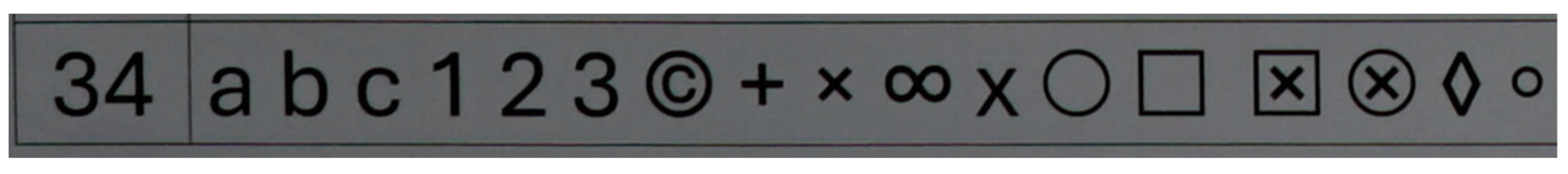

3.3. Image Quality Test between the Smartphone and the Still Camera

To compare the quality of the images from the smartphone camera and the still camera, a board printed with alphanumeric characters and special symbols in varying sizes was photographed in a series of shots, see Figure 15.

The experiment was divided into three sections; in each section, a specific horizontal width on the surface of a building is covered photographically, regardless of the shooting distance. These three sections were named frame 1, frame 2, and frame 3. For the still camera, the nominal focal lengths of 28, 34, 50, and 70 mm were used, while for the smartphone camera, the only available focal length of 5 mm was used. Therefore, each frame comprises five photos, one from the smartphone camera and four from the still camera. As an example, the three frames taken by the Samsung smartphone are shown in Figure 16.

During the experiment, all the photographs were taken within a brief period. In this way, the sun’s position, which affects the lighting, did not play a significant role in the quality of the photos. For the same reason, the humidity, pressure, and atmospheric composition remained the same during the photography and did not affect the quality of the photos. The same horizontal coverage of the object in the frame photos was achieved by using as a guide the distinctive vertical lines on the facade of the building where the quality board was placed, see Figure 16. In addition, the camera was always placed along a line perpendicular to the facade of the building that passed through the quality board. This way, the same horizontal coverage of the object and the same shooting angles were ensured.

Image quality is a subjective concept that depends on the purpose for which the image will be used and on the viewer’s preferences. However, some quantitative metrics [30] can be used to measure and compare image quality, such as sharpness, contrast, noise, color accuracy, and dynamic range. Photogrammetry is mainly interested in identifying the edges of shapes in images. For this reason, alphanumeric characters and symbols were photographed in each frame. Whether these appear recognizable, distorted, or sharp gives a clear picture of each camera’s image quality. In this way, the performance of the camera lens at different nominal focal lengths is also studied.

Selected parts of the photos where the board is depicted have been cropped from the frames and are presented in Table 14, Table 15 and Table 16.

The photos of the alphanumeric characters and symbols show that between the different nominal focal lengths of 28, 34, 50, and 70 mm, there are no significant differences in the quality of the images. This means that the performance of the lens used by the Sony still camera is satisfactory.

Comparing the photos from the Sony camera with those from the Samsung smartphone in Table 14, Table 15 and Table 16, there is a qualitative difference in the recognizability and sharpness of the depicted characters and symbols, especially as the shooting distance increases. The results for Sony are slightly better, but in no case are the differences large. A slightly larger difference in image quality is shown in frame 3, which corresponds to the longest shooting distance of the test.

At longer shooting distances, no comparison can be made, as the smartphone cannot use a longer focal length lens. Digital zoom cannot be used in photogrammetry, because the result is not a central projection, and it has no practical benefit either, as it simply magnifies the original information from the imaging sensor without adding any. The still camera’s sensor measures 6000 × 4000 pixels (width × height), i.e., a ratio of 1.5:1, while the smartphone camera’s measures 9248 × 6936 pixels, i.e., 1.33:1. Since the same horizontal area is photographed, the pixel ratio between the still camera and the smartphone camera is 6000:9248, i.e., 0.65, in favor of the smartphone sensor.
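The ratios quoted above can be checked with a few lines of arithmetic. The sketch also expresses the pixel ratio as an object-space pixel size for a given horizontal coverage; the 10 m coverage is a hypothetical value chosen only to make the comparison concrete.

```python
def aspect_ratio(width_px, height_px):
    """Width-to-height ratio of the sensor in pixels."""
    return width_px / height_px

def object_pixel_size_mm(coverage_m, width_px):
    """Size on the object covered by one pixel, for a given horizontal coverage."""
    return 1000.0 * coverage_m / width_px

sony = (6000, 4000)       # still camera, pixels (from the text)
samsung = (9248, 6936)    # smartphone camera, pixels (from the text)

sony_aspect = aspect_ratio(*sony)
samsung_aspect = aspect_ratio(*samsung)
pixel_ratio = sony[0] / samsung[0]

coverage_m = 10.0         # hypothetical horizontal coverage on the facade
sony_pixel_mm = object_pixel_size_mm(coverage_m, sony[0])
samsung_pixel_mm = object_pixel_size_mm(coverage_m, samsung[0])

print(f"aspect ratios: {sony_aspect:.2f}:1 and {samsung_aspect:.2f}:1")
print(f"pixel ratio: {pixel_ratio:.2f}")
print(f"object pixel size: {sony_pixel_mm:.2f} mm vs {samsung_pixel_mm:.2f} mm")
```

For the same horizontal coverage, the smartphone samples the object with smaller pixels, which is the sense in which the ratio favors its sensor; this says nothing about lens sharpness or noise.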

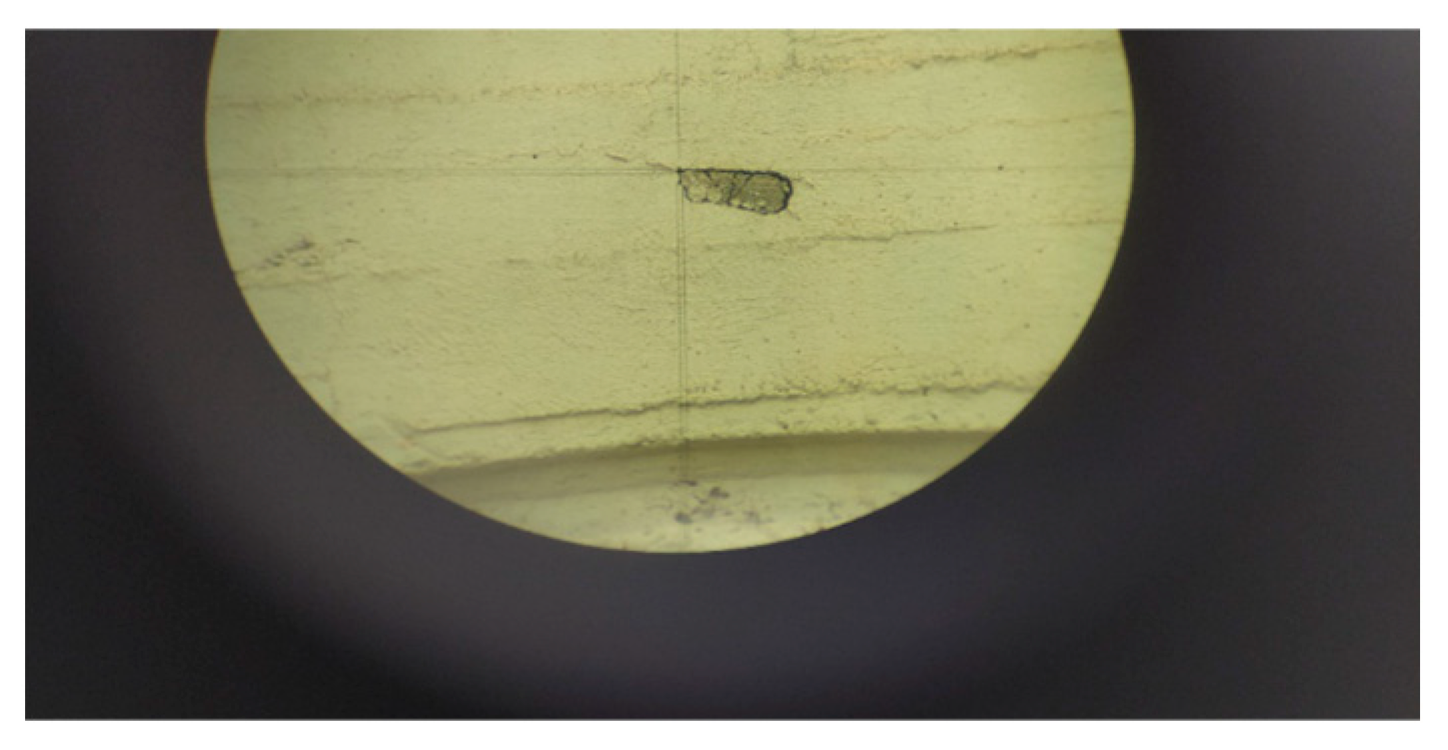

Figure 17 is an additional sample related to the comparison of the two cameras’ image quality, depicting a construction detail on the face of a building.

Figure 17 shows differences between the two photographs, yet the outline and boundaries of the construction detail are evident in both cases.

Some practical issues encountered and dealt with during photography, which may affect the quality of the images, are worth mentioning.

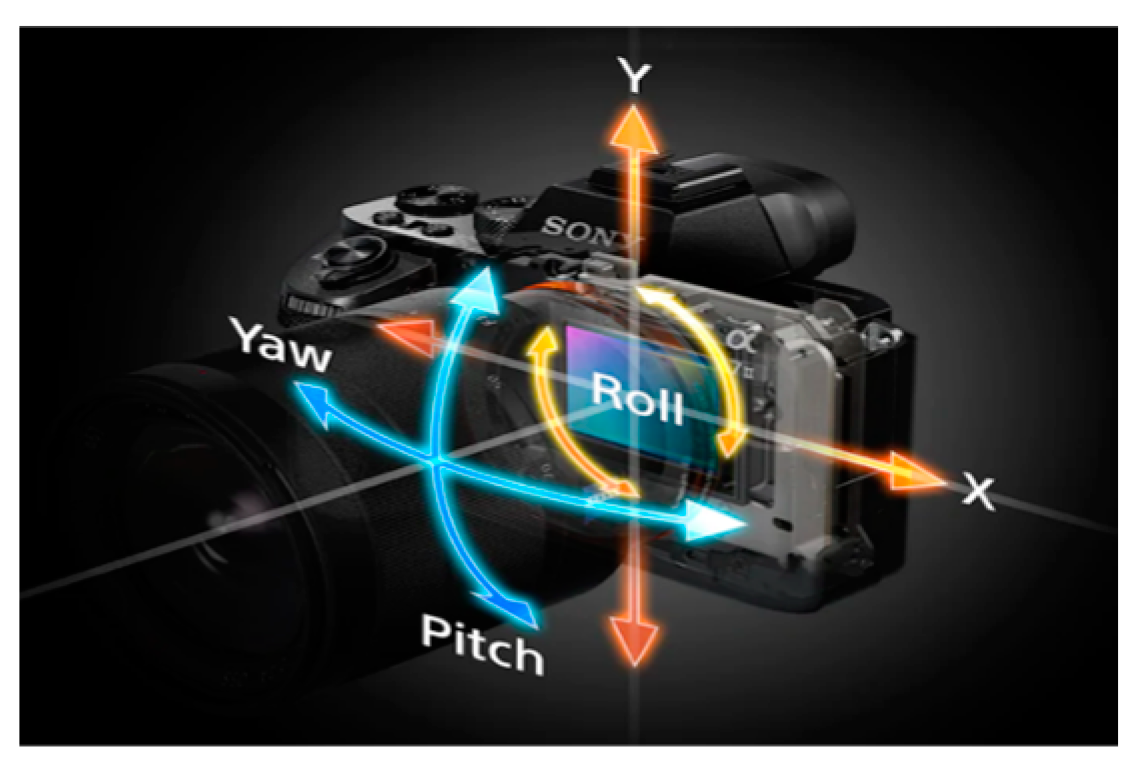

The relative movement of the imaging sensor with respect to the camera body creates issues in photogrammetric procedures, which assume a constant internal orientation geometry across all shots. For this reason, the automatic image stabilization function of the Sony camera was disabled. To determine the effects of this setting, test shots were taken without and with a photo tripod, and the results are shown in Figure 18 and Figure 19, respectively.

With the automatic image stabilization function of the camera disabled, the photographs come out “shaken”, especially in poor lighting, where the shutter speed is slow, see Figure 18. When a photo tripod is used together with the time-delay function of the shutter, the results are very satisfactory, see Figure 19. In the case of the smartphone, the problem of “shaken” photos did not occur.

Another issue noticed is that, when both cameras’ shooting parameters are set to AUTO, the mobile phone camera produces brighter photos than the professional still camera. This would likely be different if the capture parameters were set manually.