Query-Based Object Visual Tracking with Parallel Sequence Generation

Abstract

1. Introduction

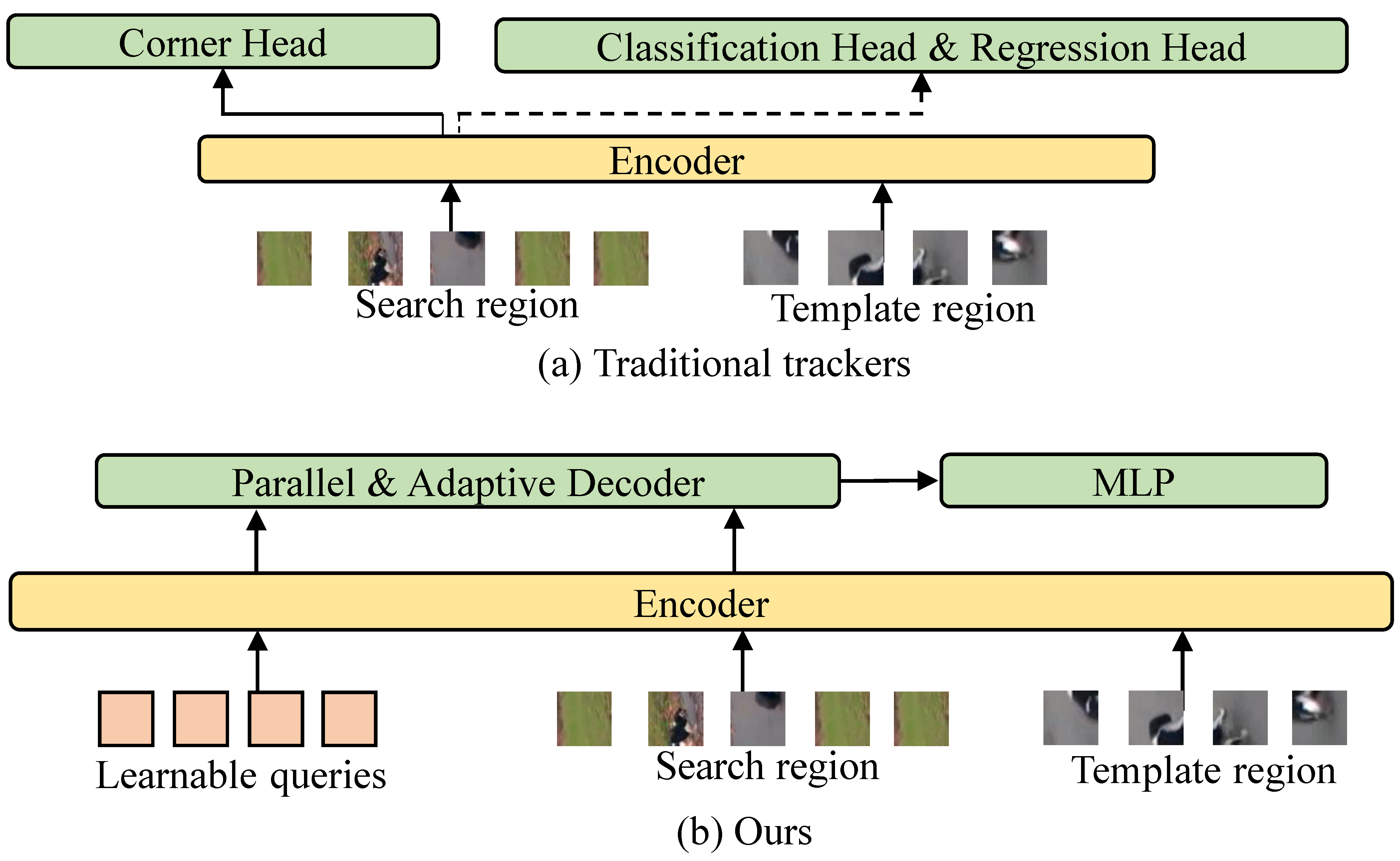

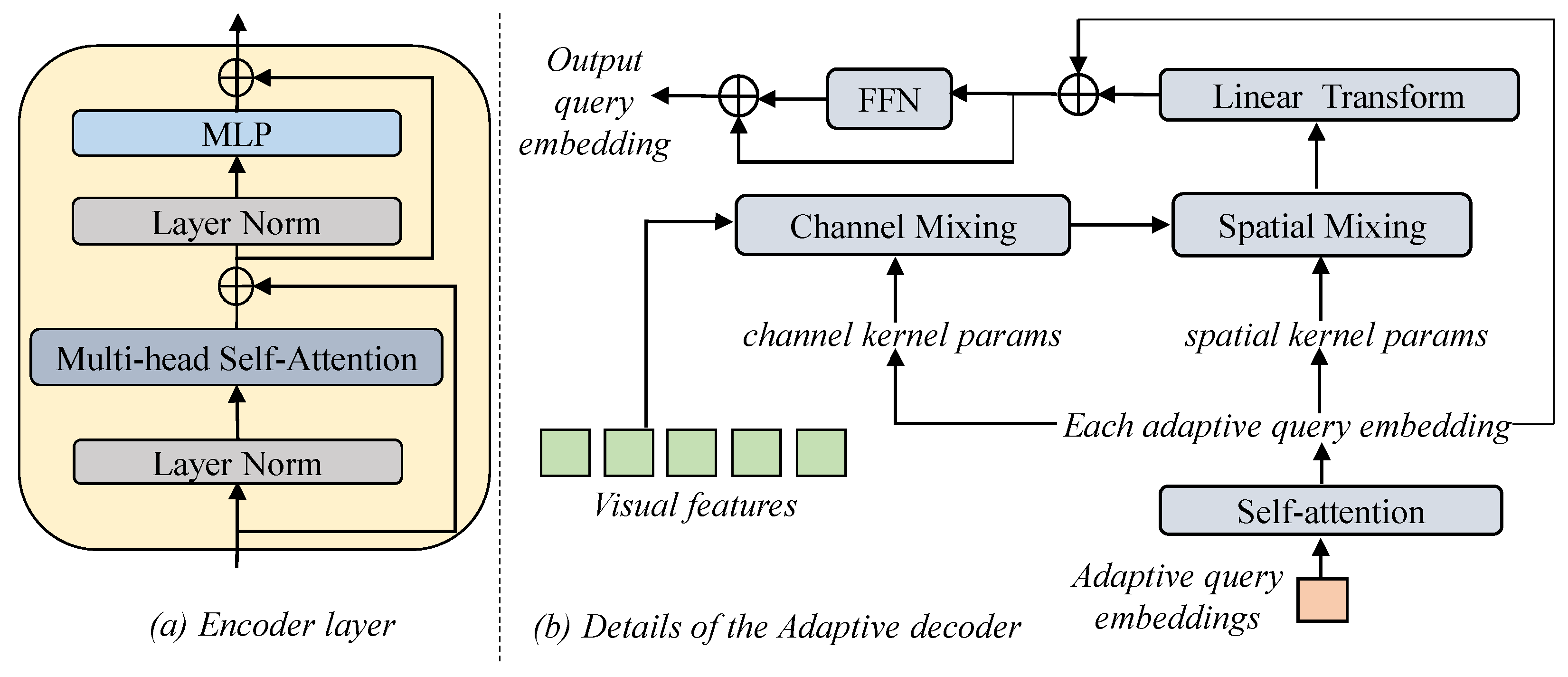

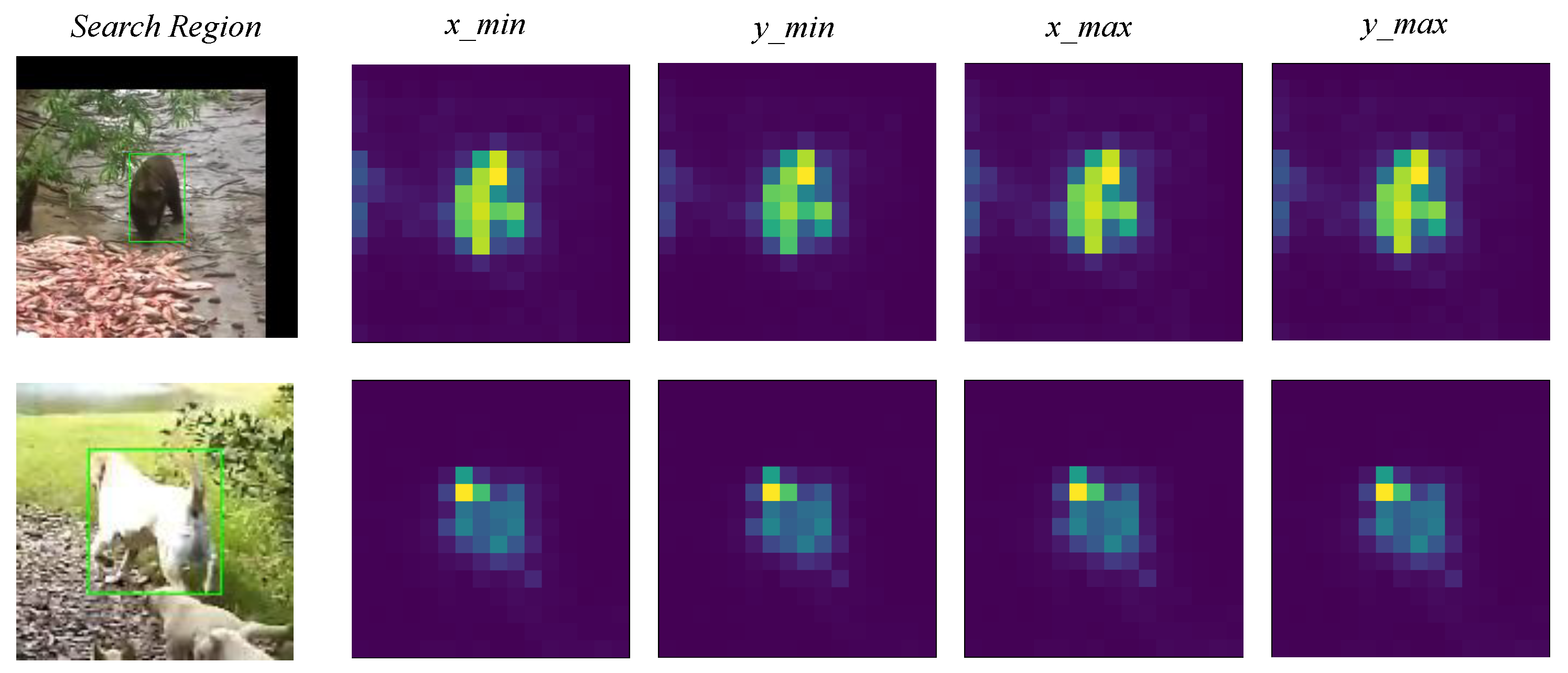

- We propose a concise framework for query-based sequence generation tracking. A set of four queries is designed to represent the target's location, with each query responsible for one of the target's coordinates. A query-based head then generates the target coordinate sequence, decoding all four coordinates in parallel.

- To make decoding adapt to template-specific search-region features in terms of both content and position, we adopt an adaptive decoding scheme consisting of a one-layer adaptive decoder and learnable adaptive inputs to that decoder.

- We explore ViT-Large, ViT-Base [17], and lightweight LeViT [16] backbones to obtain a family of trackers. Experiments on popular benchmarks show that our framework achieves performance comparable to state-of-the-art trackers while striking a good balance between accuracy and speed.
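The adaptive decoding scheme is only summarized above, so the Python sketch below shows just the generic skeleton such a head could build on: four coordinate queries, each formed from a content part and a position part, pass through a single cross-attention layer over the search-region tokens in one parallel step. Everything in it is an illustrative assumption: the function names (`one_layer_decoder`, `cross_attention`), the toy sizes, the random stand-ins for learned parameters and backbone features, and the use of plain DETR-style [11] single-head attention in place of the paper's adaptive decoder, whose mechanism this section does not specify.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[Vector]


def add(a: Vector, b: Vector) -> Vector:
    """Element-wise sum of two equal-length vectors."""
    return [x + y for x, y in zip(a, b)]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product attention; returns (outputs, attention weights)."""
    d = len(queries[0])
    outputs: Matrix = []
    weights: Matrix = []
    for q in queries:
        w = softmax([sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys])
        weights.append(w)
        outputs.append([sum(wj * v[i] for wj, v in zip(w, values)) for i in range(d)])
    return outputs, weights


def one_layer_decoder(query_content: Matrix, query_pos: Matrix,
                      search_feats: Matrix, search_pos: Matrix) -> Tuple[Matrix, Matrix]:
    """Hypothetical one-layer decoder (plain attention, not the paper's adaptive one):
    every coordinate query attends to the search tokens in the same pass."""
    queries = [add(c, p) for c, p in zip(query_content, query_pos)]  # content + position
    keys = [add(f, p) for f, p in zip(search_feats, search_pos)]     # position-aware keys
    attended, weights = cross_attention(queries, keys, search_feats)
    outputs = [add(c, a) for c, a in zip(query_content, attended)]   # residual connection
    return outputs, weights


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    """Small random values standing in for learned parameters or backbone features."""
    return [[rng.gauss(0.0, 0.02) for _ in range(cols)] for _ in range(rows)]


if __name__ == "__main__":
    rng = random.Random(0)
    dim, num_queries, num_tokens = 8, 4, 16  # toy sizes, not the paper's
    query_content = random_matrix(num_queries, dim, rng)  # one query per coordinate
    query_pos = random_matrix(num_queries, dim, rng)
    search_feats = random_matrix(num_tokens, dim, rng)
    search_pos = random_matrix(num_tokens, dim, rng)
    outputs, weights = one_layer_decoder(query_content, query_pos, search_feats, search_pos)
    print(len(outputs), len(outputs[0]))  # 4 8
```

Because no query waits for another query's output, all four coordinate embeddings come out of a single forward pass; a prediction head would then map each embedding to its coordinate.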

2. Related Work

3. Proposed Method

3.1. Overview

3.2. Network Architecture

3.3. Training

4. Experiments

4.1. Implementation Details

4.2. Benchmark Evaluation

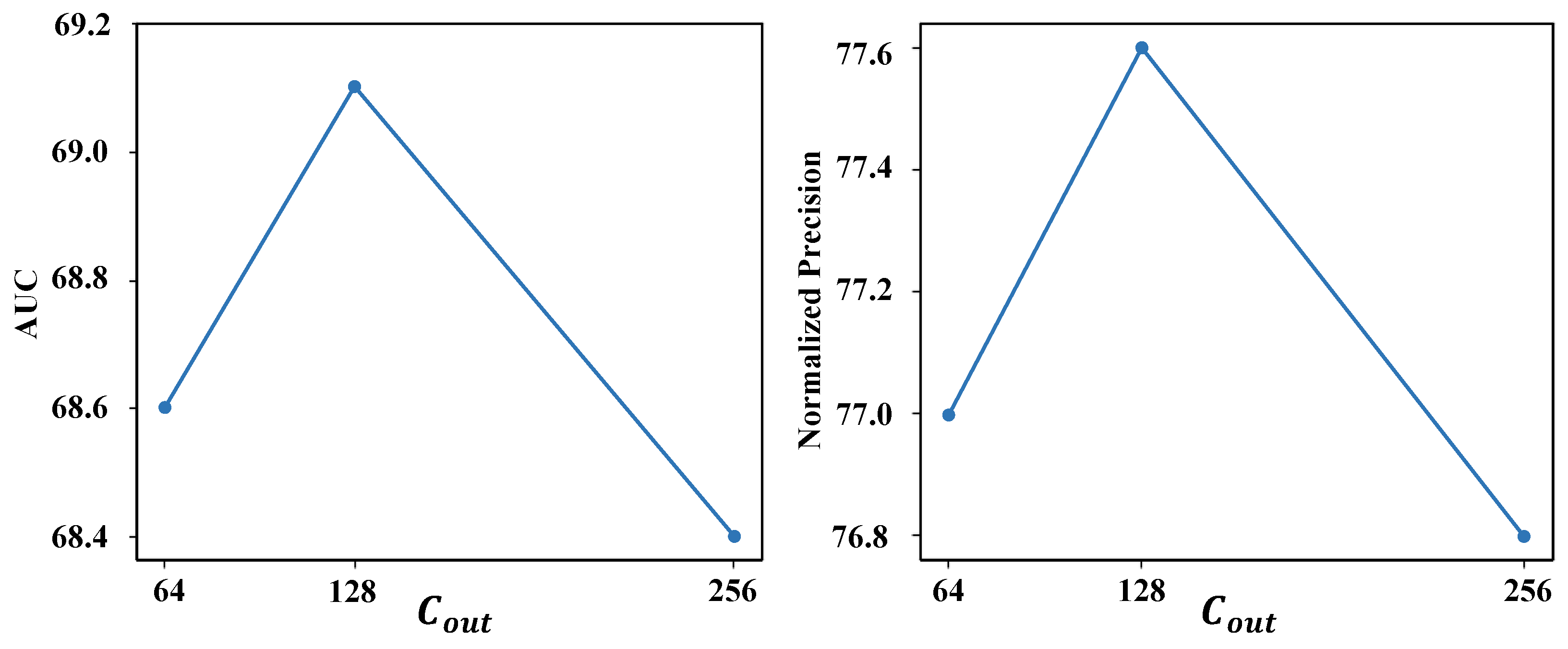

4.3. Ablation Studies

4.4. Lightweight Backbone

4.5. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Wang, N.; Zhou, W.; Wang, J.; Li, H. Transformer Meets Tracker: Exploiting Temporal Context for Robust Visual Tracking. In Proceedings of the CVPR, Nashville, TN, USA, 20–25 June 2021; pp. 1571–1580.

2. Yan, B.; Peng, H.; Fu, J.; Wang, D.; Lu, H. Learning spatio-temporal transformer for visual tracking. In Proceedings of the ICCV, Montreal, BC, Canada, 11–17 October 2021; pp. 10448–10457.

3. Cui, Y.; Jiang, C.; Wang, L.; Wu, G. Target transformed regression for accurate tracking. arXiv 2021, arXiv:2104.00403.

4. Chen, X.; Yan, B.; Zhu, J.; Wang, D.; Yang, X.; Lu, H. Transformer tracking. In Proceedings of the CVPR, Nashville, TN, USA, 20–25 June 2021; pp. 8126–8135.

5. Ye, B.; Chang, H.; Ma, B.; Shan, S.; Chen, X. Joint feature learning and relation modeling for tracking: A one-stream framework. In Proceedings of the ECCV, Tel Aviv, Israel, 23–27 October 2022; pp. 341–357.

6. Cui, Y.; Jiang, C.; Wang, L.; Wu, G. MixFormer: End-to-End Tracking with Iterative Mixed Attention. In Proceedings of the CVPR, New Orleans, LA, USA, 21–24 June 2022; pp. 13608–13618.

7. Lin, L.; Fan, H.; Xu, Y.; Ling, H. SwinTrack: A simple and strong baseline for transformer tracking. arXiv 2021, arXiv:2112.00995.

8. Li, B.; Wu, W.; Wang, Q.; Zhang, F.; Xing, J.; Yan, J. SiamRPN++: Evolution of Siamese visual tracking with very deep networks. In Proceedings of the CVPR, Long Beach, CA, USA, 15–20 June 2019; pp. 4282–4291.

9. Bhat, G.; Danelljan, M.; Gool, L.V.; Timofte, R. Learning discriminative model prediction for tracking. In Proceedings of the ICCV, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6182–6191.

10. Zhang, Z.; Peng, H.; Fu, J.; Li, B.; Hu, W. Ocean: Object-aware anchor-free tracking. In Proceedings of the ECCV, Glasgow, UK, 23–28 August 2020; pp. 771–787.

11. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the ECCV, Glasgow, UK, 23–28 August 2020; pp. 213–229.

12. Tolstikhin, I.O.; Houlsby, N.; Kolesnikov, A.; Beyer, L.; Zhai, X.; Unterthiner, T.; Yung, J.; Steiner, A.; Keysers, D.; Uszkoreit, J.; et al. MLP-Mixer: An all-MLP Architecture for Vision. In Proceedings of the NeurIPS, Online, 6–14 December 2021; pp. 24261–24272.

13. Gao, Z.; Wang, L.; Han, B.; Guo, S. AdaMixer: A Fast-Converging Query-Based Object Detector. In Proceedings of the CVPR, New Orleans, LA, USA, 21–24 June 2022; pp. 5354–5363.

14. Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the CVPR, Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

15. He, K.; Chen, X.; Xie, S.; Li, Y.; Dollár, P.; Girshick, R. Masked autoencoders are scalable vision learners. In Proceedings of the CVPR, New Orleans, LA, USA, 21–24 June 2022; pp. 15979–15988.

16. Graham, B.; El-Nouby, A.; Touvron, H.; Stock, P.; Joulin, A.; Jégou, H.; Douze, M. LeViT: A Vision Transformer in ConvNet's Clothing for Faster Inference. In Proceedings of the ICCV, Montreal, BC, Canada, 11–17 October 2021; pp. 12239–12249.

17. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. In Proceedings of the ICLR, Virtual, 3–7 May 2021.

18. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the CVPR, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

19. Chen, B.; Li, P.; Bai, L.; Qiao, L.; Shen, Q.; Li, B.; Gan, W.; Wu, W.; Ouyang, W. Backbone is All Your Need: A Simplified Architecture for Visual Object Tracking. In Proceedings of the ECCV, Tel Aviv, Israel, 23–27 October 2022; pp. 375–392.

20. Law, H.; Deng, J. CornerNet: Detecting objects as paired keypoints. In Proceedings of the ECCV, Munich, Germany, 8–14 September 2018; pp. 734–750.

21. Zhang, H.; Wang, Y.; Dayoub, F.; Sunderhauf, N. VarifocalNet: An IoU-aware dense object detector. In Proceedings of the CVPR, Nashville, TN, USA, 20–25 June 2021; pp. 8514–8523.

22. Chen, T.; Saxena, S.; Li, L.; Fleet, D.J.; Hinton, G.E. Pix2seq: A Language Modeling Framework for Object Detection. In Proceedings of the ICLR, Virtual, 25–29 April 2022.

23. Liu, S.; Li, F.; Zhang, H.; Yang, X.; Qi, X.; Su, H.; Zhu, J.; Zhang, L. DAB-DETR: Dynamic anchor boxes are better queries for DETR. arXiv 2022, arXiv:2201.12329.

24. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the ECCV, Zurich, Switzerland, 8–14 September 2014; pp. 740–755.

25. Fan, H.; Lin, L.; Yang, F.; Chu, P.; Deng, G.; Yu, S.; Bai, H.; Xu, Y.; Liao, C.; Ling, H. LaSOT: A high-quality benchmark for large-scale single object tracking. In Proceedings of the CVPR, Long Beach, CA, USA, 15–20 June 2019; pp. 5374–5383.

26. Huang, L.; Zhao, X.; Huang, K. GOT-10k: A Large High-Diversity Benchmark for Generic Object Tracking in the Wild. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 1562–1577.

27. Müller, M.; Bibi, A.; Giancola, S.; Alsubaihi, S.; Ghanem, B. TrackingNet: A large-scale dataset and benchmark for object tracking in the wild. In Proceedings of the ECCV, Munich, Germany, 8–14 September 2018; pp. 300–317.

28. Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101.

29. Fan, H.; Bai, H.; Lin, L.; Yang, F.; Ling, H. LaSOT: A High-quality Large-scale Single Object Tracking Benchmark. Int. J. Comput. Vis. 2021, 129, 439–461.

30. Gao, S.; Zhou, C.; Ma, C.; Wang, X.; Yuan, J. AiATrack: Attention in attention for transformer visual tracking. In Proceedings of the ECCV, Tel Aviv, Israel, 23–27 October 2022; pp. 146–164.

31. Mayer, C.; Danelljan, M.; Bhat, G.; Paul, M.; Paudel, D.P.; Yu, F.; Van Gool, L. Transforming model prediction for tracking. In Proceedings of the CVPR, New Orleans, LA, USA, 21–24 June 2022; pp. 8731–8740.

32. Zhou, Z.; Chen, J.; Pei, W.; Mao, K.; Wang, H.; He, Z. Global tracking via ensemble of local trackers. In Proceedings of the CVPR, New Orleans, LA, USA, 21–24 June 2022; pp. 8761–8770.

33. Mayer, C.; Danelljan, M.; Paudel, D.P.; Van Gool, L. Learning target candidate association to keep track of what not to track. In Proceedings of the ICCV, Montreal, BC, Canada, 11–17 October 2021; pp. 13444–13454.

34. Voigtlaender, P.; Luiten, J.; Torr, P.H.; Leibe, B. Siam R-CNN: Visual Tracking by Re-Detection. In Proceedings of the CVPR, Seattle, WA, USA, 13–19 June 2020; pp. 6578–6588.

35. Ma, F.; Shou, M.Z.; Zhu, L.; Fan, H.; Xu, Y.; Yang, Y.; Yan, Z. Unified transformer tracker for object tracking. In Proceedings of the CVPR, New Orleans, LA, USA, 21–24 June 2022; pp. 8781–8790.

36. Zhang, Z.; Zhong, B.; Zhang, S.; Tang, Z.; Liu, X.; Zhang, Z. Distractor-aware fast tracking via dynamic convolutions and MOT philosophy. In Proceedings of the CVPR, Nashville, TN, USA, 20–25 June 2021; pp. 1024–1033.

37. Dai, K.; Zhang, Y.; Wang, D.; Li, J.; Lu, H.; Yang, X. High-Performance Long-Term Tracking With Meta-Updater. In Proceedings of the CVPR, Seattle, WA, USA, 13–19 June 2020; pp. 6298–6307.

38. Huang, L.; Zhao, X.; Huang, K. GlobalTrack: A Simple and Strong Baseline for Long-term Tracking. In Proceedings of the AAAI, New York, NY, USA, 7–12 February 2020; pp. 11037–11044.

39. Yan, B.; Zhao, H.; Wang, D.; Lu, H.; Yang, X. 'Skimming-Perusal' Tracking: A Framework for Real-Time and Robust Long-Term Tracking. In Proceedings of the ICCV, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 2385–2393.

40. Danelljan, M.; Bhat, G.; Shahbaz Khan, F.; Felsberg, M. ECO: Efficient convolution operators for tracking. In Proceedings of the CVPR, Honolulu, HI, USA, 21–26 July 2017; pp. 6638–6646.

41. Nam, H.; Han, B. Learning multi-domain convolutional neural networks for visual tracking. In Proceedings of the CVPR, Las Vegas, NV, USA, 27–30 June 2016; pp. 4293–4302.

42. Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H.S. Fully-Convolutional Siamese Networks for Object Tracking. In Proceedings of the ECCV, Amsterdam, The Netherlands, 11–14 October 2016; pp. 850–865.

43. Mueller, M.; Smith, N.; Ghanem, B. A benchmark and simulator for UAV tracking. In Proceedings of the ECCV, Amsterdam, The Netherlands, 11–14 October 2016; pp. 445–461.

44. Galoogahi, H.K.; Fagg, A.; Huang, C.; Ramanan, D.; Lucey, S. Need for Speed: A Benchmark for Higher Frame Rate Object Tracking. In Proceedings of the ICCV, Venice, Italy, 22–29 October 2017; pp. 1134–1143.

45. Chen, X.; Wang, D.; Li, D.; Lu, H. Efficient Visual Tracking via Hierarchical Cross-Attention Transformer. arXiv 2022, arXiv:2203.13537.

46. Blatter, P.; Kanakis, M.; Danelljan, M.; Gool, L.V. Efficient Visual Tracking with Exemplar Transformers. In Proceedings of the WACV, Waikoloa, HI, USA, 2–7 January 2023; pp. 1571–1581.

47. Yan, B.; Peng, H.; Wu, K.; Wang, D.; Fu, J.; Lu, H. LightTrack: Finding Lightweight Neural Networks for Object Tracking via One-Shot Architecture Search. In Proceedings of the CVPR, Nashville, TN, USA, 20–25 June 2021; pp. 15180–15189.

48. Borsuk, V.; Vei, R.; Kupyn, O.; Martyniuk, T.; Krashenyi, I.; Matas, J. FEAR: Fast, Efficient, Accurate and Robust Visual Tracker. In Proceedings of the ECCV, Tel Aviv, Israel, 23–27 October 2022; pp. 644–663.

| Tracker | LaSOT AUC | LaSOT P_Norm | LaSOT P | LaSOT_ext AUC | LaSOT_ext P_Norm | LaSOT_ext P | TrackingNet AUC | TrackingNet P_Norm | TrackingNet P | GOT-10k AO | GOT-10k SR0.5 | GOT-10k SR0.75 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| QPSTrack-L256 | 70.9 | 80.0 | 76.9 | 49.1 | 58.4 | 54.5 | 83.5 | 87.4 | 82.4 | 71.2 | 79.5 | 68.9 |
| SimTrack-L [19] | 70.5 | 79.7 | - | - | - | - | 83.4 | 87.4 | - | 69.8 | 78.8 | 66.0 |
| QPSTrack-B256 | 69.1 | 77.6 | 73.9 | 47.0 | 55.5 | 51.4 | 81.6 | 85.4 | 79.3 | 68.0 | 76.0 | 63.7 |
| SwinTrack-B [7] | 69.6 | 78.6 | 74.1 | 47.6 | 58.2 | 54.1 | 82.5 | 87.0 | 80.4 | 69.4 | 78.0 | 64.3 |
| SimTrack-B [19] | 69.3 | 78.5 | - | - | - | - | 82.3 | 86.5 | - | 68.6 | 78.9 | 62.4 |
| MixFormer-22k [6] | 69.2 | 78.7 | 74.7 | - | - | - | 83.1 | 88.1 | 81.6 | 70.7 | 80.0 | 67.8 |
| OSTrack-256 [5] | 69.1 | 78.7 | 75.2 | 47.4 | 57.3 | 53.3 | 83.1 | 87.8 | 82.0 | 71.0 | 80.4 | 68.2 |
| AiATrack [30] | 69.0 | 79.4 | 73.8 | 47.7 | 55.6 | 55.4 | 82.7 | 87.8 | 80.4 | 69.6 | 80.0 | 63.2 |
| ToMP-101 [31] | 68.5 | - | - | 45.9 | - | - | 81.5 | 86.4 | 78.9 | - | - | - |
| GTELT [32] | 67.7 | - | 73.2 | 45.0 | 54.2 | 52.4 | 82.5 | 86.7 | 81.6 | - | - | - |
| KeepTrack [33] | 67.1 | 77.2 | 70.2 | 48.2 | 58.1 | 56.4 | - | - | - | - | - | - |
| STARK-101 [2] | 67.1 | 77.0 | - | - | - | - | 82.0 | 86.9 | - | 68.8 | 78.1 | 64.1 |
| TransT [4] | 64.9 | 73.8 | 69.0 | - | - | - | 81.4 | 86.7 | 80.3 | 67.1 | 76.8 | 60.9 |
| SiamR-CNN [34] | 64.8 | 72.2 | - | - | - | - | 81.2 | 85.4 | 80.0 | 64.9 | 72.8 | 59.7 |
| UTT [35] | 64.6 | - | 67.2 | - | - | - | 79.7 | - | 77.0 | 67.2 | 76.3 | 60.5 |
| TrDiMP [1] | 63.9 | - | 61.4 | - | - | - | 78.4 | 83.3 | 73.1 | 67.1 | 77.7 | 58.3 |
| DMTrack [36] | 58.4 | - | 59.7 | - | - | - | - | - | - | - | - | - |
| LTMU [37] | 57.2 | - | 57.2 | 41.4 | 49.9 | 47.3 | - | - | - | - | - | - |
| GlobalTrack [38] | 51.7 | - | 52.8 | 35.6 | 43.6 | 41.1 | 70.4 | 75.4 | 65.6 | - | - | - |
| SiamRPN++ [8] | 49.6 | 56.9 | 49.1 | 34.0 | 41.6 | 39.6 | 73.3 | 80.0 | 69.4 | 51.7 | 61.6 | 32.5 |
| SPLT [39] | 42.6 | - | 39.6 | 27.2 | 33.9 | 29.7 | - | - | - | - | - | - |
| ECO [40] | 32.4 | 33.8 | 30.1 | 22.0 | 25.2 | 24.0 | 55.4 | 61.8 | 49.2 | 31.6 | 30.9 | 11.1 |
| MDNet [41] | 39.7 | 46.0 | 37.3 | 27.9 | 34.9 | 31.8 | 60.6 | 70.5 | 56.5 | 29.9 | 30.3 | 9.9 |
| SiamFC [42] | 33.6 | 42.0 | 33.9 | 23.0 | 31.1 | 26.9 | 57.1 | 66.3 | 53.3 | 34.8 | 35.3 | 9.8 |
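The accuracy columns above follow the usual one-pass evaluation protocol. As a rough, non-authoritative sketch (pure Python, hypothetical helper names), the success AUC reported for LaSOT, LaSOT_ext and TrackingNet is the area under the success curve, i.e. the mean over overlap thresholds of the fraction of frames whose IoU exceeds the threshold; GOT-10k's AO is the mean IoU, and SR0.5/SR0.75 are success rates at fixed thresholds; P is the fraction of frames whose center error is within 20 pixels. The 21 evenly spaced thresholds and the (x, y, w, h) box format are assumptions, the official toolkits differ in such details, and normalized precision (P_Norm) is left out.

```python
import math
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # assumed (x, y, w, h): top-left corner plus size


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def center_error(a: Box, b: Box) -> float:
    """Euclidean distance in pixels between the two box centers."""
    return math.hypot((a[0] + a[2] / 2) - (b[0] + b[2] / 2),
                      (a[1] + a[3] / 2) - (b[1] + b[3] / 2))


def success_rate(ious: Sequence[float], threshold: float) -> float:
    """Fraction of frames whose IoU exceeds the threshold (GOT-10k SR_t)."""
    return sum(1 for v in ious if v > threshold) / len(ious)


def success_auc(ious: Sequence[float], num_thresholds: int = 21) -> float:
    """Area under the success curve, approximated as the mean success rate
    over evenly spaced thresholds in [0, 1]."""
    thresholds = [i / (num_thresholds - 1) for i in range(num_thresholds)]
    return sum(success_rate(ious, t) for t in thresholds) / num_thresholds


def average_overlap(ious: Sequence[float]) -> float:
    """GOT-10k AO: mean IoU over all frames."""
    return sum(ious) / len(ious)


def precision(errors: Sequence[float], pixel_threshold: float = 20.0) -> float:
    """Fraction of frames whose center error is within the pixel threshold."""
    return sum(1 for e in errors if e <= pixel_threshold) / len(errors)


if __name__ == "__main__":
    gt, pred = (0, 0, 10, 10), (5, 5, 10, 10)
    print(round(iou(gt, pred), 4))           # 0.1429
    print(round(center_error(gt, pred), 2))  # 7.07
    ious = [0.92, 0.61, 0.33, 0.0]           # toy per-frame overlaps
    print(round(success_auc(ious), 3), round(average_overlap(ious), 3))  # 0.464 0.465
    print(success_rate(ious, 0.5), success_rate(ious, 0.75))            # 0.5 0.25
```

On this toy input the success AUC and the AO nearly coincide; with evenly spaced thresholds, the area under the success curve approximates the average overlap.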

| Tracker | UAV123 AUC | UAV123 P | NFS AUC | NFS P |
|---|---|---|---|---|
| QPSTrack-L256 | 68.7 | 89.8 | 64.6 | 80.1 |
| QPSTrack-B256 | 67.5 | 87.8 | 63.1 | 76.8 |
| MixFormer-22k [6] | 70.4 | 91.8 | - | - |
| SimTrack-B [19] | 69.8 | 89.6 | - | - |
| KeepTrack [33] | 69.7 | - | 66.4 | - |
| OSTrack-256 [5] | 68.3 | - | 64.7 | - |
| STARK-101 [2] | 68.2 | - | 66.2 | - |
| TransT [4] | 68.1 | 87.6 | 65.3 | 78.8 |
| TrDiMP [1] | 66.4 | 86.9 | 66.2 | 79.1 |
| SiamR-CNN [34] | 64.9 | 83.4 | 63.9 | - |
| SiamRPN++ [8] | 59.3 | 78.2 | 57.1 | 69.3 |

| Tracker | MACs (G) | Params (M) | GPU Speed (FPS) | CPU Speed (FPS) |
|---|---|---|---|---|
| QPSTrack-L256 | 98.4 | 337.8 | 31.6 | - |
| QPSTrack-B256 | 27.9 | 120.3 | 104.8 | - |
| QPSTrack-Light | 6.64 | 83.8 | 140.6 | 24.6 |
| SwinTrack-B [7] | - | 91 | - | - |
| SimTrack-B [19] | 22.3 | 88.1 | - | - |
| OSTrack-256 [5] | 21.5 | 92.1 | - | - |
| STARK [2] | 12.8 | 28.2 | - | - |
| TransT [4] | 19.2 | 28.4 | - | - |
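For a plain ViT backbone that encodes template and search tokens jointly, most multiply-accumulate operations (MACs) come from the encoder's matrix products, so the MACs column can be sanity-checked with a back-of-envelope formula: per layer, about 12·N·d² for the Q/K/V, output and MLP projections (MLP ratio 4) plus 2·N²·d for the attention scores and the weighted sum, where N is the token count and d the width. The sketch below assumes 16×16 patches, a 256×256 search region, a 128×128 template, and the standard ViT-Base (d = 768, 12 layers) and ViT-Large (d = 1024, 24 layers) configurations [17]. The input sizes are assumptions not stated in this section, and the count ignores patch embedding, normalization, softmax and the prediction head, so it is not expected to reproduce the table exactly.

```python
def num_tokens(search_size: int, template_size: int, patch: int = 16) -> int:
    """Tokens for a jointly encoded template + search region (square inputs assumed)."""
    return (search_size // patch) ** 2 + (template_size // patch) ** 2


def vit_encoder_macs(tokens: int, dim: int, depth: int, mlp_ratio: int = 4) -> int:
    """Rough MAC count of the transformer encoder's matrix products only."""
    qkv_and_proj = 4 * tokens * dim * dim     # Q, K, V and output projections
    attention = 2 * tokens * tokens * dim     # Q.K^T scores and weights.V
    mlp = 2 * mlp_ratio * tokens * dim * dim  # the two linear layers of the MLP
    return depth * (qkv_and_proj + attention + mlp)


if __name__ == "__main__":
    n = num_tokens(search_size=256, template_size=128)  # 256 + 64 = 320 (assumed sizes)
    for name, dim, depth in [("ViT-Base", 768, 12), ("ViT-Large", 1024, 24)]:
        print(f"{name}: {vit_encoder_macs(n, dim, depth) / 1e9:.2f} GMACs")
    # ViT-Base: 29.07 GMACs
    # ViT-Large: 101.67 GMACs
```

At these sizes the N·d² terms dominate the N²·d attention term, so backbone width and depth matter more than the token count; the quadratic term grows faster as the input resolution increases.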

| Adaptive Inputs | Adaptive Decoder | LaSOT AUC | LaSOT P_Norm | LaSOT P |
|---|---|---|---|---|

| ✓ | 67.4 | 75.9 | 71.4 | |

| ✓ | 68.5 | 77.0 | 73.0 | |

| ✓ | ✓ | 69.1 | 77.6 | 73.9 |

| Setting | Option | LaSOT AUC | LaSOT Normalized Precision | LaSOT Precision |
|---|---|---|---|---|

| Loss Function | cross-entropy loss | 69.1 | 77.6 | 73.9 |

| task-specific loss | ||||

| 69.1 | 77.6 | 73.9 | ||

| Token Format | ||||

| Decoder | Adaptive decoder | 69.1 | 77.6 | 73.9 |

| Plain decoder | ||||
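The ablation rows above compare loss functions (cross-entropy versus a task-specific loss) and token formats for the four coordinate queries. The sketch below shows how per-coordinate tokens could be produced, decoded in parallel and scored with cross-entropy, assuming Pix2seq-style [22] uniform quantization of normalized coordinates into bins. The bin count (`NUM_BINS`), the (x1, y1, x2, y2) order, argmax decoding and all function names are illustrative assumptions, not the paper's settings.

```python
import math
from typing import List, Sequence

NUM_BINS = 400                          # hypothetical quantization resolution
COORD_ORDER = ("x1", "y1", "x2", "y2")  # assumed: one query per coordinate


def quantize(box: Sequence[float], num_bins: int = NUM_BINS) -> List[int]:
    """Map a normalized box (x1, y1, x2, y2) in [0, 1] to one integer token per coordinate."""
    return [min(num_bins - 1, max(0, int(v * num_bins))) for v in box]


def dequantize(tokens: Sequence[int], num_bins: int = NUM_BINS) -> List[float]:
    """Map tokens back to bin-center coordinates in [0, 1]."""
    return [(t + 0.5) / num_bins for t in tokens]


def decode_parallel(per_query_logits: Sequence[Sequence[float]]) -> List[int]:
    """Pick the most likely bin for each query independently; no coordinate
    conditions on another, so all four come out in one step."""
    return [max(range(len(logits)), key=logits.__getitem__) for logits in per_query_logits]


def cross_entropy(per_query_logits: Sequence[Sequence[float]],
                  target_tokens: Sequence[int]) -> float:
    """Mean cross-entropy over the coordinate queries (log-sum-exp for stability)."""
    total = 0.0
    for logits, target in zip(per_query_logits, target_tokens):
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
        total += log_sum_exp - logits[target]
    return total / len(target_tokens)


if __name__ == "__main__":
    tokens = quantize([0.25, 0.40, 0.75, 0.90])
    print(tokens)  # [100, 160, 300, 360]
    logits = [[0.0] * NUM_BINS for _ in COORD_ORDER]
    for q, t in enumerate(tokens):
        logits[q][t] = 5.0  # toy logits peaked at the target bin
    print(decode_parallel(logits) == tokens)  # True
    print(cross_entropy(logits, tokens))
```

Treating each coordinate as a classification over bins is what makes a cross-entropy loss applicable; a regression-style loss would instead act on the dequantized coordinates.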

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, C.; Zhang, B.; Bo, C.; Wang, D. Query-Based Object Visual Tracking with Parallel Sequence Generation. Sensors 2024, 24, 4802. https://doi.org/10.3390/s24154802
