Visual Navigation Algorithms for Aircraft Fusing Neural Networks in Denial Environments

Abstract

1. Introduction

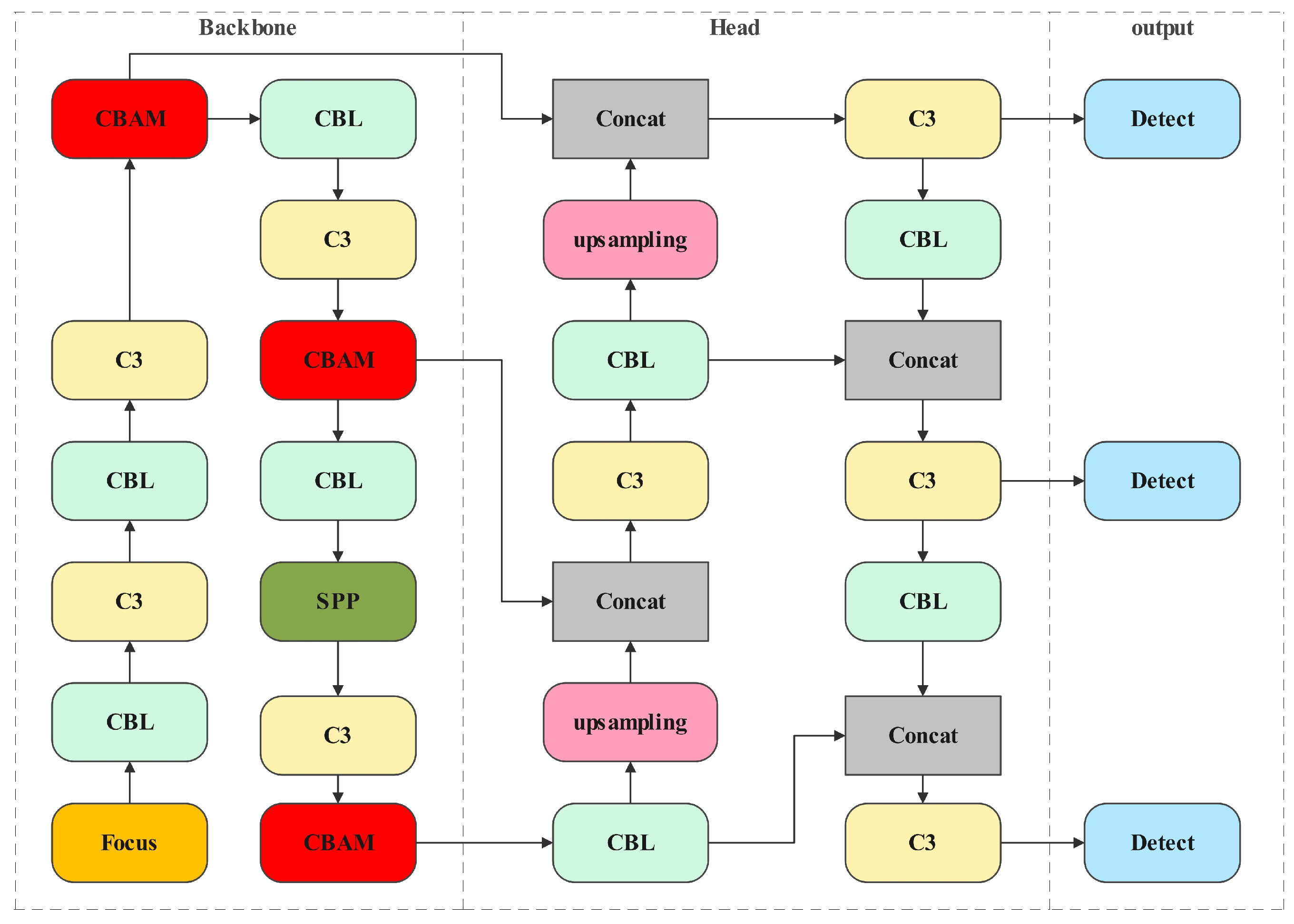

- A small target detection algorithm is proposed for images taken from the viewpoint of unmanned aerial vehicles (UAVs). Given the UAV application scenario and its real-time constraints, we select YOLOv5 [26], which balances real-time performance and accuracy, and add the Convolutional Block Attention Module (CBAM) [27] to improve its detection accuracy on small targets with only a marginal increase in computational cost. The choice of algorithm and the effectiveness of the improvement are then verified by comparison with YOLOv8 [28] and the baseline YOLOv5.

- The CBAM-YOLOv5 target detection algorithm is combined with semi-direct visual odometry to remove dynamic objects from image frames and reduce their impact on navigation accuracy. Furthermore, an improved feature point extraction strategy based on the quadtree algorithm is introduced to make the extraction of background feature points more effective and robust, and to reduce the impact of deleting dynamic regions on feature point extraction.

- The proposed method was validated on the TUM dataset and in actual flight experiments, which demonstrated its effectiveness, usability, and robustness.

2. Methods

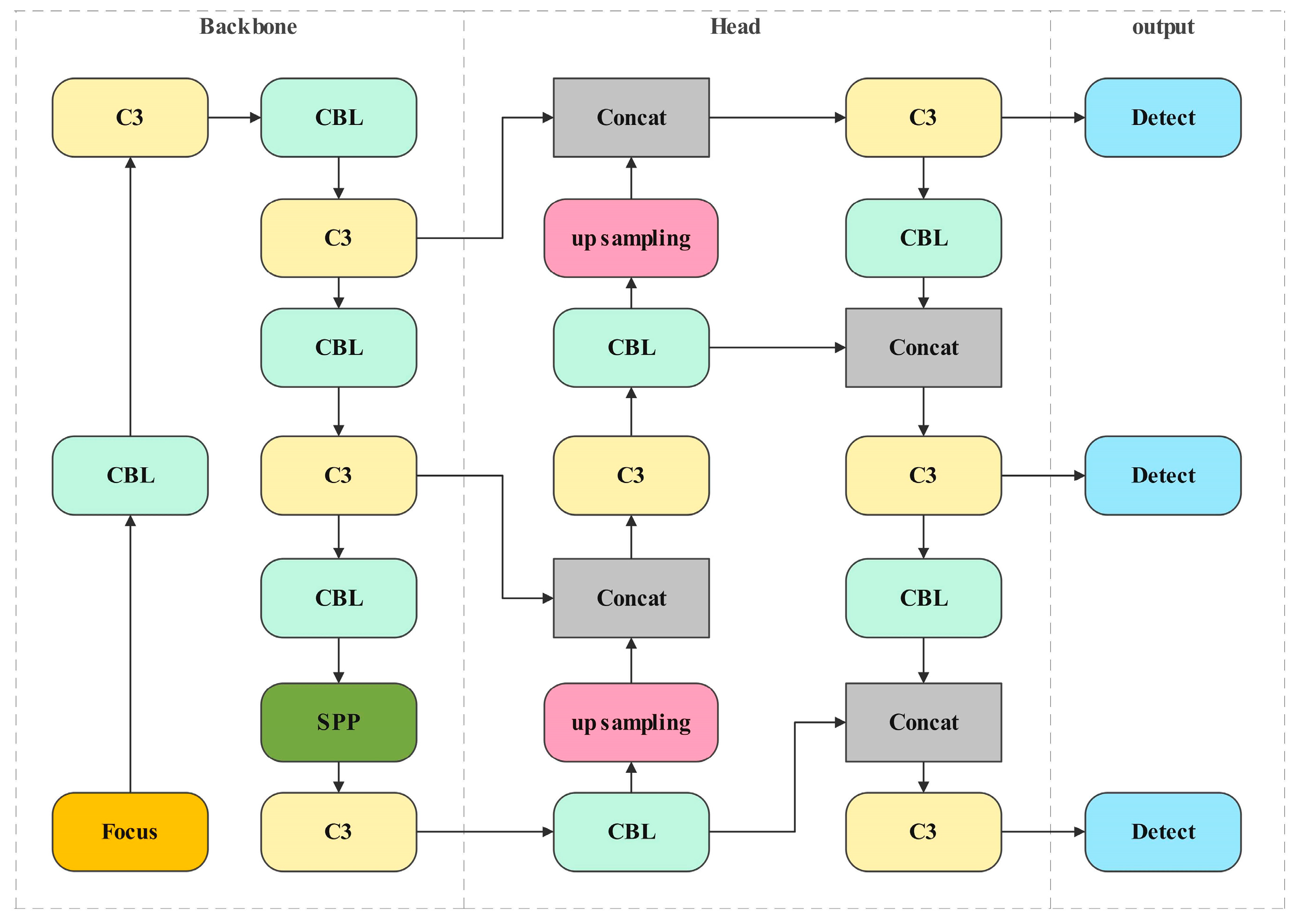

2.1. CBAM-YOLOv5

2.1.1. YOLOv5

2.1.2. CBAM-YOLOv5 for Small Target Detection on UAV
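CBAM [27] refines a feature map with two sequential attention steps: channel attention, which weights each channel using a shared MLP applied to the channel's global average-pooled and max-pooled values, and spatial attention, which weights each location using a k×k convolution over the channel-wise average and max maps. The pure-Python sketch below only illustrates that data flow under simplifying assumptions (no deep learning framework, no batch dimension, MLP biases omitted, random untrained weights, a reduction ratio of 2 chosen for the toy channel count). Where CBAM is inserted into the YOLOv5 network and how it is trained are not shown.

```python
import math
import random

def sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def shared_mlp(v, w0, w1):
    # C -> C/r with ReLU -> C; biases omitted for brevity.
    hidden = [max(0.0, sum(w * x for w, x in zip(row, v))) for row in w0]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w1]

def channel_attention(feat, w0, w1):
    # feat: C channels, each an H x W nested list.
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feat]
    mx = [max(map(max, ch)) for ch in feat]
    scores = [a + m for a, m in zip(shared_mlp(avg, w0, w1), shared_mlp(mx, w0, w1))]
    weights = [sigmoid(s) for s in scores]
    return [[[v * wc for v in row] for row in ch] for ch, wc in zip(feat, weights)]

def spatial_attention(feat, kernel):
    # kernel: 2 x k x k weights applied to the [average; max] maps across channels.
    c, h, w = len(feat), len(feat[0]), len(feat[0][0])
    avg = [[sum(feat[i][y][x] for i in range(c)) / c for x in range(w)] for y in range(h)]
    mx = [[max(feat[i][y][x] for i in range(c)) for x in range(w)] for y in range(h)]
    k = len(kernel[0])
    pad = k // 2
    att = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            s = 0.0
            for d, plane in enumerate((avg, mx)):
                for dy in range(k):
                    for dx in range(k):
                        yy, xx = y + dy - pad, x + dx - pad
                        if 0 <= yy < h and 0 <= xx < w:  # zero padding at borders
                            s += kernel[d][dy][dx] * plane[yy][xx]
            att[y][x] = sigmoid(s)
    return [[[ch[y][x] * att[y][x] for x in range(w)] for y in range(h)] for ch in feat]

def cbam(feat, w0, w1, kernel):
    # Channel attention first, then spatial attention, as in CBAM.
    return spatial_attention(channel_attention(feat, w0, w1), kernel)

# Toy example with random weights (not trained values).
random.seed(0)
C, R, H, W, K = 4, 2, 5, 5, 7
feat = [[[random.uniform(-1, 1) for _ in range(W)] for _ in range(H)] for _ in range(C)]
w0 = [[random.gauss(0, 0.5) for _ in range(C)] for _ in range(C // R)]
w1 = [[random.gauss(0, 0.5) for _ in range(C // R)] for _ in range(C)]
kernel = [[[random.gauss(0, 0.1) for _ in range(K)] for _ in range(K)] for _ in range(2)]
out = cbam(feat, w0, w1, kernel)
```

Because both attention maps pass through a sigmoid, every output value is the input value scaled by a factor in (0, 1); the module re-weights features rather than creating new ones, which is why its extra cost is small.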

2.1.3. Comparison with State-of-the-Art Methods

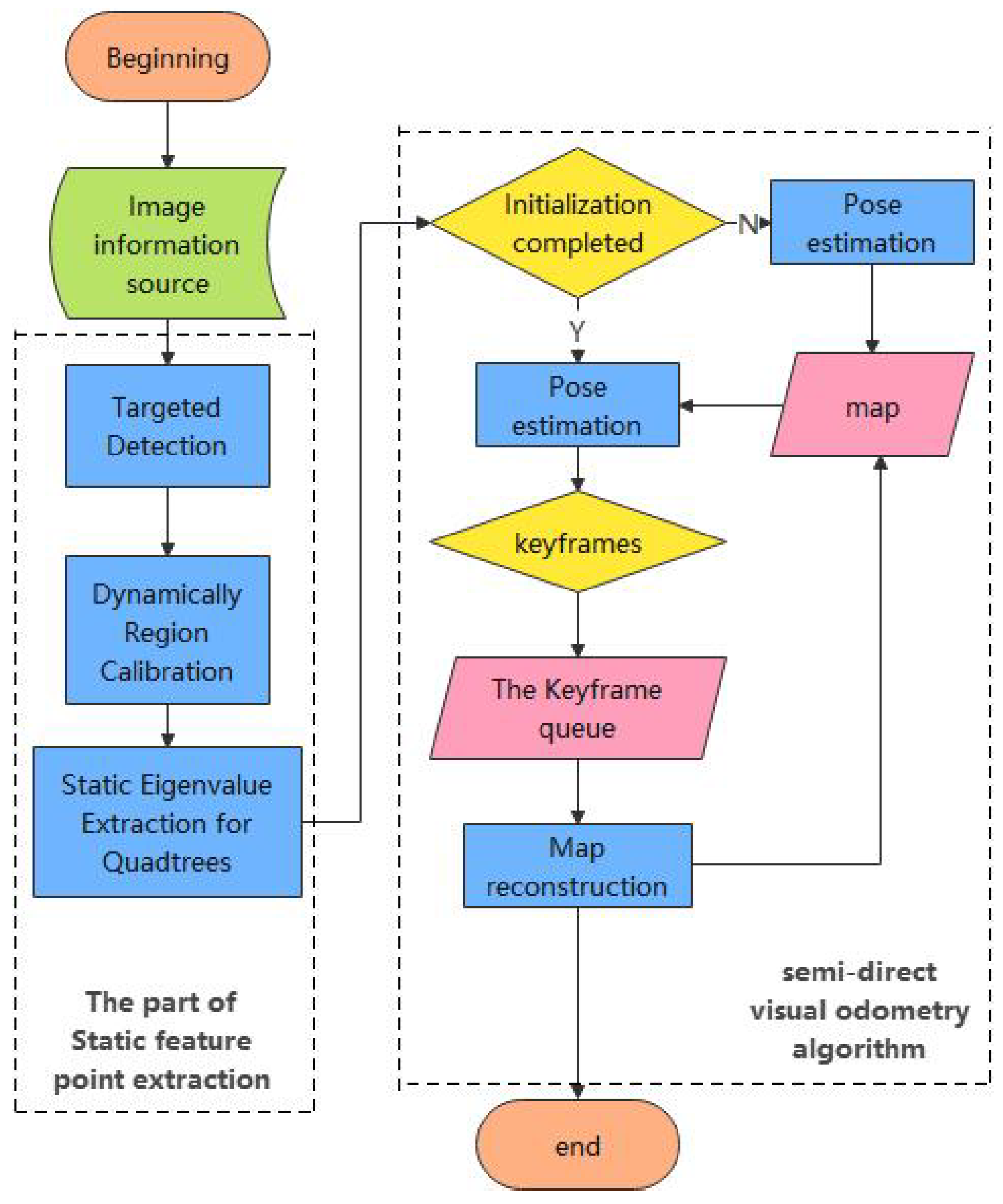

2.2. Semi-Direct Visual Odometry Fusion with YOLOv5

2.2.1. Algorithm Implementation

Basic Principles of Semi-Direct Visual Odometry
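For reference, the original SVO of Forster et al. first estimates the motion between consecutive frames by sparse model-based image alignment: the photometric error between patches around already-triangulated features in frame $k-1$ and their reprojections into frame $k$ is minimized over the relative pose. Writing $I_k$ for the intensity image at step $k$, $\pi$ for the camera projection, $\mathbf{u}_i$ for feature pixels with known depth $d_{\mathbf{u}_i}$, and $\bar{\mathcal{R}}$ for the set of those features that remain visible in frame $k$, the objective is

$$
\mathbf{T}_{k,k-1} = \arg\min_{\mathbf{T}} \frac{1}{2}\sum_{i \in \bar{\mathcal{R}}} \left\lVert \delta I(\mathbf{T}, \mathbf{u}_i) \right\rVert^2,
\qquad
\delta I(\mathbf{T}, \mathbf{u}) = I_k\!\left(\pi\!\left(\mathbf{T} \cdot \pi^{-1}(\mathbf{u}, d_{\mathbf{u}})\right)\right) - I_{k-1}(\mathbf{u}).
$$

In baseline SVO this direct step is followed by feature alignment and a reprojection-error refinement of pose and structure. The formulation is shown only as background and is not necessarily the exact objective used in this work.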

Semi-Direct Visual Odometry Fusion with YOLOv5
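The fusion idea stated in the contributions, removing dynamic objects before visual odometry, can be sketched as discarding candidate feature points that fall inside bounding boxes of dynamic object classes. This is a minimal sketch under stated assumptions: the `Detection` record, the `DYNAMIC_CLASSES` set, the 0.5 score threshold and the 5-pixel box margin are hypothetical choices rather than values from the paper, and the actual coupling with SVO's feature tracking may differ.

```python
from dataclasses import dataclass

# Hypothetical set of classes treated as dynamic; not taken from the paper.
DYNAMIC_CLASSES = {"person", "car", "bicycle"}

@dataclass
class Detection:
    label: str
    score: float
    x1: float  # box corners in pixels: (x1, y1) top-left, (x2, y2) bottom-right
    y1: float
    x2: float
    y2: float

def dynamic_boxes(detections, min_score=0.5, margin=5.0):
    """Keep confident detections of dynamic classes, enlarged by a pixel margin."""
    return [
        (d.x1 - margin, d.y1 - margin, d.x2 + margin, d.y2 + margin)
        for d in detections
        if d.label in DYNAMIC_CLASSES and d.score >= min_score
    ]

def is_dynamic(pt, boxes):
    x, y = pt
    return any(x1 <= x <= x2 and y1 <= y <= y2 for x1, y1, x2, y2 in boxes)

def filter_static(points, detections, **kwargs):
    """Split candidate feature points into (static, rejected) lists."""
    boxes = dynamic_boxes(detections, **kwargs)
    static, rejected = [], []
    for p in points:
        (rejected if is_dynamic(p, boxes) else static).append(p)
    return static, rejected

dets = [Detection("person", 0.9, 100, 50, 180, 240), Detection("chair", 0.8, 300, 200, 360, 280)]
pts = [(120, 100), (300, 210), (10, 10), (183, 60)]
static, rejected = filter_static(pts, dets)
```

In this example the point at (183, 60) lies just outside the raw "person" box but inside its enlarged version, so it is rejected; the margin is a simple hedge against loosely fitting boxes. The point on the "chair" is kept because that class is not in the assumed dynamic set.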

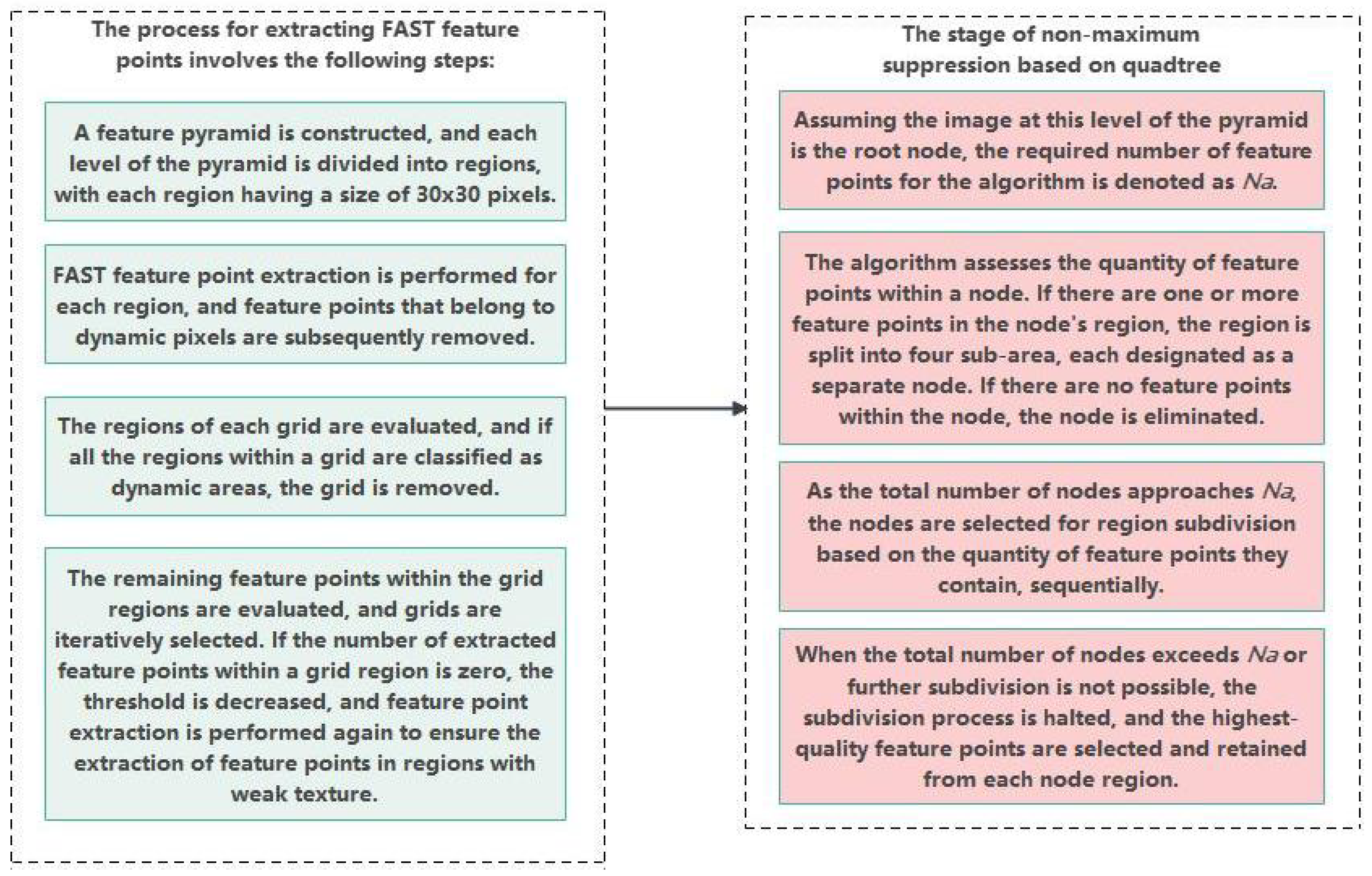

2.3. Static Feature Point Extraction

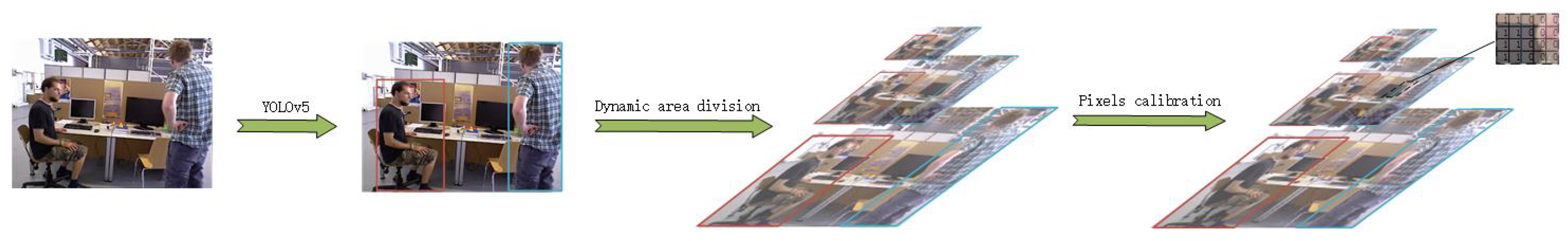

2.3.1. Dynamic Pixel Calibration

2.3.2. Static Feature Point Extraction Based on Quadtree
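A common way to spread feature points evenly, used for example in ORB-SLAM2's ORB extractor, is a quadtree: the image region is split recursively where keypoints are dense, and only the strongest keypoint in each leaf is kept. The sketch below follows that idea under stated assumptions: candidates inside dynamic regions are assumed to have been removed already, so the remaining static keypoints are redistributed over the rest of the image; keypoints are `(x, y, response)` tuples with coordinates in `[0, width) × [0, height)`; and the hypothetical `MIN_CELL` limit and the "split the most populated node first" order are illustrative choices. The paper's improved strategy may differ in its details.

```python
MIN_CELL = 1.0  # hypothetical minimum cell size (pixels); stops endless splitting of coincident points

def quadtree_select(keypoints, width, height, target):
    """Distribute keypoints (x, y, response) over the image with a quadtree.

    The most populated splittable node is divided into four children until
    there are at least `target` nodes or nothing can be split; the strongest
    keypoint of each remaining node is returned.
    """
    nodes = [((0.0, 0.0, float(width), float(height)), list(keypoints))] if keypoints else []
    while len(nodes) < target:
        splittable = [
            n for n in nodes
            if len(n[1]) > 1 and n[0][2] - n[0][0] > MIN_CELL and n[0][3] - n[0][1] > MIN_CELL
        ]
        if not splittable:
            break
        node = max(splittable, key=lambda n: len(n[1]))
        nodes.remove(node)
        (x0, y0, x1, y1), pts = node
        xm, ym = (x0 + x1) / 2, (y0 + y1) / 2
        for box in ((x0, y0, xm, ym), (xm, y0, x1, ym), (x0, ym, xm, y1), (xm, ym, x1, y1)):
            bx0, by0, bx1, by1 = box
            inside = [p for p in pts if bx0 <= p[0] < bx1 and by0 <= p[1] < by1]
            if inside:  # empty children are dropped
                nodes.append((box, inside))
    return [max(pts, key=lambda p: p[2]) for _, pts in nodes]

# A dense cluster in one corner plus three isolated points.
cluster = [(1, 1, 1.0), (2, 1, 2.0), (3, 1, 3.0), (4, 1, 4.0), (5, 1, 5.0)]
others = [(50, 10, 0.2), (10, 50, 0.3), (40, 40, 0.5)]
selected = quadtree_select(cluster + others, 64, 64, target=4)
```

Only the strongest point of the corner cluster survives, while the isolated points are all kept, which is the even coverage the quadtree is meant to provide. Because one split can add up to four nodes, the result may exceed `target` by up to three keypoints; truncating by response afterwards is one option.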

2.4. Initial Pose Estimation

3. Results

3.1. The Simulation Experiment

3.1.1. The TUM Dataset

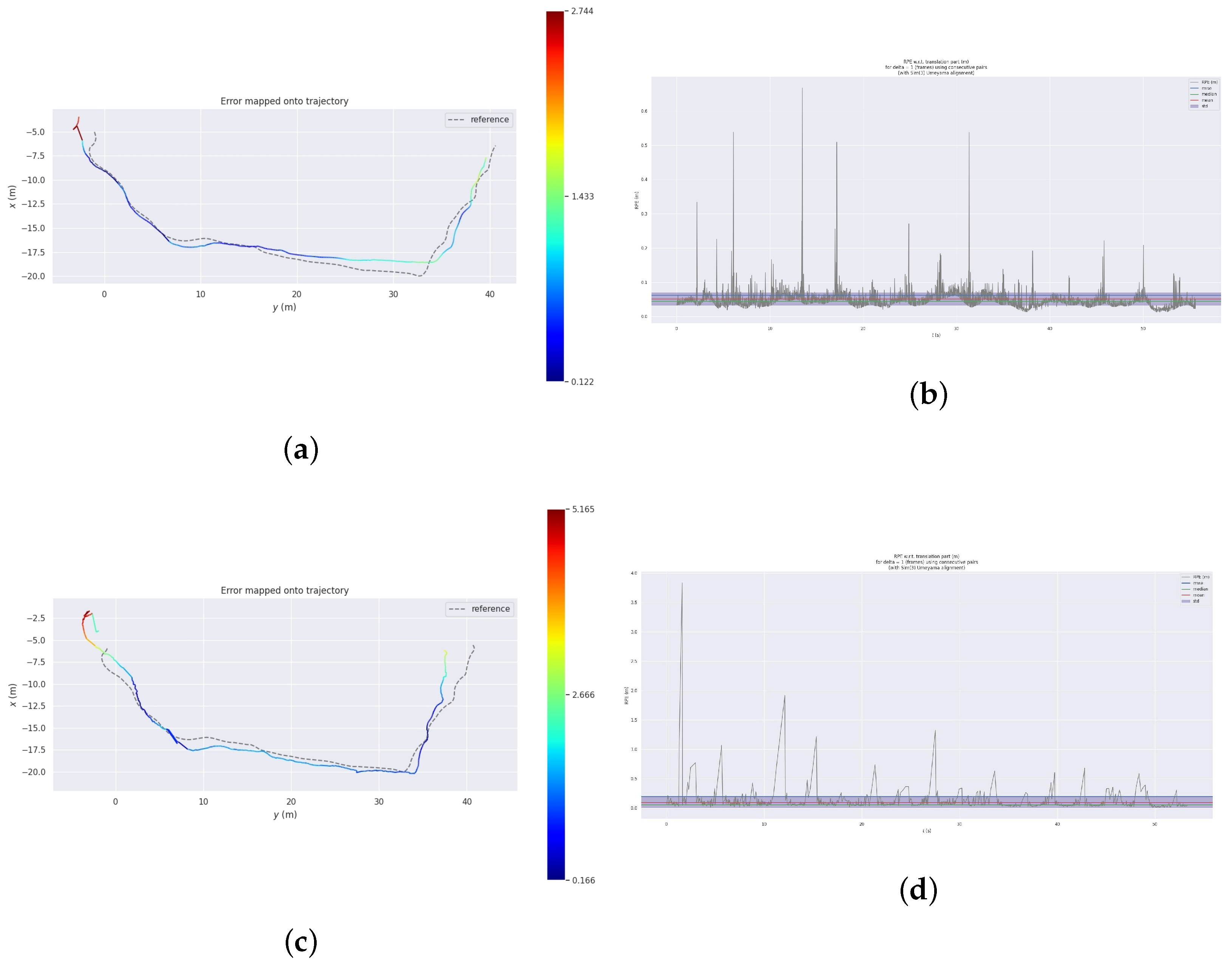

3.1.2. Evaluation of Positioning Accuracy
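The TUM RGB-D benchmark of Sturm et al. evaluates localization accuracy with the absolute trajectory error (ATE). The sketch below computes its translational RMSE from TUM-format trajectories (one `timestamp tx ty tz qx qy qz qw` line per pose). It assumes the estimated trajectory has already been aligned to the ground truth; the benchmark's own tools first perform a least-squares rigid alignment (Horn's method), which is omitted here. The 0.02 s association threshold mirrors, to our knowledge, the default of the benchmark's association script, and it is an assumption that the accuracy table in this document reports this metric.

```python
import math

def read_tum(text):
    """Parse TUM trajectory lines 'timestamp tx ty tz qx qy qz qw' into {timestamp: (tx, ty, tz)}."""
    traj = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        vals = [float(v) for v in line.replace(",", " ").split()]
        traj[vals[0]] = tuple(vals[1:4])  # orientation is ignored for translational ATE
    return traj

def associate(gt, est, max_diff=0.02):
    """Greedily pair estimated and ground-truth poses by closest timestamp (seconds)."""
    candidates = sorted(
        (abs(te - tg), te, tg) for te in est for tg in gt if abs(te - tg) < max_diff
    )
    used_est, used_gt, pairs = set(), set(), []
    for _, te, tg in candidates:
        if te not in used_est and tg not in used_gt:
            used_est.add(te)
            used_gt.add(tg)
            pairs.append((gt[tg], est[te]))
    return pairs

def ate_rmse(pairs):
    """Root-mean-square translational error; assumes both trajectories share one aligned frame."""
    if not pairs:
        raise ValueError("no associated poses")
    sq = [sum((a - b) ** 2 for a, b in zip(p, q)) for p, q in pairs]
    return math.sqrt(sum(sq) / len(sq))

gt = read_tum("# timestamp tx ty tz qx qy qz qw\n1.00 0 0 0 0 0 0 1\n2.00 1 0 0 0 0 0 1\n")
est = read_tum("1.01 0 0 0.03 0 0 0 1\n2.005 1 0.04 0 0 0 0 1\n")
pairs = associate(gt, est)
print(f"ATE RMSE: {ate_rmse(pairs):.4f}")
```

With errors of 0.03 and 0.04 on the two matched poses, the RMSE is sqrt((0.03² + 0.04²) / 2) ≈ 0.0354 in the trajectory's units.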

3.1.3. Real-Time Evaluation

3.2. UAV Dynamic Scene Experiment

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Antonopoulos, A.; Lagoudakis, M.G.; Partsinevelos, P. A ROS Multi-Tier UAV Localization Module Based on GNSS, Inertial and Visual-Depth Data. Drones 2022, 6, 135.

- Tian, D.; He, X.; Zhang, L.; Lian, J.; Hu, X. A Design of Odometer-Aided Visual Inertial Integrated Navigation Algorithm Based on Multiple View Geometry Constraints. In Proceedings of the 2017 9th International Conference on Intelligent Human-Machine Systems and Cybernetics (IHMSC), Hangzhou, China, 26–27 August 2017; Volume 1, pp. 161–166.

- Sukvichai, K.; Thongton, N.; Yajai, K. Implementation of a Monocular ORB SLAM for an Indoor Agricultural Drone. In Proceedings of the 2023 Third International Symposium on Instrumentation, Control, Artificial Intelligence, and Robotics (ICA-SYMP), Bangkok, Thailand, 18–20 January 2023; pp. 45–48.

- Fan, Y.; Zhang, Q.; Tang, Y.; Liu, S.; Han, H. Blitz-SLAM: A semantic SLAM in dynamic environments. Pattern Recognit. 2022, 121, 108225.

- Yuan, H.; Wu, C.; Deng, Z.; Yin, J. Robust Visual Odometry Leveraging Mixture of Manhattan Frames in Indoor Environments. Sensors 2022, 22, 8644.

- Li, S.; Lee, D. RGB-D SLAM in Dynamic Environments Using Static Point Weighting. IEEE Robot. Autom. Lett. 2017, 2, 2263–2270.

- Qi, N.; Yang, X.; Li, C.; Li, X.; Zhang, S.; Cao, L. Monocular Semidirect Visual Odometry for Large-Scale Outdoor Localization. IEEE Access 2019, 7, 57927–57942.

- Soliman, A.; Bonardi, F.; Sidibé, D.; Bouchafa, S. IBISCape: A Simulated Benchmark for multi-modal SLAM Systems Evaluation in Large-scale Dynamic Environments. J. Intell. Robot. Syst. 2022, 106, 53.

- Cheng, J.; Wang, Z.; Zhou, H.; Li, L.; Yao, J. DM-SLAM: A Feature-Based SLAM System for Rigid Dynamic Scenes. ISPRS Int. J. Geo-Inf. 2020, 9, 202.

- Li, A.; Wang, J.; Xu, M.; Chen, Z. DP-SLAM: A visual SLAM with moving probability towards dynamic environments. Inf. Sci. 2021, 556, 128–142.

- Xu, G.; Yu, Z.; Xing, G.; Zhang, X.; Pan, F. Visual odometry algorithm based on geometric prior for dynamic environments. Int. J. Adv. Manuf. Technol. 2022, 122, 235–242.

- Xu, H.; Cai, G.; Yang, X.; Yao, E.; Li, X. Stereo visual odometry based on dynamic and static features division. J. Ind. Manag. Optim. 2022, 18, 2109–2128.

- Yang, X.; Yuan, Z.; Zhu, D.; Chi, C.; Li, K.; Liao, C. Robust and Efficient RGB-D SLAM in Dynamic Environments. IEEE Trans. Multimed. 2021, 23, 4208–4219.

- Kuo, X.Y.; Liu, C.; Lin, K.C.; Luo, E.; Chen, Y.W.; Lee, C.Y. Dynamic Attention-based Visual Odometry. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; pp. 5753–5760.

- Kitt, B.; Moosmann, F.; Stiller, C. Moving on to dynamic environments: Visual odometry using feature classification. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; pp. 5551–5556.

- Bescos, B.; Campos, C.; Tardós, J.D.; Neira, J. DynaSLAM II: Tightly-Coupled Multi-Object Tracking and SLAM. IEEE Robot. Autom. Lett. 2021, 6, 5191–5198.

- Tan, W.; Liu, H.; Dong, Z.; Zhang, G.; Bao, H. Robust monocular SLAM in dynamic environments. In Proceedings of the 2013 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Adelaide, SA, Australia, 1–4 October 2013; pp. 209–218.

- Jaimez, M.; Kerl, C.; Gonzalez-Jimenez, J.; Cremers, D. Fast odometry and scene flow from RGB-D cameras based on geometric clustering. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3992–3999.

- Scona, R.; Jaimez, M.; Petillot, Y.R.; Fallon, M.; Cremers, D. StaticFusion: Background Reconstruction for Dense RGB-D SLAM in Dynamic Environments. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 3849–3856.

- Wang, Y.; Huang, S. Motion segmentation based robust RGB-D SLAM. In Proceedings of the 11th World Congress on Intelligent Control and Automation, Shenyang, China, 29 June–4 July 2014; pp. 3122–3127.

- Bescos, B.; Fácil, J.M.; Civera, J.; Neira, J. DynaSLAM: Tracking, Mapping, and Inpainting in Dynamic Scenes. IEEE Robot. Autom. Lett. 2018, 3, 4076–4083.

- Cheng, J.; Zhang, H.; Meng, M.Q.H. Improving Visual Localization Accuracy in Dynamic Environments Based on Dynamic Region Removal. IEEE Trans. Autom. Sci. Eng. 2020, 17, 1585–1596.

- Ai, Y.B.; Rui, T.; Yang, X.Q.; He, J.L.; Fu, L.; Li, J.B.; Lu, M. Visual SLAM in dynamic environments based on object detection. Def. Technol. 2021, 17, 1712–1721.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020.

- Forster, C.; Pizzoli, M.; Scaramuzza, D. SVO: Fast semi-direct monocular visual odometry. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 15–22.

- Jocher, G. Ultralytics YOLOv5. Zenodo 2020.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Computer Vision–ECCV 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer: Cham, Switzerland, 2018; pp. 3–19.

- Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLO, 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 11 June 2024).

- Li, Y.; Xue, J.; Zhang, M.; Yin, J.; Liu, Y.; Qiao, X.; Zheng, D.; Li, Z. YOLOv5-ASFF: A Multistage Strawberry Detection Algorithm Based on Improved YOLOv5. Agronomy 2023, 13, 1901.

- Du, D.; Zhu, P.; Wen, L.; Bian, X.; Lin, H.; Hu, Q.; Peng, T.; Zheng, J.; Wang, X.; Zhang, Y.; et al. VisDrone-DET2019: The Vision Meets Drone Object Detection in Image Challenge Results. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), Seoul, Republic of Korea, 27–28 October 2019; pp. 213–226.

- Yuan, Z.; Wang, Q.; Cheng, K.; Hao, T.; Yang, X. SDV-LOAM: Semi-Direct Visual–LiDAR Odometry and Mapping. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 11203–11220.

- Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Harris, C.; Stephens, M. A Combined Corner and Edge Detector. In Proceedings of the Alvey Vision Conference; Alvey Vision Club: Manchester, UK, 1988; pp. 23.1–23.6.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

- Rosten, E.; Drummond, T. Machine Learning for High-Speed Corner Detection. In Computer Vision–ECCV 2006; Leonardis, A., Bischof, H., Pinz, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; pp. 430–443.

- Wang, Y.; Zhang, S.; Wang, J. Ceiling-View Semi-Direct Monocular Visual Odometry with Planar Constraint. Remote Sens. 2022, 14, 5447.

- Sturm, J.; Engelhard, N.; Endres, F.; Burgard, W.; Cremers, D. A benchmark for the evaluation of RGB-D SLAM systems. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 573–580.

- Mur-Artal, R.; Tardós, J.D. ORB-SLAM2: An Open-Source SLAM System for Monocular, Stereo, and RGB-D Cameras. IEEE Trans. Robot. 2017, 33, 1255–1262.

- Shi, H.L. Visual Odometry Pose Estimation Based on Point and Line Features Fusion in Dynamic Scenes. Master’s Thesis, Guilin University of Technology, Guilin, China, 2022.

- Chen, W.; Chen, L.; Wang, R.; Pollefeys, M. LEAP-VO: Long-term Effective Any Point Tracking for Visual Odometry. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; pp. 19844–19853.

| Algorithm | mAP | Recall (R) | Precision (P) | Inference Time (ms) |
|---|---|---|---|---|
| CBAM-YOLOv5 | 14.48% | 30% | 41.43% | 7.4 |
| YOLOv5s | 13.5% | 29.6% | 37.8% | 7.3 |
| YOLOv8 | 23% | 13.7% | 49.8% | 27.7 |
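As a quick check on the real-time argument, the per-image inference times in the detection comparison table convert to frame rates as 1000 / t. These are upper bounds for the detector alone on whatever hardware was used (not stated in this section); the full navigation pipeline also spends time on tracking and pose estimation.

```python
# Inference times (ms per image) copied from the detection comparison table.
inference_ms = {"CBAM-YOLOv5": 7.4, "YOLOv5s": 7.3, "YOLOv8": 27.7}

def fps_upper_bound(ms):
    """Frames per second if detection were the only per-frame cost."""
    return 1000.0 / ms

def relative_overhead(ms, baseline_ms):
    """Extra inference time relative to a baseline, as a percentage."""
    return 100.0 * (ms - baseline_ms) / baseline_ms

for name, ms in inference_ms.items():
    print(f"{name}: {fps_upper_bound(ms):.1f} FPS")
print(f"CBAM overhead vs YOLOv5s: {relative_overhead(7.4, 7.3):.1f}%")
```

This prints about 135.1, 137.0 and 36.1 FPS, and a CBAM overhead of about 1.4% over YOLOv5s, consistent with the claim that the attention module adds little computational cost while YOLOv8 is several times slower in this comparison.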

| Fr3 Sequence | ORB-SLAM2 | DynaSLAM | PL-SVO | SVO | LEAP-VO | Ours |
|---|---|---|---|---|---|---|
| Walking_static | 0.102 | 0.007 | 0.022 | 0.009 | 0.012 | 0.007 |
| Walking_xyz | 0.427 | 0.015 | 0.082 | 0.037 | 0.030 | 0.03 |
| Walking_rpy | 0.741 | 0.035 | 0.169 | - | 0.100 | 0.04 |
| Walking_halfsphere | 0.494 | 0.025 | 0.158 | 0.041 | 0.029 | 0.01 |

| fr3 Sequence | ORB-SLAM2 Initialization | ORB-SLAM2 FPS | SVO Initialization | SVO FPS | LEAP-VO Initialization | LEAP-VO FPS | DynaSLAM Initialization | DynaSLAM FPS | Ours Initialization | Ours FPS |
|---|---|---|---|---|---|---|---|---|---|---|
| Walking_static | 2732 | 38.9 | 120 | 67.1 | - | - | 1638 | 10 | 325 | 37.4 |
| Walking_xyz | 7415 | | 243 | | | | | | 423 | |
| Walking_rpy | 4325 | | 223 | | | | | | 412 | |
| Walking_halfsphere | 6612 | | 132 | | | | | | 338 | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gao, Y.; Wang, Y.; Tian, L.; Li, D.; Wang, F. Visual Navigation Algorithms for Aircraft Fusing Neural Networks in Denial Environments. Sensors 2024, 24, 4797. https://doi.org/10.3390/s24154797
