A Video Mosaicing-Based Sensing Method for Chicken Behavior Recognition on Edge Computing Devices

Abstract

1. Introduction

2. Video Mosaicing Method to Classify Chicken Behavior

2.1. CCTV Camera Input

2.2. Detection and Tracking of Individual Chickens

2.3. Video Frames Storage

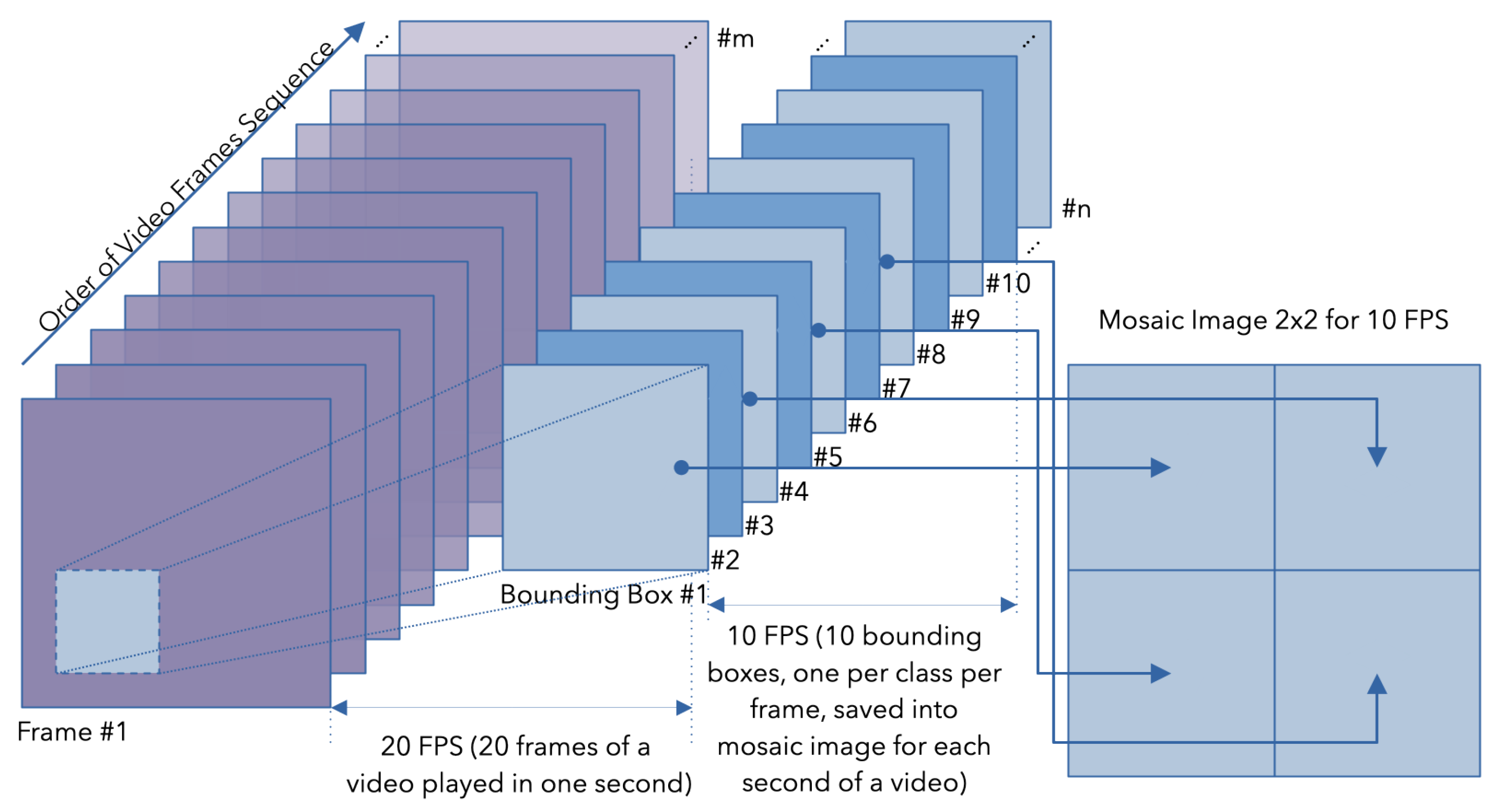

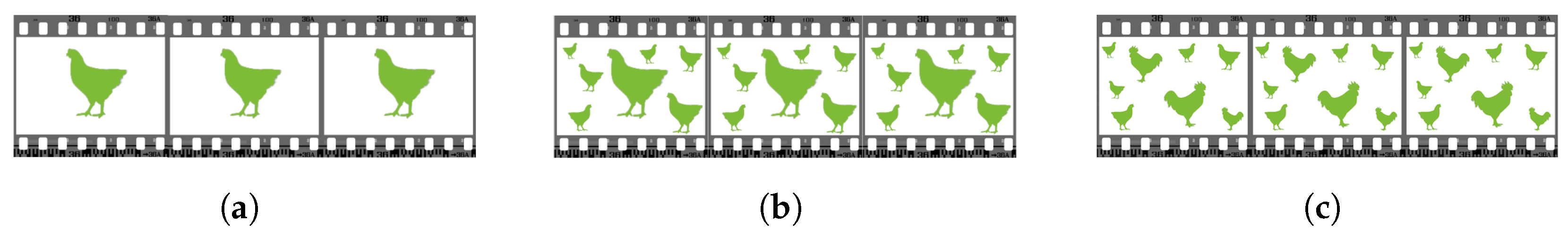

2.4. Image Mosaicing of Video Frames

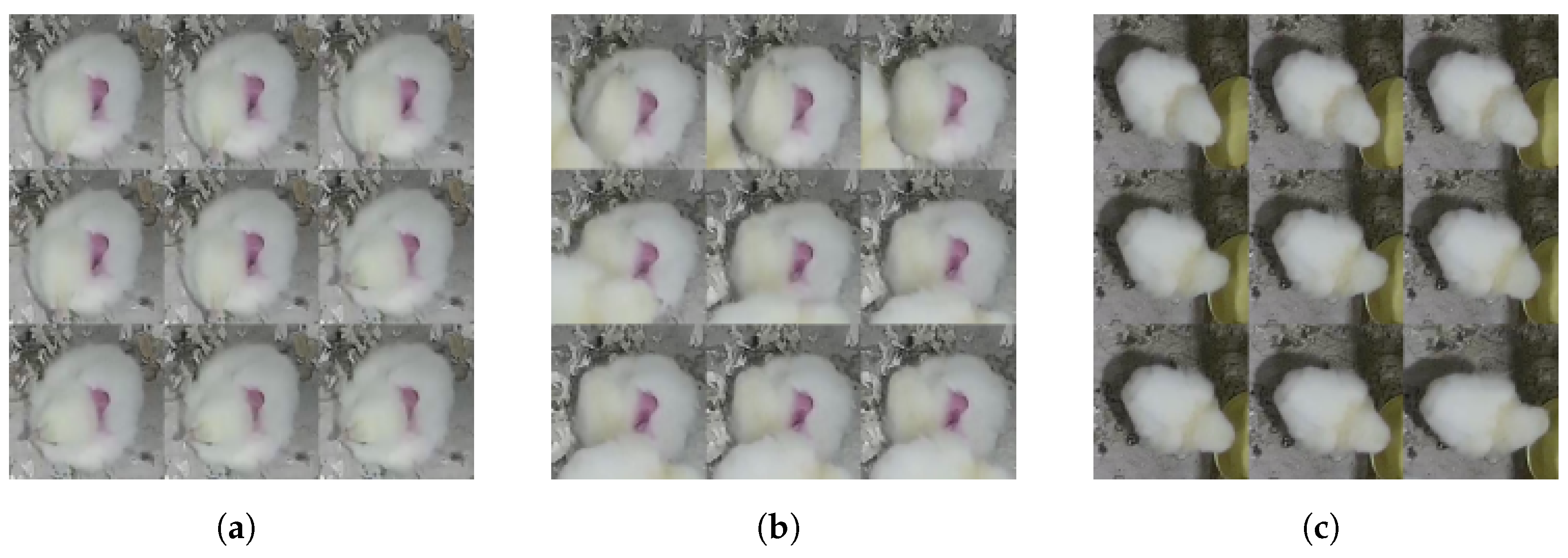

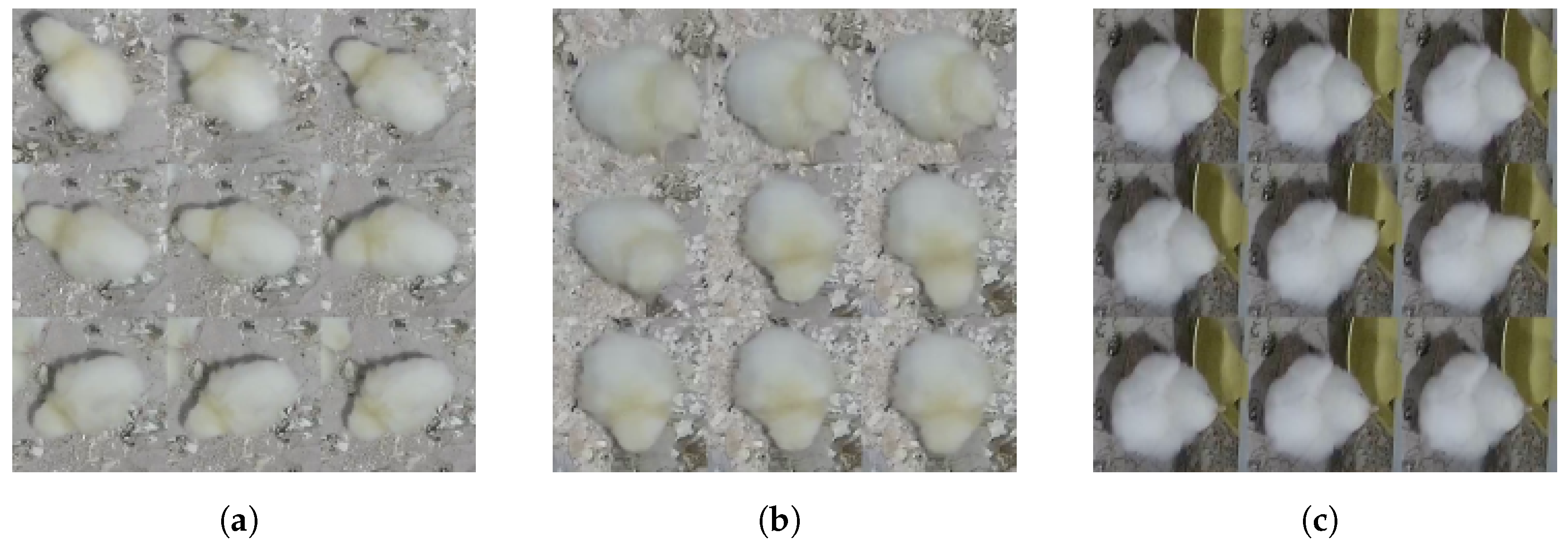

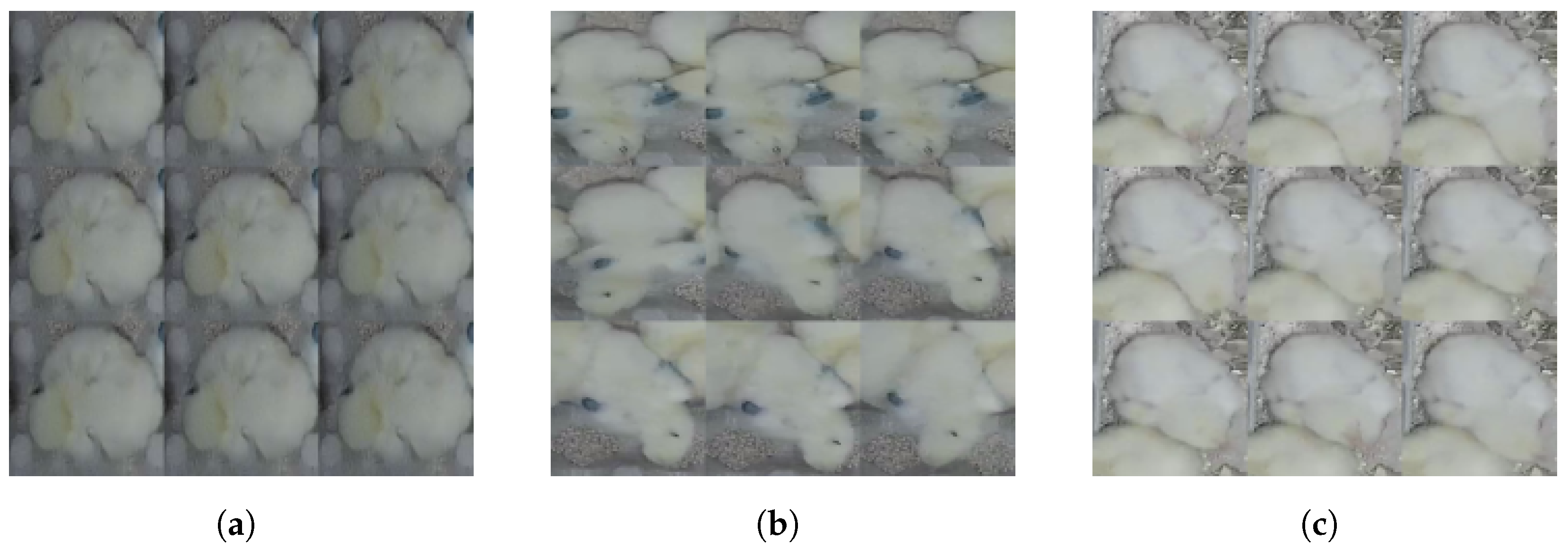

2.5. Mosaic Video Frames Dataset

2.6. Inference Phase

2.7. Final Thoughts on the Architecture

3. Experiments and Results

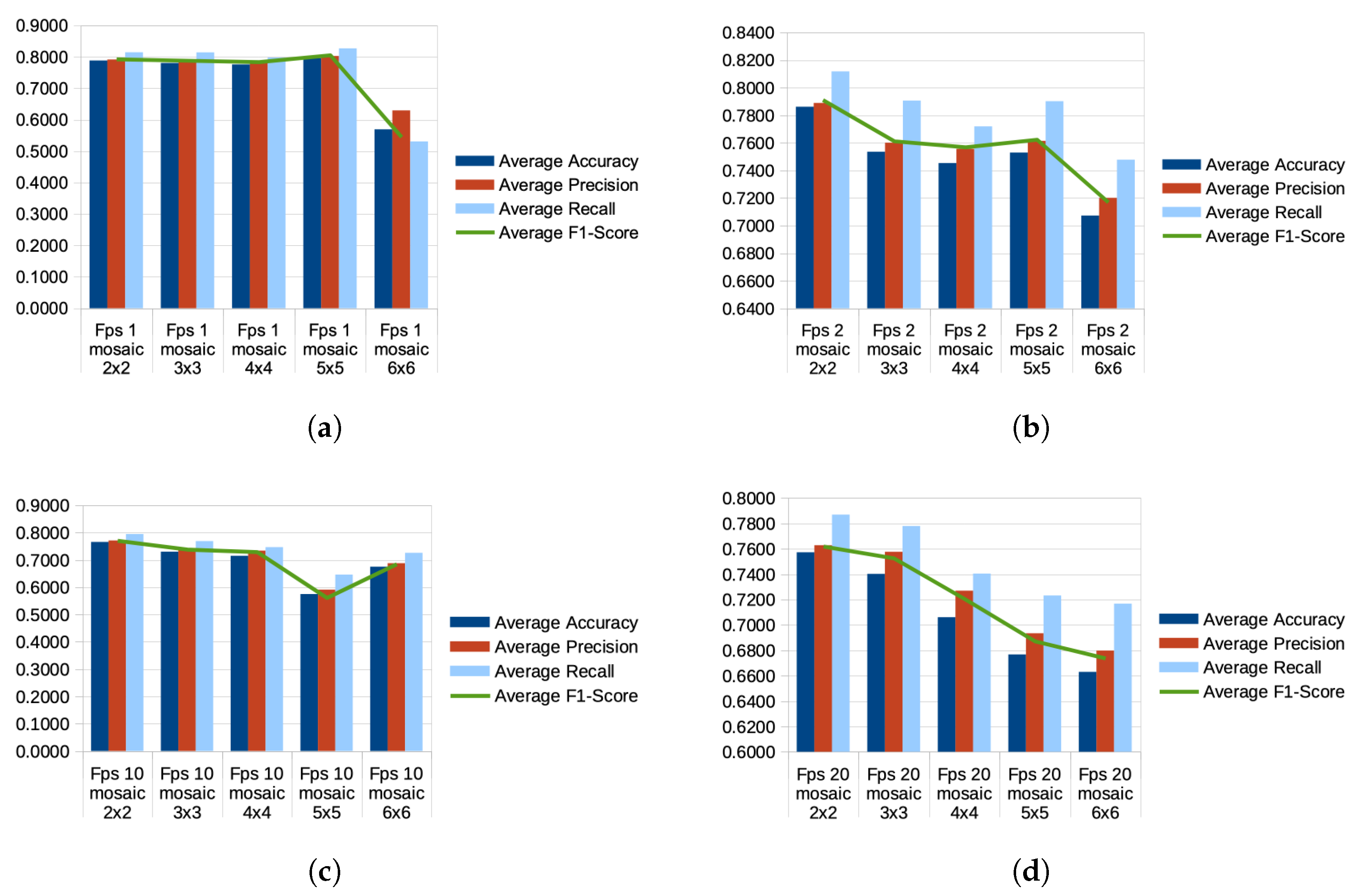

3.1. Mosaic Image Division Pattern

3.2. Video Sampling for Mosaic Images

3.3. Describing the Dataset

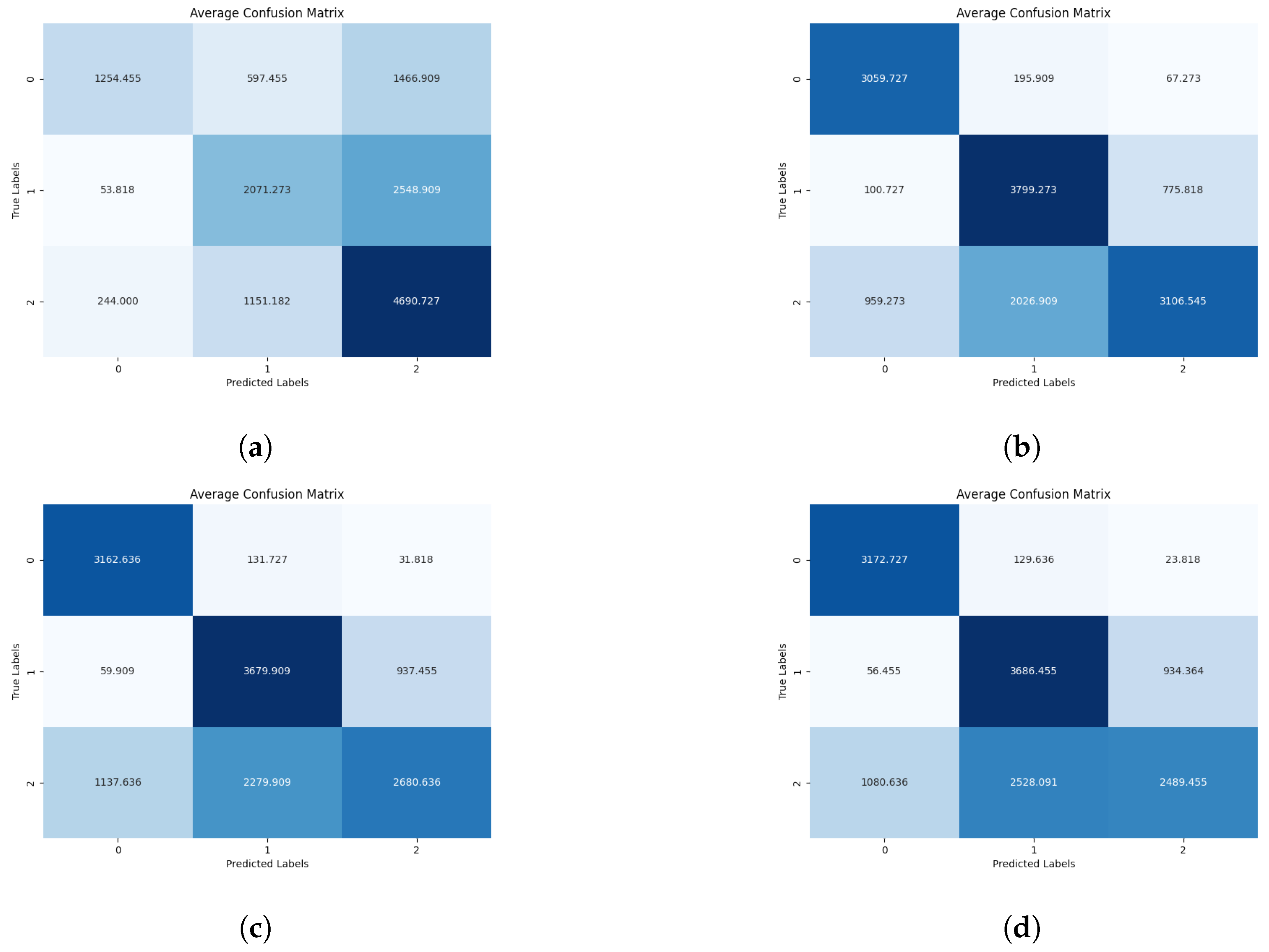

3.4. Overview

3.5. Analysis of the Results

- ./datasets/behavior/mosaic5x5fps10/Drinking/;

- ./datasets/behavior/mosaic5x5fps10/Eating/;

- ./datasets/behavior/mosaic5x5fps10/Sleepy/.
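The three directory names above appear to encode the mosaic grid, the sampling rate, and the behavior label. The following is a minimal Python sketch of reading those fields back out of a path. It assumes the naming pattern `mosaic<rows>x<cols>fps<rate>/<Label>/` holds for other configurations as well, which the listed paths suggest but do not confirm; the function name `parse_dataset_dir` is hypothetical and not taken from the paper.

```python
import re
from pathlib import PurePosixPath

# Assumed naming convention, inferred from the three listed paths only.
CONFIG_PATTERN = re.compile(r"mosaic(\d+)x(\d+)fps(\d+)")


def parse_dataset_dir(path):
    """Split a dataset directory path into grid size, sampling rate, and label."""
    p = PurePosixPath(path)          # trailing "/" and leading "./" are normalized away
    match = CONFIG_PATTERN.fullmatch(p.parent.name)
    if match is None:
        raise ValueError(f"unexpected configuration folder: {p.parent.name!r}")
    rows, cols, fps = (int(g) for g in match.groups())
    return {"rows": rows, "cols": cols, "fps": fps, "label": p.name}


if __name__ == "__main__":
    for d in (
        "./datasets/behavior/mosaic5x5fps10/Drinking/",
        "./datasets/behavior/mosaic5x5fps10/Eating/",
        "./datasets/behavior/mosaic5x5fps10/Sleepy/",
    ):
        print(parse_dataset_dir(d))
```

Keeping the configuration in the folder name means a class-per-folder image loader can be pointed at one configuration directory and pick up the three behavior labels from the subfolder names.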

3.6. Challenges

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Huang, J.; Zhang, T.; Cuan, K.; Fang, C. An intelligent method for detecting poultry eating behaviour based on vocalization signals. Comput. Electron. Agric. 2021, 180, 105884.

2. Li, G.; Ji, B.; Li, B.; Shi, Z.; Zhao, Y.; Dou, Y.; Brocato, J. Assessment of layer pullet drinking behaviors under selectable light colors using convolutional neural network. Comput. Electron. Agric. 2020, 172.

3. Siriani, A.L.R.; Miranda, I.B.D.C.; Mehdizadeh, S.A.; Pereira, D.F. Chicken Tracking and Individual Bird Activity Monitoring Using the BoT-SORT Algorithm. AgriEngineering 2023, 5, 1677–1693.

4. Li, G.; Zhao, Y.; Porter, Z.; Purswell, J.L. Automated measurement of broiler stretching behaviors under four stocking densities via faster region-based convolutional neural network. Animal 2020, 15, 100059.

5. Li, G.; Hui, X.; Chen, Z.; Chesser, G.D.; Zhao, Y. Development and evaluation of a method to detect broilers continuously walking around feeder as an indication of restricted feeding behaviors. Comput. Electron. Agric. 2021, 181, 105982.

6. Cheng, F.; Tiemin, Z.; Haikun, Z.; Huang, J.; Cuan, K. Pose estimation and behavior classification of broiler chickens based on deep neural networks. Comput. Electron. Agric. 2020, 180, 105863.

7. Li, G.; Hui, X.; Lin, F.; Zhao, Y. Developing and evaluating poultry preening behavior detectors via mask region-based convolutional neural network. Animals 2020, 10, 1762.

8. Liu, H.W.; Hung, C.C.; Tsai, C.Y.; Wen, H.K.; Lin, H.T. Identifying images of dead chickens with a chicken removal system integrated with a deep learning algorithm. Sensors 2021, 21, 3579.

9. Okinda, C.; Nyalala, I.O.; Korohou, T.; Wang, J.; Achieng, T.; Wamalwa, P.; Mang, T.; Shen, M. A review on computer vision systems in monitoring of poultry: A welfare perspective. Artif. Intell. Agric. 2020, 4, 184–208.

10. Abdi-Soojeede, M.I.; Funwie, A.D.S. Challenges Of Chicken Production On Farmers In Mogadishu, Somalia. IQ Res. J. 2022, 1, 531–541.

11. Guo, Y.; Chai, L.; Aggrey, S.E.; Oladeinde, A.; Johnson, J.; Zock, G. A machine vision-based method for monitoring broiler chicken floor distribution. Sensors 2020, 20, 3179.

12. Geffen, O.; Yitzhaky, Y.; Barchilon, N.; Druyan, S.; Halachmi, I. A machine vision system to detect and count laying hens in battery cages. Animal 2020, 14, 2628–2634.

13. Nasiri, A.; Yoder, J.; Zhao, Y.; Hawkins, S.; Prado, M.; Gan, H. Pose estimation-based lameness recognition in broiler using CNN-LSTM network. Comput. Electron. Agric. 2022, 197, 106931.

14. Li, N.; Ren, Z.; Li, D.; Zeng, L. Review: Automated techniques for monitoring the behaviour and welfare of broilers and laying hens: Towards the goal of precision livestock farming. Animal 2020, 14, 617–625.

15. Abdoli, A.; Alaee, S.; Imani, S.; Murillo, A.; Gerry, A.; Hickle, L.; Keogh, E. Fitbit for Chickens? Time Series Data Mining Can Increase the Productivity of Poultry Farms. In Proceedings of the KDD’20, San Diego, CA, USA, 22–27 August 2020; pp. 3328–3336.

16. Okinda, C.; Lu, M.; Liu, L.; Nyalala, I.; Muneri, C.; Wang, J.; Zhang, H.; Shen, M. A machine vision system for early detection and prediction of sick birds: A broiler chicken model. Biosyst. Eng. 2019, 188, 229–242.

17. Kumar, S.; Kondaveeti, H.K.; Simhadri, C.G.; Reddy, M.Y. Automatic Bird Species Recognition using Audio and Image Data: A Short Review. In Proceedings of the IEEE InC4 2023—2023 IEEE International Conference on Contemporary Computing and Communications, Bangalore, India, 21–22 April 2023.

18. Yang, S.; Samarasinghe, D.; Arukgoda, A.; Abpeikar, S.; Lakshika, E.; Barlow, M. Automatic Recognition of Collective Emergent Behaviors Using Behavioral Metrics. IEEE Access 2023, 11, 89077–89092.

19. Górriz, J.M.; Álvarez-Illán, I.; Álvarez-Marquina, A.; Arco, J.E.; Atzmueller, M.; Ballarini, F.; Barakova, E.; Bologna, G.; Bonomini, P.; Castellanos-Dominguez, G.; et al. Computational approaches to Explainable Artificial Intelligence: Advances in theory, applications and trends. Inf. Fusion 2023, 100, 101945.

20. Teterja, D.; Garcia-Rodriguez, J.; Azorin-Lopez, J.; Sebastian-Gonzalez, E.; van der Walt, R.E.; Booysen, M.J. An Image Mosaicing-Based Method for Bird Identification on Edge Computing Devices. In Proceedings of the 18th International Conference on Soft Computing Models in Industrial and Environmental Applications (SOCO 2023), Salamanca, Spain, 5–7 September 2023; Volume 2.

21. De Montis, A.; Pinna, A.; Barra, M.; Vranken, E. Analysis of poultry eating and drinking behavior by software eYeNamic. J. Agric. Eng. 2013, 44.

22. Olejnik, K.; Popiela, E.; Opalinski, S. Emerging Precision Management Methods in Poultry Sector. Agriculture 2022, 12, 718.

23. Vijay, A.; Garg, T.; Goyal, V.; Rashmi; Yadav, A.; Mukherjee, R. A Low-Cost Edge-IoT Based Smart Poultry Farm. In Proceedings of the 15th International Conference on COMmunication Systems and NETworkS (COMSNETS), Bangalore, India, 3–8 January 2023; pp. 397–399.

24. Bochkovskiy, A.; Wang, C.-Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020.

25. Open Data Annotation Platform. Available online: https://www.cvat.ai (accessed on 30 January 2024).

26. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollar, P.; Zitnick, L.C. Microsoft COCO: Common Objects in Context. In Proceedings of the ECCV, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

27. Lin, C.W.; Chen, Z.; Lin, M. Video-based bird posture recognition using dual feature-rates deep fusion convolutional neural network. Ecol. Indic. 2022, 141, 109141.

28. Carreira, J.; Zisserman, A. Quo Vadis, Action Recognition? A New Model and the Kinetics Dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; Volume 7.

29. Lin, C.W.; Hong, S.; Lin, M.; Huang, X.; Liu, J. Bird posture recognition based on target keypoints estimation in dual-task convolutional neural networks. Ecol. Indic. 2022, 135, 108506.

30. Xie, S.; Girshick, R.; Dollar, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5987–5995.

31. Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; Volume 9.

32. Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-Local Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7794–7803.

33. Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

34. Feichtenhofer, C.; Fan, H.; Malik, J.; He, K. SlowFast Networks for Video Recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

35. Lin, J.; Gan, C.; Han, S. TSM: Temporal Shift Module for Efficient Video Understanding. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

36. Li, X.; Hu, X.; Yang, J. Spatial Group-wise Enhance: Improving Semantic Feature Learning in Convolutional Networks. arXiv 2019.

37. Gao, S.-H.; Cheng, M.-M.; Zhao, K.; Zhang, X.-Y.; Yang, M.-H.; Torr, P. Res2Net: A New Multi-scale Backbone Architecture. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 652–662.

38. Wang, J.; Sun, K.; Cheng, T.; Jiang, B.; Deng, C.; Zhao, Y.; Liu, D.; Mu, Y.; Tan, M.; Wang, X.; et al. Deep High-Resolution Representation Learning for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 3349–3364.

39. Zhang, S.; Guo, S.; Huang, W.; Scott, M.R.; Wang, L. V4D: 4D Convolutional Neural Networks for Video-Level Representation Learning. Int. Conf. Learn. Represent. 2020. Available online: https://openreview.net/forum?id=SJeLopEYDH (accessed on 23 May 2024).

40. Yang, C.; Xu, Y.; Shi, J.; Dai, B.; Zhou, B. Temporal Pyramid Network for Action Recognition. arXiv 2020.

41. Liu, J.-J.; Hou, Q.; Cheng, M.-M.; Wang, C.; Feng, J. Improving Convolutional Networks with Self-Calibrated Convolutions. IEEE CVPR 2020.

42. Zhang, H.; Wu, C.; Zhang, Z.; Zhu, Y.; Lin, H.; Zhang, Z.; Sun, Y.; He, T.; Mueller, J.; Manmatha, R.; et al. ResNeSt: Split-Attention Networks. arXiv 2020.

43. Fan, H.; Xiong, B.; Mangalam, K.; Li, Y.; Yan, Z.; Malik, J.; Feichtenhofer, C. Multiscale Vision Transformers. arXiv 2021.

44. Gao, Z.; Wang, Q.; Zhang, B.; Hu, Q.; Li, P. Temporal-attentive Covariance Pooling Networks for Video Recognition. arXiv 2021.

45. Guo, Y.; Aggrey, S.E.; Oladeinde, A.; Johnson, J.; Zock, G.; Chai, L. A machine vision-based method optimized for restoring broiler chicken images occluded by feeding and drinking equipment. Animals 2021, 11, 123.

46. Ray, S.; Stopfer, M.A. Argos: A toolkit for tracking multiple animals in complex visual environments. Methods Ecol. Evol. 2022, 13, 585–595.

47. Azorín-López, J.; Saval-Calvo, M.; Fuster-Guilló, A.; García-Rodríguez, J. Human behaviour recognition based on trajectory analysis using neural networks. In Proceedings of the 2013 International Joint Conference on Neural Networks (IJCNN), Dallas, TX, USA, 4–9 August 2013; pp. 1–7.

48. Azorin-Lopez, J.; Saval-Calvo, M.; Fuster-Guillo, A.; Garcia-Rodriguez, J. A Novel Prediction Method for Early Recognition of Global Human Behaviour in Image Sequences. Neural Process. Lett. 2016, 43, 363–387.

49. Azorin-Lopez, J.; Saval-Calvo, M.; Fuster-Guillo, A.; Garcia-Rodriguez, J.; Cazorla, M.; Signes-Pont, M.T. Group activity description and recognition based on trajectory analysis and neural networks. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; pp. 1585–1592.

| Model | Epochs | Input Data Farm | Input Format | Length | Output Format | Mosaic Images | Behavior Classes | Chickens | Test Acc. |
|---|---|---|---|---|---|---|---|---|---|
| MobileNetV2 | 20 | The Netherlands | Video (MP4) | 5 min | Mosaic, RGB | ∼1,368,500 | Sleepy, Eating, Drinking (3) | 13 | 79.61% |

| Custom Folds | Chicken IDs Used for Training | Chicken IDs Used for Testing |
|---|---|---|
| C_Fold 0 | 3, 4, 5, 6, 7, 8, 9, 10, 11, 12 | 0, 1, 2 |
| C_Fold 1 | 4, 5, 6, 7, 8, 9, 10, 11, 12, 0 | 1, 2, 3 |
| C_Fold 2 | 5, 6, 7, 8, 9, 10, 11, 12, 0, 1 | 2, 3, 4 |
| C_Fold 3 | 6, 7, 8, 9, 10, 11, 12, 0, 1, 2 | 3, 4, 5 |
| C_Fold 4 | 7, 8, 9, 10, 11, 12, 0, 1, 2, 3 | 4, 5, 6 |
| C_Fold 5 | 8, 9, 10, 11, 12, 0, 1, 2, 3, 4 | 5, 6, 7 |
| C_Fold 6 | 9, 10, 11, 12, 0, 1, 2, 3, 4, 5 | 6, 7, 8 |
| C_Fold 7 | 11, 12, 0, 1, 2, 3, 4, 5, 6, 7 | 8, 9, 10 |
| C_Fold 8 | 12, 0, 1, 2, 3, 4, 5, 6, 7, 8 | 9, 10, 11 |
| C_Fold 9 | 0, 1, 2, 3, 4, 5, 6, 7, 8, 9 | 10, 11, 12 |
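In every row of the custom-fold table, the training IDs are the ten chickens not held out for testing, listed starting from the ID that follows the last test ID and wrapping around after 12. Consecutive test sets overlap (for example, C_Fold 0 and C_Fold 1 share chickens 1 and 2), so this is a sliding per-chicken split and not a partition into disjoint folds. The sketch below reproduces the training column from the test column; the test triples are copied from the table, while the function name `training_ids` and the wrap-around ordering rule are an interpretation of the table, not the authors' code.

```python
N_CHICKENS = 13  # chicken IDs 0..12, as listed in the table

# Held-out chicken IDs per custom fold, copied from the table.
TEST_IDS = {
    0: (0, 1, 2),
    1: (1, 2, 3),
    2: (2, 3, 4),
    3: (3, 4, 5),
    4: (4, 5, 6),
    5: (5, 6, 7),
    6: (6, 7, 8),
    7: (8, 9, 10),
    8: (9, 10, 11),
    9: (10, 11, 12),
}


def training_ids(test_ids, n_chickens=N_CHICKENS):
    """Return all IDs not in test_ids, starting after the last test ID and wrapping."""
    start = max(test_ids) + 1
    order = [(start + i) % n_chickens for i in range(n_chickens)]
    return [c for c in order if c not in test_ids]


if __name__ == "__main__":
    for fold, test in TEST_IDS.items():
        print(f"C_Fold {fold}: train={training_ids(test)} test={list(test)}")
```

Splitting by chicken ID, as the table does, keeps every image of a held-out bird out of training, so test accuracy reflects behavior recognition on unseen individuals.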

| Variable 1 | Variable 2 | Avg. Accuracy | Avg. Precision | Avg. Recall | Avg. F1-Score |
|---|---|---|---|---|---|
| mosaic 2 × 2 | FPS 1 | 0.7883 | 0.7915 | 0.8146 | 0.7934 |
| mosaic 2 × 2 | FPS 2 | 0.7861 | 0.7889 | 0.8118 | 0.7913 |
| mosaic 2 × 2 | FPS 10 | 0.7657 | 0.7712 | 0.7946 | 0.7713 |
| mosaic 2 × 2 | FPS 20 | 0.7572 | 0.7628 | 0.7869 | 0.7620 |
| mosaic 3 × 3 | FPS 1 | 0.7806 | 0.7860 | 0.8143 | 0.7890 |
| mosaic 3 × 3 | FPS 2 | 0.7535 | 0.7601 | 0.7906 | 0.7614 |
| mosaic 3 × 3 | FPS 10 | 0.7299 | 0.7434 | 0.7687 | 0.7390 |
| mosaic 3 × 3 | FPS 20 | 0.7402 | 0.7576 | 0.7779 | 0.7527 |
| mosaic 4 × 4 | FPS 1 | 0.7758 | 0.7795 | 0.7980 | 0.7845 |
| mosaic 4 × 4 | FPS 2 | 0.7453 | 0.7556 | 0.7719 | 0.7570 |
| mosaic 4 × 4 | FPS 10 | 0.7149 | 0.7337 | 0.7468 | 0.7300 |
| mosaic 4 × 4 | FPS 20 | 0.7061 | 0.7269 | 0.7404 | 0.7207 |
| mosaic 5 × 5 | FPS 1 | 0.7961 | 0.8030 | 0.8272 | 0.8063 |
| mosaic 5 × 5 | FPS 2 | 0.7529 | 0.7614 | 0.7901 | 0.7625 |
| mosaic 5 × 5 | FPS 10 | 0.5750 | 0.5911 | 0.6462 | 0.5622 |
| mosaic 5 × 5 | FPS 20 | 0.6767 | 0.6934 | 0.7232 | 0.6873 |
| mosaic 6 × 6 | FPS 1 | 0.5694 | 0.6297 | 0.5306 | 0.5457 |
| mosaic 6 × 6 | FPS 2 | 0.7072 | 0.7200 | 0.7477 | 0.7171 |
| mosaic 6 × 6 | FPS 10 | 0.6753 | 0.6880 | 0.7257 | 0.6854 |
| mosaic 6 × 6 | FPS 20 | 0.6629 | 0.6798 | 0.7168 | 0.6738 |
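The two variables in the table, the mosaic grid (2 × 2 to 6 × 6) and the sampling rate (FPS 1 to 20), together determine which video frames end up in which cell of a mosaic image. The sketch below only plans that layout and does not touch pixel data. Everything beyond the grid sizes and FPS values is an assumption for illustration: a hypothetical 20 fps source video, one sampled frame per cell, row-major cell order, non-overlapping groups of frames, and a hypothetical tile size. All function names are illustrative and none come from the paper.

```python
def sample_indices(n_frames, video_fps, sample_fps):
    """Indices of frames kept when a video_fps clip is sampled at sample_fps."""
    if sample_fps <= 0 or video_fps % sample_fps != 0:
        raise ValueError("sample_fps must be positive and divide video_fps")
    step = video_fps // sample_fps          # keep every step-th frame
    return list(range(0, n_frames, step))


def tile_boxes(grid, tile_w, tile_h):
    """(left, top, right, bottom) pixel box of each cell, in row-major order."""
    return [
        (c * tile_w, r * tile_h, (c + 1) * tile_w, (r + 1) * tile_h)
        for r in range(grid)
        for c in range(grid)
    ]


def mosaic_plan(n_frames, video_fps, sample_fps, grid, tile_w, tile_h):
    """For each mosaic, list (frame_index, box) pairs; leftover frames are dropped."""
    indices = sample_indices(n_frames, video_fps, sample_fps)
    per_mosaic = grid * grid
    boxes = tile_boxes(grid, tile_w, tile_h)
    plans = []
    for start in range(0, len(indices) - per_mosaic + 1, per_mosaic):
        plans.append(list(zip(indices[start:start + per_mosaic], boxes)))
    return plans


def seconds_per_mosaic(grid, sample_fps):
    """Time span covered by one mosaic when each cell holds one sampled frame."""
    return grid * grid / sample_fps


if __name__ == "__main__":
    # Hypothetical clip: 40 frames at 20 fps, sampled at FPS 10 into 2 x 2 mosaics.
    for plan in mosaic_plan(40, 20, 10, grid=2, tile_w=64, tile_h=64):
        print(plan)
```

Under these assumptions a 5 × 5 mosaic at FPS 1 spans 25 s of video, while a 2 × 2 mosaic at FPS 20 spans 0.2 s, so the configurations in the table differ widely in how much temporal context one image carries.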

| PA | MOD | FRA | FI | PE | FE | SW | FL | WA | SW | EA | SWF | STF | FLF | SFW | CR |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CVPR [27,28] | I3D | 8 | 60.00 | 62.96 | 95.83 | 87.50 | 76.62 | 32.50 | 46.67 | 68.29 | - | - | - | - | - |
| CVPR [27,28] | I3D | 16 | 56.00 | 64.81 | 100 | 85.94 | 81.82 | 57.50 | 46.67 | 68.29 | - | - | - | - | - |
| CVPR [27,28] | I3D | 32 | 52.00 | 68.52 | 100 | 82.81 | 87.01 | 52.50 | 51.11 | 60.98 | - | - | - | - | - |
| CVPR [29,30] | ResNeXt-50 | - | - | - | - | 97.09 | 94.52 | - | 90.42 | - | 51.11 | 70.06 | 23.61 | 47.83 | 66.40 |
| CVPR [29,31] | SE-ResNet-50 | - | - | - | - | 96.22 | 91.44 | - | 86.58 | - | 64.44 | 64.97 | 18.06 | 47.83 | 63.20 |
| CVPR [29,31] | SE-ResNeXt-50 | - | - | - | - | 94.77 | 92.47 | - | 88.18 | - | 62.22 | 69.75 | 19.44 | 39.86 | 58.40 |
| CVPR [29,30] | ResNeXt-101 | - | - | - | - | 95.64 | 92.29 | - | 90.10 | - | 73.33 | 76.43 | 41.67 | 41.67 | 74.40 |
| CVPR [29,31] | SE-ResNet-101 | - | - | - | - | 95.93 | 92.64 | - | 87.86 | - | 71.11 | 74.84 | 27.78 | 27.78 | 71.20 |
| CVPR [29,31] | SE-ResNeXt-101 | - | - | - | - | 97.09 | 94.18 | - | 86.74 | - | 73.33 | 67.83 | 8.33 | 8.33 | 66.40 |
| CVPR [27,32] | Non-Local | 8 | 40.00 | 77.78 | 100 | 93.75 | 87.01 | 57.50 | 57.78 | 63.41 | - | - | - | - | - |
| CVPR [27,32] | Non-Local | 16 | 52.00 | 75.93 | 100 | 93.75 | 90.91 | 47.50 | 55.56 | 63.41 | - | - | - | - | - |
| CVPR [27,32] | Non-Local | 32 | 48.00 | 85.19 | 100 | 98.44 | 89.61 | 57.50 | 48.89 | 63.41 | - | - | - | - | - |
| ECCV [29,33] | CBAM50 | - | - | - | - | 95.64 | 93.32 | - | 87.54 | - | 71.11 | 67.83 | 30.56 | 47.10 | 68.80 |
| ECCV [29,33] | CBAM101 | - | - | - | - | 96.22 | 90.24 | - | 89.78 | - | 80.00 | 74.52 | 30.56 | 30.56 | 67.20 |
| ICCV [27,34] | SlowFast | 8 + 8 | 52.00 | 85.19 | 100 | 89.06 | 80.52 | 47.50 | 60.00 | 60.98 | - | - | - | - | - |
| ICCV [27,34] | SlowFast | 8 + 16 | 52.00 | 81.48 | 95.83 | 90.63 | 92.21 | 52.50 | 53.33 | 58.54 | - | - | - | - | - |
| ICCV [27,34] | SlowFast | 8 + 32 | 52.00 | 75.93 | 100 | 89.06 | 90.91 | 60.00 | 57.78 | 60.98 | - | - | - | - | - |
| ICCV [27,35] | TSM | 8 | 64.00 | 83.33 | 91.67 | 95.31 | 80.52 | 45.00 | 68.89 | 48.78 | - | - | - | - | - |
| ICCV [27,35] | TSM | 16 | 68.00 | 83.34 | 87.50 | 96.88 | 83.12 | 47.50 | 66.67 | 48.78 | - | - | - | - | - |
| ICCV [27,35] | TSM | 32 | 56.00 | 85.19 | 95.83 | 95.88 | 90.91 | 57.50 | 64.44 | 51.22 | - | - | - | - | - |
| ACCV [29,36] | SGE-ResNet-50 | - | - | - | - | 95.06 | 93.66 | - | 86.90 | - | 60.00 | 72.29 | 18.06 | 52.90 | 67.20 |
| ACCV [29,36] | SK-ResNet-50 | - | - | - | - | 96.22 | 93.15 | - | 88.34 | - | 73.33 | 75.16 | 25.00 | 50.00 | 61.60 |
| IEEE-TPAMI [29,37] | Res2Net-50 | - | - | - | - | 96.22 | 93.15 | - | 89.14 | - | 64.44 | 68.15 | 23.61 | 49.28 | 64.80 |
| IEEE-TPAMI [29,38] | HRNet-w32 | - | - | - | - | 96.51 | 91.78 | - | 86.74 | - | 66.67 | 76.75 | 27.78 | 68.84 | 69.60 |
| ACCV [29,36] | SGE-ResNet-101 | - | - | - | - | 95.93 | 94.01 | - | 86.58 | - | 68.89 | 76.11 | 25.00 | 25.00 | 69.60 |
| ACCV [29,36] | SK-ResNet-101 | - | - | - | - | 96.22 | 94.01 | - | 91.05 | - | 77.78 | 73.25 | 25.00 | 25.00 | 64.80 |
| IEEE-TPAMI [29,37] | Res2Net-101 | - | - | - | - | 95.06 | 92.29 | - | 91.21 | - | 75.56 | 66.88 | 31.94 | 31.94 | 68.00 |
| IEEE-TPAMI [29,38] | HRNet-w48 | - | - | - | - | 95.35 | 93.32 | - | 90.26 | - | 88.89 | 67.83 | 26.39 | 26.39 | 77.60 |
| ICLR [27,39] | V4D | 8 | 40.00 | 88.89 | 100 | 89.06 | 80.52 | 52.50 | 60.00 | 63.41 | - | - | - | - | - |
| ICLR [27,39] | V4D | 16 | 44.00 | 92.59 | 100 | 92.19 | 79.22 | 50.00 | 60.00 | 63.41 | - | - | - | - | - |
| ICLR [27,39] | V4D | 32 | 48.00 | 83.33 | 100 | 90.63 | 80.52 | 67.50 | 62.22 | 63.41 | - | - | - | - | - |
| CVPR [27,40] | TPN | 8 | 44.00 | 85.19 | 100 | 100 | 90.91 | 52.50 | 71.11 | 58.54 | - | - | - | - | - |
| CVPR [27,40] | TPN | 16 | 40.00 | 92.59 | 100 | 100 | 90.91 | 57.50 | 53.33 | 63.41 | - | - | - | - | - |
| CVPR [27,40] | TPN | 32 | 52.00 | 81.48 | 100 | 100 | 88.31 | 70.00 | 48.89 | 60.98 | - | - | - | - | - |
| CVPR [29,41] | SCNet50 | - | - | - | - | 95.06 | 92.12 | - | 85.94 | - | 68.89 | 68.47 | 22.22 | 50.72 | 67.20 |
| IEEE-CVF [29,42] | ResNeSt-50 | - | - | - | - | 97.38 | 91.44 | - | 90.58 | - | 80.00 | 78.03 | 30.56 | 56.52 | 65.60 |
| CVPR [29,41] | SCNet101 | - | - | - | - | 95.58 | 95.21 | - | 84.66 | - | 82.22 | 71.34 | 19.44 | 19.44 | 63.20 |
| IEEE-CVF [29,42] | ResNeSt-101 | - | - | - | - | 97.38 | 92.64 | - | 92.81 | - | 75.56 | 79.30 | 37.50 | 37.50 | 72.00 |
| ICCV [27,43] | MViT | 8 | 56.00 | 90.74 | 100 | 95.31 | 80.52 | 47.50 | 51.11 | 65.85 | - | - | - | - | - |
| ICCV [27,43] | MViT | 16 | 52.00 | 81.48 | 100 | 100 | 76.62 | 40.00 | 48.89 | 63.41 | - | - | - | - | - |
| ICCV [27,43] | MViT | 32 | 60.00 | 83.33 | 100 | 90.63 | 89.61 | 37.50 | 57.78 | 60.98 | - | - | - | - | - |
| NeurIPS [27,44] | TCP | 8 | 76.00 | 75.93 | 100 | 79.69 | 87.01 | 57.50 | 73.33 | 58.54 | - | - | - | - | - |
| NeurIPS [27,44] | TCP | 16 | 72.00 | 74.07 | 91.67 | 90.63 | 92.21 | 65.00 | 60.00 | 56.10 | - | - | - | - | - |
| NeurIPS [27,44] | TCP | 32 | 60.00 | 79.63 | 70.83 | 82.81 | 90.91 | 50.00 | 73.33 | 68.29 | - | - | - | - | - |
| EI-135 [29] | KFENet+-LFENet-w32 | - | - | - | - | 96.22 | 92.29 | - | 93.13 | - | 84.44 | 82.80 | 37.50 | 68.84 | 79.20 |
| EI-135 [29] | KFENet+-LFENet-w48 | - | - | - | - | 96.22 | 92.00 | - | 93.45 | - | 86.67 | 82.17 | 48.61 | 67.39 | 80.00 |
| EI-141 [27] | DF2-Net | 8 | 56.00 | 79.63 | 100 | 93.75 | 90.91 | 62.50 | 71.11 | 56.10 | - | - | - | - | - |
| EI-141 [27] | DF2-Net | 16 | 44.00 | 94.44 | 100 | 98.44 | 93.51 | 75.00 | 53.33 | 63.41 | - | - | - | - | - |
| EI-141 [27] | DF2-Net | 32 | 56.00 | 83.33 | 100 | 98.44 | 92.21 | 65.00 | 42.22 | 58.54 | - | - | - | - | - |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Teterja, D.; Garcia-Rodriguez, J.; Azorin-Lopez, J.; Sebastian-Gonzalez, E.; Nedić, D.; Leković, D.; Knežević, P.; Drajić, D.; Vukobratović, D. A Video Mosaicing-Based Sensing Method for Chicken Behavior Recognition on Edge Computing Devices. Sensors 2024, 24, 3409. https://doi.org/10.3390/s24113409
