Light and Displacement Compensation-Based iPPG for Heart-Rate Measurement in Complex Detection Conditions

Abstract

1. Introduction

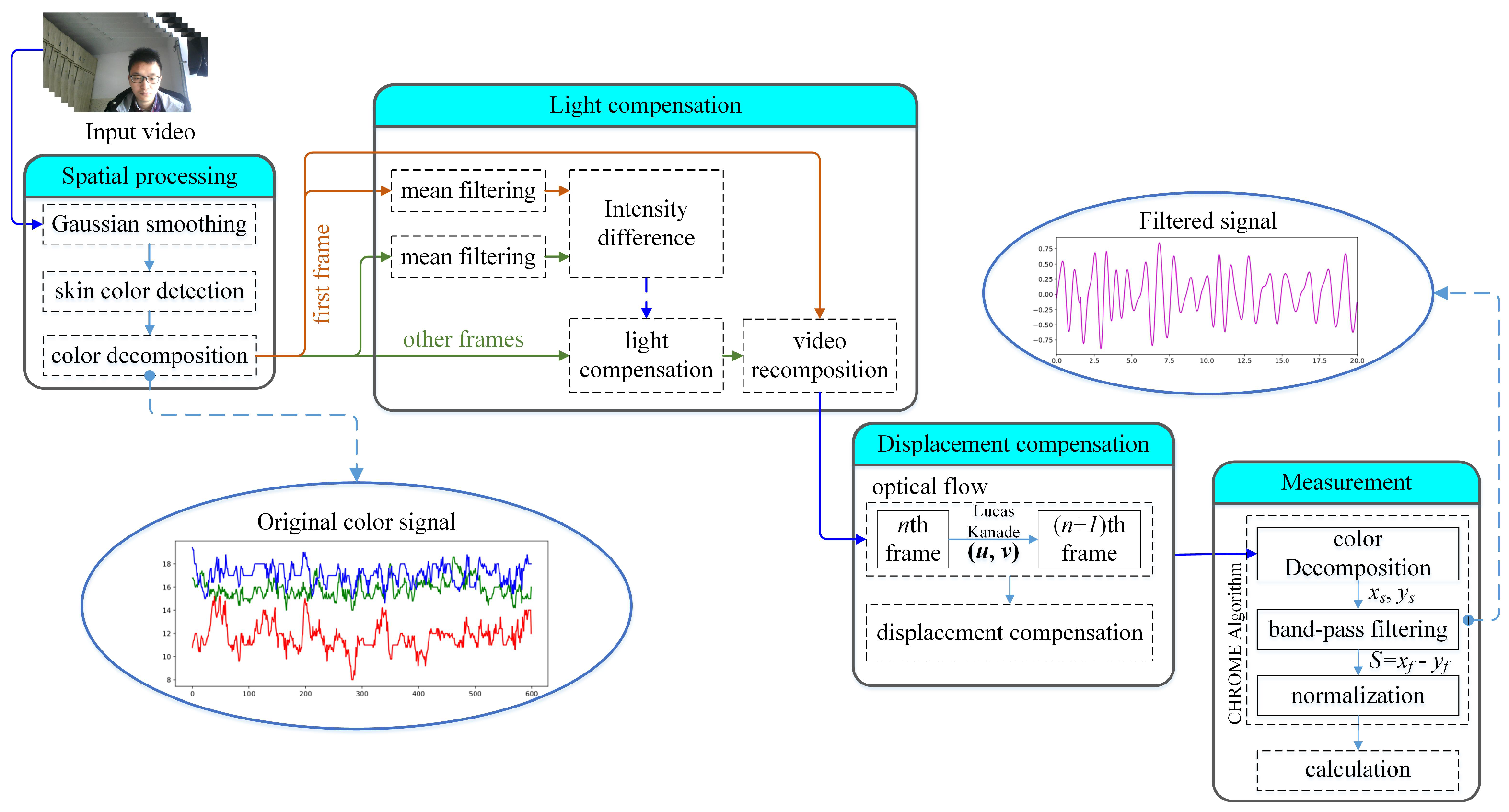

2. Overview of the Heart-Rate Measurement

3. Spatial Processing

3.1. Gaussian Smoothing

3.2. Skin-Color Detection Color Decomposition

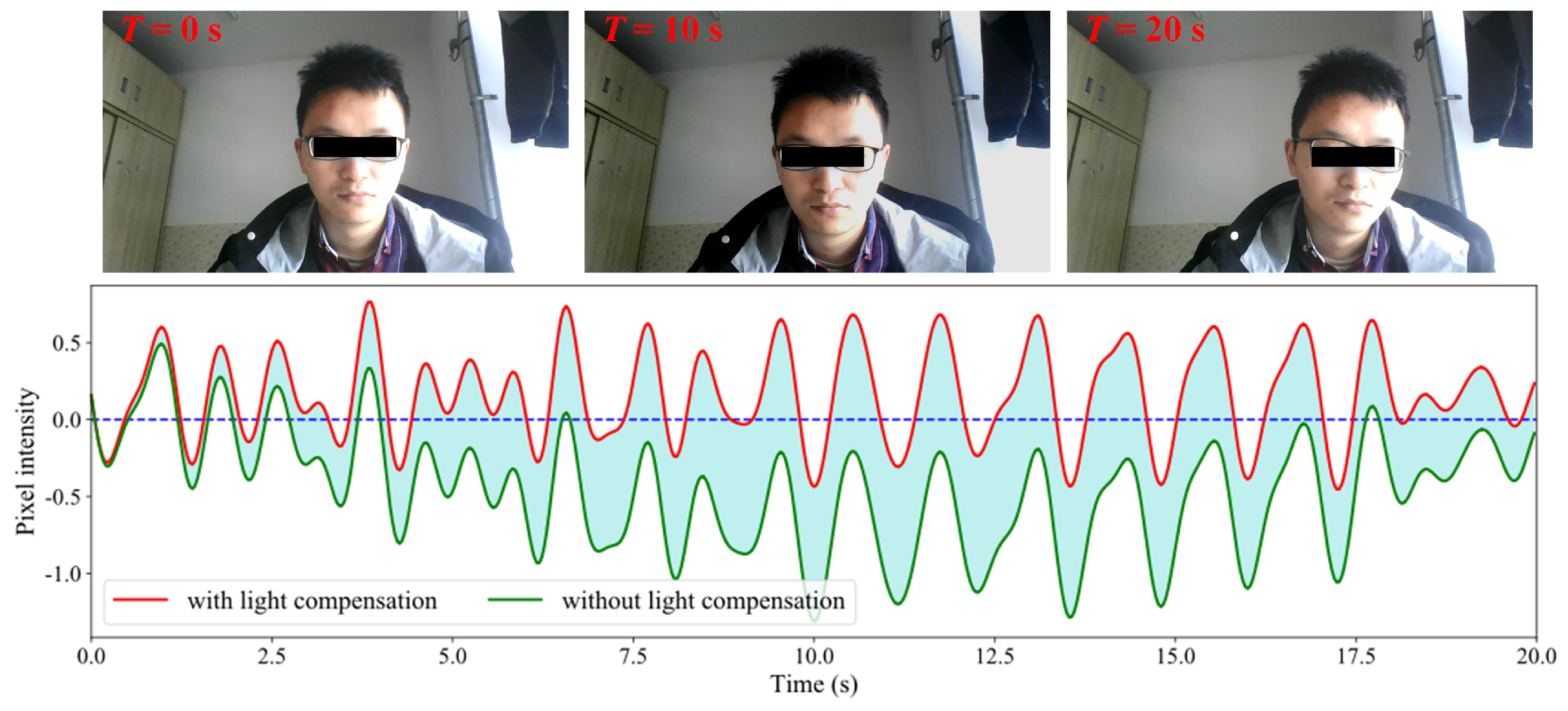

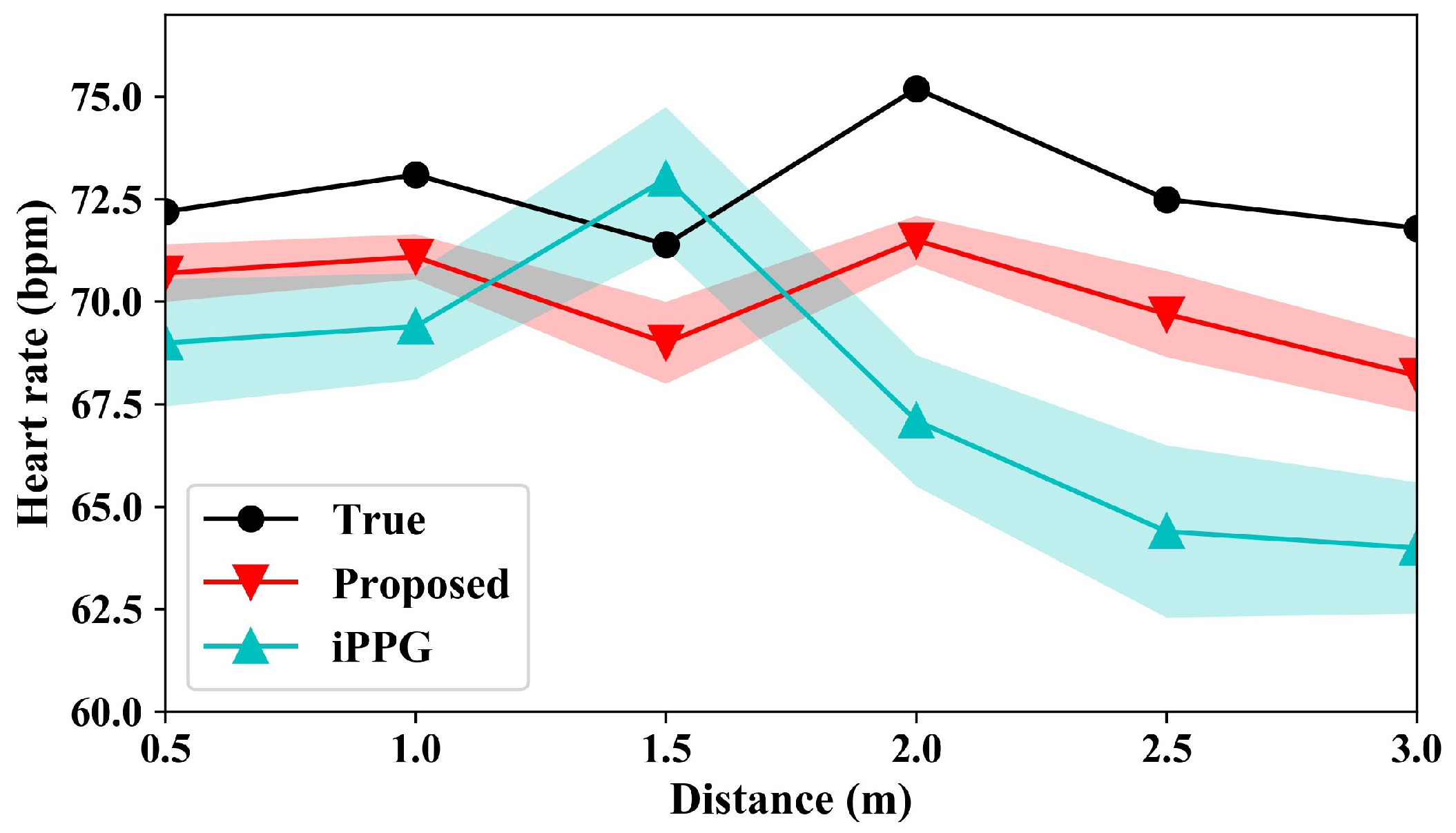

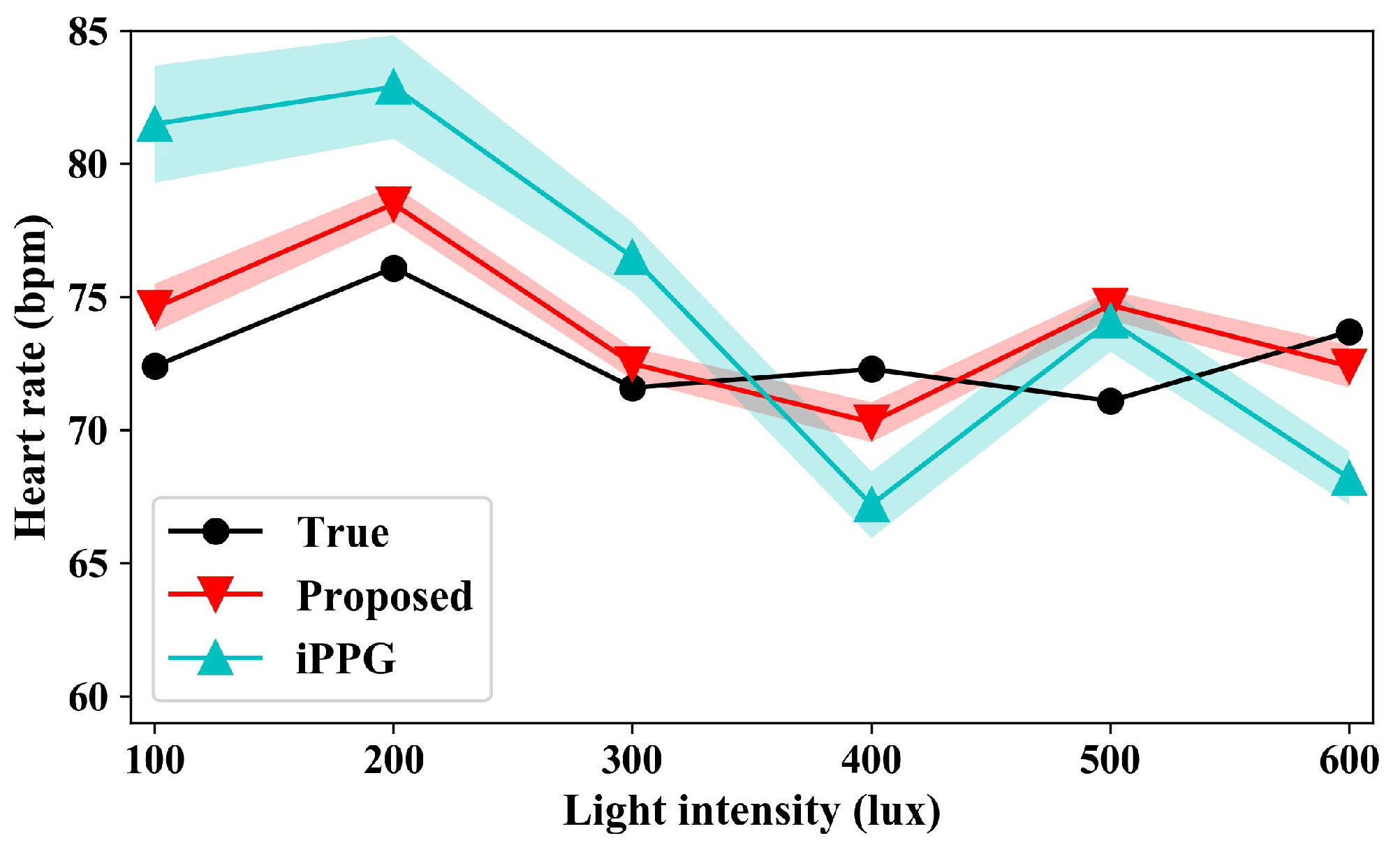

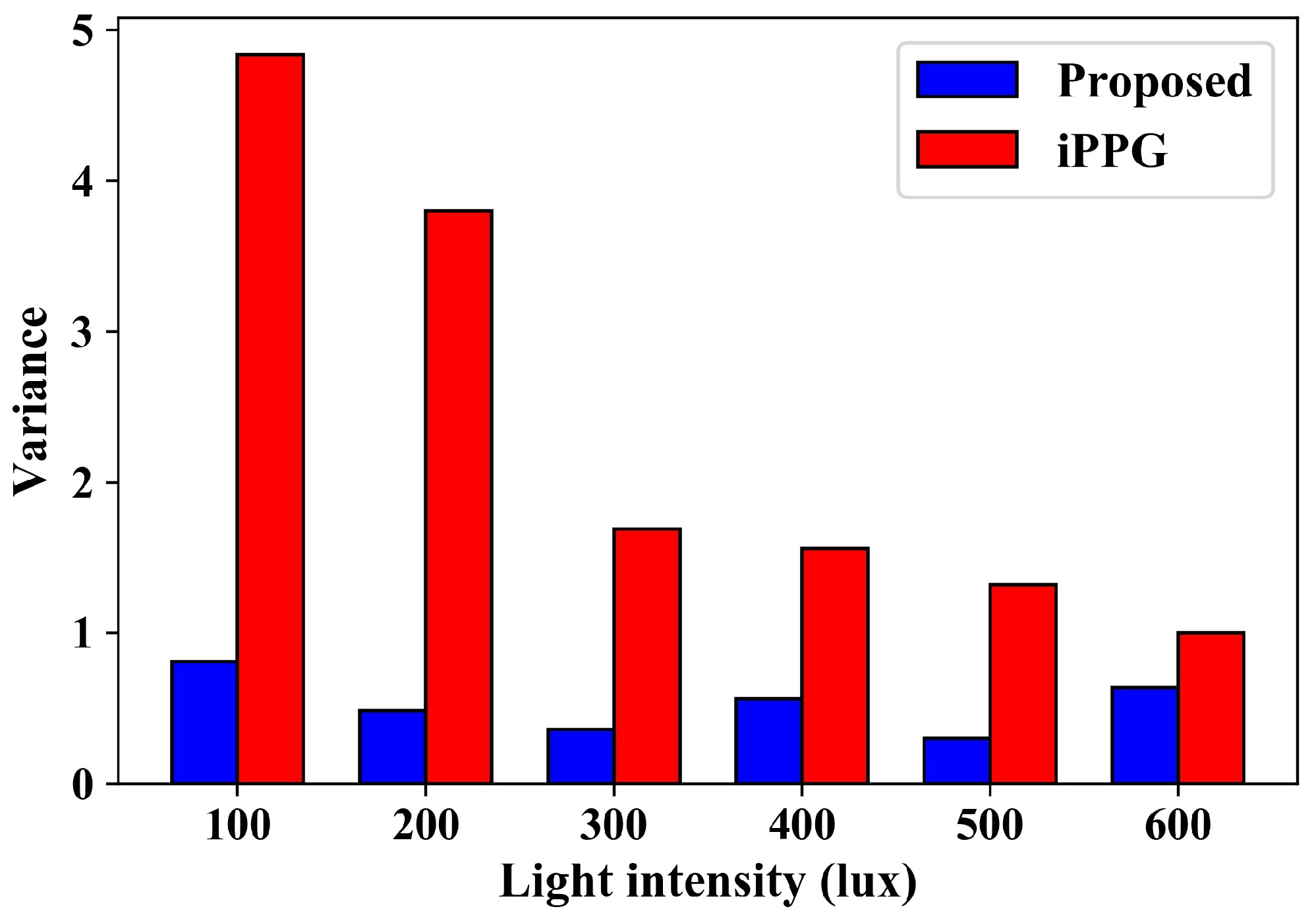

4. Light Compensation

4.1. Light-Compensation Algorithm

| Algorithm 1: Light-compensation sub-algorithm |
| --- |
| Input: R = {R1, …, RN}, G = {G1, …, GN}, B = {B1, …, BN}; |
| // N represents the number of frames |
| Output: |

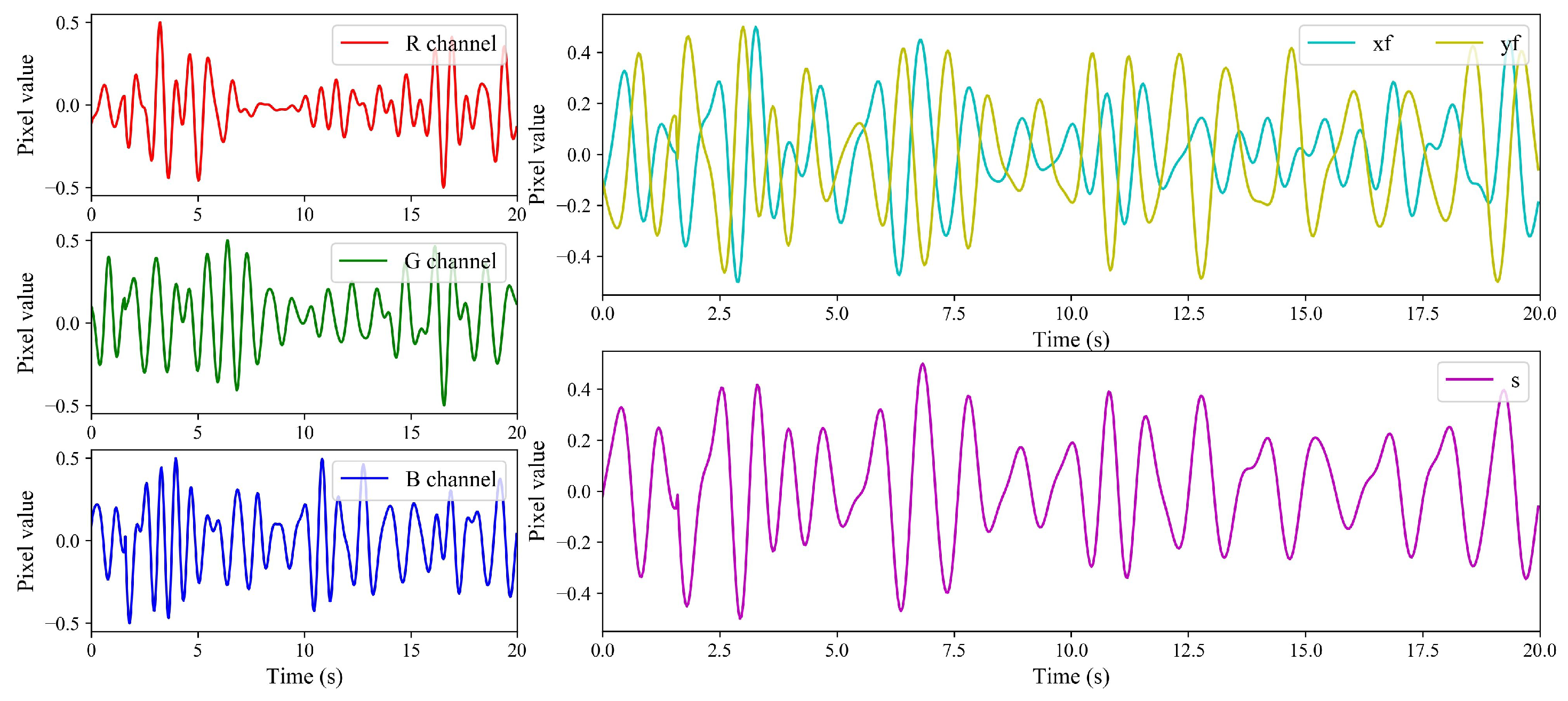

4.2. Results of Light Compensation

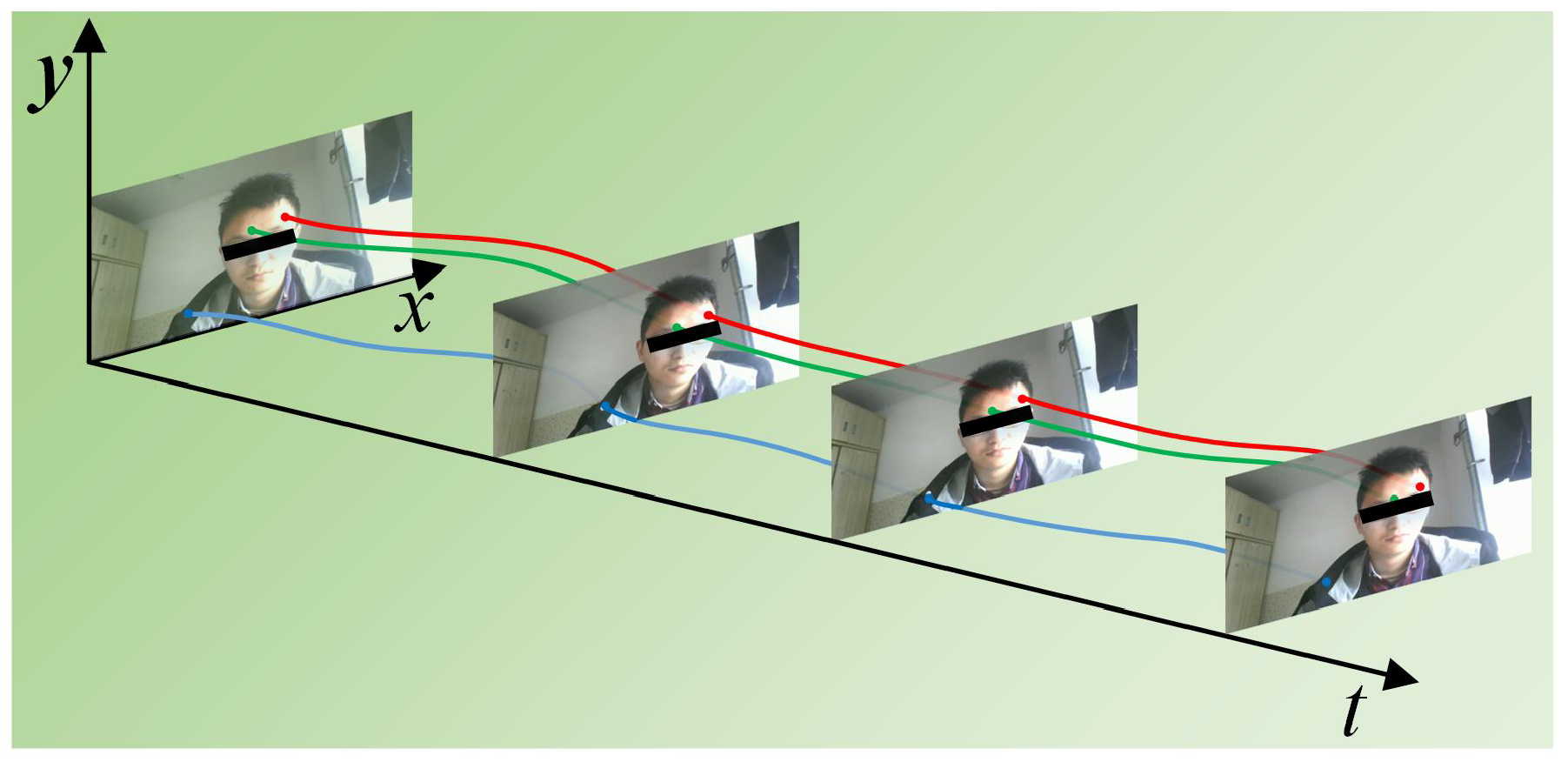

5. Displacement Compensation

6. Heart-Rate Measurement

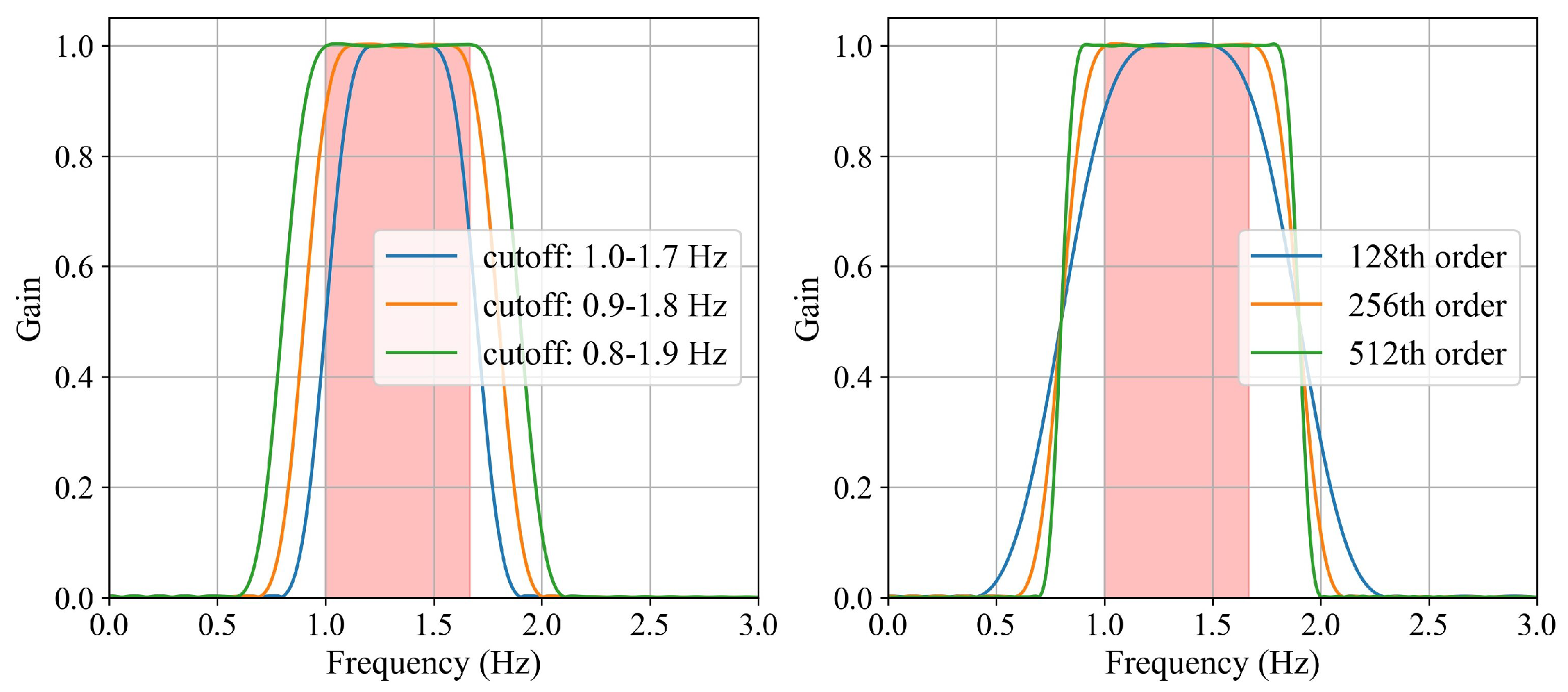

6.1. Design of the Filter

6.2. Color Decomposition

6.3. Heart-Rate Measurement

7. Experiment

8. Discussion and Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| bpm | beats per minute |
| iPPG | imaging photoplethysmography |
| SNR | signal-to-noise ratio |
| ICA | independent component analysis |
| PCA | principal component analysis |
| CHROME | Chrominance-Based |
| fps | frames per second |
| LK | Lucas–Kanade |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bi, S.; Wang, H.; Zhang, S. Light and Displacement Compensation-Based iPPG for Heart-Rate Measurement in Complex Detection Conditions. Sensors 2024, 24, 3346. https://doi.org/10.3390/s24113346

Bi S, Wang H, Zhang S. Light and Displacement Compensation-Based iPPG for Heart-Rate Measurement in Complex Detection Conditions. Sensors. 2024; 24(11):3346. https://doi.org/10.3390/s24113346

Chicago/Turabian StyleBi, Shubo, Haipeng Wang, and Shuaishuai Zhang. 2024. "Light and Displacement Compensation-Based iPPG for Heart-Rate Measurement in Complex Detection Conditions" Sensors 24, no. 11: 3346. https://doi.org/10.3390/s24113346

APA StyleBi, S., Wang, H., & Zhang, S. (2024). Light and Displacement Compensation-Based iPPG for Heart-Rate Measurement in Complex Detection Conditions. Sensors, 24(11), 3346. https://doi.org/10.3390/s24113346