1. Introduction

Data fusion can utilize the information of multiple different data sources to complement each other, so the application of data fusion algorithms can suppress negative environmental factors and get more accurate results [

1,

2]. Data fusion algorithms in combination with various algorithms can achieve better results [

3,

4], so they have been widely used in various fields [

5,

6]. However, data fusion can only be applied to linear systems where all noises are independent of each other; if it is applied to a nonlinear system, it needs to be combined with the extended Kalman filter or the unscented Kalman filter [

7]. If noises are correlated, they need to be decoupled by linear changes [

8].

It is necessary to first process the original data, which includes removing abnormal data, filtering system deviations, and lossless compression of data. Abnormal data is removed using the Grubbs criterion. Filtering bias uses bias estimation algorithms [

9,

10], and in distributed fusion, if data need to be restored in the data center, lossless compression is required [

11].

Before conducting multi-sensor data fusion, each sensor can also use various algorithms to estimate the true value (called the estimation value), which can improve the fusion accuracy because the estimation value has higher accuracy than the measurement value.

When the measured physical quantity does not change over time, if there is sufficient understanding of the system, linear minimum mean square error estimation is the best method. This algorithm can find a linear function that minimizes mean square error through a single measurement value [

12,

13,

14]. Otherwise, it is best to use least squares estimation [

15,

16] and batch estimation [

17]. When the measured physical quantity changes over time, it can only be suitable to use Kalman filtering [

18,

19].

Adaptive weighted data fusion is a widely used multi-sensor data fusion algorithm with a unique optimal weighting factor that enables the fusion estimation value to have a lower estimation variance than the measurement variance of all sensors, but it requires prior knowledge of the measurement variance to run [

20]. However, its combination with a partially unbiased estimation algorithm (hereinafter referred to as unbiased estimation data fusion) not only further reduces the estimation variance but also removes the need for prior knowledge.

Unbiased estimation data fusion has two shortcomings. The first one is that unbiased estimation does not mean that the estimation results must be reliable. According to the Gauss-Markov theorem, the unbiased estimation variance has a lower bound, and when the lower bound itself is very large, even if the unbiased estimation variance is minimized, the difference between the estimation results and the true value (hereinafter referred to as estimation error) may not be within the acceptable range [

21]. The other one is that the optimal weighting factor is not necessarily optimal; the optimal weighting factor is calculated according to the measurement variance; a smaller measurement variance does not necessarily mean a smaller measurement error, so in some cases, the optimal weighting factor is not optimal. Therefore, this paper proposes a biased estimation data fusion algorithm that also does not require priori knowledge, which uses unbiased estimation values to construct biased estimation values with lower estimation errors and calculates the optimal weighting factor according to the estimation error to ensure its optimality.

This paper is organized as follows:

Section 2 reviews some existing unbiased estimation data fusion algorithms,

Section 3 describes the shortcomings of the existing algorithms and the feasibility of the improved methods,

Section 4 describes the specific implementation of the algorithms in this paper,

Section 5 compares the performance of the different algorithms, and

Section 6 summarizes the entire content and provides prospects for the future.

2. Unbiased Estimation Data Fusion

When N sensors are measuring an invariant physical quantity and all the sensor nodes in a network measure the observed physical quantity at the same time, the measurement equation is:

where

is the unknown quantity to be measured,

is the true value of

, and

is the discrete time indicator,

is the measurement value of the

ith sensor at the moment

,

is the measurement matrix of the

ith sensor (the

are often unit matrices),

is the measurement noise,

and are independent of each other, and

be called measurement variance. Moreover, according to the additivity of the normal distribution,

.

In this system, only is a known quantity; how one can use to calculate the true value of ? Since the measurement equations are designed for multiple sensors and the measurement variances may vary, adaptive weighted data fusion is often used to solve such problems, but adaptive weighted data fusion needs prior knowledge of the measurement variance of all the sensors, and in practice, the measurement covariance can be obtained by experiments.

2.1. Unbiased Estimation

Unbiased estimation refers to: if the mathematical expectation of an estimator is equal to the true value of the estimated parameter (this property is called unbiasedness), then this estimator is called the unbiased estimation of the estimated parameter. The mathematical expectation of the estimator constructed by unbiased estimation is equal to the true value of the estimated parameter, so using unbiased estimators instead of measurement values will not cause an increase in error. Unbiased estimation of sample variance can be used to replace the measurement variance of sensors, allowing adaptive weighted data fusion to operate without knowing the measurement variance of sensors. There are two commonly used unbiased estimation algorithms, as follows:

Least squares estimation:

Assuming that a particular sensor collects n data over a period of time,

, the estimation value, and

, estimation variance, are given as follows:

Batch estimation:

Assuming that a particular sensor collects n data over a period of time, these n data are randomly divided into two groups, where the data in the

ith groups are:

,

.

, the sample mean, and

, sample variance, are given as follows:

According to the theory of batch estimation in statistics, the optimal estimation value

and the optimal estimation variance

of these 2 sets of data are given as follows:

2.2. Adaptive Weighted Data Fusion

Adaptive weighted data fusion principle: assume that there are

sensors working on the same unknown quantity

(the true value is

) is measured, and the measurement value of the

ith sensor is

. The measurement variance of the

ith sensor is

, and the measurement data of the

ith sensor follows

, then the unbiased estimator

is given as follows:

Since

is an unbiased estimator, so that

In order to find

that minimizes

(call this

as the optimal weighting factor), the auxiliary function that is constructed according to the Lagrange multiplier method is given as follows:

According to multivariate extremum theory, it is known that the solution of the following partial differential equation makes the estimation variance

minimized, as follows:

The simplified formula is given as follows:

Thus, the formula for

are given as follows:

So,

and

are given as follows:

When adaptive weighted data fusion is combined with other unbiased estimation algorithms, it is possible to replace

and

in Equation (15) with an unbiased estimation value and an unbiased estimation variance. The

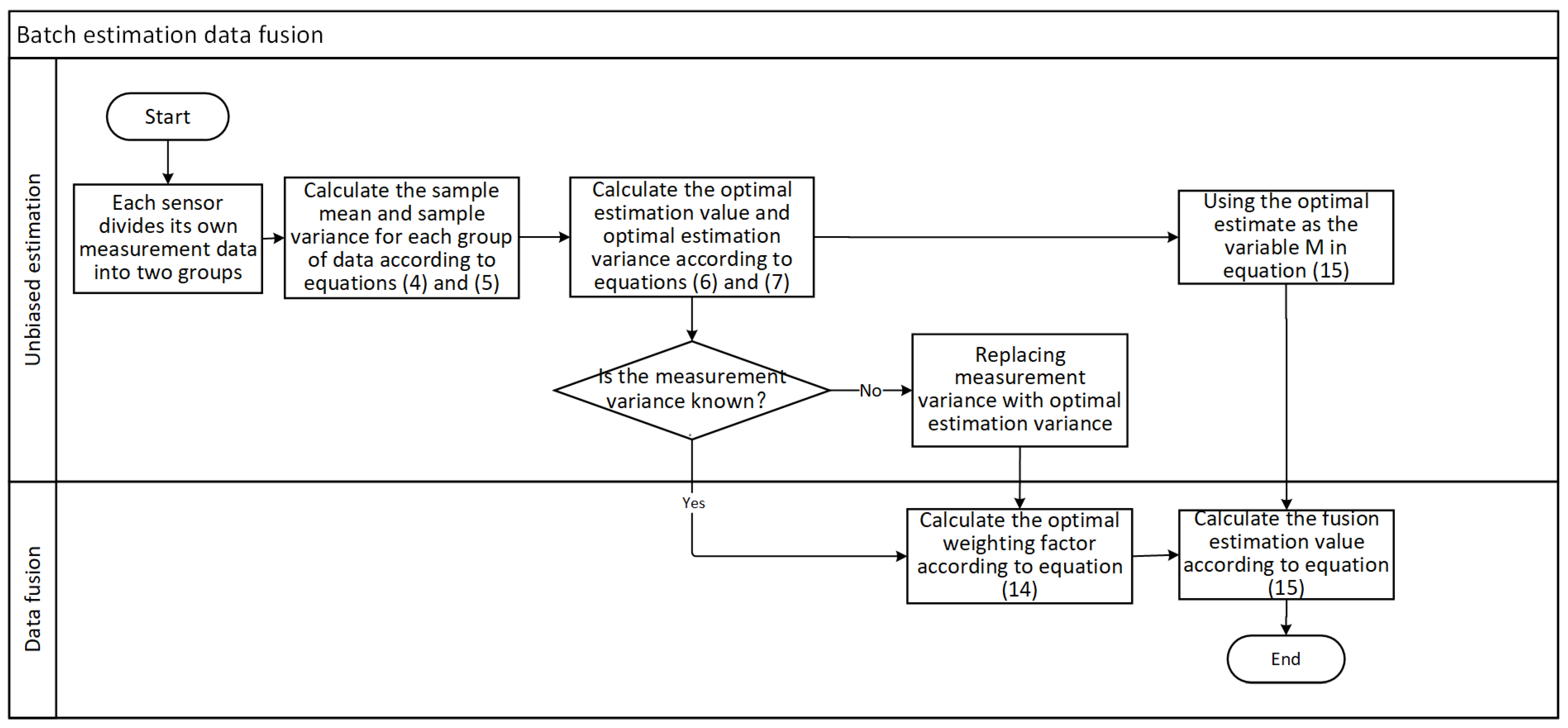

Figure 1 shows the flowchart for batch estimation data fusion, and the flowchart for other unbiased estimation data fusion is more or less the same.

3. Rationale

Statistical inference is a statistical method of inferring the whole through samples, and there are two kinds of errors in statistical inference: systematic error and random error. Unbiased estimation has no systematic error because of its unbiased nature, but there is still random error, so its estimation results are not necessarily reliable.

The optimal weighting factor is calculated based on the measurement variance alone, but a smaller measurement variance does not mean a smaller measurement error. Although the measurement variance of the data measured by different sensors varies, the mathematical expectations are all equal, so, in practice, it is easy to have a smaller measurement variance but a larger measurement error.

3.1. Biased Estimators

Theorem 1. The error of the unbiased estimator can be further optimized.

Proof of Theorem 1. Assuming that the true value of the measured unknown quantity

is

, the sensor measurement data follows

.

is an unbiased estimator constructed using a linear combination of the sensor measurement data, and letting the estimation variance of

be

, then

, defining the estimation error

as follows:

is easy to obtain, and

follows

. Assuming that the estimation error is tolerable when

, the probability that the estimation error is tolerable is:

If , we obtain: , which means that for any , there is about a 31.7% chance that is larger than the estimation standard deviation , so that even if is very small, may be able to be reduced further. □

Theorem 2. A biased estimation value with a smaller error can be constructed from an unbiased estimation value.

Proof of Theorem 2. Assuming unbiased estimator

follows

, the biased estimator corresponding to

is defined as

and

, where

is called offset, which is a nonzero variable. The following results can be obtained:

Because is not equal to the true value , B is a biased estimator.

Assuming that

is a specific estimate of

, its estimation error is

and

.

is the offset corresponding to

and

, and the biased estimator corresponding to

is

and

. The estimation error of

is

and

, so:

So, when and , . In other words, when the sign of is opposite to and , the estimation error of biased estimation is smaller than that of unbiased estimation. □

3.2. Optimal Weighting Factors

Theorem 3. In some cases, the optimal weighting factor is not optimal.

Proof of Theorem 3. Suppose there are two sensors and that simultaneously measure the unknown quantity (the true value is ). Their measurement variances are and , and they satisfy . Their measurement data are and . For adaptive weighted data fusion, , the weights of , will always be greater than , the weights of , since < .

Define

, the measurement error (unlike

,

only considers the magnitude of the error, not the sign of the error), as follows:

So

,

. If

, then

, which implies that the estimation error of the fusion estimation value computed from optimal weighting factors is not minimal. So, when

<

but

, the optimal weighting factor is not optimal, and the probability is given as follows:

It can be shown that , which indicates that the weights of any two sensors may not be optimal. The above proof uses measured values and measured variances, but replacing them with estimated values and estimated variances is equally valid. □

Theorem 4. It is optimal to compute the optimal weighting factor using the measurement error.

Proof of Theorem 4. Suppose there are N sensors measuring the unknown quantity

(the true value is

), their measurement values are

, and their measurement errors are

; moreover, there are

. The optimal weighting factor calculated from the measurement error is

, because

, then

, so the corresponding fusion estimation value

is as follows:

In this paper, only one case is proved, which has two weights different from , and the proof can be easily generalized to other cases.

Counterfactual: suppose that when the optimal weighting factor is

and

, the fusion estimation value

is more accurate than

, where

is given as follows:

The common term of

and

is

, and

,

where

. So

and

become this form:

Because

, so

and set

. Substituting

for

and

, then we get the following equation:

Because

, so the larger

and

are, the less accurate

and

are. Because

and

, so

=

and

=

. Set

is given as follows:

So

and

. The derivative of

is given as follows:

So, the larger is, the smaller . Because and , so , , and , is more accurate than . Contradicting the hypothesis, the optimal weighting factor calculated from the estimation error is better. The above proof uses measured values and measured variances, but replacing them with estimated values and estimated variances is equally valid. □

4. Algorithm Implementation

The algorithm in

Section 4.1 only uses measurement data from a single sensor, so it can be implemented in a distributed manner. The algorithms in

Section 4.2 and

Section 4.3 include data fusion algorithms, so they can only be implemented using centralized methods.

4.1. Unbiased Estimation

Suppose that N sensors simultaneously measure the unknown quantity

(the true value is

µ), and all the sensor nodes in a network measure the observed physical quantity at the same time. For any of the sensors, if it collects 10 data over a period of time, in order from smallest to largest:

, then construct the unbiased estimators as follows:

Assuming that the sensor follows , theoretically, and . Because ~ are data sorted in order of size, these estimators have lower estimated variances. Through experiments, it was found that the estimated variances of are approximately . Therefore, is independent of each other and has an estimated variance smaller than the measured variance.

, the mean of estimation values, and

, the estimation variance, are given as follows:

An adaptive weighted data fusion algorithm is used to obtain

, the unbiased estimation value, and

, the unbiased estimation variance. And the specific formula is as follows:

Because is the least squares estimator, theoretically, the accuracy of the fused estimate will be higher than that of the least squares estimate.

If the number of measurement data collected by a sensor within a certain period is m and m is not equal to 10, the unbiased estimator can be constructed according to the following rules:

Each group consists of at least two measurement values, and each group does not use duplicate measurement values. The unbiased estimator corresponding to each group is the mean of the measurement values within the group.

When m is even (m = 2k), for any measurement value , it is necessary to ensure that and are in the same group.

When m is odd (m = 2k + 1), for any measurement value , it is necessary to ensure that and are in the same group. In addition, it is also necessary to ensure that , , and are in the same group.

Add an additional group that includes all measured values, such as in Equation (30).

4.2. Biased Estimation

, the size of the offset , needs to be determined first. For the ith sensor, define its unbiased estimation value and unbiased estimation variance as and . The fusion estimation value and the fusion estimation variance of these unbiased estimation values are computed by adaptive weighted data fusion algorithm.

Define

as the degree of deviation of the

ith unbiased estimation value from the true value. And

is given as (use

replaces true value) follows:

Define as the coefficient of , where the size of , and the value of are determined by . When , , when , . Otherwise, . is used to control the size of the offset and avoid excessive offset.

Then, the sign of

needs to be determined.

takes a negative sign when

>

and a positive sign otherwise. So

is given as follows:

4.3. Data Fusion

The application of the adaptive weighted data fusion algorithm requires measuring variance, which is replaced by estimated variance in unbiased estimation data fusion. In biased estimation data fusion, the estimated variance is calculated based on the estimation error, and the design concept of the calculation formula comes from the Kalman gain. Define

as the biased estimation error of

, and

is given as follows:

The true value is unknown; use

instead of the true value. So

is given as:

Then, through 100,000 experiments, calculate

, mean of

, and

, mean of

, for different g-value intervals. The

and

over the three

intervals are shown in the

Table 1.

and

can be represented as follows:

So,

, which represents the biased estimation variance of

, is given as follows:

Thus,

, the optimal weighting factor, and

, the fusion estimation value, are given as follows:

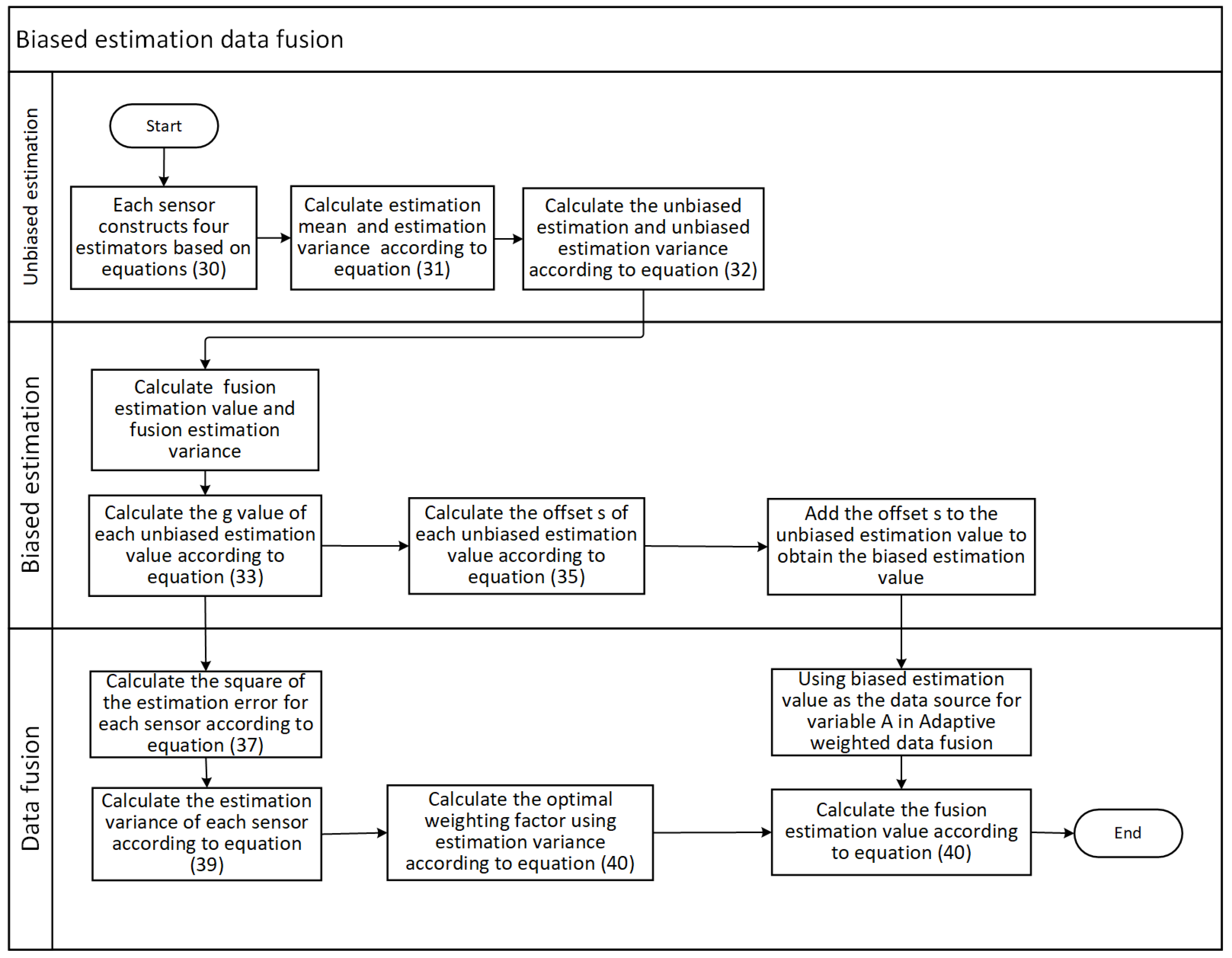

The flowchart of biased estimation data fusion is shown in

Figure 2:

5. Results

The simulation test is divided into two parts as follows: 1. a normal distribution white noise test (all noise follows a normal distribution), and 2. A uniform distribution white noise test (environmental noise is uniformly distributed white noise). The test items for the two tests are shown in

Table 2:

The testing mode is as follows: ten rounds of experiments are conducted for each combination of test items, and ten tests are conducted for each round. The average of the ten test results is used as the result of this round of experiments. Three sensors are used for each test, with 10 measurement data used for each sensor.

The test indicators are mean relative error (

) and mean square error (

).

is used to compare the average performance of the three algorithms, and

is used to compare the stability of the three algorithms. Their calculation formulas are as follows:

where

represents the number of test rounds and

represents the estimated value of the jth test in the

ith round. Relative improvement (

) is used to compare the performance differences between two algorithms, and the calculation formula for

is as follows:

where

and

represent the same indicator value (e.g.,

) for algorithms

and algorithms

. And, if the indicator has multiple values, calculate the mean of the indicator first, and then calculate

. If

is greater than 0, it means that algorithm

has improved

compared to algorithm

.

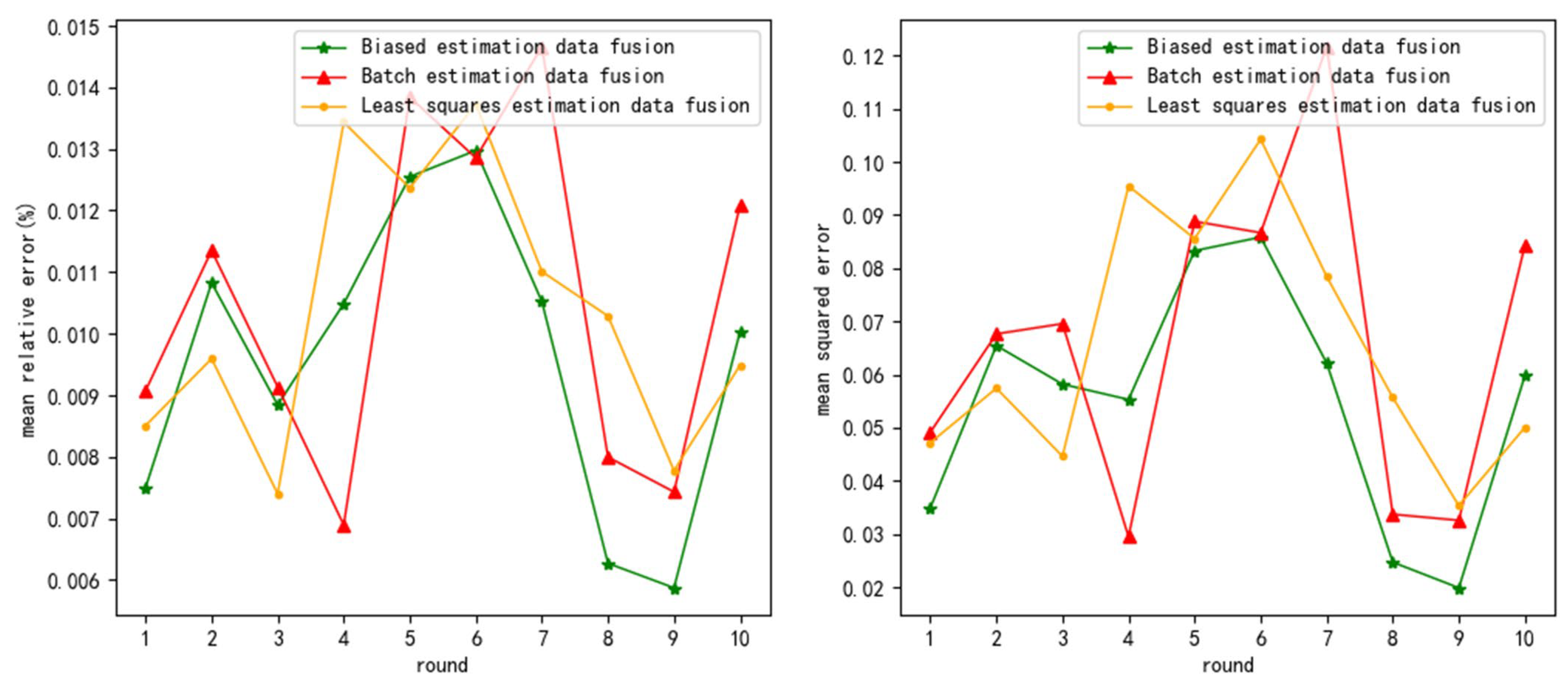

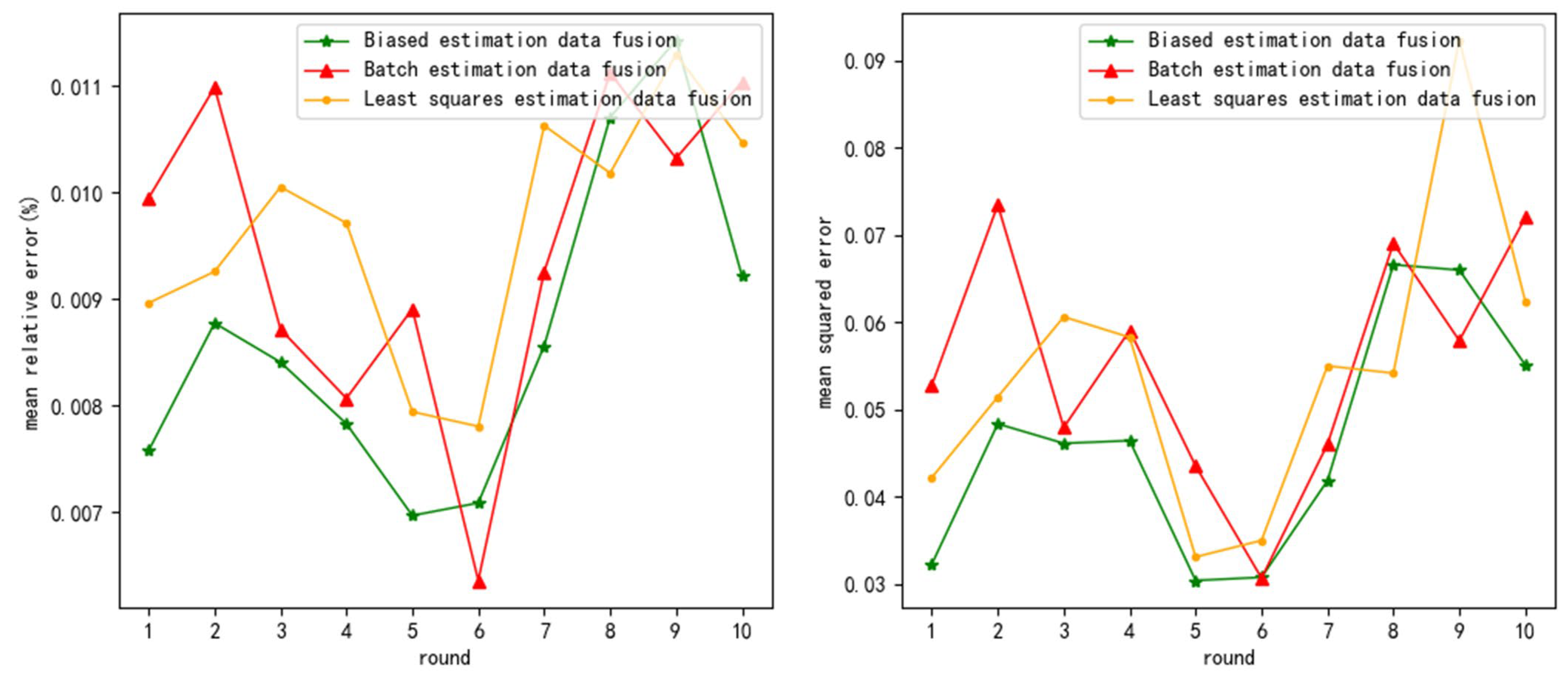

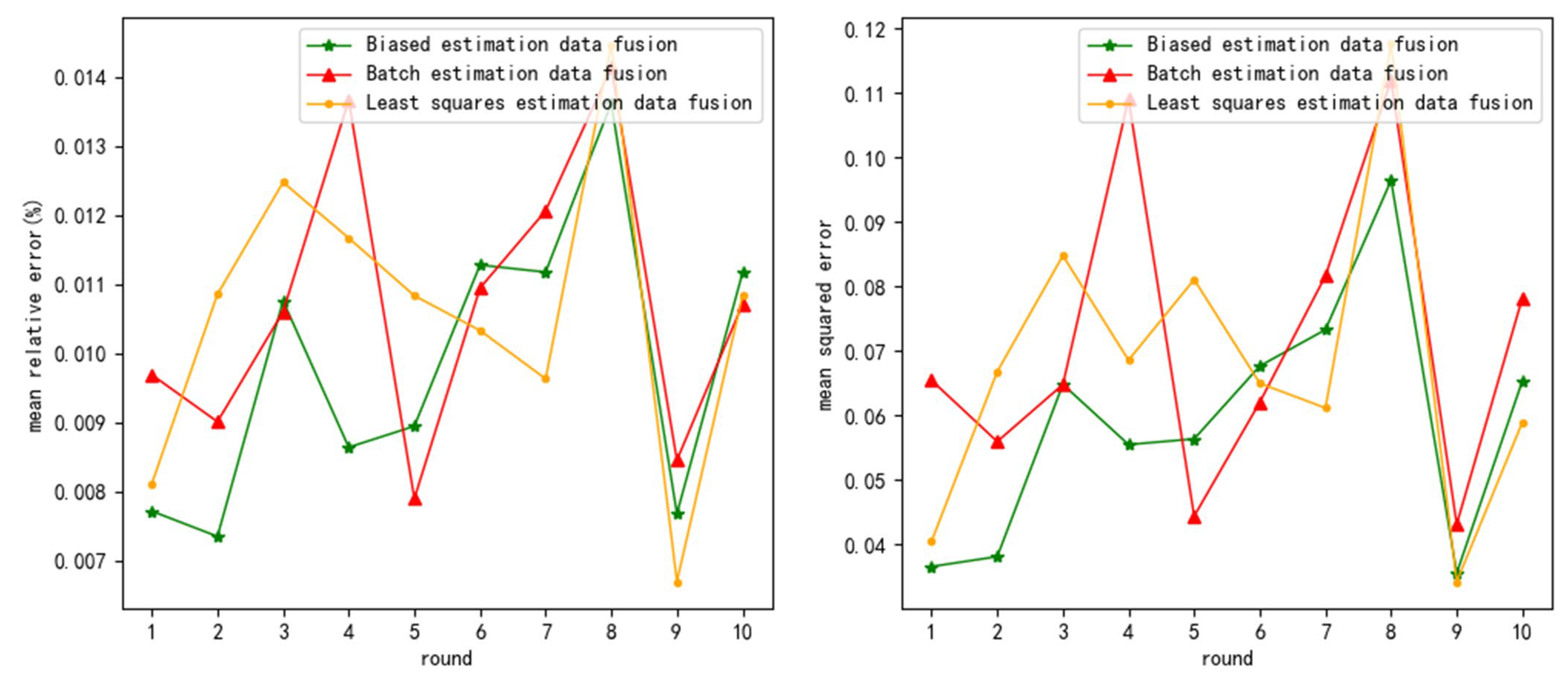

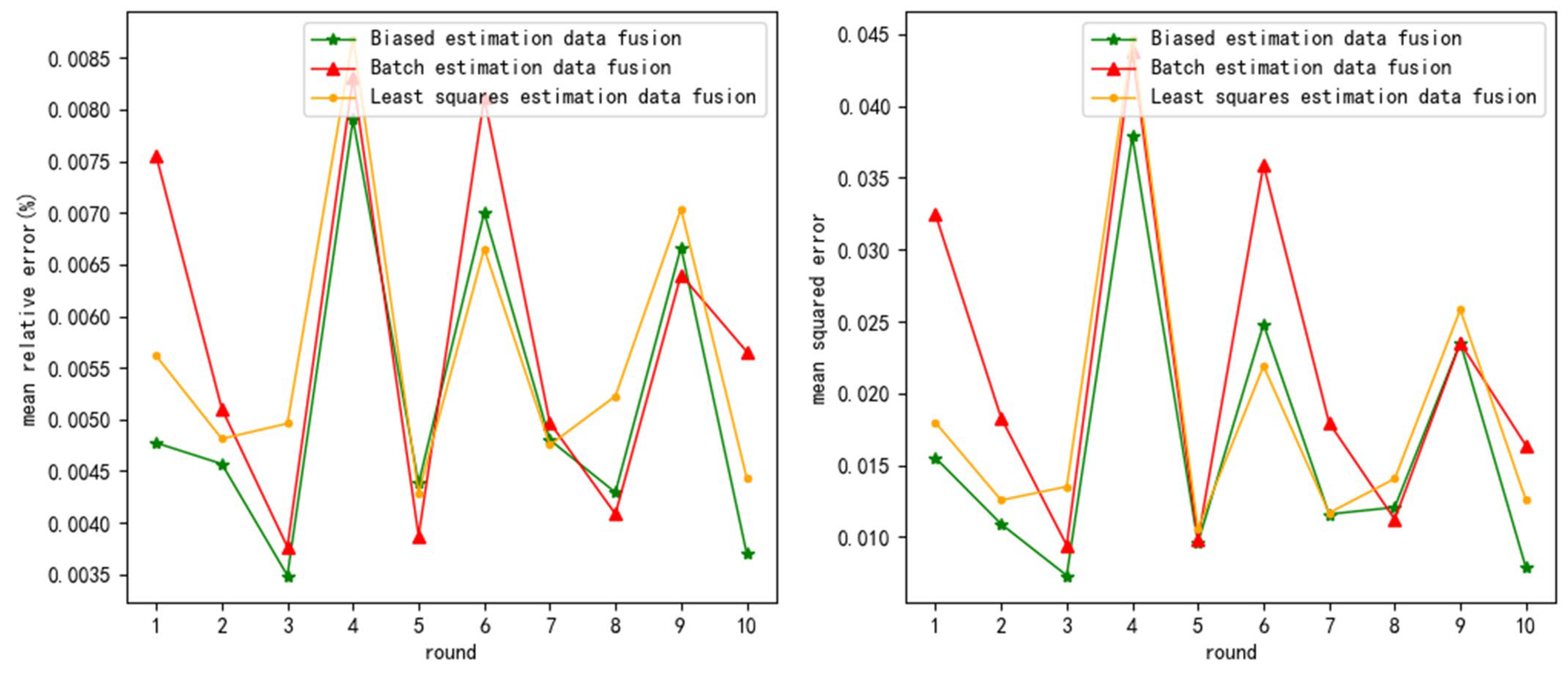

5.1. Normal Distribution White Noise Test

From

Table 3, it can be seen that the

of biased estimation data fusion (BED) decreased by an average of 9.25% and 10.26% compared to least squares estimation data fusion (LSD) and batch estimation data fusion (BTD). Therefore, BED has higher fusion accuracy.

From

Table 4, it can be seen that the

of BED decreased by an average of 16.24% and 20.28% compared to LSD and BTD. Therefore, the bed has higher stability.

And, as the noise variance increases, the average increases in and of LSD are 0.297% and 0.036, the average increases in and of BTD are 0.306% and 0.038, and the average increases in the and of BED are 0.269% and 0.03. So, and of BED decreased by an average of 11.59% and 18.85% compared to LSD and BTD, respectively. Therefore, BED has higher noise resistance.

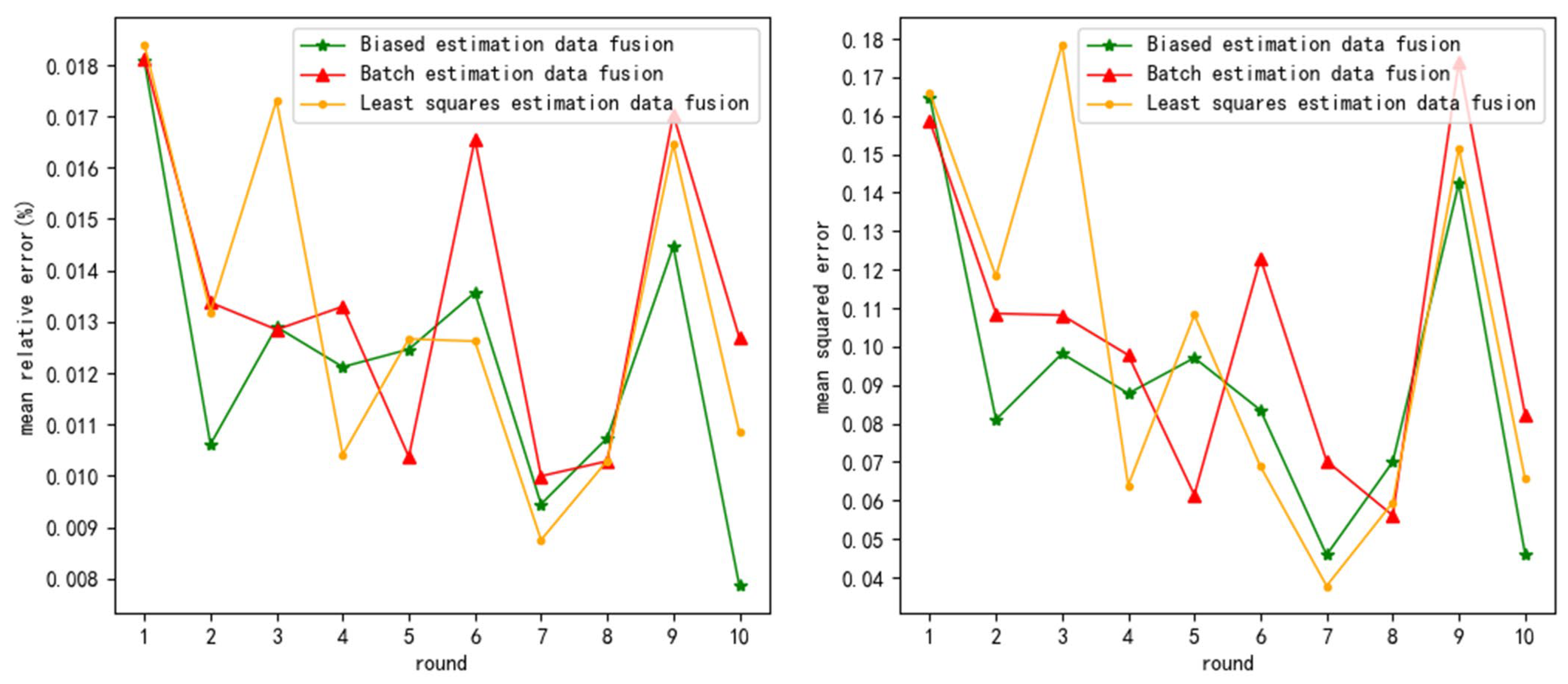

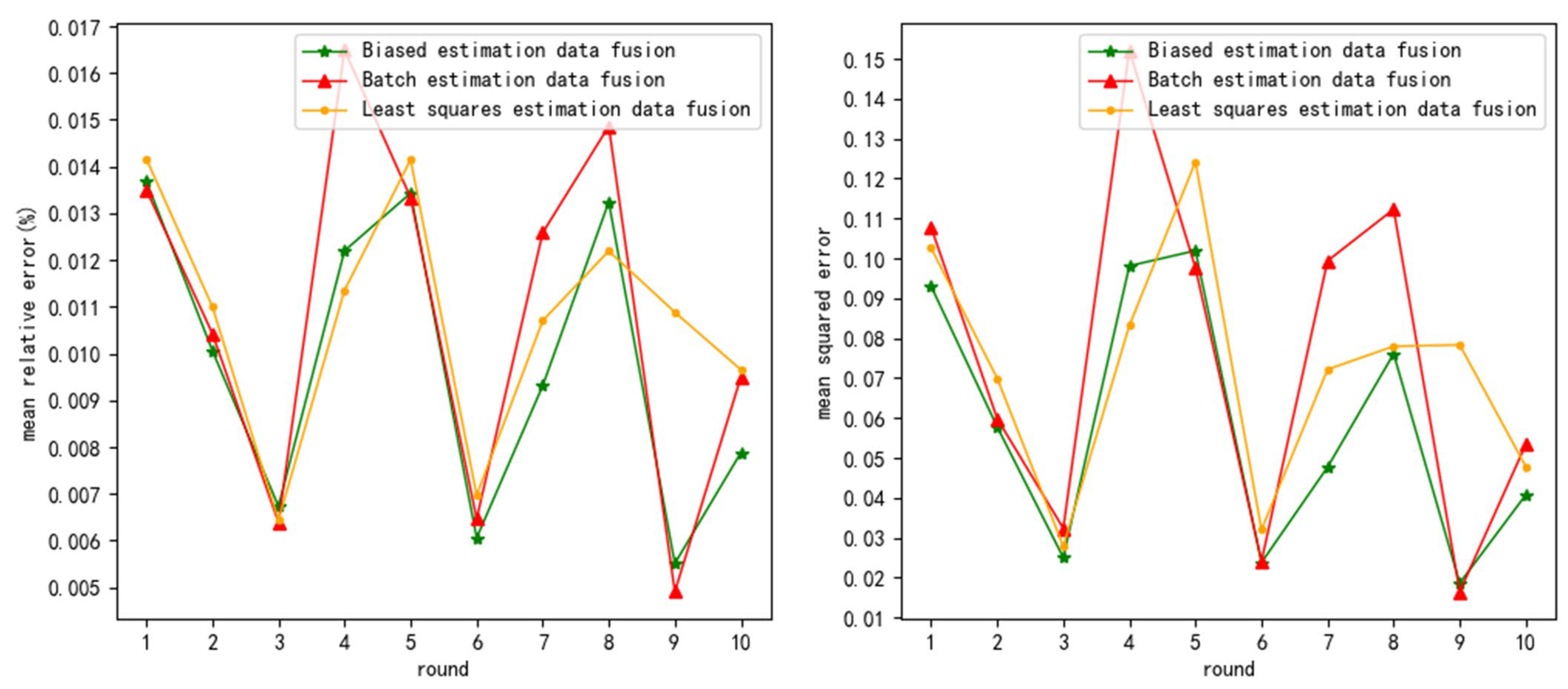

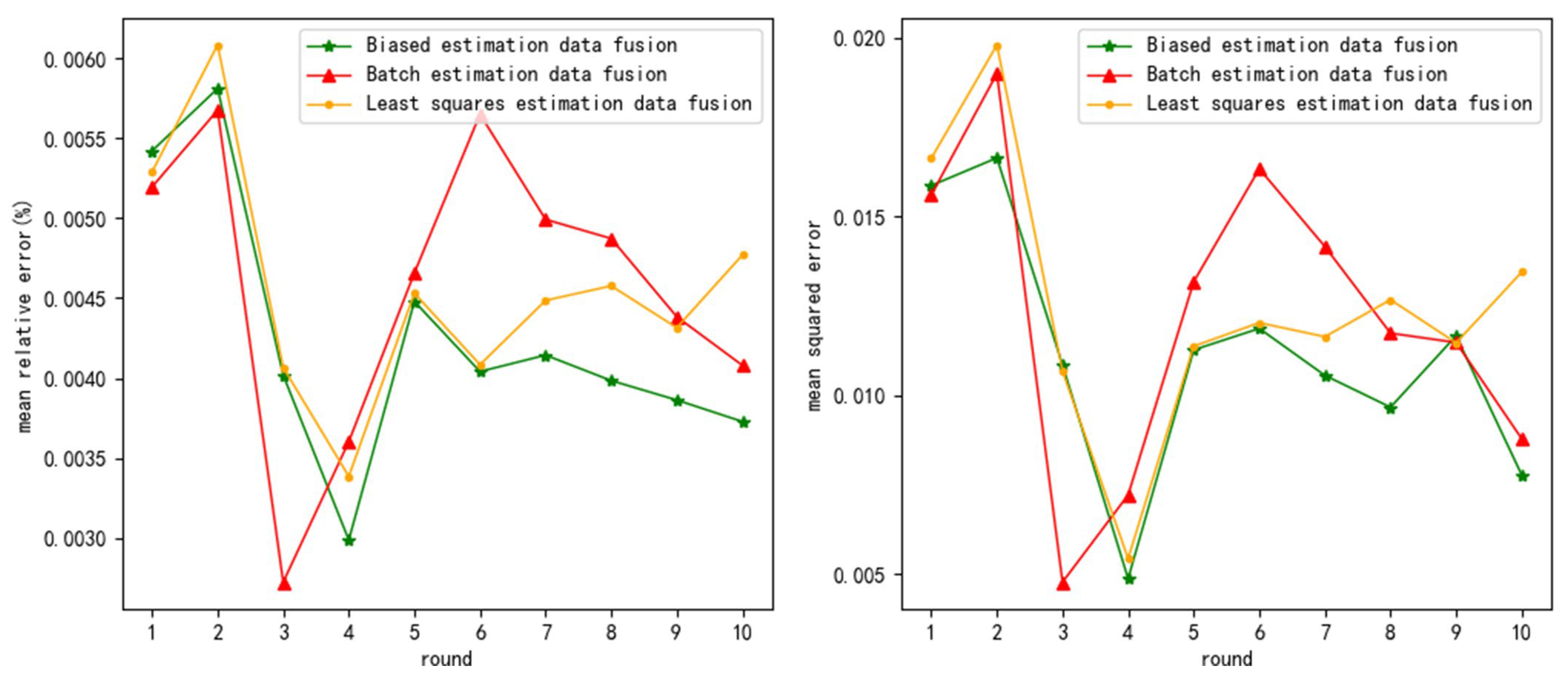

5.2. Uniform Distribution White Noise Test

From

Table 5, it can be seen that the

of BED decreased by an average of 8.56% and 6.58% compared to LSD and BTD. Therefore, BED has higher fusion accuracy, but the advantage of

is reduced by 22.39% compared to normal distribution white noise testing.

From

Table 6, it can be seen that the

of BED decreased by an average of 17.42% and 21.01% compared to LSD and BTD. Therefore, BED has higher fusion accuracy, but the advantage of

is increased by 5.23% compared to normal distribution white noise testing.

As the noise variance increases, the average increases in and of LSD are 0.305% and 0.03, the average increases in and of BTD are 0.298% and 0.031, and the average increases in and of BED are 0.278% and 0.024. So, and of BED decreased by an average of 8.21% and 21.29% compared to LSD and BTD. BED has higher noise resistance, and the comprehensive noise resistance is equivalent to that of normal distribution white noise testing.

In summary, even if the noise does not conform to a normal distribution, as long as the mean noise is 0, the algorithm can perform well.

6. Discussion

In this paper, an adaptive weighting data fusion algorithm based on biased estimation is proposed to address the shortcomings of unbiased estimation data fusion. The algorithm in this paper first further reduces the estimation error by biased estimation and then uses the estimation error instead of the measurement variance to compute the optimal weighting factor, which avoids the fact that the optimal weighting factor is not optimal in some cases. The final results of the simulation experiments show that this algorithm outperforms the original algorithm in terms of fusion accuracy, stability, and noise resistance, which are better than other algorithms.

The essence of this algorithm is to sacrifice unbiasedness for higher estimation accuracy, so the overall accuracy of biased estimation is significantly higher than that of unbiased estimation. However, due to the loss of unbiasedness, the performance of the algorithm is not as good as expected when using adaptive weighted data fusion, so there is still much room for improvement.