Abstract

The optimal design and construction of multispectral cameras can remarkably reduce the cost of spectral imaging systems and decrease the amount of image processing and analysis required. Multispectral imaging also provides effective imaging information through higher-resolution images. This study aimed to develop novel multispectral cameras based on Fabry–Pérot technology for agricultural applications such as plant/weed separation, ripeness estimation, and disease detection. Two multispectral cameras were developed, covering the visible (VIS) and near-infrared (NIR) ranges from 380 nm to 950 nm. A monochrome image sensor with a resolution of 1600 × 1200 pixels was used, and two multispectral filter arrays were developed and mounted on the sensors. The filter pitch was 4.5 μm, and each multispectral filter array consisted of eight bands. Band selection was performed using a genetic algorithm. For the VIS and NIR filters, maximum RMS values of 0.0740 and 0.0986 were obtained, respectively. The spectral response of the filters in the VIS range was good; in the NIR range, however, the spectral response of the filters decreased by half beyond 830 nm. In total, these cameras provided 16 spectral images in high resolution for agricultural purposes.

1. Introduction

Single-shot (also called snap-shot) multispectral (MS) cameras contain multispectral filter arrays (MSFAs) composed of several spectral filters (normally more than five). These narrow-band filters provide extra information compared to common RGB cameras [1], as they yield images in different regions of the electromagnetic spectrum [2]. The classical solution was to use a filter wheel in front of an image sensor: each time a bandpass filter was in front of the image sensor, an image was captured. Another solution was to use bandpass filters on different image sensors with different optics. The more recent solution, however, places an MSFA on top of the image sensor to provide an MS image in a single shot [3].

Previous research has shown that, for agricultural applications, most of the necessary information is obtained in the visible (VIS) and near-infrared (NIR) parts of the spectrum [4,5,6,7,8,9]. In this regard, much research has been conducted on the use of VIS and NIR spectral data, such as the development of spectral indices [10,11,12], the detection of plant diseases [13,14,15,16], weed detection [7,17,18], nutrient content estimation [19,20], monitoring water stress [21], phenotyping [22,23], and the quality measurement of agricultural products [24,25]. Thomas et al. [26], in their study on the benefits of hyperspectral imaging for disease detection, pointed out that, in addition to the visible part of spectral data (i.e., 400–700 nm), the NIR wavelengths (i.e., 700–1000 nm) significantly represent the pathogens influencing the cellular structure of plants. Shapira et al. [27] used spectroscopy for weed detection in wheat and chickpea fields in the range of 400 to 2400 nm. They reported 11 wavelengths as the most effective wavelengths for weed detection, all of which fell into the range of 400–1070 nm.

MS imaging offers many advantages over other spectral techniques. Spectroradiometers have been used in previous research; however, they are expensive and sensitive instruments that provide data only for a single point. Another difficulty is dimensionality reduction, as spectroradiometers produce a huge amount of data. MS imaging, by contrast, provides the data in a small number of effective spectral bands, which directly helps the extraction of useful features for analysis. In addition, MS imaging provides the spectral data for a whole region in a single snap-shot, which is necessary for a wide range of applications. Thus, novel snap-shot MS cameras can replace spectroradiometers, facilitate agricultural production and operation management, reduce the costs of spectral measurements, and decrease the amount of hyperspectral data analysis.

Recently, much attention has been paid to MS imaging, and several works have been reported for the development of MSFA, MS cameras, and demosaicking algorithms [28,29,30,31,32]. Abbas et al. [33] reported a novel technique for the demosaicking of spectral snap-shot images. They reported a better performance compared to the previous technique. However, recent research on demosaicking techniques has been focused on the use of deep learning applications [34,35,36]. Ma et al. [37] proposed a near-infrared hyperspectral demosaicking method which was based on convolutional neural networks. The method was developed for low illumination and a mosaic of 5 × 5 pixels.

Research on the development of MSFA cameras has been oriented toward low-cost, compact, snap-shot, and high-resolution cameras. However, improving the resolution, increasing the number of bands, and providing snap-shot imaging remarkably increase the costs of these cameras. Sun et al. [38] developed a four-band MS camera based on MSFA technology. They used an array of 2 × 2 and proposed a demosaicking algorithm based on directional filtering and wavelet transform. As another solution, Bolton et al. [30] developed an MS imaging system for VIS and NIR based on the use of a monochrome camera and 13 different LEDs with different working areas in VIS and NIR. Cao et al. [39] developed an artificial compound eye (ACE) for MS imaging. The center wavelengths were 445 nm, 532 nm, and 650 nm, and the imaging systems were made up of combinations of diffractive beam splitting lenses. They concluded that, because the system was multi-aperture and composed of multiple sub-eye lenses, different kinds of spectral imaging structures with various central wavelengths could be designed. He et al. [40] developed a three-band MS camera using a filter array integrated on a monochrome CMOS image sensor. Their camera was based on a narrow-spectral-band red–green–blue color mosaic in a Bayer pattern. The proposed MSFA consisted of a hybrid combination of plasmonic color filters and a heterostructured dielectric.

In this work, two MS cameras covering VIS and NIR ranges were designed and developed for agricultural applications. The spectral resolution of these cameras was several times higher than that in previous research, as 16 spectral bands were provided for VIS and NIR. The optimized spectral bands were selected using a genetic algorithm between 380 nm and 950 nm based on the spectral reflectance of eight plants and weeds. A novel design for filter arrays was proposed for the MS cameras, with eight spectral bands in a mosaic of 4 × 4 pixels. The MSFAs were developed based on Fabry–Pérot technology. A commercial CMOS sensor was chosen, and specific sensor boards were developed.

2. Materials and Methods

The development of novel MS cameras requires the creation of MSFAs, the design and development of a sensor board, the assembly of the MSFA on the image sensor, and the development of software. These steps are explained in the following sections.

2.1. Image Sensor

The CMOS sensor is the physical element whose performance impacts the quality of the final imaging system. In this project, the chosen sensor needed to meet the criteria below in order to achieve the best performance for this application:

- Minimum pixel size needed to be greater than 4 µm; this was a limitation caused by the COLOR SHADES® Filter technology, which was used to create the MSFA filters;

- The CMOS sensor resolution had to be high enough to compensate for the loss of spatial resolution related to the MSFA system (i.e., sensor resolution > 1 k);

- The spectral sensitivity of the sensor had to extend into the near-infrared range (i.e., 380–1000 nm);

- Quantum efficiency had to be high in the chosen spectral band;

- Integration time had to be adjustable.

Taking into account the above specifications, our choice was the E2V Sapphire sensor which is a grey-scale CMOS sensor without cover glass. This sensor was provided by E2V Teledyne Company, Saint-Egrève, France. Table 1 provides the technical details of the chosen image sensor.

Table 1.

Electro-optical specifications of the E2V image sensor.

2.2. Band Selection

The goal of this project was to provide a sensor based on MSFA technology that covered a spectral range between 380 and 1000 nm. In this regard, an MSFA was needed for the visible range and an MSFA for the NIR. Thus, the spectral area of interest could be divided into two zones:

- The area centered around 550 nm, i.e., the green region, which is the dominant color in the field;

- The near-infrared area, which is promising for estimations based on the amount of chlorophyll in plant leaves.

For band selection, a dataset of 626 spectral reflectance samples of eight plants, including three crop plants (i.e., tomato, cucumber, and bell pepper) and five weeds (i.e., bindweed, nutsedge, Plantago lanceolata, potentilla, and sorrel), was prepared and used as input to the band selection program. The spectral reflectance was measured using the spectroradiometer Specbos 1211 (JETI Technische Instrumente GmbH, Jena, Germany) under a type A illuminant.

The optimized selection of spectral bands is a difficult task, as thousands of combinations are possible. Accordingly, the band selection was conducted using a genetic algorithm (GA). The program was written in MATLAB (2019b version, MathWorks Inc., Natick, Massachusetts, USA). Considering k samples and i (i = 1, 2, …, nu) multispectral filters, the response of a CMOS sensor, O, could be expressed as follows:

where S is the sensitivity of the CMOS sensor, Ui is the diagonal matrix of spectral transmittance for the ith filter, E is the diagonal matrix of the spectral power distribution of an illuminant, and the remaining term is the spectral reflectance of the kth sample in the data set. The reconstruction of spectral reflectance was achieved via Equation (2):

where W is the Wiener transformation matrix. The Gaussian model, which is a continuous and symmetric distribution, was used as follows for the simulations of the transmission of interference filters [41]:

where the two shape parameters control the center and width of the filter, λ stands for the wavelength, and the remaining terms, including k, are constants. The SNR was set to 100 by adding normally distributed white noise to the system. The full width at half maximum (FWHM) of the filters was set to 30 nm for both VIS and NIR. The fitness limit, crossover fraction, and migration fraction were set at 0.02, 0.6, and 0.2, respectively, and the population size was set to 100.
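The Wiener-based fitness that a GA would minimize can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' MATLAB program: the sensor response for filter i and sample k is assumed to take the form $S\,U_i\,E\,r_k$ (with $r_k$ an assumed symbol for the reflectance), the Gaussian is taken with unit peak and no offset, the Wiener matrix is estimated empirically from the training set as $W = R\,O^{T}(O\,O^{T})^{-1}$, and the SNR of 100 is interpreted as an amplitude ratio. All function names, the synthetic reflectances, and the flat sensor and illuminant curves are hypothetical placeholders.

```python
import numpy as np

def gaussian_filter(wl, center, fwhm=30.0):
    """Gaussian transmittance with unit peak (assumed shape, no offset)."""
    sigma = fwhm / (2.0 * np.sqrt(2.0 * np.log(2.0)))
    return np.exp(-0.5 * ((wl - center) / sigma) ** 2)

def camera_responses(refl, sens, illum, centers, wl, fwhm=30.0):
    """Noise-free responses, shape (n_filters, n_samples)."""
    filters = np.stack([gaussian_filter(wl, c, fwhm) for c in centers])
    system = filters * sens * illum      # each row plays the role of S U_i E
    return system @ refl.T

def wiener_fitness(centers, refl, sens, illum, wl, snr=100.0, seed=0):
    """RMS error of the Wiener-reconstructed reflectances for one band set."""
    rng = np.random.default_rng(seed)
    clean = camera_responses(refl, sens, illum, centers, wl)
    # Assumption: SNR is the ratio of RMS signal to noise standard deviation.
    noise_std = np.sqrt(np.mean(clean ** 2)) / snr
    noisy = clean + rng.normal(0.0, noise_std, clean.shape)
    R = refl.T                                         # (n_wavelengths, n_samples)
    W = R @ noisy.T @ np.linalg.pinv(noisy @ noisy.T)  # empirical Wiener matrix
    estimate = W @ noisy
    return float(np.sqrt(np.mean((estimate - R) ** 2)))

# Hypothetical stand-in data: 50 smooth synthetic reflectance curves in the VIS range.
wl = np.arange(380.0, 681.0, 5.0)
peaks = np.random.default_rng(1).uniform(420.0, 640.0, size=(50, 1))
refl = 0.1 + 0.6 * np.exp(-0.5 * ((wl - peaks) / 40.0) ** 2)
flat = np.ones_like(wl)                  # flat sensor and illuminant (placeholder)
centers = np.linspace(400.0, 660.0, 8)   # one candidate set of eight band centers
rms = wiener_fitness(centers, refl, flat, flat, wl)
```

A GA, or any other optimizer, would then search over `centers` to minimize the returned RMS value; with measured reflectances, sensor sensitivity, and illuminant data, the placeholder arrays would simply be replaced.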

2.3. MSFA Sensor Technology

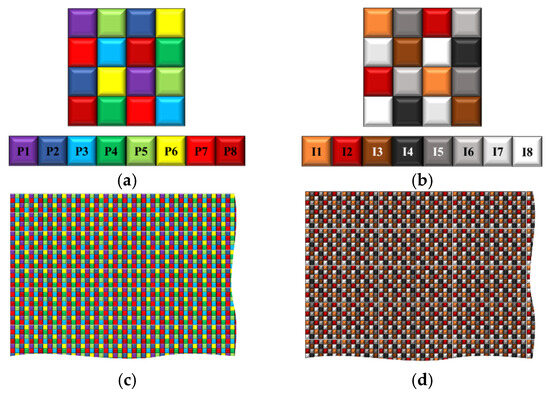

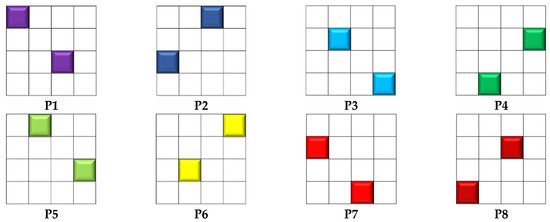

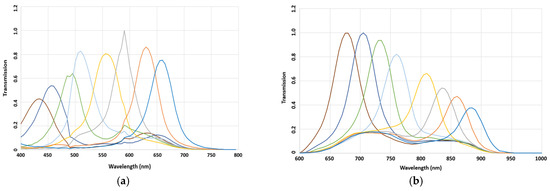

The MSFAs were developed using the COLOR SHADES® filter technology. In this work, the VIS camera was equipped with an MSFA of 8 bands, covering 380 to 680 nm (Figure 1). The NIR camera was equipped with an MSFA of 8 bands, covering 650 to 900 nm (Figure 1). This kind of solution, in which several cameras are each equipped with one multi-band filter array, is often used. Despite its ease of implementation, it presents some challenges. Each camera has its own optical axis, which poses a problem in the reconstruction of multi-band images, and it is difficult to properly manage the synchronization of the cameras. One important advantage is the ability to manage the integration time of the two cameras separately based on their sensitivity.

Figure 1.

Distribution of the final moxel. (a) VIS, (b) NIR, (c) cross-section of VIS MSFA, and (d) cross-section of NIR MSFA.

Based on our experiences in MSFA imaging, some modifications were introduced in order to improve the final sensor [42]. The modification was a change that was made at the level of the moxel (i.e., filter pixel array). Indeed, to compensate for the loss of sensitivity beyond 850 nm, a complex structure of the moxel (4 × 4 pixels) was utilized (Figure 1).

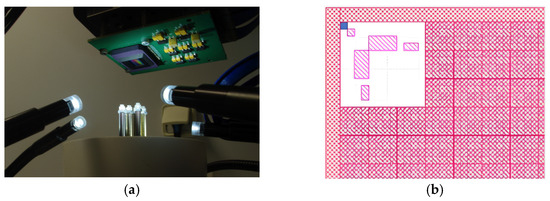

2.4. Hybridation of MSFA and Image Sensor

Hybridation is the assembly process of the MSFA on the CMOS sensor. This assembly brings about difficulties which have been observed in previous projects [43]. The most important problem is crosstalk, which here refers to the misplacement of filter units on the image sensor pixels. A mismatch between a pixel and its corresponding filter unit lets unwanted light through or partially blocks the light. For this hybridation, a nano-positioning system (6D ALIO, Arvada, CO, USA) was used. Figure 2a shows the central part of this system, in which the CMOS sensor is attached to the top part and the MSFA is held to the system by the supports. Using the alignment marks placed in the four corners of the MSFA plane, the CMOS sensor was displaced and positioned on the MSFA plane (Figure 2b). The final sensor was a snap-shot MSFA camera sensor (Figure 3).

Figure 2.

Sensor hybridation: (a) the central part of the nano-positioning system and (b) alignment marks on the top left corner of the filter plane.

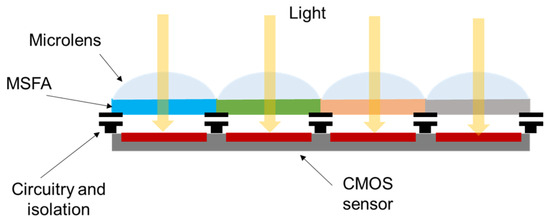

Figure 3.

Structure of the hybrid sensor.

The sensor, integrated into a camera with dedicated hardware and software, allowed for the real-time operation of applications at 30 fps. The increase in the camera speed had an impact on the spatial resolution. In order to provide an optimal solution for the loss of spatial resolution inherent to the MSFA, specific algorithms for multispectral demosaicking were developed.

2.5. Driving Board

This unit was responsible for receiving data from the sensor board, controlling and processing the data, and communicating with a PC or other devices. The design was carried out using the Vivado platform (Vivado version 2018.2) of Xilinx, which is a graphical development environment. The functionality of this unit can be summarized by the following steps:

- Receive the video stream (48-bit) from the sensor board;

- De-serialize the data into a single stream;

- Detect the pixel flow (timing detector);

- Control and prepare the data for simultaneous input and output;

- Generate a pixel flow (timing generator);

- Send data to communication gates (HDMI/VGA).

2.6. Sensor Analysis and Characterization

To estimate the spectral response of the filter, a global estimation of the filter was carried out using a monochromator system (Gooch and Housego, Orlando, FL, USA) available on the PImRob platform of ImViA. This system has a full width at half maximum (FWHM) of 0.25 nm. The wavelength of the incoming light was swept in steps of 5 nm from 300 nm to 1000 nm. An image was captured at each wavelength with an integration time chosen so that no channel saturated while the incoming signal was maximized to limit noise. The following points needed to be verified during this calibration process:

- Verifying energy balancing, which is important in order to provide the same factors for a polychromatic light so that a software correction can be provided;

- Analyzing the impact of rejecting bands, which sometimes occur when using the Fabry–Pérot system. In this case, the impact of the addition of a band pass filter to the hybrid sensor needed to be analyzed;

- Analysis of the MSFA positioning impact and the crosstalk between the MSFA and the image sensor;

- Considering the need to amplify the signal or change the nature of the substrate used for MSFA development.

Energy balance is important for single-sensor spectral imaging in order to minimize the noise and balance it between channels. Indeed, one channel may be saturated while another does not receive enough incoming light, which would critically impair the application [42]. The response of the camera follows a simple model of image formation, assuming a perfect diffuser reflectance, as defined in Equation (4) [42]:

where I(λ) is the spectral emission of the tested illuminant, S(λ) is the camera response, and p is the index of the spectral band. The standard illuminants of the CIE (Commission Internationale de l’Eclairage) could not be used for the NIR part of the spectrum, as that range was not yet described by these standards. Thus, alternative illuminations were selected and computed: a D65 solar emission simulator was selected and, in addition, tungsten and illuminant E were considered.

To estimate the spectral response of the filter, a global estimation of the MS imaging sensor was set up. The calibration followed these steps. First, the energy generated by the monochromator was measured from 360 to 800 nm for the VIS sensor and from 550 to 1050 nm for the NIR sensor. Second, the system generated monochromatic light every 5 nm from 360 to 1100 nm, and the original CMOS sensor (without a mounted filter) was illuminated. The integration time of the camera was kept constant. In this way, the spectral response of the sensor was measured. The second measurement was made with the MS imaging sensor. An image processing algorithm was built to extract the pixel related to a specific filter from each moxel. Finally, the filter’s spectral response was obtained from the CMOS sensor response measured in the second step.
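The calibration arithmetic described above can be sketched as follows. This is an illustrative sketch under assumptions rather than the authors' processing code: the per-band energy is assumed to be the integral of I(λ)·S(λ) over wavelength for band p (a plausible reading of Equation (4) given the symbol definitions), the filter response is assumed to be the ratio between the filtered and the bare sensor responses acquired with the same integration time, and all function names and the synthetic curves are hypothetical.

```python
import numpy as np

def band_mean(image, row, col, period=4):
    """Mean grey level of the pixel at (row, col) of every moxel in the image."""
    return float(image[row::period, col::period].mean())

def filter_response(bare, filtered, eps=1e-9):
    """Filtered-to-bare response ratio at each wavelength (0 where bare is ~0)."""
    bare = np.asarray(bare, dtype=float)
    filtered = np.asarray(filtered, dtype=float)
    return np.where(bare > eps, filtered / np.maximum(bare, eps), 0.0)

def band_energy(wl, illuminant, sensitivity):
    """Trapezoidal integral of I(lambda) * S_p(lambda) over wavelength."""
    y = np.asarray(illuminant, dtype=float) * np.asarray(sensitivity, dtype=float)
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(wl)))

def balance_ratio(energies):
    """Strongest-to-weakest channel ratio; 1.0 means perfectly balanced."""
    e = np.asarray(energies, dtype=float)
    return float(e.max() / e.min())

# Hypothetical example: two synthetic bands under an equal-energy illuminant.
wl = np.arange(360.0, 1101.0, 5.0)       # 5 nm sweep, as in the text
illum_e = np.ones_like(wl)               # illuminant E has a flat spectrum
band_a = np.exp(-0.5 * ((wl - 550.0) / 15.0) ** 2)
band_b = 0.5 * np.exp(-0.5 * ((wl - 850.0) / 15.0) ** 2)
energies = [band_energy(wl, illum_e, s) for s in (band_a, band_b)]
ratio = balance_ratio(energies)          # the weaker band receives half the energy
```

A ratio far from 1 indicates that one channel would saturate before another receives enough signal, which is the situation the energy-balance check is meant to reveal.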

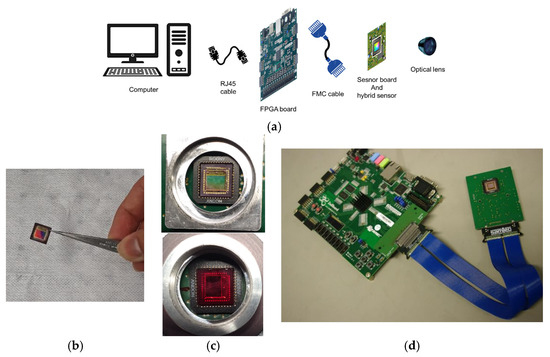

2.7. Assembly and Build-Up

Figure 4 shows the components of the MSFA system. There are three main parts: the MSFA sensor and its board, the FPGA board, and the Windows-based application. The developed sensor board was connected to a ZedBoard (Digilent FPGA) via a remote and very flexible connection, for which an FMC cable with 100 pins was used. This unit was designed to control signals coming from the sensor, so a link between the processor part and the programmable logic (PL) part was necessary. The ZedBoard was connected to the PC using an RJ45 cable.

Figure 4.

The overall structure and different components of the system. (a) All of the components before assembly, (b) the CMOS sensor, (c) CMOS with MSFA mounted on the board (the VIS sensor above and the NIR sensor below), and (d) the assembled components and boards.

2.8. Demosaicking and Image Reconstruction

Each monochrome image taken by the cameras consisted of data on 8 single-band planes. The demosaicking procedure refers to the extraction and reconstruction of an MS image consisting of eight single-band images (i.e., an image cube of eight layers). The demosaicking algorithm read the monochrome image, took each mosaic of the image, completed the missing pixels, and produced an image of the same size. Figure 5 illustrates the architecture of a single mosaic and how two pixels were attributed to each filter. These masks were repeated Nl and Nc times along lines and columns of the image to cover the entire image.

Nl = 1200/4 = 300

Nc = 1600/4 = 400

Figure 5.

Mosaic masks and the pixels whose values need to be determined.

Bilinear demosaicking was applied to each band. This involved finding the missing values using linear interpolation in both main directions. The final step was to calibrate the reconstructed image against the standard Lambertian white.
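A simplified version of this pipeline can be sketched as follows. It is not the authors' algorithm: the moxel layout below is hypothetical (the real one is given in Figure 5), and the interpolation is a swapped-in variant that first averages the two pixels of a band inside each moxel and then applies separable linear interpolation between moxel centroids. The function names and the white-reference handling are likewise assumptions.

```python
import numpy as np

# Hypothetical 4 x 4 moxel: band index (0-7) of each pixel, two pixels per band.
MOXEL = np.array([[0, 1, 2, 3],
                  [4, 5, 6, 7],
                  [2, 3, 0, 1],
                  [6, 7, 4, 5]])

def demosaic(raw, moxel=MOXEL):
    """Return a (bands, H, W) cube from a raw mosaic image of shape (H, W)."""
    p = moxel.shape[0]
    h, w = raw.shape
    nl, nc = h // p, w // p                  # e.g. 1200/4 = 300 and 1600/4 = 400
    blocks = raw[:nl * p, :nc * p].astype(float).reshape(nl, p, nc, p)
    rows, cols = np.arange(h), np.arange(w)
    cube = np.empty((int(moxel.max()) + 1, h, w))
    for b in range(cube.shape[0]):
        ys, xs = np.nonzero(moxel == b)
        # Average the band's pixels inside every moxel -> (nl, nc) plane.
        low = blocks[:, ys, :, xs].mean(axis=0)
        # Positions of those averages in full-resolution pixel coordinates.
        y_s = ys.mean() + p * np.arange(nl)
        x_s = xs.mean() + p * np.arange(nc)
        # Separable linear interpolation: along columns, then along rows.
        tmp = np.stack([np.interp(cols, x_s, r) for r in low])
        cube[b] = np.stack([np.interp(rows, y_s, c) for c in tmp.T], axis=1)
    return cube

def white_calibrate(cube, white_cube, eps=1e-6):
    """Divide each band by the same band of a white-reference acquisition."""
    return cube / np.maximum(white_cube, eps)
```

For a 1200 × 1600 raw frame, `demosaic` returns a cube of shape (8, 1200, 1600). Averaging the two pixels of a band trades a little spatial detail for simplicity; a per-pixel bilinear scheme would keep both samples separately.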

3. Results and Discussion

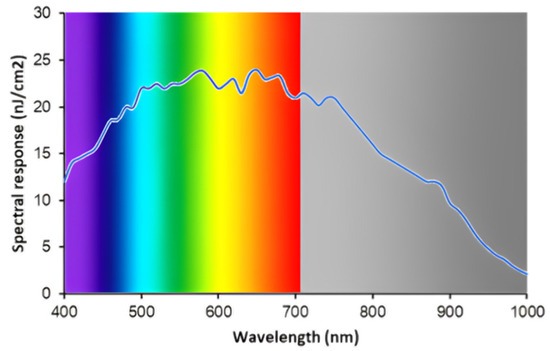

3.1. CMOS Sensitivity

The sensitivity of the CMOS sensor was evaluated. Figure 6 shows the spectral response of this sensor, which should be taken into consideration for band selection and filter development. The sensor was provided by Teledyne E2V (EV76C560, Chelmsford, UK). Although the sensitivity fell toward the IR, which is expected for a silicon (Si) sensor, it was still acceptable.

Figure 6.

Spectral response of E2V image sensor.

3.2. Spectral Bands

The spectral bands for the creation of the MSFAs were determined using a simulation program based on the GA. Table 2 presents the details of the simulations for band selection in VIS and NIR, which obtained goodness-of-fit coefficients (GFCs) of 99% and 98%, respectively, at the probability level of 99%. The standard deviation was quite small, and the achieved RMS values were 0.012 and 0.011 for VIS and NIR, respectively. Hence, using a genetic algorithm with a fitness function based on Wiener estimation, the sixteen optimized Gaussian filters were obtained.
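The two figures of merit quoted above can be computed as in the sketch below. The text does not define them, so this assumes the commonly used definitions: the GFC as the normalized inner product between a measured and a reconstructed spectrum, and the RMS error as the root of the mean squared difference over wavelengths. The function names are hypothetical.

```python
import numpy as np

def gfc(measured, estimated):
    """Goodness-of-fit coefficient per spectrum (1.0 means identical shape)."""
    measured = np.asarray(measured, dtype=float)
    estimated = np.asarray(estimated, dtype=float)
    num = np.abs(np.sum(measured * estimated, axis=-1))
    den = np.linalg.norm(measured, axis=-1) * np.linalg.norm(estimated, axis=-1)
    return num / den

def rms_error(measured, estimated):
    """Root-mean-square error per spectrum, taken over the wavelength axis."""
    measured = np.asarray(measured, dtype=float)
    estimated = np.asarray(estimated, dtype=float)
    return np.sqrt(np.mean((measured - estimated) ** 2, axis=-1))
```

Because the GFC is insensitive to a global scale factor while the RMS error is not, reporting both gives complementary views of reconstruction quality.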

Table 2.

Simulation results of band selection using GA.

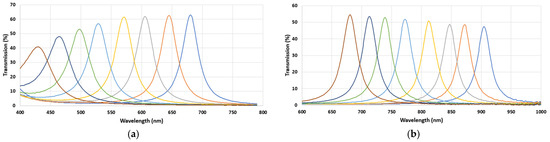

Figure 7 illustrates the spectral sensitivities of the chosen filters after fabrication. This figure presents the relative sensitivities of each filter. It can be seen that the transmission rates of all bands were over 40%, and very good consistency was observed for the primary prototypes.

Figure 7.

Spectral sensitivities of the MSFAs measured before the hybridation on the sensor; (a) VIS and (b) NIR.

The bands selected through the simulation and the final, real results are shown in Table 3 and Table 4. The actual bands after fabrication were slightly different from the desired bands; however, this is normal as a result of the production process of the filters. The choice of bands plays a critical role in the development of MS cameras, as it directly depends on the application of the MS cameras and their functionalities. The final spectral bands of the MSFAs match agricultural applications such as disease detection well. Yang et al. [44] reported 426 nm as the effective wavelength in the spectral detection of brown plant hopper and leaf folder infestations of rice canopy. Zhang et al. [45] reviewed the previous research on remote sensing techniques for disease detection and reported 655 nm and 735 nm as important fluorescence indicators. Huang et al. [46] studied the detection of rice leaf folder based on hyperspectral data. Important disease detection regions were observed at 526–545 nm, 550–568 nm, 581–606 nm, 688–699 nm, 703–715 nm, and 722–770 nm. Khaled et al. [47] studied the early detection of diseases in plant tissue using spectroscopy. They found that the wavelengths of 550 to 630 nm aided in the detection of the disease. The study of Qin et al. [48] on the detection of citrus canker showed that the wavelengths of 553, 677, 718, and 858 nm were the most effective.

The usage of MS cameras with a few bands on the effective wavelengths has also been taken into consideration. Stemmler [49] developed an MS camera using an RGB and two monochrome cameras taking MS images in three bands (i.e., 548, 725, and 850 nm). For the monochrome cameras, they took advantage of band-pass interference filters. Du et al. [50] developed an MS imaging system covering a wider range of the electromagnetic spectrum. They designed and constructed a common-aperture MS imaging system which took VIS, short-wave infrared (SIR), and mid-wave infrared (MIR) MS images. Their structure was based on two dichroic mirrors for the purpose of splitting the light spectrum.

Table 3.

Comparison between the specification and the actual centered wavelengths for the VIS MSFA.

Table 4.

Comparison between simulated and real centered wavelengths for the NIR MSFA.

These tables provide the spectral specifications of the MSFAs. We observed that, except for the filters of 425 and 460 nm, all other filters met the desired FWHM of the specification. For both VIS and NIR MSFAs, the maximum transmission was over 40% which is desired for MS applications. As we observed, the spectral response of the filters was very good, and the best response was achieved in the red region of the spectrum for the VIS and between 650 nm and 750 nm for the NIR.
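Checking a fabricated filter against its specification amounts to reading the peak wavelength and the FWHM from a sampled response curve. The sketch below shows one way to do this, assuming a single dominant peak and linear interpolation between samples; it is not the procedure used by the authors, it ignores secondary peaks, and the function names are hypothetical.

```python
import numpy as np

def _crossing(w0, r0, w1, r1, level):
    """Wavelength at which the segment (w0, r0)-(w1, r1) reaches `level`."""
    return w0 + (level - r0) * (w1 - w0) / (r1 - r0)

def peak_and_fwhm(wl, response):
    """Return (peak wavelength, FWHM) of the main lobe of a sampled curve."""
    wl = np.asarray(wl, dtype=float)
    r = np.asarray(response, dtype=float)
    k = int(np.argmax(r))
    half = r[k] / 2.0
    lo = k
    while lo > 0 and r[lo - 1] >= half:          # walk left while above half
        lo -= 1
    hi = k
    while hi < len(r) - 1 and r[hi + 1] >= half:  # walk right while above half
        hi += 1
    # If the curve never drops below half on one side, fall back to the edge.
    left = wl[lo] if lo == 0 else _crossing(wl[lo - 1], r[lo - 1], wl[lo], r[lo], half)
    right = (wl[hi] if hi == len(r) - 1
             else _crossing(wl[hi], r[hi], wl[hi + 1], r[hi + 1], half))
    return float(wl[k]), float(right - left)
```

With the 5 nm sampling used during characterization, the interpolated FWHM is only an estimate, so small deviations from a 30 nm target should be interpreted with that sampling step in mind.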

After mounting the MSFA on the image sensor, other pieces, including the sensor board, the driving board, the data transfer cables, and the box, were assembled. Figure 8 shows the developed camera. The box, designed via 3D printing, included two main parts: the small part in front for the sensor board and the back part for holding the driving board and other connections.

Figure 8.

The final camera after assembly of all parts.

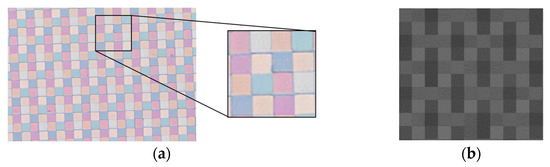

A photo of the mounted hybrid sensor under a microscope is presented in Figure 9. A mosaic of the MSFA is magnified to verify the structure and the pixels (Figure 9a). Figure 9b presents a zoomed-in view of an image acquired with the one-shot VIS MS camera. Figure 10 illustrates the spectral sensitivity of the MS image sensor. The lowest filter responses were found at 425 nm and 885 nm for the VIS and NIR MSFAs, respectively.

Figure 9.

The sensor imaged under a microscope; (a) hybrid sensor; (b) mosaic image.

Figure 10.

Spectral response of the cameras after hybridation process. (a) VIS hybrid sensor; (b) NIR hybrid sensor.

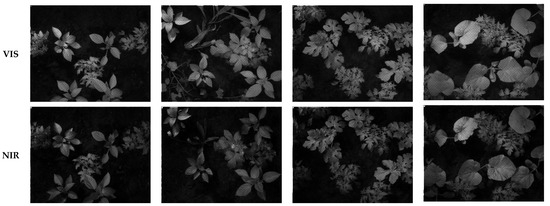

Figure 11 presents some of the images taken by the cameras from several plants. The VIS and NIR images were taken at the same time for the same scenes. These images are the raw images, which include eight single-band images to be extracted by demosaicking. The illumination used for these images consisted of three halogen lights. This illumination was chosen to provide enough energy for both VIS and NIR. Shrestha et al. [51] used a 3D camera with two sensors for an MS camera by using MS filters in front of the image sensors. In a recent study, Zhang et al. [52] developed an MS camera for the visible range. The camera was designed to be compact, handheld, and of high spatial resolution.

Figure 11.

Example images taken by the cameras from different plants. The cameras were mounted beside each other, and the images of the scene were taken by the cameras simultaneously. Top row: VIS image; bottom row: NIR image.

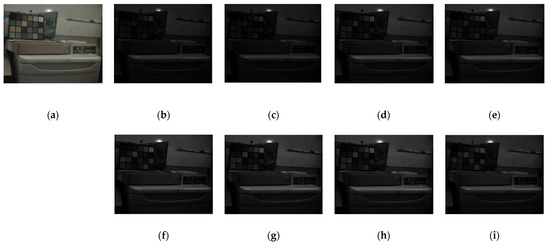

Figure 12 provides an image of a scene with a standard color checker. In this figure, (a) represents a color image built using one of the multispectral cameras, and the rest are eight bands of the VIS MS camera. In this figure, (b)–(i) are images that have been reconstructed by the demosaicking algorithm.

Figure 12.

A sample image of a scene with a color checker and the result after demosaicking with the bilinear algorithm. (a) The RGB built using three bands, (b–i) different MS image bands from P1 to P8 channels.

4. Conclusions

In this project, two MS cameras were developed for agricultural applications. Each MS camera was built around an eight-band MSFA, and together the two cameras covered 380 nm to 950 nm (i.e., VIS and NIR). This type of MS camera, which has relatively good resolution, brings about advantages for many applications in agriculture.

A novel mosaic of 4×4 pixels was proposed, and it was observed that the proposed mosaic pattern may be promising for snap-shot MS cameras. The pixel pitch decreased in comparison to previous research, leading to a significant outcome. The proposed CMOS sensor provided good spectral sensitivity in VIS and NIR. Spectral band selection was optimized using GA, and a total of 16 spectral bands were selected and proposed for agricultural applications. It was observed that the fabrication process shifted the centering of the filters, but the sensitivity levels of all filters were acceptable. The MSFA was mounted on the image sensor with a nano-positioning system to avoid the crosstalk problem. It was observed that the proposed mosaic pattern and the demosaicking algorithm functioned successfully. The spectral response of the cameras was remarkable, and for both cameras, the MS images were clear, precise, and of a high resolution.

Author Contributions

Conceptualization, P.G. and V.M.; methodology, P.G. and V.M.; software, M.R. and K.K.K.; validation, P.G.; formal analysis, V.M.; investigation, V.M.; resources, P.G.; data curation, V.M.; writing—original draft preparation, V.M.; writing—review and editing, P.G.; visualization, K.K.K.; supervision, P.G.; project administration, P.G.; funding acquisition, P.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data are contained within the article.

Acknowledgments

This paper presents the cameras developed in the European CAVIAR project accepted by Penta organization. The authors appreciate the support and collaboration of Penta and other members who participated in this project.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Shinoda, K.; Yanagi, Y.; Hayasaki, Y.; Hasegawa, M. Multispectral filter array design without training images. Opt. Rev. 2017, 24, 554–571. [Google Scholar] [CrossRef]

- Genser, N.; Seiler, J.; Kaup, A. Camera Array for Multi-Spectral Imaging. IEEE Trans. Image Process. 2020, 29, 9234–9249. [Google Scholar] [CrossRef] [PubMed]

- Thomas, J.-B.; Lapray, P.-J.; Gouton, P. HDR Imaging Pipeline for Spectral Filter Array Cameras. In Proceedings of the Scandinavian Conference on Image Analysis, Tromsø, Norway, June 12–14 2017; Springer: Cham, Switzerland, 2017; pp. 401–412. [Google Scholar]

- Chang, J.; Clay, S.A.; Clay, D.E.; Dalsted, K. Detecting weed-free and weed-infested areas of a soybean field using near-infrared spectral data. Weed Sci. 2004, 52, 642–648. [Google Scholar] [CrossRef]

- Wójtowicz, M.; Wójtowicz, A.; Piekarczyk, J. Application of remote sensing methods in agriculture. Commun. Biometry Crop Sci. 2016, 11, 31–50. [Google Scholar]

- Maes, W.H.; Steppe, K. Perspectives for Remote Sensing with Unmanned Aerial Vehicles in Precision Agriculture. Trends Plant Sci. 2019, 24, 152–164. [Google Scholar] [CrossRef] [PubMed]

- Pott, L.P.; Amado, T.J.C.; Schwalbert, R.A.; Sebem, E.; Jugulam, M.; Ciampitti, I.A. Pre-planting weed detection based on ground field spectral data. Pest Manag. Sci. 2020, 76, 1173–1182. [Google Scholar] [CrossRef]

- Prananto, J.A.; Minasny, B.; Weaver, T. Near Infrared (NIR) Spectroscopy as a Rapid and Cost-Effective Method for Nutrient Analysis of Plant Leaf Tissues. Adv. Agron. 2020, 164, 1–49. [Google Scholar]

- Sarić, R.; Nguyen, V.D.; Burge, T.; Berkowitz, O.; Trtílek, M.; Whelan, J.; Lewsey, M.G.; Čustović, E. Applications of hyperspectral imaging in plant phenotyping. Trends Plant Sci. 2022, 27, 301–315. [Google Scholar] [CrossRef]

- Beeri, O.; Peled, A. Spectral indices for precise agriculture monitoring. Int. J. Remote Sens. 2006, 27, 2039–2047. [Google Scholar] [CrossRef]

- Mahlein, A.-K.; Rumpf, T.; Welke, P.; Dehne, H.-W.; Plümer, L.; Steiner, U.; Oerke, E.-C. Development of spectral indices for detecting and identifying plant diseases. Remote Sens. Environ. 2013, 128, 21–30. [Google Scholar] [CrossRef]

- Huang, W.; Guan, Q.; Luo, J.; Zhang, J.; Zhao, J.; Liang, D.; Huang, L.; Zhang, D. New optimized spectral indices for identifying and monitoring winter wheat diseases. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2014, 7, 2516–2524. [Google Scholar] [CrossRef]

- Sankaran, S.; Mishra, A.; Ehsani, R.; Davis, C. A review of advanced techniques for detecting plant diseases. Comput. Electron. Agric. 2010, 72, 1–13. [Google Scholar] [CrossRef]

- Owomugisha, G.; Melchert, F.; Mwebaze, E.; Quinn, J.A.; Biehl, M. Machine learning for diagnosis of disease in plants using spectral data. In Proceedings of the International Conference on Artificial Intelligence (ICAI), The Steering Committee of the World Congress in Computer Science, Computer, Stockholm, Sweden, 13–19 July 2018; pp. 9–15. [Google Scholar]

- Owomugisha, G.; Nuwamanya, E.; Quinn, J.A.; Biehl, M.; Mwebaze, E. Early detection of plant diseases using spectral data. In Proceedings of the APPIS 2020: 3rd International Conference on Applications of Intelligent Systems, Las Palmas de Gran Canaria, Spain, 7–9 January 2020; pp. 1–6. [Google Scholar]

- Feng, L.; Wu, B.; Zhu, S.; Wang, J.; Su, Z.; Liu, F.; He, Y.; Zhang, C. Investigation on Data Fusion of Multisource Spectral Data for Rice Leaf Diseases Identification Using Machine Learning Methods. Front. Plant Sci. 2020, 11, 577063. [Google Scholar] [CrossRef]

- Bradley, B.A. Remote detection of invasive plants: A review of spectral, textural and phenological approaches. Biol. Invasion 2014, 16, 1411–1425. [Google Scholar] [CrossRef]

- Shirzadifar, A.; Bajwa, S.; Mireei, S.A.; Howatt, K.; Nowatzki, J. Weed species discrimination based on SIMCA analysis of plant canopy spectral data. Biosyst. Eng. 2018, 171, 143–154.

- Pandey, P.; Irulappan, V.; Bagavathiannan, M.V.; Senthil-Kumar, M. Impact of Combined Abiotic and Biotic Stresses on Plant Growth and Avenues for Crop Improvement by Exploiting Physio-morphological Traits. Front. Plant Sci. 2017, 8, 537.

- Taha, M.F.; ElManawy, A.I.; Alshallash, K.S.; ElMasry, G.; Alharbi, K.; Zhou, L.; Liang, N.; Qiu, Z. Using Machine Learning for Nutrient Content Detection of Aquaponics-Grown Plants Based on Spectral Data. Sustainability 2022, 14, 12318.

- Ihuoma, S.O.; Madramootoo, C.A. Sensitivity of spectral vegetation indices for monitoring water stress in tomato plants. Comput. Electron. Agric. 2019, 163, 104860.

- Li, L.; Zhang, Q.; Huang, D. A Review of Imaging Techniques for Plant Phenotyping. Sensors 2014, 14, 20078–20111.

- Gutiérrez, S.; Tardaguila, J.; Fernández-Novales, J.; Diago, M.P. Data mining and NIR spectroscopy in viticulture: Applications for plant phenotyping under field conditions. Sensors 2016, 16, 236.

- Zhang, B.; Dai, D.; Huang, J.; Zhou, J.; Gui, Q.; Dai, F. Influence of physical and biological variability and solution methods in fruit and vegetable quality nondestructive inspection by using imaging and near-infrared spectroscopy techniques: A review. Crit. Rev. Food Sci. Nutr. 2017, 58, 2099–2118.

- Yang, J.; Luo, X.; Zhang, X.; Passos, D.; Xie, L.; Rao, X.; Xu, H.; Ting, K.C.; Lin, T.; Ying, Y. A deep learning approach to improving spectral analysis of fruit quality under interseason variation. Food Control 2022, 140, 109108.

- Thomas, S.; Kuska, M.T.; Bohnenkamp, D.; Brugger, A.; Alisaac, E.; Wahabzada, M.; Behmann, J.; Mahlein, A.-K. Benefits of hyperspectral imaging for plant disease detection and plant protection: A technical perspective. J. Plant Dis. Prot. 2018, 125, 5–20.

- Shapira, U.; Herrmann, I.; Karnieli, A.; Bonfil, D.J. Field spectroscopy for weed detection in wheat and chickpea fields. Int. J. Remote Sens. 2013, 34, 6094–6108.

- Kise, M.; Park, B.; Heitschmidt, G.W.; Lawrence, K.C.; Windham, W.R. Multispectral imaging system with interchangeable filter design. Comput. Electron. Agric. 2010, 72, 61–68.

- Frey, L.; Masarotto, L.; Armand, M.; Charles, M.-L.; Lartigue, O. Multispectral interference filter arrays with compensation of angular dependence or extended spectral range. Opt. Express 2015, 23, 11799–11812.

- Bolton, F.J.; Bernat, A.S.; Bar-Am, K.; Levitz, D.; Jacques, S. Portable, low-cost multispectral imaging system: Design, development, validation, and utilization. J. Biomed. Opt. 2018, 23, 121612.

- Shinoda, K.; Yoshiba, S.; Hasegawa, M. Deep demosaicking for multispectral filter arrays. arXiv 2018, arXiv:1808.08021.

- Gutiérrez, S.; Wendel, A.; Underwood, J. Spectral filter design based on in-field hyperspectral imaging and machine learning for mango ripeness estimation. Comput. Electron. Agric. 2019, 164, 104890.

- Abbas, K.; Puigt, M.; Delmaire, G.; Roussel, G. Joint Unmixing and Demosaicing Methods for Snapshot Spectral Images. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

- Habtegebrial, T.A.; Reis, G.; Stricker, D. Deep convolutional networks for snapshot hypercpectral demosaicking. In Proceedings of the 2019 10th Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), Amsterdam, The Netherlands, 24–26 September 2019; pp. 1–5.

- Li, P.; Ebner, M.; Noonan, P.; Horgan, C.; Bahl, A.; Ourselin, S.; Shapey, J.; Vercauteren, T. Deep learning approach for hyperspectral image demosaicking, spectral correction and high-resolution RGB reconstruction. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 2022, 10, 409–417.

- Dijkstra, K.; van de Loosdrecht, J.; Schomaker, L.R.; Wiering, M.A. Hyperspectral demosaicking and crosstalk correction using deep learning. Mach. Vis. Appl. 2019, 30, 1–21.

- Ma, X.; Tan, M.; Zhang, S.; Liu, S.; Sun, J.; Han, Y.; Li, Q.; Yang, Y. A snapshot near-infrared hyperspectral demosaicing method with convolutional neural networks in low illumination environment. Infrared Phys. Technol. 2023, 129, 104510.

- Sun, B.; Yuan, N.; Cao, C.; Hardeberg, J.Y. Design of four-band multispectral imaging system with one single-sensor. Future Gener. Comput. Syst. 2018, 86, 670–679.

- Cao, A.; Pang, H.; Zhang, M.; Shi, L.; Deng, Q.; Hu, S. Design and Fabrication of an Artificial Compound Eye for Multi-Spectral Imaging. Micromachines 2019, 10, 208.

- He, X.; Liu, Y.; Ganesan, K.; Ahnood, A.; Beckett, P.; Eftekhari, F.; Smith, D.; Uddin, M.H.; Skafidas, E.; Nirmalathas, A.; et al. A single sensor based multispectral imaging camera using a narrow spectral band color mosaic integrated on the monochrome CMOS image sensor. APL Photonics 2020, 5, 046104.

- Ansari, K.; Thomas, J.-B.; Gouton, P. Spectral band Selection Using a Genetic Algorithm Based Wiener Filter Estimation Method for Reconstruction of Munsell Spectral Data. Biol. Imaging 2017, 29, 190–193.

- Thomas, J.-B.; Lapray, P.-J.; Gouton, P.; Clerc, C. Spectral Characterization of a Prototype SFA Camera for Joint Visible and NIR Acquisition. Sensors 2016, 16, 993.

- Houssou, M.E.E.; Mahama, A.T.S.; Gouton, P.; Degla, G. Robust Facial Recognition System using One Shot Multispectral Filter Array Acquisition System. Int. J. Adv. Comput. Sci. Appl. 2022, 13, 25–33.

- Yang, C.-M.; Cheng, C.-H.; Chen, R.-K. Changes in Spectral Characteristics of Rice Canopy Infested with Brown Planthopper and Leaffolder. Crop Sci. 2007, 47, 329–335.

- Zhang, N.; Yang, G.; Pan, Y.; Yang, X.; Chen, L.; Zhao, C. A review of advanced technologies and development for hyperspectral-based plant disease detection in the past three decades. Remote Sens. 2020, 12, 3188.

- Huang, J.; Liao, H.; Zhu, Y.; Sun, J.; Sun, Q.; Liu, X. Hyperspectral detection of rice damaged by rice leaf folder (Cnaphalocrocis medinalis). Comput. Electron. Agric. 2012, 82, 100–107.

- Khaled, A.Y.; Abd Aziz, S.; Bejo, S.K.; Nawi, N.M.; Seman, I.A.; Onwude, D.I. Early detection of diseases in plant tissue using spectroscopy—Applications and limitations. Appl. Spectrosc. Rev. 2018, 53, 36–64.

- Qin, J.; Burks, T.F.; Kim, M.S.; Chao, K.; Ritenour, M.A. Citrus canker detection using hyperspectral reflectance imaging and PCA-based image classification method. Sens. Instrum. Food Qual. Saf. 2008, 2, 168–177.

- Stemmler, S. Development of a high-speed, high-resolution multispectral camera system for airborne applications. In Proceedings of the Multimodal Sensing and Artificial Intelligence: Technologies and Applications II, Online, 26–29 June 2021; SPIE: Bellingham, WA, USA, 2021; Volume 11785, pp. 233–236.

- Du, S.; Chang, J.; Wu, C.; Zhong, Y.; Hu, Y.; Zhao, X.; Su, Z. Optical design and fabrication of a three-channel common-aperture multispectral polarization camera. J. Mod. Opt. 2021, 68, 1121–1133.

- Shrestha, R.; Hardeberg, J.Y.; Khan, R. Spatial arrangement of color filter array for multispectral image acquisition. In Proceedings of the Sensors, Cameras, and Systems for Industrial, Scientific, and Consumer Applications XII, San Francisco, CA, USA, 23–27 February 2011; SPIE: Bellingham, WA, USA, 2011; Volume 7875, pp. 20–28.

- Zhang, W.; Suo, J.; Dong, K.; Li, L.; Yuan, X.; Pei, C.; Dai, Q. Handheld snapshot multi-spectral camera at tens-of-megapixel resolution. Nat. Commun. 2023, 14, 5043.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).