Wand-Based Calibration of Unsynchronized Multiple Cameras for 3D Localization

Abstract

1. Introduction

2. Materials and Methods

2.1. Preliminaries and Problem Formulation

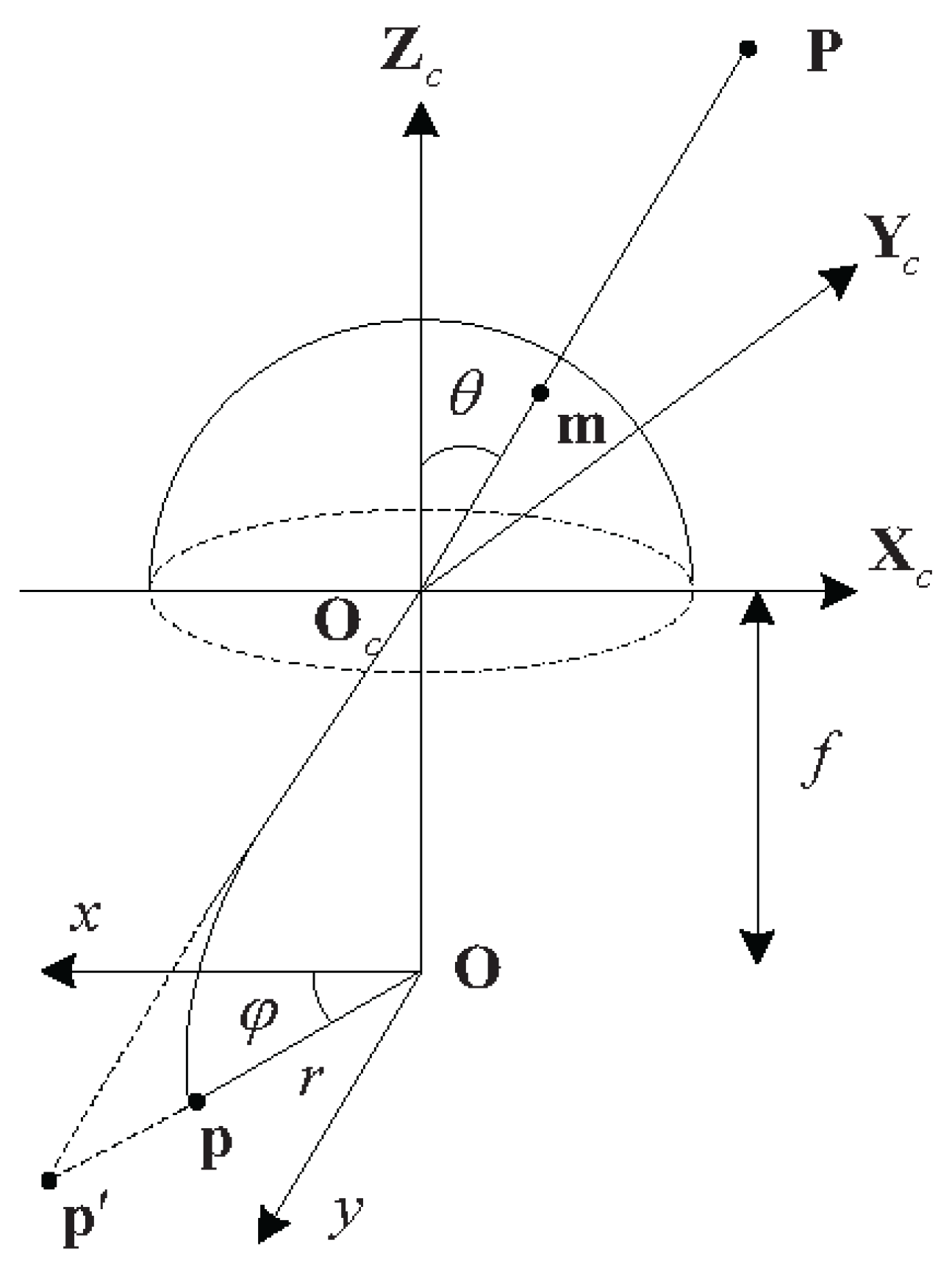

2.1.1. General Camera Model
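A minimal sketch of a generic central fish-eye projection, assuming the radially symmetric polynomial model of Kannala and Brandt [24], in which the image radius is an odd polynomial of the incidence angle, r(θ) = k1·θ + k2·θ³ + k3·θ⁵ + ... Whether this exact parameterization matches the model adopted in this paper is an assumption. The function name `project_kannala_brandt` and all numeric values are hypothetical, and only the radially symmetric part of the model is shown (no asymmetric distortion terms).

```python
import numpy as np


def project_kannala_brandt(points_cam, k, fx, fy, cx, cy):
    """Project 3D points given in the camera frame to pixel coordinates.

    Assumed model (Kannala-Brandt, radially symmetric part only):
        theta    = angle between the incoming ray and the optical axis
        r(theta) = k[0]*theta + k[1]*theta**3 + k[2]*theta**5 + ...
        u = fx * r * cos(phi) + cx,  v = fy * r * sin(phi) + cy
    where phi is the azimuth of the ray around the optical axis.
    """
    pts = np.asarray(points_cam, dtype=float)
    x, y, z = pts[:, 0], pts[:, 1], pts[:, 2]
    rho = np.hypot(x, y)            # distance of the point from the optical axis
    theta = np.arctan2(rho, z)      # incidence angle, also valid beyond 90 degrees
    # Odd-order polynomial in theta, evaluated for every point at once.
    k = np.asarray(k, dtype=float)
    powers = np.stack([theta ** (2 * i + 1) for i in range(k.size)], axis=1)
    r = powers @ k
    # cos(phi) = x / rho and sin(phi) = y / rho; points on the axis (rho = 0)
    # have r = 0 and map to the principal point, so avoid dividing by zero.
    safe_rho = np.where(rho > 0, rho, 1.0)
    u = fx * r * x / safe_rho + cx
    v = fy * r * y / safe_rho + cy
    return np.stack([u, v], axis=1)


# Example: the equidistant special case r = theta (k = [1, 0, 0, 0]).
uv = project_kannala_brandt([[0.0, 0.0, 1.0], [1.0, 0.0, 1.0]],
                            k=[1.0, 0.0, 0.0, 0.0],
                            fx=300.0, fy=300.0, cx=320.0, cy=240.0)
```

With k = (1, 0, 0, 0) the model reduces to the equidistant projection r = θ, so the on-axis point lands on the principal point and the point at 45° lands 300·π/4 pixels to its right.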

2.1.2. Problem Formulation

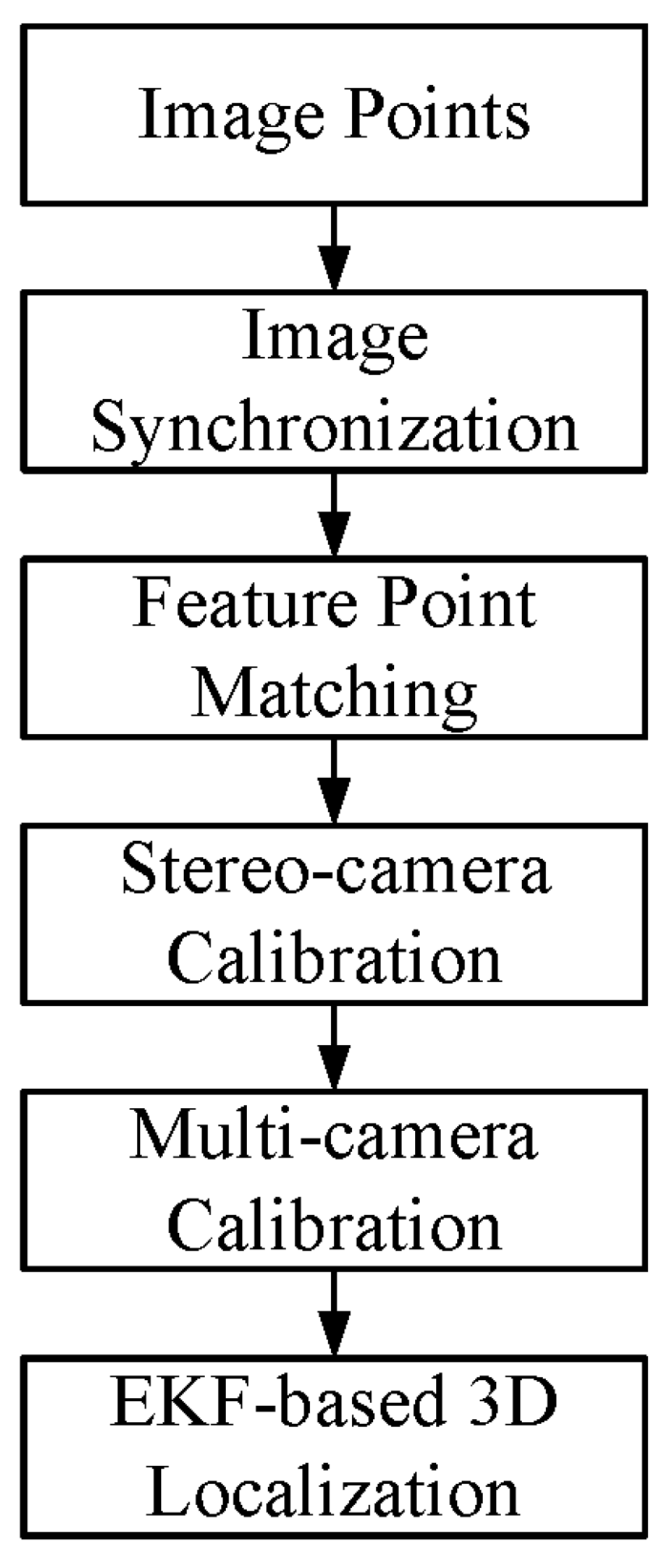

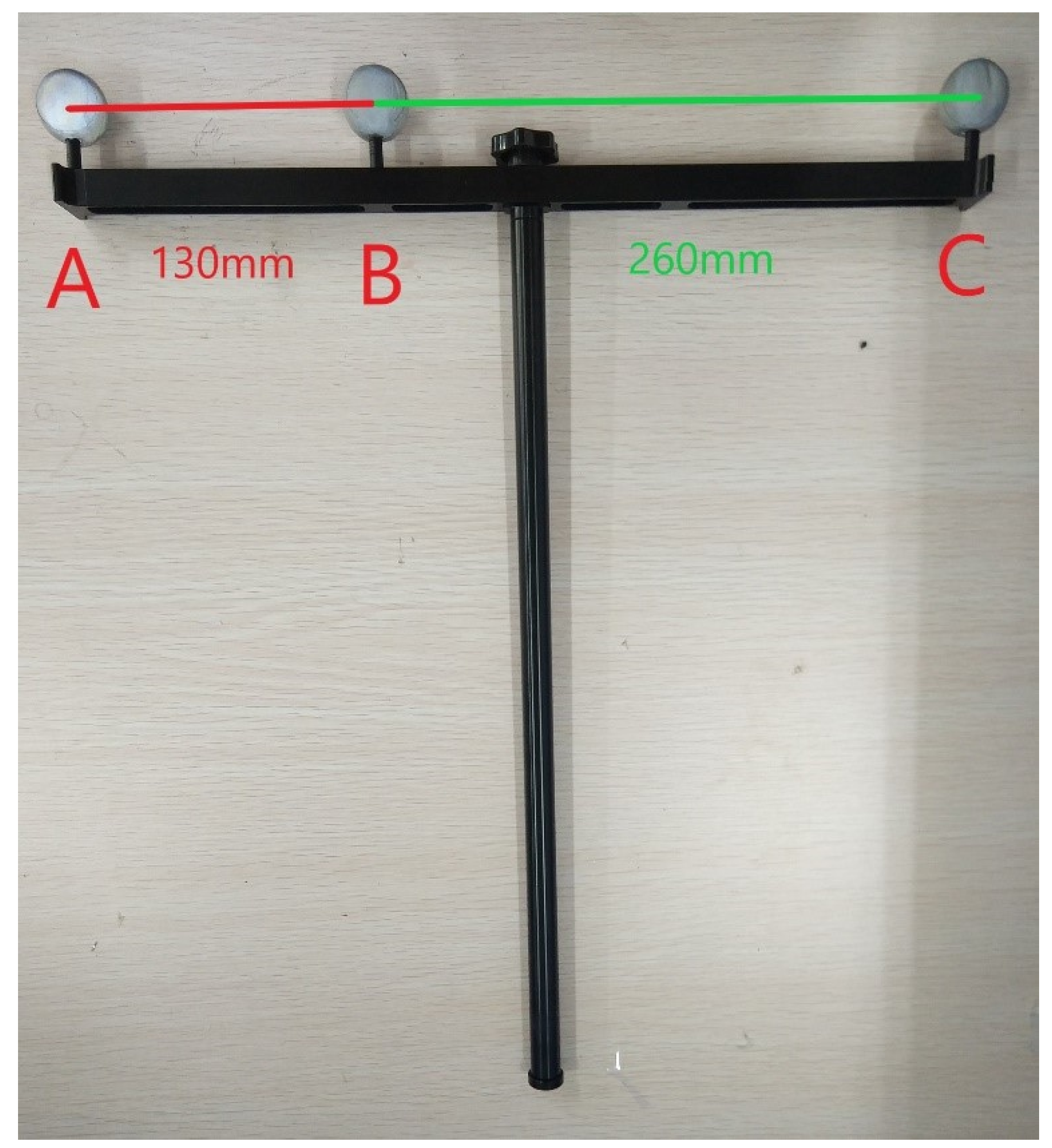

2.2. Calibration Algorithm

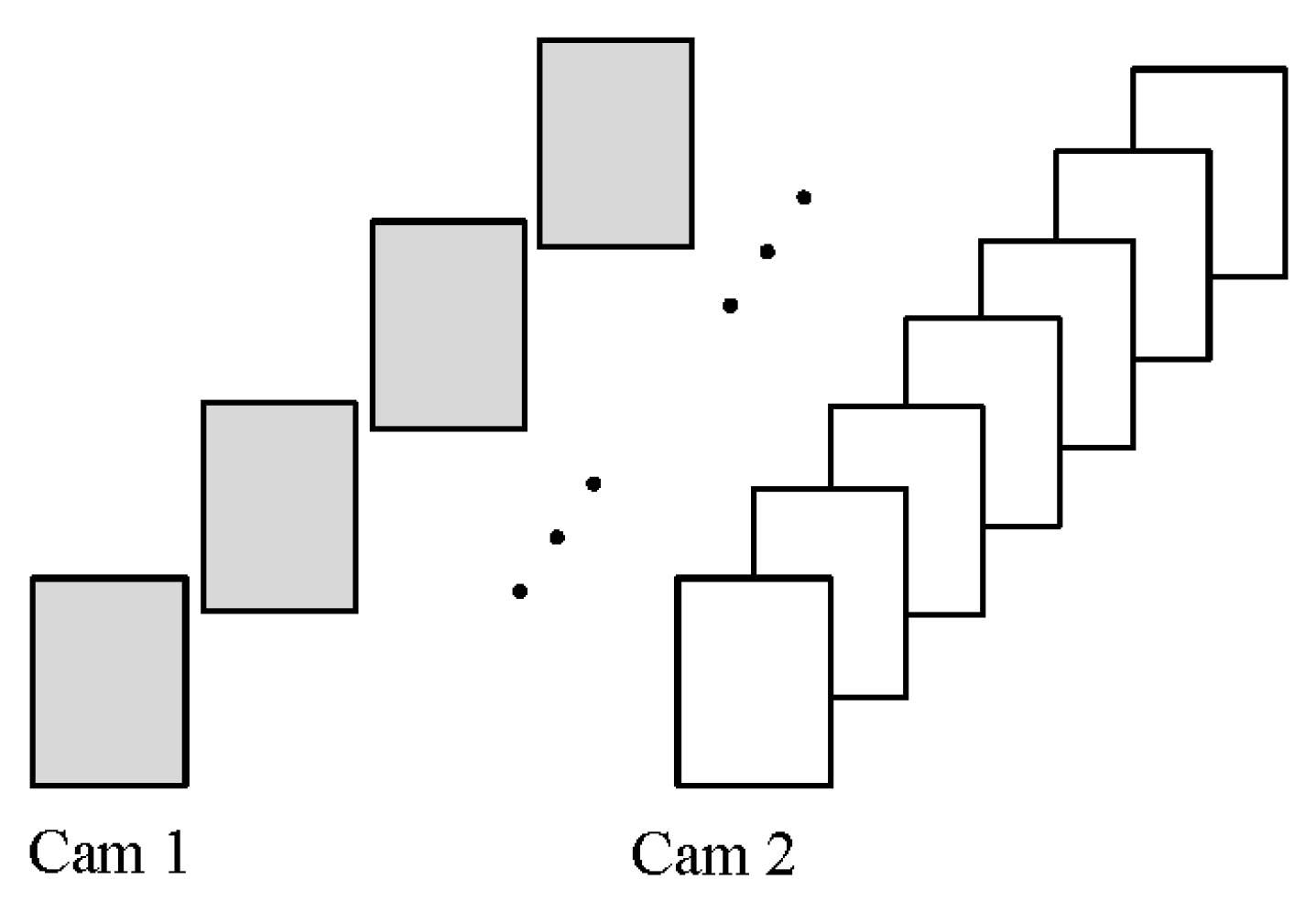

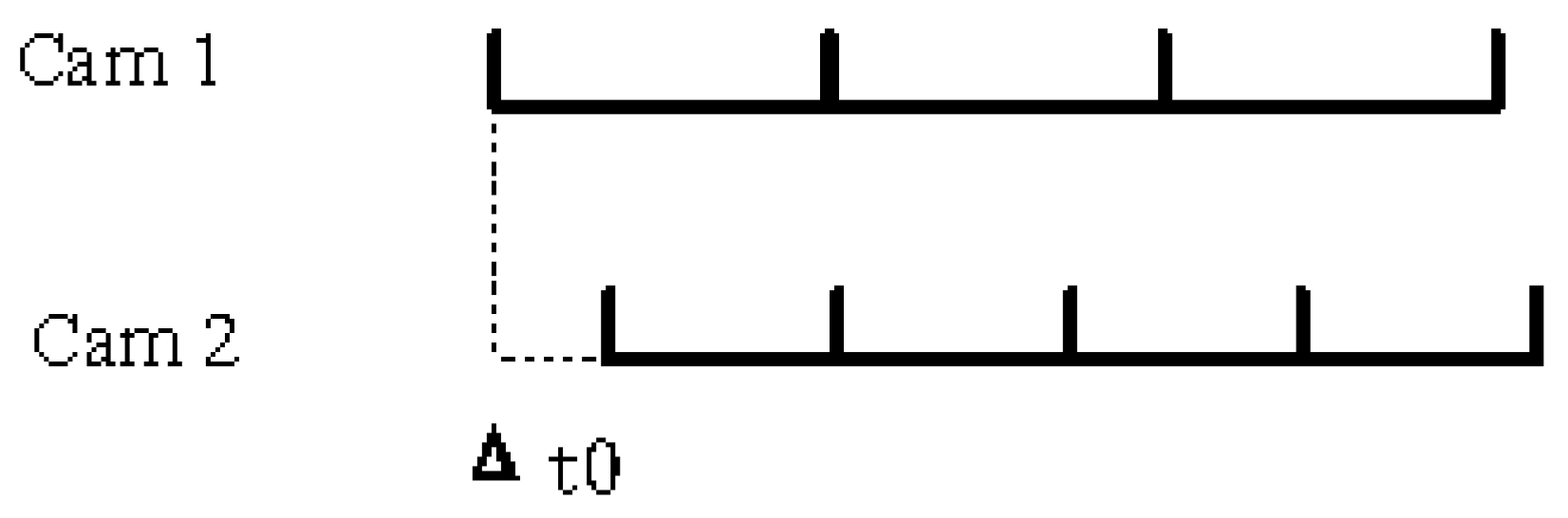

2.2.1. Pre-Processing for Two Cameras

2.2.2. Pre-Processing for Multiple Cameras

2.2.3. Loss of Marker

2.2.4. Multi-Camera Calibration Method

2.3. Three-Dimensional Localization Algorithm
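A minimal sketch of one standard way to localize a single marker from several calibrated views: linear (DLT) triangulation as described by Hartley and Zisserman [27]. It assumes each camera is summarized by a 3×4 projection matrix, that the marker's pixel coordinates have already been corrected for lens distortion, and that all observations refer to the same time instant. It is not claimed to be the localization algorithm of this paper; the function name `triangulate_dlt` and the synthetic camera setup are hypothetical.

```python
import numpy as np


def triangulate_dlt(proj_mats, pixels):
    """Linear (DLT) triangulation of one marker seen by two or more cameras.

    proj_mats: sequence of 3x4 projection matrices P_i = K_i [R_i | t_i]
    pixels:    sequence of matching, distortion-free (u_i, v_i) observations,
               all assumed to refer to the same time instant
    Returns the estimated 3D point as a length-3 array.
    """
    rows = []
    for P, (u, v) in zip(proj_mats, pixels):
        P = np.asarray(P, dtype=float)
        # From u = (P[0] X) / (P[2] X) and v = (P[1] X) / (P[2] X):
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.vstack(rows)
    # Minimize ||A X|| subject to ||X|| = 1: the right singular vector
    # belonging to the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]


# Synthetic check: two cameras with a baseline of 0.2 along the x-axis.
K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
P0 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P1 = K @ np.hstack([np.eye(3), np.array([[-0.2], [0.0], [0.0]])])
X_true = np.array([0.1, -0.05, 2.0, 1.0])  # homogeneous world point
obs = []
for P in (P0, P1):
    x = P @ X_true
    obs.append((x[0] / x[2], x[1] / x[2]))
X_hat = triangulate_dlt([P0, P1], obs)
```

With noise-free observations the linear solution recovers the point exactly; with real, noisy detections it is commonly used only as an initial guess for a nonlinear refinement that minimizes reprojection error.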

3. Results and Discussion

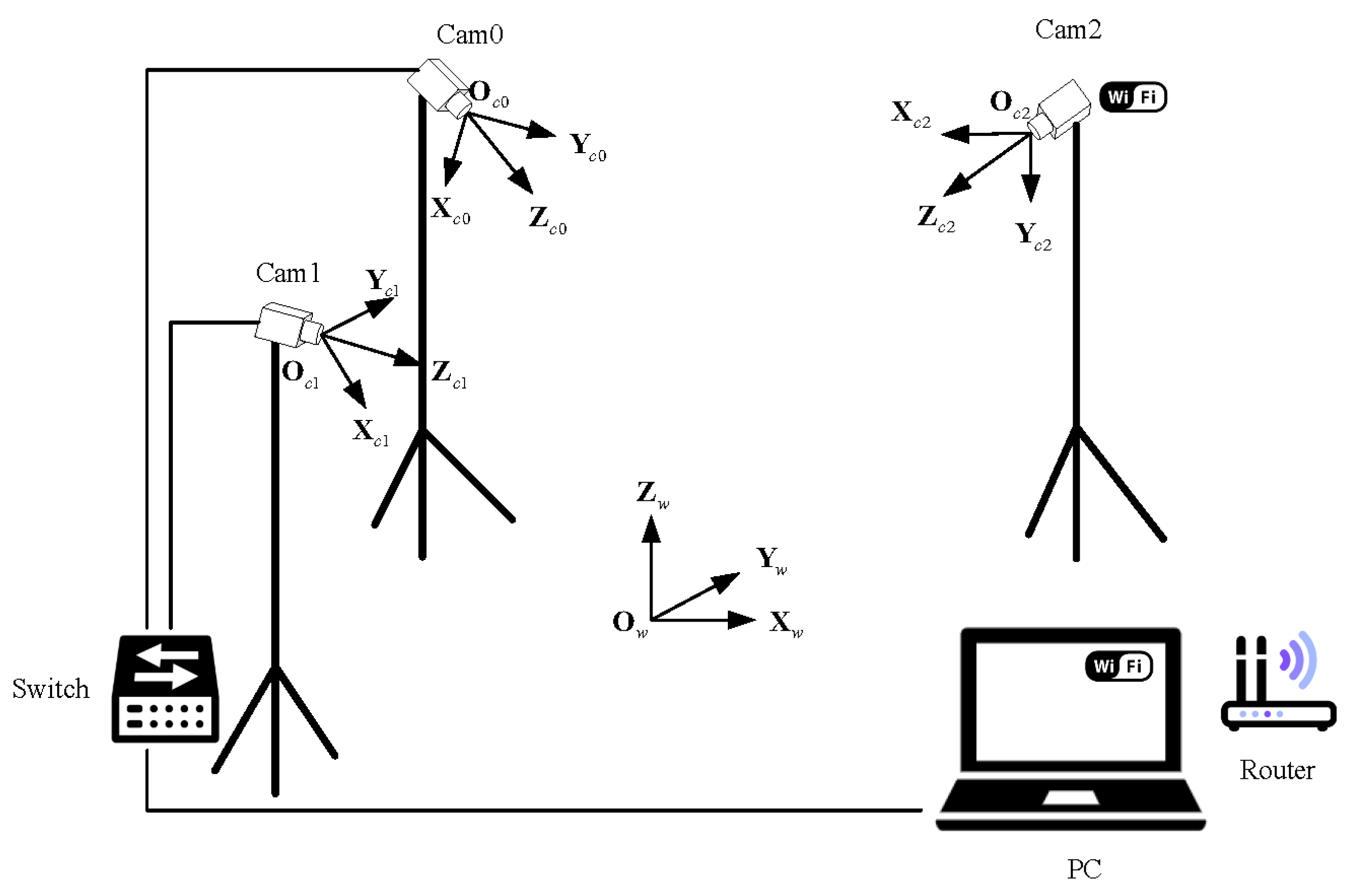

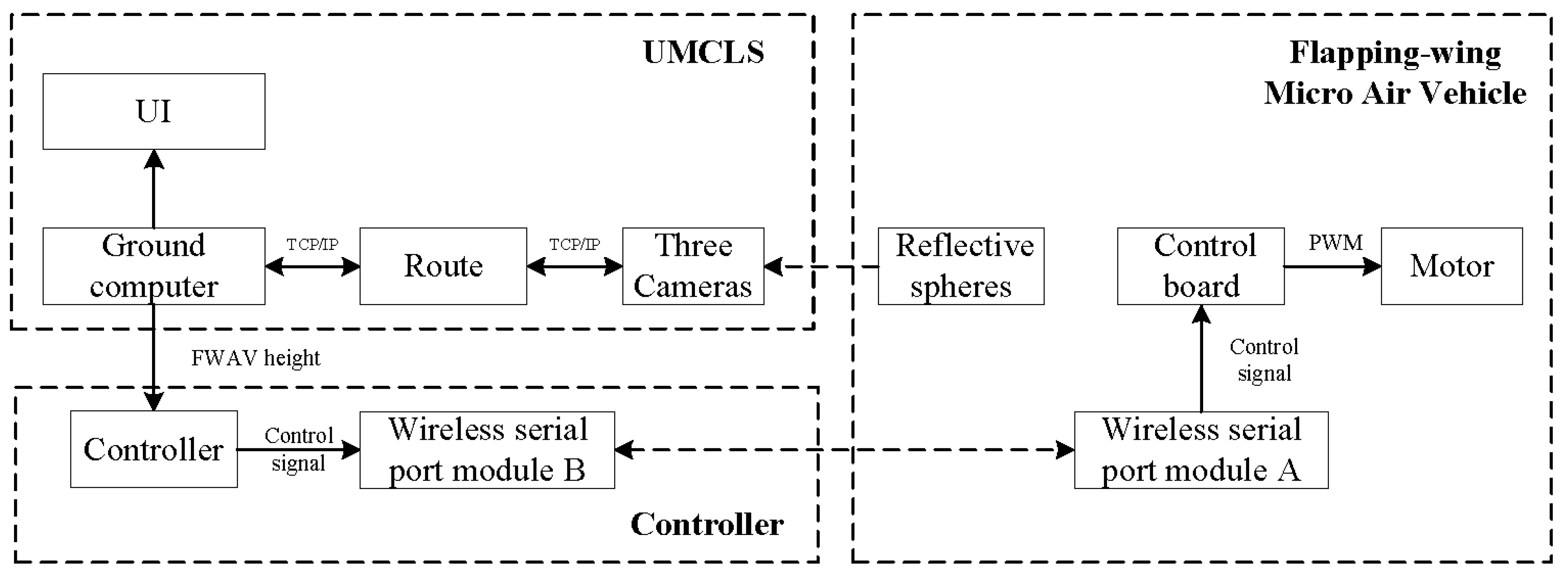

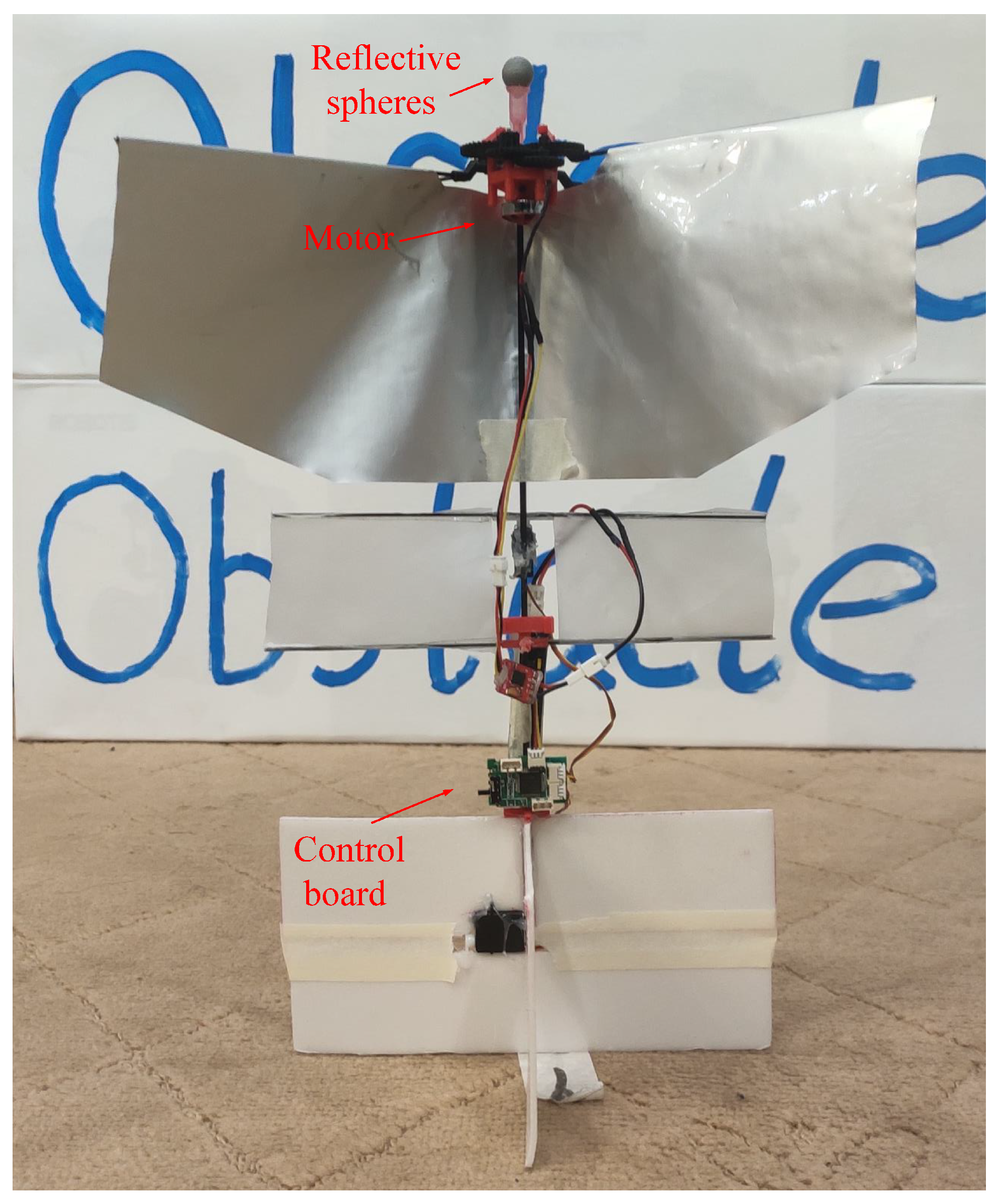

3.1. System Construction and Experimental Design

3.2. Experimental Result

3.2.1. Calibration Experiment

3.2.2. Localization Experiment

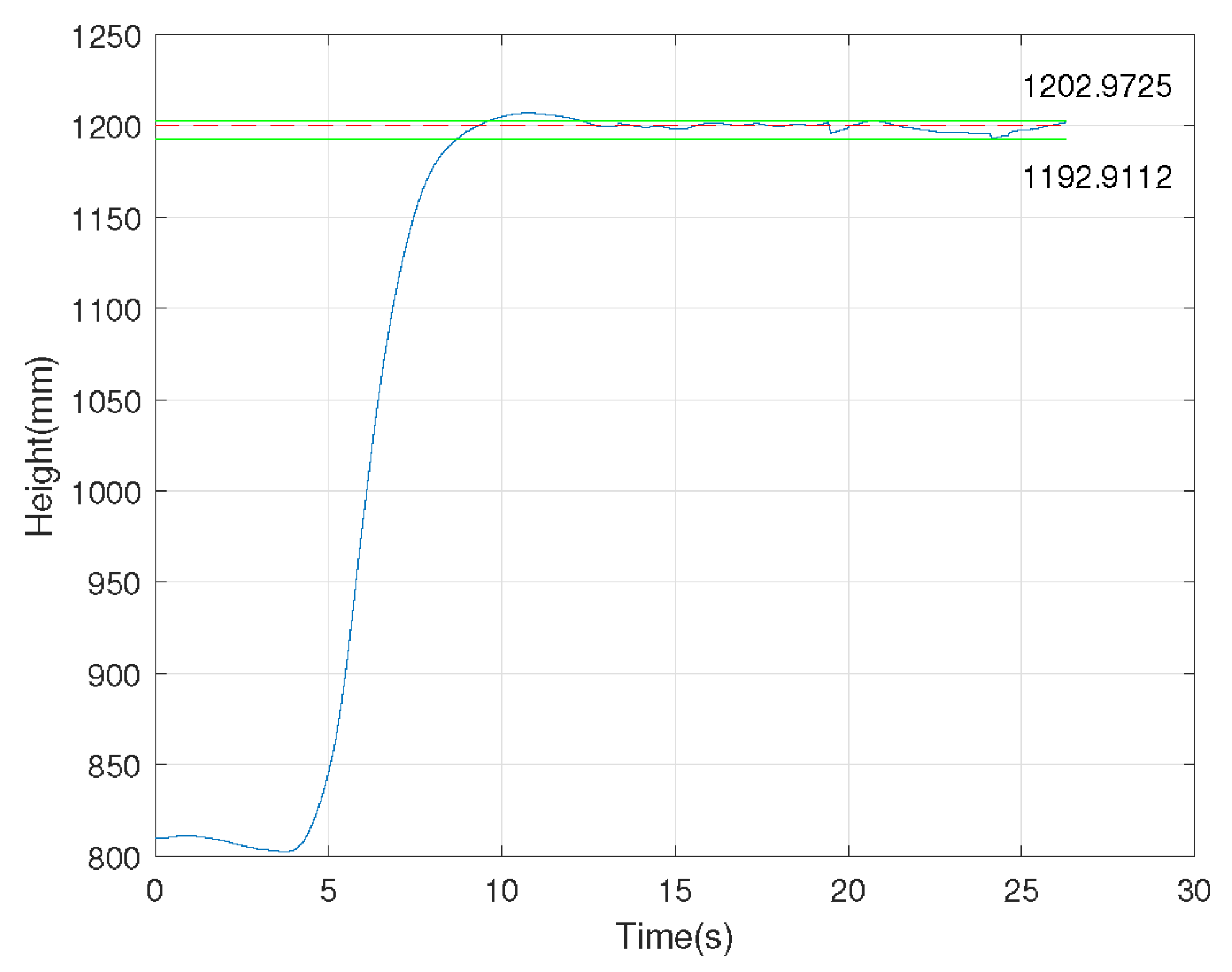

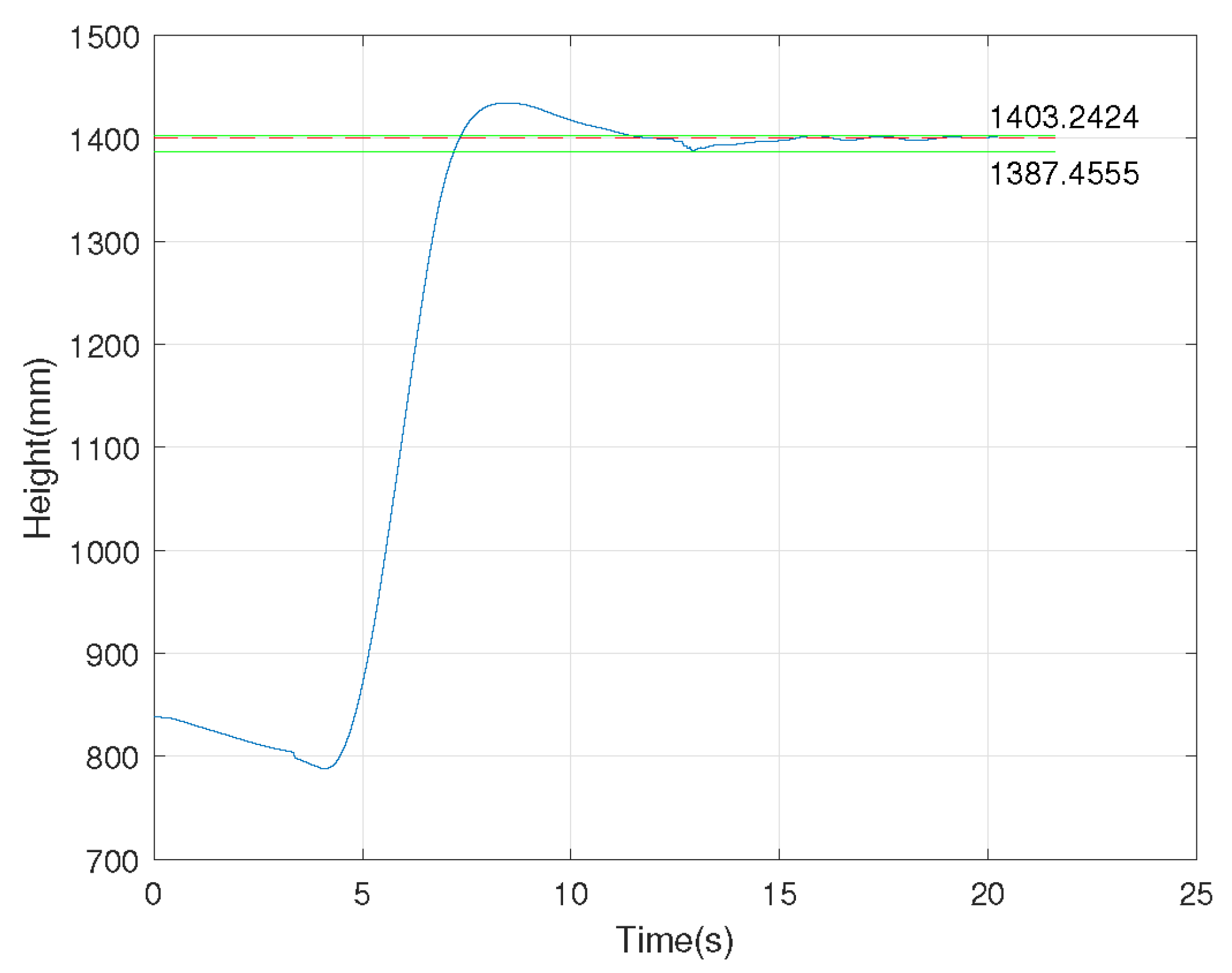

3.2.3. Fixed-Height Experiment

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Zeng, Y.; Hu, Y.; Liu, S.; Ye, J.; Han, Y.; Li, X.; Sun, N. RT3D: Real-time 3-D vehicle detection in LiDAR point cloud for autonomous driving. IEEE Robot. Autom. Lett. 2018, 3, 3434–3440.

2. Deng, H.; Fu, Q.; Quan, Q.; Yang, K.; Cai, K.-Y. Indoor multi-camera-based testbed for 3-D tracking and control of UAVs. IEEE Trans. Instrum. Meas. 2019, 69, 3139–3156.

3. Sivrikaya, F.; Yener, B. Time synchronization in sensor networks: A survey. IEEE Netw. 2004, 18, 45–50.

4. Huang, Y.; Xue, C.; Zhu, F.; Wang, W.; Zhang, Y.; Chambers, J.A. Adaptive recursive decentralized cooperative localization for multirobot systems with time-varying measurement accuracy. IEEE Trans. Instrum. Meas. 2021, 70, 1–25.

5. Puig, L.; Bastanlar, Y.; Sturm, P.; Guerrero, J.J.; Barreto, J. Calibration of central catadioptric cameras using a DLT-like approach. Int. J. Comput. Vis. 2011, 93, 101–114.

6. Du, B.; Zhu, H. Estimating fisheye camera parameters using one single image of 3D pattern. In Proceedings of the 2011 International Conference on Electric Information and Control Engineering, Wuhan, China, 15–17 April 2011; pp. 1–4.

7. Song, L.; Wu, W.; Guo, J.; Li, X. Survey on camera calibration technique. In Proceedings of the 2013 5th International Conference on Intelligent Human-Machine Systems and Cybernetics, Hangzhou, China, 26–27 August 2013; pp. 389–392.

8. Mei, C.; Rives, P. Single view point omnidirectional camera calibration from planar grids. In Proceedings of the 2007 IEEE International Conference on Robotics and Automation, Rome, Italy, 10–14 April 2007; pp. 3945–3950.

9. Deng, H.; Yang, K.; Quan, Q.; Cai, K.-Y. Accurate and flexible calibration method for a class of visual sensor networks. IEEE Sens. J. 2019, 20, 3257–3269.

10. Zhou, T.; Cheng, X.; Lin, P.; Wu, Z.; Liu, E. A general point-based method for self-calibration of terrestrial laser scanners considering stochastic information. Remote Sens. 2020, 12, 2923.

11. Faugeras, O.D.; Luong, Q.T.; Maybank, S.J. Camera self-calibration: Theory and experiments. In Proceedings of the Second European Conference on Computer Vision, Santa Margherita Ligure, Italy, 19–22 May 1992; pp. 321–334.

12. Maybank, S.J.; Faugeras, O.D. A theory of self-calibration of a moving camera. Int. J. Comput. Vis. 1992, 8, 123–151.

13. Hartley, R.I. Self-calibration of stationary cameras. Int. J. Comput. Vis. 1997, 22, 5–23.

14. Ma, S.D. A self-calibration technique for active vision systems. IEEE Trans. Robot. Autom. 1996, 12, 114–120.

15. Kwon, H.; Park, J.; Kak, A.C. A new approach for active stereo camera calibration. In Proceedings of the 2007 IEEE International Conference on Robotics and Automation, Rome, Italy, 10–14 April 2007; pp. 3180–3185.

16. Khan, A.; Aragon-Camarasa, G.; Sun, L.; Siebert, J.P. On the calibration of active binocular and RGBD vision systems for dual-arm robots. In Proceedings of the 2016 IEEE International Conference on Robotics and Biomimetics (ROBIO), Qingdao, China, 3–7 December 2016; pp. 1960–1965.

17. Zhang, Z. Camera calibration with one-dimensional objects. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 892–899.

18. Velipasalar, S.; Wolf, W.H. Frame-level temporal calibration of video sequences from unsynchronized cameras. Mach. Vis. Appl. 2008, 19, 395–409.

19. Noguchi, M.; Kato, T. Geometric and timing calibration for unsynchronized cameras using trajectories of a moving marker. In Proceedings of the 2007 IEEE Workshop on Applications of Computer Vision (WACV’07), Austin, TX, USA, 21–22 February 2007; pp. 1–6.

20. Matsumoto, H.; Sato, J.; Sakaue, F. Multiview constraints in frequency space and camera calibration from unsynchronized images. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 1601–1608.

21. Kim, J.-H.; Koo, B.-K. Convenient calibration method for unsynchronized camera networks using an inaccurate small reference object. Opt. Express 2012, 20, 25292–25310.

22. Benrhaiem, R.; Roy, S.; Meunier, J. Achieving invariance to the temporal offset of unsynchronized cameras through epipolar point-line triangulation. Mach. Vis. Appl. 2016, 27, 545–557.

23. Piao, Y.; Sato, J. Computing epipolar geometry from unsynchronized cameras. In Proceedings of the 14th International Conference on Image Analysis and Processing (ICIAP 2007), Modena, Italy, 10–14 September 2007; pp. 475–480.

24. Kannala, J.; Brandt, S.S. A generic camera model and calibration method for conventional, wide-angle, and fish-eye lenses. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 1335–1340.

25. Fu, Q.; Quan, Q.; Cai, K.-Y. Calibration of multiple fish-eye cameras using a wand. IET Comput. Vis. 2015, 9, 378–389.

26. Nister, D. An efficient solution to the five-point relative pose problem. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 756–770.

27. Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: Cambridge, UK, 2004.

28. Lourakis, M.I.A. Sparse non-linear least squares optimization for geometric vision. In Proceedings of the 11th European Conference on Computer Vision, Heraklion, Crete, Greece, 5–11 September 2010; pp. 43–56.

29. Fu, Q.; Zheng, Z.-L. A robust pose estimation method for multicopters using off-board multiple cameras. IEEE Access 2020, 8, 41814–41821.

| Experiment Number | Proposed (pixel, pixel) | Method of [9] (pixel, pixel) |
|---|---|---|
| 1 | (1.308, 1.731) | (>100, >100) |
| 2 | (0.811, 1.048) | (>100, >100) |
| 3 | (1.285, 1.560) | (>100, >100) |

| Experiment Number | Proposed (pixel, pixel) | Method of [9] (pixel, pixel) |
|---|---|---|
| 1 | (0.836, 1.098) | (>100, >100) |
| 2 | (3.404, 3.340) | (>100, >100) |
| 3 | (3.636, 4.346) | (>100, >100) |

| Experiment Number | Proposed (pixel, pixel) | Method of [9] (pixel, pixel) |
|---|---|---|
| 1 | (1.891, 2.032) | (>100, >100) |
| 2 | (0.684, 0.751) | (>100, >100) |
| 3 | (0.821, 0.794) | (>100, >100) |

| Experiment Number | Cam0 (pixel) | Cam1 (pixel) | Cam2 (pixel) |
|---|---|---|---|
| 1 | 7.780 | 2.427 | 2.743 |
| 2 | 6.382 | 4.734 | 4.445 |
| 3 | 5.329 | 2.405 | 2.650 |

| Experiment Number | Cam0 (pixel) | Cam1 (pixel) | Cam2 (pixel) |
|---|---|---|---|
| 1 | 3.850 | 3.385 | 2.936 |
| 2 | 5.314 | 1.066 | 1.143 |
| 3 | 4.247 | 2.975 | 2.539 |

| Experiment Number | Error at 60-60-110 Hz (mm) | Error at 75-75-110 Hz (mm) | Error at 90-90-110 Hz (mm) |
|---|---|---|---|
| 1 | 7.9496 | 12.1173 | 8.2264 |
| 2 | 1.3425 | 18.5684 | 1.6776 |
| 3 | 4.3363 | 5.6703 | 16.1708 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, S.; Fu, Q. Wand-Based Calibration of Unsynchronized Multiple Cameras for 3D Localization. Sensors 2024, 24, 284. https://doi.org/10.3390/s24010284
