1. Introduction

Advanced driver assistance systems (ADASs) are a group of electronic technologies that assist drivers in driving and parking functions. Through a safe human–machine interface, ADASs increase car and road safety. They use automated technology, such as sensors and cameras, to detect nearby obstacles or driver errors, and respond or issue alerts accordingly. They can enable various levels of autonomous driving, depending on the features installed in the car.

ADASs use a variety of sensors such as cameras, radar, lidar, and a combination of these, to detect objects and conditions around the vehicle. The sensors send data to a computing system, which then analyzes the data and determines the best course of action based on the algorithmic design. For instance, if a camera detects a pedestrian in the vehicle’s path, the computing system may trigger the ADAS to sound an alarm or apply the brakes.

The history of ADASs dates back to the 1970s [1,2] with the development of the first anti-lock braking system (ABS). Following a slow and steady evolution, additional features such as the lane departure warning system (LDWS) and electronic stability control (ESC) emerged in the 1990s. In recent years, ADASs have developed rapidly, with new functionalities introduced frequently and becoming increasingly prevalent in modern vehicles. They offer a variety of safety features that aid in preventing accidents, and the sensors they rely on give ADASs the potential to significantly reduce the number of traffic accidents and fatalities. A study by the Insurance Institute for Highway Safety [3] found that different uses of ADASs can reduce the risk of a fatal crash by up to 20–25%. ADASs are therefore becoming increasingly common in cars. In 2021, 33% of new cars sold in the United States had ADAS features. This number is expected to grow to 50% by 2030, as ADASs are expected to play a major role in the future of transportation [4]. By helping to prevent accidents and collisions, reducing drivers’ fatigue and stress [5,6], improving fuel efficiency [7,8], making parking easier and more convenient [9], and thereby providing peace of mind to drivers and passengers [5,6], ADASs can save lives and make our roads safer.

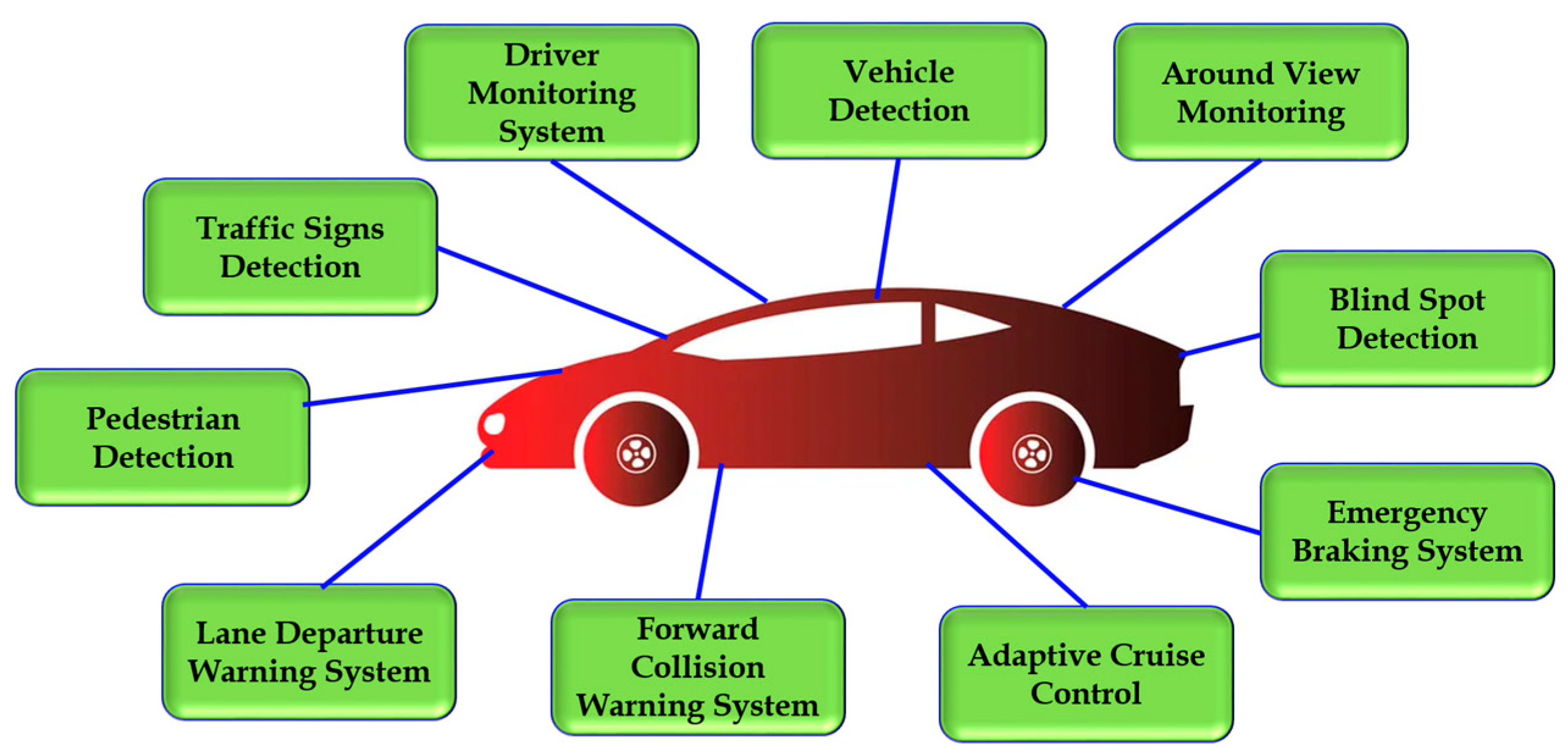

Additionally, the various features of ADASs shown in Figure 1 are a crucial part of the development of autonomous driving. Self-driving cars rely on the performance and efficiency of ADASs to detect objects and conditions in their surroundings in real-world scenarios, and they combine ADASs with artificial intelligence to drive themselves. ADASs therefore continue to play an important role in the development of autonomous driving as the technology matures.

The basic functionalities of ADASs are object detection, recognition, and tracking. Numerous algorithms allow vehicles to detect, recognize, and then track other objects on the road, such as vehicles, pedestrians, cyclists, traffic signs, lanes, and probable obstacles; to warn the driver of potential hazards; and/or to take evasive action automatically.

A number of different object detection, recognition, and tracking algorithms have been developed for ADASs. These algorithms can be broadly classified into two main categories: traditional methods and deep learning (DL) methods, as discussed in detail in Section 1.3.

This paper attempts to provide a comprehensive review of recent trends in different algorithms for various ADAS functions. The paper begins by discussing the challenges of object detection, recognition, and tracking in ADAS applications. It then discusses the different types of sensors used in ADASs, the object detection, recognition, and tracking algorithms that have been developed for various ADAS functions, and the datasets used to train and test these methods. The paper concludes by discussing future trends in object detection, recognition, and tracking for ADASs.

1.1. Basic Terminologies

Before diving into the main objective of the paper, the section below introduces some of the basic terminologies commonly used in the field of ADAS research:

Image processing is the process of manipulating digital images to improve their quality or extract useful information from them. Image processing techniques are commonly used in ADASs for object detection, recognition, and tracking tasks;

Object detection is the task of identifying and locating objects in a scene, such as vehicles, pedestrians, traffic signs, and other objects that could pose a hazard to the driver;

Object tracking involves following the movement of vehicles, pedestrians, and other objects over time to predict their future trajectories;

Image segmentation is the task of dividing an image into different regions, each of which corresponds to a different object or part of an object, such as a vehicle’s bumper, hood, and wheels, or to other objects such as pedestrians, traffic signs, lanes, and forward objects;

Feature extraction is the extraction of features such as shape, size, and color from an image or a video; these features are used to identify objects or track their movements;

Classification is the task of assigning a label, such as vehicle, pedestrian, or traffic sign, to an object or to one or more images in order to categorize the objects;

Recognition is the task of identifying an object or a region in an image by its name or other attributes.

1.2. An Overview of ADASs

The history of ADAS technology can be traced back to the 1970s with the adoption of the anti-lock braking system [10,11]. Early ADASs, including electronic stability control, anti-lock brakes, blind spot information systems, lane departure warning, adaptive cruise control, and traction control, emerged in the 1990s and 2000s [12,13]. These systems can be affected by mechanical alignment adjustments or by damage from a collision, which may require an automatic reset of these systems after a mechanical alignment is performed.

1.2.1. The Scope of ADASs

ADASs perform a variety of tasks using object detection, recognition, and tracking algorithms. The following tasks fall within the scope of ADASs: (i) vehicle detection, (ii) pedestrian detection, (iii) traffic sign detection (TSD), (iv) driver monitoring system (DMS), (v) lane departure warning system (LDWS), (vi) forward collision warning system (FCWS), (vii) blind-spot detection (BSD), (viii) emergency braking system (EBS), (ix) adaptive cruise control (ACC), and (x) around view monitoring (AVM).

These are some of the most important of the many ADAS features that rely on detection, recognition, and tracking algorithms. These algorithms are constantly being improved as the demand for safer vehicles continues to grow.

1.2.2. The Objectives of Object Detection, Recognition, and Tracking in ADASs

An ADAS has various functions with different objectives, which can be listed as follows:

Improving road safety: ADASs can aid in improving road safety by reducing the number of accidents; this is achieved by warning drivers of potential hazards and by taking corrective actions to avoid collisions. For example, a LDWS can warn the driver if they are about to drift out of their lane, while a forward collision warning system can warn the driver if they are about to collide with another vehicle;

Reducing driver workload: ADASs can help to reduce driver workload by automating some of their driving tasks. This can help to make driving safer and more enjoyable. For example, ACC can automatically maintain a safe distance between the vehicle and the vehicle in front of it, and lane-keeping assist can automatically keep the vehicle centered in its lane;

Increasing fuel efficiency: ADASs can help to increase fuel efficiency by reducing the need for the driver to brake and accelerate, which is achieved by maintaining a constant speed and by avoiding sudden speed changes. For example, ACC can automatically adjust the speed of the vehicle to maintain a safe distance from the vehicle in front of it, which can help to reduce fuel consumption;

Providing information about the road environment: ADASs can provide drivers with more information about the road environment, such as the speed of other vehicles, the distance to the nearest object, traffic signs, and the presence of pedestrians or cyclists. This information can help drivers to make better decisions about how to drive and can help to reduce the risk of accidents;

Assisting drivers with difficult driving tasks: ADASs can assist drivers with difficult driving tasks, such as parking, merging onto a highway, and driving in bad weather conditions, thereby reducing driver workload and enabling safer driving;

Ensuring a comfortable and enjoyable driving experience: ADASs can provide a more comfortable and enjoyable driving experience by reducing the stress and fatigue that drivers experience. This is achieved by automating some of the tasks involved in driving, such as maintaining a constant speed and avoiding sudden changes in speed.

The ADAS algorithms are designed to achieve these objectives by using sensors, such as cameras, radar, lidar, and now a combination of these, to collect data about the road environment. The data thus obtained are processed by the algorithms as per their design to identify and track objects, predict the future movement of objects, and warn the driver of potential hazards. These ADAS algorithms are constantly being improved as new technologies are being developed. Continuous and consistent advancements in these technologies are making ADASs even more capable of improving road safety and reducing drivers’ workloads.

1.2.3. The Challenges of ADASs

The essential functions of ADASs, namely object detection, recognition, and tracking, allow ADASs to identify and track objects in the vehicle’s surroundings, such as other vehicles, pedestrians, cyclists, and sometimes random objects and obstacles. With this information, ADASs can prevent accidents, keep the vehicle in its lane, and provide other driver assistance features. However, there are various challenges associated with object detection, recognition, and tracking in ADASs, such as:

Varying environmental conditions: ADASs must be able to operate in a variety of environmental conditions, including different lighting conditions like bright sunlight, dark shadows, fog, daytime, nighttime, etc., different weather conditions such as drizzle, rain, snow, and so on, along with various road conditions including dirt, gravel, etc.;

Occlusion: objects on the road in real scenarios can often be occluded by other objects, such as other vehicles, pedestrians, or trees, making it difficult for ADASs to detect and track objects;

Deformation: objects on the road can often be deformed, such as when a vehicle is turning or when a pedestrian is walking, causing difficulties for ADASs in detecting and tracking objects;

Scale: objects on the road can vary greatly in size, from small pedestrians to large trucks, inducing difficulties for ADASs in detecting and tracking objects of all sizes;

Multi-object tracking: ADASs must be able to track multiple objects simultaneously, and this can be challenging as objects move and interact with each other in complex ways in real-world scenarios;

Real-time performance: most importantly, ADASs must be able to detect, recognize, and track objects in real time. This is essential for safety-critical applications, as delays in detection or tracking can lead to accidents and make the system unreliable.

Researchers are working on developing newer algorithms and improving the existing algorithms and techniques to address these challenges. Due to this, ADASs are becoming increasingly capable of detecting and tracking objects in a variety of challenging conditions.

1.2.4. The Essentials of ADASs

The previous section discussed the challenges faced by different ADAS methods; this section discusses the requirements of ADASs [14,15], which must be met before the aforementioned issues can be resolved. In other words, ADAS algorithms face several additional difficulties while working to overcome the challenges discussed in the previous section:

The need for accurate sensors: ADASs rely on a variety of sensors to detect and track objects on the road. These sensors must be accurate and reliable to provide accurate information to the ADAS. Nevertheless, sensors are usually affected by factors such as weather, lighting, and the environment, causing difficulties for sensors in providing accurate information, and thus leading to errors in the ADASs;

The need for reliable algorithms: ADASs also rely on a variety of algorithms to process the data from the sensors and make decisions about how to respond to objects on the road. These algorithms must be reliable to make accurate and timely decisions. However, these algorithms can also be affected by factors such as the complexity of the environment and the number of objects on the road. This makes it difficult for algorithms to make accurate decisions, leading to errors in the ADAS;

The need for integration with other systems: ADASs must be integrated with different systems in the vehicle, such as the braking system and the steering system. This integration is necessary for the ADAS to take action to avoid probable accidents. Nonetheless, integration is complex and time-consuming, resulting in delays in the deployment of ADASs;

The cost of ADASs: ADASs are expensive to develop and deploy, making it difficult for some manufacturers to offer ADASs as standard features in their vehicles. As a result, ADASs are often only available as optional features, which can make them less accessible to all drivers;

The acceptance of ADASs by drivers: Some drivers may still be hesitant to adopt ADASs because they worry about the technology or they do not trust the technology. This will result in difficulties persuading drivers to opt for vehicles with ADASs.

Despite these challenges, ADASs have the potential to significantly improve road safety. As the technology continues to improve, ADASs are likely to become more affordable and more widely accepted by drivers. This will help to make roads safer for everyone.

1.3. ADAS Algorithms: Traditional vs. Deep Learning

There are two main types of algorithms used in ADASs: traditional algorithms and DL algorithms. In this section, we discuss the advantages and disadvantages of traditional and DL algorithms for ADASs and also some of the challenges involved in developing and deploying ADASs.

1.3.1. Traditional Algorithms

Traditional methods for object detection, recognition, and tracking are the most common type of algorithm used in ADASs. They are based on hand-crafted features, rules, and heuristics designed to capture the distinctive characteristics of different objects. For example, a feature for detecting vehicles might be the presence of four wheels and a windshield. These algorithms use a set of pre-defined rules to determine what objects are present in the environment and how to respond to them. For instance, a traditional lane-keeping algorithm might use a rule such as ‘if the vehicle is drifting out of its lane, then turn the steering wheel in the opposite direction’, and a blind-spot rule might state that ‘if a vehicle is detected in the vehicle’s blind spot, then the driver should be warned’.
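The two example rules quoted above can be sketched as a small rule-based routine. This is only an illustrative sketch: the `VehicleState` fields, the drift threshold, and the action names are hypothetical and are not taken from any production ADAS.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VehicleState:
    """Hypothetical, simplified inputs a rule-based ADAS might receive."""
    lateral_offset_m: float    # signed distance from lane centre (+ = drifting right)
    blind_spot_occupied: bool  # True if a vehicle is detected in the blind spot


# Assumed threshold, chosen only for illustration.
LANE_DRIFT_THRESHOLD_M = 0.5


def apply_rules(state: VehicleState) -> List[str]:
    """Return the actions triggered by the hard-coded rules."""
    actions = []
    # Rule 1: if the vehicle drifts out of its lane, steer in the opposite direction.
    if state.lateral_offset_m > LANE_DRIFT_THRESHOLD_M:
        actions.append("steer_left")
    elif state.lateral_offset_m < -LANE_DRIFT_THRESHOLD_M:
        actions.append("steer_right")
    # Rule 2: if a vehicle is in the blind spot, warn the driver.
    if state.blind_spot_occupied:
        actions.append("warn_blind_spot")
    return actions


print(apply_rules(VehicleState(lateral_offset_m=0.8, blind_spot_occupied=True)))
```

Any situation not covered by one of the two `if` branches produces no action, which illustrates why such methods generalize poorly to cases that no rule anticipates.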

Traditional methods are less complex than DL algorithms, making them easier to develop, and are very effective in certain cases, but they are difficult to generalize to new objects or situations because they are limited by the rules that are hard-coded into them. If a new object, obstacle, or hazard is not covered by a rule, then the algorithm may not be able to detect it. Some of the basic traditional methods-based algorithms are:

These traditional object detection, recognition, and tracking algorithms are effective for a variety of ADAS applications. However, they can be computationally expensive and may not be able to handle challenging conditions, such as occlusion or low lighting.

In recent years, there has been a trend towards using DL algorithms for object detection, recognition, and tracking in ADASs. DL algorithms have been shown to be more accurate than traditional algorithms, and they can handle challenging conditions more effectively.

1.3.2. Deep Learning Algorithms

Inspired by the human brain, DL methods for object detection, recognition, and tracking use artificial neural networks (ANNs) to learn the features that are important for identifying different objects. ANNs are composed of layers of interconnected nodes. Each node performs a simple calculation, and the outputs of the nodes in one layer are used as the inputs to the nodes in the next layer.
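The layer-by-layer computation described above can be illustrated with a minimal forward pass through a toy fully connected network. The weights, biases, and the choice of a sigmoid activation are assumptions made purely for illustration; a real ADAS network would be far larger and its weights would be learned from data.

```python
import math


def sigmoid(x):
    """Squash a weighted sum into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense_layer(inputs, weights, biases):
    """One layer: each node computes a weighted sum of its inputs plus a bias."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Feed the outputs of each layer into the next layer."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Toy network (hypothetical weights): 2 inputs -> 2 hidden nodes -> 1 output node.
layers = [
    ([[0.5, -0.5], [1.0, 1.0]], [0.0, 0.0]),
    ([[1.0, -1.0]], [0.0]),
]
output = forward([1.0, 1.0], layers)
print(output)
```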

DL algorithms can learn to detect objects, obstacles, and hazards from large labeled datasets, usually collected using a variety of sensors. The algorithm is trained to associate specific patterns in the data with specific objects or hazards. DL algorithms are generally more complex than traditional algorithms, but they can achieve higher accuracy because they are not limited by hand-crafted rules: they can learn to detect objects and hazards not covered by any rule, and they handle challenging conditions, such as occlusion or low lighting, more effectively. Some of the standard DL-based algorithms are discussed below:

Object detection: DL object detection algorithms commonly use a convolutional neural network (CNN) to extract features from an image. The CNN is then trained on a dataset of images that have been labeled with the objects that they contain. Once the CNN is trained, it can be used to detect objects in new images;

Object recognition: DL object recognition algorithms also conventionally use a CNN to extract features from an image. However, the CNN is trained on a dataset of images that have been labeled with the class of each object. The trained CNN can be used to recognize the class of objects in new images;

Object tracking: DL object tracking algorithms typically use a combination of CNNs and Kalman filters [21]. The CNN is used to extract features from an image and the Kalman filter is used to track the state of the object over time.
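To make the Kalman filter's role in the tracking item above concrete, the following is a minimal sketch of a one-dimensional constant-velocity filter. It covers only the filtering step: the CNN detections are replaced by a plain list of position measurements, and the class name, noise variances, and initial covariance are assumptions chosen for illustration.

```python
class KalmanFilter1D:
    """Constant-velocity Kalman filter with state [position, velocity]."""

    def __init__(self, position, velocity=0.0, process_var=1e-2, meas_var=1.0):
        self.x = [position, velocity]
        self.P = [[1.0, 0.0], [0.0, 1.0]]  # assumed initial state covariance
        self.q = process_var               # process noise added to each diagonal term
        self.r = meas_var                  # variance of the position measurement

    def predict(self, dt):
        """Propagate the state and covariance forward by dt seconds."""
        p, v = self.x
        (p00, p01), (p10, p11) = self.P
        self.x = [p + dt * v, v]
        a = p00 + dt * p10
        b = p01 + dt * p11
        # P <- F P F^T + Q with F = [[1, dt], [0, 1]]
        self.P = [[a + dt * b + self.q, b],
                  [p10 + dt * p11, p11 + self.q]]

    def update(self, z):
        """Correct the prediction with a position measurement z."""
        (p00, p01), (p10, p11) = self.P
        innovation = z - self.x[0]
        s = p00 + self.r            # innovation variance
        k0, k1 = p00 / s, p10 / s   # Kalman gain for position and velocity
        self.x = [self.x[0] + k0 * innovation, self.x[1] + k1 * innovation]
        self.P = [[(1 - k0) * p00, (1 - k0) * p01],
                  [p10 - k1 * p00, p11 - k1 * p01]]


def track(measurements, dt=1.0):
    """Run the filter over a sequence of position measurements."""
    kf = KalmanFilter1D(position=0.0)
    states = []
    for z in measurements:
        kf.predict(dt)
        kf.update(z)
        states.append(tuple(kf.x))
    return states


# Object moving at 2 m/s, measured once per second (noise-free for clarity).
states = track([2.0 * k for k in range(1, 21)], dt=1.0)
print(states[-1])
```

Although only positions are measured, the filter also recovers a velocity estimate, which is what allows a tracker to extrapolate an object's future position.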

2. Sensors Used in Object Detection, Recognition, and Tracking Algorithms of ADASs

Several sensors can be used for object detection, recognition, and tracking in ADASs. The most common sensors include cameras, radars, and lidars. In addition to these sensors, some other sensors can also be used, such as:

Ultrasonic sensors: used to detect objects that are close to the vehicle, aiding in preventing collisions with pedestrians or other vehicles;

Inertial measurement units (IMUs): used to track the movement of the vehicle, which can improve the accuracy of object detection and tracking;

GPS sensors: used to determine the position of the vehicle, to track its movement, and to identify objects that are in the vehicle’s path;

Gyroscope sensors: used to track the orientation of the vehicle, which can improve the accuracy of object detection and tracking algorithms.

The choice of sensors for object detection, recognition, and tracking in ADASs depends on the specific application. For instance, a system that is designed to detect pedestrians may use a combination of cameras and radar, while a system that is designed to track the movement of other vehicles may use a combination of radar and lidar.

Most recent state-of-the-art methods use a combination of multiple sensors, as this improves the accuracy of object detection, recognition, and tracking algorithms. Combining sensors exploits the strengths of each sensor while compensating for the weaknesses of the others.

2.1. Cameras, Radar, and Lidar

Cameras, radar, and lidar are the most common types of sensors used in ADASs. There are two main types of cameras: monocular cameras, the most common type used in ADASs, have a single lens and can only see in two dimensions, while stereo cameras have two lenses and can see in three dimensions. Radars and lidars have no such distinct types. These sensors are used in ADASs in a variety of ways, including:

Object detection: the sensors are used to detect objects in the road environment such as pedestrians, vehicles, cyclists, and traffic signs, and then warn the driver of potential hazards or take corrective actions like braking or steering control using the gathered information;

Object recognition: the sensors are used to recognize the class of an object, such as a pedestrian, a vehicle, a cyclist, or a traffic sign. This information can be used to provide the driver with more information about the hazard, such as the type of vehicle, the type of traffic sign and the road condition ahead, or the speed of a pedestrian;

Object tracking: the sensors can be used to track the movement of an object over time. This is used to predict the future position of the object, which in turn can be used to warn the driver of potential collisions.

The advantages of cameras are their low cost, ease of installation, wide field of view (FOV), and high resolution, but they are easily impacted by weather conditions, occlusion of objects, and varying light conditions. On the other hand, both radars and lidars are resistant to varying weather conditions such as rain, snow, fog, and so on. While radars are occlusion-resistant and provide a longer range than cameras, they fail to provide as many details as cameras and are more expensive than cameras. Compared to both cameras and radars, lidars provide very accurate information about the distance and shape of objects, even in difficult conditions, and possess 3D capabilities, enabling them to create a 3D map of the road environment that makes it easier and more efficient to identify and track objects that are occluded by other objects. Nonetheless, lidars are more expensive than cameras and radars, and lidar systems are more complex, making them more challenging to install and maintain. Cameras are used in almost all ADAS functions, while radars and lidars are used in FCWS, LDWS, BSD, and ACC, with lidars having an additional application in autonomous driving.

All the above features allow these versatile sensors to be used for a variety of object detection, recognition, and tracking tasks in ADASs. However, some challenges need to be addressed before they can be used effectively in all conditions. Hence, some researchers have attempted to use a combination of these sensors, as discussed in the following section.

2.2. Sensor Fusion

Sensor fusion is the process of combining data from multiple sensors to create a more complete and accurate picture of the world. This can be used to improve the performance of object detection, recognition, and tracking algorithms in ADASs.

Numerous different sensor fusion techniques can be used for ADASs, namely:

Data-level fusion: a technique that combines data from different sensors at the data level by averaging the data from different sensors, or by using more sophisticated techniques such as Kalman filtering [21,22];

Feature-level fusion: combines features extracted from data from different sensors by combining the features, or by using more sophisticated techniques such as Bayesian fusion [23,24];

Decision-level fusion: combines decisions made by different sensors by taking the majority vote, or by using more sophisticated techniques such as the Dempster–Shafer theory [25,26,27].
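The simplest variants of data-level and decision-level fusion listed above can be sketched as follows. The function names and the example values are hypothetical. For data-level fusion, the sketch uses an inverse-variance weighted average, which is an assumption on our part; it reduces to the plain average mentioned above when all sensors are equally reliable.

```python
from collections import Counter


def fuse_measurements(estimates):
    """Data-level fusion of (value, variance) pairs from different sensors.

    Each measurement is weighted by the inverse of its variance, so more
    reliable sensors contribute more to the fused value.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    fused_variance = 1.0 / total
    return fused_value, fused_variance


def majority_vote(decisions):
    """Decision-level fusion: return the label chosen by most sensors.

    On a tie, the label that appears first in `decisions` is returned.
    """
    return Counter(decisions).most_common(1)[0][0]


# Hypothetical example: camera and radar range estimates in metres.
print(fuse_measurements([(10.0, 1.0), (12.0, 1.0)]))
# Hypothetical example: per-sensor class decisions for the same object.
print(majority_vote(["pedestrian", "pedestrian", "cyclist"]))
```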

The choice of sensor fusion technique is application-specific. A data-level fusion may be a good choice for applications where accuracy is critical, whereas a decision-level fusion may be a good choice for applications where speed is critical.

The benefits of using sensor fusion for object detection, recognition, and tracking in ADASs can be listed as [15,28,29,30,31]:

Improved accuracy: sensor fusion improves the accuracy of object detection, recognition, and tracking algorithms by combining the strengths of different sensors;

Improved robustness: sensor fusion also improves the robustness of object detection, recognition, and tracking algorithms by making them less susceptible to noise and other disturbances;

Reduced computational complexity: sensor fusion also reduces the computational complexity of object detection, recognition, and tracking algorithms, as the data from multiple sensors can be processed together, resulting in saved time and processing power.

Overall, sensor fusion is a promising, powerful technique that has the potential to make ADAS object detection, recognition, and tracking algorithms much safer and more reliable. Although sensor fusion is advantageous, it has some challenges [15,32], such as:

Data compatibility: the data from different sensors must be compatible to be fused, implying the data must be in the same format and have the same resolution;

Sensor calibration: the sensors must be calibrated to ensure that they are providing accurate data, which can be challenging, especially if the sensors are in motion;

Computational complexity: Sensor fusion is computationally expensive, especially if a large number of sensors are being fused. This can limit the use of sensor fusion in real-time applications.

Despite these challenges, sensor fusion shows great potential to improve the performance of ADAS object detection, recognition, and tracking algorithms. As sensor technology continues to improve, sensor fusion will become even more powerful and efficient, and it will likely become a standard feature in ADASs.

The following section discusses the most commonly fused sensors in ADASs.

2.2.1. Camera–Radar Fusion

Camera–radar fusion is a technique that combines data from cameras and radar sensors to improve the performance of object detection, recognition, and tracking algorithms in ADASs. Cameras provide good image quality but are susceptible to weather conditions, whereas radar sensors compensate by seeing through adverse weather. Data-level fusion and decision-level fusion are the two main approaches to camera–radar fusion.

2.2.2. Camera–Lidar Fusion

Camera–lidar fusion is a technique that combines data from cameras and lidar sensors to improve the performance of object detection, recognition, and tracking algorithms in ADASs. Cameras are good at providing detailed information about the appearance of objects, while lidar sensors are good at providing information about the distance and shape of objects. By combining data from these two sensors, it is feasible to create a complete and accurate picture of the object, leading to improved accuracy in object detection and tracking.

2.2.3. Radar–Lidar Fusion

Radar–lidar fusion is a technique that combines the data from radar and lidar sensors, improving the performance of ADAS algorithms. Radar sensors use radio waves to detect objects at long distances, while lidar sensors use lasers to detect objects in detail. By fusing the data from the two sensors, the system can obtain a more complete and accurate view of the environment.

2.2.4. Lidar–Lidar Fusion

Lidar–lidar fusion is a technique that combines data from two or more lidar sensors, improving the performance of object detection, recognition, and tracking algorithms in ADASs. Lidar sensors are good at providing information about the distance and shape of objects, but they can be limited in their ability to detect objects that are close to the vehicle or that are occluded by other objects. By fusing data from multiple lidar sensors, it is possible to create a complete and accurate picture of the environment, which can lead to improved accuracy in object detection and tracking.

The advantages and disadvantages of the various ADAS sensors discussed above are listed in Table 1.

3. Systematic Literature Review

The main objective of this review is to determine the latest trends and approaches implemented for different ADAS methods in autonomous vehicles and to discuss their achievements. This paper also attempts to evaluate the methods, their implementation, and their applications in order to furnish a state-of-the-art understanding for new researchers in the fields of computer vision and autonomous vehicles.

The writing of this paper follows a planned, conducted, and observed process. The planning phase involved clarifying the research questions and review protocol, which comprised identifying the publications’ sources, keywords to search for, and selection criteria. The conducting phase involved analyzing, extracting, and synthesizing the literature collection. This included identifying the key themes and findings from the literature and drawing conclusions that address the research questions and objectives. The observed stage contained the review results, addressing the summary of the key findings as well as any limitations or implications of the study.

3.1. Research Questions (RQs)

The main objective of this review is to determine the trend of the methods implemented for different ADAS methods in the field of autonomous vehicles, as well as the achievements of the latest techniques. Additionally, we aim to provide a valuable foundation for the methods, challenges, and opportunities, thus providing state-of-the-art knowledge to support new research in the field of computer vision and ADASs.

Two research questions (RQs) have been defined as follows:

What techniques have been implemented for different ADAS methods in an autonomous vehicle?

What dataset was applied for the network training, validation, and testing?

A focused approach has been adopted while scanning the literature. First, each article was reviewed to see if it answered the earlier questions. The information acquired was then presented comprehensively to achieve the vision of this article.

3.2. Review Protocol

Below, we have listed the literature search sources, search terms, and inclusion and exclusion selection criteria, as well as the technique of literature collection used for this systematic literature review (SLR).

3.2.1. Search Sources

IEEE Xplore and MDPI were chosen as the databases from which the data were extracted.

3.2.2. Search Terms

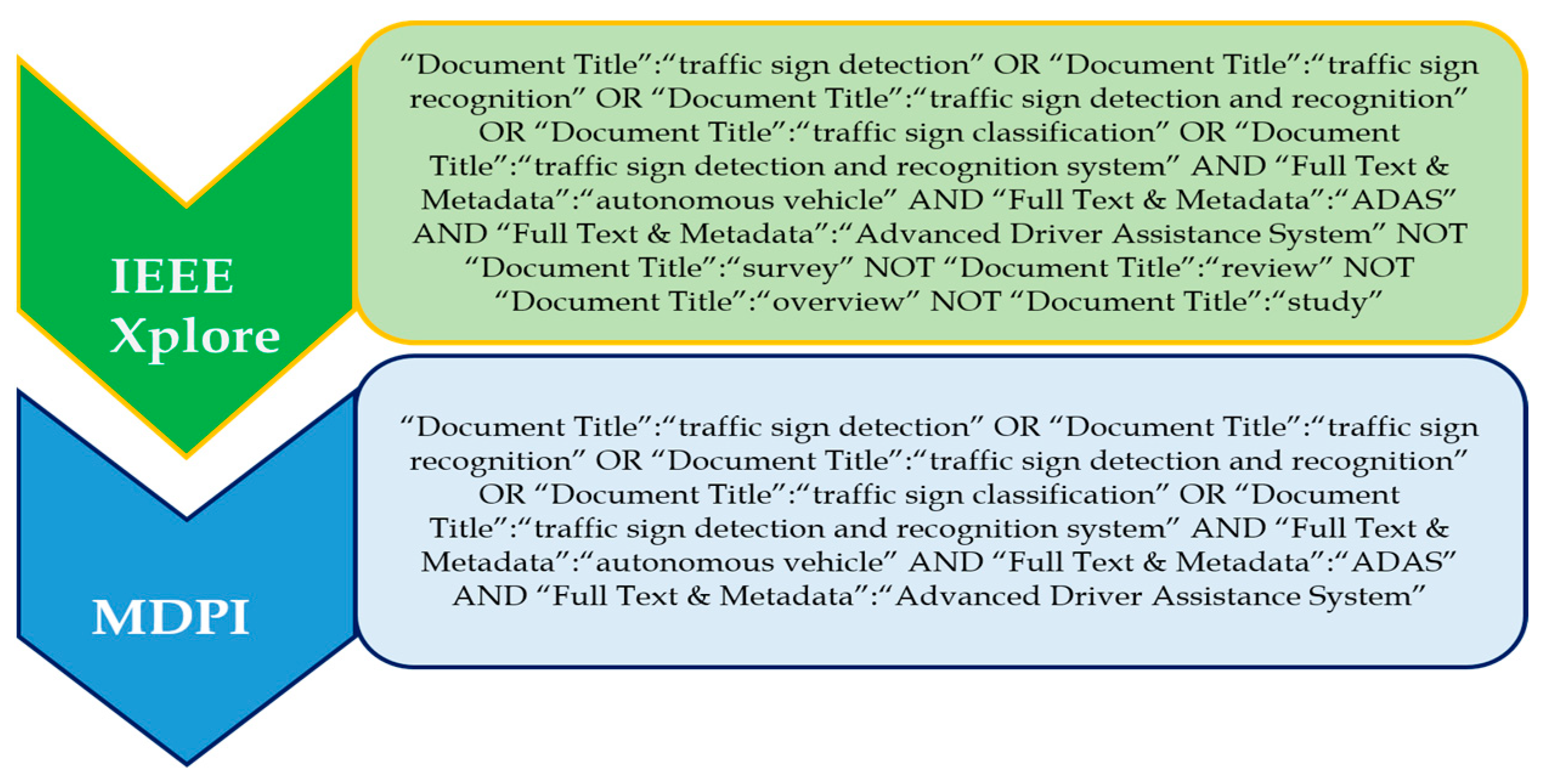

Different sets of search terms were used to investigate the various ADAS methods presented in this research. The OR, AND, and NOT operators were used to select and combine the most relevant and commonly used phrases, and the AND operator combined individual search strings into a search query. The search terms used for each ADAS method are listed in the corresponding section of this paper.
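As a rough illustration of how such operators combine search strings into a single query, the sketch below builds a Boolean query from groups of synonyms. The helper names and the example terms are hypothetical and are not the terms used in this review; the exact query syntax accepted by each database may also differ.

```python
def any_of(terms):
    """Join synonymous phrases with OR and wrap them in parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(groups, excluded=()):
    """Combine OR-groups with AND, then append NOT clauses for excluded terms."""
    query = " AND ".join(any_of(group) for group in groups)
    for term in excluded:
        query += f' NOT "{term}"'
    return query


# Hypothetical example terms, for illustration only.
query = build_query(
    [["vehicle detection", "car detection"], ["deep learning"]],
    excluded=["survey"],
)
print(query)
# ("vehicle detection" OR "car detection") AND ("deep learning") NOT "survey"
```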

3.2.3. Inclusion Criteria

The study covered all primary publications published in English that discussed the different ADAS methods, or any task related to them, covered in this paper. There were no constraints on subject categories or time frames during the search, to keep the search spectrum broad. The selected articles were among the most cited journal papers published across four years, from 2019 to 2022.

In addition, the below parameters were also considered while selecting the papers:

Relevance: the research papers should be relevant to the topic of the review, covering its most important aspects and providing a comprehensive overview of the current state of knowledge;

Quality: the research papers should be of high quality, that is, well argued and well supported by implementation details and experimental results;

Coverage: the research papers should include a wide range of perspectives on the topic and not be limited to a single viewpoint or approach;

Methodology: the methodology presented in the research papers should be sound, with rigorous research methods and clear evidence to support the conclusions;

Readability: the research papers should be well written in a clear and concise style so that the information is accessible and understandable to a wide audience;

Impact: the research papers should have had a significant impact on the field, having been cited by other researchers and used to inform new research.

3.2.4. Exclusion Criteria

Articles written in languages other than English were not considered. The exclusion criteria also included short papers, such as abstracts or expanded abstracts, earlier published versions of the detailed works, and survey/review papers.
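The inclusion and exclusion rules above can be read as a simple screening filter. The sketch below is only an illustration under stated assumptions: the `Paper` record, its field names, and the `kind` labels are hypothetical, and the citation ranking and the exclusion of earlier versions of detailed works are not modeled.

```python
from dataclasses import dataclass


@dataclass
class Paper:
    title: str
    language: str
    year: int
    kind: str  # hypothetical labels, e.g. "journal", "abstract", "review"


# Publication types named in the exclusion criteria (labels are assumptions).
EXCLUDED_KINDS = {"abstract", "expanded abstract", "survey", "review"}


def passes_screening(paper: Paper) -> bool:
    """Apply the language, time-frame, and publication-type rules."""
    return (
        paper.language == "English"
        and 2019 <= paper.year <= 2022
        and paper.kind not in EXCLUDED_KINDS
    )


candidates = [
    Paper("A", "English", 2020, "journal"),
    Paper("B", "German", 2021, "journal"),
    Paper("C", "English", 2017, "journal"),
    Paper("D", "English", 2022, "review"),
]
selected = [p.title for p in candidates if passes_screening(p)]
print(selected)
```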

4. Discussion—Methodology

4.1. Vehicle Detection

Vehicle detection, one of the key components and a critical task of ADASs, is the process of identifying and locating vehicles in the surrounding scene using sensors such as cameras, radar, and lidar together with computer vision techniques. This information is used to provide drivers with warnings about potential hazards, such as cars that are too close or that are changing lanes, and pedestrians or cyclists that might be in the vehicle’s way. It is a crucial function for many ADAS features, such as ACC, LDWS, FCWS, and BSD, discussed in the later sections of the paper.

Vehicle detection is a challenging task because vehicles vary in size, shape, and color, all of which affect their appearance in images and videos. They can be seen from many different angles, can appear very small or very large in the frame, and can be partially or fully occluded by other objects in the scene. There are also different types of vehicles, each with a distinct appearance, and lighting conditions and background clutter further alter how vehicles look. All of these factors make detection challenging.

Despite these challenges, vehicle detection algorithms in ADASs have advanced significantly over the years and are still evolving. Early algorithms were based on image processing techniques that were relatively simple to implement, such as edge detection and color segmentation, but they were not very accurate. In the early 2000s, there was a shift towards ML techniques that learn from data, making them more accurate than simple image processing techniques. Some of the most common ML algorithms used for vehicle detection include support vector machines (SVMs), random forests, and DL NNs.
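To make the early image processing approach concrete, the following is a minimal sketch of edge detection with the Sobel operator on a grayscale image stored as a list of rows. The choice of the Sobel operator, the function name, and the threshold are assumptions made for illustration; the early systems referred to above are not described in that level of detail here.

```python
import math

# Standard 3x3 Sobel kernels for horizontal and vertical intensity gradients.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_edges(gray, threshold):
    """Return a boolean edge map for the interior pixels of a grayscale image."""
    height, width = len(gray), len(gray[0])
    edges = []
    for r in range(1, height - 1):
        row = []
        for c in range(1, width - 1):
            gx = gy = 0.0
            for i in range(3):
                for j in range(3):
                    value = gray[r + i - 1][c + j - 1]
                    gx += SOBEL_X[i][j] * value
                    gy += SOBEL_Y[i][j] * value
            # A pixel is an edge when its gradient magnitude exceeds the threshold.
            row.append(math.hypot(gx, gy) > threshold)
        edges.append(row)
    return edges


# Toy image with a vertical step edge between columns 2 and 3.
image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]
edge_map = sobel_edges(image, threshold=1.0)
```

A fixed threshold like this responds to any strong intensity change, including shadows and road markings, which is one reason such methods were not very accurate for vehicles.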

Among these, DL NNs are the most effective for vehicle detection because they can learn complex features from data, which makes them very accurate. However, DL NNs are also more computationally expensive than other ML algorithms. In recent years, there has also been a trend towards using sensor fusion for vehicle detection.

The vehicle detection algorithms in ADASs are still evolving. As sensor technology continues to improve, and as ML algorithms become more powerful, vehicle detection algorithms will become even more accurate and reliable.

Search Terms and Recent Trends in Vehicle Detection

‘Vehicle detection’, ‘vehicle tracking’, and ‘vehicle detection and tracking’ are the three prominent search terms that were used to investigate the topic. The ‘OR’ operator was used to combine these phrases, the most relevant and regularly used ones, into a single search query.

Figure 2 shows the complete search query for each of the databases. The databases include IEEE Xplore and MDPI.

Because vehicle detection has evolved rapidly, encompassing the detection, recognition, and tracking of other vehicles, pedestrians, and objects, many different methods have been proposed in the past few years. Some of the prominent recent state-of-the-art vehicle detection methods are discussed below.

Ref. [33] presents a scale-insensitive CNN, SINet, which is designed for rapid and accurate vehicle detection. SINet employs two lightweight techniques: context-aware RoI pooling and multi-branch decision networks. These preserve small-scale object information and enhance classification accuracy. Ref. [34] introduces an integrated approach to monocular 3D vehicle detection and tracking. It utilizes a CNN for vehicle detection and employs a Kalman filter-based tracker for temporal continuity. The method incorporates multi-task learning, 3D proposal generation, and Kalman filter-based tracking. Combining radar and vision sensors, ref. [35] proposes a novel distant vehicle detection approach. Radar generates candidate bounding boxes for distant vehicles, which are classified using vision-based methods, ensuring accurate detection and localization. Ref. [36] focuses on multi-vehicle tracking, utilizing object detection and viewpoint estimation sensors. The CNN detects vehicles, while viewpoint estimation enhances tracking accuracy. Ref. [37] utilizes CNN with feature concatenation for urban vehicle detection, improving robustness through layer-wise feature combination. Ref. [38] presents a robust vehicle detection and counting method integrating CNN and optical flow, while [39] pioneers vehicle detection and classification via distributed fiber optic acoustic sensing. Ref. [40] introduces a vehicle tracking and speed estimation method using roadside lidar, incorporating a Kalman filter. Ref. [41] modifies Tiny-YOLOv3 for front vehicle detection with SPP-Net enhancement, excelling in challenging conditions. Ref. [42] proposes an Extended Kalman Filter (EKF) for vehicle tracking using radar and lidar data, while [43] enhances SSD for accurate front vehicle detection. Ref. [44] improves Faster RCNN for oriented vehicle detection in aerial images with feature amplification and oversampling. Ref. [45] employs reinforcement learning with partial vehicle detection for efficient intelligent traffic signal control. Ref. [46] presents a robust DL framework for vehicle detection in adverse weather conditions. Ref. [47] adopts GAN-based image style transfer for nighttime vehicle detection, while ref. [48] introduces MultEYE for real-time vehicle detection and tracking using UAV imagery. Ref. [49] analyzes traffic patterns during COVID-19 using Planet remote-sensing satellite images for vehicle detection. Ref. [50] proposes one-stage anchor-free 3D vehicle detection from lidar, while ref. [51] fuses RGB-infrared images for accurate vehicle detection using uncertainty-aware learning. Ref. [52] optimizes YOLOv4 for improved vehicle detection and classification. Ref. [53] introduces a real-time foveal classifier-based system for nighttime vehicle detection. Ref. [54] combines YOLOv4 and SPP-Net for multi-scale vehicle detection in varying weather. Ref. [55] efficiently detects moving vehicles with a CNN-based method incorporating background subtraction. Ref. [56] refines YOLOv5 for vehicle detection in aerial infrared images, ensuring robustness against challenges like occlusion and low contrast.
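Several of the methods above, such as [34] and [40], rely on Kalman filter-based tracking. The sketch below shows a generic one-dimensional constant-velocity Kalman filter that tracks position and velocity from noisy position measurements. It is not the implementation of any reviewed paper; the class name, the noise parameters `q` and `r`, and the initial covariance are illustrative assumptions.

```python
class ConstantVelocityKalman:
    """1D Kalman filter with state [position, velocity] and position-only measurements."""

    def __init__(self, pos, vel=0.0, p=1.0, q=0.01, r=0.25):
        self.x = [pos, vel]               # state estimate
        self.P = [[p, 0.0], [0.0, p]]     # state covariance
        self.q = q                        # process noise added to the diagonal (assumed)
        self.r = r                        # measurement noise variance (assumed)

    def predict(self, dt):
        """Propagate the state with F = [[1, dt], [0, 1]] and P <- F P F^T + Q."""
        pos, vel = self.x
        self.x = [pos + dt * vel, vel]
        (p00, p01), (p10, p11) = self.P
        self.P = [
            [p00 + dt * (p10 + p01) + dt * dt * p11 + self.q, p01 + dt * p11],
            [p10 + dt * p11, p11 + self.q],
        ]

    def update(self, z):
        """Correct the state with a position measurement z (H = [1, 0])."""
        (p00, p01), (p10, p11) = self.P
        s = p00 + self.r                  # innovation variance
        k0, k1 = p00 / s, p10 / s         # Kalman gain
        y = z - self.x[0]                 # innovation
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        self.P = [
            [(1 - k0) * p00, (1 - k0) * p01],
            [p10 - k1 * p00, p11 - k1 * p01],
        ]


# Track a target moving at 2 units per step, starting with zero assumed velocity.
kf = ConstantVelocityKalman(pos=0.0)
for step in range(1, 21):
    kf.predict(dt=1.0)
    kf.update(2.0 * step)
print(kf.x)
```

In a vehicle tracker, the same predict and update cycle would run per tracked object, with the measurement supplied by the detector in each frame.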

Overall, the aforementioned papers represent a diverse set of approaches to vehicle detection and tracking. Each paper has its strengths and weaknesses, and it is important to consider the specific application when choosing a method. However, all of the papers represent significant advances in the field of vehicle detection and tracking. The list of reviewed papers on vehicle detection is summarized in Table 2.

4.2. Pedestrian Detection

Pedestrian detection is also a key component of ADASs. It uses sensors to identify and track pedestrians in the surrounding environment, warn the driver of potential collisions, and, if necessary, take evasive action such as automatically applying the brakes.

Pedestrian detection systems typically use a combination of sensors, such as cameras, radar, and lidar. Cameras are often used to identify the shape and movement of pedestrians, while radar and lidar can be used to determine the distance and speed of pedestrians. Cameras can be susceptible to glare and shadows, whereas radar and lidar are less susceptible to these problems.

Pedestrian detection systems can warn drivers of potential collisions in a variety of ways. Some systems simply alert the driver with a visual or audible warning. Others can take more active measures, such as automatically braking the vehicle or slightly steering it away from the pedestrian. However, pedestrian detection is more challenging than vehicle detection, as pedestrians are often smaller and more difficult to distinguish from other objects in the environment. It is nonetheless an important safety feature for ADASs, as it can help to prevent accidents involving pedestrians. According to the National Highway Traffic Safety Administration (NHTSA) [57], pedestrians are involved in about 17% of all traffic fatalities in the United States. Pedestrian detection systems can help to reduce this number by warning drivers of potential hazards and by automatically applying the brakes in emergencies.

Search Terms and Recent Trends in Pedestrian Detection

‘Pedestrian detection’, ‘pedestrian tracking’, and ‘pedestrian detection and tracking’ are the three prominent search terms that were used to investigate this topic. The ‘OR’ operator was used to combine these phrases, the most relevant and regularly used ones, into a single search query.

Figure 3 shows the complete search query for each of the databases. The databases include IEEE Xplore and MDPI.

Ref. [58] introduces a novel approach to pedestrian detection, emphasizing high-level semantic features instead of traditional low-level features. This method employs context-aware RoI pooling and a multi-branch decision network to preserve small-scale object details and enhance classification accuracy. The CNN initially captures high-level semantic features from images, which are then used to train a classifier to distinguish pedestrians from other objects. Ref. [59] proposes an adaptive non-maximum suppression (NMS) technique tailored for refining pedestrian detection in crowded scenarios. Conventional NMS algorithms often eliminate valid detections along with duplicates in crowded scenes. The new ‘Adaptive NMS’ algorithm dynamically adjusts the NMS threshold based on crowd density, enabling the retention of more pedestrian candidates in congested areas. Ref. [60] introduces the ‘Mask-Guided Attention Network’ (MGAN) for detecting occluded pedestrians. Utilizing a CNN, MGAN extracts features from both pedestrians and backgrounds. Pedestrian features guide the network’s focus towards occluded regions, improving the accuracy of detecting occluded pedestrians. Ref. [61] presents a real-time method to track pedestrians by utilizing camera and lidar sensors in a moving vehicle. Combining sensor features enables accurate pedestrian tracking. Features from the camera image, such as silhouette, clothing, and gait, are extracted. Additionally, features like height, width, and depth are obtained from the lidar point cloud. These details facilitate precise tracking of pedestrians’ locations and poses over time. A Kalman filter enhances tracking performance through sensor data fusion, offering better insights into pedestrian behavior in dynamic environments. Ref. [62] proposes a computationally efficient single-template matching technique for accurate pedestrian detection in lidar point clouds. The method creates a pedestrian template from training data and uses it to identify pedestrians in new point clouds, even under partial occlusion. Ref. [63] focuses on tracking pedestrian flow and statistics using a monocular camera and a CNN–Kalman filter fusion. The CNN extracts features from the camera image, which is followed by a Kalman filter for trajectory estimation. This approach effectively tracks pedestrian flow and vital statistics, including count, speed, and direction.
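The idea behind the adaptive NMS of [59], raising the suppression threshold where the crowd is dense, can be sketched as follows. This is a simplified illustration rather than the authors' implementation: the per-box density values are passed in as plain numbers, and the rule `max(base_threshold, density)` is an assumed form of the threshold adjustment.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2, y2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def adaptive_nms(boxes, scores, densities, base_threshold=0.5):
    """Greedy NMS whose overlap threshold grows with the density at the kept box."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Assumed rule: in dense regions, tolerate more overlap before suppressing.
        threshold = max(base_threshold, densities[best])
        order = [i for i in order if iou(boxes[best], boxes[i]) <= threshold]
    return keep


# Two heavily overlapping boxes (IoU of about 0.67).
boxes = [(0, 0, 10, 10), (2, 0, 12, 10)]
scores = [0.9, 0.8]
sparse = adaptive_nms(boxes, scores, densities=[0.0, 0.0])
crowded = adaptive_nms(boxes, scores, densities=[0.7, 0.7])
```

With zero density this reduces to conventional greedy NMS and the second box is suppressed; with a density of 0.7 both boxes survive, which is the behavior wanted when two pedestrians stand close together.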

Ref. [64] addresses hazy weather pedestrian detection with deep learning. DL models are trained on hazy weather datasets and use architectural modifications to handle challenging conditions. This approach achieves high pedestrian detection accuracy, even in hazy weather. Ref. [65] introduces the ‘NMS by Representative Region’ algorithm to refine pedestrian detection in crowded scenes. By employing representative regions, this method enhances crowded scene handling by comparing these regions and removing duplicate detections, resulting in reduced false positives. Ref. [66] proposes a graininess-aware deep feature learning approach, equipping DL models to handle grainy images. A DL model is trained using a graininess-aware loss function on a dataset containing grainy and non-grainy pedestrian images. This model effectively detects pedestrians in new images, even when they are grainy. Ref. [67] presents a DL framework for real-time vehicle and pedestrian detection on rural roads, optimized for embedded GPUs. Modified Faster R-CNN detects both vehicles and pedestrians simultaneously in rural road scenes. A new rural road image dataset is developed for training the model. Ref. [68] addresses infrared pedestrian detection at night using an attention-guided encoder–decoder CNN. Attention mechanisms focus on relevant regions in infrared images, enhancing detection accuracy in low-light conditions. Ref. [69] focuses on improved YOLOv3-based pedestrian detection in complex scenarios, incorporating modifications to handle various challenges like occlusions, lighting variations, and crowded environments.

Ref. [70] introduces Ratio-and-Scale-Aware YOLO (RASYOLO), handling pedestrians with varying sizes and occlusions through ratio-aware anchors and scale-aware feature fusion. Ref. [71] introduces Track Management and Occlusion Handling (TMOH), managing occlusions and multiple-pedestrian tracking through track suspension and resumption. Ref. [72] incorporates a Part-Aware Multi-Scale fully convolutional network (PAM-FCN) to enhance pedestrian detection accuracy by considering pedestrian body part information and addressing scale variation. Ref. [73] proposes Attention Fusion for One-Stage Multispectral Pedestrian Detection (AFOS-MSPD), combining attention fusion and a one-stage approach for multispectral pedestrian detection, improving efficiency and accuracy. Ref. [74] utilizes multispectral images for Multispectral Pedestrian Detection (MSPD), improving detection using a DNN designed for multispectral data. Ref. [75] presents Robust Pedestrian Detection Based on Multi-Spectral Image Fusion and Convolutional Neural Networks (RPOD-FCN), utilizing multi-spectral image fusion and a CNN-based model for accurate detection.

Ref. [76] introduces Uncertainty-Guided Cross-Modal Learning for Robust Multispectral Pedestrian Detection (UCM-RMPD), addressing multispectral detection challenges using uncertainty-guided cross-modal learning. Ref. [77] focuses on multimodal pedestrian detection for autonomous driving using a Spatio-Contextual Deep Network-Based Multimodal Pedestrian Detection (SCDN-PMD) approach. Ref. [78] proposes a Novel Approach to Model-Based Pedestrian Tracking Using Automotive Radar (NMPT radar), utilizing radar data for model-based pedestrian tracking. Ref. [79] adopts YOLOv4 Architecture for Low-Latency Multispectral Pedestrian Detection in Autonomous Driving (AYOLOv4), enhancing detection accuracy using multispectral images. Ref. [80] introduces modifications to [79] called AIR-YOLOv3, an improved network-pruned YOLOv3 for aerial infrared pedestrian detection, enhancing robustness and efficiency. Ref. [81] presents YOLOv5-AC, an attention mechanism-based lightweight YOLOv5 variant for efficient pedestrian detection on embedded devices. The list of reviewed papers on pedestrian detection is summarized in Table 3.

4.3. Traffic Signs Detection

Traffic Signs Detection and Recognition (TSR) is another key component of ADASs that automatically detects and recognizes traffic signs on the road and provides information to the driver regarding speed limits, upcoming turns, and so on. TSR systems typically use cameras to capture images of traffic signs and then use computer vision algorithms to identify and classify the signs.

TSR systems can be a valuable safety feature, as they can help to prevent accidents caused by driver distraction or drowsiness. For example, TSR systems can alert drivers to speed limit changes, stop signs, and yield signs. They can also help drivers to stay in their lane and avoid crossing over into oncoming traffic. Although TSR can be challenging due to the variety of traffic signs, the different fonts and styles used, and the presence of noise and clutter, TSR systems are becoming increasingly common in new vehicles. The NHTSA has mandated that all new cars sold in the United States come equipped with TSR systems by 2023 [57].

Search Terms and Recent Trends in Traffic Signs Detection

‘Traffic sign detection’, ‘traffic sign recognition’, ‘traffic sign classification’, ‘traffic sign detection and recognition’, and ‘traffic sign detection and recognition system’ are some of the prominent search terms that were used to investigate this topic. The ‘OR’ operator was used to combine these phrases, the most relevant and regularly used ones, into a single search query.

Figure 4 shows the complete search query for each of the databases. The databases include IEEE Xplore and MDPI.

Yuan et al. [82] introduce VSSA-NET, a novel architecture for traffic sign detection (TSD), which employs a vertical spatial sequence attention network to improve accuracy in complex scenes. VSSA-NET extracts features via CNN, followed by a vertical spatial sequence attention module to emphasize vertical locations crucial for TSD. The detection module outputs traffic sign bounding boxes. Li and Wang [83] present real-time traffic sign recognition using efficient CNNs, addressing diverse lighting and environmental conditions. MobileNet extracts features from input images, followed by SVM classification. Liu et al. [84] propose multi-scale region-based CNN (MR-CNN) for recognizing small traffic signs. MR-CNN extracts multi-scale features using CNN, generates proposals with RPN, and uses Fast R-CNN for classification and bounding box outputs. Tian et al. [85] introduce a multi-scale recurrent attention network for TSD. CNN extracts multi-scale features, the recurrent attention module prioritizes scale, and the detection module outputs bounding boxes for robust detection across scenarios. Cao et al. [86] present improved TSDR for intelligent vehicles. CNN performs feature extraction, RPN generates region proposals, and SVM classifies proposals, enhancing reliability in dynamic road environments. Shao et al. [87] improve Faster R-CNN TSD with a second RoI and HPRPN. CNN performs feature extraction, RPN generates region proposals, and the second RoI refines proposals, enhancing accuracy in complex scenarios.

Zhang et al. [88] propose cascaded R-CNN with multiscale attention for TSD. RPN generates proposals, Fast R-CNN classifies, and multiscale attention improves detection performance, particularly when there is an imbalanced data distribution. Tabernik and Skočaj [89] explore the DL framework for large-scale TSDR. CNN performs feature extraction, RPN generates region proposals, and Fast R-CNN classifies, exploring DL’s potential in handling diverse real-world scenarios. Kamal et al. [90] introduce automatic TSDR using SegU-Net and modified Tversky loss. SegU-Net segments traffic signs, and the modified loss function enhances detection and recognition, handling appearance variations. Tai et al. [91] propose a DL approach for TSR with spatial pyramid pooling and scale analysis. CNN performs feature extraction, while spatial pyramid pooling captures context and scales, enhancing recognition across scenarios. Dewi et al. [92] evaluate the spatial pyramid pooling technique on CNN for TSR system robustness. Assessing pooling sizes and strategies, they evaluate different CNN architectures for effective traffic sign recognition. Nartey et al. [93] propose robust semi-supervised TSR with self-training and weakly supervised learning. CNN performs feature extraction, self-training labels unlabeled data, and weakly supervised learning classifies labeled data, enhancing accuracy using limited labeled data.

Dewi et al. [94] leverage YOLOv4 with synthetic GAN-generated data for advanced TSR. YOLOv4 with synthetic data from BigGAN achieves top performance, enhancing detection on the GTSDB dataset. Wang et al. [95] improve YOLOv4-Tiny TSR with new features and classification modules. New data augmentation improves the performance on the GTSDB dataset, optimizing recognition while maintaining efficiency. Cao et al. [96] present improved sparse R-CNN for TSD with a new RPN and loss function, enhancing detection accuracy using advanced techniques within the sparse R-CNN framework. Lopez-Montiel et al. [97] propose DL-based embedded system evaluation and synthetic data generation for TSD. Methods to assess DL system performance and efficiency for real-time TSD applications are developed. Zhou et al. [98] introduce a learning region-based attention network for TSR. The attention module emphasizes important image regions, potentially enhancing recognition accuracy. Koh et al. [99] evaluate senior adults’ traffic sign recognition through EEG signals, gaining unique insights into senior individuals’ traffic sign perception.

Ahmed et al. [100] present a weather-adaptive DL framework for robust TSR. A cascaded detector with a weather classifier improves TSD performance in adverse conditions, enhancing road safety. Xie et al. [101] explore efficient federated learning in TSR with spike NNs (SNNs). SNNs enable training on decentralized datasets, minimizing communication overhead and resources. Min et al. [102] propose semantic scene understanding and structural location for TSR, leveraging scene context and structural information for accurate traffic sign recognition. Gu and Si [103] introduce a lightweight real-time TSD integration framework based on YOLOv4. Novel data augmentation and YOLOv4 optimization are used for speed and accuracy, achieving real-time performance. Liu et al. [104] introduce the M-YOLO TSD algorithm for complex scenarios. M-YOLO detects and classifies traffic signs, addressing detection in intricate environments. Wang et al. [105] propose real-time multi-scale TSD for driverless cars. The multi-scale approach detects traffic signs of various sizes, enhancing performance in diverse scenarios. The list of reviewed papers on traffic signs detection is summarized in Table 4.

4.4. Driver Monitoring System (DMS)

A driver monitoring system (DMS), also called a driver monitoring and assistance system (DMAS), is a camera-based safety system used to assess the driver’s alertness and attention. It monitors a driver’s behavior by detecting and tracking the driver’s face, eyes, and head position and warns or alerts them when they become distracted or drowsy for long enough to lose situational awareness or full attention to the task of driving. DMSs can also use other sensors, such as radar or infrared sensors, to gather additional information about the driver’s state.

DMSs are becoming increasingly common in vehicles and are used to monitor the driver’s alertness and attention. This information is then used to prevent accidents and save lives by warning the driver if they are starting to become drowsy or distracted. Some of the latest DMSs can even predict if drivers are eating and drinking while driving.

4.4.1. Driver Monitoring System Methods

A variety of methods are used in DMSs. One common approach is to use a camera to monitor the driver’s face; another is sensor fusion, which combines data from multiple sensors, such as cameras, radar, and eye-tracking sensors.

DMSs can use a variety of sensors to monitor the driver, including:

Facial recognition. This is the most common type of sensor used in DMSs. Facial recognition systems can track the driver’s face and identify signs of distraction or drowsiness, such as eye closure, head tilt, and lack of facial expression.

A head pose sensor tracks the position of the driver’s head and can identify signs of distraction or drowsiness, such as looking away from the road or nodding off.

An eye gaze sensor tracks the direction of the driver’s eye gaze and can identify signs of distraction or drowsiness, such as looking at the phone or dashboard.

An eye blink rate sensor tracks the driver’s eye blink rate and can identify signs of drowsiness, such as a decrease in the blink rate.

Speech recognition is used in DMSs to detect if the driver is talking on the phone or if they are not paying attention to the road.

The above sensors are used in DMSs to detect a variety of driver behaviors, such as (i) when a driver is distracted by looking away from the road, talking on the phone, or using a mobile device; (ii) when a driver is drowsy, which can be determined by tracking the driver’s eye movements and eyelid closure; (iii) when a driver is inattentive, which can be determined by tracking the driver’s head position and eye gaze.
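One common way to turn eyelid closure into a drowsiness signal is a PERCLOS-style measure, the fraction of recent frames in which the eyes are closed. The sketch below assumes an upstream component that reports a per-frame eye openness value between 0 and 1. The class name, window length, and both thresholds are illustrative assumptions, not values taken from the reviewed systems.

```python
from collections import deque


class PerclosMonitor:
    """Flags drowsiness when the eyes are closed in too many recent frames."""

    def __init__(self, window_frames=30, closed_below=0.2, alarm_ratio=0.5):
        self.window = deque(maxlen=window_frames)  # recent closed/open flags
        self.closed_below = closed_below           # openness below this counts as closed
        self.alarm_ratio = alarm_ratio             # closed fraction that triggers an alert

    def update(self, eye_openness):
        """Add one frame and return True if the drowsiness alert should fire."""
        self.window.append(eye_openness < self.closed_below)
        if len(self.window) < self.window.maxlen:
            return False  # wait until a full window has been observed
        closed_fraction = sum(self.window) / len(self.window)
        return closed_fraction >= self.alarm_ratio


monitor = PerclosMonitor(window_frames=10)
alerts_open = [monitor.update(0.8) for _ in range(10)]    # eyes open
alerts_closed = [monitor.update(0.1) for _ in range(5)]   # eyes closing
```

Using a sliding window rather than single frames keeps ordinary blinks from triggering an alert, at the cost of a short detection delay.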

When a DMS detects risky driver behavior, it can provide a variety of alerts to the driver: visual alerts, displayed on the dashboard or windshield; audio alerts, played through the vehicle’s speakers; and haptic alerts, issued through vibrations of the steering wheel or the driver’s seat. In some cases, the DMS may also take corrective action, such as applying the brakes or turning off the engine.

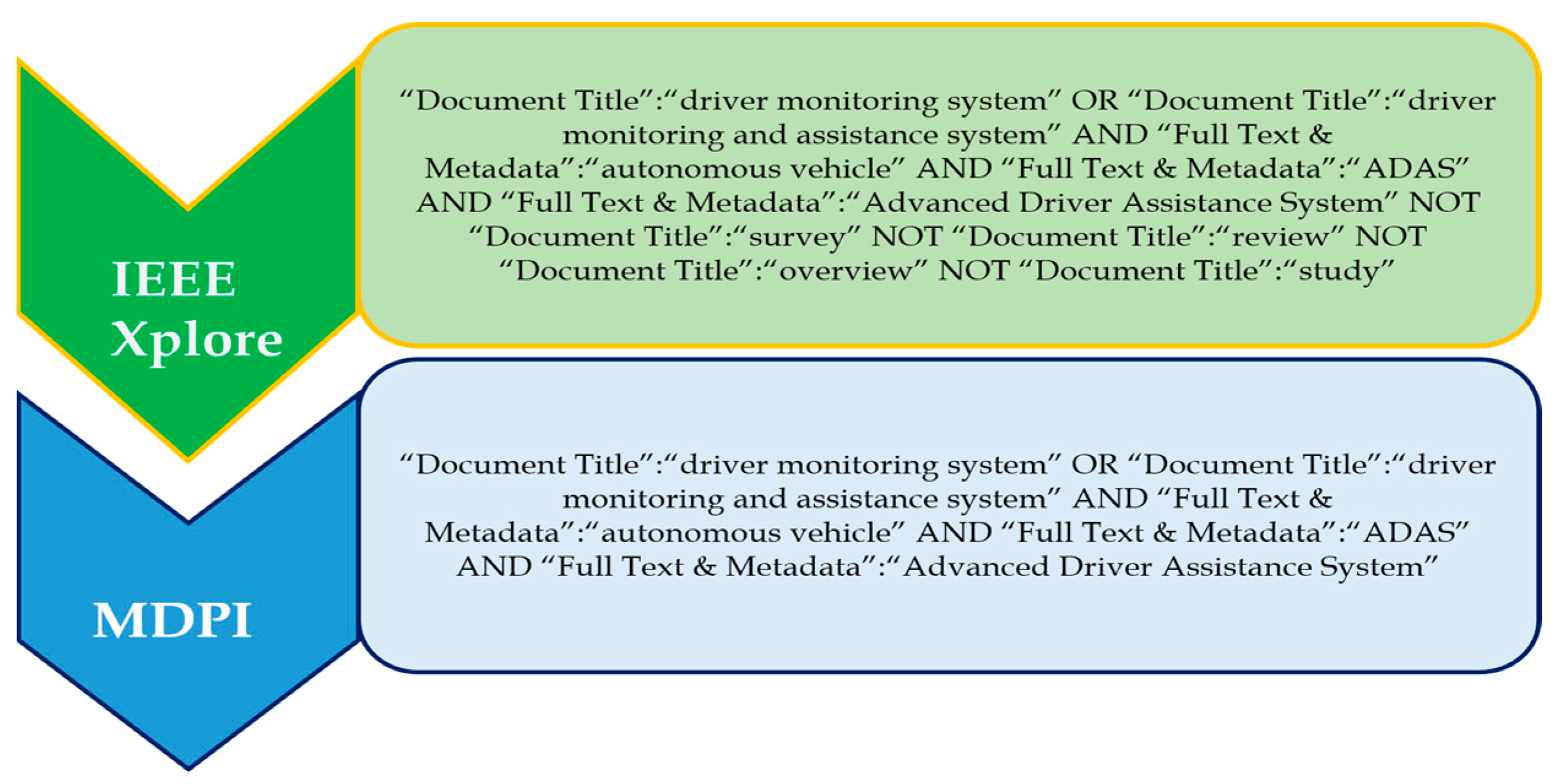

4.4.2. Search Terms and Recent Trends in Driver Monitoring System Methods

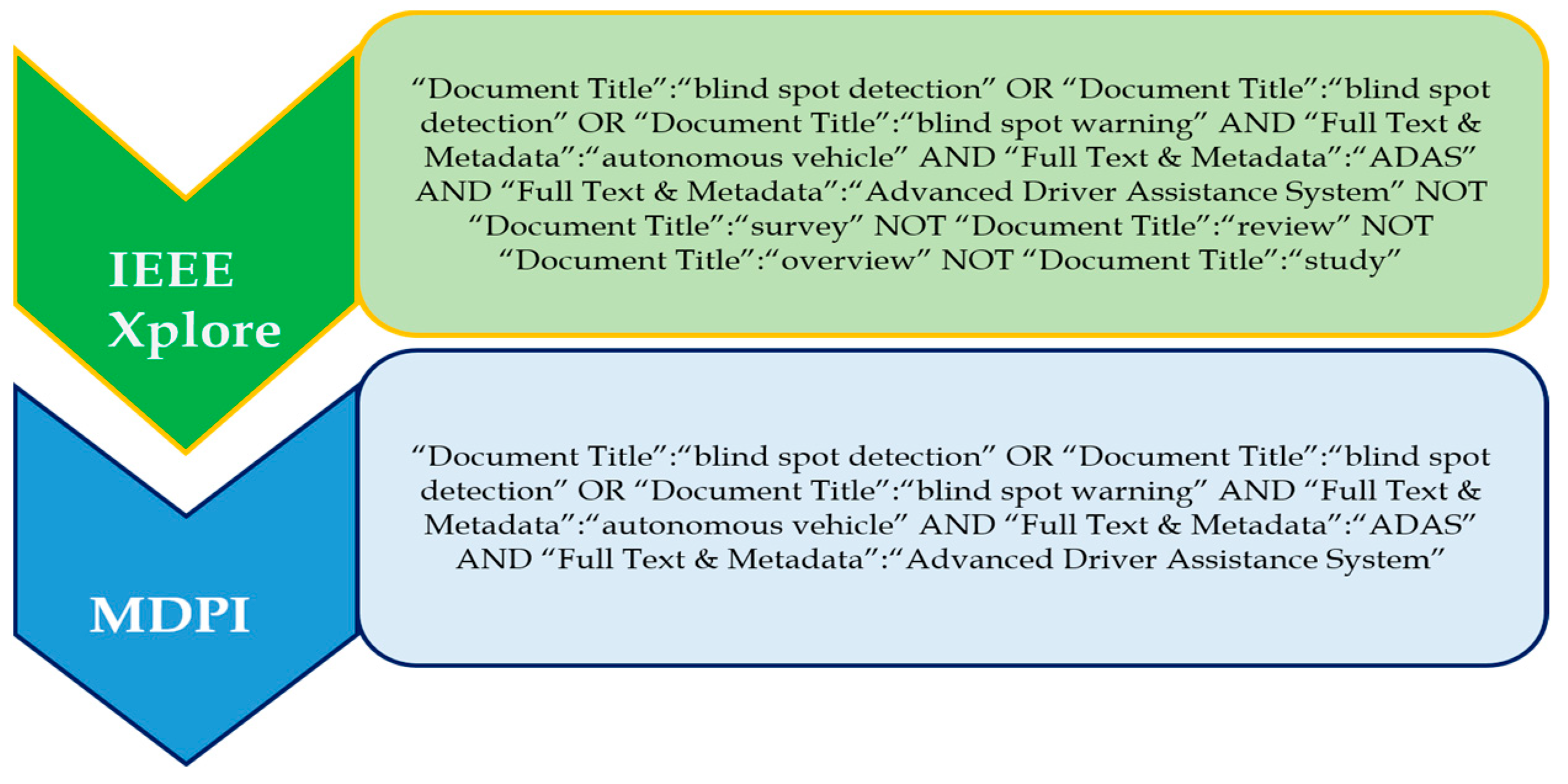

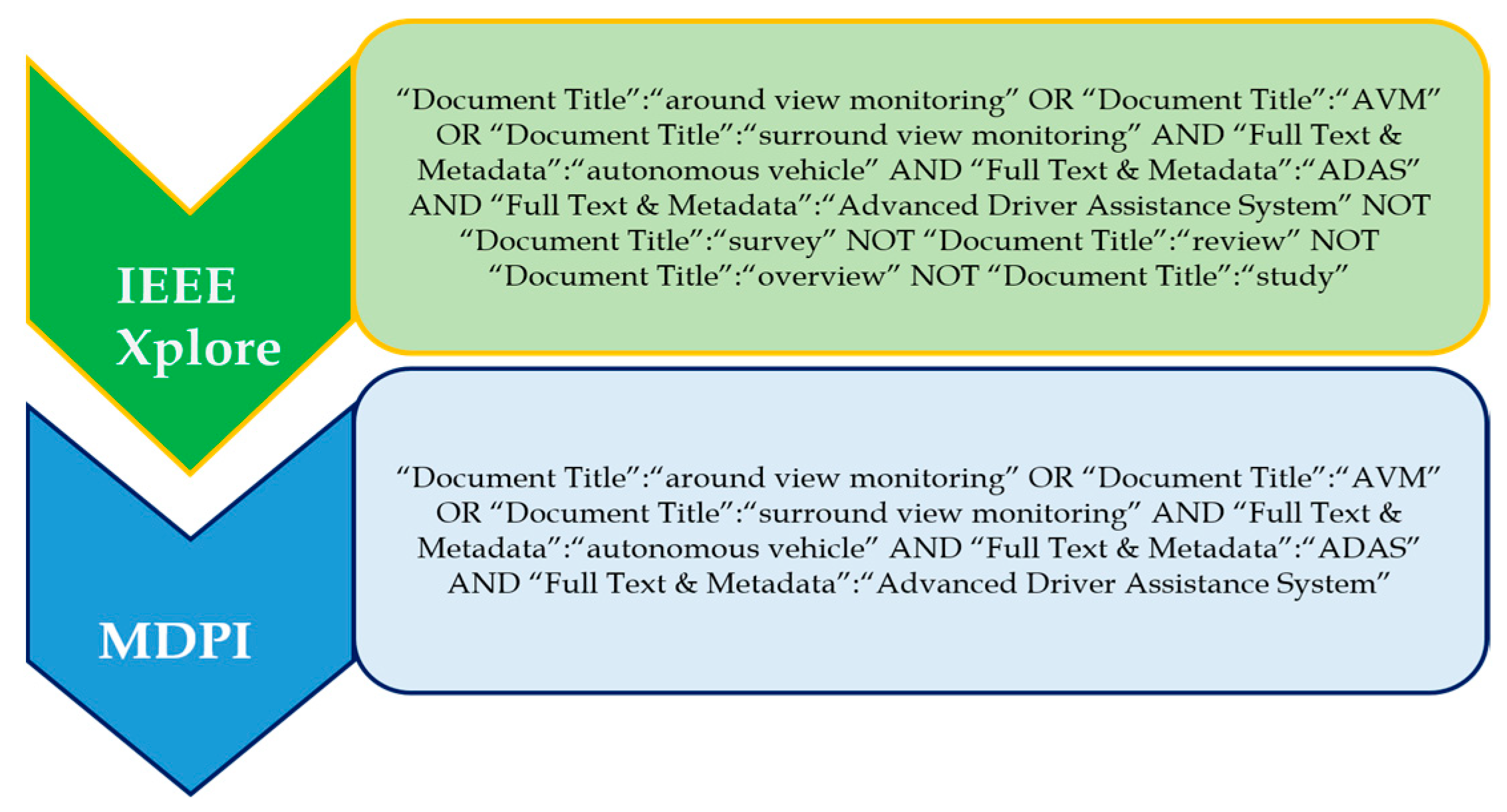

‘Driver monitoring system’ and ‘driver monitoring and assistance system’ are the two prominent search terms used to investigate this topic. The ‘OR’ operator was used to combine these phrases, the most relevant and regularly used ones, into a single search query.

Figure 5 shows the complete search query for each of the databases. The databases include IEEE Xplore and MDPI.

The papers [106,107,108,109,110,111,112,113,114] discuss a variety of approaches to DMSs. The key methods include (i) DL, a powerful technique used to extract features from images and videos, which are then used to identify driver behaviors such as eye closure, head pose, and facial expressions; (ii) machine learning more generally, which learns patterns from data and is used to identify driver behaviors that are not easily captured using traditional methods, such as hand gestures and body language; and (iii) sensor fusion, which combines data from multiple sensors to improve the accuracy of DMSs. For instance, a DMS could combine data from a camera, an eye tracker, and a heart rate monitor to provide a more comprehensive assessment of the driver’s state.

Y. Zhao et al. [106] propose a novel real-time DMS based on a deep CNN to monitor drivers’ behavior and detect distractions. It uses video input from an in-car camera and employs CNNs to analyze the driver’s facial expressions and head movements to assess their attentiveness. It can detect eye closure, head pose, and facial expressions with high accuracy. Ref. [107] works towards a DMS that uses machine learning to estimate driver situational awareness using eye-tracking data. It aims to predict driver attention and alertness to the road, enhancing road safety. Ref. [108] proposes a lightweight DMS based on Multi-Task Mobilenets architecture, which efficiently monitors drivers’ behavior and attention using low computational resources. It can even run on a simple smartphone, making it suitable for real-time monitoring. Ref. [109] introduces an optimization algorithm for DMSs using DL. This algorithm improves the accuracy of the DMS by reducing the number of false positives and ensuring real-time performance.

Ref. [110] proposes a real-time DMS based on visual cues, leveraging facial expressions and eye movements to assess driver distraction and inattention. It is able to detect driver behaviors such as eye closure, head pose, and facial expressions using only a camera. Ref. [111] proposes an intelligent DMS that uses a combination of sensors and ML. It is capable of providing a comprehensive assessment of the driver’s state, including their attention level, fatigue, and drowsiness, and provides timely alerts to improve safety. Ref. [112] proposes a hybrid DMS combining Internet of Things (IoT) and ML techniques for comprehensive driver monitoring. It collects data from multiple sensors and uses ML to identify driver behaviors. Ref. [113] focuses on a distracted DMS that uses AI to detect and prevent risky behaviors on the road. It detects distracted driving behaviors such as texting and talking on the phone while driving. Ref. [114] proposes a DMS based on a distracted driving decision algorithm which aims to assess and address potential distractions to ensure safe driving practices. It predicts whether the driver is distracted or not.

These papers provide a good overview of the current state of the art in DMS and contribute to the development of advanced DMS technologies, aiming to enhance driver safety, detect distractions, and improve situational awareness on the roads. They employ various techniques, including deep learning, IoT, and machine learning, to create efficient and effective driver monitoring solutions. However, before DMSs can be widely deployed, there are still some challenges that need to be addressed, such as:

Data collection: It is difficult to collect large datasets of driver behavior representative of the real world, as it is difficult to monitor drivers naturally without disrupting their driving experience.

Algorithm development: Since the driver behaviors can be subtle and vary from person to person, it is challenging to develop algorithms that can accurately identify driver behaviors in real time.

Cost: DMSs demand the use of specialized sensors and software, making them expensive to implement and maintain.

Additionally, as new sensors are developed and become available, they could be used to improve the accuracy and performance of DMSs; for example, radar sensors could be used to track driver head movements and eye gaze. Autonomous vehicles will not need DMSs in the same way that human-driven vehicles do; however, DMSs could still be used to monitor the state of the driver in autonomous vehicles and to provide feedback to the driver if necessary. Despite these challenges, DMSs have considerable potential to improve road safety, and their future looks promising. As the technology continues to develop, DMSs could become an essential safety feature in vehicles, both human-driven and autonomous. The list of reviewed papers on driver monitoring systems is summarized in Table 5.

4.5. Lane Departure Warning System

The Lane Departure Warning System (LDWS) is a type of ADAS designed to warn drivers when they are unintentionally drifting out of their lane. LDWSs typically use cameras, radar, lidar, or a combination of sensors to detect the lane markings on the road, and then use this information to monitor the vehicle’s position in the lane. If the vehicle starts to drift out of the lane, the LDWS sounds an audible alert or vibrates the steering wheel to warn the driver. These systems are a valuable safety feature: they can help to prevent accidents caused by driver drowsiness or distraction and help to keep drivers alert and focused on the road.
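The warning logic described above can be sketched as a check on how close the vehicle's edges are to the detected lane markings. The sketch assumes that an upstream lane detector already provides the lateral distance from the vehicle centerline to each marking. The function name, the vehicle width, the warning margin, and the use of the turn signal as a proxy for an intentional lane change are all assumptions for illustration.

```python
def lane_departure_warning(left_marking_m, right_marking_m,
                           vehicle_width_m=1.8, margin_m=0.2,
                           turn_signal_on=False):
    """Return "left", "right", or None given distances from the vehicle centerline.

    left_marking_m and right_marking_m are positive lateral distances, in meters,
    from the vehicle centerline to the left and right lane markings.
    """
    if turn_signal_on:
        return None  # treat a signaled lane change as intentional
    half_width = vehicle_width_m / 2
    left_gap = left_marking_m - half_width    # space between left edge and marking
    right_gap = right_marking_m - half_width  # space between right edge and marking
    if left_gap < margin_m and left_gap <= right_gap:
        return "left"
    if right_gap < margin_m:
        return "right"
    return None


centered = lane_departure_warning(1.75, 1.75)
drifting_left = lane_departure_warning(1.0, 2.5)
drifting_right = lane_departure_warning(2.5, 1.0)
signaled = lane_departure_warning(1.0, 2.5, turn_signal_on=True)
```

A production system would also consider lateral velocity and the confidence of the lane detection, since faded or obscured markings make the distance estimates unreliable.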

LDWSs are becoming increasingly common in new vehicles. According to the NHTSA, lane departure crashes account for about 5% of all fatal crashes in the United States, and the NHTSA has mandated that all new vehicles sold in the United States be equipped with LDWSs by 2022 [115].

LDWSs can be a valuable safety feature, but they are not perfect. They can sometimes be fooled by objects that look like lane markings, such as shadows or road debris, and may not be accurate when the road markings are faded or obscured. Additionally, LDWSs can only warn drivers; they cannot take corrective action on their own, which means they may not be effective for drivers who are drowsy or distracted.

Despite these limitations, LDWSs can be a valuable tool for reducing the number of accidents and are especially beneficial for long-distance driving. They can: (i) help to prevent accidents by alerting drivers to unintentional lane departures, (ii) help drivers stay alert and focused on the road, (iii) be especially helpful for drivers who are drowsy or distracted, and (iv) help to keep drivers in their lane, which can improve lane discipline and reduce the risk of sideswipe collisions, thus improving driver safety and comfort. LDWSs can also reduce drivers’ stress and fatigue, which is one reason they are becoming increasingly common in new vehicles.

Overall, LDWSs are a valuable safety feature that can help to prevent accidents, though they are not guaranteed to do so. These systems are not a substitute for safe driving practices: drivers should always stay alert, focused on the road, and aware of their surroundings, even when they are using an LDWS.

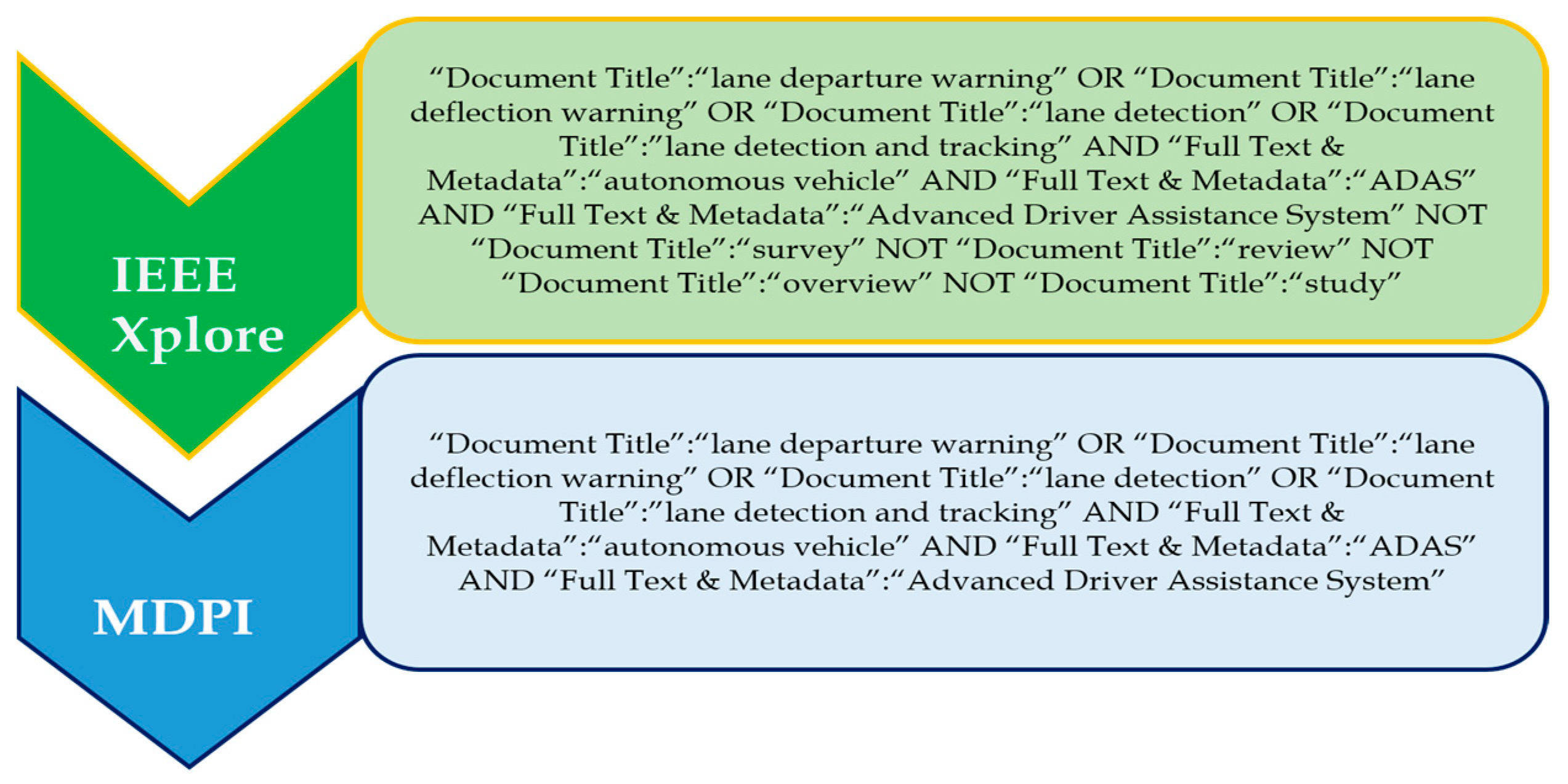

Search Terms and Recent Trends in LDWS

‘Lane departure warning’, ‘lane deflection warning’, ‘lane detection’, and ‘lane detection and tracking’ are the four prominent search terms used to investigate the topic. The ‘OR’ operator was used to combine these most relevant and regularly used phrases.

Figure 6 shows the complete search query for each of the databases. The databases include IEEE Xplore and MDPI.
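As a rough illustration of how search phrases can be combined with the ‘OR’ operator, the following sketch builds a Boolean query string from the four terms listed above. The helper name `build_or_query` is hypothetical, and the output is a generic quoted-phrase query; the exact syntax accepted by each database may differ from this form and from the queries shown in Figure 6.

```python
from typing import Iterable


def build_or_query(terms: Iterable[str]) -> str:
    """Join search phrases into a single Boolean query using OR.

    Each phrase is wrapped in double quotes so that it is matched as a whole.
    """
    return " OR ".join(f'"{term}"' for term in terms)


if __name__ == "__main__":
    ldws_terms = [
        "lane departure warning",
        "lane deflection warning",
        "lane detection",
        "lane detection and tracking",
    ]
    print(build_or_query(ldws_terms))
```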

Lane detection is a critical task in computer vision and autonomous driving systems. The papers reviewed here explore various lane detection techniques proposed in recent research, covering diverse approaches, including lightweight CNNs, sequential prediction networks, 3D lane detection, and algorithms for intelligent vehicles in complex environments. Existing lane detection algorithms are not robust to challenging road conditions, such as shadows, rain, snow, occlusion, and varying illumination, or to scenarios where lane markings are not visible. They are also limited in their ability to detect multiple lanes and to accurately estimate the 3D position of the lanes.

This review examines recent advancements in lane detection techniques, focusing on the integration of DNNs and sensor fusion methodologies. It encompasses papers published between 2019 and 2022, exploring innovative approaches to improve the robustness, accuracy, and performance of lane detection systems in various challenging scenarios.

The reviewed papers present various innovative approaches for lane detection in the context of autonomous driving systems. Lee et al. [116] introduce a self-attention distillation method to improve the efficiency of lightweight lane detection CNNs without compromising accuracy. FastDraw [117] addresses the long tail of lane detection using a sequential prediction network to consider contextual information for better predictions. 3D-LaneNet [118] incorporates depth information from stereo cameras for end-to-end 3D multiple lane detection. Liu et al. [119] propose a data enhancement technique called Light Conditions Style Transfer for lane detection in low-light conditions, improving model robustness. Other methods explore techniques such as ridge detectors [120], LSTM networks [121], and multitask attention networks [122] to enhance lane detection accuracy in various challenging scenarios. Additionally, some papers integrate multiple sensor data [123,124,125,126] or use specific sensors like radar [127] and light photometry systems [128] to achieve more robust and accurate lane detection for autonomous vehicles. These research contributions provide valuable insights into the development of advanced lane detection systems for safer and more reliable autonomous driving applications.

In their recent research, Lee et al. [116] proposed a novel approach for learning lightweight lane detection CNNs by applying self-attention distillation. FastDraw [117] addressed the long tail of lane detection by using a sequential prediction network to better predict lane markings in challenging conditions. Garnett et al. [118] presented 3D-LaneNet, an end-to-end method incorporating depth information from stereo cameras for 3D multiple lane detection. Additionally, Cao et al. [123] tailored a lane detection algorithm for intelligent vehicles in complex road conditions, enhancing real-world driving reliability. Kuo et al. [129] optimized image sensor processing techniques for lane detection in vehicle lane-keeping systems. Lu et al. [120] improved lane detection accuracy using a ridge detector and regional G-RANSAC. Zou et al. [130] achieved robust lane detection from continuous driving scenes using deep neural networks. Liu et al. [119] introduced Light Conditions Style Transfer for lane detection in low-light conditions. Wang et al. [124] used a map to enhance ego-lane detection in missing feature scenarios. Khan et al. [127] utilized impulse radio ultra-wideband radar and metal lane reflectors for robust lane detection in adverse weather conditions. Yang et al. [121] employed long short-term memory (LSTM) networks for lane position detection. Gao et al. [131] minimized false alarms in lane departure warnings using an Extreme Learning Residual Network and ϵ-greedy LSTM. Moreover, ref. [132] proposed a real-time attention-guided DNN-based lane detection framework and CondLaneNet [133] used conditional convolution for top-to-down lane detection. Dewangan and Sahu [134] analyzed driving behavior using vision-sensor-based lane detection. Haris and Glowacz [135] utilized object feature distillation for lane line detection. Lu et al. [136] combined semantic segmentation and optical flow estimation for fast and robust lane detection. Suder et al. [128] designed low-complexity lane detection methods for light photometry systems. Ko et al. [137] combined key points estimation and point instance segmentation for lane detection. Zheng et al. [138] introduced CLRNet for lane detection, while Wang et al. [122] proposed a multitask attention network (MAN). Khan et al. [139] developed LLDNet, a lightweight lane detection approach for autonomous cars. Chen and Xiang [125] incorporated pre-aligned spatial–temporal attention for lane mark detection. Nie et al. [126] integrated a camera with dual light sensors to improve lane-detection performance in autonomous vehicles. These studies collectively present diverse and effective methodologies, contributing to the advancement of lane-detection systems in autonomous driving and intelligent vehicle applications. The reviewed papers on lane-departure warning systems are summarized in Table 6.

4.6. Forward-Collision Warning System

A Forward-Collision Warning System (FCWS) is a type of ADAS that warns drivers of potential collisions with other vehicles or objects in front of them. FCWSs typically use radar, cameras, or lidar to track the distance and speed of vehicles ahead. When the system detects that the vehicle is closing in too quickly on the vehicle in front and a collision is imminent, it alerts the driver with a visual or audible warning.
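One common way to turn tracked distance and speed into a warning decision is a time-to-collision (TTC) check, sketched below. This is a minimal illustration under stated assumptions rather than the method of any reviewed paper: the function names and the 2.5 s threshold are hypothetical, and the gap and speeds are assumed to come from an upstream radar, camera, or lidar tracking stage.

```python
import math


def time_to_collision(gap_m: float,
                      ego_speed_mps: float,
                      lead_speed_mps: float) -> float:
    """Estimate the seconds until the gap closes, assuming constant speeds."""
    closing_speed = ego_speed_mps - lead_speed_mps
    if closing_speed <= 0.0:
        # The lead vehicle is as fast or faster: the gap is not shrinking.
        return math.inf
    return max(gap_m, 0.0) / closing_speed


def fcw_alert(gap_m: float,
              ego_speed_mps: float,
              lead_speed_mps: float,
              ttc_threshold_s: float = 2.5) -> bool:
    """Return True when the estimated TTC falls below the warning threshold."""
    return time_to_collision(gap_m, ego_speed_mps, lead_speed_mps) < ttc_threshold_s


if __name__ == "__main__":
    # Ego vehicle at 25 m/s approaching a lead vehicle at 15 m/s, 20 m ahead.
    print(time_to_collision(20.0, 25.0, 15.0))
    print(fcw_alert(20.0, 25.0, 15.0))
```

The constant-speed assumption keeps the example short; real systems typically also consider acceleration, driver reaction time, and sensor uncertainty before raising an alert.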

FCWSs can be an invaluable safety feature, as they can help prevent accidents caused by driver distraction or drowsiness. According to the NHTSA, rear-end collisions account for about 25% of all fatal crashes in the United States [140].

FCWSs are becoming increasingly common in new vehicles. The NHTSA has mandated that all new cars sold in the United States come equipped with FCWSs by 2022.

FCWSs can: (i) help prevent accidents caused by driver distraction or drowsiness, (ii) help drivers to brake sooner, which can reduce the severity of rear-end crashes, (iii) help improve driver awareness of the surrounding traffic, and (iv) help to reduce driver stress and fatigue.

Although FCWSs offer many advantages, they have limitations such as: (i) being less effective in certain conditions, such as heavy rain or snow, (ii) being prone to false alarms, which can lead to driver desensitization, and (iii) not being a substitute for safe driving practices, such as paying attention to the road and using turn signals.

Overall, FCWSs can be a valuable safety feature, but they are not guaranteed to prevent accidents. Drivers should still be aware of their surroundings and use safe driving practices at all times.

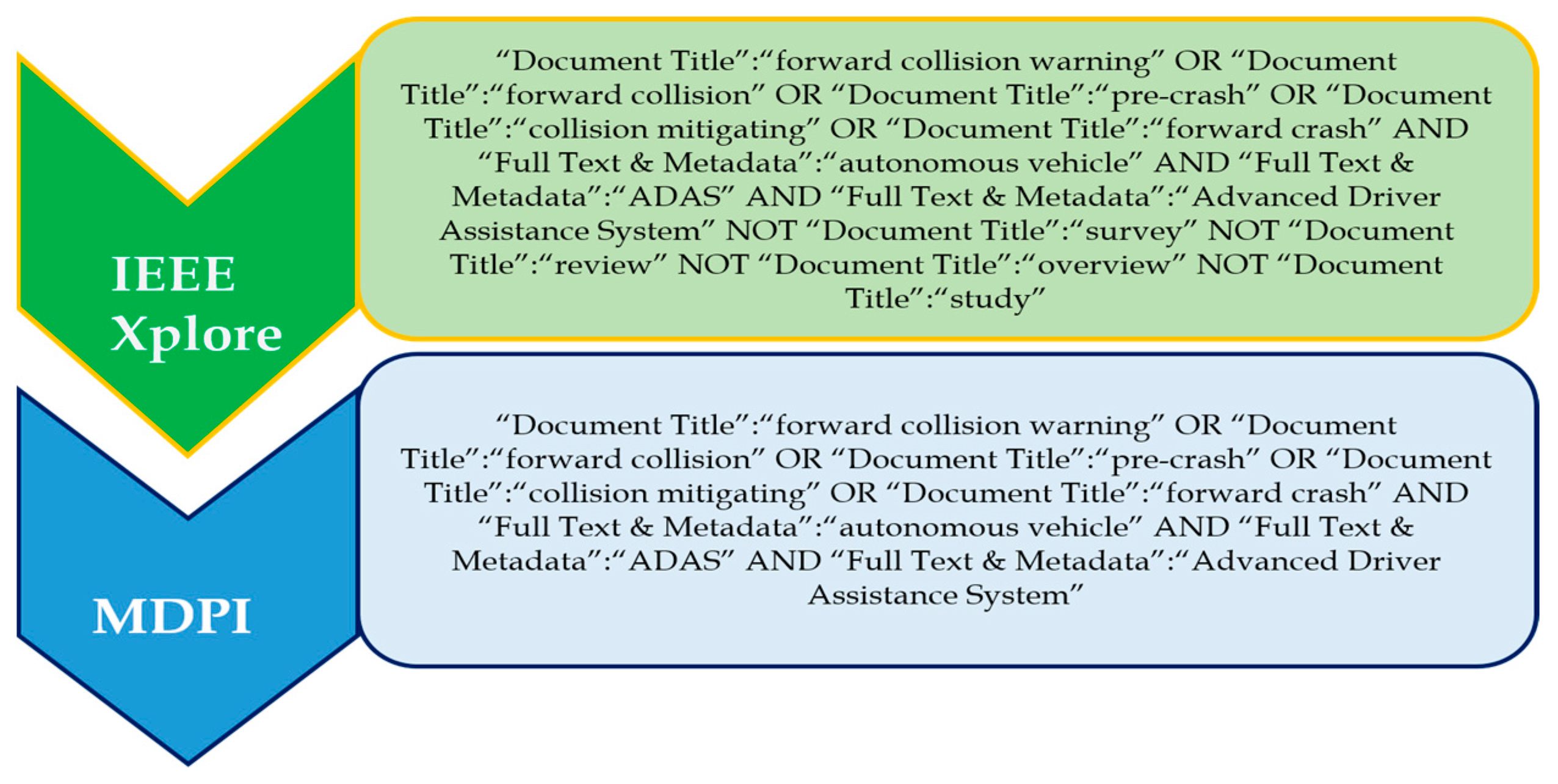

Search Terms and Recent Trends in FCWS

‘Forward collision warning’, ‘forward collision’, ‘pre-crash’, ‘collision mitigating’, and ‘forward crash’ are the five prominent search terms used to investigate this topic. The ‘OR’ operator was used to combine these most relevant and regularly used phrases.

Figure 7 shows the complete search query for each of the databases. The databases include IEEE Xplore and MDPI.

The papers listed discuss the development of FCWSs for autonomous vehicles in recent years. Ref. [141] suggests an autonomous vehicle collision avoidance system that employs predictive occupancy maps to estimate other vehicles’ future positions, enabling collision-free motion planning. Ref. [142] introduces a forward collision prediction system using online visual tracking to anticipate potential collisions based on other vehicles’ positions. Ref. [143] proposes an FCWS that combines driving intention recognition and V2V communication to predict and warn about potential collisions with front vehicles. Ref. [144] presents an FCWS for autonomous vehicles that deploys a CNN to detect and track nearby vehicles. Ref. [145] introduces a real-time FCW technique involving detection and depth estimation networks to identify nearby vehicles and estimate distances. Ref. [146] proposes a vision-based FCWS merging camera and radar data for real-time multi-vehicle detection, addressing challenging conditions like occlusions and lighting variations. Tang et al. [147] introduce a monocular range estimation system using a single camera for precise FCWS, especially in difficult scenarios. Lim et al. [148] suggest a smartphone-based FCWS for motorcyclists utilizing phone sensors to predict collision risks. Farhat et al. [149] present a cooperative FCWS using DL to predict collision likelihood in real time by considering data from both vehicles’ sensors. Hong and Park [150] offer a lightweight FCWS for low-power embedded systems, combining cameras and radar for real-time multi-vehicle detection. Albarella et al. [151] and Lin et al. [152] propose V2X communication-based FCWS, with [151] for electric vehicles and [152] targeting curve scenarios. Yu and Ai [153] suggest a hybrid DL approach employing CNN and recurrent NN for robust FCWS predictions. Olou et al. [154] introduce an efficient CNN model for accurate forward collision prediction, even in challenging conditions. Pak [155] presents a hybrid filtering method that improves radar-based FCWS by fusing data from multiple sensors, enhancing reliability.

This compilation of research papers demonstrates the extensive efforts in the field of forward-collision warning and avoidance systems, which are crucial for enhancing vehicular safety. Lee and Kum [141] propose a ‘Collision Avoidance/Mitigation System’ incorporating predictive occupancy maps for autonomous vehicles. Manghat and El-Sharkawy [142] present ‘Forward Collision Prediction with Online Visual Tracking’, utilizing online visual tracking for collision prediction. Yang, Wan, and Qu [143] introduce ‘A Forward Collision Warning System Using Driving Intention Recognition’, integrating driving intention recognition and V2V communication. Kumar, Shaw, Maitra, and Karmakar [144] offer ‘FCW: A Forward Collision Warning System Using Convolutional Neural Network’, deploying CNN for warning generation. Wang and Lin [145] present ‘A Real-Time Forward Collision Warning Technique’, integrating detection and depth estimation networks for real-time warnings. Lin, Dai, Wu, and Chen [146] introduce a ‘Driver Assistance System with Forward Collision and Overtaking Detection’. Tang and Li [147] propose ‘End-to-End Monocular Range Estimation’ for collision warning. Lim et al. [