2. Noise Level Estimation Based on PCA

For an input noisy image

of size

, the image is scanned pixel by pixel in a sliding-window fashion;

is the set of overlapping patches of size

, containing a total of

patches. The matrix columns of the patches

are vectorized:

where

is the i-th noiseless (original image) patch,

is additive white noise following a Gaussian distribution

, and

is the corresponding noise patch. Assuming that the noise vectors are uncorrelated with each other, refs. [27,28] used principal component analysis on this noise model to derive the following equation:

where

and

are the minimum eigenvalues of the covariance matrices

and

respectively. However, in the blind noise estimation problem, the noiseless image is unknown [36]. It can be assumed that

of the set of patches

x covered by weak-textured and relatively homogenous regions in the noisy image is equal to zero. Then, Equation (2) can be rewritten as:

where

is the subset of patches in set

y that satisfy the homogenous conditions. The minimum eigenvalue of the covariance matrix

is equal to the actual noise level, so screening homogenous patches from the set of raw patches is a key step in the noise estimation algorithm based on principal component analysis.
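The estimation step described above can be sketched as follows. This is a minimal illustration, not the implementation of [27,28]: it assumes the usual additive model (noisy patch = noiseless patch + noise patch), uses a perfectly homogenous toy image so that every patch qualifies as homogenous, and the helper names `extract_patches` and `pca_noise_std` are hypothetical.

```python
import numpy as np

def extract_patches(image, d):
    """Return every overlapping d x d patch as one row of a 2-D array."""
    windows = np.lib.stride_tricks.sliding_window_view(image, (d, d))
    return windows.reshape(-1, d * d)

def pca_noise_std(patches):
    """Square root of the smallest eigenvalue of the patch covariance matrix."""
    cov = np.cov(patches, rowvar=False)      # (d*d) x (d*d) sample covariance
    eigenvalues = np.linalg.eigvalsh(cov)    # returned in ascending order
    return float(np.sqrt(max(eigenvalues[0], 0.0)))

rng = np.random.default_rng(0)
true_sigma = 10.0                            # assumed noise standard deviation
flat = np.full((128, 128), 128.0)            # toy homogenous image (assumption)
noisy = flat + rng.normal(0.0, true_sigma, flat.shape)
sigma_hat = pca_noise_std(extract_patches(noisy, d=5))
print(f"estimated sigma: {sigma_hat:.2f} (true value {true_sigma})")
```

On a real image, only the patches that pass the homogeneity screening would be passed to `pca_noise_std`.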

An appropriate measure of image structure must be determined to select low-rank patches with similar structures [37]. The gradient covariance matrix [38] can be used to detect image texture and structure, and Liu et al. [27] proposed an iterative weak-textured patch selection method that uses the trace of a patch’s gradient covariance matrix as a texture strength measure. The statistical properties of the texture strength

satisfy the gamma distribution, and when the texture strength of a patch is less than the threshold

, the patch can be considered a weak-textured patch. In the k-th iteration, the threshold

can be expressed as a function of the given significance level

and the noise level

of the k-th iteration as follows:

where

is the inverse gamma cumulative distribution function with shape parameter

and scale parameter

,

is the probability that the strength of the texture is not greater than

,

is the standard deviation of the Gaussian noise at the k-th iteration, and

and

represent the matrices of horizontal and vertical derivative operators.
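As a rough illustration of the texture strength measure, the sketch below computes the trace of a patch's gradient covariance matrix and keeps the patches that fall below a threshold. It is written under stated assumptions: `np.gradient` (central differences) stands in for the horizontal and vertical derivative operators of [27], the threshold `tau` is a hypothetical fixed value rather than the iteration-dependent threshold defined above, and the function names are illustrative.

```python
import numpy as np

def texture_strength(patch):
    """Trace of the 2 x 2 gradient covariance matrix of a single patch."""
    grad_v, grad_h = np.gradient(patch.astype(float))   # vertical, horizontal
    gradients = np.column_stack([grad_h.ravel(), grad_v.ravel()])
    gradient_cov = gradients.T @ gradients               # 2 x 2 matrix
    return float(np.trace(gradient_cov))

def select_weak_textured(patches, tau):
    """Keep the patches whose texture strength is below the threshold tau."""
    return [p for p in patches if texture_strength(p) < tau]

flat_patch = np.full((5, 5), 100.0)                      # no structure
edge_patch = np.hstack([np.zeros((5, 2)), np.full((5, 3), 255.0)])  # vertical edge
weak = select_weak_textured([flat_patch, edge_patch], tau=1.0)
print(texture_strength(flat_patch), texture_strength(edge_patch), len(weak))
```

In the iterative scheme, `tau` would be recomputed from the current noise estimate at every iteration instead of being fixed.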

The local variance is also an important feature for characterizing the structural information of the image. A large local variance indicates that the patch belongs to a high-frequency part of the image, such as an edge or corner region. The local variance of

is defined as follows:

where

is the average gray value of

. The

of the flat patch obeys a gamma distribution

. Based on the nature of the gamma distribution we can obtain the mean value of the variance

. Thus, we can use the mean local variance to estimate the noise. For the flat patch, Ping et al. [39] proved the following theorem:

Theorem 1. There must exist a unique such that with where represents the pdf of , is the inverse Gamma cumulative distribution function, and , which is a given significance level, denotes the probability that the local variance is no more than , i.e., ( represents the probability).

Following Theorem 1, we consider the patches whose local variances do not exceed

to be flat patches. Assuming that the patches obtained after the selection process of weak-textured and flat patches are homogenous patches, the true noise variance can be obtained by performing principal component analysis-based noise estimation on these patches. However, for images with high texture and high rank, the homogenous patch selection method has some obvious drawbacks. Patches with small local variance

or texture strength

do not necessarily satisfy the homogeneity condition, and patches containing high-frequency information with high similarity are also low-rank patches [40]. Distinguishing such patches for collection is difficult, so homogenous patches will not be fully extracted when relying only on the above patch selection methods.
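The claim that the mean local variance of flat patches tracks the noise variance can be checked numerically. The sketch below is a minimal example under assumptions: it uses the unbiased sample variance (normalization by the number of pixels minus one), which may differ from the normalization in the definition above, and it simulates perfectly flat patches corrupted by Gaussian noise.

```python
import numpy as np

def local_variance(patch):
    """Unbiased sample variance of the gray values in one patch."""
    return float(np.var(patch, ddof=1))

rng = np.random.default_rng(1)
true_sigma = 10.0                                   # assumed noise level
# 10,000 simulated flat 5 x 5 patches: constant gray value plus Gaussian noise.
flat_patches = 128.0 + rng.normal(0.0, true_sigma, size=(10000, 5, 5))
variances = np.array([local_variance(p) for p in flat_patches])
mean_variance = variances.mean()
print(f"mean local variance: {mean_variance:.1f} (sigma^2 = {true_sigma**2:.1f})")
```

Individual local variances scatter widely around the noise variance, which is why a threshold on a single patch's variance cannot by itself guarantee homogeneity.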

Most of the eigenvalues of a noisy image fluctuate around the true noise variance rather than equaling it exactly, so the standard deviation of the noise must be computed from the statistical properties of the eigenvalues. The methods in [27,28] assumed that the smallest eigenvalue

of homogenous patches is an estimate of the noise variance

. However, experimental results have shown that the estimated noise variance is consistently smaller than the true noise variance

.

Due to the inherent information redundancy and correlation of natural images, the patches obtained from images are usually located in low-dimensional subspaces [41].

is the set of eigenvalues of the homogenous patch set

. Given

, we can represent

as

, where

denotes the eigenvalue of the principal dimension and

denotes the eigenvalue of the redundant dimension [15]. Given that the eigenvalues of the redundant dimension

follow a Gaussian distribution

, the expected value of

can be approximated as [42,43]:

where

and

denotes the cumulative distribution function of the standard Gaussian distribution. When the number of redundant dimensions

, we obtain the minimum eigenvalue that is intrinsically smaller than the actual noise level,

. The result of Equation (3) underestimates the noise variance, and the accuracy of the estimation results progressively decreases as the number of redundant dimensions increases.
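The growth of the underestimation with the number of redundant dimensions can be illustrated with a small Monte Carlo experiment. This is a sketch under assumptions, not the closed-form approximation of [42,43]: the redundant eigenvalues are drawn independently from a Gaussian centred on a hypothetical noise variance of 100 with a hypothetical spread of 5, and the minimum is averaged over many trials.

```python
import numpy as np

rng = np.random.default_rng(2)
noise_variance = 100.0     # hypothetical true noise variance
spread = 5.0               # hypothetical standard deviation of the eigenvalues
trials = 20000

expected_min = {}
for r in (1, 5, 25):       # r = number of redundant dimensions
    eigenvalues = rng.normal(noise_variance, spread, size=(trials, r))
    expected_min[r] = float(eigenvalues.min(axis=1).mean())
    print(f"r = {r:2d}: mean of the minimum eigenvalue = {expected_min[r]:.2f}")
```

With a single redundant dimension the mean stays close to the noise variance, and it moves further below it as more redundant dimensions are included.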

The redundant dimension assumption also has limitations because highly textured images have extremely rich textures with large differences in pixel values. Therefore, detailed information tends to be incorrectly included in the noise signal, resulting in a minimum eigenvalue greater than zero [44].

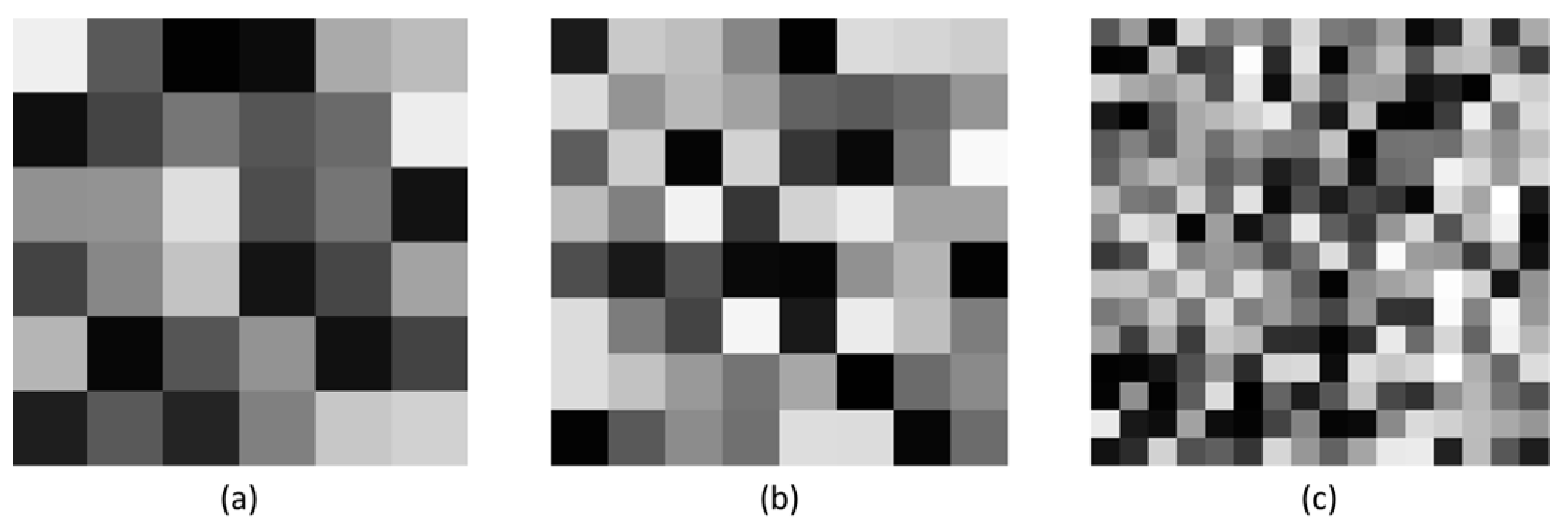

Figure 1 shows two images with different texture levels, “Bark” and “House”, and the results of the estimated noise standard deviation. “House” has a simpler structure, with most of the localized areas relatively smooth and having weak texture strength, and the minimum eigenvalue is slightly smaller than the actual noise level. “Bark” presents complex details with fine textures, including rich edges and ridged scenes, and the minimum eigenvalue is larger than the actual noise level, especially in low-noise conditions. For such highly textured images, noise estimation premised on the redundancy assumption therefore overestimates the noise level, which seriously reduces the accuracy of the estimation results.

4. Experimental Results

In this section, we evaluate the performance of the proposed algorithm on synthetic noisy images. We use the following two classical image datasets, which are commonly used to evaluate image noise estimation algorithms: the BSDS500-test dataset and the Textures dataset. The BSDS500-test dataset contains 200 diverse real-world scenes (e.g., transportation, architecture, landscape, nature, people, flora, and fauna); Figure 5 shows some natural images in the BSDS500 dataset.

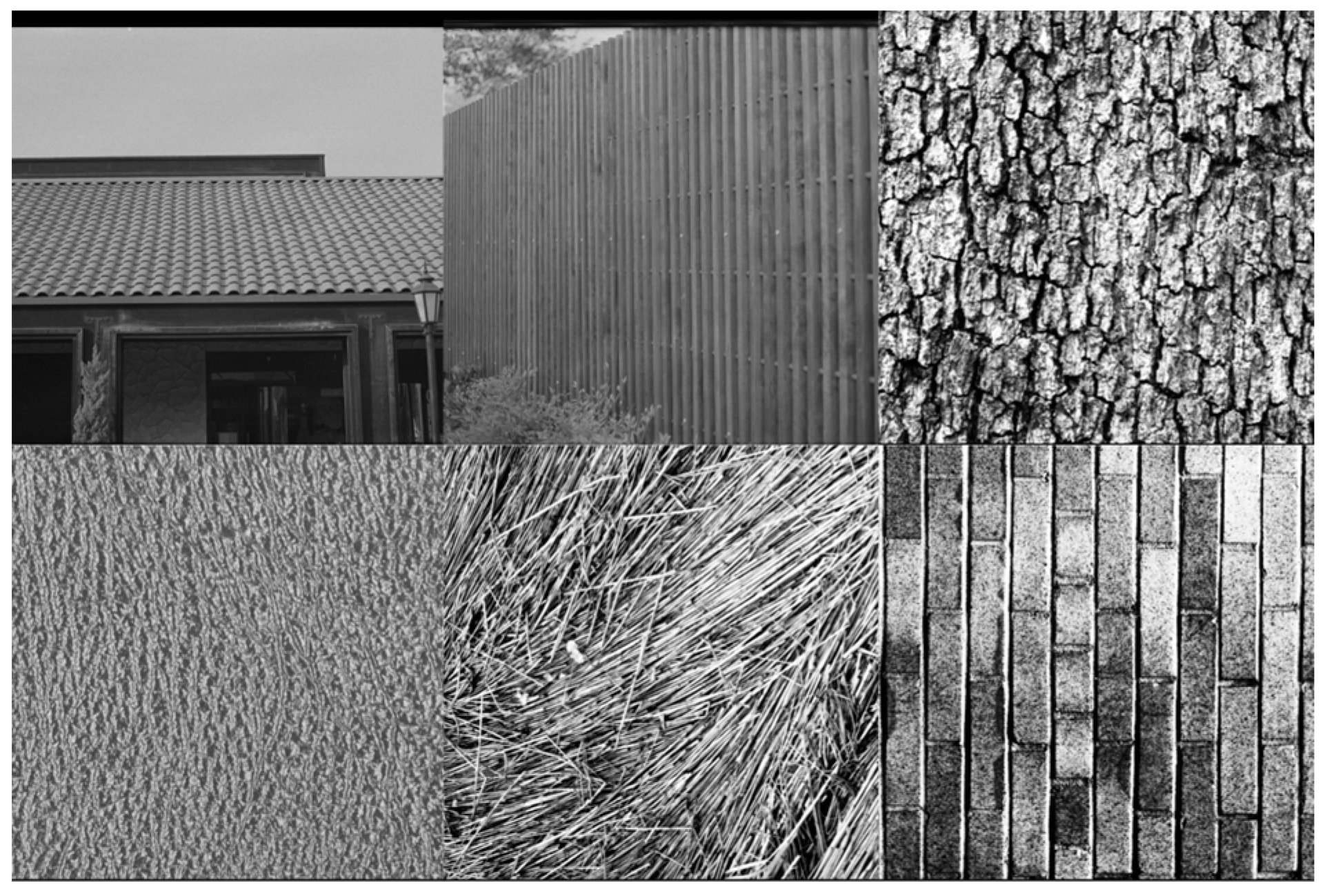

The Textures dataset contains 64 texture images, including surface texture images of various materials that differ in features such as size, shape, and color; several texture images are shown in Figure 6.

In our experiments, we added AWGN with variance from 10 to 100 in steps of 10 to the test images to generate synthetic images, and we ran a total of 100 simulations for each image and noise level.
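The generation of the synthetic test images can be sketched as follows. This is an illustration under assumptions: the levels 10 to 100 are interpreted as noise standard deviations (Section 4.3 refers to a noise standard deviation of 10), a constant array stands in for a test image, and `add_awgn` is a hypothetical helper.

```python
import numpy as np

def add_awgn(image, sigma, rng):
    """Add zero-mean white Gaussian noise with standard deviation sigma."""
    return image + rng.normal(0.0, sigma, size=image.shape)

rng = np.random.default_rng(3)
reference = np.full((256, 256), 128.0)     # stand-in for a test image
noise_levels = list(range(10, 101, 10))    # 10, 20, ..., 100
noisy_images = {s: add_awgn(reference, s, rng) for s in noise_levels}

# Sanity check: the empirical standard deviation of the added noise.
measured = {s: float((noisy_images[s] - reference).std()) for s in noise_levels}
for s in noise_levels:
    print(f"target sigma = {s:3d}, measured sigma = {measured[s]:.2f}")
```

Repeating the loop with fresh random draws gives the repeated simulations per image and noise level.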

We selected five of the most commonly used and state-of-the-art noise estimation algorithms [14,27,28,29,30] for performance comparison with the proposed algorithm. The source codes of these methods were downloaded from the corresponding authors’ websites. To ensure the authenticity and reliability of the comparison results, the default parameters provided by the authors were used for all five algorithms. For color images, the final noise level estimate was obtained by averaging the noise level estimates of the individual color channels. All algorithms were implemented on the MATLAB R2021a platform (8 GB RAM, Intel® Core™ i5-6500 CPU, 3.20 GHz (Intel, Santa Clara, CA, USA)).

4.1. Evaluation Metrics

In this study, we use the following three commonly used evaluation metrics to evaluate the performance of the proposed algorithm: bias (Bias), standard deviation (Std), and root mean square error (RMSE), which reflect the accuracy, robustness, and overall performance of the algorithm, respectively. The calculation formulas are as follows [29,40]:

where

is the output of the noise estimation algorithm. Smaller values of these three evaluation metrics indicate better performance of the noise estimation algorithm.
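A minimal sketch of how the three metrics can be computed from repeated estimates is given below. The definitions are the commonly used ones and are assumed here rather than quoted from [29,40]: Bias is the absolute difference between the true noise level and the mean estimate, Std is the standard deviation of the estimates, and RMSE is the root of the mean squared error. The function name `evaluate` is hypothetical.

```python
import numpy as np

def evaluate(estimates, true_sigma):
    """Return (bias, std, rmse) of repeated noise-level estimates."""
    estimates = np.asarray(estimates, dtype=float)
    bias = abs(true_sigma - estimates.mean())
    std = estimates.std()                                   # population form
    rmse = np.sqrt(np.mean((estimates - true_sigma) ** 2))
    return float(bias), float(std), float(rmse)

# Two hypothetical estimates of a true noise level of 10.
bias, std, rmse = evaluate([8.0, 10.0], true_sigma=10.0)
print(bias, std, rmse)
```

With these definitions, the squared RMSE equals the sum of the squared Bias and the squared Std, so RMSE summarizes both accuracy and robustness.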

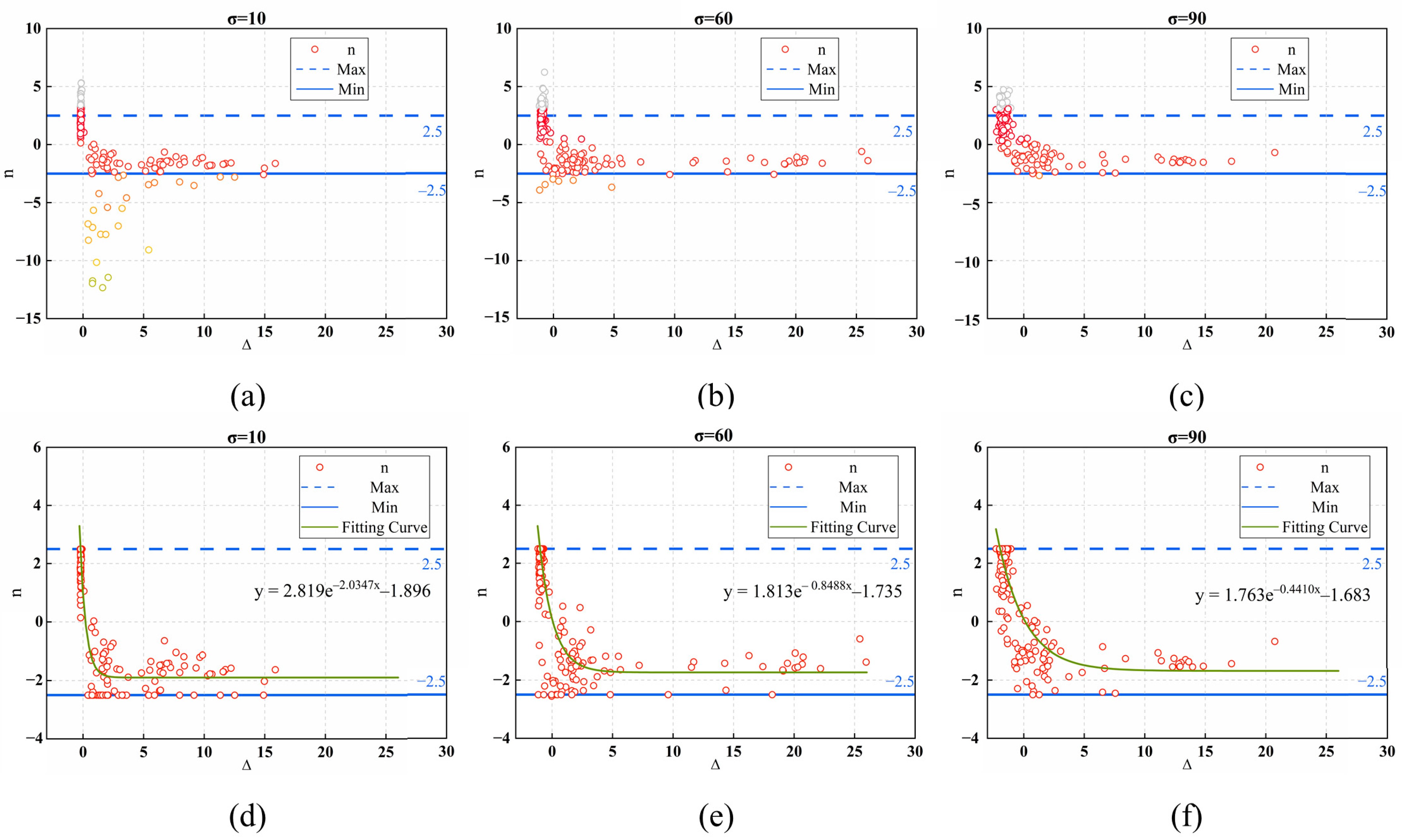

4.2. Parameter Configuration

Before validating the proposed algorithm, the parameters in Equation (16) were obtained by sampling the image datasets. We calculated the values of parameters

,

, and

of the synthetic images at various noise levels by adding known noise levels to the reference images in the three image datasets as described in Section 3.2. The process was repeated for a total of 100 experimental simulations and the parameters in Equation (16) were calculated using multivariate nonlinear regression to obtain robust nonlinear regression model parameters. The results are presented in Table 1.

Using the parameters obtained from the image dataset ensures the accuracy of the redundant dimension prediction and improves the accuracy and robustness of the noise estimation results. The images used to obtain the parameters of the regression model and the images used for validation are mostly mutually exclusive.

In the proposed algorithm, there are four fixed parameters in the patch selection process. The significance level

and the number of iterations

were set directly to the best values chosen in [27]. Two other important parameters of the algorithm, the significance level

and the patch size

, need to be set. We first selected candidate ranges based on the default parameters of the comparison algorithms and then determined the optimal values by trial and error. The parameter values for

ranged from 4 to 9, and the parameter values for

ranged from 0.7 to 0.9. We introduced these parameters into the proposed algorithm and applied the proposed algorithm to the BSDS500-train dataset. The simulations were performed according to the experimental methodology described in

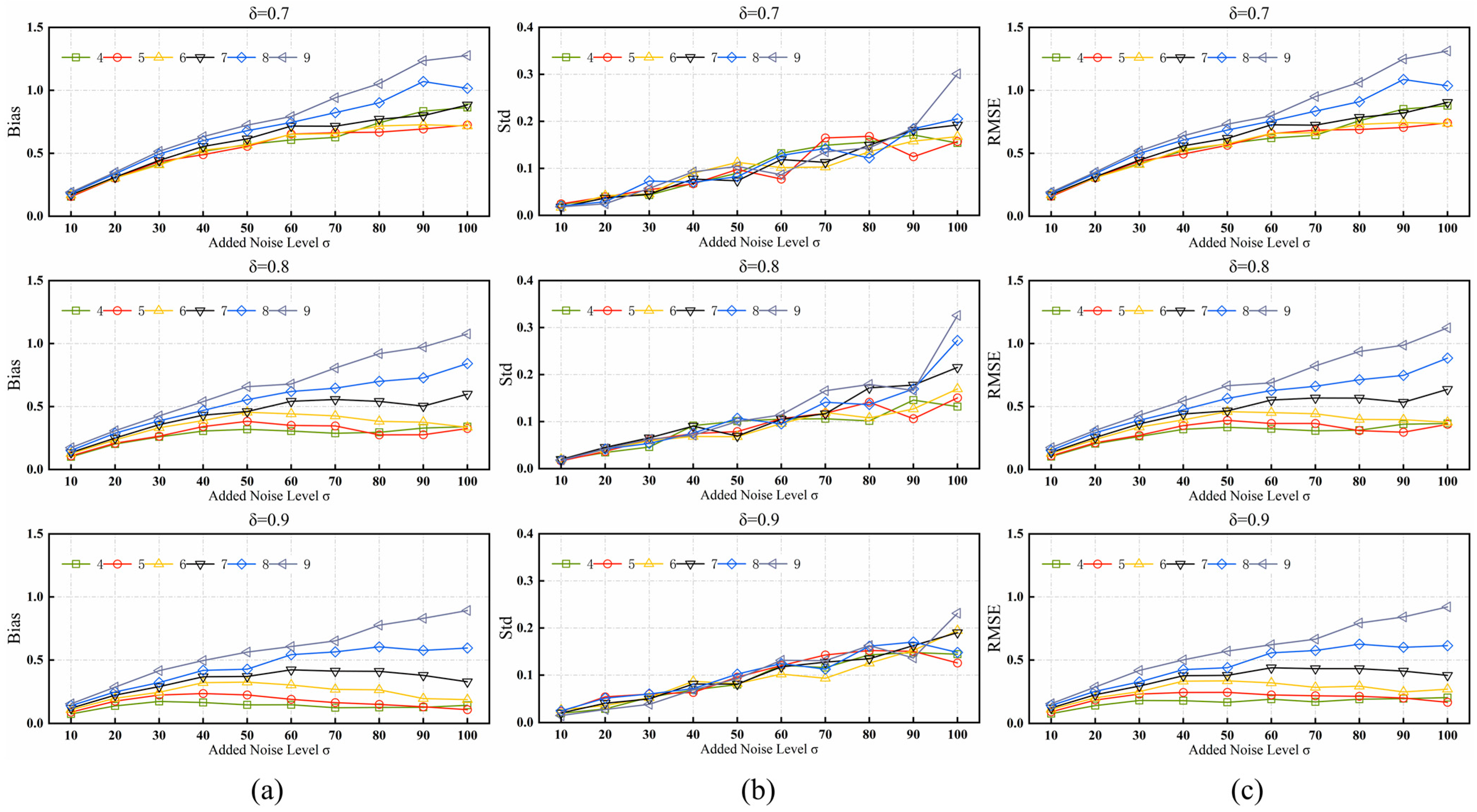

Section 4, and evaluation metrics were used to ensure that the parameter settings were optimal.

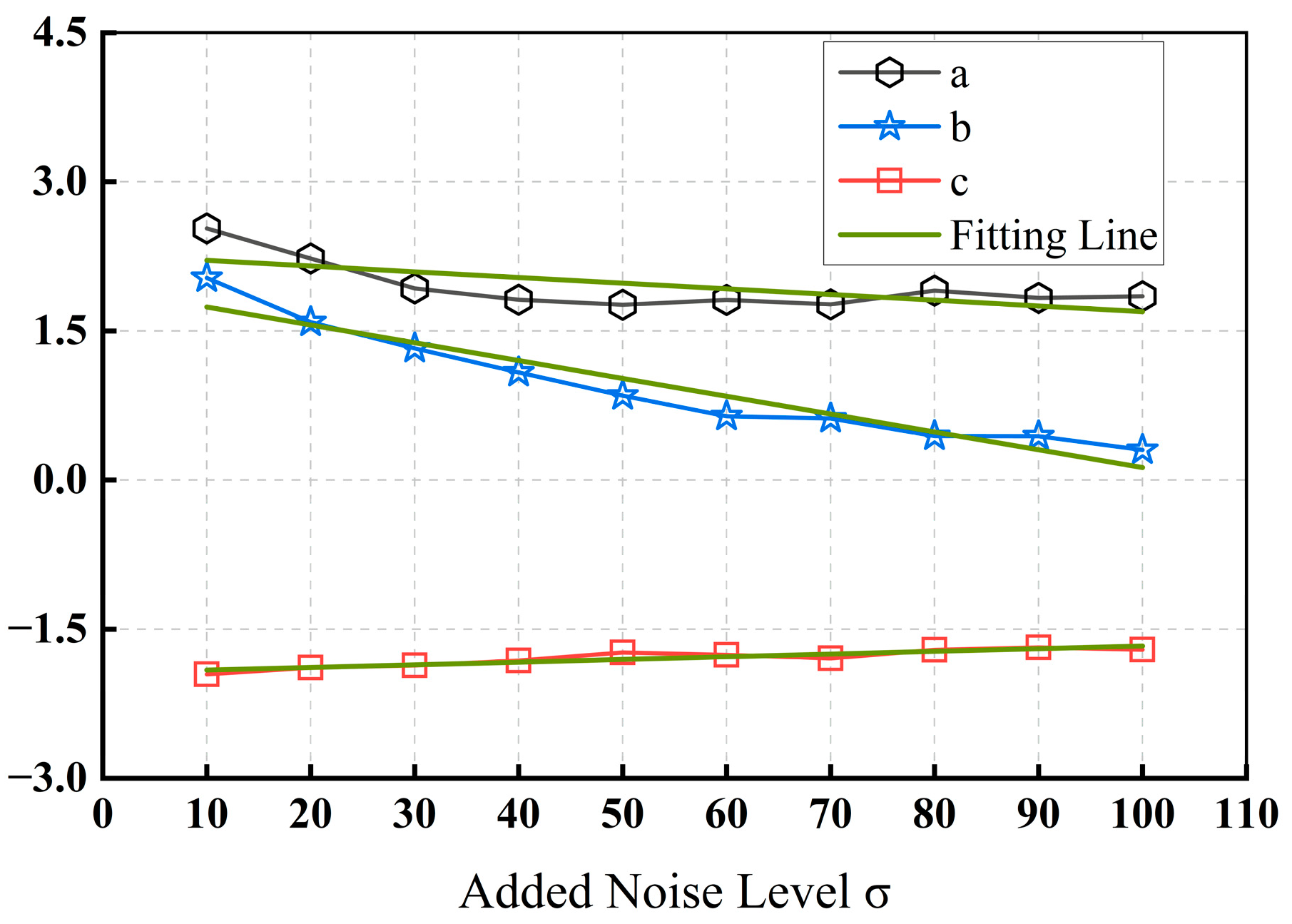

The experimental results are shown in Figure 7, which clearly shows that the performance of the proposed algorithm is extremely similar when

is set to 0.9 and

is set to 4 or 5. The accuracy and robustness are better under these parameters than under other parameter combinations. The image characteristics, noise strength, and application-specific requirements should also be accounted for when choosing the appropriate patch size

and significance level

. Because smaller patches are more sensitive to structure, the estimates may be too high and unstable under low noise levels and large structural differences, whereas larger patches contain more pixel information for statistical analysis, resulting in higher accuracy and reliability. Therefore,

is set to 5 and

to 0.9 as the default parameters. The initial parameters of the proposed algorithm are listed in Table 1; this set of parameters was used for all experiments in this section.

4.3. Performance Comparison

To test the robustness of the algorithm to scene variation, we conducted experiments on the BSDS500-test dataset, which has high complexity and realism. Table 2, Table 3 and Table 4 show the results of the accuracy, reliability, and overall performance comparisons, respectively.

The proposed algorithm is clearly superior to the other methods in all evaluation metrics and maintains a stable performance level in the selected noise range of 10 to 100. The proposed model achieves the best accuracy, robustness, and overall performance when compared with other algorithms. The results also show that our algorithm is not limited to a single situation and can effectively respond to the needs of different scenarios.

We further evaluated the performance of the algorithm on high-frequency images from the challenging Textures dataset, where most of the test images contain a large range of fine structures. As can be seen from Table 5, Table 6 and Table 7, when the noise standard deviation is 10, the Bias of [27] is slightly better than that of the proposed algorithm, but the difference is not significant. The Std and RMSE of [28] are slightly higher than those of the proposed algorithm under low noise level conditions. However, as the noise level increases, in the noise level range of 40–100, our algorithm gradually establishes a significant performance advantage, and every performance indicator is ahead of the comparison methods.

The experimental results in this subsection show that our algorithm mitigates the underestimation of traditional noise estimation algorithms for images containing homogenous regions, and effectively avoids the loss of image information and the aggravated overestimation caused by redundancy assumptions in low-noise conditions for highly textured images.

4.4. Computational Complexity

For an image, when considering the computational complexity of Algorithm 1, we must focus on the calculation of the sample covariance matrix, as it is applied to calculate the initial noise standard deviation (Equation (3)), and two iterative computational procedures: weak texture patches search and flat patches search. Algorithm 1 generally has a computational complexity

in generating the overlapping patches, and

in calculating the eigenvalue of its covariance matrix.

in searching the weak-textured patches, and

in calculating the eigenvalue of its covariance matrix. According to the explanation in [39], the total computational complexity in searching the flat patches is

; obviously, the computational complexity is much larger than that of the weak texture patch search process.

in calculating the overall texture strength,

in calculating the redundant dimension, and

in calculating the final estimate of the standard deviation of actual noise level.

The most expensive part of Algorithm 1 is the flat patch search, which is much more complex than the other steps; thus, the algorithm has an overall computational complexity of . (In this study and ).

We also evaluate the computational complexity by comparing the running time in seconds of the proposed algorithm with that of other noise estimation algorithms. The average execution time of various algorithms on a 512 × 512 image is shown in Table 8.

The proposed algorithm runs faster than [14,28,29]. Although refs. [27,30] are faster than the proposed algorithm, they perform much worse in the experiments in Section 4.3.

5. Conclusions

In this study, we propose a noise estimation algorithm to estimate the true noise variance from a single noisy image with complex texture and strong noise. Using the PCA noise estimation algorithm based on homogenous patches as a foundation, we discuss the relationship between eigenvalues, noise strength, and scene complexity and analyze the reasons for the generation of underestimation and overestimation. We optimize the outlier removal process by introducing the absolute median difference. According to the data sample statistics, the nonlinear regression model of discriminant coefficients is fitted to improve the accuracy of the estimation results, which makes the algorithm more robust.

To validate the performance of the proposed algorithm, we compare it with five state-of-the-art algorithms under different types of datasets and different noise levels and further validate it in denoising applications. The experimental results show that the accuracy and robustness of the proposed algorithm are the highest in most scenarios over a wide range of noise levels. In some scenarios, the algorithm also runs faster while achieving the same overall performance. In addition, the performance of the algorithm is extremely stable on different datasets, having better results for both high- and low-frequency images.

For the algorithm proposed in this study, a more comprehensive noisy image dataset, including more types of scenes and different levels of noise, should be built in the future for training and extending the algorithm. Different feature extraction methods, such as wavelet transform and quantum mechanics, could also be explored to improve the robustness and performance of the algorithm. The current nonlinear regression model and its parameters are not necessarily the optimal solution. The optimal model parameter configuration could be selected by cross-validation, optimization algorithms, and other methods to improve the algorithm and apply it to a wide range of fields, such as remote sensing images [48] and channel noise [49].

In this study, the noise model of the images is assumed to be a zero-mean additive Gaussian distribution, but in real-world applications, the noise may be more complex. In addition to the common Gaussian noise model, other types of noise need to be considered, such as salt and pepper noise and Poisson noise, and understanding the nature and generation mechanism of these noise models is crucial for extending the algorithm. Next, we will look into the possibility of extending the proposed algorithm to multiplicative noise evaluation, and we will also aim to improve the noise parameter estimation algorithm by using more robust methods such as [50]. According to the characteristics of different noise types, we can use targeted preprocessing methods and select suitable features to extract the noise information in the image. To adapt to the modeling and estimation of different types of noise, we need to adjust the model parameters in the algorithm accordingly or explore the design of more flexible model structures.