Abstract

The latest advances in mobile platforms, such as robots, have enabled the automatic acquisition of full coverage point cloud data from large areas with terrestrial laser scanning. Despite this progress, the crucial post-processing step of registration, which aligns raw point cloud data from separate local coordinate systems into a unified coordinate system, still relies on manual intervention. To address this practical issue, this study presents an automated point cloud registration approach optimized for a stop-and-go scanning system based on a quadruped walking robot. The proposed approach comprises three main phases: perpendicular constrained wall-plane extraction; coarse registration with plane matching using point-to-point displacement calculation; and fine registration with horizontality constrained iterative closest point (ICP). Experimental results indicate that the proposed method successfully achieved automated registration with an accuracy of 0.044 m and a successful scan rate (SSR) of 100% within a time frame of 424.2 s with 18 sets of scan data acquired from the stop-and-go scanning system in a real-world indoor environment. Furthermore, it surpasses conventional approaches, ensuring reliable registration for point cloud pairs with low overlap in specific indoor environmental conditions.

1. Introduction

1.1. Background

The digital transformation (DX) of construction sites has become increasingly important for achieving productivity growth. While DX for productivity improvement has been adopted across various industrial sectors since 2005, the construction industry has lagged behind, exhibiting the lowest digitization index and annual growth rate [1]. Recognizing this challenge, many leading construction companies have initiated the implementation of DX at their construction sites.

A key factor in successfully implementing these digital solutions is the effective management of data from facilities, which begins with acquiring accurate 3D geometric information. Point clouds, obtained through state-of-the-art field data acquisition systems and LiDAR scanners, have emerged as the prevailing approach for documenting the 3D geometry of existing buildings [2,3]. This method has gained popularity due to its ability to provide precise and comprehensive representations of structures, facilitating the overall DX of the construction sector.

Recent advancements in LiDAR scanning technology have revolutionized data acquisition by offering long-distance, high-resolution, and rapid scanning capabilities with millimeter-level accuracy [4,5]. Leveraging these advantages, laser scanning has found widespread applications across diverse fields, encompassing 3D model reconstruction [6,7,8], geometry quality control [9,10], construction assessment [11,12], historic preservation [13,14], and facility management [15].

The current laser scanning solutions for acquiring point cloud data can be broadly classified into two categories: (1) terrestrial laser scanning (TLS) and (2) mobile laser scanning (MLS). Each solution has its own set of advantages and limitations. TLS offers high-density point cloud data with exceptional accuracy, making it an ideal choice for generating reliable 3D geometric information. However, it collects data under static conditions, resulting in longer acquisition times compared to MLS [16]. On the other hand, MLS provides instant and continuous point cloud data, allowing for faster data collection. However, it comes with some drawbacks, such as lower point cloud resolution and higher noise due to motion distortion [17]. As a result, MLS may not be as suitable for generating precise 3D geometric information as TLS [18].

Progress in mobile robotics platforms has transformed the acquisition of high-resolution point clouds. These advances have effectively addressed a key drawback of TLS by significantly reducing the time required for scanning [19]. Many researchers have proposed stop-and-go scanning systems that incorporate mobile robots to collect precise 3D geometric data, thereby minimizing scanning time and human intervention [16,20,21,22,23,24]. In such systems, mobile robots navigate automatically to specific positions, where they conduct static laser scanning to generate detailed 3D point clouds.

However, the 3D point cloud collected by stop-and-go scanning systems requires essential post-processing known as registration, which involves aligning multiple point cloud datasets from local coordinate systems into a unified coordinate system that covers the entire scene [25,26]. While existing stop-and-go scanning systems can perform the acquisition of 3D point cloud data automatically, many of them do not address the issue of automatic point cloud registration or still rely on manual registration methods to align the acquired data [16,20], which can be highly inefficient and time-consuming.

1.2. Related Studies

1.2.1. Point Cloud Registration

Previous research on TLS point cloud data registration has been conducted in various fields, including remote sensing, photogrammetry, and mobile robotics, with the aim of improving robustness and efficiency [25,27,28]. Registration methods commonly follow a coarse-to-fine strategy and employ different types of geometric features. Geometric features such as points, lines, and planes are often used in TLS point cloud coarse registration because they provide strong geometric constraints in urban environments. Point-feature-based registration methods rely on algorithms such as 3D SURF [29], Harris 3D [30], RANSAC [31], and the 4PCS-based method [32] for feature detection and matching. However, these methods can be sensitive to variations in point cloud density and noise [33]. Line features are often used in urban TLS point cloud registration because roads and buildings, the most typical infrastructure in urban space, provide distinctive line features [34]. Al-Durgham and Habib [35] argue that line features provide stronger geometric constraints than point features and yield more accurate results. Prokop et al. [36] proposed a line-feature-based solution for indoor scan pairs with very low overlap. However, these approaches still exhibit low accuracy and slow computation relative to other methods.

Plane-feature-based coarse registration methods are less affected by point cloud density and noise, making them suitable for various types of input point clouds [37,38,39]. Theiler and Schindler [40] proposed a coarse registration method using indirect plane features, utilizing virtual tie points generated by intersecting planar surfaces in the scene. Xu et al. [41] introduced a semi-automatic coarse registration approach for point clouds using the geometric constraints of voxel-based 4-plane congruent sets. Inspired by these methods, several automatic coarse registration methods based on plane features have been developed [42,43]. Chen et al. [44] utilized plane and line extraction for registering scans with limited features and small overlap in arbitrary initial poses. Zou et al. [45] utilized integrated navigation system (INS) sensors to acquire longitude, latitude, altitude, and attitude data for their mobile mapping systems (MMS). They then conducted a plane-based global registration using this data to perform 3D reconstruction. In summary, plane-feature-based approaches offer robustness and good performance in urban environments with man-made artifacts [26].

With the development of AI technology, several coarse registration methods based on deep learning have also been proposed. Zeng et al. [46] introduced a voxelization-based registration method called 3DMatch that divides point clouds into regular 3D grids and utilizes a 3D convolution network structure. Ao et al. [47] presented a deep-learning-based surface descriptor called SpinNet for 3D point cloud registration. Li et al. [48] designed the point cloud multi-view convolution neural network (PC-MVCNN) for point cloud registration. However, deep-learning-based approaches face a critical limitation due to their dependence on the training dataset. Facilities exhibit diverse structures and shapes depending on the purpose of the building or infrastructure. Therefore, a model trained with a general facility might fail to perform segmentation of the point cloud successfully for different types of sites [10,49]. Additionally, the limited amount of input data and complexity pose challenges in applying these methods to large-scale point clouds [26].

The Iterative Closest Point (ICP) algorithm [50] is the most commonly used method for fine registration [25,26]. Variants of the ICP algorithm, including point-to-plane ICP [51] and plane-to-plane ICP [52], have also been developed. Zhang et al. [53] proposed a new ICP method to improve the convergence speed and robustness of point-to-point and point-to-plane ICP. However, despite their popularity, ICP and its variants rely heavily on a good initial estimate and on the quality of the point cloud data, including noise, density, and occlusions.

Another frequently employed technique for fine registration is the normal distribution transform (NDT) algorithm [54]. NDT-based registration methods represent point cloud data using probability density functions (PDF) and assess the similarity between these functions. Several variants of NDT have been proposed, involving differentiating the grid size for PDFs [55,56] and evaluating similarity through point-to-distribution or distribution-to-distribution approaches [57]. However, NDT and its variants face similar limitations to ICP, as they also depend on a reliable initial estimation.

1.2.2. Point Cloud Registration for Stop-and-Go Scanning System

To address the static nature of traditional TLS, various moving platforms have been explored to develop stop-and-go scanning systems. Blaer and Allen [20] pioneered the stop-and-go scanning system using an unmanned ground vehicle (UGV) and optimized its scanning plan. Notably, they relied on manual registration using reflective targets distributed throughout the environment. Kurazume et al. [58] devised a TLS scanning system that employed multiple UGVs, consisting of a primary robot and subsidiary robots, in which the subsidiary robots served as target markers for the registration process. Liu et al. [59] introduced a vehicle-based stop-and-go scanning system tailored for detecting deformations in highway bridgeheads. For registration, they manually selected feature points shared between two scans, such as traffic sign corner points and guardrail cylinder centers. Some researchers have also investigated novel robot models. For instance, Park et al. [16] developed an automated stop-and-go scanning system powered by a quadruped walking robot, Boston Dynamics Spot. However, the post-scan registration was still performed manually. The recurring reliance on manual and target-based registration underlines its inefficiency and the need for an automated solution. Most of these systems either overlook automatic registration or remain dependent on laborious manual registration techniques [16,20,58,59], which introduces inefficiencies and lengthens post-acquisition processing.

To address this issue, several researchers have suggested automatic registration techniques that could potentially be integrated with stop-and-go scanning systems. Many of them rely on registration guided by supplementary sensor data, such as odometry, inertial measurement units (IMU), global navigation satellite systems (GNSS), cameras, and data from the robot itself [60,61,62]. Lin et al. [63] conceptualized an MMS framework grounded in the TLS-based stop-and-go mapping concept, in which registration relied on scanner poses derived from IMU/GPS data and the ICP algorithm. However, only a theoretical analysis was offered, without testing in real-world scenarios. A distinct approach uses visual sensor data. Mohammed et al. [64] used RGB images linked with scans and applied the SURF algorithm to estimate the relative orientation between scans. Ge et al. [61] described an image-informed, end-to-end registration strategy for unordered TLS point cloud datasets. Recent methodologies have also incorporated scan planning data. Knechtel et al. [65] presented a 2D polygon-based optimal scan planning technique for the Boston Dynamics Spot and built a connectivity graph from the planning results to inform the ICP-based registration process. Although these studies did not validate their methods with physical stop-and-go scanning systems, they demonstrated significant potential for solving the automatic registration problem inherent in stop-and-go scanning applications. Foryś et al. [66] introduced the FAMFR algorithm to precisely register two point clouds of cultural heritage interiors using two different handcrafted features based on the color and shape of objects, which enables accurate registration both of point clouds with extensive surface geometry detail and of geometrically featureless surfaces with rich color decoration.

Recent efforts have moved towards implementing automated registration for real-world stop-and-go scanning systems. Chow et al. [21] presented a continuous stop-and-go scanning system that combined IMU and RGB-D cameras for registration. However, the system was based on a simple wheeled handcart requiring manual operation. Borrmann et al. [67] constructed thermal 3D geometric data based on a mobile robot’s odometry readings, with the scanner’s position determined by a simultaneous localization and mapping (SLAM) algorithm. Zhong et al. [60] developed a stop-and-go scanning system for rural cadastral surveys using a vehicle-mounted MMS and GNSS-IMU sensors. Prieto et al. [23] addressed the registration problem by combining the mobile robot’s odometry with the well-established ICP method. The visibility analysis for their next best scan (NBS) algorithm is based on a 2D vertical image projection: the point cloud is projected vertically onto each 3D model of a wall component, and visibility is calculated from the number of pixel points and the overall size of the image. However, the primary focus of these studies was not on refining the registration technique. Consequently, geometric data accuracy and computational performance remained unevaluated.

1.3. Existing Problems and Research Objectives

Table 1 summarizes the literature review on the stop-and-go scanning system in terms of its registration method. The recent trend in automatic point cloud registration with the stop-and-go scanning system involves the use of prior information from additional sensors or the mobile platform itself. However, the major limitations of previous research include: (1) Many studies have not explored automatic registration for their systems, even though some researchers have proposed theoretical automatic registration methods for the stop-and-go scanning system. Some have addressed this, but recent studies conducted with state-of-the-art mobile platforms are limited; (2) The majority of the registration processes assume a high overlapping ratio between two point clouds. This presumption restricts the applicability of these methods in irregular scenarios with low overlap scan data; (3) While a few studies have tackled automatic registration with autonomous data acquisition using robots, they often overlook the evaluation of their registration processes. Consequently, the quality of their complete point cloud data remains unverified.

Table 1.

Summary of the literature review on the stop-and-go scanning system in terms of its registration method.

To address these challenges, this study introduces an automated point cloud registration approach optimized for a stop-and-go scanning system. This approach efficiently registers 3D scan data using mobile platform localization as prior knowledge. The objectives of the study are as follows:

- To present an automatic point cloud registration approach for the stop-and-go scanning system considering plane-based registration, which uses prior information from the localization of the mobile platform;

- To introduce a robust method capable of handling irregular scenarios with low overlap scan data;

- To evaluate the proposed method and compare it with conventional registration methods;

- To validate the proposed method with a stop-and-go scanning system that uses a state-of-the-art mobile platform, a quadruped walking robot, in real-world indoor environments.

The remainder of this paper is structured as follows: Section 2 proposes the automatic point cloud registration method. Section 3 presents the implementation of this method, together with the registration results for real-world experimental scans. Section 4 discusses the strengths and limitations of the approach. Finally, Section 5 presents the conclusions and future work.

2. Methodology

2.1. Overview

The general concept of registration for TLS scan data is to align multiple point clouds from local coordinate systems into a unified coordinate system. This involves determining 3D rotation and translation values for each local point cloud, taking into account their geometrical relation. In this study, the focus is on achieving registration by utilizing information from the mobile platform, which is well-suited for scenarios with low overlapping scans in indoor environments. To facilitate the registration process, two key assumptions are made:

- The approximate locations of TLS are known: It is assumed that the locations of the TLS scans are approximately known, and therefore, consideration for translations is not required during the coarse registration phase. This assumption is valid for most state-of-the-art mobile platforms, as they come equipped with their own localization function, enabling accurate location information for scan positions.

- Rotation around the z-axis is sufficient for registration: During registration, only the rotation around the z-axis needs to be determined to obtain the correct relative orientation of the scans. This assumption is reasonable because modern LiDAR scanners are typically equipped with a gyroscope sensor, and their output is aligned with the gravity vector, which points in the negative z direction [36].
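Under these two assumptions, the rigid transformation that maps a target scan into the reference frame reduces from six degrees of freedom to a single unknown angle. The formulation below is an illustrative sketch; the symbols ($\mathbf{p}$ for a target point, $\theta$ for the rotation angle about the z-axis, $\mathbf{t}$ for the translation supplied by the platform's localization) are introduced here and are not taken from the original text.

```latex
\mathbf{p}' = \mathbf{R}_z(\theta)\,\mathbf{p} + \mathbf{t},
\qquad
\mathbf{R}_z(\theta) =
\begin{bmatrix}
\cos\theta & -\sin\theta & 0\\
\sin\theta & \cos\theta & 0\\
0 & 0 & 1
\end{bmatrix}
```

With $\mathbf{t}$ taken from the robot's localization and the rotations about the x- and y-axes fixed by the scanner's gravity alignment, coarse registration only has to estimate $\theta$.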

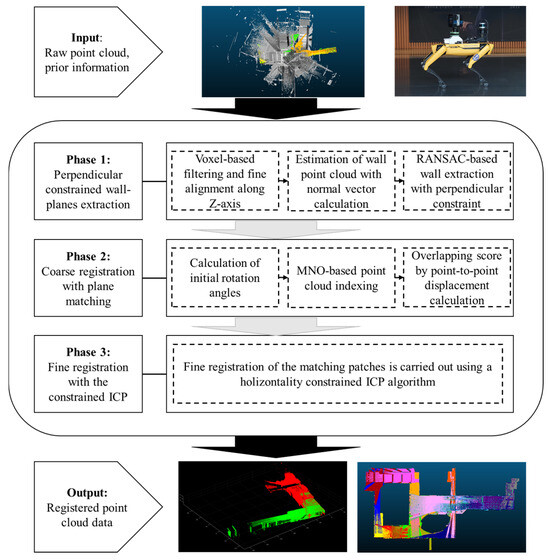

The proposed method comprises three main phases. (1) The first phase extracts perpendicular constrained wall planes from the input point cloud, acquired with the stop-and-go scanning system described in our previous study [16]. The point cloud is first downsampled with voxel filtering and then aligned with the z-axis. Next, points presumed to belong to walls are identified based on their normal vectors, and wall-plane models that satisfy the perpendicular constraint are extracted using M-SAC, a RANSAC-based algorithm. (2) The second phase matches the wall planes, taking into account the initial position of the scanning system. Candidate rotation angles are obtained by aligning the normal vectors of the wall planes, and an overlapping score is computed for each candidate using point-to-point displacement. A point cloud indexing technique based on the modifiable nested octree (MNO) is used to reduce the computational cost of the displacement calculation. This step completes the coarse registration and determines the matching patches required for fine registration. (3) The final phase performs precise registration of the identified matching patches using the proposed horizontality constrained ICP algorithm. All phases of the workflow are fully automated and require no manual intervention. Figure 1 illustrates the procedure of the proposed method.

Figure 1.

The workflow for the proposed point cloud registration approach.

2.2. Perpendicular Constrained Wall-Plane Extraction

Many traditional point cloud registration methods identify salient features and use local geometric descriptors to establish correspondences between point clouds. However, ensuring sufficient overlap and distinctive features can be challenging in indoor environments [44]. This approach therefore extracts wall planes as geometric features, taking advantage of the fact that man-made environments largely consist of planar structures such as walls. Walls represent the main structure of the scene and can provide valuable information for robust registration [68]. In addition, wall-plane extraction is constrained by the assumption that all walls are parallel or perpendicular to each other [6,7].

2.2.1. Voxel-Based Filtering and Alignment along the Z-Axis

The point cloud data acquired by TLS at a large indoor site have two characteristics. First, their density is irregular: points close to the sensor are densely distributed, whereas those farther away are sparse. Second, the data volume is substantial; in large scenes, the TLS data from a single station comprise several million points, demanding significant computational resources [48]. To address this issue, several studies have adopted voxel-based filtering for point cloud downsampling [28,42,48,69], because the subsampled point cloud has a more uniform point density and a lower point count, which improves registration efficiency [28,69]. Voxel filtering is used to preprocess the point cloud, reducing the influence of noise points on registration accuracy and improving efficiency. It is insensitive to density and can remove noise points and outliers while downsampling the point cloud. The voxel-filtered point cloud is therefore used in this study as the input for coarse registration. The voxel size was set to 0.05 m, based on the maximum construction tolerance in the Korean Construction Standard Specification (KCS) [70].
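A minimal sketch of voxel-based filtering, assuming the common centroid variant: every point is assigned to a cubic voxel of edge 0.05 m, and all points in an occupied voxel are replaced by their centroid. The `min_points` parameter is a hypothetical noise guard added for illustration (voxels with too few points are dropped); the text does not specify how noise is removed.

```python
import numpy as np

def voxel_downsample(points: np.ndarray, voxel: float = 0.05, min_points: int = 1) -> np.ndarray:
    """Replace the points in each occupied cubic voxel with their centroid.

    min_points is a hypothetical noise guard: voxels holding fewer points
    than this are discarded as isolated noise.
    """
    keys = np.floor(points / voxel).astype(np.int64)   # integer voxel index of each point
    _, inverse, counts = np.unique(keys, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.reshape(-1)                       # flat index across NumPy versions
    sums = np.zeros((counts.size, points.shape[1]))
    np.add.at(sums, inverse, points)                    # accumulate coordinates per voxel
    centroids = sums / counts[:, None]
    return centroids[counts >= min_points]

# Usage: two points share a voxel, one point is isolated
pts = np.array([[0.01, 0.01, 0.01], [0.02, 0.02, 0.02], [0.31, 0.31, 0.31]])
down = voxel_downsample(pts)                      # two centroids
down_denoised = voxel_downsample(pts, min_points=2)  # isolated point dropped
```

Averaging within a voxel (rather than keeping one arbitrary point) also smooths sensor noise slightly, which is one reason the centroid variant is widely used.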

Following voxel filtering, the normal vector of the floor and ceiling is finely aligned with the Z-axis. Although modern LiDAR scanners are aligned with the gravity vector, which points in the negative Z direction, slight misalignment may remain due to vibration of the robot system. The main XY plane of the input point cloud is extracted using the M-SAC algorithm [71], an extension of the RANSAC algorithm [72], with the plane normal restricted to within 5 degrees of the Z-axis. The rotation parameters that align this normal vector with the Z-axis are then estimated, and the input point cloud is transformed using these parameters.
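The alignment step can be sketched as follows, assuming the floor (or ceiling) inlier points have already been found. For brevity, the sketch fits the plane normal by least squares (SVD) instead of M-SAC, and builds the rotation that maps this normal onto +Z with Rodrigues' formula; the 5° limit mirrors the threshold in the text. The function names are illustrative.

```python
import numpy as np

def plane_normal(points: np.ndarray) -> np.ndarray:
    """Least-squares plane normal: the right singular vector with the smallest singular value."""
    _, _, vt = np.linalg.svd(points - points.mean(axis=0))
    n = vt[-1]
    return n if n[2] >= 0 else -n                  # orient the normal upward (+Z)

def rotation_to_z(n: np.ndarray, max_tilt_deg: float = 5.0) -> np.ndarray:
    """Rotation matrix mapping the unit normal n onto +Z (Rodrigues' formula)."""
    n = n / np.linalg.norm(n)
    tilt = np.degrees(np.arccos(np.clip(n[2], -1.0, 1.0)))
    if tilt > max_tilt_deg:
        raise ValueError(f"normal is {tilt:.1f} deg from Z; not accepted as floor/ceiling")
    z = np.array([0.0, 0.0, 1.0])
    v = np.cross(n, z)                             # rotation axis scaled by sin(angle)
    s, c = np.linalg.norm(v), float(n @ z)
    if s < 1e-12:
        return np.eye(3)                           # already aligned
    K = np.array([[0.0, -v[2], v[1]], [v[2], 0.0, -v[0]], [-v[1], v[0], 0.0]])
    return np.eye(3) + K + K @ K * ((1.0 - c) / s**2)

# Usage: a floor tilted by 2 degrees about the X-axis
rng = np.random.default_rng(0)
xy = rng.uniform(-5, 5, size=(500, 2))
a = np.radians(2.0)
floor = np.c_[xy[:, 0], xy[:, 1] * np.cos(a), xy[:, 1] * np.sin(a)]
R = rotation_to_z(plane_normal(floor))
leveled = floor @ R.T                              # rotate every point; z is now ~0
```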

2.2.2. Estimation of Wall Point Cloud with Normal Vector Calculation

For the robust fitting of the wall-plane model, this section outlines the initial estimation procedure applied to identify points that are estimated to be part of the wall component. Given that most walls within indoor scenes exhibit verticality relative to the ground floor, the points constituting the wall structure should have normal vectors that are perpendicular to the normal vector of the ground plane. Essentially, this implies that the z component of the unit normal vector should be close to 0.

The normal of an individual point can be estimated by fitting a local plane to its neighboring points [73]. For both stability and computational efficiency, this study uses four neighboring points to compute each normal vector. Let $1, 2, \ldots, s$ be the labels of the points, and let $p_i$ be a point, where $P = \{p_1, p_2, \ldots, p_s\}$ denotes the set of all points of the scan point cloud. Also, let $\mathbf{n}_i$ be the unit normal vector of $p_i$, where $N$ denotes the set of all unit normal vectors of $P$. Then $W$, the set of segmented wall points of $P$, is expressed mathematically in Equation (1).

The terms are defined as follows:

- $W$: the set of points segmented as wall.

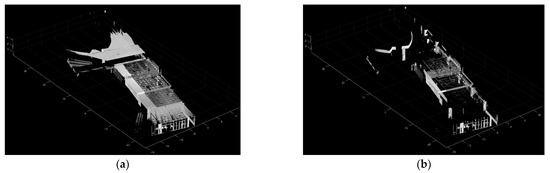

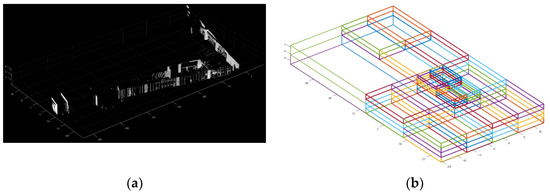

Figure 2 illustrates the process of estimating the wall point cloud. In Figure 2a, the input point cloud is visualized, comprising a variety of points. In Figure 2b, the estimated wall point cloud is presented, with wall structures highlighted. However, there are also some noise points present that do not belong to the walls.

Figure 2.

An example of the estimated wall point cloud. (a) Input point cloud; (b) The estimated wall point cloud. Points belonging to wall structures have been segmented, along with some noise points that are not part of the walls.
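The wall-point estimation described in this subsection can be sketched as below. Each normal is taken as the direction of least variance (PCA) of a point and its four nearest neighbors, and a point is kept as a wall candidate when the z component of its normal is close to 0. The tolerance `max_abs_nz = 0.1` is a hypothetical value, since the text does not state one, and the brute-force neighbor search stands in for the k-d tree that would be used on real scans.

```python
import numpy as np

def estimate_normals(points: np.ndarray, k: int = 4) -> np.ndarray:
    """Unit normal per point from PCA of the point and its k nearest neighbours.

    Brute-force O(n^2) neighbour search, for illustration only.
    """
    d2 = ((points[:, None, :] - points[None, :, :]) ** 2).sum(axis=2)
    nbrs = np.argsort(d2, axis=1)[:, : k + 1]       # includes the point itself
    normals = np.empty_like(points)
    for i, idx in enumerate(nbrs):
        local = points[idx] - points[idx].mean(axis=0)
        _, eigvec = np.linalg.eigh(local.T @ local)  # eigenvalues in ascending order
        normals[i] = eigvec[:, 0]                    # direction of least variance
    return normals

def wall_mask(normals: np.ndarray, max_abs_nz: float = 0.1) -> np.ndarray:
    """Wall candidates: normals nearly perpendicular to Z (|n_z| close to 0).
    max_abs_nz is a hypothetical tolerance."""
    return np.abs(normals[:, 2]) < max_abs_nz

# Usage: a vertical wall patch (x = 0) and a separate floor patch (z = 0)
g = np.linspace(0.0, 1.0, 11)
u, v = [a.ravel() for a in np.meshgrid(g, g)]
wall = np.c_[np.zeros_like(u), u, v]
floor = np.c_[u + 5.0, v + 5.0, np.zeros_like(u)]
pts = np.vstack([wall, floor])
mask = wall_mask(estimate_normals(pts))
```

On real scans, this test alone also accepts vertical surfaces that are not walls (furniture sides, columns), which is consistent with the noise points visible in Figure 2b and motivates the robust fitting that follows.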

2.2.3. RANSAC-Based Wall Extraction with Perpendicular Constraint

After the wall point cloud was estimated in the previous step, some noise points were also found to be included, as shown in Figure 2b. To model the wall plane robustly, the M-SAC algorithm [71], an extension of the RANSAC algorithm [72], is applied to the segmented point cloud. RANSAC scores a candidate model only by the number of inlier points within the specified threshold, without considering how close those inliers are to the model. M-SAC instead evaluates a cost summed over all points, in which each inlier contributes its (typically squared) distance to the model and each outlier contributes a fixed penalty equal to the threshold. In essence, it uses a quality measure that reflects how closely the inlier points fit the model, which allows a more accurate and reliable assessment of the fitting. In this study, the threshold distance was set to 0.05 m, following the construction tolerance standard of the Korean Construction Standard Specification [70].
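The difference between the two scoring rules can be shown with a small sketch, assuming the common truncated-quadratic form of the M-SAC cost. Two candidate planes have the same number of inliers, so RANSAC cannot tell them apart, while the M-SAC cost prefers the plane whose inliers lie closer to it. The residual values are made up for illustration.

```python
import numpy as np

def ransac_score(residuals: np.ndarray, t: float) -> int:
    """RANSAC: number of inliers within threshold t (higher is better)."""
    return int((np.abs(residuals) < t).sum())

def msac_cost(residuals: np.ndarray, t: float) -> float:
    """M-SAC: truncated quadratic cost (lower is better).
    Inliers contribute their squared distance, outliers a constant t**2."""
    return float(np.minimum(residuals ** 2, t ** 2).sum())

# Two candidate planes with the same inlier count but different fit quality
t = 0.05                                     # 0.05 m threshold, as in the text
res_a = np.array([0.01] * 10 + [1.0] * 2)    # inliers hug the plane
res_b = np.array([0.04] * 10 + [1.0] * 2)    # inliers barely inside the threshold
tie = ransac_score(res_a, t) == ransac_score(res_b, t)   # RANSAC cannot separate them
prefers_a = msac_cost(res_a, t) < msac_cost(res_b, t)    # M-SAC picks plane A
```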

Most indoor building environments satisfy the Manhattan world assumption, characterized by geometric properties such as the orthogonality or parallelism of wall structures. These perpendicular constraints were therefore imposed during wall-plane extraction to obtain robust matching features. The initial wall plane was first extracted from the segmented point cloud using the M-SAC algorithm, and its normal vector was designated as the reference vector. Next, a direction vector perpendicular to the reference vector in the horizontal plane (that is, the reference vector rotated by 90° about the z-axis) was computed, and the perpendicular wall planes were extracted using this direction vector. This process was repeated until the number of points in the extracted plane fell below 1000. To keep the walls clearly separated, a minimum distance of 1 m was enforced between extracted planes. The wall-plane extraction process using the M-SAC algorithm is summarized in Algorithm 1.

Algorithm 1: The wall-plane extraction with the M-SAC algorithm

# Definition 1: the input is the set of segmented wall points.

# Definition 2: ‘plane_refer’ is defined as a set of wall planes with the reference vector.

# Definition 3: ‘plane_ortho’ is defined as a set of the perpendicular wall planes.

Input: the set of segmented wall points

Output: plane_refer, plane_ortho
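The extraction loop described above can be sketched as follows. This is an illustrative reconstruction under stated assumptions, not the authors' Algorithm 1: the reference normal is found by a 2-point M-SAC search for a vertical plane in the XY projection, the perpendicular normal is that vector rotated 90° about Z, and for each of the two fixed directions only the plane offset is re-estimated with M-SAC. Extraction stops when a plane has fewer than 1000 inliers, and planes closer than 1 m to an already accepted plane of the same direction are discarded. All function names are hypothetical.

```python
import numpy as np

def _msac_offset(points, normal, thresh, rng, iters=200):
    """M-SAC estimate of d for the plane n.p = d with a fixed unit normal n."""
    proj = points @ normal
    best_d, best_cost = proj[0], np.inf
    for _ in range(iters):
        d = proj[rng.integers(len(proj))]                       # one sample fixes d
        cost = np.minimum((proj - d) ** 2, thresh ** 2).sum()   # truncated quadratic
        if cost < best_cost:
            best_d, best_cost = d, cost
    inliers = np.abs(proj - best_d) < thresh
    return float(proj[inliers].mean()), inliers                # refine d on the inliers

def _msac_vertical_normal(points, thresh, rng, iters=500):
    """Horizontal unit normal of the best-supported vertical plane (2-point M-SAC in XY)."""
    best_n, best_cost = None, np.inf
    for _ in range(iters):
        i, j = rng.choice(len(points), size=2, replace=False)
        t = points[j, :2] - points[i, :2]
        length = np.linalg.norm(t)
        if length < 1e-6:
            continue
        n = np.array([-t[1], t[0], 0.0]) / length              # perpendicular to the sample in XY
        cost = np.minimum((points @ n - points[i] @ n) ** 2, thresh ** 2).sum()
        if cost < best_cost:
            best_n, best_cost = n, cost
    return best_n

def extract_wall_planes(wall_pts, thresh=0.05, min_pts=1000, min_sep=1.0, seed=0):
    """Offsets d of walls n_refer.p = d and n_ortho.p = d under a perpendicular constraint."""
    rng = np.random.default_rng(seed)
    n_refer = _msac_vertical_normal(wall_pts, thresh, rng)
    n_ortho = np.cross([0.0, 0.0, 1.0], n_refer)               # n_refer rotated 90 deg about Z
    planes = {"refer": [], "ortho": []}
    remaining = wall_pts
    for key, n in (("refer", n_refer), ("ortho", n_ortho)):
        while len(remaining) >= min_pts:
            d, inliers = _msac_offset(remaining, n, thresh, rng)
            if inliers.sum() < min_pts:                         # stop below the size limit
                break
            if all(abs(d - e) >= min_sep for e in planes[key]):  # keep walls >= min_sep apart
                planes[key].append(d)
            remaining = remaining[~inliers]                     # remove this plane's points
    return n_refer, n_ortho, planes

# Usage: a synthetic 5 m x 4 m room, walls sampled with 1 cm noise
rng = np.random.default_rng(1)
def wall(axis, value, length, n=3000):
    t, z = rng.uniform(0, length, n), rng.uniform(0, 3, n)
    w = value + rng.normal(0, 0.01, n)
    return np.c_[w, t, z] if axis == "x" else np.c_[t, w, z]
room = np.vstack([wall("x", 0, 4), wall("x", 5, 4), wall("y", 0, 5), wall("y", 4, 5)])
n_refer, n_ortho, planes = extract_wall_planes(room)
```

Fixing the normal of every subsequent plane reduces each M-SAC search to a single parameter (the offset), which is both faster and more robust than fitting unconstrained planes to noisy wall candidates.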

Figure 3 depicts the M-SAC-based wall extraction process. Figure 3a shows the input point cloud segmented in the preceding section, and Figure 3b presents the extracted wall point cloud, from which non-wall points have been removed.

Figure 3.

An example of the M-SAC-based wall extraction. (a) Input point cloud; (b) The extracted wall point cloud, with non-wall points removed.

2.3. Coarse Registration with Plane Matching

After extracting the wall planes from both the reference and target point clouds, the subsequent task involves matching the target planes with the reference planes. Potential rotation angles for coarse registration are determined by assessing the alignment of normal vectors of each wall plane. For each potential rotation angle, overlapping scores are computed through point-to-point displacement. The coarse registration is then confirmed using the rotation angle that yields the highest score, and matching patches are identified for the subsequent fine registration. In this process, an MNO-based point cloud indexing approach is employed to minimize computational demands.

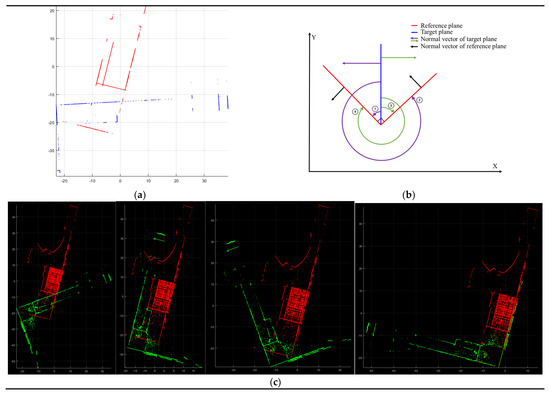

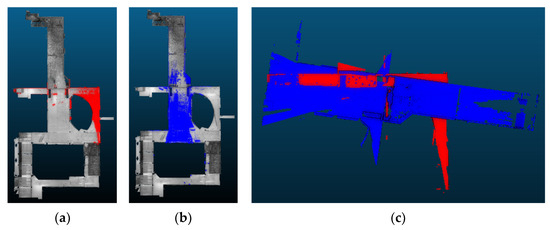

2.3.1. Calculation of Rotation Angles for the Coarse Registration

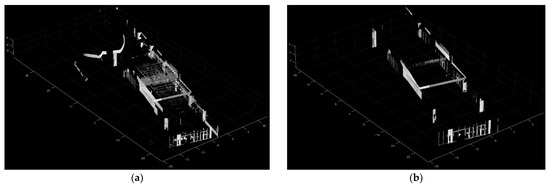

Based on the assumption that all walls are either parallel or perpendicular to each other, a set of four rotation candidates around the Z-axis is proposed for the target wall planes relative to the reference wall planes. Figure 4a shows a top view of the reference wall planes in red and the target wall planes in blue. Because all target wall planes are mutually parallel or perpendicular, the angular transformation is obtained simply by aligning the normal vector of one target wall plane with the normal vectors of the two reference wall planes. Since the normal vector of a target wall plane can point in either of two opposite directions, each alignment yields two cases, giving four distinct angular relationships, as depicted in Figure 4b. As a result, four temporarily transformed target point clouds (green) are generated from the set of rotation candidates (Figure 4c).

Figure 4.

A set of rotation candidates for the target plane matching in top view. (a) The reference wall planes in red and the target wall planes in blue; (b) A set of rotation candidates: four rotation angles around the Z-axis; (c) Four temporarily transformed target point clouds (green) generated from the set of rotation candidates.
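The candidate generation can be sketched as follows, assuming the wall normals are horizontal unit vectors: each candidate is the heading difference between a reference normal and one of the two opposite orientations of the target normal, wrapped to [-π, π). The function names are illustrative.

```python
import numpy as np

def yaw(v) -> float:
    """Heading angle of a horizontal vector in the XY plane."""
    return float(np.arctan2(v[1], v[0]))

def rotation_candidates(n_target, n_ref_a, n_ref_b):
    """Four Z-rotation candidates aligning +/- n_target with each reference wall normal."""
    candidates = []
    for n_ref in (n_ref_a, n_ref_b):
        for sign in (1.0, -1.0):                  # a plane normal is only defined up to sign
            theta = yaw(n_ref) - yaw(sign * np.asarray(n_target))
            candidates.append((theta + np.pi) % (2 * np.pi) - np.pi)  # wrap to [-pi, pi)
    return candidates

# Usage: target wall normal at 30 deg, reference walls along X and Y
n_t = np.array([np.cos(np.radians(30)), np.sin(np.radians(30)), 0.0])
cands = rotation_candidates(n_t, np.array([1.0, 0.0, 0.0]), np.array([0.0, 1.0, 0.0]))
# candidates in degrees: -30, 150, 60, -120
```

Because the reference walls are perpendicular, the four candidates are always 90° apart; only one of them is correct, and the overlapping score described next is used to pick it.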

2.3.2. MNO-Based Point Cloud Indexing

To select the rotation angle for coarse registration from the candidates, a point-to-point displacement-based overlapping score is employed, as detailed in Section 2.3.3. However, calculating the displacement between two point clouds demands substantial computational resources, because every point in both point clouds must be processed individually. To tackle this challenge, a data structure based on the modifiable nested octree (MNO) indexing method was adopted.

Several studies have shown that the data structure of a point cloud affects computational efficiency [10,15]. MNO, a data structure for point clouds, has been recognized as an effective indexing method for handling and visualizing large point clouds [74,75]. Leveraging these attributes, this study uses an MNO structure built on the point cloud for the point-to-point displacement computation. MNO indexing is first applied to the segmented wall points of the reference point cloud (Figure 2b). Figure 5 shows an example of MNO indexing [10].

Figure 5.

MNO indexing result for the segmented wall points of the reference point cloud. Points indexed within the same MNO node are depicted in the same color.
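The node-wise indexing idea can be illustrated with a deliberately simplified structure. A genuine MNO is a nested octree in which each node keeps a subsample of points and pushes the rest to its children; the hypothetical `LeafGridIndex` below only keeps the leaf cells of a fixed-depth octree over the bounding cube of the reference points. It is enough to show how target points are assigned to existing nodes, and how points outside the structure (the black points in Figure 6c) are recognized.

```python
import numpy as np

class LeafGridIndex:
    """Simplified stand-in for an MNO: the leaf cells of a fixed-depth octree over the
    bounding cube of the reference points. Only cells holding reference points exist."""

    def __init__(self, ref_points: np.ndarray, depth: int = 4):
        self.lo = ref_points.min(axis=0)
        edge = float((ref_points.max(axis=0) - self.lo).max())   # cube edge length
        self.res = 2 ** depth                                     # cells per axis
        self.cell = edge / self.res if edge > 0 else 1.0
        self.nodes = {}                                           # cell key -> reference point ids
        for i, key in enumerate(self._keys(ref_points)):
            self.nodes.setdefault(key, []).append(i)

    def _keys(self, pts: np.ndarray):
        raw = (pts - self.lo) / self.cell
        inside = np.all((raw >= 0) & (raw <= self.res), axis=1)  # within the root cube
        ijk = np.minimum(np.floor(raw), self.res - 1).astype(np.int64)  # max face -> last cell
        return [tuple(k) if ok else None for k, ok in zip(ijk, inside)]

    def locate(self, pts: np.ndarray):
        """Node key of each point, or None for points outside the structure."""
        return [k if k in self.nodes else None for k in self._keys(pts)]

# Usage: index a 1 m reference grid, then locate one inside and one outside point
g = np.linspace(0.0, 1.0, 11)
ref = np.stack(np.meshgrid(g, g, g), axis=-1).reshape(-1, 3)
index = LeafGridIndex(ref, depth=2)                # 4 x 4 x 4 = 64 leaf cells
located = index.locate(np.array([[0.5, 0.5, 0.5], [5.0, 5.0, 5.0]]))
```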

2.3.3. Overlapping Score with Point-to-Point Displacement

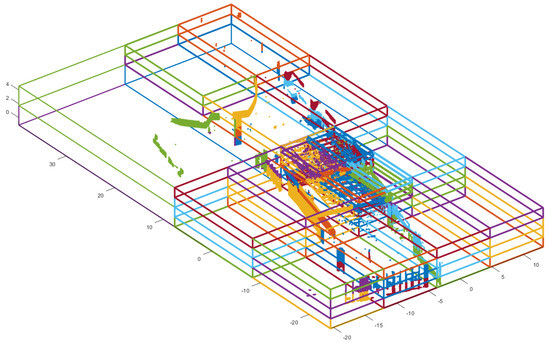

In this step, a data structure-based point-to-point displacement calculation is employed to compute overlapping scores. This computation concludes the coarse registration process and identifies the matching patches needed for the subsequent fine registration. The target point cloud is indexed within the MNO of the reference point cloud built in the previous section. This indexing allows displacements to be computed only between target and reference points within the same MNO node, which significantly reduces computation time [10,76]. Figure 6 shows the indexing process of the target point cloud with the constructed MNO structure. The target point cloud (Figure 6a) is placed on the MNO structure (Figure 6b), and the points located in each MNO node are assigned to that node. Figure 6c presents the indexed target point cloud. Points within the same MNO node are depicted in the same color, whereas points outside the MNO structure are depicted in black.

Figure 6.

An example of the indexing process with the constructed MNO structure. (a) Input target point cloud; (b) The constructed MNO structure; (c) The indexed target point cloud. The points within the same MNO node are depicted in the same color, while the points outside the MNO structure are depicted in black.

Following the indexing process, the target and reference points within each MNO node are retrieved, and their displacements are calculated. In this research, the displacement of a point is defined as the distance between that point in one point cloud and its closest point in the other point cloud. Once the displacements of all points within each MNO node are computed, points whose displacements exceed the user-defined threshold are eliminated (lines 15 and 18 in Algorithm 2). The overlapping score is then computed as the ratio of the remaining points (considered overlapping points) to the initial number of points. Lines 21–23 in Algorithm 2 briefly describe this process.
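The per-node displacement filtering and the overlapping score can be sketched as below. This is an illustrative Python version, not Algorithm 2 itself: it assumes the cell ids come from an indexing step such as a single-level octree, searches nearest neighbours by brute force only inside each cell, and normalizes the score by the number of target points, which is one possible reading of "the initial number of points". The function name is hypothetical.

```python
import numpy as np
from collections import defaultdict

def overlap_score(ref_pts, ref_ids, tgt_pts, tgt_ids, threshold):
    """Fraction of target points whose nearest reference point in the same cell
    lies within `threshold`. Target points outside the structure (id -1) or in
    cells without reference points are counted as non-overlapping."""
    ref_by_cell = defaultdict(list)
    for i, c in enumerate(ref_ids):
        ref_by_cell[c].append(i)
    kept = 0
    for c in np.unique(tgt_ids):
        if c < 0 or c not in ref_by_cell:
            continue
        t = tgt_pts[tgt_ids == c]
        r = ref_pts[ref_by_cell[c]]
        # point-to-point displacement: distance to the closest reference point in this cell
        d = np.linalg.norm(t[:, None, :] - r[None, :, :], axis=2).min(axis=1)
        kept += int((d <= threshold).sum())  # points above the threshold are discarded
    return kept / len(tgt_pts)
```

Restricting the search to one cell is what makes the cost roughly proportional to the number of points per node rather than to the product of both cloud sizes; the trade-off is that a point near a cell boundary may miss a closer neighbour in an adjacent cell.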

| Algorithm 2: Calculation of the overlapping score in the MNO structure |
|---|
| # Definition 4: of the reference point cloud. |
| # Definition 5: of the target point cloud. |
| # Definition 6: ‘threshold’ is defined as a user-defined threshold. |
| # Definition 7: ‘overlap_score’ is defined as the overlapping score. |
| # Definition 8: ‘displacement2point’ is a function for calculating the displacement. |
| Input: reference_, target_, threshold |
| Output: overlap_score |

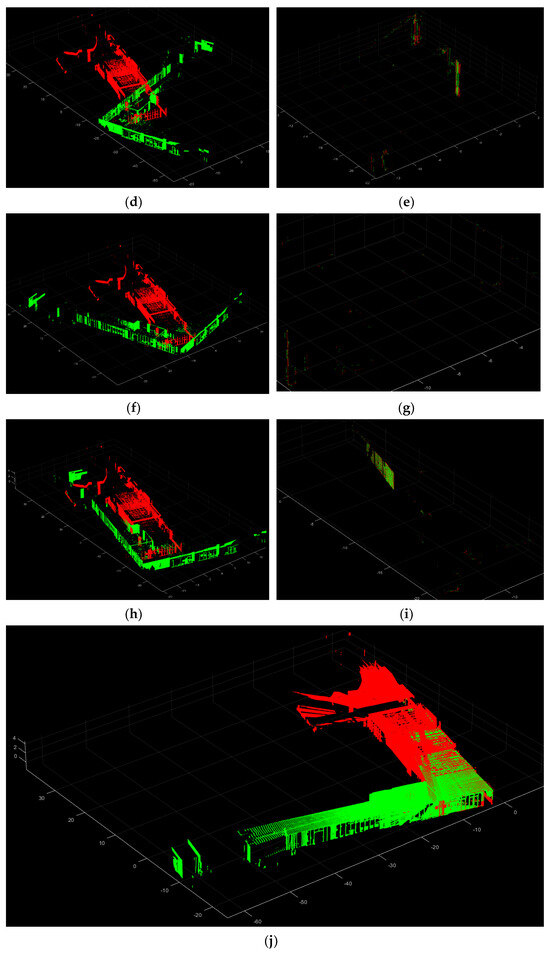

After the overlapping score is calculated for each candidate rotation angle, the angle with the highest score is chosen as the final transformation for the coarse registration. Figure 7 illustrates an example of this final step. Figure 7a shows the reference point cloud (red) and the target point cloud (blue). Figure 7b,d,f,h shows the results (green) after applying each candidate rotation angle. Correspondingly, Figure 7c,e,g,i shows the overlapping points, within a displacement threshold of 0.5 m, between the reference point cloud (red) and the transformed target point clouds (green) from Figure 7b,d,f,h. The pair shown in Figure 7b,c has the most overlapping points of all pairs and therefore the highest overlapping score. As a result, the scenario shown in Figure 7b is selected as the coarse registration outcome, and the overlapping points (Figure 7c) are identified as the matching patches for the subsequent fine registration. Figure 7j visualizes the coarse registration outcome in this example.
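The selection step can be sketched as follows. The sketch assumes Manhattan-world walls, so that aligning one target wall normal with one reference wall normal leaves four candidates spaced 90° apart (consistent with the four candidates in Figure 7b,d,f,h). It also assumes the target cloud is given in its scanner frame and is placed at the scan position obtained from the robot localization. All names are hypothetical, and the overlap is computed by brute force for brevity.

```python
import numpy as np

def rot_z(theta):
    """Rotation matrix about the z-axis."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def candidate_angles(ref_normal_xy, tgt_normal_xy):
    """Yaw angles aligning a target wall normal with a reference wall normal,
    assuming perpendicular walls: base angle + k * 90 degrees, k = 0..3."""
    base = (np.arctan2(ref_normal_xy[1], ref_normal_xy[0])
            - np.arctan2(tgt_normal_xy[1], tgt_normal_xy[0]))
    return [base + k * np.pi / 2 for k in range(4)]

def overlap(ref, tgt, threshold):
    """Share of target points with a reference point closer than `threshold`."""
    d = np.linalg.norm(tgt[:, None, :] - ref[None, :, :], axis=2).min(axis=1)
    return float((d <= threshold).mean())

def coarse_register(ref, tgt_local, scan_pos, ref_n, tgt_n, threshold=0.5):
    """Return (angle, score, transformed target) for the best-scoring candidate."""
    best = None
    for theta in candidate_angles(ref_n, tgt_n):
        moved = tgt_local @ rot_z(theta).T + scan_pos  # rotate about the scanner, then place it
        score = overlap(ref, moved, threshold)
        if best is None or score > best[1]:
            best = (theta, score, moved)
    return best
```

The points of the winning transformed target that pass the threshold would then play the role of the matching patches.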

Figure 7.

An example of the coarse registration result with the overlapping score. An example of the coarse registration process with a displacement threshold of 0.5 m. (a) The reference point cloud (red) and the target point cloud (blue); (b,d,f,h) The results (green) after applying each potential rotation angle; (c,e,g,i) The overlapping points between the reference point cloud (red) and the transformed target point cloud (green) pairs; (j) The coarse registration outcome. In this case, the overlapping points (c) are identified as the matching patches for the subsequent fine registration.

2.4. Fine Registration with Horizontality Constrained ICP

For the fine registration, the point-to-plane ICP algorithm [77,78] is applied to the coarsely registered point cloud to estimate the registration parameters precisely. The fundamental concept is to minimize the point-to-plane error, that is, the sum of the squared distances between each point and the tangent plane at its corresponding point, and thereby determine the rigid transformation parameters between the point sets [64,79]. This can be viewed as an optimization problem that minimizes the cumulative Euclidean distances between the points and their corresponding planes. The objective of each ICP iteration is to find the optimized rigid transformation matrix, which is mathematically expressed in Equation (2) [77,78].

The terms are defined as follows:

However, this study operates under the assumption that only the rotation around the z-axis is considered. Under this assumption, a 3D rigid-body transformation is composed of a rotation matrix and a translation matrix ,

where

And

The rotation angles about the x-axis and y-axis are fixed at 0 radians, so only the rotation angle about the z-axis remains to be estimated.

Equation (2) represents a least-squares optimization problem whose solution requires determining four parameters: the three translation components and the rotation angle about the z-axis.
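For reference, the z-rotation-only transformation and the point-to-plane objective can be written out explicitly. This is a sketch in our own notation (γ for the rotation about the z-axis; t_x, t_y, t_z for the translation; s_i for a target point; d_i and n_i for its corresponding reference point and unit normal), which may not match the symbols of the original equations:

```latex
\mathbf{R}_z(\gamma) =
\begin{bmatrix}
\cos\gamma & -\sin\gamma & 0 \\
\sin\gamma & \cos\gamma & 0 \\
0 & 0 & 1
\end{bmatrix},
\qquad
\mathbf{t} =
\begin{bmatrix} t_x \\ t_y \\ t_z \end{bmatrix},
\qquad
E(t_x, t_y, t_z, \gamma) = \sum_{i=1}^{N}
\left[ \left( \mathbf{R}_z(\gamma)\,\mathbf{s}_i + \mathbf{t} - \mathbf{d}_i \right) \cdot \mathbf{n}_i \right]^2
```

Because E depends nonlinearly on γ only through cos γ and sin γ, linearizing about the current estimate yields a four-parameter least-squares problem at each iteration.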

Equation (2) can therefore be reformulated as a nonlinear equation in these four parameters.

Given N pairs of point correspondences, all nonlinear equations can be linearized using a Taylor series approximation, which can be expressed as follows [80]:

where

With

In this study, the Levenberg-Marquardt optimization method is employed. Consequently, the vector of least-squares corrections in the system of equations is given by:

The term is defined as follows:

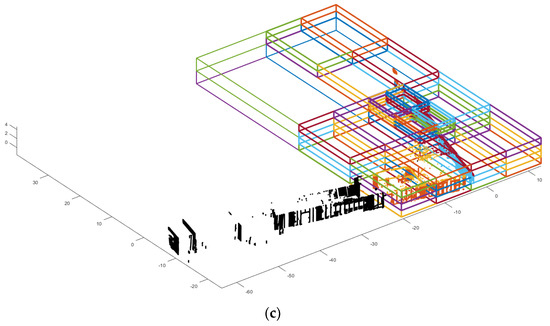

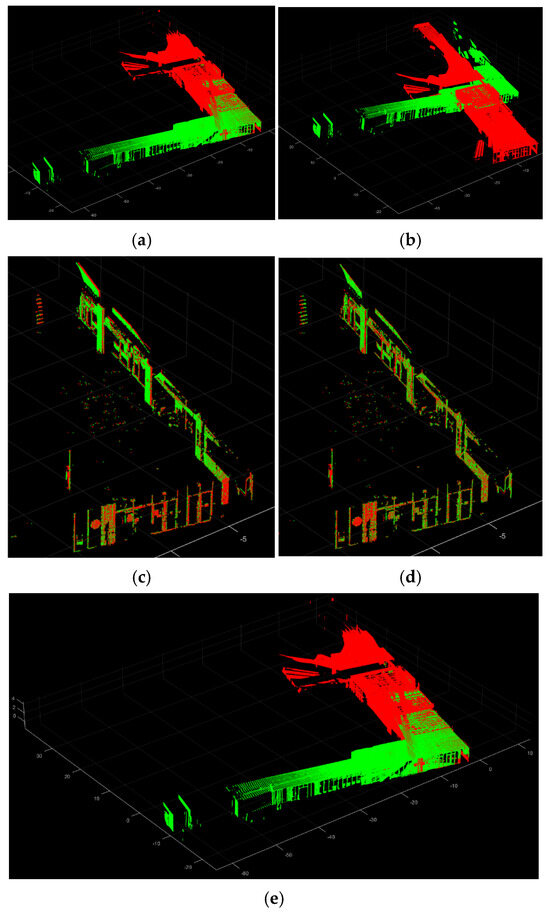
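A minimal sketch of the four-parameter point-to-plane solve with Levenberg–Marquardt damping, assuming fixed correspondences (source points `src`, corresponding reference points `dst`, and unit normals `nrm` at `dst`), that is, the inner optimization of a single ICP iteration. The damping term λI and the simple λ update rule are our assumptions; the paper does not state its damping schedule. All names are hypothetical.

```python
import numpy as np

def rot_z(g):
    c, s = np.cos(g), np.sin(g)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def residuals(p, src, dst, nrm):
    """Signed point-to-plane distances for parameters p = (tx, ty, tz, gamma)."""
    moved = src @ rot_z(p[3]).T + p[:3]
    return np.einsum("ij,ij->i", moved - dst, nrm)

def jacobian(p, src, nrm):
    """Rows [n_x, n_y, n_z, (dR/dgamma s_i) . n_i] of the linearized residuals."""
    c, s = np.cos(p[3]), np.sin(p[3])
    d_rot = np.stack([-s * src[:, 0] - c * src[:, 1],
                      c * src[:, 0] - s * src[:, 1],
                      np.zeros(len(src))], axis=1)
    return np.column_stack([nrm, np.einsum("ij,ij->i", d_rot, nrm)])

def lm_point_to_plane(src, dst, nrm, p0=None, iters=50, lam=1e-3):
    p = np.zeros(4) if p0 is None else np.asarray(p0, float)
    r = residuals(p, src, dst, nrm)
    cost = r @ r
    for _ in range(iters):
        J = jacobian(p, src, nrm)
        step = np.linalg.solve(J.T @ J + lam * np.eye(4), -J.T @ r)  # damped normal equations
        r_new = residuals(p + step, src, dst, nrm)
        if r_new @ r_new < cost:   # accept: behave more like Gauss-Newton
            p, r, cost, lam = p + step, r_new, r_new @ r_new, lam * 0.5
        else:                      # reject: behave more like gradient descent
            lam *= 10.0
        if np.linalg.norm(step) < 1e-10:
            break
    return p
```

In a full ICP loop, the correspondences would be re-estimated after each solve until the parameters converge.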

However, if the overlap between two point clouds is insufficient, the ICP algorithm cannot effectively achieve its objective of minimizing the cumulative Euclidean distances between points and their corresponding planes. This is because the algorithm optimizes the error over all points, and inadequate overlap can lead to inaccurate registration results. To address this, the matching patches from the previous phase are used. Figure 8a,b shows an example of horizontality constrained ICP registration of a point cloud pair with low overlap, in which the registration fails. However, when the algorithm is applied to the matching patches, as depicted in Figure 8c,d, precise registration results are obtained. The final registration parameters are then applied to the target point cloud, as shown in Figure 8e, demonstrating the successful outcome of the fine registration. The registration process was repeated for all scan point clouds in the order in which the stop-and-go scanning system acquired them.

Figure 8.

An example of the fine registration. (a) Raw point cloud; (b) Failed registration result; (c) The input matching patches; (d) The fine registration of the matching patches; (e) The fine registration result.

3. Experimental Results

To verify the feasibility of the proposed approach, experiments were conducted in three steps: (1) data acquisition using a stop-and-go scanning system [16] in a real indoor environment; (2) registration with the proposed approach and validation of its performance; (3) performance comparison with conventional approaches and on several benchmark datasets. The registration experiments were performed using MATLAB 2023b on a system with an AMD Ryzen 7 3700X CPU @ 3.59 GHz and 16 GB of RAM.

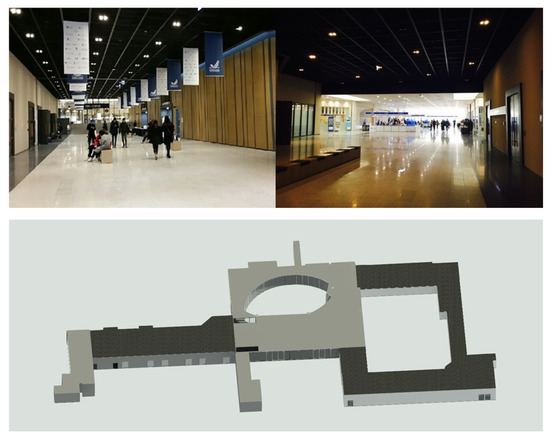

3.1. Test Site

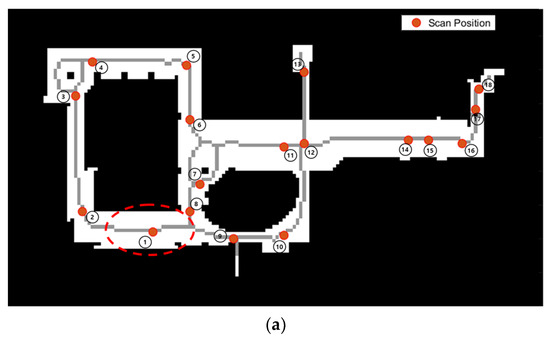

Baekyangnuri, situated at Yonsei University in the Republic of Korea, encompasses diverse research and educational support facilities, parking zones, and cultural spaces. A common corridor was chosen as the test site because its extensive indoor area requires multiple scan positions. This corridor typifies a structure with elongated passages and incorporates elements such as windows, doors, and stairs. Notably, the corridor layout also includes circular segments, and the floor height varies across sections (Figure 9).

Figure 9.

The scenery and BIM of the test site.

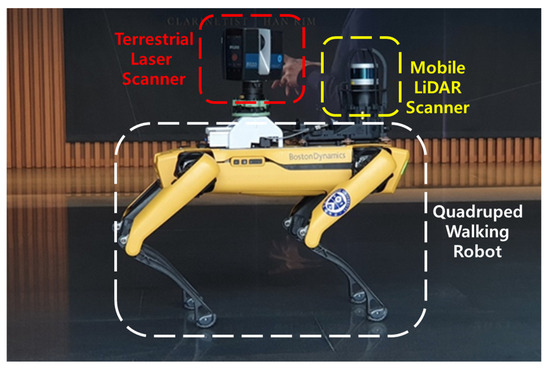

3.2. Data Collection with a Stop-and-Go Scanning System

In this study, data acquisition was carried out using the stop-and-go scanning system developed in our previous study [16]. The quadruped walking robot Boston Dynamics Spot [81] served as the platform of the stop-and-go scanning system. As depicted in Figure 10, it was equipped with a mobile laser scanner (MLS), a Velodyne VLP [82], to enhance robot localization, and a terrestrial laser scanner (TLS), a FARO Focus 3D laser scanner [83], which can compensate for leveling misalignments of up to 2 degrees for precise 3D mapping. The FARO scanner was connected wirelessly to the robot system via a fixed IP address. Boston Dynamics Spot is a quadrupedal robot designed for mobility across challenging terrain and rugged surfaces, including steps and stairs [16,84]. Notably, it features a robust obstacle avoidance system that handles both fixed and dynamic obstacles. This system gives the robot a broad navigational space with flexible safety constraints such as safe distances and floor slopes. The parameter values of the stop-and-go scanning system used in this study are summarized in Table 2. The number and locations of the scan positions were determined based on our previous research on scan planning [16]: the optimal scan positions were calculated through a 3D visibility analysis involving ray tracing of the BIM geometry, and the number of scan positions was selected using the optimization algorithm proposed there.

Figure 10.

The stop-and-go scanning system developed in [16]. The quadruped walking robot with MLS for localization of the robot and TLS for the stop-and-go scanning process.

Table 2.

The parameters of the scan system.

3.3. Registration Results

Before the registration process begins, the coordinate systems of the scanning system and the reference point cloud must be unified, with the reference point cloud serving as the starting point of the registration. To this end, a basic survey was conducted using a total station to acquire control points (CPs) at the real-world test site. Figure 11a illustrates the distribution of the CPs across the test site relative to the reference point cloud, which is numbered 1. A 3D coordinate transformation requires at least three CPs; in this study, seven CPs were surveyed and used in a least-squares adjustment to improve the transformation. The CPs were placed at locations that the total station can identify easily, such as the corners of columns, doors, and walls. Once the coordinate systems were unified using these CPs, the prior location information from the scanning system and the reference point cloud became available for the proposed registration approach.
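The least-squares transformation from the surveyed CPs can be sketched with the SVD-based (Kabsch) solution for a rigid transformation. Whether the original adjustment also estimated a scale factor (a seven-parameter Helmert transformation) is not stated, so this sketch assumes a rigid six-parameter model; `rigid_transform_lsq` is a hypothetical helper.

```python
import numpy as np

def rigid_transform_lsq(src, dst):
    """Least-squares rotation R and translation t such that dst ~= src @ R.T + t.
    Requires at least three non-collinear point pairs (e.g. the seven CPs)."""
    src, dst = np.asarray(src, float), np.asarray(dst, float)
    cs, cd = src.mean(axis=0), dst.mean(axis=0)
    H = (src - cs).T @ (dst - cd)           # cross-covariance of the centred point sets
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))  # -1 would indicate a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = cd - R @ cs
    return R, t
```

Using all seven CPs instead of the minimum three averages out individual survey errors, which is the purpose of the least-squares adjustment described above.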

Figure 11.

Control points for the test site. (a) Distribution map of scanning positions and CPs. The number of each scan position indicates the registration order. (b) View of CPs within blue dotted circles, captured by the total station; (c) View of CPs within blue dotted circles, extracted from the reference point cloud data.

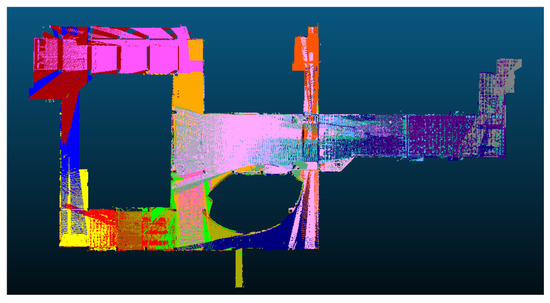

Figure 12 depicts the registration result of the proposed approach, with the point cloud from each scan station shown in a different color. The result confirms that the proposed method handled this expansive scene without any registration failures.

Figure 12.

The visualization of the registered point cloud. The point clouds are colored by scan station.

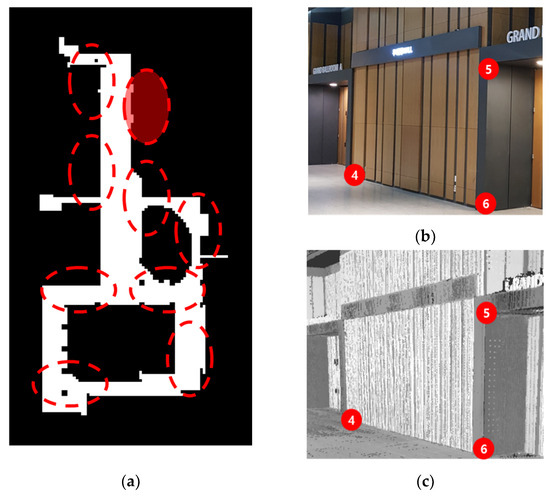

For a quantitative accuracy analysis, a basic survey was conducted using a total station to validate the quality and reliability of the registered point cloud data. The accuracy assessment followed the method proposed in [6,7], in which well-distributed, easily identifiable points acquired with a highly accurate total station serve as reference targets for comparison with the corresponding points in the registered point cloud data. Figure 13a illustrates the distribution of target points (TPs) across the test site; red dotted circles indicate the locations of the assessment targets. Figure 13b,c provides a real-world view and the point cloud data of the shaded circle. Identifiable target points, such as column corners, doors, and walls, were acquired from both the total station and the registered point cloud data, with at least three TPs for each scan station. The positional accuracy was evaluated using the root mean square error (RMSE) as defined in Equation (13).

The terms are defined as follows:

Figure 13.

Assessment of target points. (a) Distribution map of target points. Target points are indicated by red dotted circles; (b) View of target points within a red dotted circle, captured by the total station; (c) View of target points within a red dotted circle, extracted from the registered point cloud data.

A total of 35 target points were used for validation; the numbers in red circles in Figure 13b,c indicate the target numbers. The resulting RMSE was 0.044 m, which is below the 0.05 m spatial sampling resolution used in this study.
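Equation (13) is not reproduced here. Assuming it is the usual 3D positional RMSE over the n target points, RMSE = sqrt((1/n) * sum_i ||p_TS,i - p_PC,i||^2), where p_TS,i is a total-station target and p_PC,i the same target picked from the registered cloud, it can be computed as below (the function name is hypothetical):

```python
import numpy as np

def positional_rmse(ts_points, pc_points):
    """3D positional RMSE between total-station targets and the corresponding
    targets in the registered point cloud (rows must correspond)."""
    diff = np.asarray(ts_points, float) - np.asarray(pc_points, float)
    return float(np.sqrt(np.mean(np.sum(diff ** 2, axis=1))))
```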

Table 3 presents details of the collected scan data, the computation time, and the successful registration rate (SRR). The SRR is an evaluation metric used in various registration studies [28,41]. A predefined threshold σp (0.1 m in this study) is applied to the position error of the surveying points in each scan point cloud, and successful registration (SR) for each scan is then determined by Equation (14).

Table 3.

The description of the registration performance.

The SRR is then defined by Equation (15).

where the SRR is the number of SRs divided by N, the total number of scans.
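Equations (14) and (15) are not reproduced here. Assuming a scan counts as a successful registration when the largest position error of its surveying points does not exceed σp, SR and SRR can be computed as follows (hypothetical helper names):

```python
import numpy as np

def successful_registration(errors_per_scan, sigma_p=0.1):
    """SR flag per scan: 1 if every surveying-point error (m) in that scan is
    within sigma_p (assumed form of Equation (14)), otherwise 0."""
    return np.array([int(np.max(e) <= sigma_p) for e in errors_per_scan])

def successful_registration_rate(errors_per_scan, sigma_p=0.1):
    """SRR in percent: number of successfully registered scans over all scans."""
    sr = successful_registration(errors_per_scan, sigma_p)
    return 100.0 * sr.sum() / len(sr)
```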

The scans contained a total of 186 million points, and the registration process took 424.2 s to complete. The overlapping scores described in Section 2.3.3 were used as the overlapping ratios. The mean overlapping ratio over all scan registration pairs was 29.89%. Notably, although the minimum overlapping ratio was only 13.09%, the SRR was 100%, meaning that no registration failed.

Subsequently, the performance of the proposed approach was compared with that of two state-of-the-art multi-view registration approaches, MPCGR [85] and HL-MRF [28], using the acquired point cloud dataset. For a fair comparison, the point cloud dataset was filtered using a voxel grid with a 0.05 m spatial resolution and translated to the scan positions so that all methods started from the same initial translation approximation. Table 4 presents the performance of each method. Both conventional approaches failed to register the multiple point clouds, whereas the proposed approach succeeded. This is because these conventional approaches do not account for point cloud pairs with low overlap.
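The 0.05 m voxel grid filter can be sketched as a centroid-per-voxel downsampling in NumPy. This is one common variant; the text does not state whether the voxel centroid or another representative point was kept, and `voxel_downsample` is a hypothetical name.

```python
import numpy as np

def voxel_downsample(points, voxel=0.05):
    """Replace all points that fall into the same cubic voxel by their centroid."""
    pts = np.asarray(points, float)
    keys = np.floor(pts / voxel).astype(np.int64)   # integer voxel coordinates
    _, inverse, counts = np.unique(keys, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.reshape(-1)                   # shape differs across NumPy versions
    sums = np.zeros((len(counts), 3))
    np.add.at(sums, inverse, pts)                   # accumulate coordinates per voxel
    return sums / counts[:, None]
```

Applying the same voxel size to every method equalizes point density, so that differences in the comparison reflect the registration algorithms rather than the input resolution.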

Table 4.

The comparison of the registration performance.

3.4. Comparison of Registration Results with Other Approaches

In this section, the performance of the proposed approach was compared with that of existing point cloud registration methods. Five modern point cloud registration methods were selected for this comparative analysis: fast-descriptors-based [86], CoBigICP [87], 2D-line feature-based [88], LSG-CPD [89], and WES-ICP [90]. For a fair comparison, the input point cloud data were filtered using a voxel grid with a 0.05 m spatial resolution and translated to the scan position to keep the initial translation approximation consistent. As a common parameter, the maximum number of iterations of the registration process was set to 100. All experiments were run single-threaded in MATLAB 2023b on a system with an AMD Ryzen 7 3700X CPU @ 3.59 GHz and 16 GB of RAM.

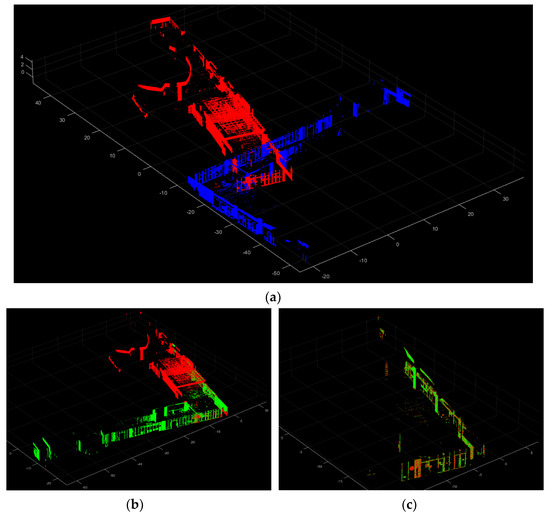

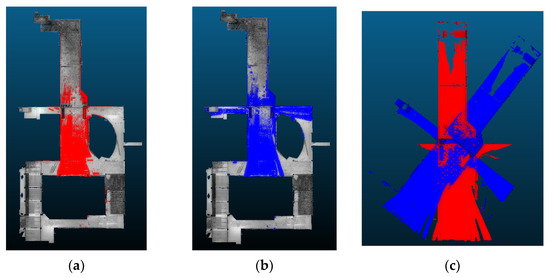

The methods were tested on two pairs from the acquired point cloud dataset: the pair with the highest overlap (Figure 14), whose overlapping ratio is 53.09%, and the pair with the lowest overlap (Figure 15), whose overlapping ratio is 13.09%. Registration accuracy was evaluated using the RMSE with the TPs, as in the previous section. Three evaluation metrics, SR (Equation (14)), RMSE (Equation (13)), and computation time, are used for the comparisons.

Figure 14.

The highest overlapped scan pair. (a) The reference point cloud (red) in the entire point cloud scene; (b) The target point cloud (blue) in the entire point cloud scene; (c) The raw target point cloud with the reference point cloud.

Figure 15.

The lowest overlapped scan pair. (a) The reference point cloud (red) in the entire point cloud scene; (b) The target point cloud (blue) in the entire point cloud scene; (c) The raw target point cloud with the reference point cloud.

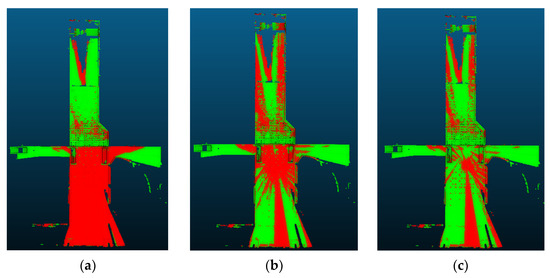

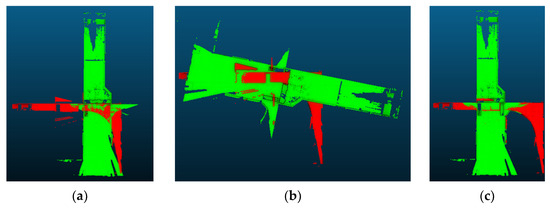

Table 5 presents the registration performance of each method on the highest overlapped point cloud pair. Only three methods, the 2D-line feature-based method [88], LSG-CPD [89], and the proposed approach, registered this pair successfully, with RMSE values under 0.1 m. Of these, the proposed approach achieved the best registration accuracy and the shortest computation time. Figure 16 illustrates the three successful registration results, with the reference point cloud in red and the registered point cloud in green.

Table 5.

Comparison of registration performances with the highest overlapped scan pair.

Figure 16.

The registration results for the highest overlapped scan pair. (a) 2D-line feature-based approach [88]; (b) LSG-CPD [89]; (c) The proposed approach.

These three approaches were then compared on the lowest overlapped point cloud pair, and Table 6 presents their registration performance. Only the proposed approach registered this pair successfully, and it also had the shortest computation time. Figure 17 illustrates the three registration results, with the reference point cloud in red and the registered point cloud in green. This demonstrates that the proposed registration method is robust to low-overlap point cloud data.

Table 6.

Comparison of registration performances with the lowest overlapped scan pair.

Figure 17.

The registration results for the lowest overlapped scan pair. (a) 2D-line feature-based approach [88]; (b) LSG-CPD [89]; (c) The proposed approach.

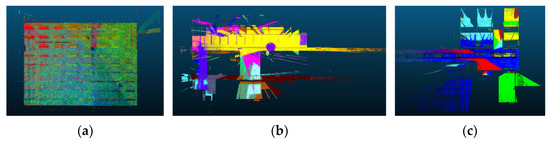

3.5. Comparison of Registration Results on the Benchmark Datasets

In this section, the performance of the proposed approach was assessed using three datasets from two benchmarks: ETH–Office [69] and RESSO—(e) and (i) [44]. The ETH–Office dataset was captured in an indoor office room using a Faro Focus 3D laser scanner; because the room is confined, the overlaps between scans are generally large. RESSO—(e) and (i) were collected in indoor corridors using a Leica ScanStation C10. Each dataset provides the ground truth transformation parameters for every scan pose, allowing the transformation error to be determined using Equation (16) [28,43].

The term is defined as follows:

As in Section 3.3, two state-of-the-art multi-view registration algorithms, MPCGR and HL-MRF, were selected for comparison with the proposed method on the three benchmark datasets. The initial scan positions were simulated by adding random errors of up to 0.05 m to the ground truth translations. Additionally, random angular errors of up to 5 degrees about the Z-axis were introduced.
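The benchmark protocol can be sketched as below: an initial pose is drawn by perturbing the ground truth, and an estimated pose is scored against the ground truth. The error definitions (the rotation angle of R_gt^T R_est and the Euclidean translation distance) are common choices and may differ in detail from Equation (16). Drawing the translation error with a norm of at most 0.05 m and the yaw error uniformly within ±5° are also assumptions, as are all function names.

```python
import numpy as np

def rot_z(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def perturb_initial_pose(t_gt, yaw_gt, rng, max_t=0.05, max_yaw_deg=5.0):
    """Initial guess: ground truth plus a random translation error with norm
    <= max_t (m) and a random yaw error within +/- max_yaw_deg (deg)."""
    direction = rng.normal(size=3)
    direction /= np.linalg.norm(direction)
    t0 = np.asarray(t_gt, float) + direction * rng.uniform(0.0, max_t)
    yaw0 = yaw_gt + np.deg2rad(rng.uniform(-max_yaw_deg, max_yaw_deg))
    return t0, yaw0

def pose_errors(R_est, t_est, R_gt, t_gt):
    """Rotation error in degrees and translation error in metres."""
    cos_a = (np.trace(R_gt.T @ R_est) - 1.0) / 2.0
    rot_err = np.degrees(np.arccos(np.clip(cos_a, -1.0, 1.0)))  # clip guards rounding
    return rot_err, float(np.linalg.norm(np.asarray(t_est) - np.asarray(t_gt)))
```

The per-scan errors from `pose_errors` could then be aggregated into the rotation and translation RMSE values reported for each dataset.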

For the other registration approaches, the input point cloud was translated to the scan position to ensure consistent conditions for the initial translation approximation. Table 7 presents the comparison among these algorithms. For the three datasets, the rotation RMSE of the proposed approach is 0.102°, 0.729°, and 0.761°, and the translation RMSE is 0.038 m, 0.065 m, and 0.048 m, respectively. The corresponding computation times are 33.21 s, 60.13 s, and 38.84 s. MPCGR failed on all datasets, and HL-MRF registered only the ETH–Office and RESSO—(i) datasets, whereas the proposed method registered all three datasets with state-of-the-art accuracy and notably shorter computation times. Figure 18 illustrates the three registration results.

Table 7.

Comparison of registration performances on the benchmark datasets.

Figure 18.

The registration results of the proposed approach on the benchmark datasets. (a) ETH–Office [69]; (b) RESSO—(e) [44]; (c) RESSO—(i) [44].

4. Discussion

The proposed automated point cloud registration method for the stop-and-go system offers a reliable and fast means of generating 3D point cloud maps of job sites without human input. In contrast, manual registration methods not only require more time but also introduce uncertainty in registration quality, which depends heavily on the surveyor’s skills and experience.

Most man-made buildings adhere to the Manhattan world assumption, in which walls are parallel or perpendicular to each other and perpendicular to ceilings and floors [91,92,93]. The proposed approach is specifically optimized for indoor environments that conform to this assumption, and in such environments it outperforms traditional methods. It is therefore well suited to general indoor environments comprising multiple rooms and corridors.

Four distinct comparisons were conducted with conventional methods to validate different facets of the proposed approach: (1) to evaluate the overall registration performance using scan data acquired by the stop-and-go system; (2) to assess pairwise registration performance for point cloud pairs with the highest overlap; (3) to analyze pairwise registration performance for point cloud pairs with the lowest overlap; (4) to evaluate the overall registration performance with benchmark datasets. Each comparison involved several conventional methods, demonstrating the proposed approach’s performance across a variety of scenarios.

A common challenge in indoor environments, such as corridors, is the occurrence of low-overlap scan pairs, which often lead to registration failures. Traditional registration approaches struggle to overcome this issue. However, our proposed method demonstrates robust registration performance even with low-overlapped scan data, benefiting from the prior information provided by the mobile robot.

The validation carried out using the stop-and-go scanning system affirms the method’s efficacy in real-world indoor settings. This approach is novel in its combination of autonomous data acquisition with a quadruped walking robot and includes a comprehensive evaluation of the registration process. The study thus presents a valuable strategy for enhancing the efficiency of automated registration processes for such scanning systems.

The proposed method has some limitations. First, it depends heavily on the precondition that the coarse registration only needs to estimate rotation about the z-axis. This assumption requires a reliable auto-leveling function in the TLS. In practice, many commercial solutions for automated point cloud registration also presume that the TLS ensures automated leveling and therefore perform registration in 2D, much like the method proposed in this study. Furthermore, advancements in TLS technology are steadily improving automated leveling capabilities. To counteract potential mis-leveling caused by the limitations of current auto-leveling technology, a preprocessing step for fine Z-axis alignment was additionally integrated into the proposed approach.
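One way to realize a fine Z-axis alignment step is to fit a plane to floor points and rotate the cloud so that the plane normal coincides with +z. The sketch below assumes the floor points have already been selected and uses an SVD plane fit with Rodrigues' rotation formula; it is an illustration with hypothetical function names, not necessarily the preprocessing used in this study.

```python
import numpy as np

def floor_normal(floor_pts):
    """Unit normal of the least-squares plane through the floor points
    (right singular vector with the smallest singular value), oriented to +z."""
    centered = floor_pts - floor_pts.mean(axis=0)
    n = np.linalg.svd(centered, full_matrices=False)[2][-1]
    return n if n[2] >= 0 else -n

def rotation_to_z(n):
    """Rotation matrix mapping unit vector n onto (0, 0, 1) (Rodrigues' formula)."""
    z = np.array([0.0, 0.0, 1.0])
    v = np.cross(n, z)
    s, c = np.linalg.norm(v), float(np.dot(n, z))  # sine and cosine of the tilt angle
    if s < 1e-12:  # n is (anti)parallel to z
        return np.eye(3) if c > 0 else np.diag([1.0, -1.0, -1.0])
    k = v / s      # rotation axis
    K = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.eye(3) + s * K + (1.0 - c) * (K @ K)
```

After `leveled = points @ rotation_to_z(floor_normal(floor_pts)).T`, the remaining misalignment is a rotation about z only, which is exactly what the coarse and fine registration steps assume.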

Second, the proposed approach is applicable only to man-made indoor environments that satisfy the Manhattan world condition. Although most indoor building environments still conform to this condition, modern architecture increasingly incorporates nonlinear elements, such as curved walls and sliding structural components. Extending the approach to handle such nonlinear structural elements would improve its performance and broaden its applicability.

5. Conclusions

In this study, an automated point cloud registration approach optimized for a stop-and-go scanning system is proposed to efficiently register 3D scan data using the localization of the scanning system as prior knowledge. The proposed approach consists of three primary phases. In the first phase, the input point cloud is obtained from the stop-and-go scanning system developed in previous research [16], and perpendicular constrained wall planes are extracted. This involves voxel filtering and fine alignment to the Z-axis, followed by the identification of wall-related points based on their normal vectors. The M-SAC algorithm is then used to extract wall-plane models with perpendicular constraints. In the second phase, coarse registration aligns the perpendicular constrained wall planes, using the initial position of the scanning system. Candidate rotation angles are determined by aligning the normal vectors of the wall planes, and an overlapping score is calculated for each candidate from point-to-point displacements. The third phase performs fine registration using the proposed horizontality constrained ICP algorithm, applied to the matching patches extracted during the overlapping score calculation in the second phase.

The major contributions of this study in the field of automatic point cloud registration are as follows: Firstly, the proposed approach exhibits robust registration results in scenarios with low-overlapped scan data. Secondly, it demonstrates superior registration performance compared to conventional methods. Thirdly, validation using the stop-and-go scanning system confirms the reliability of the proposed approach for scan registration in real-world indoor environments. The proposed approach is the first to tackle automatic registration with autonomous data acquisition using a quadruped walking robot, along with a comprehensive evaluation of the registration process. Lastly, to the best of our knowledge, no studies have yet focused on point cloud registration with an acquired point cloud dataset from stop-and-go scanning systems. As such, this study is valuable in proposing a strategy to enhance the efficiency of the automated registration process for these scanning systems.

Future work will address the limitations of the proposed approach. This method is tailored for registering point clouds from scenes predominantly featuring planar structures, which makes it especially suitable for scans of man-made indoor environments. Therefore, the approach might struggle with outdoor scans or scans of individual objects characterized by curved surfaces. Additionally, accurate localization of the scanning system is a prerequisite, which state-of-the-art mobile platforms can provide.

Author Contributions

Conceptualization, S.P. and J.H.; methodology, S.P. and J.H.; software, S.P. and M.H.N.; validation, S.P., S.J. and J.H.; formal analysis, S.P.; investigation, S.P.; resources, J.H.; data curation, S.P. and S.Y.; writing—original draft preparation, S.P.; writing—review and editing, S.P., S.J., M.H.N., S.Y. and J.H.; visualization, S.P.; supervision, J.H.; project administration, J.H.; funding acquisition, J.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by a grant (2021-MOIS37-003) (RS-2021-ND631021) of Intelligent Technology Development Program on Disaster Response and Emergency Management funded by Ministry of Interior and Safety (MOIS, Korea).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author, upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Laczkowski, K.; Asutosh, P.; Rajagopal, N.; Sandrone, P. How OEMs Can Seize the High-Tech Future in Agriculture and Construction. Available online: https://www.mckinsey.com/~/media/McKinsey/Industries/Automotive%20and%20Assembly/Our%20Insights/How%20OEMs%20can%20seize%20the%20high%20tech%20future%20in%20agriculture%20and%20construction/How-OEMs-can-seize-the-high-tech-future-in-agriculture-and-construction.pdf (accessed on 6 November 2023).

- Mill, T.; Alt, A.; Liias, R. Combined 3D building surveying techniques-terrestrial laser scanning (TLS) and total station surveying for BIM data management purposes. J. Civ. Eng. Manag. 2013, 19, 23–32. [Google Scholar] [CrossRef]

- Tamke, M.; Zwierzycki, M.; Evers, H.L.; Ochmann, S.; Vock, R.; Wessel, R. Tracking changes in buildings over time-Fully automated reconstruction and difference detection of 3D scan and BIM files. In Proceedings of the 34th Education & Research in Computer Aided Architectural Design in Europe Conference, Oulu, Finland, 22–26 August 2016; pp. 643–651. [Google Scholar]

- Yang, H.; Omidalizarandi, M.; Xu, X.; Neumann, I. Terrestrial laser scanning technology for deformation monitoring and surface modeling of arch structures. Compos. Struct. 2017, 169, 173–179. [Google Scholar] [CrossRef]

- Soilán, M.; Sánchez-Rodríguez, A.; del Río-Barral, P.; Perez-Collazo, C.; Arias, P.; Riveiro, B. Review of laser scanning technologies and their applications for road and railway infrastructure monitoring. Infrastructures 2019, 4, 58. [Google Scholar] [CrossRef]

- Jung, J.; Yoon, S.; Ju, S.; Heo, J. Development of Kinematic 3D Laser Scanning System for Indoor Mapping and As-Built BIM Using Constrained SLAM. Sensors 2015, 15, 26430–26456. [Google Scholar] [CrossRef] [PubMed]

- Jung, J.; Hong, S.; Yoon, S.; Kim, J.; Heo, J. Automated 3D Wireframe Modeling of Indoor Structures from Point Clouds Using Constrained Least-Squares Adjustment for As-Built BIM. J. Comput. Civ. Eng. 2016, 30, 04015074. [Google Scholar] [CrossRef]

- Jung, J.; Stachniss, C.; Ju, S.; Heo, J. Automated 3D volumetric reconstruction of multiple-room building interiors for as-built BIM. Adv. Eng. Inform. 2018, 38, 811–825. [Google Scholar] [CrossRef]

- Han, S.; Cho, H.; Kim, S.; Jung, J.; Heo, J. Automated and efficient method for extraction of tunnel cross sections using terrestrial laser scanned data. J. Comput. Civ. Eng. 2013, 27, 274–281. [Google Scholar] [CrossRef]

- Park, S.; Ju, S.; Yoon, S.; Nguyen, M.H.; Heo, J. An efficient data structure approach for BIM-to-point-cloud change detection using modifiable nested octree. Automat. Constr. 2021, 132, 103922. [Google Scholar] [CrossRef]

- Zhang, C.; Arditi, D. Advanced Progress Control of Infrastructure Construction Projects Using Terrestrial Laser Scanning Technology. Infrastructures 2020, 5, 83. [Google Scholar] [CrossRef]

- Bassier, M.; Vincke, S.; De Winter, H.; Vergauwen, M. Drift Invariant Metric Quality Control of Construction Sites Using BIM and Point Cloud Data. ISPRS Int. J. Geoinf. 2020, 9, 545. [Google Scholar] [CrossRef]

- Ahn, J.; Wohn, K. Interactive scan planning for heritage recording. Multimed. Tools. Appl. 2016, 75, 3655–3675. [Google Scholar] [CrossRef]

- Baik, A. From point cloud to jeddah heritage BIM nasif historical house—Case study. Digit. Appl. Archaeol. Cult. Herit. 2017, 4, 1–18. [Google Scholar] [CrossRef]

- Nguyen, M.H.; Yoon, S.; Ju, S.; Park, S.; Heo, J. B-EagleV: Visualization of Big Point Cloud Datasets in Civil Engineering Using a Distributed Computing Solution. J. Comput. Civ. Eng. 2022, 36, 04022005.

- Park, S.; Yoon, S.; Ju, S.; Heo, J. BIM-based scan planning for scanning with a quadruped walking robot. Automat. Constr. 2023, 152, 104911.

- Qi, Y.; Coops, N.C.; Daniels, L.D.; Butson, C.R. Comparing tree attributes derived from quantitative structure models based on drone and mobile laser scanning point clouds across varying canopy cover conditions. ISPRS J. Photogramm. Remote Sens. 2022, 192, 49–65.

- Warchoł, A.; Karaś, T.; Antoń, M. Selected Qualitative Aspects of Lidar Point Clouds: Geoslam Zeb-Revo and Faro Focus 3D X130. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2023, 205–212.

- Kim, P.; Park, J.; Cho, Y. As-is Geometric Data Collection and 3D Visualization through the Collaboration between UAV and UGV. In Proceedings of the International Symposium on Automation and Robotics in Construction (ISARC), Banff, AB, Canada, 21–24 May 2019; pp. 544–551.

- Blaer, P.S.; Allen, P.K. Data acquisition and view planning for 3-D modeling tasks. In Proceedings of the 2007 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Diego, CA, USA, 29 October–2 November 2007; pp. 417–422.

- Chow, J.C.K.; Lichti, D.D.; Hol, J.D.; Bellusci, G.; Luinge, H. IMU and multiple RGB-D camera fusion for assisting indoor stop-and-go 3D terrestrial laser scanning. Robotics 2014, 3, 247–280.

- Tuttas, S.; Braun, A.; Borrmann, A.; Stilla, U. Comparision of Photogrammetric Point Clouds with BIM Building elements for Construction Progress Monitoring. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing & Spatial Information Sciences, Zurich, Switzerland, 5–7 September 2014; p. 341.

- Prieto, S.; Quintana, B.; Adán, A.; Vázquez, A.S. As-is building-structure reconstruction from a probabilistic next best scan approach. Rob. Auton. Syst. 2017, 94, 186–207.

- Frías, E.; Díaz-Vilariño, L.; Balado, J.; Lorenzo, H. From BIM to Scan Planning and Optimization for Construction Control. Remote Sens. 2019, 11, 1963.

- Cheng, L.; Chen, S.; Liu, X.; Xu, H.; Wu, Y.; Li, M.; Chen, Y. Registration of Laser Scanning Point Clouds: A Review. Sensors 2018, 18, 1641.

- Dong, Z.; Liang, F.; Yang, B.; Xu, Y.; Zang, Y.; Li, J.; Wang, Y.; Dai, W.; Fan, H.; Hyyppä, J. Registration of large-scale terrestrial laser scanner point clouds: A review and benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 163, 327–342.

- Cai, Z.; Chin, T.-J.; Bustos, A.P.; Schindler, K. Practical optimal registration of terrestrial LiDAR scan pairs. ISPRS J. Photogramm. Remote Sens. 2019, 147, 118–131.

- Wu, H.; Yan, L.; Xie, H.; Wei, P.; Dai, J. A hierarchical multiview registration framework of TLS point clouds based on loop constraint. ISPRS J. Photogramm. Remote Sens. 2023, 195, 65–76.

- Knopp, J.; Prasad, M.; Willems, G.; Timofte, R.; Van Gool, L. Hough Transform and 3D SURF for Robust Three Dimensional Classification. In Proceedings of the 11th European Conference on Computer Vision, Heraklion, Crete, Greece, 5–11 September 2010; pp. 589–602.

- Sipiran, I.; Bustos, B. Harris 3D: A robust extension of the Harris operator for interest point detection on 3D meshes. Vis. Comput. 2011, 27, 963–976.

- Zheng, L.; Yu, M.; Song, M.; Stefanidis, A.; Ji, Z.; Yang, C. Registration of Long-Strip Terrestrial Laser Scanning Point Clouds Using RANSAC and Closed Constraint Adjustment. Remote Sens. 2016, 8, 278.

- Ge, X. Automatic markerless registration of point clouds with semantic-keypoint-based 4-points congruent sets. ISPRS J. Photogramm. Remote Sens. 2017, 130, 344–357.

- Guo, Y.; Sohel, F.; Bennamoun, M.; Wan, J.; Lu, M. An Accurate and Robust Range Image Registration Algorithm for 3D Object Modeling. IEEE Trans. Multimed. 2014, 16, 1377–1390.

- Cheng, L.; Wu, Y.; Tong, L.; Chen, Y.; Li, M. Hierarchical Registration Method for Airborne and Vehicle LiDAR Point Cloud. Remote Sens. 2015, 7, 13921–13944.

- Al-Durgham, K.; Habib, A. Association-Matrix-Based Sample Consensus Approach for Automated Registration of Terrestrial Laser Scans Using Linear Features. Photogramm. Eng. Remote Sens. 2014, 80, 1029–1039.

- Prokop, M.; Shaikh, S.A.; Kim, K.-S. Low Overlapping Point Cloud Registration Using Line Features Detection. Remote Sens. 2020, 12, 61.

- Wu, H.; Scaioni, M.; Li, H.; Li, N.; Lu, M.; Liu, C. Feature-constrained registration of building point clouds acquired by terrestrial and airborne laser scanners. J. Appl. Remote Sens. 2014, 8, 083587.

- Kim, C.; Habib, A.; Pyeon, M.; Kwon, G.-r.; Jung, J.; Heo, J. Segmentation of Planar Surfaces from Laser Scanning Data Using the Magnitude of Normal Position Vector for Adaptive Neighborhoods. Sensors 2016, 16, 140.

- Kim, P.; Chen, J.; Cho, Y.K. Automated Point Cloud Registration Using Visual and Planar Features for Construction Environments. J. Comput. Civ. Eng. 2018, 32, 04017076.

- Theiler, P.W.; Schindler, K. Automatic Registration of Terrestrial Laser Scanner Point Clouds Using Natural Planar Surfaces. In Proceedings of the ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Melbourne, Australia, 25 August–1 September 2012; pp. 173–178.

- Xu, Y.; Boerner, R.; Yao, W.; Hoegner, L.; Stilla, U. Pairwise coarse registration of point clouds in urban scenes using voxel-based 4-planes congruent sets. ISPRS J. Photogramm. Remote Sens. 2019, 151, 106–123.

- Favre, K.; Pressigout, M.; Marchand, E.; Morin, L. A Plane-based Approach for Indoor Point Clouds Registration. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 7072–7079.

- Wei, P.; Yan, L.; Xie, H.; Huang, M. Automatic coarse registration of point clouds using plane contour shape descriptor and topological graph voting. Automat. Constr. 2022, 134, 104055.

- Chen, S.; Nan, L.; Xia, R.; Zhao, J.; Wonka, P. PLADE: A Plane-Based Descriptor for Point Cloud Registration With Small Overlap. IEEE Trans. Geosci. Remote Sens. 2020, 58, 2530–2540.

- Zou, Z.; Lang, H.; Lou, Y.; Lu, J. Plane-based global registration for pavement 3D reconstruction using hybrid solid-state LiDAR point cloud. Automat. Constr. 2023, 152, 104907.

- Zeng, A.; Song, S.; Niessner, M.; Fisher, M.; Xiao, J.; Funkhouser, T. 3DMatch: Learning Local Geometric Descriptors from RGB-D Reconstructions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 199–208.

- Ao, S.; Hu, Q.; Yang, B.; Markham, A.; Guo, Y. SpinNet: Learning a general surface descriptor for 3D point cloud registration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Online, 19–25 June 2021; pp. 11753–11762.

- Li, C.; Xia, Y.; Yang, M.; Wu, X. Study on TLS Point Cloud Registration Algorithm for Large-Scale Outdoor Weak Geometric Features. Sensors 2022, 22, 5072.

- Ma, J.W.; Czerniawski, T.; Leite, F. Semantic segmentation of point clouds of building interiors with deep learning: Augmenting training datasets with synthetic BIM-based point clouds. Automat. Constr. 2020, 113, 103144.

- Besl, P.; McKay, N. Method for registration of 3-D shapes. In Proceedings of Robotics ’91, Boston, MA, USA, 1992.

- Grant, D.; Bethel, J.; Crawford, M. Point-to-plane registration of terrestrial laser scans. ISPRS J. Photogramm. Remote Sens. 2012, 72, 16–26.

- Segal, A.; Haehnel, D.; Thrun, S. Generalized-ICP. In Proceedings of Robotics: Science and Systems, Seattle, WA, USA, 28 June–1 July 2009; p. 435.

- Zhang, J.; Yao, Y.; Deng, B. Fast and Robust Iterative Closest Point. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 3450–3466.

- Biber, P.; Strasser, W. The normal distributions transform: A new approach to laser scan matching. In Proceedings of the 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2003), Las Vegas, NV, USA, 27–31 October 2003; Volume 3, pp. 2743–2748.

- Takeuchi, E.; Tsubouchi, T. A 3-D Scan Matching using Improved 3-D Normal Distributions Transform for Mobile Robotic Mapping. In Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; pp. 3068–3073.

- Das, A.; Waslander, S.L. Scan registration with multi-scale k-means normal distributions transform. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 2705–2710.

- Magnusson, M.; Lilienthal, A.; Duckett, T. Scan registration for autonomous mining vehicles using 3D-NDT. J. Field Robot. 2007, 24, 803–827.

- Kurazume, R.; Oshima, S.; Nagakura, S.; Jeong, Y.; Iwashita, Y. Automatic large-scale three dimensional modeling using cooperative multiple robots. Comput. Vis. Image Underst. 2017, 157, 25–42.

- Liu, M.; Sun, X.; Wang, Y.; Shao, Y.; You, Y. Deformation Measurement of Highway Bridge Head Based on Mobile TLS Data. IEEE Access 2020, 8, 85605–85615.

- Zhong, L.; Liu, P.; Wang, L.; Wei, Z.; Guan, H.; Yu, Y. A Combination of Stop-and-Go and Electro-Tricycle Laser Scanning Systems for Rural Cadastral Surveys. ISPRS Int. J. Geo-Inf. 2016, 5, 160.

- Ge, X.; Hu, H.; Wu, B. Image-Guided Registration of Unordered Terrestrial Laser Scanning Point Clouds for Urban Scenes. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9264–9276.

- Pavan, N.L.; dos Santos, D.R.; Khoshelham, K. Global Registration of Terrestrial Laser Scanner Point Clouds Using Plane-to-Plane Correspondences. Remote Sens. 2020, 12, 1127.

- Lin, Y.; Hyyppä, J.; Kukko, A. Stop-and-Go Mode: Sensor Manipulation as Essential as Sensor Development in Terrestrial Laser Scanning. Sensors 2013, 13, 8140–8154.

- Mohammed, H.; Alsubaie, N.M.; Elhabiby, M.; El-Sheimy, N. Registration of time of flight terrestrial laser scanner data for stop-and-go mode. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Denver, CO, USA, 17–20 November 2014; pp. 287–291.

- Knechtel, J.; Klingbeil, L.; Haunert, J.H.; Dehbi, Y. Optimal Position and Path Planning for Stop-and-Go Laserscanning for the Acquisition of 3D Building Models. In Proceedings of the ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Nice, France, 6–11 June 2022; pp. 129–136.

- Foryś, P.; Sitnik, R.; Markiewicz, J.; Bunsch, E. Fast adaptive multimodal feature registration (FAMFR): An effective high-resolution point clouds registration workflow for cultural heritage interiors. Herit. Sci. 2023, 11, 190.

- Borrmann, D.; Nüchter, A.; Ðakulović, M.; Maurović, I.; Petrović, I.; Osmanković, D.; Velagić, J. A mobile robot based system for fully automated thermal 3D mapping. Adv. Eng. Inform. 2014, 28, 425–440.

- Brenner, C.; Dold, C.; Ripperda, N. Coarse orientation of terrestrial laser scans in urban environments. ISPRS J. Photogramm. Remote Sens. 2008, 63, 4–18.

- Theiler, P.W.; Wegner, J.D.; Schindler, K. Globally consistent registration of terrestrial laser scans via graph optimization. ISPRS J. Photogramm. Remote Sens. 2015, 109, 126–138.

- Korea Construction Standards Center. Available online: https://www.kcsc.re.kr/Search/ListCodes/5010# (accessed on 6 November 2023).

- Torr, P.H.S.; Zisserman, A. MLESAC: A New Robust Estimator with Application to Estimating Image Geometry. Comput. Vis. Image Underst. 2000, 78, 138–156.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Hoppe, H.; DeRose, T.; Duchamp, T.; McDonald, J.; Stuetzle, W. Surface reconstruction from unorganized points. In Proceedings of the 19th Annual Conference on Computer Graphics and Interactive Techniques, Chicago, IL, USA, 1 July 1992; pp. 71–78.

- Nguyen, M.H.; Yoon, S.; Park, S.; Heo, J. A demonstration of B-EagleV Visualizing massive point cloud directly from HDFS. In Proceedings of the 2019 IEEE International Conference on Big Data, Los Angeles, CA, USA, 9–12 December 2019; pp. 4121–4124.

- Schütz, M. Potree: Rendering Large Point Clouds in Web Browsers. Master's Thesis, TU Wien, Vienna, Austria, 2016.

- Yoon, S.; Ju, S.; Park, S.; Heo, J. A Framework Development for Mapping and Detecting Changes in Repeatedly Collected Massive Point Clouds. In Proceedings of the International Symposium on Automation and Robotics in Construction, Banff, AB, Canada, 21–24 May 2019; pp. 603–609.

- Low, K.-L. Linear Least-Squares Optimization for Point-to-Plane ICP Surface Registration. Available online: https://citeseerx.ist.psu.edu/document?repid=rep1&type=pdf&doi=17d770dc285254508840cdffadf797b516f60f89 (accessed on 6 November 2023).

- Rusinkiewicz, S. A symmetric objective function for ICP. ACM Trans. Graph. 2019, 38, 85.

- Salvi, J.; Matabosch, C.; Fofi, D.; Forest, J. A review of recent range image registration methods with accuracy evaluation. Image Vis. Comput. 2007, 25, 578–596.

- Ghilani, C.D. Adjustment Computations: Spatial Data Analysis; John Wiley & Sons: Hoboken, NJ, USA, 2017.