Scenario Generation for Autonomous Vehicles with Deep-Learning-Based Heterogeneous Driver Models: Implementation and Verification

Abstract

1. Introduction

2. Scenario Generation Method

2.1. Problem Description

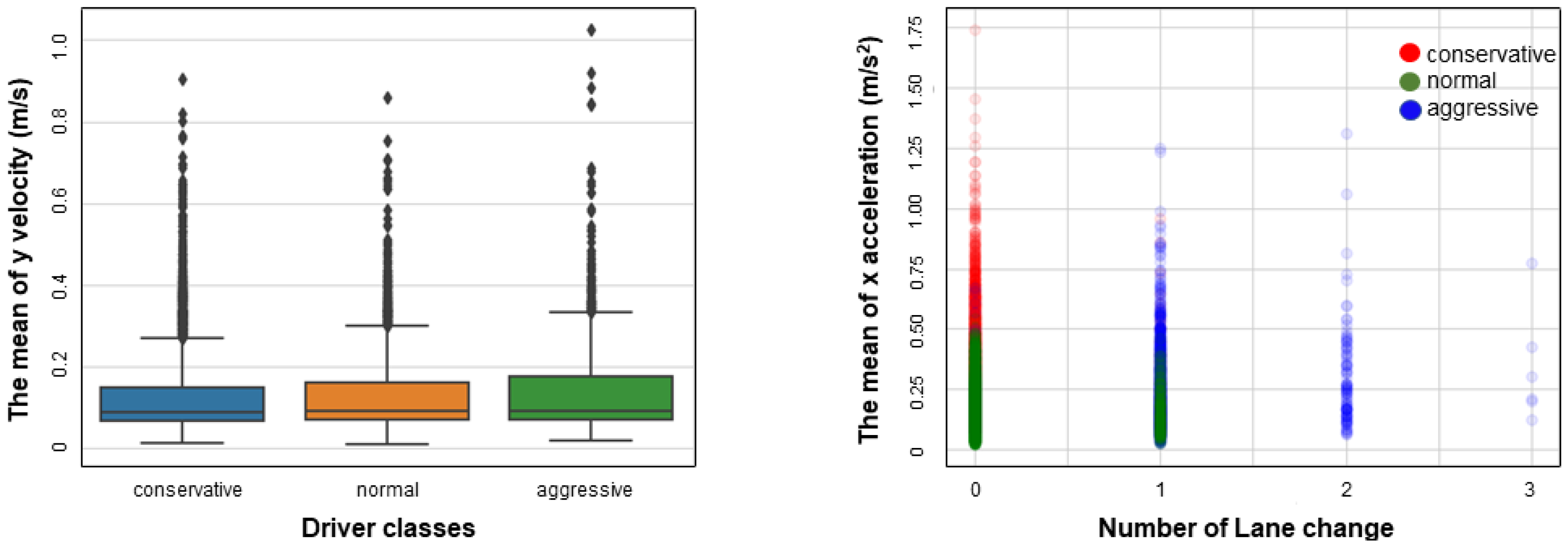

2.2. Datasets

2.3. Heterogeneous Driver Modeling

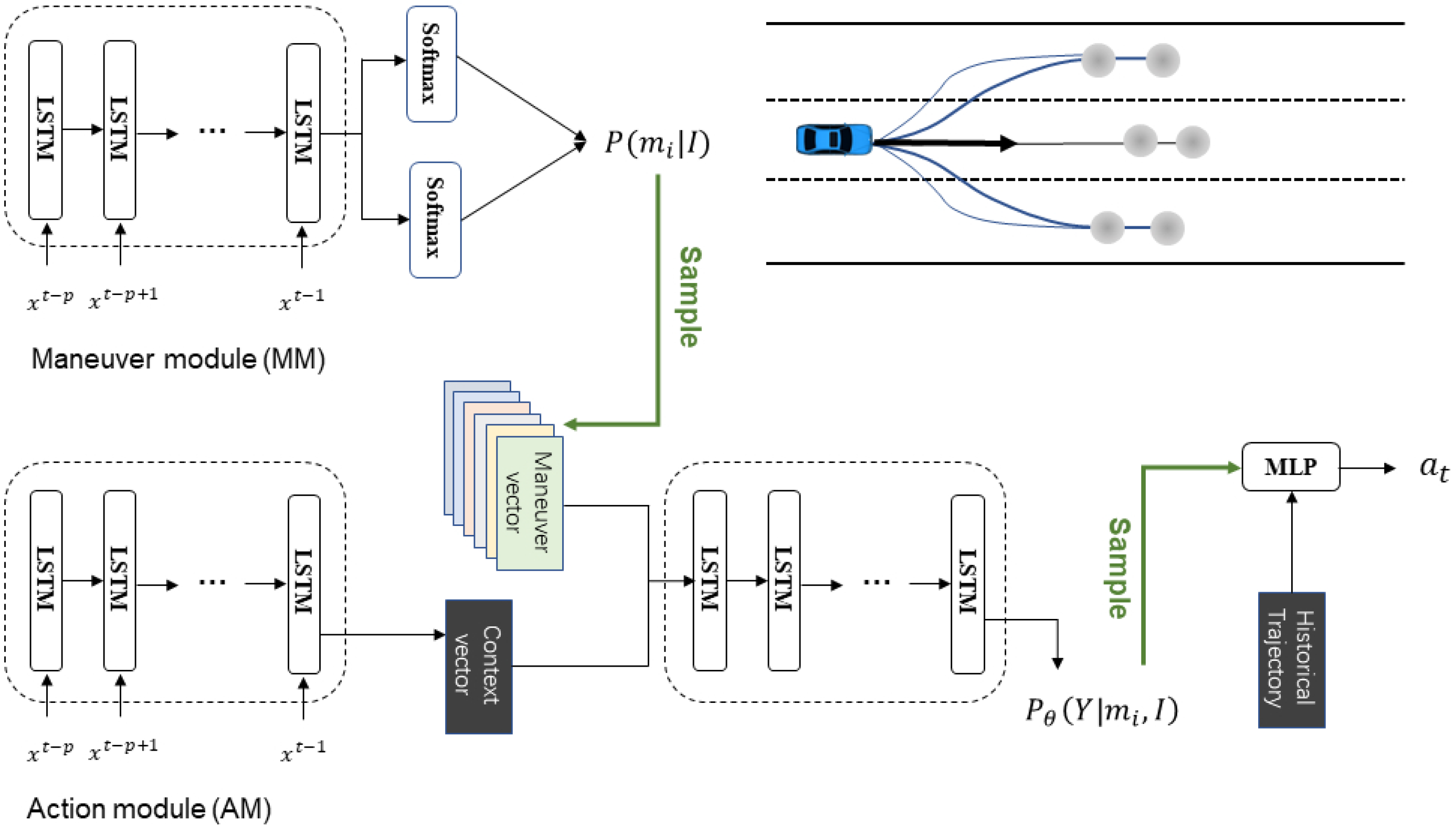

- Maneuver module (MM): Estimates maneuver probabilities from the scene context.

- Action module (AM): Generates candidate terminal areas based on the selected maneuvers, then samples an endpoint from the terminal area as the intention feature. The endpoint and the historical trajectory together serve as the input for generating the next action.
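The two-stage flow described in the list above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the maneuver names, the rectangular terminal areas, the uniform sampling, and the linear `next_action` stand-in for the learned decoder are all assumptions made for the example.

```python
import random

# Hypothetical terminal areas per maneuver: (x_min, x_max, y_min, y_max) in metres,
# relative to the vehicle's current position. Values are illustrative only.
TERMINAL_AREAS = {
    "keep_lane": (40.0, 60.0, -0.5, 0.5),
    "change_left": (40.0, 60.0, 3.0, 4.0),
    "change_right": (40.0, 60.0, -4.0, -3.0),
}


def sample_maneuver(probs, rng):
    """Draw one maneuver from the MM's probability output."""
    names = list(probs)
    return rng.choices(names, weights=[probs[n] for n in names], k=1)[0]


def sample_endpoint(maneuver, rng):
    """Sample an endpoint (the intention feature) from the maneuver's terminal area."""
    x_min, x_max, y_min, y_max = TERMINAL_AREAS[maneuver]
    return (rng.uniform(x_min, x_max), rng.uniform(y_min, y_max))


def next_action(history, endpoint, horizon_steps=25):
    """Placeholder for the learned AM decoder: one linear step toward the endpoint."""
    x, y = history[-1]
    ex, ey = endpoint
    return (x + (ex - x) / horizon_steps, y + (ey - y) / horizon_steps)


rng = random.Random(0)
# Stand-in for the MM output; a trained network would produce these probabilities.
mm_probs = {"keep_lane": 0.7, "change_left": 0.2, "change_right": 0.1}
history = [(-2.0, 0.0), (-1.0, 0.0), (0.0, 0.0)]

maneuver = sample_maneuver(mm_probs, rng)
endpoint = sample_endpoint(maneuver, rng)
action = next_action(history, endpoint)
print(maneuver, endpoint, action)
```

Sampling the endpoint rather than taking a fixed target is what lets repeated rollouts from the same history produce different trajectories.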

2.3.1. Maneuver Module (MM)

2.3.2. Action Module (AM)

2.3.3. Model Training

2.4. Implementation and Verification

2.4.1. Intelligent Driver Model

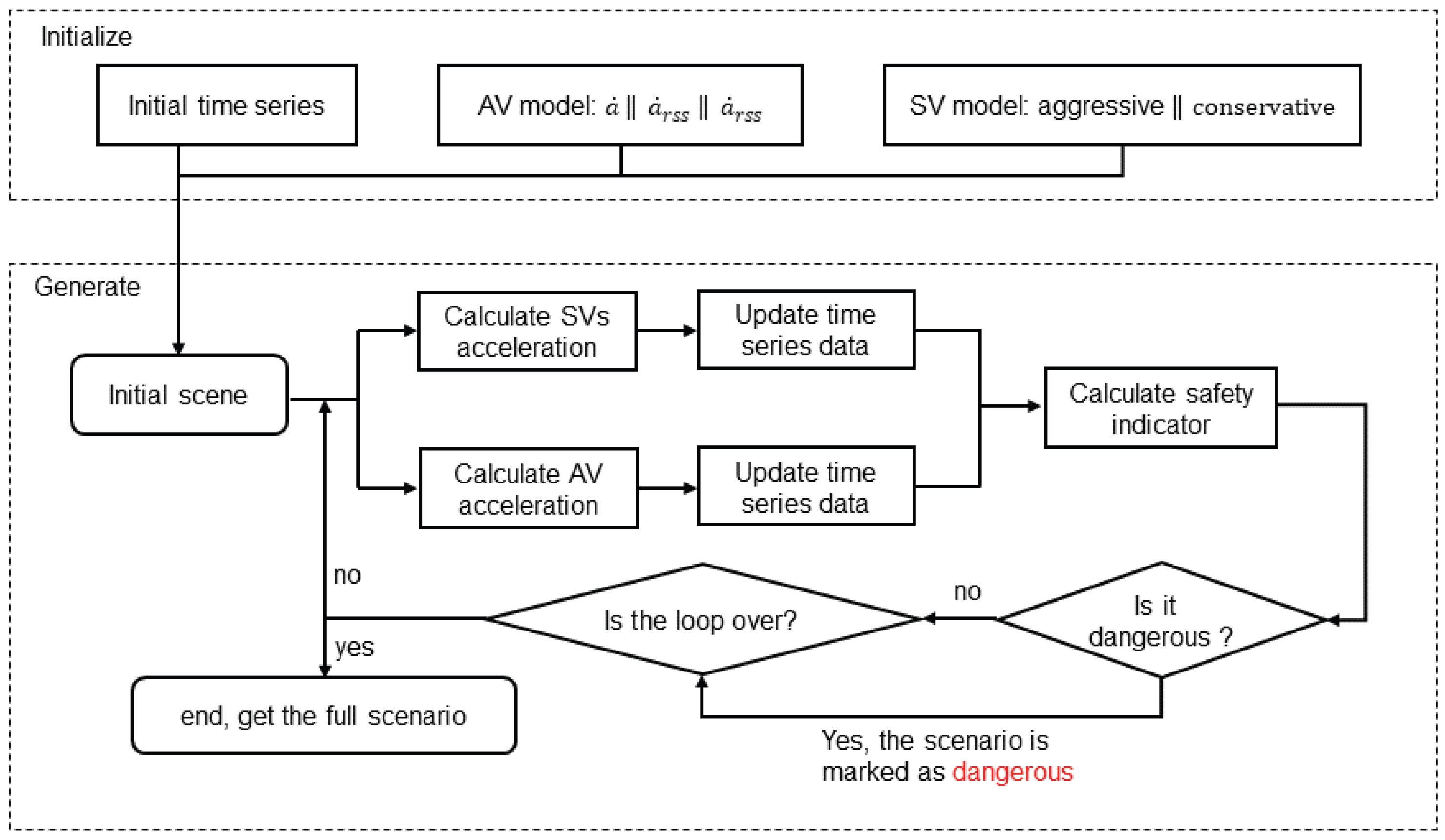

2.4.2. Driving Strategies

2.4.3. Simulation Scheme

Algorithm 1: Inference algorithm for SV.

3. Results and Analysis

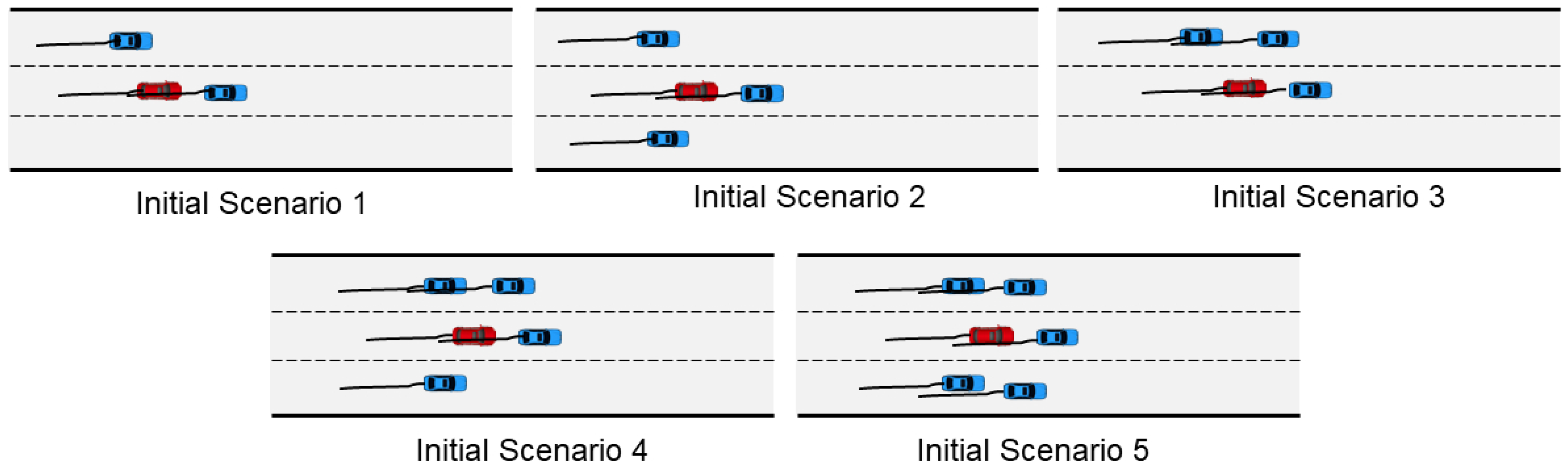

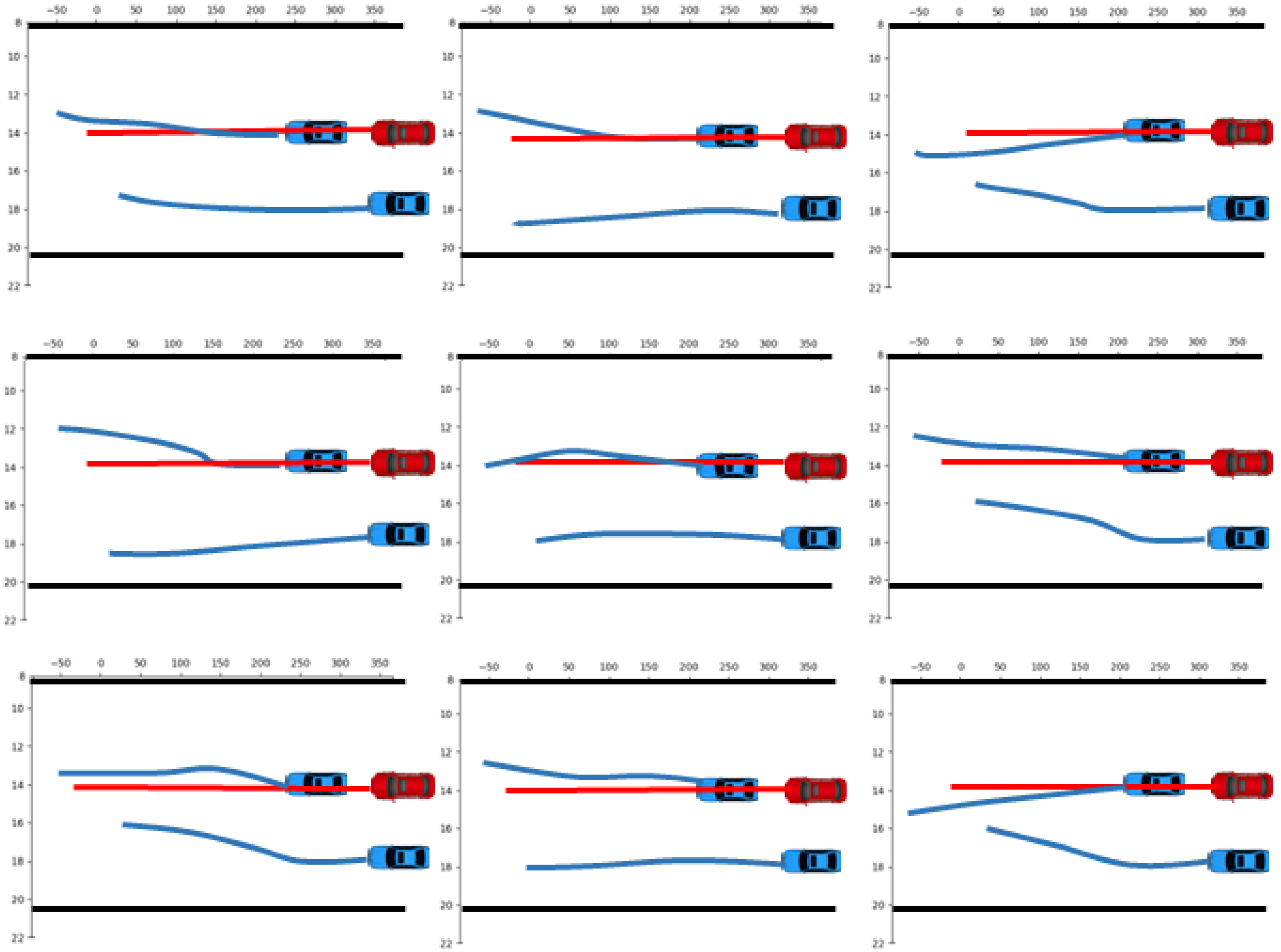

3.1. Implementation of Scenario Generation

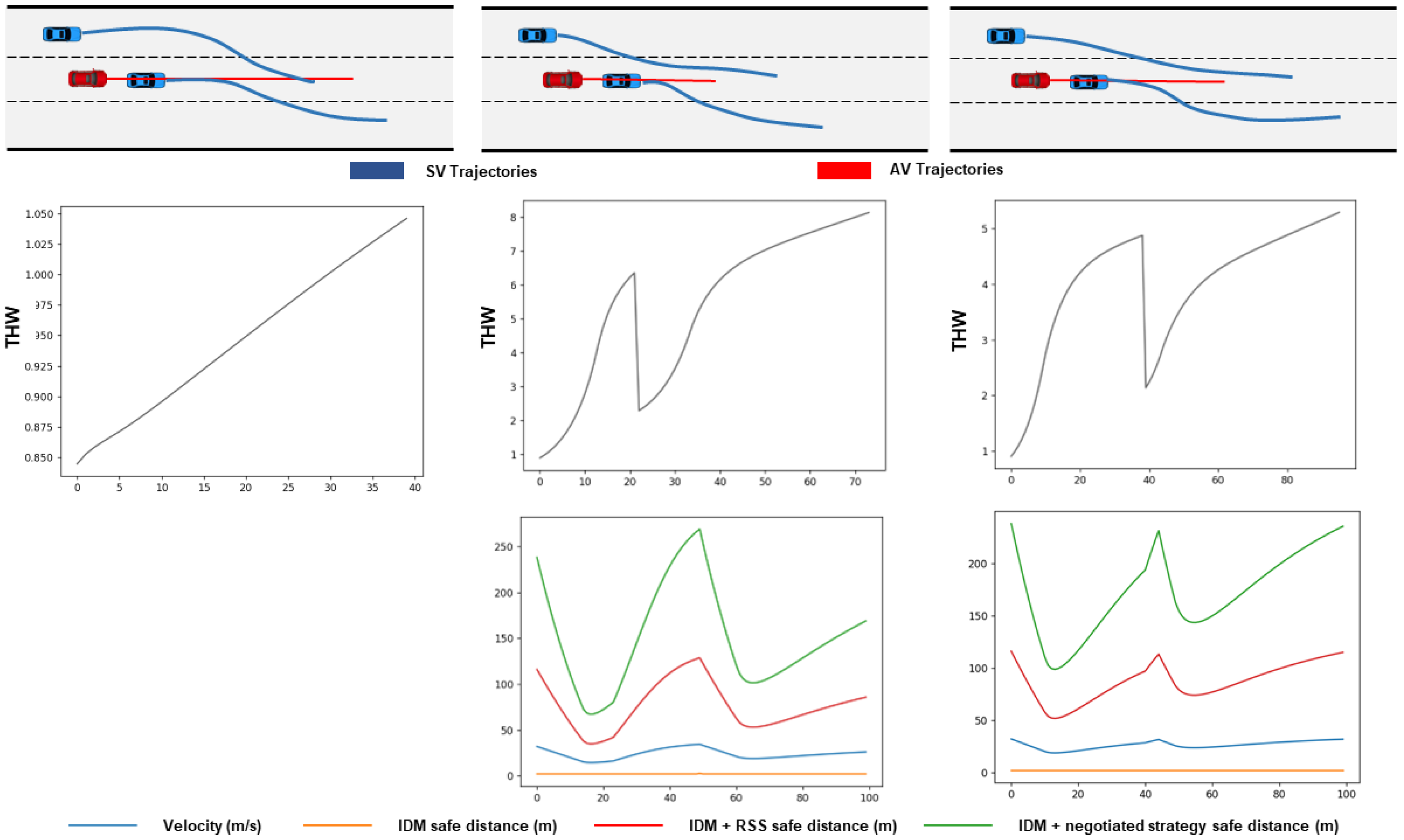

3.2. Verification

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameter | Description | Value |
|---|---|---|
| v0 | Desired speed | 40 m/s |
| δ | Acceleration exponent | 4 |
| s0 | Minimal gap | 2 m |
| T | Desired time headway | 2 s |
| a | Maximal acceleration | 6 m/s² |
| b | Comfortable deceleration | 3 m/s² |
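For orientation, the parameters above plug into the standard IDM acceleration law of Treiber et al. The sketch below assumes the conventional symbol assignment (v0 desired speed, δ exponent, s0 minimal gap, T time headway, a maximal acceleration, b comfortable deceleration) and reads both acceleration values as m/s²; the function name and argument names are illustrative.

```python
import math


def idm_acceleration(v, gap, dv, v0=40.0, delta=4.0, s0=2.0, T=2.0, a=6.0, b=3.0):
    """Standard IDM acceleration.

    v   : speed of the following vehicle (m/s)
    gap : bumper-to-bumper distance to the leader (m), must be > 0
    dv  : approach rate, v - v_leader (m/s)
    Defaults are the values listed in the parameter table.
    """
    # Desired dynamic gap: standstill gap + headway term + braking interaction term.
    s_star = s0 + v * T + v * dv / (2.0 * math.sqrt(a * b))
    # Free-road term minus interaction term.
    return a * (1.0 - (v / v0) ** delta - (s_star / gap) ** 2)


# Following at 20 m/s, 30 m behind a leader that is 2 m/s slower.
print(idm_acceleration(v=20.0, gap=30.0, dv=2.0))
```

With a very large gap the interaction term vanishes and the vehicle accelerates toward the desired speed; with a small gap or a high approach rate the result becomes negative, that is, braking.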

| Parameter | Description | Value |
|---|---|---|
| | Response time | 2/3 s |
| | Maximal acceleration | 6 m/s² |
| | Minimal deceleration | 3 m/s² |
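As a hedged illustration of how these three values are typically used, the sketch below evaluates the minimum safe longitudinal distance from the RSS formulation of Shalev-Shwartz et al. The response time, maximal acceleration, and minimal deceleration defaults come from the table (accelerations read as m/s²). The leader's maximal braking `b_max_front` does not appear in the table, so its value of 8 m/s² is purely an assumption, as are the function and argument names.

```python
def rss_min_gap(v_rear, v_front, rho=2.0 / 3.0, a_max=6.0, b_min=3.0, b_max_front=8.0):
    """Minimum safe longitudinal gap (m) between a rear and a front vehicle under RSS.

    Worst case: the rear vehicle accelerates at a_max during the response time rho,
    then brakes at b_min, while the front vehicle brakes at b_max_front.
    b_max_front = 8.0 is an assumed value, not taken from the source.
    """
    v_after_response = v_rear + rho * a_max
    gap = (
        v_rear * rho                                  # distance covered during the response time
        + 0.5 * a_max * rho ** 2                      # extra distance from accelerating meanwhile
        + v_after_response ** 2 / (2.0 * b_min)       # rear vehicle's braking distance
        - v_front ** 2 / (2.0 * b_max_front)          # front vehicle's braking distance
    )
    return max(gap, 0.0)


# Both vehicles travelling at 20 m/s.
print(rss_min_gap(v_rear=20.0, v_front=20.0))
```

The result is clipped at zero because a much faster leader can never be reached under these worst-case assumptions.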

| Model | NLL | Cross Entropy |
|---|---|---|
| Aggressive | 2.43 | 0.018 |
| Conservative | 2.56 | 0.025 |
| Original | 2.17 | 0.021 |
| CS-LSTM | 3.30 | - |

| Initial Scenario | All SVs Aggressive | All SVs Conservative | Front SVs Aggressive | Back SVs Aggressive | Original Driver Model |
|---|---|---|---|---|---|
| 1 | 7% | 0% | 0% | 5% | 0% |
| 2 | 1% | 0% | 0% | 5% | 0% |
| 3 | 11% | 0% | 1% | 6% | 1% |
| 4 | 10% | 1% | 0% | 13% | 2% |
| 5 | 9% | 1% | 0% | 6% | 2% |

| | IDM | IDM + RSS | IDM + Negotiated Strategy |
|---|---|---|---|
| THW | 0–1 s | 0–8 s | 0–5 s |
| Safety distance | 2–30 m | >50 m | >40 m |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gao, L.; Zhou, R.; Zhang, K. Scenario Generation for Autonomous Vehicles with Deep-Learning-Based Heterogeneous Driver Models: Implementation and Verification. Sensors 2023, 23, 4570. https://doi.org/10.3390/s23094570
