Cluster-Locating Algorithm Based on Deep Learning for Silicon Pixel Sensors

Abstract

1. Introduction

1. In region-based detection algorithms [14], samples (images) are divided into partitions according to a consistency criterion, and each partition contains as many mutually consistent pixels as possible. For example, to locate the area of a cluster, we start from the seed pixel, i.e., the pixel with the highest energy in the cluster, look for fired pixels in its neighborhood, and take each fired pixel found as a new seed. This region-growing process is repeated until no further fired pixels are found. The key to this method is setting reasonable criteria for region growth.

2. Edge detection methods, such as the Canny detector [15] and the Sobel operator, aim to find the edges of objects, so their performance depends strongly on the quality of the edge features. To locate clusters with an edge detector, for example, we must first obtain the edge information of each cluster. However, cluster edges may be blurred, in which case the continuity and closure of the detected edges cannot be guaranteed and the detection accuracy can be relatively poor. Edge detection is therefore not robust for cluster location.

3. A clustering algorithm [16] is an unsupervised machine learning method. To locate clusters, for example, a clustering algorithm divides each data frame into two disjoint subsets: the background and the foreground (the clusters, in our case). With the value of each pixel as input, a distance measure (e.g., Euclidean or Manhattan distance) quantifies the similarity between data points. The partition is optimized iteratively so that data in the same subset are as similar as possible and data in different subsets are as different as possible, finally yielding two sets: the cluster pixels and the background pixels. Clustering algorithms are easy to deploy and execute. However, they are usually sensitive to isolated pixels and do not exploit the spatial information carried by pixel positions in the samples. As a result, their performance depends heavily on the quality of the features.
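The region-growing procedure of the first approach can be sketched as a breadth-first flood fill. This is a minimal sketch, not the algorithm of [14]: it assumes a 2D NumPy frame of background-subtracted pixel values, a hypothetical `fire_threshold` above which a pixel counts as fired, and 8-connected neighborhoods.

```python
from collections import deque

import numpy as np


def grow_cluster(frame: np.ndarray, fire_threshold: float) -> set:
    """Grow one cluster outward from the highest-energy (seed) pixel.

    fire_threshold is a hypothetical parameter: pixels above it count as fired.
    Neighborhoods are 8-connected, which is also an assumption.
    """
    rows, cols = frame.shape
    # The initial seed is the pixel with the highest energy in the frame.
    r0, c0 = np.unravel_index(np.argmax(frame), frame.shape)
    seed = (int(r0), int(c0))
    if frame[seed] <= fire_threshold:
        return set()  # nothing fired, so there is no cluster

    cluster = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        # Look for fired pixels among the 8 neighbors of the current seed.
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (dr, dc) == (0, 0) or not (0 <= nr < rows and 0 <= nc < cols):
                    continue
                if (nr, nc) not in cluster and frame[nr, nc] > fire_threshold:
                    # A fired neighbor joins the cluster and becomes a new seed.
                    cluster.add((nr, nc))
                    queue.append((nr, nc))
    # Expansion stops once no unvisited fired neighbors remain.
    return cluster


frame = np.zeros((6, 6))
frame[1:3, 1:3] = [[5.0, 9.0], [4.0, 6.0]]  # one compact cluster
frame[5, 5] = 3.0                            # isolated fired pixel, not connected
print(sorted(grow_cluster(frame, fire_threshold=1.0)))
# [(1, 1), (1, 2), (2, 1), (2, 2)]
```

Only the cluster containing the global maximum is grown here; handling several clusters per frame would mean repeating the procedure after masking the pixels already assigned, and the choice of `fire_threshold` is exactly the "growth criterion" the text identifies as the key design decision.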
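To make the second approach concrete, the Sobel operator estimates the intensity gradient with two standard 3 × 3 kernels, and edges are where the gradient magnitude is large. The sketch below is a plain NumPy illustration assuming a 2D frame; the one-pixel border is left at zero for simplicity, and no edge threshold is chosen because the text does not give one.

```python
import numpy as np

# Standard Sobel kernels for the horizontal (x) and vertical (y) derivatives.
KX = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
KY = KX.T


def sobel_magnitude(img: np.ndarray) -> np.ndarray:
    """Gradient magnitude sqrt(Gx^2 + Gy^2); the one-pixel border stays 0."""
    img = img.astype(float)
    rows, cols = img.shape
    mag = np.zeros_like(img)
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            patch = img[r - 1:r + 2, c - 1:c + 2]
            gx = np.sum(KX * patch)  # response to the x kernel
            gy = np.sum(KY * patch)  # response to the y kernel
            mag[r, c] = np.hypot(gx, gy)
    return mag


img = np.zeros((5, 5))
img[:, 3:] = 1.0  # a sharp vertical step between columns 2 and 3
print(sobel_magnitude(img))
```

On a sharp step the magnitude peaks at 4 on both sides of the edge; on a blurred edge the same total change is spread over several pixels and the peak is lower, which is why the continuity of detected cluster edges is hard to guarantee.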
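The third approach can be illustrated with k-means (k = 2) applied to pixel values alone. k-means is one common clustering algorithm chosen here purely for illustration; it is an assumption, not necessarily the method of [16]. The distance is the 1D Euclidean distance between pixel values, so pixel positions are ignored, which is the limitation noted above.

```python
import numpy as np


def two_means(values: np.ndarray, n_iter: int = 100) -> np.ndarray:
    """Label each pixel as background (0) or foreground (1) with k-means, k = 2.

    Only pixel values are used; spatial positions play no role.
    """
    x = values.ravel().astype(float)
    # Initialise the two centroids at the smallest and largest values.
    centroids = np.array([x.min(), x.max()])
    labels = np.zeros(x.shape, dtype=int)
    for _ in range(n_iter):
        # Assignment step: each pixel goes to its nearest centroid.
        labels = np.argmin(np.abs(x[:, None] - centroids[None, :]), axis=1)
        # Update step: each centroid moves to the mean of its members.
        new = np.array([x[labels == k].mean() if np.any(labels == k) else centroids[k]
                        for k in range(2)])
        if np.allclose(new, centroids):
            break  # converged
        centroids = new
    return labels.reshape(values.shape)


frame = np.array([[0.1, 0.2, 0.0, 0.1],
                  [0.0, 8.0, 9.5, 0.2],
                  [0.1, 7.5, 0.3, 6.0]])  # 6.0 at (2, 3) is an isolated hot pixel
print(two_means(frame))
```

The isolated pixel at (2, 3) is labeled foreground just like the connected cluster, illustrating why value-only clustering is sensitive to isolated pixels.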

2. Method

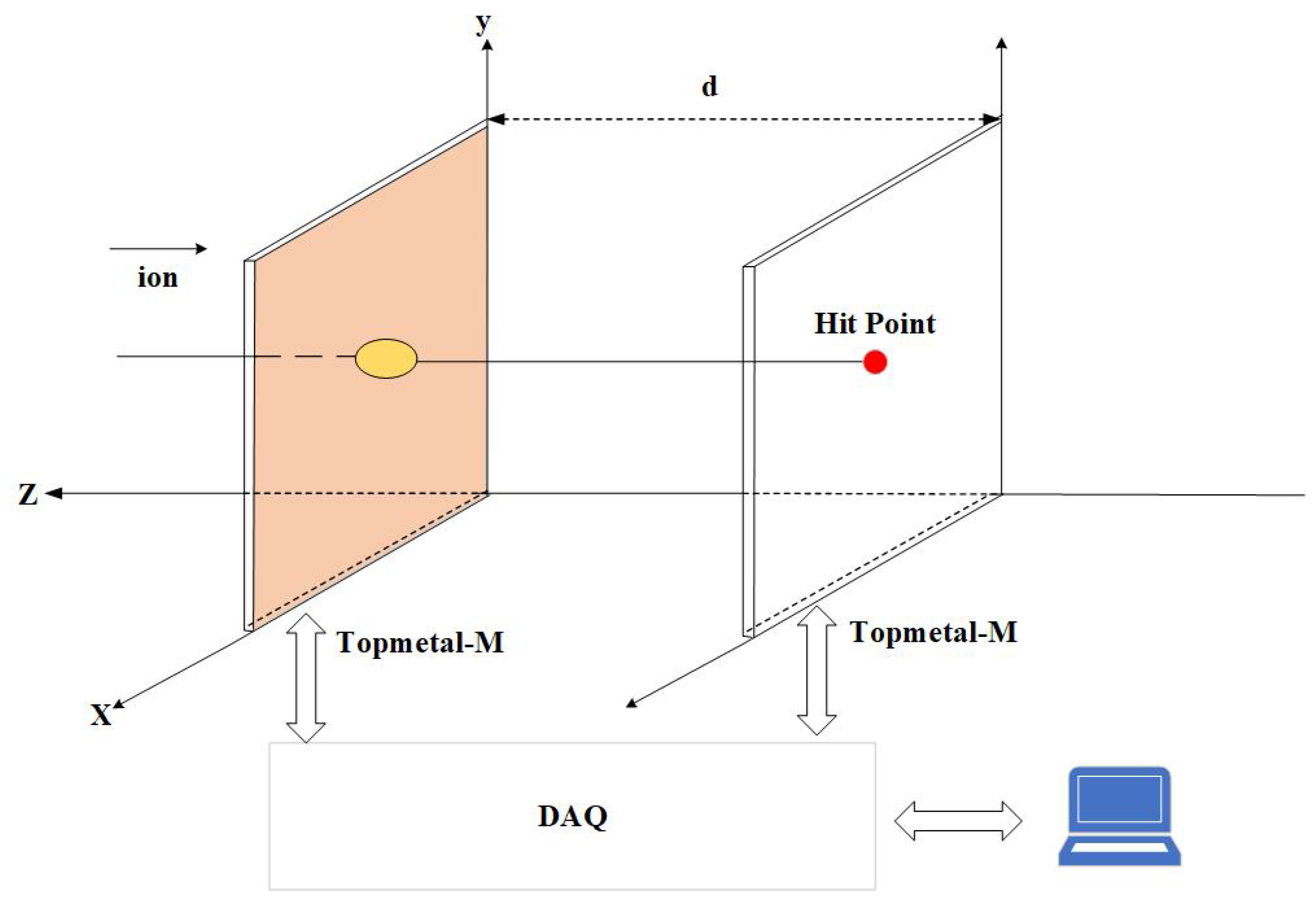

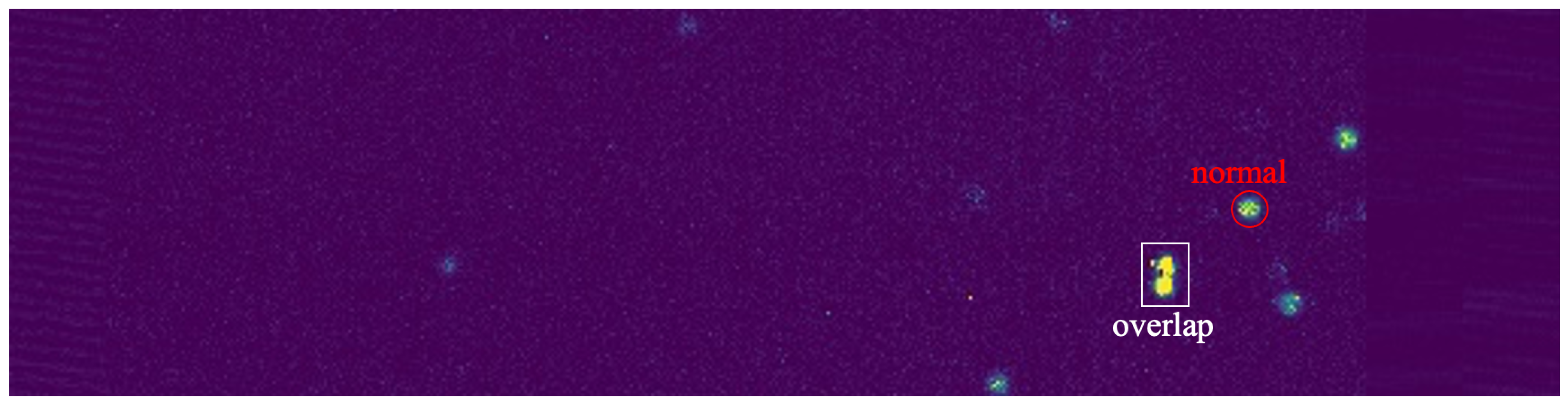

- We performed a beam test of the Topmetal-M [6] silicon pixel sensor at the External Target Facility of the Heavy Ion Research Facility in Lanzhou (HIRFL). In this test, we recorded cluster data induced by heavy ions with an energy of 320 MeV/u at an average beam intensity of several thousand ions/s. After pre-processing, the images containing cluster data formed the dataset for training and validation.

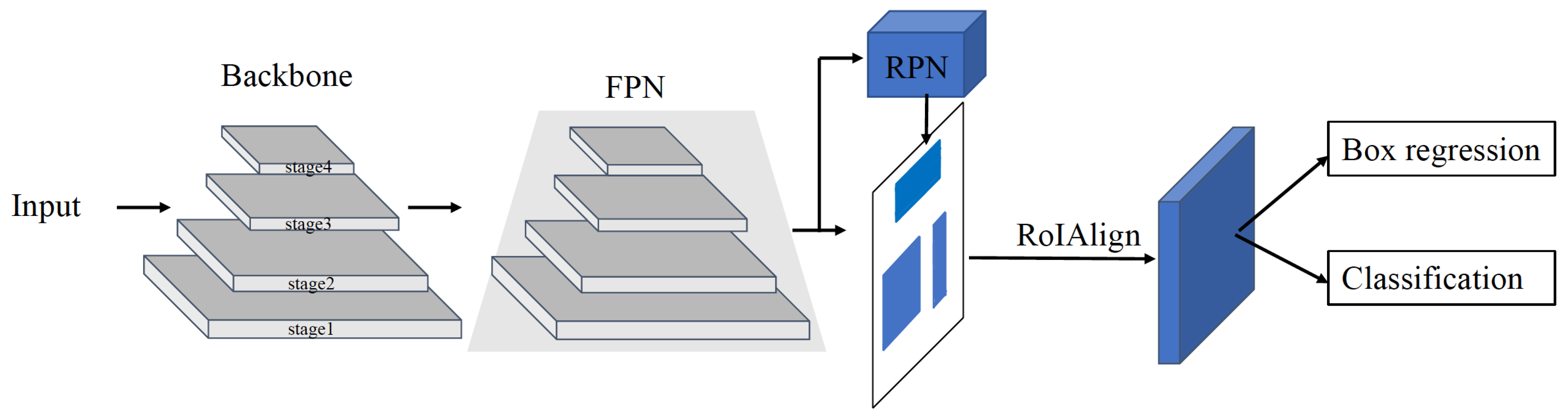

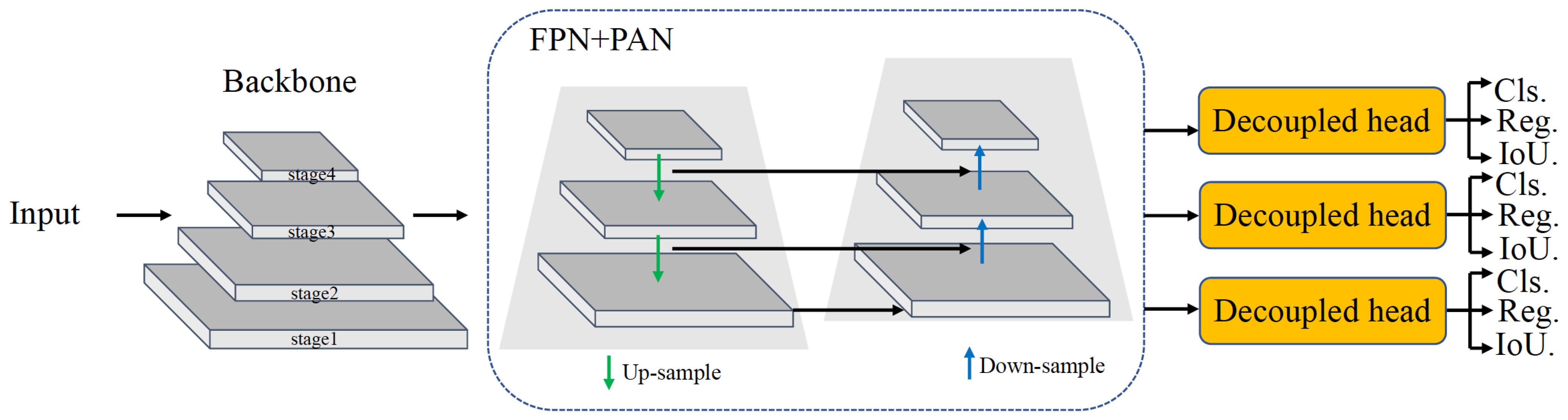

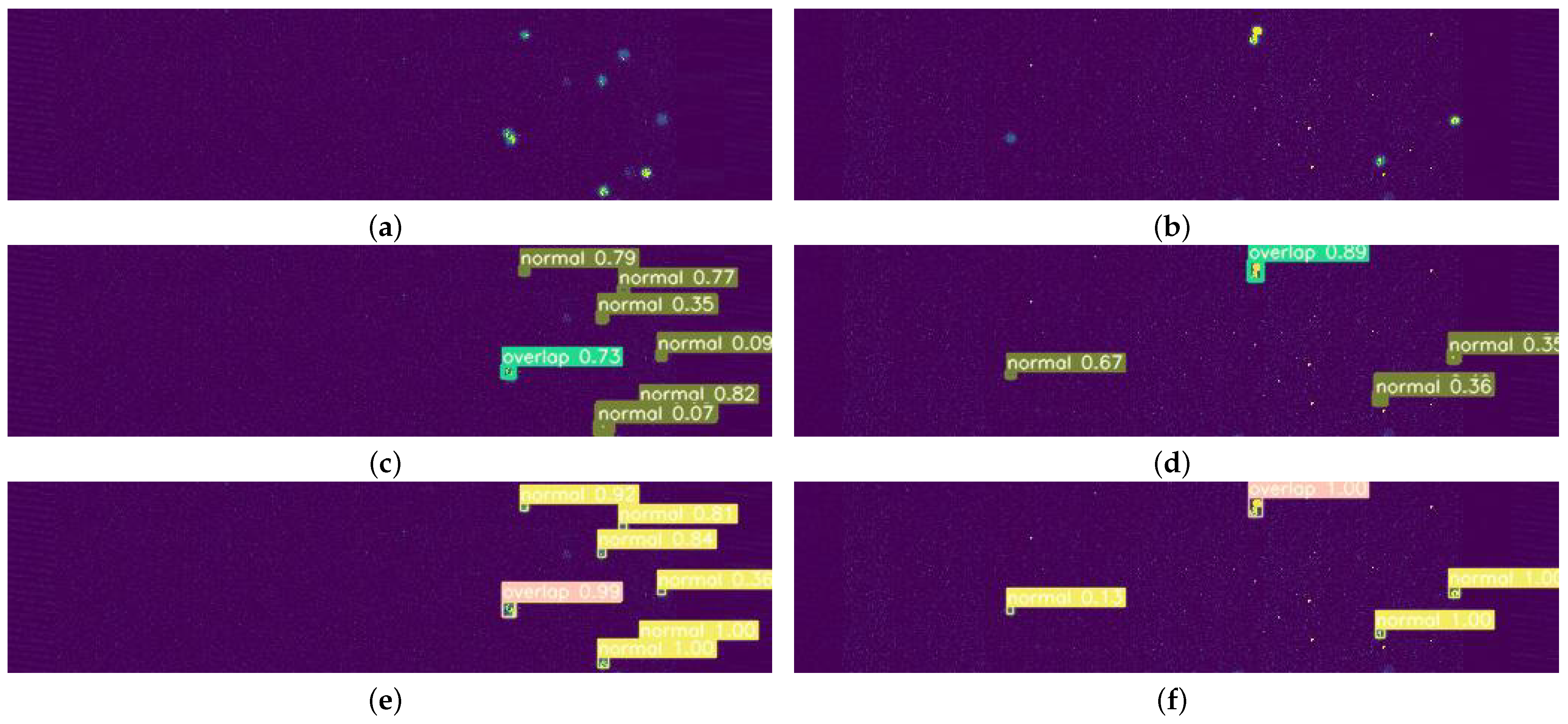

- We constructed both one- and two-stage detection algorithms, as shown in Figures 1 and 2, using Transformer-based (Swin Transformer) and CNN-based (ConvNeXt) backbones to extract features at different stages. Each model was built in four sizes (T, S, B, and L). The two-stage algorithms first generate region proposals from the images and then derive the final object boxes from those proposals, whereas the one-stage algorithms skip the region proposal stage and directly output each object's class probability and position coordinates.

2.1. The Backbone Network and Its Variants

2.2. The Two-Stage Model

2.3. The One-Stage Model

1. The backbone, which extracts features from the entire image, is either Swin Transformer or ConvNeXt. Swin Transformer constructs hierarchical feature maps, achieves computational complexity linear in image size by computing self-attention locally within non-overlapping windows, and introduces shifted windows that create cross-window connections and enhance its modeling power. ConvNeXt also adopts a hierarchical structure and uses larger convolution kernels (7 × 7 depthwise convolutions) to enlarge the receptive field.

2. The neck, which aggregates feature maps from different stages, consists of an FPN and a PAN; the PAN's bottom-up path augmentation enhances the entire feature hierarchy with the accurate localization signals of the lower layers. To produce outputs at three different scales, the FPN is built on the feature maps of backbone stages 2–4.

3. The head, which predicts the classes and bounding boxes of objects, is the YOLOX head [44], which is divided into classification, regression, and IoU branches.
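The window mechanism of the Swin Transformer backbone can be sketched in NumPy. The sketch shows non-overlapping window partitioning, the cyclic shift by half a window used for shifted windows, and the per-layer cost formulas from the Swin Transformer paper (global attention 4hwC² + 2(hw)²C versus windowed attention 4hwC² + 2M²hwC). It is a shape-level illustration only: the attention computation itself and the attention mask that the real implementation applies after the shift are omitted.

```python
from typing import Optional

import numpy as np


def window_partition(x: np.ndarray, m: int) -> np.ndarray:
    """Split an (H, W, C) map into non-overlapping m x m windows -> (num_windows, m*m, C).

    Self-attention would then be computed independently inside each window.
    """
    h, w, c = x.shape
    assert h % m == 0 and w % m == 0, "H and W must be multiples of the window size"
    x = x.reshape(h // m, m, w // m, m, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(-1, m * m, c)


def shifted_window_partition(x: np.ndarray, m: int) -> np.ndarray:
    """Cyclically shift by m // 2 along H and W, then partition (shifted windows)."""
    shifted = np.roll(x, shift=(-(m // 2), -(m // 2)), axis=(0, 1))
    return window_partition(shifted, m)


def attention_cost(h: int, w: int, c: int, m: Optional[int] = None) -> int:
    """Per-layer cost: global MSA if m is None, otherwise M x M window MSA."""
    if m is None:
        return 4 * h * w * c**2 + 2 * (h * w) ** 2 * c  # quadratic in h*w
    return 4 * h * w * c**2 + 2 * m**2 * h * w * c       # linear in h*w


x = np.arange(8 * 8, dtype=float).reshape(8, 8, 1)
print(window_partition(x, 4).shape)          # (4, 16, 1)
print(shifted_window_partition(x, 4).shape)  # (4, 16, 1)
print(attention_cost(56, 56, 96), attention_cost(56, 56, 96, m=7))
```

After the shift, the first window covers rows and columns 2–5 of the original map, so it straddles the boundaries of the unshifted windows; this is how information crosses windows between consecutive layers.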
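The FPN + PAN neck can be pictured as a top-down pass followed by a bottom-up pass over the three backbone scales. The toy sketch below only tracks how maps are combined; it assumes random 1 × 1 projections for the lateral convolutions, nearest-neighbor 2× upsampling, 2 × 2 max pooling as a stand-in for PAN's stride-2 convolutions, a hypothetical common width of 64, and a hypothetical 256 × 256 input. The smoothing convolutions of the real neck are omitted.

```python
import numpy as np

rng = np.random.default_rng(0)


def conv1x1(x: np.ndarray, out_ch: int) -> np.ndarray:
    """1 x 1 convolution on a (C, H, W) map with random weights (illustrative only)."""
    w = rng.standard_normal((out_ch, x.shape[0])) / np.sqrt(x.shape[0])
    return np.einsum("oc,chw->ohw", w, x)


def upsample2(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbor 2x upsampling of a (C, H, W) map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def downsample2(x: np.ndarray) -> np.ndarray:
    """2 x 2 max pooling, standing in for a stride-2 convolution."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))


def fpn_pan(c3, c4, c5, width=64):
    # Lateral 1x1 convolutions bring every stage to a common width.
    l3, l4, l5 = (conv1x1(c, width) for c in (c3, c4, c5))
    # FPN top-down path: upsample coarse, semantically strong maps and add.
    p5 = l5
    p4 = l4 + upsample2(p5)
    p3 = l3 + upsample2(p4)
    # PAN bottom-up path: carry fine, well-localized features back up.
    n3 = p3
    n4 = p4 + downsample2(n3)
    n5 = p5 + downsample2(n4)
    return n3, n4, n5


# Hypothetical stage 2-4 outputs (strides 8, 16, 32) with T-size widths 192/384/768.
c3 = rng.standard_normal((192, 32, 32))
c4 = rng.standard_normal((384, 16, 16))
c5 = rng.standard_normal((768, 8, 8))
for n in fpn_pan(c3, c4, c5):
    print(n.shape)
```

The three outputs keep the spatial sizes of stages 2–4 but share one channel width, which is what lets a single head be applied at all three scales.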
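YOLOX is anchor-free: at every grid cell of each output scale, the regression branch predicts a center offset and a log-scale width and height, the IoU (objectness) branch predicts localization quality, and the classification branch predicts class scores. The sketch below decodes such raw outputs in the way described in the YOLOX paper and its reference code. The channel layout `[dx, dy, log_w, log_h, iou_logit, cls_logits...]` and the single "cluster" class are assumptions for illustration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def decode_yolox(raw: np.ndarray, stride: int) -> tuple:
    """Decode one output scale of a YOLOX-style head.

    raw: (H, W, 5 + num_classes) raw predictions for every grid cell.
    Returns boxes (H*W, 4) as (cx, cy, w, h) in input pixels and scores (H*W, num_classes).
    """
    h, w, _ = raw.shape
    gy, gx = np.mgrid[0:h, 0:w]
    grid = np.stack([gx, gy], axis=-1).reshape(-1, 2)  # (x, y) index of every cell
    raw = raw.reshape(h * w, -1)
    centers = (raw[:, 0:2] + grid) * stride   # offset from the cell, scaled to pixels
    sizes = np.exp(raw[:, 2:4]) * stride      # log-space size, scaled to pixels
    # Final confidence = IoU/objectness probability x class probability.
    scores = sigmoid(raw[:, 4:5]) * sigmoid(raw[:, 5:])
    return np.concatenate([centers, sizes], axis=1), scores


raw = np.zeros((2, 2, 6))  # 2 x 2 grid, one class ("cluster")
raw[1, 0] = [0.5, 0.5, np.log(2.0), np.log(2.0), 5.0, 5.0]
boxes, scores = decode_yolox(raw, stride=8)
print(boxes[2], scores[2])  # cell (row 1, col 0) -> center (4, 12), size 16 x 16
```

Multiplying the IoU-branch probability by the class probability means a box is ranked highly only if it is both confidently classified and well localized; in practice a score threshold and non-maximum suppression would follow.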

3. Dataset Establishment

3.1. Cluster Data Generation in Heavy-Ion Campaigns

3.2. Cluster Data Pre-Processing

1. For F frames, the mean $\mu_{ij}$ and variance $\sigma_{ij}^2$ at each position are determined, where the subscript $ij$ denotes the i-th row and j-th column.

2. According to the criterion, the values at each position of the F frames that lie within the threshold are retained, and values exceeding it are eliminated.

3. The values retained at each position are averaged again; the resulting value at each position is used as the background information and denoted as b.
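The three pre-processing steps can be sketched as per-pixel sigma clipping over a stack of frames. The exact criterion and threshold are not legible in this section, so the sketch assumes a k-sigma rule with a hypothetical default of k = 3; the function name and the synthetic data are likewise illustrative.

```python
import numpy as np


def estimate_background(frames: np.ndarray, k: float = 3.0) -> np.ndarray:
    """Per-position background b from a stack of F frames, shape (F, rows, cols).

    k = 3 is an assumed default for the rejection threshold mu +/- k * sigma.
    """
    if k < 1:
        raise ValueError("k >= 1 guarantees at least one retained value per position")
    # Step 1: mean and standard deviation (square root of the variance) per position.
    mu = frames.mean(axis=0)
    sigma = frames.std(axis=0)
    # Step 2: keep values within mu +/- k * sigma; larger deviations are rejected.
    keep = np.abs(frames - mu) <= k * sigma
    # Step 3: average only the retained values at each position.
    kept_sum = np.where(keep, frames, 0.0).sum(axis=0)
    kept_count = keep.sum(axis=0)
    return kept_sum / kept_count


rng = np.random.default_rng(1)
frames = rng.normal(10.0, 1.0, size=(200, 4, 4))  # synthetic pedestal noise
frames[7, 2, 2] = 500.0                            # one frame in which a hit fired (2, 2)
b = estimate_background(frames)
print(round(float(b[2, 2]), 2))
```

A plain mean at position (2, 2) would be pulled up by roughly 2.5 by the single hit, whereas the clipped mean stays close to the pedestal level; this is why signal-bearing frames can be included in the background estimate.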

4. Experimental Results

4.1. Implementation Details and Performance

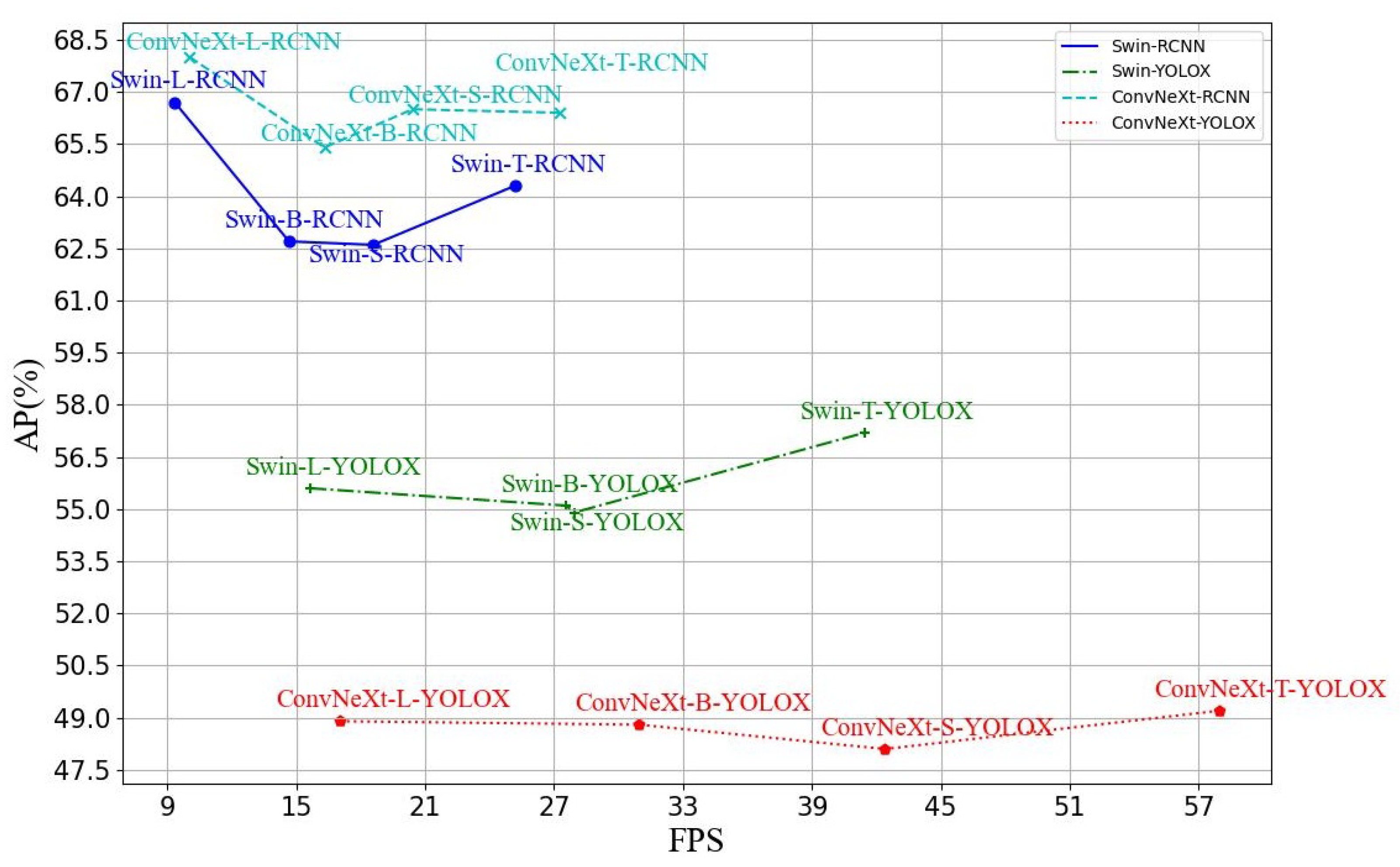

4.2. Comparison between Object Detection Algorithms

4.3. Detection Efficiency and Fake Rate

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Wei, B. Results from Lanzhou K450 heavy ion cyclotron. In Proceedings of the 1989 IEEE Particle Accelerator Conference, Accelerator Science and Technology, Chicago, IL, USA, 20–23 March 1989; pp. 29–33.

2. Yang, J.; Xia, J.; Xiao, G.; Xu, H.; Zhao, H.; Zhou, X.; Ma, X.; He, Y.; Ma, L.; Gao, D.; et al. High Intensity heavy ion Accelerator Facility (HIAF) in China. Nuclear Instruments and Methods in Physics Research Section B: Beam Interactions with Materials and Atoms. In Proceedings of the XVIth International Conference on ElectroMagnetic Isotope Separators and Techniques Related to their Applications, Matsue, Japan, 2–7 December 2012.

3. Šuljić, M. ALPIDE: The Monolithic Active Pixel Sensor for the ALICE ITS upgrade. J. Instrum. 2016, 11, C11025.

4. Valin, I.; Hu-Guo, C.; Baudot, J.; Bertolone, G.; Besson, A.; Colledani, C.; Claus, G.; Dorokhov, A.; Doziere, G.; Dulinski, W.; et al. A reticle size CMOS pixel sensor dedicated to the STAR HFT. J. Instrum. 2012, 7, C01102.

5. Yang, P.; Niu, X.; Zhou, W.; Tian, Y.; Wang, Q.; Huang, J.; Wang, Y.; Fu, F.; Cao, B.; Xie, Z.; et al. Design of Nupix-A1, a Monolithic Active Pixel Sensor for heavy-ion physics. Nucl. Instrum. Methods Phys. Res. Sect. A 2022, 1039, 167019.

6. Ren, W.; Zhou, W.; You, B.; Fang, N.; Wang, Y.; Yang, H.; Zhang, H.; Wang, Y.; Liu, J.; Li, X.; et al. Topmetal-M: A novel pixel sensor for compact tracking applications. Nucl. Instrum. Methods Phys. Res. Sect. A 2020, 981, 164557.

7. Anderle, D.P.; Bertone, V.; Cao, X.; Chang, L.; Chang, N.; Chen, G.; Chen, X.; Chen, Z.; Cui, Z.; Dai, L.; et al. Electron-ion collider in China. Front. Phys. 2021, 16, 64701.

8. Liu, J.; Zhou, Z.; Wang, D.; Zhou, S.Q.; Sun, X.M.; Ren, W.P.; You, B.H.; Gao, C.S.; Xiao, L.; Yang, P.; et al. Prototype of single-event effect localization system with CMOS pixel sensor. Nucl. Sci. Tech. 2022, 33, 136.

9. Zinchenko, A.; Pismennaia, V.; Vodopyanov, A.; Chabratova, G. Development of Algorithms for Cluster Finding and Track Reconstruction in the Forward Muon Spectrometer of ALICE Experiment. 2005. Available online: https://cds.cern.ch/record/865586/files/p276.pdf (accessed on 5 August 2005).

10. ATLAS Collaboration. A neural network clustering algorithm for the ATLAS silicon pixel detector. J. Instrum. 2014, 9, P09009.

11. Baffioni, S.; Charlot, C.; Ferri, F.; Futyan, D.; Meridiani, P.; Puljak, I.; Rovelli, C.; Salerno, R.; Sirois, Y. Electron reconstruction in CMS. Eur. Phys. J. C 2007, 49, 1099–1116.

12. Bassi, G.; Giambastiani, L.; Lazzari, F.; Morello, M.J.; Pajero, T.; Punzi, G. A Real-Time FPGA-Based Cluster Finding Algorithm for LHCb Silicon Pixel Detector. In Proceedings of the EPJ Web of Conferences, Rome, Italy, 13–17 September 2021; EDP Sciences: Les Ulis, France, 2021; Volume 251, p. 04016.

13. Schledt, D.; Kebschull, U.; Blume, C. Developing a cluster-finding algorithm with Vitis HLS for the CBM-TRD. Nucl. Instrum. Methods Phys. Res. Sect. A 2023, 1047, 167797.

14. Wang, Y.; Fu, F.; Wang, J.; Lai, F.; Zhou, W.; Yan, X.; Yang, H.; Zhao, C. Design of a fast-stop centroid finder for Monolithic Active Pixel Sensor. J. Instrum. 2019, 14, C12006.

15. Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, PAMI-8, 679–698.

16. Mikuni, V.; Canelli, F. Unsupervised clustering for collider physics. Phys. Rev. D 2021, 103, 092007.

17. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

18. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

19. Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

20. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

21. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

22. Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

23. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

24. Bochkovskiy, A.; Wang, C.; Liao, H.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

25. Jocher, G.; Stoken, A.; Borovec, J.; NanoCode012; ChristopherSTAN; Changyu, L.; Laughing; Tkianai; Hogan, A.; Lorenzomammana; et al. Ultralytics/yolov5: v3.1—Bug Fixes and Performance Improvements. 2020. Available online: https://zenodo.org/record/4154370#.ZD6sG85BxPY (accessed on 29 October 2020).

26. Krizhevsky, A.; Sutskever, I.; Hinton, G. ImageNet Classification with Deep Convolutional Neural Networks. Neural Inf. Process. Syst. 2012, 25, 84–90.

27. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015, arXiv:1409.1556.

28. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

29. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

30. Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5987–5995.

31. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

32. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

33. Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019.

34. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All You Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.

35. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

36. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

37. Sun, C.; Shrivastava, A.; Singh, S.; Gupta, A. Revisiting Unreasonable Effectiveness of Data in Deep Learning Era. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 843–852.

38. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, BC, Canada, 11–17 October 2021; pp. 9992–10002.

39. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

40. Liu, Z.; Mao, H.; Wu, C.Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A ConvNet for the 2020s. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 11966–11976.

41. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

42. Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully Convolutional One-Stage Object Detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 9626–9635.

43. Wu, Y.; Chen, Y.; Yuan, L.; Liu, Z.; Wang, L.; Li, H.; Fu, Y. Rethinking Classification and Localization for Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10183–10192.

44. Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO Series in 2021. arXiv 2021, arXiv:2107.08430.

45. Kong, J.; Qian, Y.; Zhao, H.; Yang, H.; She, Q.; Yue, K.; Ke, L.; Yan, J.; Su, F.; Wang, S.; et al. Development of the readout electronics for the HIRFL-CSR array detectors. J. Instrum. 2019, 14, P02012.

46. Zhao, C.; Yang, X.; Fu, F.; Wang, Y.; Lai, F.; Tian, Y.; Li, Y.; Wang, X.; Li, R.; Yang, H. Study of the charge sensing node in the MAPS for therapeutic carbon ion beams. J. Instrum. 2019, 14, C05006.

47. Cui, X.; Scogland, T.; de Supinski, B.; Feng, W. Performance Evaluation of the NVIDIA Tesla V100: Block Level Pipelining vs. Kernel Level Pipelining. Dallas: SC18. 2018. Available online: https://sc18.supercomputing.org/proceedings/tech_poster/poster_files/post151s2-file3.pdf (accessed on 12 November 2018).

48. Yu, J.; Jiang, Y.; Wang, Z.; Cao, Z.; Huang, T. UnitBox: An advanced object detection network. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 516–520.

49. Zhang, H.; Cissé, M.; Dauphin, Y.N.; Lopez-Paz, D. mixup: Beyond Empirical Risk Minimization. arXiv 2017, arXiv:1710.09412.

50. Kingma, D.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

51. Uijlings, J.R.; Van De Sande, K.E.; Gevers, T.; Smeulders, A.W. Selective search for object recognition. Int. J. Comput. Vis. 2013, 104, 154–171.

| Backbone | Channels | Blocks |
|---|---|---|
| ConvNeXt-T | [96, 192, 384, 768] | [3, 3, 9, 3] |
| ConvNeXt-S | [96, 192, 384, 768] | [3, 3, 27, 3] |
| ConvNeXt-B | [128, 256, 512, 1024] | [3, 3, 9, 3] |
| ConvNeXt-L | [192, 384, 768, 1536] | [3, 3, 9, 3] |

| Backbone | Channels | Layers | Heads |
|---|---|---|---|
| Swin-T | [96, 192, 384, 768] | [2, 2, 6, 2] | [3, 6, 12, 24] |
| Swin-S | [96, 192, 384, 768] | [2, 2, 18, 2] | [3, 6, 12, 24] |
| Swin-B | [128, 256, 512, 1024] | [2, 2, 18, 2] | [4, 8, 16, 32] |
| Swin-L | [192, 384, 768, 1536] | [2, 2, 18, 2] | [6, 12, 24, 48] |

| Models | AP | AP50 | AP75 | FPS | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|
| ConvNeXt-T-YOLOX | 49.2 | 85.0 | 54.0 | 57.94 | 38.44 | 8.64 |
| Swin-T-YOLOX | 57.2 (+8.0) | 90.0 | 66.7 | 41.47 | 38.12 | 9.93 |
| ConvNeXt-S-YOLOX | 48.1 | 84.0 | 50.4 | 42.40 | 62.34 | 14.55 |
| Swin-S-YOLOX | 54.9 (+6.8) | 87.2 | 64.1 | 27.93 | 61.71 | 17.42 |
| ConvNeXt-B-YOLOX | 48.8 | 83.9 | 53.6 | 30.96 | 110.48 | 25.74 |
| Swin-B-YOLOX | 55.1 (+6.3) | 87.7 | 64.9 | 27.57 | 109.65 | 30.93 |
| ConvNeXt-L-YOLOX | 48.9 | 84.4 | 51.0 | 17.04 | 247.80 | 57.67 |
| Swin-L-YOLOX | 55.6 (+6.7) | 87.1 | 65.5 | 15.64 | 246.56 | 69.52 |
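Assuming the AP columns follow the usual COCO-style convention (AP averaged over IoU thresholds from 0.5 to 0.95, and AP at IoU 0.5 and 0.75), a detection counts as a true positive at threshold t when its IoU with a not-yet-matched ground-truth box is at least t. The sketch below shows IoU for axis-aligned boxes and greedy, score-ordered matching at one threshold; it illustrates the convention and is not the evaluation code used for these tables.

```python
def iou(a, b) -> float:
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match(dets, scores, gts, thr):
    """Greedy matching in descending score order; returns a TP flag per detection."""
    order = sorted(range(len(dets)), key=lambda i: -scores[i])
    used = set()
    tp = [False] * len(dets)
    for i in order:
        # Find the unmatched ground-truth box with the highest IoU.
        best, best_j = 0.0, -1
        for j, g in enumerate(gts):
            if j not in used:
                v = iou(dets[i], g)
                if v > best:
                    best, best_j = v, j
        if best >= thr:
            used.add(best_j)  # each ground-truth box can be matched only once
            tp[i] = True
    return tp


gts = [(0, 0, 10, 10)]
dets = [(0, 0, 10, 8), (1, 1, 11, 11)]
scores = [0.9, 0.8]
print(match(dets, scores, gts, 0.5), match(dets, scores, gts, 0.85))
# [True, False] [False, False]
```

The second detection is a duplicate of an already matched cluster and is counted as a false positive; raising the threshold from 0.5 toward 0.75 and beyond is what makes the stricter AP columns sensitive to box precision.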

| Models | AP | AP50 | AP75 | FPS | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|
| Swin-T-RCNN | 64.3 | 90.8 | 82.6 | 25.18 (−16.29) | 44.72 | 27.65 |
| ConvNeXt-T-RCNN | 66.4 (+2.1) | 91.9 | 83.9 | 27.24 (−30.70) | 45.03 | 26.36 |
| Swin-S-RCNN | 62.6 | 89.4 | 80.1 | 18.58 (−9.35) | 66.02 | 34.76 |
| ConvNeXt-S-RCNN | 66.5 (+3.9) | 93.3 | 82.3 | 20.41 (−21.99) | 66.64 | 31.88 |
| Swin-B-RCNN | 62.7 | 90.1 | 81.1 | 14.66 (−12.91) | 104.03 | 45.85 |
| ConvNeXt-B-RCNN | 65.4 (+2.7) | 90.3 | 82.0 | 16.31 (−14.65) | 104.86 | 40.65 |
| Swin-L-RCNN | 66.7 | 92.8 | 84.8 | 9.34 (−6.30) | 212.50 | 77.46 |
| ConvNeXt-L-RCNN | 68.0 (+1.3) | 93.4 | 86.2 | 10.04 (−7.00) | 213.74 | 65.61 |

| Methods | Fake Clusters |
|---|---|
| SS | 0 |
| Swin-T-YOLOX | 0 |
| ConvNeXt-T-YOLOX | 0 |
| ConvNeXt-L-RCNN | 0 |
| ConvNeXt-T-RCNN | 0 |

| Methods | Detected True Clusters | Detected Clusters | Detection Efficiency (%) | Fake Rate (%) |
|---|---|---|---|---|
| SS | 650 | 672 | 98.49 | 3.23 |
| Swin-T-YOLOX | 648 | 811 | 98.18 | 20.10 |
| ConvNeXt-T-YOLOX | 646 | 863 | 97.88 | 25.14 |
| ConvNeXt-L-RCNN | 641 | 641 | 97.12 | 0 |
| ConvNeXt-T-RCNN | 629 | 629 | 95.30 | 0 |
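The efficiency and fake-rate columns appear consistent with two simple ratios: detection efficiency as detected true clusters over all true clusters, and fake rate as the share of detected clusters that are not true clusters. Both definitions, and the total of 660 true clusters used below, are assumptions back-computed from the table rather than values stated in this section. Under them, every row except SS reproduces the table to two decimals; for SS they give 98.48% and 3.27%, so the definitions used for that row may differ slightly.

```python
def efficiency_and_fake_rate(detected_true: int, detected: int, n_true: int) -> tuple:
    """Return (detection efficiency in %, fake rate in %) under the assumed definitions.

    efficiency = detected true clusters / all true clusters
    fake rate  = (detected clusters - detected true clusters) / detected clusters
    """
    efficiency = 100.0 * detected_true / n_true
    fake_rate = 100.0 * (detected - detected_true) / detected if detected else 0.0
    return efficiency, fake_rate


N_TRUE = 660  # assumption: back-computed from the efficiency column, not stated in the text
for name, dt, d in [("Swin-T-YOLOX", 648, 811), ("ConvNeXt-L-RCNN", 641, 641)]:
    eff, fake = efficiency_and_fake_rate(dt, d, N_TRUE)
    print(f"{name}: {eff:.2f}% efficiency, {fake:.2f}% fake")
# Swin-T-YOLOX: 98.18% efficiency, 20.10% fake
# ConvNeXt-L-RCNN: 97.12% efficiency, 0.00% fake
```

Written this way, the trade-off in the table is explicit: the one-stage models recover slightly more true clusters but add many extra detections to the denominator of the fake rate, while the two-stage models add none.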

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mai, F.; Yang, H.; Wang, D.; Chen, G.; Gao, R.; Chen, X.; Zhao, C. Cluster-Locating Algorithm Based on Deep Learning for Silicon Pixel Sensors. Sensors 2023, 23, 4383. https://doi.org/10.3390/s23094383
