DOPESLAM: High-Precision ROS-Based Semantic 3D SLAM in a Dynamic Environment

Abstract

1. Introduction

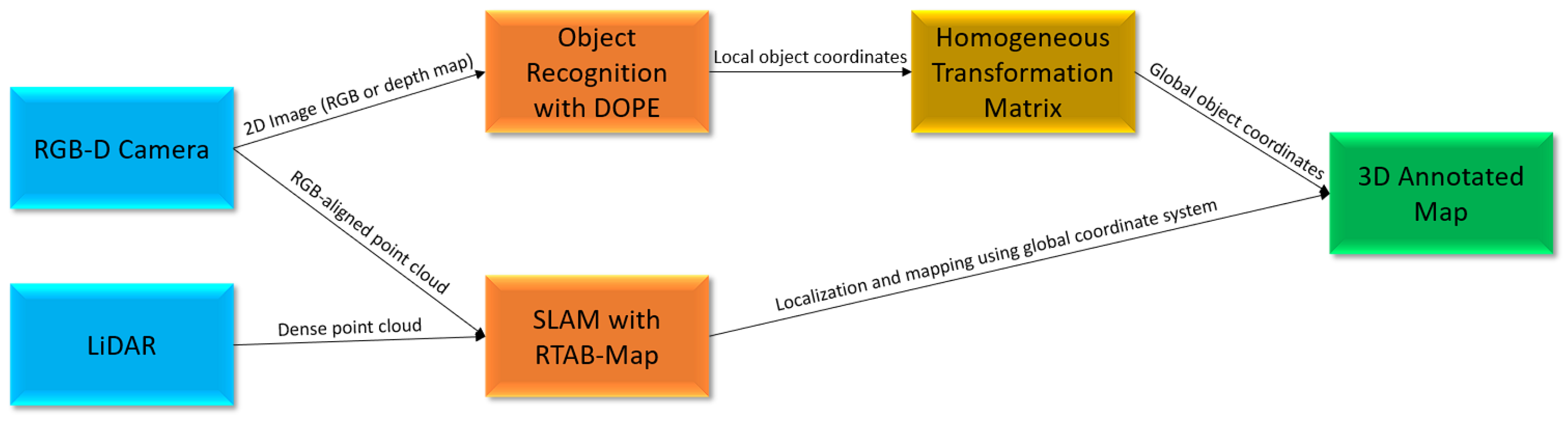

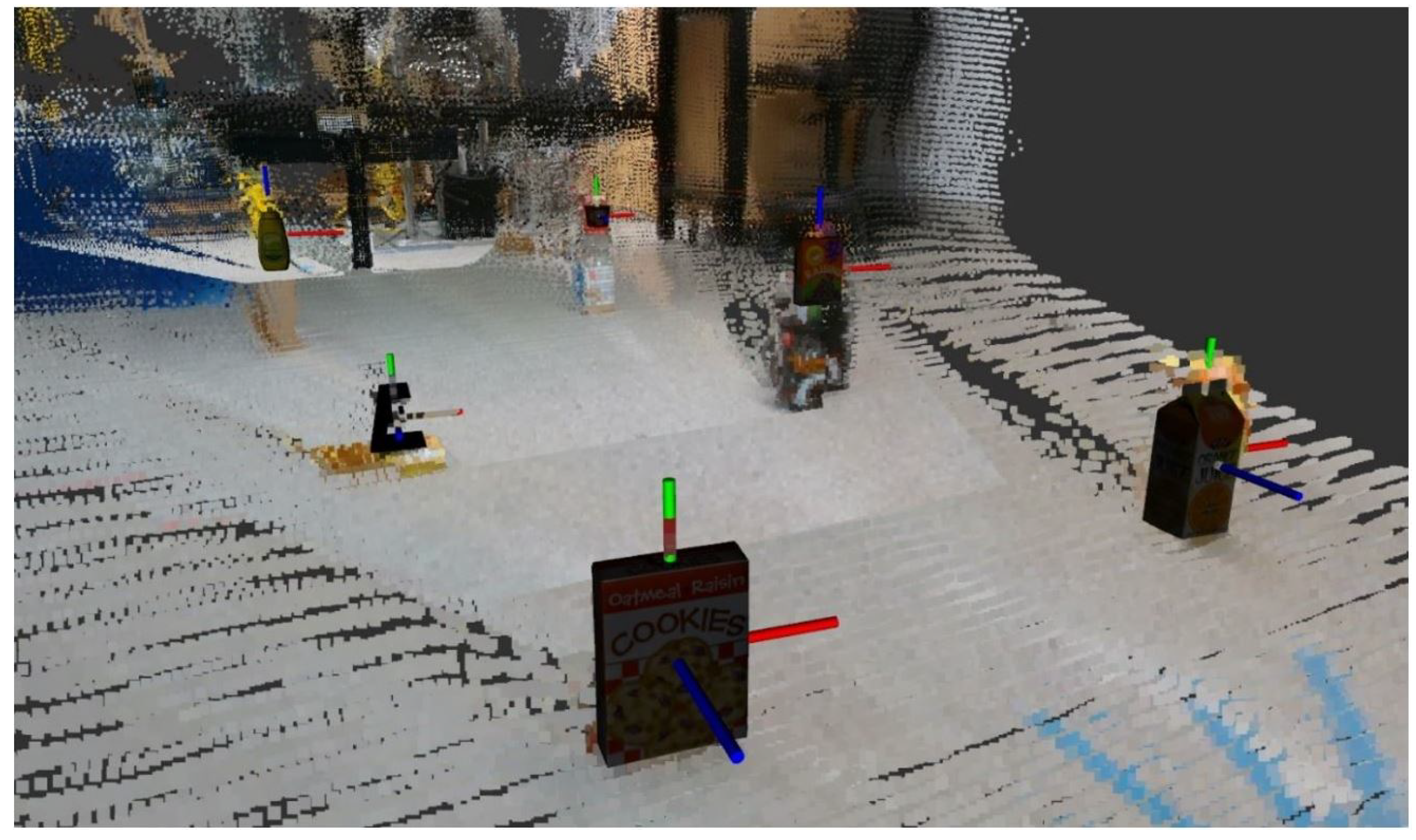

- We propose an integration of Deep Object Pose Estimation (DOPE) and Real-Time Appearance-Based Mapping (RTAB-Map) through loosely coupled parallel fusion to generate and annotate 3D maps.

- We enhance the precision of the system by using DOPE’s belief maps to filter out uncertain keypoints when predicting bounding-box locations.

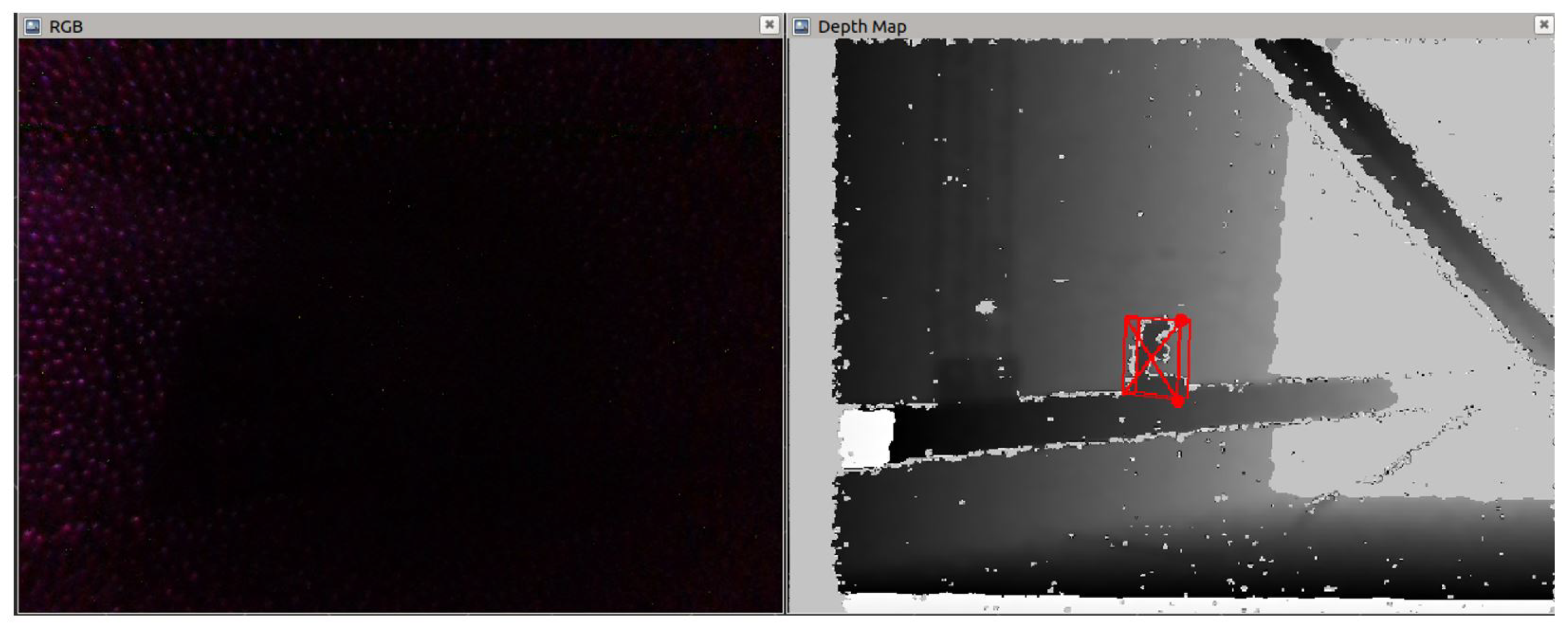

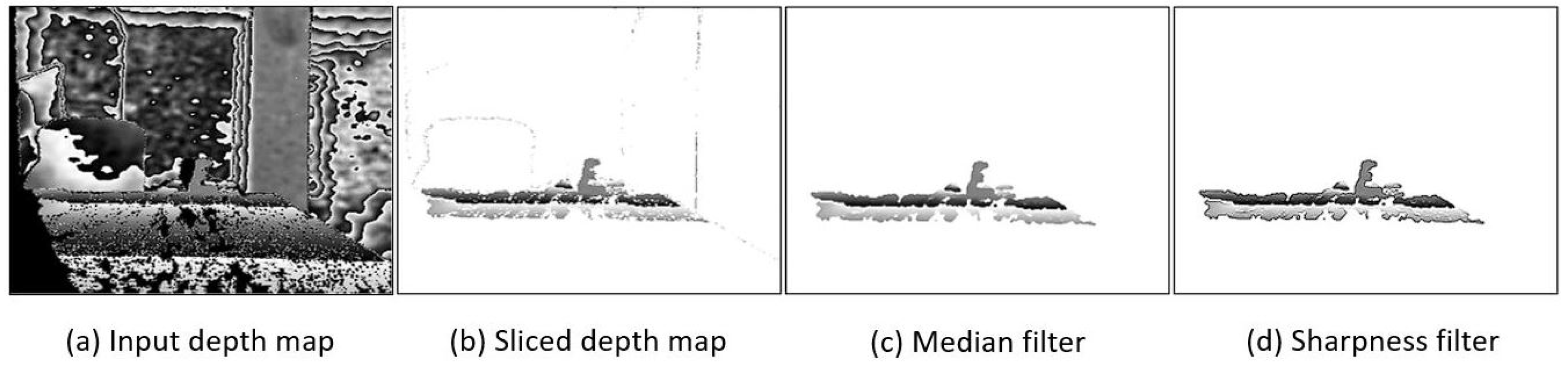

- We propose an enhancement to the original DOPE algorithm that enables shape-based object recognition from depth maps, allowing objects to be identified even in complete darkness.

- Finally, we conduct a range of experiments to evaluate and quantify the overall performance of the proposed solution.
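Under a loosely coupled design, the detector and the SLAM back end run in parallel and exchange only poses. One plausible way to place a detection on the map is to chain the camera pose in the map frame (as a SLAM system such as RTAB-Map maintains) with the object pose in the camera frame (as DOPE outputs). The sketch below is illustrative only: it assumes both poses are already available as rotation/translation pairs for the same timestamp, and the function names and numbers are hypothetical rather than taken from the authors' implementation.

```python
import numpy as np

def pose_to_matrix(rotation: np.ndarray, translation: np.ndarray) -> np.ndarray:
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector translation."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T

def object_in_map(T_map_cam: np.ndarray, T_cam_obj: np.ndarray) -> np.ndarray:
    """Chain the SLAM camera pose with the detector's object pose: T_map_obj = T_map_cam @ T_cam_obj."""
    return T_map_cam @ T_cam_obj

def rot_z(theta: float) -> np.ndarray:
    """Rotation matrix for an angle theta (radians) about the z axis."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

# Hypothetical toy values: the camera sits 2 m along the map x axis, rotated 90 degrees
# about z; the object is detected 1 m along the camera's own x axis.
T_map_cam = pose_to_matrix(rot_z(np.pi / 2), np.array([2.0, 0.0, 0.0]))
T_cam_obj = pose_to_matrix(np.eye(3), np.array([1.0, 0.0, 0.0]))
T_map_obj = object_in_map(T_map_cam, T_cam_obj)
print(np.round(T_map_obj[:3, 3], 3))  # → [2. 1. 0.]
```

Because only the composed pose crosses the boundary between the two components, either one can be replaced or restarted without retraining or modifying the other, which is the practical appeal of loose coupling.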
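The second contribution filters keypoints by their belief-map confidence. DOPE predicts one belief map per projected cuboid vertex plus one for the centroid; a minimal sketch of confidence-based filtering, assuming a single object instance (so only the global maximum of each map is used) and a hypothetical threshold of 0.5, could look as follows. The function name and threshold are illustrative, not values from the paper.

```python
import numpy as np

def filter_keypoints(belief_maps: np.ndarray, threshold: float = 0.5):
    """Keep keypoints whose belief-map peak reaches the threshold.

    belief_maps: array of shape (K, H, W), one map per keypoint.
    threshold: hypothetical confidence cut-off, not a value from the paper.
    Returns a list of (keypoint_index, (row, col), peak_value).
    """
    kept = []
    for k, bmap in enumerate(belief_maps):
        # Single-instance simplification: take only the global maximum of each map.
        row, col = np.unravel_index(int(np.argmax(bmap)), bmap.shape)
        peak = float(bmap[row, col])
        if peak >= threshold:
            kept.append((k, (int(row), int(col)), peak))
    return kept

# Toy input: 9 maps (8 cuboid corners + centroid) filled with low background noise.
rng = np.random.default_rng(0)
maps = rng.uniform(0.0, 0.2, size=(9, 20, 20))
for k in range(6):
    maps[k, 5 + k, 3 + k] = 0.9  # confident peaks for keypoints 0-5
maps[6, 10, 10] = 0.3            # weak peak for keypoint 6, should be discarded

kept = filter_keypoints(maps, threshold=0.5)
print([k for k, _, _ in kept])  # → [0, 1, 2, 3, 4, 5]

# EPnP-style solvers need at least four 2D-3D correspondences to recover a pose.
MIN_POINTS_FOR_PNP = 4
print(len(kept) >= MIN_POINTS_FOR_PNP)  # → True
```

Dropping low-confidence keypoints trades coverage for accuracy: the remaining correspondences are fewer but more reliable, provided enough survive for the pose solver.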
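The third contribution recognizes objects from depth maps rather than color images, which is what allows detection in darkness. How the depth is encoded for the network is not stated here, so the following is only a sketch of one common preprocessing assumption: clip metric depth to a working range, map it to 8-bit intensity (nearer surfaces brighter, with 0 reserved for invalid pixels), and replicate it across three channels so that a network built for RGB input can accept it. The function name and the 0.3 to 3.0 m limits are hypothetical.

```python
import numpy as np

def depth_to_network_input(depth_m: np.ndarray, d_min: float = 0.3, d_max: float = 3.0) -> np.ndarray:
    """Map a metric depth image (H, W) to an (H, W, 3) uint8 image.

    d_min/d_max are hypothetical clipping limits in meters.
    Valid pixels map to 1..255 (255 at d_min, 1 at d_max); invalid pixels (0 or NaN) map to 0.
    """
    depth = np.nan_to_num(depth_m.astype(np.float64), nan=0.0)
    valid = depth > 0
    clipped = np.clip(depth, d_min, d_max)
    scaled = (d_max - clipped) / (d_max - d_min)  # 1.0 at d_min, 0.0 at d_max
    gray = np.where(valid, np.round(1.0 + scaled * 254.0), 0.0).astype(np.uint8)
    # Replicate the single channel three times for an RGB-shaped input.
    return np.repeat(gray[:, :, None], 3, axis=2)

depth = np.array([[0.3, 1.65], [3.0, 0.0]])  # last pixel has no depth reading
img = depth_to_network_input(depth)
print(img[:, :, 0])
```

Because a depth sensor measures geometry rather than reflected visible light, an image produced this way carries shape information independent of scene illumination.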

2. Related Work

3. System Components

3.1. Semantic Annotation

3.2. Simultaneous Localization and Mapping

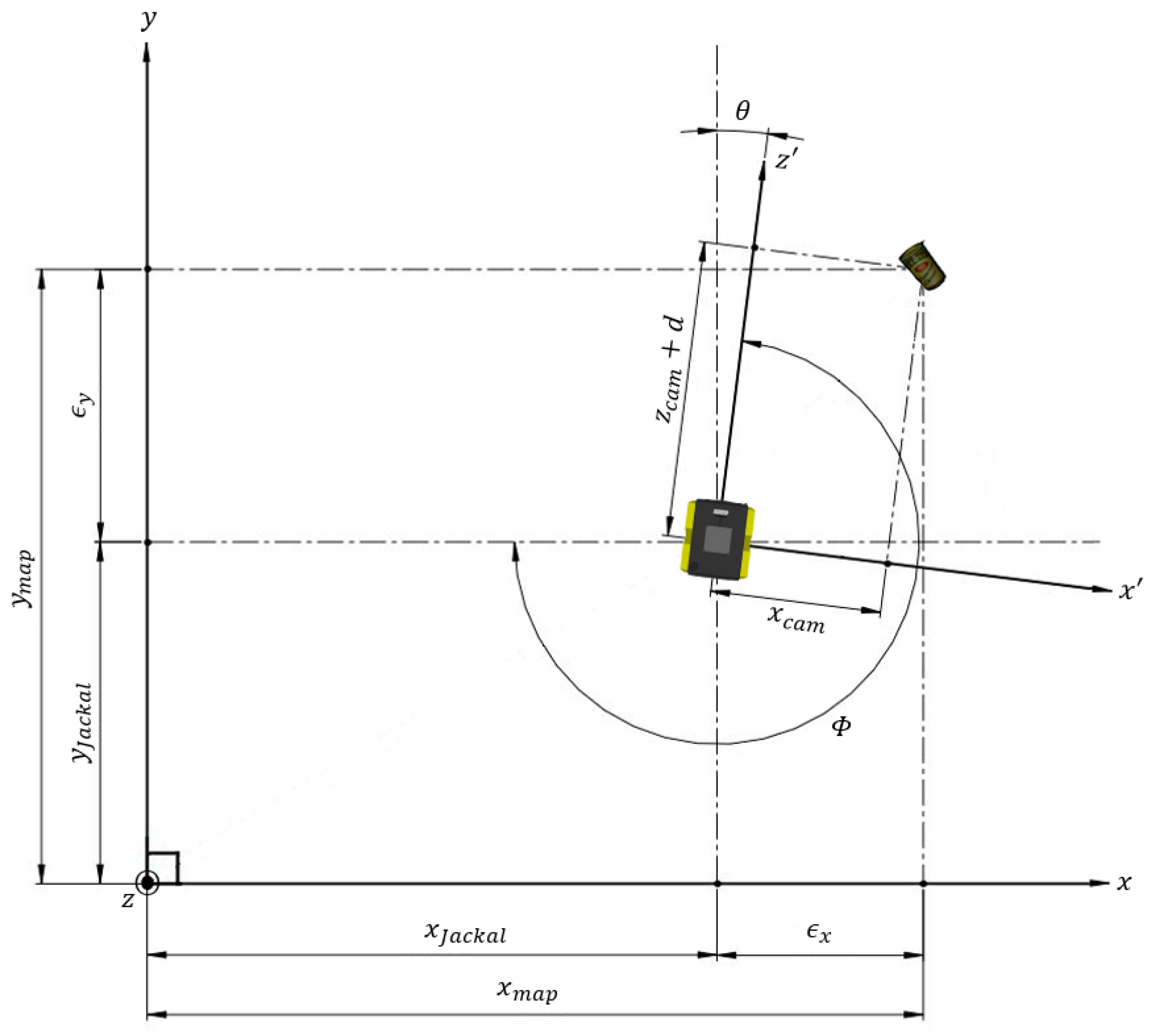

3.3. Semantic SLAM Fusion

3.4. Depth Map Object Recognition

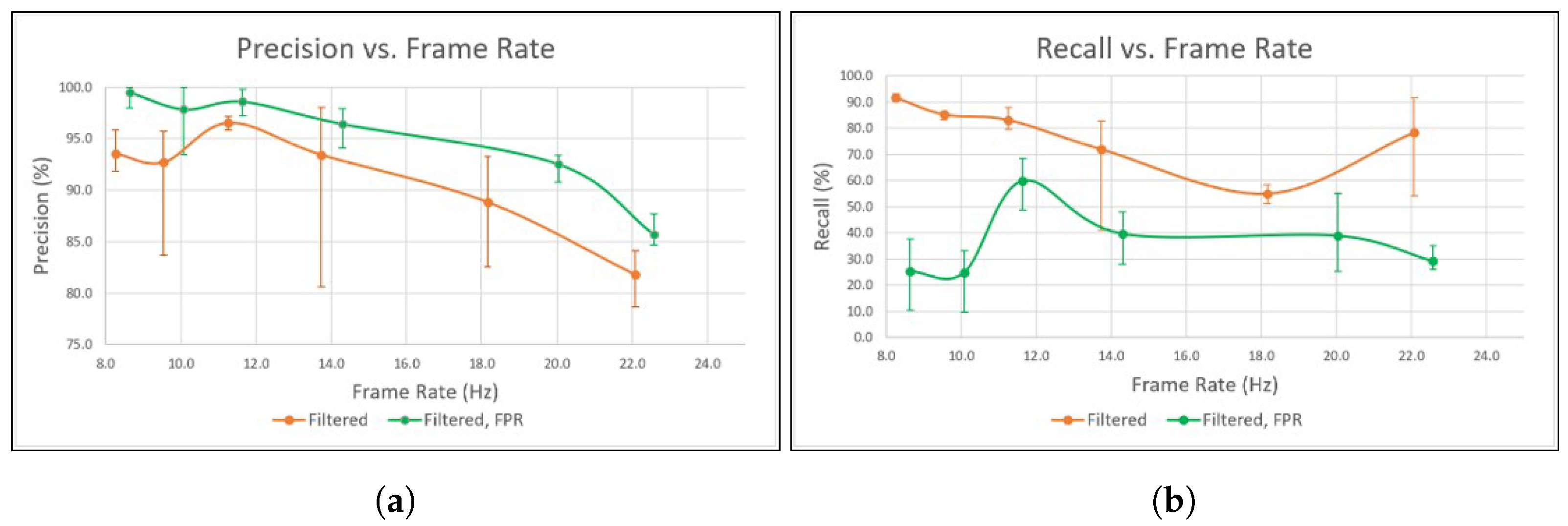

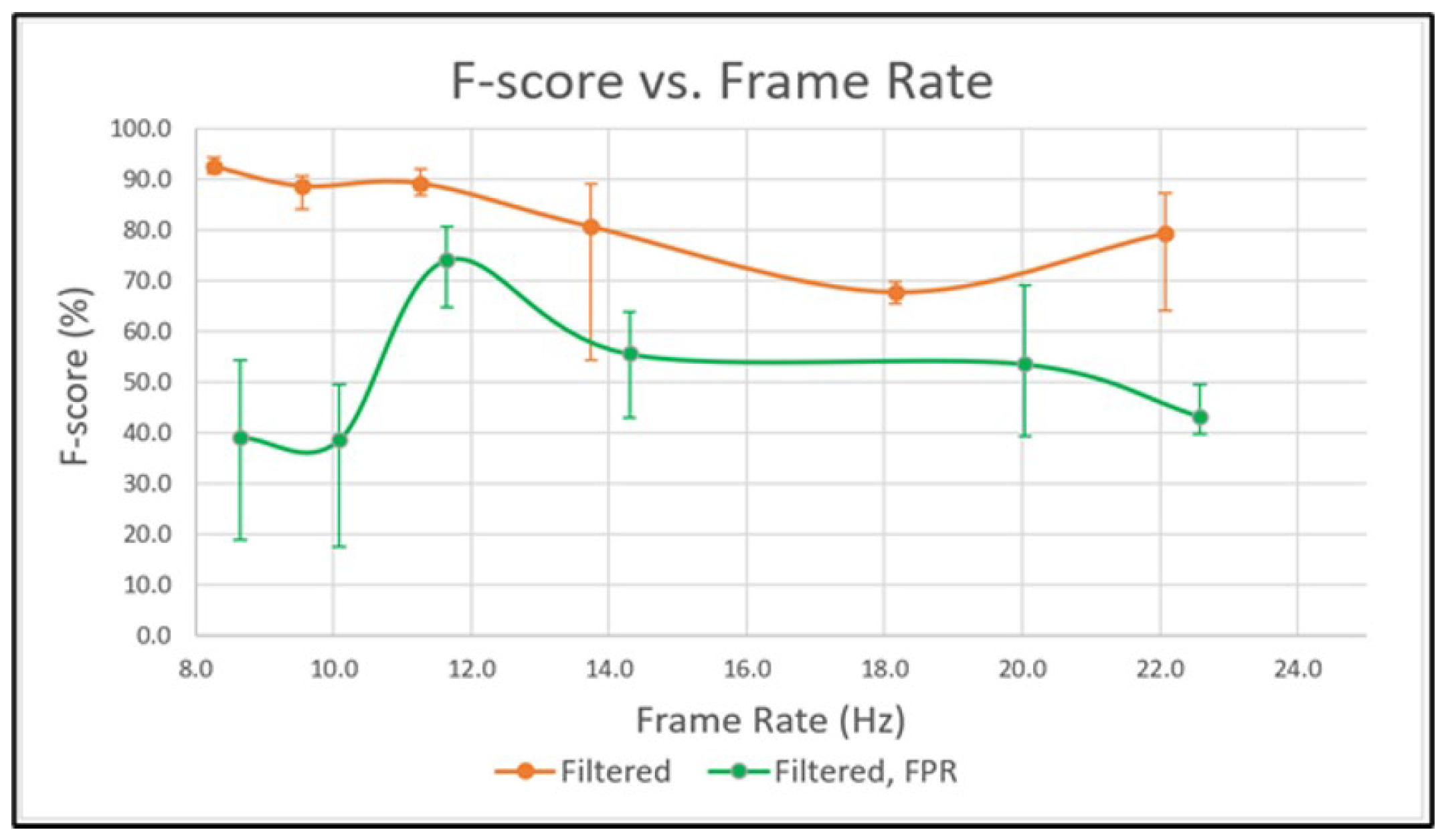

3.5. Precision Enhancement

3.6. Persistent State Observation

4. Methodology

4.1. Hardware

4.2. Depth Estimation and Dataset Size

4.3. Precision Quantification

4.4. Object Localization

5. Results and Discussion

5.1. Depth Estimation and Dataset Size

5.2. Precision Quantification

5.3. Object Localization

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | 50 K | | | | 100 K | | | | 200 K | | | | 300 K | | | | 400 K | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | | | D. | Acc | | | D. | Acc | | | D. | Acc | | | D. | Acc | | | D. | Acc |
| P1 | 0.2 | −4.7 | 4.7 | 93.3 | 0.1 | −4.6 | 4.6 | 93.4 | −0.2 | −6.1 | 6.1 | 91.2 | −0.1 | −5.7 | 5.7 | 91.8 | −0.2 | −5.5 | 5.5 | 92.2 |
| P2 | 1.4 | −4.6 | 4.8 | 95.4 | 1.9 | −3.2 | 3.7 | 96.5 | 0.2 | −1.0 | 1.0 | 99.0 | −0.2 | −2.2 | 2.2 | 98.0 | 0.2 | 0.8 | 0.9 | 99.3 |
| P3 | 0.2 | 0.4 | 0.5 | 99.6 | 0.3 | −0.5 | 0.6 | 99.7 | −1.3 | −5.7 | 5.8 | 95.3 | −1.0 | −5.5 | 5.6 | 95.5 | −1.3 | −7.1 | 7.3 | 94.1 |
| P4 | 0.0 | −4.9 | 4.9 | 96.5 | 0.1 | −4.9 | 4.9 | 96.5 | 0.1 | −5.5 | 5.5 | 96.0 | 0.1 | −4.7 | 4.7 | 96.6 | 0.2 | −4.8 | 4.8 | 96.6 |
| P5 | −0.8 | 6.8 | 6.8 | 95.8 | −1.1 | 3.4 | 3.5 | 97.9 | 0.1 | −1.6 | 1.6 | 99.1 | −0.9 | 3.8 | 3.9 | 97.6 | −2.4 | 13.3 | 13.5 | 91.7 |
| Avg. | 0.2 | −1.4 | 4.3 | 96.1 | 0.3 | −2.0 | 3.5 | 96.8 | −0.2 | −3.9 | 4.0 | 96.1 | −0.4 | −2.9 | 4.4 | 95.9 | −0.7 | −0.7 | 6.4 | 94.8 |
| Std. | 0.7 | 4.6 | 2.1 | 2.1 | 1.0 | 3.1 | 1.6 | 2.1 | 0.6 | 2.2 | 2.3 | 2.9 | 0.4 | 3.6 | 1.3 | 2.2 | 1.0 | 7.5 | 4.1 | 2.8 |
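The headers of the first two columns in each dataset-size group did not survive extraction, but the printed values suggest how the table was built: D. appears to be the Euclidean magnitude of those two columns, and the Avg. and Std. rows appear to be the mean and population standard deviation over P1–P5. The check below recomputes the 50 K group from the printed (already rounded) values; it reflects our reading of the table, not a procedure stated in the source, so agreement is tested only to within rounding.

```python
import math
from statistics import mean, pstdev

# 50 K group, positions P1-P5, copied from the table (units as in the source).
col1 = [0.2, 1.4, 0.2, 0.0, -0.8]
col2 = [-4.7, -4.6, 0.4, -4.9, 6.8]
d_printed = [4.7, 4.8, 0.5, 4.9, 6.8]
avg_printed = {"col1": 0.2, "col2": -1.4, "D": 4.3}
std_printed = {"col1": 0.7, "col2": 4.6, "D": 2.1}

TOL = 0.1  # inputs are already rounded to one decimal place

# D. appears to be the Euclidean magnitude of the first two columns.
d_recomputed = [math.hypot(a, b) for a, b in zip(col1, col2)]
assert all(abs(r - p) <= TOL for r, p in zip(d_recomputed, d_printed))

# Avg. and Std. appear to be the mean and *population* standard deviation over P1-P5.
columns = {"col1": col1, "col2": col2, "D": d_printed}
for key, values in columns.items():
    assert abs(mean(values) - avg_printed[key]) <= TOL
    assert abs(pstdev(values) - std_printed[key]) <= TOL
print("50 K summary rows reproduced within rounding")
```

The second column distinguishes the two standard-deviation conventions: its population value (about 4.56) matches the printed 4.6, whereas the sample value (about 5.10) would not.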

| | Original | | | | | | | FPR | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Orange Juice | Yogurt | Mustard | Raisins | Cookies | Custom | Average | Orange Juice | Yogurt | Mustard | Raisins | Cookies | Custom | Average |
| | 15.1 | 53.2 | 22.4 | 66.5 | 87.3 | 141.5 | 64.3 | 9.8 | 24.4 | 15.3 | 7.1 | 87.3 | 19.2 | 27.2 |
| | 7.4 | 63.7 | 4.0 | 73.1 | 7.6 | 79.2 | 39.2 | 5.2 | 16.4 | 0.4 | 1.3 | 7.6 | 4.1 | 5.8 |
| | 9.6 | 8.8 | 10.6 | 6.8 | 2.1 | 26.8 | 10.8 | 5.4 | 9.7 | 9.4 | 10.6 | 2.1 | 8.3 | 7.6 |
| l | 19.4 | 83.5 | 25.1 | 99.1 | 87.6 | 164.4 | 79.8 | 12.4 | 31.0 | 18.0 | 12.8 | 87.6 | 21.3 | 30.5 |
| | N | Y | N | Y | Y | Y | | N | N | N | N | Y | N | |

| | Best | | | | | | | Worst | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Orange Juice | Yogurt | Mustard | Raisins | Cookies | Custom | Average | Orange Juice | Yogurt | Mustard | Raisins | Cookies | Custom | Average |
| | 4.0 | 9.8 | 15.1 | 4.2 | 2.7 | 5.8 | 6.9 | 111.6 | 214.5 | 214.3 | 171.1 | 87.3 | 141.5 | 156.7 |
| | 1.2 | 11.8 | 0.3 | 1.9 | 3.8 | 1.0 | 3.3 | 101.5 | 161.8 | 56.7 | 129.3 | 7.6 | 79.2 | 89.4 |
| | 6.1 | 5.9 | 9.5 | 9.9 | 9.2 | 9.3 | 8.3 | 6.7 | 14.4 | 9.1 | 6.4 | 2.1 | 26.8 | 10.9 |
| l | 7.4 | 16.4 | 17.8 | 10.9 | 10.3 | 11.0 | 12.3 | 151.0 | 269.1 | 221.9 | 214.5 | 87.6 | 164.4 | 184.4 |
| | N | N | N | N | N | N | | Y | Y | Y | Y | Y | Y | |
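In the precision tables the three component rows are unlabeled after extraction, but the l row is consistent with the Euclidean norm of those three components, and each Average entry with the mean over the six objects. The sketch below checks this reading on the "Original" group using the printed values; the tolerance allows for one-decimal rounding, and the variable names are ours.

```python
import math
from statistics import mean

# "Original" group, six objects, copied from the table: three component rows and the l row.
objects = ["Orange Juice", "Yogurt", "Mustard", "Raisins", "Cookies", "Custom"]
c1 = [15.1, 53.2, 22.4, 66.5, 87.3, 141.5]
c2 = [7.4, 63.7, 4.0, 73.1, 7.6, 79.2]
c3 = [9.6, 8.8, 10.6, 6.8, 2.1, 26.8]
l_printed = [19.4, 83.5, 25.1, 99.1, 87.6, 164.4]

TOL = 0.1  # printed values are rounded to one decimal place

# l appears to be the Euclidean norm of the three components
# (math.hypot accepts any number of arguments from Python 3.8 on).
l_recomputed = [math.hypot(a, b, c) for a, b, c in zip(c1, c2, c3)]
for name, lr, lp in zip(objects, l_recomputed, l_printed):
    print(f"{name:12s} recomputed {lr:6.1f}  printed {lp:6.1f}")

# The Average column averages the per-object values, including l itself.
avg_l = mean(l_printed)
norm_of_avgs = math.hypot(mean(c1), mean(c2), mean(c3))
print(f"mean of l = {avg_l:.2f}, norm of mean components = {norm_of_avgs:.2f}")
```

The last line is a reminder for reading the Average column: the mean of the per-object errors (about 79.8 for the Original group) is larger than the norm of the averaged components (about 76), because averaging before taking the norm lets components of different objects partially offset one another.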

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Roch, J.; Fayyad, J.; Najjaran, H. DOPESLAM: High-Precision ROS-Based Semantic 3D SLAM in a Dynamic Environment. Sensors 2023, 23, 4364. https://doi.org/10.3390/s23094364
