Graph Representation Learning and Its Applications: A Survey

Abstract

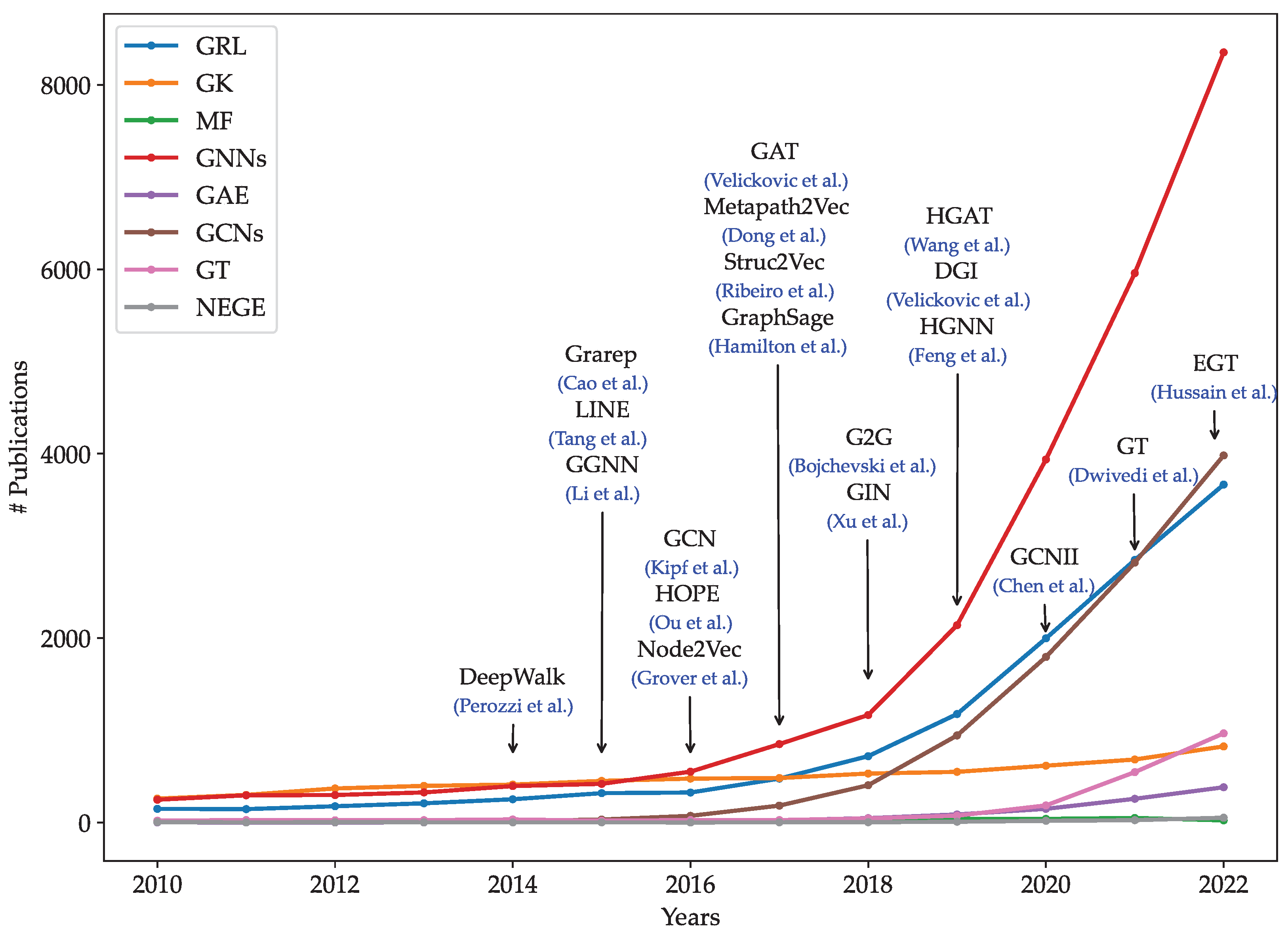

1. Introduction

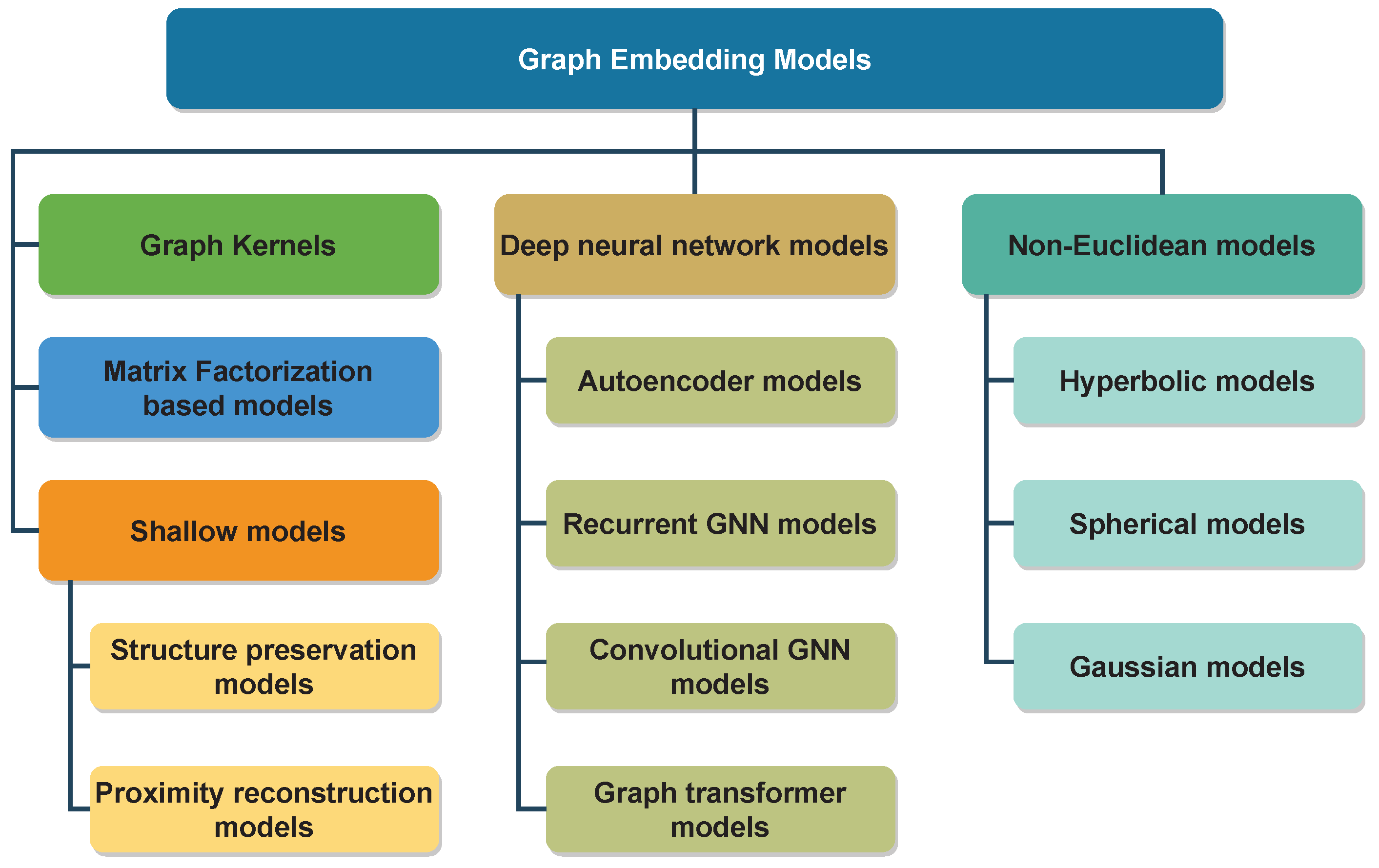

- This paper presents a taxonomy of graph embedding models based on various algorithms and strategies.

- We provide an in-depth analysis of graph embedding models across different types of graphs, ranging from static to dynamic and from homogeneous to heterogeneous graphs.

- This paper reviews graph transformer models, which in recent years have achieved remarkable results in capturing graph structure.

- We cover applications of graph representation learning in various areas, from constructing graphs to applying models in specific tasks.

- We discuss the challenges and future directions of existing graph embedding models in detail.

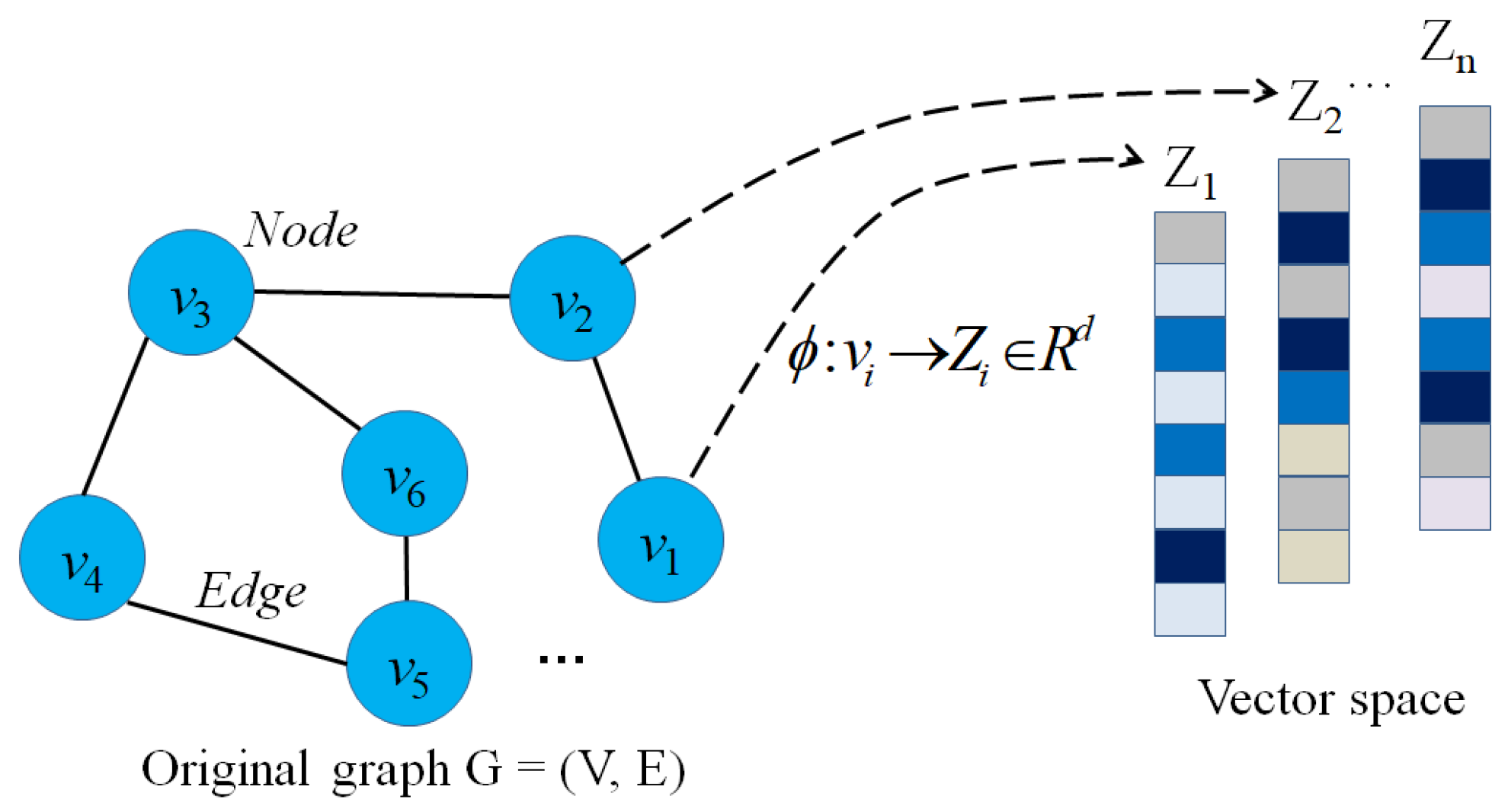

2. Problem Description

- Directed graph: When $A_{ij} = A_{ji}$ for any pair of nodes $v_i, v_j \in V$, where $A$ is the adjacency matrix, the graph G is called an undirected graph; otherwise, G is a directed graph.

- Weighted graph: A weighted graph is a graph in which each edge is assigned a specific weight value. Therefore, the adjacency matrix could be presented as $A_{ij} = w_{ij}$, where $w_{ij}$ is the weight of the edge $e_{ij}$.

- Signed graph: When $A_{ij} \in \{-1, 1\}$ for every edge $e_{ij}$, the graph G is called a signed graph. G has all positive signed edges when $A_{ij} = 1$ for every edge, and all negative signed edges when $A_{ij} = -1$ for every edge.

- Attributed graph: A graph $G = (V, E, X)$ is an attributed graph, where V and E are the sets of nodes and edges, respectively, and X is the matrix of node attributes with size $n \times d$, with n the number of nodes and d the attribute dimension. X could also be the matrix of edge attributes with size $m \times d$, where m is the number of edges.

- Hypergraph: A hypergraph G could be represented as $G = (V, E, W)$, where V denotes the set of nodes and E denotes a set of hyperedges. Each hyperedge $e \in E$ can connect multiple nodes and is assigned a weight $w(e) \in W$. The hypergraph G could be represented by an incidence matrix H of size $|V| \times |E|$ with entries $h(v, e) = 1$ if $v \in e$, and $h(v, e) = 0$ otherwise.

- Heterogeneous graph: A heterogeneous graph is defined as $G = (V, E, \phi, \rho)$, where V and E are the sets of nodes and edges, respectively, $\phi: V \to T_v$ and $\rho: E \to T_e$ are mapping functions, $T_v$ and $T_e$ describe the set of node types and edge types, respectively, and $|T_v| + |T_e| > 2$ is the sum of the number of node types and edge types.
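The definitions above can be made concrete with a small sketch. It assumes a dense adjacency-matrix encoding in plain Python lists; the helper names `is_undirected`, `is_signed`, and `incidence_matrix` are illustrative and do not come from the survey.

```python
from typing import List, Sequence, Set

Matrix = List[List[float]]


def is_undirected(adj: Matrix) -> bool:
    """A graph is undirected when its adjacency matrix is symmetric."""
    n = len(adj)
    return all(adj[i][j] == adj[j][i] for i in range(n) for j in range(n))


def is_signed(adj: Matrix) -> bool:
    """A signed graph only uses edge values -1 and +1 (0 means no edge)."""
    return all(value in (-1, 0, 1) for row in adj for value in row)


def incidence_matrix(num_nodes: int, hyperedges: Sequence[Set[int]]) -> List[List[int]]:
    """Build H with H[v][e] = 1 if node v belongs to hyperedge e, else 0."""
    return [[1 if v in edge else 0 for edge in hyperedges] for v in range(num_nodes)]


# Weighted, directed toy graph: edge 0 -> 1 has weight 2.5, edge 1 -> 2 has weight 1.0.
weighted_directed = [
    [0.0, 2.5, 0.0],
    [0.0, 0.0, 1.0],
    [0.0, 0.0, 0.0],
]

# Undirected signed toy graph: a positive edge (0, 1) and a negative edge (1, 2).
signed_undirected = [
    [0, 1, 0],
    [1, 0, -1],
    [0, -1, 0],
]

# Hypergraph on 4 nodes with hyperedges {0, 1, 2} and {2, 3}.
H = incidence_matrix(4, [{0, 1, 2}, {2, 3}])
```

In this encoding, the directed/undirected distinction is a symmetry check on the matrix, and a hyperedge is a column of `H` with more than two non-zero entries.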

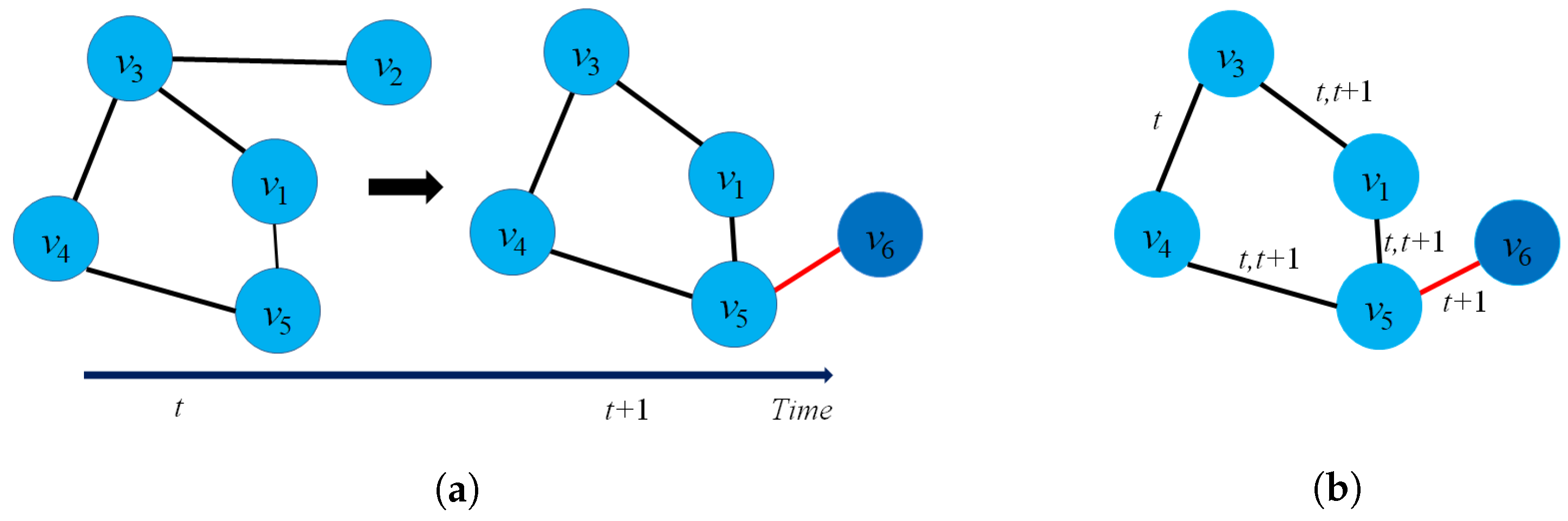

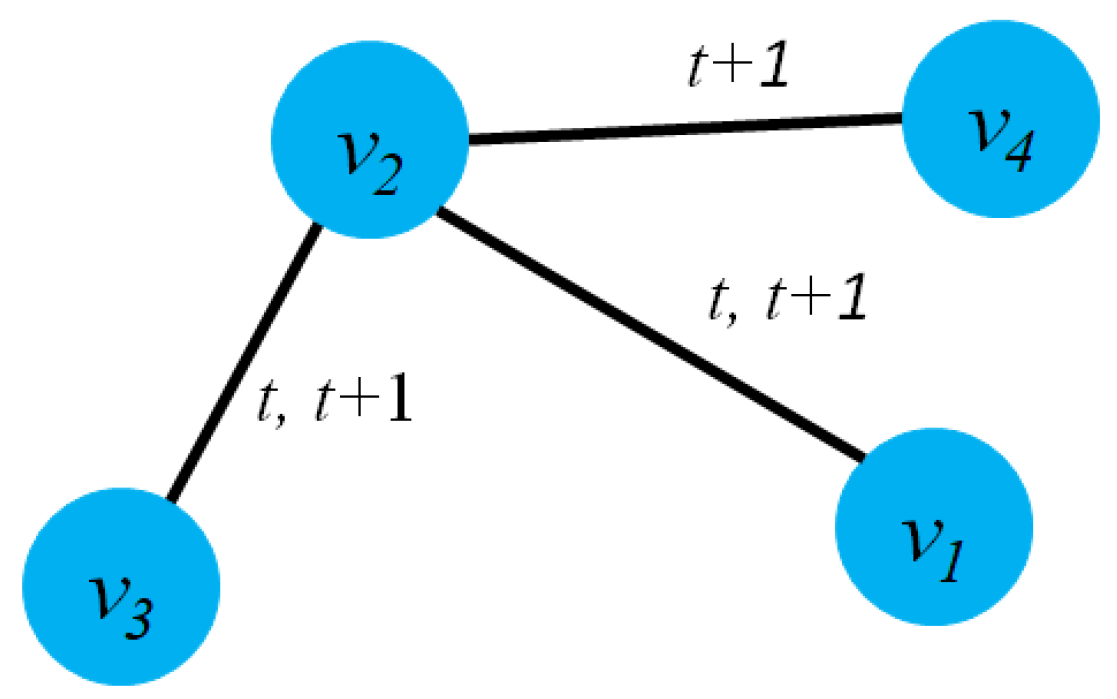

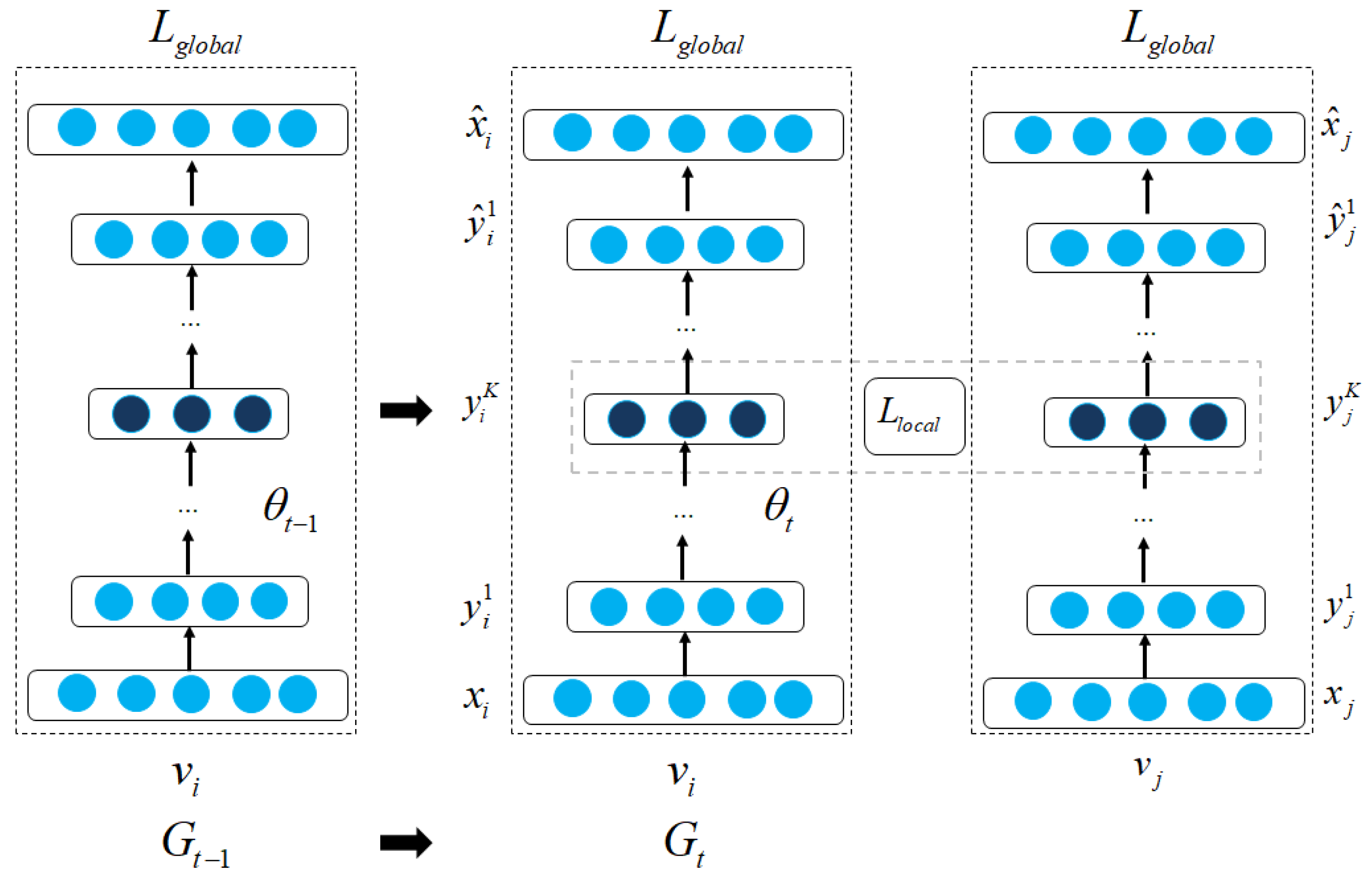

- Temporal dynamic graph embedding: A temporal dynamic graph embedding is a projection function that maps a collection of graphs, observed during a time interval, into the latent space.

- Topological dynamic graph embedding: A topological dynamic graph embedding for a graph is a mapping function from the nodes of the graph to vectors in the latent space.

3. Graph Representation Learning Models

3.1. Graph Kernels

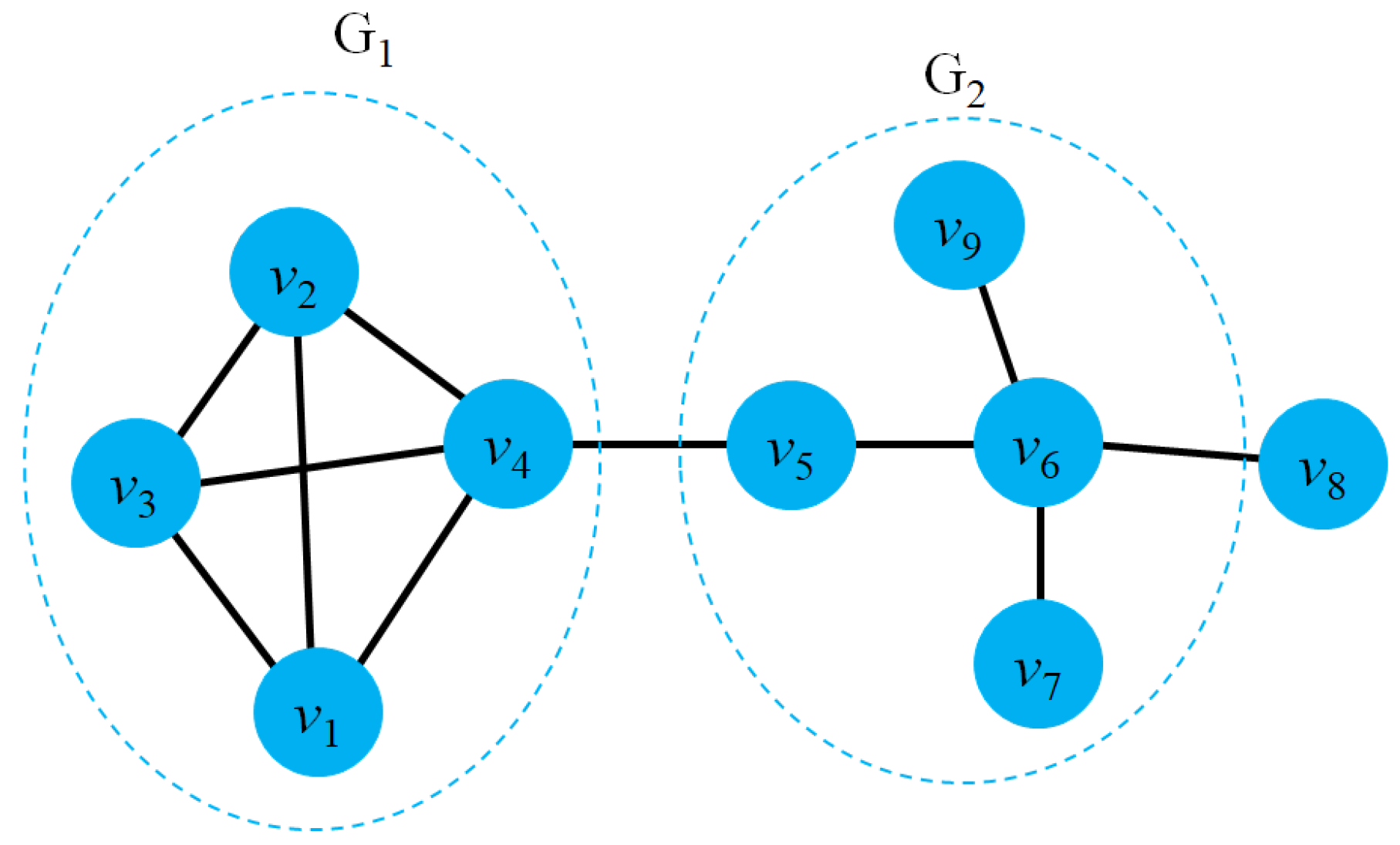

- Kernels for graphs: Kernels for graphs aim to measure the similarity between graphs. The similarity between two graphs (isomorphism) could be explained as follows: Given two undirected graphs $G_1 = (V_1, E_1)$ and $G_2 = (V_2, E_2)$, $G_1$ and $G_2$ are isomorphic if there exists a bijective mapping function $\phi: V_1 \to V_2$ such that, for all $a, b \in V_1$, a and b are adjacent in $G_1$ if and only if $\phi(a)$ and $\phi(b)$ are adjacent in $G_2$.

- Kernels on graphs: To embed nodes in graphs, kernel methods find a function that maps pairs of nodes to a latent space using particular similarity measures. Formally, graph kernels could be defined as: Given a graph $G = (V, E)$, a function $K: V \times V \to \mathbb{R}$ is a kernel on G if there is a mapping function $\phi: V \to \mathcal{H}$ such that $K(v_i, v_j) = \langle \phi(v_i), \phi(v_j) \rangle$ for any node pair $(v_i, v_j)$.

- Coverage: Graph kernels are among the most useful functions for measuring the similarity between graph entities, and several strategies exist for finding a kernel on graphs. They could be seen as a generalization of traditional statistical methods [116].

- Efficiency: Several kernel tricks have been proposed to reduce the computational cost of kernel methods on graphs [117]. Kernel tricks could reduce the number of spatial dimensions and the computational complexity on substructures while still providing efficient kernels.

- Missing entities: Most kernel models cannot learn node embeddings for new nodes. In the real world, graphs are dynamic, and their entities could evolve. Therefore, graph kernels must re-learn the graph every time a new node is added, which is time-consuming and difficult to apply in practice.

- Dealing with weights: Most graph kernel models do not consider weighted edges, which could lead to structural information loss and reduce the quality of graph representations in the latent space.

- Computational complexity: Graph kernels belong to an NP-hard class of problems [109]. Although several kernel-based models aim to reduce the computational time by considering the distribution of substructures, this may increase the complexity and reduce the ability to capture the global structure.
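As an illustration of a kernel for graphs, the sketch below implements a simplified Weisfeiler-Lehman subtree kernel for unlabeled graphs. The survey does not prescribe this particular kernel; the adjacency-list encoding and the function names `wl_features` and `wl_kernel` are assumptions made for the example.

```python
from collections import Counter
from typing import Dict, List

Graph = Dict[int, List[int]]  # adjacency list of an undirected, unlabeled graph


def wl_features(graph: Graph, iterations: int = 2) -> Counter:
    """Count the node labels produced by Weisfeiler-Lehman relabeling."""
    labels = {v: "0" for v in graph}  # unlabeled graph: identical start labels
    features = Counter(labels.values())
    for _ in range(iterations):
        # New label = own label plus the sorted multiset of neighbor labels.
        labels = {
            v: (labels[v], tuple(sorted(labels[u] for u in graph[v])))
            for v in graph
        }
        features.update(labels.values())
    return features


def wl_kernel(g1: Graph, g2: Graph, iterations: int = 2) -> int:
    """Inner product of the two label-count vectors."""
    f1, f2 = wl_features(g1, iterations), wl_features(g2, iterations)
    return sum(count * f2[label] for label, count in f1.items())


path = {0: [1], 1: [0, 2], 2: [1]}
relabeled_path = {0: [2], 2: [0, 1], 1: [2]}  # same path, different node ids
triangle = {0: [1, 2], 1: [0, 2], 2: [0, 1]}
```

Isomorphic graphs always produce identical label counts, so the kernel cannot tell them apart. The converse does not hold, which is why this kernel is only a tractable approximation of an isomorphism test.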

3.2. Matrix Factorization-Based Models

- The Laplacian eigenmaps: To learn representations of a graph $G = (V, E)$, these approaches first represent G as a Laplacian matrix L where $L = D - A$, A is the adjacency matrix, and D is the degree matrix [41]. In the matrix L, the positive values depict the degree of nodes, and negative values are the weights of the edges. The matrix L could be decomposed, and the eigenvectors associated with the smallest eigenvalues are considered node embeddings. The optimal node embedding $Z^*$, therefore, could be computed using an objective function: $Z^* = \arg\min_{Z} Z^\top L Z$ subject to $Z^\top D Z = I$.

- Node proximity matrix factorization: The objective of these models is to decompose a node proximity matrix into small-sized matrices directly. In other words, the proximity of nodes in graphs will be preserved in the latent space. Formally, given a proximity matrix M, the models try to minimize the distance between the proximity of two nodes $v_i$ and $v_j$ and the similarity of their embeddings $Z_i$ and $Z_j$, which could be defined as: $\mathcal{L} = \sum_{v_i, v_j \in V} \lVert M_{ij} - Z_i^\top Z_j \rVert^2$.

| Models | Graph Types | Tasks | Loss Function |
|---|---|---|---|
| SLE [39] | Static graphs | Node classification | |
| [120] | Attributed graphs | Node classification | |
| [7] | Attributed graphs | Community detection | |
| LPP [42] | Attributed graphs | Node classification | |
| [121] | Attributed graphs | Graph reconstruction | |
| [40] | Static graphs | Node clustering | |
| GLEE [122] | Attributed graphs | Graph reconstruction, Link prediction | |
| LPP [42] | Static graphs | Node classification | |
| GraRep [15] | Static graphs | Node classification, Node clustering | |
| NECS [123] | Static graphs | Graph reconstruction, Link prediction, Node classification | |
| HOPE [5] | Static graphs | Graph reconstruction, Link prediction, Node classification | |
| [124] | Static graphs | Link prediction | |
| AROPE [86] | Static graphs | Graph reconstruction, Link prediction, Node classification | |
| ProNE [43] | Static graphs | Node classification | |
| ATP [6] | Static graphs | Link prediction | |
| [125] | Static graphs | Graph partition | |
| NRL-MF [126] | Static graphs | Node classification | |

| Models | Graph Types | Tasks | Loss Function |
|---|---|---|---|
| DBMM [134] | Dynamic graphs | Node classification, Node clustering | |
| [135] | Dynamic graphs | Link prediction | |
| [136] | Dynamic graphs | Link prediction | |
| LIST [137] | Dynamic graphs | Link prediction | |
| TADW [131] | Attributed graphs | Node classification | |
| PME [138] | Heterogeneous graphs | Link prediction | |
| EOE [139] | Heterogeneous graphs | Node classification | |
| [130] | Heterogeneous graphs | Link prediction | |
| ASPEM [140] | Heterogeneous graphs | Node classification, Link prediction | |
| MELL [141] | Heterogeneous graphs | Link prediction | |
| PLE [142] | Attributed graphs | Node classification | |

- Training data requirement: The matrix factorization-based models do not need much data to learn embeddings. Compared to other methods, such as neural network-based models, these models bring advantages in case there is little training data.

- Coverage: Since the graphs are represented as a Laplacian matrix L or a transition matrix M, the models could capture the proximity of all nodes in the graphs. The connection between every pair of nodes is observed at least once in the matrix, which enables the models to handle sparse graphs.

- Computational complexity: Matrix factorization suffers from high time and memory complexity for large graphs with millions of nodes. The main reason is the time it takes to decompose the matrix into a product of small-sized matrices [15].

- Missing values: Models based on matrix factorization cannot handle incomplete graphs with unseen and missing values [143,144]. When the graph data are insufficient, the matrix factorization-based models cannot learn generalized vector embeddings. Therefore, we need neural network models that can generalize graphs and better predict entities in graphs.
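The Laplacian eigenmaps strategy described in this section can be sketched with NumPy. This is a minimal illustration, assuming a small dense graph without isolated nodes; it solves the generalized problem $Lz = \lambda Dz$ through the symmetrically normalized Laplacian. The function name `laplacian_eigenmaps` and the toy graph are assumptions for the example. The full eigendecomposition is the step that becomes costly for large graphs.

```python
import numpy as np


def laplacian_eigenmaps(adj: np.ndarray, dim: int) -> np.ndarray:
    """Embed nodes with the generalized eigenvectors of L z = lambda D z."""
    degrees = adj.sum(axis=1)
    laplacian = np.diag(degrees) - adj           # L = D - A
    d_inv_sqrt = np.diag(1.0 / np.sqrt(degrees))
    normalized = d_inv_sqrt @ laplacian @ d_inv_sqrt
    # eigh returns eigenvalues in ascending order for symmetric matrices.
    _, eigenvectors = np.linalg.eigh(normalized)
    # Skip the first eigenvector (eigenvalue 0 on a connected graph),
    # then map back so that the result satisfies Z^T D Z = I.
    return d_inv_sqrt @ eigenvectors[:, 1 : dim + 1]


# Two triangles joined by the single edge (2, 3).
edges = [(0, 1), (0, 2), (1, 2), (2, 3), (3, 4), (3, 5), (4, 5)]
adj = np.zeros((6, 6))
for i, j in edges:
    adj[i, j] = adj[j, i] = 1.0

Z = laplacian_eigenmaps(adj, dim=1)
```

On this toy graph, the one-dimensional embedding assigns one sign to nodes 0 to 2 and the opposite sign to nodes 3 to 5, so the two triangles are separated in the latent space.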

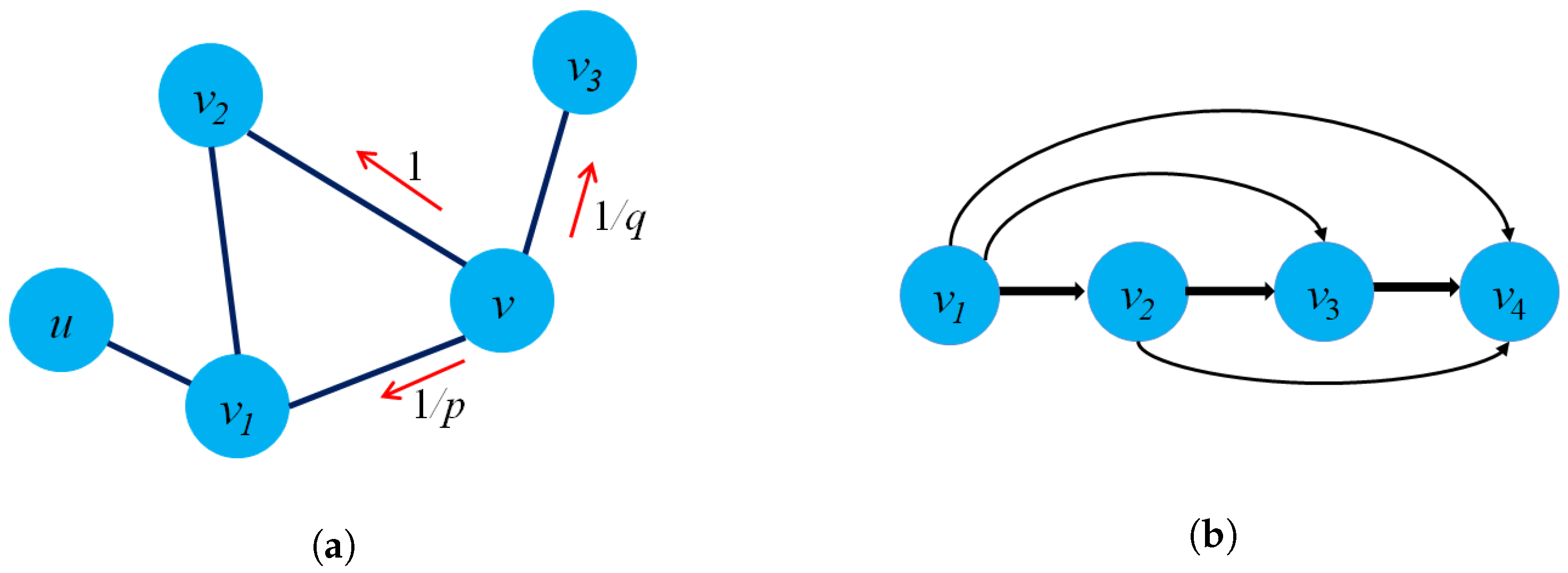

3.3. Shallow Models

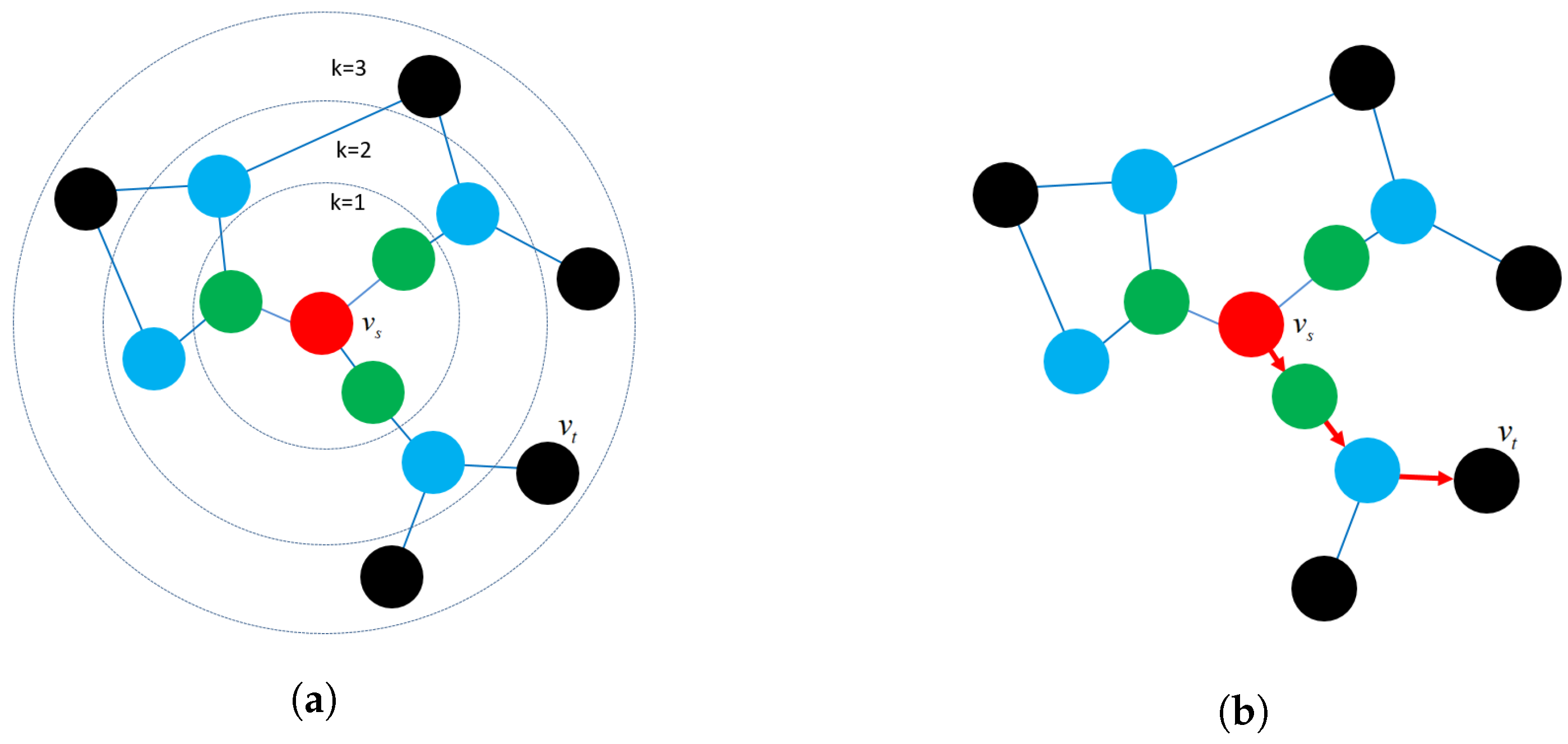

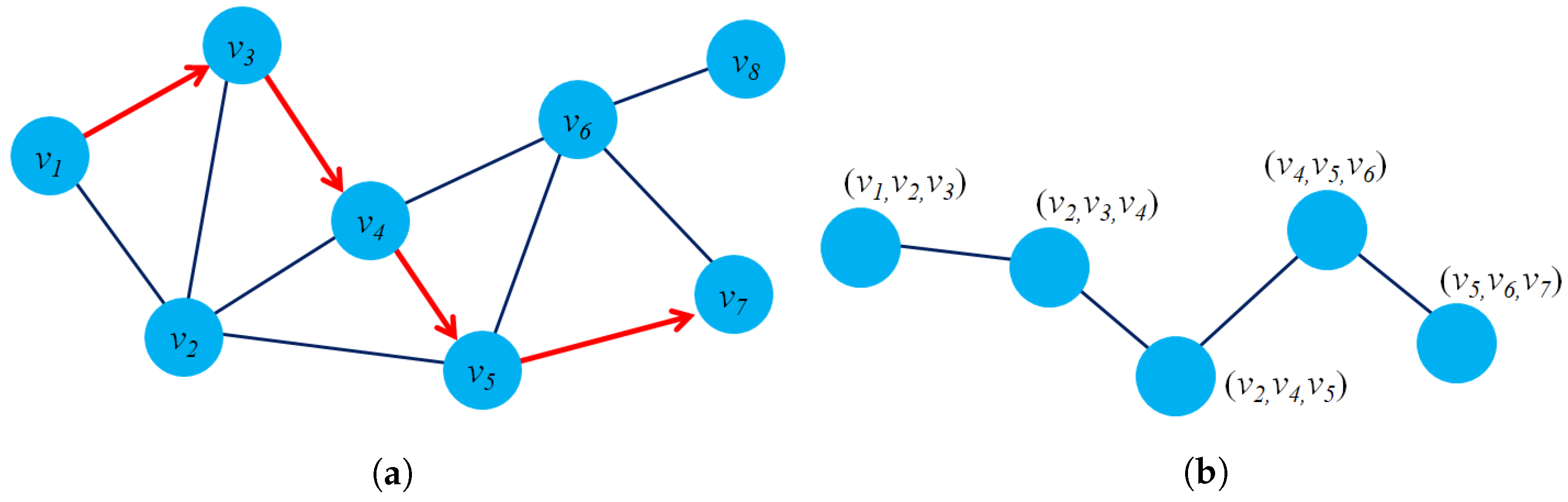

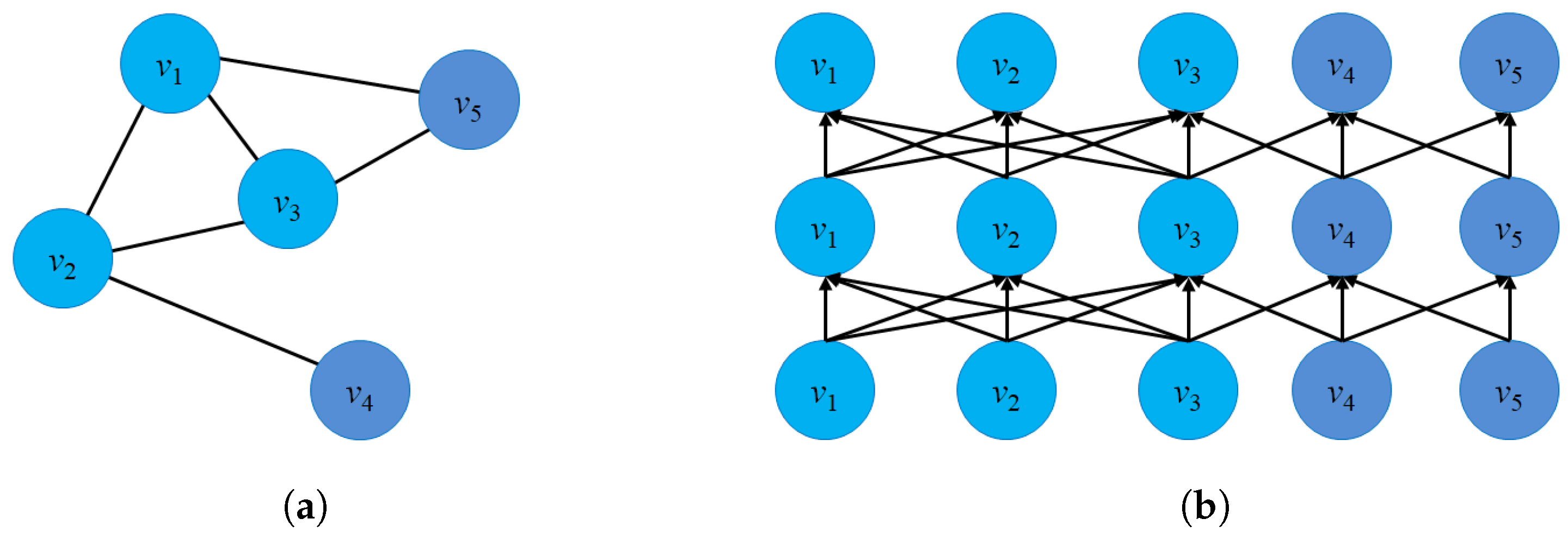

- Structure preservation: The primary concept of these approaches is to define sampling strategies that could capture the graph structure within fixed-length samples. Several sampling techniques could capture both local and global graph structures, such as random-walk sampling, role-based sampling, and edge reconstruction. The model then applies shallow neural network algorithms to learn vector embeddings in the latent space in an unsupervised learning manner. Figure 6a shows an example of a random-walk-based sampling technique in a graph from a source node to a target node.

- Proximity reconstruction: It refers to preserving a k-hop relationship between nodes in graphs. The relation between neighboring nodes within the k-hop distance should be preserved in the latent space. For instance, Figure 6b presents a 3-hop proximity from the source node.

- Unseen nodes: When a new node is added to a graph, shallow models cannot learn an embedding for it directly. To obtain embeddings for new nodes, the models must update the sampled patterns, for example, by re-executing random-walk sampling to generate new paths for the new nodes, and must then be re-trained. The re-sampling and re-training procedures make these models impractical for evolving graphs.

- Node features: Shallow models such as DeepWalk and Node2Vec are mainly suited to homogeneous graphs and ignore information about the attributes and labels of nodes. However, in the real world, many graphs have attributes and labels that could be informative for graph representation learning. Only a few studies have investigated the attributes and labels of nodes and edges. Moreover, the limitations of domain knowledge when working with heterogeneous and dynamic graphs have made these models inefficient and increased their computational complexity.

- Parameter sharing: One of the problems of shallow models is that these models cannot share the parameters during the training process. From the statistical perspective, parameter sharing could reduce the computational time and the number of weight updates during the training process.
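The random-walk sampling mentioned above can be sketched as follows. This is a minimal uniform random-walk sampler in the spirit of DeepWalk, not the implementation of any specific model; the names `random_walk` and `sample_walks` and the toy graph are assumptions for the example.

```python
import random
from typing import Dict, List

Graph = Dict[int, List[int]]  # adjacency list


def random_walk(graph: Graph, start: int, length: int, rng: random.Random) -> List[int]:
    """Sample one uniform random walk with at most `length` nodes."""
    walk = [start]
    while len(walk) < length:
        neighbors = graph[walk[-1]]
        if not neighbors:  # dead end: stop early
            break
        walk.append(rng.choice(neighbors))
    return walk


def sample_walks(graph: Graph, walks_per_node: int, length: int, seed: int = 0) -> List[List[int]]:
    """Start `walks_per_node` walks from every node of the graph."""
    rng = random.Random(seed)
    return [
        random_walk(graph, node, length, rng)
        for _ in range(walks_per_node)
        for node in graph
    ]


toy_graph = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2]}
walks = sample_walks(toy_graph, walks_per_node=2, length=5)
```

The walks play the role of sentences for a skip-gram style model. The sketch also makes the unseen-node limitation visible: a node added to `toy_graph` after sampling appears in no walk, so the walks must be regenerated and the embeddings re-trained.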

3.3.1. Structure-Preservation Models

| Models | Graph Types | Tasks | Loss Function |
|---|---|---|---|
| DeepWalk [14] | Static graphs | Node classification | |
| Node2Vec [4] | Static graphs | Node classification, Link prediction | |
| WalkLets [147] | Static graphs | Node classification | |
| Div2Vec [149] | Static graphs | Link prediction | |
| | Static graphs | Node classification | |
| Node2Vec+ [148] | Static graphs | Node classification | |
| Struc2Vec [21] | Static graphs | Node classification | |
| DiaRW [150] | Static graphs | Node classification, Link prediction | |
| Role2Vec [151] | Attributed graphs | Link prediction | |
| NERD [152] | Directed graphs | Link prediction, Graph reconstruction, Node classification | |
| Sub2Vec [153] | Static graphs | Community detection, Graph classification | |
| Subgraph2Vec [145] | Static graphs | Graph classification, Clustering | |
| RUM [89] | Static graphs | Node classification, Graph reconstruction | |
| Gat2Vec [154] | Attributed graphs | Node classification, Link prediction | |
| ANRLBRW [155] | Attributed graphs | Node classification | |
| Gl2Vec [88] | Static graphs | Node classification | |

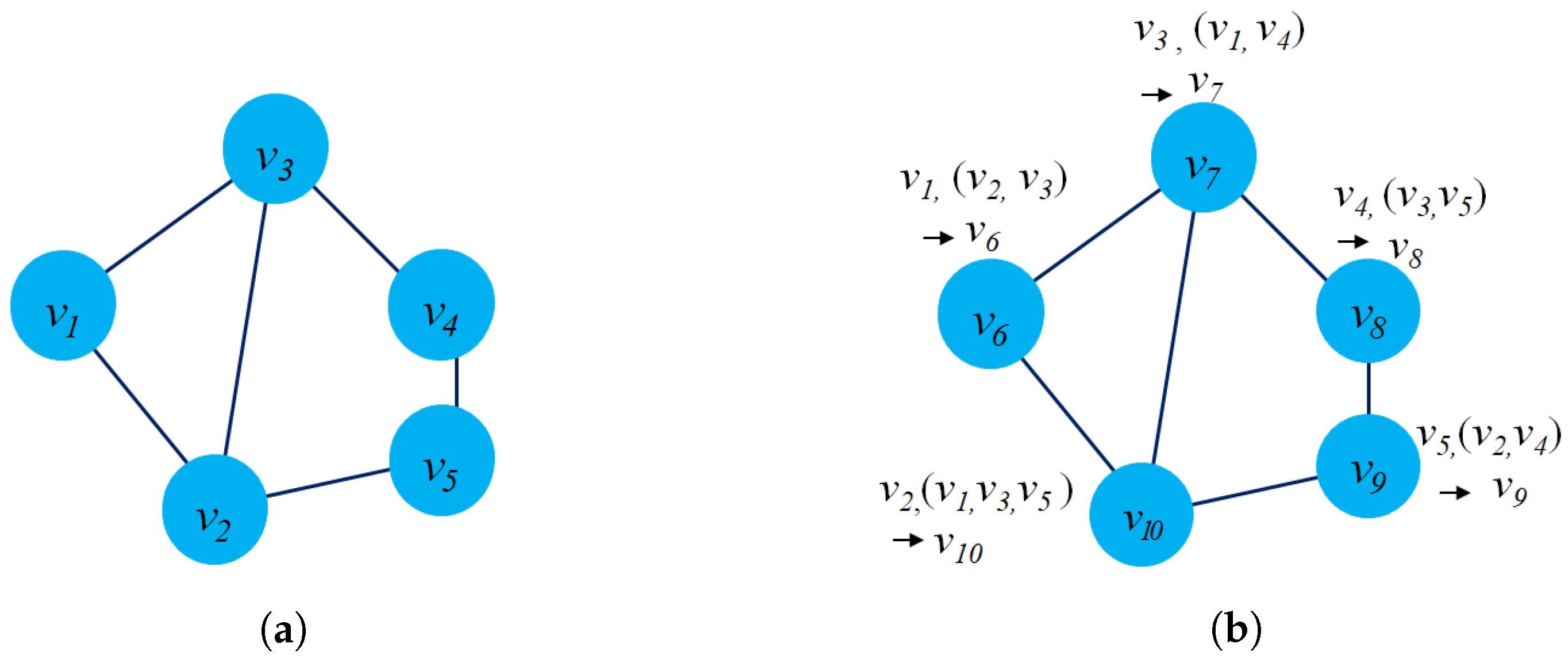

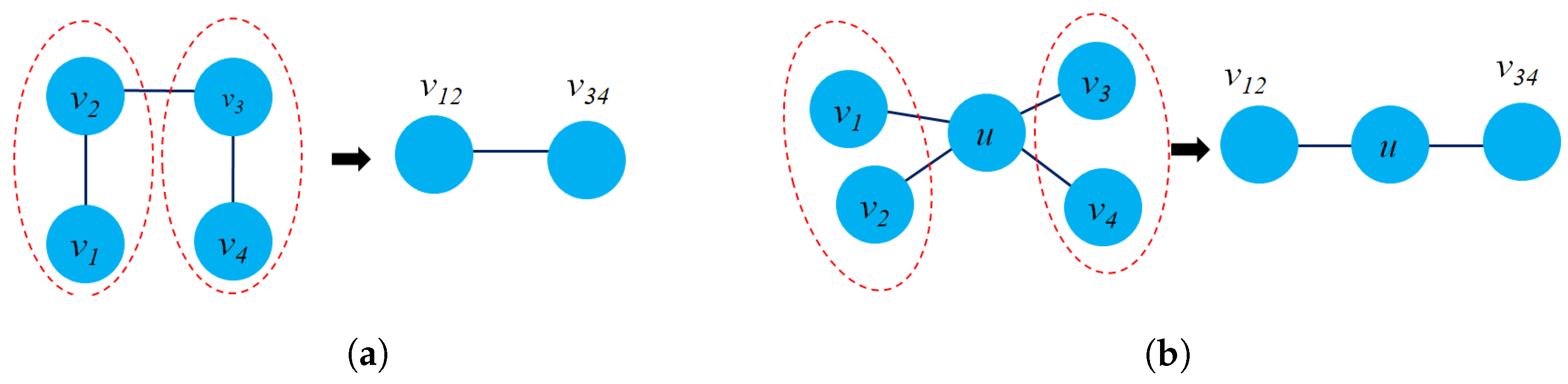

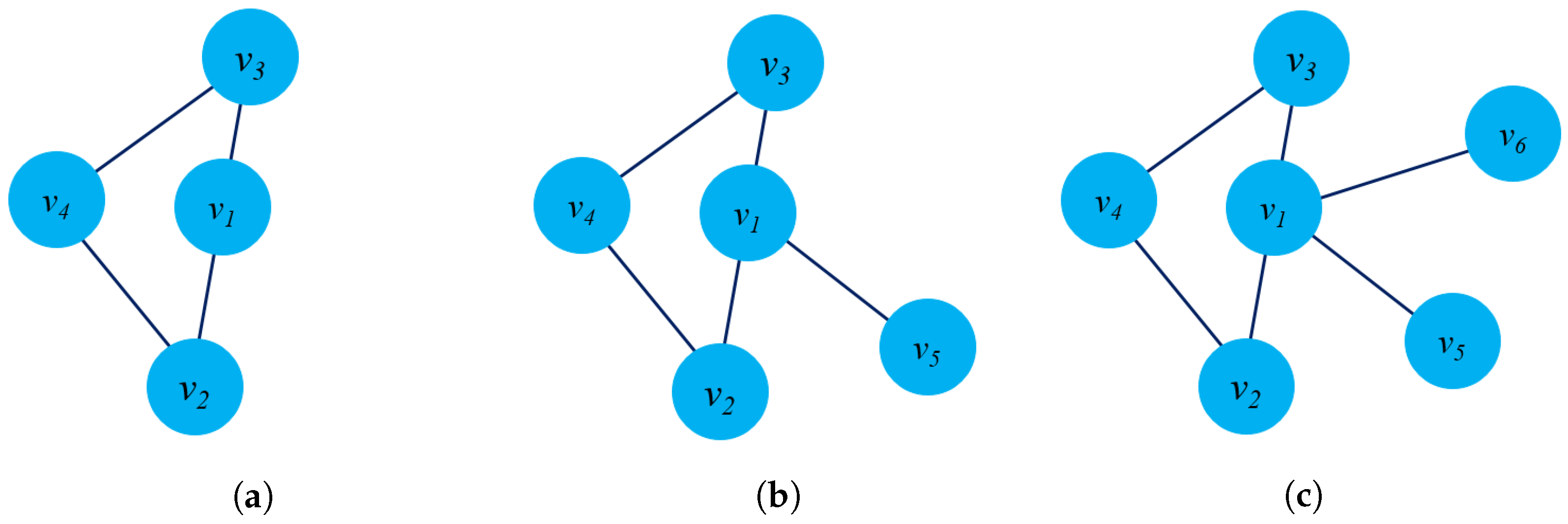

- Generating nodes in graph : Each new node v in graph is a tuple in the original graph G. Therefore, they can map the triangle patterns of the original graph to the new graph for structure preservation.

- Generating edges of graph : Each edge of the new graph is formed from two nodes that have two edges in common in the original graph. For example, the edge denotes that we the edge in the original graph G.

- Computational complexity: Unlike kernel models and matrix factorization-based models, which require considerable computational costs, structure-preservation models could learn embeddings efficiently. This efficiency comes from search-based sampling strategies and the generalizability the model gains from the training process.

- Classification tasks: Since the models aim to find structural neighbor relationships from a target node, these show power in problems involving node classification. In almost all graphs, nodes that have the same label tend to be connected at a small, fixed-length distance. This is a strength of models based on preserving structure in problems related to classification tasks.

- Transductive learning: Most models cannot learn node embeddings that have not been seen in the training data. To learn new node embeddings, the model should re-sample the graph structure and learn the new samples again which could be time-consuming.

- Missing connection problem: Many real-world graphs have sparse or missing connections between nodes. However, most structure-preservation models cannot handle missing connections, since their sampling strategies cannot capture them. In the case of a random-walk-based sampling strategy, for example, these models only capture graph structure when nodes are linked together.

- Parameter sharing: These models could only learn node embeddings for individual nodes and do not share parameters. The absence of sharing parameters could reduce the effectiveness of learning representation.

3.3.2. Proximity Reconstruction Models

| Models | Graph Types | Tasks | Loss Function |
|---|---|---|---|
| LINE [16] | Static graphs | Node classification | |
| APP [76] | Static graphs | Link prediction | |
| PALE [77] | Static graphs | Link prediction | |
| CVLP [182] | Attributed graphs | Link prediction | |
| [183] | Static graphs | Link prediction | |
| HARP [178] | Static graphs | Node classification | |
| PTE [179] | Heterogeneous graphs | Link prediction | |
| Hin2Vec [180] | Heterogeneous graphs | Node classification, Link prediction | |
| [78] | Heterogeneous graphs | Node classification | |
| [184] | Signed graphs | Link prediction | |
| [185] | Heterogeneous graphs | Node classification, Node clustering | |
| [186] | Heterogeneous graphs | Link prediction | |
| [187] | Static graphs | Node classification | |
| [188] | Heterogeneous graphs | Graph reconstruction, Link prediction, Node classification | |
| ProbWalk [189] | Static graphs | Node classification, Link prediction | |
| [190] | Static graphs | Node classification, Link prediction | |
| NEWEE [191] | Static graphs | Node classification, Link prediction | |
| DANE [192] | Attributed graphs | Node classification, Link prediction | |
| CENE [193] | Attributed graphs | Node classification | |
| HSCA [194] | Attributed graphs | Node classification | |

- Inter-graph proximity: Proximity-based models not only explore proximity between nodes in a single graph but can also be applied to proximity reconstruction across different graphs with common nodes [183]. Unlike other models, these methods can preserve the structural similarity of nodes across graphs. Models based on structure-preservation strategies, by contrast, must re-learn node embeddings for each additional graph.

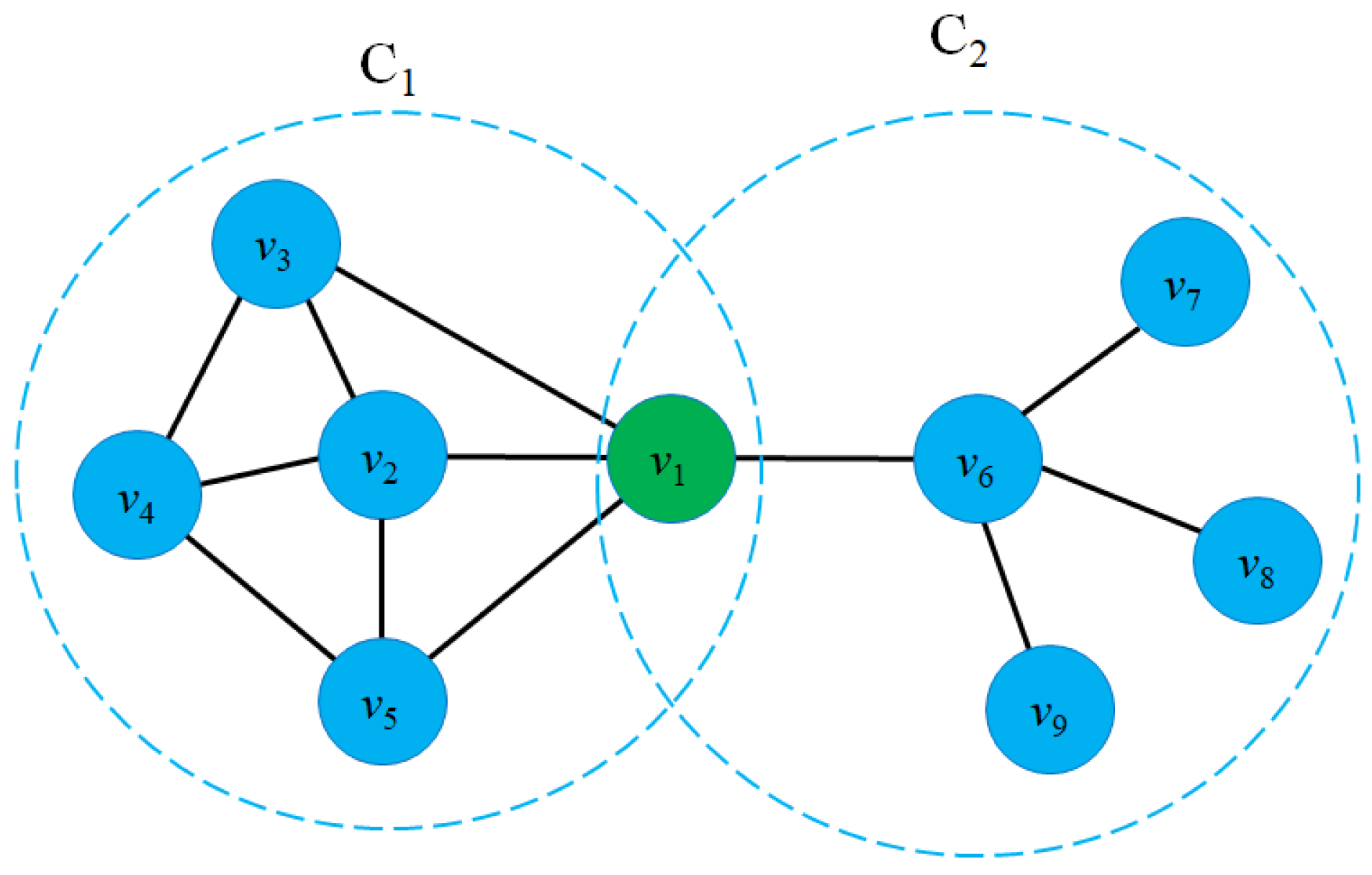

- Proximity of nodes belonging to different clusters: In the context of clusters with different densities and sizes, proximity reconstruction-based models could capture nodes that are close to each other but in different clusters. This feature shows an advantage over structure reconstruction-based models, which tend to favor searching for neighboring nodes in the same cluster.

- Link prediction and node classification problem: Since structural identity is based on proximity between nodes, two nodes with similar neighborhoods should be close in the vector space. For instance, the LINE model considered preserving the 1st-order and 2nd-order proximity between two nodes. As a result, proximity reconstruction provides remarkable results for link prediction and node classification tasks [16,76,77].

- Weighted edges problems: Most proximity-based models do not consider the weighted edges between nodes. These models consider proximity based only on the number of connections shared without weights which could lead to structural loss.

- Capturing the whole graph structure: Proximity-based models mostly focus on 1st-order and 2nd-order proximity which cannot specify the global structure of graphs. A few models try to capture the higher-order proximity of nodes in graphs, but there is a problem with the computational complexity.
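The 1st-order and 2nd-order proximities that LINE preserves can be illustrated with a short sketch. It assumes a weighted adjacency matrix and, as a simplification, measures 2nd-order proximity with the cosine similarity of the two neighborhood weight vectors; the function names and the toy graph are assumptions for the example.

```python
import math
from typing import List

Matrix = List[List[float]]


def first_order_proximity(adj: Matrix, u: int, v: int) -> float:
    """1st-order proximity: the weight of the edge (u, v), 0 if absent."""
    return adj[u][v]


def second_order_proximity(adj: Matrix, u: int, v: int) -> float:
    """2nd-order proximity: similarity of the neighborhoods of u and v."""
    pu, pv = adj[u], adj[v]
    dot = sum(a * b for a, b in zip(pu, pv))
    norm = math.sqrt(sum(a * a for a in pu)) * math.sqrt(sum(b * b for b in pv))
    return dot / norm if norm else 0.0


# Nodes 0 and 1 are not linked but share the weighted neighbors 2 and 3.
adj = [
    [0, 0, 2, 1, 0],
    [0, 0, 2, 1, 0],
    [2, 2, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 1, 0],
]
```

Nodes 0 and 1 have no 1st-order proximity but maximal 2nd-order proximity, so a model that preserves both would place them close together. Because the neighborhood vectors carry edge weights, the sketch also shows one way weights could enter the proximity measure.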

3.4. Deep Neural Network-Based Models

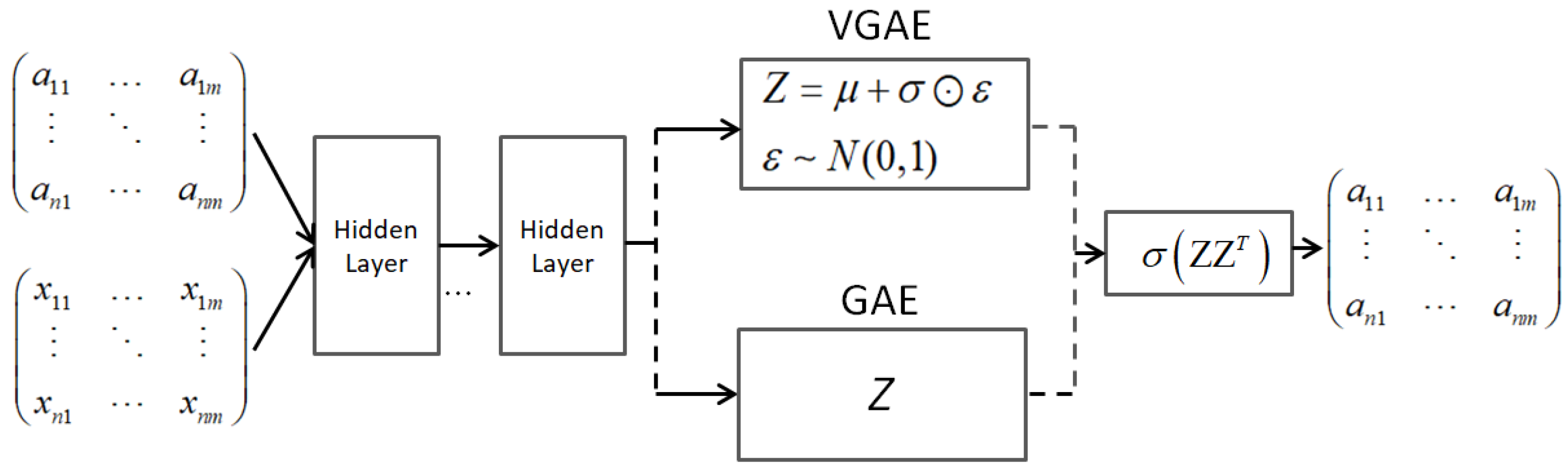

3.4.1. Graph Autoencoders

3.4.2. Recurrent Graph Neural Networks

- Diffusion pattern and multiple relations: RGNNs show superior learning ability when dealing with diffuse information, and they can handle multi-relational graphs where a single node has many relations. This feature is achieved due to the ability to update the states of each node in each hidden layer.

- Parameter sharing: RGNNs could share parameters across different locations, which allows them to capture sequential node inputs. This advantage could reduce computational complexity during the training process, since fewer parameters are needed, and could increase the performance of the models.

3.4.3. Convolutional Graph Neural Networks

- Computational complexity: The spectral decomposition of the Laplacian matrix into matrices containing eigenvectors is time-consuming. During the training process, the product of the matrices $U$, $\Lambda$, and $U^\top$ also increases the training time.

- Difficulties for handling large-scale graphs: Since the number of parameters of the kernels corresponds to the number of nodes in the graph, spectral models may not be suitable for large-scale graphs.

- Difficulties for considering graph dynamicity: To apply convolution filters to graphs and train the model, the graph data must be transformed to the spectral domain in the form of a Laplacian matrix. Therefore, when the graph data change, as in dynamic graphs, the trained model cannot capture the changes.
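The limitations above follow from how a spectral filter is applied. The sketch below is a minimal NumPy illustration, assuming the symmetrically normalized Laplacian and one free filter parameter per eigenvalue; the function names `normalized_laplacian` and `spectral_conv` are assumptions for the example.

```python
import numpy as np


def normalized_laplacian(adj: np.ndarray) -> np.ndarray:
    """L = I - D^{-1/2} A D^{-1/2} for a graph without isolated nodes."""
    d_inv_sqrt = np.diag(1.0 / np.sqrt(adj.sum(axis=1)))
    return np.eye(len(adj)) - d_inv_sqrt @ adj @ d_inv_sqrt


def spectral_conv(adj: np.ndarray, signal: np.ndarray, theta: np.ndarray) -> np.ndarray:
    """Filter a node signal in the spectral domain: U diag(theta) U^T x."""
    # Full eigendecomposition: cubic cost in the number of nodes.
    _, U = np.linalg.eigh(normalized_laplacian(adj))
    # theta holds one parameter per node, so the filter size grows with the graph.
    return U @ np.diag(theta) @ U.T @ signal


# Path graph 0 - 1 - 2 - 3 with a scalar signal on each node.
adj = np.array([
    [0.0, 1.0, 0.0, 0.0],
    [1.0, 0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0, 1.0],
    [0.0, 0.0, 1.0, 0.0],
])
x = np.array([1.0, 2.0, 3.0, 4.0])
```

Because `U` is computed from one fixed Laplacian, any change to `adj` invalidates both the basis and the learned `theta`, which is the dynamicity problem noted above.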

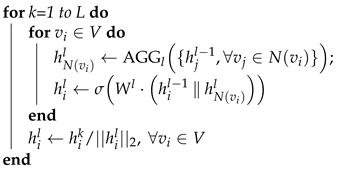

Algorithm 1: GraphSAGE algorithm. The model first takes the node features as inputs. For each layer, the model aggregates the information from neighbors and then updates the hidden state of each node.

Input: $G = (V, E)$: the graph G with set of nodes V and set of edges E; $x_v$: the input features of node $v$; L: the depth of hidden layers; $\mathrm{AGG}_l$: differentiable aggregator functions; $\mathcal{N}(v)$: the set of neighbors of node $v$.

Output: $z_v$: vector representations for $v \in V$.
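The aggregate-then-update loop of Algorithm 1 can be sketched in NumPy. This is a simplified forward pass, not the reference implementation: it assumes a mean aggregator over the full neighborhood (no neighbor sampling), a ReLU nonlinearity, randomly initialized weights, and no training. The name `graphsage_forward` and the toy inputs are assumptions for the example.

```python
from typing import List

import numpy as np


def graphsage_forward(
    features: np.ndarray,
    neighbors: List[List[int]],
    weights: List[np.ndarray],
) -> np.ndarray:
    """Run one forward pass; weights[l] has shape (2 * d_in, d_out)."""
    h = features
    for W in weights:
        # Aggregate: mean of the neighbors' hidden states from the previous layer.
        aggregated = np.stack([h[nbrs].mean(axis=0) for nbrs in neighbors])
        # Update: concatenate own state with the aggregate, project, apply ReLU.
        h = np.maximum(0.0, np.concatenate([h, aggregated], axis=1) @ W)
        # Normalize each node vector to unit length (all-zero rows stay zero).
        norms = np.linalg.norm(h, axis=1, keepdims=True)
        h = h / np.maximum(norms, 1e-12)
    return h


rng = np.random.default_rng(0)
features = rng.normal(size=(4, 3))                 # 4 nodes, 3 input features
neighbors = [[1, 2], [0, 2], [0, 1, 3], [2]]       # non-empty neighbor lists
weights = [rng.normal(size=(6, 5)), rng.normal(size=(10, 2))]  # depth L = 2
embeddings = graphsage_forward(features, neighbors, weights)
```

The weight matrices do not depend on the number of nodes, so the same `weights` can be applied to a graph with additional nodes.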

- A corruption function: This function aims to generate negative examples from an original graph by applying several changes to its structure and properties.

- An encoder: The goal of the encoder is to encode nodes into the vector space so that its output presents the vector embeddings of all nodes in the graph.

- A readout function: This function maps all node embeddings into a single vector (supernode).

- A discriminator: It compares vector embeddings against the global vector of the graph by calculating a score between 0 and 1 for each vector embedding.

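- Attention score: At layer l, the model takes the set of node features $h^{(l)}$ as input and produces $h^{(l+1)}$ as output. An attention score that measures the importance of a neighbor node $v_j$ to the target node $v_i$ could be computed as $e_{ij} = \mathrm{LeakyReLU}\left(a^{\top}\left[W h_i^{(l)} \,\Vert\, W h_j^{(l)}\right]\right)$, where $W$ and $a$ are trainable weights and $\Vert$ denotes the concatenation.

- Normalization: The score is then normalized to be comparable across all neighbors of node $v_i$ using the SoftMax function: $\alpha_{ij} = \exp(e_{ij}) / \sum_{k \in \mathcal{N}(v_i)} \exp(e_{ik})$.

- Aggregation: After normalization, the embedding of node $v_i$ could be computed by aggregating the states of its neighbor nodes: $h_i^{(l+1)} = \sigma\left(\sum_{j \in \mathcal{N}(v_i)} \alpha_{ij} W h_j^{(l)}\right)$.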

- Parameter sharing: Deep neural network models share weights during the training phase, which reduces training time and the number of trainable parameters while increasing the performance of the models. In addition, the parameter-sharing mechanism allows the model to learn multiple tasks.

- Inductive learning: The outstanding advantage of deep models over shallow models is that deep models can support inductive learning. This makes deep-learning models capable of generalizing to unseen nodes and having practical applicability.

- Over-smoothing problem: When capturing the graph structure and entity relationships, CGNNs rely on an aggregation mechanism that captures information from neighboring nodes for target nodes. This results in stacking multiple graph convolutional layers to capture higher-order graph structure. However, increasing the depth of convolution layers could lead to over-smoothing problems [252]. To overcome this drawback, models based on transformer architecture have shown several improvements compared to CGNNs using self-attention.

- Limited ability on disassortative graphs: Disassortative graphs are graphs where nodes with different labels tend to be linked together. However, the aggregation mechanism in GNNs combines the features of all neighboring nodes even when they have different labels. Therefore, the aggregation mechanism is a limitation of GNNs on disassortative graphs in classification tasks.
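The attention score, normalization, and aggregation steps listed above can be sketched as a single-head attention layer in NumPy. This is a simplified illustration with random, untrained weights; it assumes each neighbor list includes the node itself and omits the final nonlinearity. The name `gat_layer` and the toy inputs are assumptions for the example.

```python
from typing import List, Tuple

import numpy as np


def gat_layer(
    h: np.ndarray,
    neighbors: List[List[int]],
    W: np.ndarray,
    a: np.ndarray,
    slope: float = 0.2,
) -> Tuple[np.ndarray, List[np.ndarray]]:
    """Return the aggregated node states and the attention weights per node."""
    z = h @ W  # shared linear projection of every node
    out = np.zeros_like(z)
    alphas = []
    for i, nbrs in enumerate(neighbors):
        # Attention score: LeakyReLU(a^T [z_i || z_j]) for every neighbor j.
        scores = np.array([a @ np.concatenate([z[i], z[j]]) for j in nbrs])
        scores = np.where(scores > 0, scores, slope * scores)
        # Normalization: softmax over the neighborhood (shifted for stability).
        weights = np.exp(scores - scores.max())
        weights /= weights.sum()
        # Aggregation: attention-weighted sum of the projected neighbor states.
        out[i] = weights @ z[nbrs]
        alphas.append(weights)
    return out, alphas


rng = np.random.default_rng(0)
h = rng.normal(size=(4, 3))
neighbors = [[0, 1, 2], [0, 1, 2], [0, 1, 2, 3], [2, 3]]  # self-loops included
W = rng.normal(size=(3, 2))
a = rng.normal(size=4)  # length 2 * d_out because of the concatenation
out, alphas = gat_layer(h, neighbors, W, a)
```

Every neighbor receives a positive weight, so features of differently labeled neighbors are still mixed in. This is consistent with the aggregation limitation on disassortative graphs described above.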

3.4.4. Graph Transformer Models

- Structural encoding-based graph transformer: These models focus on various positional encoding schemes to capture absolute and relative information about entity relationships and graph structure. Structural encoding strategies are mainly suitable for tree-like graphs since the models should capture the hierarchical relations between the target nodes and their parents as well as the interaction with other nodes of the same level.

- GNNs as an auxiliary module: GNNs provide a powerful mechanism for aggregating local structural information. Therefore, several studies integrate message-passing and GNN modules with a graph transformer encoder as an auxiliary module.

- Edge channel-based attention: The graph structure could be viewed as the combination of the node and edge features and the ordered/unordered connection between them. From this perspective, we do not need GNNs as an auxiliary module. Recently, several models have been proposed to capture graph structure in depth as well as apply graph transformer architecture based on the self-attention mechanism.

3.5. Non-Euclidean Models

3.5.1. Hyperbolic Embedding Models

3.5.2. Spherical Embedding Models

3.5.3. Gaussian Embedding Models

4. Applications

4.1. Computer Vision

4.2. Natural Language Processing

4.3. Computer Security

4.4. Bioinformatics

4.5. Social Media Analysis

4.6. Recommendation Systems

4.7. Smart Cities

4.8. Computational Social Science

4.9. Digital Humanity

4.10. Semiconductor Manufacturing

4.11. Weather Forecasting

5. Evaluation Methods

5.1. Benchmark Datasets

5.2. Downstream Tasks and Evaluation Metrics

5.3. Libraries for Graph Representation Learning

6. Challenges and Future Research Directions

- Graph representation in a suitable geometric space: Euclidean space may not capture the graph structure sufficiently and lead to structural information loss.

- The trade-off between the graph structure and node features: Most graph embedding models suffer from noise from non-useful neighbor node features. This could lead to a trade-off between structure preservation and node feature representation, which can be the future research direction.

- Dynamic graphs: Many real-world graphs show dynamic behaviors representing entities’ dynamic structure and properties, bringing a potential research direction.

- Over-smoothing problem: Most GNN models suffer from this problem. Graph transformer models handle the over-smoothing problem only in some cases.

- Disassortative graphs: Most graph representation learning models suffer from this problem. Several solutions have been proposed, but none of them fully solves it.

- Pre-trained models: Pre-trained models could help when few node labels are available. However, only a few graph embedding models have been pre-trained, and only on specific tasks and small domains.
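The over-smoothing problem listed above can be illustrated numerically. The sketch below is a deliberate simplification: it applies only the neighborhood-averaging step of a graph convolution (row-normalized adjacency with self-loops), with no weights or nonlinearities, and measures how far apart the node representations remain. The names `propagate` and `spread` and the toy graph are assumptions for the example.

```python
import numpy as np


def propagate(adj: np.ndarray, features: np.ndarray, num_layers: int) -> np.ndarray:
    """Apply `num_layers` rounds of mean aggregation over self and neighbors."""
    a_hat = adj + np.eye(len(adj))                    # add self-loops
    p = a_hat / a_hat.sum(axis=1, keepdims=True)      # row-normalize
    h = features
    for _ in range(num_layers):
        h = p @ h
    return h


def spread(h: np.ndarray) -> float:
    """Largest gap between two nodes along any feature dimension."""
    return float((h.max(axis=0) - h.min(axis=0)).max())


# Path graph 0 - 1 - 2 - 3 with one-hot node features.
adj = np.array([
    [0.0, 1.0, 0.0, 0.0],
    [1.0, 0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0, 1.0],
    [0.0, 0.0, 1.0, 0.0],
])
features = np.eye(4)
```

Comparing `spread(propagate(adj, features, k))` for increasing `k` shows the node representations collapsing toward a common vector, at which point nodes are no longer distinguishable for downstream tasks.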

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Open-Source Implementations

| Model | Category | URL |
|---|---|---|
| [70] | Hyperbolic space | https://github.com/facebookresearch/poincare-embeddings |
| [293] | Hyperbolic space | http://snap.stanford.edu/hgcn/ |
| [294] | Hyperbolic space | https://github.com/facebookresearch/hgnn |
| Graph2Gauss [23] | Gaussian embedding | https://github.com/abojchevski/graph2gauss |

References

- Rossi, R.A.; Ahmed, N.K. The Network Data Repository with Interactive Graph Analytics and Visualization. In Proceedings of the 29th Conference on Artificial Intelligence (AAAI 2015), Austin, TX, USA, 25–30 January 2015; AAAI Press: Austin, TX, USA, 2015; pp. 4292–4293. [Google Scholar]

- Ahmed, Z.; Zeeshan, S.; Dandekar, T. Mining biomedical images towards valuable information retrieval in biomedical and life sciences. Database J. Biol. Databases Curation 2016, 2016, baw118. [Google Scholar] [CrossRef]

- Hamilton, W.L. Graph Representation Learning. In Synthesis Lectures on Artificial Intelligence and Machine Learning; Springer: Berlin/Heidelberg, Germany, 2020. [Google Scholar] [CrossRef]

- Grover, A.; Leskovec, J. node2vec: Scalable Feature Learning for Networks. In Proceedings of the 22nd International Conference on Knowledge Discovery and Data Mining (SIGKDD 2016), San Francisco, CA, USA, 13–17 August 2016; ACM: San Francisco, CA, USA, 2016; pp. 855–864. [Google Scholar] [CrossRef]

- Ou, M.; Cui, P.; Pei, J.; Zhang, Z.; Zhu, W. Asymmetric Transitivity Preserving Graph Embedding. In Proceedings of the 22nd International Conference on Knowledge Discovery and Data Mining (ACM SIGKDD 2016), San Francisco, CA, USA, 13–17 August 2016; ACM: San Francisco, CA, USA, 2016; pp. 1105–1114. [Google Scholar] [CrossRef]

- Sun, J.; Bandyopadhyay, B.; Bashizade, A.; Liang, J.; Sadayappan, P.; Parthasarathy, S. ATP: Directed Graph Embedding with Asymmetric Transitivity Preservation. In Proceedings of the 33th Conference on Artificial Intelligence (AAAI 2019), Honolulu, HI, USA, 27 January–1 February 2019; AAAI Press: Honolulu, HI, USA, 2019. [Google Scholar] [CrossRef]

- Cavallari, S.; Zheng, V.W.; Cai, H.; Chang, K.C.; Cambria, E. Learning Community Embedding with Community Detection and Node Embedding on Graphs. In Proceedings of the 26th Conference on Information and Knowledge Management (CIKM 2017), Singapore, 6–10 November 2017; ACM: Singapore, 2017; pp. 377–386. [Google Scholar] [CrossRef]

- Wu, Z.; Pan, S.; Chen, F.; Long, G.; Zhang, C.; Yu, P.S. A Comprehensive Survey on Graph Neural Networks. IEEE Trans. Neural Networks Learn. Syst. 2021, 32, 4–24. [Google Scholar] [CrossRef] [PubMed]

- Zhou, Y.; Liu, W.; Pei, Y.; Wang, L.; Zha, D.; Fu, T. Dynamic Network Embedding by Semantic Evolution. In Proceedings of the International Joint Conference on Neural Networks (IJCNN 2019), Budapest, Hungary, 14–19 July 2019; IEEE: Budapest, Hungary, 2019; pp. 1–8. [Google Scholar] [CrossRef]

- Mitrovic, S.; De Weerdt, J. Dyn2Vec: Exploiting dynamic behaviour using difference networks-based node embeddings for classification. In Proceedings of the 15th International Conference on Data Science (ICDATA 2019), Las Vegas, NV, USA, 29 July–1 August 2019; CSREA Press: Las Vegas, NV, USA, 2019; pp. 194–200. [Google Scholar]

- Lee, J.B.; Rossi, R.A.; Kim, S.; Ahmed, N.K.; Koh, E. Attention Models in Graphs: A Survey. ACM Trans. Knowl. Discov. Data 2019, 13, 62.1–62.25. [Google Scholar] [CrossRef]

- Xia, F.; Sun, K.; Yu, S.; Aziz, A.; Wan, L.; Pan, S.; Liu, H. Graph Learning: A Survey. IEEE Trans. Artif. Intell. 2021, 2, 109–127. [Google Scholar] [CrossRef]

- Chen, F.; Wang, Y.C.; Wang, B.; Kuo, C.C. Graph representation learning: A survey. APSIPA Trans. Signal Inf. Process. 2020, 9, e15. [Google Scholar] [CrossRef]

- Perozzi, B.; Al-Rfou, R.; Skiena, S. DeepWalk: Online learning of social representations. In Proceedings of the 20th International Conference on Knowledge Discovery and Data Mining (KDD 2014), New York, NY, USA, 25–30 January 2015; ACM: New York, NY, USA, 2015; pp. 701–710. [Google Scholar] [CrossRef]

- Cao, S.; Lu, W.; Xu, Q. GraRep: Learning Graph Representations with Global Structural Information. In Proceedings of the 24th International Conference on Information and Knowledge Management (CIKM 2015), Melbourne, VIC, Australia, 19–23 October 2015; ACM: Melbourne, VIC, Australia, 2015; pp. 891–900. [Google Scholar] [CrossRef]

- Tang, J.; Qu, M.; Wang, M.; Zhang, M.; Yan, J.; Mei, Q. LINE: Large-scale Information Network Embedding. In Proceedings of the 24th International Conference on World Wide Web (WWW 2015), Florence, Italy, 18–22 May 2015; ACM: Florence, Italy, 2015; pp. 1067–1077. [Google Scholar] [CrossRef]

- Li, Y.; Tarlow, D.; Zemel, R.S. Gated Graph Sequence Neural Networks. In Proceedings of the 4th International Conference on Learning Representations (ICLR 2016), San Juan, PR, USA, 2–4 May 2016; ICLR: San Juan, PR, USA, 2016. [Google Scholar]

- Kipf, T.N.; Welling, M. Semi-Supervised Classification with Graph Convolutional Networks. In Proceedings of the 5th International Conference on Learning Representations (ICLR 2017), Toulon, France, 24–26 April 2017; OpenReview.net: Toulon, France, 2017. [Google Scholar]

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Liò, P.; Bengio, Y. Graph Attention Networks. arXiv 2017, arXiv:1710.10903. [Google Scholar] [CrossRef]

- Dong, Y.; Chawla, N.V.; Swami, A. metapath2vec: Scalable Representation Learning for Heterogeneous Networks. In Proceedings of the 23rd International Conference on Knowledge Discovery and Data Mining (SIGKDD 2017), Halifax, NS, Canada, 13–17 August 2017; ACM: Halifax, NS, Canada, 2017; pp. 135–144. [Google Scholar] [CrossRef]

- Ribeiro, L.F.R.; Saverese, P.H.P.; Figueiredo, D.R. struc2vec: Learning Node Representations from Structural Identity. In Proceedings of the 23rd International Conference on Knowledge Discovery and Data Mining (KDD 2017), Halifax, NS, Canada, 13–17 August 2017; ACM: Halifax, NS, Canada, 2017; pp. 385–394. [Google Scholar] [CrossRef]

- Hamilton, W.L.; Ying, Z.; Leskovec, J. Inductive Representation Learning on Large Graphs. In Proceedings of the 30th Annual Conference on Neural Information Processing Systems (NeurIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Guyon, I., von Luxburg, U., Bengio, S., Wallach, H.M., Fergus, R., Vishwanathan, S.V.N., Garnett, R., Eds.; NeurIPS: Long Beach, CA, USA, 2017; pp. 1024–1034. [Google Scholar]

- Bojchevski, A.; Günnemann, S. Deep Gaussian Embedding of Graphs: Unsupervised Inductive Learning via Ranking. In Proceedings of the 6th International Conference on Learning Representations (ICLR 2018), Vancouver, BC, Canada, 30 April–3 May 2018; OpenReview.net: Vancouver, BC, Canada, 2018. [Google Scholar]

- Xu, K.; Hu, W.; Leskovec, J.; Jegelka, S. How Powerful are Graph Neural Networks? In Proceedings of the 7th International Conference on Learning Representations (ICLR 2019), New Orleans, LA, USA, 6–9 May 2019; OpenReview.net: New Orleans, LA, USA, 2019. [Google Scholar]

- Wang, X.; Ji, H.; Shi, C.; Wang, B.; Ye, Y.; Cui, P.; Yu, P.S. Heterogeneous Graph Attention Network. In Proceedings of the World Wide Web Conference (WWW 2019), San Francisco, CA, USA, 13–17 May 2019; ACM: San Francisco, CA, USA, 2019; pp. 2022–2032. [Google Scholar] [CrossRef]

- Veličković, P.; Fedus, W.; Hamilton, W.L.; Liò, P.; Bengio, Y.; Hjelm, R.D. Deep Graph Infomax. In Proceedings of the 7th International Conference on Learning Representations (ICLR 2019), New Orleans, LA, USA, 6–9 May 2019; OpenReview.net: New Orleans, LA, USA, 2019. [Google Scholar]

- Feng, Y.; You, H.; Zhang, Z.; Ji, R.; Gao, Y. Hypergraph Neural Networks. In Proceedings of the 33rd AAAI Conference on Artificial Intelligence (AAAI 2019), Honolulu, HI, USA, 27 January–1 February 2019; AAAI Press: Honolulu, HI, USA, 2019; pp. 3558–3565. [Google Scholar] [CrossRef]

- Chen, M.; Wei, Z.; Huang, Z.; Ding, B.; Li, Y. Simple and Deep Graph Convolutional Networks. In Proceedings of the 37th International Conference on Machine Learning (ICML 2020), Virtual Event, 13–18 July 2020; PMLR: Virtual Event, 2020; Volume 119, pp. 1725–1735. [Google Scholar]

- Dwivedi, V.P.; Bresson, X. A Generalization of Transformer Networks to Graphs. arXiv 2020, arXiv:2012.09699. [Google Scholar]

- Hussain, M.S.; Zaki, M.J.; Subramanian, D. Global Self-Attention as a Replacement for Graph Convolution. In Proceedings of the 28th Conference on Knowledge Discovery and Data Mining (KDD 2022), Washington, DC, USA, 14–18 August 2022; ACM: Washington, DC, USA, 2022; pp. 655–665. [Google Scholar] [CrossRef]

- Weisfeiler, B.; Leman, A. The reduction of a graph to canonical form and the algebra which appears therein. NTI Ser. 1968, 2, 12–16. [Google Scholar]

- Nikolentzos, G.; Siglidis, G.; Vazirgiannis, M. Graph Kernels: A Survey. J. Artif. Intell. Res. 2021, 72, 943–1027. [Google Scholar] [CrossRef]

- Shervashidze, N.; Schweitzer, P.; van Leeuwen, E.J.; Mehlhorn, K.; Borgwardt, K.M. Weisfeiler-Lehman Graph Kernels. J. Mach. Learn. Res. 2011, 12, 2539–2561. [Google Scholar]

- Morris, C.; Kersting, K.; Mutzel, P. Glocalized Weisfeiler-Lehman Graph Kernels: Global-Local Feature Maps of Graphs. In Proceedings of the International Conference on Data Mining (ICDM 2017), New Orleans, LA, USA, 18–21 November 2017; IEEE Computer Society: New Orleans, LA, USA, 2017; pp. 327–336. [Google Scholar] [CrossRef]

- Nguyen, D.H.; Nguyen, C.H.; Mamitsuka, H. Learning Subtree Pattern Importance for Weisfeiler-Lehman Based Graph Kernels. Mach. Learn. 2021, 110, 1585–1607. [Google Scholar] [CrossRef]

- Ye, W.; Tian, H.; Chen, Q. Graph Kernels Based on Multi-scale Graph Embeddings. arXiv 2022, arXiv:2206.00979. [Google Scholar] [CrossRef]

- Orsini, F.; Frasconi, P.; De Raedt, L. Graph Invariant Kernels. In Proceedings of the 24th International Joint Conference on Artificial Intelligence (IJCAI 2015), Buenos Aires, Argentina, 25–31 July 2015; AAAI Press: Buenos Aires, Argentina, 2015; pp. 3756–3762. [Google Scholar]

- Belkin, M.; Niyogi, P. Laplacian Eigenmaps and Spectral Techniques for Embedding and Clustering. In Proceedings of the 15th Annual Conference on Neural Information Processing Systems (NIPS 2001), Vancouver, BC, Canada, 3–8 December 2001; Dietterich, T.G., Becker, S., Ghahramani, Z., Eds.; MIT Press: Vancouver, BC, Canada, 2001; pp. 585–591. [Google Scholar]

- Gong, C.; Tao, D.; Yang, J.; Fu, K. Signed Laplacian Embedding for Supervised Dimension Reduction. In Proceedings of the 28th Conference on Artificial Intelligence (AAAI 2014), Québec City, QC, Canada, 27–31 July 2014; AAAI Press: Québec City, QC, Canada, 2014; pp. 1847–1853. [Google Scholar]

- Allab, K.; Labiod, L.; Nadif, M. A Semi-NMF-PCA Unified Framework for Data Clustering. IEEE Trans. Knowl. Data Eng. 2017, 29, 2–16. [Google Scholar] [CrossRef]

- Belkin, M.; Niyogi, P. Laplacian Eigenmaps for Dimensionality Reduction and Data Representation. Neural Comput. 2003, 15, 1373–1396. [Google Scholar] [CrossRef]

- He, X.; Niyogi, P. Locality Preserving Projections. In Proceedings of the 17th Annual Conference on Neural Information Processing Systems (NIPS 2003), Vancouver and Whistler, BC, Canada, 8–13 December 2003; MIT Press: Vancouver/Whistler, BC, Canada, 2003; pp. 153–160. [Google Scholar]

- Zhang, J.; Dong, Y.; Wang, Y.; Tang, J.; Ding, M. ProNE: Fast and Scalable Network Representation Learning. In Proceedings of the 28th International Joint Conference on Artificial Intelligence (IJCAI 2019), Macao, China, 10–16 August 2019; ijcai.org: Macao, China, 2019; pp. 4278–4284. [Google Scholar] [CrossRef]

- Gori, M.; Monfardini, G.; Scarselli, F. A new model for learning in graph domains. In Proceedings of the International Joint Conference on Neural Networks (IJCNN 2005), Montreal, QC, Canada, 31 July–4 August 2005; IEEE: Montreal, QC, Canada, 2005; Volume 2, pp. 729–734. [Google Scholar] [CrossRef]

- Scarselli, F.; Gori, M.; Tsoi, A.C.; Hagenbuchner, M.; Monfardini, G. The Graph Neural Network Model. IEEE Trans. Neural Netw. 2009, 20, 61–80. [Google Scholar] [CrossRef]

- Zhao, J.; Li, C.; Wen, Q.; Wang, Y.; Liu, Y.; Sun, H.; Xie, X.; Ye, Y. Gophormer: Ego-Graph Transformer for Node Classification. arXiv 2021, arXiv:2110.13094. [Google Scholar]

- Schmitt, M.; Ribeiro, L.F.R.; Dufter, P.; Gurevych, I.; Schütze, H. Modeling Graph Structure via Relative Position for Better Text Generation from Knowledge Graphs. arXiv 2020, arXiv:2006.09242. [Google Scholar]

- Zhang, C.; Swami, A.; Chawla, N.V. SHNE: Representation Learning for Semantic-Associated Heterogeneous Networks. In Proceedings of the 12th International Conference on Web Search and Data Mining (WSDM 2019), Melbourne, VIC, Australia, 11–15 February 2019; ACM: Melbourne, VIC, Australia, 2019; pp. 690–698. [Google Scholar] [CrossRef]

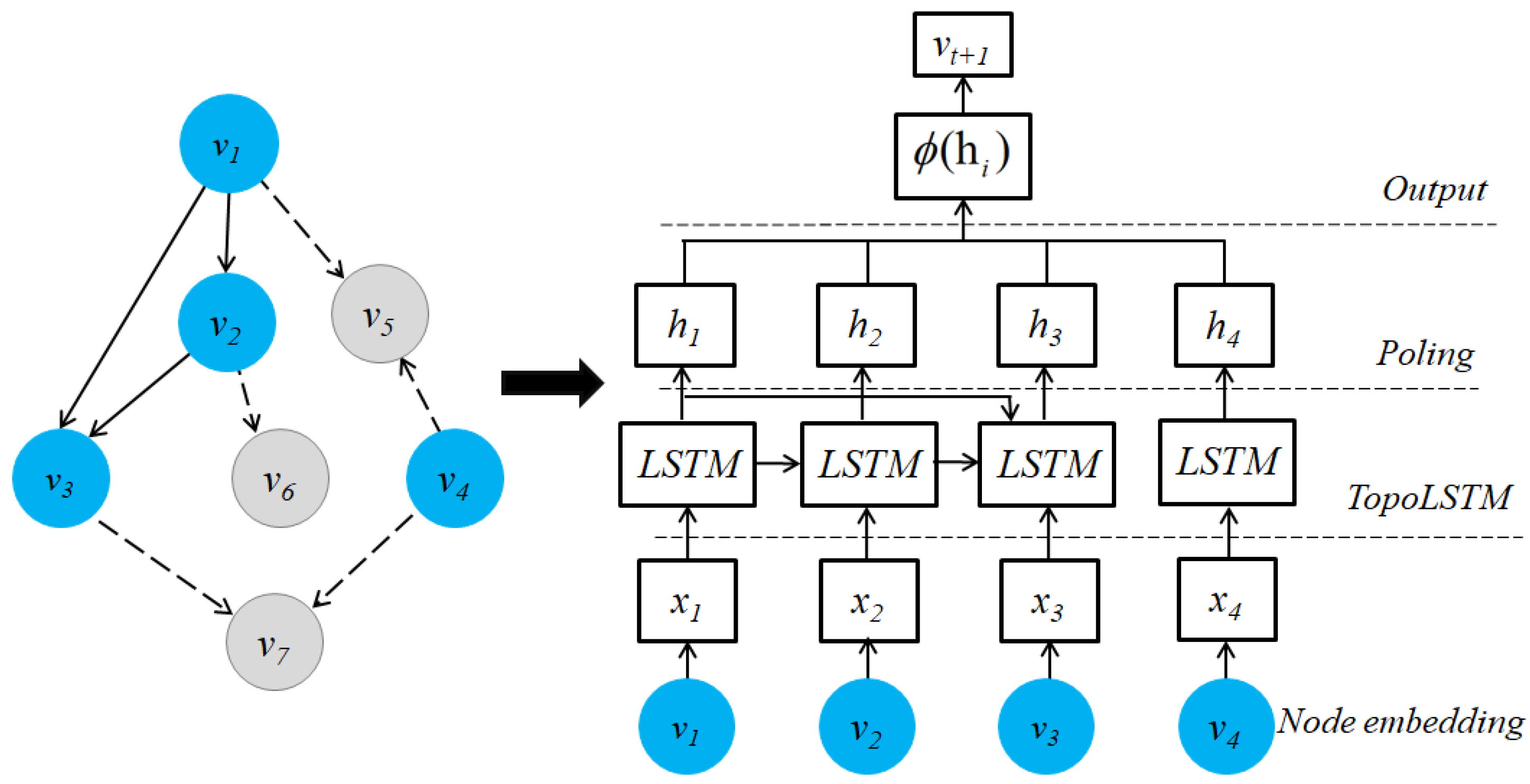

- Wang, J.; Zheng, V.W.; Liu, Z.; Chang, K.C. Topological Recurrent Neural Network for Diffusion Prediction. In Proceedings of the International Conference on Data Mining (ICDM 2017), New Orleans, LA, USA, 18–21 November 2017; Raghavan, V., Aluru, S., Karypis, G., Miele, L., Wu, X., Eds.; IEEE Computer Society: New Orleans, LA, USA, 2017; pp. 475–484. [Google Scholar] [CrossRef]

- Wang, D.; Cui, P.; Zhu, W. Structural Deep Network Embedding. In Proceedings of the 22nd International Conference on Knowledge Discovery and Data Mining (KDD 2016), San Francisco, CA, USA, 13–17 August 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 1225–1234. [Google Scholar] [CrossRef]

- Tu, K.; Cui, P.; Wang, X.; Wang, F.; Zhu, W. Structural Deep Embedding for Hyper-Networks. In Proceedings of the 32nd Conference on Artificial Intelligence (AAAI 2018), New Orleans, LA, USA, 2–7 February 2018; AAAI Press: New Orleans, LA, USA, 2018; pp. 426–433. [Google Scholar]

- Chen, J.; Ma, T.; Xiao, C. FastGCN: Fast Learning with Graph Convolutional Networks via Importance Sampling. In Proceedings of the 6th International Conference on Learning Representations (ICLR 2018), Vancouver, BC, Canada, 30 April–3 May 2018; OpenReview.net: Vancouver, BC, Canada, 2018. [Google Scholar]

- Zeng, H.; Zhou, H.; Srivastava, A.; Kannan, R.; Prasanna, V.K. GraphSAINT: Graph Sampling Based Inductive Learning Method. In Proceedings of the 8th International Conference on Learning Representations (ICLR 2020), Addis Ababa, Ethiopia, 26–30 April 2020; OpenReview.net: Addis Ababa, Ethiopia, 2020. [Google Scholar]

- Jiang, H.; Cao, P.; Xu, M.; Yang, J.; Zaïane, O.R. Hi-GCN: A hierarchical graph convolution network for graph embedding learning of brain network and brain disorders prediction. Comput. Biol. Med. 2020, 127, 104096. [Google Scholar] [CrossRef]

- Li, R.; Huang, J. Learning Graph While Training: An Evolving Graph Convolutional Neural Network. arXiv 2017, arXiv:1708.04675. [Google Scholar]

- Bruna, J.; Zaremba, W.; Szlam, A.; LeCun, Y. Spectral Networks and Locally Connected Networks on Graphs. In Proceedings of the 2nd International Conference on Learning Representations (ICLR 2014), Banff, AB, Canada, 14–16 April 2014; ICLR: Banff, AB, Canada, 2014. [Google Scholar]

- Seo, M.; Lee, K.Y. A Graph Embedding Technique for Weighted Graphs Based on LSTM Autoencoders. J. Inf. Process. Syst. 2020, 16, 1407–1423. [Google Scholar] [CrossRef]

- Brody, S.; Alon, U.; Yahav, E. How Attentive are Graph Attention Networks? In Proceedings of the 10th International Conference on Learning Representations (ICLR 2022), Virtual Event, 25–29 April 2022; OpenReview.net: Virtual Event, 2022. [Google Scholar]

- Zhu, J.; Yan, Y.; Zhao, L.; Heimann, M.; Akoglu, L.; Koutra, D. Beyond Homophily in Graph Neural Networks: Current Limitations and Effective Designs. In Proceedings of the 34th Annual Conference on Neural Information Processing Systems (NeurIPS 2020), Virtual Event, 6–12 December 2020; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H., Eds.; NeurIPS: Virtual Event, 2020. [Google Scholar]

- Suresh, S.; Budde, V.; Neville, J.; Li, P.; Ma, J. Breaking the Limit of Graph Neural Networks by Improving the Assortativity of Graphs with Local Mixing Patterns. In Proceedings of the 27th Conference on Knowledge Discovery and Data Mining (KDD 2021), Singapore, 14–18 August 2021; Zhu, F., Ooi, B.C., Miao, C., Eds.; ACM: Singapore, 2021; pp. 1541–1551. [Google Scholar] [CrossRef]

- Nguyen, D.Q.; Nguyen, T.D.; Phung, D. Universal Graph Transformer Self-Attention Networks. In Companion Proceedings of the Web Conference 2022 (WWW 2022), Lyon, France, 25–29 April 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 193–196. [Google Scholar] [CrossRef]

- Zhu, J.; Li, J.; Zhu, M.; Qian, L.; Zhang, M.; Zhou, G. Modeling Graph Structure in Transformer for Better AMR-to-Text Generation. In Proceedings of the Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP 2019), Hong Kong, China, 3–7 November 2019; Association for Computational Linguistics: Hong Kong, China, 2019; pp. 5458–5467. [Google Scholar] [CrossRef]

- Zhang, J.; Zhang, H.; Xia, C.; Sun, L. Graph-Bert: Only Attention is Needed for Learning Graph Representations. arXiv 2020, arXiv:2001.05140. [Google Scholar]

- Shiv, V.L.; Quirk, C. Novel positional encodings to enable tree-based transformers. In Proceedings of the 33rd Annual Conference on Neural Information Processing Systems (NeurIPS 2019), Vancouver, BC, Canada, 8–14 December 2019; NeurIPS: Vancouver, BC, Canada, 2019; pp. 12058–12068. [Google Scholar]

- Wang, X.; Tu, Z.; Wang, L.; Shi, S. Self-Attention with Structural Position Representations. In Proceedings of the Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP 2019), Hong Kong, China, 3–7 November 2019; Association for Computational Linguistics: Hong Kong, China, 2019; pp. 1403–1409. [Google Scholar] [CrossRef]

- Shi, Y.; Huang, Z.; Feng, S.; Zhong, H.; Wang, W.; Sun, Y. Masked Label Prediction: Unified Message Passing Model for Semi-Supervised Classification. In Proceedings of the 30th International Joint Conference on Artificial Intelligence (IJCAI 2021), Montreal, QC, Canada, 19–27 August 2021; Zhou, Z., Ed.; ijcai.org: Montreal, QC, Canada, 2021; pp. 1548–1554. [Google Scholar] [CrossRef]

- Ying, C.; Cai, T.; Luo, S.; Zheng, S.; Ke, G.; He, D.; Shen, Y.; Liu, T. Do Transformers Really Perform Badly for Graph Representation? In Proceedings of the 35th Annual Conference on Neural Information Processing Systems (NeurIPS 2021), Virtual Event, 6–14 December 2021; Ranzato, M., Beygelzimer, A., Dauphin, Y.N., Liang, P., Vaughan, J.W., Eds.; nips.cc: Virtual Event, 2021; pp. 28877–28888. [Google Scholar]

- Zhang, Y.; Wang, X.; Shi, C.; Jiang, X.; Ye, Y. Hyperbolic Graph Attention Network. IEEE Trans. Big Data 2022, 8, 1690–1701. [Google Scholar] [CrossRef]

- Zhang, Y.; Wang, X.; Shi, C.; Liu, N.; Song, G. Lorentzian Graph Convolutional Networks. In Proceedings of The Web Conference (WWW 2021), Ljubljana, Slovenia, 19–23 April 2021; ACM/IW3C2: Ljubljana, Slovenia, 2021; pp. 1249–1261. [Google Scholar] [CrossRef]

- Nickel, M.; Kiela, D. Poincaré Embeddings for Learning Hierarchical Representations. In Proceedings of the 31st Annual Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; nips.cc: Long Beach, CA, USA, 2017; pp. 6338–6347. [Google Scholar]

- Wang, X.; Zhang, Y.; Shi, C. Hyperbolic Heterogeneous Information Network Embedding. In Proceedings of the 33rd Conference on Artificial Intelligence (AAAI 2019), Honolulu, HI, USA, 27 January–1 February 2019; AAAI Press: Honolulu, HI, USA, 2019; pp. 5337–5344. [Google Scholar] [CrossRef]

- Kipf, T.N.; Welling, M. Variational Graph Auto-Encoders. arXiv 2016, arXiv:1611.07308. [Google Scholar]

- Zhang, D.; Yin, J.; Zhu, X.; Zhang, C. Network Representation Learning: A Survey. IEEE Trans. Big Data 2020, 6, 3–28. [Google Scholar] [CrossRef]

- Hamilton, W.L.; Ying, R.; Leskovec, J. Representation Learning on Graphs: Methods and Applications. arXiv 2017, arXiv:1709.05584. [Google Scholar]

- Cai, H.; Zheng, V.W.; Chang, K.C. A Comprehensive Survey of Graph Embedding: Problems, Techniques, and Applications. IEEE Trans. Knowl. Data Eng. 2018, 30, 1616–1637. [Google Scholar] [CrossRef]

- Zhou, C.; Liu, Y.; Liu, X.; Liu, Z.; Gao, J. Scalable Graph Embedding for Asymmetric Proximity. In Proceedings of the 31st Conference on Artificial Intelligence (AAAI 2017), San Francisco, CA, USA, 4–9 February 2017; AAAI Press: San Francisco, CA, USA, 2017; pp. 2942–2948. [Google Scholar]

- Man, T.; Shen, H.; Liu, S.; Jin, X.; Cheng, X. Predict Anchor Links across Social Networks via an Embedding Approach. In Proceedings of the 25th International Joint Conference on Artificial Intelligence (IJCAI 2016), New York, NY, USA, 9–15 July 2016; IJCAI/AAAI Press: New York, NY, USA, 2016; pp. 1823–1829. [Google Scholar]

- Gui, H.; Liu, J.; Tao, F.; Jiang, M.; Norick, B.; Han, J. Large-Scale Embedding Learning in Heterogeneous Event Data. In Proceedings of the 16th IEEE International Conference on Data Mining (ICDM 2016), Barcelona, Spain, 12–15 December 2016; IEEE Computer Society: Barcelona, Spain, 2016; pp. 907–912. [Google Scholar] [CrossRef]

- Goyal, P.; Ferrara, E. Graph embedding techniques, applications, and performance: A survey. Knowl.-Based Syst. 2018, 151, 78–94. [Google Scholar] [CrossRef]

- Casteigts, A.; Flocchini, P.; Quattrociocchi, W.; Santoro, N. Time-varying graphs and dynamic networks. Int. J. Parallel Emergent Distrib. Syst. 2012, 27, 387–408. [Google Scholar] [CrossRef]

- Li, J.; Dani, H.; Hu, X.; Tang, J.; Chang, Y.; Liu, H. Attributed Network Embedding for Learning in a Dynamic Environment. In Proceedings of the Conference on Information and Knowledge Management (CIKM 2017), Singapore, 6–10 November 2017; ACM: Singapore, 2017; pp. 387–396. [Google Scholar] [CrossRef]

- Chen, C.; Tao, Y.; Lin, H. Dynamic Network Embeddings for Network Evolution Analysis. arXiv 2019, arXiv:1906.09860. [Google Scholar]

- Shervashidze, N.; Vishwanathan, S.V.N.; Petri, T.; Mehlhorn, K.; Borgwardt, K.M. Efficient graphlet kernels for large graph comparison. In Proceedings of the 12th International Conference on Artificial Intelligence and Statistics (AISTATS 2009), Clearwater Beach, FL, USA, 16–18 April 2009; JMLR.org: Clearwater Beach, FL, USA, 2009; Volume 5, pp. 488–495. [Google Scholar]

- Togninalli, M.; Ghisu, M.E.; Llinares-López, F.; Rieck, B.; Borgwardt, K.M. Wasserstein Weisfeiler-Lehman Graph Kernels. In Proceedings of the 33rd Annual Conference on Neural Information Processing Systems (NeurIPS 2019), Vancouver, BC, Canada, 8–14 December 2019; NeurIPS: Vancouver, BC, Canada, 2019; pp. 6436–6446. [Google Scholar]

- Kang, U.; Tong, H.; Sun, J. Fast Random Walk Graph Kernel. In Proceedings of the 12th SIAM International Conference on Data Mining (SDM 2012), Anaheim, CA, USA, 26–28 April 2012; SIAM/Omnipress: Anaheim, CA, USA, 2012; pp. 828–838. [Google Scholar] [CrossRef]

- Zhang, Z.; Cui, P.; Wang, X.; Pei, J.; Yao, X.; Zhu, W. Arbitrary-Order Proximity Preserved Network Embedding. In Proceedings of the 24th International Conference on Knowledge Discovery & Data Mining (KDD 2018), London, UK, 19–23 August 2018; ACM: London, UK, 2018; pp. 2778–2786. [Google Scholar] [CrossRef]

- Dareddy, M.R.; Das, M.; Yang, H. motif2vec: Motif Aware Node Representation Learning for Heterogeneous Networks. In Proceedings of the International Conference on Big Data (IEEE BigData 2019), Los Angeles, CA, USA, 9–12 December 2019; IEEE: Los Angeles, CA, USA, 2019; pp. 1052–1059. [Google Scholar] [CrossRef]

- Tu, K.; Li, J.; Towsley, D.; Braines, D.; Turner, L.D. gl2vec: Learning feature representation using graphlets for directed networks. In Proceedings of the 19th International Conference on Advances in Social Networks Analysis and Mining (ASONAM 2019), Vancouver, BC, Canada, 27–30 August 2019; ACM: Vancouver, BC, Canada, 2019; pp. 216–221. [Google Scholar] [CrossRef]

- Yu, Y.; Lu, Z.; Liu, J.; Zhao, G.; Wen, J. RUM: Network Representation Learning Using Motifs. In Proceedings of the 35th International Conference on Data Engineering (ICDE 2019), Macao, China, 8–11 April 2019; IEEE: Macao, China, 2019; pp. 1382–1393. [Google Scholar] [CrossRef]

- Hu, Q.; Lin, F.; Wang, B.; Li, C. MBRep: Motif-Based Representation Learning in Heterogeneous Networks. Expert Syst. Appl. 2022, 190, 116031. [Google Scholar] [CrossRef]

- Shao, P.; Yang, Y.; Xu, S.; Wang, C. Network Embedding via Motifs. ACM Trans. Knowl. Discov. Data 2021, 16, 44. [Google Scholar] [CrossRef]

- De Winter, S.; Decuypere, T.; Mitrović, S.; Baesens, B.; De Weerdt, J. Combining Temporal Aspects of Dynamic Networks with Node2Vec for a More Efficient Dynamic Link Prediction. In Proceedings of the IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining (ASONAM 2018), Barcelona, Spain, 28–31 August 2018; IEEE Press: Barcelona, Spain, 2018; pp. 1234–1241. [Google Scholar]

- Huang, X.; Song, Q.; Li, Y.; Hu, X. Graph Recurrent Networks With Attributed Random Walks. In Proceedings of the 25th International Conference on Knowledge Discovery & Data Mining (KDD 2019), Anchorage, AK, USA, 4–8 August 2019; ACM: Anchorage, AK, USA, 2019; pp. 732–740. [Google Scholar] [CrossRef]

- Sperduti, A.; Starita, A. Supervised neural networks for the classification of structures. IEEE Trans. Neural Netw. 1997, 8, 714–735. [Google Scholar] [CrossRef] [PubMed]

- Chiang, W.; Liu, X.; Si, S.; Li, Y.; Bengio, S.; Hsieh, C. Cluster-GCN: An Efficient Algorithm for Training Deep and Large Graph Convolutional Networks. In Proceedings of the 25th International Conference on Knowledge Discovery & Data Mining (KDD 2019), Anchorage, AK, USA, 4–8 August 2019; ACM: Anchorage, AK, USA, 2019; pp. 257–266. [Google Scholar] [CrossRef]

- Defferrard, M.; Bresson, X.; Vandergheynst, P. Convolutional Neural Networks on Graphs with Fast Localized Spectral Filtering. In Proceedings of the 30th Annual Conference on Neural Information Processing Systems (NeurIPS 2016), Barcelona, Spain, 5–10 December 2016; NeurIPS: Barcelona, Spain, 2016; pp. 3837–3845. [Google Scholar]

- Li, Q.; Han, Z.; Wu, X. Deeper Insights Into Graph Convolutional Networks for Semi-Supervised Learning. In Proceedings of the 32nd Conference on Artificial Intelligence (AAAI 2018), New Orleans, LA, USA, 2–7 February 2018; McIlraith, S.A., Weinberger, K.Q., Eds.; AAAI Press: New Orleans, LA, USA, 2018; pp. 3538–3545. [Google Scholar]

- Li, G.; Xiong, C.; Thabet, A.K.; Ghanem, B. DeeperGCN: All You Need to Train Deeper GCNs. arXiv 2020, arXiv:2006.07739. [Google Scholar]

- Lin, K.; Wang, L.; Liu, Z. Mesh Graphormer. In Proceedings of the International Conference on Computer Vision (ICCV 2021), Montreal, QC, Canada, 10–17 October 2021; IEEE: Montreal, QC, Canada, 2021; pp. 12919–12928. [Google Scholar] [CrossRef]

- Rong, Y.; Bian, Y.; Xu, T.; Xie, W.; Wei, Y.; Huang, W.; Huang, J. Self-Supervised Graph Transformer on Large-Scale Molecular Data. In Proceedings of the 34th Annual Conference on Neural Information Processing Systems (NeurIPS 2020), Virtual Event, 6–12 December 2020; NeurIPS: Virtual event, 2020. [Google Scholar]

- Yao, S.; Wang, T.; Wan, X. Heterogeneous Graph Transformer for Graph-to-Sequence Learning. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics (ACL 2020), Virtual Event, 5–10 July 2020; ACL: Virtual Event, 2020; pp. 7145–7154. [Google Scholar] [CrossRef]

- Nickel, M.; Kiela, D. Learning Continuous Hierarchies in the Lorentz Model of Hyperbolic Geometry. In Proceedings of the 35th International Conference on Machine Learning (ICML 2018), Stockholm, Sweden, 10–15 July 2018; PMLR: Stockholm, Sweden, 2018; Volume 80, pp. 3776–3785. [Google Scholar]

- Cao, Z.; Xu, Q.; Yang, Z.; Cao, X.; Huang, Q. Geometry Interaction Knowledge Graph Embeddings. In Proceedings of the 36th Conference on Artificial Intelligence (AAAI 2022), Virtual Event, 22 February–1 March 2022; AAAI Press: Virtual Event, 2022; pp. 5521–5529. [Google Scholar]

- Dos Santos, L.; Piwowarski, B.; Gallinari, P. Multilabel Classification on Heterogeneous Graphs with Gaussian Embeddings. In Proceedings of the European Conference on Machine Learning and Knowledge Discovery in Databases (ECML PKDD 2016), Riva del Garda, Italy, 19–23 September 2016; Springer: Riva del Garda, Italy, 2016; Volume 9852, pp. 606–622. [Google Scholar] [CrossRef]

- Kriege, N.M. Comparing Graphs: Algorithms & Applications. Ph.D. Thesis, Technische Universität Dortmund, Dortmund, Germany, 2015. [Google Scholar]

- Kondor, R.; Shervashidze, N.; Borgwardt, K.M. The graphlet spectrum. In Proceedings of the 26th Annual International Conference on Machine Learning (ICML 2009), Montreal, QC, Canada, 14–18 June 2009; ACM: Montreal, QC, Canada, 2009; Volume 382, pp. 529–536. [Google Scholar] [CrossRef]

- Feragen, A.; Kasenburg, N.; Petersen, J.; de Bruijne, M.; Borgwardt, K.M. Scalable kernels for graphs with continuous attributes. In Proceedings of the 27th Annual Conference on Neural Information Processing Systems (NeurIPS 2013), Lake Tahoe, NV, USA, 5–8 December 2013; Burges, C.J.C., Bottou, L., Ghahramani, Z., Weinberger, K.Q., Eds.; NeurIPS: Lake Tahoe, NV, USA, 2013; pp. 216–224. [Google Scholar]

- Morris, C.; Kriege, N.M.; Kersting, K.; Mutzel, P. Faster Kernels for Graphs with Continuous Attributes via Hashing. In Proceedings of the 16th International Conference on Data Mining (ICDM 2016), Barcelona, Spain, 12–15 December 2016; IEEE Computer Society: Barcelona, Spain, 2016; pp. 1095–1100. [Google Scholar] [CrossRef]

- Borgwardt, K.M.; Kriegel, H. Shortest-Path Kernels on Graphs. In Proceedings of the 5th International Conference on Data Mining (ICDM 2005), Houston, TX, USA, 27–30 November 2005; IEEE Computer Society: Houston, TX, USA, 2005; pp. 74–81. [Google Scholar] [CrossRef]

- Kashima, H.; Tsuda, K.; Inokuchi, A. Marginalized Kernels Between Labeled Graphs. In Proceedings of the 20th International Conference on Machine Learning (ICML 2003), Washington, DC, USA, 21–24 August 2003; AAAI Press: Washington, DC, USA, 2003; pp. 321–328. [Google Scholar]

- Rahman, M.; Hasan, M.A. Link Prediction in Dynamic Networks Using Graphlet. In Proceedings of the European Conference on Machine Learning and Knowledge Discovery in Databases (ECML PKDD 2016), Riva del Garda, Italy, 19–23 September 2016; Springer: Riva del Garda, Italy, 2016; Volume 9851, pp. 394–409. [Google Scholar] [CrossRef]

- Rahman, M.; Saha, T.K.; Hasan, M.A.; Xu, K.S.; Reddy, C.K. DyLink2Vec: Effective Feature Representation for Link Prediction in Dynamic Networks. arXiv 2018, arXiv:1804.05755. [Google Scholar]

- Béres, F.; Kelen, D.M.; Pálovics, R.; Benczúr, A.A. Node embeddings in dynamic graphs. Appl. Netw. Sci. 2019, 4, 64.1–64.25. [Google Scholar] [CrossRef]

- Cuturi, M. Sinkhorn Distances: Lightspeed Computation of Optimal Transport. In Proceedings of the 27th Annual Conference on Neural Information Processing Systems (NeurIPS 2013), Lake Tahoe, NV, USA, 5–8 December 2013; NeurIPS: Lake Tahoe, NV, USA, 2013; pp. 2292–2300. [Google Scholar]

- Floyd, R.W. Algorithm 97: Shortest path. Commun. ACM 1962, 5, 345. [Google Scholar] [CrossRef]

- Kriege, N.M.; Johansson, F.D.; Morris, C. A survey on graph kernels. Appl. Netw. Sci. 2020, 5, 6. [Google Scholar] [CrossRef]

- Urry, M.; Sollich, P. Random walk kernels and learning curves for Gaussian process regression on random graphs. J. Mach. Learn. Res. 2013, 14, 1801–1835. [Google Scholar]

- Bunke, H.; Irniger, C.; Neuhaus, M. Graph Matching – Challenges and Potential Solutions. In Proceedings of the 13th International Conference on Image Analysis and Processing (ICIAP 2005), Cagliari, Italy, 6–8 September 2005; Springer: Cagliari, Italy, 2005; Volume 3617, pp. 1–10. [Google Scholar] [CrossRef]

- Hofmann, T.; Buhmann, J.M. Multidimensional Scaling and Data Clustering. In Advances in Neural Information Processing Systems 7 (NIPS 1994), Denver, CO, USA, 1 January 1994; Tesauro, G., Touretzky, D.S., Leen, T.K., Eds.; MIT Press: Denver, CO, USA, 1994; pp. 459–466. [Google Scholar]

- Han, Y.; Shen, Y. Partially Supervised Graph Embedding for Positive Unlabelled Feature Selection. In Proceedings of the 25th International Joint Conference on Artificial Intelligence (IJCAI 2016), New York, NY, USA, 9–15 July 2016; IJCAI/AAAI Press: New York, NY, USA, 2016; pp. 1548–1554. [Google Scholar]

- Cai, D.; He, X.; Han, J. Spectral regression: A unified subspace learning framework for content-based image retrieval. In Proceedings of the 15th International Conference on Multimedia 2007 (ICM 2007), Augsburg, Germany, 24–29 September 2007; ACM: Augsburg, Germany, 2007; pp. 403–412. [Google Scholar] [CrossRef]

- Torres, L.; Chan, K.S.; Eliassi-Rad, T. GLEE: Geometric Laplacian Eigenmap Embedding. J. Complex Netw. 2020, 8, cnaa007. [Google Scholar] [CrossRef]

- Li, Y.; Wang, Y.; Zhang, T.; Zhang, J.; Chang, Y. Learning Network Embedding with Community Structural Information. In Proceedings of the 28th International Joint Conference on Artificial Intelligence (IJCAI 2019), Macao, China, 10–16 August 2019; ijcai.org: Macao, China, 2019; pp. 2937–2943. [Google Scholar] [CrossRef]

- Coskun, M. A high order proximity measure for linear network embedding. Niğde Ömer Halisdemir Üniversitesi Mühendislik Bilim. Derg. 2022, 11, 477–483. [Google Scholar] [CrossRef]

- Ahmed, A.; Shervashidze, N.; Narayanamurthy, S.M.; Josifovski, V.; Smola, A.J. Distributed large-scale natural graph factorization. In Proceedings of the 22nd International World Wide Web Conference (WWW 2013), Rio de Janeiro, Brazil, 13–17 May 2013; International World Wide Web Conferences Steering Committee/ACM: Rio de Janeiro, Brazil, 2013; pp. 37–48. [Google Scholar] [CrossRef]

- Lian, D.; Zhu, Z.; Zheng, K.; Ge, Y.; Xie, X.; Chen, E. Network Representation Lightening from Hashing to Quantization. IEEE Trans. Knowl. Data Eng. 2022, 35, 5119–5131. [Google Scholar] [CrossRef]

- Katz, L. A new status index derived from sociometric analysis. Psychometrika 1953, 18, 39–43. [Google Scholar] [CrossRef]

- Page, L.; Brin, S.; Motwani, R.; Winograd, T. The PageRank Citation Ranking: Bringing Order to the Web; Technical Report 1999-66 (previous number SIDL-WP-1999-0120); Stanford InfoLab: Stanford, CA, USA, 1999. [Google Scholar]

- Adamic, L.A.; Adar, E. Friends and neighbors on the Web. Soc. Netw. 2003, 25, 211–230. [Google Scholar] [CrossRef]

- Zhao, Y.; Liu, Z.; Sun, M. Representation Learning for Measuring Entity Relatedness with Rich Information. In Proceedings of the 24th International Joint Conference on Artificial Intelligence (IJCAI 2015), Buenos Aires, Argentina, 25–31 July 2015; AAAI Press: Buenos Aires, Argentina, 2015; pp. 1412–1418. [Google Scholar]

- Yang, C.; Liu, Z.; Zhao, D.; Sun, M.; Chang, E.Y. Network Representation Learning with Rich Text Information. In Proceedings of the 24th International Joint Conference on Artificial Intelligence (IJCAI 2015), Buenos Aires, Argentina, 25–31 July 2015; AAAI Press: Buenos Aires, Argentina, 2015; pp. 2111–2117. [Google Scholar]

- Yang, R.; Shi, J.; Xiao, X.; Yang, Y.; Bhowmick, S.S. Homogeneous Network Embedding for Massive Graphs via Reweighted Personalized PageRank. Proc. VLDB Endow. 2020, 13, 670–683. [Google Scholar] [CrossRef]

- Qiu, J.; Dong, Y.; Ma, H.; Li, J.; Wang, C.; Wang, K.; Tang, J. NetSMF: Large-Scale Network Embedding as Sparse Matrix Factorization. In Proceedings of the World Wide Web Conference (WWW 2019), San Francisco, CA, USA, 13–17 May 2019; ACM: New York, NY, USA, 2019; pp. 1509–1520. [Google Scholar] [CrossRef]

- Rossi, R.A.; Gallagher, B.; Neville, J.; Henderson, K. Modeling dynamic behavior in large evolving graphs. In Proceedings of the 6th International Conference on Web Search and Data Mining (WSDM 2013), Rome, Italy, 4–8 February 2013; ACM: Rome, Italy, 2013; pp. 667–676. [Google Scholar] [CrossRef]

- Yu, W.; Aggarwal, C.C.; Wang, W. Temporally Factorized Network Modeling for Evolutionary Network Analysis. In Proceedings of the 10th International Conference on Web Search and Data Mining (WSDM 2017), Cambridge, UK, 6–10 February 2017; ACM: Cambridge, UK, 2017; pp. 455–464. [Google Scholar] [CrossRef]

- Zhu, L.; Guo, D.; Yin, J.; Ver Steeg, G.; Galstyan, A. Scalable Temporal Latent Space Inference for Link Prediction in Dynamic Social Networks. IEEE Trans. Knowl. Data Eng. 2016, 28, 2765–2777. [Google Scholar] [CrossRef]

- Yu, W.; Cheng, W.; Aggarwal, C.C.; Chen, H.; Wang, W. Link Prediction with Spatial and Temporal Consistency in Dynamic Networks. In Proceedings of the 26th International Joint Conference on Artificial Intelligence (IJCAI 2017), Melbourne, Australia, 19–25 August 2017; ijcai.org: Melbourne, Australia, 2017; pp. 3343–3349. [Google Scholar] [CrossRef]

- Chen, H.; Yin, H.; Wang, W.; Wang, H.; Nguyen, Q.V.H.; Li, X. PME: Projected Metric Embedding on Heterogeneous Networks for Link Prediction. In Proceedings of the 24th International Conference on Knowledge Discovery & Data Mining (KDD 2018), London, UK, 19–23 August 2018; ACM: London, UK, 2018; pp. 1177–1186. [Google Scholar] [CrossRef]

- Xu, L.; Wei, X.; Cao, J.; Yu, P.S. Embedding of Embedding (EOE): Joint Embedding for Coupled Heterogeneous Networks. In Proceedings of the 10th International Conference on Web Search and Data Mining (WSDM 2017), Cambridge, UK, 6–10 February 2017; ACM: Cambridge, UK, 2017; pp. 741–749. [Google Scholar] [CrossRef]

- Shi, Y.; Gui, H.; Zhu, Q.; Kaplan, L.M.; Han, J. AspEm: Embedding Learning by Aspects in Heterogeneous Information Networks. In Proceedings of the SIAM International Conference on Data Mining (SDM 2018), San Diego, CA, USA, 3–5 May 2018; SIAM: San Diego, CA, USA, 2018; pp. 144–152. [Google Scholar] [CrossRef]

- Matsuno, R.; Murata, T. MELL: Effective Embedding Method for Multiplex Networks. In Companion Proceedings of the Web Conference 2018 (WWW 2018), Lyon, France, 23–27 April 2018; International World Wide Web Conferences Steering Committee: Geneva, Switzerland; ACM: Lyon, France, 2018; pp. 1261–1268. [Google Scholar] [CrossRef]

- Ren, X.; He, W.; Qu, M.; Voss, C.R.; Ji, H.; Han, J. Label Noise Reduction in Entity Typing by Heterogeneous Partial-Label Embedding. In Proceedings of the 22nd International Conference on Knowledge Discovery and Data Mining (KDD 2016), San Francisco, CA, USA, 13–17 August 2016; ACM: San Francisco, CA, USA, 2016; pp. 1825–1834. [Google Scholar] [CrossRef]

- Dettmers, T.; Minervini, P.; Stenetorp, P.; Riedel, S. Convolutional 2D Knowledge Graph Embeddings. In Proceedings of the 32nd Conference on Artificial Intelligence (AAAI 2018), New Orleans, LA, USA, 2–7 February 2018; AAAI Press: New Orleans, LA, USA, 2018; pp. 1811–1818. [Google Scholar]

- Safavi, T.; Koutra, D. CoDEx: A Comprehensive Knowledge Graph Completion Benchmark. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP 2020), Virtual Event, 16–20 November 2020; Webber, B., Cohn, T., He, Y., Liu, Y., Eds.; ACL: Virtual Event, 2020; pp. 8328–8350. [Google Scholar] [CrossRef]

- Narayanan, A.; Chandramohan, M.; Chen, L.; Liu, Y.; Saminathan, S. subgraph2vec: Learning Distributed Representations of Rooted Sub-graphs from Large Graphs. arXiv 2016, arXiv:1606.08928.

- Wang, C.; Wang, C.; Wang, Z.; Ye, X.; Yu, P.S. Edge2vec: Edge-based Social Network Embedding. ACM Trans. Knowl. Discov. Data 2020, 14, 45.1–45.24.

- Perozzi, B.; Kulkarni, V.; Chen, H.; Skiena, S. Don’t Walk, Skip!: Online Learning of Multi-scale Network Embeddings. In Proceedings of the International Conference on Advances in Social Networks Analysis and Mining 2017 (ASONAM 2017), Sydney, Australia, 31 July–3 August 2017; ACM: Sydney, Australia, 2017; pp. 258–265.

- Liu, R.; Hirn, M.J.; Krishnan, A. Accurately Modeling Biased Random Walks on Weighted Graphs Using Node2vec+. arXiv 2021, arXiv:2109.08031.

- Jeong, J.; Yun, J.; Keam, H.; Park, Y.; Park, Z.; Cho, J. div2vec: Diversity-Emphasized Node Embedding. In Proceedings of the Workshops on Recommendation in Complex Scenarios and the Impact of Recommender Systems, co-located with the 14th ACM Conference on Recommender Systems (RecSys 2020), Virtual Event, 25 September 2020; CEUR-WS.org: Virtual Event, 2020; Volume 2697.

- Zhang, Y.; Shi, Z.; Feng, D.; Zhan, X.X. Degree-Biased Random Walk for Large-Scale Network Embedding. Future Gener. Comput. Syst. 2019, 100, 198–209.

- Ahmed, N.K.; Rossi, R.A.; Lee, J.B.; Willke, T.L.; Zhou, R.; Kong, X.; Eldardiry, H. Role-Based Graph Embeddings. IEEE Trans. Knowl. Data Eng. 2022, 34, 2401–2415.

- Khosla, M.; Leonhardt, J.; Nejdl, W.; Anand, A. Node Representation Learning for Directed Graphs. In Proceedings of the European Conference on Machine Learning and Knowledge Discovery in Databases (ECML PKDD 2019), Würzburg, Germany, 16–20 September 2019; Springer: Würzburg, Germany, 2019; Volume 11906, pp. 395–411.

- Adhikari, B.; Zhang, Y.; Ramakrishnan, N.; Prakash, B.A. Sub2Vec: Feature Learning for Subgraphs. In Proceedings of the 22nd Pacific-Asia Conference on Knowledge Discovery and Data Mining (PAKDD 2018), Melbourne, VIC, Australia, 3–6 June 2018; Springer: Melbourne, VIC, Australia, 2018; Volume 10938, pp. 170–182.

- Sheikh, N.; Kefato, Z.; Montresor, A. Gat2vec: Representation Learning for Attributed Graphs. Computing 2019, 101, 187–209.

- Dou, W.; Zhang, W.; Weng, Z. An Attributed Network Representation Learning Method Based on Biased Random Walk. Procedia Comput. Sci. 2020, 174, 291–298.

- Narayanan, A.; Chandramohan, M.; Venkatesan, R.; Chen, L.; Liu, Y.; Jaiswal, S. graph2vec: Learning Distributed Representations of Graphs. arXiv 2017, arXiv:1707.05005.

- Yang, D.; Qu, B.; Yang, J.; Cudré-Mauroux, P. Revisiting User Mobility and Social Relationships in LBSNs: A Hypergraph Embedding Approach. In Proceedings of the World Wide Web Conference (WWW 2019), San Francisco, CA, USA, 13–17 May 2019; ACM: San Francisco, CA, USA, 2019; pp. 2147–2157.

- Zhu, Y.; Guan, Z.; Tan, S.; Liu, H.; Cai, D.; He, X. Heterogeneous hypergraph embedding for document recommendation. Neurocomputing 2016, 216, 150–162.

- Hussein, R.; Yang, D.; Cudré-Mauroux, P. Are Meta-Paths Necessary?: Revisiting Heterogeneous Graph Embeddings. In Proceedings of the 27th International Conference on Information and Knowledge Management (CIKM 2018), Torino, Italy, 22–26 October 2018; ACM: Torino, Italy, 2018; pp. 437–446.

- Zhang, H.; Qiu, L.; Yi, L.; Song, Y. Scalable Multiplex Network Embedding. In Proceedings of the 27th International Joint Conference on Artificial Intelligence (IJCAI 2018), Stockholm, Sweden, 13–19 July 2018; ijcai.org: Stockholm, Sweden, 2018; pp. 3082–3088.

- Lee, S.; Park, C.; Yu, H. BHIN2vec: Balancing the Type of Relation in Heterogeneous Information Network. In Proceedings of the 28th International Conference on Information and Knowledge Management (CIKM 2019), Beijing, China, 3–7 November 2019; ACM: Beijing, China, 2019; pp. 619–628.

- Li, Y.; Patra, J.C. Genome-wide inferring gene-phenotype relationship by walking on the heterogeneous network. Bioinformatics 2010, 26, 1219–1224.

- Joodaki, M.; Ghadiri, N.; Maleki, Z.; Shahreza, M.L. A scalable random walk with restart on heterogeneous networks with Apache Spark for ranking disease-related genes through type-II fuzzy data fusion. J. Biomed. Informatics 2021, 115, 103688.

- Tian, Z.; Guo, M.; Wang, C.; Xing, L.; Wang, L.; Zhang, Y. Constructing an integrated gene similarity network for the identification of disease genes. In Proceedings of the International Conference on Bioinformatics and Biomedicine (BIBM 2016), Shenzhen, China, 15–18 December 2016; IEEE Computer Society: Shenzhen, China, 2016; pp. 1663–1668.

- Luo, J.; Liang, S. Prioritization of potential candidate disease genes by topological similarity of protein-protein interaction network and phenotype data. J. Biomed. Informatics 2015, 53, 229–236.

- Lee, O.-J.; Jeon, H.; Jung, J.J. Learning multi-resolution representations of research patterns in bibliographic networks. J. Informetr. 2021, 15, 101126.

- Du, B.; Tong, H. MrMine: Multi-resolution Multi-network Embedding. In Proceedings of the 28th International Conference on Information and Knowledge Management (CIKM 2019), Beijing, China, 3–7 November 2019; ACM: Beijing, China, 2019; pp. 479–488.

- Lee, O.-J.; Jung, J.J. Story embedding: Learning distributed representations of stories based on character networks. Artif. Intell. 2020, 281, 103235.

- Sajjad, H.P.; Docherty, A.; Tyshetskiy, Y. Efficient Representation Learning Using Random Walks for Dynamic Graphs. arXiv 2019, arXiv:1901.01346.

- Heidari, F.; Papagelis, M. EvoNRL: Evolving Network Representation Learning Based on Random Walks. In Proceedings of the 7th International Conference on Complex Networks and Their Applications (COMPLEX NETWORKS 2018), Cambridge, UK, 11–13 December 2018; Springer: Cambridge, UK, 2018; Volume 812, pp. 457–469.

- Nguyen, G.H.; Lee, J.B.; Rossi, R.A.; Ahmed, N.K.; Koh, E.; Kim, S. Continuous-Time Dynamic Network Embeddings. In Proceedings of the Companion of The Web Conference (WWW 2018), Lyon, France, 23–27 April 2018; ACM: Lyon, France, 2018; pp. 969–976.

- Pandhre, S.; Mittal, H.; Gupta, M.; Balasubramanian, V.N. STwalk: Learning trajectory representations in temporal graphs. In Proceedings of the India Joint International Conference on Data Science and Management of Data (COMAD/CODS 2018), Goa, India, 11–13 January 2018; ACM: Goa, India, 2018; pp. 210–219.

- Singer, U.; Guy, I.; Radinsky, K. Node Embedding over Temporal Graphs. In Proceedings of the 28th International Joint Conference on Artificial Intelligence (IJCAI 2019), Macao, China, 10–16 August 2019; ijcai.org: Macao, China, 2019; pp. 4605–4612.

- Mahdavi, S.; Khoshraftar, S.; An, A. dynnode2vec: Scalable Dynamic Network Embedding. In Proceedings of the International Conference on Big Data (IEEE BigData 2018), Seattle, WA, USA, 10–13 December 2018; IEEE: Seattle, WA, USA, 2018; pp. 3762–3765.

- Lin, D.; Wu, J.; Yuan, Q.; Zheng, Z. T-EDGE: Temporal WEighted MultiDiGraph Embedding for Ethereum Transaction Network Analysis. Front. Phys. 2020, 8, 204.

- Lee, O.-J.; Jung, J.J.; Kim, J. Learning Hierarchical Representations of Stories by Using Multi-Layered Structures in Narrative Multimedia. Sensors 2020, 20, 1978.

- Lee, O.-J.; Jung, J.J. Story Embedding: Learning Distributed Representations of Stories based on Character Networks (Extended Abstract). In Proceedings of the 29th International Joint Conference on Artificial Intelligence (IJCAI 2020), Yokohama, Japan, 7–15 January 2021; Bessiere, C., Ed.; ijcai.org: Yokohama, Japan, 2020; pp. 5070–5074.

- Chen, H.; Perozzi, B.; Hu, Y.; Skiena, S. HARP: Hierarchical Representation Learning for Networks. In Proceedings of the 32nd Conference on Artificial Intelligence (AAAI 2018), New Orleans, LA, USA, 2–7 February 2018; AAAI Press: New Orleans, LA, USA, 2018; pp. 2127–2134.

- Tang, J.; Qu, M.; Mei, Q. PTE: Predictive Text Embedding through Large-scale Heterogeneous Text Networks. In Proceedings of the 21st International Conference on Knowledge Discovery and Data Mining (SIGKDD 2015), Sydney, Australia, 10–13 August 2015; ACM: Sydney, Australia, 2015; pp. 1165–1174.

- Fu, T.; Lee, W.; Lei, Z. HIN2Vec: Explore Meta-paths in Heterogeneous Information Networks for Representation Learning. In Proceedings of the Conference on Information and Knowledge Management (CIKM 2017), Singapore, 6–10 November 2017; ACM: Singapore, 2017; pp. 1797–1806.

- Kullback, S.; Leibler, R.A. On Information and Sufficiency. Ann. Math. Stat. 1951, 22, 79–86.

- Wei, X.; Xu, L.; Cao, B.; Yu, P.S. Cross View Link Prediction by Learning Noise-resilient Representation Consensus. In Proceedings of the 26th International Conference on World Wide Web (WWW 2017), Perth, Australia, 3–7 April 2017; ACM: Perth, Australia, 2017; pp. 1611–1619.

- Liu, L.; Cheung, W.K.; Li, X.; Liao, L. Aligning Users across Social Networks Using Network Embedding. In Proceedings of the 25th International Joint Conference on Artificial Intelligence (IJCAI 2016), New York, NY, USA, 9–15 July 2016; IJCAI/AAAI Press: New York, NY, USA, 2016; pp. 1774–1780.

- Wang, S.; Tang, J.; Aggarwal, C.C.; Chang, Y.; Liu, H. Signed Network Embedding in Social Media. In Proceedings of the International Conference on Data Mining (SDM 2017), Houston, TX, USA, 27–29 April 2017; SIAM: Houston, TX, USA, 2017; pp. 327–335.

- Huang, Z.; Mamoulis, N. Heterogeneous Information Network Embedding for Meta Path based Proximity. arXiv 2017, arXiv:1701.05291.

- Zhang, Q.; Wang, H. Not All Links Are Created Equal: An Adaptive Embedding Approach for Social Personalized Ranking. In Proceedings of the 39th International Conference on Research and Development in Information Retrieval (SIGIR 2016), Pisa, Italy, 17–21 July 2016; ACM: Pisa, Italy, 2016; pp. 917–920.

- Wang, J.; Lu, Z.; Song, G.; Fan, Y.; Du, L.; Lin, W. Tag2Vec: Learning Tag Representations in Tag Networks. In Proceedings of the World Wide Web Conference (WWW 2019), San Francisco, CA, USA, 13–17 May 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 3314–3320.

- Fu, G.; Yuan, B.; Duan, Q.; Yao, X. Representation Learning for Heterogeneous Information Networks via Embedding Events. In Proceedings of the 26th International Conference on Neural Information Processing (ICONIP 2019), Sydney, Australia, 12–15 December 2019; Springer: Sydney, Australia, 2019; Volume 11953, pp. 327–339.

- Wu, X.; Pang, H.; Fan, Y.; Linghu, Y.; Luo, Y. ProbWalk: A random walk approach in weighted graph embedding. In Proceedings of the 10th International Conference of Information and Communication Technology (ICICT 2020), Wuhan, China, 13–15 November 2020; Elsevier Procedia: Wuhan, China, 2021; Volume 183, pp. 683–689.

- Li, Q.; Cao, Z.; Zhong, J.; Li, Q. Graph Representation Learning with Encoding Edges. Neurocomputing 2019, 361, 29–39.

- Li, Q.; Zhong, J.; Li, Q.; Cao, Z.; Wang, C. Enhancing Network Embedding with Implicit Clustering. In Proceedings of the 24th Database Systems for Advanced Applications (DASFAA 2019), Chiang Mai, Thailand, 22–25 April 2019; Springer: Chiang Mai, Thailand, 2019; Volume 11446, pp. 452–467.

- Gao, H.; Huang, H. Deep Attributed Network Embedding. In Proceedings of the 27th International Joint Conference on Artificial Intelligence (IJCAI 2018), Stockholm, Sweden, 13–19 July 2018; Lang, J., Ed.; ijcai.org: Stockholm, Sweden, 2018; pp. 3364–3370.

- Sun, X.; Guo, J.; Ding, X.; Liu, T. A General Framework for Content-enhanced Network Representation Learning. arXiv 2016, arXiv:1610.02906.

- Zhang, D.; Yin, J.; Zhu, X.; Zhang, C. Homophily, Structure, and Content Augmented Network Representation Learning. In Proceedings of the 16th International Conference on Data Mining (ICDM 2016), Barcelona, Spain, 12–15 December 2016; Bonchi, F., Domingo-Ferrer, J., Baeza-Yates, R., Zhou, Z., Wu, X., Eds.; IEEE Computer Society: Barcelona, Spain, 2016; pp. 609–618.

- Chen, H.; Anantharam, A.R.; Skiena, S. DeepBrowse: Similarity-Based Browsing Through Large Lists (Extended Abstract). In Proceedings of the 10th International Conference on Similarity Search and Applications (SISAP 2017), Munich, Germany, 4–6 October 2017; Beecks, C., Borutta, F., Kröger, P., Seidl, T., Eds.; Springer: Munich, Germany, 2017; Volume 10609, pp. 300–314.

- Cao, S.; Lu, W.; Xu, Q. Deep Neural Networks for Learning Graph Representations. In Proceedings of the 30th Conference on Artificial Intelligence (AAAI 2016), Phoenix, AZ, USA, 12–17 February 2016; Schuurmans, D., Wellman, M.P., Eds.; AAAI Press: Phoenix, AZ, USA, 2016; pp. 1145–1152.

- Shen, X.; Chung, F. Deep Network Embedding for Graph Representation Learning in Signed Networks. IEEE Trans. Cybern. 2020, 50, 1556–1568.

- Goyal, P.; Kamra, N.; He, X.; Liu, Y. DynGEM: Deep Embedding Method for Dynamic Graphs. arXiv 2018, arXiv:1805.11273.