Multi-Head Spatiotemporal Attention Graph Convolutional Network for Traffic Prediction

Abstract

1. Introduction

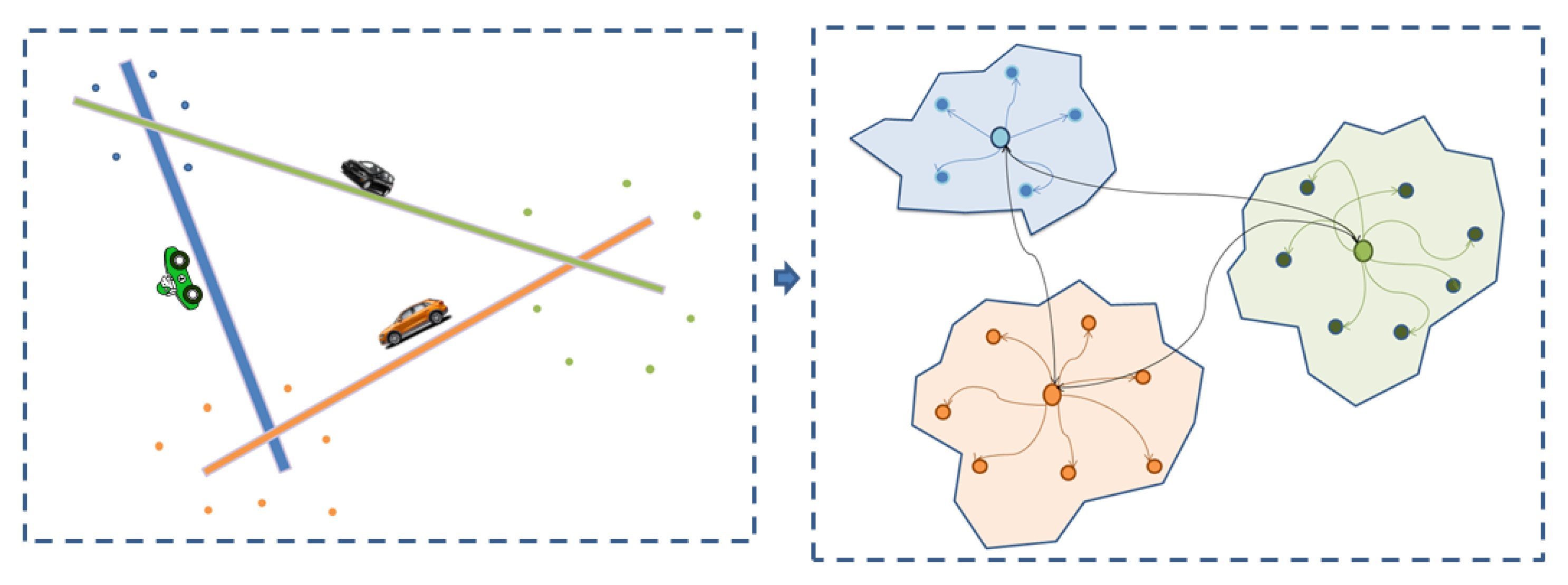

- This study proposes a spatiotemporal multi-head attention graph convolutional network that models the non-Euclidean and temporal interrelationships among roads in a network in order to predict traffic flow.

- The proposed model encodes traffic features as learned embeddings with a graph convolutional network and then feeds these embeddings, as time series, into a recurrent network whose internal states and memory model the temporal dependencies.

- This study estimates the importance of features with a multi-head attention mechanism, which generates a context vector of attention weights that emphasizes the features most relevant to the traffic forecast at a particular time.
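The three contributions above describe a pipeline of graph convolution, recurrence, and multi-head attention. The following is a minimal NumPy sketch of that data flow, not the authors' implementation: the layer sizes, the random weights, the choice to average the attended states into a context vector, and every function and parameter name (`forward`, `init_params`, `Wg`, `Wo`, and so on) are assumptions made for illustration.

```python
import numpy as np

def normalized_adjacency(adj):
    """Symmetric normalization D^{-1/2} (A + I) D^{-1/2}."""
    a_hat = adj + np.eye(adj.shape[0])            # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    e = np.exp(z - z.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def gru_step(x_t, h_prev, p):
    """One GRU update (biases omitted for brevity)."""
    xh = np.concatenate([x_t, h_prev], axis=-1)
    u = sigmoid(xh @ p["Wu"])                     # update gate
    r = sigmoid(xh @ p["Wr"])                     # reset gate
    cand = np.tanh(np.concatenate([x_t, r * h_prev], axis=-1) @ p["Wc"])
    return u * h_prev + (1.0 - u) * cand

def multi_head_attention(h_seq, p, num_heads):
    """Scaled dot-product self-attention over time. h_seq: (N, T, H)."""
    n, t, h = h_seq.shape
    d = h // num_heads
    def split(z):                                 # (N, T, H) -> (N, heads, T, d)
        return z.reshape(n, t, num_heads, d).transpose(0, 2, 1, 3)
    q, k, v = (split(h_seq @ p[name]) for name in ("Wq", "Wk", "Wv"))
    weights = softmax(q @ k.transpose(0, 1, 3, 2) / np.sqrt(d))  # (N, heads, T, T)
    merged = (weights @ v).transpose(0, 2, 1, 3).reshape(n, t, h)
    return merged.mean(axis=1), weights           # context vector per node: (N, H)

def forward(x_seq, adj, p, num_heads=2):
    """x_seq: (T, N, F) traffic features; returns (N, horizon) predictions."""
    a_norm = normalized_adjacency(adj)
    h = np.zeros((adj.shape[0], p["Wu"].shape[1]))
    states = []
    for x_t in x_seq:
        g_t = np.maximum(a_norm @ x_t @ p["Wg"], 0.0)  # spatial embedding (ReLU)
        h = gru_step(g_t, h, p)                        # temporal update
        states.append(h)
    h_seq = np.stack(states, axis=1)                   # (N, T, H)
    context, weights = multi_head_attention(h_seq, p, num_heads)
    return context @ p["Wo"], weights

def init_params(f, g, h, horizon, seed=0):
    """Random weights for the sketch; a real model would learn these."""
    rng = np.random.default_rng(seed)
    shapes = {"Wg": (f, g), "Wu": (g + h, h), "Wr": (g + h, h), "Wc": (g + h, h),
              "Wq": (h, h), "Wk": (h, h), "Wv": (h, h), "Wo": (h, horizon)}
    return {name: rng.normal(scale=0.1, size=s) for name, s in shapes.items()}
```

With a three-road chain graph and 12 historical steps, `forward(x_seq, adj, init_params(1, 4, 8, 3))` returns one row of three forecast values per road, together with the per-head attention weights over the 12 time steps.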

2. Related Work

2.1. Traffic Prediction

2.2. Graph Convolutional Networks

2.3. Attention Mechanism

3. Multi-Head Spatiotemporal Attention GCN

3.1. Graph Convolutional Network (GCN)

3.2. Gated Recurrent Unit (GRU)

3.3. Attention Model

3.4. Loss Function

3.5. Overview

4. Experimental Analysis

4.1. Dataset

4.2. Metrics

- Mean absolute error (MAE): The MAE, also known as the $L_1$-norm loss, is the mean of the absolute errors, that is, the absolute differences between the predicted values and the actual values. The MAE is non-negative and weights every error linearly, so it is less dominated by large errors than squared-error metrics. The best MAE score is 0.0, and it is computed as shown in Equation (12).

- Root mean square error (RMSE): The RMSE is the square root of the mean of the squared errors, and it measures the standard deviation of the prediction errors, i.e., how spread out the errors are. It indicates how concentrated the data are around the line of best fit and is computed as shown in Equation (13).

- Coefficient of determination ($R^2$): The $R^2$ score expresses the proportion of the variance in the actual values that the model explains. The best score is 1, and it measures the model’s capability to predict new data correctly. It is computed as shown in Equation (14).

- Explained variation (VAR): Explained variation measures the proportion of the variation in the data that the model accounts for, as opposed to the error variance. It is computed as shown in Equation (15).

- Accuracy: Accuracy describes how close the model prediction is to the actual values, whereby a perfect accuracy would result in a score of 1. It is defined as shown in Equation (16).

In Equations (12)–(16), $y$ is the actual traffic value, $\hat{y}$ is the predicted value, $\bar{y}$ is the average of $y$, and $\|\cdot\|_F$ represents the Frobenius norm.
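The five metrics can be sketched in NumPy as follows. Equations (12) to (16) are not reproduced in this text, so the formulas below are assumed to be the forms commonly used in related traffic forecasting work (for example, accuracy as one minus the ratio of Frobenius norms); the function name `traffic_metrics` is hypothetical.

```python
import numpy as np

def traffic_metrics(y_true, y_pred):
    """Return MAE, RMSE, R^2, explained variance, and accuracy.

    y_true, y_pred: arrays of the same shape, e.g. (time steps, roads).
    The formulas are assumed standard definitions, not copied from the paper.
    """
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_true - y_pred

    mae = np.mean(np.abs(err))                       # mean absolute error
    rmse = np.sqrt(np.mean(err ** 2))                # root mean square error
    # R^2: one minus residual sum of squares over total sum of squares
    r2 = 1.0 - np.sum(err ** 2) / np.sum((y_true - y_true.mean()) ** 2)
    # Explained variance: like R^2, but insensitive to a constant bias
    var = 1.0 - np.var(err) / np.var(y_true)
    # Accuracy: np.linalg.norm defaults to the Frobenius norm for 2-D input
    accuracy = 1.0 - np.linalg.norm(err) / np.linalg.norm(y_true)

    return {"MAE": mae, "RMSE": rmse, "R2": r2, "Var": var, "Accuracy": accuracy}
```

Under these definitions, a prediction that is off by a constant offset keeps an explained variance of 1 while its $R^2$ drops, which is one reason the two scores can differ slightly for the same model.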

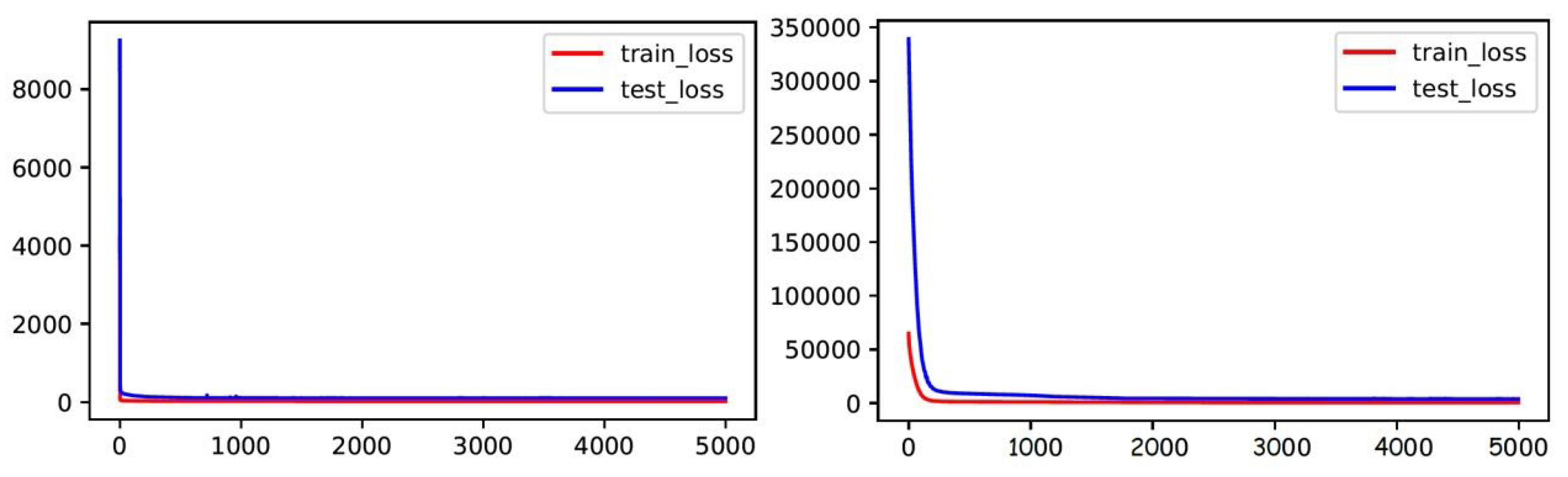

4.3. Training Details

4.4. Baselines

- History average model (HA) [48]: this technique computes the prediction by grouping the available historical data into periods and averaging them.

- Graph convolutional network model (GCN) [49]: the GCN model uses the feature and adjacency matrix attributes to model the spatial features of the traffic data by treating the roads as nodes alongside their connectivity.

- Gated recurrent unit model (GRU) [34]: to handle the temporal complexity of the urban roads, a recurrent model with a gated flow of states is implemented to follow the sequential time analysis.
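As an illustration of the simplest baseline above, the history average can be sketched as a per-slot mean over repeated periods. This is one plausible reading of "classifying into periods", not the exact procedure of [48]; the function name `historical_average` and the `period` parameter are hypothetical.

```python
import numpy as np

def historical_average(history, period):
    """Average past observations slot by slot over a repeating period.

    history: (T, N) array of T past time steps for N roads.
    period:  number of time steps in one cycle (assumed, e.g. steps per day).
    Returns a (period, N) profile; the forecast for a future step t is
    profile[t % period].
    """
    history = np.asarray(history, dtype=float)
    if history.shape[0] < period:
        raise ValueError("need at least one full period of history")
    slots = np.arange(history.shape[0]) % period   # slot index of each step
    return np.stack([history[slots == s].mean(axis=0) for s in range(period)])
```

Because such a profile does not depend on the forecast horizon, a baseline of this kind yields the same score at every horizon.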

4.5. Experimental Results

4.6. Ablation Study

- Temporal graph convolutional network (T-GCN) [34]: this technique also combines a recurrent network with a graph convolutional network to manage the complex spatiotemporal topological structure; however, no attention model is used.

- Attention temporal graph convolutional network (A3T-GCN) [38]: the A3T-GCN model explores the impact of a different attention mechanism (soft attention model) on traffic forecasts.
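For contrast with multi-head attention, a single-head soft attention over a sequence of hidden states can be sketched as below. This is a generic formulation under stated assumptions (a small two-layer scoring function with a `tanh` nonlinearity), not necessarily the exact scoring used in [38]; all names and shapes are hypothetical.

```python
import numpy as np

def soft_attention_context(h_seq, w1, b1, w2, b2):
    """Weight hidden states by a learned relevance score and sum them.

    h_seq: (T, H) hidden states of one road over T time steps.
    w1: (H, A), b1: (A,), w2: (A,), b2: scalar (assumed scoring parameters).
    Returns the context vector (H,) and the attention weights (T,).
    """
    scores = np.tanh(h_seq @ w1 + b1) @ w2 + b2    # one score per time step
    exp = np.exp(scores - scores.max())            # numerically stable softmax
    weights = exp / exp.sum()
    return weights @ h_seq, weights
```

A single set of weights gives one view of which time steps matter; a multi-head variant computes several such weightings in parallel subspaces and combines them.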

4.7. Visualization Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Zheng, C.; Fan, X.; Wang, C.; Qi, J. GMAN: A Graph Multi-Attention Network for Traffic Prediction. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence (AAAI 20), New York, NY, USA, 7–12 February 2020.
2. Chen, J.; Liao, S.; Hou, J.; Wang, K.; Wen, J. GST-GCN: A Geographic-Semantic-Temporal Graph Convolutional Network for Context-aware Traffic Flow Prediction on Graph Sequences. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics (SMC), Toronto, ON, Canada, 11–14 October 2020; pp. 1604–1609.
3. Yao, H.; Tang, X.; Wei, H.; Zheng, G.; Li, Z. Revisiting Spatial-Temporal Similarity: A Deep Learning Framework for Traffic Prediction. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI ’19), Honolulu, HI, USA, 27 January–1 February 2019.
4. Dai, R.; Xu, S.; Gu, Q.; Ji, C.; Liu, K. Hybrid Spatio-Temporal Graph Convolutional Network: Improving Traffic Prediction with Navigation Data. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Long Beach, CA, USA, 6–10 July 2020.
5. Tawfeek, M.; El-Basyouny, K. Estimating Traffic Volume on Minor Roads at Rural Stop-Controlled Intersections using Deep Learning. Transp. Res. Rec. 2019, 2673, 108–116.
6. Heber, H.; Elisabete, A.; Heriberto, P.; Irantzu, A.; María, G.; Ribero, M.; Fernandez, K. Managing Traffic Data through Clustering and Radial Basis Functions. Sustainability 2021, 13, 2846.
7. Rehman, A.U.; Rehman, S.U.; Khan, M.U.; Alazab, M.; Thippa Reddy, G. CANintelliIDS: Detecting In-Vehicle Intrusion Attacks on a Controller Area Network Using CNN and Attention-Based GRU. IEEE Trans. Netw. Sci. Eng. 2021, 8, 1456–1466.
8. Sun, X.; Ma, S.; Li, Y.; Wang, D.; Li, Z.; Wang, N.; Gui, G. Enhanced Echo-State Restricted Boltzmann Machines for Network Traffic Prediction. IEEE Internet Things J. 2020, 7, 1287–1297.
9. Yin, X.; Wu, G.; Wei, J.; Shen, Y.; Qi, H.; Yin, B. A Comprehensive Survey on Traffic Prediction. arXiv 2020, arXiv:2004.08555.
10. Peng, W.; Hong, X.; Chen, H.; Zhao, G. Learning Graph Convolutional Network for Skeleton-based Human Action Recognition by Neural Searching. arXiv 2020, arXiv:1911.04131.
11. LeCun, Y.; Haffner, P.; Bottou, L.; Bengio, Y. Object Recognition with Gradient-Based Learning. In Shape, Contour and Grouping in Computer Vision; Springer: Berlin/Heidelberg, Germany, 1999.
12. Chen, L.; Wu, L.; Hong, R.; Zhang, K.; Wang, M. Re-visiting Graph based Collaborative Filtering: A Linear Residual Graph Convolutional Network Approach. arXiv 2020, arXiv:2001.10167.
13. Oluwasanmi, A.; Aftab, M.U.; Alabdulkreem, E.; Kumeda, B.; Baagyere, E.; Qin, Z. CaptionNet: Automatic End-to-End Siamese Difference Captioning Model with Attention. IEEE Access 2019, 7, 106773–106783.
14. Chen, W.; Chen, L.; Xie, Y.; Cao, W.; Gao, Y.; Feng, X. Multi-Range Attentive Bicomponent Graph Convolutional Network for Traffic Forecasting. arXiv 2020, arXiv:1911.12093.
15. Oluwasanmi, A.; Frimpong, E.; Aftab, M.U.; Baagyere, E.; Qin, Z.; Ullah, K. Fully Convolutional CaptionNet: Siamese Difference Captioning Attention Model. IEEE Access 2019, 7, 175929–175939.
16. Yang, G.; Wen, J.; Yu, D.; Zhang, S. Spatial-Temporal Dilated and Graph Convolutional Network for traffic prediction. In Proceedings of the 2020 Chinese Automation Congress (CAC), Shanghai, China, 6–8 November 2020; pp. 802–806.
17. Yan, Z.; Yang, K.; Wang, Z.; Yang, B.; Kaizuka, T.; Nakano, K. Time to lane change and completion prediction based on Gated Recurrent Unit Network. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 102–107.
18. Xiaoming, S.; Qi, H.; Shen, Y.; Wu, G.; Yin, B. A Spatial-Temporal Attention Approach for Traffic Prediction. IEEE Trans. Intell. Transp. Syst. 2021, 22, 4909–4918.
19. Oluwasanmi, A.; Aftab, M.U.; Shokanbi, A.; Jackson, J.; Kumeda, B.; Qin, Z. Attentively Conditioned Generative Adversarial Network for Semantic Segmentation. IEEE Access 2020, 22, 31733–31741.
20. Yang, L. Uncertainty prediction method for traffic flow based on K-nearest neighbor algorithm. J. Intell. Fuzzy Syst. 2020, 39, 1489–1499.
21. Luo, C.; Huang, C.; Cao, J.; Lu, J.; Huang, W.; Guo, J.; Wei, Y. Short-Term Traffic Flow Prediction Based on Least Square Support Vector Machine with Hybrid Optimization Algorithm. Neural Process. Lett. 2019, 50, 2305–2322.
22. Li, Z.; Jiang, S.; Li, L.; Li, Y. Building sparse models for traffic flow prediction: An empirical comparison between statistical heuristics and geometric heuristics for Bayesian network approaches. Transp. Transp. Dyn. 2019, 7, 107–123.
23. Ahmed, M.S.; Cook, A.R. Analysis of freeway traffic time-series data by using Box-Jenkins techniques. Transp. Res. Rec. 1979, 722, 1–9.
24. Ma, X.; Dai, Z.; He, Z.; Ma, J.; Wang, Y.; Wang, Y. Learning Traffic as Images: A Deep Convolutional Neural Network for Large-Scale Transportation Network Speed Prediction. Sensors 2017, 17, 818.
25. Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling. arXiv 2014, arXiv:1412.3555.
26. Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.
27. Fu, X.; Luo, W.; Xu, C.; Zhao, X. Short-Term Traffic Speed Prediction Method for Urban Road Sections Based on Wavelet Transform and Gated Recurrent Unit. Math. Probl. Eng. 2020, 2020, 3697625.
28. Osipov, V.; Nikiforov, V.; Zhukova, N.; Miloserdov, D. Urban traffic flows forecasting by recurrent neural networks with spiral structures of layers. Neural Comput. Appl. 2020, 32, 14885–14889.
29. Navarro-Espinoza, A.; López-Bonilla, O.R.; García-Guerrero, E.E.; Tlelo-Cuautle, E.; López-Mancilla, D.; Hernández-Mejía, C.; Inzunza-González, E. Traffic Flow Prediction for Smart Traffic Lights Using Machine Learning Algorithms. Technologies 2022, 10, 5.
30. Zhang, J.; Zheng, Y.; Qi, D.; Li, R.; Yi, X. DNN-based prediction model for spatio-temporal data. In Proceedings of the 24th ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems, Burlingame, CA, USA, 31 October–3 November 2016; pp. 1–4.
31. Kawada, K.; Yokoya, Y. Prediction of Collision risk Based on Driver’s Behavior in Anticipation of a Traffic Accident Risk: (Classification of Videos from Front Camera by Using CNN and RNN). In Proceedings of the Transportation and Logistics Conference, Dubrovnik, Croatia, 12–14 June 2019.
32. Lv, Z.; Xu, J.; Zheng, K.; Yin, H.; Zhao, P.; Zhou, X. LC-RNN: A Deep Learning Model for Traffic Speed Prediction. In Proceedings of the 32nd International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018.
33. Guo, J.; Liu, Y.; Yang, Q.; Wang, Y.; Fang, S. GPS-based citywide traffic congestion forecasting using CNN-RNN and C3D hybrid model. Transp. A Transp. Sci. 2020, 17, 190–211.
34. Zhao, L.; Song, Y.; Zhang, C.; Liu, Y.; Wang, P.; Lin, T.; Deng, M.; Li, H. T-GCN: A Temporal Graph Convolutional Network for Traffic Prediction. IEEE Trans. Intell. Transp. Syst. 2020, 21, 3848–3858.
35. Zhang, Q.; Jin, Q.; Chang, J.; Xiang, S.; Pan, C. Kernel-Weighted Graph Convolutional Network: A Deep Learning Approach for Traffic Forecasting. In Proceedings of the 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 1018–1023.
36. Wei, L.; Yu, Z.; Jin, Z.; Xie, L.; Huang, J.; Cai, D.; He, X.; Hua, X. Dual Graph for Traffic Forecasting. IEEE Access 2019.
37. Guo, K.; Hu, Y.; Qian, Z.; Liu, H.; Zhang, K.; Sun, Y.; Gao, J.; Yin, B. Optimized Graph Convolution Recurrent Neural Network for Traffic Prediction. IEEE Trans. Intell. Transp. Syst. 2021, 22, 1138–1149.
38. Zhu, J.; Song, Y.; Zhao, L.; Li, H. A3T-GCN: Attention Temporal Graph Convolutional Network for Traffic Forecasting. arXiv 2020, arXiv:2006.11583.
39. Zhu, J.; Wang, Q.; Tao, C.; Deng, H.; Zhao, L.; Li, H. AST-GCN: Attribute-Augmented Spatiotemporal Graph Convolutional Network for Traffic Forecasting. IEEE Access 2021, 9, 35973–35983.
40. Wu, T.; Hsieh, C.; Chen, Y.; Chi, P.; Lee, H. Hand-crafted Attention is All You Need? A Study of Attention on Self-supervised Audio Transformer. arXiv 2020, arXiv:2006.05174.
41. Oluwasanmi, A.; Aftab, M.U.; Qin, Z.; Son, N.T.; Doan, T.; Nguyen, S.B.; Nguyen, S.H.; Nguyen, G.H. Features to Text: A Comprehensive Survey of Deep Learning on Semantic Segmentation and Image Captioning. Complexity 2021, 2021, 5538927.
42. Guo, S.; Lin, Y.; Feng, N.; Song, C.; Wan, H. Attention Based Spatial-Temporal Graph Convolutional Networks for Traffic Flow Forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI ’19), Honolulu, HI, USA, 27 January–1 February 2019.
43. Zhang, H.; Liu, J.; Tang, Y.; Xiong, G. Attention based Graph Convolution Networks for Intelligent Traffic Flow Analysis. In Proceedings of the IEEE 16th International Conference on Automation Science and Engineering (CASE), Hong Kong, China, 20–21 August 2020; pp. 558–563.
44. Song, Q.; Ming, R.; Hu, J.; Niu, H.; Gao, M. Graph Attention Convolutional Network: Spatiotemporal Modeling for Urban Traffic Prediction. In Proceedings of the IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC), Rhodes, Greece, 20–23 September 2020; pp. 1–6.
45. Zhang, K.; He, F.; Zhang, Z.; Lin, X.; Li, M. Graph attention temporal convolutional network for traffic speed forecasting on road networks. Transp. B Transp. Dyn. 2021, 9, 153–171.
46. Xie, Y.; Xiong, Y.; Zhu, Y. SAST-GNN: A Self-Attention Based Spatio-Temporal Graph Neural Network for Traffic Prediction. In Proceedings of the International Conference on Database Systems for Advanced Applications, Jeju, Republic of Korea, 20–24 September 2020.
47. Cai, L.; Janowicz, K.; Mai, G.; Yan, B.; Zhu, R. Traffic transformer: Capturing the continuity and periodicity of time series for traffic forecasting. Trans. GIS 2020, 24, 736–755.
48. Liu, J.; Guan, W. A summary of traffic flow forecasting methods. J. Highway Transp. Res. Develop. 2004, 21, 82–85.
49. Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. arXiv 2016, arXiv:1609.02907.

| Time (min) | Metric | SZ-Taxi | Los-Loop |
|---|---|---|---|
| 15 | $R^2$ | 0.8553 | 0.8659 |
| 15 | Var | 0.8553 | 0.8659 |
| 15 | MAE | 2.6624 | 3.0126 |
| 15 | RMSE | 3.8017 | 4.8812 |
| 15 | Accuracy | 0.7372 | 0.9183 |
| 30 | $R^2$ | 0.8499 | 0.8153 |
| 30 | Var | 0.8499 | 0.8153 |
| 30 | MAE | 2.7101 | 3.4800 |
| 30 | RMSE | 3.8801 | 5.6744 |
| 30 | Accuracy | 0.7360 | 0.8992 |
| 45 | $R^2$ | 0.8471 | 0.7694 |
| 45 | Var | 0.8473 | 0.7694 |
| 45 | MAE | 2.7221 | 4.1098 |
| 45 | RMSE | 3.9014 | 6.6127 |
| 45 | Accuracy | 0.7301 | 0.8875 |
| 60 | $R^2$ | 0.8466 | 0.7521 |
| 60 | Var | 0.8466 | 0.7553 |
| 60 | MAE | 2.7361 | 4.2190 |
| 60 | RMSE | 3.9678 | 7.0165 |
| 60 | Accuracy | 0.7274 | 0.8812 |

| Metric | Time | SZ-Taxi: HA [48] | SZ-Taxi: GCN [49] | SZ-Taxi: GRU [34] | SZ-Taxi: T-GCN [34] | SZ-Taxi: A3T-GCN [38] | SZ-Taxi: MHSA–GCN | Los-Loop: HA [48] | Los-Loop: GCN [49] | Los-Loop: GRU [34] | Los-Loop: T-GCN [34] | Los-Loop: A3T-GCN [38] | Los-Loop: MHSA–GCN |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| $R^2$ | 15 min | 0.8307 | 0.6654 | 0.8329 | 0.8541 | 0.8512 | 0.8553 | 0.7121 | 0.6843 | 0.8576 | 0.8634 | 0.8653 | 0.8659 |
| $R^2$ | 30 min | 0.8307 | 0.6616 | 0.8249 | 0.8456 | 0.8493 | 0.8499 | 0.7121 | 0.6402 | 0.7957 | 0.8098 | 0.8137 | 0.8153 |
| $R^2$ | 45 min | 0.8307 | 0.6589 | 0.8198 | 0.8441 | 0.8474 | 0.8471 | 0.7121 | 0.5999 | 0.7446 | 0.7679 | 0.7694 | 0.7694 |
| $R^2$ | 60 min | 0.8307 | 0.6564 | 0.8266 | 0.8422 | 0.8454 | 0.8466 | 0.7121 | 0.5583 | 0.6980 | 0.7283 | 0.7407 | 0.7521 |
| Var | 15 min | 0.8307 | 0.6655 | 0.8329 | 0.8541 | 0.8512 | 0.8553 | 0.7121 | 0.6844 | 0.8577 | 0.8634 | 0.8653 | 0.8659 |
| Var | 30 min | 0.8307 | 0.6617 | 0.8250 | 0.8457 | 0.8493 | 0.8499 | 0.7121 | 0.6404 | 0.7958 | 0.8100 | 0.8137 | 0.8153 |
| Var | 45 min | 0.8307 | 0.6590 | 0.8199 | 0.8441 | 0.8474 | 0.8473 | 0.7121 | 0.6001 | 0.7451 | 0.7684 | 0.7705 | 0.7694 |
| Var | 60 min | 0.8307 | 0.6564 | 0.8267 | 0.8423 | 0.8454 | 0.8466 | 0.7121 | 0.5593 | 0.6984 | 0.7290 | 0.7415 | 0.7553 |
| MAE | 15 min | 2.7815 | 4.2367 | 2.5955 | 2.7117 | 2.6840 | 2.6624 | 4.0145 | 5.3525 | 3.0602 | 3.1802 | 3.1365 | 3.0126 |
| MAE | 30 min | 2.7815 | 4.2647 | 2.6906 | 2.7410 | 2.7038 | 2.7101 | 4.0145 | 5.6118 | 3.6505 | 3.7466 | 3.6610 | 3.4800 |
| MAE | 45 min | 2.7815 | 4.2844 | 2.7743 | 2.7612 | 2.7261 | 2.7221 | 4.0145 | 5.9534 | 4.0915 | 4.1158 | 4.1712 | 4.1098 |
| MAE | 60 min | 2.7815 | 4.3034 | 2.7712 | 2.7889 | 2.7391 | 2.7361 | 4.0145 | 6.2892 | 4.5186 | 4.6021 | 4.2343 | 4.2190 |
| RMSE | 15 min | 4.2951 | 5.6596 | 3.9994 | 3.9265 | 3.8989 | 3.8017 | 7.4427 | 7.7922 | 5.2182 | 5.1264 | 5.0904 | 4.8812 |
| RMSE | 30 min | 4.2951 | 5.6918 | 4.0942 | 3.9663 | 3.9228 | 3.8801 | 7.4427 | 8.3353 | 6.2802 | 6.0598 | 5.9974 | 5.6744 |
| RMSE | 45 min | 4.2951 | 5.7142 | 4.1534 | 3.9859 | 3.9461 | 3.9014 | 7.4427 | 8.8036 | 7.0343 | 6.7065 | 6.6840 | 6.6127 |
| RMSE | 60 min | 4.2951 | 5.7361 | 4.0747 | 4.0048 | 3.9707 | 3.9678 | 7.4427 | 9.2657 | 7.6621 | 7.2677 | 7.0990 | 7.0165 |
| Accuracy | 15 min | 0.7008 | 0.6107 | 0.7249 | 0.7299 | 0.7318 | 0.7372 | 0.8733 | 0.8673 | 0.9109 | 0.9127 | 0.9133 | 0.9183 |
| Accuracy | 30 min | 0.7008 | 0.6085 | 0.7184 | 0.7272 | 0.7302 | 0.7360 | 0.8733 | 0.8581 | 0.8931 | 0.8968 | 0.8979 | 0.8992 |
| Accuracy | 45 min | 0.7008 | 0.6069 | 0.7143 | 0.7258 | 0.7286 | 0.7301 | 0.8733 | 0.8500 | 0.8801 | 0.8857 | 0.8861 | 0.8875 |
| Accuracy | 60 min | 0.7008 | 0.6054 | 0.7197 | 0.7243 | 0.7269 | 0.7274 | 0.8733 | 0.8421 | 0.8694 | 0.8762 | 0.8790 | 0.8812 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Oluwasanmi, A.; Aftab, M.U.; Qin, Z.; Sarfraz, M.S.; Yu, Y.; Rauf, H.T. Multi-Head Spatiotemporal Attention Graph Convolutional Network for Traffic Prediction. Sensors 2023, 23, 3836. https://doi.org/10.3390/s23083836