A Multi-Modal Under-Sensorized Wearable System for Optimal Kinematic and Muscular Tracking of Human Upper Limb Motion

Abstract

1. Introduction

2. Methods

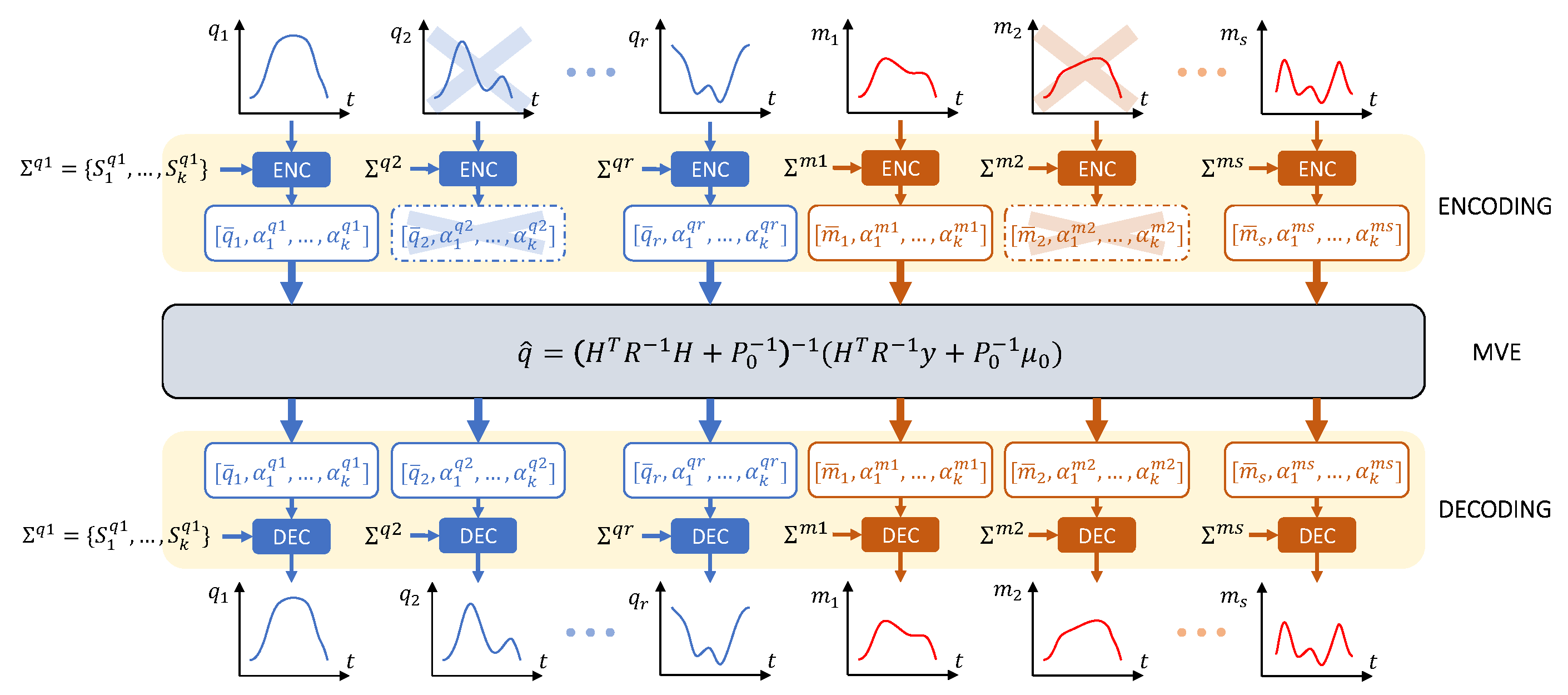

2.1. Theoretical Foundations: Minimum Variance Estimation (MVE)

2.1.1. Encoding and Decoding Phases: Functional Principal Component Analysis

2.1.2. Estimation Phase: Minimum Variance Estimation

2.2. Musculoskeletal Model and Sensor Choice

2.3. Unscented Kalman Filter for Joint Angles Estimation via IMUs

3. Experimental Setup

3.1. IMU Processing

3.2. IMU Frames Calibration

3.3. EMG Processing

3.4. From XSENS Quaternions to Joint Angles

4. Results

4.1. UKF Validation

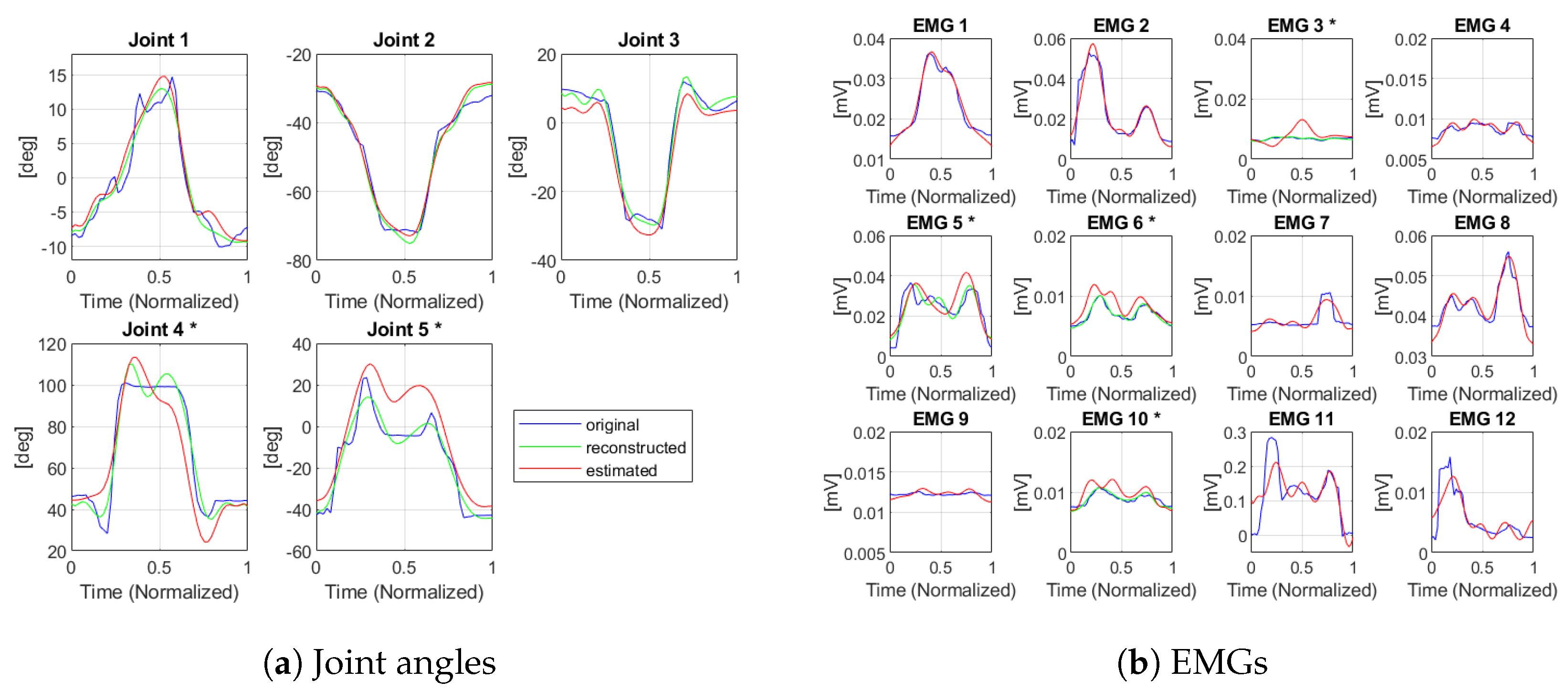

4.2. MVE Validation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| RMS Error | NRMS Error | Correlation |
|---|---|---|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bonifati, P.; Baracca, M.; Menolotto, M.; Averta, G.; Bianchi, M. A Multi-Modal Under-Sensorized Wearable System for Optimal Kinematic and Muscular Tracking of Human Upper Limb Motion. Sensors 2023, 23, 3716. https://doi.org/10.3390/s23073716