Real-Time Safe Landing Zone Identification Based on Airborne LiDAR

Abstract

1. Introduction

1.1. Motivation

1.2. Research Contributions

2. Related Work

2.1. Digital Surface Map (DSM)

2.2. Extracting Slope from DSM

2.3. SLZ Identification

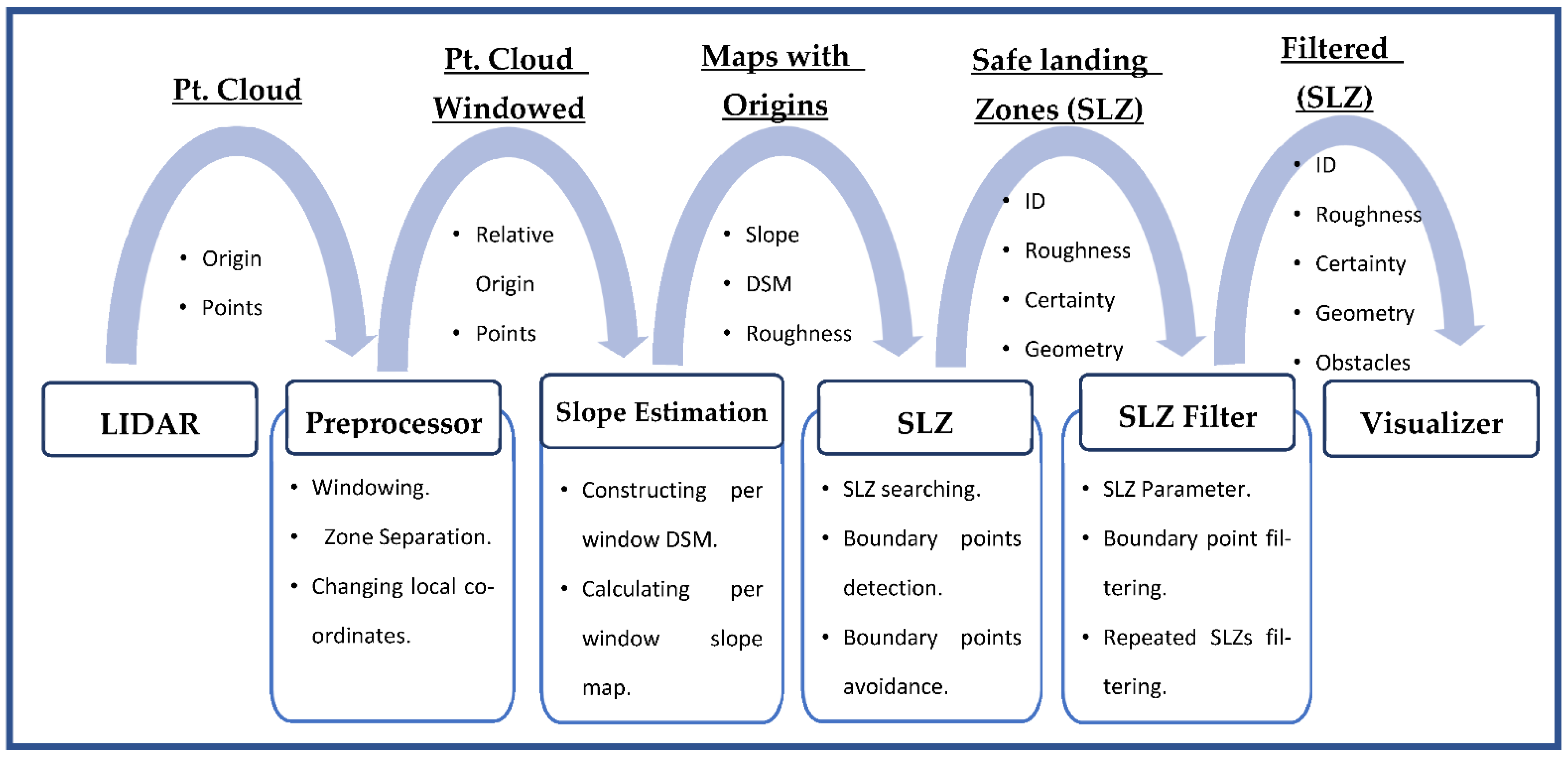

3. Methodologies

3.1. LiDAR Point Cloud Preprocessing

3.1.1. Windowing

3.1.2. Zone Separation

3.1.3. Changing Local Coordinates

- : The x-component of the Cartesian coordinate of a LiDAR point.

- : The y-component of the Cartesian coordinate of a LiDAR point.

- and are the distances, in meters, between the current position of the aircraft (near the zone boundary) and the origin of the previous zone, in the X and Y directions, respectively. They are calculated using Equations (10) and (11), which are explained in Section 3.2.
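As a minimal sketch of the coordinate change, assuming it is a pure translation, the points expressed relative to the previous zone origin can be re-expressed relative to a new origin that lies a known distance away in X and Y. The function name, the argument names, and the sign convention are illustrative assumptions and are not taken from the paper's equations.

```python
import numpy as np

def shift_to_new_origin(points_xy, dx, dy):
    """Re-express (x, y) points relative to a new origin.

    Assumption: the new origin lies (dx, dy) meters from the old origin,
    so every point simply loses that offset.
    """
    pts = np.asarray(points_xy, dtype=float)
    return pts - np.array([dx, dy], dtype=float)
```

For example, a point at (12, 5) m in the old zone becomes (2, 0) m when the new origin is placed at (10, 5) m.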

3.2. Slope Estimation

3.2.1. Constructing DSM

Outlier Point Removal

Defining the Boundaries of the Provided Points in This Time Window

- The offset is the number of pixels from the beginning of the terrain model to the first changed pixel in this time window;

- The range is the number of pixels between the offset and the farthest-changing pixel in this time window.
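The offset and range can be sketched along one axis as follows. This is an illustration only: the function name is hypothetical, and the range is assumed to be the difference between the farthest and the first changed pixel index (without adding one).

```python
import numpy as np

def window_offset_and_range(pixel_indices):
    """Return (offset, range) for the pixels changed in one time window.

    offset: index of the first changed pixel, counted from the start of the
            terrain model.
    range:  number of pixels between the offset and the farthest changed
            pixel (assumed here to be max - min).
    """
    idx = np.asarray(pixel_indices, dtype=int)
    if idx.size == 0:
        return 0, 0  # nothing changed in this window
    offset = int(idx.min())
    extent = int(idx.max()) - offset
    return offset, extent
```

Restricting later per-window processing to this span avoids touching pixels that received no new points.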

Populating All LiDAR Point Clouds in the Per-Window DSM

- : The Z component of a point inside this pixel.

- : The number of points in this pixel.
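A possible way to populate the per-window DSM from these two quantities is sketched below, assuming that the elevation of a pixel is the mean Z of the points that fall inside it. The grid layout (row from Y, column from X), the cell size argument, and the use of NaN for empty pixels are assumptions made for the example.

```python
import numpy as np

def populate_dsm(x, y, z, cell_size, n_rows, n_cols):
    """Bin points into a grid and average the Z values per pixel."""
    x, y, z = (np.asarray(a, dtype=float) for a in (x, y, z))
    cols = np.floor(x / cell_size).astype(int)
    rows = np.floor(y / cell_size).astype(int)
    # Keep only the points that fall inside the grid.
    inside = (rows >= 0) & (rows < n_rows) & (cols >= 0) & (cols < n_cols)
    z_sum = np.zeros((n_rows, n_cols))
    count = np.zeros((n_rows, n_cols), dtype=int)
    # np.add.at accumulates correctly when several points share a pixel.
    np.add.at(z_sum, (rows[inside], cols[inside]), z[inside])
    np.add.at(count, (rows[inside], cols[inside]), 1)
    dsm = np.full((n_rows, n_cols), np.nan)  # NaN marks pixels with no point
    filled = count > 0
    dsm[filled] = z_sum[filled] / count[filled]
    return dsm, count
```

Keeping the per-pixel count alongside the elevation makes it possible to tell empty pixels apart from measured ones in later steps.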

3.2.2. Calculating Slope and Roughness

- : The element in row and column of the elevation matrix.

- : The horizontal distance in the Y direction of the element in row

- : The horizontal distance in the X direction of the element in row .
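The relationship between the elevation matrix, the horizontal spacings, and the slope can be illustrated with a short sketch. It uses central differences from `numpy.gradient`, which is a stand-in chosen for the example and may differ from the estimator used in the paper; the function name and the uniform spacings are assumptions.

```python
import numpy as np

def slope_degrees(elevation, dy, dx):
    """Slope (degrees) of an elevation grid with spacing dy (rows) and dx (columns)."""
    z = np.asarray(elevation, dtype=float)
    # Partial derivatives along the row (Y) and column (X) directions.
    dz_dy, dz_dx = np.gradient(z, dy, dx)
    # Slope is the angle of the steepest ascent.
    return np.degrees(np.arctan(np.hypot(dz_dx, dz_dy)))
```

For a plane that rises 1 m for every 1 m travelled in X, the function returns 45° at every pixel.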

3.2.3. Constructing the Total Slope Map, the Total DSM, and the Total Roughness Map

- The value of that pixel of the total path slope map will be the average of the old one and the new one of the per-window slope maps;

- The value of that pixel of the total path slope map will be the maximum of the two values (the new and the old ones).
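The two merging options listed above can be sketched as a single function. The use of NaN to mark pixels without a value, the function name, and the `mode` argument are assumptions introduced for the example.

```python
import numpy as np

def merge_slope(total, window, mode="max"):
    """Merge a per-window slope map into the total path slope map.

    Pixels present in only one map keep that value; pixels present in both
    are combined by averaging ("mean") or by taking the larger value ("max").
    """
    total = np.asarray(total, dtype=float)
    window = np.asarray(window, dtype=float)
    both = ~np.isnan(total) & ~np.isnan(window)
    merged = np.where(np.isnan(total), window, total)
    if mode == "mean":
        merged[both] = 0.5 * (total[both] + window[both])
    elif mode == "max":
        merged[both] = np.maximum(total[both], window[both])
    else:
        raise ValueError("mode must be 'mean' or 'max'")
    return merged
```

Taking the maximum is the more conservative choice, since a pixel seen as steep in any window stays steep in the total map.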

3.2.4. Constructing the Total Binary Map

3.2.5. New Map Creation

- : The aircraft latitude when it reached the zone boundary.

- : The aircraft longitude when it reached the zone boundary.

- : The latitude of the origin of the previous zone.

- : The longitude of the origin of the previous zone.

- : The radius of Earth.

- : The aircraft altitude when it reached the zone boundary.
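Using only the quantities listed above, the distance between the aircraft and the previous zone origin can be approximated as in the sketch below. It assumes a spherical Earth and a small-angle approximation, evaluates the cosine at the latitude of the previous origin, and returns east and north components; these choices, the function name, and the numeric radius are assumptions and are not confirmed to match Equations (10) and (11).

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, assumed value

def geodetic_offset_m(lat_deg, lon_deg, lat0_deg, lon0_deg, alt_m,
                      radius_m=EARTH_RADIUS_M):
    """Approximate (east, north) distance in meters from (lat0, lon0) to (lat, lon)."""
    lat, lon, lat0, lon0 = map(math.radians, (lat_deg, lon_deg, lat0_deg, lon0_deg))
    north = (lat - lat0) * (radius_m + alt_m)
    # Meridians converge toward the poles, hence the cosine factor.
    east = (lon - lon0) * (radius_m + alt_m) * math.cos(lat0)
    return east, north
```

The approximation is adequate for zone-sized distances; over long baselines, a proper geodesic computation would be needed.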

3.3. SLZ Identification

3.3.1. SLZ Search

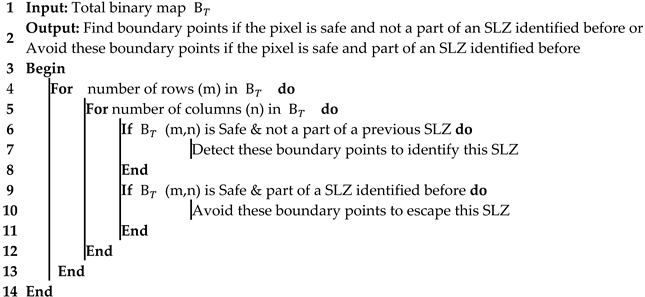

Algorithm 1: SLZ Search

3.3.2. Boundary Point Detection

- : The latitude of the boundary points of this SLZ.

- : The longitude of the boundary points of this SLZ.

- : The latitude of the origin of the zone containing this SLZ.

- : The longitude of the origin of the zone containing this SLZ.

- : The y component of the boundary points of this SLZ.

- : The x component of the boundary points of this SLZ.

- : The altitude of the boundary points of this SLZ.

- : The radius of Earth.
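The conversion of a boundary point from local coordinates to latitude and longitude can be sketched from the quantities listed above. The sketch assumes a spherical Earth, a small-angle approximation, x pointing east and y pointing north; the function name and the default radius are illustrative and the paper's own equations may differ.

```python
import math

def local_to_geodetic(x_m, y_m, lat0_deg, lon0_deg, alt_m, radius_m=6_371_000.0):
    """Convert a local (x east, y north) offset in meters to (lat, lon) in degrees."""
    lat0 = math.radians(lat0_deg)
    lon0 = math.radians(lon0_deg)
    lat = lat0 + y_m / (radius_m + alt_m)
    # East offsets span more longitude at higher latitudes.
    lon = lon0 + x_m / ((radius_m + alt_m) * math.cos(lat0))
    return math.degrees(lat), math.degrees(lon)
```

A point at the zone origin (x = 0, y = 0) maps back to the origin's own latitude and longitude, which is a quick sanity check for the sign conventions.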

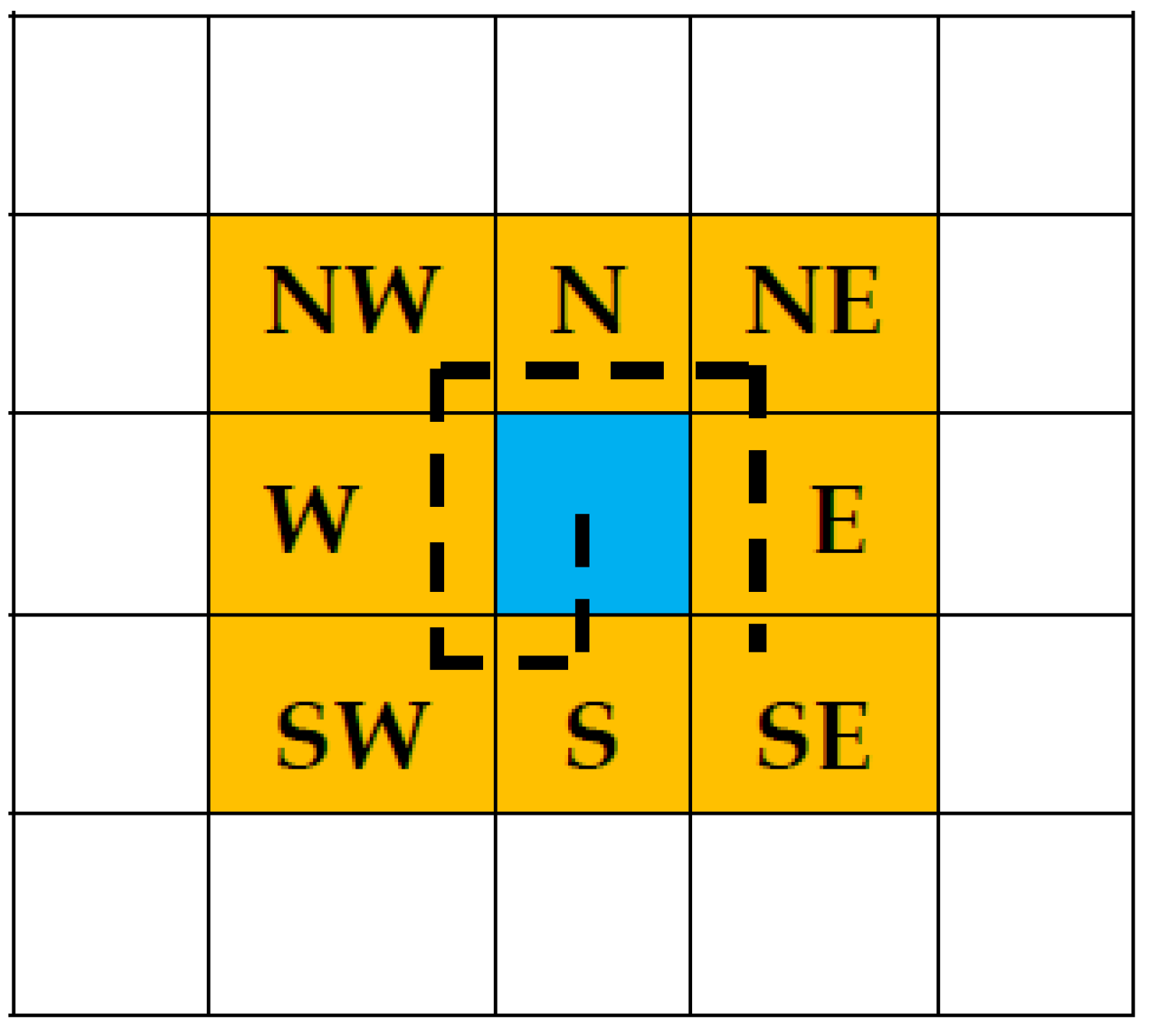

- : The X component of the Moore-neighbor pixel.

- : The Y component of the Moore-neighbor pixel.

- : The X component of the previous boundary point.

- : The Y component of the previous boundary point.

- : A vector containing the offsets that are added to the previous boundary point to obtain all of its Moore neighbors. The array is shown in Table 1.

- : The index of the Moore-neighbor vector (), which ranges from 0 to 7 (the eight rows of Table 1).
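The neighbor computation described by these definitions can be sketched with the offsets of Table 1. The sketch assumes that column 0 of the table is the X offset and column 1 the Y offset, and that the binary map is indexed as `binary_map[y][x]` with truthy values for safe pixels; the function names are hypothetical. The second function performs only a single scan step and is not a complete Moore-neighbor tracing routine.

```python
# Offsets from Table 1, in table order (assumed to be (dx, dy)).
MOORE_OFFSETS = [(-1, 0), (-1, -1), (0, -1), (1, -1),
                 (1, 0), (1, 1), (0, 1), (-1, 1)]

def moore_neighbors(prev_x, prev_y):
    """Return the eight Moore neighbors of the previous boundary point."""
    return [(prev_x + dx, prev_y + dy) for dx, dy in MOORE_OFFSETS]

def next_boundary_point(binary_map, prev_x, prev_y, start_index=0):
    """Scan the neighbors in table order and return the first safe pixel.

    Returns ((x, y), index) or (None, None) when no neighbor is safe.
    """
    n_rows, n_cols = len(binary_map), len(binary_map[0])
    for step in range(len(MOORE_OFFSETS)):
        i = (start_index + step) % len(MOORE_OFFSETS)
        x = prev_x + MOORE_OFFSETS[i][0]
        y = prev_y + MOORE_OFFSETS[i][1]
        if 0 <= y < n_rows and 0 <= x < n_cols and binary_map[y][x]:
            return (x, y), i
    return None, None
```

Returning the index along with the pixel lets a caller resume the scan from a chosen neighbor on the next step, which is how contour tracers typically avoid walking back on themselves.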

3.3.3. Boundary Point Avoidance

3.4. SLZ Filtering

3.4.1. SLZ Parameter Calculation

- Safe cells have a slope lower than the predetermined slope threshold;

- Unsafe cells have a slope higher than the predetermined slope threshold;

- Uncertain cells have no elevation value, which means that no LiDAR point was reflected from the cell;

- Certain unsafe cells have an elevation value, so their height is determined, and they are also unsafe cells.

- : The certainty of this SLZ.

- : The number of safe cells in this SLZ.

- : The total number of cells in this SLZ.

- : The total number of certain cells in this SLZ.
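The cell counts that enter the certainty calculation can be gathered as in the following sketch. It assumes that a missing elevation is stored as NaN and that a cell must have an elevation value to be counted as safe; the function name and the dictionary keys are illustrative. The formula that combines these counts into the certainty value is not reproduced here.

```python
import numpy as np

def count_cells(slope, elevation, slope_threshold_deg=4.0):
    """Count safe, certain, certain-unsafe, uncertain, and total cells of an SLZ."""
    slope = np.asarray(slope, dtype=float)
    elevation = np.asarray(elevation, dtype=float)
    certain = ~np.isnan(elevation)           # a LiDAR return exists for the cell
    safe = np.zeros(certain.shape, dtype=bool)
    safe[certain] = slope[certain] < slope_threshold_deg
    certain_unsafe = certain & ~safe
    return {
        "safe": int(safe.sum()),
        "certain": int(certain.sum()),
        "certain_unsafe": int(certain_unsafe.sum()),
        "uncertain": int((~certain).sum()),
        "total": int(elevation.size),
    }
```

The default threshold of 4° is the slope threshold listed in the parameter table of this article.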

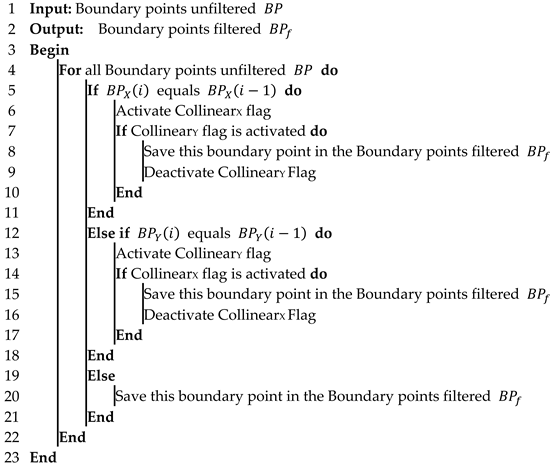

3.4.2. Boundary Point Filtering

Algorithm 2: Boundary Point Filtering

3.4.3. Repeated SLZ Filtering

- In case the SLZ is completely repeated, this SLZ is ignored;

- In case the SLZ intersects or partially repeats a previous SLZ, the repetition ratio and the area threshold are calculated, and according to their values, the SLZ is considered repeated or not;

- If it is repeated, the new SLZ will replace the previous one;

- If it is not repeated, both the new SLZ and the previous one will remain in effect.

- : The number of points on the new SLZ that are located inside the previous one.

- : The total number of boundary points of the new SLZ.

- : The minimum ratio between the area of the previous SLZ and that of the current one (determined by the user).

- : Area of the previous SLZ.

- In cases where the value of the repetition ratio () is one, it is considered the same SLZ, and the new SLZ is ignored;

- In cases where the repetition ratio () is higher than the repetition ratio threshold (determined by the user), and the area of the current SLZ is higher than the area threshold (), it is considered a repeated SLZ, and the new SLZ will replace the previous one;

- Otherwise, both the new SLZ and the previous one will remain in existence.
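The three cases above can be expressed as a small decision function. The sketch assumes that the repetition ratio is the number of boundary points of the new SLZ inside the previous one divided by the total number of boundary points of the new SLZ, and that the area threshold is the user-defined minimum ratio multiplied by the area of the previous SLZ. The function name and return labels are hypothetical; the default thresholds are the values listed in the parameter table of this article.

```python
def classify_new_slz(n_inside, n_boundary, area_new, area_prev,
                     repeat_ratio_threshold=0.8, area_ratio_threshold=0.9):
    """Decide how a new SLZ relates to a previous, overlapping one."""
    if n_boundary <= 0:
        raise ValueError("the new SLZ must have at least one boundary point")
    repetition_ratio = n_inside / n_boundary
    area_threshold = area_ratio_threshold * area_prev
    if repetition_ratio == 1.0:
        return "ignore_new"          # same SLZ: the new one is ignored
    if repetition_ratio > repeat_ratio_threshold and area_new > area_threshold:
        return "replace_previous"    # repeated SLZ: the new one replaces the old
    return "keep_both"               # distinct SLZs: both remain
```

For instance, with 9 of 10 boundary points inside the previous SLZ and equal areas of 100 m², the new SLZ replaces the previous one.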

4. Experimental Setup

5. Results and Discussions

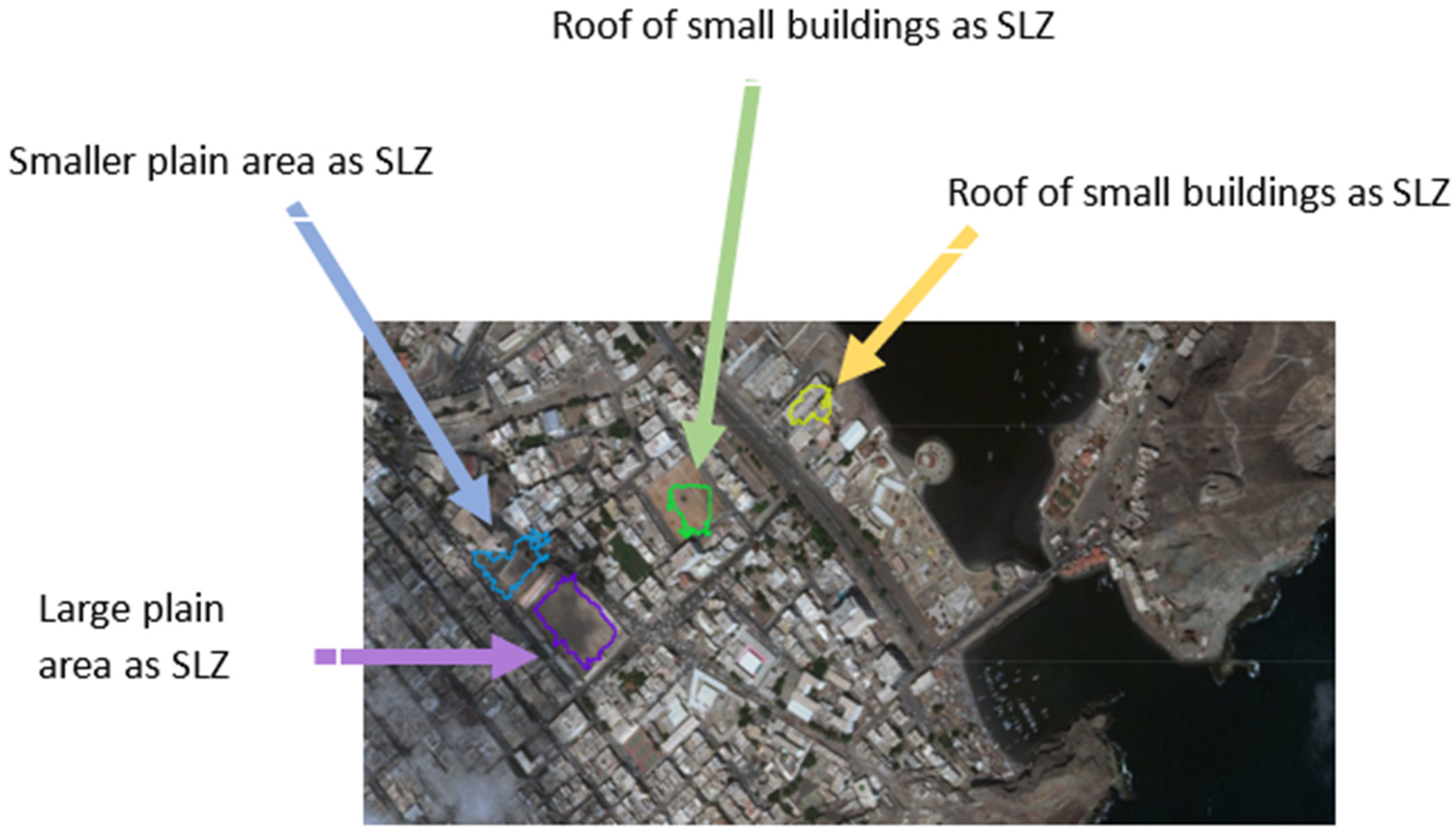

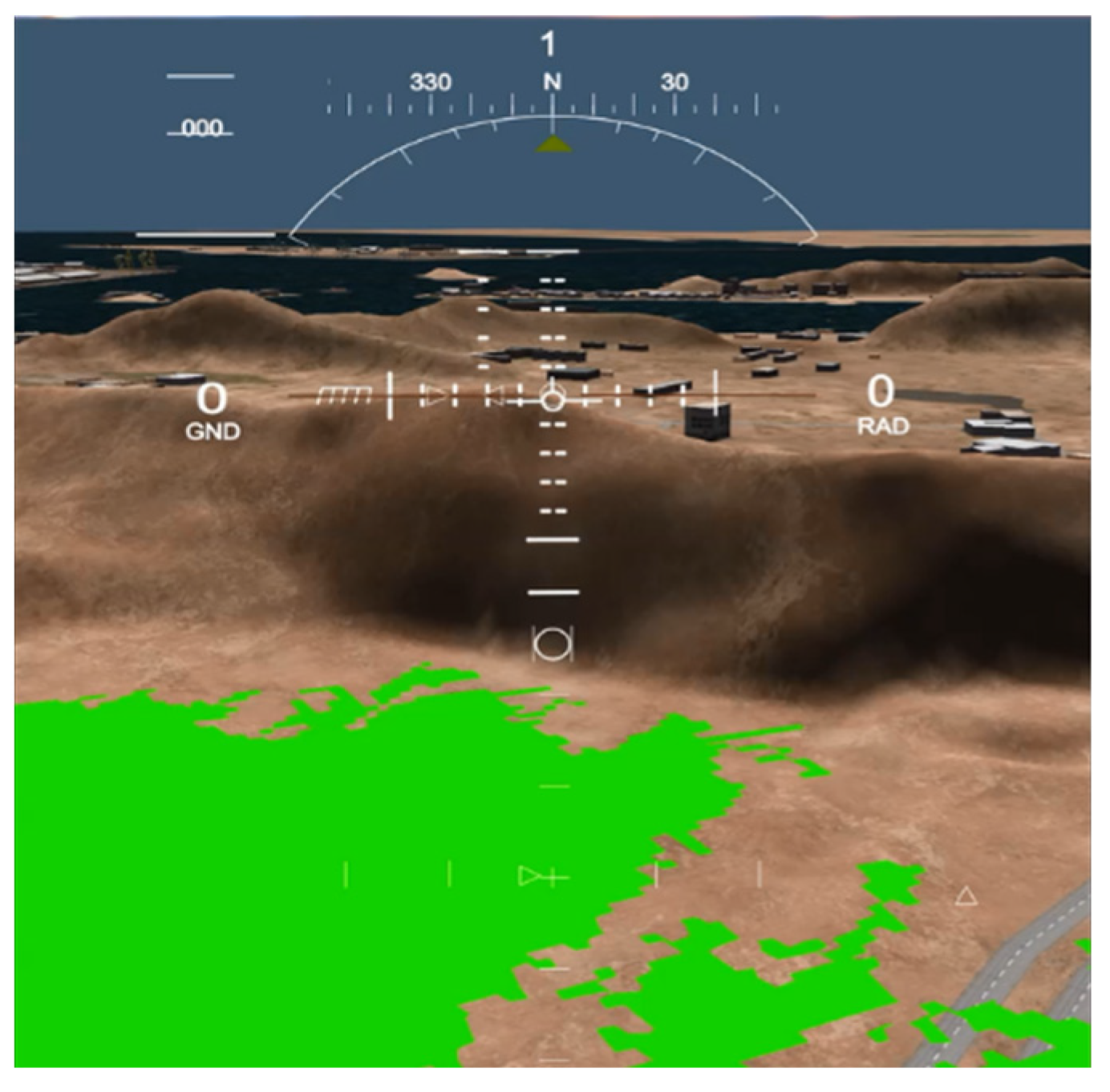

5.1. SLZ Identification

- The large plain area was detected as a safe place to land, as it is a grass court in a stadium (violet SLZ); the stadium seats are not part of this SLZ, which indicates the accuracy of the detected boundary;

- The smaller plain area was detected as a safe place to land, as it is part of the street and part of the entrance to the stadium (blue SLZ);

- The roof of the large building was detected as a safe place to land (green SLZ). Some parts of the roof are not included in the SLZ because there were not enough LiDAR points for the rest of the roof;

- The roofs of small buildings were detected as a safe place to land (yellow SLZ).

- A normal road was detected as a safe place to land (dark blue SLZ);

- Small house yards were detected as a safe place to land (light blue SLZ).

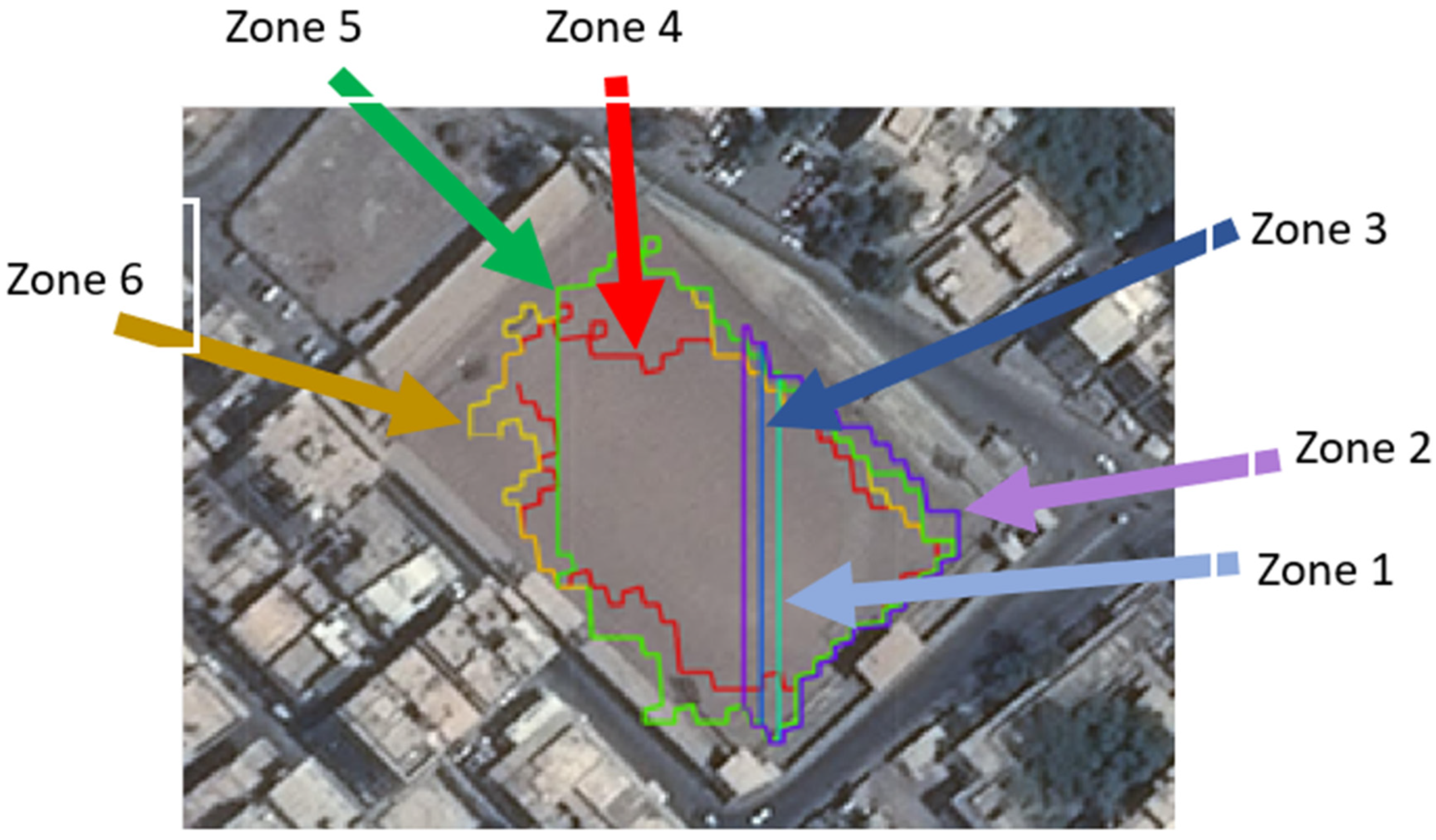

5.2. Including Only SLZs Having Enough Space for Landing

- The court in the stadium (dark blue SLZ), which has a length of 106 m and a width of 73 m;

- The entrance to the stadium (light green SLZ), which has a length of 55 m and a width of 62 m.

5.3. Re-Use Zone Index When It Is a Morphing of an Existing Zone

5.4. SLZ Error Evaluation

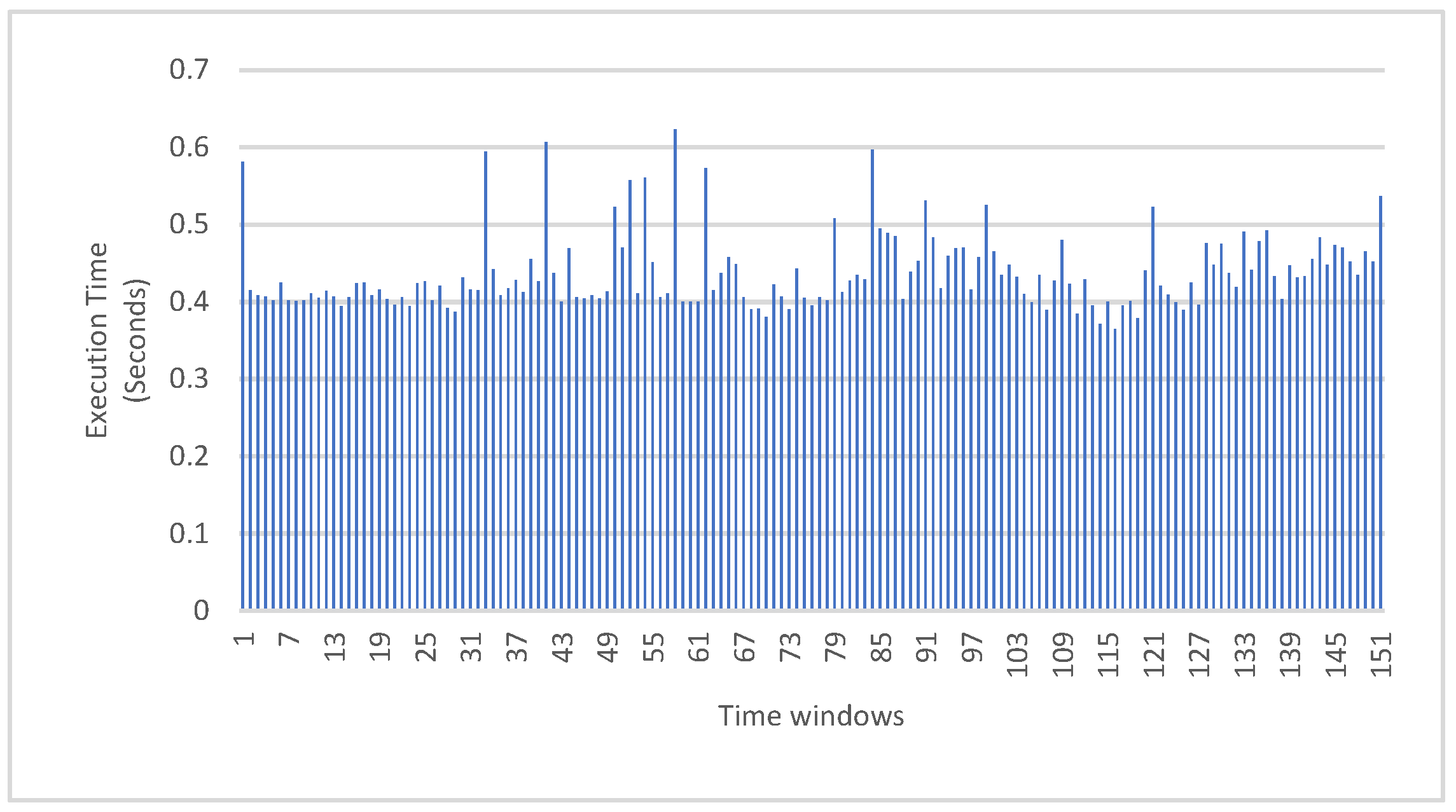

5.5. Overall Algorithm Elapsed Time

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Table 1. Moore-neighbor offsets.

| i | 0 | 1 |
|---|---|---|
| 0 | −1 | 0 |
| 1 | −1 | −1 |
| 2 | 0 | −1 |
| 3 | 1 | −1 |
| 4 | 1 | 0 |
| 5 | 1 | 1 |
| 6 | 0 | 1 |
| 7 | −1 | 1 |

| Parameter | Value |
|---|---|
| Operating System | Windows 7 Enterprise |
| Service pack | 1 |
| RAM | 32.0 GB |
| System type | 64 bit |
| Processor | |
| Processor count | 2 |
| Processor Type | Intel Xeon® |
| Processor Model | E5-2650 v4 |
| # of Cores | 12 |
| # of Threads | 24 |
| Processor Base Frequency | 2.20 GHz |
| Max Turbo Frequency | 2.90 GHz |
| Cache | 30 MB (Smart Cache) |

| Parameter | Value |
|---|---|
| Sensor | |
| Range | Up to 1000 m |
| Multi-return | Up to 7 returns |
| Accuracy | <2.5 cm (typical) |
| Precision | <2.0 cm (typical) |
| Laser | |
| Wavelength | 1550 nm |
| Physical | |
| Dimensions | 17.8 × 17.8 × 33.8 cm (7.0 × 7.0 × 13.3 inches) |
| Weight (without cables) | 11.8 kg (26.0 lbs) |
| Operating Voltage | 18–36 VDC |
| Power Consumption | 110 W (typical), 220 W maximum |

| Parameter | Value | Description | Comment |
|---|---|---|---|
| Preprocessing Parameters | | | |
| Time window | 1,000,000 | The time window (in microseconds) | Each point has a timestamp. The current simulator readout is configured at 1 M every second. |
| Slope Estimation Parameters | | | |
| Max. slope | 40° | The maximum slope | 40° is chosen for better visualization of the color map. |
| Threshold value | 4° | Slope threshold value | This threshold is defined by the helicopter landing standards. |
| SLZ Parameters (irregular) | | | |
| SLZ Min. Square Side | 24 m | The minimum side length of a landing zone to be considered as an SLZ in the Near mode | This dimension is defined by the helicopter landing standards. |
| SLZ Filter | | | |
| Confidence factor | 0.86 | Confidence factor used in finding the SLZ certainty | This number is picked high enough to land in a zone that has returns with high power/certainty, to avoid false detections. |
| Repeat ratio threshold | 0.8 | The ratio between the number of boundary points in an old SLZ and that in a new SLZ for the new SLZ to be considered a repeated SLZ | As explained in Section 3.4.3, SLZs with common boundary points and areas are combined if these ratios are high enough. |
| Area ratio threshold | 0.9 | The ratio between the area of an old SLZ and that of a new SLZ for the new SLZ to be considered a repeated SLZ | |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Massoud, A.; Fahmy, A.; Iqbal, U.; Givigi, S.; Noureldin, A. Real-Time Safe Landing Zone Identification Based on Airborne LiDAR. Sensors 2023, 23, 3491. https://doi.org/10.3390/s23073491
