1. Introduction

The volume and velocity of Big Remote Sensing Data pose a significant computational and storage challenge to modern applications [1]. If data are not promptly transformed into information, the generation of actionable intelligence will be delayed, and the human capacity to store and review the data after the fact will be strained [2]. Detecting small objects in real time using remote sensors is an ongoing and challenging problem [3]. One challenge arises when an object is located far from the sensor, so that it naturally appears much smaller. A sensor’s sensitivity diminishes as the distance from the target increases. Another key challenge is that environmental conditions can be dynamic (e.g., weather conditions, sunlight, obstructions). Poor environmental conditions (e.g., low visibility) can reduce the visual quality of a target. A combination of these factors can contribute to the target having a low Signal-to-Noise Ratio (SNR). There is also the challenge of the Limit of Detection (LOD), whereby remote sensors are bounded by what is observable in each image frame. In other words, what a human sees in a single image frame is limited by what the sensor captures in that frame. When a small foreground object is very close to the background noise (i.e., low SNR), humans cannot accurately observe and label the data. Such data therefore cannot be used to train a machine classifier to identify similar objects in the future. In this paper, we present a new method, a “Multi-frame Moving Object Detection System (MMODS)”, to overcome the LOD of modern remote sensing in a detection system.

Deep learning methods have gained wide popularity in object recognition [4]. For example, methods such as You Only Look Once (YOLO) [5] and Mask R-CNN [6] have been shown to achieve high accuracy in identifying “large size” objects. Identifying small objects remains a significant challenge [7]. Machine learning methods are generally applicable to targets with high SNR and high resolution, and they rely on human-labeled data. When a target’s SNR in the remote sensor data is close to the noise level and the object is too small (a few pixels), humans cannot accurately observe and label the data needed to train a machine classifier. The lack of features on such a target makes a deep neural network detector undesirable, because it carries a high risk of overfitting to the noise.

Traditional real-time moving object detection techniques typically involve “Background Subtraction” [8]. As image frames continuously flow into the system, an estimate of the background is computed at each time step. When a new frame arrives, the background estimate from the previous frame is subtracted from it to produce a “Difference Frame”. Thresholding can then be applied to the Difference Frame to discriminate between foreground and background [9]. Popular background estimation approaches such as the Gaussian Mixture Model (GMM) [10] attempt to estimate an image pixel’s background intensity using multiple Gaussian models. Principal Component Pursuit (PCP) [11] attempts to decompose an image into sparse and low-rank components, where the low-rank components correspond to the stationary background. Subspace tracking techniques [12] model the background as a linear combination of basis images, where the background’s basis vectors are updated at each time step with a forgetting factor. Despite many recent advances in change detection [13], the frame-by-frame change detection approach is insufficient to detect low SNR targets with a manageable false alarm rate. Dropping the detection threshold close to the noise level results in high levels of false alarms. Thus, there is a need to enhance the target’s SNR before applying a detection threshold.
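The frame-by-frame pipeline described above can be sketched in a few lines. This is a minimal illustration only, assuming a simple running-average background with exponential forgetting rather than GMM, PCP, or subspace tracking; the function name `detect_changes`, the update rate `alpha`, and the threshold multiplier `k` are hypothetical choices, not values from this paper.

```python
import numpy as np

def detect_changes(frames, alpha=0.1, k=5.0):
    """Frame-by-frame change detection with a running-average background.

    alpha (background update rate) and k (threshold in noise standard
    deviations) are illustrative values, not taken from the paper.
    """
    frames = np.asarray(frames, dtype=np.float64)
    background = frames[0].copy()
    variance = np.ones_like(background)
    masks = []
    for frame in frames[1:]:
        # Difference Frame: new frame minus the previous background estimate.
        diff = frame - background
        # Threshold the difference relative to the estimated per-pixel noise.
        masks.append(np.abs(diff) > k * np.sqrt(variance))
        # Update background and noise estimates with exponential forgetting.
        background += alpha * diff
        variance = (1 - alpha) * variance + alpha * diff**2
    return np.stack(masks)
```

Lowering `k` toward 1 in such a scheme flags more and more pure-noise pixels, which is the false alarm problem that motivates enhancing the SNR before thresholding.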

Prior work has sought to improve the target’s SNR by matching and integrating target signals. Reed et al. [14] introduced a 3D matched filter method that enhances the SNR of a constant-velocity moving target by integrating the target’s signal along its constant-velocity motion over a frame sequence. This method assumes a known number of targets and known constant-velocity trajectories. The Track-Before-Detect (TBD) approach [15] incorporates dynamic motion modeling, such as the Kalman filter [16,17] and the particle filter [18,19], to predict and integrate target motion over multiple frames to enhance a target’s SNR. However, the TBD approach is generally limited to slow-moving targets, as performance tends to degrade for high-speed targets [20]. A TBD approach using multiple motion models [21] has been demonstrated in simulations to better track maneuverable targets. However, it is not clear how the technique performs on real data. More recently, a constrained velocity filter approach [3] demonstrated significant improvement in low SNR target detection using real data collected by a remote video camera. This method uses a combination of known path constraints and the target’s motion model to improve its SNR by integrating target signals over a pre-determined path. However, the requirement for a pre-determined path hinders the ability to apply the method in unconstrained areas or when the path constraint is unknown.
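To illustrate the idea of integrating a target’s signal along a constant-velocity motion hypothesis, the sketch below shifts each difference frame back along a candidate velocity and sums the result. It is a simplified shift-and-add example in the spirit of velocity-matched integration, not the 3D matched filter of [14] or the MMODS algorithm. The function names, the integer-pixel velocities, and the `max_speed` search bound are all assumptions made for illustration.

```python
import numpy as np

def shift_and_add(diff_frames, velocity):
    """Integrate difference frames along one constant-velocity hypothesis.

    velocity = (vy, vx) in whole pixels per frame (hypothetical parameter).
    Frame i is shifted back by i * velocity so that a target moving at that
    velocity lands on the same pixel in every frame before summing.
    """
    vy, vx = velocity
    total = np.zeros_like(diff_frames[0], dtype=np.float64)
    for i, frame in enumerate(diff_frames):
        # np.roll wraps around at the borders; acceptable for a sketch, but a
        # real system would pad or mask the edges instead.
        total += np.roll(frame, shift=(-i * vy, -i * vx), axis=(0, 1))
    return total


def best_velocity(diff_frames, max_speed=2):
    """Try every integer velocity up to max_speed; keep the strongest peak."""
    candidates = [(vy, vx)
                  for vy in range(-max_speed, max_speed + 1)
                  for vx in range(-max_speed, max_speed + 1)]
    return max(candidates, key=lambda v: shift_and_add(diff_frames, v).max())
```

When the hypothesized velocity matches the true motion, the target energy from every frame accumulates in one pixel, while mismatched hypotheses spread it across many pixels. The exhaustive search also shows why such methods become costly when neither the velocity nor the number of targets is known in advance.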

In this paper, we introduce a new multi-frame detection method to enhance a target’s SNR. Our method demonstrates a significant improvement over the traditional single-frame detection approach.

3. Results and Discussions

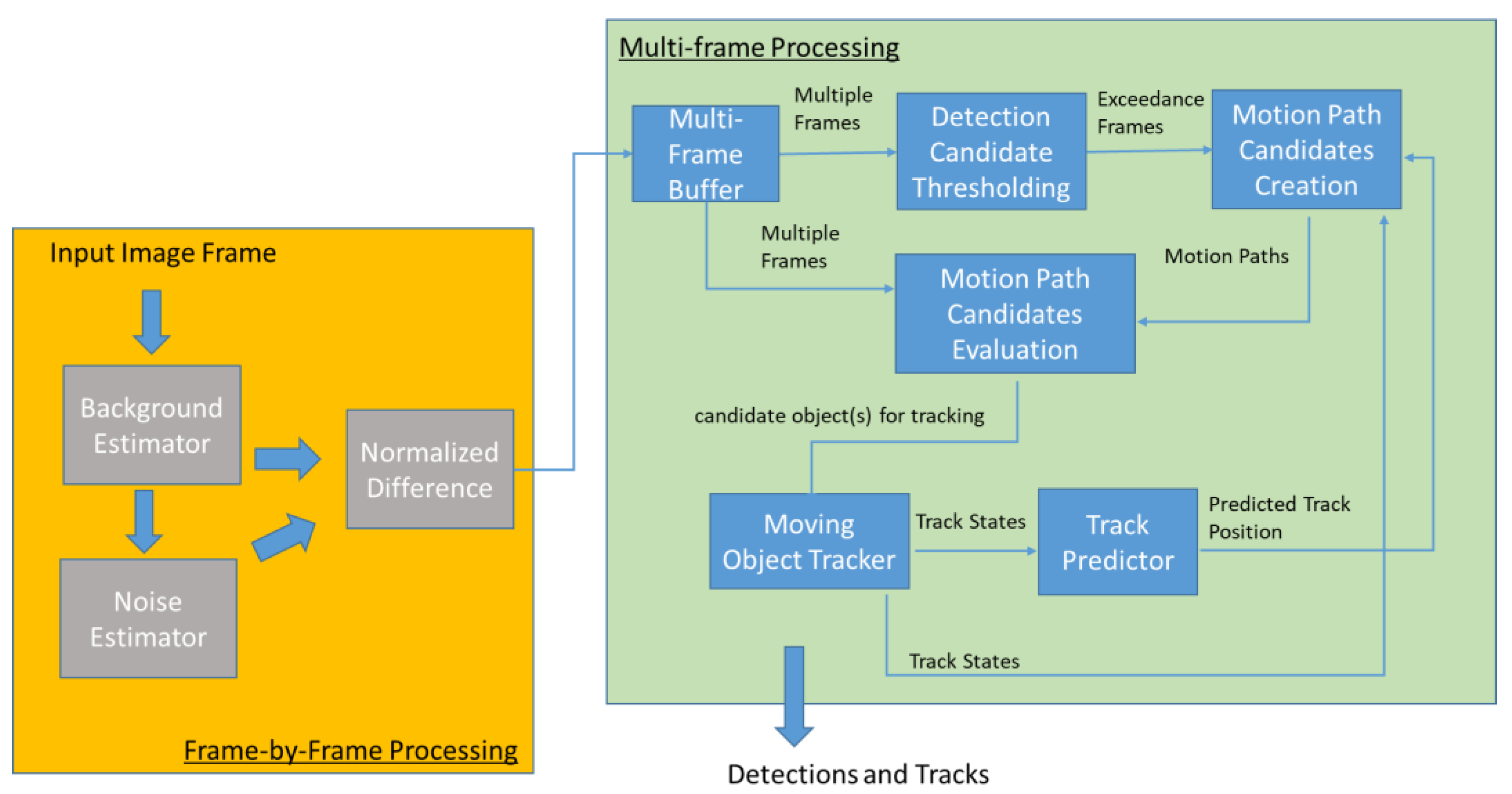

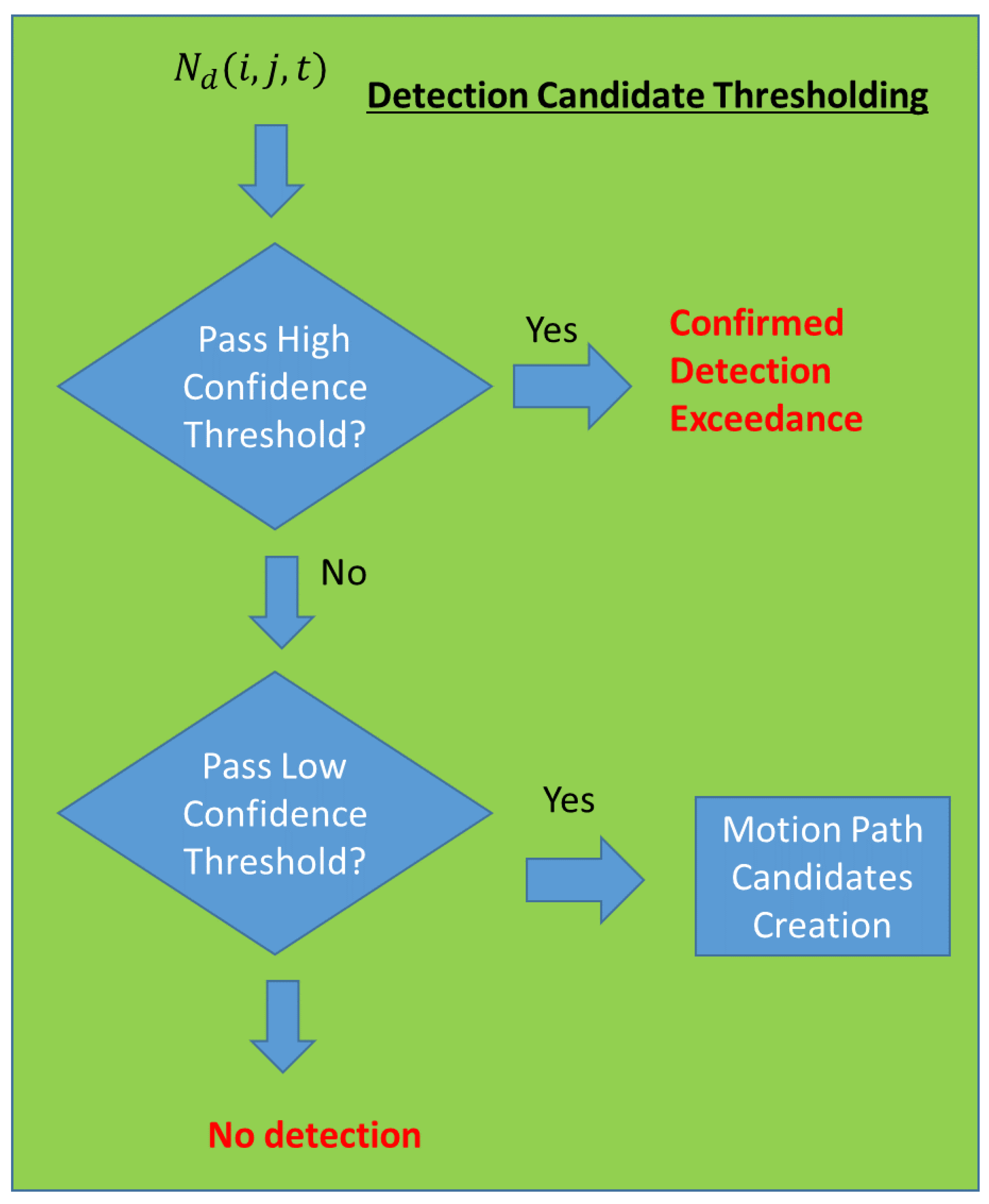

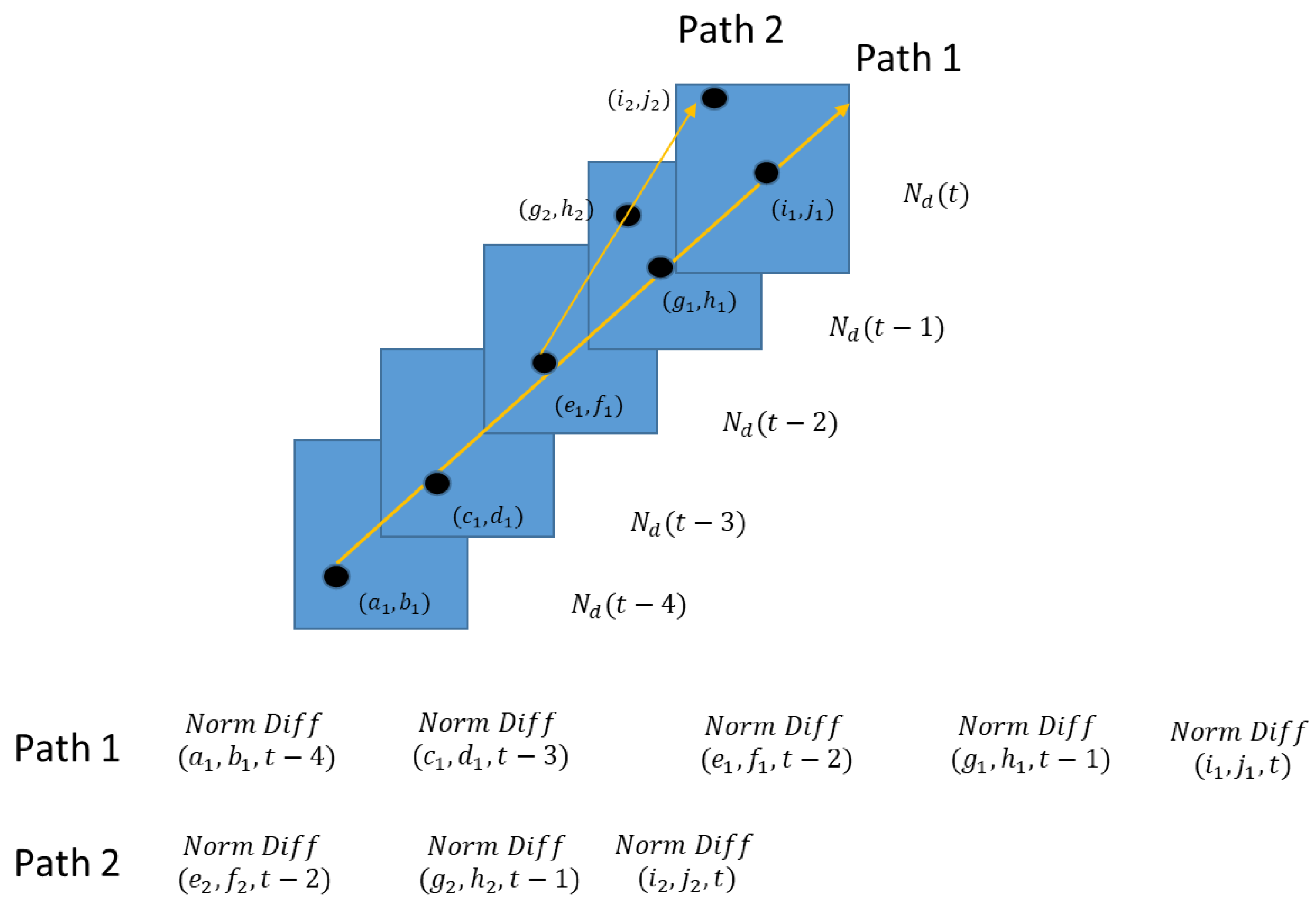

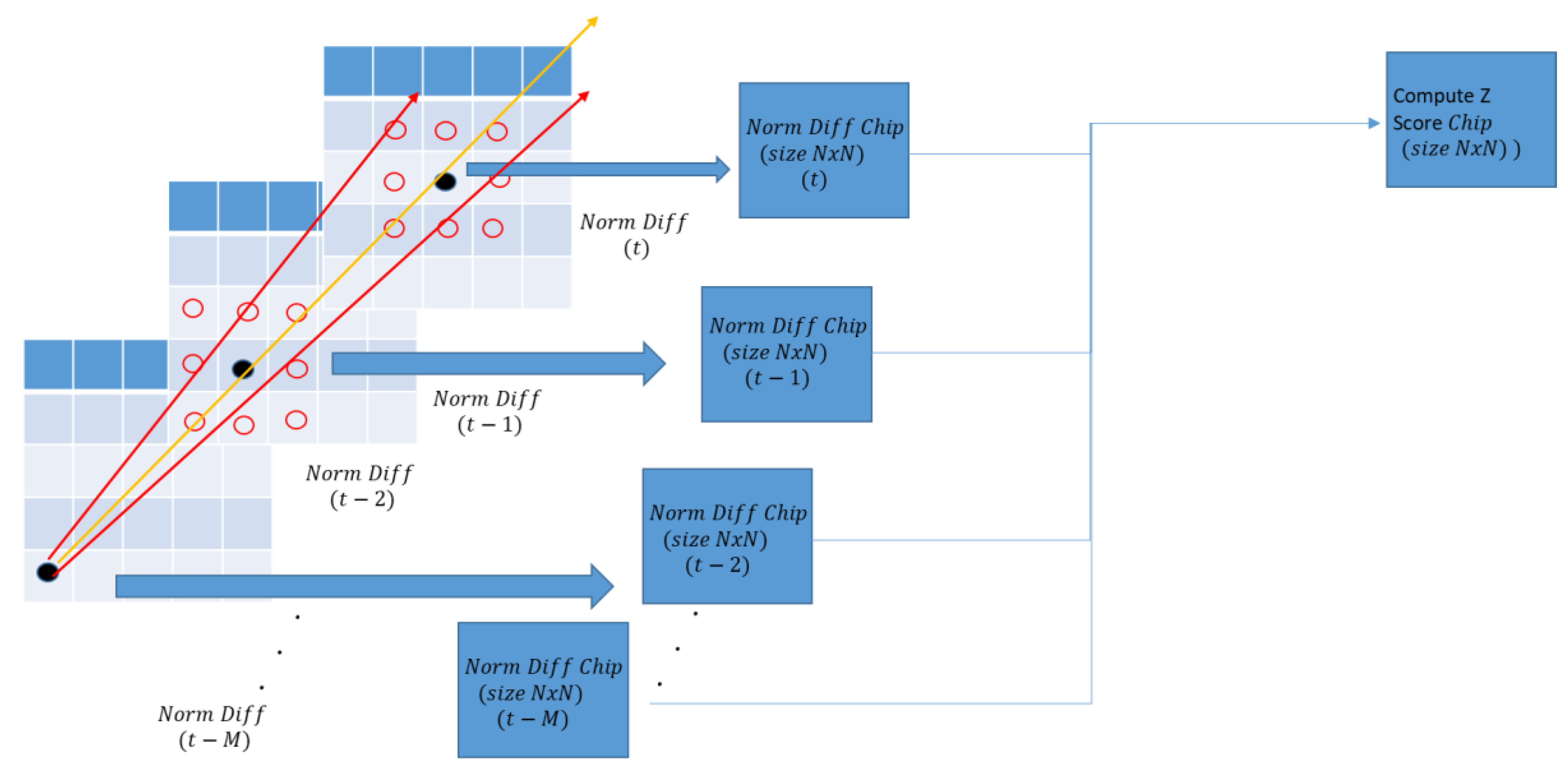

To measure the performance improvement of MMODS, we compared a baseline detection system without MMODS against a detection system with MMODS. A detailed comparison of the system frameworks is depicted in Figure 8. As shown there, most components were the same, except that the MMODS design had an additional multi-frame processing component. Both systems used exactly the same background estimator (Equation (1)), noise estimator (Equation (2)), and normalized difference calculation (Equation (5)). This setup allowed us to isolate the benefit of the MMODS design relative to the baseline design. The frame buffer window used by MMODS in this experiment was 7 frames.

A comparison of the Receiver Operating Characteristic (ROC) curves is depicted in Figure 9. As the curves show, at a false alarm rate of 0, the baseline method achieved only a 30% probability of detection, whereas MMODS achieved a 90% probability of detection (an improvement factor of 3) at the same false alarm rate. We acknowledge that MMODS did not reach a 100% probability of detection because the SNRs of some targets were extremely low, close to a 1:1 ratio.

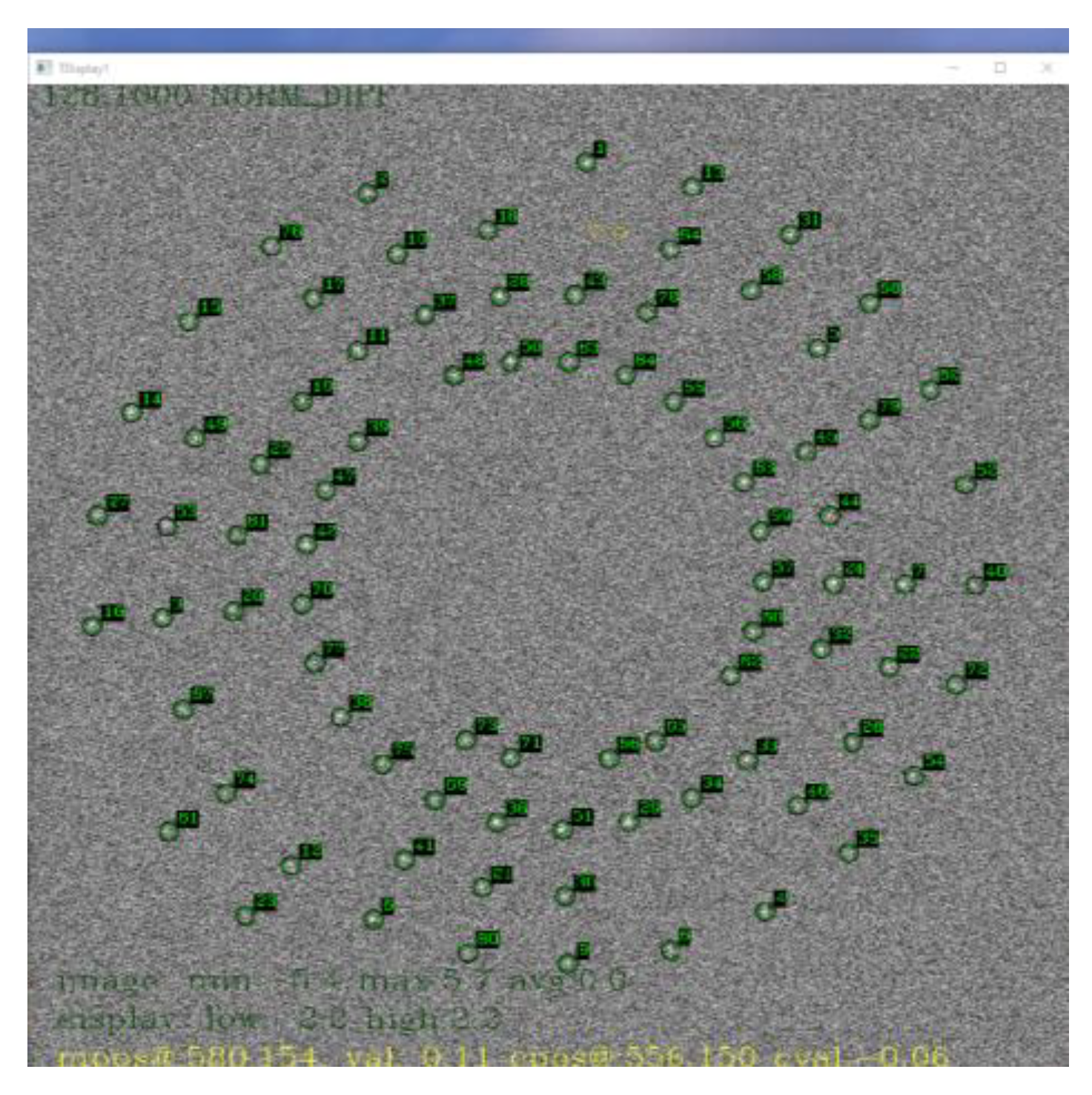

An overlay of target indicators on the background-subtracted image is depicted in Figure 10. The small moving targets were difficult to see in the background-subtracted image.

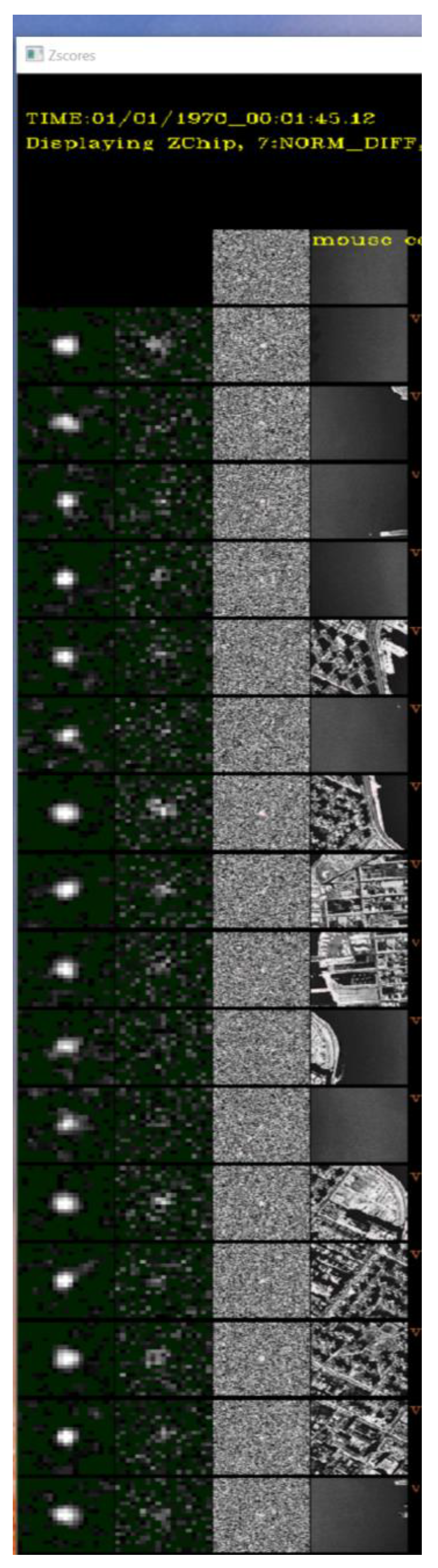

For a qualitative assessment, Figure 11 shows a comparison of the MMODS-enhanced target region (column 1) versus the baseline target region (column 2). Both comparison chips are displayed at the same 2× magnification so that the quality of the SNR enhancement of the small, low-SNR target can be compared. Viewed side by side, the MMODS-detected target region in Figure 11, column 1, has a visibly higher SNR than the one without MMODS in Figure 11, column 2. For reference, the background-subtracted region and the original raw frame region around the target area are displayed in Figure 11, columns 3 and 4, respectively. This again confirms that the targets could not be seen in either the background-subtracted images or the original raw image, but were detected by MMODS.

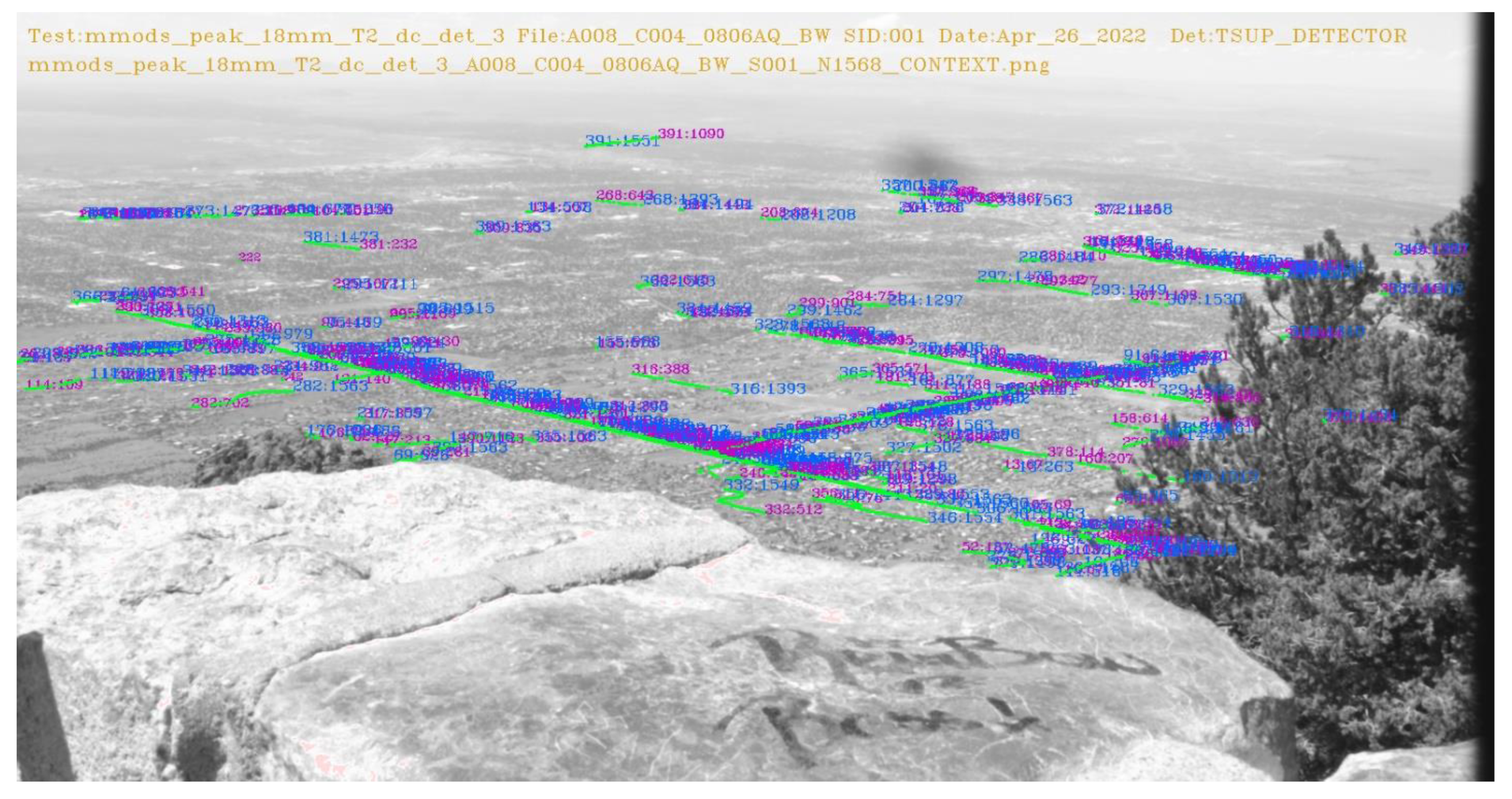

We also used MMODS to process the video frames we collected at Sandia Mountain Peak (Table 2) using a remote camera with the specifications shown in Table 3. The detections from MMODS across the entire frame history were overlaid on the raw image depicted in Figure 12. Because MMODS uses no a priori knowledge of the roads, the close alignment of all detections with the roads makes it very likely that MMODS detected vehicles. A comparison of target chips is depicted in Figure 13. The SNR enhancement is comparable to that in our simulated results.

An ROC curve comparison was not generated for this dataset because (1) ground truth vehicle positions were not available; and (2) we were unable to manually label the targets with absolute certainty due to the poor resolution and low visibility of the targets. However, the close alignment between the detected target positions and the roads, as well as the similarity of the detection enhancement in the target chips depicted in Figure 13, strongly suggests that MMODS detected true targets with very low false alarm rates on this dataset.

3.1. Significance of Contribution and Impact to Modern Remote Sensing System

Detecting small, low-visibility objects in real time is a challenging task for human-monitored security systems. The task becomes even more difficult if one human analyst must simultaneously monitor multiple displays in real time. Human eyes are not capable of integrating signals to enhance an object’s SNR. If the signal is too weak to be recognized by human vision, the target might be missed. MMODS can find and correlate signals, and then integrate them across a sequence of video frames to increase the target’s SNR and overcome the LOD. Hence, the MMODS technique can detect signals that cannot normally be observed by the human eye or even by some sensors.

MMODS was created to help overcome these challenges for remote sensors. MMODS fills a major gap by providing a new capability to detect the smallest and least visible objects, which a human would have difficulty identifying in real time. While a sensor is “sensing”, MMODS matches and integrates target signals as video frames flow into the system to increase each individual target’s SNR. The SNR enhancement is possible because moving target signals are correlated over a temporal frame sequence, whereas the noise is generally uncorrelated (random). Hence, when correlated signals are integrated, they increase by a linear factor, but the random noise does not increase by the same amount. By accurately matching and integrating correlated target energy over multiple frames, MMODS overcomes the LOD of a frame-by-frame processing system.
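The integration argument can be made concrete with a short idealized calculation. Assume, purely for illustration, a perfectly aligned target of constant amplitude $s$ in each of $N$ frames and independent zero-mean noise with standard deviation $\sigma$ per frame; these symbols and assumptions are not taken from the paper’s equations.

```latex
\mathrm{SNR}_1 = \frac{s}{\sigma},
\qquad
\mathrm{SNR}_N = \frac{N s}{\sqrt{N}\,\sigma} = \sqrt{N}\,\mathrm{SNR}_1 .
```

The summed signal grows as $N$, while the standard deviation of the summed noise grows only as $\sqrt{N}$. Under these assumptions, a window of $N = 7$ frames would give an ideal SNR gain of $\sqrt{7} \approx 2.65$. This is an upper bound for the idealized case; the gain achieved in practice depends on how accurately the target signals are matched across frames.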

We have demonstrated on a modern PC with a GPU card that MMODS can achieve real-time performance with modern megapixel cameras while detecting a large number of targets. In comparison, most existing techniques can only support the simultaneous detection of a small number of targets [14,15,16,17,18,19,20]. It is impossible for the human eye to monitor 10 million pixels at once, but MMODS can do so in real time. The MMODS distributed computing solution utilizes a GPU, which scales favorably to large image sizes and many targets.

We show in Figure 9 that, by using a frame buffer of 7, MMODS can improve the probability of detection over the baseline system by a factor of 3 (from 30% to 90% at a false alarm rate of 0). This technology enhances a sensor’s ability to detect low-visibility targets under challenging environmental conditions, such as low lighting and low intensity, especially in a far-range monitoring system, where sensitivity generally diminishes. MMODS also provides lower false alarm rates than the existing system. The frame-by-frame change detection approach [8,9,10,11,12,13] typically employed by sensors is insufficient to detect low SNR targets with a manageable false alarm rate, because lowering the detection threshold for each video frame results in high levels of false alarms. MMODS enhances the target’s SNR before applying any detection threshold. This allows the detection system to increase its sensitivity without the same false alarm penalty. Depending on the specific application, both the background estimator and the noise estimator can be replaced with more sophisticated methods to achieve better background and noise modeling. After the Difference Frames are normalized by noise, the output can be passed to MMODS to further enhance a target’s SNR. In this way, MMODS can also act as an SNR booster in a frame-by-frame detection system.

MMODS can reliably detect both fast- and slow-moving objects with high accuracy. In comparison, modern theoretical techniques [21,22] are generally limited to slow-moving targets. Furthermore, the long exposure configuration used in modern “Frame-Based Sensors” can enhance a static object’s SNR, but it generally results in a “blur” or “streak” effect for moving objects [24]. Modern “Event-Based Sensors” can detect fast-moving targets by responding to brightness changes at every pixel, but they do not improve a target’s SNR [25]. Hence, a low detection threshold could introduce many false alarms. MMODS, however, improves the target’s detection sensitivity (SNR improvement) without introducing a motion “blur” or “streak” effect, which would likely introduce a large error in a detected object’s position. MMODS can achieve subpixel accuracy in the object’s detected position, which exceeds the sensor’s nominal pixel resolution.

3.2. Application to Remote Sensing

MMODS can be realized as a software application that runs on a computer workstation with a Graphics Processing Unit (GPU) card installed. In real-time surveillance monitoring applications, continuous image streams from remote sensors can be sent to the MMODS processing station. The data transfer can be accomplished through various methods, such as a network cable, a network, or a downlink to a receiver that forwards the data to MMODS. Once the data are received, each image frame can be processed by MMODS, which matches and integrates target signals as video frames flow into the system to perform SNR enhancement. The detected target location, as well as the target’s estimated motion state generated by MMODS (such as its predicted position and predicted velocity), can be output to the analyst’s workstation for display and reporting.

3.3. Limitation

While MMODS does not require speed or acceleration to be precisely known, the technology requires a known upper bound on the velocity and acceleration of the targets. This information is usually easy to obtain, such as the maximum speed of a car or plane. The upper bound keeps the system from wasting computation on searching for unreasonable target trajectories. If unsure, one can always set a conservative upper bound. There is also a trade-off between the number of frames used in the integration window and real-time latency. While a longer integration window improves SNR, it also increases real-time latency. For example, for a seven-frame integration window $[f(t-3), \ldots, f(t+3)]$, where $f(t)$ represents the frame received at time $t$, object detection at time $t$ can only be performed after frame $f(t+3)$ is received, thus contributing a delay of three frames compared to a frame-by-frame detection system. This additional latency is usually negligible in modern sensors with high frame rates, but it is worth noting in design considerations.
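The latency trade-off can be estimated with a small helper. This is a sketch under the assumption that the integration window is centered on the detection time, which is consistent with the three-frame delay stated for a seven-frame window; the function name and the 30 Hz frame rate are hypothetical.

```python
def detection_latency(window_frames, frame_rate_hz):
    """Latency added by a centered integration window.

    Assumes an odd window centered on the detection time, so detection at
    time t must wait for (window_frames - 1) / 2 future frames.
    """
    if window_frames < 1 or window_frames % 2 == 0:
        raise ValueError("window_frames must be a positive odd integer")
    delay_frames = (window_frames - 1) // 2
    return delay_frames, delay_frames / frame_rate_hz


# Seven-frame window at an assumed (hypothetical) 30 Hz frame rate.
delay_frames, delay_seconds = detection_latency(7, frame_rate_hz=30.0)
print(delay_frames, delay_seconds)
```

For a fixed window length, the added delay in seconds shrinks in proportion to the frame rate, which is why the latency matters less for high-frame-rate sensors.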