Abstract

Defect inspection is essential in the semiconductor industry to fabricate printed circuit boards (PCBs) with minimum defect rates. However, conventional inspection systems are labor-intensive and time-consuming. In this study, a semi-supervised learning (SSL)-based model called PCB_SS was developed. It was trained using labeled and unlabeled images under two different augmentations. Training and test PCB images were acquired using automatic final vision inspection systems. The PCB_SS model outperformed a fully supervised model trained using only labeled images (PCB_FS). The PCB_SS model was also more robust than the PCB_FS model when the labeled data were limited in number or included incorrectly labeled samples. In an error-resilience test, the proposed PCB_SS model maintained stable accuracy (error increment of less than 0.5%, compared with 4% for PCB_FS) for noisy training data (with as much as 9.0% of the data labeled incorrectly). The proposed model also showed superior performance when compared with machine-learning and deep-learning classifiers. The unlabeled data utilized in the PCB_SS model helped with the generalization of the deep-learning model and improved its performance for PCB defect detection. Thus, the proposed method alleviates the burden of the manual labeling process and provides a rapid and accurate automatic classifier for PCB inspections.

1. Introduction

During the fabrication process of printed circuit boards (PCBs), the presence of fine particles or flaws in copper patterns can cause PCBs to malfunction [1,2]. Therefore, defect screening is a crucial step in the semiconductor manufacturing process for PCBs. Defects can be caused by many factors, including materials, techniques, equipment, and processing substrates [1]. Various inspection systems, such as automated visual inspection (AVI) [2,3], X-ray imaging [4], ultrasonic imaging [5], and thermal imaging [6], have been used to detect defects. Advanced AVI systems utilize high-resolution cameras and defect detection algorithms to inspect PCB surfaces at the panel level [2]. Similar to AVI systems, automatic final vision inspection (AFVI) systems can be used to inspect the surfaces of PCBs at the strip level to detect various types of defects such as cracks, nicks, protrusions, and foreign materials.

Various defect detection algorithms have been utilized in AVI and AFVI systems, such as reference-based [7], rule-based [8,9], and learning-based approaches [10,11]. The majority of these algorithms attempt to find similarities between the reference and inspected images. Nadaf and Kolkure used a subtraction algorithm to detect differences from reference images of typical defect regions on PCBs [7]. The primary purpose of reference-based approaches is to determine precise alignments between the reference and test images [12]. Oguz et al. introduced a design-rule-based defect detection algorithm for verifying the requirements of the geometric design rules of conductor spacings, trace widths, and land widths for digital binary PCB images [9]. Benedek proposed a rule-based framework for generating parent–child relationships based on an intensity histogram to detect soldering defects in PCBs [8].

Recently, the use of learning-based algorithms for defect inspection has increased rapidly. Zhang et al. utilized a support vector machine (SVM) to recognize and classify defect regions by extracting histograms and geometric features from PCB images [11]. Deep-learning approaches have also been employed to assist in filtering false defect images prior to manual verification [2]. Deng et al. proposed an automatic real-time defect classification approach based on deep learning to determine real defects in regions of interest in PCBs [10]. These methods can reduce the burden of manual verification by facilitating the classification of defects. Although deep learning-based defect detection models are expected to alleviate the cost of manual labeling once developed, they require a manual labeling process to supply a significant amount of data for training the model.

Previous studies have employed a transfer-learning approach to reduce the amount of training data [13,14]. Miao et al. employed a cost-sensitive Siamese network based on transfer learning to differentiate defects in PCBs [14]. Imoto et al. developed an automatic defect classification system that employed unreliably labeled data to train a convolutional neural network model to classify multiple defect types [10]. Although previous studies have proven the feasibility of the deep-learning approach for defect detection, the model performance remains limited by the shortage and incorrect labeling of training data. He et al. proposed a semi-supervised learning (SSL)-based model to detect defect locations in a DeepPCB dataset [15]. This approach required pairs of defect-free templates and defective test images for comparison. Shi et al. utilized an adversarial SSL method that was trained on only normal PCB samples and detected extremely small, unknown defects during the test [16]. Although an SSL model can be trained with less labeled data, the use of golden template images (i.e., normal images) for training has disadvantages, such as a lack of flexibility for variations (e.g., misalignment and discoloration) and the need to acquire and store numerous reference patterns [1,12].

When defect cases are relatively broad and the differences between defect and non-defect cases are small, the chances of false detection by the defect detection algorithms (e.g., golden template images or machine-learning approaches) of the AFVI system remain high. Hence, following the inspection of the AFVI system, human operators verify whether the defects detected by the AFVI system are true or false to reduce the false detection rate. This manual operation incurs high labor costs and is time-consuming. Manual inspection remains a problem owing to the shortage of labor and expertise. In this study, an SSL approach, PCB_SS, is developed. It can detect defects in PCBs from strip-level images acquired using an AFVI system. The PCB_SS model utilizes labeled data and leverages unlabeled data for training with various augmentations. While varying the amount of labeled data, the performance of the PCB_SS model was compared to that of a fully supervised approach, PCB_FS, which uses only labeled data. Moreover, the accuracies of the PCB_SS and PCB_FS models were evaluated using noisy (incorrectly labeled) training data in an error-resilience test. To compare the performances of the models for defect detection, extreme gradient boosting (XGBoost) and EfficientNet-B5 were chosen for the machine-learning and deep-learning approaches, respectively.

The highlights of this study are as follows:

- (1) An SSL approach is developed for significantly varying defect cases with small differences from the non-defect cases, where the golden template and machine-learning approaches are less effective.

- (2) By employing the FixMatch concept with varying augmentations, the PCB_SS model was trained using images classified as defects from the AFVI system with unlabeled and manually labeled target data.

- (3) An error-resilience test was performed for false detection. The PCB_SS model outperformed PCB_FS for incorrectly labeled data.

- (4) By alleviating the false defect detection from the AFVI systems, PCB_SS can reduce the burden of manual defect detection, which requires intensive labor with expertise in PCB inspection.

2. Materials and Methods

2.1. Data Generation

2.1.1. Data Acquisition

PCB images were acquired using an AFVI system (S-400SM; Pixel, Inc., Pyeongtaek, Republic of Korea). The field of view of the AFVI is 27.8 × 27.8 mm, and the resolution of the acquired image is 5.4 μm/pixel. The AFVI system automatically detects defect candidates using rule-based methods from the originally captured region for inspection (5120 × 5120 pixels), as shown in Figure 1a. The suspected defects were then saved as smaller-cropped images (250 × 250 pixels), with defects located at the center of the images. These defect-candidate images were further reviewed by experienced human operators using a software tool called an “image-based verification system” and classified as either true or false defects.

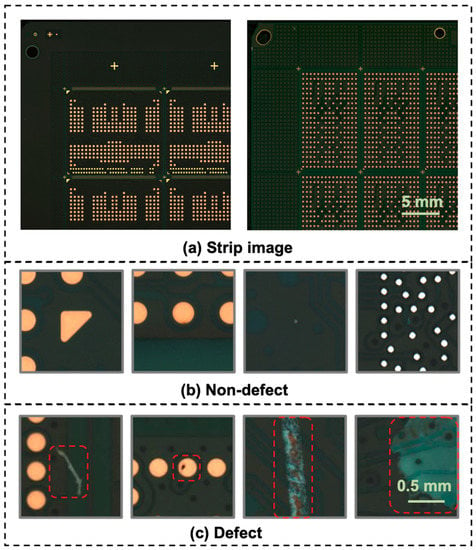

Figure 1.

Examples of (a) strip-level PCB images and (b) non-defect and (c) defect images acquired by the AFVI system: the red dashed boxes in (c) represent the defect locations on the circuit board.

Figure 1a shows examples of strip-level PCB images. Figure 1b,c show the data collected as defects from the strip-level PCB images using the AFVI system. Figure 1b shows board patch images that passed manual screening, either without defects or with very small imperfections that did not affect the functional integrity of the board. Figure 1c shows example images with defects of various shapes, sizes, and colors. In this study, 500 different strip-level PCBs were utilized. Approximately 90% of the defects on these PCBs were foreign materials and scratches, while 10% were cracks, nicks, discoloration, and protrusions. Although the defect rates varied among the PCBs, approximately 40 defect images were acquired per strip-level PCB from the AFVI system, with an average 90% chance of false detection.

2.1.2. Data Preparation

Images of the board patches were resized to 100 × 100 pixels for training and testing. The training data were split into labeled and unlabeled data. For the experiments, the number of labeled data was varied as 250, 500, 1000, 2000, and 4000 (half of the labeled data were from either the defect or non-defect classes). The remaining training data were unlabeled (16,909 images in total) to facilitate the generalization of the PCB_SS model [17]. Validation and test data (500 and 1500 images, respectively) were used to verify and evaluate the model performance during and after training. Table 1 summarizes the data used in this study. To evaluate the error resistances of the models with noisy training data, approximately 0%, 3%, 9%, and 12.5% of the labeled data from the 4000 labeled sets (Label_4000 in Table 1) were relabeled incorrectly, as shown in Table 2.
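The construction of the noisy label sets can be illustrated with a minimal sketch. It assumes binary labels (0 for non-defect, 1 for defect) and that the relabeled samples are drawn uniformly at random; the text does not state how the incorrectly labeled samples were chosen, so the function name `inject_label_noise`, the seed, and the selection rule are illustrative assumptions rather than the procedure used in this study.

```python
import numpy as np

def inject_label_noise(labels, noise_ratio, seed=0):
    """Flip a fraction of binary labels (0 = non-defect, 1 = defect).

    Assumption: samples to relabel are chosen uniformly at random.
    """
    labels = np.asarray(labels).copy()
    rng = np.random.default_rng(seed)
    n_flip = int(round(noise_ratio * labels.size))
    flip_idx = rng.choice(labels.size, size=n_flip, replace=False)
    labels[flip_idx] = 1 - labels[flip_idx]  # swap the two classes
    return labels, flip_idx

# Balanced stand-in for the Label_4000 set: 2000 non-defect, 2000 defect.
clean = np.repeat([0, 1], 2000)
for ratio in (0.0, 0.03, 0.09, 0.125):
    noisy, _ = inject_label_noise(clean, ratio)
    n_wrong = int((noisy != clean).sum())
    print(f"noise ratio {ratio:.3f}: {n_wrong} of {clean.size} labels flipped")
```

Under these assumptions, the 9% setting corresponds to 360 relabeled images out of 4000, and the 12.5% setting to 500.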

Table 1.

Dataset used in this study.

Table 2.

Dataset for the model error-resilience test.

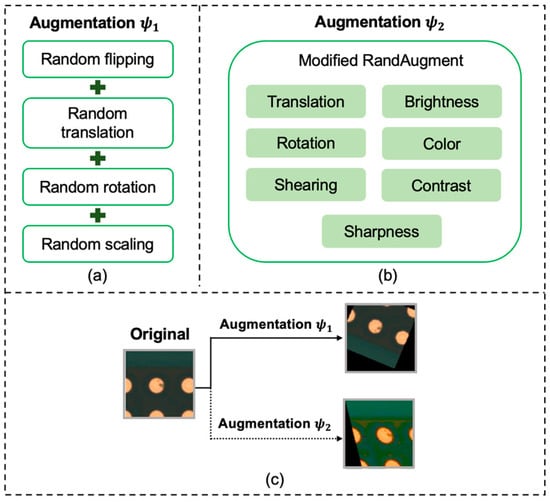

Inspired by FixMatch [17,18,19], different augmentation strategies were applied to the labeled and unlabeled data. The augmentation methods are illustrated in Figure 2. Figure 2a illustrates the augmentation applied to the labeled data of PCB_SS, which comprised random horizontal/vertical flipping, translation, rotation, and scaling. For the augmentation shown in Figure 2b, two transformations (from translation, rotation, shearing, sharpness, contrast, color, and brightness) were randomly selected, along with the magnitudes of these transformations, for a batch of images. The same predictions were expected from the unlabeled data when perturbed by the two different augmentations (i.e., those in Figure 2a and Figure 2b). For the PCB_FS model, the augmentation in Figure 2b was used. The range of the transformations is listed in Table 3. For each epoch, all the input training data for the PCB_SS and PCB_FS models were replaced by the augmented data.
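The two augmentation styles can be sketched as follows. This is an illustration only: the transformation ranges of Table 3 are not reproduced, so the shift limit, the normalized magnitudes in [0, 1], and the names `flip_and_shift`, `sample_policy`, and `OPS` are hypothetical, and rotation and scaling are omitted from the first function for brevity.

```python
import numpy as np

# Transformation names taken from the text; the list itself is illustrative.
OPS = ("translation", "rotation", "shearing", "sharpness",
       "contrast", "color", "brightness")

def flip_and_shift(image, rng, max_shift=10):
    """Random flips plus a random translation on an (H, W, C) array.

    max_shift is a hypothetical value, not a range from Table 3.
    """
    if rng.random() < 0.5:
        image = image[:, ::-1]   # horizontal flip
    if rng.random() < 0.5:
        image = image[::-1, :]   # vertical flip
    dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
    # np.roll wraps pixels around the border; a real pipeline would pad instead.
    return np.roll(image, shift=(int(dy), int(dx)), axis=(0, 1))

def sample_policy(rng, n_ops=2):
    """Pick n_ops distinct transformations, each with a random magnitude in [0, 1]."""
    names = rng.choice(OPS, size=n_ops, replace=False)
    magnitudes = rng.random(n_ops)
    return [(str(n), float(m)) for n, m in zip(names, magnitudes)]

rng = np.random.default_rng(0)
patch = rng.random((100, 100, 3))   # stand-in for a resized board patch
augmented = flip_and_shift(patch, rng)
policy = sample_policy(rng)
print(augmented.shape, policy)
```

The sampled policy would then be handed to an image library that implements the named transformations; that step is left out because the exact operations and ranges are specific to the study.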

Figure 2.

Augmentation process used in this study: (a) augmentation for labeled data and for unlabeled data used in pseudo-label prediction, (b) augmentation for unlabeled data, and (c) examples of images augmented by the two types.

Table 3.

List of transformations and their ranges.

2.2. Deep-Learning Approach

2.2.1. PCB_FS Model

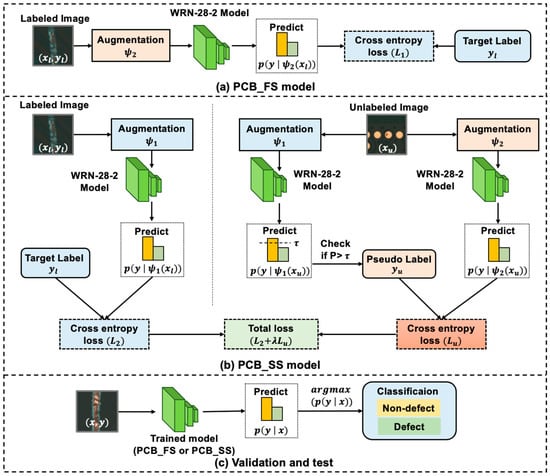

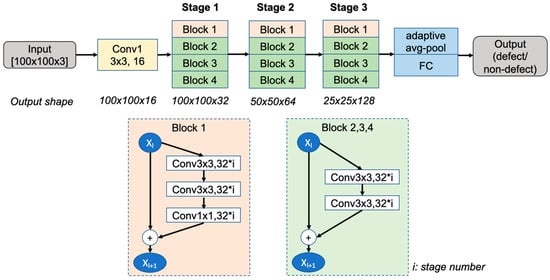

Figure 3a shows the process of training the PCB_FS model using only the labeled data as the baseline. The input image is first augmented and then fed into the model. WideResNet-28-2 (WRN-28-2) [20] was selected as the network architecture for this study. The architecture of the WRN-28-2 is shown in Figure 4. This model is a wider version of the residual network (ResNet), with 1.47 million parameters, 28 convolutional layers, and a widening factor of 2 relative to the original ResNet. The final layer outputs the probabilities of the class labels (i.e., two classes for classifying defects and non-defects), and the class with the highest probability is taken as the prediction. The loss between the target and predicted classes was defined based on the cross-entropy (CE) loss.

Figure 3.

Pipelines for the (a) PCB_FS and (b) PCB_SS models; (c) classification process of the validation and test datasets for the trained model from either (a) PCB_FS or (b) PCB_SS. The diagram labels denote the labeled image, target label, unlabeled image, pseudo-label, class probabilities (p), and pseudo-label threshold (τ); WRN-28-2: WideResNet-28-2; x, y: validation and test images and their labels.

Figure 4.

Architecture of WideResNet-28-2 (WRN-28-2) (FC: fully connected layer).

2.2.2. PCB_SS Model

The PCB_SS model used labeled data in the same way as the PCB_FS model (Figure 3a), with the augmentation in Figure 2a applied to the labeled data, and leveraged the unlabeled data to improve generalization, as shown in Figure 3b. For unlabeled data, after applying the augmentation in Figure 2a, the model predicted the class of the image with the highest probability and assigned a pseudo-label if the probability was higher than a predefined threshold τ. The pseudo-label was then compared with the class predicted from the augmentation in Figure 2b to compute the unsupervised loss function. Both the supervised and unsupervised loss functions were calculated based on the CE loss, and a pseudo-label was assigned only to the class with the highest probability.

In these losses, the supervised term compares the target label with the probability distribution of each class predicted by the model for the augmented version of the labeled image. The unsupervised term compares the pseudo-label with the prediction for the unlabeled image, where q denotes the probability distribution of each class predicted by the model for the augmented version of the unlabeled image and μ is the relative coefficient of the unlabeled images in a batch.

The total loss was calculated as the sum of the supervised loss and the unsupervised loss weighted by λ, the coefficient of unlabeled loss, which is a fixed scalar hyperparameter that determines the ratio of unsupervised to supervised losses. The value of λ used in this study is listed in Table 4.
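For reference, the losses described in this section can be written in the form used by the original FixMatch formulation [18]. This is a sketch under the assumption that PCB_SS follows that formulation. The symbols $B$ (labeled batch size), $H$ (cross-entropy), $x_b$, $y_b$, $u_b$, $p_m$ (model prediction), and the augmentation operators $\mathcal{A}_1$ (Figure 2a) and $\mathcal{A}_2$ (Figure 2b) are notation introduced here for illustration, and the equation numbering of the study is not reproduced.

```latex
\begin{aligned}
\ell_s &= \frac{1}{B}\sum_{b=1}^{B} H\bigl(y_b,\; p_m(y \mid \mathcal{A}_1(x_b))\bigr),\\
q_b &= p_m\bigl(y \mid \mathcal{A}_1(u_b)\bigr), \qquad \hat{q}_b = \arg\max q_b,\\
\ell_u &= \frac{1}{\mu B}\sum_{b=1}^{\mu B} \mathbb{1}\bigl[\max q_b \ge \tau\bigr]\,
          H\bigl(\hat{q}_b,\; p_m(y \mid \mathcal{A}_2(u_b))\bigr),\\
\ell &= \ell_s + \lambda\,\ell_u .
\end{aligned}
```

The indicator term is what restricts the unsupervised loss to unlabeled images whose highest predicted probability reaches the threshold τ; all other unlabeled images contribute zero to $\ell_u$ in that step.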

Table 4.

Hyperparameter configuration for the training process.

2.2.3. Network Training

Table 4 lists the hyperparameter configuration used to train the PCB_FS and PCB_SS models. The parameters of the convolutional and fully connected layers were initialized by the He and Xavier (Glorot) initializations, respectively [21,22]. The total loss in a batch was backpropagated and used to update the model weights using stochastic gradient descent (SGD) optimization with Nesterov momentum [23]. To accelerate the training, the learning rate was initially set to 0.001 for both models and scheduled during training using a cosine learning rate decay [18,23], which depends on the initial learning rate, the current training step, and the total number of training steps. The threshold (τ) for the pseudo-label of the unlabeled data was 0.9. After updating the gradients of the data in a batch, the parameters of the model were averaged over the training iterations using the exponential moving average (EMA) method [24] to avoid fluctuation, with a smoothing coefficient of α = 0.999.

Here, α denotes the smoothing coefficient (EMA decay). The update combines the weights of the EMA model from the previous step with the weights of the gradient-updated model at the current step to give the EMA weights for the current step. The validation set was evaluated after every training period of 256 iterations. The weight decay coefficient is a regularization factor used to decrease the weights during the optimization.
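The schedule and the weight averaging can be sketched in a few lines. The cosine form below, with the factor 7π/16, is the one used in FixMatch [18] and is an assumption here, since the exact expression used for PCB_SS is not reproduced in this text. The EMA update follows the usual convention in which the decay α multiplies the previous averaged weights. The function names and the step count are hypothetical.

```python
import math

def cosine_lr(step, total_steps, base_lr=0.001):
    """FixMatch-style cosine decay: base_lr * cos(7*pi*step / (16*total_steps))."""
    return base_lr * math.cos(7.0 * math.pi * step / (16.0 * total_steps))

def ema_update(ema_weights, model_weights, decay=0.999):
    """One EMA step per parameter: ema <- decay * ema + (1 - decay) * model."""
    return [decay * e + (1.0 - decay) * w
            for e, w in zip(ema_weights, model_weights)]

total_steps = 1000  # hypothetical number of training steps
print(cosine_lr(0, total_steps), cosine_lr(total_steps, total_steps))

# Toy example with two scalar "weights" held fixed at 1.0 and 2.0.
ema = [0.0, 0.0]
for _ in range(3):
    ema = ema_update(ema, [1.0, 2.0])
print(ema)
```

With α = 0.999, each step moves the averaged weights only 0.1% of the way toward the current model weights, which is what smooths out step-to-step fluctuation.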

The pseudocode of the PCB_SS model is as follows (Algorithm 1).

Algorithm 1. Pseudocode of the PCB_SS model.
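As an illustration of the loss computation inside one training step, the following NumPy sketch follows the FixMatch-style procedure described in Section 2.2.2. It is not a reproduction of Algorithm 1. The network is replaced by precomputed logits, and the function names, the toy logits, and the default `lam=1.0` are assumptions (the value of λ used in this study is the one listed in Table 4).

```python
import numpy as np

def softmax(logits):
    z = logits - logits.max(axis=1, keepdims=True)  # stabilize the exponentials
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def cross_entropy(probs, targets):
    """Per-sample CE between predicted probabilities and integer class targets."""
    return -np.log(probs[np.arange(len(targets)), targets] + 1e-12)

def semi_supervised_loss(logits_lab, labels, logits_unl_a, logits_unl_b,
                         tau=0.9, lam=1.0):
    # Supervised CE on the labeled batch.
    loss_s = cross_entropy(softmax(logits_lab), labels).mean()
    # Pseudo-labels from the first augmented view of the unlabeled batch.
    q = softmax(logits_unl_a)
    pseudo = q.argmax(axis=1)
    mask = q.max(axis=1) >= tau
    # CE of the second view against the pseudo-labels;
    # samples below the threshold contribute zero.
    loss_u = (mask * cross_entropy(softmax(logits_unl_b), pseudo)).mean()
    return loss_s + lam * loss_u, mask

# Toy logits for 2 labeled and 3 unlabeled images (two classes).
logits_lab = np.array([[2.0, -1.0], [0.0, 3.0]])
labels = np.array([0, 1])
logits_unl_a = np.array([[4.0, 0.0], [0.2, 0.0], [0.0, 5.0]])
logits_unl_b = np.array([[3.0, 0.0], [0.0, 1.0], [1.0, 2.0]])

total, mask = semi_supervised_loss(logits_lab, labels, logits_unl_a, logits_unl_b)
print(total, mask)
```

In this toy batch, the second unlabeled image has a maximum probability of about 0.55 and is therefore excluded from the unsupervised loss, while the other two exceed the threshold of 0.9.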

2.3. Performance Evaluation

To evaluate the performance of the PCB_FS and PCB_SS models, all models were trained three times. The classification process is illustrated in Figure 3c. The error rate, i.e., the proportion of misclassified data points in the test data, was computed as follows:

$$\text{Error rate} = \frac{FP + FN}{TP + TN + FP + FN} \times 100\%$$

where FP, FN, TP, and TN denote the number of false-positives, false-negatives, true-positives, and true-negatives, respectively, for the defect class. The average error rates for the test data from the three training sessions were calculated.

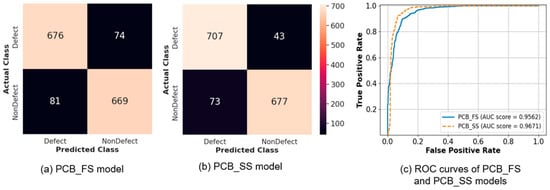

The best PCB_FS and PCB_SS models were selected from the top three trained models and compared in terms of recall, precision, area under the curve (AUC) score, confusion matrix, and receiver operating characteristics (ROC) curve [25]. Detecting a defect case has a higher priority than detecting a non-defect case. Thus, the recall (i.e., true-positive rate) of the defect class is an important metric for verifying the validity of the proposed method. Precision indicates the proportion of correct defect predictions for all the defects classified by the model. The ROC curve shows the true-positive rate against the false-positive rate by varying the probability thresholds for the prediction. To visualize the regions in which the model concentrates for the decision, gradient-weighted class activation mapping (Grad-CAM++) [26] was utilized.
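The relationship between these metrics and the confusion-matrix counts can be made concrete with a short sketch. The counts below are hypothetical and are not taken from Figure 6; the function name is likewise illustrative.

```python
def classification_metrics(tp, fp, tn, fn):
    """Error rate, recall, and precision for the defect (positive) class."""
    total = tp + fp + tn + fn
    return {
        "error_rate": (fp + fn) / total,   # share of misclassified samples
        "recall": tp / (tp + fn),          # defects that were caught
        "precision": tp / (tp + fp),       # predicted defects that were real
    }

# Hypothetical counts for a 1500-image test set.
metrics = classification_metrics(tp=700, fp=60, tn=690, fn=50)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

A missed defect increases FN and therefore lowers recall, which is why recall of the defect class is treated as the priority metric in this study.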

To further evaluate the performance of the proposed model, a machine-learning algorithm was applied and compared. First, the features were extracted and quantized using a scale-invariant feature transform (SIFT) algorithm [27]. XGBoost (learning rate 0.15, gamma 0.4, maximum depth 10, and minimum sum of instance weight 5) used the extracted features [28,29]. Grid-search cross-validation was used to set the optimal hyperparameters for the best performance [30]. In addition, the performance of the proposed model was compared with that of a deep-learning classifier. EfficientNet-B5 was utilized with the same hyperparameters as in the PCB_FS model.
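The reported XGBoost settings map onto the library's scikit-learn-style parameters as sketched below. The mapping of "minimum sum of instance weight" to `min_child_weight` follows XGBoost's own parameter description; the helper `build_classifier` and the idea of passing quantized SIFT features as a fixed-length feature matrix are assumptions for illustration, not details given in the text.

```python
# Hyperparameters as reported in the text, using XGBoost's parameter names.
XGB_PARAMS = {
    "learning_rate": 0.15,
    "gamma": 0.4,
    "max_depth": 10,
    "min_child_weight": 5,  # minimum sum of instance weight needed in a child
}

def build_classifier(params=XGB_PARAMS):
    """Create the classifier; xgboost is imported lazily so that the
    parameter dictionary can be inspected without the package installed."""
    from xgboost import XGBClassifier
    return XGBClassifier(**params)

print(XGB_PARAMS)
```

A classifier built this way would be fitted with `fit(features, labels)`, where `features` is a hypothetical array holding one quantized SIFT descriptor vector per image.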

3. Results

3.1. Learning Curves

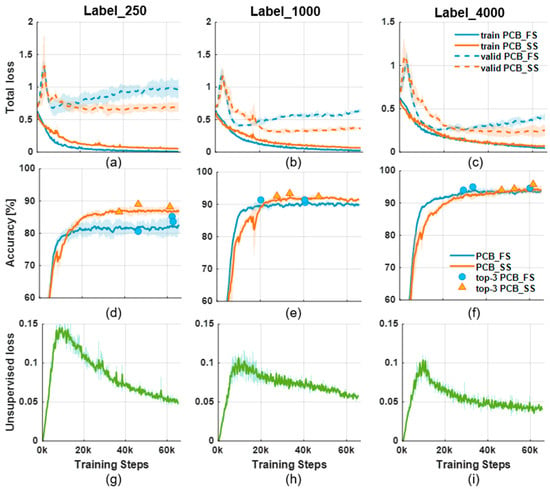

Figure 5 shows the learning curves of the PCB_FS and PCB_SS models when trained with 250, 1000, and 4000 labeled images. Figure 5a–c show the total losses during the training of the PCB_FS and PCB_SS models using 250, 1000, and 4000 labeled data, respectively. Overall, increasing the number of labeled data did not result in significant differences in the training losses for the PCB_FS and PCB_SS models. However, the validation loss decreased noticeably with increasing numbers of labeled data. The validation loss of the PCB_SS model was lower than that of the PCB_FS model because the PCB_SS model was generalized by leveraging unlabeled data. The accuracies shown in Figure 5d–f for the validation set also show that the PCB_SS model outperformed the PCB_FS model. Figure 5g–i show the unsupervised losses from the PCB_SS model during training. In the early training stage (0–10,000 training steps), the unsupervised losses increased because a large number of unlabeled data had a higher probability of a false class than the threshold value (see Equation (3)). This indicates that the model parameters were initially learned from the features extracted from the labeled data. Subsequently, the PCB_SS model started to learn the features from the unlabeled data and gradually minimized the unsupervised loss.

Figure 5.

Learning curves during training for Label_250, Label_1000, and Label_4000: (a–c) total losses on training data (solid lines) and validation losses (dotted lines), (d–f) validation accuracies (circle and triangle: training steps of top three accuracies for PCB_FS and PCB_SS, respectively), and (g–i) unsupervised losses on the unlabeled data of the PCB_SS model.

3.2. Performance Evaluation

Table 5 shows the error rates of the test data inferred from the PCB_FS and PCB_SS models trained with different numbers of labeled data. Overall, the error rate decreased when the models were trained using a larger number of labeled samples. The PCB_SS model outperformed the PCB_FS model in all the cases. The error rates of the PCB_SS model were lower than those of the PCB_FS model by 8.25% and 3.40% for Label_250 and Label_4000, respectively. In addition, the PCB_SS model with Label_500 (a mean error rate of 11.98%) achieved results comparable to those of the PCB_FS model with Label_4000 (a mean error rate of 11.22%).

Table 5.

Error rates (%) of test data with different numbers of labeled data (Best result in bold).

The best models for PCB_FS and PCB_SS learning were obtained from Label_4000. The confusion matrices for the best PCB_FS and PCB_SS models are shown in Figure 6a,b, and the ROC curves for these models are shown in Figure 6c. The number of misclassified images was higher for the PCB_FS model in both classes (defects and non-defects). Table 6 summarizes the performance of the proposed models (PCB_SS and PCB_FS), XGBoost, and EfficientNet-B5. Deep-learning models obtained better classification results than machine-learning algorithms. The proposed PCB_SS model outperformed XGBoost, EfficientNet, and PCB_FS by 15.2, 4.1, and 2.9%, respectively, in terms of accuracy. Overall, the proposed PCB_SS model achieved greater recall, precision, and AUC scores than the other models, as shown in Table 6.

Figure 6.

Confusion matrix of the (a) PCB_FS and (b) PCB_SS models, and (c) ROC curves for the two models (solid: PCB_FS, dotted: PCB_SS).

Table 6.

Classification performances of the PCB_FS and PCB_SS models and the machine-learning (XGBoost) and deep-learning (EfficientNet) approaches (Best result in bold).

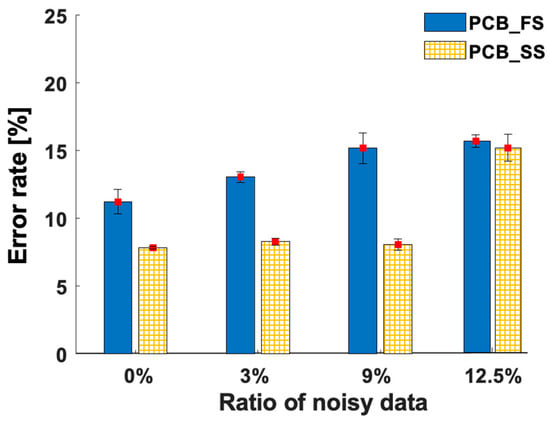

Table 7 and Figure 7 illustrate the error rates of the test data from the error-resilience test of the deep-learning models. The error rate of the PCB_FS model increased by 4% when the noisy data ratio was increased to 9%. Meanwhile, the error rate of the PCB_SS model was relatively consistent: it increased by less than 0.5% as the noisy data ratio varied from 0% to 9%. When the noisy data ratio increased to 12.5%, the error rate of the PCB_SS model was similar to that of the PCB_FS model. Thus, the PCB_SS model was more robust to noisy data (i.e., incorrectly labeled data) than the PCB_FS model when the noisy data ratio was lower than 12.5%.

Table 7.

Error rates (%) of test data with different ratios of noisy data (Best result in bold).

Figure 7.

Error rates (%) of the test data with different ratios of noisy data.

In the error-resilience test, the unsupervised loss helped the PCB_SS model resist noisy data in the labeled set, whereas the PCB_FS model performed worse when incorrectly labeled data were present in the training set. Table 8 lists the error rates of the unlabeled data from the error-resilience tests of the models. The error rates of the test data were associated with those of the unlabeled data. When the noisy data ratio ranged from 0% to 9%, the error rates of the unlabeled and test data of the PCB_SS model remained consistent, while those of the PCB_FS model increased by 4.6% and 3.9% for the unlabeled data (Table 8) and test data (Table 7), respectively. With a noisy data ratio of 12.5%, the error rate of the unlabeled data increased by 3.3% (Table 8), and that of the test data increased by 7.1% (Table 7). This result also indicates that incorrectly labeled data affect the pseudo-labels assigned by the model.

Table 8.

Error rates (%) of unlabeled data with different noisy data ratios (Best result in bold).

3.3. Parameter Optimization

Augmentation is a critical factor in the model performance. Table 9 lists the error rates of the PCB_FS and PCB_SS models with various augmentations in the Label_4000 dataset. For PCB_SS, the augmentation on the labeled data and on the unlabeled data for pseudo-label prediction was replaced by no augmentation and by the augmentation in Figure 2b. With no augmentation, the performance of both models degraded. The PCB_FS and PCB_SS models yielded the best performance with the augmentations in Figure 2b and Figure 2a, respectively.

Table 9.

Error rates of test data of PCB_FS and PCB_SS models with varying augmentations (no augmentation and the two selected augmentations; Best result in bold).

Table 10 lists the error rates along with the recall and precision of the defect class of the PCB_SS model with varying thresholds (0.4, 0.7, 0.9, and 1.0). Assigning an appropriate threshold value (τ = 0.9, Table 10) allows high-quality unlabeled images, with which the model can make inferences with high confidence, to contribute to the reduction in unsupervised loss. A high threshold is expected to improve the performance of the pseudo-labeling [18]. Considering the abrupt error rate increase at 1.0 and the highest recall value at 0.9, a threshold of 0.9 was chosen in this study.

Table 10.

Test set metrics of the PCB_SS model using different thresholds (τ) (Best result in bold).

The coefficient of unlabeled loss (λ) is another parameter that affects the performance of the model. Initially, the loss of labeled data was more critical than that of unlabeled data. However, the effect of unlabeled data increases during training [18]. In the early epochs, most of the pseudo-labels of the unlabeled data were incorrect (i.e., the highest predicted probability fell below the threshold τ in Equation (3)). As the model learns more features from labeled data, the probability of a pseudo-label increases due to the neighbor distribution, and more unlabeled data can exceed the threshold (i.e., the highest predicted probability reaches τ in Equation (3)). Therefore, the effect of the unlabeled loss increases during training, regardless of the initial value of λ.

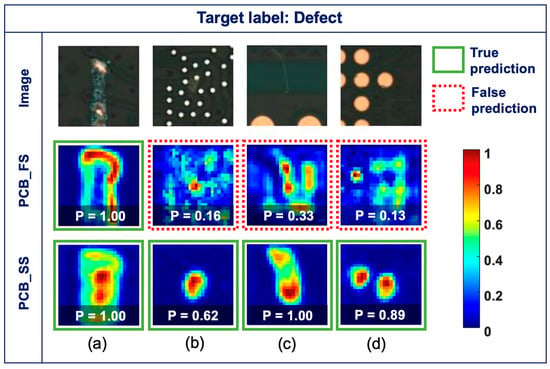

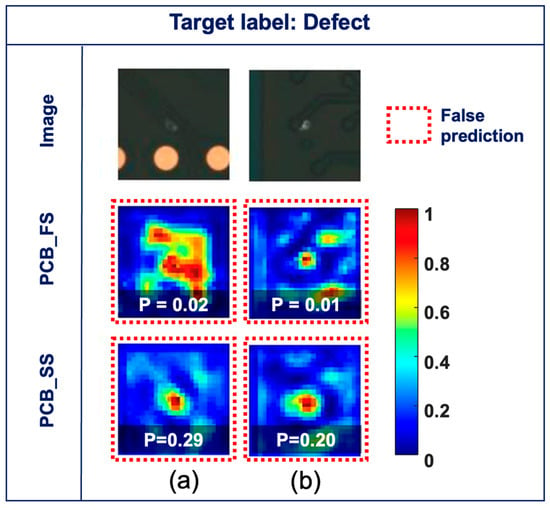

3.4. Gradient Visualization (Grad-CAM)

Figure 8 shows the original images (top row) and Grad-CAM maps obtained from the PCB_FS (middle row) and PCB_SS (bottom row) models for the defect classes and their corresponding probabilities. Figure 8a shows the images classified correctly by the PCB_FS and PCB_SS models with high confidence (i.e., the probabilities of the PCB_FS and PCB_SS models are 1.00). While the Grad-CAM maps from the PCB_FS model focused on the defect boundaries, those from the PCB_SS model focused on the defects more precisely. Figure 8b–d show hard cases of defects (e.g., unclear objects, thin scratches, and small pin holes). The PCB_FS model provides false predictions (i.e., classified as non-defects), which correspond to an incorrect focus from Grad-CAM, and prediction probabilities of 0.16, 0.33, and 0.13 for the input images in Figure 8b–d, respectively. In contrast, the PCB_SS model showed high activation at the defect locations with true predictions and prediction probabilities of 0.62, 1.00, and 0.89 for the input images in Figure 8b–d, respectively. Although the models were trained without information on the defect locations, decisions from the models were made based on the detected features.

Figure 8.

Original images (top row) and Grad-CAM maps obtained using the PCB_FS model (middle row) and PCB_SS model (bottom row): (a) true predictions for both PCB_FS and PCB_SS models, (b–d) false and true predictions for PCB_FS and PCB_SS models, respectively (green solid box: true prediction, red dotted box: false prediction).

4. Discussion

This study demonstrated the robustness of the PCB_SS model for defect classification when the labeled training data were limited or incorrectly labeled. The proposed PCB_SS model can benefit the inspection procedure by reducing the burden of manual inspection. Although the SSL approach for the PCB_SS model was adopted from the FixMatch model [18], the applications of which are limited to object-specific classification, the defect detection in this study was related to feature-related recognition rather than object detection. Furthermore, optimal augmentations for labeled and unlabeled data were selected to improve the effectiveness of the model in increasing the diversity of data without label changes (Table 9). In the SSL approach, it is difficult for the model to generate a neighbor connection between the perturbed versions of the unlabeled data and the labeled data when no augmentation or the augmentation in Figure 2b is applied. Thus, the labeled data and the unlabeled data for the pseudo-label prediction of the PCB_SS model were augmented by the augmentation in Figure 2a. This augmentation can increase the variation in the dataset for various types, shapes, and sizes of defects. The training process for the labeled data can be accelerated because the model is fed differently augmented data after each evaluation step [19]. Labeled data are critical for creating the underlying feature space, and SSL takes advantage of prior knowledge of the domain and data distribution to relate the data and labels to improve the classification performance [17,18,24,31,32]. For the PCB_FS model, the augmentation shown in Figure 2b comprehensively generates diverse variations in the training dataset and reduces the search space of the transformations [19,32]. The computational cost of inspecting a strip-level PCB image was 164 GFLOPs, which required 25 ms using the resources utilized in this study.

Table 6 shows a comparison of the proposed model with the machine-learning (XGBoost) and deep-learning (EfficientNet) approaches. The results show that the proposed model is efficient for PCB defect detection, especially when the differences between the defect and non-defect images are small and the defect cases vary widely. Because the percentage of misclassified defect patches from the AFVI system is high (90%, on average), it is crucial to utilize a deep-learning approach to enhance the inspection process, alleviate the false detection of the AFVI system, and thus reduce the need for further manual validation. Based on this comparison, the current backbone model (i.e., WRN-28-2) is superior to EfficientNet. However, various deep-learning models can be selected as the backbone in future work.

Training the network using unlabeled data and adding unsupervised loss made it possible to generalize the parameters of the PCB_SS model (i.e., overfitting was avoided) (Figure 5). This generalization of the model can help classify unseen data, which were not previously introduced into the training data, based on the information learned from the unlabeled data. As shown in Figure 5, the difference in the validation losses between the PCB_FS and PCB_SS models was higher than the difference in the validation accuracies between the models. This can occur when the model is less certain about the prediction, resulting in a higher loss even though the model predicts correctly. The Grad-CAM maps (Figure 8) visually confirmed that the model extracted features from the defect regions for decision making. Furthermore, the augmentation process did not affect the decision features, although it modified the training images. In addition, the PCB_SS model can resist noisy data better than the PCB_FS model (Table 7 and Figure 7).

In this study, the error rate of the unlabeled data with a probability exceeding the predefined threshold (τ) for the PCB_SS model decreased as the number of labeled data increased from 250 to 4000 (15.94% and 3.95% for Label_250 and Label_4000, respectively). This indicates that a larger number of correctly labeled data can provide more guidance for the learning procedure, which also improves the clustering process for unlabeled data. The quality of the pseudo-labels predicted from the unlabeled data is critical for obtaining high accuracy with the PCB_SS model. Further investigations can be conducted on a dynamic threshold that adaptively selects appropriate unlabeled samples to avoid degrading the overall performance. As wider variations were expected for defect cases than for non-defect cases, the proportions of unlabeled data for defects and non-defects were imbalanced (i.e., more defect than non-defect data), as shown in Table 1. The confusion matrices (Figure 6) demonstrated that the reduction in false predictions between the PCB_FS and PCB_SS models was larger for the defect class (42%) than for the non-defect class (10%). Considering that the main objective of inspection is not to miss defects in PCBs, this imbalance toward defect data can benefit the classification performance. As demonstrated in the confusion matrix (Figure 6), the PCB_SS model had fewer false predictions than the PCB_FS model for the test set. Figure 9 shows the Grad-CAM maps of two defect images that were misclassified by both the PCB_FS and PCB_SS models. Both defects are related to a foreign object (e.g., dust), which is difficult to detect visually. Although the images were misclassified, the Grad-CAM maps showed that the PCB_SS model still concentrated on the defect location. However, the prediction results were also affected by the low activation (approximately 0.3–0.4) of the surrounding regions. Further analysis must be conducted to develop a model that is less affected by the activation of the surrounding region [33].

Figure 9. Examples of defect images (a,b) misclassified by both models.

The current study had several limitations. The PCB_SS model expects ROI images of defect candidates located by the AFVI system. Thus, patch images must be acquired before the current approach can be applied to whole PCBs or assembled PCBs (i.e., PCBAs). When new circuit patterns are tested, the proposed model may require further updates by training with additional circuit-pattern images. The new training process can be performed efficiently by utilizing transfer learning from the previous weights of the model. For the best Label_4000 model, the percentage of unlabeled data with a probability higher than the threshold (τ) was only 88%. The PCB_SS model requires further improvement by creating close connections between the labeled and unlabeled data [34].
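The two pseudo-label statistics discussed above, namely the fraction of unlabeled samples whose top probability passes the threshold and the error rate among those samples, can be computed as in the following sketch. It assumes that reference labels are available for the unlabeled samples for evaluation purposes only. The function name `pseudo_label_stats`, the threshold value of 0.95, and the sample probabilities are hypothetical and are not taken from this study.

```python
def pseudo_label_stats(probs, reference_labels, tau):
    """Return (fraction of samples passing tau, error rate among them).

    probs: per-sample class probabilities predicted for unlabeled images.
    reference_labels: labels used only to evaluate pseudo-label quality.
    tau: confidence threshold; samples with max probability >= tau are kept
         (a FixMatch-style convention, assumed here).
    """
    selected = []
    for p, y in zip(probs, reference_labels):
        confidence = max(p)
        if confidence >= tau:
            selected.append((p.index(confidence), y))
    if not selected:
        return 0.0, None  # no sample passed the threshold
    pass_rate = len(selected) / len(probs)
    error_rate = sum(1 for pred, y in selected if pred != y) / len(selected)
    return pass_rate, error_rate

# Hypothetical predictions (index 0 = non-defect, 1 = defect).
probs = [
    [0.98, 0.02],  # confident and correct
    [0.03, 0.97],  # confident and correct
    [0.96, 0.04],  # confident but wrong (reference label is 1)
    [0.70, 0.30],  # below threshold, ignored
    [0.20, 0.80],  # below threshold, ignored
]
reference_labels = [0, 1, 1, 0, 1]

pass_rate, error_rate = pseudo_label_stats(probs, reference_labels, tau=0.95)
print(f"pass rate: {pass_rate:.2f}, pseudo-label error rate: {error_rate:.2f}")
```

A dynamic threshold, as suggested for future work, would replace the fixed `tau` with a value that is updated during training; that variant is not shown here.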

The results of this study demonstrate that the PCB_SS model performs effectively when only a limited number of data are labeled or when a portion of them are incorrectly labeled. The proposed model was most effective when the defect cases varied significantly with small changes from the non-defect cases. Combining this method with the AFVI system is expected to significantly reduce the need for manual inspection of false detections and potentially lower the cost incurred in the PCB manufacturing process.

5. Conclusions

The proposed PCB_SS model can effectively detect defects in PCB images when trained using labeled and unlabeled data. The unsupervised loss of unlabeled data perturbed by two different augmentations contributes to improving the performance of the PCB_SS model for cases with data-labeling shortages or errors. Further research should focus on investigating and building a robust SSL model for inspection systems that can analyze multiple types of PCB defects and resist errors at higher proportions of noisy data. In addition, wider and deeper variants of WRN-28-2 and advanced models, such as Transformers, will be employed as the backbone to improve the capacity of the deep-learning model [35,36,37]. Furthermore, this study can be extended to classify various types of PCBs [13,38].
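As an illustration of an unsupervised loss computed from two augmentations, the following minimal sketch assumes a FixMatch-style formulation [18]: the prediction on a weakly augmented image provides a pseudo-label when its confidence reaches τ, and the cross-entropy is evaluated on the prediction for the strongly augmented version of the same image. The weak/strong distinction, the function name `unsupervised_loss`, and all numerical values are assumptions made for this example; the exact loss used for PCB_SS may differ.

```python
import math

def unsupervised_loss(weak_probs, strong_probs, tau):
    """FixMatch-style consistency loss over a batch of unlabeled images.

    weak_probs / strong_probs: class probabilities predicted for the weakly
    and strongly augmented view of each image (same order in both lists).
    Samples whose weak-view confidence is below tau contribute zero, and the
    sum is averaged over the whole batch.
    """
    total = 0.0
    for q_weak, q_strong in zip(weak_probs, strong_probs):
        confidence = max(q_weak)
        if confidence >= tau:
            pseudo_label = q_weak.index(confidence)
            total += -math.log(q_strong[pseudo_label])
    return total / len(weak_probs)

# Hypothetical predictions for two unlabeled images.
weak_probs = [[0.97, 0.03], [0.60, 0.40]]
strong_probs = [[0.80, 0.20], [0.30, 0.70]]

loss_strict = unsupervised_loss(weak_probs, strong_probs, tau=0.95)
loss_loose = unsupervised_loss(weak_probs, strong_probs, tau=0.50)
print(f"tau=0.95: {loss_strict:.4f}, tau=0.50: {loss_loose:.4f}")
```

With the stricter threshold only the first image contributes, whereas the looser threshold also admits the second image, whose pseudo-label disagrees with the strong-view prediction and therefore increases the loss.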

Author Contributions

Conceptualization, S.P. and T.T.A.P.; methodology, T.T.A.P. and H.C.; software, T.T.A.P. and D.K.T.T.; validation, T.T.A.P. and S.P.; data curation, H.C.; writing—original draft preparation, T.T.A.P. and D.K.T.T.; writing—review and editing, H.C. and S.P. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF), funded by the Ministry of Science and ICT (grant number NRF-2023R1A2C2003737).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Moganti, M.; Erçal, F.; Dagli, C.H.; Tsunekawa, S. Automatic PCB Inspection Algorithms: A Survey. Comput. Vis. Image Underst. 1996, 63, 287–313.

- Zheng, X.; Zheng, S.; Kong, Y.; Chen, J. Recent advances in surface defect inspection of industrial products using deep learning techniques. Int. J. Adv. Manuf. Technol. 2021, 113, 35–58.

- Huang, S.-H.; Pan, Y.-C. Automated visual inspection in the semiconductor industry: A survey. Comput. Ind. 2015, 66, 1–10.

- Wang, Y.; Wang, M.; Zhang, Z. Microfocus X-ray printed circuit board inspection system. Optik 2014, 125, 4929–4931.

- Wang, F.; Yue, Z.; Liu, J.; Qi, H.; Sun, W.; Chen, M.; Wang, Y.; Yue, H. Quantitative imaging of printed circuit board (PCB) delamination defects using laser-induced ultrasound scanning imaging. J. Appl. Phys. 2022, 131, 053101.

- Wagh, C.R.; Baru, V.B. Detection of Faulty Region on Printed Circuit Board With IR Thermography. Int. J. Sci. Eng. Res. 2013, 4, 544523.

- Nadaf, M.; Kolkure, M.V.S. Detection of Bare PCB Defects by using Morphology Technique. Bus. Mater. Sci. 2016, 120142102.

- Benedek, C. Detection of soldering defects in Printed Circuit Boards with Hierarchical Marked Point Processes. Pattern Recognit. Lett. 2011, 32, 1535–1543.

- Oguz, S.H.; Onural, L. An automated system for design-rule-based visual inspection of printed circuit boards. In Proceedings of the 1991 IEEE International Conference on Robotics and Automation, Sacramento, CA, USA, 9–11 April 1991; Volume 2693, pp. 2696–2701.

- Deng, Y.-S.; Luo, A.-C.; Dai, M.-J. Building an Automatic Defect Verification System Using Deep Neural Network for PCB Defect Classification. In Proceedings of the 2018 4th International Conference on Frontiers of Signal Processing (ICFSP), Poitiers, France, 24–27 September 2018; pp. 145–149.

- Zhang, Z.-Q.; Wang, X.; Liu, S.; Sun, L.; Chen, L.; Guo, Y.-M. An Automatic Recognition Method for PCB Visual Defects. In Proceedings of the 2018 International Conference on Sensing, Diagnostics, Prognostics, and Control (SDPC), Xi’an, China, 15–17 August 2018; pp. 138–142.

- Taha, E.M.; Emary, E.E.; Moustafa, K. Automatic Optical Inspection for PCB Manufacturing: A Survey. Int. J. Sci. Eng. Res. 2014, 5, 1095–1102.

- Imoto, K.; Nakai, T.; Ike, T.; Haruki, K.; Sato, Y. A CNN-Based Transfer Learning Method for Defect Classification in Semiconductor Manufacturing. IEEE Trans. Semicond. Manuf. 2019, 32, 455–459.

- Miao, Y.; Liu, Z.; Wu, X.; Gao, J. Cost-Sensitive Siamese Network for PCB Defect Classification. Comput. Intell. Neurosci. 2021, 2021, 7550670.

- He, F.; Tang, S.; Mehrkanoon, S.; Huang, X.; Yang, J. A Real-time PCB Defect Detector Based on Supervised and Semi-supervised Learning. In Proceedings of the ESANN, Bruges, Belgium, 2–4 October 2020.

- Shi, W.; Zhang, L.; Li, Y.; Liu, H. Adversarial semi-supervised learning method for printed circuit board unknown defect detection. J. Eng. 2020, 2020, 505–510.

- Xie, Q.; Dai, Z.; Hovy, E.H.; Luong, M.-T.; Le, Q.V. Unsupervised Data Augmentation for Consistency Training. arXiv 2019, arXiv:1904.12848.

- Sohn, K.; Berthelot, D.; Li, C.-L.; Zhang, Z.; Carlini, N.; Cubuk, E.D.; Kurakin, A.; Zhang, H.; Raffel, C. FixMatch: Simplifying Semi-Supervised Learning with Consistency and Confidence. arXiv 2020, arXiv:2001.07685.

- Cubuk, E.D.; Zoph, B.; Shlens, J.; Le, Q.V. Randaugment: Practical automated data augmentation with a reduced search space. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 3008–3017.

- Zagoruyko, S.; Komodakis, N. Wide Residual Networks. arXiv 2016, arXiv:1605.07146.

- Glorot, X.; Bengio, Y. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the AISTATS, Sardinia, Italy, 13–15 May 2010.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1026–1034.

- Loshchilov, I.; Hutter, F. SGDR: Stochastic Gradient Descent with Warm Restarts. arXiv 2017, arXiv:1608.03983.

- Tarvainen, A.; Valpola, H. Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. In Proceedings of the NIPS, Long Beach, CA, USA, 4–9 December 2017.

- Fawcett, T. An introduction to ROC analysis. Pattern Recognit. Lett. 2006, 27, 861–874.

- Chattopadhyay, A.; Sarkar, A.; Howlader, P.; Balasubramanian, V.N. Grad-CAM++: Improved Visual Explanations for Deep Convolutional Networks. arXiv 2018, arXiv:1710.11063.

- Chiu, L.C.; Chang, T.S.; Chen, J.Y.; Chang, N.Y. Fast SIFT design for real-time visual feature extraction. IEEE Trans. Image Process. 2013, 22, 3158–3167.

- Chang, C.-C.; Lin, C.-J. Libsvm: A library for Support Vector Machines. ACM Trans. Intell. Syst. Technol. 2011, 2, 1–27.

- Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

- Rosebrock, A. Grid Search Hyperparameter Tuning with Scikit-Learn (GridSearchCV). Available online: https://www.pyimagesearch.com/2021/05/24/grid-search-hyperparameter-tuning-with-scikit-learn-gridsearchcv/ (accessed on 1 April 2022).

- Yang, X.; Song, Z.; King, I.; Xu, Z. A Survey on Deep Semi-supervised Learning. arXiv 2021, arXiv:2103.00550.

- Pham, H.; Xie, Q.; Dai, Z.; Le, Q.V. Meta Pseudo Labels. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 21–25 June 2021; pp. 11552–11563.

- Lee, D.; Kim, S.; Kim, I.; Cheon, Y.; Cho, M.; Han, W.-S. Contrastive Regularization for Semi-Supervised Learning. arXiv 2022, arXiv:2201.06247.

- Amorim, W.P.; Falcão, A.X.; Papa, J.P.; Carvalho, M.H. Improving semi-supervised learning through optimum connectivity. Pattern Recognit. 2016, 60, 72–85.

- Jin, J.; Feng, W.; Lei, Q.; Gui, G.; Li, X.; Deng, Z.; Wang, W. Defect Detection of Printed Circuit Boards Using EfficientDet. In Proceedings of the 2021 IEEE 6th International Conference on Signal and Image Processing (ICSIP), Nanjing, China, 9–11 July 2021; pp. 287–293.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 9992–10002.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. arXiv 2019, arXiv:1905.11946.

- Huang, W.; Wei, P. A PCB Dataset for Defects Detection and Classification. arXiv 2019, arXiv:1901.08204.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).