Abstract

We propose an omnidirectional measurement method without blind spots. It uses convex mirrors, which in principle do not cause chromatic aberration, and it measures vertical disparity by placing two cameras one above the other. In recent years, there has been significant research on autonomous cars and robots, and in these fields three-dimensional measurement of the surrounding environment has become indispensable. Camera-based depth sensing is one of the most important means of recognizing the surrounding environment. Previous studies have attempted to measure wide areas using fisheye and full spherical panoramic cameras. However, these approaches have limitations such as blind spots and the need for multiple cameras to measure all directions. This paper therefore describes a stereo camera system that uses devices capable of capturing an omnidirectional image in a single shot. The system enables omnidirectional measurement with only two cameras, which was difficult to achieve with conventional stereo cameras. Experiments showed that the maximum relative error was reduced by up to 37.4 percentage points compared with previous studies. In addition, the system generated a depth image in which distances in all directions can be recognized in a single frame, demonstrating the feasibility of omnidirectional measurement with two cameras.

1. Introduction

1.1. Background

In recent years, there has been a great deal of research and development on driver assistance systems for automobiles, fully automated driving, and unmanned search robots for disaster areas. As representative examples of autonomous driving technology, we introduce the unmanned vehicle “R2” [1], the serving robot “BellaBot” [2], and the electric vehicle “e-Palette” [3].

The “R2” [1] is an unmanned vehicle developed by Nuro, an American self-driving technology company. The company launched a service to transport medical equipment, medicines, food, and beverages to assist medical personnel responding to the coronavirus disease 2019 (COVID-19) pandemic.

BellaBot [2] is a cat-shaped meal delivery robot developed by Pudu Robotics. It provides a stable, contactless food delivery service and has already been introduced in many restaurants in Japan.

The “e-Palette” [3] is an electric vehicle developed by Toyota Motor Corporation exclusively for Autono-MaaS (autonomous mobility as a service). As a Worldwide Mobility Partner of the Olympic and Paralympic Games, Toyota provided more than a dozen e-Palettes (Tokyo 2020 specification), the first Toyota electric vehicles dedicated to Autono-MaaS, which served as buses transporting athletes and Games officials around the athletes' village.

In these fields, forward distance measurement and three-dimensional measurement of the surrounding environment are indispensable. Among the various types of sensors, cameras are the most widely used and among the most important for recognizing the surrounding environment. Camera-based depth sensing has been studied for a long time and is widely used because of its high measurement density, wide measurement range, and the variety of information it provides.

1.2. Existing Technology

Depth sensing with cameras is often based on stereo matching, which computes the disparity between corresponding pixel regions in a pair of images captured by two cameras and converts it into distance [4]. Stereo matching has been studied extensively for distance measurement, especially for automatic guidance, where a wide measurement range is important.

In the study by Hirotaka Iida et al. [5], a fisheye stereo camera was used for distance measurement, enabling a wider measurement range than is possible with ordinary cameras. However, the angle of view of a fisheye lens is generally about 180°, which restricts the measurement range.

In the study by Changhee Won et al. [6], this limitation of Iida et al. [5] was addressed by using four fisheye cameras to measure distance in all directions. Although this method allows stable measurement in all directions, it requires twice as many cameras as the method of Iida et al. [5].

Schönbein et al. [7,8] proposed a method in which omnidirectional visual sensors are mounted at the front of the vehicle roof, on the left and right sides. This method uses two cameras for omnidirectional measurement, but its accuracy is insufficient because each sensor appears in the other's image and the large difference between the expanded images makes matching difficult.

Aoki et al. [9] estimated depth with a stereo omnidirectional camera consisting of two omnidirectional cameras, extracting feature points from the least distorted portion of the fisheye images. Their method therefore estimates depth only from that portion of the image, and because of the fisheye distortion it is difficult to estimate the depth of the entire circumference at the same time. Moreover, feature point extraction itself is difficult in their method.

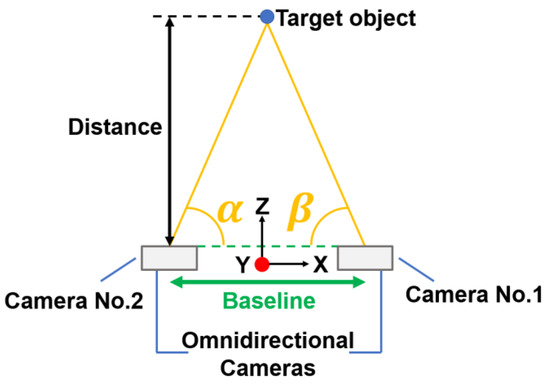

In the study by Shunya Tanaka et al. [10], a commercially available all-sky camera [11] was used to perform object detection by machine learning and azimuth angle calculation, achieving omnidirectional measurement with two cameras. However, this method leaves an undetectable blind spot along the extension of the baseline between the cameras (Figure 1), so it is not a complete omnidirectional measurement. In addition, although the camera captures an omnidirectional image with back-to-back fisheye lenses, these lenses suffer from chromatic aberration. Eliminating chromatic aberration requires stacking multiple lenses, which cannot be accommodated in a small all-sky camera, so the aberration cannot be eliminated. We therefore considered that another omnidirectional imaging method was needed to achieve more accurate measurements.

Figure 1.

Schematic of the method of Tanaka et al. [10].

This research achieves measurement without blind spots by using omnidirectional cameras with convex mirrors, which in principle do not cause chromatic aberration. The mirror used is a hyperbolic mirror. This type was adopted because, among the various omnidirectional imaging techniques, it offers the best visibility for measurement and suits the conditions of our method. The proposed method measures distance from the vertical disparity between two such sensors mounted one above the other. This solves the problem of conventional methods, in which complete omnidirectional measurement is impossible because the other camera appears in the image. The aim of this research is a sensor for obstacle detection in automatic guidance that can be used in the same way as a conventional stereo camera. To demonstrate the usefulness of this method, we conducted a measurement experiment to verify the measurement accuracy and a depth image generation experiment to verify whether measurement in all directions is possible. In the measurement experiment, the results were compared with those of Tanaka's method [10], which was selected because it had the best measurement accuracy among previous studies performed under similar conditions. Whereas the conventional method had a relative error of up to 40%, the proposed method reduced the maximum error to 2.6%, and its relative error was lower than that of the conventional method at every measured distance.

2. Materials and Methods

2.1. Proposed System Configuration

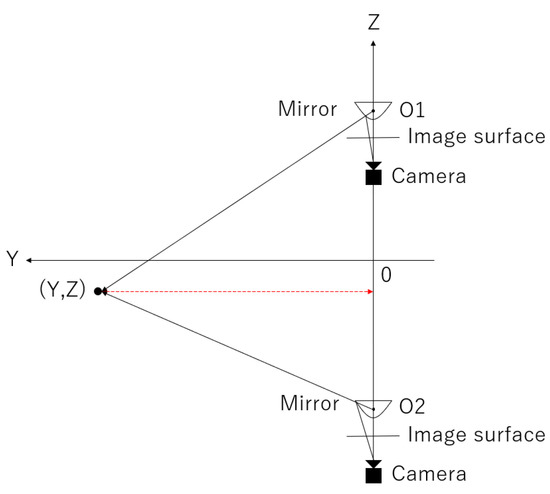

A conceptual diagram of the proposed method is shown in Figure 2. The proposed system consists of two convex mirrors and two cameras. Each camera faces vertically upward, with a convex mirror attached above its lens. By capturing the image reflected in the convex mirror from directly below, an omnidirectional image can be captured in one shot. This device is henceforth referred to as the omnidirectional visual sensor. A second sensor of the same type is stacked vertically with the first. By combining the images captured by these two omnidirectional visual sensors, distance measurement and depth image generation in all directions become possible.

Figure 2.

Conceptual diagram of the proposed system.

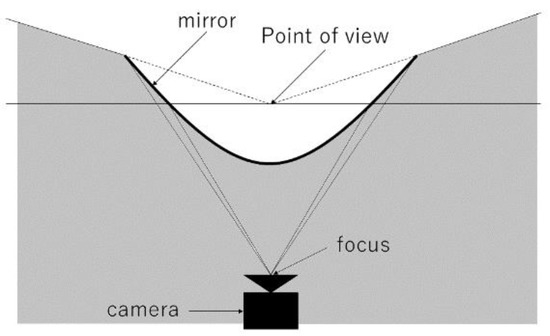

2.2. Omnidirectional Visual Sensor

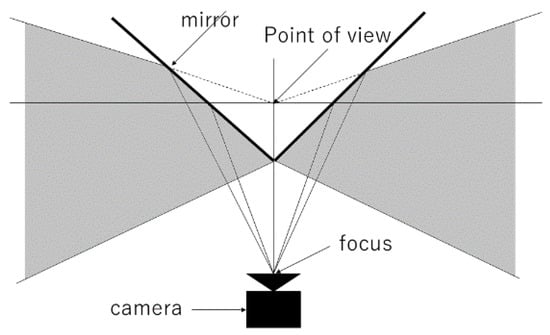

The omnidirectional visual sensor is described below. As shown in Figure 2, it consists of a convex mirror and a camera facing vertically upward, and it can capture all directions in a single shot. Three candidate mirror shapes were considered: conical, spherical, and hyperbolic. As shown in Figure 3, a conical mirror provides high lateral resolution, but light rays reflected from below do not enter the lens, making it difficult to capture the area at the foot of the sensor.

Figure 3.

Conical mirror.

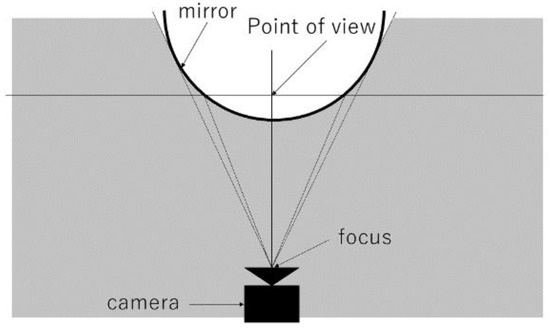

As shown in Figure 4, a spherical mirror captures the area at the foot of the sensor with good resolution. However, the reflection of the camera itself occupies a large part of the image, and because the scene area imaged per unit image area grows toward the outer edge of the mirror, distant and lateral objects appear compressed.

Figure 4.

Spherical mirror.

In contrast, as shown in Figure 5, a hyperbolic mirror provides an upper field of view with the same high resolution as a conical mirror, while also covering the lower field of view that a conical mirror cannot capture. The hyperbolic mirror thus combines the advantages of the conical and spherical mirrors: its field of view is centered on the sides, and it also provides a view of the area at the foot of the sensor. For these reasons, hyperbolic mirrors are used in the present system.

Figure 5.

Conceptual diagram of the omnidirectional visual sensor.

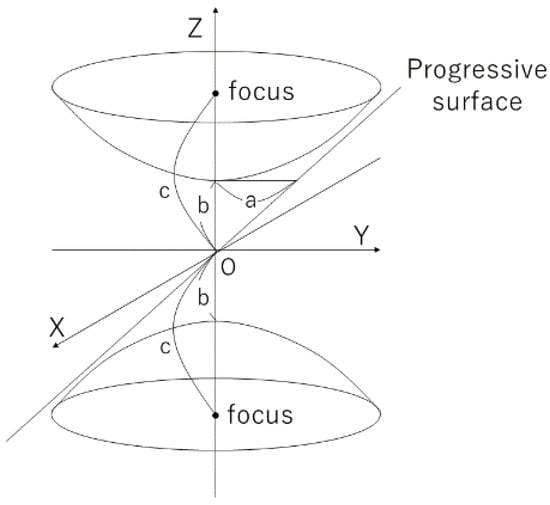

2.3. Panoramic Expansion

Because a hyperbolic mirror is used, the captured image is distorted. In addition, because the measurement relies on vertical epipolar lines, this method expands the omnidirectional image into a panoramic image. First, the optics of the hyperbolic mirror are described. The mirror surface in this method is a two-sheeted hyperboloid. As shown in Figure 6, a two-sheeted hyperboloid is the surface obtained by rotating a hyperbola around its axis (the Z-axis), and it retains the property of the hyperbola of having two foci, $(0, 0, c)$ and $(0, 0, -c)$, where $c = \sqrt{a^2 + b^2}$. As shown in Figure 5, consider three-dimensional coordinates O-XYZ with the Z-axis as the vertical axis. The two-sheeted hyperboloid can then be expressed by the following equation:

$$\frac{X^2 + Y^2}{a^2} - \frac{Z^2}{b^2} = -1$$

Figure 6.

Two-sheeted hyperboloid.

Here, $a$ and $b$ are constants that define the shape of the hyperbola. Of the two sheets, the one in the region $Z > 0$ is used as the mirror.
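The focal property of the hyperboloid, on which the single-viewpoint projection relies, can be checked numerically. The minimal sketch below assumes the mirror surface (X² + Y²)/a² − Z²/b² = −1 (Z > 0) with foci (0, 0, ±c), c = √(a² + b²), and uses illustrative constants a = 0.03 and b = 0.04, which are not the parameters of our mirror. It traces a ray from a scene point toward the inner focus (0, 0, c), reflects it at the mirror using the surface normal, and checks that the reflected ray points at the outer focus (0, 0, −c). The camera's center of projection is assumed to lie at that outer focus, as in the configuration of [12]. The helper name `reflect_toward_outer_focus` is hypothetical.

```python
import numpy as np

def reflect_toward_outer_focus(p_world, a, b):
    """Reflect the ray travelling from p_world toward the inner focus (0, 0, c)
    at the upper sheet of (X^2 + Y^2)/a^2 - Z^2/b^2 = -1 (hypothetical helper).
    Returns the mirror point and the unit direction of the reflected ray."""
    c = np.sqrt(a**2 + b**2)
    focus_in = np.array([0.0, 0.0, c])
    u = (p_world - focus_in) / np.linalg.norm(p_world - focus_in)
    if b - c * u[2] <= 0:
        raise ValueError("ray is steeper than the mirror asymptote and misses the mirror")
    lam = a**2 / (b - c * u[2])  # closed-form distance from the focus to the mirror along u
    m = focus_in + lam * u
    # Unit surface normal from the gradient of F = (X^2 + Y^2)/a^2 - Z^2/b^2 + 1
    n = np.array([2 * m[0] / a**2, 2 * m[1] / a**2, -2 * m[2] / b**2])
    n /= np.linalg.norm(n)
    d_in = -u  # incoming direction: from the scene point toward the inner focus
    d_out = d_in - 2.0 * np.dot(d_in, n) * n  # law of reflection
    return m, d_out

a, b = 0.03, 0.04  # illustrative mirror constants, not those of our mirror
c = np.sqrt(a**2 + b**2)
for p in ([1.0, 0.0, 0.05], [0.5, -0.8, -0.3], [-2.0, 1.0, 0.4]):
    m, d_out = reflect_toward_outer_focus(np.array(p), a, b)
    to_outer = np.array([0.0, 0.0, -c]) - m
    print(np.allclose(d_out, to_outer / np.linalg.norm(to_outer)))  # expected: True
```

The closed-form distance a²/(b − c·u_z) comes from substituting the ray into the mirror equation; rays steeper than the asymptote (u_z ≥ b/c) never meet the upper sheet, which is why the function rejects them.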

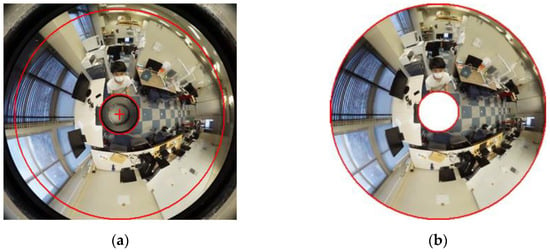

Next, the method of expanding an omnidirectional image into a panoramic image is explained using an actual photograph as an example. Figure 7 shows a captured omnidirectional image.

Figure 7.

Omnidirectional image.

First, to extract only the area used for measurement, the captured omnidirectional image is cropped to the region outlined in red, as shown in Figure 8a,b. The cropped area is determined by the imaging range of the camera used for the measurement.

Figure 8.

Crop image: (a) before cropping; (b) after cropping.

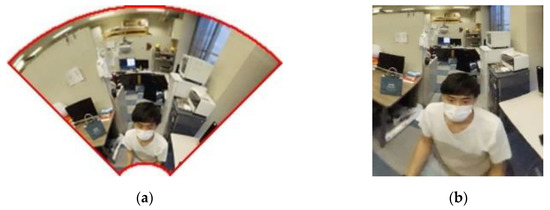

Next, the image is divided into four equal parts as shown in Figure 9.

Figure 9.

Dividing the image into quadrants.

Next, a perspective projection transformation is applied to each quadrant. For an omnidirectional image taken with a hyperbolic mirror, this expansion can be computed with the following equations [12]:

$$x = \frac{X f (b^2 - c^2)}{(b^2 + c^2)(Z - c) - 2bc\sqrt{X^2 + Y^2 + (Z - c)^2}} + c_x$$

$$y = \frac{Y f (b^2 - c^2)}{(b^2 + c^2)(Z - c) - 2bc\sqrt{X^2 + Y^2 + (Z - c)^2}} + c_y$$

where $X$, $Y$, and $Z$ are the coordinates of a point in three-dimensional space; $x$ and $y$ are the corresponding image coordinates; $c_x$ and $c_y$ are the coordinates of the image center; $a$, $b$, and $c$ are the mirror parameters of the hyperbolic surface ($c = \sqrt{a^2 + b^2}$); and $f$ is the focal length. Because the mirror parameters and the focal length are known, the expansion can be computed. The results of expanding one quadrant are shown in Figure 10a,b.
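A minimal sketch of the quadrant expansion follows. It assumes the hyperboloidal projection of Yamazawa et al. [12] in the form x = X f (b² − c²) / ((b² + c²)(Z − c) − 2bc√(X² + Y² + (Z − c)²)) + c_x, with y obtained the same way from Y and c_y, and it uses nearest-neighbour sampling. A virtual pinhole camera with a 90° horizontal field of view is placed at the mirror focus (0, 0, c) and pointed horizontally at azimuth `theta0`. Each of its pixels is projected into the omnidirectional image and sampled. The function names, the output size, and the virtual focal length are assumptions for illustration. Depending on the optics, the left-right orientation of the output may need to be flipped.

```python
import numpy as np

def world_to_omni(X, Y, Z, a, b, f, cx, cy):
    """Project points given in the mirror frame O-XYZ into the omnidirectional image
    (hyperboloidal projection in the form given by Yamazawa et al. [12])."""
    c = np.sqrt(a**2 + b**2)
    denom = (b**2 + c**2) * (Z - c) - 2.0 * b * c * np.sqrt(X**2 + Y**2 + (Z - c) ** 2)
    x = X * f * (b**2 - c**2) / denom + cx
    y = Y * f * (b**2 - c**2) / denom + cy
    return x, y

def expand_quadrant(omni, theta0, a, b, f, cx, cy, out_w=256, out_h=128):
    """Render a 90-degree perspective view looking horizontally at azimuth theta0
    (hypothetical helper; nearest-neighbour sampling, unmapped pixels left at 0)."""
    c = np.sqrt(a**2 + b**2)
    fp = out_w / 2.0  # virtual focal length giving a 90-degree horizontal field of view
    u, v = np.meshgrid(np.arange(out_w) - out_w / 2.0, np.arange(out_h) - out_h / 2.0)
    fwd = np.array([np.cos(theta0), np.sin(theta0), 0.0])
    right = np.array([-np.sin(theta0), np.cos(theta0), 0.0])
    up = np.array([0.0, 0.0, 1.0])
    # Ray directions of the virtual camera, whose centre is the mirror focus (0, 0, c)
    d = fp * fwd[:, None, None] + u * right[:, None, None] - v * up[:, None, None]
    x, y = world_to_omni(d[0], d[1], c + d[2], a, b, f, cx, cy)
    # Rays steeper than the mirror's asymptote (u_z >= b / c) do not hit the mirror
    hits_mirror = d[2] / np.linalg.norm(d, axis=0) < b / c
    xi, yi = np.rint(x).astype(int), np.rint(y).astype(int)
    h_img, w_img = omni.shape[:2]
    valid = hits_mirror & (xi >= 0) & (xi < w_img) & (yi >= 0) & (yi < h_img)
    out = np.zeros((out_h, out_w) + omni.shape[2:], dtype=omni.dtype)
    out[valid] = omni[yi[valid], xi[valid]]
    return out
```

Because the projection depends only on the direction of the ray through the focus, the virtual camera's ray directions can be passed in directly without choosing a scene depth. The four views could then be joined, for example with `np.hstack([expand_quadrant(omni, k * np.pi / 2, a, b, f, cx, cy) for k in range(4)])`, where the quadrant order depends on the azimuth convention.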

Figure 10.

Quadrant image: (a) before expansion; (b) after expansion.

Finally, as shown in Figure 11, the panoramic image is completed by concatenating the four expanded images.

Figure 11.

Panoramic image.

2.4. Vertical Disparity Stereo Matching

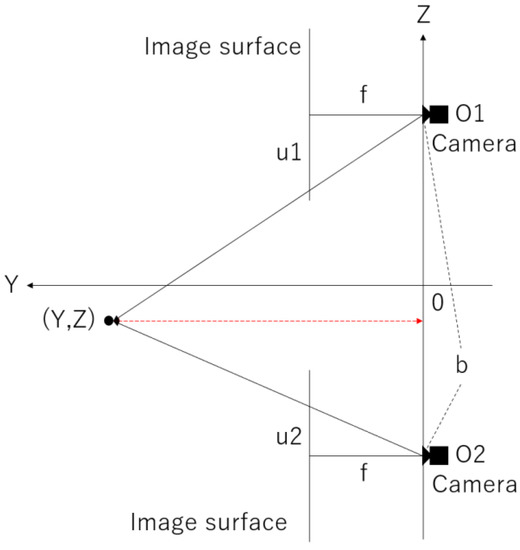

The principle of stereo matching using vertical disparity is explained below. Figure 12 shows a vertical cross-section of the configuration in Figure 2.

Figure 12.

Conceptual diagram of a vertical cross-section.

Let $f$ be the focal length of the upper and lower virtual panoramic cameras, $b$ the distance between them (the baseline), and $D$ the horizontal distance to the object $P$ being measured. Let $y_1$ and $y_2$ be the vertical offsets of the image of $P$ from the centers of the lower and upper panoramic images, respectively, both measured upward. From the similarity of the triangles, the ratio

$$\frac{y_1 - y_2}{f} = \frac{b}{D}$$

holds, and solving for $D$, we obtain

$$D = \frac{f\,b}{y_1 - y_2}$$

This allows us to find the distance to the object.
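The correspondence search and the distance computation can be sketched as follows. This minimal example assumes rectified, upright panoramas stored as 2D arrays whose row index increases downward. Under that convention, an object imaged at row r in the lower panorama appears at row r + d in the upper panorama, where d > 0 is the vertical disparity in pixels. The sketch finds d by sum-of-absolute-differences (SAD) block matching along the same column and converts it to distance with D = f b / d. The function names, window size, and search range are hypothetical. The baseline of 0.246 m is the value used in our experiments, while the focal length of 160 px is illustrative.

```python
import numpy as np

def vertical_disparity_sad(lower, upper, row, col, half=3, max_disp=32):
    """Vertical disparity (pixels) of lower[row, col], found by SAD block matching
    along the same column of the upper panorama (hypothetical helper)."""
    ref = lower[row - half: row + half + 1, col - half: col + half + 1].astype(np.float64)
    best_d, best_cost = 0, np.inf
    for d in range(max_disp + 1):
        r = row + d  # candidate row in the upper panorama
        if r + half + 1 > upper.shape[0]:
            break  # the window would leave the image
        cand = upper[r - half: r + half + 1, col - half: col + half + 1].astype(np.float64)
        cost = np.abs(ref - cand).sum()  # sum of absolute differences
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d

def distance_from_vertical_disparity(disp_px, f_px, baseline_m):
    """Horizontal distance D = f * b / d; returns inf when no disparity is found."""
    return f_px * baseline_m / disp_px if disp_px > 0 else float("inf")

# Synthetic check: the upper panorama is the lower one shifted down by 10 rows
rng = np.random.default_rng(0)
lower = rng.integers(0, 256, size=(200, 100))
upper = np.roll(lower, 10, axis=0)
d = vertical_disparity_sad(lower, upper, row=80, col=50)
print(f"d = {d} px, D = {distance_from_vertical_disparity(d, 160.0, 0.246):.2f} m")
```

On real panoramas, the sign of the shift depends on how the images were unwrapped, so the search direction may have to be reversed.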

3. Results

3.1. Purpose of the Experiment

Measurement experiments were conducted to verify the accuracy of this method. In addition, to verify the possibility of measuring in all directions, a depth image generation experiment of a panoramic image was conducted.

3.2. Measurement Experiment

3.2.1. Experimental Method

In this experiment, the procedure of the previous study [10] was repeated so that the results could be compared. The target was photographed at 1.0 m intervals over distances of 1.0 m to 5.0 m from the omnidirectional stereo camera. The distance was measured 20 times at each position, and the mean distance between the camera and the object was used as the experimental result. Accuracy was evaluated by the relative error, calculated as

$$\mathrm{RE} = \frac{|\mathrm{MV} - \mathrm{AV}|}{\mathrm{AV}} \times 100\ (\%)$$

where RE is the relative error, AV is the actual value, and MV is the measured value.
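As a small worked example of the evaluation, the relative error of the mean of repeated measurements can be computed as below, assuming RE = |MV − AV| / AV × 100 with MV taken as the mean of the repeated readings. The function name and the two readings are hypothetical and are not data from our experiment.

```python
from statistics import fmean

def relative_error_percent(actual_m, measurements_m):
    """Relative error (%) of the mean of repeated distance measurements."""
    measured = fmean(measurements_m)  # MV: mean of the repeated measurements
    return abs(measured - actual_m) / actual_m * 100.0  # RE = |MV - AV| / AV * 100

# Hypothetical readings for a target placed at AV = 2.00 m
readings = [2.04, 2.06]
print(f"RE = {relative_error_percent(2.00, readings):.2f} %")  # prints RE = 2.50 %
```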

Measurements were taken at five points (1.00 m, 2.00 m, 3.00 m, 4.00 m, and 5.00 m), with distances measured from the point directly beneath the device (0.00 m), and the results were recorded.

The measurement range was determined from the maximum speed and the stopping distance of Toyota Motor Corporation's fully automated vehicle, the e-Palette [3]. The stopping distance was calculated as the sum of the free-running distance, traveled during the program's processing time, and the e-Palette's braking distance.

The free-running distance is

$$L_f = v\,t$$

and the braking distance is given by

$$L_b = \frac{v^2}{2 \mu g}$$

where $v$ is the vehicle speed, $t$ the processing time, $\mu$ the coefficient of friction, and $g$ the gravitational acceleration. A coefficient of friction of 0.5 is assumed to account for bad weather conditions.

Since the maximum speed of the e-Palette is 5.28 m/s and the processing time of the current program is approximately 0.033 s, the free-running distance is

$$L_f = 5.28 \times 0.033 \approx 0.174\ \mathrm{m}$$

Since the maximum speed of the e-Palette is 19 km/h and the coefficient of friction of a wet road is 0.5, the braking distance is

$$L_b = \frac{(19/3.6)^2}{2 \times 0.5 \times 9.81} \approx 2.84\ \mathrm{m}$$

Therefore, the total stopping distance, including the free-running and braking distances, is approximately 3.01 m. Allowing a margin for the size of the vehicle itself, the measurement accuracy was evaluated at distances up to 5 m.
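The stopping-distance estimate can be reproduced with a few lines of code. This sketch assumes the free-running distance v·t and the braking distance v²/(2μg), with g = 9.81 m/s² as an assumed value. The function name is hypothetical.

```python
G = 9.81  # gravitational acceleration in m/s^2 (assumed value)

def stopping_distance(v_kmh, t_proc_s, mu, g=G):
    """Return (free-running, braking, total) stopping distances in metres."""
    v = v_kmh / 3.6                    # km/h -> m/s
    free_run = v * t_proc_s            # distance travelled during processing
    braking = v ** 2 / (2.0 * mu * g)  # braking distance on a surface with friction mu
    return free_run, braking, free_run + braking

# e-Palette: 19 km/h, 0.033 s processing time, mu = 0.5 (wet road)
free_run, braking, total = stopping_distance(19.0, 0.033, 0.5)
print(f"{free_run:.3f} m + {braking:.2f} m = {total:.2f} m")  # prints 0.174 m + 2.84 m = 3.01 m
```

Because the braking term grows with the square of the speed, a faster platform would need a proportionally larger measurement range, which is one reason the range is tied to the vehicle here.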

Details of the equipment used in the experiment are shown in Table 1.

Table 1.

Details of the equipment used in the experiment.

The distance between cameras (baseline) b was set to 24.6 cm.

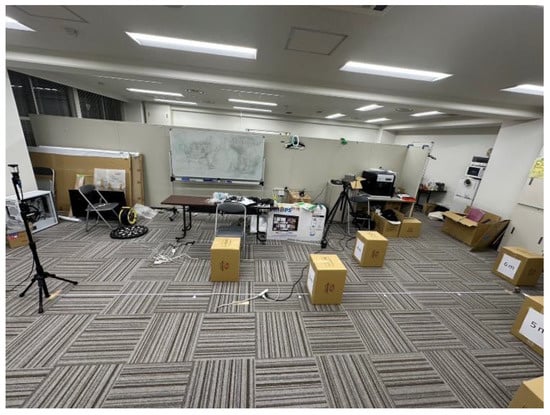

The experimental environment is shown in Figure 13.

Figure 13.

The experimental environment.

Measurements were taken at five points at distances of 1.00 m, 2.00 m, 3.00 m, 4.00 m, and 5.00 m from the camera. The measurement target was the cardboard shown in Figure 14. The ground-truth distance was measured with a tape measure from the point directly beneath the camera.

Figure 14.

Cardboard used as the measurement target.

3.2.2. Experimental Results

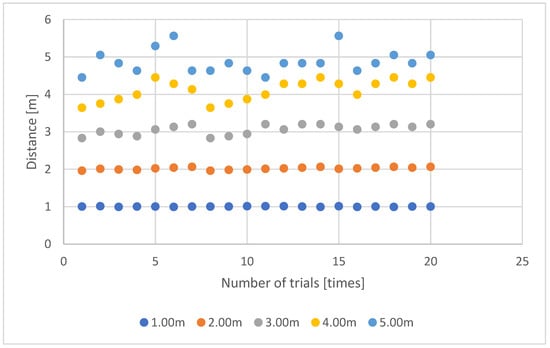

The proposed method’s results are graphically depicted in Figure 15. Meanwhile, Table 2 summarizes the outcomes of the proposed method and the conventional method.

Figure 15.

A graphical representation of the results.

Table 2.

Experimental results of the proposed method and conventional method.

3.2.3. Consideration

To validate the accuracy, the results were compared with those of the previous study [10]. Whereas the conventional method had a relative error of up to 40%, the proposed method reduced the maximum error to 2.6%. In addition, the relative error of the proposed method was lower than that of the conventional method at every measured distance.

However, as shown in Figure 15, the stability of the measurements decreases as the distance from the camera increases. This follows from the characteristics of stereo measurement. Accuracy is determined by the real-world length corresponding to one pixel in the image, which depends on the lens, camera resolution, shooting distance, and baseline between the cameras. Because each pixel on the image sensor has a finite size, a corresponding point can only be localized within a certain region of real space, and this causes measurement errors that are typically largest in the depth direction. Lengthening the baseline reduces the depth error, but it can increase errors at short distances or even make measurement impossible there. Stereo measurement systems therefore require a baseline suited to the conditions under which they will be used, and this issue can be mitigated by choosing the baseline according to the platform on which the device is mounted.
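The growth of the depth error with distance can be made concrete with the standard first-order relation for stereo, obtained by differentiating D = f b / d. A disparity error of Δd pixels gives a distance error of about ΔD ≈ D² Δd / (f b). The sketch below assumes a hypothetical focal length of 300 px, which is not the value for our cameras, together with the 0.246 m baseline used in the experiments. The function name is illustrative.

```python
def depth_error(distance_m, f_px, baseline_m, disparity_err_px=1.0):
    """First-order distance error caused by a disparity error, from D = f * b / d."""
    return distance_m ** 2 * disparity_err_px / (f_px * baseline_m)

F_PX = 300.0        # hypothetical focal length of the panoramic images in pixels
BASELINE_M = 0.246  # baseline used in the experiments

for d_m in (1.0, 2.0, 3.0, 4.0, 5.0):
    print(f"{d_m:.0f} m: +/-{depth_error(d_m, F_PX, BASELINE_M):.3f} m per pixel of disparity error "
          f"(doubled baseline: +/-{depth_error(d_m, F_PX, 2 * BASELINE_M):.3f} m)")
```

The error grows with the square of the distance and falls in inverse proportion to the baseline. This matches the decreasing stability in Figure 15 and the baseline trade-off discussed above.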

These results demonstrate the effectiveness of this study in terms of accuracy.

3.3. Depth Image Generation Experiment

3.3.1. Experimental Method

Having verified the accuracy in Section 3.2, we next examined whether omnidirectional measurement is possible by generating a depth image from the panoramic images. Depth images were generated with semi-global matching (SGM) [13].
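Common SGM implementations search for horizontal disparities, so a compact NumPy sketch of semi-global matching along vertical epipolar lines may help illustrate the idea. This is a simplified variant of [13], not the implementation used in our experiments. It uses the absolute intensity difference as the matching cost instead of mutual information, and it aggregates along only four paths instead of eight or sixteen. It assumes upright panoramas whose rows increase downward, so that a lower-panorama pixel at row r matches the upper-panorama pixel at row r + d. The penalties P1 and P2 and the function names are illustrative assumptions.

```python
import numpy as np

def aggregate_path(cost, p1, p2, axis, reverse):
    """Aggregate an (H, W, D) cost volume along one scan direction (SGM recurrence)."""
    c = np.moveaxis(cost, axis, 0)  # scan axis first: (N, M, D)
    if reverse:
        c = c[::-1]
    agg = np.empty_like(c)
    agg[0] = c[0]
    for i in range(1, c.shape[0]):
        prev = agg[i - 1]                                # (M, D)
        prev_min = prev.min(axis=1, keepdims=True)
        from_lower = np.full_like(prev, np.inf)
        from_lower[:, 1:] = prev[:, :-1] + p1            # neighbour disparity d - 1
        from_upper = np.full_like(prev, np.inf)
        from_upper[:, :-1] = prev[:, 1:] + p1            # neighbour disparity d + 1
        best = np.minimum(np.minimum(prev, from_lower),
                          np.minimum(from_upper, prev_min + p2))
        agg[i] = c[i] + best - prev_min                  # subtracting keeps values bounded
    if reverse:
        agg = agg[::-1]
    return np.moveaxis(agg, 0, axis)

def sgm_vertical(lower, upper, max_disp=16, p1=8.0, p2=32.0, invalid_cost=1e4):
    """Winner-take-all vertical disparity map of the lower panorama (simplified SGM)."""
    h, w = lower.shape
    lo = lower.astype(np.float64)
    up = upper.astype(np.float64)
    cost = np.full((h, w, max_disp + 1), invalid_cost)  # out-of-image matches stay expensive
    for d in range(max_disp + 1):
        # lower pixel (r, col) is compared with upper pixel (r + d, col)
        cost[: h - d, :, d] = np.abs(lo[: h - d] - up[d:])
    total = sum(aggregate_path(cost, p1, p2, axis, rev)
                for axis in (0, 1) for rev in (False, True))
    return np.argmin(total, axis=2)
```

A depth image would then follow from D = f b / d for pixels with d > 0. Alternatively, a horizontal-disparity matcher such as OpenCV's StereoSGBM could be applied after rotating both panoramas by 90° so that the vertical epipolar lines become horizontal.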

3.3.2. Experimental Results

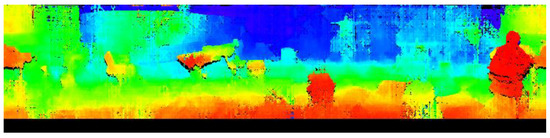

Figure 16.

Original image.

Figure 17.

Depth image.

For visual clarity, depth is encoded as hue. Pixels with no detected depth are shown in black.
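One possible way to produce such a visualization is sketched below. The mapping (near = red, far = blue, saturating at an assumed maximum depth of 5 m) and the function name are illustrative assumptions and are not necessarily the color scale used in Figure 17. Pixels without a valid depth are left black.

```python
import colorsys

import numpy as np

def depth_to_hue_image(depth_m, max_depth_m=5.0):
    """Map depth to hue (near = red, far = blue); NaN or non-positive depth -> black."""
    h, w = depth_m.shape
    rgb = np.zeros((h, w, 3), dtype=np.uint8)
    for (i, j), z in np.ndenumerate(depth_m):
        if not np.isfinite(z) or z <= 0:
            continue  # undetected pixel stays black
        t = min(z / max_depth_m, 1.0)
        r, g, b = colorsys.hsv_to_rgb(t * 2.0 / 3.0, 1.0, 1.0)  # hue 0 (red) .. 2/3 (blue)
        rgb[i, j] = (round(r * 255), round(g * 255), round(b * 255))
    return rgb
```

The per-pixel loop keeps the sketch short. For full-resolution panoramas, a vectorized HSV conversion or a precomputed color lookup table would be faster.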

3.3.3. Consideration

The experiments showed that the proposed method can output an omnidirectional depth image from which distances in all directions can be read. The system detected obstacles such as people, desks, chairs, boxes, and walls, suggesting that it can be applied to autonomous driving. However, because the method relies on block matching, which requires image features, false detections were observed for featureless objects and areas and for areas where the same features repeat. Despite this limitation, the primary goal of this project is obstacle detection, and textured objects can be detected effectively. The system can therefore be used for obstacle detection in automatic guidance in the same way as the conventional stereo method. In contrast, conventional methods cannot measure in every direction because the other camera appears in the image.

4. Discussion

We conducted two experiments to examine the issues and potential solutions of the proposed system. In the measurement experiment (Section 3.2), the proposed system reduced the maximum relative error by up to 37.4 percentage points compared with the conventional method. However, as noted in Section 3.2.3, measurement accuracy decreased as the distance between the camera and the object increased. To address this problem, we proposed adjusting the camera resolution, shooting distance, and baseline. By setting the baseline appropriately for the defined measurement range, stable measurements can be expected even in distant areas. However, a larger baseline requires a bigger device, which could reduce mobility or compromise the design. Alternatively, increasing the camera resolution could improve accuracy in the depth direction, but at a higher cost. We therefore concluded that the system should allow the baseline and camera parameters to be changed according to the machine's size, maximum speed, and the measurement distance required for autonomous driving. This would let the system adapt to different situations while maintaining accurate and stable measurements.

In the depth image generation experiment (Section 3.3), the proposed method generated an omnidirectional depth image that allows distances to be checked in all directions. The method also detected various obstacles, including people, desks, chairs, boxes, and walls, suggesting its potential for autonomous driving. However, false detections occurred in featureless and repetitive areas, a common limitation of block matching, which relies on image features. Because the main focus of this study was obstacle recognition, these false detections were not considered a significant issue, but further improvement is required where more precise detection is necessary. In this study, only image features were used for detection, and we believe that the detection rate can be improved by deep learning. Advances in image recognition, especially deep learning, have expanded research on depth estimation from images and videos, including stereo depth estimation with prior learning. In learning-based stereo depth estimation [14,15,16], models are trained on stereo images captured by left and right cameras together with ground-truth depth maps acquired by LiDAR or millimeter-wave radar. They are then applied to stereo images captured under similar conditions. Such training makes it possible to estimate depth in featureless areas and in areas where the same features appear repeatedly, which was previously difficult. In future work, the proposed method could therefore be improved by using such a model to enhance the accuracy of depth images.

5. Conclusions

In this study, we proposed an omnidirectional measurement method with no blind spots. It uses convex mirrors, which in principle do not cause chromatic aberration, and vertical disparity obtained by placing the cameras one above the other. Two experiments demonstrated the effectiveness of the method, which achieves a wider measurement range with fewer cameras while still allowing obstacle detection in the same way as the conventional stereo method.

Compared with the previous study [5], the measurement range is approximately twice as wide. Compared with the previous study [6], omnidirectional measurement is possible with half the number of cameras. Moreover, compared with the previous studies [7,8,9,10], the new system enables complete omnidirectional measurement without blind spots. Furthermore, the maximum relative error was reduced by 37.4 percentage points (from 40% to 2.6%) compared with the previous study [10], which had the best accuracy among the studies that performed measurement with two cameras. We consider this result a significant advance in stereo measurement.

Author Contributions

Conceptualization and manuscript preparation, Y.O.; manuscript review, S.K. and Y.Z.; project administration, A.K.; supervision, Y.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Nuro. Helping the Heroes during COVID-19. Available online: https://medium.com/nuro/helping-the-heroes-during-covid-19-49c189f216a2 (accessed on 19 February 2023).

- BellaBot. Pudu Robotics. Available online: https://www.pudurobotics.com/jp/product/detail/bellabot (accessed on 19 February 2023).

- TOYOTA. e-Palette. Available online: https://global.toyota/jp/newsroom/corporate/29933339.html (accessed on 19 February 2023).

- Scharstein, D.; Szeliski, R. A Taxonomy and Evaluation of Dense Two-Frame Stereo Correspondence Algorithms. Int. J. Comput. Vis. 2002, 47, 7–42.

- Iida, H.; Ji, Y.; Umeda, K.; Ohashi, A.; Fukuda, D.; Kaneko, S.; Murayama, J.; Uchida, Y. High-accuracy Range Image Generation by Fusing Binocular and Motion Stereo Using Fisheye Stereo Camera. In Proceedings of the 2020 IEEE/SICE International Symposium on System Integration (SII), Honolulu, HI, USA, 12–15 January 2020.

- Won, C.; Ryu, J.; Lim, J. End-to-End Learning for Omnidirectional Stereo Matching With Uncertainty Prior. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 3850–3862.

- Schönbein, M.; Kitt, B.; Lauer, M. Environmental Perception for Intelligent Vehicles Using Catadioptric Stereo Vision Systems. In Proceedings of the ECMR, Örebro, Sweden, 7–9 September 2011.

- Schönbein, M.; Geiger, A. Omnidirectional 3D Reconstruction in Augmented Manhattan Worlds. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014.

- Aoki, T.; Mengcheng, S.; Watanabe, H. Position Estimation and Distance Measurement from Omnidirectional Cameras. 80th Inf. Process. Soc. Jpn. 2018, 2018, 265–266.

- Tanaka, S.; Inoue, Y. Outdoor Human Detection with Stereo Omnidirectional Cameras. J. Robot. Mechatron. 2020, 32, 1193–1199.

- Aghayari, S.; Saadatseresht, M.; Omidalizarandi, M.; Neumann, I. Geometric calibration of full spherical panoramic Ricoh-Theta camera. In Proceedings of the ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences IV-1/W1 (2017), Hannover, Germany, 6–9 June 2017; Volume 4, pp. 237–245.

- Yamazawa, K.; Yagi, Y.; Yachida, M. Omnidirectional imaging with hyperboloidal projection. In Proceedings of the 1993 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS’93), Yokohama, Japan, 26–30 July 1993; Volume 2.

- Hirschmuller, H. Accurate and efficient stereo processing by semi-global matching and mutual information. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 2.

- Cheng, X.; Zhong, Y.; Harandi, M.; Dai, Y.; Chang, X.; Li, H.; Drummond, T.; Ge, Z. Hierarchical neural architecture search for deep stereo matching. Adv. Neural Inf. Process. Syst. 2020, 33, 22158–22169.

- Lipson, L.; Teed, Z.; Deng, J. RAFT-Stereo: Multilevel Recurrent Field Transforms for Stereo Matching. In Proceedings of the 2021 International Conference on 3D Vision (3DV), London, UK, 1–3 December 2021.

- Li, J.; Wang, P.; Xiong, P.; Cai, T.; Yan, Z.; Yang, L.; Liu, J.; Fan, H.; Liu, S. Practical stereo matching via cascaded recurrent network with adaptive correlation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).