Author Contributions

Conceptualization, E.G.G., I.A.H., M.U.H., O.-M.H.S., and S.L.; methodology, I.A.H. and S.L.; software, E.G.G., M.U.H., O.-M.H.S., and S.L.; validation, M.U.H. and I.A.H.; formal analysis, I.A.H. and M.U.H.; investigation, I.A.H.; resources, E.G.G., O.-M.H.S., and S.L.; data curation, E.G.G., I.A.H., M.U.H., O.-M.H.S., and S.L.; writing – original draft preparation, E.G.G., M.U.H., O.-M.H.S., and S.L.; writing – review and editing, M.U.H.; visualization, E.G.G., O.-M.H.S., and S.L.; supervision, I.A.H.; project administration, I.A.H.; funding acquisition, I.A.H. and M.U.H. All authors have read and agreed to the published version of the manuscript.

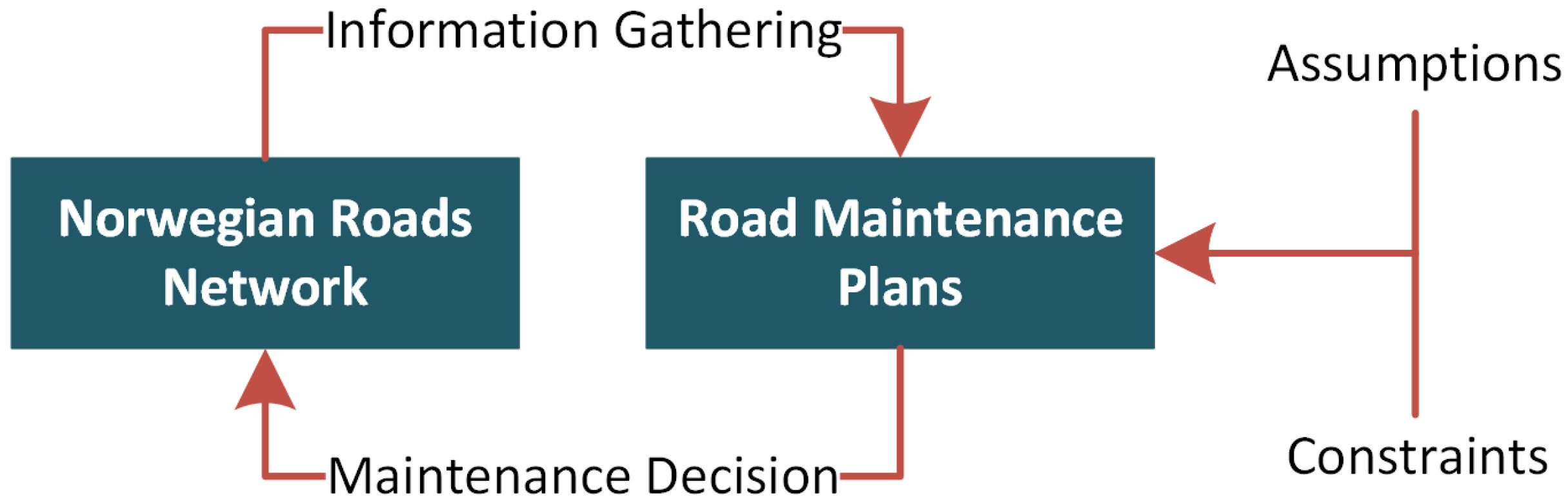

Figure 1.

Conceptual design of PdM planning for road infrastructure.

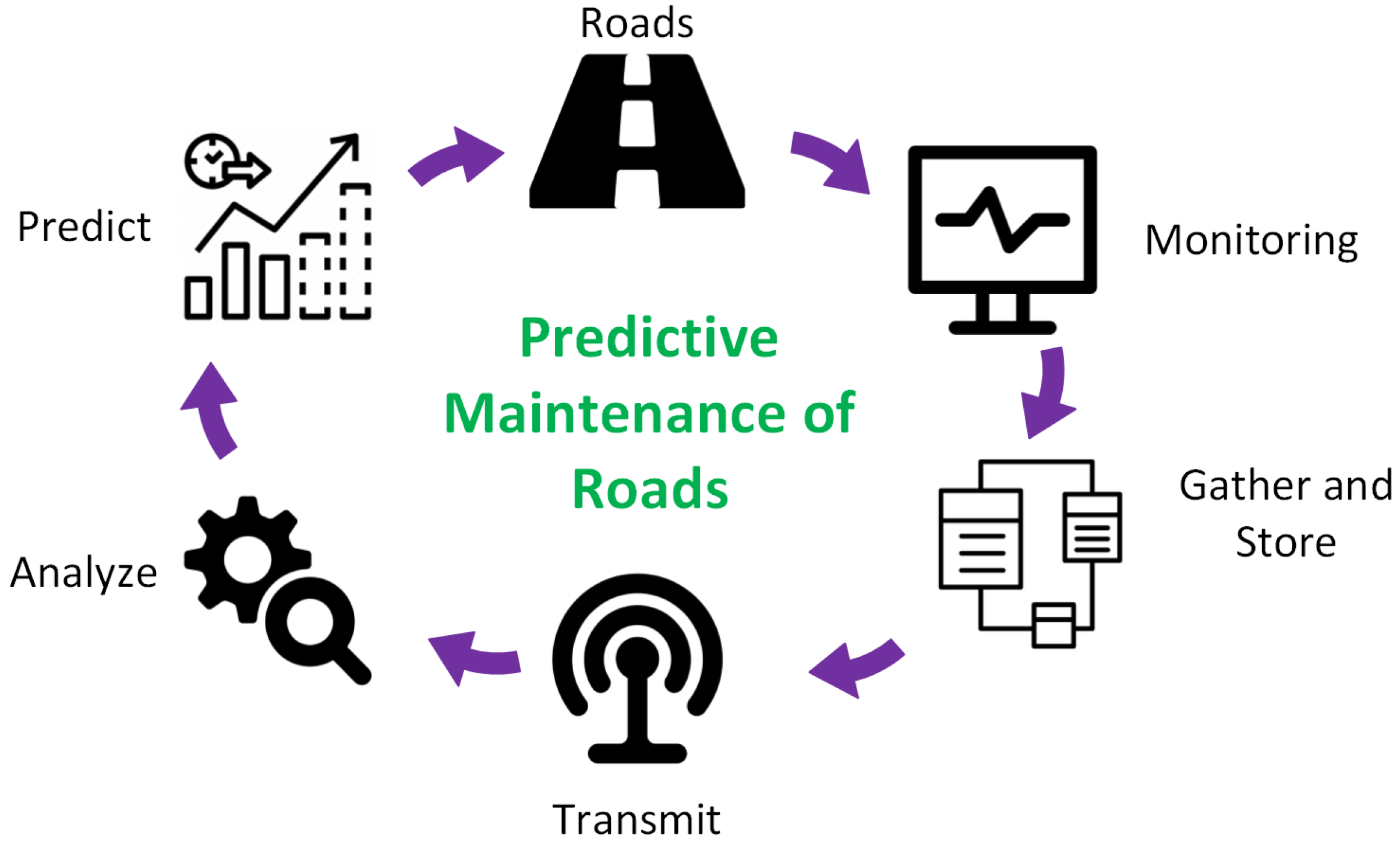

Figure 2.

PdM-based framework for the maintenance of road networks.

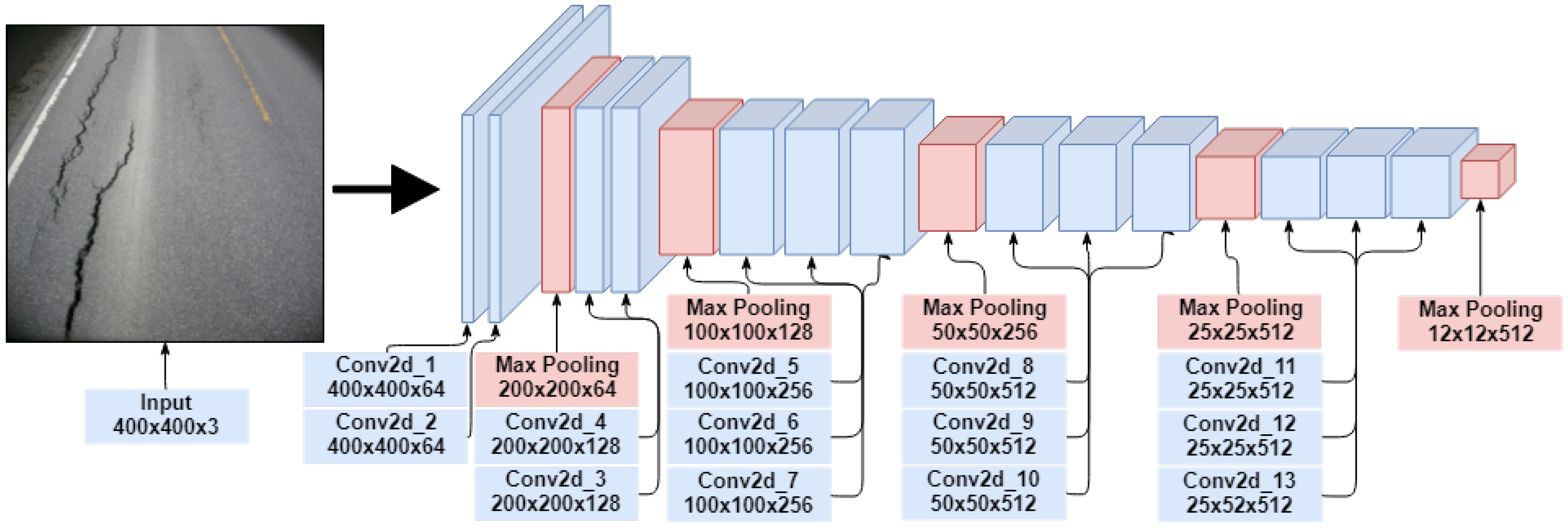

Figure 3.

An illustration of the convolution and max-pooling layers of the VGG-16 network used in the CNN.

Figure 4.

Process flow for PdM deployment.

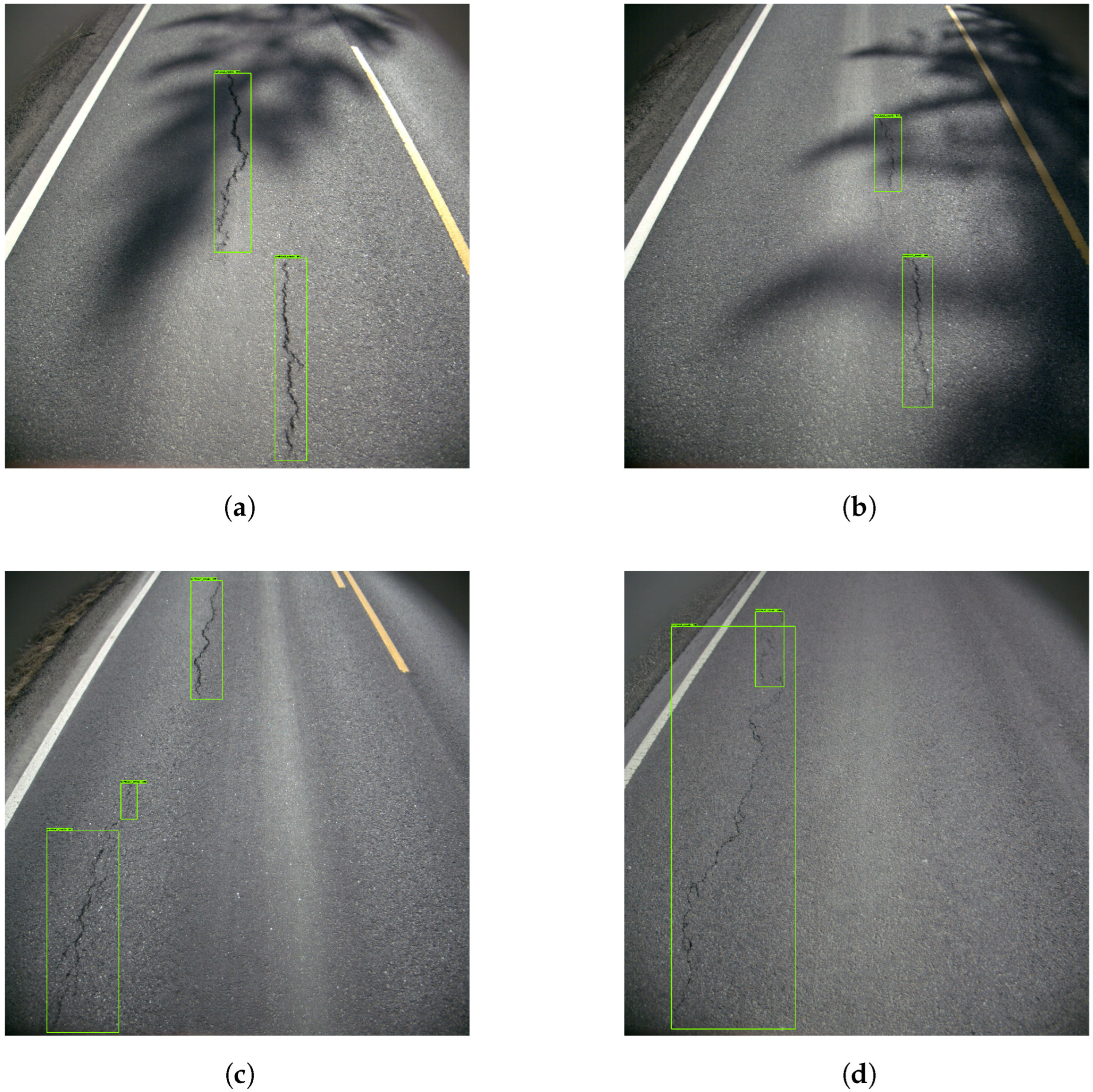

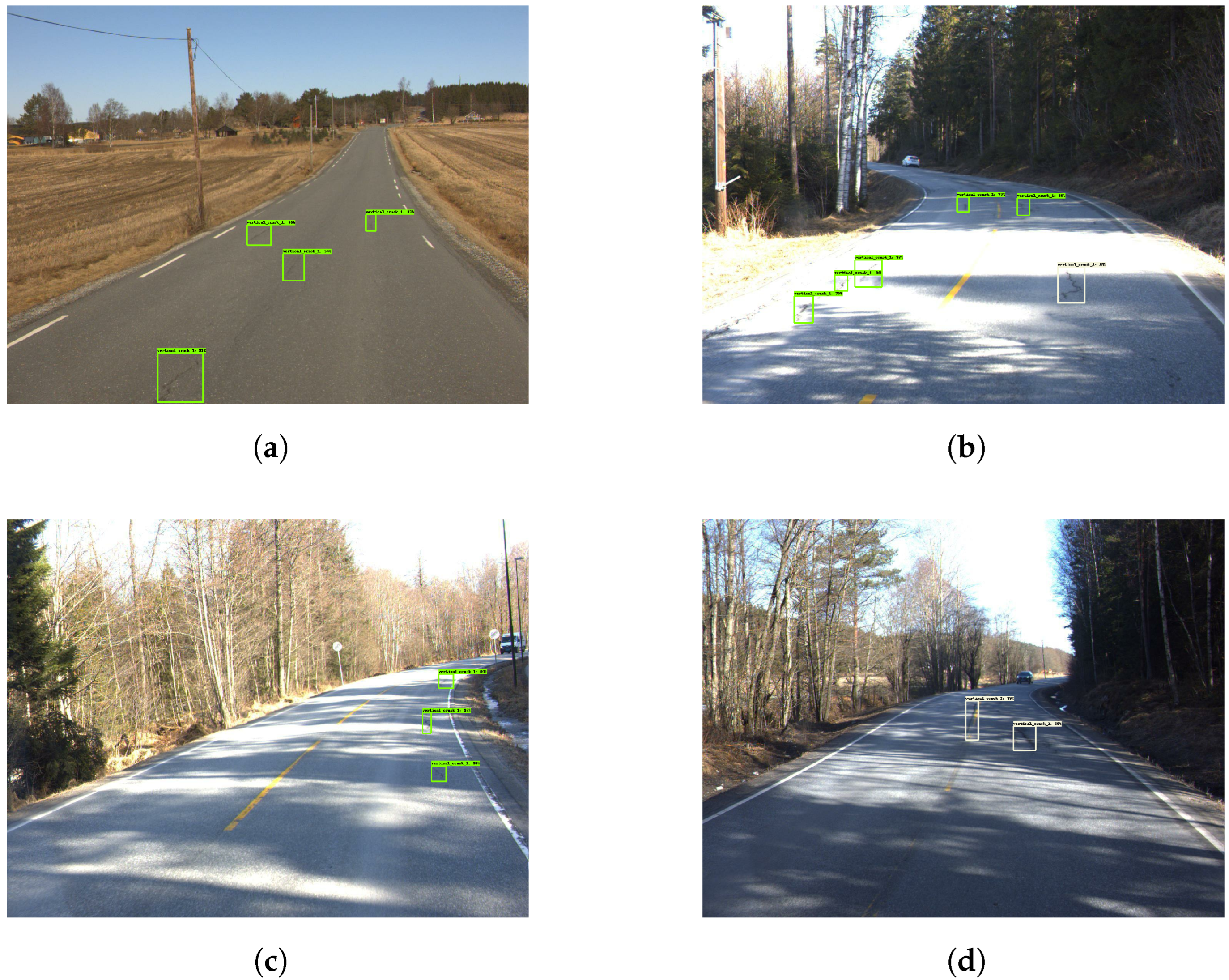

Figure 5.

Examples of poor vertical crack detection. The green boxes represent the vertical cracks.

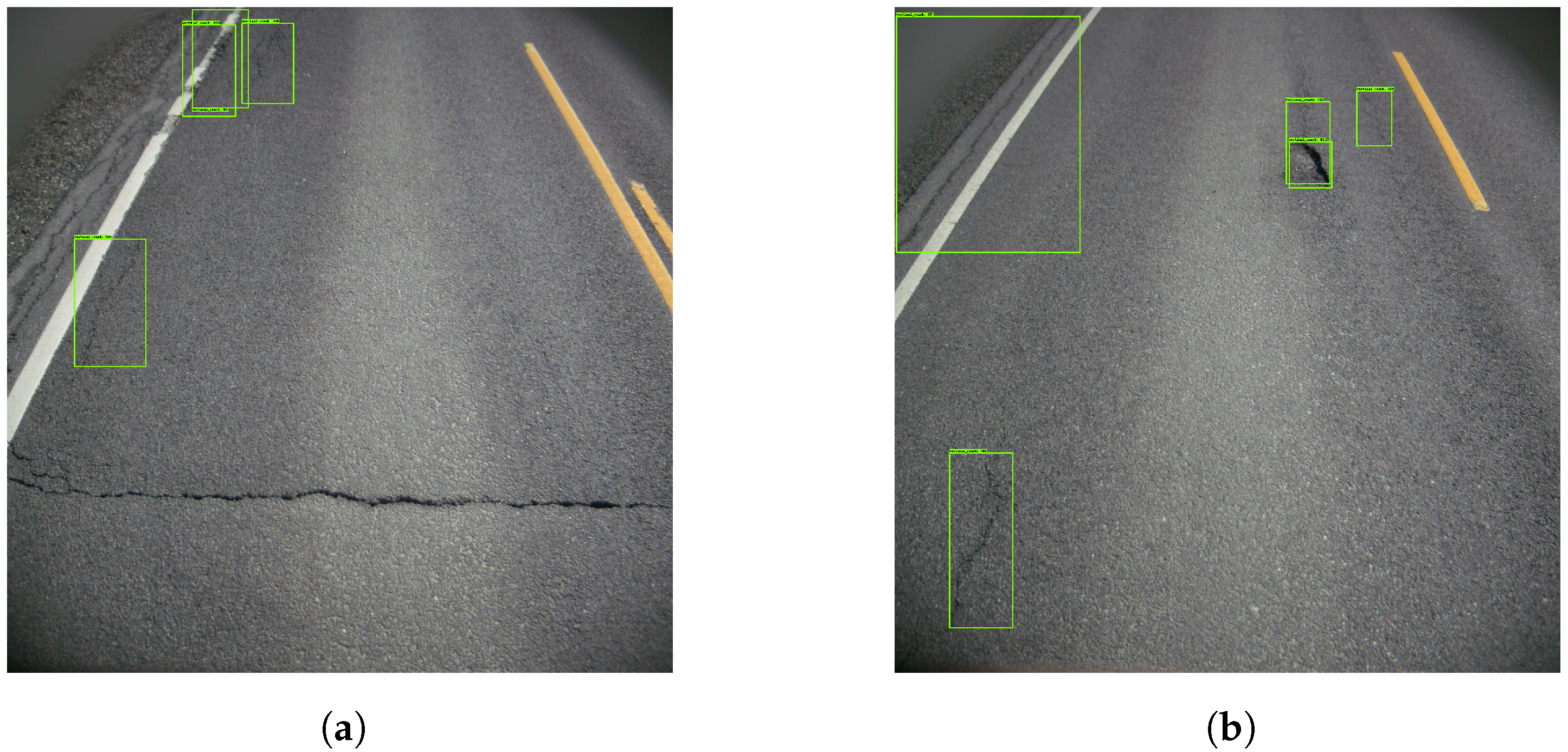

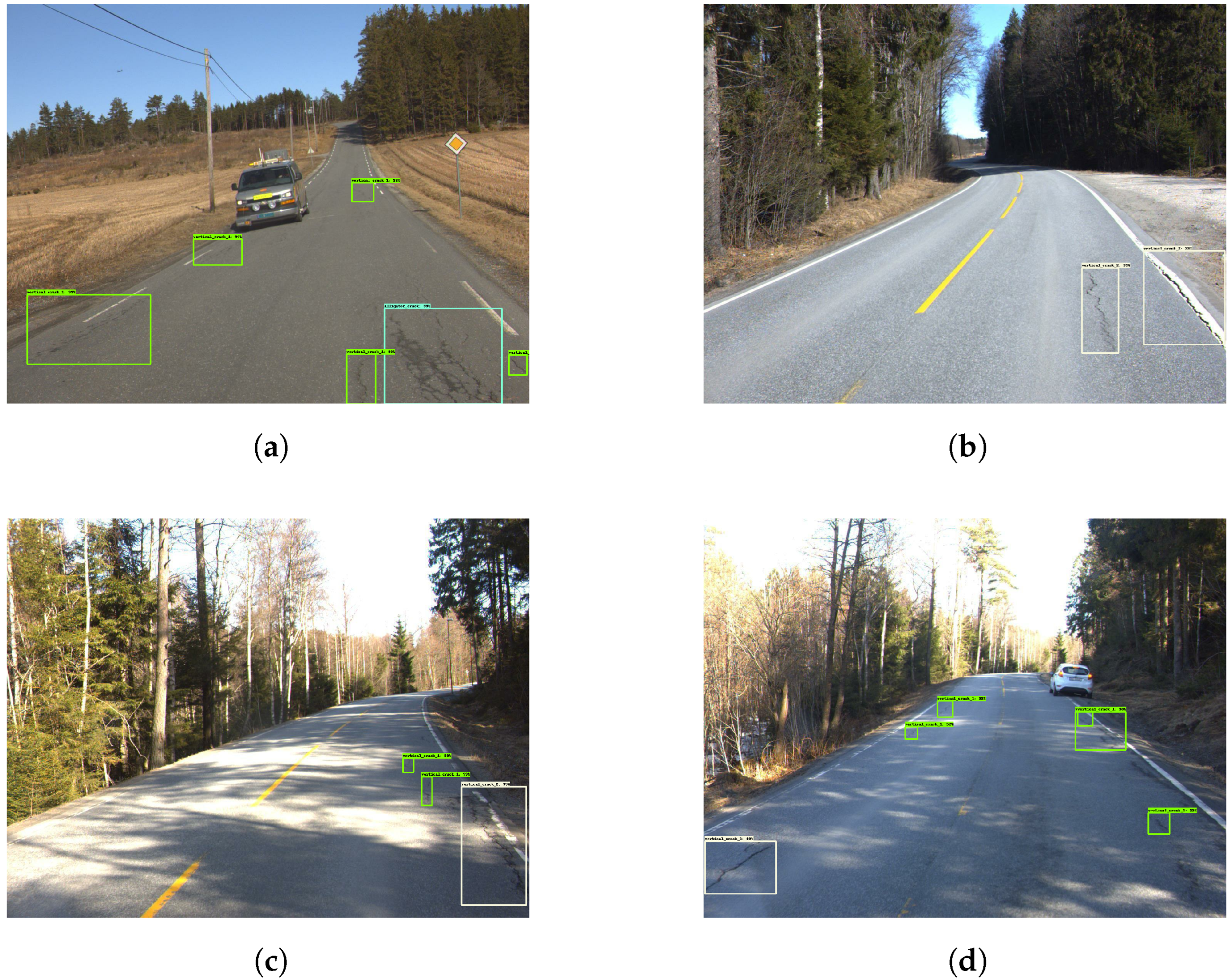

Figure 6.

Examples of missed cracks. The green boxes represent the vertical cracks.

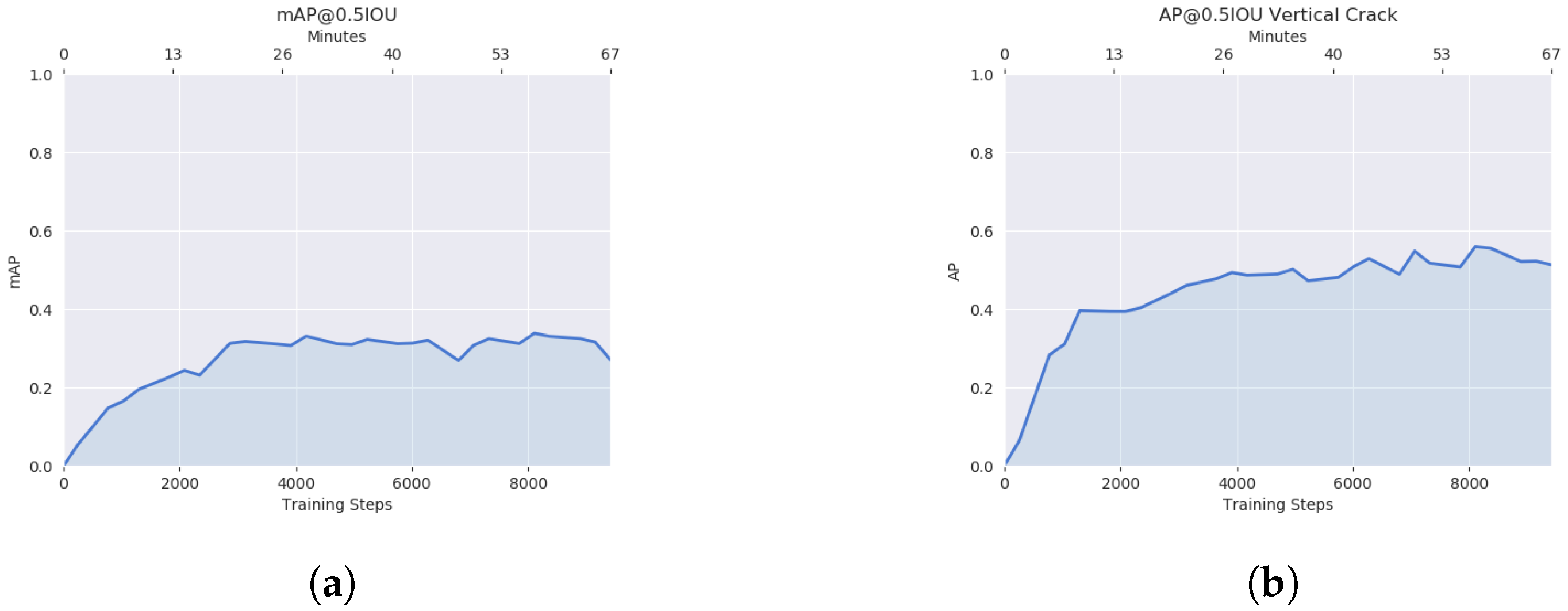

Figure 7.

mAP and AP of the ‘vertical crack’ class in Model 1. (a) Mean average precision. (b) Average precision of the ‘vertical crack’ class.

Figure 8.

Examples of good vertical crack detection. The green boxes represent the vertical cracks.

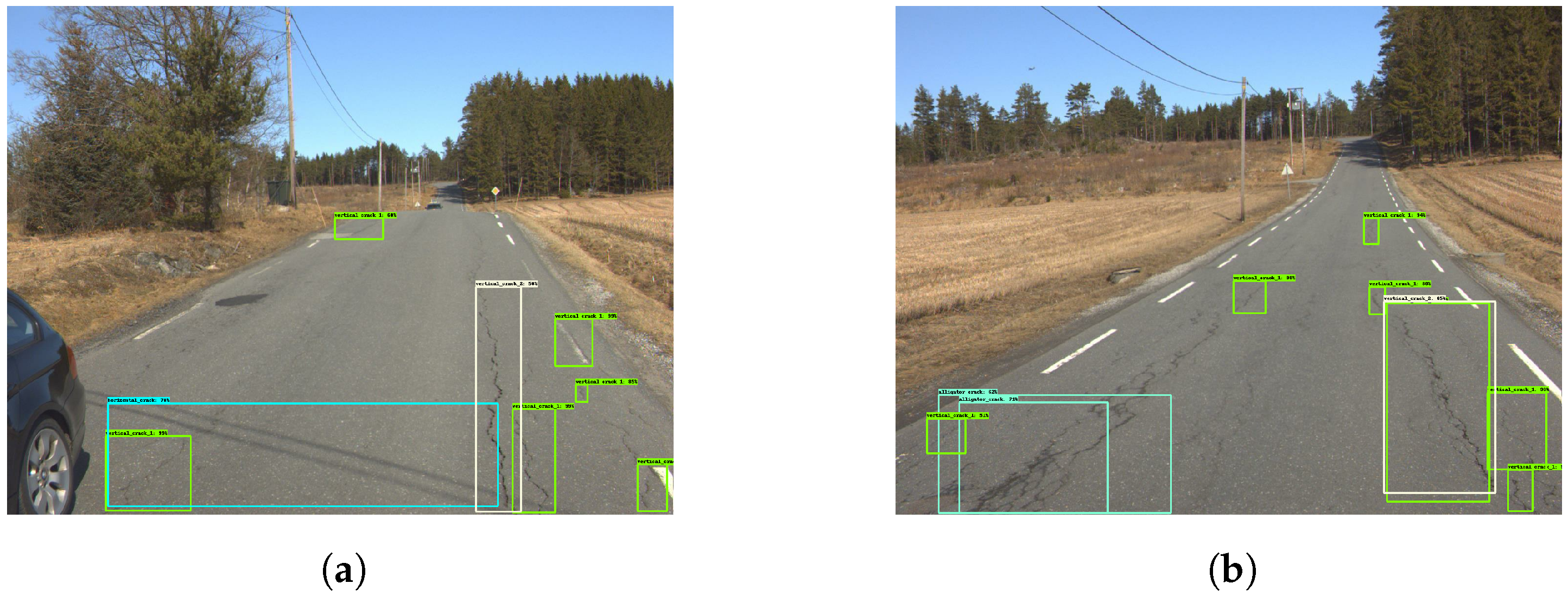

Figure 9.

Good damage detection. The green and yellow boxes represent the vertical cracks, while the cyan boxes represent the horizontal cracks.

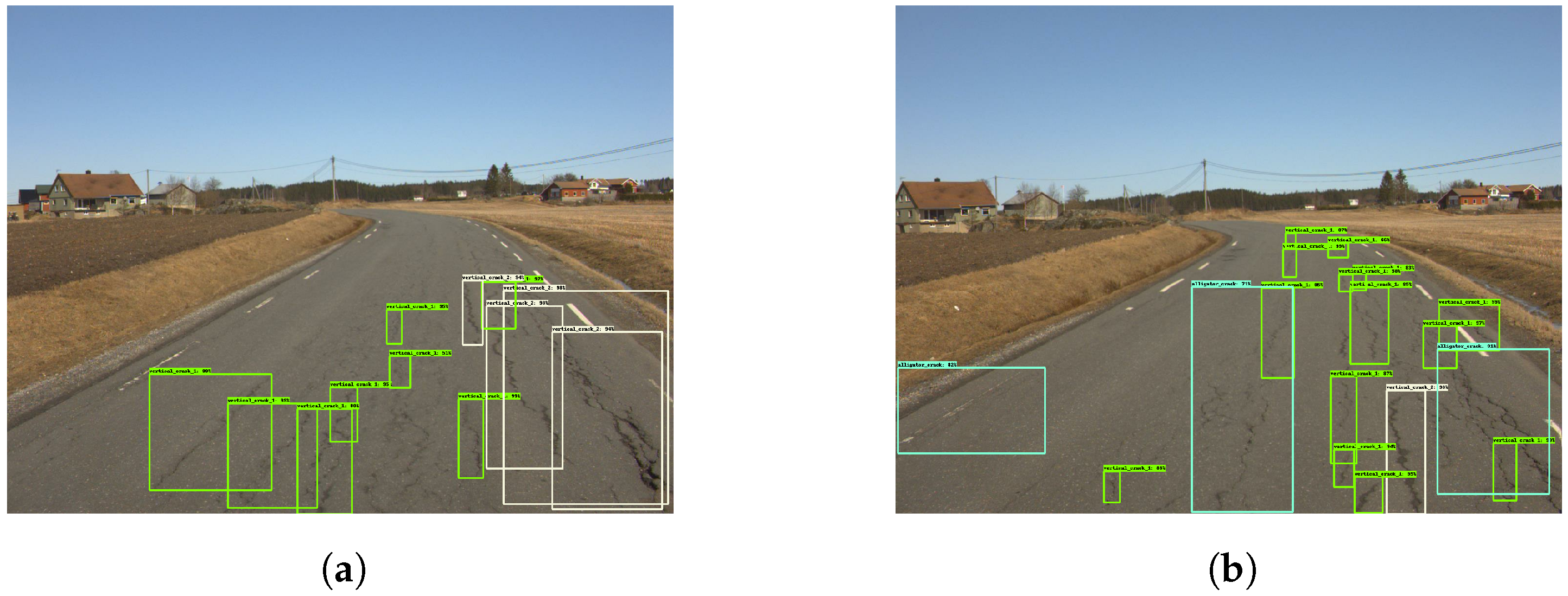

Figure 10.

Cluttered detection (Model 2). The green and yellow boxes represent the vertical cracks, while the cyan boxes represent the horizontal cracks.

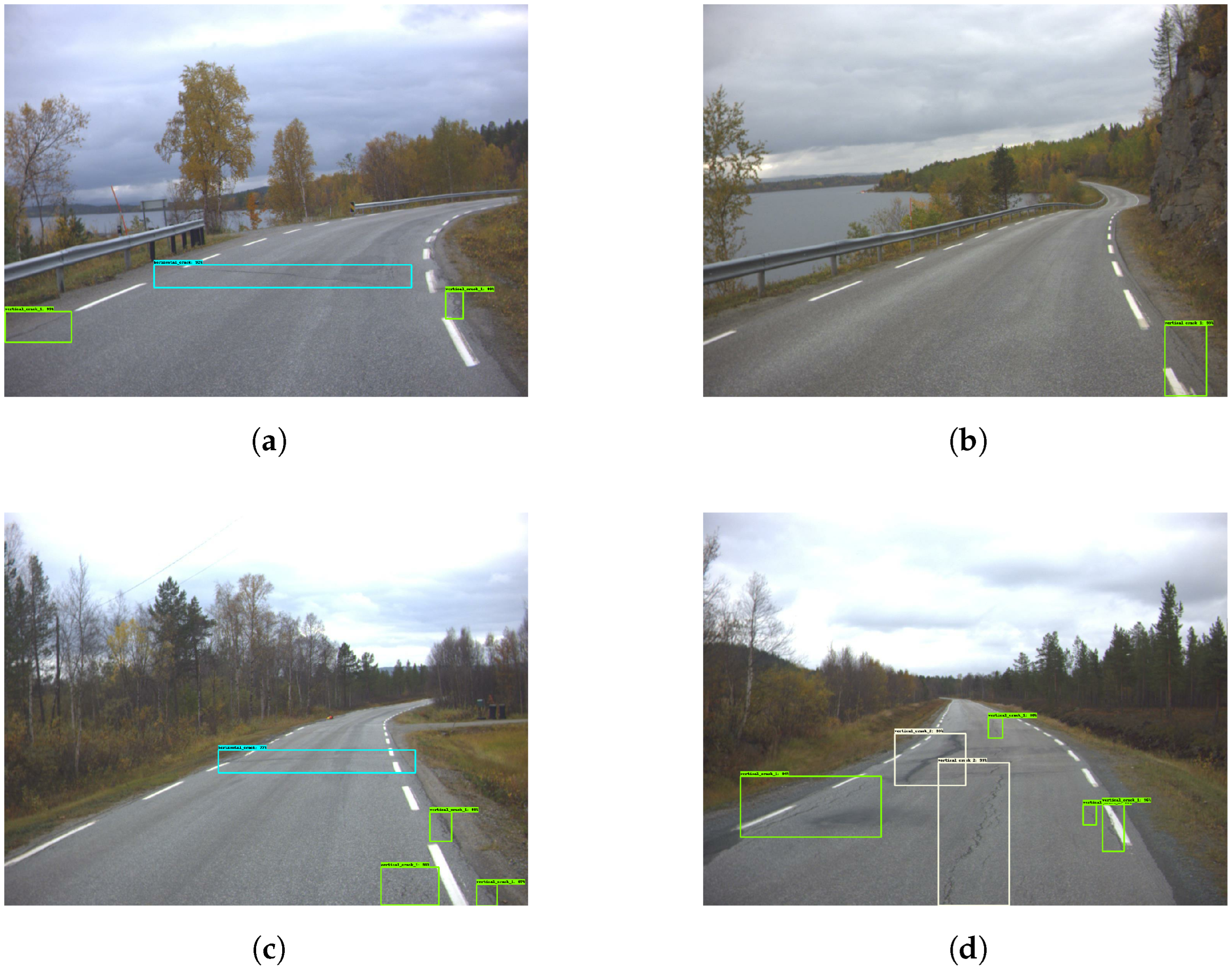

Figure 11.

Damage detection (Model 2). The green and yellow boxes represent the vertical cracks, while the cyan boxes represent the horizontal cracks.

Figure 12.

Good damage detection (Model 3). The green and yellow boxes represent the vertical cracks.

Figure 13.

Low sensitivity in damage detection (Model 3). The green and yellow boxes represent the vertical cracks, while the cyan boxes represent the horizontal cracks.

Figure 14.

Poor damage detection (Model 3). The green and yellow boxes represent the vertical cracks, while the cyan boxes represent the horizontal cracks.

Figure 15.

Good damage detection (Model 4). The green and yellow boxes represent the vertical cracks, while the cyan boxes represent the horizontal cracks.

Figure 16.

Missed damage detection (Model 4). The green and yellow boxes represent the vertical cracks, while the cyan boxes represent the horizontal cracks.

Figure 17.

Mixed boxes (Model 4). The green box represents the vertical cracks, while the cyan box represents the horizontal cracks.

Figure 18.

VGG16 binary training statistics. The statistics for the model remain close to static throughout the training phase.

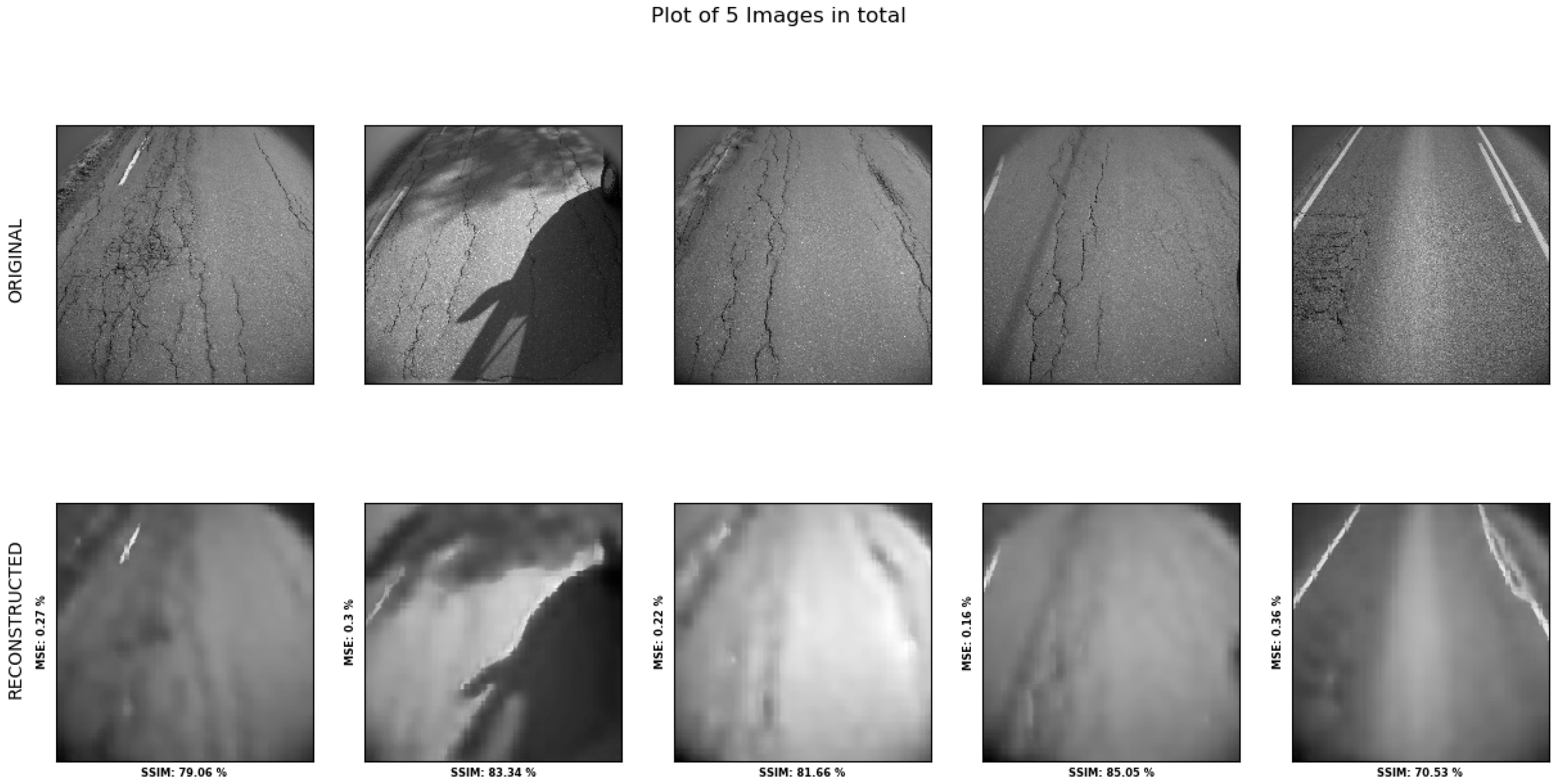

Figure 19.

Visualization of the model's reconstruction performance, with the top row showing the original images and the bottom row showing the reconstructed images. The tests cover the no-, low-, and medium-damage classes.

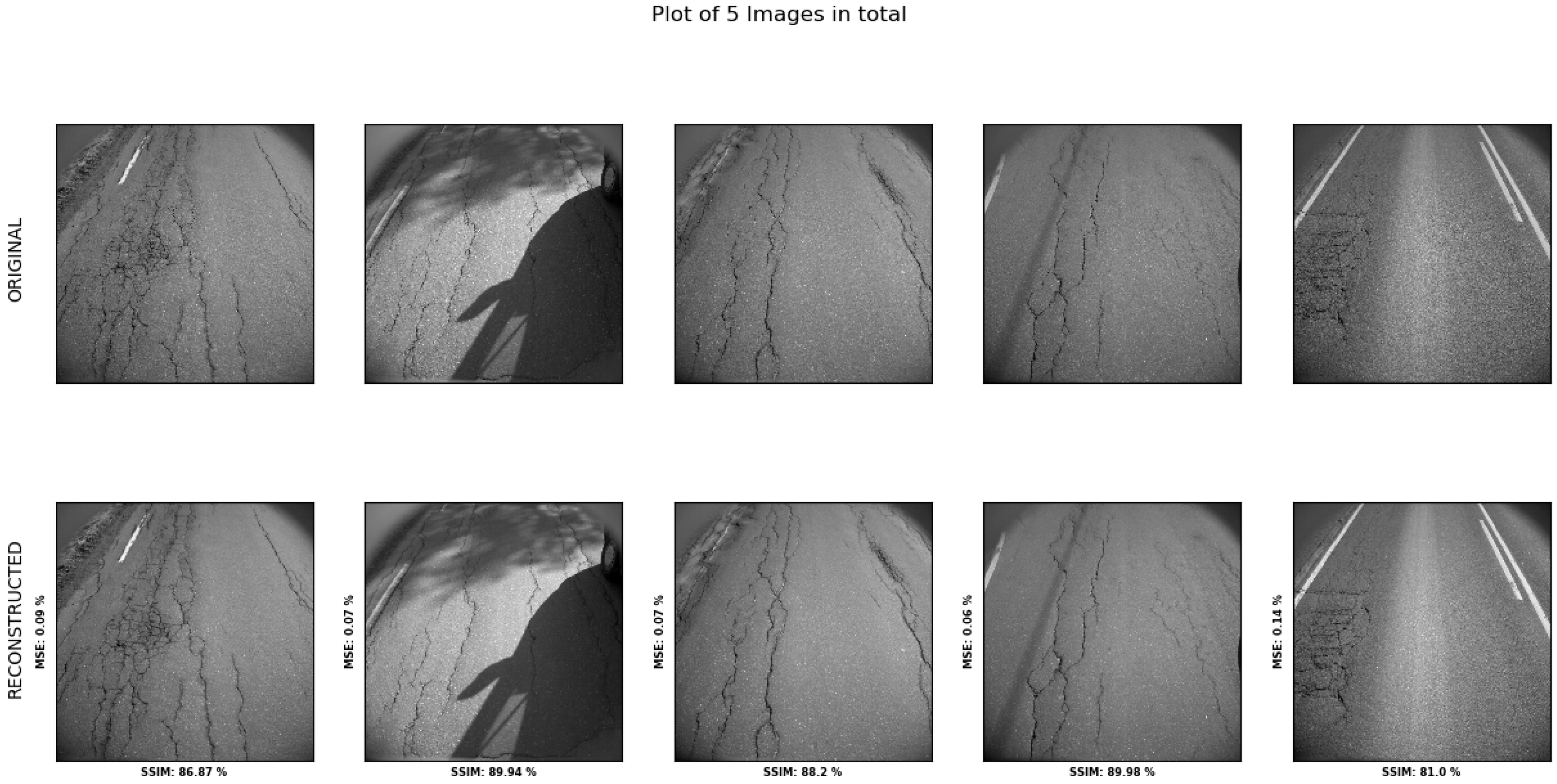

Figure 20.

Visualization of the model's performance with adjusted hyperparameters, with the top row showing the original images and the bottom row showing the reconstructed images. The tests cover the no-, low-, and medium-damage classes.

Table 1.

Evaluation results of Model 1.

| Damage | Precision@0.5IOU | Recall@0.5IOU | F1-Score@0.5IOU |
|---|---|---|---|
| Vertical crack | 67% | 79% | 73% |
| Horizontal crack | NaN | 0% | NaN |
| Alligator crack | 75% | 50% | 60% |
| Pothole | NaN | 0% | NaN |
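
The "@0.5IOU" suffix in the column headers refers to an intersection-over-union (IoU) threshold of 0.5 between a predicted box and a ground-truth box. The following is a minimal sketch of that check, not the evaluation code behind the tables. It assumes axis-aligned boxes given as `(x_min, y_min, x_max, y_max)` and a "greater than or equal" comparison; the helper names `iou` and `is_match` are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap; zero when the boxes do not intersect.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def is_match(pred_box, true_box, threshold=0.5):
    """Assumed matching rule: a prediction counts when IoU >= threshold."""
    return iou(pred_box, true_box) >= threshold


if __name__ == "__main__":
    truth = (0, 0, 10, 10)
    print(is_match((2, 0, 12, 10), truth))  # overlap 80, union 120
    print(is_match((5, 0, 15, 10), truth))  # overlap 50, union 150
```

In a full evaluation the predicted class would also have to agree with the ground-truth class before a detection is counted as a true positive.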

Table 2.

Confusion matrix of Model 1.

| Actual (rows) / Predicted (columns) | Vertical | Horizontal | Alligator | Pothole | None |
|---|---|---|---|---|---|
| Vertical | 130 | 0 | 0 | 0 | 34 |
| Horizontal | 0 | 0 | 0 | 0 | 9 |
| Alligator | 3 | 0 | 3 | 0 | 0 |
| Pothole | 2 | 0 | 0 | 0 | 0 |
| None | 58 | 0 | 1 | 0 | 0 |
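
The per-class values in Table 1 can be recomputed from the confusion matrix in Table 2. The sketch below assumes that rows are actual classes and columns are predicted classes, and that a class with no predictions has an undefined (NaN) precision. The counts are copied from Table 2; the function name `class_metrics` is hypothetical.

```python
import math

CLASSES = ["Vertical", "Horizontal", "Alligator", "Pothole", "None"]
# Rows: actual class; columns: predicted class (counts copied from Table 2).
CONFUSION = [
    [130, 0, 0, 0, 34],
    [0, 0, 0, 0, 9],
    [3, 0, 3, 0, 0],
    [2, 0, 0, 0, 0],
    [58, 0, 1, 0, 0],
]


def class_metrics(matrix, index):
    """Return (precision, recall, f1) for the class at the given index."""
    true_positives = matrix[index][index]
    predicted_total = sum(row[index] for row in matrix)  # column sum
    actual_total = sum(matrix[index])                    # row sum
    precision = true_positives / predicted_total if predicted_total else math.nan
    recall = true_positives / actual_total if actual_total else math.nan
    # A NaN precision makes the comparison false, so F1 is NaN as well.
    if precision + recall > 0:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = math.nan
    return precision, recall, f1


if __name__ == "__main__":
    for i, name in enumerate(CLASSES[:-1]):  # skip the "None" background row
        p, r, f1 = class_metrics(CONFUSION, i)
        print(f"{name}: precision={p:.2f} recall={r:.2f} f1={f1:.2f}")
```

Under these assumptions the vertical-crack row gives 130/193 for precision and 130/164 for recall, which round to the 67% and 79% reported in Table 1.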

Table 3.

Precision and recall of Model 2.

| Damage | Precision@0.5IOU | Recall@0.5IOU | F1-Score@0.5IOU |
|---|---|---|---|
| Vertical crack 1 | 66% | 51% | 57% |
| Vertical crack 2 | 55% | 50% | 52% |
| Horizontal crack | 66% | 70% | 68% |
| Alligator crack | 18% | 33% | 23% |
| Pothole | 43% | 26% | 32% |

Table 4.

Confusion matrix of Model 2.

| Actual (rows) / Predicted (columns) | Vertical 1 | Horizontal | Alligator | Pothole | Vertical 2 | None |
|---|---|---|---|---|---|---|
| Vertical 1 | 598 | 11 | 56 | 4 | 35 | 480 |
| Horizontal | 4 | 89 | 1 | 0 | 2 | 32 |
| Alligator | 12 | 0 | 19 | 1 | 1 | 24 |
| Pothole | 3 | 1 | 0 | 6 | 0 | 13 |
| Vertical 2 | 46 | 7 | 2 | 0 | 86 | 31 |
| None | 244 | 27 | 27 | 3 | 33 | 0 |

Table 5.

Precision and recall of Model 3.

| Damage | Precision@0.5IOU | Recall@0.5IOU | F1-Score@0.5IOU |
|---|---|---|---|
| Vertical crack 1 | 72% | 42% | 53% |
| Horizontal crack | 77% | 48% | 59% |
| Alligator crack | 23% | 28% | 25% |
| Pothole | 80% | 17% | 28% |
| Vertical crack 2 | 63% | 47% | 54% |

Table 6.

Confusion matrix of Model 3.

| Actual (rows) / Predicted (columns) | Vertical 1 | Horizontal | Alligator | Pothole | Vertical 2 | None |
|---|---|---|---|---|---|---|
| Vertical 1 | 497 | 8 | 43 | 0 | 28 | 608 |
| Horizontal | 8 | 62 | 1 | 0 | 3 | 54 |
| Alligator | 10 | 0 | 16 | 0 | 1 | 30 |
| Pothole | 2 | 0 | 0 | 4 | 0 | 17 |
| Vertical 2 | 44 | 1 | 2 | 1 | 81 | 43 |
| None | 130 | 10 | 9 | 0 | 16 | 0 |

Table 7.

Precision and recall of Model 4.

| Damage Type | Precision@0.5IOU | Recall@0.5IOU | F1-Score@0.5IOU |
|---|---|---|---|
| Vertical crack 1 | 72% | 38% | 50% |
| Horizontal crack | 79% | 32% | 46% |
| Alligator crack | 19% | 23% | 21% |
| Pothole | 100% | 13% | 23% |
| Vertical crack 2 | 56% | 51% | 53% |

Table 8.

Confusion matrix of Model 4.

| Actual (rows) / Predicted (columns) | Vertical 1 | Horizontal | Alligator | Pothole | Vertical 2 | None |
|---|---|---|---|---|---|---|
| Vertical 1 | 449 | 6 | 33 | 0 | 39 | 657 |
| Horizontal | 2 | 41 | 0 | 0 | 3 | 82 |
| Alligator | 9 | 0 | 13 | 0 | 3 | 32 |
| Pothole | 1 | 1 | 1 | 3 | 1 | 16 |
| Vertical 2 | 38 | 1 | 4 | 0 | 87 | 42 |
| None | 122 | 3 | 16 | 0 | 22 | 0 |

Table 9.

Confusion matrix of Model 5.

| Actual (rows) / Predicted (columns) | Vertical | Horizontal | Alligator | Pothole | None |
|---|---|---|---|---|---|
| Vertical | 68 | 0 | 4 | 0 | 92 |
| Horizontal | 2 | 1 | 0 | 0 | 6 |
| Alligator | 1 | 0 | 5 | 0 | 0 |
| Pothole | 1 | 0 | 0 | 0 | 1 |
| None | 12 | 0 | 1 | 0 | 0 |

Table 10.

Precision and recall of Model 5.

| Damage Type | Precision@0.5IOU | Recall@0.5IOU | F1-Score@0.5IOU |
|---|---|---|---|
| Vertical crack 1 | 81% | 41% | 54% |
| Horizontal crack | 100% | 11% | 20% |
| Alligator crack | 50% | 83% | 62% |
| Pothole | NaN | 0% | NaN |

Table 11.

Precision and recall of Model 6.

| Damage Type | Precision@0.5IOU | Recall@0.5IOU | F1-Score@0.5IOU |
|---|---|---|---|
| Vertical crack 1 | 92% | 29% | 44% |
| Horizontal crack | NaN | 0% | NaN |
| Alligator crack | 100% | 17% | 29% |
| Pothole | NaN | 0% | NaN |

Table 12.

Confusion matrix of Model 6.

| Actual (rows) / Predicted (columns) | Vertical | Horizontal | Alligator | Pothole | None |
|---|---|---|---|---|---|
| Vertical | 47 | 0 | 0 | 0 | 117 |
| Horizontal | 0 | 0 | 0 | 0 | 9 |
| Alligator | 3 | 0 | 1 | 0 | 2 |
| Pothole | 0 | 0 | 0 | 0 | 2 |
| None | 1 | 0 | 0 | 0 | 0 |

Table 13.

Confusion matrix of Model 7.

| Actual (rows) / Predicted (columns) | Vertical 1 | Horizontal | Alligator | Pothole | Vertical 2 | None |
|---|---|---|---|---|---|---|
| Vertical 1 | 120 | 2 | 0 | 0 | 2 | 1060 |
| Horizontal | 8 | 3 | 0 | 0 | 0 | 117 |
| Alligator | 7 | 0 | 1 | 0 | 0 | 49 |
| Pothole | 0 | 0 | 0 | 4 | 0 | 19 |
| Vertical 2 | 26 | 3 | 0 | 0 | 21 | 122 |
| None | 25 | 0 | 0 | 1 | 6 | 0 |

Table 14.

Precision and recall for Model 7.

| Damage Type | Precision@0.5IOU | Recall@0.5IOU | F1-Score@0.5IOU |
|---|---|---|---|
| Vertical crack 1 | 65% | 10% | 17% |
| Horizontal crack | 38% | 2% | 4% |
| Alligator crack | 100% | 2% | 4% |
| Pothole | 80% | 17% | 28% |
| Vertical crack 2 | 72% | 12% | 21% |

Table 15.

Confusion matrix for VGG16 binary classification.

| Actual (rows) / Predicted (columns) | Damage | No damage |
|---|---|---|
| Damage | 15 | 60 |
| No damage | 45 | 141 |

Table 16.

Classification report from testing on 261 images.

| Condition | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| Damage | 0.25 | 0.20 | 0.22 | 75 |
| No damage | 0.70 | 0.76 | 0.73 | 186 |
| Macro average | 0.48 | 0.48 | 0.48 | 261 |
| Weighted average | 0.57 | 0.60 | 0.58 | 261 |
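
The macro and weighted averages in Table 16 follow from the confusion matrix in Table 15. The sketch below assumes the usual definitions: the macro average is the unweighted mean over classes, and the weighted average uses each class's support as its weight. Treating an undefined ratio as 0.0 is an assumption made for this sketch, and `classification_summary` is a hypothetical helper name.

```python
LABELS = ["Damage", "No damage"]
# Rows: actual class; columns: predicted class (counts copied from Table 15).
CONFUSION = [
    [15, 60],
    [45, 141],
]


def classification_summary(matrix):
    """Return per-class metrics plus macro and support-weighted averages."""
    per_class = []
    for i in range(len(matrix)):
        true_positives = matrix[i][i]
        predicted_total = sum(row[i] for row in matrix)  # column sum
        support = sum(matrix[i])                         # row sum
        # Assumption: undefined ratios are reported as 0.0.
        precision = true_positives / predicted_total if predicted_total else 0.0
        recall = true_positives / support if support else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        per_class.append(
            {"precision": precision, "recall": recall, "f1": f1, "support": support}
        )
    keys = ("precision", "recall", "f1")
    total = sum(c["support"] for c in per_class)
    macro = {k: sum(c[k] for c in per_class) / len(per_class) for k in keys}
    weighted = {k: sum(c[k] * c["support"] for c in per_class) / total for k in keys}
    return per_class, macro, weighted


if __name__ == "__main__":
    per_class, macro, weighted = classification_summary(CONFUSION)
    for label, metrics in zip(LABELS, per_class):
        print(label, {k: round(v, 2) for k, v in metrics.items()})
    print("macro", {k: round(v, 2) for k, v in macro.items()})
    print("weighted", {k: round(v, 2) for k, v in weighted.items()})
```

Because the "No damage" class has more than twice the support of the "Damage" class, the weighted averages sit closer to the "No damage" row than the macro averages do.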

Table 17.

Sampled results for five images per class.

| Sample | No Damage MSE | No Damage SSIM | Low Damage MSE | Low Damage SSIM | Medium Damage MSE | Medium Damage SSIM | High Damage MSE | High Damage SSIM |
|---|---|---|---|---|---|---|---|---|
| 1 | 0.22 | 77.07 | 0.22 | 77.41 | 0.24 | 77.18 | 0.27 | 79.06 |
| 2 | 0.21 | 78.64 | 0.23 | 80.11 | 0.22 | 78.66 | 0.22 | 81.66 |
| 3 | 0.14 | 86.17 | 0.15 | 84.51 | 0.18 | 82.13 | 0.16 | 85.05 |
| 4 | 0.28 | 74.43 | 0.15 | 81.57 | 0.37 | 69.24 | 0.36 | 70.53 |
| 5* | 0.17 | 86.76 | 0.11 | 89.66 | 0.16 | 85.25 | 0.3 | 83.34 |
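
The reconstruction tables compare original and reconstructed images by mean squared error (MSE) and structural similarity (SSIM). The following is a simplified sketch of both measures, not the code used to produce the tables. It assumes images flattened to lists of pixel values in [0, 1] and computes SSIM over a single global window, whereas common implementations average SSIM over local sliding windows. The constants 0.01 and 0.03 come from the standard SSIM definition; the function names are hypothetical.

```python
def mse(original, reconstructed):
    """Mean squared error between two equally sized pixel sequences."""
    return sum((x - y) * (x - y) for x, y in zip(original, reconstructed)) / len(original)


def global_ssim(original, reconstructed, data_range=1.0):
    """SSIM over one global window (a simplification of windowed SSIM)."""
    c1 = (0.01 * data_range) ** 2  # stabilizes the luminance term
    c2 = (0.03 * data_range) ** 2  # stabilizes the contrast/structure term
    n = len(original)
    mu_x = sum(original) / n
    mu_y = sum(reconstructed) / n
    var_x = sum((x - mu_x) * (x - mu_x) for x in original) / n
    var_y = sum((y - mu_y) * (y - mu_y) for y in reconstructed) / n
    cov = sum((x - mu_x) * (y - mu_y) for x, y in zip(original, reconstructed)) / n
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    denominator = (mu_x * mu_x + mu_y * mu_y + c1) * (var_x + var_y + c2)
    return numerator / denominator


if __name__ == "__main__":
    image = [0.0, 0.5, 1.0, 0.25]
    noisy = [0.1, 0.4, 0.9, 0.30]
    print(mse(image, noisy), global_ssim(image, noisy))
```

A perfect reconstruction gives an MSE of 0 and an SSIM of 1, so lower MSE and higher SSIM both indicate a closer reconstruction.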

Table 18.

Mean SSIM over 150 samples.

| | No Damage | Low Damage | Medium Damage | High Damage |
|---|---|---|---|---|
| SSIM | 0.76897 | 0.78254 | 0.78887 | 0.81260 |

Table 19.

SSIM averaged over 150 samples for a model trained for 20 epochs. Δ indicates the change in the results, given by Δ = (results at 20 epochs) − (results at 10 epochs).

| | No Damage | Low Damage | Medium Damage | High Damage |
|---|---|---|---|---|
| SSIM | 0.76745 | 0.78532 | 0.78851 | 0.81507 |
| Δ (20 epochs − 10 epochs) | −0.00152 | +0.00278 | −0.00036 | +0.00247 |

Table 20.

Sampling of images with a slight difference in results for five images per class.

| Sample | No Damage MSE | No Damage SSIM | Low Damage MSE | Low Damage SSIM | Medium Damage MSE | Medium Damage SSIM | High Damage MSE | High Damage SSIM |
|---|---|---|---|---|---|---|---|---|
| 1 | 0.21 | 77.19 | 0.22 | 77.4 | 0.24 | 77.17 | 0.27 | 79.06 |
| 2 | 0.15 | 82.19 | 0.22 | 80.12 | 0.22 | 78.75 | 0.22 | 81.65 |
| 3 | 0.14 | 86.19 | 0.14 | 84.61 | 0.18 | 82.13 | 0.16 | 85.04 |
| 4 | 0.23 | 74.54 | 0.15 | 81.56 | 0.36 | 69.28 | 0.34 | 70.56 |
| 5* | 0.16 | 86.79 | 0.1 | 89.72 | 0.16 | 85.31 | 0.3 | 83.42 |

Table 21.

Hyper reconstruction of images from each class.

| Sample | No Damage MSE | No Damage SSIM | Low Damage MSE | Low Damage SSIM | Medium Damage MSE | Medium Damage SSIM | High Damage MSE | High Damage SSIM |
|---|---|---|---|---|---|---|---|---|
| 1 | 0.1 | 84.5 | 0.09 | 85.57 | 0.09 | 85.71 | 0.09 | 86.87 |
| 2 | 0.07 | 88.19 | 0.07 | 89.56 | 0.08 | 86.64 | 0.07 | 88.2 |
| 3 | 0.05 | 90.72 | 0.06 | 89.56 | 0.07 | 88.26 | 0.06 | 89.98 |
| 4 | 0.11 | 82.71 | 0.07 | 87.1 | 0.15 | 79.98 | 0.14 | 81.0 |
| 5* | 0.04 | 91.91 | 0.03 | 93.37 | 0.05 | 90.63 | 0.07 | 89.94 |

Table 22.

SSIM averaged over 150 samples for a modified model (trained for 10 epochs).

| | No Damage | Low Damage | Medium Damage | High Damage |
|---|---|---|---|---|
| SSIM | 0.85113 | 0.84675 | 0.86361 | 0.88097 |

Table 23.

Final reconstruction of images from each class with a slight difference of results.

| Sample | No Damage MSE | No Damage SSIM | Low Damage MSE | Low Damage SSIM | Medium Damage MSE | Medium Damage SSIM | High Damage MSE | High Damage SSIM |
|---|---|---|---|---|---|---|---|---|
| 1 | 0.08 | 87.23 | 0.08 | 88.35 | 0.07 | 88.44 | 0.07 | 89.31 |
| 2 | 0.05 | 90.29 | 0.06 | 89.92 | 0.06 | 89.25 | 0.06 | 90.32 |
| 3 | 0.04 | 92.35 | 0.05 | 91.43 | 0.06 | 90.34 | 0.05 | 91.75 |
| 4 | 0.09 | 85.85 | 0.06 | 89.29 | 0.13 | 83.72 | 0.11 | 84.58 |
| 5* | 0.03 | 93.44 | 0.03 | 94.47 | 0.04 | 92.26 | 0.06 | 91.67 |

Table 24.

Comparison of the fine-tuned object detection models.

| Name | Architecture | Feature Extractor | Pre-Trained Dataset | Dataset | mAP |
|---|---|---|---|---|---|
| Model 1 | Faster R-CNN | Inception ResNet V2 Atrous | MS-COCO | Vegdekke | 30% |
| Model 2 | Faster R-CNN | Inception ResNet V2 Atrous | MS-COCO | P18 | 20% |
| Model 3 | Faster R-CNN | ResNet 101 | KITTI | P18 | 15% |
| Model 4 | Faster R-CNN | Inception ResNet V2 Atrous | Oidv4 | P18 | 14% |
| Model 5 | SSD | MobileNet V1 | MS-COCO | Vegdekke | 40% |
| Model 6 | SSD | Inception V2 | MS-COCO | Vegdekke | 20% |
| Model 7 | SSD | MobileNet V1 | MS-COCO | P18 | 5% |

Table 25.

Damage occurrences in the Veidekke dataset.

| Crack Types | Training | Evaluation |
|---|---|---|
| Vertical crack | 1765 | 164 |
| Horizontal crack | 66 | 9 |
| Alligator crack | 85 | 6 |
| Pothole | 16 | 2 |

Table 26.

Damage occurrences in the P18 dataset.

| Crack Types | Training | Evaluation |
|---|---|---|
| Vertical crack 1 | 3530 | 1184 |
| Vertical crack 2 | 392 | 172 |
| Horizontal crack | 503 | 128 |
| Alligator crack | 233 | 57 |
| Pothole | 81 | 23 |
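
The class counts in Tables 25 and 26 are strongly skewed toward vertical cracks. As a small illustrative sketch (the dictionary and function names are hypothetical; the counts are the training columns of the two tables), the share of each class in a training set can be computed as follows:

```python
# Training-set counts copied from Table 25 and Table 26.
TABLE_25_TRAINING = {
    "Vertical crack": 1765,
    "Horizontal crack": 66,
    "Alligator crack": 85,
    "Pothole": 16,
}
TABLE_26_TRAINING = {
    "Vertical crack 1": 3530,
    "Vertical crack 2": 392,
    "Horizontal crack": 503,
    "Alligator crack": 233,
    "Pothole": 81,
}


def class_shares(counts):
    """Return each class's fraction of the total number of annotations."""
    total = sum(counts.values())
    return {name: count / total for name, count in counts.items()}


if __name__ == "__main__":
    for title, counts in (("Table 25", TABLE_25_TRAINING), ("Table 26", TABLE_26_TRAINING)):
        print(title)
        for name, share in class_shares(counts).items():
            print(f"  {name}: {share:.1%}")
```

With these counts, potholes make up under 2% of the training annotations in both datasets, which is worth keeping in mind when reading the NaN and low-recall entries for that class in the results tables.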