Abstract

The popularity of dogs has been increasing owing to factors such as the physical and mental health benefits associated with raising them. While owners care about their dogs’ health and welfare, it is difficult for them to assess these, and frequent veterinary checkups represent a growing financial burden. In this study, we propose a behavior-based video summarization and visualization system for monitoring a dog’s behavioral patterns to help assess its health and welfare. The system proceeds in four modules: (1) a video data collection and preprocessing module; (2) an object detection-based module for retrieving image sequences where the dog is alone and cropping them to reduce background noise; (3) a dog behavior recognition module using two-stream EfficientNetV2 to extract appearance and motion features from the cropped images and their respective optical flow, followed by a long short-term memory (LSTM) model to recognize the dog’s behaviors; and (4) a summarization and visualization module to provide effective visual summaries of the dog’s location and behavior information to help assess and understand its health and welfare. The experimental results show that the system achieved an average F1 score of 0.955 for behavior recognition, with an execution time allowing real-time processing, while the summarization and visualization results demonstrate how the system can help owners assess and understand their dog’s health and welfare.

1. Introduction

At the beginning of the 21st century, structural changes in South Korea’s society, such as the spread of individualism, the rise in divorce rates, and the decline in fertility rates, contributed to the aging of the population and a significant increase in single-person households [1]. To cope with loneliness, many people living alone opted to raise pets as an alternative to family members and as a source of stress relief [2,3]. This led to an increase in the number of households with pets from 3.59 million in 2012 to 6.04 million in 2020, which represents 30% of the country’s households [4,5].

Among the pets commonly owned, dogs are a popular choice because of the benefits associated with raising them. They motivate owners to engage in activities, such as walking and exercising together, which helps owners sustain their physical [6,7,8] and mental health [9,10,11]. In fact, dogs are currently leading the pet market in South Korea, with a total of approximately 5.2 million raised in 2021 and a presence in 80% of households with pets [4,12]. However, while the number of dogs raised remained relatively stable between 2019 and 2020 [13], data from the Korea Data Exchange (DEX) showed a 60% increase in veterinary hospital visits during the same period [14]. While this shows that owners care for their dogs’ health and are spending money and time to maintain it [4], veterinary costs have risen to an average of $72 per visit, which represents a burden for more than 82% of owners [15]. This financial burden increases when owners take their dogs for random checkups in the hope of discovering and treating health issues. For these reasons, owners need to better understand the health (physical and mental) and welfare of their dogs so as to limit veterinary visits to the necessary ones, rather than performing frequent checkups.

Although the medical conditions of some dogs require tests and expert opinions for diagnosis, research has shown that there is a strong link between a dog’s welfare and health and its behavior [16,17,18,19]. In fact, not only can monitoring a dog’s behavior help the owner understand their pet’s health state and improve the accuracy of the expert’s diagnosis, but in some cases, behavior is the only clinical sign that experts can rely on [20,21,22]. Thus, monitoring dogs’ behavioral information is necessary because, when accurately collected, such data can help the owner discover abnormalities and provide useful insight to the expert in making an accurate diagnosis. Consequently, this would help the expert start the treatment early, improving the chances of a quick recovery and reducing veterinary visits, which would relieve some of the owner’s financial burden.

Although owners generally tend to take notice of their dogs’ behaviors, it is difficult for them to do so constantly, especially since it is common for dogs to be left unattended. For example, in South Korea, dogs spend an average of five to seven hours alone at home [4], a time during which owners cannot directly observe their behaviors [23]. Hence, owners are likely to miss key behavioral signs and pattern changes that can help assess their dogs’ states, especially because some signs are displayed mainly when the dog perceives its owner’s absence [23,24,25]. In fact, when left alone, dogs with physical and separation-related health issues tend to display restlessness, increased activity, pacing, and some undesirable behaviors, such as excessive vocalization, unlike healthy dogs, which are likely to mainly show long periods of passive behavior and locomotion [26]. Moreover, the owner’s presence can cause the dog to synchronize its behavior with theirs [27], keep it attentive to the owner, and trigger interactions, such as playing, which can affect the dog’s behavior [28]. It is therefore important to observe the dog’s behavior when it is alone and to keep track of it [29], as this can provide more consistent information about its behavioral changes. Therefore, in this paper, we propose a method to monitor a dog in an illuminated indoor environment, recognize its behavior, and provide an effective way to visualize it to help owners and experts better understand the dog’s health and welfare.

After reviewing previous studies on dog behavior recognition, we identified related studies [30,31,32,33,34,35,36,37], which are summarized in Table 1.

Table 1.

Some recent studies on dog behavior recognition (published 2013–2022).

Different methods have been introduced to recognize dog behavior. In this context, dog behavior refers to static (e.g., sitting and sleeping) and active behaviors (e.g., walking, barking, and jumping). Early methods used statistical classification [30], DTW distance [31], and discriminant analysis [32] to classify selected dog behaviors, providing proof of concept. Later, with the improvement in machine learning techniques and the increase in their popularity in different fields of research [36,38,39,40], more methods have been employed to recognize dog behaviors. For instance, Chambers et al. [34] aimed to validate dog behavior recognition, with a focus on eating and drinking, using FilterNet and a large crowd-sourced dataset. In their work, Aich et al. [33] trained an artificial neural network (ANN) on sensor data to detect dog behaviors and classified the dogs’ emotional states (positive, neutral, or negative) based on their tail movements. In addition, Wang et al. [36] classified dogs’ head and body postures separately using long short-term memory (LSTM) on sensor data, and then used complex event processing (CEP) to better differentiate between behaviors with similar body postures. Kim et al. [37] used a convolutional neural network (CNN) with LSTM on fused image and wearable sensor data in a multimodal system, compensating for the noise-related shortcomings of the sensor data to improve the classification of a dog’s behaviors. By contrast, Fux et al. [35] used object detection to track a dog inside a clinical setting, extracted features from its movements, such as straightness, and classified them using random forest to recognize ADHD-like behaviors.

While these methods achieved good results, their main purpose was to confirm the possibility of recognizing dog behaviors: their work focused mainly on, and ended with, dog behavior classification. However, with only that as a result, understanding a dog’s health and welfare remains a challenging task for owners, because relying solely on the recognized behaviors and manually reading through them to try to gain insight is impractical and time-consuming and may result in missing important details. Instead, in this paper, we propose a method that summarizes and visualizes a dog’s recognized behaviors so that owners can perceive important patterns and behavioral changes that help assess its health and welfare. Furthermore, for better assessment, it is required at times to retrieve and visualize some behaviors to examine their intensity and significance [41,42], which makes it necessary for this method to collect video data as input. Most studies did not consider extracting the dog’s location information, which can be useful in detecting movement patterns [35]. In addition, only a few studies have considered real-time processing, which is needed to ensure quick feedback in cases of an alarming increase in the frequency of poor welfare-related behaviors that require immediate attention [41,42]. Finally, because the owner’s presence can influence the dog, a preprocessing step that selects only the data where the dog is alone, without any person present, provides an additional advantage by ensuring consistent observation of behaviors. This further supports the need for using image data in our system, as it facilitates the detection of the presence of subjects of interest (i.e., dogs and people) in the input data.
Hence, in this study, to overcome the limitations of previous studies, we used a camera-based system to monitor, recognize, and provide summarization and visualization of a dog’s location and behaviors when left unattended to help effectively understand its health and welfare. Accordingly, the system includes the following features.

- Handling video data containing dogs and people, matching real-life situations, and automatically detecting and selecting the data where the dog is alone.

- Tracking the dog’s location and performing behavior recognition in real time to provide live visualization and urgent alerts to owners.

- Summarizing the dog’s video using its tracked location data and recognized behaviors and providing an effective visualization that helps draw insightful information to understand and assess its health and welfare.

We propose an end-to-end system for dog video summarization based on detected behaviors and location information. The system uses the object detection model You Only Learn One Representation (YOLOR) to detect the dog’s location, followed by an adapted sequence bounding box matching algorithm to correct missed detections before selecting only sequences of images where the dog is alone. Subsequently, the images are cropped to focus on the dog and a two-stream CNN-LSTM is used for behavior recognition based on RGB and optical flow images. Both outputs from YOLOR and CNN-LSTM are stored as log data and then used to generate graphs to provide effective and efficient visualization summaries for owners and dog experts to assess the dog’s physical and mental health and welfare.

2. Related Work

The most important task in our system is the summarization of the dog’s video data based on its recognized behaviors to provide a visualization of the results using analytical tools. Thus, to select a suitable approach for this task, we first explored the existing video summarization methods.

Video summarization is a research field that aims to create a concise summary of a video by selecting the most important parts. It is generally divided into scene-based, where visual features are extracted to select key frames [43], and content-based [44], which uses information related to the content and semantics in the video, such as objects [45] and actions [46,47]. In terms of action-based summarization, although close to our purpose, the proposed methods mainly targeted humans and relied on motion [46,48], making them unsuitable for our system because we targeted dogs’ behaviors, which include not only actions (active behaviors) but also static behaviors that lack motion. However, because this type of summarization is performed in two steps, action recognition and summarization, by following a similar approach and using a behavior recognition method as a first step, we can achieve our summarization purpose. Accordingly, it is important to select an approach that delivers accurate recognition of dog behavior for our system to ensure good summarization performance. To this end, we examined recent methods used for animal behavior recognition to select a suitable method for dog behaviors and found that models based on the CNN-LSTM approach have been widely used for their performance. For instance, Chen et al. [49] used VGG16-LSTM to recognize aggressive behaviors of pigs in pig farms for injury prevention, and Chen et al. [50] used ResNet50-LSTM to classify the drinking behaviors of pigs to verify their adequate water intake. Recently, EfficientNet-BiFPN-LSTM was proposed by Yin et al. [51] to recognize the motion behavior of a single cow to help with farm monitoring. Each of these methods showed good results in terms of behavior recognition, proving that the method is suitable for our goal. 
However, because the background and the presence of multiple animals make it difficult to extract optical flow, these methods rely on a single-stream CNN-LSTM that uses only RGB frames. Using both RGB for appearance and optical flow for motion can lead to better recognition performance [52] and therefore to a more accurate summarization, especially in our system, which targets both active and static behaviors. A two-stream CNN-LSTM model is thus more appropriate for our use.

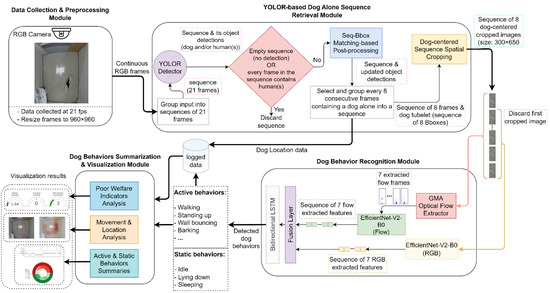

Accordingly, we propose an end-to-end system for behavior-based dog video summarization using four modules. The first module collects and preprocesses the image sequences and forwards them to the second module, namely, the YOLOR-based dog-alone sequence retrieval module. At this level, object detection is performed using YOLOR-P6 [53] to detect dogs and people, followed by sequence bounding box matching [54] to correct missed detections. The YOLOR-P6 model was used because it provides one of the best speed and accuracy tradeoffs among the object detection models. Further processing is performed to select sequences of frames containing a dog alone, and their detection results are saved as a dog’s location log data. After that, the selected frames are spatially cropped with a focus on the dog to reduce background noise and location dependence. The sequences of cropped images are then forwarded to the dog behavior recognition module, where a two-stream EfficientNetV2B0 is used to extract features from the RGB and optical flow frames, generated using the global motion aggregation (GMA) algorithm [55] and then a bidirectional LSTM to extract temporal features from the cropped dog sequences. The specific models and algorithms used in this module were selected because they guarantee good performance and inference speed. Finally, the detected behaviors are saved as log data and forwarded to the dog-behavior-based video summarization and visualization module. At this stage, data visualization techniques are utilized to generate an effective visual summarization of the dog’s location and behavior through different graphs to help identify patterns and features that can allow a better understanding and assessment of the dog’s health and welfare.

3. Behavior-Based Dog Video Summarization System

The architecture of the proposed behavior-based dog video summarization system is illustrated in Figure 1.

Figure 1.

Overall architecture of the proposed behavior-based dog video summarization system.

3.1. Data Collection and Preprocessing Module

The image data used by the system were transmitted at a frame rate of 21 fps from a top-down angle RGB camera installed in the ceiling to minimize occlusions and overlapping with people and other objects. This angle also provides an intuitive way of localizing the objects of interest inside a room and studying their movements, making it commonly used in monitoring systems [49,50,51,52,56]. In this module, the collected RGB images are resized to match the input size of the YOLOR model and are then forwarded to the next module.

3.2. YOLOR-Based Dog-Alone Sequence Retrieval Module

The data received at this stage contain frames with a dog alone, frames with one or more people, frames with people and a dog, or frames of an empty room. To ensure consistent observations and prevent the owner’s presence from influencing the dog’s behavior [27], this module selects only sequences of frames where the dog is alone and discards the remaining ones. YOLOR object detection is first used to detect humans and dogs in each sequence. If a sequence contains no detections or contains at least one human detection in every image, it is discarded. Otherwise, the sequence bounding box (Seq-Bbox) matching algorithm is applied to handle missed detections. Subsequently, the image sequences containing a dog alone are retrieved, and their corresponding bounding boxes are saved as dog location log data. Finally, an algorithm is applied to crop the images in each sequence to focus on the dog using a unified padded bounding box generated from the dog’s bounding boxes. In short, this module receives continuous RGB frames as input and outputs sequences of eight dog-focused cropped images. The entire process is described in detail in the following subsections.

3.2.1. Dog and Human Object Detection

Owing to recent advances in deep learning, the field of object detection has shown continuous improvements in speed and performance and led to the development of methods and models targeting several areas of research and covering different scenarios [57,58]. Among the existing object detection models, You Only Look Once (YOLO) [59] and its variants have attracted considerable attention because of their performance and real-time inference speed, making them a popular choice for monitoring systems [37,56,60,61].

In this module, the YOLOR model was used for object detection of dogs and humans. YOLOR is a recent YOLO variant with a network that integrates both explicit and implicit knowledge to learn one general representation. More specifically, the YOLOR-P6 subvariant, which offers one of the best performance and speed tradeoffs guaranteeing real-time inference, was used for human and dog detection on each frame received and output the class, score, and bounding boxes of every object detected. First, the frames received from the previous module were grouped into sequences of 21 consecutive frames for object detection and analysis of each sequence separately. The sequence length was set to 21 frames to match the camera’s frame rate. After object detection is performed on the images in a sequence, if there is no detection in any frame of the sequence, or if at least one human is detected in every frame of the sequence, the whole sequence is discarded and the module moves to process the next sequence. Otherwise, the sequence and its detection results are retained for further analysis to retrieve from it sequences of data containing a dog alone. However, because YOLOR is a per-frame detection model, when performing object detection on a sequence of frames such as in this case, missed detections can occur due to factors such as unusual object poses [62]. To ensure the correct retrieval of frame sequences with a dog alone, it is important to handle such missed detections. In general, when the detection model targets a single object, methods such as linear interpolation can be used directly to replace the missing detections based on the detections in the closest surrounding frames [37]. However, as we target multiple objects (a dog and humans), in some scenarios when there is a missed detection, nearby frames can contain multiple detections. 
In such a case, to select the exact detections that need to be used from nearby frames to generate the missed detection, we first need to identify and match in a sequence all the detections that correspond to each object. For this purpose, the Seq-Bbox matching postprocessing method proposed by Belhassen et al. [54], which specifically performs this matching, was used, as explained in the next section. Accordingly, the sequences of the 21 frames that were not discarded are forwarded with their corresponding detection results to the Seq-Bbox matching postprocessing unit to handle missed detections.
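The sequence-level discard rule described above can be sketched in a few lines. This is only an illustration under stated assumptions: the detection record layout and the class label "person" are hypothetical, and the function name is not taken from the system itself.

```python
from typing import List, Tuple

# Hypothetical detection record: (class_name, score, (x1, y1, x2, y2)).
Detection = Tuple[str, float, Tuple[float, float, float, float]]


def keep_sequence(frames: List[List[Detection]]) -> bool:
    """Return False when a sequence of per-frame detections should be discarded."""
    # Case 1: there is no detection in any frame of the sequence.
    if all(len(dets) == 0 for dets in frames):
        return False
    # Case 2: at least one human is detected in every frame of the sequence.
    if all(any(d[0] == "person" for d in dets) for dets in frames):
        return False
    # Otherwise the sequence is retained for Seq-Bbox matching.
    return True
```

A sequence in which a person appears in only some of the 21 frames is retained, since the remaining frames may still contain the dog alone.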

3.2.2. Seq-Bbox Matching-Based Postprocessing

This unit of the module takes a sequence of 21 frames and its detections as input, uses Seq-Bbox matching to correct any missed detections, and then outputs sequences of eight frames containing a dog alone and their detection results. The postprocessing algorithm used here is the one proposed in [54]; it matches detections between every two consecutive frames based on the distance score shown in Equation (1).

where intersection over union (IoU) represents the spatial proximity of two bounding boxes, and the dot product of the two classification score vectors captures the semantic similarity of bounding boxes i and j.
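As a rough illustration of how the two terms combine, the sketch below assumes the score takes the form distance = 1 / (IoU · (V_i · V_j)), which is our reading of the Seq-Bbox matching formulation in [54]; Equation (1) remains the authoritative definition. The function names and the handling of zero similarity are assumptions.

```python
from typing import Sequence


def iou(a: Sequence[float], b: Sequence[float]) -> float:
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def seq_bbox_distance(box_i, scores_i, box_j, scores_j) -> float:
    """Assumed form: reciprocal of IoU times the dot product of class scores."""
    similarity = iou(box_i, box_j) * sum(p * q for p, q in zip(scores_i, scores_j))
    # Boxes that do not overlap or share no class evidence are infinitely far apart.
    return float("inf") if similarity == 0 else 1.0 / similarity
```

Under this form, a small distance means the two boxes overlap strongly and agree on the class, so they are likely to belong to the same object in consecutive frames.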

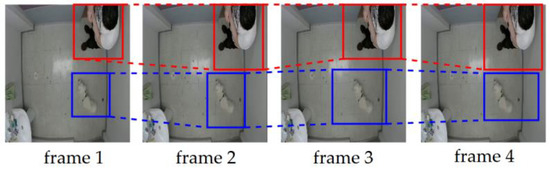

Through the matching of detections in a sequence, tubelets that represent sequences of bounding boxes specific to objects detected in a sequence are generated. Figure 2 shows examples of a tubelet of a human marked in red and a tubelet of a dog marked in blue.

Figure 2.

Examples of tubelets. The tubelet in red represents the human’s bounding boxes and the tubelet in blue represents the dog’s bounding boxes.

After the tubelets are generated, missed detections are recovered by linking tubelets: the bounding box in the last frame of each tubelet is matched with the bounding box in the first frame of every other tubelet that starts temporally later than the first one ends. Once two tubelets are linked, the missing boxes in between are generated using bilinear interpolation. Figure 3 shows an example of linking two tubelets (one in red and one in blue) and the missed detections generated through bilinear interpolation (in green).

Figure 3.

Example of linking two tubelets (one in red and one in blue) and generating missed detections (green dotted boxes).

Furthermore, to prevent matching tubelets of different objects, a threshold is used to limit the accepted temporal interval between two candidate tubelets: they are linked only if the number of frames between the last frame of the earlier tubelet and the first frame of the later tubelet is less than this threshold. The threshold value for our dataset was selected following the experimental method used in [54].
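The linking condition and the gap filling can be sketched as follows. This is a simplified stand-in, not the exact procedure of [54]: each box coordinate is interpolated linearly over the frame index, and `max_gap` is a hypothetical name for the temporal threshold, whose value is not assumed here.

```python
def can_link(last_frame: int, first_frame: int, max_gap: int) -> bool:
    """Link only if the later tubelet starts after the earlier one ends
    and fewer than max_gap frames are missing in between."""
    missing = first_frame - last_frame - 1
    return first_frame > last_frame and missing < max_gap


def interpolate_gap(last_frame, last_box, first_frame, first_box):
    """Generate boxes for the frames strictly between two linked tubelets.

    Each coordinate is interpolated linearly over the frame index
    (an assumption made for this sketch).
    """
    gap = first_frame - last_frame
    filled = {}
    for f in range(last_frame + 1, first_frame):
        t = (f - last_frame) / gap
        filled[f] = tuple((1 - t) * a + t * b for a, b in zip(last_box, first_box))
    return filled
```

For example, a tubelet ending at frame 2 and another starting at frame 5 leave frames 3 and 4 to be filled, with boxes placed one third and two thirds of the way between the two end boxes.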

Through Seq-Bbox matching postprocessing, missed detections are reduced in each sequence of 21 frames, and for each object, a tubelet is generated. Subsequently, tubelet matching and linking are applied between each current sequence and the previous one to generate any missed detections in between. Once the final tubelets are generated, they are analyzed to select the sequences of frames containing dog tubelets that do not overlap with human tubelets, as these represent sequences of images with a dog alone, and the selected frames are then regrouped into sequences of eight frames. Unlike in the previous unit, where sequences contained 21 frames, at this stage, the grouping of frames with a dog alone uses a sequence length of eight frames to match the input size of the EfficientNet-LSTM. Subsequently, the new sequences of length 8 are forwarded to the next unit with their corresponding dog tubelets. Meanwhile, the dog’s bounding boxes from each frame, which represent the dog’s location tracking, are saved as log data.
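A minimal sketch of the dog-alone selection and regrouping step is given below. It interprets "overlap" as temporal overlap (frames in which a human tubelet is also present), requires the eight frames of a group to be consecutive, and drops incomplete trailing groups; these choices are assumptions for illustration and are not specified in the text.

```python
def dog_alone_sequences(dog_frames, human_frames, length=8):
    """Group frame indices where only the dog is present into fixed-length runs."""
    # Frames covered by a dog tubelet but by no human tubelet.
    alone = sorted(set(dog_frames) - set(human_frames))

    # Split into runs of consecutive frame indices.
    runs, run = [], []
    for f in alone:
        if run and f != run[-1] + 1:
            runs.append(run)
            run = []
        run.append(f)
    if run:
        runs.append(run)

    # Cut each run into non-overlapping groups of `length` frames;
    # leftover frames at the end of a run are dropped (assumption).
    groups = []
    for r in runs:
        for start in range(0, len(r) - length + 1, length):
            groups.append(r[start:start + length])
    return groups
```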

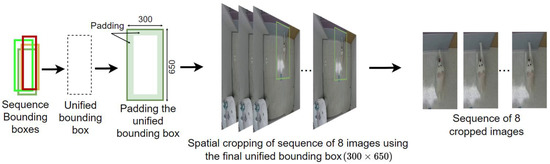

3.2.3. Dog-Centered Sequence Spatial Cropping

At this point, to improve the behavior recognition results, the images in each sequence are spatially cropped to put more focus on the dog. This helps concentrate learning on our specific target region to explore its contextual features, reduce the impact of less significant elements, and prevent the model from associating actions with specific locations [52,63]. Accordingly, this unit receives sequences of eight frames with their dog tubelets. Since only spatial cropping is performed at this level, the sequence length is maintained, and hence the output is a sequence of eight spatially cropped images.

Because the dog’s tubelets are already detected through the YOLOR model and Seq-Bbox matching, it is possible to use them for image cropping with a focus on the dog. Nevertheless, because every bounding box in the tubelet has a different size, directly using them to crop the dog in every frame of the sequence would result in a sequence of cropped images of different heights and widths. When resized to match the image input size of the CNN-LSTM model, the proportions of the dog would differ from one frame to the next in the sequence, which would negatively affect the optical flow and image feature extraction. Thus, to crop while maintaining the dog’s proportions, we propose a simple algorithm to unify the bounding boxes across each tubelet and use the unified bounding box to crop every frame in the sequence.

The algorithm proceeds by examining the minimum and maximum x and y coordinates of every bounding box in a sequence of eight frames, selecting the smallest of the minimum x and y values and the largest of the maximum x and y values. These values are used to define a unified bounding box that includes all areas covered by the bounding boxes in a sequence. Furthermore, to ensure that all cropped sequences have a similar size, the dataset was analyzed to approximate the largest possible height and width of a unified bounding box, and padding was applied to the unified box to reach that size. The algorithm first attempts to add padding equally on each side of the bounding box, vertically and horizontally; however, when the padding would exceed the image boundary on one side, the excess is added to the opposite side. Finally, the unified and padded bounding box is used to crop every image in the sequence to produce a sequence of eight dog-centered cropped images (dog-centered tubelet). The process of generating, padding, and using a unified bounding box to spatially crop images in a sequence is illustrated in Figure 4.

Figure 4.

Process of generating and utilizing a unified padded bounding box for spatial cropping on a sequence. In this scenario, padding is applied equally on each side of the box.
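The unification and padding steps described above can be sketched as follows. The target size and image size are passed as parameters because their actual values are not assumed here; the function names and the use of integer pixel coordinates are likewise illustrative choices.

```python
def _pad_axis(lo, hi, target, limit):
    """Pad the interval [lo, hi] to `target` length inside [0, limit]."""
    pad = max(0, target - (hi - lo))
    lo -= pad // 2               # try to pad equally on both sides
    hi += pad - pad // 2
    if lo < 0:                   # overflow on the low side: move excess to the high side
        hi -= lo
        lo = 0
    if hi > limit:               # overflow on the high side: move excess to the low side
        lo -= hi - limit
        hi = limit
    return max(0, lo), hi


def unified_padded_box(boxes, target_w, target_h, img_w, img_h):
    """Union of all (x1, y1, x2, y2) boxes in a sequence, padded to a fixed size."""
    x1 = min(b[0] for b in boxes)
    y1 = min(b[1] for b in boxes)
    x2 = max(b[2] for b in boxes)
    y2 = max(b[3] for b in boxes)
    x1, x2 = _pad_axis(x1, x2, target_w, img_w)
    y1, y2 = _pad_axis(y1, y2, target_h, img_h)
    return x1, y1, x2, y2
```

The same box is then used to crop all eight frames, so the dog keeps the same scale across the sequence even when its per-frame detections differ in size.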

The cropped images are then resized to match the input size of the CNN-LSTM before being forwarded to the behavior recognition module.

3.3. Dog Behavior Recognition Module

In this module, we used a CNN-LSTM model for dog behavior recognition, as it has been demonstrated to be efficient and perform well, making it popular for use in animal behavior recognition [49,50,51]. In particular, as shown in the comparative analysis presented in the work done by Yin et al. [51], when used as a spatial feature extractor, the EfficientNet [64] model was able to outperform other commonly used CNN models such as VGG16 and ResNet50 and matched the results of DenseNet169 in behavior recognition while using significantly fewer parameters. For this reason, we adopted a similar approach in our system, but instead of the EfficientNetV1, we used EfficientNetV2, which was introduced by Tan et al. [65] to solve some of the bottlenecks of its predecessor. This was accomplished through a combination of scaling and training-aware neural architecture search (NAS), with the extensive use of MBConv and fused MBConv in early layers to increase both training speed and parameter efficiency [52,64,65]. This makes it a suitable choice for our architecture because it can guarantee real-time application through fast inference. More specifically, the B0 variant was selected because it had the lowest number of parameters. Because using both RGB and optical flow can lead to improved results [52,66], in this module, we use a two-stream CNN-LSTM to recognize dog behaviors, using EfficientNetV2 and a bidirectional LSTM (Bi-LSTM). The Bi-LSTM was selected because of its good performance with time-series data and capability to integrate future and past information when predicting, which allows it to better define time boundaries for actions [52].

Accordingly, the sequence of eight cropped images received in this module is used for dog behavior recognition through a two-stream EfficientNetV2B0-Bi-LSTM model. The sequence is first input to the GMA [55] algorithm to extract the optical flow between every two consecutive cropped RGB images, so that each sequence of eight cropped images yields a sequence of seven optical flow images. The GMA algorithm was used because it provides one of the lowest end-point error (EPE) values on the Sintel dataset benchmark [67] while ensuring low latency. Subsequently, because of the mismatch between the sequence of seven flow frames and the sequence of eight cropped RGB images, the first cropped RGB frame is dropped so that both streams of data (RGB and flow) have the same sequence length of seven frames, which later guarantees that the LSTM layer receives a sequence whose time steps have a consistent feature vector size. At this stage, each sequence is fed to its respective EfficientNetV2 model, which was pretrained on similar data, to serve as a spatial feature extractor that learns appearance features from the RGB sequence and motion features from the optical flow sequence. For use as a feature extractor, the last dense layer of both EfficientNetV2 models was removed so that each model outputs a feature vector of size 1280 for each frame of its sequence. Subsequently, both sequences are fed to a fusion layer, where they are concatenated before being fed to the Bi-LSTM as one sequence of seven fused feature vectors, each of size 2560. At the Bi-LSTM level, the temporal features in the sequence are extracted, and a softmax layer is used to classify them and recognize the dog’s behavior, which is then saved in the database as log data and simultaneously forwarded to the next module. The behaviors recognized in this module are described in more detail in Section 4.1.
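The alignment and fusion bookkeeping can be illustrated without any deep learning library. The sketch below only tracks sequence lengths and vector sizes; the feature vectors are placeholder lists standing in for the EfficientNetV2 outputs, and the function names are hypothetical.

```python
FEATURE_DIM = 1280  # size of each per-frame feature vector, as stated above


def align_streams(rgb_frames, flow_frames):
    """Drop the first RGB frame so both streams have the same length."""
    if len(flow_frames) != len(rgb_frames) - 1:
        raise ValueError("expected one flow image per consecutive RGB pair")
    return rgb_frames[1:], flow_frames


def fuse_features(rgb_feats, flow_feats):
    """Concatenate appearance and motion features at each time step."""
    if len(rgb_feats) != len(flow_feats):
        raise ValueError("streams must have the same number of time steps")
    return [list(r) + list(f) for r, f in zip(rgb_feats, flow_feats)]


# Eight RGB frames and seven flow images become seven aligned time steps.
rgb, flow = align_streams(list(range(8)), list(range(7)))
# Placeholder features: one 1280-dimensional vector per time step and stream.
fused = fuse_features([[0.0] * FEATURE_DIM for _ in rgb],
                      [[1.0] * FEATURE_DIM for _ in flow])
```

Here `fused` holds seven vectors of size 2560, which is the shape of the sequence passed to the Bi-LSTM.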

3.4. Dog Behavior Summarization and Visualization Module

At this stage, the dog’s detected location and behavior are simultaneously received by this module to provide real-time feedback and saved as log data in the database. Simple postprocessing is applied by grouping the detected behaviors to define their occurrence, length, and temporal boundaries. In addition, in this module, data visualization techniques were used to convert the saved location information and summarize the behaviors from the log data into an effective visual representation. By doing so, the system can help owners and experts identify behavioral features, anomalies, and patterns to better understand the dog’s health and welfare.
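The grouping postprocessing can be sketched with `itertools.groupby`, which merges consecutive identical labels into episodes. The log format (time-ordered pairs of timestamp and behavior label) and the output field names are assumptions made for this example.

```python
from itertools import groupby


def group_behaviors(log):
    """Merge consecutive identical behaviors into episodes.

    `log` is a time-ordered list of (timestamp_seconds, behavior) entries.
    Each episode records its behavior, temporal boundaries, and the number
    of recognitions it spans.
    """
    episodes = []
    for behavior, items in groupby(log, key=lambda entry: entry[1]):
        items = list(items)
        episodes.append({
            "behavior": behavior,
            "start": items[0][0],
            "end": items[-1][0],
            "count": len(items),
        })
    return episodes
```

Counting the episodes per behavior gives the occurrence, while the start and end fields give the temporal boundaries from which the length follows.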

The following subsections present the different types of graphical and visual summarization used to cover different aspects of dog behavior that can help assess their state.

3.4.1. Poor-Welfare Indicator Monitoring

Ensuring that a dog benefits from good welfare is one of the main responsibilities of an owner, which is why it is important for them to understand when their dogs are showing signs of possible poor welfare, and to manage and improve it. While precisely recognizing this is a difficult task, previous research has defined some dogs’ behaviors as possible indicators of poor welfare when they are displayed in high frequencies. Accordingly, we selected potentially abnormal behaviors displayed by the dogs in our data collection experiments to demonstrate how they can be used to detect possible poor welfare. Table 2 shows these behaviors and the frequency or time threshold starting from where the behavior becomes significant for poor welfare [68].

Table 2.

Description of potentially abnormal behaviors and their thresholds.

These behaviors can potentially cause physical harm to the dog and disturbance to the community and may require quick intervention. Therefore, providing a visualization of their level of occurrence in real time represents an effective way for owners to monitor them. For this purpose, we employ gauge charts to display the measure of occurrence for each of those behaviors to visualize when they reach their respective thresholds, and owners can choose to be notified when that happens.
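As a rough illustration of the logic behind such a gauge, the sketch below compares a behavior's current measure with its threshold and reports how full the gauge is and whether a notification would be due. The threshold values are placeholders and are not the values in Table 2; the `Indicator` type and the `gauge_state` helper are likewise hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Indicator:
    unit: str         # "min" for durations, "count" for occurrences
    threshold: float  # placeholder value, NOT taken from Table 2


# Hypothetical thresholds, for illustration only.
INDICATORS = {
    "barking": Indicator(unit="min", threshold=1.0),
    "grooming": Indicator(unit="min", threshold=2.0),
    "wall bouncing": Indicator(unit="count", threshold=3.0),
}


def gauge_state(name: str, value: float) -> dict:
    """Return the values a gauge chart would need for one behavior."""
    indicator = INDICATORS[name]
    return {
        "name": name,
        "value": value,
        "unit": indicator.unit,
        "fill": min(value / indicator.threshold, 1.0),  # fraction of the threshold reached
        "alert": value >= indicator.threshold,          # notify once the threshold is reached
    }


print(gauge_state("barking", 1.5))
print(gauge_state("grooming", 0.5))
```

A plotting library can then render `value` against `threshold` for each behavior; the rendering itself is omitted here.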

3.4.2. Dog Movement Visual Summary

A dog’s movement and locomotion can provide a basis for assessing aspects of its health and welfare. In fact, factors such as ambulation patterns and restlessness levels have been associated with health and welfare issues such as physical pain, stress, and hyperactivity-related disorders [22,69,70,71]. In addition, some movement patterns, such as pacing and circling, are considered signs of anxiety in dogs [72,73]. Therefore, our system provides a visual summary of the dog’s spatial movement based on the logged location information to allow owners and dog experts to observe and look for similar signs.

The dog’s movement visual summary is presented in two graphs. The first is a heatmap generated using the saved bounding boxes of the dog to represent the areas covered by the dog’s movement to better understand the dog’s movements and activity level, whereas the second represents the trajectory of the dog’s movement. This second graph uses the centroid of the bounding boxes from the log data and connects them to each other using a color map in order to define the timeline of the trajectory. In addition, circles were used to highlight the beginning and end points of a dog’s movement.
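The data behind both graphs can be sketched as follows, assuming bounding boxes are logged as `(x_min, y_min, x_max, y_max)` pixel tuples. That box format, the helper names, and the tiny 4 × 4 grid are assumptions for illustration; the rendering with a color map is left to the plotting library.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), assumed log format


def accumulate_heatmap(boxes: List[Box], width: int, height: int) -> List[List[int]]:
    """Count, for every pixel, how many logged boxes covered it."""
    heat = [[0] * width for _ in range(height)]
    for x0, y0, x1, y1 in boxes:
        for y in range(max(y0, 0), min(y1, height)):
            for x in range(max(x0, 0), min(x1, width)):
                heat[y][x] += 1
    return heat


def centroids(boxes: List[Box]) -> List[Tuple[float, float]]:
    """Box centers in log order; connecting them gives the trajectory."""
    return [((x0 + x1) / 2, (y0 + y1) / 2) for x0, y0, x1, y1 in boxes]


# Three overlapping boxes moving diagonally across a 4 x 4 frame.
boxes = [(0, 0, 2, 2), (1, 1, 3, 3), (2, 2, 4, 4)]
heat = accumulate_heatmap(boxes, width=4, height=4)
path = centroids(boxes)
print(heat)
print(path[0], path[-1])  # start and end points of the trajectory
```

Pixels covered by more boxes correspond to areas where the dog stayed longer, and the index of each centroid in `path` can drive the color map that encodes the timeline.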

3.4.3. Summarization of Dog’s Displayed Behaviors

Two graphs were used to summarize and visualize the dogs’ displayed behaviors. The first is a nested doughnut chart that displays the percentage of active and static behaviors alongside the specific behaviors belonging to each category. It provides a general understanding of the dog’s displayed behaviors as a whole and allows changes in those behaviors to be observed. This is important, as increases or decreases in the frequencies at which some behaviors occur are a common sign of health and welfare issues [21,22,74]. The second is a scatterplot used as a timeline to visualize the dog’s behaviors and their durations. Based on this, patterns such as the phases of resting and sleeping, which are important welfare indicators [75], can be observed, in addition to the levels and types of activity displayed.
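The two levels of the nested doughnut chart can be derived from the logged behaviors as in the sketch below. It assumes that proportions are computed from total durations and that each behavior maps to one category; the `CATEGORY` mapping, the helper name, and the sample durations are hypothetical.

```python
from collections import Counter
from typing import Dict, Tuple

# Hypothetical mapping of specific behaviors to the two general categories.
CATEGORY = {
    "walking": "active",
    "barking": "active",
    "idle": "static",
    "lying down": "static",
}


def behavior_proportions(durations_s: Dict[str, float]) -> Tuple[Dict[str, float], Dict[str, float]]:
    """Return (category percentages, specific behavior percentages)."""
    total = sum(durations_s.values())
    if total <= 0:
        raise ValueError("no logged behavior time")
    per_category = Counter()
    for behavior, seconds in durations_s.items():
        per_category[CATEGORY[behavior]] += seconds
    category_pct = {c: 100 * s / total for c, s in per_category.items()}
    behavior_pct = {b: 100 * s / total for b, s in durations_s.items()}
    return category_pct, behavior_pct


# Illustrative durations in seconds for one session.
durations = {"walking": 60.0, "barking": 30.0, "idle": 90.0, "lying down": 120.0}
category_pct, behavior_pct = behavior_proportions(durations)
print(category_pct)
print(behavior_pct)
```

Comparing these percentages between sessions is one way to observe the increases or decreases mentioned above.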

4. Experimental Results

4.1. Data Collection and Datasets

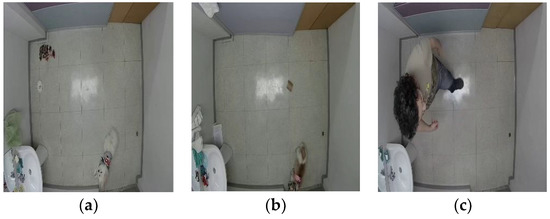

The data were collected in a laboratory with a CCTV camera (Hikvision DS-2CE56D0T-IRMM (2.8MM), Hangzhou, China) recording frames with a resolution of sampled at 21 fps, mounted on the ceiling at a direct top-down angle with the participation of two small dogs: an 8-year-old rescue shih tzu and a 2-year-old spitz. The experiments’ recordings were conducted in a room located in Sejong City, South Korea, with the consent and presence of the owners and under continuous direct or remote supervision when the dog was left alone inside the recording area. The first few visits were done to ensure that the dogs had time to discover and familiarize themselves with the environment to avoid causing them any stress when collecting data. In addition, the recordings were limited to a maximum of 20 to 30 min with breaks when needed to prevent any discomfort, water was provided along with the dog’s own toys and items used at home, and the room temperature was maintained at a comfortable level. The camera recordings started before the arrival of the dog, during the breaks, and after the experiments were conducted to collect diverse data, including frames of the empty room, humans with and without the dog to be used for the object detection model training. The setup of the room was changed slightly to produce a different background, and people were invited to the recording area on different occasions for daily life tasks for the purpose of obtaining more real-life data. Figure 5 shows examples of the collected data.

Figure 5.

Examples of image data collected: (a) example of data collected with a spitz; (b) example of data collected with a shih tzu; (c) example of data collected with a human.

The image data of both humans and dogs were labeled with bounding boxes for object detection training, and only the dog was labeled for behavior recognition in the data where it was alone. The dataset used for object detection contained 12,000 samples from the shih tzu, 16,000 samples from the spitz, and 15,000 samples from different people. To select the length of the image sequence used as input to the EfficientNet-LSTM model, we considered the analysis by Zhang et al. [66], who compared the results of a two-stream approach with different input sequence lengths on various datasets. Consequently, we determined that a sequence length of eight frames, which generally leads to good results, would work for our model, and this was further validated through our experimental results. Table 3 shows, for each behavior displayed by the dogs in the data collection experiments, its description and the number of eight-image sequences used for behavior recognition training. External stimuli were limited to avoid bias and to collect data on the dogs’ naturally displayed behaviors. Because some behaviors were less frequent than others, the samples of the frequent ones were capped, by selecting random samples from different experiments, to reduce the dataset imbalance.

Table 3.

Dog behavior description and dataset used for the behavior recognition model training.

4.2. Experimental Environment and Setup

The system implementation and the experiments were all conducted using Python 3.8 in an Anaconda environment on a computer running Windows 10 with an Intel i7 8700 K CPU, 32 GB of RAM, and an RTX 2080Ti GPU. The YOLOR object detection model was trained using the PyTorch library following the officially recommended environment, whereas the EfficientNetV2 and LSTM models were trained with the TensorFlow 2.8 library. The GMA code was based on the official implementation. Both object detection and behavior labeling were performed using the ViTBAT [76] software, and a Python script was implemented to convert the bounding box labels to the YOLO format. It is also worth mentioning that the YOLOR-P6 model was used to automatically generate object detection labels after it was trained on part of the data that were manually labeled to speed up the labeling process on the remaining data. The tool introduced in [77] was used to calculate the mean average precision (mAP) for object detection evaluation. The visualization tools were implemented using Matplotlib and Plotly libraries.

4.3. Performance Evaluation

4.3.1. Evaluation Metrics

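The following Equations (2)–(5) define the metrics used to evaluate the models in the second and third modules of our proposed system.

$$\mathrm{mAP} = \frac{1}{C}\sum_{i=1}^{C} \mathrm{AP}_i \tag{2}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{3}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{4}$$

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{5}$$

where mAP is the mean average precision, AP_i is the average precision of class i, C is the number of classes, true positive (TP) is the number of dog behaviors that are correctly classified as true, false positive (FP) is the number of falsely identified dog behaviors, and false negative (FN) is the number of behaviors incorrectly classified as false.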
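For readers who want to reproduce these metrics from raw counts, the standard definitions of precision, recall, F1 score, and mAP can be sketched as below. The counts and AP values in the example are illustrative only and are not results from this study.

```python
from typing import Sequence


def precision(tp: int, fp: int) -> float:
    """Share of predicted positives that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """Share of actual positives that were found."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if (p + r) else 0.0


def mean_average_precision(ap_per_class: Sequence[float]) -> float:
    """Mean of the per-class average precision values."""
    if not ap_per_class:
        raise ValueError("at least one class is required")
    return sum(ap_per_class) / len(ap_per_class)


# Illustrative counts for a single behavior class.
p = precision(tp=90, fp=10)
r = recall(tp=90, fn=30)
f1 = f1_score(p, r)
m_ap = mean_average_precision([0.9, 0.7])
print(round(p, 3), round(r, 3), round(f1, 3), round(m_ap, 3))
```

The average F1 score reported for a multi-class model is then the mean of the per-class F1 values.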

4.3.2. YOLOR-P6 Dog-Alone Sequence Retrieval Results

The data used for the YOLOR-P6 object detection model training included samples of labeled images of dogs and people and images of an empty room. The dataset was divided according to a ratio of 8:2, resulting in training samples with 34,400 images and the validation samples with 8600 images. YOLOR-P6 was trained for 300 epochs with an input size of pixels using default hyperparameters. To evaluate and compare YOLOR-P6 object detection with and without the seq-Bbox matching algorithm to confirm the effectiveness of the latter, the mean average precision (mAP) and latency in milliseconds were calculated, and the results are presented in Table 4.

Table 4.

Average precision (AP), mean average precision (mAP) and average inference time of YOLOR with and without Seq-Bbox matching postprocessing.

As seen in Table 4, the Seq-Bbox matching postprocessing improves the results of the YOLOR-P6 model, especially the AP of the dog, which reached 0.962, while adding only 0.19 ms of latency.

Furthermore, precision, recall, and F1 score were used to evaluate the effectiveness of the dog-alone retrieval module and confirm that it can accurately retrieve sequences of data containing a dog alone. We used a different set of sequences of eight images containing four different scenarios: a dog alone, one or more people, a dog with one or more people, and images of an empty room. The results, as seen in Table 5, confirm the efficacy of this method in differentiating between the different types of sequences and hence its efficacy in retrieving the sequences of data where the dog is alone.

Table 5.

YOLOR-based dog sequence retrieval performance using precision, recall and F1 score.

Although the average precision (AP) for people detection was relatively low, the retrieval results were significantly better. Some of the scenarios used in both evaluations (Table 4 and Table 5) contained multiple people, and in the most challenging ones, some of them stood too close to each other, which affected the object detection results. However, for the same scenarios, detecting at least one person is enough for the sequence retrieval module to classify the sequence correctly, especially in the dog-and-person scenarios, which explains the good retrieval results. Based on these results, we can confirm that this module performs well and provides accurate tracking of a dog’s location information and retrieval of sequences of a dog alone.
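The reason a single detected person suffices can be seen in a minimal sketch of a sequence-level decision rule. The rule below (presence of a class in any frame of the sequence) and the class names `"dog"` and `"person"` are assumptions made for illustration; the exact rule used by the retrieval module is not reproduced here.

```python
from typing import List, Set


def classify_sequence(frames: List[Set[str]]) -> str:
    """Assign one of the four scenarios to a sequence of per-frame detections.

    frames: one set of detected class names per image in the sequence.
    """
    has_dog = any("dog" in detected for detected in frames)
    has_person = any("person" in detected for detected in frames)
    if has_dog and has_person:
        return "dog with people"
    if has_person:
        return "people"
    if has_dog:
        return "dog alone"
    return "empty room"


# Two people stand close together and only one is detected, in a single frame:
# the sequence is still not retrieved as "dog alone".
crowded = [{"dog"}] * 7 + [{"dog", "person"}]
print(classify_sequence(crowded))
print(classify_sequence([{"dog"}] * 8))
```

Because the decision depends only on whether a class is present, missing some of several overlapping people lowers the detection AP without changing the scenario assigned to the sequence.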

4.3.3. Dog Behavior Recognition Results

In this section, we present the results of two experiments. The first is used to validate the effectiveness of the EfficientNetV2-LSTM model proposed in this paper using Precision, Recall and F1 score, and the second is to compare the results of the behavior recognition, with other models used for action-based summarization and behavior recognition. The data used in both the experiments are listed in Table 3. To train the models, the dataset was divided into training, validation, and testing data at a ratio of 7:2:1. EfficientNetV2 used for RGB images was trained for 60 epochs, whereas the one used for optical flow images was trained for 150 epochs, both of which were trained using the Adam optimizer with a learning rate of 0.00005 and an input size of . In contrast, the Bi-LSTM network consists of one layer of 60 hidden units, uses a dropout rate of 0.5, and 0.1 recurrent dropout, and it was trained for 200 epochs using the Adam optimizer with a learning rate of 0.0005 and categorical cross-entropy as a loss function. A softmax activation layer was used on top of the LSTM to classify the results and recognize the behaviors.

The results of the first experiment show that our proposed method achieved an average F1 score of 0.955, as shown in Table 6, confirming the performance of the proposed method. As seen from the results, such behaviors as “idle” have relatively lower accuracy, as it is sometimes hard to differentiate it from other behaviors with similar postures, such as “lying down” or “walking”, when the dog’s walking speed is low. Similarly, “standing up” and “wall bouncing” sometimes share similar features in posture and motion, which can lead to misclassification.

Table 6.

Proposed method behavior recognition performance using precision, recall and F1 score.

In the second experiment, the recognition results of our proposed method were compared with those of other recent methods for action and behavior recognition. The compared methods were the TDMap-CNN method that Elharrouss et al. [46] used in their action-based summarization method, VGG16-LSTM [49] and ResNet50-LSTM [50], both used for animal behavior recognition, and our proposed EfficientNet-LSTM with either full-size or cropped images as input data. The TDMap-CNN [46] was originally designed to generate a background using cosine similarity and to employ the generated background to segment and track people. However, in our case, as there were instances where the dog remained inactive for long periods, the generated background ended up including the dog, which affected segmentation. To address this issue, for each video used, we manually selected a portion in which the dog was in constant motion and used it to generate the background. On the other hand, the second and third models were trained as single-stream CNN-LSTMs, using only the RGB images and following the network and parameters available in their respective papers. The last two models are based on the two-stream EfficientNetV2-LSTM: the first was trained on the original full images, and the second on the cropped images obtained from the dog-centered sequence spatial cropping.

Table 7 compares the dog behavior recognition performance of each model. As shown in the table, the EfficientNet-LSTM model performed better than the other methods. In addition, the results showed the effectiveness of using dog-centered cropped images rather than full images as input data, which supports our initial assumption about the impact that the background can have on recognition and validates the benefit of the cropping unit included in the system. The TDMap-CNN showed a lower F1 score than the other models because the method relies heavily on motion information to classify actions, which leads to misclassifications for static behaviors that contain little motion. On the other hand, one-stream methods rely solely on appearance features, which hampers the recognition of behaviors with similar postures. This further confirms the effectiveness of exploiting both appearance and motion features when recognizing static and active behaviors, as is the case with the proposed method. Finally, the inference time required for the whole system to operate was measured, including YOLOR-P6 object detection with Seq-Bbox matching, sequence retrieval, spatial cropping, GMA-based optical flow extraction, and EfficientNet-LSTM inference. The total was 0.226 s/image, which confirms the capacity of the system to execute in real time.

Table 7.

Comparison of dog behavior recognition performance using F1 score.

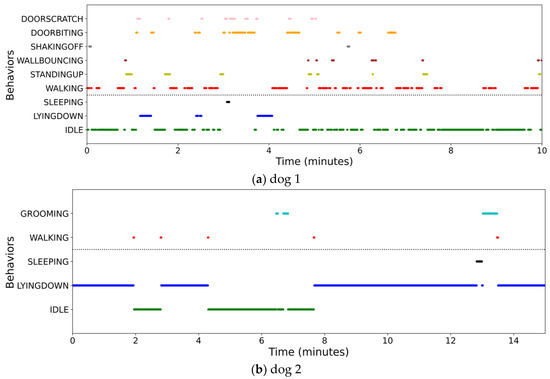

4.3.4. Dog Behavior Summarization and Visualization Results

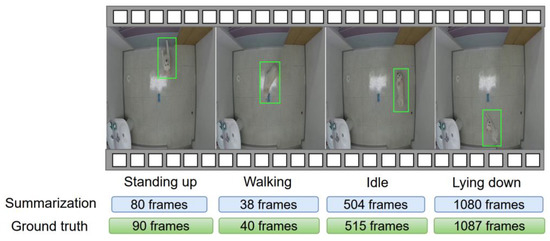

To demonstrate the effectiveness of the dog behavior-based summarization, a timeline of the recognized and summarized behaviors from a dog video is shown in Figure 6, along with the ground truth. As seen in the figure, our method delivers summarization results that are comparable with the displayed behaviors and their lengths, which further confirms its performance.

Figure 6.

Behavior-based video summarization results on video containing different dog behaviors.

The following figures demonstrate the visual summary of the logged dog’s behaviors and movement patterns provided by the system to help the owner and experts understand and monitor the dog’s health and welfare.

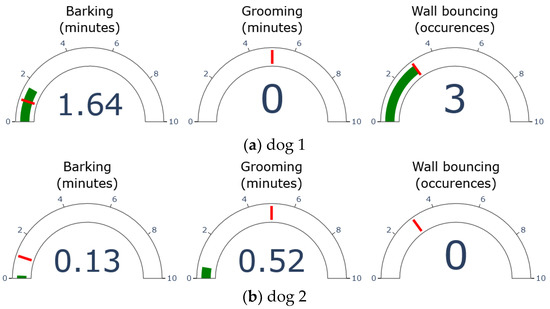

Figure 7 shows an example of the real-time visualization of poor-welfare indicators using a gauge chart for each behavior during a 5 min session. The threshold is represented by the red line in each chart based on the values in Table 2. The current value for each behavior is indicated in green and displayed numerically inside each gauge. Both barking and grooming are tracked as continuous data in minutes, while wall bouncing is a discrete value for the number of times it occurred.

Figure 7.

Gauge chart visualization results for monitoring of dog’s poor-welfare indicators: (a) example of scenario with dog 1; (b) example of scenario with dog 2.

The charts shown in Figure 7a,b correspond to the visualization of the shih tzu’s (dog 1) and spitz’s (dog 2) poor-welfare indicators, respectively. As seen in the figures, the first dog’s wall-bouncing and barking levels both exceeded the set threshold, indicating a higher possibility of a welfare issue. Due to its past as a rescue dog, dog 1 seems to be showing signs of anxiety in this scenario. In contrast, dog 2 displayed some barking and grooming, but both were at a normal level below their respective thresholds.

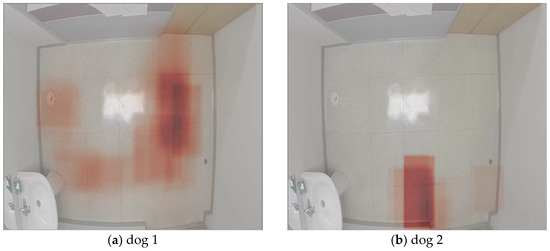

Figure 8 presents a summary of the dogs’ movements around the room as a heatmap to highlight the areas where they have spent significant amounts of time. Areas with a darker shade of red correspond to places where the dog spent most of its time.

Figure 8.

Heatmap visualization of areas where the dog spends longer time: (a) example of scenario with dog 1; (b) example of scenario with dog 2.

In the scenario in Figure 8, both heatmaps used the tracked location data of each dog for 3.5 min right after they were left alone in the room to help assess their reaction. These first minutes are important to monitor as the period during which dogs with some health issues are likely to display abnormal behavioral patterns [78,79]. Dog 1 in Figure 8a covered a wide area of the room in a short period of time, indicating a high level of restlessness and movement, which are indicators of stress and anxiety [80,81]. Figure 8b shows how dog 2 spent that time near the exit from where its owner left, which is considered a normal behavior of attachment that healthy dogs commonly display [79].

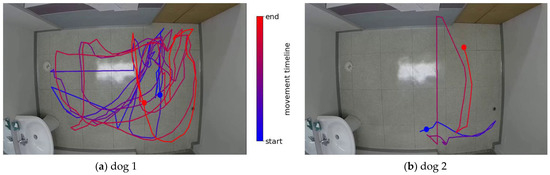

To further detail the dog’s movement summary, the visual tool shown in Figure 9 was used to show the dog-specific trajectory.

Figure 9.

Trajectory visualization tool for displaying dog’s movement patterns: (a) example of scenario with dog 1; (b) example of scenario with dog 2.

The scenarios shown in Figure 9 represent the same ones used in Figure 8 to detail both dogs’ movement trajectories starting from the blue circle and following the color map shown in the legend and ending in the red circle. As seen in Figure 9a, dog 1 shows signs of restlessness, pacing, and a few occurrences of circling, all of which indicate a level of anxiety and possible welfare issues. In contrast, dog 2 in Figure 9b shows a simpler trajectory with a lack of exploration and activity, which suggests a relaxed state.

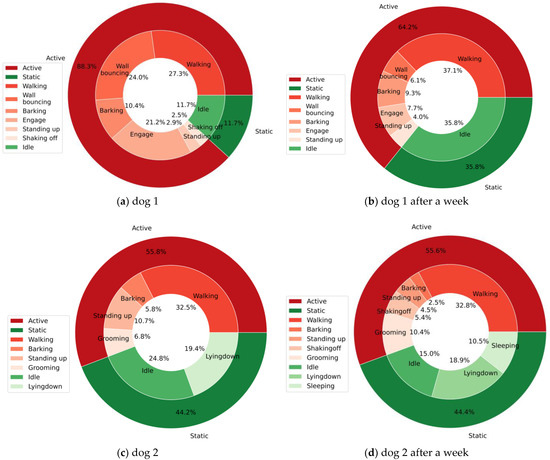

Another visualization provided by the system is the proportion of detected dog behaviors based on logged data using a doughnut chart. The chart shows the proportion of active and static behaviors as a general category and includes a nested level detailing the proportion of specific behaviors. Examples of these visual summaries are shown in Figure 10.

Figure 10.

Nested doughnut charts for visual summarization of dog’s static and active behaviors: (a,b) example of two scenarios a week apart with dog 1; (c,d) example of two scenarios a week apart with dog 2.

Figure 10a,b both represent the proportions of the behaviors displayed by dog 1. The first is from an early session during which the dog was still not well accustomed to the new environment, and the second is from a later session in which the proportion of static behaviors increased. In addition, we noticed a significant decrease in some indicators of poor welfare, such as wall bouncing, which represents a positive change and suggests that the dog’s welfare is improving. On the other hand, Figure 10c,d show that the levels of static and active behaviors displayed by dog 2 remained consistent, with no significant increase or decrease in activities that could indicate health and welfare issues.

Finally, Figure 11 shows the scatterplot used to draw a timeline of the dog’s behaviors to observe when and for how long behaviors occurred and to visualize the level of activity displayed. Through this timeline, it is possible to understand the dog’s behavioral patterns through time (in minutes) and monitor its level of rest and sleep, which are also important factors related to its health and welfare [75].

Figure 11.

Timeline for visualization of activity and resting behaviors displayed by a dog: (a) example of scenario with dog 1; (b) example of scenario with dog 2.

Figure 11a shows the timeline of dog 1’s behaviors, where the static ones are listed at the bottom and separated from the active ones by a dotted line. Dog 1 in this scenario displayed a high level of activity, and although there were times when it was idle and lying down, those behaviors were scattered, and active behaviors occurred throughout the session. In addition, the dog scratched and bit the door repeatedly, indicating attempts to escape due to a lack of comfort, and did not have continuous moments of rest. In contrast, dog 2, as seen in Figure 11b, displayed a good level of comfort during the session, spending most of its time lying down or idle, both static behaviors related to resting. Further monitoring of such rest behaviors can help owners monitor the dog’s health state because some health issues can cause an increase or decrease in resting behaviors.

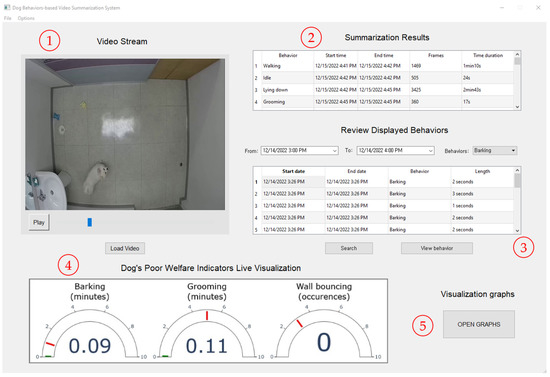

4.3.5. System Graphical User Interface

A graphical user interface, shown in Figure 12 and Figure 13 below, was implemented to allow the user to easily monitor the dog’s behaviors and generate the summarization and visualization of the results.

Figure 12.

Screenshot of the main interface of the proposed system: ① controls to load and start processing a video stream; ② table displaying the behaviors summarization results; ③ controls to search for logged dog behaviors and rewatch them; ④ live display of the visualization of dog’s poor-welfare indicators; ⑤ button to open the visualization graphs interface shown in Figure 13.

Figure 13.

Secondary interface of the proposed system accessible through the main interface from Figure 12. ① Controls used to select the period and visualization graphs to display; ② the generated graphs of the behavior summarization to help visualize and understand the dog’s state.

5. Conclusions

A dog’s displayed behaviors can serve as indicators that help us understand its health and welfare, which is why it is essential for owners to monitor and keep track of them to look out for relevant signs and behavioral patterns. To provide dog owners and experts with a suitable solution, we propose a real-time system that automatically summarizes dogs’ videos based on their behaviors and provides effective visual tools to help understand and analyze the dogs’ movement and behaviors. The system is composed of four consecutive modules that collect data, retrieve sequences of images where the dog is alone and spatially crop them, recognize the dog’s behaviors and save them together with location information as log data, and summarize and visualize the saved movement and behaviors. Dog image sequence retrieval and spatial cropping were performed using the YOLOR-P6 model followed by Seq-Bbox matching to track and save the dog’s location data, and dog-focused cropped images were then generated from each sequence to improve the behavior recognition. Subsequently, in the behavior recognition module, the GMA algorithm is used to extract the optical flow, and then both the RGB images and the optical flow are fed as input to a two-stream EfficientNetV2-Bi-LSTM that recognizes the displayed behavior. Finally, in the last module, the behaviors are summarized and visualization tools are used to generate effective visual summaries of the behaviors to help owners and experts understand the dog’s health and welfare and potentially discover issues. As demonstrated through the experimental results, our system achieves an F1 score of 0.955 in behavior recognition, which also supports its performance in summarization, outperforming other recent methods used for behavior recognition while executing in 0.23 s/image on average.
Furthermore, the experiments demonstrated the effectiveness of the dog’s behavior summarization and visualization in helping owners and experts understand and monitor the dog’s health and welfare. In our future work, we intend to introduce sound data into a multimodal system to detect vocalizations such as panting and whining, as they can also provide insight into the dog’s health. In addition, we will consider introducing a system for monitoring multiple dogs, and we will explore the use of an infrared camera to monitor and recognize dog behavior under low-light conditions during the night.

Author Contributions

Conceptualization, O.A., J.L., D.P. and Y.C.; methodology, O.A., J.L. and D.P.; validation, J.L., D.P. and Y.C.; data curation, O.A. and J.L.; writing (original draft preparation), O.A.; writing (review and editing), O.A., J.L. and D.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF), funded by the Ministry of Education (NRF-2020R1I1A3070835 and NRF-2021R1I1A3049475).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Min, D.; Lee, S.J. Factors associated with the presenteeism of single-person household employees in Korea: The 5th Korean working conditions survey (KWCS). J. Occup. Environ. Med. 2021, 63, 808–812. [Google Scholar] [CrossRef]

- Friedmann, E.; Gee, N.R.; Simonsick, E.M.; Studenski, S.; Resnick, B.; Barr, E.; Kitner-Triolo, M.; Hackney, A. Pet ownership patterns and successful aging outcomes in community dwelling older adults. Front. Vet. Sci. 2020, 7, 293. [Google Scholar] [CrossRef] [PubMed]

- Kim, J.; Chun, B.C. Association between companion animal ownership and overall life satisfaction in Seoul, Korea. PLoS ONE 2021, 16, e0258034. [Google Scholar] [CrossRef] [PubMed]

- KB Financial Group Management Institute. 2021 Korea Pet Animals Report (In Korean). Available online: https://www.kbfg.com/kbresearch/report/reportView.do?reportId=2000160 (accessed on 20 November 2022).

- Animal, Plant and Fisheries Quarantine and Inspection Agency. Results of a Survey on Public Awareness about Animal Protection in 2012 (Summary). (In Korean). Available online: https://www.korea.kr/common/download.do?tblKey=EDN&fileId=206324 (accessed on 23 November 2022).

- Lee, H.S. A study on perception and needs of urban park users on off-leash recreation area. KIEAE J. 2010, 10, 49–55. [Google Scholar]

- Christian, H.; Bauman, A.; Epping, J.N.; Levine, G.N.; McCormack, G.; Rhodes, R.E.; Richards, E.; Rock, M.; Westgarth, C. Encouraging dog walking for health promotion and disease prevention. Am. J. Lifestyle Med. 2018, 12, 233–243. [Google Scholar] [CrossRef]

- Boisvert, J.A.; Harrell, W.A. Dog walking: A leisurely solution to pediatric and adult obesity? World Leis. J. 2014, 56, 168–171. [Google Scholar] [CrossRef]

- Enmarker, I.; Hellzén, O.; Ekker, K.; Berg, A.G.T. Depression in older cat and dog owners: The Nord-Trøndelag health study (HUNT)-3. Aging Ment. Health 2015, 19, 347–352. [Google Scholar] [CrossRef]

- Lee, H.S.; Song, J.G.; Lee, J.Y. Influences of dog attachment and dog walking on reducing loneliness during the covid-19 pandemic in Korea. Animals 2022, 12, 483. [Google Scholar] [CrossRef] [PubMed]

- Kim, S.Y.; Youn, G.H. The Relationship between pet dog ownership and perception of loneliness: Mediation effects of physical health and social support. J. Inst. Soc. Sci. 2014, 25, 215–233. [Google Scholar] [CrossRef]

- Jobst, N. Number of Pet Dogs in South Korea 2010–2020. Available online: https://www.statista.com/statistics/661495/south-korea-dog-population/ (accessed on 19 November 2022).

- Ministry of Agriculture, Food and Rural Affairs. 8.6 Million Companion Animals Are Raised in 6.38 Million Households Nationwide. (In Korean). Available online: https://www.mafra.go.kr/bbs/mafra/68/248599/download.do (accessed on 19 November 2022).

- Kang, D. Companion Animal Market Is Booming... Visits to “Veterinary Hospital” Double in 3 Years. (In Korean). Available online: https://kdx.kr/news/view/148 (accessed on 15 November 2022).

- Consumers Union of Korea. The Average Medical Cost per Veterinary Hospital Is 84,000 Won, 8 out of 10 Consumers Bear Medical Expenses. Only 23% of Consumers Receive Information before Treatment. There Is a Need to Strengthen the Provision of Advance Information on Medical Expenses. (In Korean). Available online: https://kiri.or.kr/PDF/weeklytrend/20211206/trend20211206_10.pdf (accessed on 19 November 2022).

- Epstein, M.; Rodan, I.; Griffenhagen, G.; Kadrlik, J.; Petty, M.; Robertson, S.; Simpson, W. AAHA/AAFP pain management guidelines for dogs and cats. J. Am. Anim. Hosp. Assoc. 2015, 51, 67–84. [Google Scholar] [CrossRef]

- Azkona, G.; García-Belenguer, S.; Chacón, G.; Rosado, B.; León, M.; Palacio, J. Prevalence and risk factors of behavioural changes associated with age-related cognitive impairment in geriatric dogs. J. Small Anim. Pract. 2009, 50, 87–91. [Google Scholar] [CrossRef]

- Beaver, B.v.; Haug, L.I. Canine behaviors associated with hypothyroidism. J. Am. Anim. Hosp. Assoc. 2003, 39, 431–434. [Google Scholar] [CrossRef]

- Shihab, N.; Bowen, J.; Volk, H.A. Behavioral changes in dogs associated with the development of idiopathic epilepsy. Epilepsy Behav. 2011, 21, 160–167. [Google Scholar] [CrossRef] [PubMed]

- Storengen, L.M.; Boge, S.C.K.; Strøm, S.J.; Løberg, G.; Lingaas, F. A descriptive study of 215 dogs diagnosed with separation anxiety. Appl. Anim. Behav. Sci. 2014, 159, 82–89. [Google Scholar] [CrossRef]

- Ogata, N. Separation Anxiety in Dogs: What progress has been made in our understanding of the most common behavioral problems in dogs? J. Vet. Behav. Clin. Appl. Res. 2016, 16, 28–35. [Google Scholar] [CrossRef]

- Camps, T.; Amat, M.; Manteca, X. A review of medical conditions and behavioral problems in dogs and cats. Animals 2019, 9, 1133. [Google Scholar] [CrossRef] [PubMed]

- Rehn, T.; Keeling, L.J. The effect of time left alone at home on dog welfare. Appl. Anim. Behav. Sci. 2011, 129, 129–135. [Google Scholar] [CrossRef]

- De Assis, L.S.; Matos, R.; Pike, T.W.; Burman, O.H.P.; Mills, D.S. Developing diagnostic frameworks in veterinary behavioral medicine: Disambiguating separation related problems in dogs. Front. Vet. Sci. 2020, 6, 499. [Google Scholar] [CrossRef]

- Salonen, M.; Sulkama, S.; Mikkola, S.; Puurunen, J.; Hakanen, E.; Tiira, K.; Araujo, C.; Lohi, H. Prevalence, comorbidity, and breed differences in canine anxiety in 13,700 Finnish pet dogs. Sci. Rep. 2020, 10, 2962. [Google Scholar] [CrossRef] [PubMed]

- Scaglia, E.; Cannas, S.; Minero, M.; Frank, D.; Bassi, A.; Palestrini, C. Video analysis of adult dogs when left home alone. J. Vet. Behav. Clin. Appl. Res. 2013, 8, 412–417. [Google Scholar] [CrossRef]

- Duranton, C.; Bedossa, T.; Gaunet, F. Interspecific behavioural synchronization: Dogs present locomotor synchrony with humans. Sci. Rep. 2017, 7, 12384. [Google Scholar] [CrossRef] [PubMed]

- Rooney, N.J.; Bradshaw, J.W.S.; Robinson, I.H. A Comparison of dog-dog and dog-human play behaviour. Appl. Anim. Behav. Sci. 2000, 66, 235–248. [Google Scholar] [CrossRef]

- Sherman, B.L. Separation anxiety in dogs. Compend. Contin. Educ. Pract. Vet. 2008, 30, 27–42. [Google Scholar] [CrossRef]

- Ladha, C.; Hammerla, N.; Hughes, E.; Olivier, P.; Plötz, T. Dog’s life: Wearable activity recognition for dogs. In Proceedings of the 2013 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Zurich, Switzerland, 8–12 September 2013; Association for Computing Machinery: New York, NY, USA, 2013; pp. 415–418. [Google Scholar] [CrossRef]

- Kiyohara, T.; Orihara, R.; Sei, Y.; Tahara, Y.; Ohsuga, A. Activity recognition for dogs based on time-series data analysis. In Agents and Artificial Intelligence; Duval, B., van den Herik, J., Loiseau, S., Filipe, J., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 163–184.

- Kumpulainen, P.; Valldeoriola, A.; Somppi, S.; Törnqvist, H.; Väätäjä, H.; Majaranta, P.; Surakka, V.; Vainio, O.; Kujala, M.V.; Gizatdinova, Y.; et al. Dog activity classification with movement sensor placed on the collar. In Proceedings of the Fifth International Conference on Animal-Computer Interaction, Atlanta, GA, USA, 4–6 December 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 1–6.

- Aich, S.; Chakraborty, S.; Sim, J.S.; Jang, D.J.; Kim, H.C. The design of an automated system for the analysis of the activity and emotional patterns of dogs with wearable sensors using machine learning. Appl. Sci. 2019, 9, 4938.

- Chambers, R.D.; Yoder, N.C.; Carson, A.B.; Junge, C.; Allen, D.E.; Prescott, L.M.; Bradley, S.; Wymore, G.; Lloyd, K.; Lyle, S. Deep learning classification of canine behavior using a single collar-mounted accelerometer: Real-world validation. Animals 2021, 11, 1549.

- Fux, A.; Zamansky, A.; Bleuer-Elsner, S.; van der Linden, D.; Sinitca, A.; Romanov, S.; Kaplun, D. Objective video-based assessment of ADHD-like canine behavior using machine learning. Animals 2021, 11, 2806.

- Wang, H.; Atif, O.; Tian, J.; Lee, J.; Park, D.; Chung, Y. Multi-level hierarchical complex behavior monitoring system for dog psychological separation anxiety symptoms. Sensors 2022, 22, 1556.

- Kim, J.; Moon, N. Dog behavior recognition based on multimodal data from a camera and wearable device. Appl. Sci. 2022, 12, 3199.

- Choi, Y.; Atif, O.; Lee, J.; Park, D.; Chung, Y. Noise-robust sound-event classification system with texture analysis. Symmetry 2018, 10, 402.

- Hong, M.; Ahn, H.; Atif, O.; Lee, J.; Park, D.; Chung, Y. Field-applicable pig anomaly detection system using vocalization for embedded board implementations. Appl. Sci. 2020, 10, 6991.

- Simonyan, K.; Zisserman, A. Two-stream convolutional networks for action recognition in videos. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 568–576.

- Palestrini, C.; Minero, M.; Cannas, S.; Rossi, E.; Frank, D. Video analysis of dogs with separation-related behaviors. Appl. Anim. Behav. Sci. 2010, 124, 61–67.

- Landsberg, G.; Hunthausen, W.; Ackerman, L. Behavior Problems of the Dog and Cat, 3rd ed.; Saunders; Elsevier: New York, NY, USA, 2013; pp. 181–210.

- Goldman, D.B.; Curless, B.; Salesin, D.; Seitz, S.M. Schematic storyboarding for video visualization and editing. In Proceedings of the 2006 ACM Special Interest Group on Computer Graphics and Interactive Techniques Conference, Boston, MA, USA, 30 July–3 August 2006; Association for Computing Machinery: New York, NY, USA, 2006; pp. 862–871.

- Li, Y.; Merialdo, B.; Rouvier, M.; Linares, G. Static and dynamic video summaries. In Proceedings of the 19th ACM International Conference on Multimedia, Scottsdale, AZ, USA, 28 November–1 December 2011; pp. 1573–1576.

- Lee, Y.J.; Ghosh, J.; Grauman, K. Discovering important people and objects for egocentric video summarization. In Proceedings of the 2012 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 1346–1353.

- Elharrouss, O.; Almaadeed, N.; Al-Maadeed, S.; Bouridane, A.; Beghdadi, A. A combined multiple action recognition and summarization for surveillance video sequences. Appl. Intell. 2021, 51, 690–712.

- Tejero-De-Pablos, A.; Nakashima, Y.; Sato, T.; Yokoya, N.; Linna, M.; Rahtu, E. Summarization of user-generated sports video by using deep action recognition features. IEEE Trans. Multimed. 2018, 20, 2000–2011.

- Almaadeed, N.; Elharrouss, O.; Al-Maadeed, S.; Bouridane, A.; Beghdadi, A. A novel approach for robust multi human action recognition and summarization based on 3D convolutional neural networks. arXiv 2019.

- Chen, C.; Zhu, W.; Steibel, J.; Siegford, J.; Wurtz, K.; Han, J.; Norton, T. Recognition of aggressive episodes of pigs based on convolutional neural network and long short-term memory. Comput. Electron. Agric. 2020, 169, 105166.

- Chen, C.; Zhu, W.; Steibel, J.; Siegford, J.; Han, J.; Norton, T. Classification of drinking and drinker-playing in pigs by a video-based deep learning method. Biosyst. Eng. 2020, 196, 1–14.

- Yin, X.; Wu, D.; Shang, Y.; Jiang, B.; Song, H. Using an EfficientNet-LSTM for the recognition of single cow’s motion behaviours in a complicated environment. Comput. Electron. Agric. 2020, 177, 105707.

- Singh, B.; Marks, T.K.; Jones, M.; Tuzel, O.; Shao, M. A multi-stream bi-directional recurrent neural network for fine-grained action detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1961–1970.

- Wang, C.-Y.; Yeh, I.-H.; Liao, H.-Y.M. You only learn one representation: Unified network for multiple tasks. arXiv 2021, arXiv:2105.04206.

- Belhassen, H.; Zhang, H.; Fresse, V.; Bourennane, E.B. Improving video object detection by Seq-Bbox matching. In Proceedings of the 14th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Prague, Czech Republic, 25–27 February 2019; Volume 5, pp. 226–233.

- Jiang, S.; Campbell, D.; Lu, Y.; Li, H.; Hartley, R. Learning to estimate hidden motions with global motion aggregation. arXiv 2021, arXiv:2104.02409.

- Bo, Z.; Atif, O.; Lee, J.; Park, D.; Chung, Y. GAN-based video denoising with attention mechanism for field-applicable pig detection system. Sensors 2022, 22, 3917.

- Zhao, Y.; Cheng, J.; Zhou, W.; Zhang, C.; Pan, X. Infrared pedestrian detection with converted temperature map. In Proceedings of the 2019 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Lanzhou, China, 18–21 November 2019; pp. 2025–2031.

- Liu, K.Q.; Wang, J.Q. Fast dynamic vehicle detection in road scenarios based on pose estimation with Convex-Hull model. Sensors 2019, 19, 3136.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Wu, H.; He, S.; Deng, Z.; Kou, L.; Huang, K.; Suo, F.; Cao, Z. Fishery monitoring system with AUV based on YOLO and SGBM. In Proceedings of the 2019 Chinese Control Conference (CCC), Guangzhou, China, 27–30 July 2019; pp. 4726–4731.

- Sha, Z.; Feng, H.; Rui, X.; Zeng, Z. PIG tracking utilizing fiber optic distributed vibration sensor and YOLO. J. Lightwave Technol. 2021, 39, 4535–4541.

- Zhu, X.; Wang, Y.; Dai, J.; Yuan, L.; Wei, Y. Flow-guided feature aggregation for video object detection. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 408–417.

- Mishra, B.K.; Thakker, D.; Mazumdar, S.; Neagu, D.; Gheorghe, M.; Simpson, S. A novel application of deep learning with image cropping: A smart city use case for flood monitoring. J. Reliab. Intell. Environ. 2020, 6, 51–61.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking model scaling for convolutional neural networks. arXiv 2019, arXiv:1905.11946.

- Tan, M.; Le, Q.V. EfficientNetV2: Smaller models and faster training. arXiv 2021.

- Zhang, M.; Hu, H.; Li, Z.; Chen, J. Action detection with two-stream enhanced detector. Vis. Comput. 2022, 39, 1193–1204.

- Butler, D.J.; Wulff, J.; Stanley, G.B.; Black, M.J. A naturalistic open source movie for optical flow evaluation. In Proceedings of the European Conference on Computer Vision (ECCV), Florence, Italy, 7–13 October 2012; pp. 611–625.

- Stephen, J.M.; Ledger, R.A. An audit of behavioral indicators of poor welfare in kenneled dogs in the United Kingdom. J. Appl. Anim. Welf. Sci. 2005, 8, 79–96.

- Della Rocca, G.; Gamba, D. Chronic pain in dogs and cats: Is there place for dietary intervention with micro-palmitoylethanolamide? Animals 2021, 11, 952.

- Kaplun, D.; Sinitca, A.; Zamansky, A.; Bleuer-Elsner, S.; Plazner, M.; Fux, A.; van der Linden, D. Animal health informatics: Towards a generic framework for automatic behavior analysis. In Proceedings of the 12th International Conference on Health Informatics, Prague, Czech Republic, 22–24 February 2019.

- Bleuer-Elsner, S.; Zamansky, A.; Fux, A.; Kaplun, D.; Romanov, S.; Sinitca, A.; Masson, S.; van der Linden, D. Computational analysis of movement patterns of dogs with ADHD-like behavior. Animals 2019, 9, 1140.

- Konok, V.; Kosztolányi, A.; Rainer, W.; Mutschler, B.; Halsband, U.; Miklósi, Á. Influence of owners’ attachment style and personality on their dogs’ (Canis familiaris) separation-related disorder. PLoS ONE 2015, 10, e0118375.

- Raudies, C.; Waiblinger, S.; Arhant, C. Characteristics and welfare of long-term shelter dogs. Animals 2021, 11, 194.

- Polgár, Z.; Blackwell, E.J.; Rooney, N.J. Assessing the welfare of kennelled dogs: A review of animal-based measures. Appl. Anim. Behav. Sci. 2019, 213, 1–13.

- Owczarczak-Garstecka, S.C.; Burman, O.H.P. Can sleep and resting behaviours be used as indicators of welfare in shelter dogs (Canis lupus familiaris)? PLoS ONE 2016, 11, e0163620.

- Biresaw, T.A.; Nawaz, T.; Ferryman, J.; Dell, A.I. ViTBAT: Video tracking and behavior annotation tool. In Proceedings of the 2016 13th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Colorado Springs, CO, USA, 23–26 August 2016; pp. 295–301.

- Cartucho, J.; Ventura, R.; Veloso, M. Robust object recognition through symbiotic deep learning in mobile robots. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 2336–2341.

- Lund, J.D.; Jørgensen, M.C. Behaviour patterns and time course of activity in dogs with separation problems. Appl. Anim. Behav. Sci. 1999, 63, 219–236.

- Parthasarathy, V.; Crowell-Davis, S.L. Relationship between attachment to owners and separation anxiety in pet dogs (Canis lupus familiaris). J. Vet. Behav. Clin. Appl. Res. 2006, 1, 109–120.

- Sherman, B.L.; Mills, D.S. Canine anxieties and phobias: An update on separation anxiety and noise aversions. Vet. Clin. N. Am. Small Anim. Pract. 2008, 38, 1081–1106.

- Kaur, J.; Seshadri, S.; Golla, K.H.; Sampara, P. Efficacy and safety of standardized ashwagandha (Withania somnifera) root extract on reducing stress and anxiety in domestic dogs: A randomized controlled trial. J. Vet. Behav. Clin. Appl. Res. 2022, 51, 8–15.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).