Adaptive Reflection Detection and Control Strategy of Pointer Meters Based on YOLOv5s

Abstract

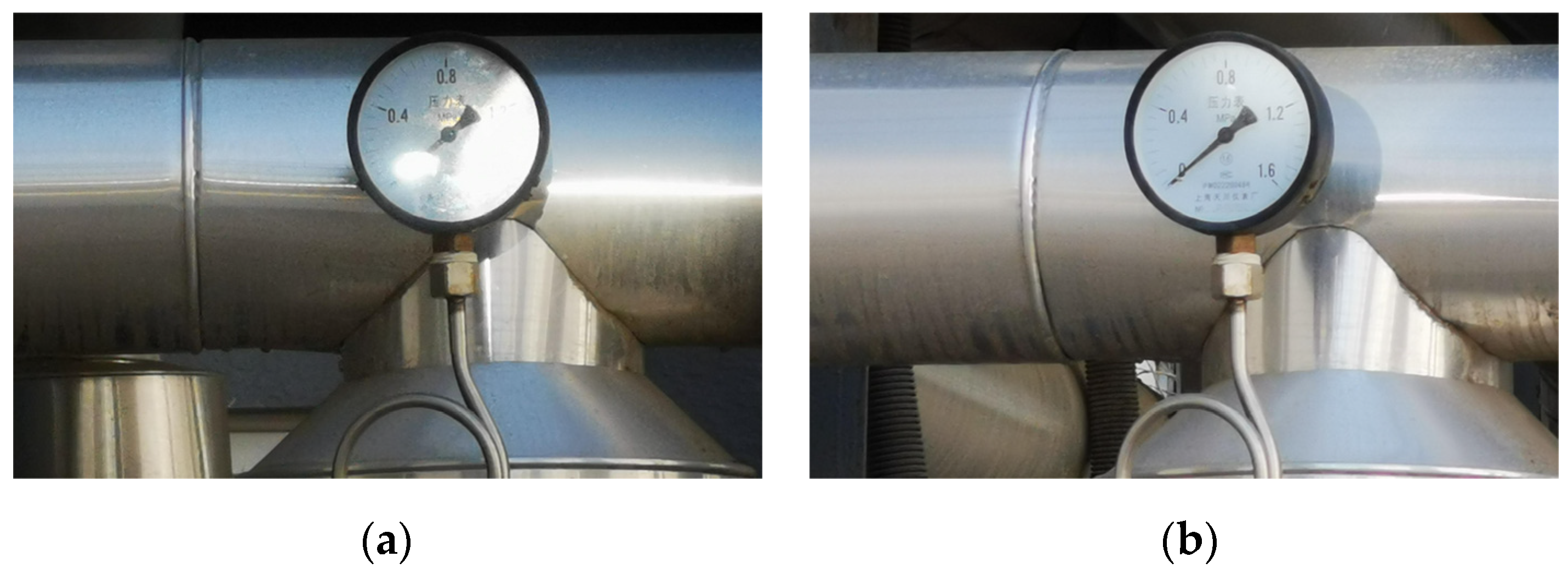

1. Introduction

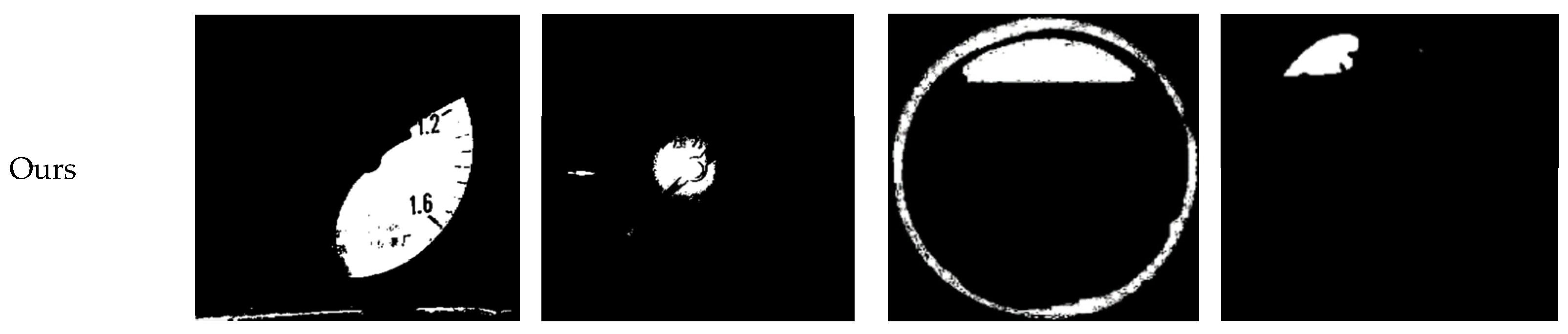

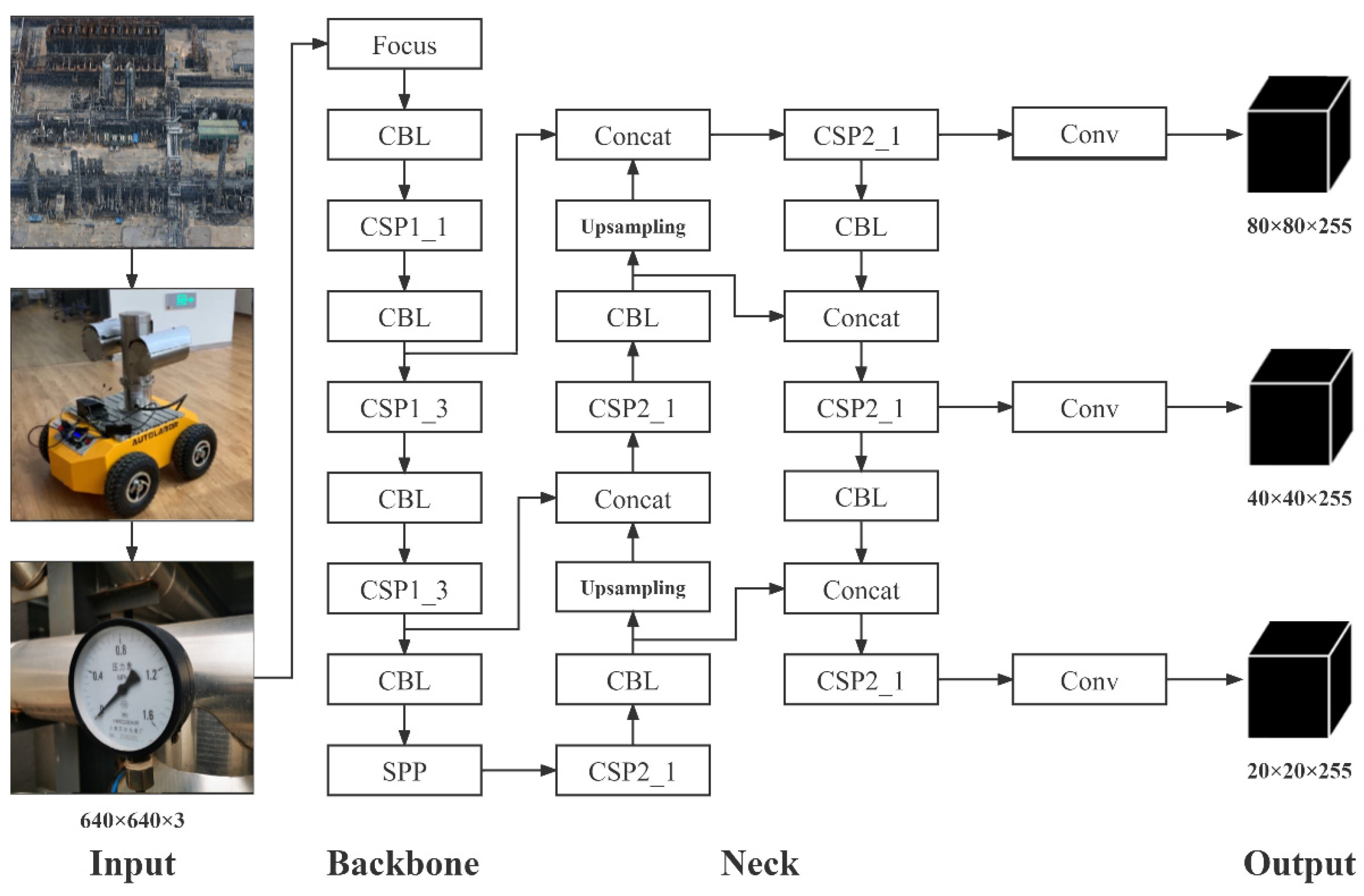

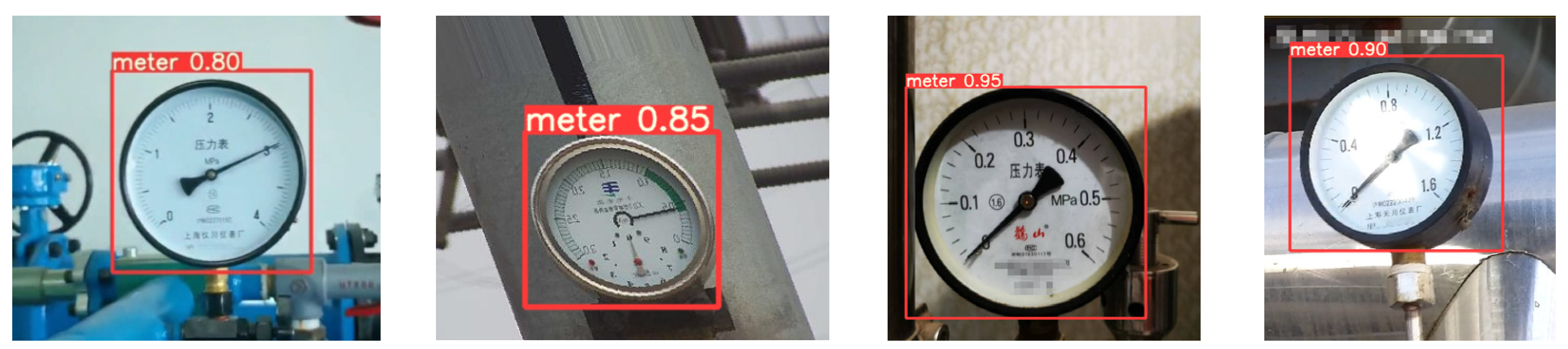

2. Pointer Meter Detection Based on Deep Learning

2.1. Deep Learning Image Acquisition

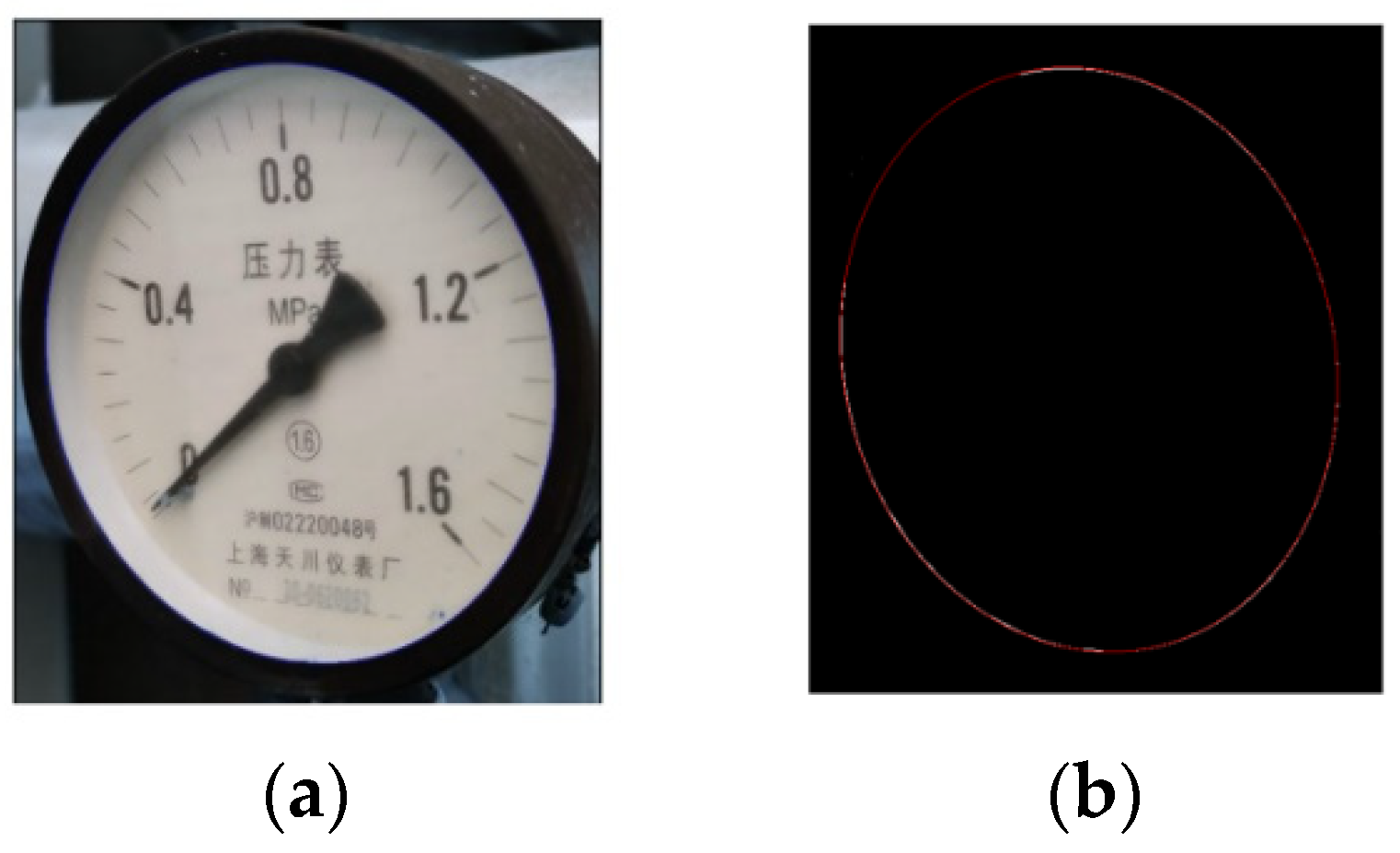

2.2. Image Perspective Transformation
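For reference, a planar perspective transformation is usually written as the standard eight-parameter homography below, mapping a source pixel (u, v) to a corrected position (x, y). That this section uses exactly this parameterization is an assumption; the symbols are illustrative.

```latex
\begin{bmatrix} x' \\ y' \\ w' \end{bmatrix}
=
\begin{bmatrix} h_{11} & h_{12} & h_{13} \\ h_{21} & h_{22} & h_{23} \\ h_{31} & h_{32} & 1 \end{bmatrix}
\begin{bmatrix} u \\ v \\ 1 \end{bmatrix},
\qquad
x = \frac{h_{11}u + h_{12}v + h_{13}}{h_{31}u + h_{32}v + 1},
\qquad
y = \frac{h_{21}u + h_{22}v + h_{23}}{h_{31}u + h_{32}v + 1}
```

Each point correspondence (u, v) → (x, y) yields two linear equations in the eight unknowns, so four correspondences with no three points collinear are enough to determine the matrix.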

3. Improved k-Means Algorithm Based on Curve Fitting

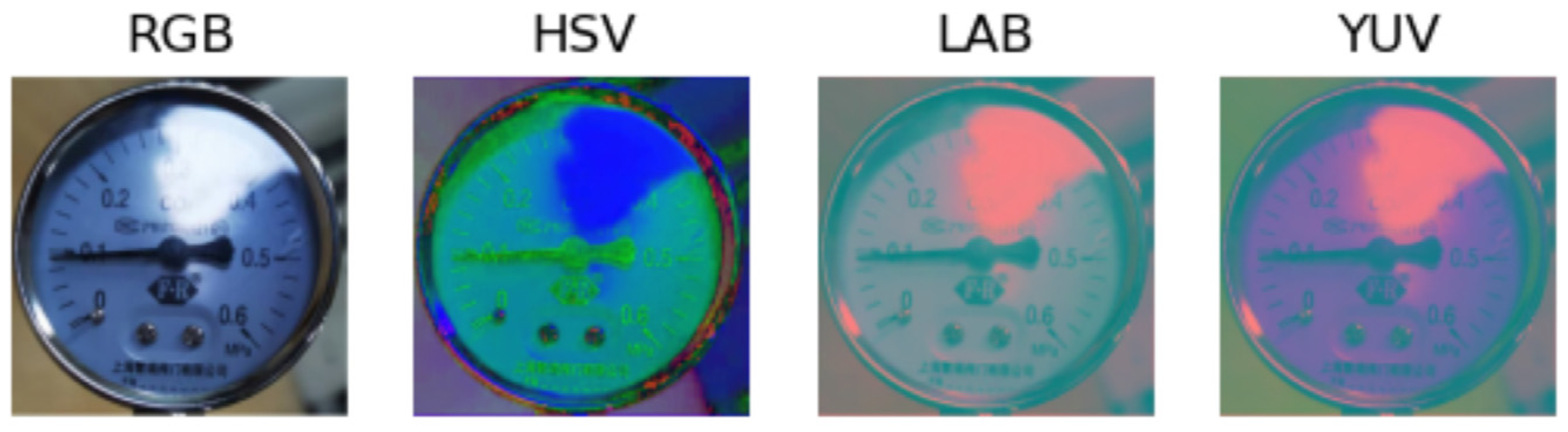

3.1. Color Model

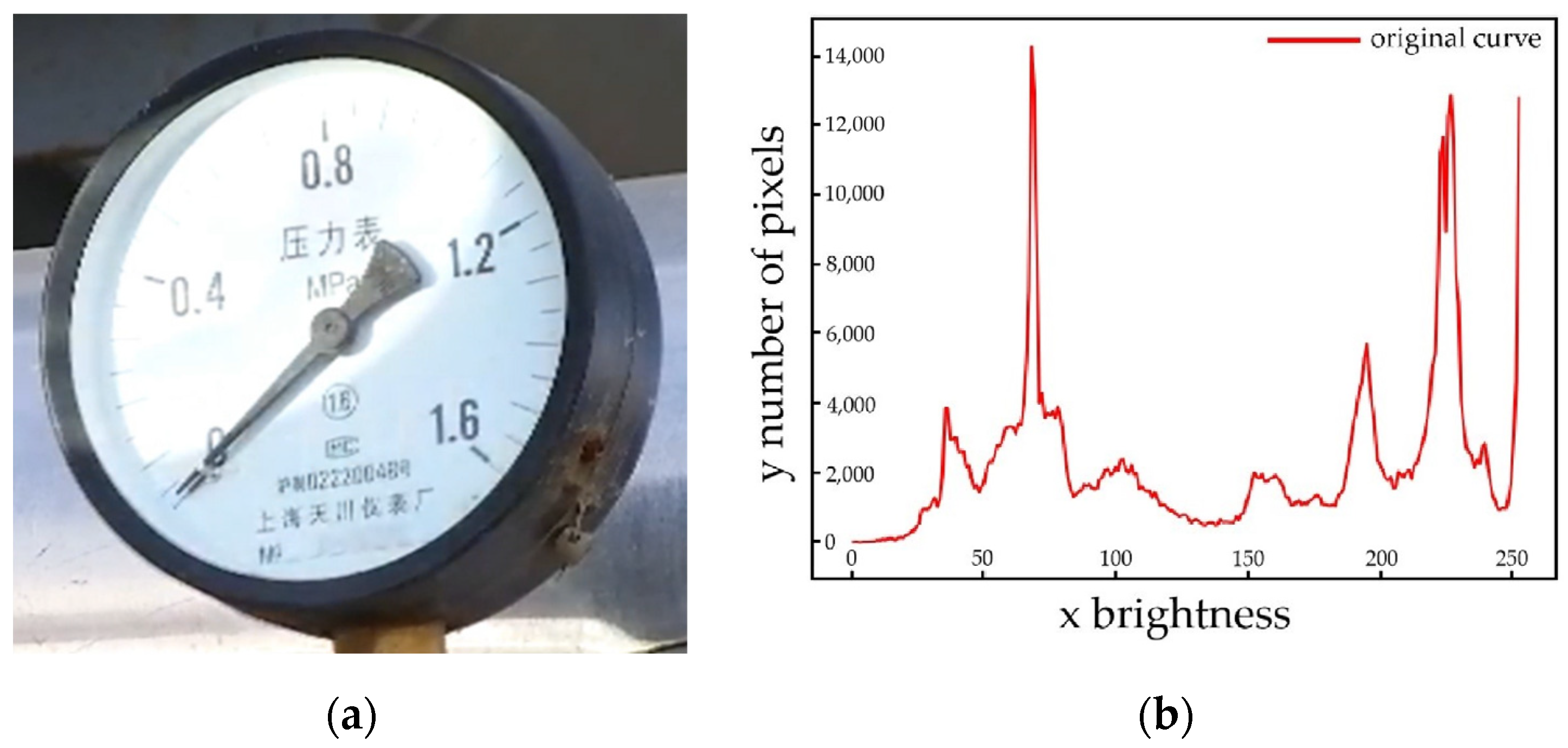

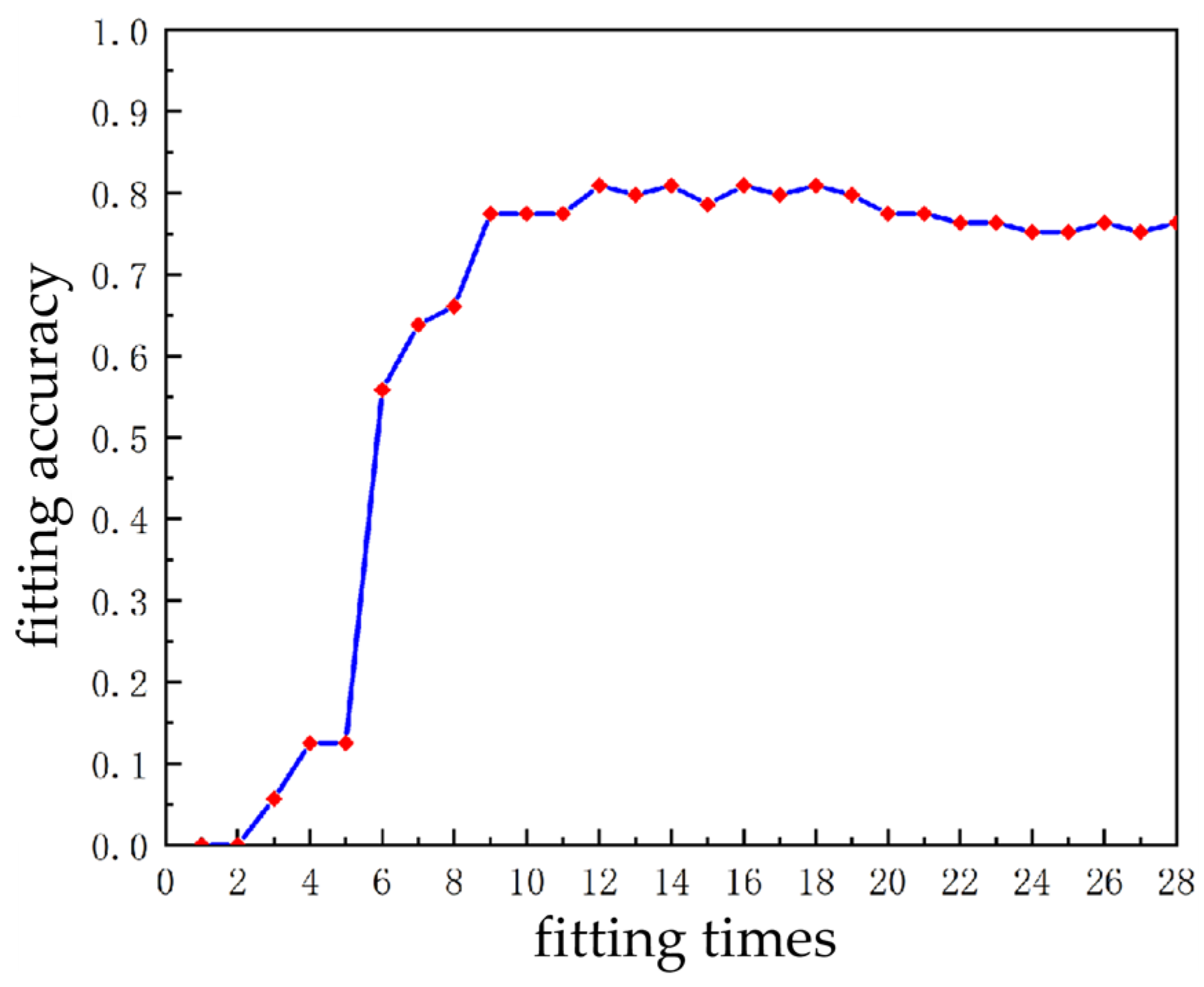

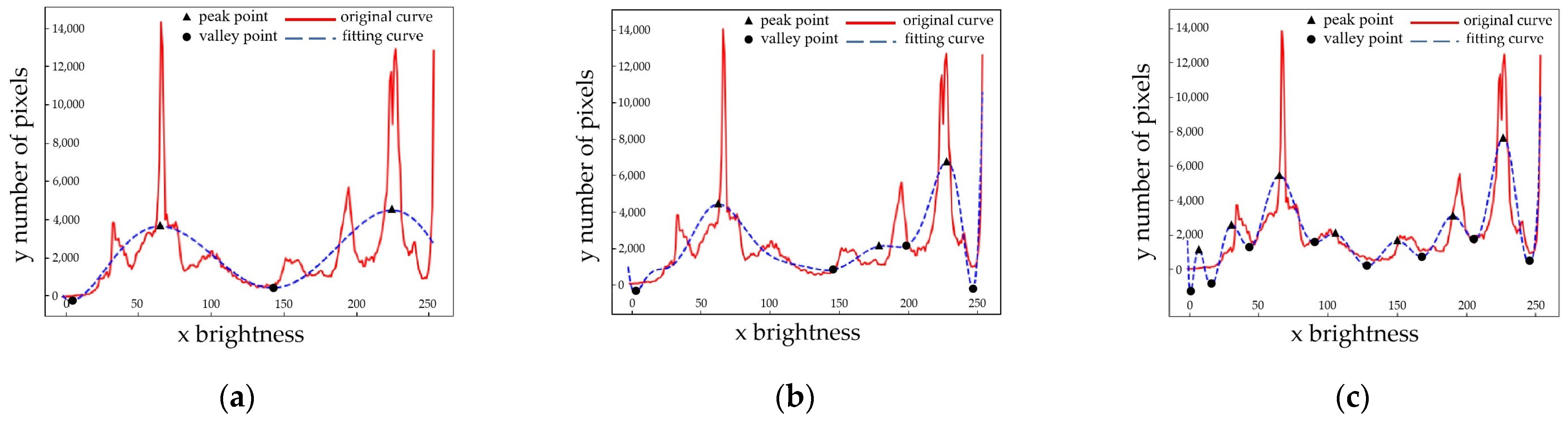
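The improved algorithm works on the brightness (Y) component of the YUV color model. For reference, the standard conversion from RGB based on the ITU-R BT.601 luma weights is shown below; whether the paper uses exactly these coefficients is an assumption. Only Y is needed to build the brightness histogram.

```latex
\begin{aligned}
Y &= 0.299\,R + 0.587\,G + 0.114\,B \\
U &= 0.492\,(B - Y) \\
V &= 0.877\,(R - Y)
\end{aligned}
```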

3.2. Polynomial Curve Fitting
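The brightness histogram is smoothed with a 12th-order polynomial. Assuming an ordinary least-squares fit (the fitting criterion is not stated in this section), with h_i the number of pixels at brightness level i, the coefficients are:

```latex
p(x) = \sum_{j=0}^{n} a_j\, x^{j}, \qquad n = 12,
\qquad
\hat{\mathbf{a}} = \arg\min_{\mathbf{a}} \sum_{i=0}^{255} \bigl(h_i - p(i)\bigr)^2
= \left(X^{\mathsf{T}} X\right)^{-1} X^{\mathsf{T}} \mathbf{h},
\qquad X_{ij} = i^{\,j}
```

For n = 12 on levels 0 to 255 the Vandermonde matrix X is badly conditioned, so in practice the brightness axis is commonly rescaled to [-1, 1] before solving.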

3.3. Principle and Improvement of k-Means Algorithm

3.3.1. Principle of k-Means Algorithm

The traditional k-means algorithm has two main shortcomings:

- (1) The k value is uncertain. In the traditional k-means algorithm, k is specified manually, yet the appropriate k differs from object to object, and an improper choice of k degrades the clustering result.

- (2) The clustering result is sensitive to the initial cluster centers. These are usually chosen at random, which makes the outcome largely a matter of chance and lets the algorithm easily converge to a local optimum instead of the global one.
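To make both shortcomings concrete, here is a minimal NumPy sketch of the traditional (Lloyd's) k-means on 1-D brightness values. `kmeans_1d` is a hypothetical helper, not the authors' code: it either draws k random initial centers, as the traditional algorithm does, or accepts explicit ones so the effect of initialization can be compared.

```python
import numpy as np


def _assign(values, centers):
    # Index of the nearest center for every brightness value.
    return np.argmin(np.abs(values[:, None] - centers[None, :]), axis=1)


def kmeans_1d(values, k=None, init_centers=None, max_iter=100, seed=None):
    """Lloyd's k-means on 1-D brightness values.

    Pass either k (random initialization, as in the traditional
    algorithm) or an explicit array of initial centers.
    """
    values = np.asarray(values, dtype=float)
    if init_centers is None:
        # Traditional k-means: k is fixed by hand and the initial
        # centers are k samples drawn at random without replacement.
        rng = np.random.default_rng(seed)
        centers = rng.choice(values, size=k, replace=False)
    else:
        centers = np.asarray(init_centers, dtype=float)
    for _ in range(max_iter):
        labels = _assign(values, centers)
        # Move each center to the mean of its members; an empty
        # cluster keeps its previous position.
        new_centers = np.array([
            values[labels == j].mean() if np.any(labels == j) else centers[j]
            for j in range(centers.size)
        ])
        converged = np.allclose(new_centers, centers)
        centers = new_centers
        if converged:
            break
    return centers, _assign(values, centers)
```

For example, with ten pixels each at brightness 0, 100 and 200, initial centers [0, 100, 200] recover the three levels, whereas [0, 0.5, 150] converge immediately to [0, 0.5, 150]: the middle cluster stays empty and the 100 and 200 pixels are merged into one cluster, a local optimum.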

3.3.2. Improved k-Means Algorithm

- (1) The k value is determined adaptively from the peak information of the fitted curve.

- (2) The initial cluster centers are determined from the peak and valley information of the fitted curve.
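The adaptive choice of k and of the initial centers can be sketched as below. Interior peaks and valleys are detected as sign changes in the first difference of the sampled fitted curve, and the feature point set is formed from them plus the two end-points. How the paper maps feature points to initial centers is not stated in this section, so the mapping used here (k = m, one initial center at each peak) is an assumption, as are the function names `curve_extrema` and `adaptive_init`.

```python
import numpy as np


def curve_extrema(y):
    """Return indices of the interior peaks and valleys of a sampled curve."""
    s = np.sign(np.diff(np.asarray(y, dtype=float)))
    # Peak: slope changes from positive to negative.
    peaks = np.where((s[:-1] > 0) & (s[1:] < 0))[0] + 1
    # Valley: slope changes from negative to positive.
    valleys = np.where((s[:-1] < 0) & (s[1:] > 0))[0] + 1
    return peaks, valleys


def adaptive_init(y):
    """Derive k and initial cluster centers from a fitted brightness curve.

    Assumed mapping (not taken from the paper): k equals the number of
    peaks m, and each initial center sits at one peak's brightness level.
    """
    peaks, valleys = curve_extrema(y)
    last = len(y) - 1
    # Feature point set: peaks, valleys and the two end-points, sorted.
    features = np.unique(np.concatenate(([0], peaks, valleys, [last])))
    k = len(peaks)
    init_centers = peaks.astype(float)
    return k, init_centers, features
```

For a test curve cos(2πx/100) sampled at x = 0, 1, ..., 255, this gives k = 2, initial centers at 100 and 200, and the feature point set {0, 50, 100, 150, 200, 250, 255}. A curve with no interior peak yields k = 0, which a caller would need to handle.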

The improved algorithm proceeds as follows:

- (1) The image is converted to the YUV color model, and the histogram of its brightness (Y) component is calculated.

- (2) The brightness histogram is smoothed by fitting it with a 12th-order polynomial curve.

- (3) The peaks and valleys of the fitted curve are counted and, together with the two end-points, form the feature point set.

- (4) The optimal number of clusters k is determined from the number of peaks m.

- (5) The initial cluster centers are determined from the feature point set.

- (6) Finally, clustering yields k clusters, i.e., k brightness levels, and the reflective area is identified as the region with the highest brightness level.
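The six steps above can be strung together as in the following minimal NumPy sketch. It is not the authors' implementation; it assumes BT.601 luma weights for Y, an ordinary least-squares fit via `numpy.polynomial.Polynomial.fit`, k equal to the number of interior peaks, initial centers at the peak brightness levels (valleys and end-points are not used in this simplified initialization), and a single-cluster fallback when the fitted curve has no interior peak. The function name `reflective_mask` is hypothetical.

```python
import numpy as np


def reflective_mask(rgb, degree=12, max_iter=100):
    """Sketch of steps (1)-(6); returns (boolean mask, k)."""
    rgb = np.asarray(rgb, dtype=float)
    # (1) Brightness (Y) channel, assuming BT.601 weights, and its histogram.
    lum = 0.299 * rgb[..., 0] + 0.587 * rgb[..., 1] + 0.114 * rgb[..., 2]
    levels = np.clip(np.rint(lum), 0, 255).astype(np.int64)
    hist = np.bincount(levels.ravel(), minlength=256).astype(float)
    # (2) Smooth the histogram with a least-squares polynomial of given degree.
    x = np.arange(256)
    fitted = np.polynomial.Polynomial.fit(x, hist, degree)(x)
    # (3)-(5) Interior peaks of the fitted curve give k and initial centers.
    s = np.sign(np.diff(fitted))
    peaks = np.where((s[:-1] > 0) & (s[1:] < 0))[0] + 1
    if peaks.size == 0:
        # Fallback (assumption): one cluster at the curve maximum.
        peaks = np.array([np.argmax(fitted)])
    centers = peaks.astype(float)
    # (6) k-means on pixel brightness, starting from those centers.
    values = lum.ravel()
    for _ in range(max_iter):
        labels = np.argmin(np.abs(values[:, None] - centers[None, :]), axis=1)
        new_centers = np.array([
            values[labels == j].mean() if np.any(labels == j) else centers[j]
            for j in range(centers.size)
        ])
        converged = np.allclose(new_centers, centers)
        centers = new_centers
        if converged:
            break
    labels = np.argmin(np.abs(values[:, None] - centers[None, :]), axis=1)
    # Reflective area: the brightest cluster that actually contains pixels.
    occupied = np.unique(labels)
    brightest = occupied[np.argmax(centers[occupied])]
    return (labels == brightest).reshape(lum.shape), centers.size
```

The reflective area is taken as the brightest non-empty cluster because an empty cluster can keep a high center value without containing any pixels. In 1-D nearest-center assignment, the brightest pixel in the image always falls into that cluster.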

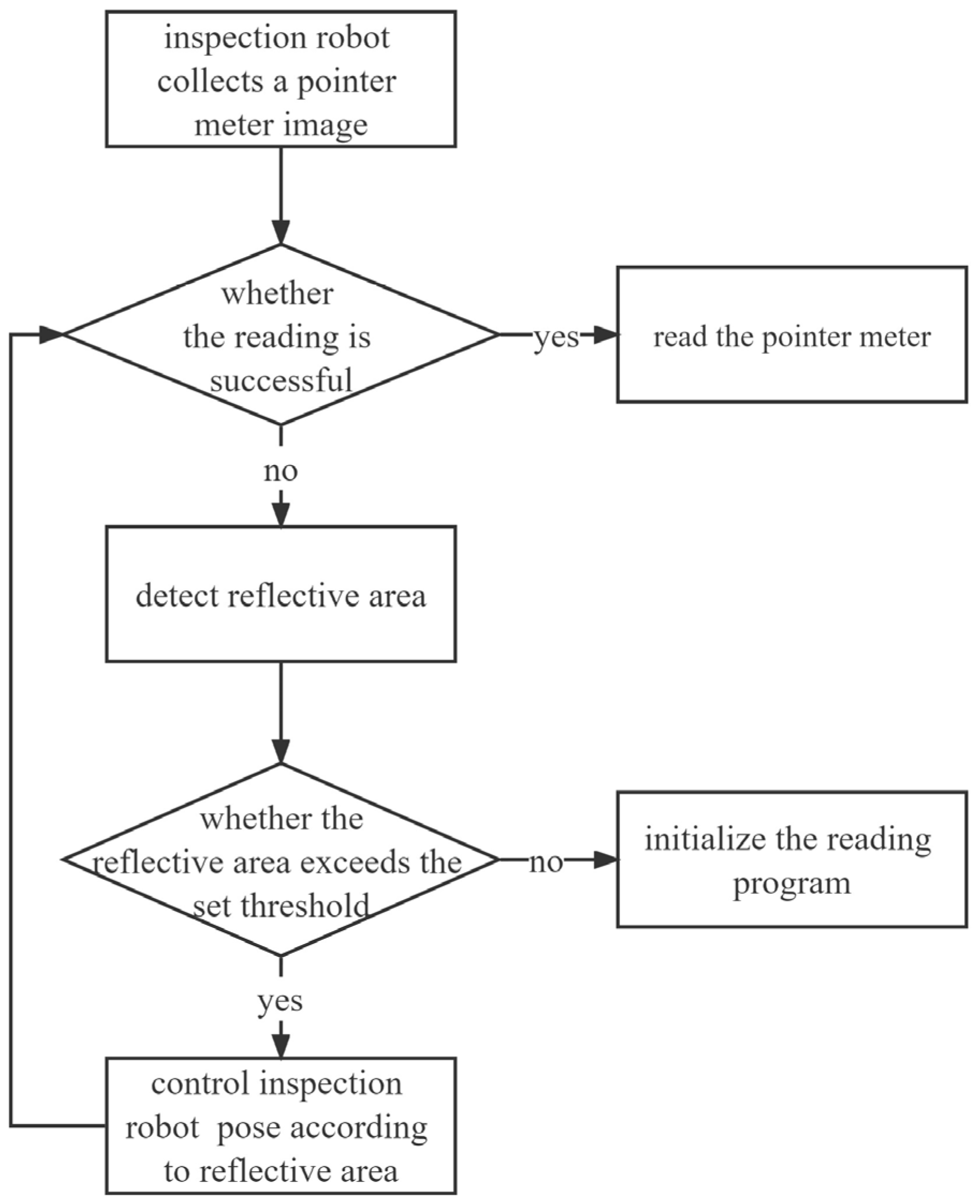

4. Inspection Robot Pose Adjustment

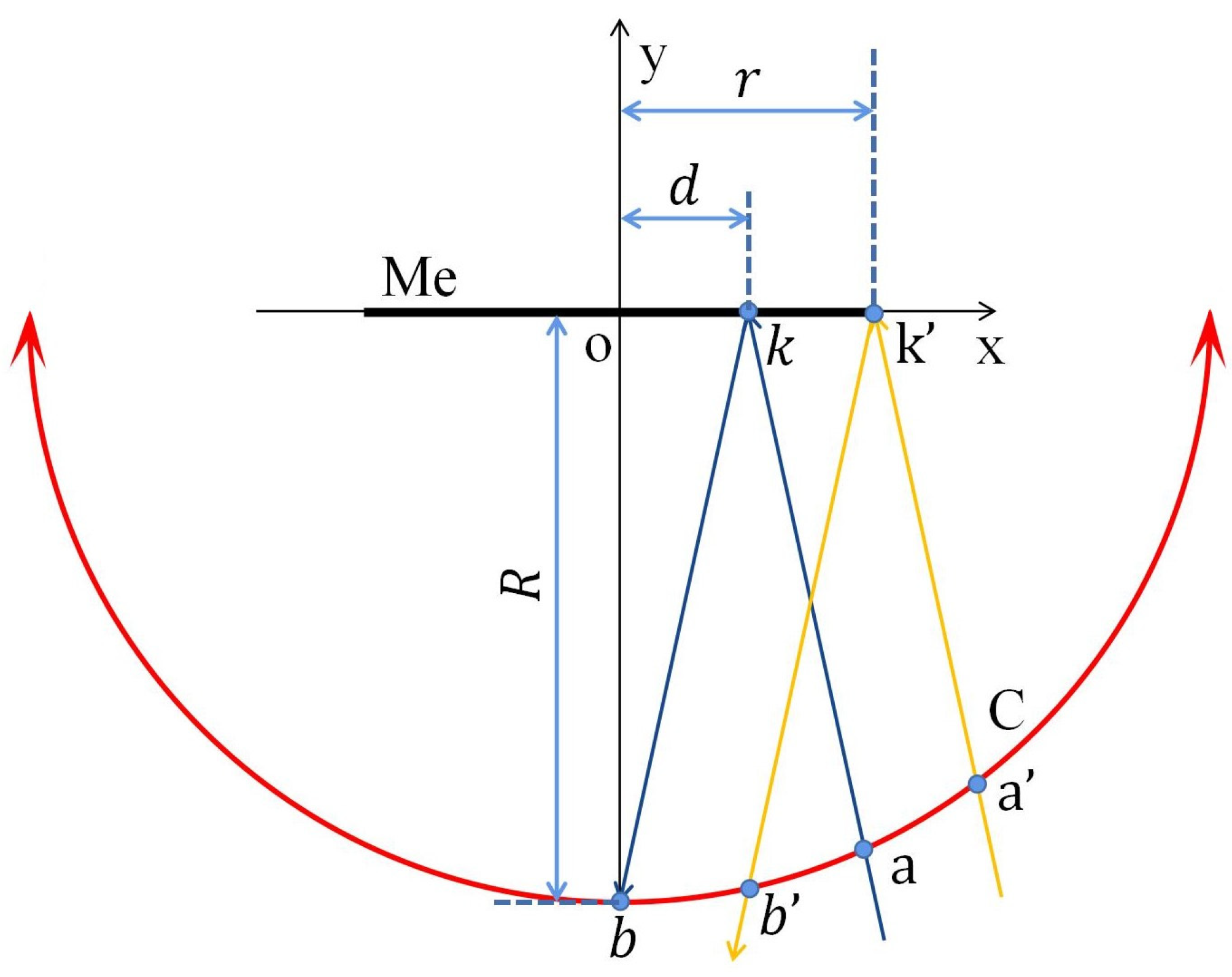

4.1. Determination of Motion Direction

4.2. Determination of Moving Distance

5. Experimental Platform and Test Verification

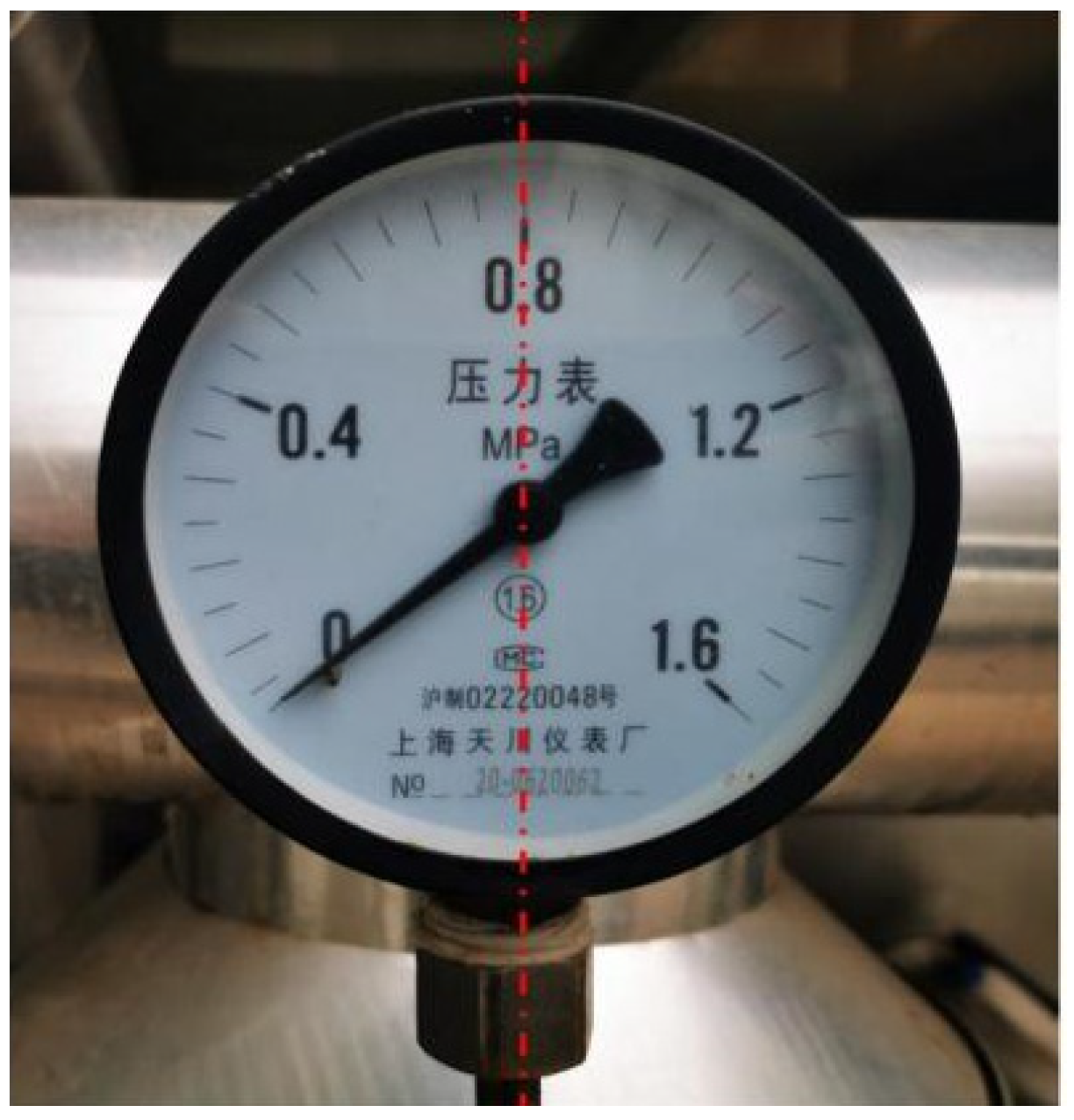

5.1. Experimental Platform and Detection Process

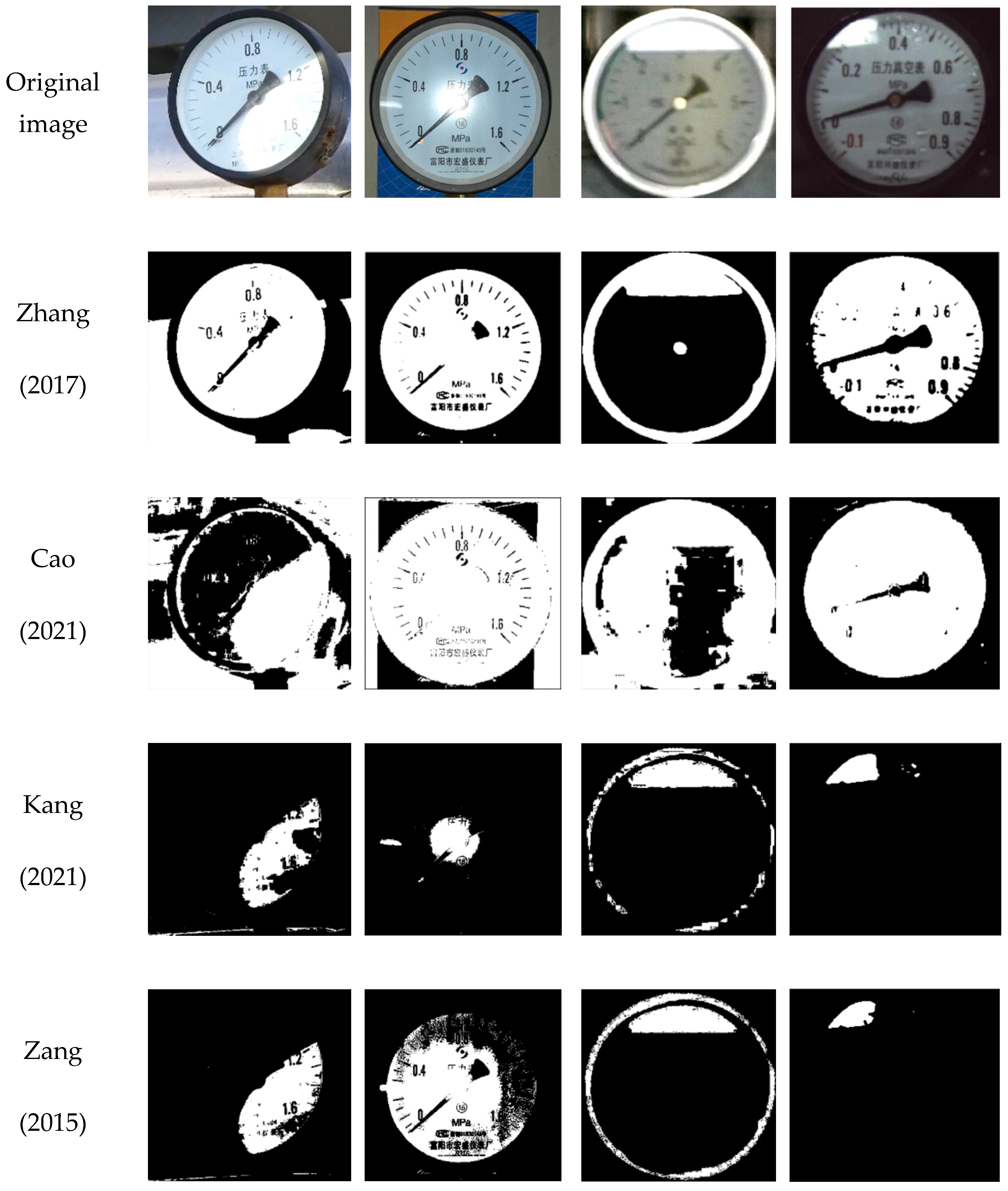

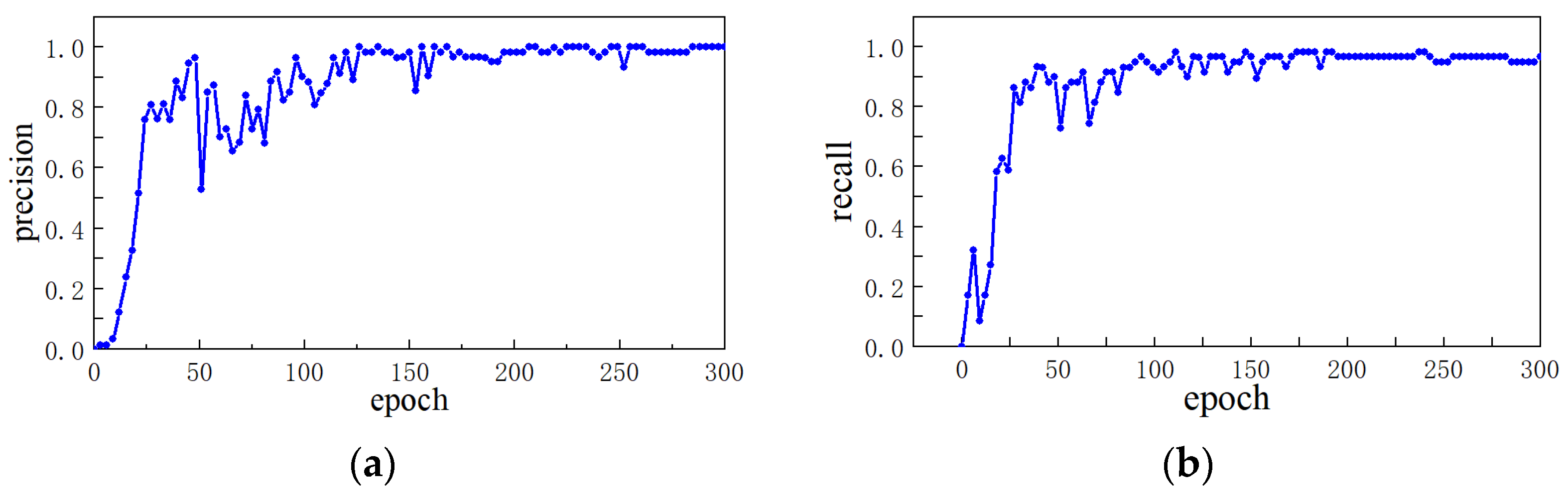

5.2. Reflective Area Detection

5.3. Robot Pose Adjustment

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, D.; Deng, C.; Zhang, H.; Li, J.; Shi, B. Adaptive Reflection Detection and Control Strategy of Pointer Meters Based on YOLOv5s. Sensors 2023, 23, 2562. https://doi.org/10.3390/s23052562
