Abstract

The realization of accurate fault diagnosis is crucial to ensure the normal operation of machines. At present, an intelligent fault diagnosis method based on deep learning has been widely applied in mechanical areas due to its strong ability of feature extraction and accurate identification. However, it often depends on enough training samples. Generally, the model performance depends on sufficient training samples. However, the fault data are always insufficient in practical engineering as the mechanical equipment often works under normal conditions, resulting in imbalanced data. Deep learning-based models trained directly with the imbalanced data will greatly reduce the diagnosis accuracy. In this paper, a diagnosis method is proposed to address the imbalanced data problem and enhance the diagnosis accuracy. Firstly, signals from multiple sensors are processed by the wavelet transform to enhance data features, which are then squeezed and fused through pooling and splicing operations. Subsequently, improved adversarial networks are constructed to generate new samples for data augmentation. Finally, an improved residual network is constructed by introducing the convolutional block attention module for enhancing the diagnosis performance. The experiments containing two different types of bearing datasets are adopted to validate the effectiveness and superiority of the proposed method in single-class and multi-class data imbalance cases. The results show that the proposed method can generate high-quality synthetic samples and improve the diagnosis accuracy presenting great potential in imbalanced fault diagnosis.

1. Introduction

The rolling bearing is an important part of the rotating machinery system, whose failures may cause huge economic losses or even threats to people’s lives. Therefore, it is of great significance to develop an advanced and effective fault diagnosis method to monitor bearing failures. In recent years, many data-driven fault diagnosis methods have been proposed based on machine learning and promoted the development of intelligent fault diagnosis technology [1,2,3]. Among them, deep learning (DL) methods have attracted much attention as they can process mechanical signals quickly and effectively, which contributes to reliable fault diagnosis results [4,5].

Vibration signals contain abundant health information and have been commonly used for bearing fault diagnosis [6,7]. Compared with knowledge-based and traditional machine learning methods, DL-based methods can automatically extract features from measured signals and achieve more accurate diagnoses. The convolutional neural network (CNN) is one of the commonly used DL-based methods for fault diagnosis. Chen et al. [8] constructed a CNN model for fault classification based on the time-frequency feature of vibration signals. Wang et al. [9] proposed a bottleneck layer-optimized CNN method for fault diagnosis based on rearranged data. Huang et al. [10] proposed a multi-scale cascade CNN to enhance the feature extract ability and diagnosis accuracy. Although CNN has made some progress in the field of fault diagnosis in recent years, there are still challenges.

The datasets for training CNN models are assumed abundant and balanced in the aforementioned works. However, the mechanical equipment operates in a normal state for a long-time in practical engineering, resulting in insufficient fault samples and imbalanced data occasions. The diagnosis model will sacrifice the accuracy of the minority class to obtain higher accuracy of the entire dataset facing imbalanced data, which greatly deteriorates the diagnosis accuracy [11]. Since under-sampling for the majority class samples will waste much information about the majority classes, methods focusing on the utilization of limited samples have been developed. The over-sampling method is a kind of data-based and effective method for the data augmentation of a minority class [12]. The synthetic minority over-sampling technique (SMOTE) is a typical over-sampling method [13], which has been adopted for data augmentation in fault diagnosis areas [14,15]. However, SMOTE generates new samples through interpolation in the existing samples, which will easily lead to over-fitting.

To address the above challenge, a generative adversarial network (GAN), which focuses on learning the distribution of the original real samples through an adversarial process, is adopted to solve the data imbalance problem. Once the GAN is well trained, its generator can generate samples with similar distribution to the real samples [16]. GAN has been widely applied to many fields, including medical image generation, image enhancement, neural style transfer, etc. [17,18]. GAN has also been introduced into the mechanical fault diagnosis field to deal with limited data problems. Wang et al. [19] constructed a deep convolution GAN (DCGAN) to generate new samples for minority class samples. Compared with raw signals, samples with enhanced features, such as features extracted from unsupervised learning [20], frequency spectrum [21,22], and time-frequency images [23,24,25] can improve the learning ability of GAN. However, GAN always faces the problem of unstable training, resulting in poor sample generations. Therefore, it is necessary to ensure the stability of the training process to generate more realistic samples, so as to improve the accuracy of fault diagnosis.

To address the above challenge, a fault diagnosis method for imbalanced is proposed aiming at improving the diagnosis accuracy from three aspects, which are multi-signal fusion, augmentation of high-quality data based on improved DCGAN (IDCGAN), and improved classifier The main contributions of this paper are summarized as follows:

- A data augmentation method based on IDCGAN is proposed to solve the imbalanced data problem. The self-attention mechanism is introduced in DCGAN to improve the generated sample quality, and the spectral normalization (SN), two time-scale update rules (TTUR), and Wasserstein distance and gradient penalty are applied for stabilizing the training process of DCGAN.

- A multi-signal fusion method is proposed to mitigate the effects of imbalanced data and improve the diagnosis performance. The synchronizing vibration signals measured by multiple sensors are separately transformed into 2-D time-frequency images, which are fused through the pooling and splicing operations to provide more fault features.

- An enhanced classifier based on residual networks is proposed to achieve higher diagnostic accuracy. The Convolutional Block Attention Module (CBAM) is introduced in the pre-trained residual network for better capturing the important space and channel features.

The remainder of the paper is organized as follows. In Section 2, the basic theories are given. In Section 3, the details of the proposed diagnosis framework are provided. In Section 4, The experimental studies based on two different rotating components are provided to validate the performance of the proposed method. Finally, the conclusions are presented.

2. Theoretical Backgrounds

2.1. Continuous Wavelet Transform

Compared with raw vibration signals, time-frequency images can provide more powerful state information [26], which contributes to better recognition results. CWT can provide the continuous translation and scaling of the wavelet basis function to obtain a class of wavelet family and transform time-series signals into other feature spaces. Therefore, CWT has been widely used for obtaining time-frequency images, whose definition is as follows:

where a represents the scaling factor, b is the translation factor, x(t) represents the input signals, Ψa,b(t) is the wavelet basis function (WBF), Ψ* represents the complex conjugate function of Ψa,b(t), and represents the internal product.

WBF plays an important role in the wavelet transform and different WBF analyses have different results under the same signal. Compared with some popular WBFs, such as Db10, Coif5, and Meyer, the Morlet WBF has fine regularity, a high similarity coefficient, and a definite analytical equation, which is more suitable for the application of vibration signals [24]. Therefore, the Morlet WBF is adopted in this paper.

2.2. Generative Adversarial Network

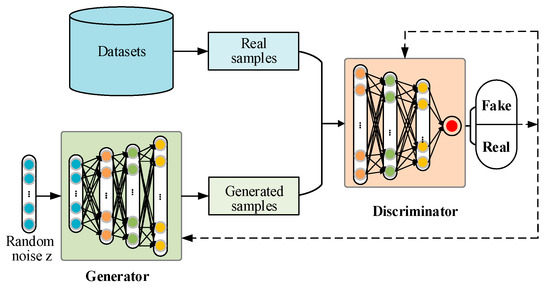

As shown in Figure 1, a standard GAN consists of two basic networks: a generator (G) and a discriminator (D). The G makes use of random noise to generate fake samples, which are sent to D for identification together with real samples. The D needs to distinguish whether the input sample is real or fake as far as possible. These two networks are trained alternately and compete with each other, presenting adversarial learning. The goal of this training is to achieve the Nash equilibrium and the G can generate samples with the same distribution as real samples. The optimization of GAN is to minimize the generator loss and maximize the discriminator loss. That is, the predicted value from D for both the real sample and the generated sample is 0.5. The training process can be described as follows:

where Pdata is the distribution of real samples, Pz represents the prior distribution of the input noise, G(z) is the generated sample by the generator, and D(·) represents the predicted value from the discriminator.

Figure 1.

The basic structure of standard GAN.

DCGAN is a variant of GAN, which adopts the convolution and transposed convolution layers to replace the initial fully connected layers in the original GAN. Besides, the ReLU is adopted as the activation function of the G network and the Leaky ReLU is used as the activation function of the D network. DCGAN has been widely used on many occasions due to its improved training stability and the high quality of the generated samples.

2.3. Wasserstein Distance and Gradient Penalty

Wasserstein distance is used to measure the distance between two probability distributions [27]. Its definition can be illustrated as follows:

where Pg and Pr represent the distribution of the generated sample and the raw sample, respectively. γ denotes an arbitrary joint distribution in the set of all joint distribution Π(Pr, Pg), E(x,y) means the expected value of the distance between x and y, and inf represents the infimum.

Wasserstein distance was introduced into GAN to increase the training stability [27]. However, Wasserstein GAN may have the problem of gradient explosion or gradient disappearance. Gradient penalty (GP) was introduced to improve the training stability of Wasserstein GAN and the quality of generated samples. The operation of GP can be expressed as follows:

where λ represents the gradient penalty coefficient, is the L2-norm value of the gradient, denotes the random sampling in the sample space containing real samples and generated samples.

2.4. Self-Attention Mechanism

The self-attention mechanism can effectively learn the non-local relationship of each important region in different samples [28,29]. In the self-attention mechanism, three 1 × 1 convolution layers with different output channels are applied to generate three different kinds of sub-feature maps (i.e., f(x), g(x), and h(x)) based on the input feature map x. Subsequently, the transposed matrix of sub-feature map f(x) is adopted to multiply g(x) to calculate corresponding attention weights, which are processed by the softmax activation function to obtain attention map βj,i. Then, the attention map and the output matrix h(x) are processed by the matrix multiplication to obtain the self-attention feature map, which is multiplied by a learnable coefficient α and added to the original input xj to obtain the final output feature map yj. The process of calculating self-attention maps can be expressed as follows:

where βj,i represents the attention score of the i-th pixel to the j-th pixel, N denotes the feature number of the input feature map

2.5. Convolutional Block Attention Module

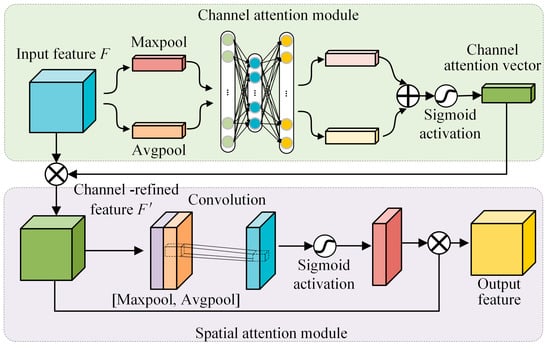

CBAM can enhance the representation of specific regions and effectively improve the performance of CNN [30,31]. The basic structure of CBAM is illustrated in Figure 2, which mainly consists of two separate attention functions: channel attention and spatial attention. In the channel attention submodule, the input feature of each channel is firstly squeezed by global average-pooling and global max-pooling operations to obtain the characteristic feature of the whole spatial area. Then, the above features are input into the shared multi-layer perceptron (MLP) network to obtain further representational features. Finally, the two representation features from MLP are merged and activated to obtain the final channel attention vector. The process of channel attention can be expressed as follows:

where ω represents the sigmoid activation function and F denotes the input feature map.

Figure 2.

The basic structure of the CBAM.

The structure of the spatial attention sub-module is shown in Figure 2, the channel refined feature is compressed through the global average-pooling and global max-pooling in the channel direction. Then, the two compressed spatial features are transformed into one channel through convolution operation. Finally, the spatial attention matrix is obtained after the sigmoid activation operation, which is multiplied by the channel refined feature to obtain the final output feature map. The process of the spatial attention module can be expressed as follows:

where F’ represents the channel-refined feature map, and b represents the size of the convolution kernel available in the convolution, which must be 3 or 7.

3. The Proposed Method

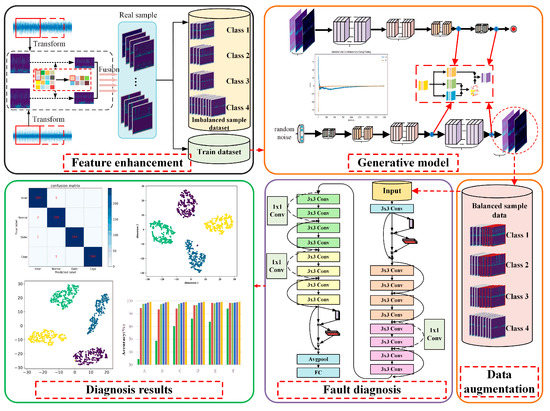

To address the imbalanced data problem and improve the fault diagnosis performance, a novel bearing diagnosis method is proposed. The framework of the proposed method is shown in Figure 3, which mainly contains the following three steps:

Figure 3.

The framework of the proposed diagnosis method with imbalanced data.

Step 1: Feature enhancement with time-frequency feature and multiple sensor signals. The measured vibration signals from multiple sensors or directions are used to construct initial pairs of data samples. Then, CWT is adopted to process the samples to obtain the corresponding time-frequency information. Finally, pairs of time-frequency features are squeezed through the pooling operation and then fused by a splicing operation.

Step 2: Data augmentation based on a generative model. Improved DCGANs are constructed and trained to capture the distribution of real samples of different classes. Once the models are well-trained, they are used to generate new synthetic samples to augment the imperfect dataset.

Step 3: Fault diagnosis with enhanced ResNet. A DL-based classifier is constructed by introducing residual network and CBAM to improve the feature extraction ability of the model. Moreover, the expanded balanced dataset is used for classifier training, which aims to further improve the fault diagnosis accuracy.

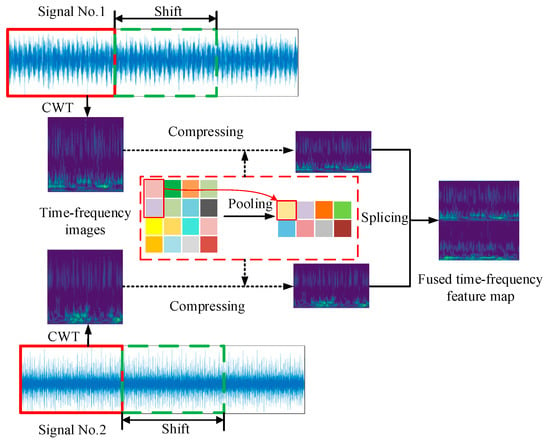

3.1. Feature Enhancement and Feature Fusion

Signals collected from different sensors or different directions can provide more health state information, which also benefits feature extraction and later classification. In addition, the time-frequency conversion of the signal through CWT can also provide more features compared with row time domain signals. Therefore, synchronizing signals collected from two different sensors are adopted to enhance the data feature in this study. The flowchart of feature enhancement and feature fusion strategy is shown in Figure 4. First, the synchronizing vibration signals are sliced into pairs of segments by sliding windows with fixed lengths. Subsequently, the pairs of signal segments are transformed by CWT to obtain corresponding time-frequency features, which are then squeezed through an average pooling operation with a size of 2 × 1. Finally, the squeezed features are merged by the up-down arrangement, and the image samples with fused time-frequency features are obtained.

Figure 4.

The flowchart of feature enhancement and feature fusion strategy.

3.2. Data Augmentation Based on Generative Model

Although DCGAN is beneficial for obtaining stable training, the unstable training problem still exists most of the time which will cause the mode collapse and gradient disappearance. In addition, SN is a novel weight normalization method that can achieve the Lipschitz constraint by constraining the L2 spectral norm of the weight matrix of each layer, and SN can enhance the training stability of GAN [32]. Therefore, an improved DCGAN (IDCGAN) is proposed by introducing the SN, Wasserstein distance with GP to enhance the training stability and generate satisfying samples [27]. The loss function of the proposed IDCGAN can be expressed as follows:

where D(x) and D(y) represent the corresponding predicted value from the discriminator of the real sample and the generated sample, respectively, μ is a weight constraint coefficient, and Pz denotes the joint distribution of the generated sample and the real sample. Once the Wasserstein distance and GP are introduced into DCGAN, the gradient tends to be near a certain stable value during the training process. Moreover, the Lipschitz constraint condition is further ensured by introducing the SN. These two strategies contribute to mitigating the vanishing gradient and exploding gradient problems.

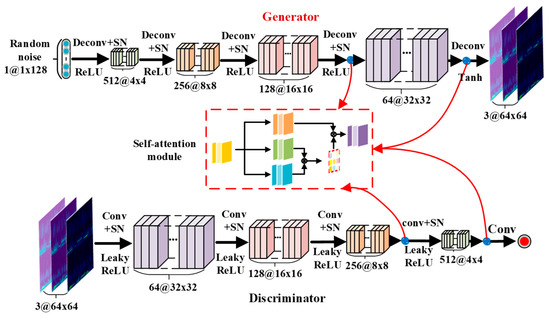

Since the convolution kernel of the method using DCGAN is fixed, it cannot capture the global information. In contrast, the self-attention mechanism can extract features by establishing relationships between local and remote regions. Therefore, the performance of the generated model and the quality of the generated samples can be further improved by introducing the self-attention mechanism. The structure of the proposed IDCGAN is shown in Figure 5. SN is arranged after each convolutional layer and deconvolutional layer of both the discriminator and generator in place of the original batch normalization. Besides, the self-attention modules are added after the last two layers of both the generator and discriminator.

Figure 5.

The basic structure of the improved DCGAN model.

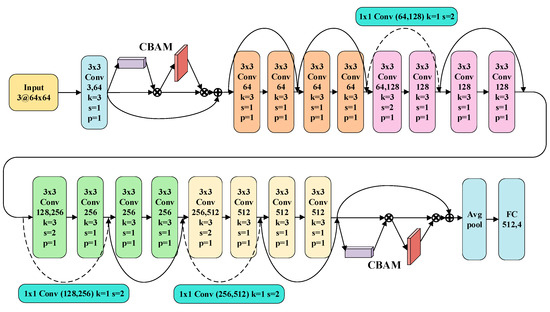

3.3. Fault Diagnosis Based on Enhanced ResNet

Increasing the depth of the CNN can improve feature extraction ability. However, this operation can also lead to the disappearance of gradients [10]. The introduction of ResNet can solve this problem [33]. Therefore, ResNet is selected as the backbone of the classifier. Since the pre-trained model can help the network converge quickly in new conditions, partial structures of ResNet-18 with pre-train parameters are adopted as the initial classifier model in this paper. Besides, CBAM is introduced to improve the performance of ResNet-based diagnostic models (named CBAM-ResNet). The structure of the CBAM-ResNet is shown in Figure 6, and the CBAM is added after the first convolution layer and the last residual block.

Figure 6.

The basic structure of the proposed CBAM-ResNet.

4. Experimental Verification

In this section, two bearing datasets with different failure mechanisms, i.e., the full life cycle bearing failure dataset from Xi’an Jiaotong University and the Changxing Sumyoung Technology Co., Ltd. (Changzhou, China) (XJTU-SY) [34], and the artificial failure dataset from Case Western Reserve University (CWRU) [35] are selected to validate the effectiveness of the proposed method with imbalanced data.

4.1. Case 1: Bearing Dataset from XJTU-SY

4.1.1. Dataset Description and Data Processing

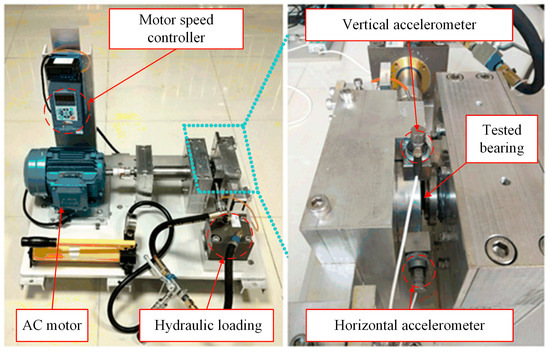

The bearing dataset from XJTU-SY was collected from a bearing test rig which is shown in Figure 7. It mainly consists of the following parts: an AC motor, a hydraulic loading device, a tested bearing, a motor speed controller, and two accelerometers for measuring vertical and horizontal vibration [34]. The vibration signals were measured with a sampling frequency of 25.6 kHz. The dataset contains complete run-to-failure data of bearings which were acquired by conducting many accelerated degradation experiments. A total of 32,768 data points (i.e., 1.28 s) are recorded for each sampling until serious failure occurs.

Figure 7.

The test rig of the XJTU-SY.

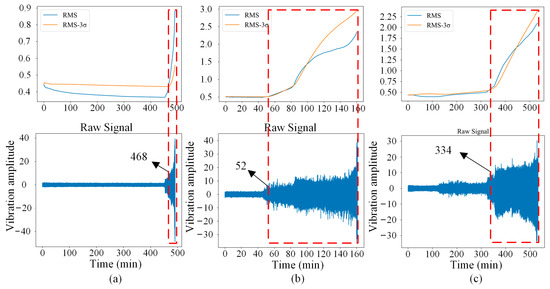

In this paper, the dataset collected under a rotational speed of 2250 rpm and a radial force of 11 kN is selected for experimental verification. Four different types of health states are considered for fault diagnosis, which are normal (Nor), inner race fault (IRF), outer race fault (ORF), and bearing cage fault (BCF). Since the dataset is a kind of full life cycle data, it is necessary to judge the initial occurred time of each fault from the whole data. Considering that the RMS-3σ rule is a smooth and more accurate fault identification method [36], the RMS-3σ rule is adopted to identify the early failure occurrence time point of each fault. The initial failure time of bearing 2–1, 2–2, and 2–3 (i.e., IRF, ORF, and BCF) are identified as 468, 52, and 334, respectively. That is, vibration signals after each initial failure point are used to construct data samples. The RMS-3σ results and corresponding initial failure points are shown in Figure 8.

Figure 8.

Signals and initial failure point of each fault. (a) IRF, (b) ORF, (c) BCF.

It is well known that each sample should contain at least one circle sampling point. In this dataset, 683 sampling points can be collected per revolution according to the following equation:

where fz represents the sampling frequency and n represents the rotating speed.

Therefore, a sliding window with a constant length of 1280 points is adopted to construct samples. Subsequently, Z-score standard normalization is adopted to process the time series samples, which are then converted to time-frequency images with a size of 64 × 64 × 3 based on CWT and subsequent feature fusion. Finally, a total of 750 samples of each class are obtained, among which 250 samples are randomly selected to form the testing dataset. Moreover, a different number of minority samples are selected from the remaining samples of each class for IDCGAN model training according to the relative experiment settings. That is, if 500 samples are used as the majority sample, 100 minority samples are selected for IDCGAN model training with an imbalanced ratio of 0.2.

4.1.2. Model Training and Sample Generation

In the training process of IDCGAN, the batch size and the iteration epoch are set as 32 and 2000 for each class, respectively. Besides, the discriminative ability of the discriminator is significantly stronger than the generative ability of the generator at the beginning; the TTUR strategy is adopted to balance the learning speed between the generator and the discriminator, which can improve the training stability. In this paper, the learning rates of the generator and the discriminator are 0.0001 and 0.0003, respectively.

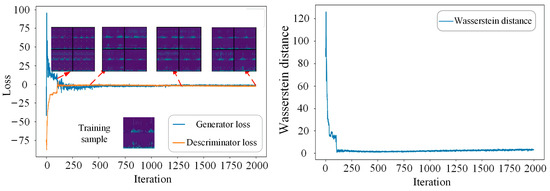

The losses of the generator and discriminator, Wasserstein distance, together with visualization of the generated samples are adopted to better show the training process. Taking the IRF class with a limited sample number of 100 as an example, 100 real IRF samples are randomly selected from the training database as a minority class, which is used for training the IDCGAN model. The training process is shown in Figure 9. It can be seen that the discriminator loss, the generator loss, and the Wasserstein distance converge quickly and the quality of the generated samples becomes better as the iteration increases. The Nash equilibrium training is almost achieved when the iteration number increases to 1750, and the sample quality is very close to the real sample.

Figure 9.

The training process of IDCGAN with 100 IRF samples.

4.1.3. Evaluation of the Generated Samples

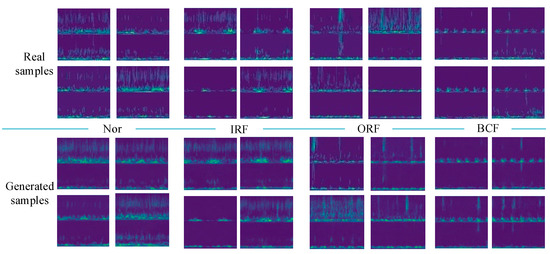

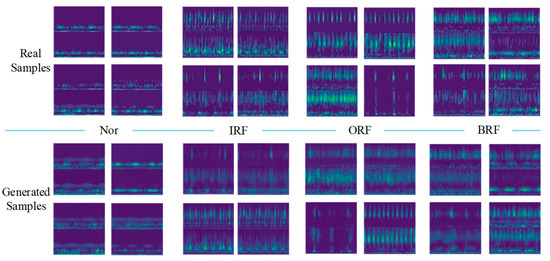

To evaluate the quality of the generated samples, visualization results of the real samples and the generated samples are presented. Taking the limited sample number of 100 as an example, 100 real samples of each health state are adopted to train IDCGANs and then generate new synthetic samples.

The comparison results of the generated samples and corresponding real samples are shown in Figure 10. It is clear that all classes of the generated samples show favorable consistency in time-frequency features and also have some diversity, which means that the IDCGANs have captured the distribution of the initial training data and can generate high-quality synthetic samples.

Figure 10.

Comparison between the real samples and the generated samples.

To quantitatively evaluate the quality of the generated sample and show the superiority of the proposed feature fusion strategy, experiments in Table 1 are conducted based on the GAN-train strategy [37]. In all experiments mentioned above, IDCGAN is used for sample generation and CBAM-ResNet is used for fault classification. Time-frequency images of vibration signals from a single sensor are adopted to train the classifier in experiment A while the other experiments are based on fused time-frequency images from multiple sensors. For experiment B, real samples are used for fault identification and the generated samples in other experiments are obtained from the IDCGANs training with the same real samples of experiment B. According to reference [37], the diagnosis accuracies of experiments B and C are called GAN-base and GAN-train, respectively. Moreover, the distribution of synthetic samples is quite similar to the real samples once the values of the GAN-base and GAN-train are close. Experiments D and E are adopted to show the similarity and diversity between real and generated samples, which can be regarded as the extension of the GAN-train strategy.

Table 1.

Experimental setup and results for evaluation of the generated samples.

As shown in Table 1, the diagnosis accuracy with information from single sensor (experiments A) is only 89.78% with a large standard deviation of 2.51%, which is increased to 96.89% with a smaller standard deviation of 1.21% by adopting the feature fusion method. This indicates that the proposed fusion method can provide more effective features which are beneficial for the classifier to learn more important information. By comparing experiments B and C, it can be seen that the GAN-train value with all generated samples is about 92.35%, which is close to the GAN-base value of 96.89% obtained based on real samples. In addition, both the diagnosis accuracy and standard deviation in experiment D are almost equal to the GAN-base value, which is obtained with half real samplesand half-generated samples of all classes. This further demonstrates the high similarity between the generated and real samples. In experiment E, the diagnosis accuracy is further improved to 98.83%, and the standard deviation is reduced to 0.61%, which proves the diversity of the samples generated by IDCGAN. From the above, it is reasonable to believe that the IDCGAN can be used to sample generation with similar diversity samples and for minority sample supplements.

4.1.4. Single-Class Imbalanced Fault Diagnosis

Different evaluation criteria have been adopted to evaluate the similarity between the synthetic samples and the real samples. However, this operation will cause excessive similarity between the synthetic samples and the real samples, which ignores the sample diversity and will cause information redundancy [38]. Therefore, the generated samples are directly applied to expand the initially limited dataset. Moreover, the diagnosis accuracy can also be used as an indirect criterion to evaluate the generation ability of different generative models.

To verify the effectiveness of the proposed method in cases with limited fault samples, the single-class and multi-class imbalanced fault diagnosis experiments are established. Different initial fault samples are adopted to train the generative models, which are then used to generate new samples for data augmentation and achieve balanced data. Considering that the ORF occurs more frequently in all the full life cycle bearing experiments in the XJTU-SY dataset, ORF is selected as the minority class fault in single-class imbalanced experiments. It should be mentioned that the number of the majority class is 500, and the initial minority samples with different imbalance ratios are selected for training the generative models and expanding the minority class to 500 in the experiments, which are 0.5, 0.2 and 0.1 corresponding 250, 100 and 50 samples. To prove the superiority of the proposed method and different data augmentation methods, i.e., SMOTE, adaptive synthetic sampling (ADASYN), SN-assisted DCGAN (SN-DCGAN), and DCGAN based on Wasserstein distance with GP (WGANGP) are adopted for comparison. Meanwhile, different classifiers, i.e., CNN, ResNet, and CBAM-ResNet are used for comparative experiments to reduce the impact of the classifier on the results and verify the superiority of the improved classifier.

The CNN model is just a partial structure of the CBAM-ResNet without the skip connections and CBAM, while the ResNet is the partial structure of CBAM-ResNet only without CBAM. Since the generation mechanisms of SMOTE and ADASYN are quite different from GAN-based methods and these methods cannot generate synchronizing signals, experiments based on a single sensor were also conducted. Experimental diagnosis results are detailed in Table 2, where the initial samples mean that the imbalanced samples are directly used for the training classifiers.

Table 2.

Results after data augmentation of ORF with different initial imbalance ratios.

As shown in Table 2, the diagnosis accuracy based on the initial imbalanced samples declines obviously with the increase in imbalance degree. For example, the accuracy based on multiple sensors decreases from 97.38% to 94.3% when the imbalance ratio decreases from 0.5 to 0.1, while the accuracy based on a single sensor possesses a similar decrease tendency but with much lower accuracy from 94.83% to 89.8%. Besides, the decline rate with the increased imbalance degree of multiple sensors is much slower than that of the single sensor.

The accuracies significantly increase once the initial imbalanced data are expanded by different synthesis methods whether data from a single sensor or multiple sensors are adopted. It can be concluded that the imbalanced samples have a great influence on the classifier performance, and the data augmentation method can attenuate the imbalance influence. Among the generative methods, the proposed IDCGAN obtains the best diagnosis performance with any of the adopted classifiers. Taking the imbalance ratio of 0.1 and the ResNet classifier as examples, the proposed IDCGAN with multi-sensor can achieve a diagnostic accuracy of 98.49%, which is much higher than that of the initial sample of 94.3%. Moreover, this is also higher than the accuracy of the SN-DCGAN and WGANGP, which are 98.12% and 97.04%, respectively. Taking an imbalance ratio of 0.2 and IDCGAN as examples, the accuracy based on CBAM-ResNet is 99.54%, which is higher than the accuracy of CNN and ResNet with values of 98.52% and 98.61%. Furthermore, the proposed IDCGAN with the CBAM-ResNet method can achieve the best diagnostic accuracies in almost all imbalance ratios.

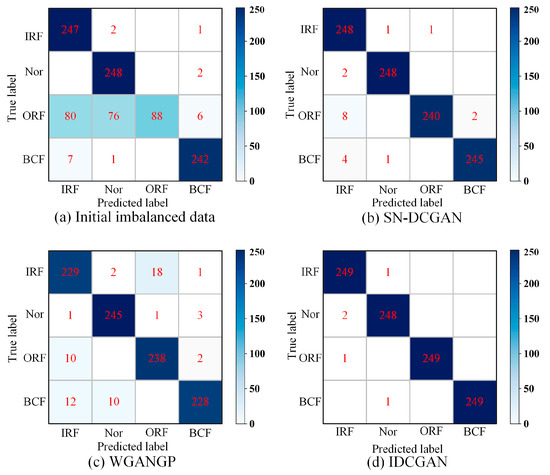

The confusion matrix is adopted to better illustrate the influence of different generative models on classification. Figure 11 shows the confusion matrixes based on the ResNet classifier under limited ORF conditions with an imbalance ratio of 0.1. It can be seen that a large number of ORF samples have been misclassified as other classes. For example, 80 and 76 ORF samples are misclassified as Nor and IRF while the classification of the majority class samples is correct. It indicates that the minority samples restrict the classifier from learning more valuable information. The misclassification of ORF is reduced when the synthetic samples generated by SN-DCGAN and WGANGP are adopted for data augmentation, and the misidentified samples are further reduced when the proposed IDCGAN is adopted.

Figure 11.

Confusion matrixes based on ResNet and different generation methods under limited ORF conditions with imbalance ratio of 0.1.

In addition, single-class imbalanced fault diagnosis experiments based on IRF or BCF with an initial imbalance ratio of 0.2 are also performed. The results are shown in Table 3. The diagnosis results are consistent with the results based on ORF imbalanced data. For the single sensor, IDCGAN has higher diagnostic accuracy than other data synthesis methods, i.e., SMOTE and ADASYN whichever fault is limited. Moreover, the diagnosis accuracy of all experiments based on IDCGAN can reach above 98% while the initial accuracy with imbalanced data is less than 93.5%. As for multiple sensors, IDCGAN can also achieve the highest accuracy compared with other data synthesis methods, i.e., SN-DCGAN and WGANGP with the same classifier. In addition, the diagnosis accuracy-based CBAM-ResNet is the highest among the three classifiers. For example, the accuracy based on IDCGAN and CBAM-ResNet can reach 99.41% after the data augmentation in BCF limited occasion, which is 4.32% higher than that of CNN and 1.45% higher than that of ResNet. In conclusion, the proposed IDCGAN can well grasp the time-frequency sample distribution and generate high-quality samples for data augmentation. Moreover, the proposed CBAM-ResNet model can enhance the diagnosis accuracy and achieve satisfying results.

Table 3.

Diagnosis results of different minority faults with imbalance ratio of 0.2.

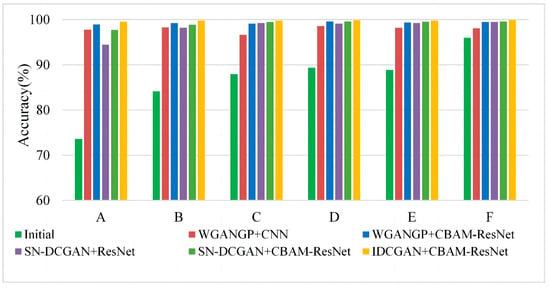

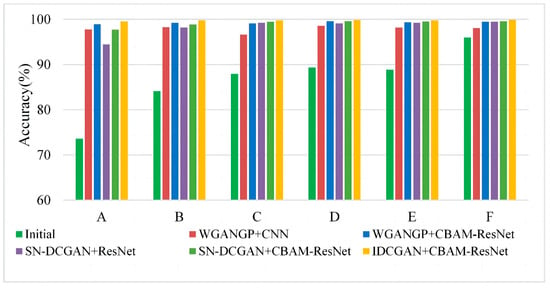

4.1.5. Multi-Class Imbalanced Fault Diagnosis

In practice engineering, there always exist multiple fault imbalance problems. Thus, experiments based on multi-class imbalanced faults are performed to demonstrate the effectiveness of the proposed method. In this section, different imbalance ratios of multiple fault classes are selected as the initial training data to simulate multi-class imbalance occasions, which are detailed in Table 4. It should be mentioned that the majority of classes have 500 samples and the presented results are based on expanded balanced data except for the initial samples. Corresponding experimental results are shown in Figure 12. It can be seen that the accuracies of limited data with three fault classes (i.e., experiment A, B, and C in Table 4) are worse than that of only two limited fault classes data (i.e., experiments D, E and F in Table 4), especially when the initial samples are adopted for training classifiers. It is clear that the misclassification will decrease as the number of minority sample classes decreases. Furthermore, experiment results of all multi-class imbalanced fault diagnoses in Figure 12 indicate that the IDCGAN with CBAM-ResNet proposed in this paper can achieve the best diagnosis results, which is consistent with the results of single-class imbalanced experiments. The above results show that the proposed method also has excellent diagnosis performance on multi-class imbalanced datasets.

Table 4.

Experiment description of multi-class imbalanced data.

Figure 12.

Experimental results based on multi-class imbalanced data.

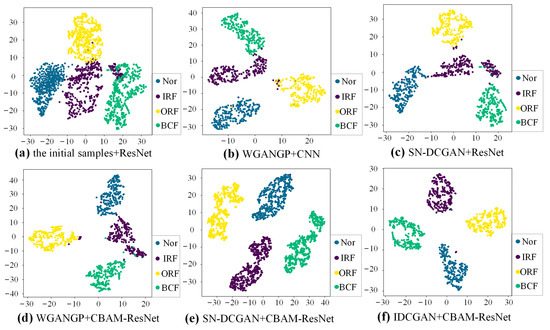

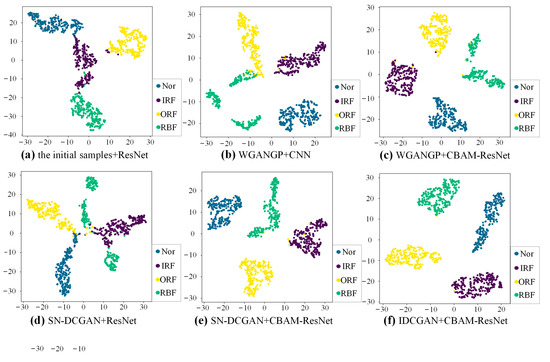

To better present the features of different generative methods and different classifiers, t-Distributed Stochastic Neighbor Embedding (t-SNE) is used as a qualitative method to evaluate the extracted high-dimensional sample feature. The t-SNE visualization results of extracted features before the fully connected layer of experiment A in Table 4 are shown in Figure 13. Each health state is represented by a special color. There exists apparent overlapping among the clusters of different health states with the initial samples, meaning that the classifier trained by imbalanced data cannot well identify samples of different health states. Comparing Figure 13a–c, it can be seen that the boundary becomes clearer once the classifier is trained with expanded balanced data. However, there still exist many overlaps when the CNN and the Resnet are adopted. The overlap has been improved by using the enhanced CBAM-ResNet, which is shown in Figure 13d,e. This also indicates the superiority of the proposed CBAM-ResNet. Compared with SN-DCGAN and WGANGP, the feature clusters are more compact when the IDCGAN is applied to the data augmentation. This indicates that self-attention can help to improve the quality of the generated samples which finally helps the classifier to better learn the deep features of the samples.

Figure 13.

t-SNE visualization with different data augmentation methods and classifiers.

4.2. Case 2: Bearing Dataset from CWRU

4.2.1. Dataset Description and Data Processing

The bearing dataset of CWRU is a kind of bearing dataset with artificial faults [35]. In this paper, the vibration signals collected under a load of 2 hp and with a sampling frequency of 12 kHz are adopted for the experimental validation. Moreover, signals measured by the acceleration sensors placed on the fan end (FE) and drive end (DE) are used as the basic multiple sensor data for the feature fusion. The same category fault with different dimensions is considered as one class, and finally, four health states are adopted, which are normal (Nor) state, inner race fault (IRF), outer race fault (ORF), and bearing roller fault (BRF).

The sliding window with overlap is adopted for the sample construction, whose window size and sliding step are set as 1280 and 480 points, respectively. Similar to Case 1, normalized synchronizing signal segments in the time domain from DE and FE are transformed to obtain the time-frequency feature with a size of 64 × 64 × 3 by CWT, which are then compressed and merged for the feature fusion. Finally, 750 time-frequency samples of each class are obtained. Moreover, 250 samples from each class are selected for model testing, and the remaining samples are randomly selected for the later generative model training according to experiment requirements.

4.2.2. Evaluation of the Generated Samples

Similar to the training process in Case 1, 100 samples of each class are used for training IDCGANs. Then, the well-trained GANs are applied to the sample generation. The visual comparison of the generated samples and corresponding real samples are shown in Figure 14. It can be seen that IDCGAN can generate high-quality samples that are remarkably similar to the corresponding real fused time-frequency samples, meaning that the IDCGANs have captured the distribution of real data and can generate high-quality synthetic samples with good similarity and some diversity.

Figure 14.

Comparison between the real samples and the generated samples with CWRU dataset.

The same strategy based on GAN-train is adopted to evaluate the performance of IDCGANs in this case. In this experiment, CBAM-ResNet is also adopted as the classifier for the fault diagnosis. As shown in Table 5, the diagnosis accuracy with the feature fusion method can reach 98.38%, which is 2.35% higher than that with a single sensor feature. Moreover, the standard deviation is much lower with the fusion time-frequency feature, showing the importance of the proposed fusion method. Besides, the GAN-train value with all generated samples from IDCGANs is about 97.58%, which is close to the GAN-base value of 98.38% and even a bit higher than the GAN-base with a single sensor. The accuracies and standard deviations of experiment B and experiment D are almost the same, which demonstrates the high similarity between the generated and real samples. In addition, seen from experiment E, the augmentation of generated samples can further increase the diagnostic accuracy to 99.17%, indicating that the generated samples also possess some diversity. In summary, IDCGAN is a reliable method that can be used for data generation and augmentation to improve the diagnostic performance of the classifier.

Table 5.

Experimental setup and results for evaluation of the generated samples.

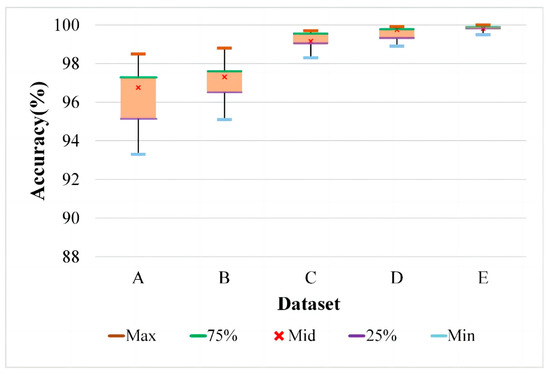

4.2.3. Single-Class Imbalanced Fault Diagnosis

In this section, BRF is selected as the minority fault class for the verification of imbalanced fault diagnosis. Fifty real samples of BRF are used for training the IDCGAN to generate new synthetic samples, which are gradually added to the initial data to alleviate the imbalanced degree; 500 real samples of each other class are randomly selected, which are combined with the augmented BRF samples to train a CBAM-ResNet for fault recognition. Finally, 500 synthetic samples generated by IDCGANs trained by 50 real samples of each class are added to further expand the balanced data to obtain better results. The components of data are shown in Table 6 and corresponding results are presented in Figure 15, in which the five markers in each box represent the maximum, upper quartile, median, lower quartile and a minimum of the 10 experimental results in each dataset. It can be observed that when the imbalance ratio of BRF is 0.1, the classifier cannot effectively learn the feature distinction among different health states, resulting in low accuracy and big fluctuations. With the increase in minority class samples, the diagnostic performance is significantly improved, and the accuracy is gradually stabilized. This shows that the samples generated by the data synthesis method IDCGAN are similar to the real samples.

Table 6.

Experiment components with different amounts of generated samples.

Figure 15.

Experiment results with different numbers of generated samples.

Similar to the single-class imbalance experiment in Case 1, in this case, the IRF is chosen as the minority fault to study the effectiveness of the proposed method with various initial imbalanced data.

The initial imbalance ratios are set as 0.5, 0.2, and 0.1, which are directly expanded to balance data based on different generative methods except for the initial sample. As shown in Table 7, the diagnosis results based on a multi-sensor are better than those based on a single-sensor. For example, the diagnosis accuracy increased from 90.12% to 96.58% and 95.35% to 98.78% after multi-sensors are adopted when the initial imbalance ratio is 0.1 and 0.5, respectively. Moreover, the misclassification phenomena based on different classifiers are more obvious as the initial imbalanced degree increases. Furthermore, all the data synthesis methods can significantly improve the accuracy compared with the initial imbalanced samples. The proposed IDCGAN method provides the highest improvement among different generative methods based on the same classifier. Meanwhile, the CBAM-ResNet can reach higher diagnosis accuracy with the same data synthesis method and imbalance ratio. Therefore, the proposed IDCGAN + CBAM-ResNet together with the fused time-frequency feature is an effective way to enhance the diagnosis accuracy facing imbalanced data.

Table 7.

Results after data augmentation of IRF with different initial imbalance ratios.

4.2.4. Multi-Class Imbalanced Fault Diagnosis

Similar to Case 1, the multi-class imbalanced fault diagnosis including the two classes and three classes of fault imbalance experiments are performed. For multi-class imbalanced experiments in this case, the BCF in case 1 is replaced by BRF while the other setups are the same. The comparison results are shown in Figure 16. It can be seen that if the initial samples are imbalanced and without an augmentation, the classifier will not be able to learn deep features effectively, resulting in misclassification with the decrease in the minority class samples. In each dataset, after the samples are balanced by these data synthesis methods, the diagnostic performance will be improved accordingly, and the IDCGAN-CBAM-ResNet can achieve better diagnostic results than other methods.

Figure 16.

Experimental results based on multi-class imbalanced data.

The t-SNE visualization results of high-dimensional features learned by different classifiers with different augmented training data are shown in Figure 17. The results are obtained based on the same experiment as case 1. It is clear that the feature clusters between different samples cannot be well distinguished with initial imbalanced data. The feature cluster boundaries of different classes based on CBAM-ResNet and expanded balance data are much clear than those of the CNN and ResNet, indicating the strong feature extraction ability of the proposed CBAM-ResNet. Besides, the feature clusters become compact and there is almost no overlap, meaning that the proposed IDCGAN has a strong sample generation ability and is superior to the generative methods used for comparisons.

Figure 17.

The t-SNE visualization results with different methods.

From the above, it can be concluded that the fused time-frequency feature based on the multi-signal fusion method is beneficial to provide more valuable information. The proposed IDCGAN can generate samples that have a similar distribution to real samples, which is better than the comparison methods. Moreover, the CBAM-ResNet can better distinguish the fused time-frequency feature of different faults than the comparison classifiers. Therefore, the proposed method can address the imbalanced data problem and enhance the diagnosis accuracy.

5. Conclusions

In order to reduce the unfavorable impact of imbalanced data in intelligence fault diagnosis and improve fault diagnosis accuracy, a novel bearing fault diagnosis method is proposed. Firstly, signals from different sensors are transformed into time-frequency images by CWT, which are then compressed and spliced to fuse the features of multiple sensor signals. Subsequently, the self-attention mechanism, SN, and Wasserstein distance with GP are introduced to construct the IDCGAN model to ensure a stable training process and improve the generated sample quality. Finally, the CBAM is introduced into the residual network to extract deep features of the augmented balance dataset for better fault diagnosis results. Experiments based on two different bearing datasets, i.e., the life-cycle bearing fault and manufactured bearing fault, have demonstrated that the proposed IDCGAN + CBAM-ResNet method together with the fused time-frequency feature is beneficial to generate high-quality samples and can achieve better diagnostic performance than the comparison methods, showing great potential in imbalanced fault diagnosis. However, the proposed IDCGAN is not applicable once the training sample is extremely scarce and cannot be applied to generate fault samples with unknown features. Therefore, corresponding research will be carried out to meet the challenges of data generation with 1 shot real sample and data generation of related but unknown features in the future.

Author Contributions

Conceptualization, C.D., Z.D., M.H. and Y.P.; Methodology, C.D., S.L. and J.M.; Software, Z.D., S.L. and M.H.; Validation, C.D., S.L. and J.M.; Formal Analysis, C.D., Z.D. and S.L.; Investigation, Z.D. and Y.P.; Resources, M.H.; Data Curation, M.H. and J.M.; Writing—Original Draft Preparation, Z.D. and Y.P. Writing—Review and Editing, C.D. and Z.D.; Visualization, Z.D. and Y.P.; Supervision, J.M.; Project Administration, C.D. and J.M.; Funding Acquisition, C.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China [grant number 51705058] and the Sichuan Science and Technology Program [grant number 2022YFG0225].

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare that they have no known competing financial interest or personal relationship that could have appeared to influence the work reported in this paper.

References

- Lei, X.; Lu, N.; Chen, C.; Wang, C. An AVMD-DBN-ELM Model for Bearing Fault Diagnosis. Sensors 2022, 22, 9369. [Google Scholar] [CrossRef] [PubMed]

- Khan, S.; Yairi, T. A review on the application of deep learning in system health management. Mech. Syst. Signal Process. 2018, 107, 241–265. [Google Scholar] [CrossRef]

- Cheng, Y.; Zhu, H.; Wu, J.; Shao, X. Machine Health Monitoring Using Adaptive Kernel Spectral Clustering and Deep Long Short-Term Memory Recurrent Neural Networks. IEEE Trans. Ind. Inform. 2018, 15, 987–997. [Google Scholar] [CrossRef]

- Hakim, M.; Omran, A.A.B.; Inayat-Hussain, J.I.; Ahmed, A.N.; Abdellatef, H.; Abdellatif, A.; Gheni, H.M. Bearing Fault Diagnosis Using Lightweight and Robust One-Dimensional Convolution Neural Network in the Frequency Domain. Sensors 2022, 22, 5793. [Google Scholar] [CrossRef]

- Lei, Y.; Yang, B.; Jiang, X.; Jia, F.; Li, N.; Nandi, A.K. Applications of machine learning to machine fault diagnosis: A review and roadmap. Mech. Syst. Signal Process. 2020, 138, 106587. [Google Scholar] [CrossRef]

- Liu, Y.; Guo, L.; Wang, Q.; An, G.; Guo, M.; Lian, H. Application to induction motor faults diagnosis of the amplitude recovery method combined with FFT. Mech. Syst. Signal Process. 2010, 24, 2961–2971. [Google Scholar] [CrossRef]

- Zheng, J.; Liao, J.; Chen, Z. End-to-End Continuous/Discontinuous Feature Fusion Method with Attention for Rolling Bearing Fault Diagnosis. Sensors 2022, 22, 6489. [Google Scholar] [CrossRef]

- Chen, Z.; Li, C.; Sanchez, R.-V. Gearbox Fault Identification and Classification with Convolutional Neural Networks. Shock. Vib. 2015, 2015, 390134. [Google Scholar] [CrossRef]

- Wang, H.; Li, S.; Song, L.; Cui, L. A novel convolutional neural network based fault recognition method via image fusion of multi-vibration-signals. Comput. Ind. 2018, 105, 182–190. [Google Scholar] [CrossRef]

- Huang, W.; Cheng, J.; Yang, Y.; Guo, G. An improved deep convolutional neural network with multi-scale information for bearing fault diagnosis. Neurocomputing 2019, 359, 77–92. [Google Scholar] [CrossRef]

- Zhao, B.; Zhang, X.; Li, H.; Yang, Z. Intelligent fault diagnosis of rolling bearings based on normalized CNN considering data imbalance and variable working conditions. Knowl. -Based Syst. 2020, 199, 105971. [Google Scholar] [CrossRef]

- Wang, Y.-R.; Sun, G.-D.; Jin, Q. Imbalanced sample fault diagnosis of rotating machinery using conditional variational auto-encoder generative adversarial network. Appl. Soft Comput. 2020, 92, 106333. [Google Scholar] [CrossRef]

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic Minority Over-sampling Technique. J. Artif. Intell. Res. 2002, 16, 321–357. [Google Scholar] [CrossRef]

- Martin-Diaz, I.; Morinigo-Sotelo, D.; Duque-Perez, O.; Romero-Troncoso, R.D.J. Early Fault Detection in Induction Motors Using AdaBoost With Imbalanced Small Data and Optimized Sampling. IEEE Trans. Ind. Appl. 2016, 53, 3066–3075. [Google Scholar] [CrossRef]

- Mao, W.; He, L.; Yan, Y.; Wang, J. Online sequential prediction of bearings imbalanced fault diagnosis by extreme learning machine. Mech. Syst. Signal Process. 2017, 83, 450–473. [Google Scholar] [CrossRef]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks. arXiv 2014. [Google Scholar] [CrossRef]

- Diaz-Pinto, A.; Colomer, A.; Naranjo, V.; Morales, S.; Xu, Y.; Frangi, A.F. Retinal Image Synthesis and Semi-Supervised Learning for Glaucoma Assessment. IEEE Trans. Med Imaging 2019, 38, 2211–2218. [Google Scholar] [CrossRef]

- Heo, H.; Hwang, Y. Automatic Sketch Colorization using DCGAN. In Proceedings of the 18th International Conference on Control, Automation and Systems (ICCAS), PyeongChang, Republic of Korea, 17–20 October 2018. [Google Scholar]

- Wang, R.; Zhang, S.; Chen, Z.; Li, W. Enhanced generative adversarial network for extremely imbalanced fault diagnosis of rotating machine. Measurement 2021, 180, 109467. [Google Scholar] [CrossRef]

- Zhou, F.; Yang, S.; Fujita, H.; Chen, D.; Wen, C. Deep learning fault diagnosis method based on global optimization GAN for unbalanced data. Knowledge-Based Syst. 2019, 187, 104837. [Google Scholar] [CrossRef]

- Li, Z.; Zheng, T.; Wang, Y.; Cao, Z.; Guo, Z.; Fu, H. A Novel Method for Imbalanced Fault Diagnosis of Rotating Machinery Based on Generative Adversarial Networks. IEEE Trans. Instrum. Meas. 2020, 70, 1–17. [Google Scholar] [CrossRef]

- Guo, Q.; Li, Y.; Song, Y.; Wang, D.; Chen, W. Intelligent Fault Diagnosis Method Based on Full 1-D Convolutional Generative Adversarial Network. IEEE Trans. Ind. Inform. 2019, 16, 2044–2053. [Google Scholar] [CrossRef]

- Zhao, B.; Yuan, Q. Improved generative adversarial network for vibration-based fault diagnosis with imbalanced data. Measurement 2021, 169, 108522. [Google Scholar] [CrossRef]

- Liang, P.; Deng, C.; Wu, J.; Yang, Z.; Zhu, J.; Zhang, Z. Single and simultaneous fault diagnosis of gearbox via a semi-supervised and high-accuracy adversarial learning framework. Knowledge-Based Syst. 2020, 198, 105895. [Google Scholar] [CrossRef]

- Tao, H.; Wang, P.; Chen, Y.; Stojanovic, V.; Yang, H. An unsupervised fault diagnosis method for rolling bearing using STFT and generative neural networks. J. Frankl. Inst. 2020, 357, 7286–7307. [Google Scholar] [CrossRef]

- Verstraete, D.; Ferrada, A.; Droguett, E.L.; Meruane, V.; Modarres, M. Deep Learning Enabled Fault Diagnosis Using Time-Frequency Image Analysis of Rolling Element Bearings. Shock Vib. 2017, 2017, 5067651. [Google Scholar] [CrossRef]

- Gulrajani, I.; Ahmed, F.; Arjovsky, M.; Dumoulin, V.; CourVille, A. Improved Training of Wasserstein GANs. arXiv 2017. [Google Scholar] [CrossRef]

- Zhang, H.; Goodfellow, I.; Metaxas, D.; Odena, A. Self-Attention Generative Adversarial Networks. arXiv 2018. [Google Scholar] [CrossRef]

- Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-local Neural Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018. [Google Scholar] [CrossRef]

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018. [Google Scholar] [CrossRef]

- Gao, Z.; Yang, G.; Li, E.; Liang, Z. Novel Feature Fusion Module-Based Detector for Small Insulator Defect Detection. IEEE Sensors J. 2021, 21, 16807–16814. [Google Scholar] [CrossRef]

- Miyato, T.; Kataoka, T.; Koyama, M.; Yoshida, Y. Spectral Normalization for Generative Adversarial Networks. arXiv 2018. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar] [CrossRef]

- Wang, B.; Lei, Y.; Li, N.; Li, N. A Hybrid Prognostics Approach for Estimating Remaining Useful Life of Rolling Element Bearings. IEEE Trans. Reliab. 2018, 69, 401–412. [Google Scholar] [CrossRef]

- Bearing Data Center. Available online: http://csegroups.case.edu/bearingdatacenter/home (accessed on 15 July 2022).

- Li, N.; Lei, Y.; Lin, J.; Ding, S.X. An Improved Exponential Model for Predicting Remaining Useful Life of Rolling Element Bearings. IEEE Trans. Ind. Electron. 2015, 62, 7762–7773. [Google Scholar] [CrossRef]

- Shmelkov, K.; Schmid, C.; Alahari, K. How good is my GAN? In Lecture Notes in Computer Science; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer: Cham, Switzerland, 2018; Volume 11206. [Google Scholar] [CrossRef]

- Zhang, T.; Chen, J.; Li, F.; Zhang, K.; Lv, H.; He, S.; Xu, E. Intelligent fault diagnosis of machines with small & imbalanced data: A state-of-the-art review and possible extensions. ISA Trans. 2021, 119, 152–171. [Google Scholar] [CrossRef] [PubMed]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).