Lensless Three-Dimensional Imaging under Photon-Starved Conditions

Abstract

1. Introduction

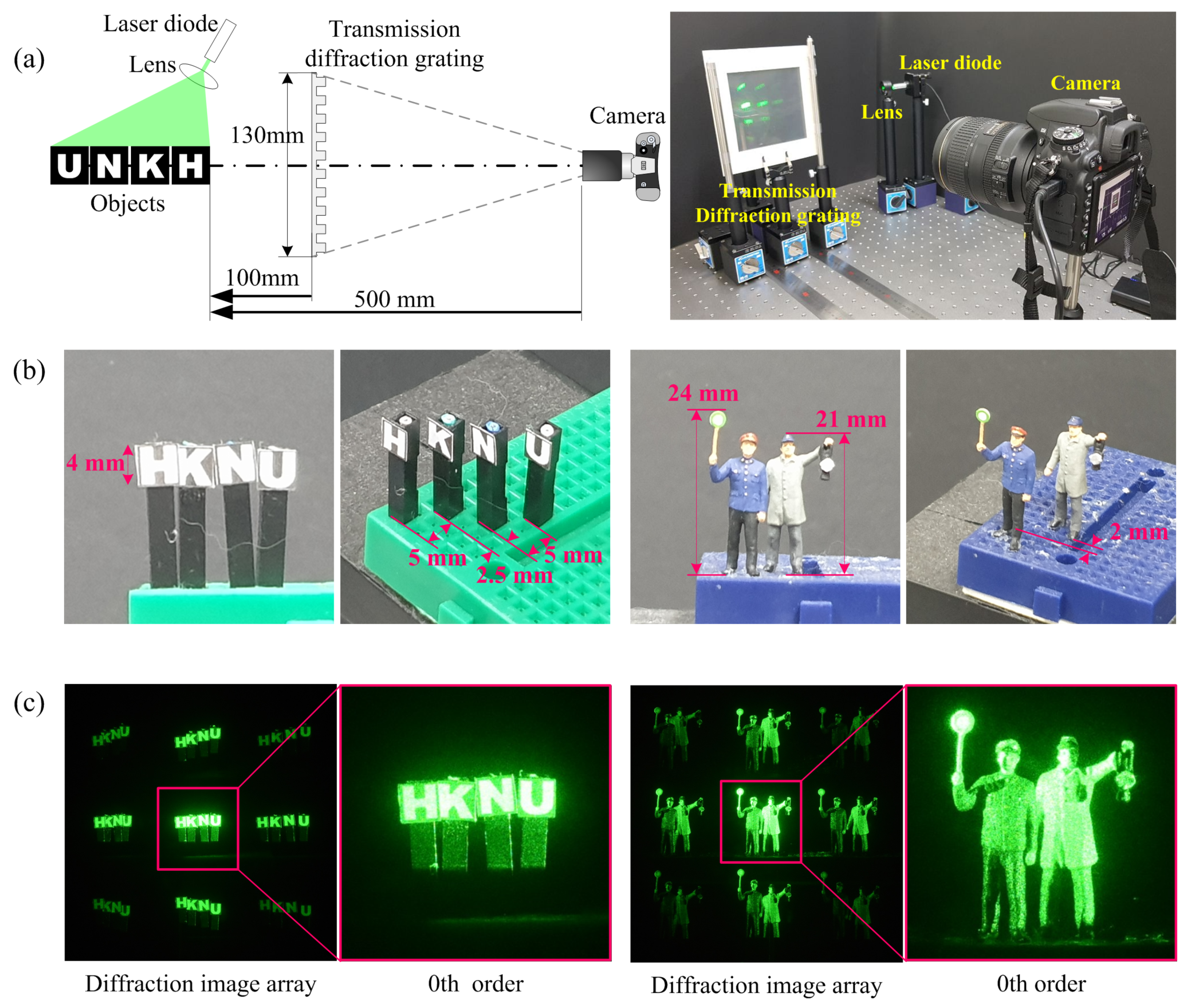

2. Lensless Three-Dimensional Imaging and Computational Reconstruction

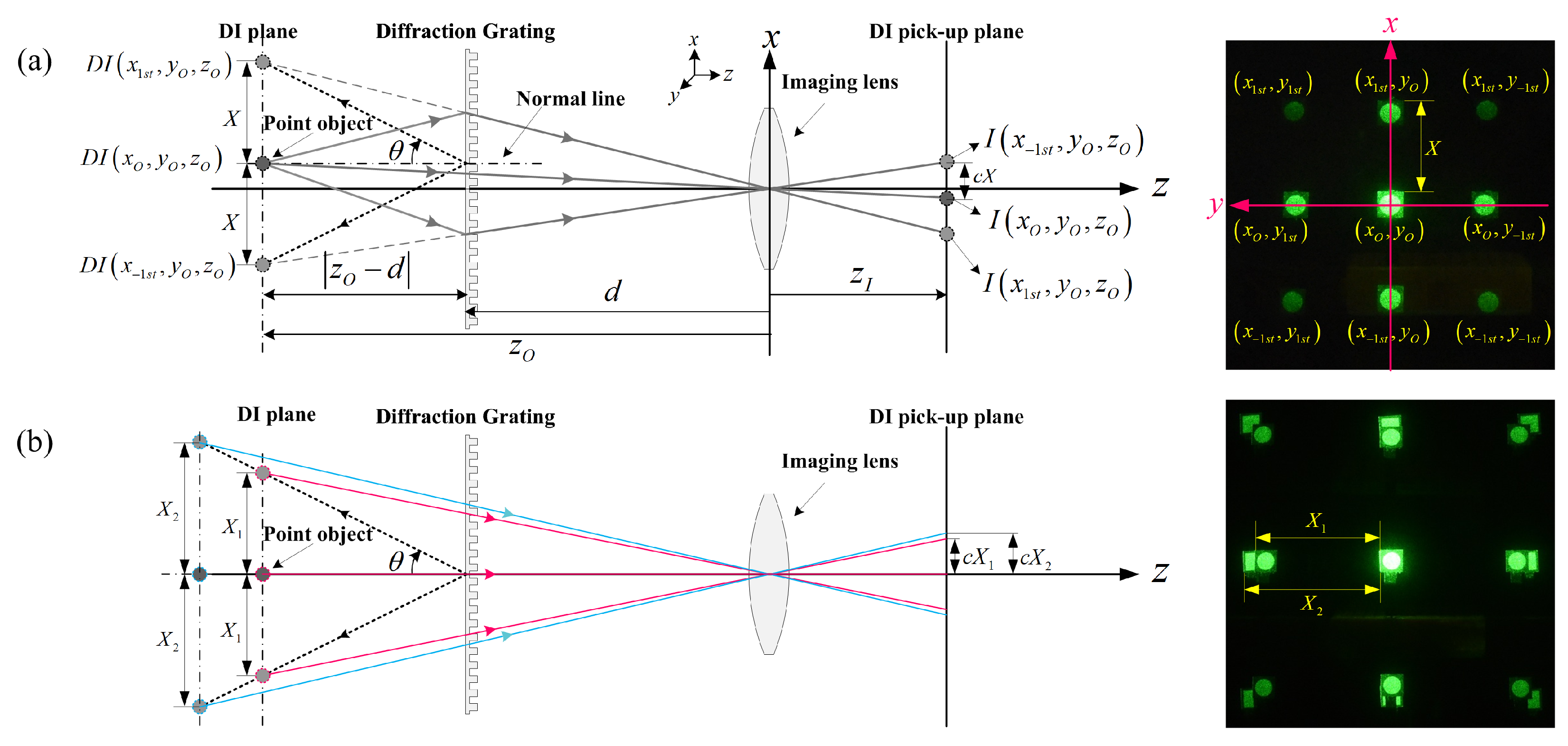

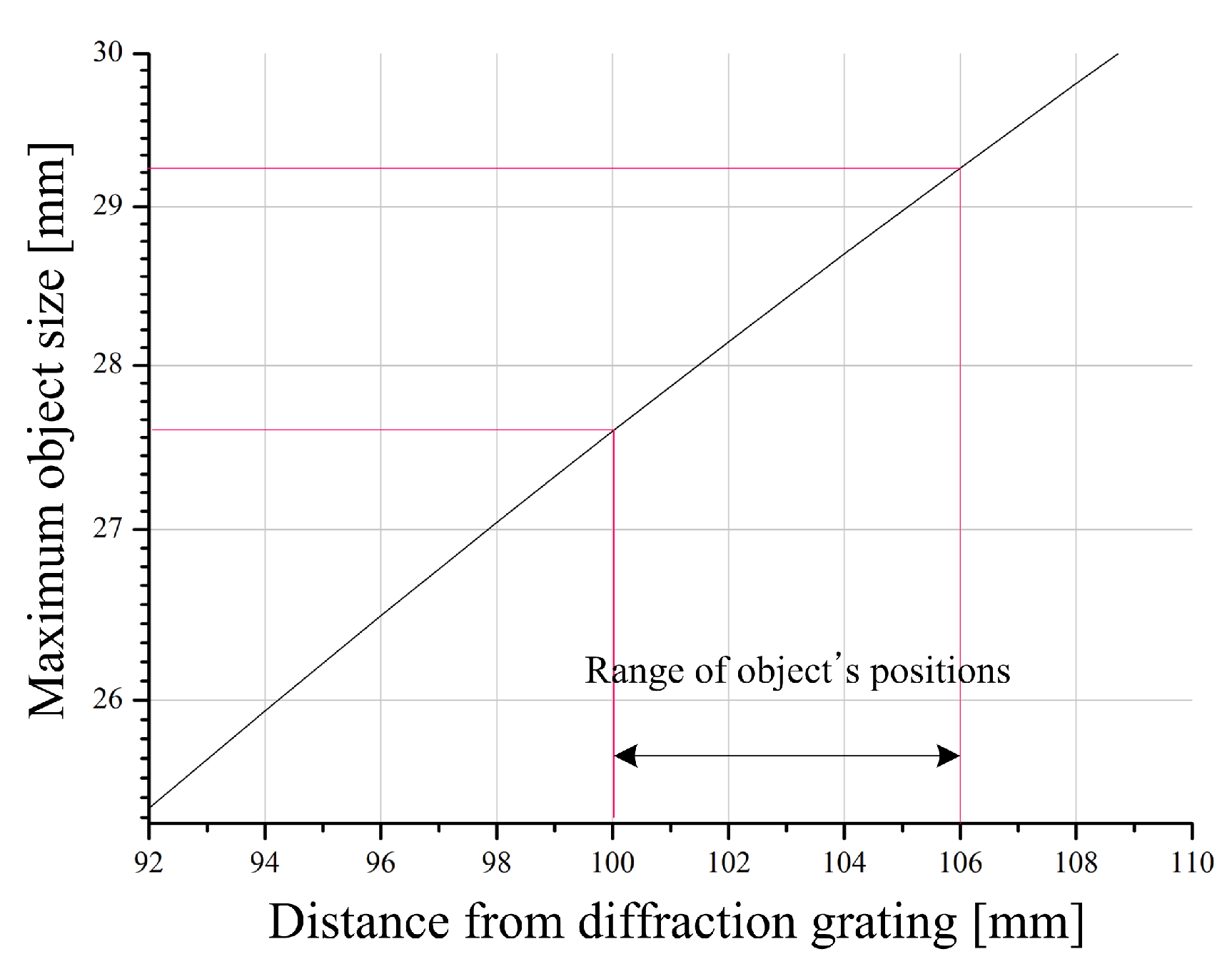

2.1. Geometric Relations

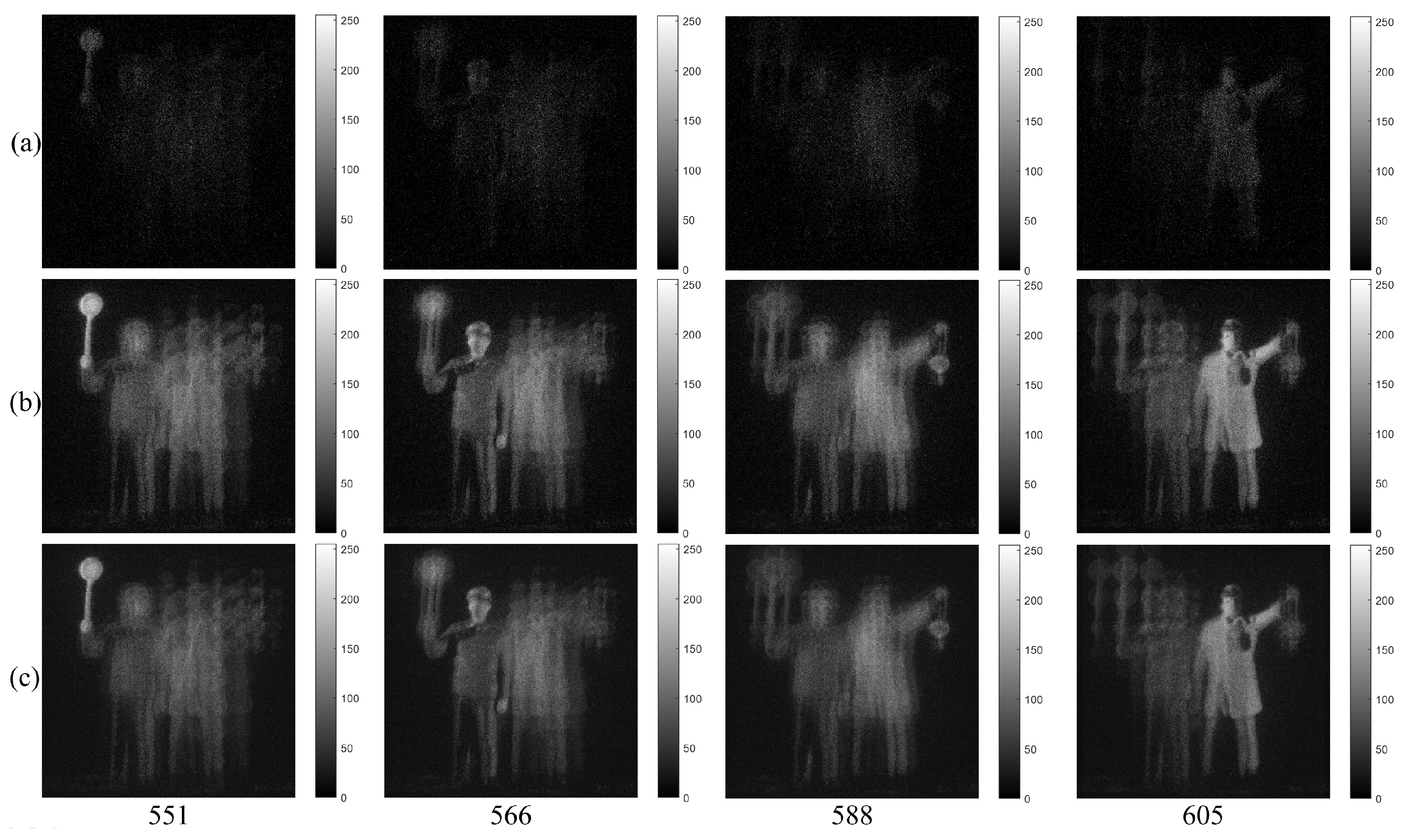

2.2. Image Formation in Diffraction Grating Imaging

2.3. Computational Reconstruction of Diffraction Grating Imaging

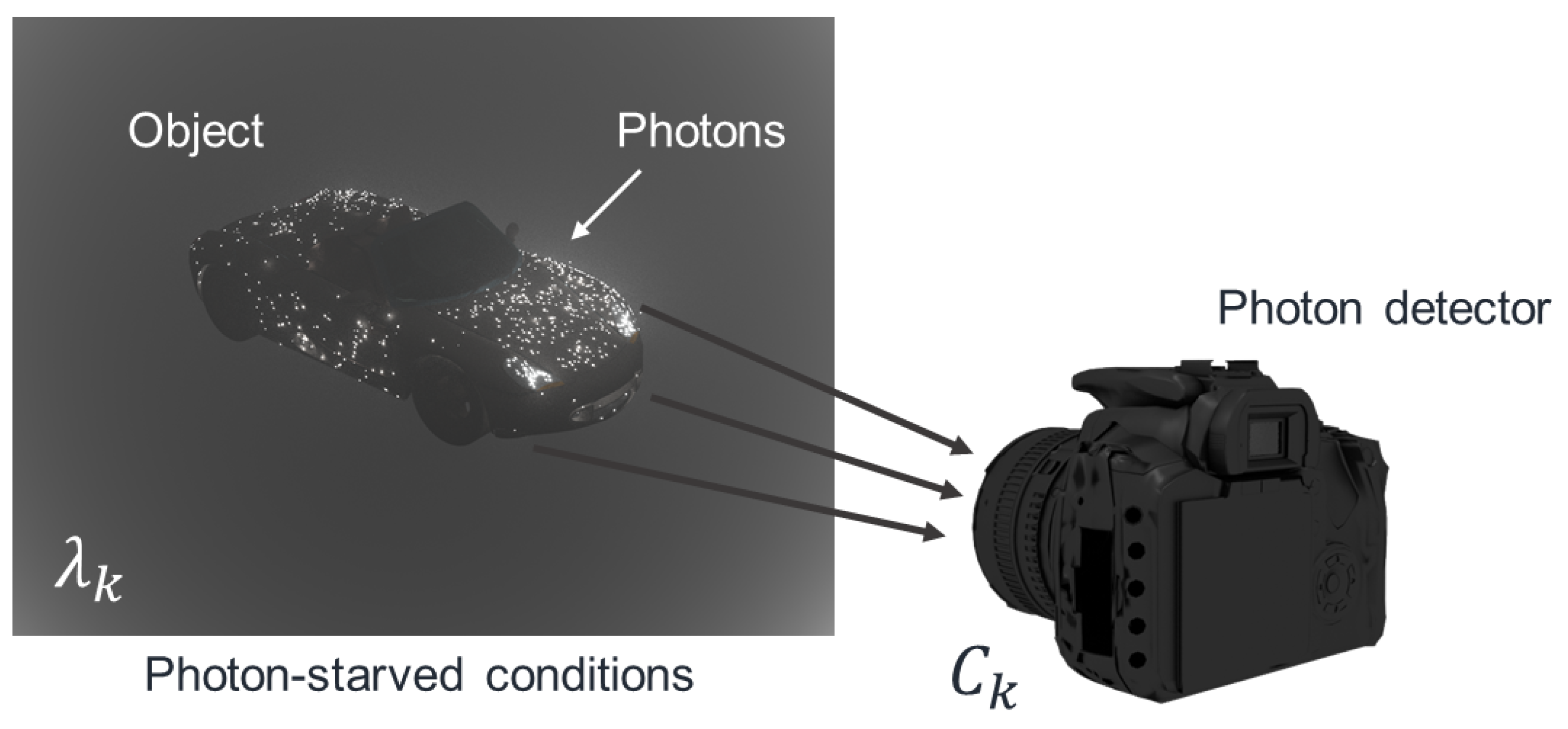

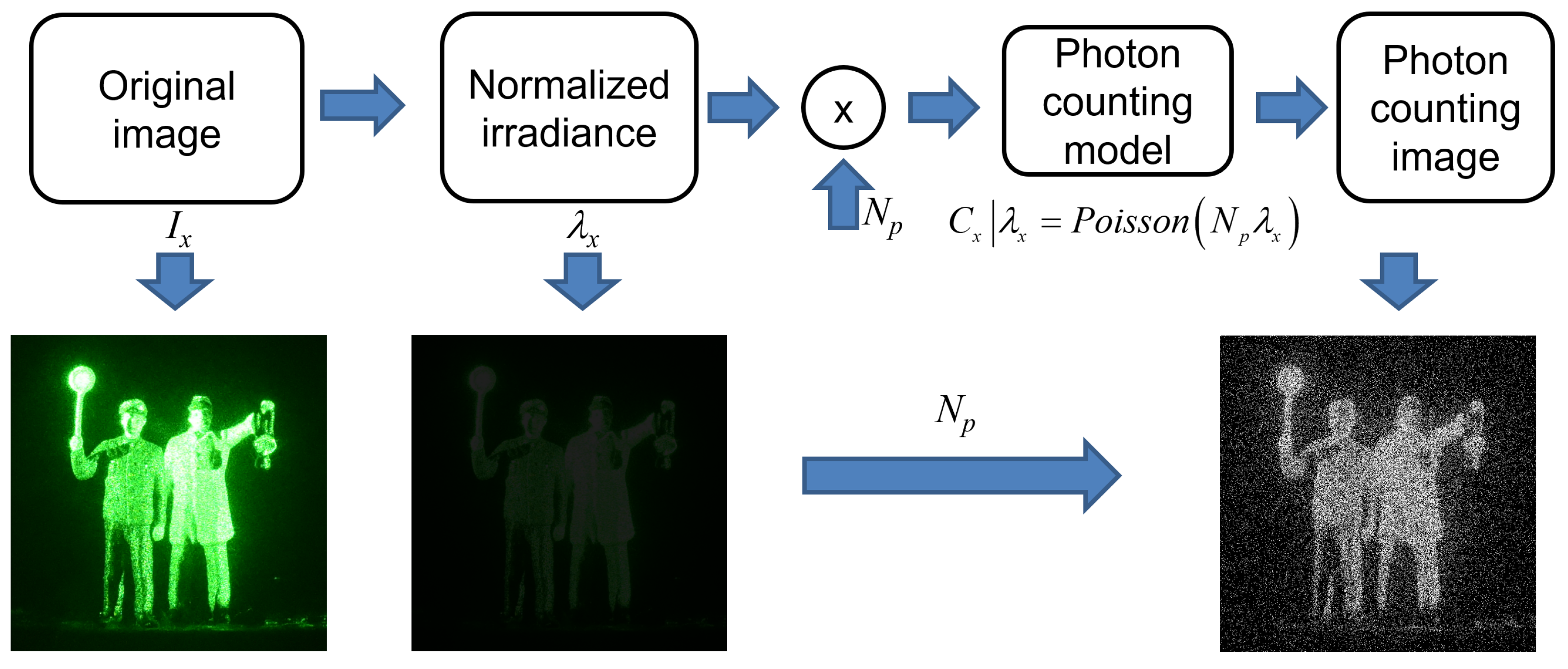

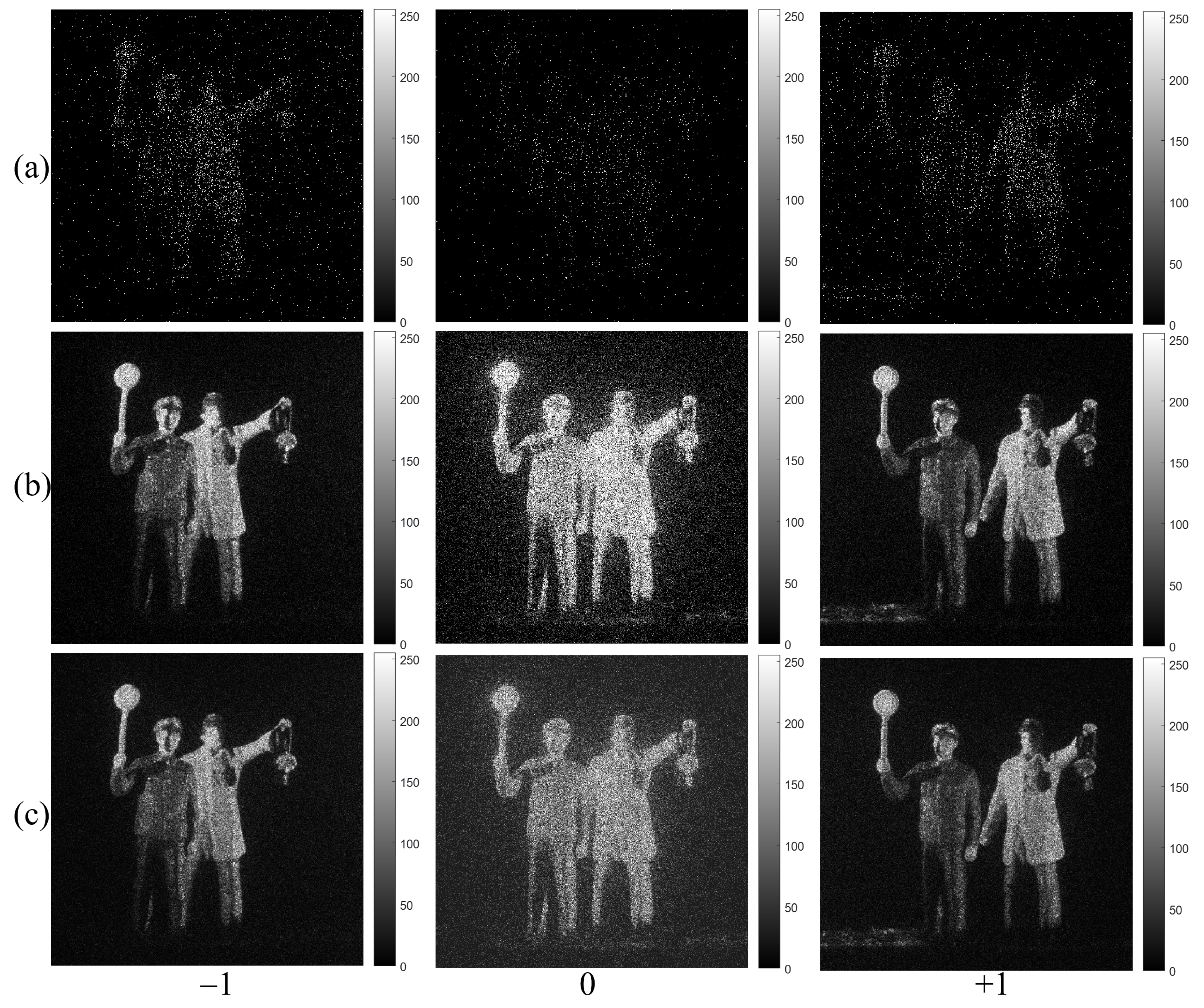

3. Photon Counting Method

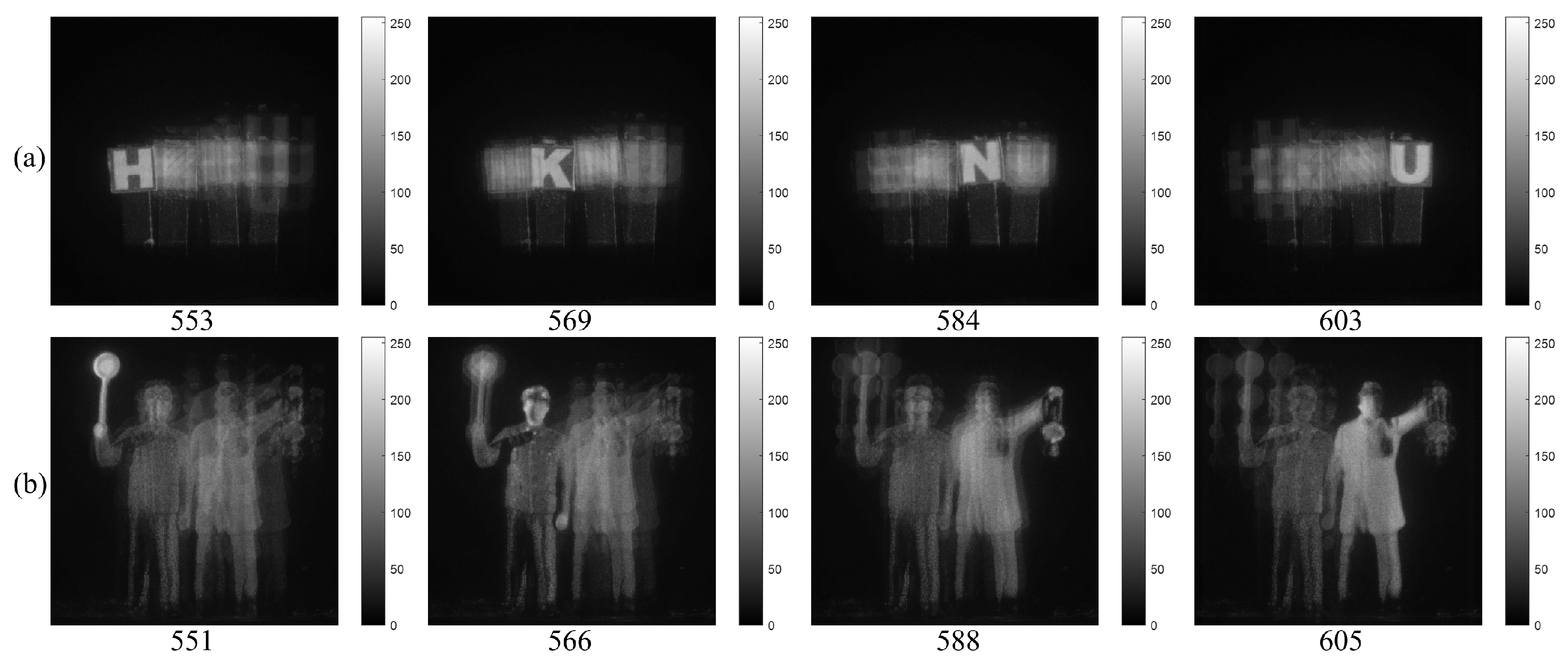
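The sketch below illustrates the standard photon-counting model used in the photon counting integral imaging literature (for example, Tavakoli et al., 2008). It is a minimal sketch under stated assumptions, not the authors' implementation. The scene irradiance is normalized so that its pixels sum to one. Each pixel's photon count is then drawn from a Poisson distribution whose mean is N_p times the normalized irradiance, where N_p is the expected total number of photons in the image. For a single photon-counted image, the maximum likelihood estimate of the normalized irradiance is the count divided by N_p. The function names and the list-of-lists image representation are hypothetical. Knuth's Poisson sampler is used only so the example needs nothing beyond the standard library; it is efficient only for the small per-pixel means typical of photon-starved conditions.

```python
import math
import random


def normalize_irradiance(image):
    """Scale a 2D irradiance image (list of rows) so that all pixels sum to 1."""
    total = sum(sum(row) for row in image)
    if total <= 0:
        raise ValueError("image must have positive total irradiance")
    return [[value / total for value in row] for row in image]


def poisson_sample(mean, rng):
    """Draw one Poisson variate with Knuth's multiplication method.

    Runtime grows with `mean`, which is acceptable here because photon-starved
    pixels have very small expected counts (an assumption of this sketch).
    """
    if mean <= 0:
        return 0
    threshold = math.exp(-mean)
    count, product = 0, 1.0
    while True:
        product *= rng.random()
        if product <= threshold:
            return count
        count += 1


def photon_count(image, n_photons, rng):
    """Simulate a photon-counted image: C(x) ~ Poisson(N_p * lambda(x))."""
    lam = normalize_irradiance(image)
    return [[poisson_sample(n_photons * value, rng) for value in row] for row in lam]


def mle_irradiance(counts, n_photons):
    """Maximum likelihood estimate of the normalized irradiance: C(x) / N_p."""
    if n_photons <= 0:
        raise ValueError("n_photons must be positive")
    return [[c / n_photons for c in row] for row in counts]


if __name__ == "__main__":
    rng = random.Random(0)
    scene = [[10.0, 40.0], [30.0, 20.0]]  # hypothetical 2x2 irradiance values
    counts = photon_count(scene, n_photons=20, rng=rng)
    print(counts)
    print(mle_irradiance(counts, n_photons=20))
```

Because the expected value of C(x) is N_p times the normalized irradiance, the estimate C(x)/N_p is unbiased, but with a small N_p most pixels record zero photons, which is why a single photon-counted image looks sparse and noisy.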

4. 3D Reconstruction under Photon-Starved Conditions Using Lensless 3D Imaging

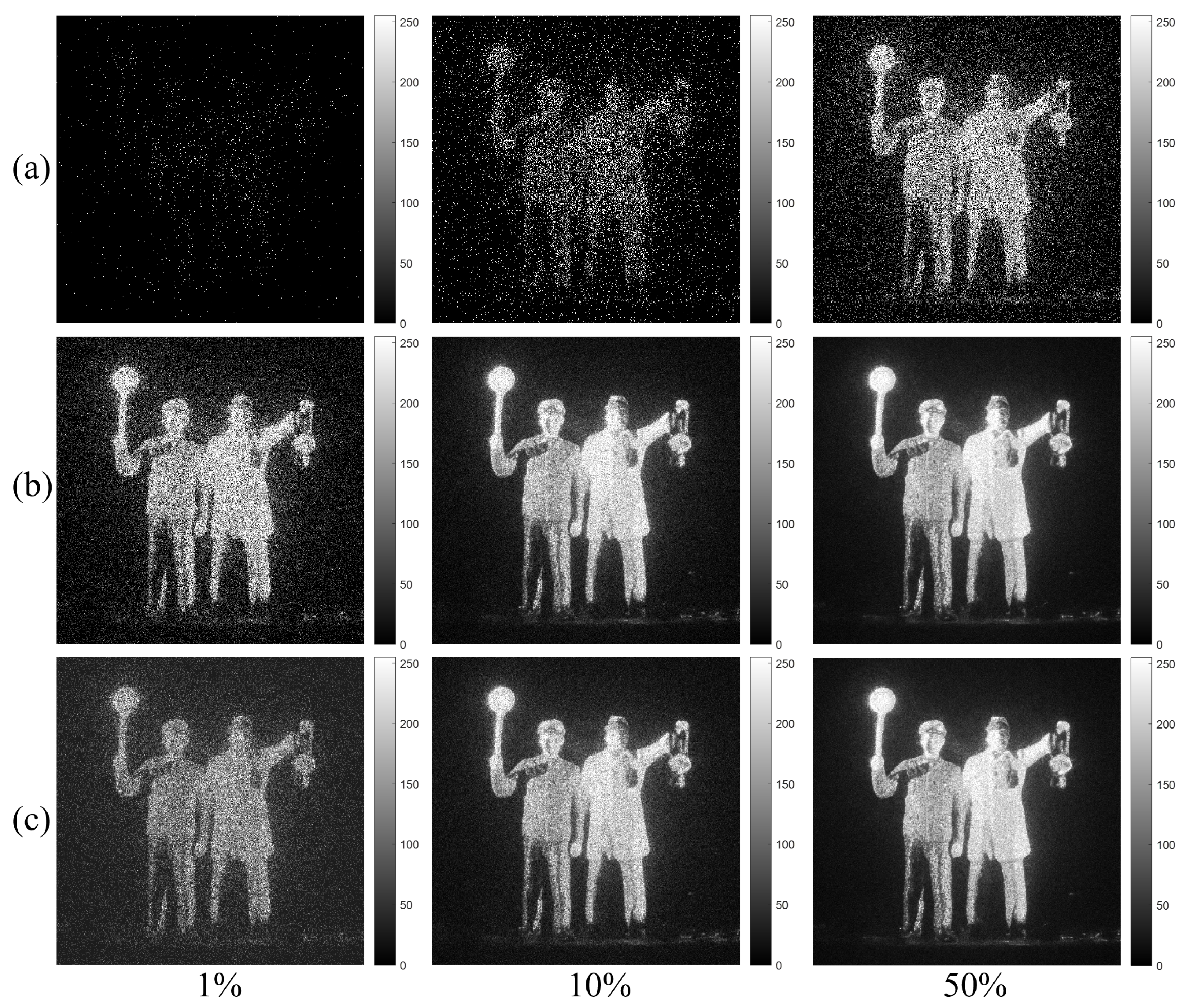

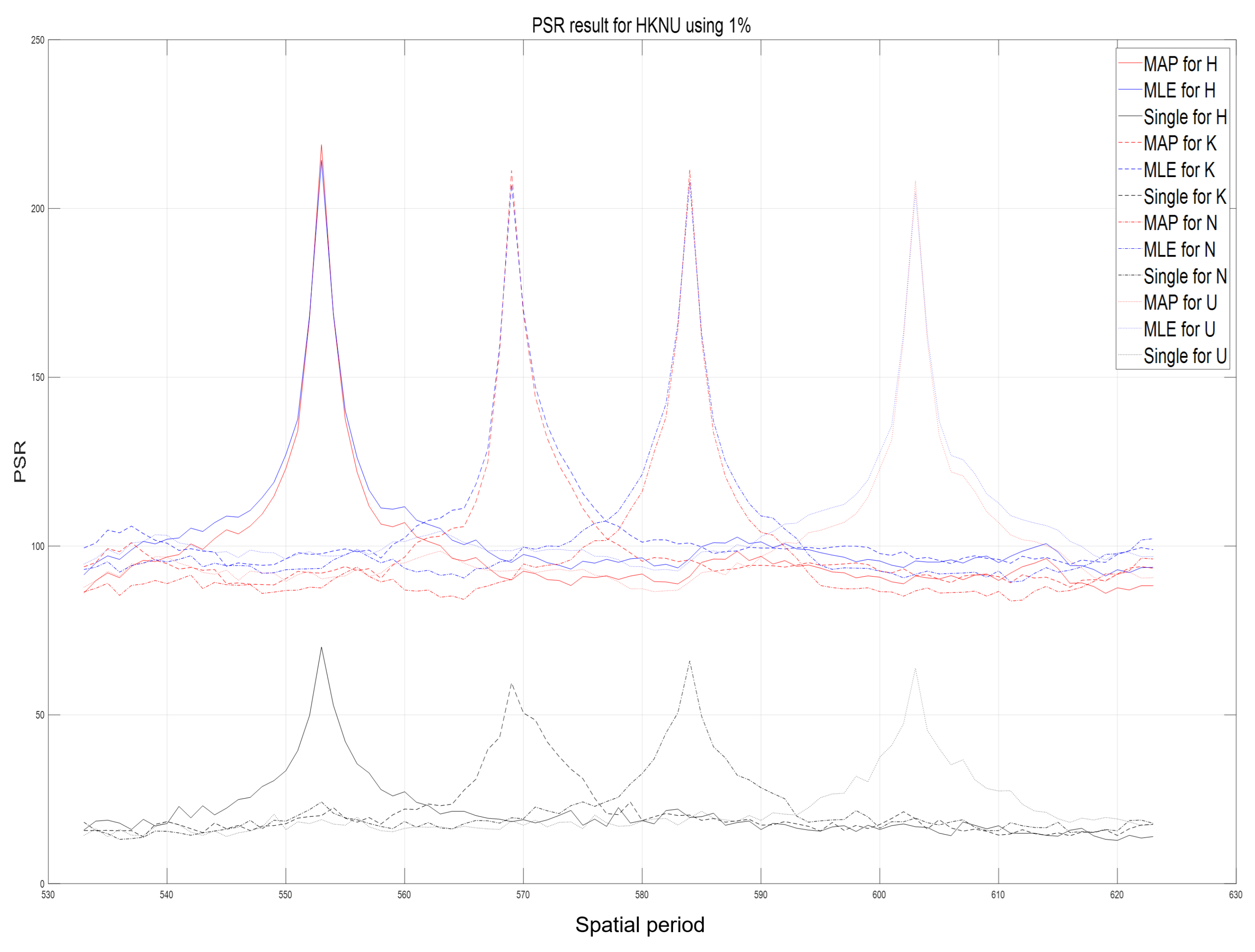

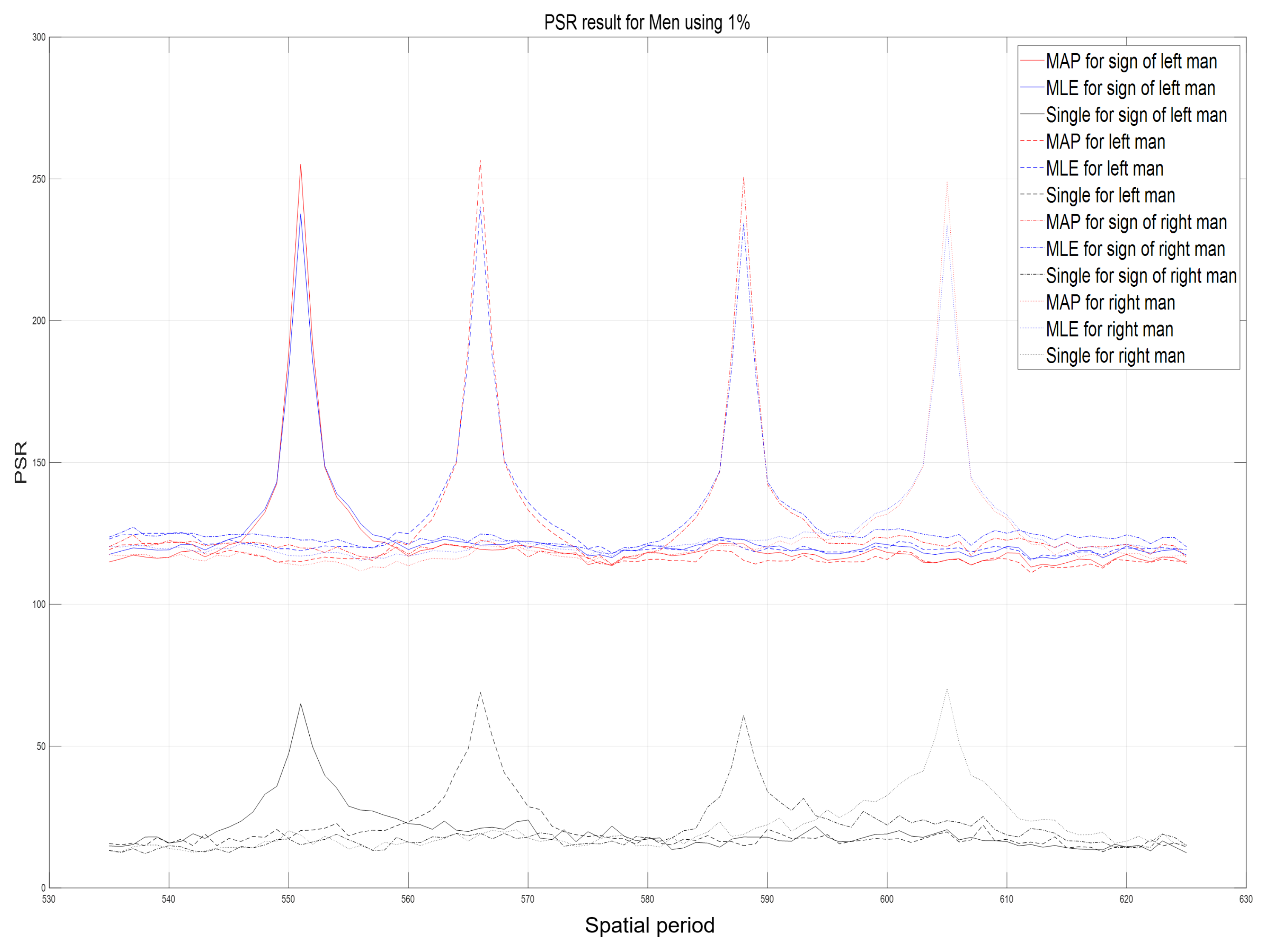

5. Experimental Results

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MAP | Maximum A Posteriori |
| MLE | Maximum Likelihood Estimation |
| DI | Diffraction Image |
| DIA | Diffraction Image Array |


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jang, J.-Y.; Cho, M. Lensless Three-Dimensional Imaging under Photon-Starved Conditions. Sensors 2023, 23, 2336. https://doi.org/10.3390/s23042336
