Abstract

Recently, the internet of things (IoT) and its services have emerged alongside cloud computing, a modern technology for processing big data. A central problem in fog computing is selecting and placing data replicas across nodes. Previous studies relied on original swarm intelligence algorithms and mathematical models; we therefore propose a novel hybrid method based on two modern metaheuristic algorithms. This paper combines the Aquila Optimizer (AO) algorithm with elephant herding optimization (EHO) to solve dynamic data replication problems in the fog computing environment. The proposed method defines a set of objectives that determine data transmission paths, choose the least cost path, reduce network bottlenecks and bandwidth consumption, balance load, and speed up data transfer between nodes in cloud computing. The hybrid method, AOEHO, finds the optimal and least expensive path, determines the best replication via cloud computing, and determines the optimal nodes on which to select and place data replicas near users. Moreover, we developed a multi-objective optimization based on the proposed AOEHO to decrease bandwidth and enhance load balancing and cloud throughput. The proposed method is evaluated on data replication using seven criteria: data replication access, distance, cost, availability, SBER, popularity, and the Floyd algorithm. The experimental results show that the proposed AOEHO strategy outperforms other algorithms in terms of bandwidth, distance, load balancing, data transmission, and least cost path.

1. Introduction

Nowadays, cloud computing has become essential for companies and large organizations that handle big data. The internet of things (IoT) uses cloud computing to transfer sensor data through cloud environments [1,2,3,4,5,6,7]. Cloud computing provides many services to users on a pay-per-use basis and is used in farms, networks, factories, companies, and other industrial environments [8,9,10,11,12,13,14,15]. The IoT is also used for data transfer in many large and medium-sized companies, as well as in the military, the police, and medicine. Cloud computing consists of infrastructure as a service (IaaS), platform as a service (PaaS), and, as the top layer, software as a service (SaaS) [16,17,18,19,20,21,22]. In addition, cloud computing environments are cheaper than other systems and offer many advantages, such as pay-per-use pricing, scalability, flexibility, and high data and system availability. Load balancing across the network is also essential to reduce user waiting time. Cloud computing maximizes the utilization of the available resources within each user’s budget [23,24,25,26,27].

Data condensation applications, including static and dynamic replication, relocation of data from remote geographic locations, and placement near users, have been examined. Three critical problems arise: (1) what data should be replicated, (2) when should the data be replicated, and (3) where should the new replicas be placed? These are the three main open issues that must be handled for data replication in cloud computing [28]. Determining the optimal and shortest path is vital for moving replicas across nodes and placing them near users. Balancing data availability across nodes and keeping files highly available across the cloud reduces user waiting time. Replication preserves data, improves load balance, and fetches files from remote sites according to users’ demands. The proposed strategy also provides data to users in different geographical locations according to their budgets, and it identifies the most popular files and places them in the path of users [29,30].

Motivation and Contributions

The motivation of this work is to use swarm intelligence to enhance file replication in an IoT-based cloud environment. The main issue is the selection and placement of data replicas with respect to the least cost path, distance, SBER, time, and cost, so that replicas can be accessed quickly across nodes and the load on the cloud environment is reduced. The three problems are: (1) what data should be replicated?; (2) when should the data be replicated?; and (3) where should the new replicas be placed? [28]. These three main open questions must be tackled for data replication in cloud computing. The primary goal is to select and place data replicas in the data center with less time, response time, and cost while using the least cost path.

The major contributions of the article are given as follows:

- Designing a discrete AOEHO strategy for solving the dynamic data replication problem in a fog computing environment.

- Improving a swarm intelligence technique by hybridizing the aquila optimizer (AO) algorithm with elephant herding optimization (EHO) for solving dynamic data replication problems in the fog computing environment.

- Developing a multi-objective optimization based on the proposed AOEHO to decrease bandwidth and enhance load balancing and cloud throughput. Data replication is evaluated using seven criteria: data replication access, distance, cost, availability, SBER, popularity, and the Floyd algorithm.

- Showing experimentally that the AOEHO strategy outperforms other algorithms in terms of bandwidth, distance, load balancing, data transmission, and least cost path.

2. Related Work

Many related studies have researched data replication strategies in the cloud, as follows:

K. Sarwar et al. [31] created a scheme for data replication among nodes that preserves privacy and secrecy in fog computing. They suggested two cross-node replication privacy techniques, implemented for data security, reliability, and authentication. Compared to other algorithms, the suggested approach fared better regarding memory usage, cost, confidentiality, and privacy.

D. Chen et al. suggested the first decentralized system, BOSSA, which works with all parties on blockchain platforms and supports data retrieval and repeatability. BOSSA also uses privacy-enhancing technology to stop decentralized peers, such as blockchain nodes, from drawing personal conclusions from public data. The authors constructed a BOSSA-based prototype using smart contracts on the Ethereum blockchain and presented a security analysis covering integrity, privacy, and reliability. Their evaluation indicated that the proposal is workable [32].

C. Li et al. [33] proposed an optimization plan for task scheduling that accounts for time, cost, and energy, based on the Lagrangian relaxation method. This technique considers load balancing, storage, data dependency, data transfer, time, cost, and bandwidth to achieve the shortest data transmission time between nodes. The proposed fault-tolerant task scheduling approach is directed toward cloudlets. The experiments supported the effectiveness of the suggested algorithm in selecting the best site and transferring data using cloud computing.

T. Shiet et al., presented a novel strategy called multi-cloud application deployment (MCApp). MCApp combines domain-specific big-node search with iterative mixed integer linear programming to streamline the deployment of data replication and user requests. Trials on actual data and datasets showed that MCApp performs noticeably better than other algorithms [34]. A. Majed et al. developed a hybrid strategy for peer-to-peer data replication in cloud environments. It efficiently selects the best nodes in the network and selects and places the most accessible and most frequently used user data files. The experiments revealed enhanced network functionality and decreased user waiting time [35].

C. LiA et al., suggested an approach based on the Lagrangian relaxation technique for optimal data replication among nodes in cloud computing. The approach balances transmission time, bandwidth, and load, and it uses the Floyd algorithm to save cost and bandwidth. The results demonstrated the superiority of the suggested algorithm over competing algorithms [36].

A. Khelifa et al., introduced a plan for regular and dynamic data replication in cloud computing. The proposed approach aims to decrease the time needed to serve user requests, achieve load balancing, decrease waiting times, and expedite data access and data transmission in cloud computing. Additionally, a fuzzy logic approach was implemented for selecting and placing data replicas among nodes. The suggested algorithm performed better than other algorithms [37].

B. Mohammadi et al., presented a cloud computing algorithm for selecting and configuring data replication among nodes. It reduces user waiting time by using a hybrid of fuzzy logic and ant colony optimization to identify the most appropriate and effective nodes for replica placement. The suggested algorithm fared better than the competing algorithms [38]. An overview of the given studies is presented in Table 1.

Table 1.

Comparison of the reviewed select and placement data replication in the cloud environment.

3. Suggested System and Discussion

3.1. Proposed System and Structure

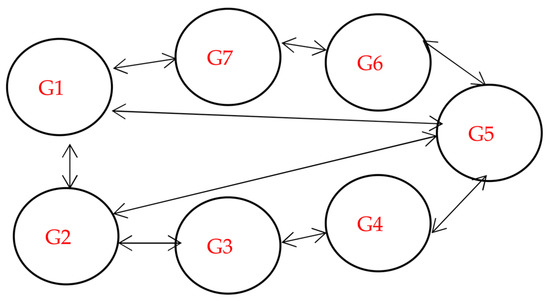

Figure 1 shows geographically dispersed nodes, each containing a host, virtual machines (VMs), memory, a CPU, blocks, etc. The proposed system comprises file replication, cloudlets, files, blocks, DCs, hosts, VMs, brokers, replica management, and a replica catalog. The broker acts as a mediator between the user and the DCs to complete specific activities, such as accessing data replicas across nodes or remote geographic locations. The different DCs are filled with many files, f1, f2, …, fn, which are randomly distributed to the other DCs in the following stages. The suggested system is split into two components: selecting and placing dynamic data replicas across the nodes, and accessing data over the quickest and least expensive route. To place data replicas close to users and select the quickest and best route to the ideal node, replica determination is based on the files that users access most often and most easily over time. We combined MOO with HHO to achieve the shortest and lowest cost path among nodes in order to maximize cloud computing performance across nodes.

Figure 1.

Proposed model for data replication in fog computing.

The data centers are modeled as DCs = {dc1, dc2, …, dcs}, the hosts (physical machines) as PMs = {pm1, pm2, …, pmy}, the virtual machines as VMs = {vm1, vm2, …, vmz}, the files as F = {f1, f2, …, fx}, the blocks as B = {b1, b2, …, bm}, and the geographical regions in fog computing as G = {g1, g2, …, gn}.

This section describes the strategy for selecting and placing data replicas using a hybrid of the aquila optimizer (AO) algorithm and elephant herding optimization (EHO) in fog computing. We assume that the proposed system is composed of a certain number of fog nodes (consisting of regional points of presence (RPOPs), local points of presence (LPOPs), and gateways), data centers (DCs), and internet of things components and equipment, such as RFID and sensors.

In the proposed strategy, each regional point of presence (RPOP) covers a different geographical area and the local points of presence (LPOPs) within it. Services are deployed on data nodes, on the proposed fog nodes, or on IoT sensors. The fog broker, located in the fog node layer, is a crucial part of the suggested method. It consists of three components: the task manager, the resource monitoring service, and the task scheduler. Our IoT-based dynamic data replication approach is essential to the fog computing system. Transporting data over fog computing requires a series of configurations, including selecting and placing data replicas across nodes. We organized the suggested method across various geographical areas to select and place data replicas between nodes in fog computing. The AO algorithm uses the EHO algorithm to transfer data via DCs along the least cost path with minimum bandwidth. MOO with the Floyd algorithm was also used to reduce cost and bandwidth and to speed up data transmission in the fog cloud.

3.2. Aquila Optimizer (AO)

The aquila, a bird of prey, is regarded as one of the most intelligent and skilled hunters, with a remarkable ability to catch prey. The following sections explain the aquila algorithm and how our proposed algorithm works [39].

- Step 1: Expanded exploration

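The aquila soars high to survey the search area on a large scale and then attacks the prey vertically. The equation can be represented as follows:

$$X_1(t+1) = X_{best}(t) \times \left(1 - \frac{t}{T}\right) + \left(X_M(t) - X_{best}(t) \times R\right) \tag{1}$$

The mean position $X_M(t)$ can also be calculated as follows:

$$X_M(t) = \frac{1}{N} \sum_{i=1}^{N} X_i(t) \tag{2}$$

where $X_{best}(t)$ is the best solution obtained so far, $X_M(t)$ is the mean of the current solutions, t and T are the current iteration and the maximum number of iterations, N is the population size, and R is a random number between 0 and 1.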

- Step 2: Narrowed exploration

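The aquila circles around the prey within the specified area and attacks it with short glides. This is the most common way for the aquila to approach and attack prey. The equation can be represented as follows:

$$X_2(t+1) = X_{best}(t) \times Levy(D) + X_R(t) + (y - x) \times R \tag{3}$$

$$Levy(D) = s \times \frac{u \times \sigma}{|v|^{1/\beta}} \tag{4}$$

$$\sigma = \left( \frac{\Gamma(1+\beta) \times \sin\left(\frac{\pi \beta}{2}\right)}{\Gamma\left(\frac{1+\beta}{2}\right) \times \beta \times 2^{\frac{\beta-1}{2}}} \right) \tag{5}$$

where $X_R(t)$ is a solution taken at random from the population, $Levy(D)$ is the Lévy flight distribution function, D is the dimension size, s and $\beta$ are constant values equal to 0.01 and 1.5, u and v are random numbers between 0 and 1, and y and x are used to present the spiral shape in the search. They can be calculated as follows:

$$y = r \times \cos(\theta) \tag{6}$$

$$x = r \times \sin(\theta) \tag{7}$$

$$r = r_1 + U \times D_1 \tag{8}$$

$$\theta = -\omega \times D_1 + \theta_1 \tag{9}$$

$$\theta_1 = \frac{3\pi}{2} \tag{10}$$

where $r_1$ fixes the number of search cycles and takes a value between 1 and 20, $D_1$ is composed of integer numbers from 1 to the dimension size (D), U is a small constant (0.00565 in [39]), and $\omega$ equals 0.005.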

- Step 3: Expanded exploitation

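The aquila exploits the selected part of the foraging area to close in on the prey. Once the prey area has been located, the aquila descends vertically on it as a preliminary attack. The behavior is represented as follows:

$$X_3(t+1) = \left(X_{best}(t) - X_M(t)\right) \times \alpha - R + \left((UB_j - LB_j) \times R + LB_j\right) \times \delta \tag{11}$$

where $\alpha$ and $\delta$ are the exploitation adjustment parameters, both fixed to 0.1, and $UB_j$ and $LB_j$ are the upper and lower bounds of the problem.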

- Step 4: Narrowed exploitation

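The aquila chases the prey along its escape path and attacks it on the ground. The equation can be represented as follows:

$$X_4(t+1) = QF(t) \times X_{best}(t) - \left(G_1 \times X(t) \times R\right) - G_2 \times Levy(D) + R \times G_1 \tag{12}$$

$$QF(t) = t^{\frac{2 \times R - 1}{(1 - T)^2}} \tag{13}$$

$$G_1 = 2 \times R - 1 \tag{14}$$

$$G_2 = 2 \times \left(1 - \frac{t}{T}\right) \tag{15}$$

$G_1$ denotes the movement parameter of the aquila and is a random number in [–1, 1]. $G_2$ denotes the flight slope when chasing prey. $X(t)$ is the current position, and $QF(t)$ represents the quality function value.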
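The four AO search steps described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: it assumes the standard AO update rules from [39], the greedy acceptance rule that Algorithm 1 uses, simple clipping to the bounds, and a continuous search space. The names `levy`, `ao_step`, and `ao_minimize` are hypothetical, and the EHO operators are not included.

```python
import math
import random


def levy(dim, s=0.01, beta=1.5, rng=random):
    """Levy-flight step for each dimension, with s = 0.01 and beta = 1.5."""
    sigma = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
             / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)))
    steps = []
    for _ in range(dim):
        u = rng.random()
        v = max(rng.random(), 1e-12)  # guard against division by zero
        steps.append(s * u * sigma / abs(v) ** (1 / beta))
    return steps


def ao_step(pop, i, best, t, T, lb, ub, alpha=0.1, delta=0.1, rng=random):
    """Return one candidate position for individual i (t counts from 1, T > 1)."""
    n, dim = len(pop), len(best)
    mean = [sum(p[j] for p in pop) / n for j in range(dim)]
    if t <= (2 / 3) * T:
        if rng.random() <= 0.5:
            # Step 1: expanded exploration (high soar with vertical stoop).
            r = rng.random()
            return [best[j] * (1 - t / T) + (mean[j] - best[j] * r)
                    for j in range(dim)]
        # Step 2: narrowed exploration (spiral flight around the prey).
        lv = levy(dim, rng=rng)
        x_rand = pop[rng.randrange(n)]
        r1 = rng.randint(1, 20)
        cand = []
        for j in range(dim):
            d1 = j + 1
            radius = r1 + 0.00565 * d1
            theta = -0.005 * d1 + 1.5 * math.pi
            y, x = radius * math.cos(theta), radius * math.sin(theta)
            cand.append(best[j] * lv[j] + x_rand[j] + (y - x) * rng.random())
        return cand
    if rng.random() <= 0.5:
        # Step 3: expanded exploitation (low flight with slow descent).
        return [(best[j] - mean[j]) * alpha - rng.random()
                + ((ub - lb) * rng.random() + lb) * delta for j in range(dim)]
    # Step 4: narrowed exploitation (chase and grab the prey).
    qf = t ** ((2 * rng.random() - 1) / (1 - T) ** 2)
    g1 = 2 * rng.random() - 1
    g2 = 2 * (1 - t / T)
    lv = levy(dim, rng=rng)
    return [qf * best[j] - g1 * pop[i][j] * rng.random() - g2 * lv[j]
            + rng.random() * g1 for j in range(dim)]


def ao_minimize(f, dim, lb, ub, n=20, T=100, seed=0):
    """Minimize f over [lb, ub]^dim with greedy acceptance of candidates."""
    rng = random.Random(seed)
    pop = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n)]
    fit = [f(p) for p in pop]
    b = min(range(n), key=fit.__getitem__)
    best, best_fit = pop[b][:], fit[b]
    for t in range(1, T + 1):
        for i in range(n):
            raw = ao_step(pop, i, best, t, T, lb, ub, rng=rng)
            cand = [min(max(c, lb), ub) for c in raw]  # clip to the bounds
            fc = f(cand)
            if fc < fit[i]:
                pop[i], fit[i] = cand, fc
                if fc < best_fit:
                    best, best_fit = cand[:], fc
    return best, best_fit
```

For example, `ao_minimize(lambda x: sum(v * v for v in x), dim=3, lb=-10.0, ub=10.0)` searches for the minimum of a sphere function. In the replication setting, the objective would instead score a candidate replica placement, which requires a discrete encoding that this sketch does not cover.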

3.3. Elephant Herding Optimization

3.3.1. Clan-Updating Operator

Elephants live in clans, and each clan is led by a matriarch [40,41]. The position of each elephant is updated under the influence of the matriarch as follows:

$$x_{new,ci,j} = x_{ci,j} + \alpha \times \left(x_{best,ci} - x_{ci,j}\right) \times r$$

where $x_{new,ci,j}$ and $x_{ci,j}$ present the new and old positions for elephant j in clan ci. $x_{best,ci}$ is the matriarch, representing the clan’s best elephant. $\alpha$ is in the range [0, 1], and r is a random number in the range [0, 1]. The best elephant can be represented as follows:

$$x_{new,ci,j} = \beta \times x_{center,ci}$$

$x_{center,ci}$ is the center individual of clan ci, and $\beta$ is in the range [0, 1].

The center can be represented as follows in the d-th dimension:

$$x_{center,ci,d} = \frac{1}{n_{ci}} \times \sum_{j=1}^{n_{ci}} x_{ci,j,d}$$

$x_{ci,j,d}$ represents the d-th dimension of the elephant individual $x_{ci,j}$, and $n_{ci}$ indicates the number of elephants in clan ci.

3.3.2. Separating Operator

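Male elephants leave their family group and live separately. In the optimization process, this behavior is modeled by replacing the elephant with the worst fitness in each clan at every generation. The behavior can be represented as follows:

$$x_{worst,ci} = x_{min} + \left(x_{max} - x_{min} + 1\right) \times rand$$

where $x_{max}$ and $x_{min}$ are the upper and lower bounds of the individual's position, $x_{worst,ci}$ indicates the worst individual in clan ci, and rand is a random number between 0 and 1.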
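The two EHO operators can be illustrated with a short Python sketch. It assumes the standard EHO rules from [40,41] for a minimization problem with scalar bounds; the function names `clan_update` and `separate`, the default values of `alpha` and `beta`, and the clipping to the upper bound are assumptions made for illustration.

```python
import random


def clan_update(clan, fitness, alpha=0.5, beta=0.1, rng=random):
    """Clan-updating operator: move each elephant toward the matriarch.

    clan is a list of positions (lists of floats); lower fitness is better.
    The matriarch itself is moved to beta times the clan center.
    """
    n, dim = len(clan), len(clan[0])
    best_idx = min(range(n), key=lambda j: fitness(clan[j]))
    best = clan[best_idx]
    center = [sum(e[d] for e in clan) / n for d in range(dim)]
    updated = []
    for j, elephant in enumerate(clan):
        if j == best_idx:
            updated.append([beta * c for c in center])
        else:
            updated.append([elephant[d] + alpha * (best[d] - elephant[d]) * rng.random()
                            for d in range(dim)])
    return updated


def separate(clan, fitness, lb, ub, rng=random):
    """Separating operator: re-initialize the worst elephant of the clan."""
    dim = len(clan[0])
    worst_idx = max(range(len(clan)), key=lambda j: fitness(clan[j]))
    result = [e[:] for e in clan]
    # The "+ 1" follows the standard EHO rule; clipping to ub is an assumption.
    result[worst_idx] = [min(ub, lb + (ub - lb + 1) * rng.random())
                         for _ in range(dim)]
    return result
```

In a hybrid such as AOEHO, operators of this kind would be applied to the population after each AO iteration; how the population is split into clans is not specified in the text and is left out here.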

3.4. Proposed Swarm Intelligence for Data Replication

This section describes the proposed strategy for selecting and placing replicas across nodes in cloud computing environments. For the proposed technique, the shortest path, bandwidth, time, cost, and distance were calculated based on the internet of things via fog computing. iFogSim was used to test the proposed strategy.

3.4.1. Cost and Time of Replication

Cost is a major factor for users to request replication from different geographical locations. The cost varies from one user to another according to the proposed system, the different infrastructure, and the budget of each user. The equation is as follows:

where

Cost of data set

Data replica in the region

A binary decision variable q

Price of replica

Bandwidth network between replicas in the region

3.4.2. Shortest Paths Problem (SPP) between Nodes Based on the Floyd

This study addresses the problem of selecting and placing dynamic data replicas across geographically dispersed nodes along the shortest and best path in terms of data transmission and bandwidth [40]. In fog computing, the Floyd algorithm finds the shortest path between nodes. Applying it yields the weighted length of the shortest path between each pair of DCs. The following is a representation of the equations:

ai,j is the weight of the path from node i to node j, and m is the order of the matrix (the number of nodes).

The initial weighted adjacency matrix is A = [ai,j]m × m.

The state transition equation is as follows:

map[i, j] := min {map[i, k] + map[k, j], map[i, j]}

map[i, j] is the shortest distance from i to j.

k is the breakpoint (intermediate node) that is exhaustively enumerated for every pair i and j.
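The state transition above is the core of the Floyd (Floyd-Warshall) algorithm. The following minimal Python sketch applies it to a small weighted adjacency matrix and also recovers the node sequence of a shortest path. The four-node matrix and the names `floyd_warshall` and `shortest_path` are illustrative assumptions, not data or code from the experiments.

```python
import math

INF = math.inf


def floyd_warshall(weights):
    """All-pairs shortest paths on an m x m weighted adjacency matrix.

    weights[i][j] is the cost of the direct link i -> j (INF if absent).
    Returns (dist, nxt), where nxt[i][j] is the node that follows i on a
    shortest path from i to j.
    """
    m = len(weights)
    dist = [row[:] for row in weights]
    nxt = [[j if weights[i][j] < INF else None for j in range(m)]
           for i in range(m)]
    for k in range(m):              # k is the breakpoint between i and j
        for i in range(m):
            for j in range(m):
                if dist[i][k] + dist[k][j] < dist[i][j]:
                    dist[i][j] = dist[i][k] + dist[k][j]
                    nxt[i][j] = nxt[i][k]
    return dist, nxt


def shortest_path(nxt, i, j):
    """Rebuild the node sequence from i to j (empty list if unreachable)."""
    if nxt[i][j] is None:
        return []
    path = [i]
    while i != j:
        i = nxt[i][j]
        path.append(i)
    return path


# Hypothetical link costs between four data centers.
A = [
    [0,   4,   1,   INF],
    [4,   0,   2,   5],
    [1,   2,   0,   8],
    [INF, 5,   8,   0],
]
dist, nxt = floyd_warshall(A)
print(dist[0][3], shortest_path(nxt, 0, 3))  # prints: 8 [0, 2, 1, 3]
```

The triple loop runs in O(m^3) time for m nodes, which is practical for the node counts considered here (up to 100).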

3.4.3. Popularity Degree of the Data File

A file’s popularity is determined by how frequently, and especially how recently, users access it. Files that have recently gained much popularity among users are the ones that are located, replicated, and placed between DCs. The equation can be shown as follows:

Each file’s replication factor (RFi) is calculated based on the popularity degree as in Equation (25).

The dynamic threshold (TH) value is calculated as in Equation (23).

popularity degree

number of access

time-based forgetting factor

replica factor

number of replicas

size of the data file
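The relationship between the quantities listed above can be illustrated with a small Python sketch. The exact equations are not reproduced in this text, so the forms below are assumptions: the popularity degree is taken as the access counts per time interval weighted by a time-based forgetting factor, and the replication factor as the popularity degree divided by the product of the number of replicas and the file size. The function names and the default forgetting factor of 0.5 are hypothetical.

```python
def popularity_degree(access_counts, forgetting=0.5):
    """Popularity of one file from its access counts per time interval.

    access_counts is ordered oldest first. Each step back in time scales a
    count by the forgetting factor, so recent accesses weigh more.
    (Assumed form; the source equation is not available.)
    """
    pd = 0.0
    for count in access_counts:
        pd = pd * forgetting + count
    return pd


def replication_factor(pd, num_replicas, size_mb):
    """Replication factor of one file (assumed form: pd / (replicas * size))."""
    return pd / (num_replicas * size_mb)


# A file accessed 10 times in the older interval and 20 times in the latest.
pd = popularity_degree([10, 20], forgetting=0.5)    # 10 * 0.5 + 20 = 25.0
rf = replication_factor(pd, num_replicas=5, size_mb=64)
print(pd, rf)  # prints: 25.0 0.078125
```

Under these assumptions, a file whose replication factor falls below the dynamic threshold (TH) would be a candidate for an additional replica.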

3.4.4. System-Level Availability

The system’s overall high availability is known as system byte effective rate (SBER). Tasks for data replication should allow users access to all files. Access to the most popular files is made possible by regular user access. SBER maintains the file’s popularity and accessibility throughout the entire system. An illustration of the equation is as follows:

3.4.5. Placement of New Replicas

A dynamic data replica is placed on the node that yields the shortest possible distance to users. The best minimum path and the least expensive option for consumers are considered while placing data replicas across DCs. Additionally, it can be shown as [28,29,30]:

3.5. Computational Complexity

This section calculates the time complexity of the proposed AOEHO strategy for IoT application tasks with respect to the number of data replicas, the number of nodes, and the combination of AO with EHO. Suppose N represents the size of the population, D represents the number of dimensions, T represents the number of tasks, and C represents the cost. The EHO algorithm has a computational complexity of O(T(D*N + C*N)). The AOEHO phase of the algorithm has a time complexity of O(N). Hence, the total time complexity of AOEHO is O(N*T*C) and O(N). The main procedure of the proposed method is given in Algorithm 1.

| Algorithm 1: The Proposed Algorithm AOEHO |

Input: Regions, datacenters, data availability, minimum distance between regions, cost, time, SBER, fog nodes, popularity data file, max_iter, population size, and number of IoT tasks.

Output: Optimal selection and placement of data file replicas.

- Begin
- Initialize the number of IoT tasks, the population (using the fitness function), the availability and unavailability probabilities, the replicas according to costs and time, the distance between regions, the popularity of data files, the data replication costs and time, the optimal data replica placement in the DC solution, the least cost path, SBER, RF, and the budget.
- Repeat
  - Initialization phase: Initialize the population X of the AO. Initialize the parameters of the AO.
  - WHILE (t < T)
    - Calculate the fitness function values.
    - Determine the best-obtained solution according to the fitness values (Xbest(t)).
    - FOR (i = 1, 2, ..., N)
      - Update the mean value of the current solution XM(t).
      - Update x, y, G1, G2, Levy(D), etc.
      - IF (t ≤ (2/3)*T)
        - IF (rand ≤ 0.5)
          - Step 1: Expanded exploration (X1). Update the current solution using Equation (1).
          - IF (Fitness(X1(t + 1)) < Fitness(X(t))) THEN X(t) = X1(t + 1)
            - IF (Fitness(X1(t + 1)) < Fitness(Xbest(t))) THEN Xbest(t) = X1(t + 1)
        - ELSE
          - Step 2: Narrowed exploration (X2). Update the current solution using Equation (3).
          - IF (Fitness(X2(t + 1)) < Fitness(X(t))) THEN X(t) = X2(t + 1)
            - IF (Fitness(X2(t + 1)) < Fitness(Xbest(t))) THEN Xbest(t) = X2(t + 1)
        - ENDIF
      - ELSE
        - IF (rand ≤ 0.5)
          - Step 3: Expanded exploitation (X3). Update the current solution using Equation (11).
          - IF (Fitness(X3(t + 1)) < Fitness(X(t))) THEN X(t) = X3(t + 1)
            - IF (Fitness(X3(t + 1)) < Fitness(Xbest(t))) THEN Xbest(t) = X3(t + 1)
        - ELSE
          - Step 4: Narrowed exploitation (X4). Update the current solution using Equation (12).
          - IF (Fitness(X4(t + 1)) < Fitness(X(t))) THEN X(t) = X4(t + 1)
            - IF (Fitness(X4(t + 1)) < Fitness(Xbest(t))) THEN Xbest(t) = X4(t + 1)
        - ENDIF
      - ENDIF
    - ENDFOR
    - Apply Elephant Herding Optimization (EHO) to AO.
    - t++
  - ENDWHILE
  - Return the best solution (Xbest).
- Calculate the RF.
- Calculate the distance between regions.
- Calculate SBER.
- Calculate the cost and time.
- Return the optimal minimum data replica placement in the region.

4. Experimental Evaluation

4.1. Configuration Details

The proposed system has been implemented on iFogSim. AOEHO selects and coordinates the placement of dynamic data replicas between fog nodes. In this section, we discuss the configuration of the fog cloud for the proposed system. The parameter settings are given in Table 2 [42,43,44].

Table 2.

Parameters data sets of the system.

4.2. Results and Discussion

4.2.1. Different Scenarios of Data Replica Size

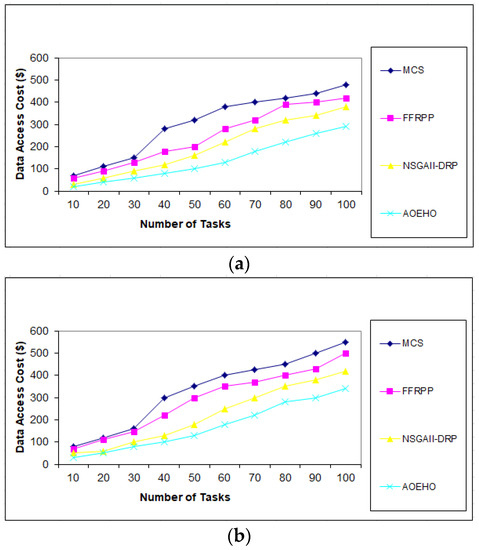

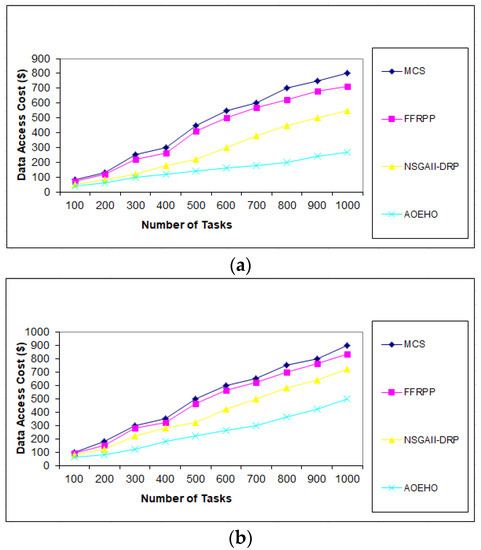

We created different experimental scenarios covering the selection and placement of data replicas, optimal user access, reduced waiting time, and reduced bandwidth. The proposed strategy was compared with three other strategies (MCS, FFRPP, and NSGAII-DRP) to evaluate its effectiveness. The proposed strategy operates dynamically on IoT devices according to user access requests, choosing the best and most appropriate way to select and place replicas over the IoT in cloud computing.

First Scenario of Tasks

Users submit a set of tasks to optimize data replication under different scenarios. The first scenario, shown in Figure 2, Figure 3 and Figure 4, uses different data replication sizes, such as 64 and 320 MB. The number of tasks ranges from 10 to 5000, and the access cost is calculated for each task according to the proposed strategy. The strategy considers the availability of data and the access time of each replica, determines the replica that enjoys high popularity, selects it, and places it in the path of users. The proposed strategy outperformed the other strategies in terms of the cost incurred by users.

Figure 2.

Cost of tasks = 100 tasks. (a) Size of data replication = 64 MB. (b) Size of data replication = 320 MB.

Figure 3.

Cost of tasks = 1000 tasks. (a) Size of data replication = 64 MB. (b) Size of data replication = 320 MB.

Figure 4.

Cost of tasks = 5000 tasks. (a) Size of data replication = 64 MB. (b) Size of data replication = 320 MB.

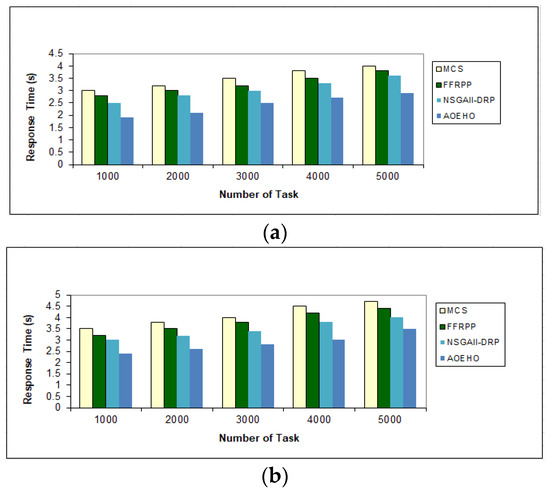

Second Scenario of Response Time for Tasks

Figure 5 shows the data access time for 1000 to 5000 tasks with files of 64 or 320 MB. Fetching files from remote geographical locations and placing them close to users reduces waiting time and speeds up access to the optimal replica. The proposed strategy outperformed other strategies in reducing waiting time for users.

Figure 5.

Response time of tasks = 5000 tasks. (a) Size of data replication = 64 MB. (b) Size of data replication = 320 MB.

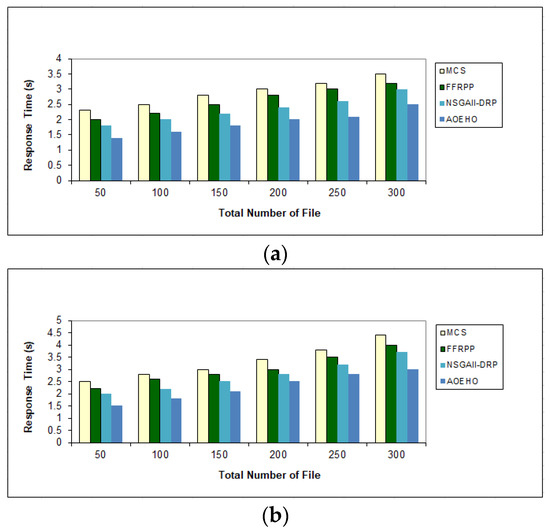

Third Scenario of Response Time for File

Figure 6 shows the response time for data files. Replicas are identified and placed in the path of the users in the least time, which gives optimal and frequent access to these files from different geographical locations. The optimal nodes are determined according to the proposed strategy, and the most popular files are placed on them to reduce users’ waiting time. The proposed strategy outperformed other strategies in reducing waiting time for users.

Figure 6.

Response time of data file. (a) Size of data replication = 64 MB. (b) Size of data replication = 320 MB.

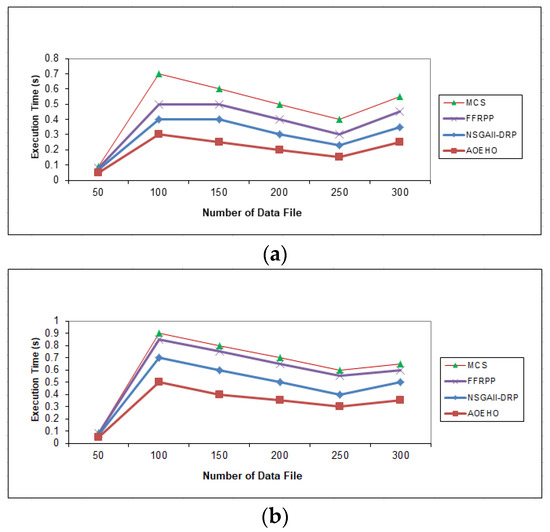

Third Scenario of Execution Time

Figure 7 shows the execution time for accessing and selecting data replicas and placing the most popular files across nodes in cloud computing. Scenarios with 64 and 320 MB replicas were generated to select and place optimal replicas across cloud computing nodes. The proposed strategy outperformed other strategies in reducing waiting time for users.

Figure 7.

Execution time of data file. (a) Size of data replication = 64 MB. (b) Size of data replication = 320 MB.

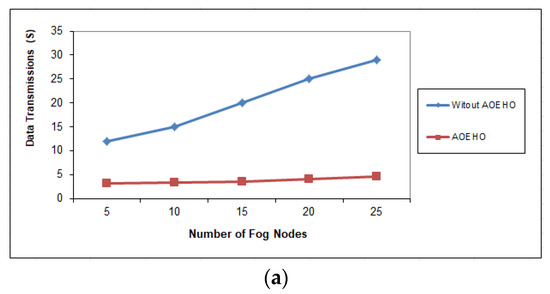

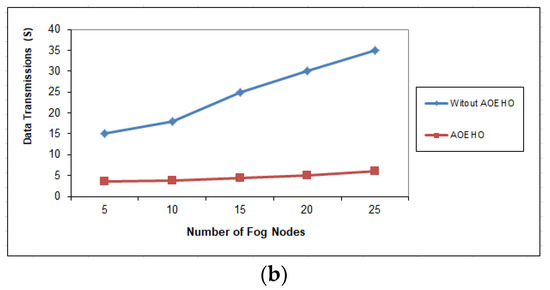

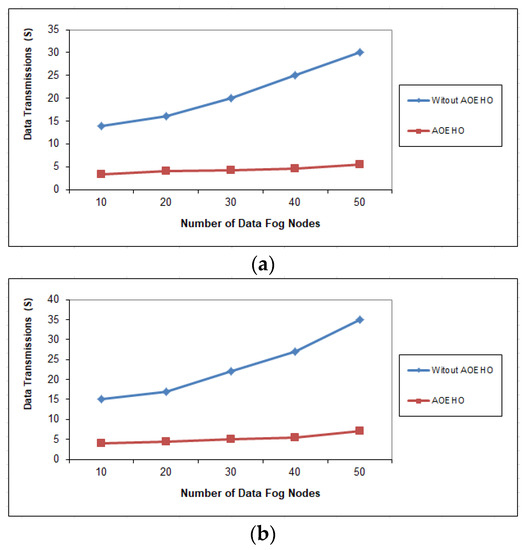

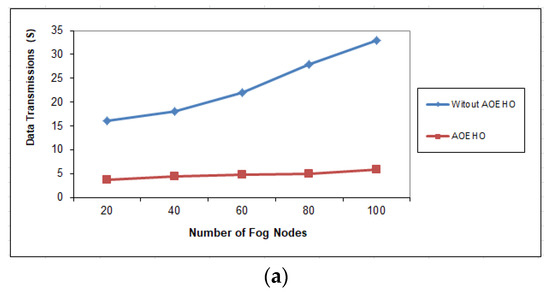

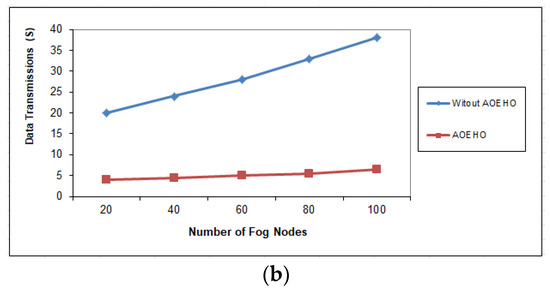

Fourth Scenario of Data Transmission in Nodes

Figure 8, Figure 9 and Figure 10 assess the impact of different numbers of IoT-based nodes on cloud computing. We considered the number of replicas and moved them across nodes in cloud computing along the path with the lowest cost. We transferred data across x to 100 nodes and measured the effect of transferring data across nodes in cloud computing. With the proposed strategy, the greater the number of nodes, the more significant the improvement and the better the prediction of different routes that achieve the lowest path and cost. The proposed strategy outperformed other strategies in reducing waiting time for users.

Figure 8.

Data transmission between fog nodes = 25 nodes. (a) Size of data replication = 64 MB. (b) Size of data replication = 320 MB.

Figure 9.

Data transmission between fog nodes = 50 nodes. (a) Size of data replication = 64 MB. (b) Size of data replication = 320 MB.

Figure 10.

Data transmission between fog nodes = 100 nodes. (a) Size of data replication = 64 MB. (b) Size of data replication = 320 MB.

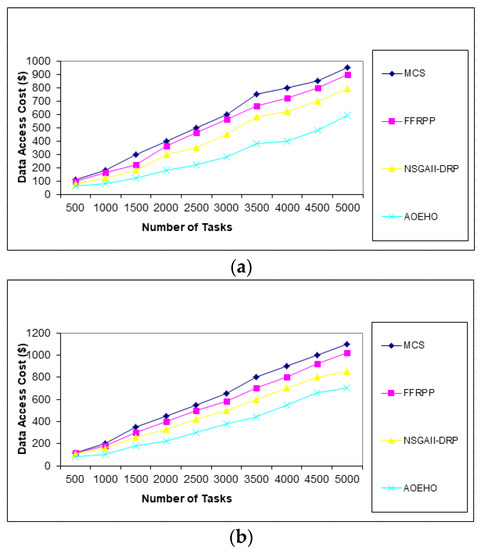

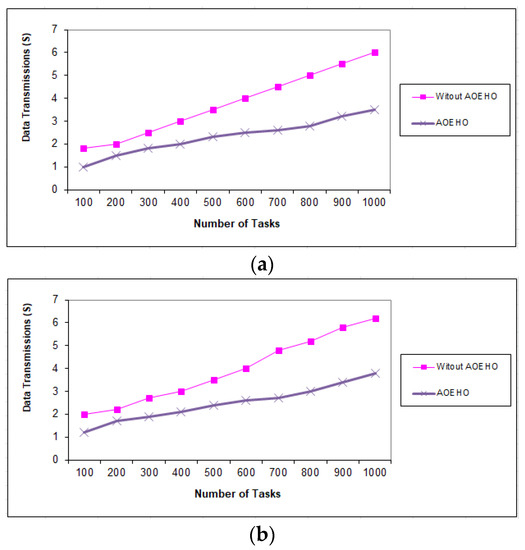

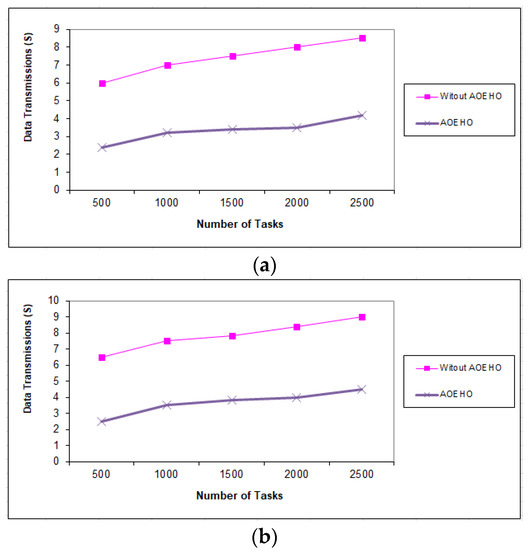

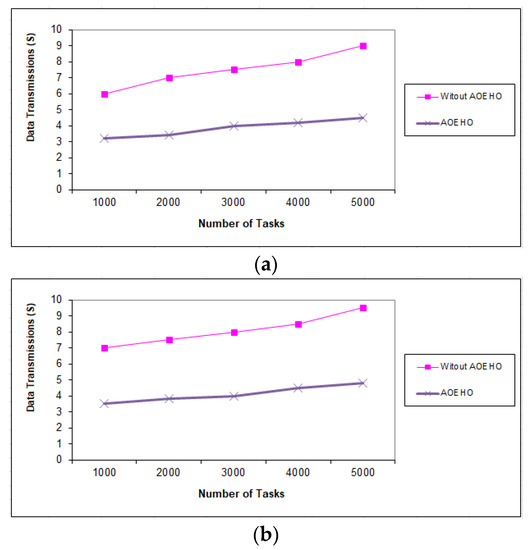

Fifth Scenario of Data Transmission in Tasks

Figure 11, Figure 12 and Figure 13 evaluate the impact of different numbers of IoT-based tasks on cloud computing. We considered replicas with sizes of 64 and 320 MB and transferred them across nodes in cloud computing along the path with the lowest cost. We ran data migration for 10 to 5000 tasks and measured its effect across nodes in cloud computing. Additionally, the number of files is determined per geographical location according to how frequently users access the files. The most popular files are identified by the proposed strategy and placed across the nodes near the users. With the proposed strategy, the greater the number of tasks, the more significant the improvement and the better the prediction of different routes that achieve the least path and cost. The proposed strategy outperformed other strategies in reducing waiting time for users.

Figure 11.

Data transmission between tasks = 1000 tasks. (a) Size of data replication = 64 MB. (b) Size of data replication = 320 MB.

Figure 12.

Data transmission between tasks = 2500 tasks. (a) Size of data replication = 64 MB. (b) Size of data replication = 320 MB.

Figure 13.

Data transmission between tasks = 5000 tasks. (a) Size of data replication = 64 MB. (b) Size of data replication = 320 MB.

4.3. Performance Evaluation

4.3.1. Degree of Balancing

Figure 14 shows the degree of imbalance across the network’s fog nodes when several different tasks are performed simultaneously. The proposed model lowers the degree of imbalance to a minimum level and outperformed the other strategies in this respect.
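The text does not state how the degree of imbalance is computed. A definition commonly used in load-balancing studies, assumed here purely for illustration, is the spread of the per-node completion times divided by their mean. The function name `degree_of_imbalance` and the sample values are hypothetical.

```python
def degree_of_imbalance(completion_times):
    """(T_max - T_min) / T_avg over the per-node completion times.

    Assumed definition; 0.0 means the load is perfectly balanced.
    """
    avg = sum(completion_times) / len(completion_times)
    return (max(completion_times) - min(completion_times)) / avg


print(degree_of_imbalance([10.0, 10.0, 10.0]))  # prints: 0.0
print(degree_of_imbalance([5.0, 10.0, 15.0]))   # prints: 1.0
```

Lower values indicate that tasks are spread more evenly across the fog nodes.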

Figure 14.

Degree of imbalance.

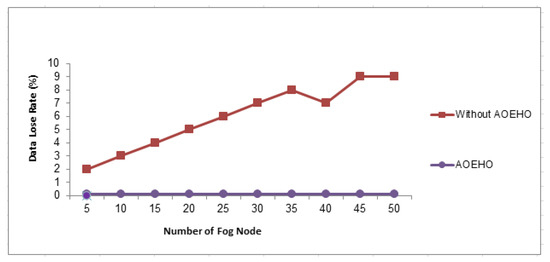

4.3.2. Data Loss Rate

Figure 15 shows the data loss rate across nodes in cloud computing. With 50 nodes, the data loss rate reaches 0 in the proposed system. The proposed strategy outperformed other strategies in reducing the data loss rate.

Figure 15.

Data loss rate.

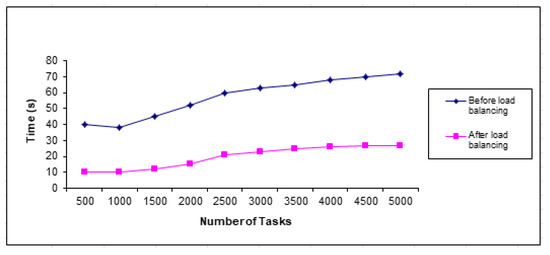

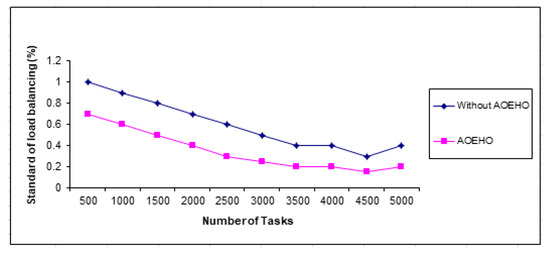

4.3.3. Load Balancing

Figure 16 shows that load balancing in different geographic locations decreases with the size of the files held on nodes across cloud computing. Five thousand tasks were performed on data sets of different sizes to evaluate the efficiency of load balancing across nodes and the capacity consumed by files of different sizes as bandwidth is reduced. The proposed strategy outperformed other strategies in load balancing and in reducing bandwidth.

Figure 16.

Standard of load balancing.

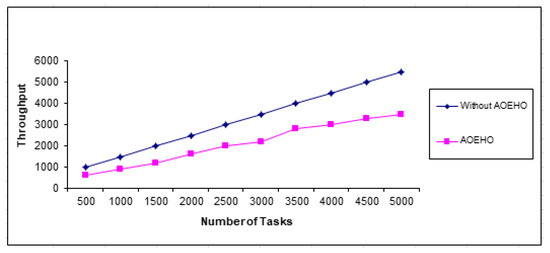

4.3.4. Throughput Time

The proposed strategy provides adequate access to data, optimal use of resources, and improved productivity. It places data across nodes in cloud computing and improves the data transfer rate across the proposed system. It achieves significant resource utilization as well as time and cost savings across nodes in cloud computing. It can also reduce congestion across the network in cloud computing. The proposed strategy outperformed other strategies in load balancing and throughput time, as shown in Figure 17.

Figure 17.

Throughput time for tasks.

5. Conclusions and Future Work

Cloud computing works with the internet of things to move data, keep data available, and improve data access. In this research, we created a hybrid method called the AOEHO strategy to find the optimal and least expensive path and to determine the best replication via cloud computing. The aquila optimizer (AO) algorithm was combined with elephant herding optimization (EHO) to solve dynamic data replication problems in the fog computing environment. Additionally, a set of objectives was used to improve the balance between nodes and costs across cloud computing. The proposed AOEHO strategy finds the most popular files and chooses the best location for them on the nodes closest to the users. It also reduces user response time and waiting time. Floyd’s algorithm was used to find the shortest path for selecting and placing replicas across nodes in cloud computing. The proposed AOEHO strategy is superior to other strategies regarding bandwidth, distance, load balancing, data transmission, and the least cost path. The proposed algorithm was simulated and evaluated via iFogSim. In future work, given its efficiency, the AOEHO strategy can be applied to more optimization problems in real-world implementations, including data replication tasks, data transmission, routing of data replicas, healthcare, energy optimization, and multi-hop data transfer between nodes.

Author Contributions

Methodology, A.A.; Software, A.a.M.; Validation, L.A.; Formal analysis, H.A.E.-W.K. All authors have read and agreed to the published version of the manuscript.

Funding

The researchers would like to thank the Deanship of Scientific Research, Qassim University for funding the publication of this project.

Institutional Review Board Statement

This article does not contain any studies with human participants or animals performed by any authors.

Informed Consent Statement

Informed consent was obtained from all individual participants included in the study.

Data Availability Statement

Data is available from the authors upon reasonable request.

Conflicts of Interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

References

- Calheiros, R.N.; Ranjan, R.; Beloglazov, A.; De Rose, C.A.F.; Buyya, R. CloudSim: A toolkit for modeling and simulation of cloud computing environments and evaluation of resource provisioning algorithms. Softw. Pract. Exp. 2011, 41, 23–50.

- Long, S.; Zhao, Y. A toolkit for modeling and simulating cloud data storage: An extension to CloudSim. In Proceedings of the 2012 International Conference on Control Engineering and Communication Technology, Washington, DC, USA, 7–9 December 2012.

- Mansouri, Y.; Buyya, R. To move or not to move: Cost optimization in a dual cloud-based storage architecture. J. Netw. Comput. Appl. 2016, 75, 223–235.

- Rajeshirke, N.; Sawant, R.; Sawant, S.; Shaikh, H. Load balancing in cloud computing. Int. J. Recent Trends Eng. Res. 2017, 3.

- Milani, A.S.; Navimipour, N.J. Load balancing mechanisms and techniques in the cloud environments: Systematic literature review and future trends. J. Netw. Comput. Appl. 2016, 71, 86–98.

- Ghomi, E.J.; Rahmani, A.M.; Qader, N.N. Load-balancing algorithms in cloud computing: A survey. J. Netw. Comput. Appl. 2017, 88, 50–71.

- Naha, R.K.; Othman, M. Cost-aware service brokering and performance sentient load balancing algorithms in the cloud. J. Netw. Comput. Appl. 2016, 75, 47–57.

- Ahn, H.-Y.; Lee, K.-H.; Lee, Y.-J. Dynamic erasure coding decision for modern block-oriented distributed storage systems. J. Supercomput. 2016, 72, 1312–1341.

- Zhu, T.; Feng, D.; Wang, F.; Hua, Y.; Shi, Q.; Xie, Y.; Wan, Y. A congestion-aware and robust multicast protocol in SDN-based datacenter networks. J. Netw. Comput. Appl. 2017, 95, 105–117.

- Nguyen, B.; Binh, H.; Son, B. Evolutionary algorithms to optimize task scheduling problem for the IoT based bag-of-tasks application in cloud-fog computing environment. Appl. Sci. 2019, 9, 1730.

- Ghasempour, A. Internet of things in smart grid: Architecture, applications, services, key technologies, and challenges. Inventions 2019, 4, 22.

- Ranjana, T.R.; Jayalakshmi, D.; Srinivasan, R. On replication strategies for data intensive cloud applications. Int. J. Comput. Sci. Inf. Technol. 2017, 6, 2479–2484.

- Fu, J.; Liu, Y.; Chao, H.; Bhargava, B.; Zhang, Z. Secure data storage and searching for industrial IoT by integrating fog computing and cloud computing. IEEE Trans. Ind. Inf. 2018, 14, 4519–4528.

- Lin, B.; Guo, W.; Xiong, N.; Chen, G.; Vasilakos, A.; Zhang, H. A pretreatment workflow scheduling approach for big data applications in multicloud environments. IEEE Trans. Netw. Serv. Manag. 2016, 13, 581–594.

- Liu, X.-F.; Zhan, Z.-H.; Deng, J.; Li, Y.; Gu, T.; Zhang, J. An energy efficient ant colony system for virtual machine placement in cloud computing. IEEE Trans. Evol. Comput. 2016, 22, 113–128.

- Kumar, P.J.; University, V.; Ilango, P. Data replication in current generation computing environment. Int. J. Eng. Trends Technol. 2017, 45, 488–492.

- Abualigah, L.; Diabat, A. A novel hybrid antlion optimization algorithm for multi-objective task scheduling problems in cloud computing environments. Clust. Comput. 2020, 24, 205–223.

- Wang, Y.; Guo, C.; Yu, J. Immune scheduling network based method for task scheduling in decentralized fog computing. Wirel. Commun. Mob. Comput. 2018, 2018, 2734219.

- Yang, M.; Ma, H.; Wei, S.; Zeng, Y.; Chen, Y.; Hu, Y. A multi-objective task scheduling method for fog computing in cyber-physical-social services. IEEE Access 2020, 8, 65085–65095.

- John, S.; Mirnalinee, T. A novel dynamic data replication strategy to improve access efficiency of cloud storage. Inf. Syst. e-Bus. Manag. 2020, 18, 405–426.

- Pallewatta, S.; Kostakos, V.; Buyya, R. QoS-aware placement of microservices-based IoT applications in Fog computing environments. Future Gener. Comput. Syst. 2022, 131, 121–136.

- Milani, B.A.; Navimipour, N.J. A comprehensive review of the data replication techniques in the cloud environments: Major trends and future directions. J. Netw. Comput. Appl. 2016, 64, 229–238.

- Sahoo, J.; Salahuddin, M.A.; Glitho, R.; Elbiaze, H.; Ajib, W. A survey on replica server placement algorithms for content delivery networks. IEEE Commun. Surv. Tutor. 2017, 19, 1002–1026.

- Wang, M.; Zhang, Q. Optimized data storage algorithm of IoT based on cloud computing in distributed system. Comput. Commun. 2020, 157, 124–131.

- Taghizadeh, J.; Arani, M.; Shahidinejad, A. A metaheuristic-based data replica placement approach for data-intensive IoT applications in the fog computing environment. Softw. Pract. Exp. 2021, 52, 482–505.

- Torabi, E.; Arani, M.; Shahidinejad, A. Data replica placement approaches in fog computing: A review. Clust. Comput. 2022, 25, 3561–3589.

- Jin, W.; Lim, S.; Woo, S.; Park, C.; Kim, D. Decision-making of IoT device operation based on intelligent-task offloading for improving environmental optimization. Complex Intell. Syst. 2022, 8, 3847–3866.

- Salem, R.; Abdsalam, M.; Abdelkader, H.; Awad, A. An Artificial Bee Colony Algorithm for Data Replication Optimization in Cloud Environments. IEEE Access 2020, 8, 51841–51852.

- Awad, A.; Salem, R.; Abdelkader, H.; Abdsalam, M. A Novel Intelligent Approach for Dynamic Data Replication in Cloud Environment. IEEE Access 2021, 9, 40240–40254.

- Awad, A.; Salem, R.; Abdelkader, H.; Abdsalam, M. A Swarm Intelligence-based Approach for Dynamic Data Replication in a Cloud Environment. Int. J. Intell. Eng. Syst. 2021, 14, 271–284.

- Sarwar, K.; Yong, S.; Yu, J.; Rehman, S. Efficient privacy-preserving data replication in fog-enabled IoT. Future Gener. Comput. Syst. 2022, 128, 538–551.

- Chen, D.; Yuan, H.; Hu, S.; Wang, Q.; Wang, C. BOSSA: A Decentralized System for Proofs of Data Retrievability and Replication. IEEE Trans. Parallel Distrib. Syst. 2021, 32, 786–798.

- Li, C.; Liu, J.; Wang, M.; Luo, Y. Fault-tolerant scheduling and data placement for scientific workflow processing in geo-distributed clouds. J. Syst. Softw. 2022, 187, 111227.

- Shi, T.; Ma, H.; Chen, G.; Hartmann, S. Cost-Effective Web Application Replication and Deployment in Multi-Cloud Environment. IEEE Trans. Parallel Distrib. Syst. 2022, 33, 1982–1995.

- Majed, A.; Raji, F.; Miri, A. Replication management in peer-to-peer cloud storage systems. Clust. Comput. 2022, 25, 401–416.

- Li, C.; Cai, Q.; Youlong, L. Optimal data placement strategy considering capacity limitation and load balancing in geographically distributed cloud. Future Gener. Comput. Syst. 2022, 127, 142–159.

- Khelifa, A.; Mokadem, R.; Hamrouni, T.; Charrada, F. Data correlation and fuzzy inference system-based data replication in federated cloud systems. Simul. Model. Pract. Theory 2022, 115, 102428.

- Mohammadi, B.; Navimipour, N. A Fuzzy Logic-Based Method for Replica Placement in the Peer to Peer Cloud Using an Optimization Algorithm. Wirel. Pers. Commun. 2022, 122, 981–1005.

- Zhao, J.; Gao, Z.; Chen, H. The Simplified Aquila Optimization Algorithm. IEEE Access 2022, 10, 22487–22515.

- Li, J.; Lei, H.; Alavi, A.H.; Wang, G.G. Elephant Herding Optimization: Variants, Hybrids, and Applications. Mathematics 2020, 8, 1415.

- Agushaka, J.O.; Ezugwu, A.E.; Abualigah, L.; Alharbi, S.K.; Khalifa, H.A.E.W. Efficient Initialization Methods for Population-Based Metaheuristic Algorithms: A Comparative Study. Arch. Comput. Methods Eng. 2022, 1–61.

- Forestiero, A.; Pizzuti, C.; Spezzano, G. A single pass algorithm for clustering evolving data streams based on swarm intelligence. Data Min. Knowl. Discov. 2013, 26, 1–26.

- Gul, F.; Mir, A.; Mir, I.; Mir, S.; Islaam, T.U.; Abualigah, L.; Forestiero, A. A Centralized Strategy for Multi-Agent Exploration. IEEE Access 2022, 10, 126871–126884.

- Abualigah, L.; Elaziz, M.A.; Khodadadi, N.; Forestiero, A.; Jia, H.; Gandomi, A.H. Aquila Optimizer Based PSO Swarm Intelligence for IoT Task Scheduling Application in Cloud Computing. In Integrating Meta-Heuristics and Machine Learning for Real-World Optimization Problems; Springer: Cham, Switzerland, 2022; pp. 481–497.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).