1. Introduction

Security verification technologies have advanced rapidly in modern society. For example, airport check-in systems have advanced from manual to automatic inspections. With the increasing focus on personal privacy, much attention has been paid to biometric verification, which utilizes human physiological characteristics, such as features of the sclera [1], fingerprints [2], veins [1,3,4], and face [5], for identity verification. All these technologies have three things in common. First, every person has their own biometrics (i.e., “uniqueness”). Second, these features do not change dramatically over time (i.e., “stability”). Lastly, users need not bring all their personal keycards with them, and these biometric features allow accurate identification and are hard to spoof or steal (i.e., “safety and portability”). With these three advantages, biometrics has become the main trend for personal identification in modern society.

Finger vein recognition methods, whether based on image processing in photographic devices or on finger vein feature recognition algorithms, have been implemented in various applications that require security, such as ATMs and security doors [6,7]. A finger vein identification system recognizes the structure of the blood vessel pattern in the finger, which becomes visible only under near-infrared (NIR) illumination. Because finger vein patterns are located under the skin, they neither change over time nor are easily affected by skin surface changes, such as cuts, abrasions, and surface stains, or by interference noise [8].

In recent years, convolutional neural networks (CNNs) have been widely used in many feature extraction and classification tasks. A CNN uses convolution kernels of different sizes to obtain the detailed features of images, including for finger vein recognition. Traditional CNNs for vein recognition are known for their heavyweight architectures [9], which require large amounts of computational resources and data for training and inference. This led to the development of lightweight CNNs that are more efficient in terms of computational resources and memory usage. Several recent works have proposed lightweight CNN architectures to improve the performance of the recognition task while reducing the computational cost. One such system used a lightweight CNN with a triplet loss function, composed of stem blocks for extracting coarse features of the images and stage blocks for extracting detailed features [10]. Another system proposed a unified CNN that achieved high performance on both finger recognition and anti-spoofing tasks while making their neural network compatible [11]. Lightweight CNN architectures for finger vein recognition have thus been gaining attention in the field of biometrics. Here, we propose a lightweight CNN with batch normalization (BN). The main design focus was adding noise in the training process to prevent overfitting, as well as reducing internal covariate shift.

One framework for mapping convolutional neural networks on FPGAs was fpgaConvNet [12]. This approach allowed fast exploration of the design space by means of algebraic operations, and it enabled the formulation of a CNN’s hardware mapping as an optimization problem. Unlike the hardware used in this article, the FPGA in that work was built on an older process, and its core frequency and memory specifications were lower. The convolutional layers used were also shallower than those in this article, and the BN proposed in this article was not used. Moreover, its Sustained Performance would need to be further optimized and improved for a finger vein recognition system when compared with the method proposed in this article.

One design used an accelerator that trained the CNN using efficient frequency-domain computation [13]. It performed convolution using simple pointwise multiplications without the Fourier transforms by mapping all the parameters and operations into the frequency domain. The differences from the design proposed in this paper lie mainly in the hardware specification conditions (ASIC and FPGA platforms), the core frequency, the integrated precision control of the complex number computation, and a neural network architecture that was relatively limited by those design conditions. In contrast to the GPU calculation of the follow-up system in that work, the design proposed in this paper runs directly on the neural network IP of the FPGA to compute the finger vein recognition results more completely.

With the popularity of internet of things (IoT) systems, network security has become a major concern, and people are generally reluctant to upload personal information in security messages to a cloud server. Therefore, “edge computing” has become an important research topic. With edge computing, we can build a security system that is not only safer but also faster, because the transmission of information from the sensors is minimized.

Previous work on finger vein sensor devices can be categorized into two types: commercial and academic devices. Commercial devices are those developed by vendors, such as Hitachi, IDEMIA, and Mofiria. These sensors have been widely used in the market for several years. However, the manufacturers of these devices only provide the performance metrics of their products, including false acceptance rate (FAR) and false rejection rate (FRR), without documenting their hardware implementation details. Additionally, the output image format of these devices is encrypted, so researchers are not able to obtain raw finger image data. To address this issue, we developed a finger vein capture device for academic use that can export captured vein images in a non-proprietary format. Furthermore, cost-effective components and simplicity were considered while developing our own device. A small single-board computer (SBC) with an NIR camera module was used in our design, which makes it compact and feasible for various applications.

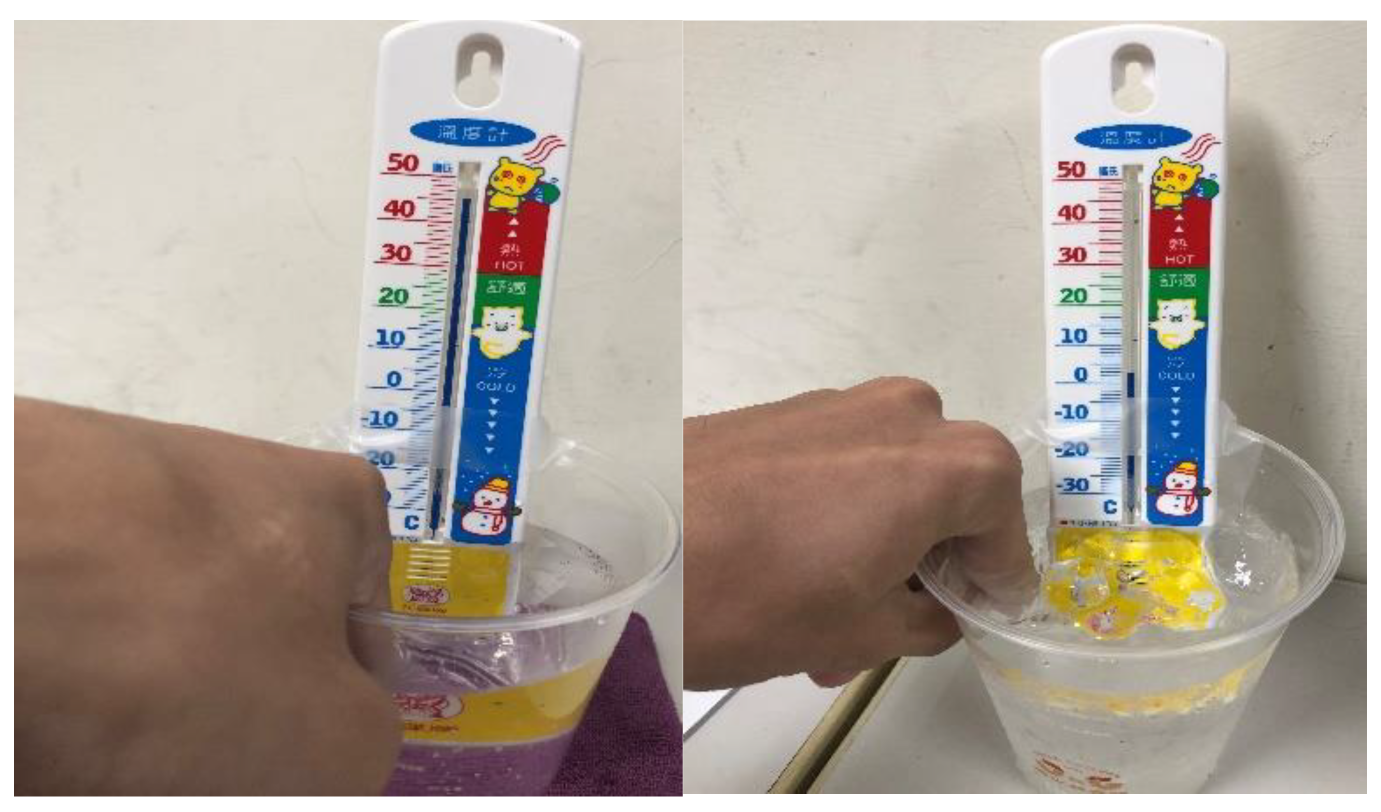

In this paper, we propose a low-complexity and lightweight CNN-based finger vein recognition system using edge computing, which can achieve a faster inference time and maintain a high-precision system recognition rate. Based on the specifications of the existing finger vein image acquisition products on the market, we designed and developed our own finger vein image capture device to collect the finger vein images for this study. Several preprocessing techniques and the modified CNN were combined to achieve better image quality and higher recognition accuracy. The low-complexity and lightweight CNN for the inference stage was designed for an FPGA device with limited resources: only 13,300 logic slices (each with four six-input LUTs and eight flip-flops), four clock management tiles (each with a phase-locked loop), and 220 DSP slices. Experiments were performed using a variety of application scenarios (including finger vein images obtained from fingers at different temperatures, heart rates (HRs), levels of cleanliness, etc.), illustrating the robustness and practicality of the proposed system.

The remainder of this paper is organized as follows. Section 2 describes the proposed system. Section 3 presents the experimental data. The system test results and comparisons are given in Section 4. Section 5 concludes this work.

2. Proposed System

This study proposes a low-complexity and lightweight CNN-based finger vein recognition system using edge computing, which shortens the inference time needed to obtain personal identification results.

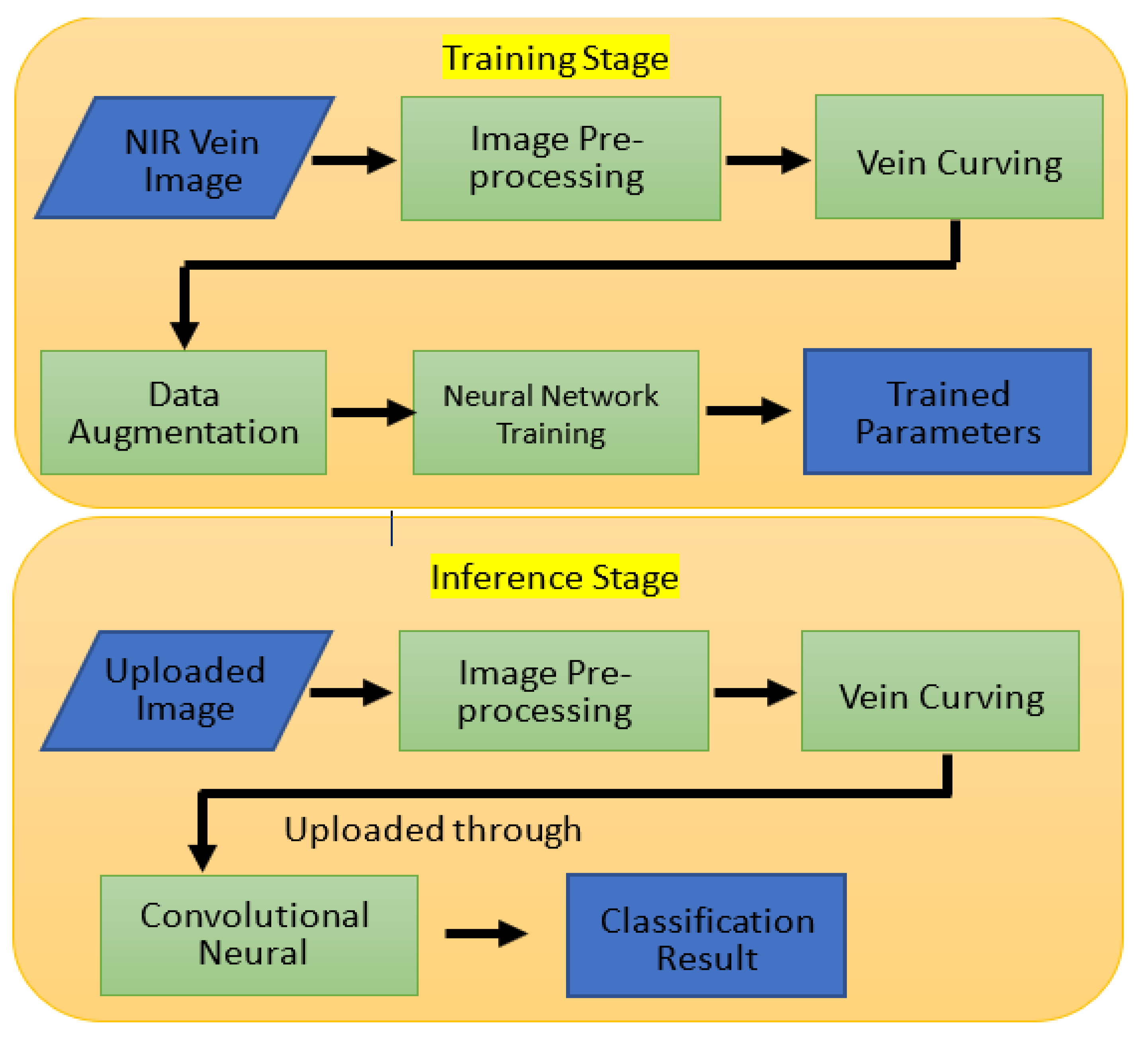

Figure 1 shows the proposed recognition system, which includes the training stage and the inference stage. A detailed description is presented in the following sub-sections.

2.1. Training Stage

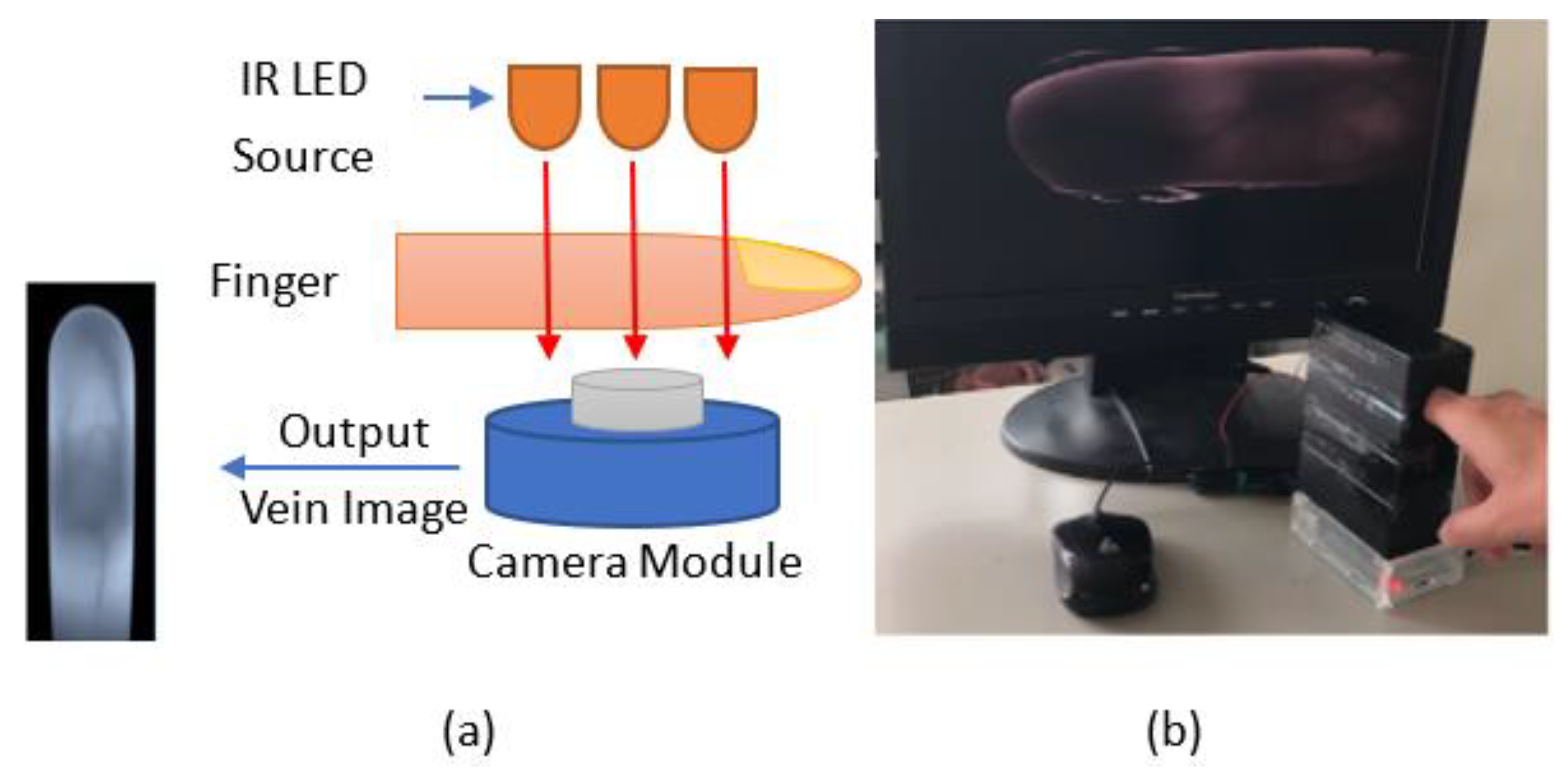

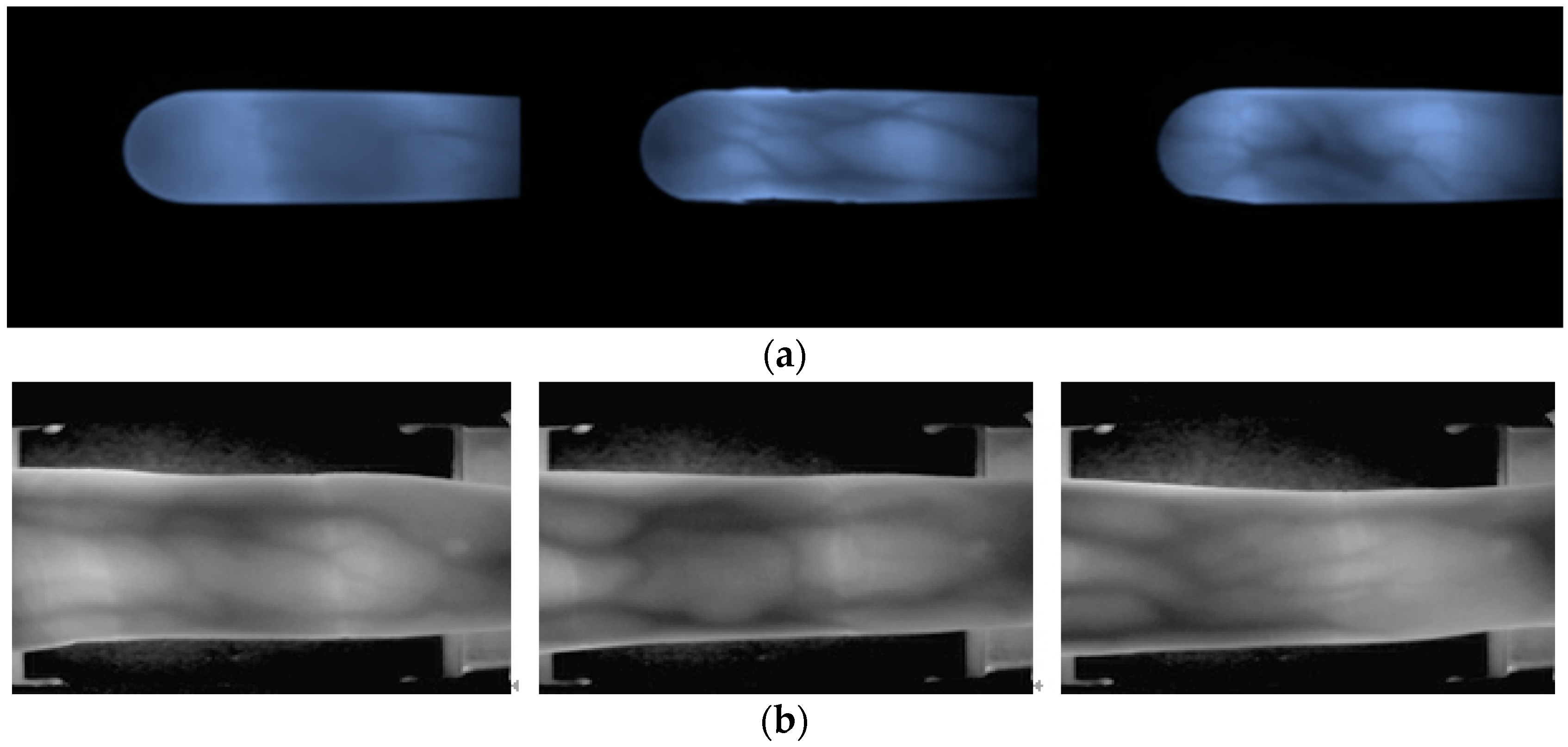

The original finger vein images were obtained from our capture device developed with the NIR 850 nm camera module shown in

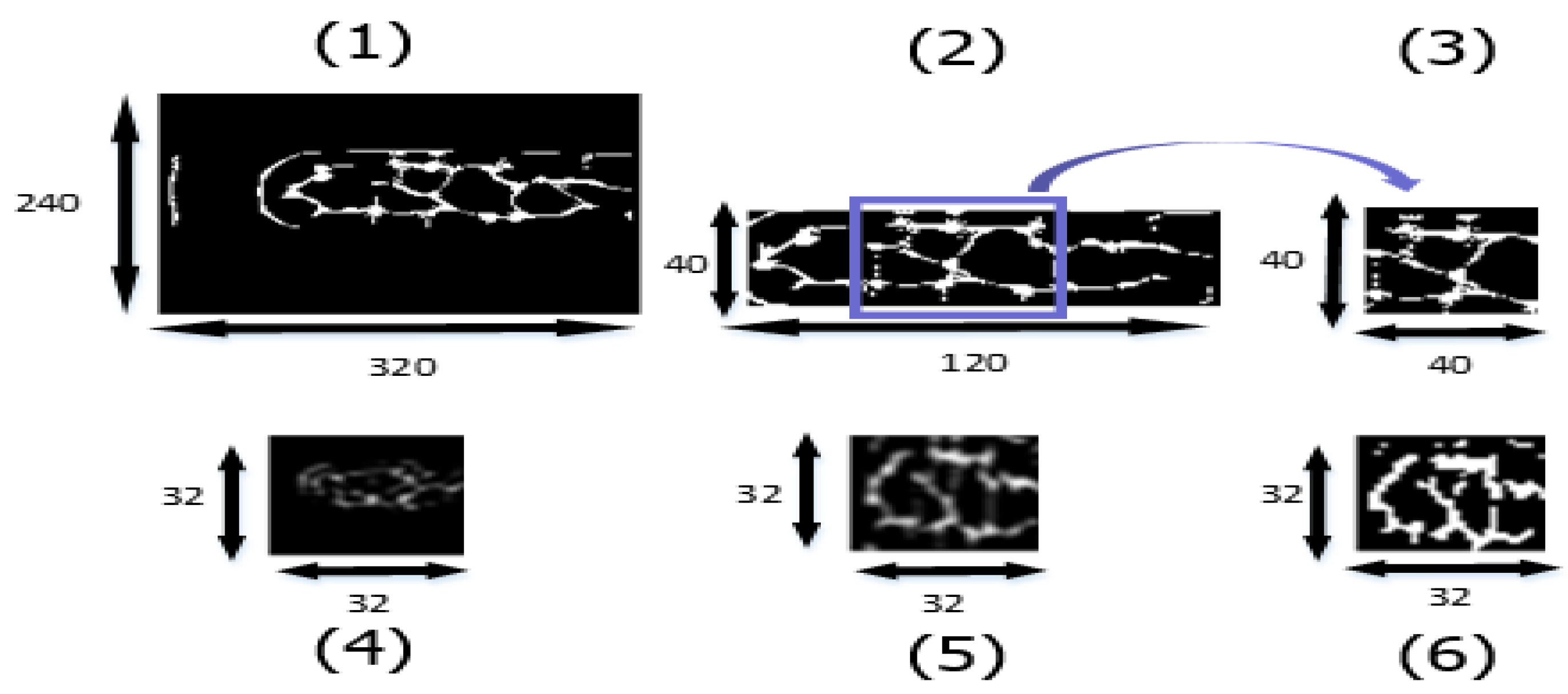

Figure 2. The image sensor was a Sony IMX219 in a fixed-focus module with an integral IR filter, and resolution was 8 M pixels with no infrared. This device was developed based on the specifications of existing commercial products. A simple system for illumination from the top side of the finger was designed. Therefore, to train the CNN on the finger vein features, we needed to perform several steps of preprocessing on the raw image. We conducted three main processes on the input data: vein curving, region of interest (ROI) capture, and scaling. The binary features and ROI of the finger vein images were calculated and used as the training data to obtain the parameters for the modified CNN, which were then used in the inference stage.

In order to collect the training and testing datasets, the following steps were used, as shown in

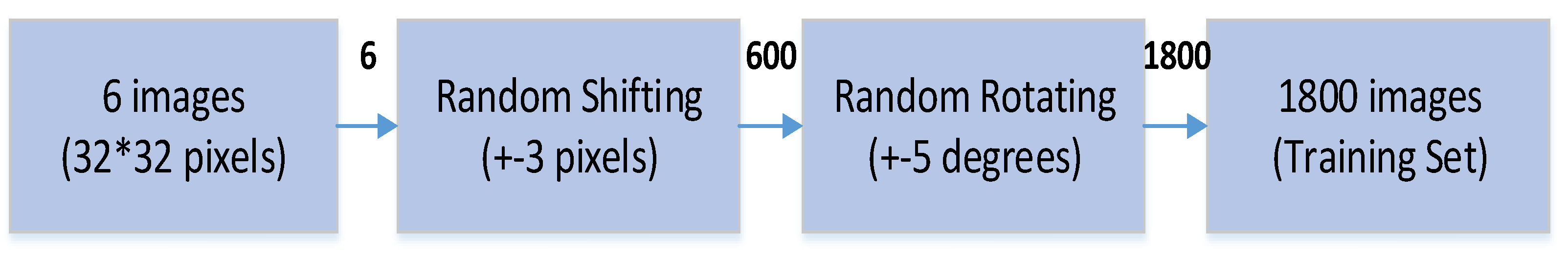

Figure 3. First, the finger vein images of 10 people were acquired through the camera equipment. Six real-time finger vein images were taken for each person, yielding a total of 60 original finger vein image files of the 10 people. After data augmentation, the total number was 1800. The images were then divided into two categories: 1620 images for training and 180 images for validation. In addition, the real-time finger vein images for these 10 people were re-acquired, and then another 60 original finger vein image files were obtained. After data augmentation, a total of 1800 images were obtained. From these, 1620 images were randomly selected for the system testing.

- (1) Image Preprocessing

In practical use of the finger vein recognition device, it was necessary to consider various factors that can cause misjudgments when the current finger vein image is captured. For example, finger sizes differ: if the image is too large, the feature list is required, and if the image is too small, invalid edges where the edges of the fingers are overexposed cannot be removed. The depth of the fingerprint on the finger surface is another source of noise interference that needs to be removed. Thus, one must determine how to prevent noise from fingerprints or skin surface wear-and-tear from overlapping with the vein image. The surface temperature of the finger, which varies with climate and other situational factors, may cause slight changes in the size of the pattern, so such conditions must be included when the training dataset is initially established.

In standard finger vein devices, such as the Hitachi H1, external light overlaps with the NIR light, and the captured image depends on the sensitivity and denoising ability of the CMOS sensor. The most common problems are irregular finger image edges and overexposure where the light overlaps. Therefore, when creating the initial finger vein images for each person being tested, we needed to preprocess the images for each angle, simulated noise, and added condition.

The main purpose of this stage was to generate the corresponding input pattern for the training network. First, we converted the captured RGB image into grayscale. Then, we scaled the pixel values to the range from 0 to 1. This normalization prevents large gradients during training.
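As an illustration, the grayscale conversion and scaling can be sketched in Python with NumPy. The function name `to_normalized_gray` and the BT.601 luma weights are assumptions made for this sketch; the text does not state which grayscale weights were used.

```python
import numpy as np

def to_normalized_gray(rgb):
    """Convert an H x W x 3 uint8 RGB image to float32 grayscale in [0, 1]."""
    rgb = np.asarray(rgb, dtype=np.float32)
    # ITU-R BT.601 luma weights (assumed here; not specified in the text).
    weights = np.array([0.299, 0.587, 0.114], dtype=np.float32)
    gray = rgb @ weights      # weighted sum over the color channels
    return gray / 255.0       # scale 8-bit values to the range 0..1
```

Keeping the values in a fixed 0 to 1 range also suits the fixed-point arithmetic used later on the FPGA.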

After normalization, we enhanced the normalized image in order to emphasize the vein features. We implemented the contrast-limited adaptive histogram equalization (CLAHE) method, as shown in Figure 4. Compared with global histogram equalization, CLAHE amplifies local features by dividing images into small blocks called “tiles.” The histogram of each tile is then equalized as in global equalization.
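The tile-wise idea can be sketched as follows. This is a simplified variant, not full CLAHE: it clips and equalizes each tile independently and omits the bilinear blending between neighboring tiles that standard CLAHE implementations apply. The function name, the 4 × 4 tile grid, and the clip limit are illustrative assumptions, and the image is assumed to be at least as large as the tile grid.

```python
import numpy as np

def tile_clahe(gray, tiles=(4, 4), clip_limit=2.0, bins=256):
    """Clipped histogram equalization applied independently to each tile.

    gray: 2-D float array in [0, 1]. Returns an array of the same shape in [0, 1].
    """
    gray = np.asarray(gray, dtype=np.float64)
    out = np.empty_like(gray)
    row_edges = np.linspace(0, gray.shape[0], tiles[0] + 1).astype(int)
    col_edges = np.linspace(0, gray.shape[1], tiles[1] + 1).astype(int)
    for r0, r1 in zip(row_edges[:-1], row_edges[1:]):
        for c0, c1 in zip(col_edges[:-1], col_edges[1:]):
            tile = gray[r0:r1, c0:c1]
            idx = np.minimum((tile * bins).astype(int), bins - 1)
            hist = np.bincount(idx.ravel(), minlength=bins).astype(np.float64)
            # Clip each bin and spread the clipped excess evenly over all bins.
            limit = clip_limit * tile.size / bins
            excess = np.sum(np.maximum(hist - limit, 0.0))
            hist = np.minimum(hist, limit) + excess / bins
            # The normalized cumulative histogram is the intensity mapping.
            cdf = np.cumsum(hist) / tile.size
            out[r0:r1, c0:c1] = cdf[idx]
    return out
```

Clipping bounds the slope of the mapping, which limits how strongly noise in nearly uniform tiles is amplified.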

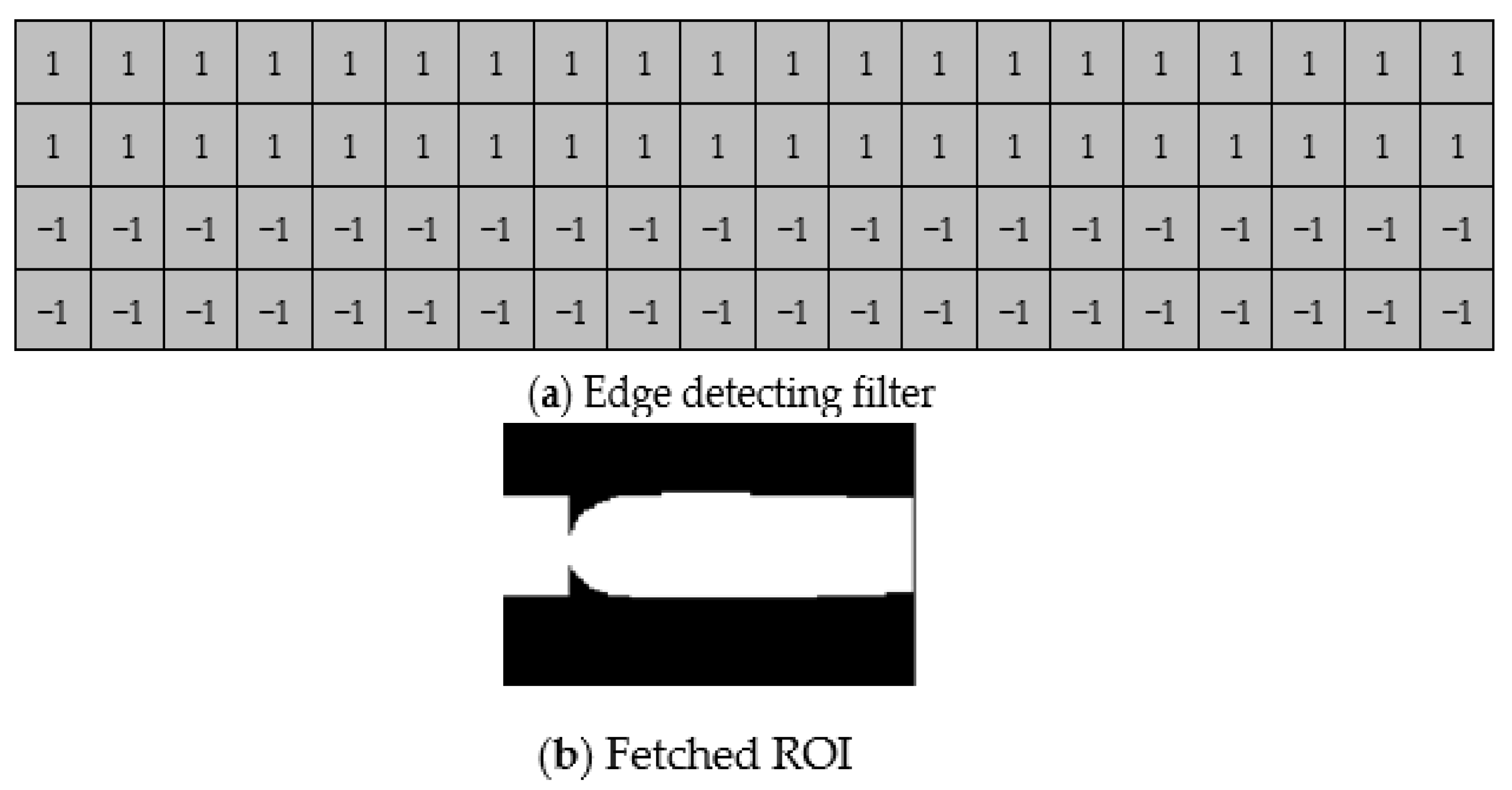

Since only a portion of the image has the features of finger veins, we needed to fetch the ROI of the image, as shown in Figure 5. First, we used an edge detection filter with 20 × 4 pixels, which was divided into upper and lower parts. The upper part was filled with 1, and the lower part was filled with −1. By filtering the finger image, the upper part of the image produced a maximum value, while the lower part produced a minimum value.
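A minimal sketch of this edge search is given below. It assumes that the filter is 4 rows high and 20 columns wide, that the filtering is a true convolution (the kernel is flipped), and that the finger lies horizontally and is brighter than the background; none of these details is stated in the text. The function names `finger_edges` and `crop_roi` are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def finger_edges(gray, kernel_h=4, kernel_w=20):
    """Return (upper_edge, lower_edge): per-column row indices of the finger edges."""
    gray = np.asarray(gray, dtype=np.float64)
    kernel = np.ones((kernel_h, kernel_w))
    kernel[kernel_h // 2:, :] = -1.0      # upper half +1, lower half -1
    flipped = kernel[::-1, ::-1]          # true convolution flips the kernel
    # Replicate border pixels so the response has the same shape as the input.
    top, left = kernel_h // 2, kernel_w // 2
    padded = np.pad(gray, ((top, kernel_h - 1 - top),
                           (left, kernel_w - 1 - left)), mode="edge")
    windows = sliding_window_view(padded, (kernel_h, kernel_w))
    response = np.einsum("ijkl,kl->ij", windows, flipped)
    mid = gray.shape[0] // 2
    # Upper half: the maximum marks the dark-to-bright step (first finger row).
    upper_edge = np.argmax(response[:mid], axis=0)
    # Lower half: the minimum marks the bright-to-dark step (first row below the finger).
    lower_edge = mid + np.argmin(response[mid:], axis=0)
    return upper_edge, lower_edge

def crop_roi(gray, upper_edge, lower_edge):
    """Keep only the rows that lie inside the finger in every column."""
    return np.asarray(gray)[upper_edge.max():lower_edge.min(), :]
```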

For the sake of hardware structure and resource utilization, we scaled both our training and testing datasets to 32 × 32 pixels after we fetched the ROI of the finger vein, as shown in Figure 6.
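The scaling step can be sketched with nearest-neighbor sampling in NumPy. The interpolation method is an assumption, since the text does not say which one was used, and `resize_nearest` is a hypothetical helper name.

```python
import numpy as np

def resize_nearest(gray, out_h=32, out_w=32):
    """Resize a 2-D image to out_h x out_w by nearest-neighbor sampling."""
    gray = np.asarray(gray)
    # Sample the source pixel under the center of each output pixel.
    rows = ((np.arange(out_h) + 0.5) * gray.shape[0] / out_h).astype(int)
    cols = ((np.arange(out_w) + 0.5) * gray.shape[1] / out_w).astype(int)
    return gray[np.ix_(rows, cols)]
```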

- (2) Data Augmentation

A neural network usually needs a large amount of data to learn the differences between each category. Here, we performed the augmentation process considering the following:

- (1) Since we used a high-resolution camera and scaled to a smaller size to perform the inference, the quality of the source image we captured was stable. There was therefore no need to train the network with Gaussian noise, coarse dropout, or random brightness.

- (2) We did not restrict the user’s finger position, so the finger could shift or rotate slightly. To address this issue, our augmentation process mainly focused on shifting and rotating.

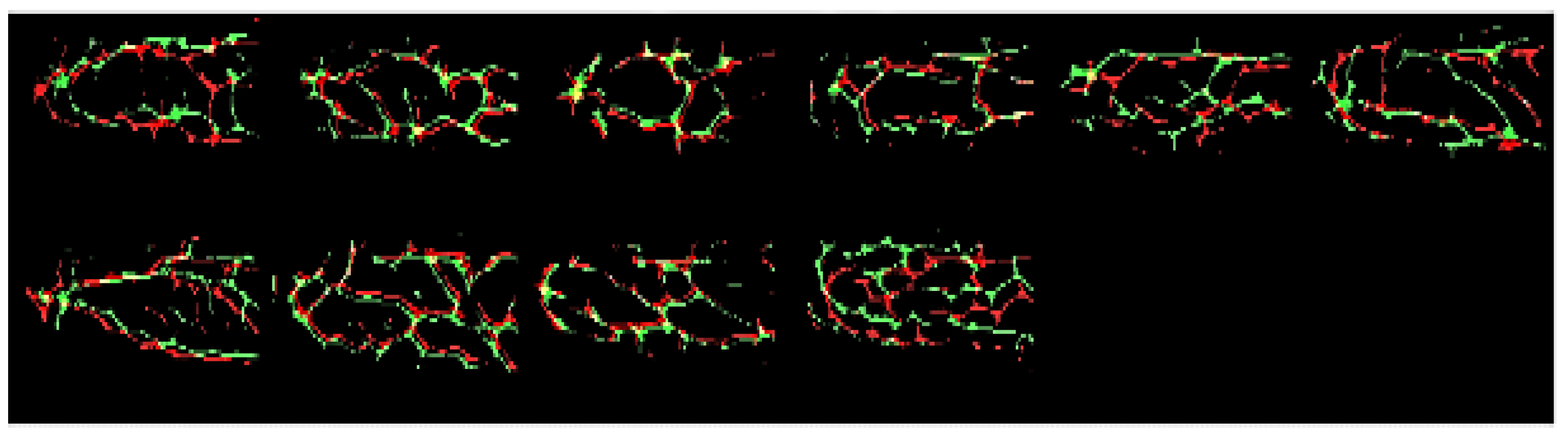

In the data augmentation phase, we compared the “registration” data, shown as green lines in Figure 7, and the “verification” data, shown as red lines. Since the rotation error mostly stayed within plus or minus five degrees and the shift error within plus or minus three pixels, we augmented the dataset only with random shifting and random rotation. The two different datasets and the augmentation strategy are shown in Figure 7 and Figure 8. After the dataset was completed, there were 1620 images for training, 180 for validation, and another 1620 for testing.
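The augmentation described above can be sketched as follows, assuming nearest-neighbor resampling, zero fill outside the image, and uniform sampling of shifts within ±3 pixels and rotations within ±5 degrees. The default of 30 variants per image is inferred from the reported growth from 60 to 1800 images; the text does not say whether the originals are counted among them. Both function names are hypothetical.

```python
import numpy as np

def shift_and_rotate(img, dx, dy, angle_deg):
    """Rotate img about its center by angle_deg, then shift it by (dx, dy) pixels.

    Uses nearest-neighbor inverse mapping; pixels that map outside are set to 0.
    """
    img = np.asarray(img)
    h, w = img.shape
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    theta = np.deg2rad(angle_deg)
    cos_t, sin_t = np.cos(theta), np.sin(theta)
    ys, xs = np.mgrid[0:h, 0:w]
    # For each output pixel, undo the shift, then undo the rotation.
    x0, y0 = xs - dx - cx, ys - dy - cy
    src_x = np.rint(cos_t * x0 + sin_t * y0 + cx).astype(int)
    src_y = np.rint(-sin_t * x0 + cos_t * y0 + cy).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(img)
    out[valid] = img[src_y[valid], src_x[valid]]
    return out

def augment(img, copies=30, max_shift=3, max_angle=5.0, seed=0):
    """Generate `copies` randomly shifted and rotated variants of one image."""
    rng = np.random.default_rng(seed)
    variants = []
    for _ in range(copies):
        dx, dy = rng.integers(-max_shift, max_shift + 1, size=2)
        angle = rng.uniform(-max_angle, max_angle)
        variants.append(shift_and_rotate(img, dx, dy, angle))
    return np.stack(variants)
```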

- (3) Neural Network Training

For neural network training, we used Convolutional Architecture for Fast Feature Embedding (Caffe) to establish the architecture and obtain trained parameters for FPGA inference. Caffe is a deep learning framework designed for expression, speed, and modularity [14]. In practice, a complete Caffe training run involves several classes: Blob, Layer, Net, and Solver. Blob is the N-dimensional array data type defined in Caffe; its main purpose is to hold data, derivatives, and parameters in the training and inference dataflow. Layer is Caffe’s fundamental unit of computation; basic layers such as Convolution and Pooling are defined in this class.

First, users can customize their own neural network using several layer declarations and then establish the network structure file, namely, the prototxt file. Second, in order to train with our own dataset, the image data had to be transformed into LMDB format and then referenced by the prototxt structure. Compared with TensorFlow [15], Caffe has a faster training speed and lower memory requirements.

2.2. Inference Stage

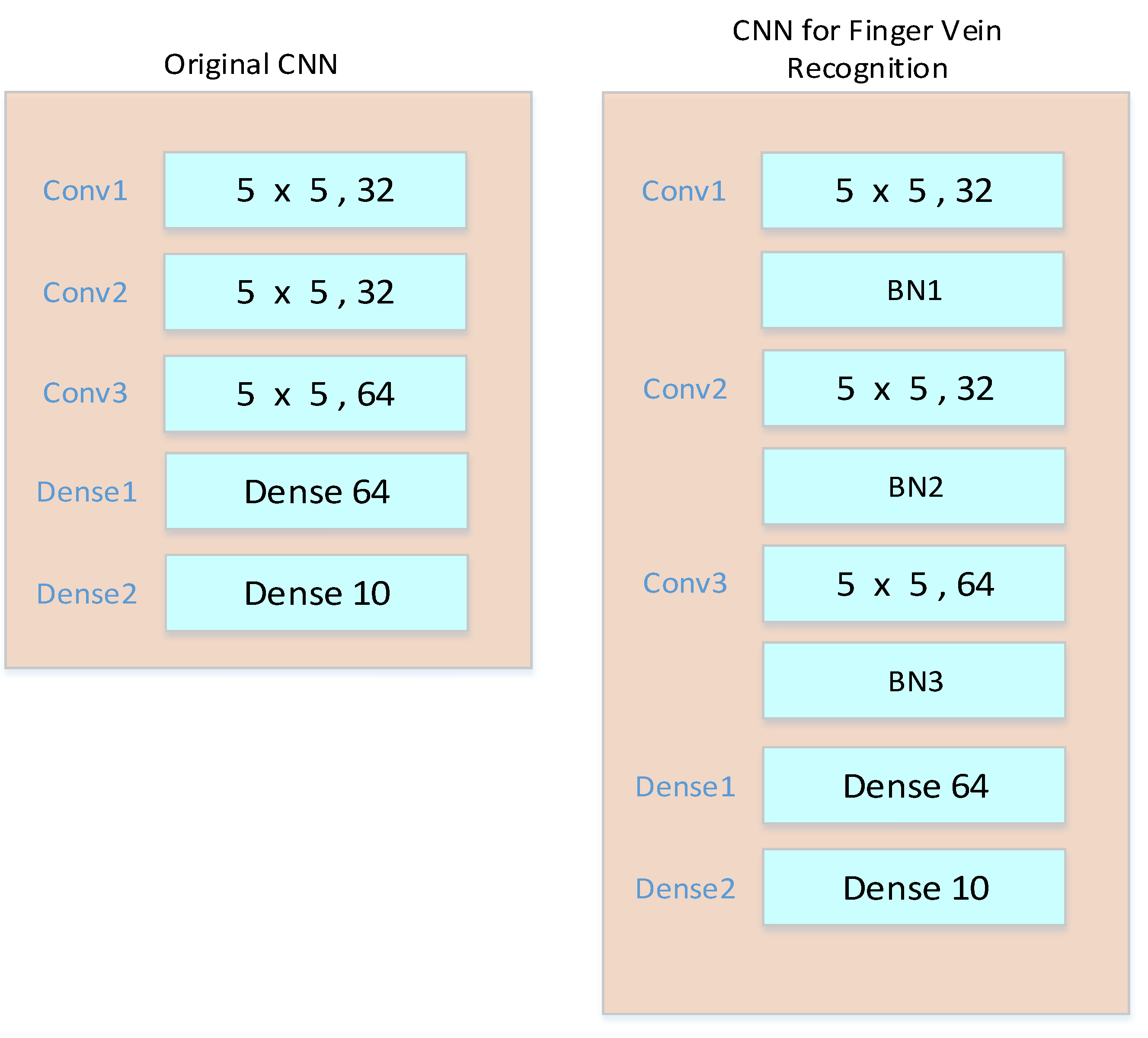

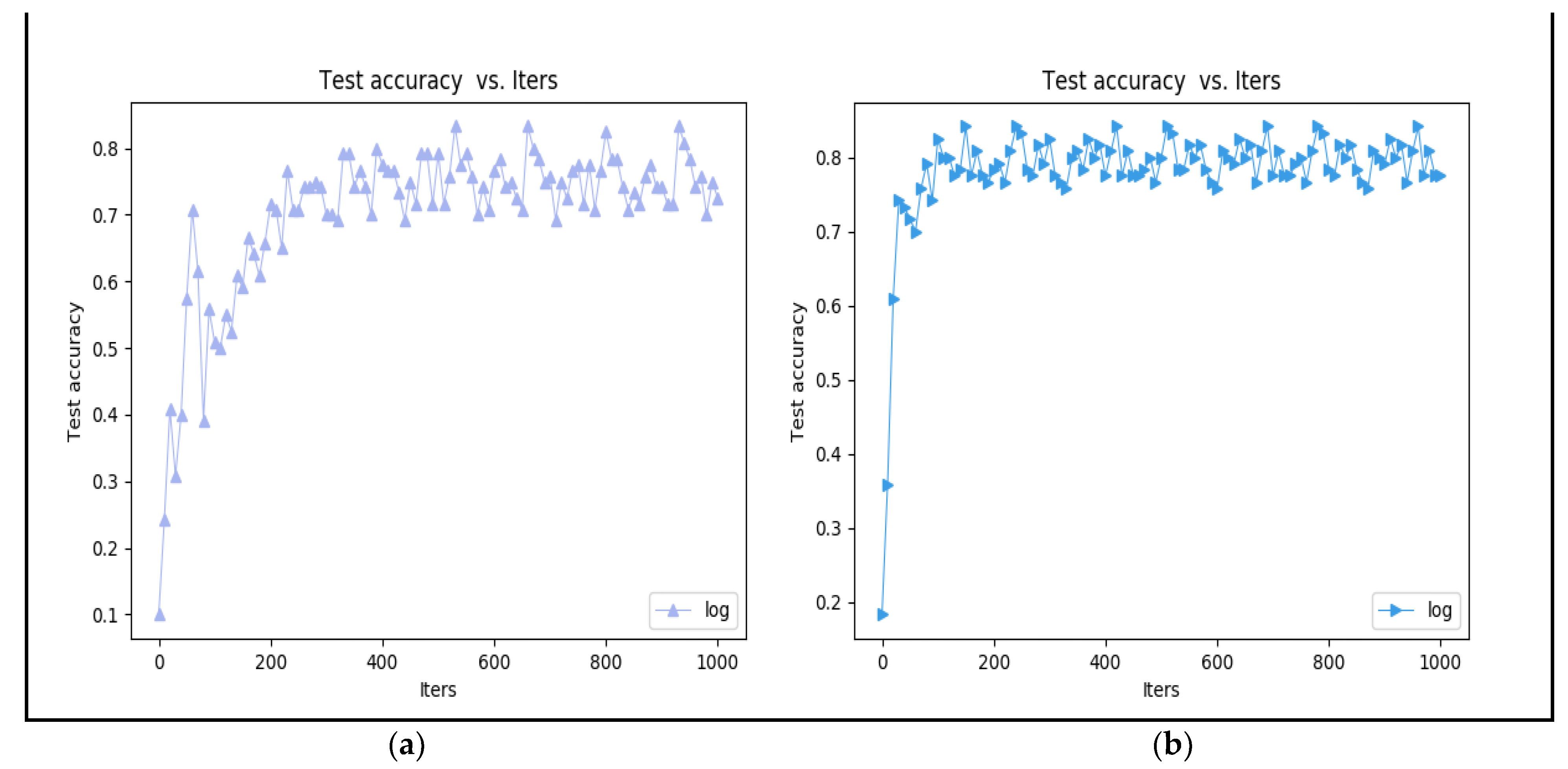

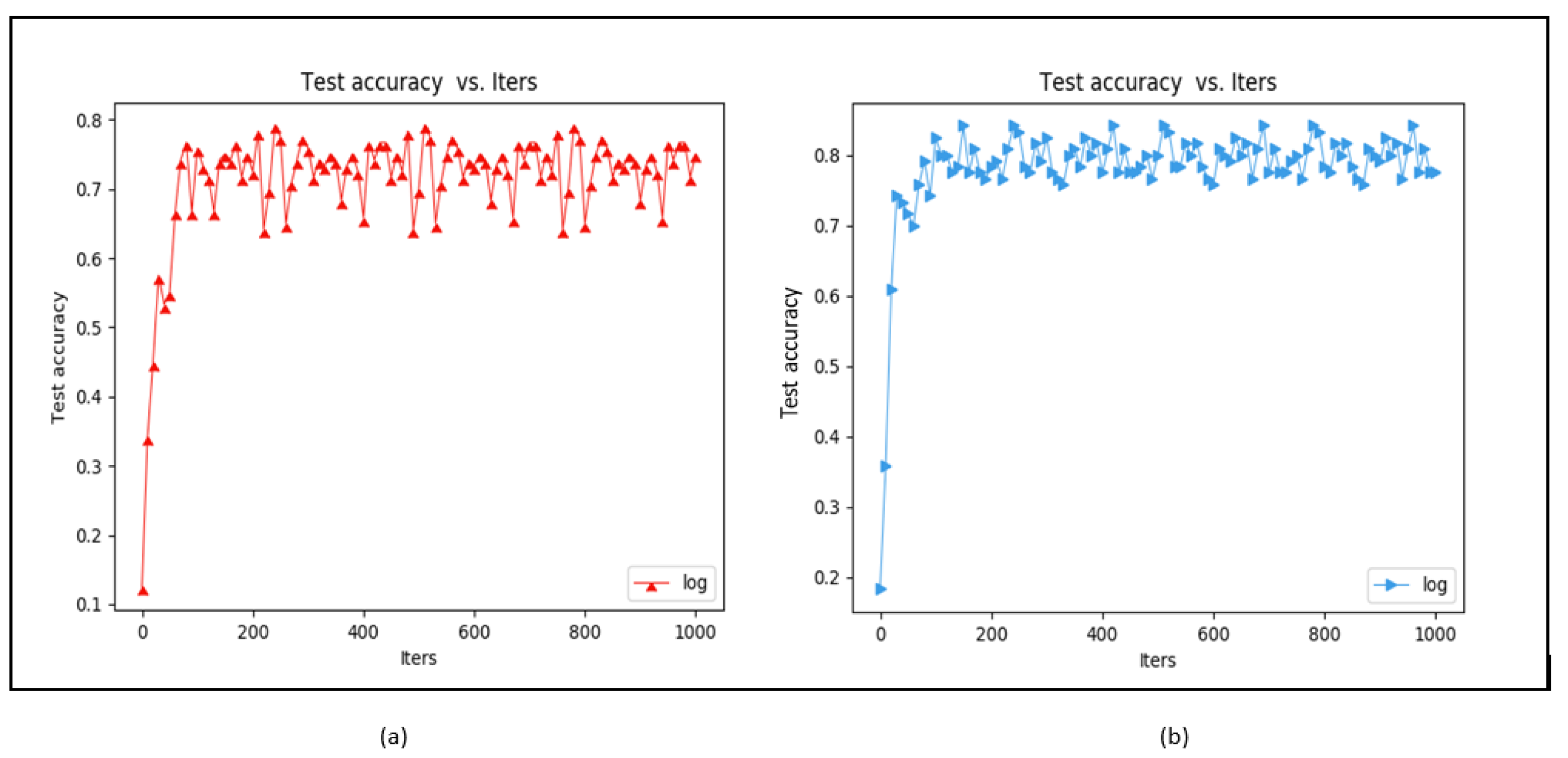

A modified CNN for finger vein recognition is proposed in this paper. The original CNN was obtained from the MNIST training model provided by Caffe, and the modified CNN with batch normalization (BN) can obtain higher accuracy. The original and modified CNNs are shown in Figure 9.

Vivado HLS was used to accelerate our neural network. The modified CNN consisted of three convolutional layers, with BN after each layer. This intellectual property (IP) featured im2col convolution, which can be supported by the matrix multiplication DSP provided by Xilinx. The acceleration ratio was 120× compared with the same network running in a Linux environment on a 650 MHz dual-core ARM Cortex-A9 CPU. Since the preprocessing in the inference phase was similar to the preprocessing in the training phase, the following sections focus on our neural network IP design. First, we designed a low-complexity, lightweight CNN. To meet the computing efficiency requirements of the FPGA hardware system, we had to ensure that the convolution algorithm occupied the largest proportion of the entire computing resources and that data were reused in the convolution stage. We implemented general matrix multiplications (GEMMs) to optimize the convolution operation in the IP design. GEMMs expand the feature map and the kernel into two main matrices and then complete the convolution by multiplying the two matrices. The worked example in this paper uses a 5 × 5 feature map with two channels and a 3 × 3 kernel. Maximum pooling is carried out by selecting the maximum value within the pooling size. The fully connected layer can be regarded as a 1 × 1 convolution. After the convolutional layer chain, the feature map is flattened into a one-dimensional (1D) stream and convolved with the input fully connected parameters. Finally, we used Jupyter Notebook [16] to connect the uploaded image and the IP. Jupyter Notebook is an interactive computing environment that enables users to manipulate their input data and call the IP directly. The detailed design is described as follows.

- (1) IP Structure

GEMMs [14,17], Winograd Transform [18], and Fast Fourier Transform [13] are well-known solutions to the issue of ensuring that the convolution algorithm occupies the largest proportion of the entire computing resources and that data are reused in the convolution stage. We implemented GEMMs to optimize the convolution operation in the IP design. The overall convolution IP flow is illustrated in Figure 10.

- (2) GEMMs

Before stepping into the dataflow in the IP, we need to introduce GEMMs. In a CPU or GPU, a common way to process convolutional and fully connected layers is to map them as matrix multiplication. GEMMs basically expand the feature maps and the kernels into two main matrices, and then the convolution is performed by multiplying the two matrices by each other. The details are illustrated in Figure 11.
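The expansion can be sketched in NumPy as an im2col step followed by a single matrix product. The sketch assumes stride 1, no padding, and cross-correlation as used by Caffe; the function names are illustrative and do not describe the HLS implementation.

```python
import numpy as np

def im2col(fmap, k):
    """Unfold a (C, H, W) feature map into a (C*k*k, out_h*out_w) matrix."""
    c, h, w = fmap.shape
    out_h, out_w = h - k + 1, w - k + 1
    cols = np.empty((c * k * k, out_h * out_w), dtype=fmap.dtype)
    row = 0
    for ch in range(c):
        for i in range(k):
            for j in range(k):
                # One row per kernel position: all inputs that this weight touches.
                cols[row] = fmap[ch, i:i + out_h, j:j + out_w].ravel()
                row += 1
    return cols

def conv_gemm(fmap, kernels):
    """Convolve a (C, H, W) input with (N, C, k, k) kernels via one matrix product."""
    n, c, k, _ = kernels.shape
    out_h, out_w = fmap.shape[1] - k + 1, fmap.shape[2] - k + 1
    weight_matrix = kernels.reshape(n, c * k * k)   # one row per output channel
    return (weight_matrix @ im2col(fmap, k)).reshape(n, out_h, out_w)
```

For a 5 × 5 feature map with two channels and a 3 × 3 kernel, the unfolded input matrix has 18 rows and 9 columns, so each output pixel becomes one dot product of length 18.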

- (3) SMM

After the two matrices are finished, the multiplication is carried out in this stage. Take a 5 × 5 feature map and 3 × 3 kernel with two channels as an example. Figure 12 shows the calculated details for this simple example.

Max pooling is performed by choosing the maximum value within the pooling size. To achieve this goal, we compared each input value with the previous value. That is, three comparisons were carried out for a 2 × 2 max pooling.
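The running comparison can be sketched as follows for a 2 × 2 window with stride 2 on a map with even height and width. The stride and the function name are assumptions of this sketch.

```python
import numpy as np

def max_pool_2x2(fmap):
    """2 x 2 max pooling (stride 2) on an (H, W) map with even H and W."""
    a = fmap[0::2, 0::2]   # top-left value of every window
    b = fmap[0::2, 1::2]   # top-right
    c = fmap[1::2, 0::2]   # bottom-left
    d = fmap[1::2, 1::2]   # bottom-right
    running = a
    for nxt in (b, c, d):  # three comparisons per window
        running = np.maximum(running, nxt)
    return running
```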

Technically, a fully connected layer can be viewed as a 1 × 1 convolution. After the convolutional layer chains, the feature maps are flattened into a 1D stream and convolved with the input fully connected parameters.
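To make the equivalence concrete, the sketch below treats the flattened feature map as D channels of size 1 × 1 and applies N kernels of shape (D, 1, 1). The shapes and the function name are assumptions chosen for illustration.

```python
import numpy as np

def fc_as_1x1_conv(fmap, weights, bias):
    """Apply a fully connected layer as a 1 x 1 convolution over a flattened map.

    fmap: (C, H, W); weights: (N, C*H*W); bias: (N,). Returns (N,) scores.
    """
    stream = fmap.reshape(-1, 1, 1)                         # D channels of size 1 x 1
    kernels = weights.reshape(weights.shape[0], -1, 1, 1)   # N kernels of shape (D, 1, 1)
    out = np.einsum("ndij,dij->n", kernels, stream)
    return out + bias
```

The result equals the usual dense product `weights @ fmap.ravel() + bias`, which is why the same multiply-accumulate hardware can serve both layer types.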

- (4) Batch Normalization (BN)

Batch normalization (BN) [19] is known for reducing the internal covariate shift by normalizing the inputs to have a mean of 0 and a standard deviation of 1. Two parameters, β and γ, are obtained from mini-batches in the training phase and used for the inputs in the inference stage. γ is the scale of the standard deviation parameter, and β is the shift of the mean parameter. A detailed formula for the BN during the training stage is shown in Equation (2), where B is the mini-batch size, C is the depth of input feature maps (IFMs), N is the depth of output feature maps (OFMs), OF_W is the output feature map width, OF_H is the output feature map height, σ is the mini-batch variance, and ε is a negligible constant.
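For reference, the standard BN transform of [19] can be written with these symbols as in the sketch below. The index names b, n, h, and w, the per-channel mean μ_n, and the notation σ_n² for the mini-batch variance are assumptions made here for readability, so this should be read as the textbook form under those assumptions.

```latex
\begin{aligned}
\mu_n &= \frac{1}{B \cdot OF_H \cdot OF_W} \sum_{b=1}^{B} \sum_{h=1}^{OF_H} \sum_{w=1}^{OF_W} x_{b,n,h,w}, \\
\sigma_n^2 &= \frac{1}{B \cdot OF_H \cdot OF_W} \sum_{b=1}^{B} \sum_{h=1}^{OF_H} \sum_{w=1}^{OF_W} \left( x_{b,n,h,w} - \mu_n \right)^2, \\
y_{b,n,h,w} &= \gamma_n \, \frac{x_{b,n,h,w} - \mu_n}{\sqrt{\sigma_n^2 + \varepsilon}} + \beta_n, \qquad n = 1, \dots, N.
\end{aligned}
```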

At the inference stage, β and γ are used for scaling and shifting after the inputs are normalized by the maximum and minimum values in the input feature map. The scaling and shifting parts only involve multiplication, while normalization requires division, which could consume a large amount of computational resources on our FPGA board. The normalization formula can be described as follows:

There are two ways of implementing this equation: (1) directly compute the division in the IP, or (2) preprocess the parameters in Python before sending them to the data stream. Initially, we tried the first approach, but it failed because our convolution parameters ranged from 0 to 1 while the batch norm parameters pretrained by Caffe exceeded this range, so the fixed-point arithmetic overflowed. Therefore, we moved to the second approach, because the output was obtained by dividing the input by a variable pretrained by the neural network. To simplify the hardware architecture and save resources, we divided the batch norm layer parameters using NumPy before sending them into the data stream.
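One common way to carry out such preprocessing is to fold the BN statistics into a per-channel multiplier and offset, so that the hardware only performs a multiply-add. The sketch below assumes the standard BN inference form with a stored mean and variance per channel; the function names and the value of `eps` are illustrative, and reading the values out of the Caffe model is outside the sketch.

```python
import numpy as np

def fold_batch_norm(gamma, beta, mean, var, eps=1e-5):
    """Fold BN statistics into one per-channel multiplier and one offset."""
    scale = gamma / np.sqrt(var + eps)   # the only division, done offline
    shift = beta - mean * scale
    return scale, shift

def batch_norm_inference(x, scale, shift):
    """Apply folded BN to a (C, H, W) feature map with multiply-add only."""
    return x * scale[:, None, None] + shift[:, None, None]
```

With the parameters folded this way, no division has to be implemented in the IP.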

5. Conclusions

In this paper, we proposed a low-complexity and lightweight CNN-based finger vein recognition system with edge computing that can achieve a faster inference time and maintain a high-precision system recognition rate. Based on the equipment specifications of the existing finger vein image acquisition products on the market, we designed and developed our own finger vein image capture device to collect the finger vein images for this study. Specifically, a set of darkroom photography equipment that conforms to the NIR wavelength of 850 nm was used.

The modified CNN-based finger vein recognition system consists of three convolutional layers, and BN is performed after each layer. Several preprocessing techniques and the improved CNN were combined to obtain better image quality and higher recognition accuracy. The implementation details were described in this article. At the same time, experiments were performed using a variety of application scenarios (including finger vein images obtained from fingers at different temperatures, HRs, levels of cleanliness, etc., as well as standardized personal finger vein images). These experiments illustrated the robustness and practicality of the proposed system.

The proposed system has potential for both design flexibility and commercialization. The target market application is miniaturized community security systems. For a community-based smart security system connected to a smart city, only a very small amount of personal recognition data would need to be added to the database, and we would only need to retrain the personal feature items to complete the security system update while maintaining high efficiency and high accuracy.