High-Quality 3D Visualization System for Light-Field Microscopy with Fine-Scale Shape Measurement through Accurate 3D Surface Data

Abstract

1. Introduction

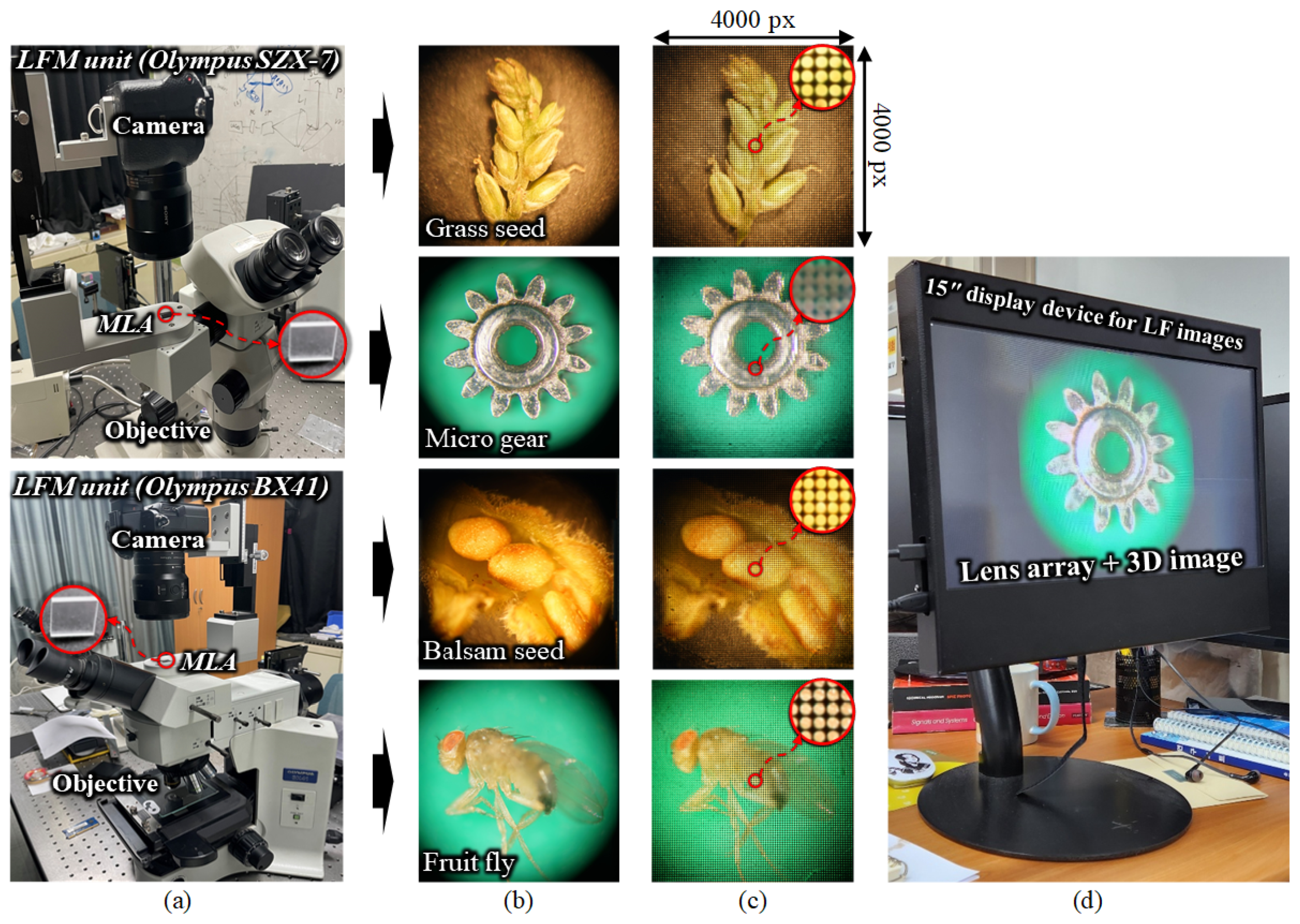

2. Principle of Light-Field Microscope

3. Materials and Methods

3.1. Optical Design

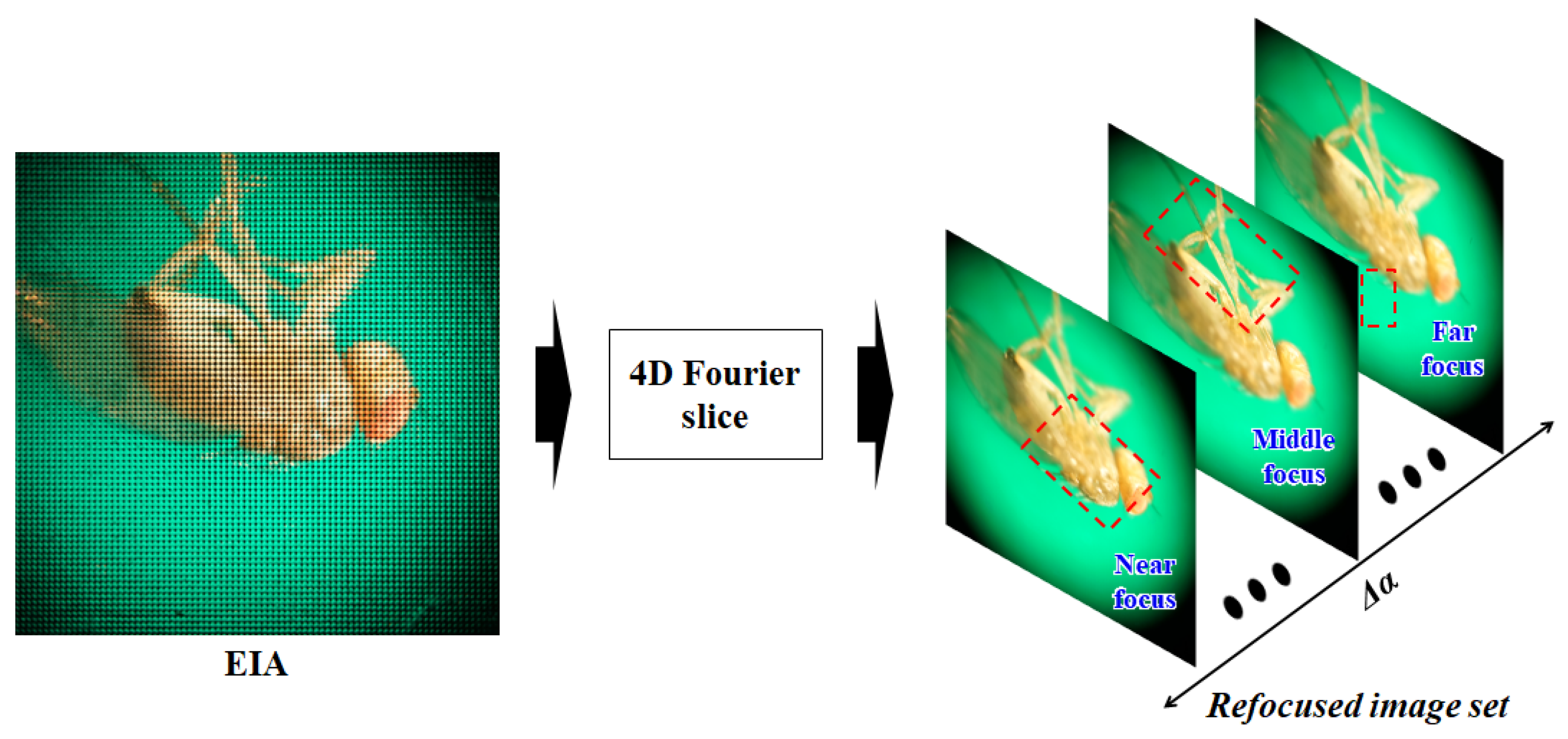

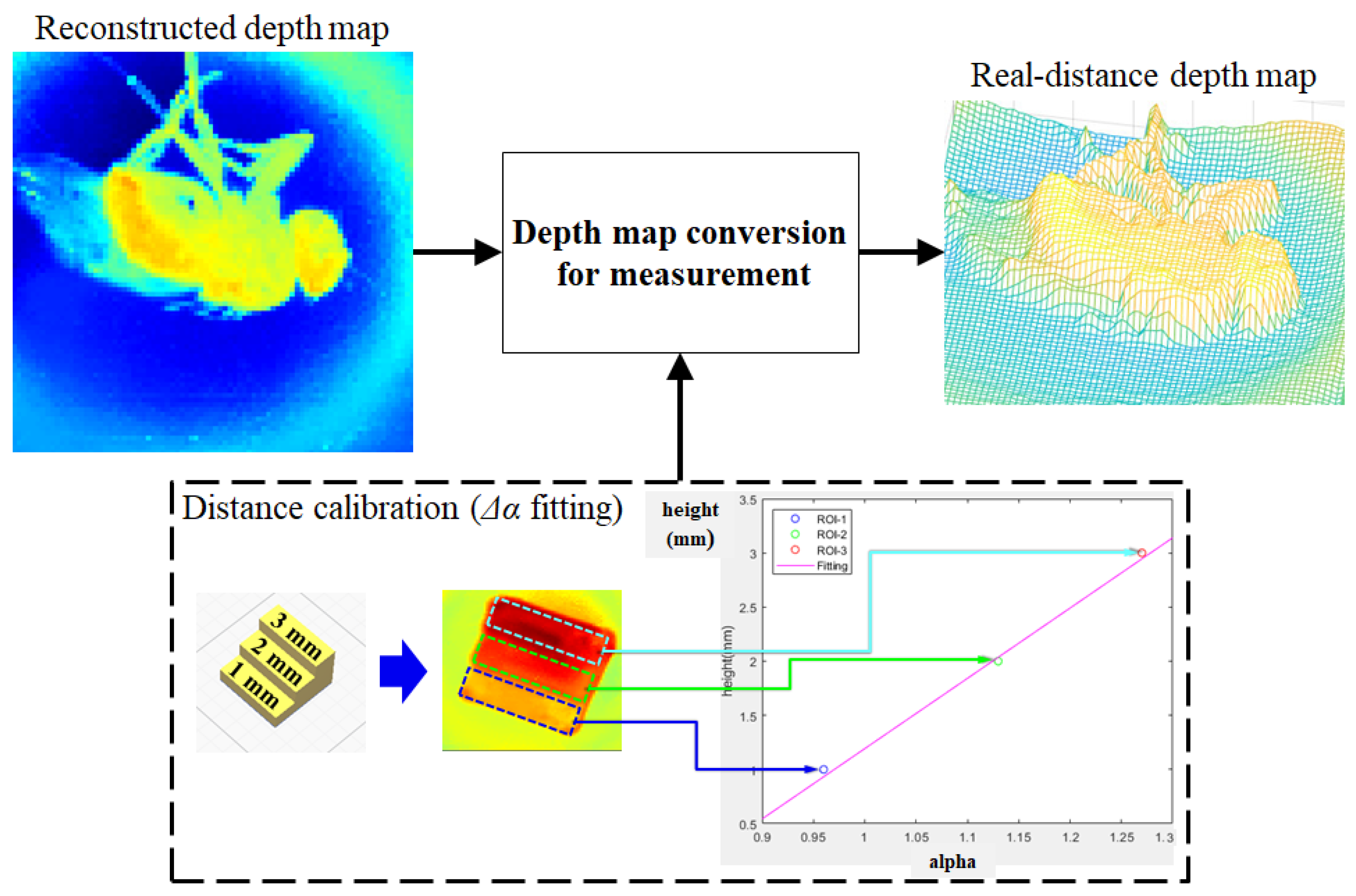

3.2. Depth Estimation Method and Measurement

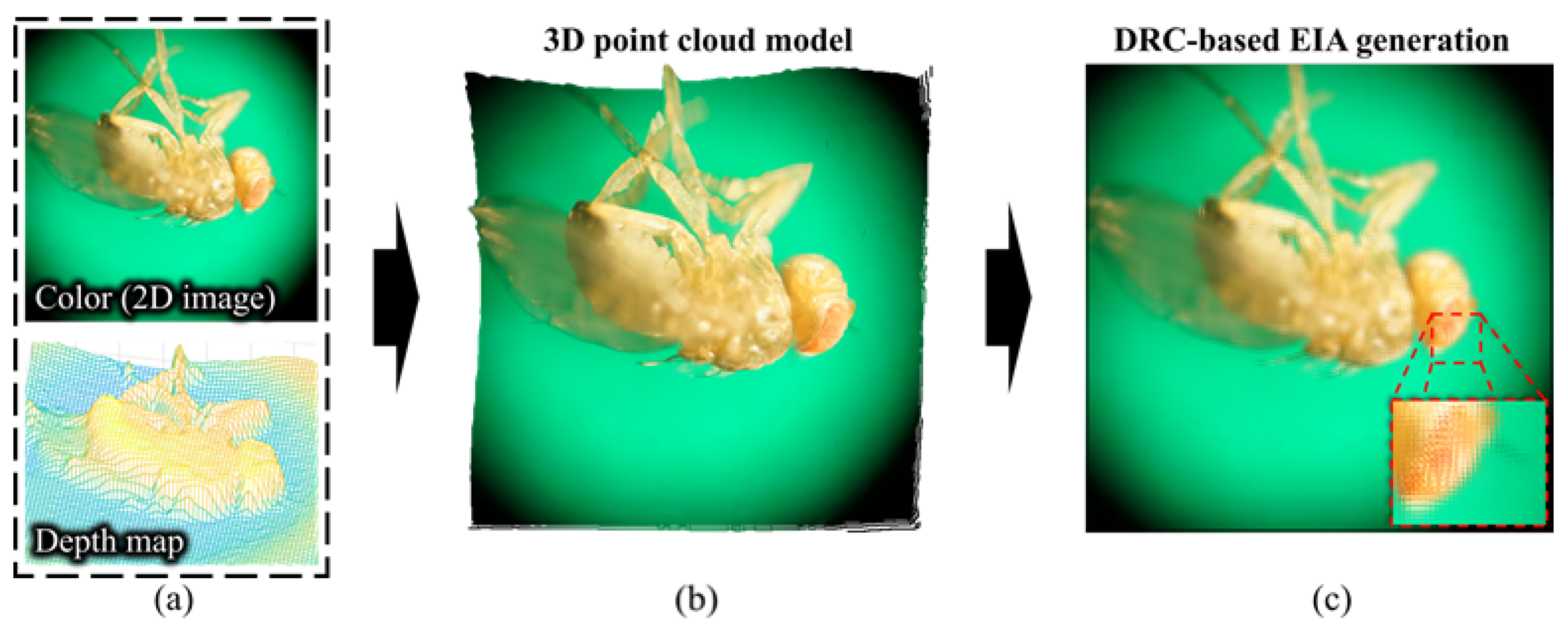

3.3. 3D Model Generation and 3D Visualization

4. Results and Discussion

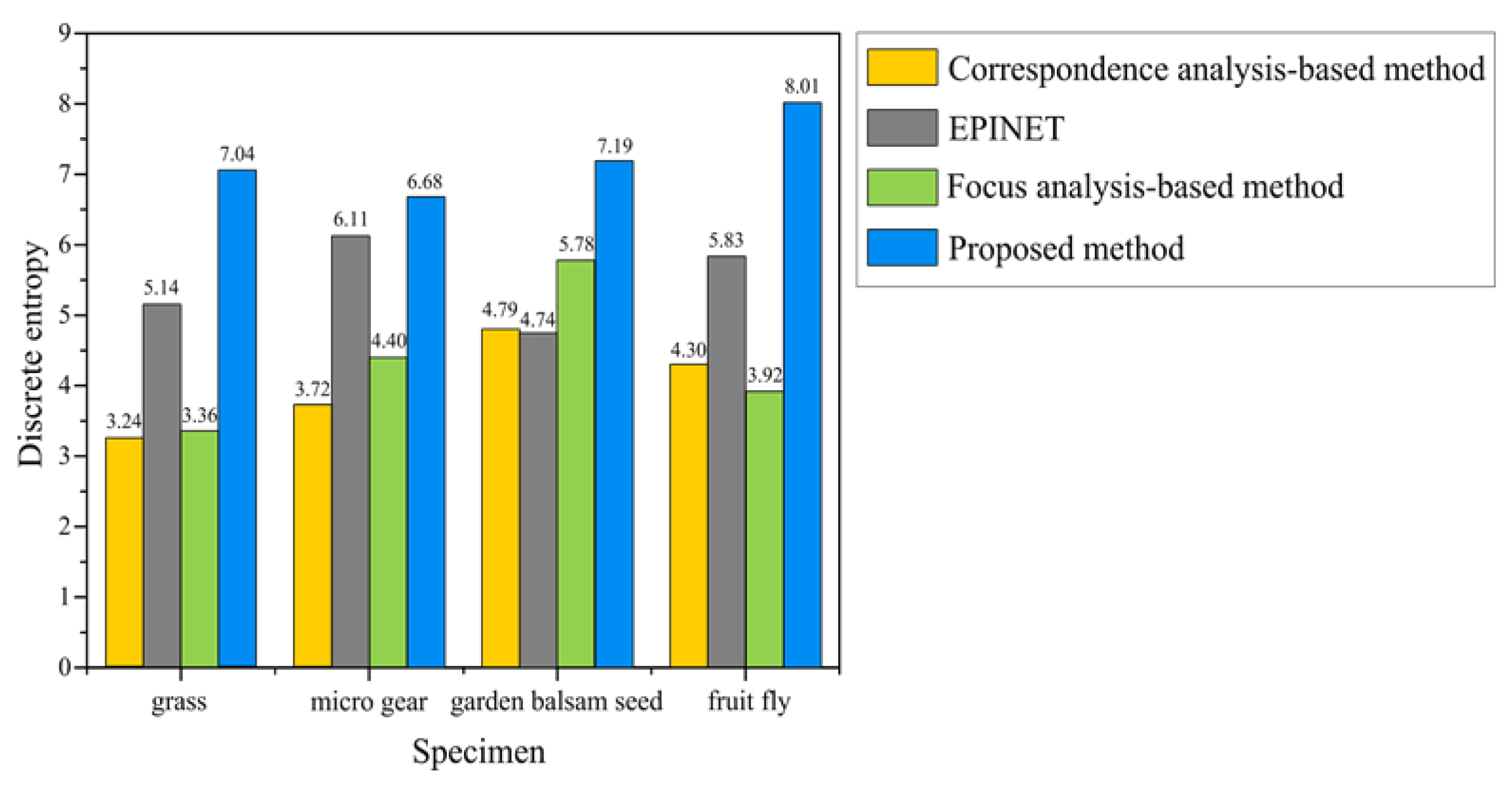

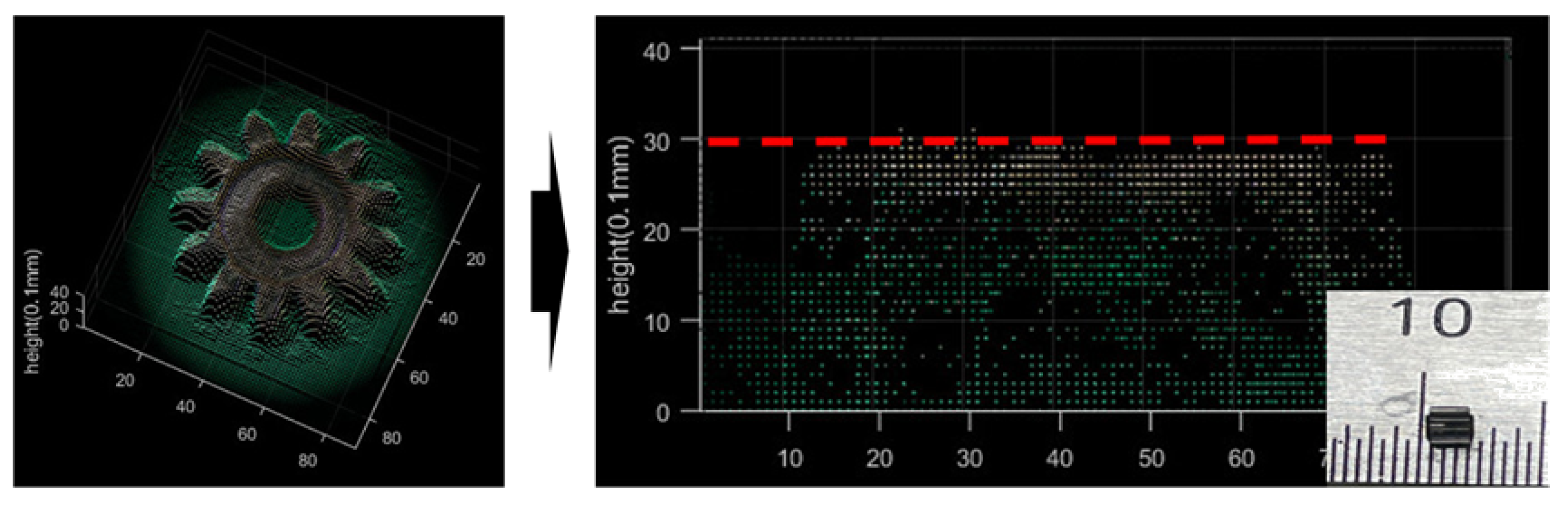

4.1. Depth Estimation

4.2. Measurement Analysis

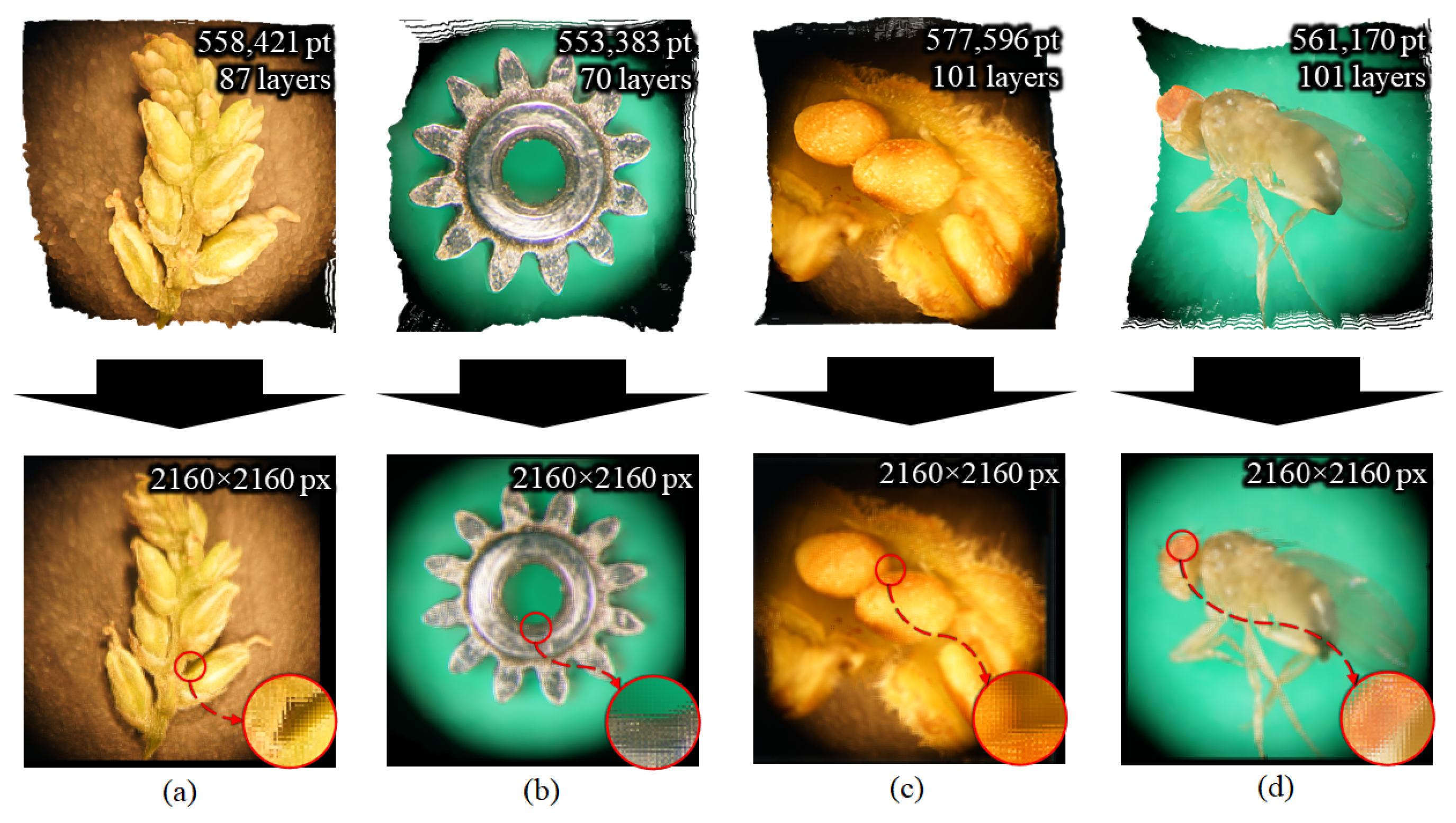

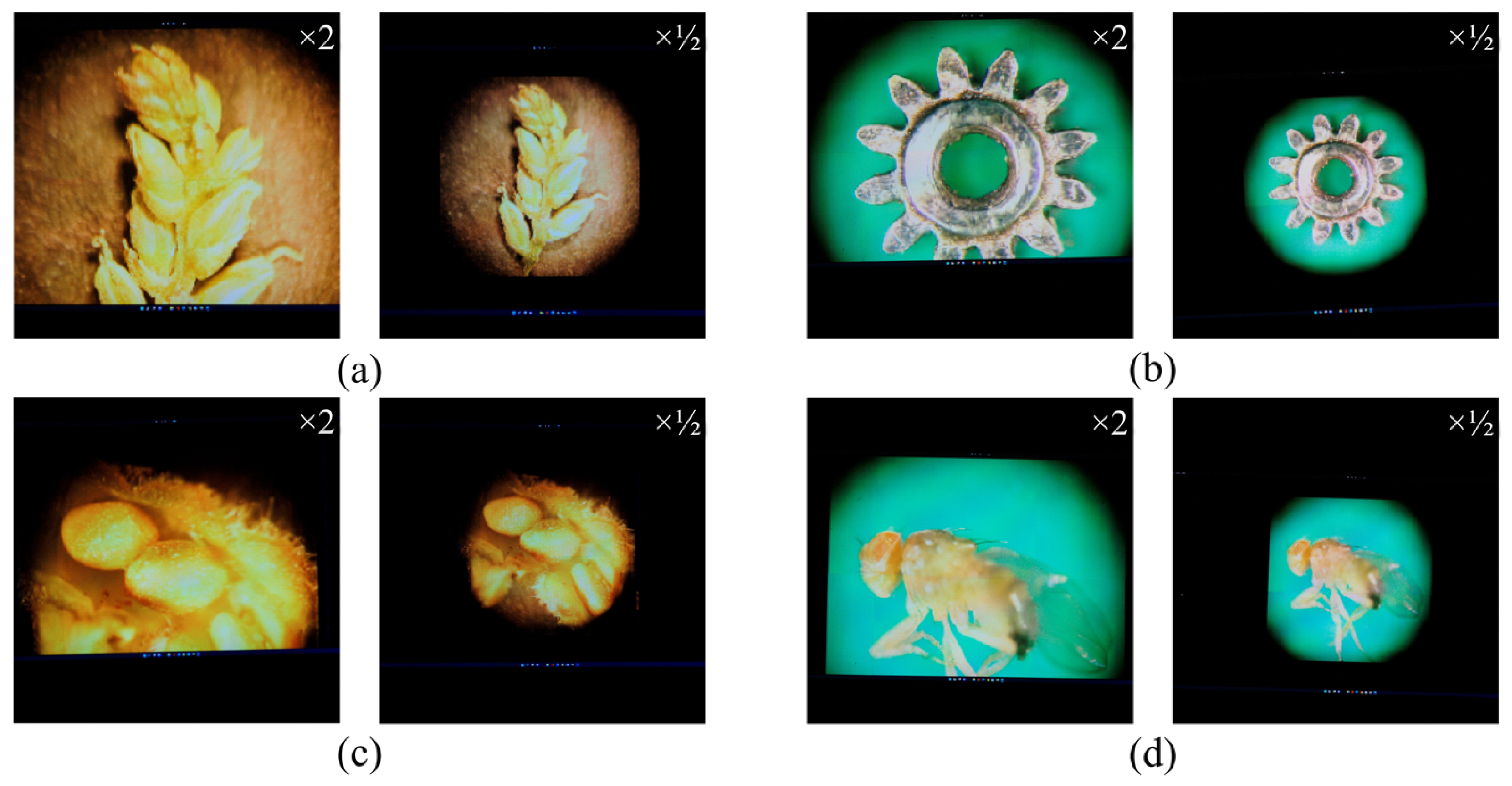

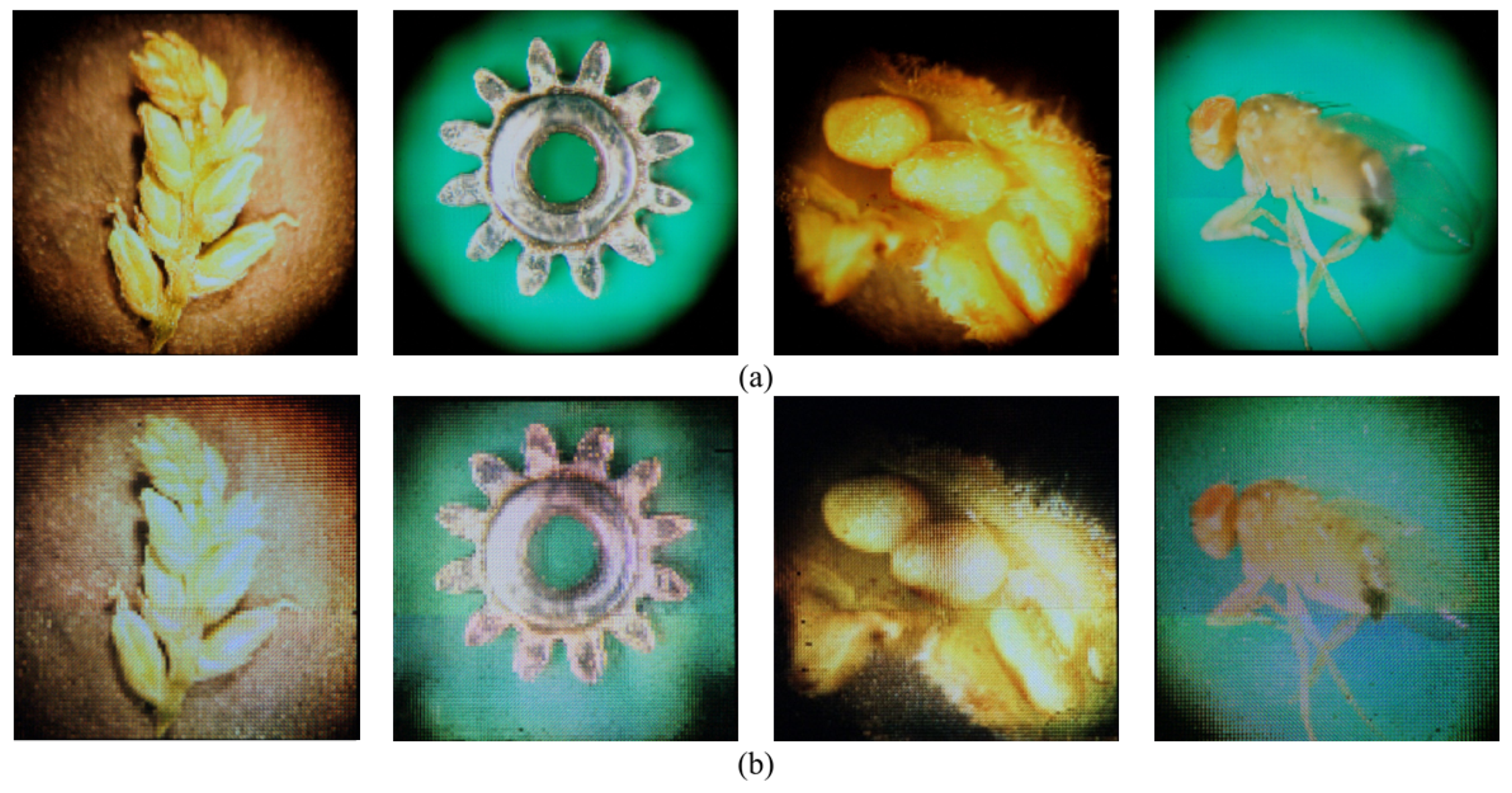

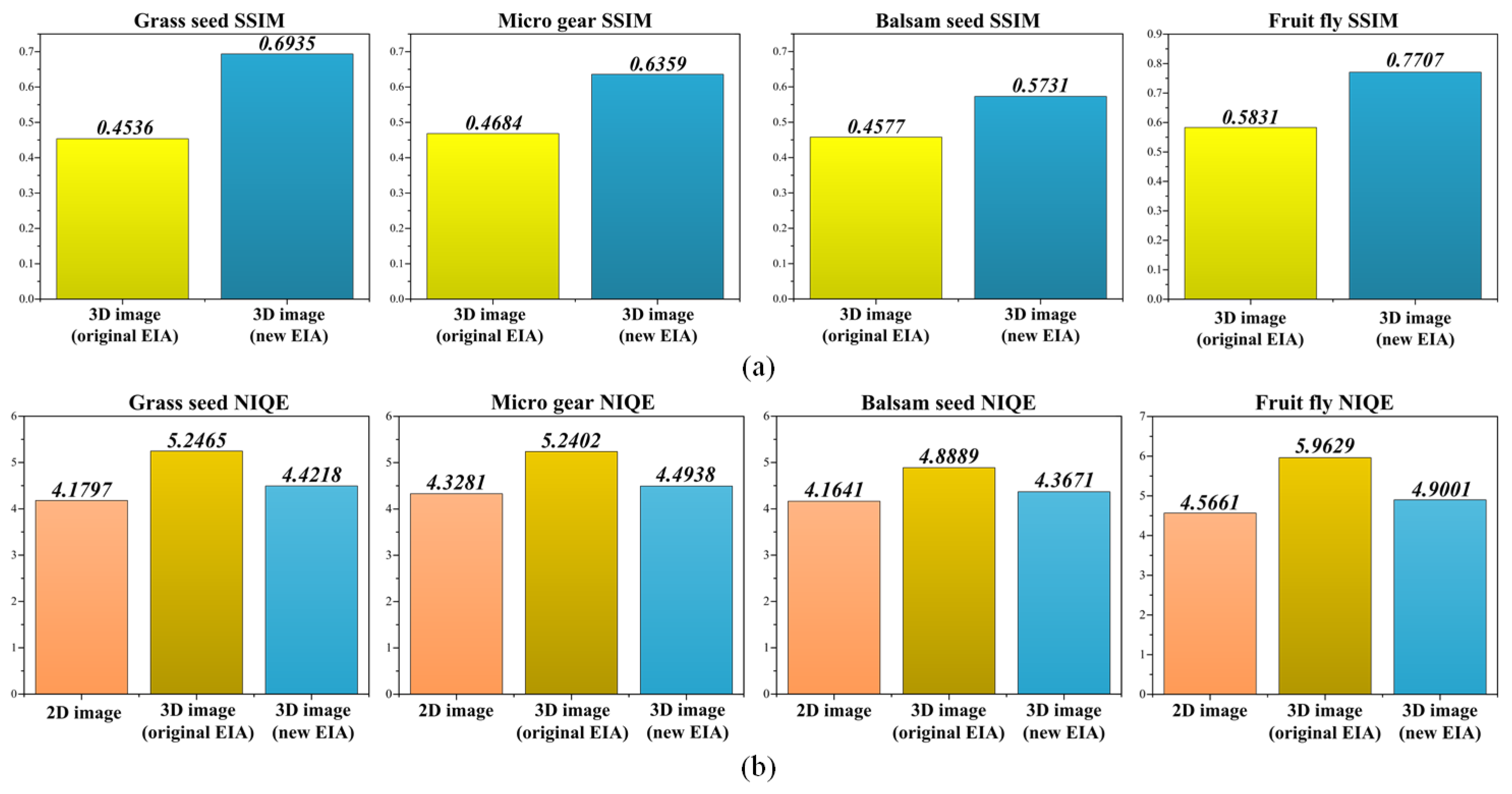

4.3. 3D Visualization Based on the 3D Model

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Devices | Indices | Specifications |
|---|---|---|
| Microscope device | For low magnification (SZX-7) | Objective ×1, NA = 0.1 (working distance 90 mm); zoom body 7:1 (magnification range of 8×–56×) |
| | For high magnification (BX41) | Objective ×10, NA = 0.3 (working distance 10 mm) |
| MLA | Number of lenses | 100 × 100 lenses |
| | Elemental lens pitch | 125 µm |
| | Focal length | 2.4 mm |
| Camera | Model | Sony 6000 |
| | Sensor resolution | 6000 × 4000 pixels |
| | Pixel pitch | 3.88 µm |
| PC | CPU | Intel i7-8700 3.2 GHz |
| | Memory | 16 GB |
| | Operating system | Windows 10 Pro (64-bit) |
| Display device | Screen size | 15-inch (345 × 194 mm) |
| | Resolution | 4K (3840 × 2160 px) |
| | Pixel pitch | 0.089 mm |
| Lens array for 3D display | Focal length | 3.3 mm |
| | Elemental lens pitch | 1 mm |
| | Number of lenses of lens array | 345 × 194 lenses |
| | Thickness of acrylic plate | 6 mm |
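As a rough consistency check on the display rows of the specification table, the short sketch below recomputes the lens count from the screen size and lens pitch, and estimates how many display pixels sit behind each elemental lens. The function and constant names are illustrative only, and this is a back-of-the-envelope calculation from the tabulated values, not the authors' procedure.

```python
# Display parameters copied from the specification table.
SCREEN_MM = (345.0, 194.0)        # screen width and height in mm
DISPLAY_PIXEL_PITCH_MM = 0.089    # display pixel pitch in mm
DISPLAY_LENS_PITCH_MM = 1.0       # elemental lens pitch of the display lens array in mm


def lenses_across(screen_mm, lens_pitch_mm):
    """Whole elemental lenses that fit along each screen dimension."""
    return tuple(int(side // lens_pitch_mm) for side in screen_mm)


def pixels_per_lens(lens_pitch_mm, pixel_pitch_mm):
    """Display pixels covered by one elemental lens along one axis."""
    return lens_pitch_mm / pixel_pitch_mm


lens_grid = lenses_across(SCREEN_MM, DISPLAY_LENS_PITCH_MM)
px_per_lens = pixels_per_lens(DISPLAY_LENS_PITCH_MM, DISPLAY_PIXEL_PITCH_MM)

print(lens_grid)               # (345, 194)
print(round(px_per_lens, 2))   # 11.24
```

The lens grid matches the 345 × 194 lenses listed in the table. The figure of roughly 11 pixels per lens along each axis is only an estimate implied by the two pitches.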


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kwon, K.H.; Erdenebat, M.-U.; Kim, N.; Khuderchuluun, A.; Imtiaz, S.M.; Kim, M.Y.; Kwon, K.-C. High-Quality 3D Visualization System for Light-Field Microscopy with Fine-Scale Shape Measurement through Accurate 3D Surface Data. Sensors 2023, 23, 2173. https://doi.org/10.3390/s23042173
