Controlling Upper Limb Prostheses Using Sonomyography (SMG): A Review

Abstract

1. Introduction

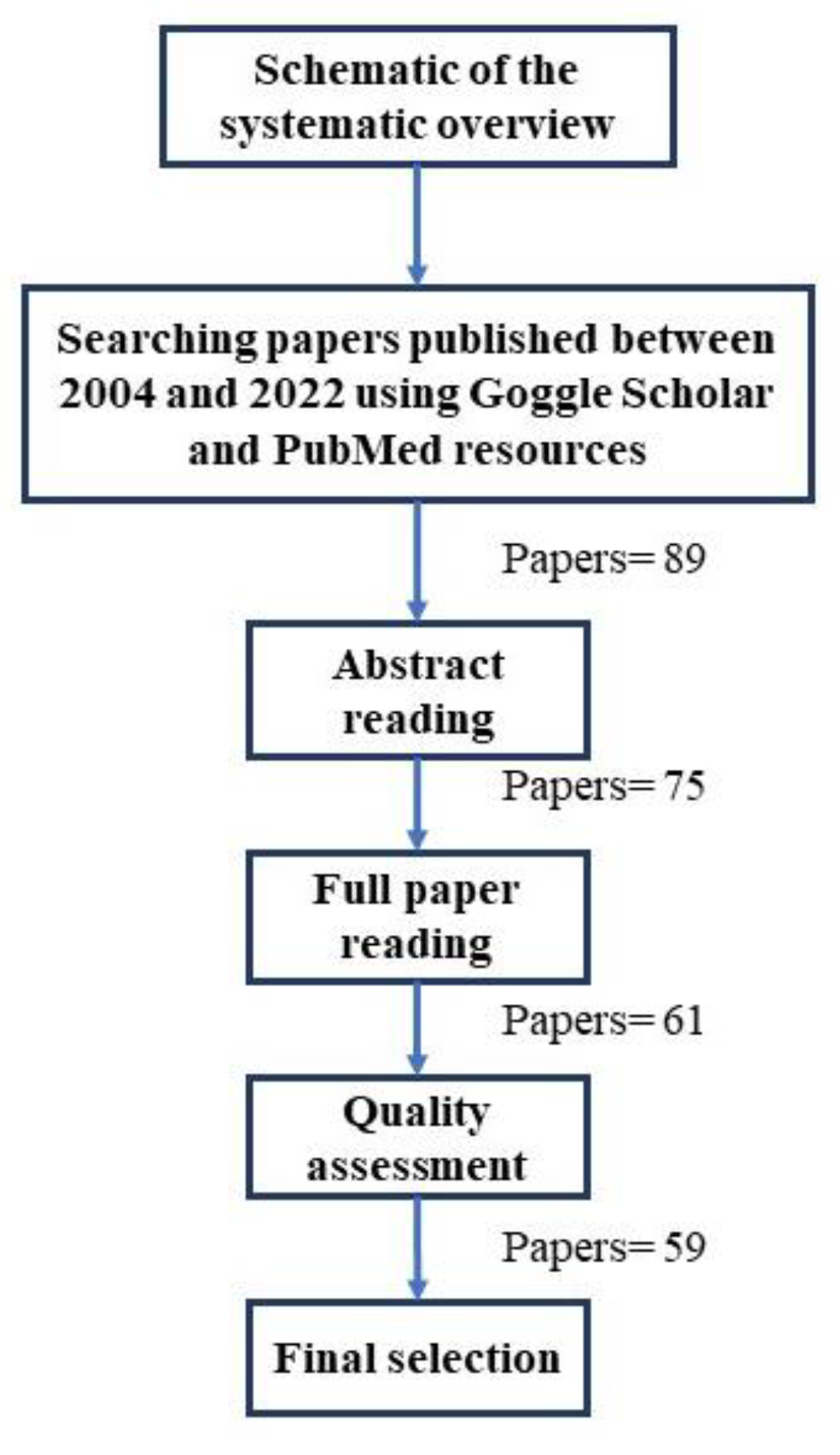

2. Methodology

3. Sonomyography (SMG)

3.1. Ultrasound Modes Used in SMG

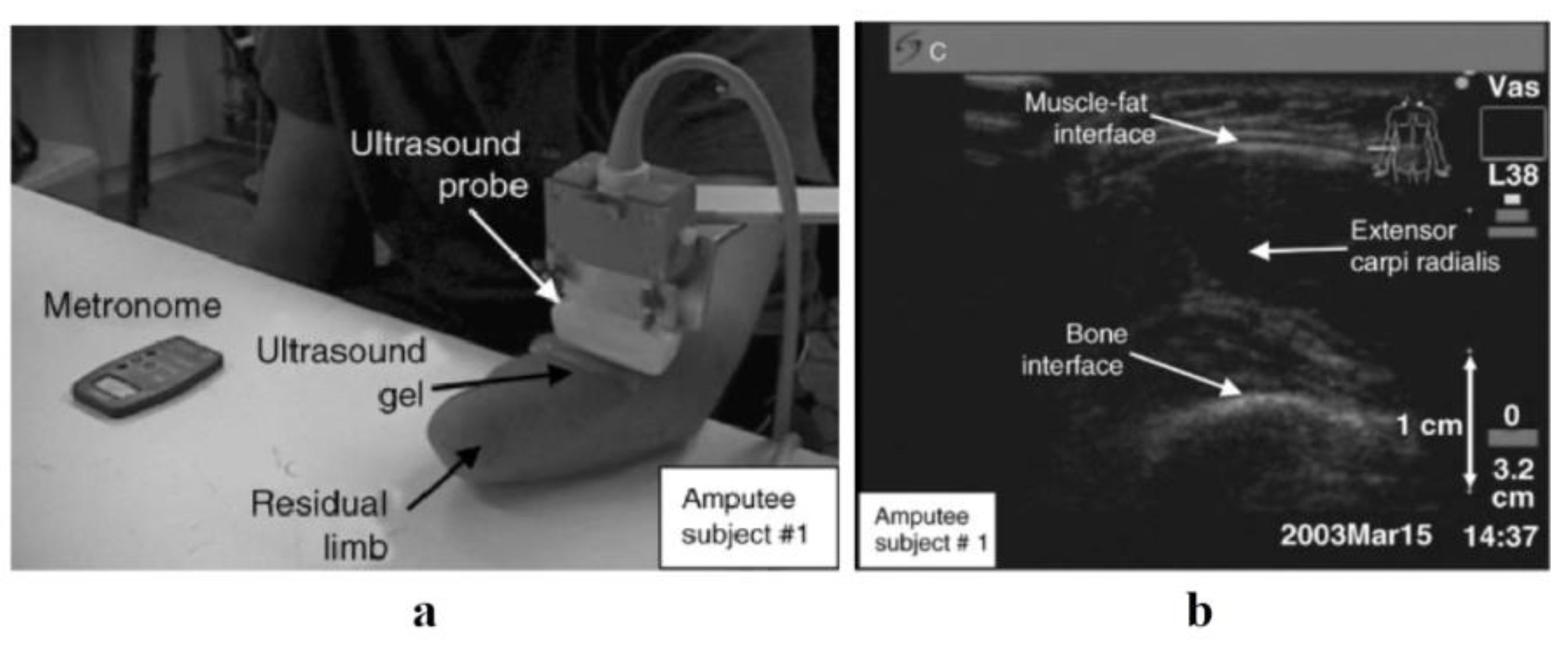

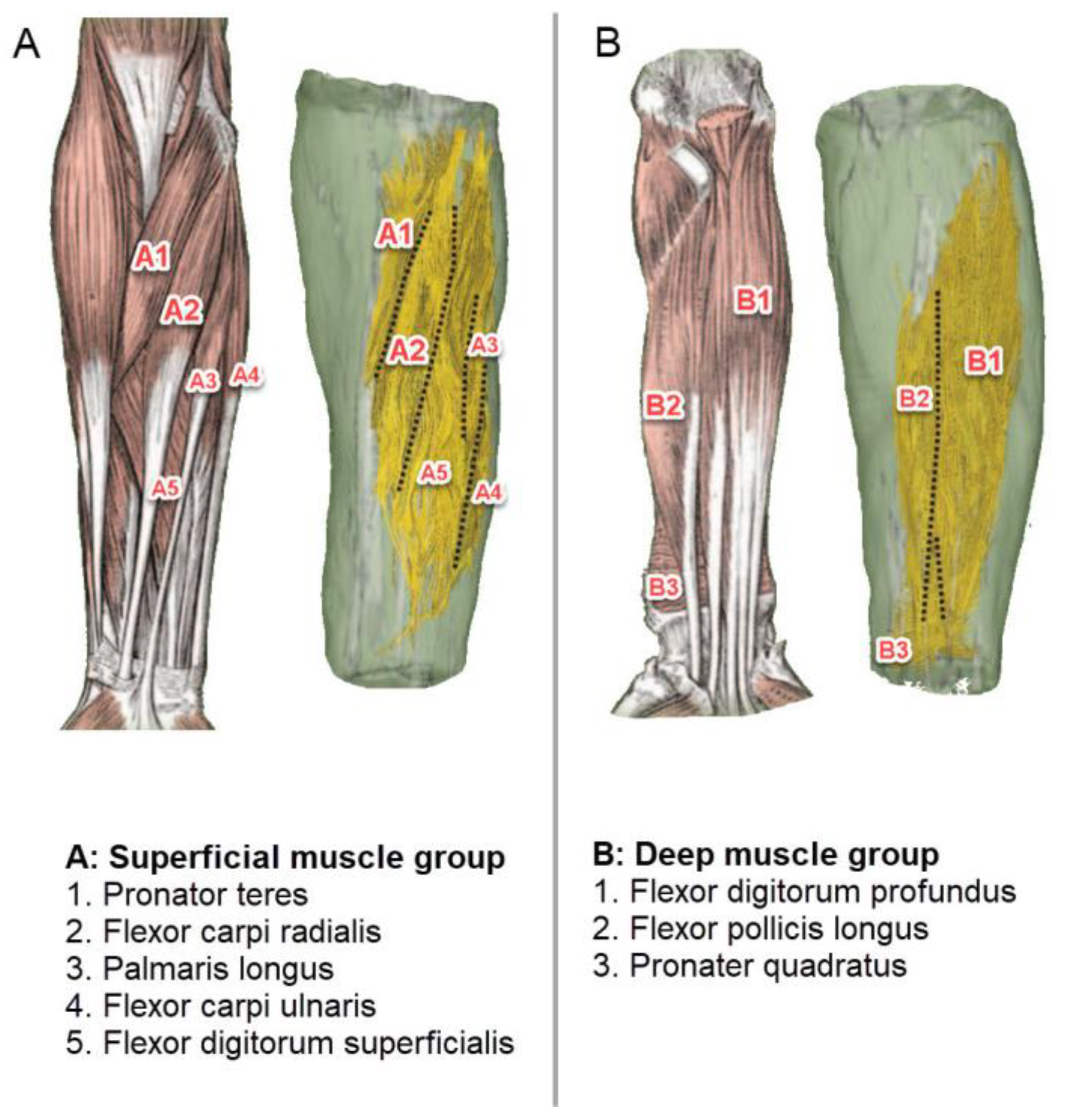

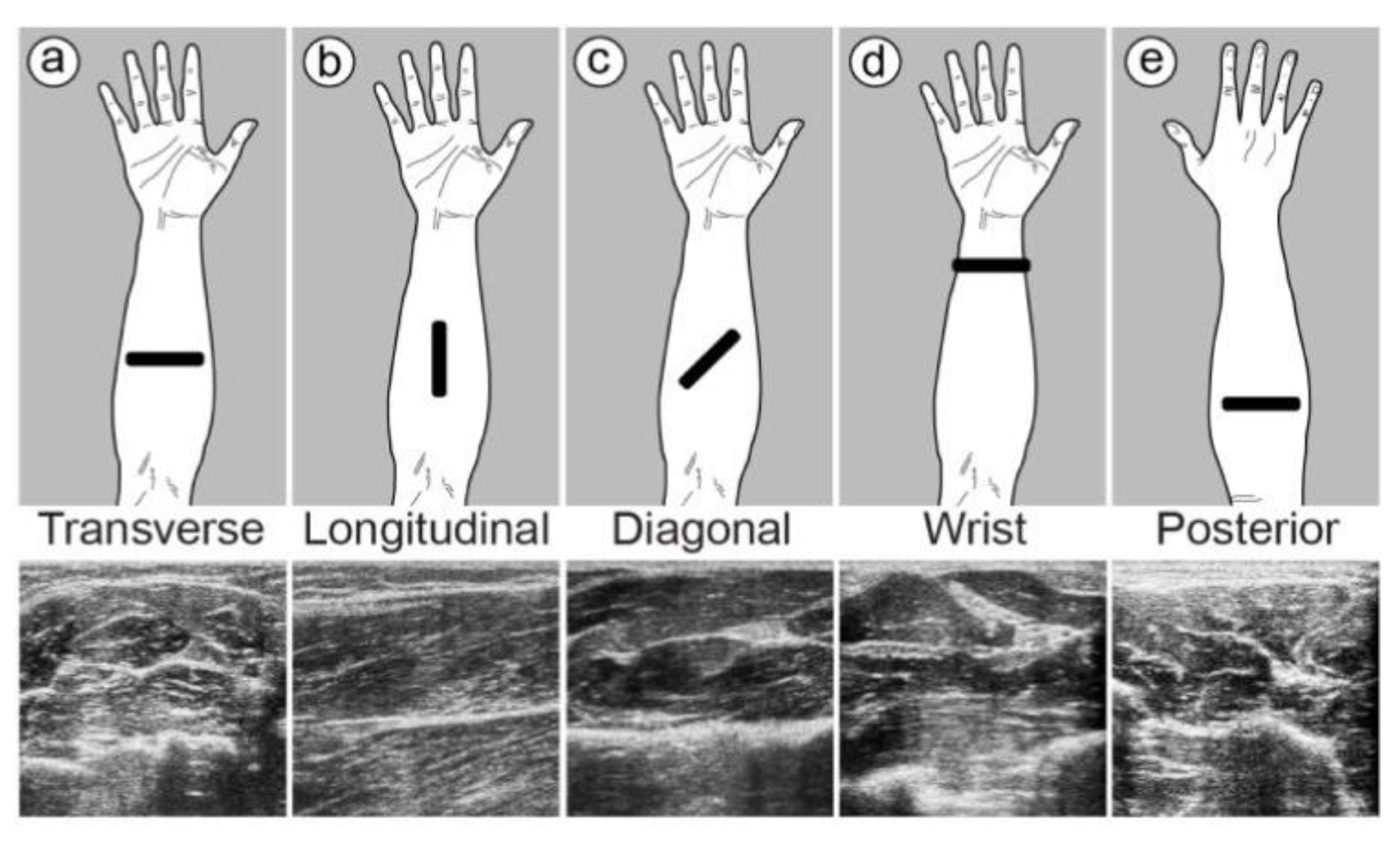

3.2. Muscle Location and Probe Fixation

3.3. Feature Extraction Algorithm

3.4. Artificial Intelligence in Classification

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Van Dijk, L.; van der Sluis, C.K.; van Dijk, H.W.; Bongers, R.M. Task-oriented gaming for transfer to prosthesis use. IEEE Trans. Neural Syst. Rehabil. Eng. 2015, 24, 1384–1394.

- Liu, H.; Dong, W.; Li, Y.; Li, F.; Geng, J.; Zhu, M.; Chen, T.; Zhang, H.; Sun, L.; Lee, C. An epidermal sEMG tattoo-like patch as a new human–machine interface for patients with loss of voice. Microsyst. Nanoeng. 2020, 6, 16.

- Jiang, N.; Dosen, S.; Muller, K.-R.; Farina, D. Myoelectric control of artificial limbs—Is there a need to change focus? [In the spotlight]. IEEE Signal Process. Mag. 2012, 29, 150–152.

- Nazari, V.; Pouladian, M.; Zheng, Y.-P.; Alam, M. A Compact and Lightweight Rehabilitative Exoskeleton to Restore Grasping Functions for People with Hand Paralysis. Sensors 2021, 21, 6900.

- Nazari, V.; Pouladian, M.; Zheng, Y.-P.; Alam, M. Compact Design of A Lightweight Rehabilitative Exoskeleton for Restoring Grasping Function in Patients with Hand Paralysis. Res. Sq. 2021. Preprint.

- Zhang, Y.; Yu, C.; Shi, Y. Designing autonomous driving HMI system: Interaction need insight and design tool study. In Proceedings of the International Conference on Human-Computer Interaction, Las Vegas, NV, USA, 15–20 July 2018; pp. 426–433.

- Young, S.N.; Peschel, J.M. Review of human–machine interfaces for small unmanned systems with robotic manipulators. IEEE Trans. Hum.-Mach. Syst. 2020, 50, 131–143.

- Wilde, M.; Chan, M.; Kish, B. Predictive human-machine interface for teleoperation of air and space vehicles over time delay. In Proceedings of the 2020 IEEE Aerospace Conference, Big Sky, MT, USA, 7–14 March 2020; pp. 1–14.

- Morra, L.; Lamberti, F.; Pratticó, F.G.; La Rosa, S.; Montuschi, P. Building trust in autonomous vehicles: Role of virtual reality driving simulators in HMI design. IEEE Trans. Veh. Technol. 2019, 68, 9438–9450.

- Bortole, M.; Venkatakrishnan, A.; Zhu, F.; Moreno, J.C.; Francisco, G.E.; Pons, J.L.; Contreras-Vidal, J.L. The H2 robotic exoskeleton for gait rehabilitation after stroke: Early findings from a clinical study. J. Neuroeng. Rehabil. 2015, 12, 54.

- Pehlivan, A.U.; Losey, D.P.; O’Malley, M.K. Minimal assist-as-needed controller for upper limb robotic rehabilitation. IEEE Trans. Robot. 2015, 32, 113–124.

- Zhu, C.; Luo, L.; Mai, J.; Wang, Q. Recognizing Continuous Multiple Degrees of Freedom Foot Movements With Inertial Sensors. IEEE Trans. Neural Syst. Rehabil. Eng. 2022, 30, 431–440.

- Russell, C.; Roche, A.D.; Chakrabarty, S. Peripheral nerve bionic interface: A review of electrodes. Int. J. Intell. Robot. Appl. 2019, 3, 11–18.

- Yildiz, K.A.; Shin, A.Y.; Kaufman, K.R. Interfaces with the peripheral nervous system for the control of a neuroprosthetic limb: A review. J. Neuroeng. Rehabil. 2020, 17, 43.

- Taylor, C.R.; Srinivasan, S.; Yeon, S.H.; O’Donnell, M.; Roberts, T.; Herr, H.M. Magnetomicrometry. Sci. Robot. 2021, 6, eabg0656.

- Ng, K.H.; Nazari, V.; Alam, M. Can Prosthetic Hands Mimic a Healthy Human Hand? Prosthesis 2021, 3, 11–23.

- Geethanjali, P. Myoelectric control of prosthetic hands: State-of-the-art review. Med. Devices Evid. Res. 2016, 9, 247–255.

- Mahmood, N.T.; Al-Muifraje, M.H.; Saeed, T.R.; Kaittan, A.H. Upper prosthetic design based on EMG: A systematic review. In Proceedings of the IOP Conference Series: Materials Science and Engineering, Duhok, Iraq, 9–10 September 2020; p. 012025.

- Mohebbian, M.R.; Nosouhi, M.; Fazilati, F.; Esfahani, Z.N.; Amiri, G.; Malekifar, N.; Yusefi, F.; Rastegari, M.; Marateb, H.R. A Comprehensive Review of Myoelectric Prosthesis Control. arXiv 2021, arXiv:2112.13192.

- Cimolato, A.; Driessen, J.J.; Mattos, L.S.; De Momi, E.; Laffranchi, M.; De Michieli, L. EMG-driven control in lower limb prostheses: A topic-based systematic review. J. Neuroeng. Rehabil. 2022, 19, 43.

- Ortenzi, V.; Tarantino, S.; Castellini, C.; Cipriani, C. Ultrasound imaging for hand prosthesis control: A comparative study of features and classification methods. In Proceedings of the 2015 IEEE International Conference on Rehabilitation Robotics (ICORR), Singapore, 11–14 August 2015; pp. 1–6.

- Guo, J.-Y.; Zheng, Y.-P.; Kenney, L.P.; Bowen, A.; Howard, D.; Canderle, J.J. A comparative evaluation of sonomyography, electromyography, force, and wrist angle in a discrete tracking task. Ultrasound Med. Biol. 2011, 37, 884–891.

- Ribeiro, J.; Mota, F.; Cavalcante, T.; Nogueira, I.; Gondim, V.; Albuquerque, V.; Alexandria, A. Analysis of man-machine interfaces in upper-limb prosthesis: A review. Robotics 2019, 8, 16.

- Haque, M.; Promon, S.K. Neural Implants: A Review Of Current Trends And Future Perspectives. Europe PMC 2022. Preprint.

- Kuiken, T.A.; Dumanian, G.; Lipschutz, R.D.; Miller, L.; Stubblefield, K. The use of targeted muscle reinnervation for improved myoelectric prosthesis control in a bilateral shoulder disarticulation amputee. Prosthet. Orthot. Int. 2004, 28, 245–253.

- Miller, L.A.; Lipschutz, R.D.; Stubblefield, K.A.; Lock, B.A.; Huang, H.; Williams, T.W., III; Weir, R.F.; Kuiken, T.A. Control of a six degree of freedom prosthetic arm after targeted muscle reinnervation surgery. Arch. Phys. Med. Rehabil. 2008, 89, 2057–2065.

- Wadikar, D.; Kumari, N.; Bhat, R.; Shirodkar, V. Book recommendation platform using deep learning. Int. Res. J. Eng. Technol. IRJET 2020, 7, 6764–6770.

- Memberg, W.D.; Stage, T.G.; Kirsch, R.F. A fully implanted intramuscular bipolar myoelectric signal recording electrode. Neuromodulation Technol. Neural Interface 2014, 17, 794–799.

- Hazubski, S.; Hoppe, H.; Otte, A. Non-contact visual control of personalized hand prostheses/exoskeletons by tracking using augmented reality glasses. 3D Print. Med. 2020, 6, 6.

- Johansen, D.; Cipriani, C.; Popović, D.B.; Struijk, L.N. Control of a robotic hand using a tongue control system—A prosthesis application. IEEE Trans. Biomed. Eng. 2016, 63, 1368–1376.

- Otte, A. Invasive versus Non-Invasive Neuroprosthetics of the Upper Limb: Which Way to Go? Prosthesis 2020, 2, 237–239.

- Fonseca, L.; Tigra, W.; Navarro, B.; Guiraud, D.; Fattal, C.; Bó, A.; Fachin-Martins, E.; Leynaert, V.; Gélis, A.; Azevedo-Coste, C. Assisted grasping in individuals with tetraplegia: Improving control through residual muscle contraction and movement. Sensors 2019, 19, 4532.

- Fang, C.; He, B.; Wang, Y.; Cao, J.; Gao, S. EMG-centered multisensory based technologies for pattern recognition in rehabilitation: State of the art and challenges. Biosensors 2020, 10, 85.

- Briouza, S.; Gritli, H.; Khraief, N.; Belghith, S.; Singh, D. A Brief Overview on Machine Learning in Rehabilitation of the Human Arm via an Exoskeleton Robot. In Proceedings of the 2021 International Conference on Data Analytics for Business and Industry (ICDABI), Sakheer, Bahrain, 25–26 October 2021; pp. 129–134.

- Zhang, S.; Li, Y.; Zhang, S.; Shahabi, F.; Xia, S.; Deng, Y.; Alshurafa, N. Deep learning in human activity recognition with wearable sensors: A review on advances. Sensors 2022, 22, 1476.

- Zadok, D.; Salzman, O.; Wolf, A.; Bronstein, A.M. Towards Predicting Fine Finger Motions from Ultrasound Images via Kinematic Representation. arXiv 2022, arXiv:2202.05204.

- Yan, J.; Yang, X.; Sun, X.; Chen, Z.; Liu, H. A lightweight ultrasound probe for wearable human–machine interfaces. IEEE Sens. J. 2019, 19, 5895–5903.

- Zheng, Y.-P.; Chan, M.; Shi, J.; Chen, X.; Huang, Q.-H. Sonomyography: Monitoring morphological changes of forearm muscles in actions with the feasibility for the control of powered prosthesis. Med. Eng. Phys. 2006, 28, 405–415.

- Li, J.; Zhu, K.; Pan, L. Wrist and finger motion recognition via M-mode ultrasound signal: A feasibility study. Biomed. Signal Process. Control 2022, 71, 103112.

- Dhawan, A.S.; Mukherjee, B.; Patwardhan, S.; Akhlaghi, N.; Diao, G.; Levay, G.; Holley, R.; Joiner, W.M.; Harris-Love, M.; Sikdar, S. Proprioceptive sonomyographic control: A novel method for intuitive and proportional control of multiple degrees-of-freedom for individuals with upper extremity limb loss. Sci. Rep. 2019, 9, 9499.

- He, J.; Luo, H.; Jia, J.; Yeow, J.T.; Jiang, N. Wrist and finger gesture recognition with single-element ultrasound signals: A comparison with single-channel surface electromyogram. IEEE Trans. Biomed. Eng. 2018, 66, 1277–1284.

- Begovic, H.; Zhou, G.-Q.; Li, T.; Wang, Y.; Zheng, Y.-P. Detection of the electromechanical delay and its components during voluntary isometric contraction of the quadriceps femoris muscle. Front. Physiol. 2014, 5, 494.

- Dieterich, A.V.; Botter, A.; Vieira, T.M.; Peolsson, A.; Petzke, F.; Davey, P.; Falla, D. Spatial variation and inconsistency between estimates of onset of muscle activation from EMG and ultrasound. Sci. Rep. 2017, 7, 42011.

- Wentink, E.; Schut, V.; Prinsen, E.; Rietman, J.S.; Veltink, P.H. Detection of the onset of gait initiation using kinematic sensors and EMG in transfemoral amputees. Gait Posture 2014, 39, 391–396.

- Lopata, R.G.; van Dijk, J.P.; Pillen, S.; Nillesen, M.M.; Maas, H.; Thijssen, J.M.; Stegeman, D.F.; de Korte, C.L. Dynamic imaging of skeletal muscle contraction in three orthogonal directions. J. Appl. Physiol. 2010, 109, 906–915.

- Jahanandish, M.H.; Fey, N.P.; Hoyt, K. Lower limb motion estimation using ultrasound imaging: A framework for assistive device control. IEEE J. Biomed. Health Inform. 2019, 23, 2505–2514.

- Guo, J.-Y.; Zheng, Y.-P.; Huang, Q.-H.; Chen, X. Dynamic monitoring of forearm muscles using one-dimensional sonomyography system. J. Rehabil. Res. Dev. 2008, 45, 187–196.

- Guo, J.-Y.; Zheng, Y.-P.; Huang, Q.-H.; Chen, X.; He, J.-F.; Chan, H.L.-W. Performances of one-dimensional sonomyography and surface electromyography in tracking guided patterns of wrist extension. Ultrasound Med. Biol. 2009, 35, 894–902.

- Guo, J. One-Dimensional Sonomyography (SMG) for Skeletal Muscle Assessment and Prosthetic Control. Ph.D. Thesis, Hong Kong Polytechnic University, Hong Kong, China, 2010.

- Chen, X.; Zheng, Y.-P.; Guo, J.-Y.; Shi, J. Sonomyography (SMG) control for powered prosthetic hand: A study with normal subjects. Ultrasound Med. Biol. 2010, 36, 1076–1088.

- Guo, J.-Y.; Zheng, Y.-P.; Xie, H.-B.; Koo, T.K. Towards the application of one-dimensional sonomyography for powered upper-limb prosthetic control using machine learning models. Prosthet. Orthot. Int. 2013, 37, 43–49.

- Yang, X.; Sun, X.; Zhou, D.; Li, Y.; Liu, H. Towards wearable A-mode ultrasound sensing for real-time finger motion recognition. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 1199–1208.

- Szabo, T.L. Diagnostic Ultrasound Imaging: Inside Out; Academic Press: Cambridge, MA, USA, 2004.

- Yang, X.; Yan, J.; Fang, Y.; Zhou, D.; Liu, H. Simultaneous prediction of wrist/hand motion via wearable ultrasound sensing. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 970–977.

- Engdahl, S.; Mukherjee, B.; Akhlaghi, N.; Dhawan, A.; Bashatah, A.; Patwardhan, S.; Holley, R.; Kaliki, R.; Monroe, B.; Sikdar, S. A Novel Method for Achieving Dexterous, Proportional Prosthetic Control using Sonomyography. In Proceedings of the MEC20 Symposium, Fredericton, NB, Canada, 10–13 August 2020; The Institute of Biomedical Engineering, University of New Brunswick: Fredericton, NB, Canada, 2020.

- Shi, J.; Chang, Q.; Zheng, Y.-P. Feasibility of controlling prosthetic hand using sonomyography signal in real time: Preliminary study. J. Rehabil. Res. Dev. 2010, 47, 87–98.

- Shi, J.; Guo, J.-Y.; Hu, S.-X.; Zheng, Y.-P. Recognition of finger flexion motion from ultrasound image: A feasibility study. Ultrasound Med. Biol. 2012, 38, 1695–1704.

- Akhlaghi, N.; Baker, C.A.; Lahlou, M.; Zafar, H.; Murthy, K.G.; Rangwala, H.S.; Kosecka, J.; Joiner, W.M.; Pancrazio, J.J.; Sikdar, S. Real-time classification of hand motions using ultrasound imaging of forearm muscles. IEEE Trans. Biomed. Eng. 2015, 63, 1687–1698.

- McIntosh, J.; Marzo, A.; Fraser, M.; Phillips, C. Echoflex: Hand gesture recognition using ultrasound imaging. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, Denver, CO, USA, 6–11 May 2017; pp. 1923–1934.

- Akhlaghi, N.; Dhawan, A.; Khan, A.A.; Mukherjee, B.; Diao, G.; Truong, C.; Sikdar, S. Sparsity analysis of a sonomyographic muscle–computer interface. IEEE Trans. Biomed. Eng. 2019, 67, 688–696.

- Fernandes, A.J.; Ono, Y.; Ukwatta, E. Evaluation of finger flexion classification at reduced lateral spatial resolutions of ultrasound. IEEE Access 2021, 9, 24105–24118.

- Froeling, M.; Nederveen, A.J.; Heijtel, D.F.; Lataster, A.; Bos, C.; Nicolay, K.; Maas, M.; Drost, M.R.; Strijkers, G.J. Diffusion-tensor MRI reveals the complex muscle architecture of the human forearm. J. Magn. Reson. Imaging 2012, 36, 237–248.

- Castellini, C.; Passig, G. Ultrasound image features of the wrist are linearly related to finger positions. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 2108–2114.

- Castellini, C.; Passig, G.; Zarka, E. Using ultrasound images of the forearm to predict finger positions. IEEE Trans. Neural Syst. Rehabil. Eng. 2012, 20, 788–797.

- Huang, Y.; Yang, X.; Li, Y.; Zhou, D.; He, K.; Liu, H. Ultrasound-based sensing models for finger motion classification. IEEE J. Biomed. Health Inform. 2017, 22, 1395–1405.

- Zhou, Y.; Zheng, Y.-P. Sonomyography: Dynamic and Functional Assessment of Muscle Using Ultrasound Imaging; Springer Nature: Singapore, 2021.

- Wang, C.; Chen, X.; Wang, L.; Makihata, M.; Liu, H.-C.; Zhou, T.; Zhao, X. Bioadhesive ultrasound for long-term continuous imaging of diverse organs. Science 2022, 377, 517–523.

| Authors | Year | Ultrasound Mode | Feature Extraction Method | Machine Learning Algorithm | Subjects | Location | Targeted Muscles | Probe Mounting Position | Fixation Methods | Results |
|---|---|---|---|---|---|---|---|---|---|---|
| Zheng et al. [38] | 2006 | B-Mode | N/A | N/A | 6 healthy and 3 amputee volunteers | Forearm | ECR | Posterior | N/A | The normal participants had a ratio of 7.2 ± 3.7% between wrist angle and forearm-muscle percentage distortion. This ratio exhibited an intraclass correlation coefficient (ICC) of 0.868 across the three repeated tests. |
| Guo et al. [47] | 2008 | A-Mode | N/A | N/A | 9 healthy participants | Forearm | ECR | N/A | Custom-made holder | A linear regression analysis linking muscle deformation to wrist extension angle gave a mean correlation of r = 0.91 across the nine individuals. The overall mean ratio of deformation to angle was 0.130%/°. |
| Guo et al. [48] | 2009 | A-Mode | N/A | N/A | 16 healthy right-handed participants | Forearm | ECR | N/A | Custom-designed holder | The root-mean-square (RMS) tracking errors of SMG and EMG were compared, and SMG showed the lower error. Under three different waveform patterns, the mean RMS tracking error ranged between 17 and 18.9 for SMG and between 24.7 and 30.3 for EMG. |
| Chen et al. [50] | 2010 | A-Mode | N/A | N/A | 9 right-handed healthy individuals | Forearm | ECR | N/A | Custom-designed holder | SMG control’s mean RMS tracking errors were 12.8% and 3.2%, and 14.8% and 4.6% for sinusoid and square tracks, respectively, at various movement speeds. |
| Shi et al. [56] | 2010 | B-Mode | N/A | N/A | 7 healthy participants | Forearm | ECR | N/A | Custom-made bracket | The TDL algorithm showed excellent execution efficiency, with and without streaming single-instruction multiple-data extensions, with a mean correlation coefficient of about 0.99. Compared to the cross-correlation algorithm baseline, the mean standard root-mean-square error was less than 0.75% and the mean relative root mean square was less than 8.0%. |
| Shi et al. [57] | 2012 | B-Mode | Deformation field generated by the demons algorithm | SVM | 6 healthy volunteers | Forearm | ECU, EDM, ED, and EPL | Posterior | Custom-made holder | A mean F value of 0.94 ± 0.02 indicates a high degree of accuracy and dependability for the proposed approach, which classifies finger flexion movements with an average accuracy of roughly 94%, with the best accuracy for the thumb (97%) and the lowest accuracy for the ring finger (92%). |
| Guo et al. [51] | 2013 | A-Mode | N/A | SVM, RBFANN and BP ANN | 9 healthy volunteers | Forearm | ECR | N/A | N/A | The SVM algorithm, with a CC of around 0.98 and an RMSE of around 13%, showed excellent potential for predicting wrist angle in comparison with the RBFANN and BP ANN. |
| Ortenzi et al. [21] | 2015 | B-Mode | Regions of Interest gradients and HOG | LDA, Naive Bayes classifier and Decision Trees | 3 able-bodied volunteers | Forearm | Extrinsic forearm muscles | Transverse | Custom-made plastic cradle | The LDA classifier had the highest accuracy and could categorize 10 postures/grasps with 80% success. It could also classify the functional grasps with varied degrees of grip force with an accuracy of 60%. |
| Akhlaghi et al. [58] | 2015 | B-Mode | Customized image processing | Nearest Neighbor | 6 healthy volunteers | Forearm | FDS, FDP, and FPL | Transverse | Custom-designed cuff | In offline classification, 15 different hand motions were categorized with an accuracy of around 91.2%. In real-time control of a virtual prosthetic hand, the classification accuracy was 92%. |
| McIntosh et al. [59] | 2017 | B-Mode | Optical flow | MLP and SVM | 2 healthy volunteers | Wrist and Forearm | FCR, FDS, FPL, FDP, and FCU | Transverse, longitudinal, and diagonal wrist and posterior | 3D-printed fixture | Both machine learning algorithms could classify 10 discrete hand gestures with an accuracy of more than 98%. MLP had a minor advantage over SVM. |
| Yang et al. [52] | 2018 | A-Mode | Segmentation and linear fitting | LDA and SVM | 8 healthy participants | Forearm | FDP, FPL, EDC, EPL, and flexor digitorum sublimis | N/A | Custom-made armband | Finger movements were classified with an accuracy of around 98%. |
| Akhlaghi et al. [60] | 2019 | B-Mode | N/A | Nearest Neighbor | 5 able-bodied subjects | Forearm | FDS, FDP, and FPL | Transverse | Custom-designed cuff | Five different hand gestures were categorized with an accuracy of 94.6% using 128 scanlines and 94.5% using 4 evenly spaced scanlines. |
| Yang et al. [54] | 2020 | A-Mode | Random Forest technique with the help of the Tree Bagger function | SDA and PCA | 8 healthy volunteers | Forearm | FCU, FCR, FDP, FDS, FPL, APL, EPL, EPB, ECU, ECR, and ECD | N/A | Customized armband | Using the SDA machine learning algorithm, finger motions and wrist rotation were classified simultaneously with accuracies of around 99.89% and 95.2%, respectively. |
| Engdahl et al. [55] | 2020 | A-Mode | N/A | N/A | 5 healthy participants | Forearm | N/A | N/A | Custom-made wearable band | Nine different finger movements were classified with an accuracy of around 95%. |
| Fernandes et al. [61] | 2021 | B-Mode | DWT and LR | LDA | 5 healthy participants | Forearm | N/A | Wrist | N/A | Classification accuracy ranged from 80% to 92% at full resolution. However, at low resolution, the accuracy improved to an average of 87% after using the proposed feature extraction method with discrete wavelet transform, which was considered good enough for classification purposes. |
| Li et al. [39] | 2022 | M-Mode and B-Mode | Linear fitting approach | SVM and BP ANN | 8 healthy participants | Forearm | FCR, FDS, FPL, FDP, ED, EPL, and ECU | Transverse | Custom-made transducer holder | The accuracy of the SVM classifier in classifying 13 motions was 98.83 ± 1.03% for M-mode and 98.77 ± 1.02% for B-mode. The accuracy of the BP ANN classifier was 98.70 ± 0.99% for M-mode and 98.76 ± 0.91% for B-mode. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Nazari, V.; Zheng, Y.-P. Controlling Upper Limb Prostheses Using Sonomyography (SMG): A Review. Sensors 2023, 23, 1885. https://doi.org/10.3390/s23041885