Abstract

Many restoration methods use the low-rank constraint of high-dimensional image signals to recover corrupted images. These signals are usually represented by tensors, which can maintain their inherent relevance. An image under this simple tensor representation has a certain low-rank property, but not a strong one. In order to enhance the low-rank property, we propose a novel method called sub-image based low-rank tensor completion (SLRTC) for image restoration. We first sample a color image to obtain sub-images, and adopt these sub-images instead of the original single image to form a tensor. Then we conduct the mode permutation on this tensor. Next, we exploit the tensor nuclear norm defined based on the tensor-singular value decomposition (t-SVD) to build the low-rank completion model. Finally, we solve this model with the standard alternating direction method of multipliers (ADMM) algorithm, whose key step is tensor-singular value thresholding (t-SVT). Experimental results show that, compared with state-of-the-art tensor completion techniques, the proposed method provides superior results in terms of objective and subjective assessment.

1. Introduction

In recent years, image restoration methods using low-rank models have achieved great success. However, how do we construct a low-rank tensor? In image restoration, the most common method is to use the nonlocal self-similarity (NSS) of images. It uses the similarity between image patches to infer missing signal components. Similar patches are collected into a group so that these blocks in each group can have a similar structure to approximately form a low-rank matrix/tensor, and the image is restored by exploiting a low-rank prior in the matrix/tensor composed of similar patches [1,2]. However, when an image lacks enough similar components, or its similar components are damaged by noise, the quality of the reconstructed image will be poor. Therefore, in some cases, the method of using NSS to find similar blocks to construct low-rank tensors is not feasible. In addition, the large-scale searching of NSS patches is very time-consuming, which will affect the efficiency of the reconstruction algorithm.

It is well known that most high-dimensional data, such as color images, videos, and hyperspectral images, can naturally be represented as tensors. For example, a color image with a resolution of 512-by-512 can be represented as a 512-by-512-by-3 tensor. Because of the similarity of tensor content, it is considered to be low-rank [3]. In particular, images that contain many texture regions are often low rank. Nowadays, in most low-rank tensor completion (LRTC) algorithms, the low-rank constraint is imposed on the whole of the high-dimensional data, not a part of it. Typical algorithms of this kind include fast low rank tensor completion (FaLRTC) [4], LRTC based on the tensor nuclear norm (LRTC-TNN) [5], the low-rank tensor factorization method (TCTF) [6], and the method of integrating total variation (TV) as a regularization term into low-rank tensor completion based on tensor-train rank-1 (LRTV-TTr1) [7]. However, this simple representation does not make full use of the low-rank nature of the data.

In this paper, we propose a novel method called sub-image based low-rank tensor completion (SLRTC) for image restoration. To start with, we utilize the local similarity in sampling an image to obtain a sub-image set which has a strong low-rank property. We use this sub-image set to recover the low-rank tensor from the corrupted observation image. In addition, the tensor nuclear norm is direction-dependent: the value of the tensor nuclear norm may be different if a tensor is rotated or its mode is permuted. In our completion method, the mode (row × column × RGB) of a third-order tensor is permuted to the mode with RGB in the middle (row × RGB × column), and then the low-rank optimization completion is performed on the permuted tensor. Finally, the alternating direction method of multipliers is used to solve the problem.

The main contributions of this paper can be summarized as follows:

- We propose a novel framework of sub-image based low-rank tensor completion for color image restoration. The tensor nuclear norm is based on the tensor tubal rank (TTR), which is obtained by the tensor-singular value decomposition (t-SVD) in this framework. In order to achieve the stronger low-rank tensor, we sample each channel of a color image into four sub-images, and use these sub-images instead of the original single image to form a tensor.

- The optimization completion is performed on the permuted tensor in the proposed framework. The mode of a third-order tensor of a color image is usually denoted by (row × column × RGB). It is permuted to the mode (row × RGB × column) in our framework. This permutation improves the restoration quality and decreases the running time.

The remainder of the paper is organized as follows. Section 2 introduces the definitions and gives the basic knowledge about the t-SVD decomposition. In Section 3, we propose a novel model of low-rank tensor completion, and use the standard alternating direction method of multipliers (ADMM) algorithm to solve the model. In Section 4, we compare our model with other algorithms, and analyse the performance of the proposed method. Finally, we draw the conclusion of our work in Section 5.

2. Related Work and Foundation

This section mainly introduces some operator symbols, related definitions, and theorems of the tensor SVD.

2.1. Notations and Definitions

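For convenience, we first introduce the notations that will be extensively used in the paper. $\mathcal{A} \in \mathbb{R}^{n_1 \times n_2 \times n_3}$ (each element can be written as $\mathcal{A}_{ijk}$ or $\mathcal{A}(i,j,k)$) represents a third-order tensor, and the real number field and the complex number field are represented as $\mathbb{R}$ and $\mathbb{C}$. $\mathcal{A}(i,:,:)$, $\mathcal{A}(:,j,:)$ and $\mathcal{A}(:,:,k)$ are the horizontal slice, lateral slice and frontal slice of the third-order tensor, respectively. For simplicity, we denote the k-th frontal slice $\mathcal{A}(:,:,k)$ as $A^{(k)}$. For $\mathcal{A} \in \mathbb{R}^{n_1 \times n_2 \times n_3}$, we denote $\bar{\mathcal{A}} \in \mathbb{C}^{n_1 \times n_2 \times n_3}$ as the discrete Fourier transform (DFT) results of all tubes of $\mathcal{A}$. By using the Matlab function fft, we get $\bar{\mathcal{A}} = \mathrm{fft}(\mathcal{A}, [\,], 3)$. Similarly, we denote the k-th frontal slice of $\bar{\mathcal{A}}$ as $\bar{A}^{(k)}$.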

2.2. Tensor Singular Value Decomposition

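Recently, the tensor nuclear norm defined on the tensor singular value decomposition (t-SVD) has been shown to effectively exploit the inherent low-rank structure of tensors [8,9,10]. Let $\mathcal{M} \in \mathbb{R}^{n_1 \times n_2 \times n_3}$ be an unknown low rank tensor whose entries are observed independently with probability $p$, and let $\Omega$ represent the set of indices of the observed entries (i.e., $(i,j,k) \in \Omega$ if $\mathcal{M}_{ijk}$ is observed). So, the problem of tensor completion is to recover the underlying low rank tensor $\mathcal{M}$ from the observations $\{\mathcal{M}_{ijk}, (i,j,k) \in \Omega\}$, and the corresponding low-rank tensor completion model can be written as:

$$\min_{\mathcal{X}} \|\mathcal{X}\|_{*} \quad \text{s.t.} \quad P_{\Omega}(\mathcal{X}) = P_{\Omega}(\mathcal{M}), \tag{1}$$

where $\|\mathcal{X}\|_{*}$ is the tensor nuclear norm (TNN) of tensor $\mathcal{X}$, and $P_{\Omega}$ keeps the entries indexed by $\Omega$ and sets all other entries to zero.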

The TNN-based model shows its effectiveness in maintaining the internal structure of tensors [11,12]. In many low-order tensor restoration tasks, low-tubal-rank models have achieved better performance than low-Tucker-rank models, such as tensor completion [13,14,15], tensor denoising [16,17], tensor robust principal component analysis [11,18], etc.

In order to enhance the low-rank feature of an image, we utilize the local similarity to sub-sample an image to obtain a sub-image set which has a strong low-rank property, and propose a sub-image based TNN model to recover low-rank tensor signals from corrupted observation images.

Definition 1 (block circulant matrix [8]).

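For $\mathcal{A} \in \mathbb{R}^{n_1 \times n_2 \times n_3}$ with frontal slices $A^{(1)}, \dots, A^{(n_3)}$, the block circulant matrix $\mathrm{bcirc}(\mathcal{A}) \in \mathbb{R}^{n_1 n_3 \times n_2 n_3}$ is defined as

$$\mathrm{bcirc}(\mathcal{A}) = \begin{bmatrix} A^{(1)} & A^{(n_3)} & \cdots & A^{(2)} \\ A^{(2)} & A^{(1)} & \cdots & A^{(3)} \\ \vdots & \vdots & \ddots & \vdots \\ A^{(n_3)} & A^{(n_3-1)} & \cdots & A^{(1)} \end{bmatrix}. \tag{2}$$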

Definition 2 (unfold, fold [9]).

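For $\mathcal{A} \in \mathbb{R}^{n_1 \times n_2 \times n_3}$ with frontal slices $A^{(1)}, \dots, A^{(n_3)}$, the tensor unfold and matrix fold operators are defined as

$$\mathrm{unfold}(\mathcal{A}) = \begin{bmatrix} A^{(1)} \\ A^{(2)} \\ \vdots \\ A^{(n_3)} \end{bmatrix}, \qquad \mathrm{fold}(\mathrm{unfold}(\mathcal{A})) = \mathcal{A}, \tag{3}$$

where the unfold operation maps $\mathcal{A}$ to a matrix of size $n_1 n_3 \times n_2$, and fold is its inverse operator.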

Definition 3 (T-product [8]).

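Let $\mathcal{A} \in \mathbb{R}^{n_1 \times n_2 \times n_3}$ and $\mathcal{B} \in \mathbb{R}^{n_2 \times l \times n_3}$, then the T-product $\mathcal{C} = \mathcal{A} * \mathcal{B} \in \mathbb{R}^{n_1 \times l \times n_3}$ is defined as

$$\mathcal{A} * \mathcal{B} = \mathrm{fold}\big(\mathrm{bcirc}(\mathcal{A}) \cdot \mathrm{unfold}(\mathcal{B})\big) \tag{4}$$

and

$$\mathcal{C}(i,j,:) = \sum_{k=1}^{n_2} \mathcal{A}(i,k,:) \circledast \mathcal{B}(k,j,:), \tag{5}$$

where the operation $\circledast$ is circular convolution.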
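The definitions above can be checked numerically. The following is a minimal NumPy sketch, not the authors' code: it builds the T-product from the block circulant, unfold and fold operators as they are commonly defined in the t-SVD literature, and compares it with the equivalent slice-wise product in the Fourier domain. All function names are illustrative assumptions.

```python
import numpy as np

def bcirc(A):
    """Block circulant matrix of an n1 x n2 x n3 tensor (size n1*n3 x n2*n3)."""
    n1, n2, n3 = A.shape
    M = np.zeros((n1 * n3, n2 * n3))
    for i in range(n3):
        for j in range(n3):
            # Block (i, j) holds frontal slice (i - j) mod n3.
            M[i * n1:(i + 1) * n1, j * n2:(j + 1) * n2] = A[:, :, (i - j) % n3]
    return M

def unfold(A):
    """Stack the frontal slices vertically (size n1*n3 x n2)."""
    return np.concatenate([A[:, :, k] for k in range(A.shape[2])], axis=0)

def fold(M, n3):
    """Inverse of unfold: split the rows into n3 frontal slices."""
    return np.stack(np.split(M, n3, axis=0), axis=2)

def t_product(A, B):
    """T-product via the block circulant definition."""
    return fold(bcirc(A) @ unfold(B), A.shape[2])

def t_product_fft(A, B):
    """Same product, computed as matrix products of the Fourier-domain slices."""
    Af = np.fft.fft(A, axis=2)
    Bf = np.fft.fft(B, axis=2)
    Cf = np.einsum("ijk,jlk->ilk", Af, Bf)
    return np.real(np.fft.ifft(Cf, axis=2))

rng = np.random.default_rng(0)
A = rng.standard_normal((3, 4, 5))
B = rng.standard_normal((4, 2, 5))
C_def = t_product(A, B)
C_fft = t_product_fft(A, B)
print(C_def.shape, np.allclose(C_def, C_fft))
```

The agreement of the two routes is what makes the Fourier-domain computation of the t-SVD possible: circular convolution along the third dimension becomes an ordinary matrix product per frequency.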

Definition 4 (f-diagonal tensor [8]).

If each frontal slice of a tensor is a diagonal matrix, it is called an f-diagonal tensor.

Definition 5 (t-SVD [8]).

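Let $\mathcal{A} \in \mathbb{R}^{n_1 \times n_2 \times n_3}$, then it can be factored as

$$\mathcal{A} = \mathcal{U} * \mathcal{S} * \mathcal{V}^{*}, \tag{6}$$

where $\mathcal{U} \in \mathbb{R}^{n_1 \times n_1 \times n_3}$ and $\mathcal{V} \in \mathbb{R}^{n_2 \times n_2 \times n_3}$ are orthogonal, and $\mathcal{S} \in \mathbb{R}^{n_1 \times n_2 \times n_3}$ is an f-diagonal tensor.

The frontal slices of $\bar{\mathcal{A}}$, the DFT of $\mathcal{A}$ along the third dimension, have the following properties:

$$\bar{A}^{(1)} \in \mathbb{R}^{n_1 \times n_2}, \qquad \mathrm{conj}\big(\bar{A}^{(k)}\big) = \bar{A}^{(n_3 - k + 2)}, \quad k = 2, \dots, \left\lfloor \frac{n_3 + 1}{2} \right\rfloor, \tag{7}$$

where $\lfloor \cdot \rfloor$ represents the round-down (floor) operator.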

We can effectively obtain the t-SVD by calculating a series of matrix SVDs in the Fourier domain.
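As an illustration of this Fourier-domain route, the sketch below computes a t-SVD with NumPy by taking a matrix SVD of roughly half of the Fourier slices and filling the rest by conjugate symmetry. It is a hedged sketch rather than the authors' implementation; the helper names `t_prod`, `t_transpose` and `t_svd` are assumptions introduced here.

```python
import numpy as np

def t_prod(A, B):
    """T-product computed slice-wise in the Fourier domain."""
    Cf = np.einsum("ijk,jlk->ilk", np.fft.fft(A, axis=2), np.fft.fft(B, axis=2))
    return np.real(np.fft.ifft(Cf, axis=2))

def t_transpose(A):
    """Tensor transpose: transpose each frontal slice, reverse slices 2..n3."""
    At = np.transpose(A, (1, 0, 2))
    return np.concatenate([At[:, :, :1], At[:, :, :0:-1]], axis=2)

def t_svd(A):
    """t-SVD of a real n1 x n2 x n3 tensor; returns real tensors U, S, V."""
    n1, n2, n3 = A.shape
    Af = np.fft.fft(A, axis=2)
    Uf = np.zeros((n1, n1, n3), dtype=complex)
    Sf = np.zeros((n1, n2, n3), dtype=complex)
    Vf = np.zeros((n2, n2, n3), dtype=complex)
    half = n3 // 2 + 1
    for k in range(half):
        slice_k = Af[:, :, k]
        if k == 0 or 2 * k == n3:
            # These Fourier slices are real for real input; drop the zero
            # imaginary part so the singular vectors are real as well.
            slice_k = slice_k.real
        U, s, Vh = np.linalg.svd(slice_k)
        Uf[:, :, k] = U
        idx = np.arange(len(s))
        Sf[idx, idx, k] = s
        Vf[:, :, k] = Vh.conj().T
    for k in range(half, n3):
        # Remaining slices follow from conjugate symmetry of the DFT.
        Uf[:, :, k] = Uf[:, :, n3 - k].conj()
        Sf[:, :, k] = Sf[:, :, n3 - k]
        Vf[:, :, k] = Vf[:, :, n3 - k].conj()
    to_real = lambda T: np.real(np.fft.ifft(T, axis=2))
    return to_real(Uf), to_real(Sf), to_real(Vf)

rng = np.random.default_rng(0)
A = rng.standard_normal((4, 3, 6))
U, S, V = t_svd(A)
A_rec = t_prod(t_prod(U, S), t_transpose(V))
print(np.allclose(A_rec, A))
```

Only about half of the slices need an SVD, which is the practical benefit of the conjugate symmetry property.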

Definition 6 (tensor tubal rank [16]).

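For $\mathcal{A} \in \mathbb{R}^{n_1 \times n_2 \times n_3}$, the tensor tubal rank is denoted as $\mathrm{rank}_t(\mathcal{A})$, which is defined as the number of non-zero singular tubes of $\mathcal{S}$, where $\mathcal{S}$ comes from the t-SVD of $\mathcal{A}$, $\mathcal{A} = \mathcal{U} * \mathcal{S} * \mathcal{V}^{*}$, namely

$$\mathrm{rank}_t(\mathcal{A}) = \#\{\, i : \mathcal{S}(i,i,:) \neq 0 \,\}. \tag{8}$$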

Definition 7 (tensor average rank [18]).

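For $\mathcal{A} \in \mathbb{R}^{n_1 \times n_2 \times n_3}$, the tensor average rank is denoted as $\mathrm{rank}_a(\mathcal{A})$, and is defined as

$$\mathrm{rank}_a(\mathcal{A}) = \frac{1}{n_3}\, \mathrm{rank}\big(\mathrm{bcirc}(\mathcal{A})\big). \tag{9}$$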

Definition 8 (tensor nuclear norm [18]).

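For $\mathcal{A} \in \mathbb{R}^{n_1 \times n_2 \times n_3}$, the tensor nuclear norm of $\mathcal{A}$ is defined as

$$\|\mathcal{A}\|_{*} = \sum_{i=1}^{r} \mathcal{S}(i,i,1), \tag{10}$$

where $r = \mathrm{rank}_t(\mathcal{A})$ and $\mathcal{S}$ is the f-diagonal tensor of the t-SVD of $\mathcal{A}$.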
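Both the tubal rank and the tensor nuclear norm can be read off the singular values of the Fourier-domain frontal slices. The sketch below assumes the usual convention from the TNN literature cited above, in which the first frontal slice of the f-diagonal tensor equals the average of its Fourier slices, so the TNN is the sum of all Fourier-domain singular values divided by the number of frontal slices. Function names and the tolerance are illustrative assumptions.

```python
import numpy as np

def fourier_singular_values(A):
    """Singular values of every Fourier-domain frontal slice, shape (n3, min(n1, n2))."""
    Af = np.fft.fft(A, axis=2)
    return np.linalg.svd(np.moveaxis(Af, 2, 0), compute_uv=False)

def tubal_rank(A, tol=1e-8):
    """Number of singular tubes that are non-zero in at least one Fourier slice."""
    s = fourier_singular_values(A)
    return int(np.sum(s.max(axis=0) > tol))

def tensor_nuclear_norm(A):
    """Sum of the Fourier-domain singular values divided by n3."""
    return fourier_singular_values(A).sum() / A.shape[2]

# Example: a tensor built as a T-product of 6x2x4 and 2x5x4 factors
# has tubal rank 2 for generic factors.
rng = np.random.default_rng(0)
P = rng.standard_normal((6, 2, 4))
Q = rng.standard_normal((2, 5, 4))
Lf = np.einsum("ijk,jlk->ilk", np.fft.fft(P, axis=2), np.fft.fft(Q, axis=2))
L = np.real(np.fft.ifft(Lf, axis=2))
print(tubal_rank(L), tensor_nuclear_norm(L))
```

For a tensor with a single frontal slice, the value reduces to the ordinary matrix nuclear norm, which is a convenient sanity check.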

3. Proposed Model

In this section, we propose a sub-image tensor completion framework based on the tensor tubal rank for image restoration.

3.1. Sub-Image Generation

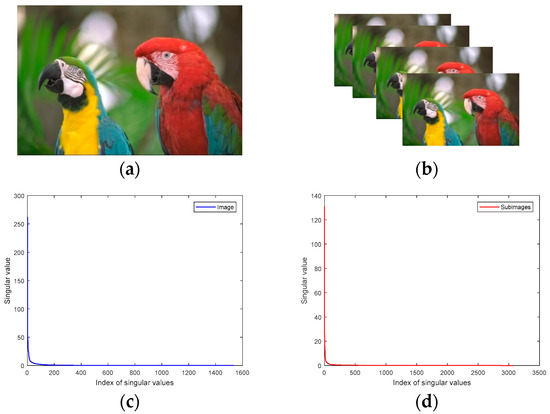

As we all know, real color images can be approximated by low-rank matrices on the three channels independently. If we regard a color image as a third-order tensor, and each channel corresponds to a frontal slice, then it can be well approximated by a low-tubal-rank tensor. Figure 1 shows an example to illustrate that most of the singular values of the corresponding tensor of an image are zero, so a low-tubal-rank tensor can be used to approximate a color image.

Figure 1.

Color image and its singular values. (a) Color Image kodim23 denoted by ; (b) Color sub-image set denoted by ; (c) the singular values of ; (d) the singular values of .

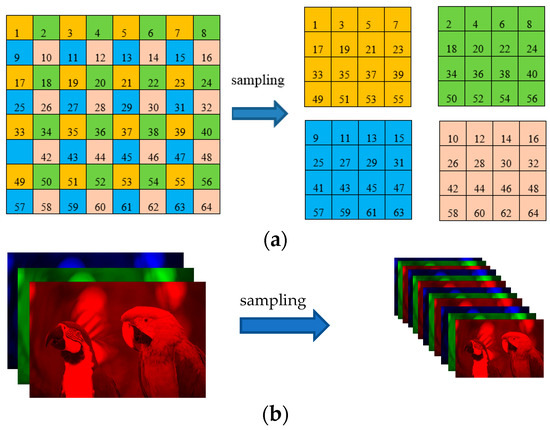

Although the aforesaid representation can approximate a color image, it does not make full use of the similarity of image data. In order to enhance its low-rank property, we downsample an image into four sub-images with no pixel overlap between them, as shown in Figure 2a, where each small square represents a pixel. All sampling factors in this paper are horizontal sampling factor : vertical sampling factor = 2:2. For a three-channel RGB image, the sampling method is illustrated in Figure 2b.

Figure 2.

A simple demonstration of the sampling method. (a) An image is sampled to obtain four sub-images; (b) A three-channel RGB image is sampled to form four three-channel sub-images.

According to the prior knowledge of image local similarity, the four sub-images are similar, so they can be stacked into a sub-image tensor with a low-rank structure. It should be noted that if the number of rows or columns of the image is odd, we can add one row or one column and then do the down-sampling. Figure 1a shows the color image kodim23 and Figure 1b shows the sub-image set obtained after sampling. The singular values of the image tensor and of the sub-image tensor are shown in Figure 1c and Figure 1d, respectively. Compared with Figure 1c, most of the singular values of the tensor of the sub-image set in Figure 1d are smaller. Therefore, compared with the original whole image, the sub-image data has a stronger low-rank property.
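The 2:2 sampling and its inverse can be sketched in a few lines of NumPy. This is an illustrative sketch under stated assumptions, not the authors' code: the ordering of the four sub-images along the third dimension and the use of edge replication to add the extra row or column are choices made here, since the text does not specify them.

```python
import numpy as np

def to_subimages(img):
    """Split an H x W x C image into four non-overlapping sub-images
    (2:2 sampling) and stack them along the third axis (4*C slices)."""
    h, w, _ = img.shape
    # Assumption: an odd dimension is padded by repeating the last row/column.
    padded = np.pad(img, ((0, h % 2), (0, w % 2), (0, 0)), mode="edge")
    subs = [padded[i::2, j::2, :] for i in (0, 1) for j in (0, 1)]
    return np.concatenate(subs, axis=2)

def from_subimages(sub, shape):
    """Aggregate the stacked sub-images back into an image of the given shape."""
    h, w, c = shape
    out = np.empty((h + h % 2, w + w % 2, c), dtype=sub.dtype)
    k = 0
    for i in (0, 1):
        for j in (0, 1):
            out[i::2, j::2, :] = sub[:, :, k * c:(k + 1) * c]
            k += 1
    return out[:h, :w, :]  # drop the padded row/column again

img = np.arange(5 * 7 * 3, dtype=float).reshape(5, 7, 3)
sub = to_subimages(img)
restored = from_subimages(sub, img.shape)
print(sub.shape, np.array_equal(restored, img))
```

When an image has missing entries, the observation mask would need to be sampled with the same function so that it stays aligned with the sub-image tensor.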

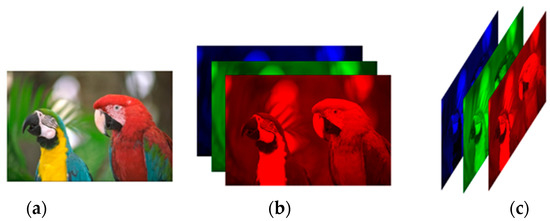

3.2. Mode Permutation

It is important to note that the TNN is orientation-dependent. If the tensor rotates, the value of TNN and the tensor completion results from Formula (1) may be quite different. For example, a three-channel color image of size can be represented as three types of tensors, i.e., , and , where is the most common image tensor representation, denotes the conjugate transpose of , and , .

In order to further improve the performance, we perform the mode permutation [19] after sampling. Here we give an example of the mode permutation as shown in Figure 3.

Figure 3.

Two tensor representations of a color image. (a) image kodim23; (b) ; (c) .

In Figure 3, the size of the color image kodim23 is . Its tensor representation is . After the mode permutation, it is denoted by . So is called the mode permutation of .

The mode permutation operation can avoid scanning an entire image, which reduces the overall computational complexity [19].
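A small numerical example makes the orientation dependence concrete. The sketch below assumes the permutation (row × column × RGB) to (row × RGB × column) corresponds to swapping the last two axes, and it evaluates the TNN as the sum of Fourier-domain singular values divided by the number of frontal slices. The tiny 2 × 2 × 2 tensor is a hypothetical example chosen so that the values can be verified by hand.

```python
import numpy as np

def permute_mode(T):
    """(row x column x channel) -> (row x channel x column)."""
    return np.transpose(T, (0, 2, 1))

def tnn(T):
    """Tensor nuclear norm from the Fourier-domain singular values."""
    Tf = np.fft.fft(T, axis=2)
    s = np.linalg.svd(np.moveaxis(Tf, 2, 0), compute_uv=False)
    return s.sum() / T.shape[2]

# First frontal slice is the identity, second one is zero.
T = np.zeros((2, 2, 2))
T[:, :, 0] = np.eye(2)
P = permute_mode(T)

print(tnn(T))  # 2.0
print(tnn(P))  # sqrt(2), about 1.414
```

The same entries therefore yield different TNN values in the two orientations, which is why the choice of mode order can change the completion result.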

3.3. Solution to the Proposed Method

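For the completion problem of the color image tensor, we propose the following low-rank optimization model on the color sub-image tensor $\mathcal{X}$, which is built by the sampling of Section 3.1 followed by the mode permutation of Section 3.2:

$$\min_{\mathcal{X}} \|\mathcal{X}\|_{*} \quad \text{s.t.} \quad P_{\Omega}(\mathcal{X}) = P_{\Omega}(\mathcal{M}), \tag{11}$$

where $\|\mathcal{X}\|_{*}$ is the tensor nuclear norm of $\mathcal{X}$, $\mathcal{M}$ is the observed sub-image tensor, $\Omega$ is the index set of its observed entries, $P_{\Omega}$ keeps the entries in $\Omega$ and sets the others to zero, and $\mathcal{X}$ and $\mathcal{M}$ are third-order tensors of the same size.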

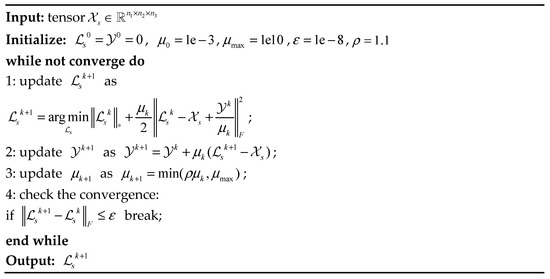

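The problem (11) can be solved by the ADMM [20], where the key step is to calculate the proximity operator of the TNN, namely:

$$\arg\min_{\mathcal{X}} \; \tau \|\mathcal{X}\|_{*} + \frac{1}{2} \|\mathcal{X} - \mathcal{Y}\|_F^2. \tag{12}$$

According to the literature [18], let $\mathcal{Y} = \mathcal{U} * \mathcal{S} * \mathcal{V}^{*}$ be the t-SVD of $\mathcal{Y} \in \mathbb{R}^{n_1 \times n_2 \times n_3}$, and for each $\tau > 0$, define the tensor singular value thresholding (t-SVT) operator as follows:

$$\mathcal{D}_{\tau}(\mathcal{Y}) = \mathcal{U} * \mathcal{S}_{\tau} * \mathcal{V}^{*}, \tag{13}$$

where $\mathcal{S}_{\tau} = \mathrm{ifft}\big((\bar{\mathcal{S}} - \tau)_{+}, [\,], 3\big)$ and $t_{+} = \max(t, 0)$. It is worth noting that the t-SVT operator only needs to apply a soft threshold rule to the singular values $\bar{\mathcal{S}}$ (instead of $\mathcal{S}$) of the frontal slices of $\bar{\mathcal{Y}}$. The t-SVT operator is the proximity operator related to the TNN.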
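The thresholding step can be sketched directly in the Fourier domain: each Fourier slice gets a matrix SVD, its singular values are soft-thresholded, and the result is transformed back. The function below is a minimal sketch under these assumptions; the name `t_svt` and the parameter name `tau` are illustrative, and only half of the slices are decomposed because the remaining ones follow by conjugate symmetry.

```python
import numpy as np

def t_svt(Y, tau):
    """Soft-threshold the singular values of the Fourier-domain frontal
    slices of a real tensor Y by tau and transform back."""
    n3 = Y.shape[2]
    Yf = np.fft.fft(Y, axis=2)
    Xf = np.zeros_like(Yf)
    half = n3 // 2 + 1
    for k in range(half):
        U, s, Vh = np.linalg.svd(Yf[:, :, k], full_matrices=False)
        s = np.maximum(s - tau, 0.0)   # soft threshold (t)_+ = max(t, 0)
        Xf[:, :, k] = (U * s) @ Vh     # rebuild the thresholded slice
    for k in range(half, n3):
        # Conjugate symmetry of the DFT of a real tensor.
        Xf[:, :, k] = Xf[:, :, n3 - k].conj()
    return np.real(np.fft.ifft(Xf, axis=2))

rng = np.random.default_rng(0)
Y = rng.standard_normal((5, 4, 6))
X_small = t_svt(Y, 0.5)
print(X_small.shape)
```

With a threshold of zero the operator returns its input, and with a threshold larger than every Fourier-domain singular value it returns the zero tensor, which gives two quick checks of an implementation.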

Based on t-SVT, we exploit the ADMM algorithm to solve the problem of (11). The augmented Lagrangian function of (11) is defined as

where is the Lagrangian multiplier and is the penalty parameter. We then update by alternately minimizing the augmented Lagrangian function . The sub-problem has a closed-form solution, with the t-SVT operator related to TNN. A pseudo-code description of the entire optimization problem (11) is given in Figure 4.

Figure 4.

Our algorithm SLRTC.

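In the whole procedure of algorithm SLRTC, the main per-iteration cost lies in the t-SVT update, which requires computing fast Fourier transforms (FFTs) and SVDs of matrices. For a tensor of size $n_1 \times n_2 \times n_3$, the per-iteration complexity is $O(n_1 n_2 n_3 \log n_3 + n_{(1)} n_{(2)}^2 n_3)$, where $n_{(1)} = \max(n_1, n_2)$ and $n_{(2)} = \min(n_1, n_2)$.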
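To make the iteration concrete, here is a hedged sketch of one common ADMM scheme for TNN-based completion. It is not a transcription of Figure 4: the splitting (an auxiliary tensor `E` that is zero on the observed entries), the parameter defaults (`mu`, `rho`, `mu_max`, `tol`, `max_iter`) and the final re-imposition of the observed entries are assumptions borrowed from typical TNN completion implementations.

```python
import numpy as np

def t_svt(Y, tau):
    """Soft-threshold the Fourier-domain singular values of Y by tau."""
    n3 = Y.shape[2]
    Yf = np.fft.fft(Y, axis=2)
    Xf = np.zeros_like(Yf)
    for k in range(n3 // 2 + 1):
        U, s, Vh = np.linalg.svd(Yf[:, :, k], full_matrices=False)
        Xf[:, :, k] = (U * np.maximum(s - tau, 0.0)) @ Vh
    for k in range(n3 // 2 + 1, n3):
        Xf[:, :, k] = Xf[:, :, n3 - k].conj()
    return np.real(np.fft.ifft(Xf, axis=2))

def tnn_complete(M, mask, mu=1e-4, rho=1.1, mu_max=1e10, tol=1e-8, max_iter=500):
    """Minimize the TNN of X subject to X = M on the observed entries.
    Assumed splitting: X + E = M with E = 0 wherever mask is True."""
    M = np.where(mask, M, 0.0).astype(float)
    X = np.zeros_like(M)
    E = np.zeros_like(M)
    Lam = np.zeros_like(M)  # Lagrangian multiplier
    for _ in range(max_iter):
        X_new = t_svt(M - E - Lam / mu, 1.0 / mu)            # TNN proximal step
        E_new = np.where(mask, 0.0, M - X_new - Lam / mu)    # free on missing entries
        resid = X_new + E_new - M
        change = max(np.abs(X_new - X).max(), np.abs(E_new - E).max(),
                     np.abs(resid).max())
        X, E = X_new, E_new
        Lam = Lam + mu * resid      # dual ascent
        mu = min(rho * mu, mu_max)  # increase the penalty parameter
        if change < tol:
            break
    X[mask] = M[mask]  # keep the observed entries exactly
    return X

# Synthetic check: a 30 x 30 x 5 tensor of tubal rank 2 with 80% observed entries.
rng = np.random.default_rng(0)
P = rng.standard_normal((30, 2, 5))
Q = rng.standard_normal((2, 30, 5))
truth = np.real(np.fft.ifft(np.einsum(
    "ijk,jlk->ilk", np.fft.fft(P, axis=2), np.fft.fft(Q, axis=2)), axis=2))
mask = rng.random(truth.shape) < 0.8
recovered = tnn_complete(truth, mask)
rel_err = np.linalg.norm(recovered - truth) / np.linalg.norm(truth)
print(rel_err)
```

In the sub-image setting, the input would be the permuted sub-image tensor and its sampled mask, and the recovered sub-images would then be aggregated back into the full image.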

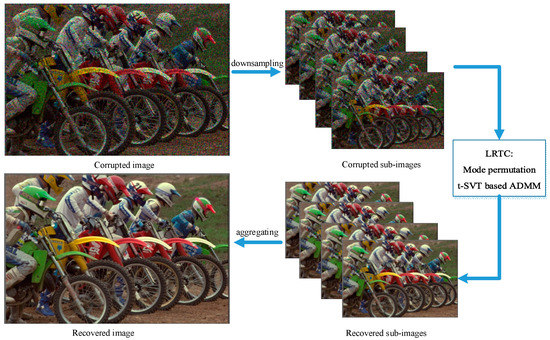

The overall framework of color image restoration based on sub-image low-rank tensor completion is shown in Figure 5.

Figure 5.

Flowchart of the proposed framework. The downsampling method is as shown in Section 3.1. After the mode permutation of the sub-images, t-SVT is performed to obtain the recovered sub-images. Finally, the final recovered image can be obtained by aggregating the recovered sub-images.

4. Experiments

In this section, we compare the proposed SLRTC with several classic color image restoration methods (including FaLRTC [4], LRTC-TNN [5], TCTF [6] and LRTV-TTr1 [7]). The LRTC-TNN method is based on the TNN and solves the tensor completion problem as a convex optimization problem. We test two variants of it: in LRTC-TNN1, the frontal slices of the input tensor correspond to the R, G, and B channels, while in LRTC-TNN2, the lateral slices of the input tensor correspond to the R, G, and B channels.

To evaluate the performance of different methods for color image restoration, we used the widely used peak signal-to-noise ratio (PSNR) and structure similarity (SSIM) [21] indicators in this experiment.
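For reference, PSNR can be computed as in the sketch below. The peak value of 255 (8-bit images) and the averaging of the squared error over all pixels and channels are assumptions, since the exact convention used in the experiments is not stated. SSIM [21] is more involved and is not reproduced here.

```python
import numpy as np

def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB, with the mean squared error
    taken over all pixels and channels (assumed convention)."""
    ref = np.asarray(reference, dtype=np.float64)
    est = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((ref - est) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(peak ** 2 / mse)

ref = np.zeros((4, 4, 3))
est = ref + 1.0               # every entry is off by one gray level
value = psnr(ref, est)
print(round(value, 2))        # 48.13
```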

4.1. Color Image Recovery

We first test on nine real color images, as shown in Figure 6.

Figure 6.

Ground truth of nine benchmark color images: Airplane, Baboon, Barbara, Façade, House, Peppers, Sailboat, Giant and Tiger (from left to right).

The size of each image is 256 × 256 × 3, i.e., row × column × RGB. To test the restoration performance of the algorithms, we randomly removed 30%, 50%, and 70% of the pixels in each image to form an incomplete tensor.
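The random removal of entries can be simulated with a Boolean observation mask. The sketch below assumes that entries are dropped independently per tensor entry (rather than per pixel across all three channels) and that missing entries are set to zero; both are assumptions, as is the function name `random_mask`.

```python
import numpy as np

def random_mask(shape, missing_rate, seed=0):
    """Boolean mask that is True for observed entries; each entry is
    dropped independently with probability missing_rate."""
    rng = np.random.default_rng(seed)
    return rng.random(shape) >= missing_rate

image = np.full((256, 256, 3), 128.0)   # placeholder for a test image
mask = random_mask(image.shape, 0.5)
observed = np.where(mask, image, 0.0)   # missing entries shown as black
print(mask.mean())
```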

Table 1 lists the PSNR and SSIM comparisons of all methods to restore the images. Compared with the LRTC-TNN1, LRTC-TNN2 and TCTF methods, the SLRTC method usually obtains the best image restoration results at missing rates of 30%, 50%, and 70%. The data in Table 1 show that when locally smooth regions account for a large proportion of the image, as in Airplane, Peppers and Sailboat, the LRTV-TTr1 method restores the image better, because TV regularization exploits the local smoothness of the image. In addition, when the missing rate is high (greater than or equal to 70%), the LRTV-TTr1 method is better than SLRTC. When the image contains a large number of texture regions, that is, the image itself has a strong low-rank property, as in Façade, the best effect is achieved by applying low-rank constraints to the restoration of the degraded image.

Table 1.

The PSNR and SSIM Comparison of different methods on nine images (The best results are highlighted).

In order to further verify the effectiveness of the proposed algorithm, we additionally used 24 color images from the Kodak PhotoCD dataset (http://r0k.us/graphics/kodak/ (accessed on 3 January 2018)) for testing. Each image has a resolution of 768 × 512 with three color channels. As in the previous test, we randomly removed 30%, 50%, and 70% of the pixels in each image to form an incomplete tensor.

Table 2 lists the PSNR and SSIM comparisons of all methods to restore the images when the missing rate is 30%. The best values among all these methods are in boldface. Our algorithm SLRTC surpasses the other algorithms in terms of PSNR, with PSNR values about 1.5 to 5 dB higher than those of the other methods. However, in terms of SSIM, the LRTV-TTr1 method is the best in most cases.

Table 2.

The PSNR and SSIM Comparison of different methods on the Kodak PhotoCD dataset, the incomplete images tested contain 30 percent missing entries (The best results are highlighted).

Table 3 and Table 4 compare PSNR and SSIM when the missing rate is 50% and 70%, respectively. As in Table 2, our algorithm surpasses the other algorithms in terms of PSNR, but its SSIM values are lower than those of the LRTV-TTr1 method. The main reason is the sampling process from image to sub-images in the first step of the SLRTC method: although the sub-image tensor has a stronger low-rank property than the original image, the sampling weakens the overall structural relevance of the image.

Table 3.

The PSNR and SSIM Comparison of different methods on the Kodak PhotoCD dataset, the incomplete images tested contain 50 percent missing entries (The best results are highlighted).

Table 4.

The PSNR and SSIM Comparison of different methods on the Kodak PhotoCD dataset, the incomplete images tested contain 70 percent missing entries (The best results are highlighted).

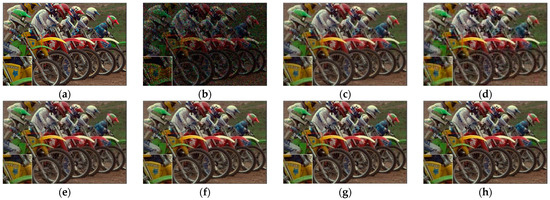

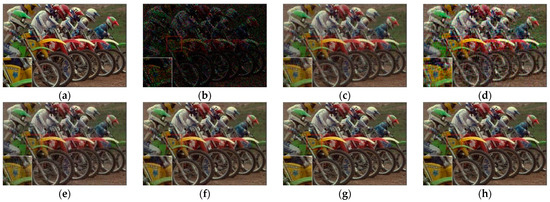

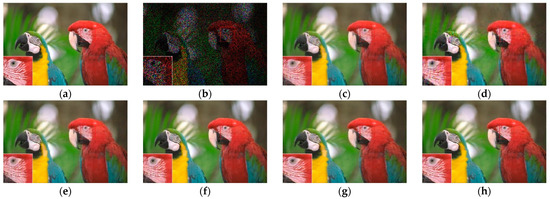

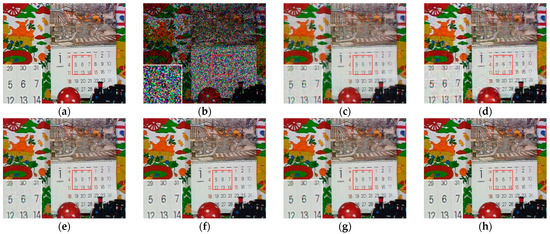

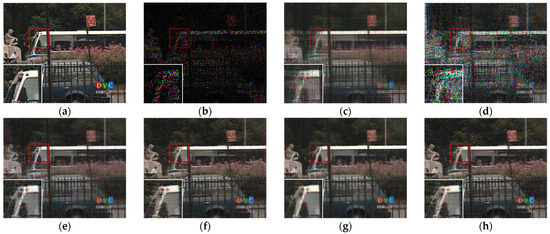

Figure 7, Figure 8, Figure 9 and Figure 10 show the comparison of visual quality when the missing rate is 50% and 70%. In contrast, the SLRTC method can better preserve the texture of the image.

Figure 7.

Visual effect comparison of different methods on the color image kodim05. The incomplete image contains 50% missing entries, shown as black pixels. (a) Original image; (b) Incomplete image; (c) FaLRTC (26.01 dB); (d) TCTF (22.45 dB); (e) LRTC-TNN1 (28.34 dB); (f) LRTC-TNN2 (29.98 dB); (g) LRTV-TTr1 (29.08 dB); (h) SLRTC (31.93 dB).

Figure 8.

Visual effect comparison of different methods on the color image kodim05. The incomplete image contains 70% missing entries, shown as black pixels. (a) Original image; (b) Incomplete image; (c) FaLRTC (21.80 dB); (d) TCTF (19.41 dB); (e) LRTC-TNN1 (22.67 dB); (f) LRTC-TNN2 (24.59 dB); (g) LRTV-TTr1 (24.48 dB); (h) SLRTC (26.14 dB).

Figure 9.

Visual effect comparison of different methods on the color image kodim23. The incomplete image contains 50% missing entries, shown as black pixels. (a) Original image; (b) Incomplete image; (c) FaLRTC (33.35 dB); (d) TCTF (29.21 dB); (e) LRTC-TNN1 (33.47 dB); (f) LRTC-TNN2 (33.72 dB); (g) LRTV-TTr1 (34.46 dB); (h) SLRTC (37.96 dB).

Figure 10.

Visual effect comparison of different methods on the color image kodim23. The incomplete image contains 70% missing entries, shown as black pixels. (a) Original image; (b) Incomplete image; (c) FaLRTC (29.09 dB); (d) TCTF (26.94 dB); (e) LRTC-TNN1 (28.94 dB); (f) LRTC-TNN2 (29.14 dB); (g) LRTV-TTr1 (30.89 dB); (h) SLRTC (32.33 dB).

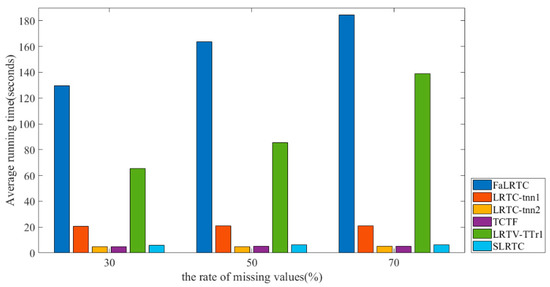

We also compare the running time of restoring the 24 test images with a resolution of 768 × 512, as shown in Figure 11. It can be seen that SLRTC is much faster than the FaLRTC, LRTC-TNN1, and LRTV-TTr1 methods, and is comparable to the TCTF and LRTC-TNN2 methods.

Figure 11.

Comparison of the running time.

4.2. Color Video Recovery

Next, we tested the performance of different methods on the task of completing video data. The test video sequences are City, Bus, Crew, Soccer, and Mobile (https://engineering.purdue.edu/~reibman/ece634/ (accessed on 3 January 2018)).

The main consideration is the third-order tensor. Here, the following preprocessing is performed on each video: adjust the video size of (row × column × RGB × number of frames) to a third-order tensor .

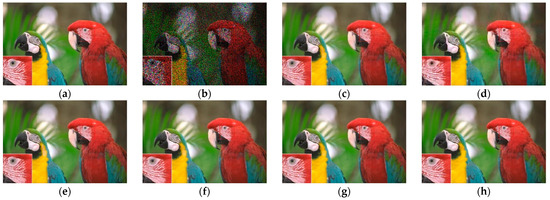

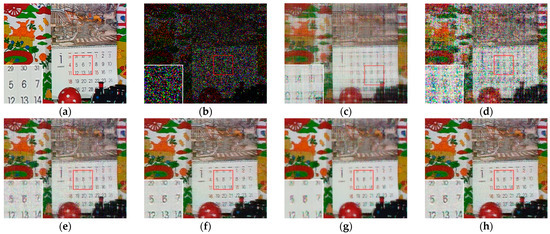

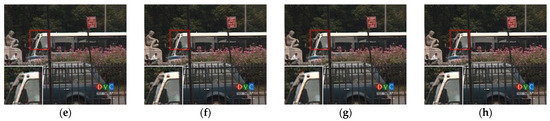

Figure 12, Figure 13, Figure 14 and Figure 15 show the visual quality comparison of the Mobile and Bus video sequences repaired by different methods when the missing rate is 50% and 80%. When the missing rate is 50%, SLRTC can capture the inherent multi-dimensional characteristics of the data, and the video frame recovery effect is better than that of the other methods. When the missing rate is 80%, the subjective visual quality of SLRTC on the Mobile video frame is better than that of the other methods, while its subjective visual quality on the Bus video frame is not as good as that of LRTV-TTr1.

Figure 12.

Visual effect comparison of different methods on the ninth frame completion of the Mobile video. The incomplete video contains 50% missing entries. (a) Original image; (b) Incomplete image; (c) FaLRTC (21.51 dB); (d) TCTF (21.80 dB); (e) LRTC-TNN1 (24.12 dB); (f) LRTC-TNN2 (28.09 dB); (g) LRTV-TTr1 (26.15 dB); (h) SLRTC (29.97 dB).

Figure 13.

Visual effect comparison of different methods on the ninth frame completion of the Mobile video. The incomplete video contains 80% missing entries. (a) Original image; (b) Incomplete image; (c) FaLRTC (16.46 dB); (d) TCTF (11.53 dB); (e) LRTC-TNN1 (18.69 dB); (f) LRTC-TNN2 (20.67 dB); (g) LRTV-TTr1 (19.91 dB); (h) SLRTC (20.87 dB).

Figure 14.

Visual effect comparison of different methods on the ninth frame completion of the Bus video. The incomplete video contains 50% missing entries. (a) Original image; (b) Incomplete image; (c) FaLRTC (27.30 dB); (d) TCTF (26.42 dB); (e) LRTC-TNN1 (29.05 dB); (f) LRTC-TNN2 (32.92 dB); (g) LRTV-TTr1 (31.77 dB); (h) SLRTC (34.34 dB).

Figure 15.

Visual effect comparison of different methods on the ninth frame completion of the Bus video. The incomplete video contains 80% missing entries. (a) Original image; (b) Incomplete image; (c) FaLRTC (21.42 dB); (d) TCTF (14.00 dB); (e) LRTC-TNN1 (22.51 dB); (f) LRTC-TNN2 (24.86 dB); (g) LRTV-TTr1 (23.99 dB); (h) SLRTC (24.69 dB).

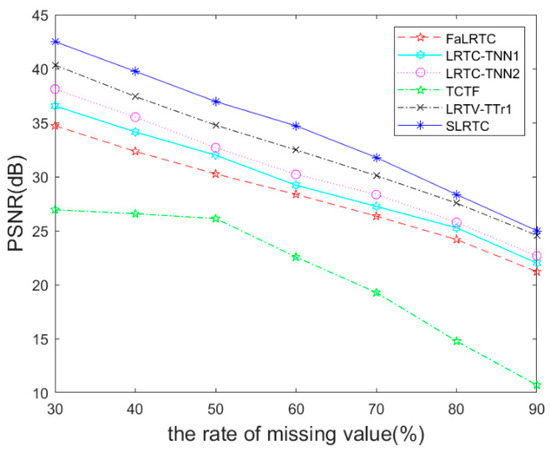

Figure 16 shows the performance comparison of the various methods for video recovery when 30%, 40%, 50%, 60%, 70%, 80%, and 90% of the pixels in the videos are randomly removed. It can be seen that the proposed SLRTC algorithm is better than the other methods.

Figure 16.

The PSNR metric on video data recovery.

5. Conclusions

This paper proposes a color image restoration method called SLRTC. Based on the nature of the tensor tubal rank, our method does not minimize the tensor nuclear norm on the observed image directly. Instead, it uses the local similarity of the image to decompose the image into multiple sub-images through downsampling, which enhances the low-rank property of the tensor. Experiments show that the proposed algorithm outperforms the comparison algorithms in terms of PSNR, and is faster than or comparable to them in running time.

The method proposed in this paper does not depend on parameter tuning, which usually requires complex calculations. Without a TV regularization term, the method exploits the local smoothness prior of the image through downsampling. This prior is well integrated into the sub-image low-rank tensor completion, and the effectiveness of the model is demonstrated through experiments.

Since deep learning-based algorithms have shown their potential to tackle this problem of image restoration in recent years [22,23], we next will integrate the low-rank prior into the neural networks to achieve better performance.

Author Contributions

Conceptualization, X.L. and G.T.; Methodology, X.L.; Software, X.L.; Writing—original draft, X.L.; Writing—review and editing X.L. and G.T. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Postgraduate Research & Practice Innovation Program of Jiangsu Province (KYCX17_0776), and the Research Project of Nanjing University of Posts and Telecommunications (NY218089).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data available on request from the authors.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Zhang, C.; Hu, W.; Jin, T.; Mei, Z. Nonlocal image denoising via adaptive tensor nuclear norm minimization. Neural Comput. Appl. 2015, 29, 3–19.

2. Rajwade, A.; Rangarajan, A.; Banerjee, A. Image Denoising Using the Higher Order Singular Value Decomposition. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 849–862.

3. Kolda, T.G.; Bader, B.W. Tensor Decompositions and Applications. SIAM Rev. 2009, 51, 455–500.

4. Liu, J.; Musialski, P.; Wonka, P.; Ye, J. Tensor Completion for Estimating Missing Values in Visual Data. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 208–220.

5. Lu, C.; Feng, J.; Lin, Z.; Yan, S. Exact Low Tubal Rank Tensor Recovery from Gaussian Measurements. In Proceedings of the International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; pp. 1948–1954.

6. Zhou, P.; Lu, C.; Lin, Z.; Zhang, C. Tensor Factorization for Low-Rank Tensor Completion. IEEE Trans. Image Process. 2018, 27, 1152–1163.

7. Liu, X.; Jing, X.Y.; Tang, G.; Wu, F.; Dong, X. Low-rank tensor completion for visual data recovery via the tensor train rank-1 decomposition. IET Image Process. 2020, 14, 114–124.

8. Kilmer, M.E.; Martin, C.D. Factorization Strategies for Third-order Tensors. Linear Algebra Appl. 2011, 435, 641–658.

9. Kilmer, M.E.; Braman, K.; Hao, N.; Hoover, R.C. Third-order Tensors as Operators on Matrices: A Theoretical and Computational Framework with Applications in Imaging. SIAM J. Matrix Anal. Appl. 2013, 34, 148–172.

10. Martin, C.D.; Shafer, R.; LaRue, B. An order-p Tensor Factorization with Applications in Imaging. SIAM J. Sci. Comput. 2013, 35, A474–A490.

11. Lu, C.; Feng, J.; Chen, Y.; Liu, W.; Lin, Z.; Yan, S. Tensor robust Principal Component Analysis: Exact Recovery of Corrupted Low-rank Tensors via Convex Optimization. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 5249–5257.

12. Semerci, O.; Hao, N.; Kilmer, M.E.; Miller, E.L. Tensor-based Formulation and Nuclear Norm Regularization for Multienergy Computed Tomography. IEEE Trans. Image Process. 2014, 23, 1678–1693.

13. Sun, W.; Huang, L.; So, H.C.; Wang, J. Orthogonal Tubal Rank-1 Tensor Pursuit for Tensor Completion. Signal Process. 2019, 157, 213–224.

14. Sun, W.; Chen, Y.; Huang, L.; So, H.C. Tensor Completion via Generalized Tensor Tubal Rank Minimization using General Unfolding. IEEE Signal Process. Lett. 2018, 25, 868–872.

15. Long, Z.; Liu, Y.; Chen, L.; Zhu, C. Low Rank Tensor Completion for Multiway Visual Data. Signal Process. 2019, 155, 301–316.

16. Zhang, Z.; Ely, G.; Aeron, S.; Hao, N.; Kilmer, M. Novel methods for multilinear data completion and denoising based on tensor-SVD. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 3842–3849.

17. Wang, A.; Song, X.; Wu, X.; Lai, Z.; Jin, Z. Generalized Dantzig Selector for Low-tubal-rank Tensor Recovery. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Brighton, UK, 12–17 May 2019; pp. 3427–3431.

18. Lu, C.; Feng, J.; Chen, Y.; Liu, W.; Lin, Z.; Yan, S. Tensor Robust Principal Component Analysis with A New Tensor Nuclear Norm. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 925–938.

19. Zdunek, R.; Fonal, K.; Sadowski, T. Image Completion with Filtered Low-Rank Tensor Train Approximations. In Proceedings of the International Work-Conference on Artificial Neural Networks, Part II, Gran Canaria, Spain, 12–14 June 2019; pp. 235–245.

20. Lu, C.; Feng, J.; Yan, S.; Lin, Z. A Unified Alternating Direction Method of Multipliers by Majorization Minimization. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 527–541.

21. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

22. Zhang, K.; Ren, W.; Luo, W.; Lai, W.S.; Stenger, B.; Yang, M.H.; Li, H. Deep image deblurring: A survey. Int. J. Comput. Vis. 2022, 130, 2103–2130.

23. Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.H.; Shao, L. Multi-stage progressive image restoration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 14821–14831.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).