At the Confluence of Artificial Intelligence and Edge Computing in IoT-Based Applications: A Review and New Perspectives

Abstract

1. Introduction

- We reviewed 114 related papers published from 2019 to the present.

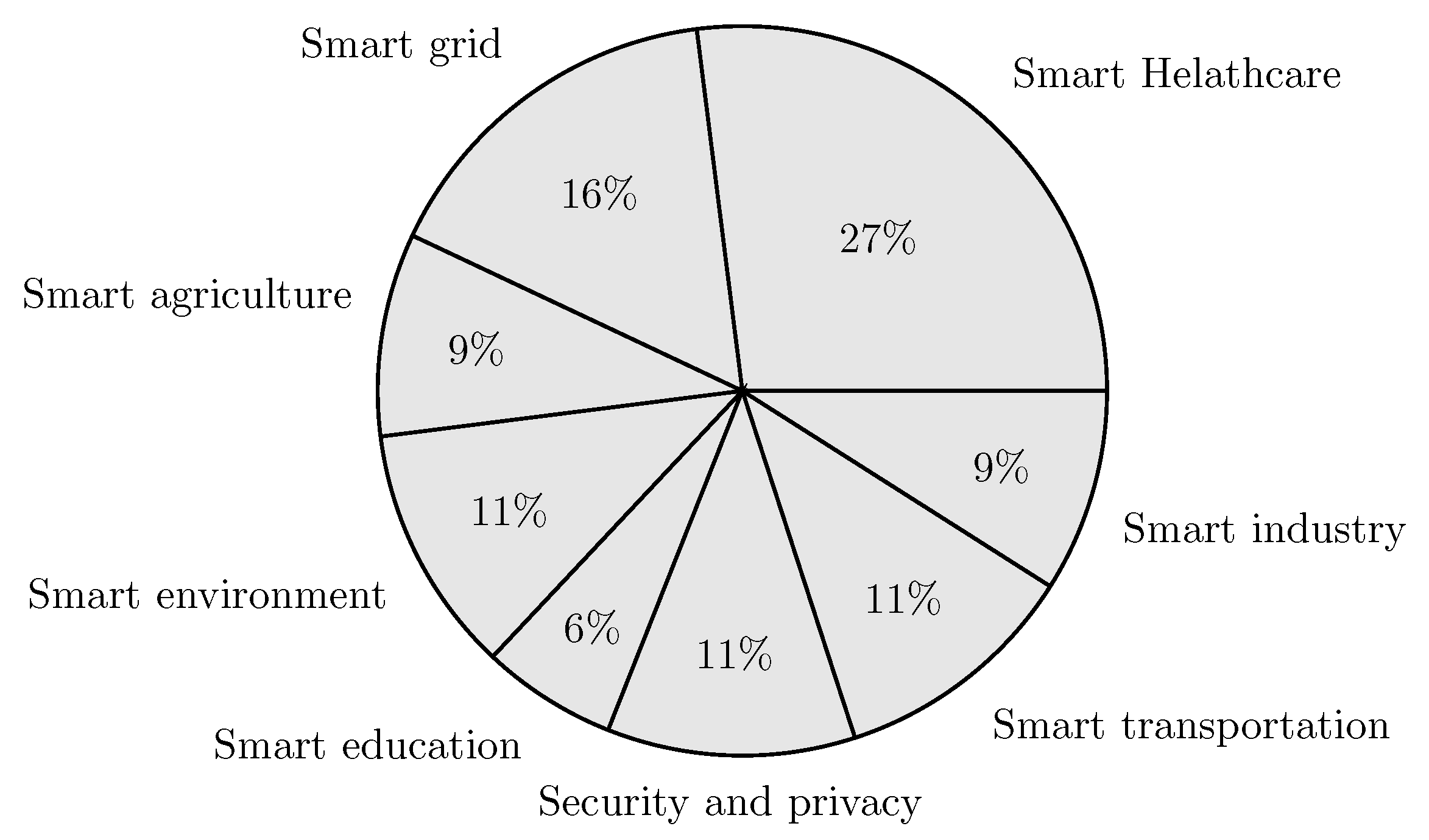

- To help readers understand the value and potential of implementing edge-based IoT infrastructure and to address the issues of cloud-based applications, we present an in-depth analysis of the state of the art of edge-based applications, focusing on eight application areas: smart agriculture, smart environment, smart grid, smart healthcare, smart industry, smart education, smart transportation, and security and privacy.

- We present a qualitative comparison of related works in the eight aforementioned application areas. This comparison uses eight characteristics: use case (the scope of application of AI for each application area), AI role (the potential of AI use), AI technique (AI-related algorithms), the dataset used, AI placement (on edge, cloud, or edge/cloud), employed technologies (technologies for running AI at the edge), the platform used for the implementation, and performance metrics. Three additional columns summarize the main contributions, the benefits of edge-AI, and the drawbacks of the reviewed works.

- We present a critical analysis of the presented state of the art by (1) exploring the current difficulties and limitations associated with the development and implementation of AI models and (2) investigating how AI can be used to overcome the difficulties presented by massive data in IoT systems and to improve the effectiveness of services on decentralized edge platforms.

- Based on the synthesized results, we suggest future trends for addressing the challenges of edge-based application deployment regarding big data analytics, scalability, resource management, security and privacy, and ultralow-latency requirements.

2. Artificial Intelligence in Edge-Based IoT Applications: Literature Review

2.1. Smart Environment

2.1.1. Air Quality Monitoring (AQM)

2.1.2. Water Quality Monitoring (WQM)

2.1.3. Smart Water Management (SWM)

2.1.4. Underwater Monitoring (UWM)

2.2. Smart Grid

2.2.1. Load/Demand Forecasting (LDF)

| Application Area | Use Case | Ref | Contribution | AI Role (At the Edge) | AI Algorithm | Dataset | AI Placement | Employed Technology | Platform | Metrics | Benefits of Edge-AI | Drawbacks |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Smart grid | LDF | [33] | Short-term energy consumption forecasting | Prediction | LSTM | Pecan Street Inc.’s Dataport site | Edge, cloud | Federated learning | Python, TensorFlow Federated 0.4.0 (TensorFlow 1.13.1 backend) | RMSE, MAPE | High accuracy | Heterogeneous data unsolved |
| | | [34] | Short-term energy consumption forecasting | Prediction, classification | LSTM, K-means | Energy company UK Power Networks | Edge device, cloud | Federated learning | Python, TensorFlow | RMSE, training time | High accuracy, heterogeneous data solved | Privacy still low |
| | | [35] | Day-ahead prediction of building energy demands | Prediction, feature selection | Ant-bee, cuckoo, elephant, flower, genetic harmony, PSO, rhino, wolf, DT, HT | Ornl-research-house-3 | Edge server (Raspberry Pi) | Low-cost model | Keras, Python | Accuracy, time, speed, MAE | High accuracy, low training time | Low interpretability |
| | | [36] | Short-term electricity demand | Prediction, classification | XGBoost, K-means | Tianchi under license | Edge server (PC) | Low-cost model | Not mentioned | Training time, accuracy, cross-entropy loss | High accuracy | Data distribution unsolved |
| | | [37] | Short-term electricity demand | Outlier detection, feature selection, prediction | NB, wrapper FS, filter FS | EUNITE dataset | Fog nodes | | Matlab | Accuracy, error, precision, sensitivity/recall | High accuracy, reliability, resilience, stability | High complexity of model |
| | | [38] | Online short-term energy prediction | Data preprocessing, prediction | DNN | Real-world dataset | Edge server, edge devices, cloud | Collaborative learning | Not mentioned | Flexibility, accuracy | Flexibility, high accuracy, dynamic data, IoT addressed, real-time prediction | Less scalability |
| | | [39] | Load forecasting for optimal energy management | Prediction | CNN | IHEPC dataset | Edge devices | / | TensorFlow, Keras | MAPE, RMSE | Low complexity | Heterogeneous data, uncertainties, privacy is not addressed |
| | | [40] | Online short-term residential load forecasting | Prediction | STN | Ohta-AMPds datasets | Edge device | Low-cost model-reservoir computing | Not mentioned | RMSE, MAE | Low complexity, high accuracy | Heterogeneity not addressed |
| | DSM | [41] | Demand-side management | Resource management | RL | Real-world dataset | Edge server (Raspberry Pi) | – | Real implementation | Not mentioned | / | Less scalability |
| | | [42] | Demand-side management | Classification | LDA | REFIT project | Edge server | Low-cost model | Not mentioned | MAPE, RMSE | | |
| | | [43] | Managing prosumers over wireless networks | Data preprocessing, prediction | LSTM | Pecan Street Inc.’s Dataport site | Edge server | Federated learning | TensorFlow | RMSE, data transmitted | Heterogeneous data addressed, high accuracy, low communication cost | Single-point failure not addressed |
| | LAD | [44] | Detection of anomalous power consumption at household | Prediction | GBR, RFR, LR, SVR | IHEPC dataset | Edge server, fog | / | Not mentioned | MAPE, RMSE | Load reduction | Communication cost still high |
| | | [45] | Anomaly detection in smart-meter data | Resource allocation, classification | SDA, GA, kNN | IHEPC dataset | Edge server | / | Not mentioned | Accuracy, execution time, energy consumption | – | – |
| | | [46] | Electric energy fraud detection | Dimensionality reduction, prediction | DTR, LR | D1C database | Edge server Raspberry Pi model | – | Not mentioned | MAPE | – | – |
| | | [47] | Anomaly detection consumption smart grid | Classification | DNN, HDBSC K-means, KNN | Midwest region | Edge server, Raspberry Pi | / | Not mentioned | Testing time, frequency, model size | Low complexity, high accuracy | – |
| | | [48] | Energy theft detection | Feature extraction, classification | VAE-GAN, K-means | GEFCom 2012 public dataset | Edge server | / | Not mentioned | ROC curve, running efficiency | Adaptive model, high accuracy | – |
| | | [49] | Energy theft detection | Classification | | (SGCC) dataset | Edge devices | Federated learning | Flower | RMSE, log loss, accuracy, precision, F-measure | Privacy | Low accuracy compared with the centralized model |
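The Metrics column of the table is dominated by RMSE, MAPE, and MAE. As a reminder of what these measure, the standard textbook definitions can be sketched in plain Python as follows. The sample values are hypothetical and are not taken from any of the reviewed works.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error: penalizes large errors more strongly."""
    n = len(actual)
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / n)


def mae(actual, predicted):
    """Mean absolute error, in the same unit as the data."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def mape(actual, predicted):
    """Mean absolute percentage error, in percent.

    Undefined when any actual value is zero, which matters for load
    series that can drop to zero consumption.
    """
    n = len(actual)
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / n


# Hypothetical hourly load values (kWh) and forecasts, for illustration only.
actual = [2.0, 4.0, 5.0]
predicted = [2.5, 3.0, 5.0]

print(rmse(actual, predicted), mae(actual, predicted), mape(actual, predicted))
```

Because MAPE divides by the actual value, it is scale-free but unstable near zero, which is one reason forecasting studies often report it alongside RMSE or MAE.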

2.2.2. Demand-Side Management (DSM)

2.2.3. Load Anomaly Detection (LAD)

2.3. Smart Agriculture

2.3.1. Weather Prediction (WP)

2.3.2. Livestock Management (LM)

2.3.3. Smart Irrigation (SI)

2.3.4. Crop Monitoring and Disease Detection (CMDD)

2.3.5. Monitoring the Health Status of Agriculture Machines (MHSAM)

2.4. Smart Education

2.4.1. Student Engagement Monitoring (SEM)

2.4.2. Skill Assessment (SA)

2.5. Smart Industry

2.5.1. Financial Industry (FI)

2.5.2. Commercial Industries (CI)

2.5.3. Machine Malfunction Monitoring (MMM)

2.5.4. Product Quality Monitoring and Prediction (PQMP)

2.6. Smart Healthcare

2.6.1. Diet Health Management (DHM)

2.6.2. Ambient Assisted Living (AAL)

2.6.3. Human Activity Recognition (HAR)

2.6.4. Location-Based Disease Prediction (LDP)

2.6.5. Disease Diagnosis (DD)

2.7. Smart Transportation

2.7.1. Smart Parking Management (SPM)

2.7.2. Traffic Monitoring/Prediction (TMP)

2.7.3. Intelligent Transportation Management (ITM)

2.8. Security and Privacy in Edge-Based Applications

2.8.1. Privacy Preservation (PP)

2.8.2. Authentication and Authorization (AA)

2.8.3. Intrusion Detection (ID)

3. Discussions of Related Works: Findings and Insights

3.1. The Relevance of Integrating AI and Edge Computing in IoT-Based Applications

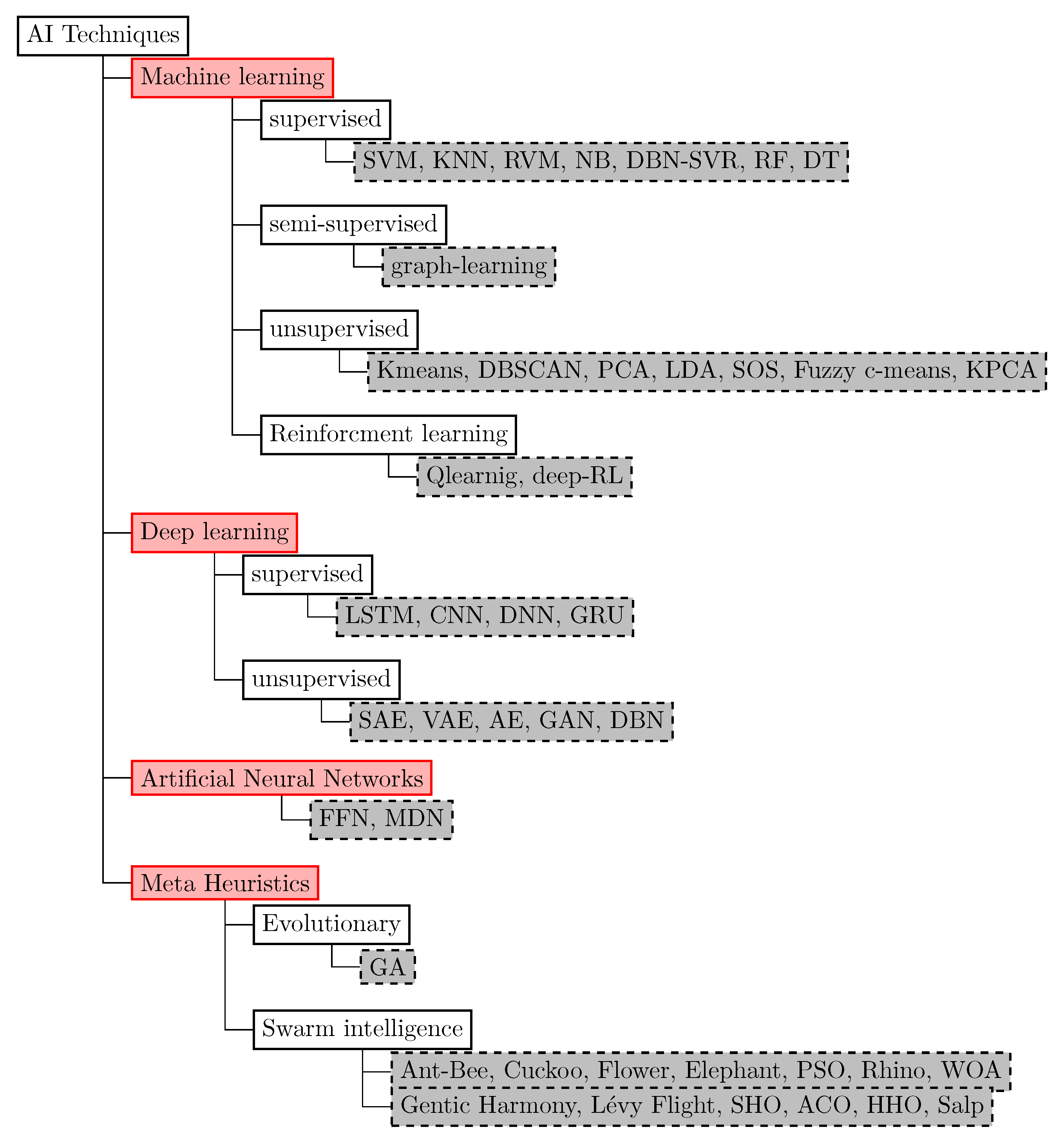

3.2. AI Technologies

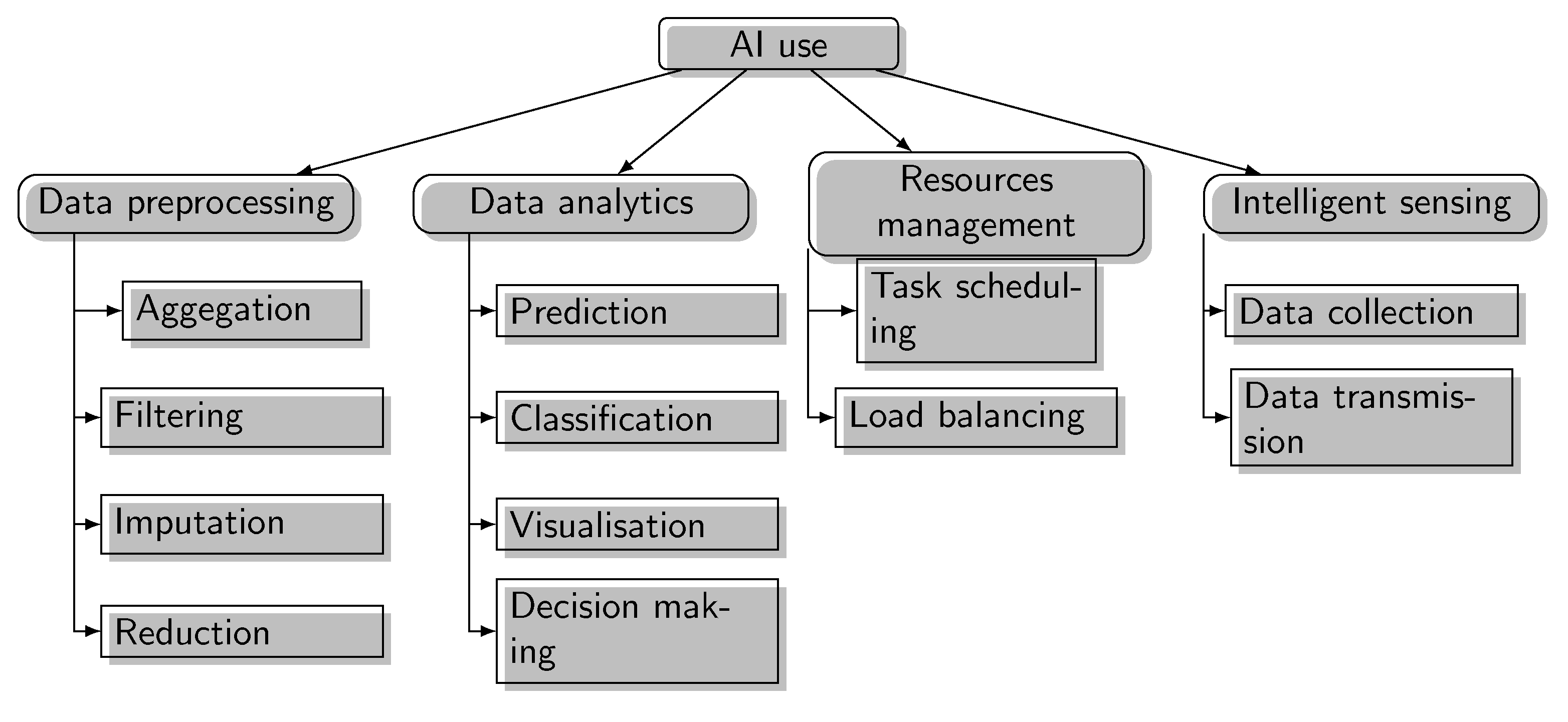

3.3. AI Use at the Network Edge

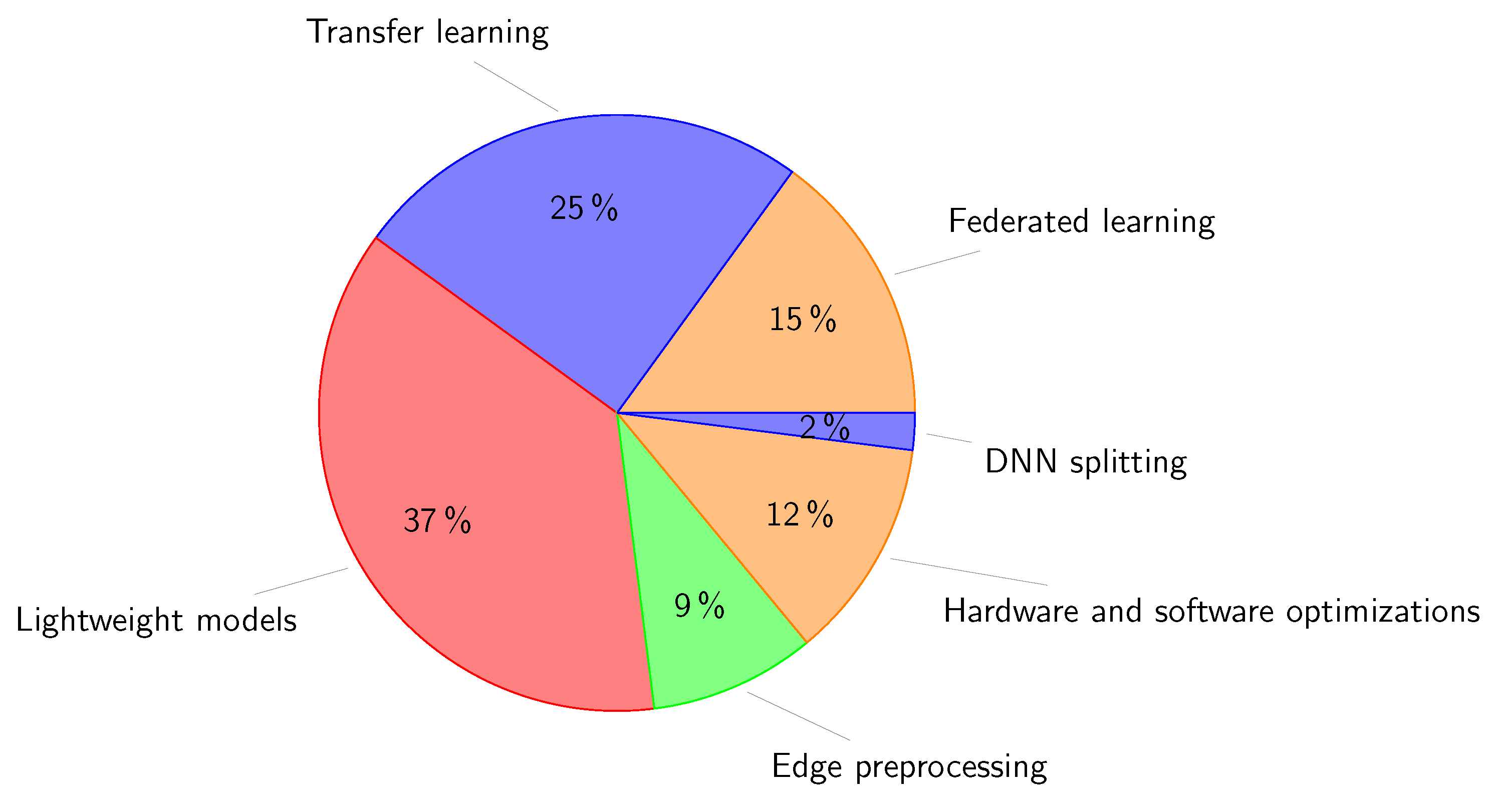

3.4. Enabling Technologies and Strategies that Provide Analytic Services at the Edge

3.5. Platforms and Software Tools

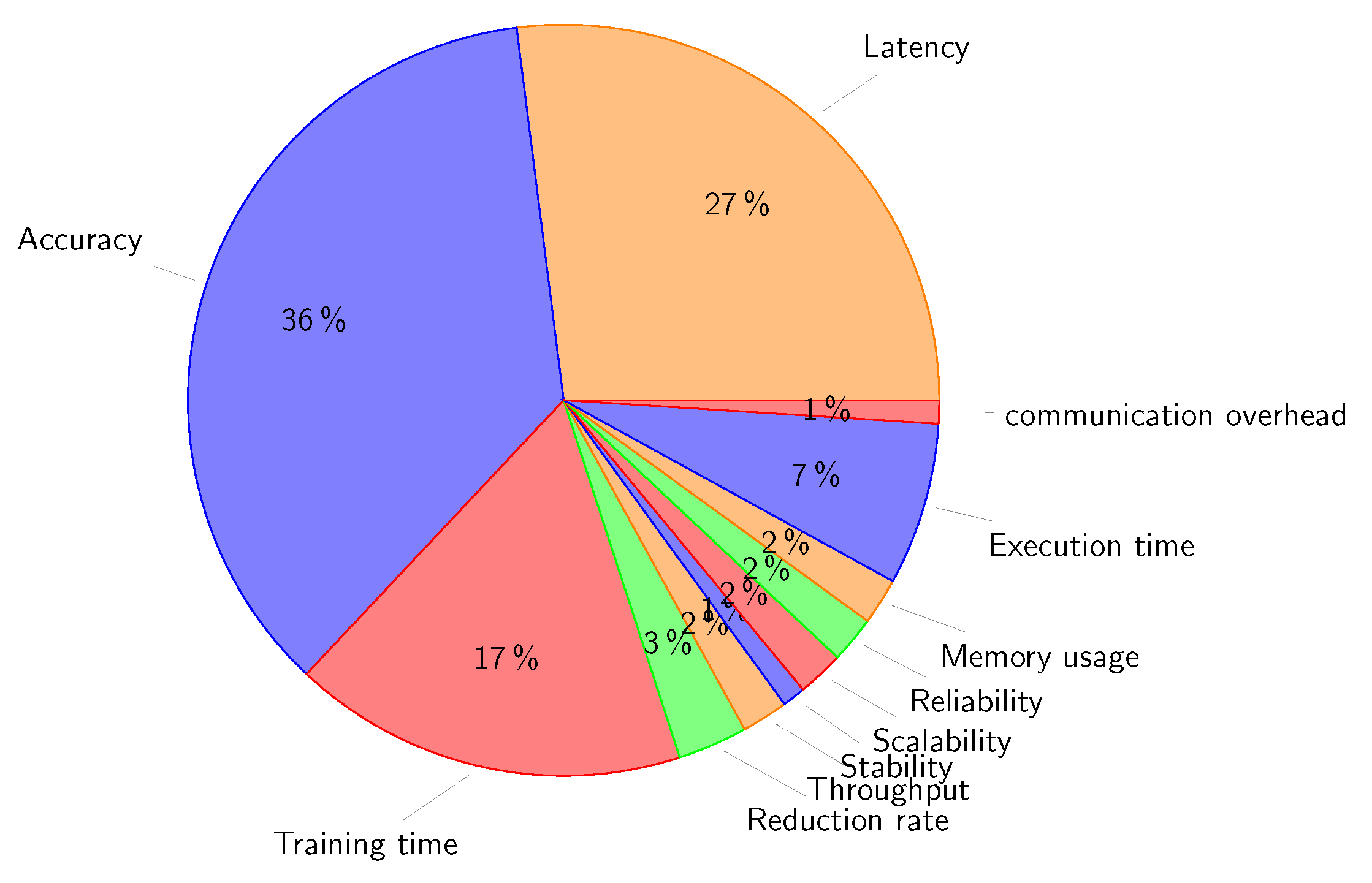

3.6. Performance Metrics

3.7. The Convergence of AI-Edge with Other Technologies

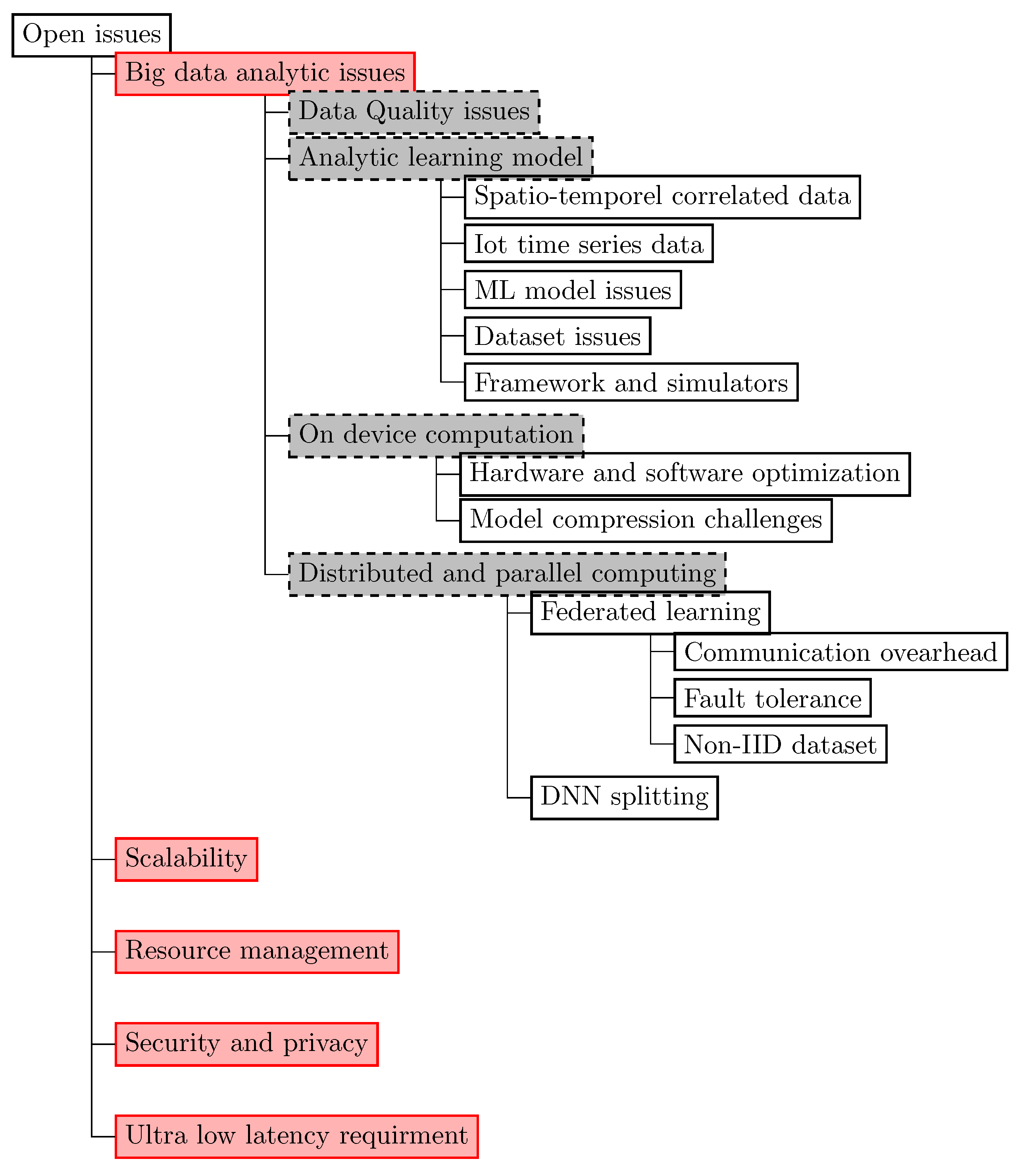

4. Open Issues and Future Directions

4.1. Big Data Analytic Issues

- With regard to data quality issues, the collected IoT data may include irrelevant, redundant, and missing values caused by IoT network problems such as device failures, limited coverage, and overlapping sensing areas; the resulting redundancy causes high energy consumption and strains the limited power budget of IoT devices. All of these defects may reduce the accuracy of the model while increasing the execution time and the computational complexity of the analysis. The authors of [54,57,102,138] used AI at the edge for spatial and temporal redundancy reduction, data imputation, sensing coverage, and pipelined data preprocessing, respectively. However, not all of them consider the mobility, dynamics, and heterogeneity of an edge environment. Solutions based on (1) dynamic network management, (2) lightweight AI data fusion at the network edge, and (3) quality-aware, energy-efficient data management and data reduction at the network edge are still open issues. AI combined with 5G/6G is a recommended direction for efficient 3D coverage and intelligent sensing.
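To make the redundancy and missing-data problem concrete, the following is a minimal sketch of two very simple edge-side preprocessing steps: forward-fill imputation of missing readings and a send-on-delta filter that suppresses temporally redundant samples. It is an illustrative baseline, not the AI-based methods of [54,57,102,138]; the function names, the threshold, and the readings are all hypothetical.

```python
def forward_fill(readings):
    """Replace missing readings (None) with the last observed value.

    Leading missing values stay None because there is nothing to copy.
    """
    filled = []
    last = None
    for value in readings:
        if value is not None:
            last = value
        filled.append(last)
    return filled


def send_on_delta(readings, threshold):
    """Return the (index, value) pairs an edge node would transmit.

    A reading is sent only when it differs from the last transmitted
    value by more than `threshold`; everything else is treated as
    temporally redundant and dropped.
    """
    sent = []
    last_sent = None
    for i, value in enumerate(readings):
        if value is None:
            continue
        if last_sent is None or abs(value - last_sent) > threshold:
            sent.append((i, value))
            last_sent = value
    return sent


# Hypothetical temperature trace with two dropped samples.
raw = [20.0, 20.1, None, 20.2, 21.0, 21.05, None, 19.5]
filled = forward_fill(raw)
transmitted = send_on_delta(filled, threshold=0.5)
print(len(raw), "readings ->", len(transmitted), "transmissions")
```

A fixed threshold trades accuracy for energy and bandwidth savings; the open issue noted above is making this trade-off quality-aware and adaptive to a mobile, heterogeneous edge environment.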

- With regard to analytical learning model choices to deal with IoT big data characteristics, we find the following.

- Spatiotemporally correlated data issue: Large-scale distributed geographic systems, such as large-scale environmental monitoring and city-wide traffic flow prediction, where data are captured from different geographic locations in continuous time, require handling complex spatial and temporal dependencies. Graph-based deep learning is considered a promising solution to handle the spatiotemporal correlation issues [139,140].

- Nonstationary, dynamic, and nonlinear IoT time series data: It is difficult for classical methods to extract effective features from collected IoT data that are nonstationary, dynamic, and nonlinear, such as in electric power systems. To cope with these characteristics, it is desirable to develop an online/incremental learning model that can be further improved to become more flexible and to adapt more quickly to changes in the IoT environment. Reservoir computing is used in [40] to deal with this problem. Retraining a deep learning model is still a problem due to the limited resources of the edge.

- Generalization, adaptability, and the tradeoff between training/inference time and accuracy in ML models also remain challenges to be considered.

- Limited available datasets, multiclass classification, and imbalanced datasets are also challenges to be considered.

- Frameworks and simulators: To support real-time analysis and development of fog computing, the authors of [141] developed modular simulation models for service migration, dynamic distributed cluster formation, and microservice orchestration for edge/fog computing based on real-world datasets. In [142], the authors proposed a multilayer fog deployment framework for job scheduling and big data processing in an industrial environment.
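The online/incremental learning idea raised above for nonstationary IoT time series can be illustrated with a deliberately small example: a linear autoregressive forecaster whose weights are adapted one sample at a time with the normalized least-mean-squares (NLMS) rule. This is a swapped-in classical technique chosen for brevity, not the reservoir computing scheme of [40]; the class name, parameters, and data stream are hypothetical.

```python
class OnlineARForecaster:
    """Linear autoregressive one-step forecaster trained with normalized LMS."""

    def __init__(self, order=3, learning_rate=0.5):
        self.order = order
        self.learning_rate = learning_rate  # NLMS is stable for 0 < rate < 2
        self.weights = [0.0] * order
        self.history = []  # bounded buffer holding at most `order` samples

    def predict(self):
        """Forecast the next value from the buffered samples."""
        if len(self.history) < self.order:
            # Not enough context yet: fall back to the last seen value.
            return self.history[-1] if self.history else 0.0
        return sum(w * x for w, x in zip(self.weights, self.history))

    def update(self, observed):
        """Consume one new sample and adapt the weights in place."""
        if len(self.history) == self.order:
            error = observed - self.predict()
            norm = sum(x * x for x in self.history) + 1e-8
            step = self.learning_rate * error / norm
            self.weights = [w + step * x for w, x in zip(self.weights, self.history)]
        self.history = (self.history + [observed])[-self.order:]


# Hypothetical stream with an abrupt level shift, a simple form of nonstationarity.
stream = [5.0] * 30 + [8.0] * 30
model = OnlineARForecaster(order=3, learning_rate=0.5)
abs_errors = []
for value in stream:
    abs_errors.append(abs(value - model.predict()))  # predict first, then learn
    model.update(value)

print("error at the shift:", abs_errors[30], "final error:", abs_errors[-1])
```

Each update costs a handful of multiplications and the model stores only `order` samples, which is the kind of footprint that makes incremental adaptation feasible on constrained edge devices where full retraining of a deep model is not.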

- With regard to device computation, we find the following.

- Hardware and software optimization challenges: In the literature, many hardware platforms capable of accelerating DL execution are used, such as server-class central processing units (CPUs) and graphics processing units (GPUs). As an innovative way to enhance computing efficiency on edge devices, a hardware implementation is designed in [143] as an integrated solution for the neural network.

- Model compression challenges: Many solutions employ quantization and compression methods to fit CNNs within the limited hardware resources of an edge device. Quantization requires careful tuning or retraining of the model, which can take a long time and affect the accuracy of the model. Other solutions use dynamic compression to reduce model complexity and eliminate redundant components, such as in [56]. Others formulate CNN model compression as a multiobjective optimization problem with three objectives: reducing the size, improving the classification accuracy of the DCNN (which is related to the reliability of the model), and minimizing the number of neurons in the hidden layer, using the Lévy flight optimization algorithm (LFOA) [59]. This model suffers from high training-time complexity. One future direction could be the combination of dynamic compression with quantization for better accuracy [56].
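To show where the accuracy loss in quantization comes from, here is a minimal sketch of symmetric per-tensor int8 quantization applied after training. It is a generic illustration, not the dynamic compression of [56] or the LFOA-based method of [59]; the function names and weight values are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization to integers in [-127, 127].

    Returns the integer codes and the scale such that w is roughly scale * q.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate floating-point weights."""
    return [scale * q for q in codes]


# Hypothetical layer weights.
weights = [0.5, -1.27, 0.0, 1.0]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

# Rounding error per weight is at most half a quantization step.
worst_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, scale, worst_error)
```

Storing one byte per weight instead of four cuts model size by about 4x, but a single outlier weight inflates `scale` and coarsens every other weight, which is why the text notes that quantization usually needs careful tuning or retraining.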

- With regard to distributed and parallel computing, we find the following.

- Federated learning:

- Communication overhead: FL involves sharing the model parameters instead of the data. Transmitting complex models from large numbers of clients to a centralized aggregator generates a massive traffic load, which creates communication overhead. Iterative, nonoptimized communication between the server and the clients is the main factor that increases this overhead. Reducing the communication frequency is also essential to improve the efficiency of the algorithm given the bandwidth cost. As a solution, the authors of [144] proposed federated particle swarm optimization (FedPSO), which transmits score values instead of large weights and thereby reduces the overall network traffic. Moreover, the authors of [145] proposed a framework called COMET, in which clients can use heterogeneous models. It uses knowledge distillation to transfer a client's knowledge to other clients with similar data distributions.

- Fault tolerance: Reliability and fault tolerance mean that the whole system architecture should be able to provide services even if any node (server) on any level fails [146]. Leveraging peer-to-peer model updates to coordinate training can eliminate the single point of failure that may be inherent in an aggregator-based approach [33]. The authors of [147] proposed a decentralized learning variant of the P2P gossip averaging method with batch normalization (BN) adapted for P2P architectures. BN layers accelerate the convergence of the nondistributed deep learning models.

- Unbalanced and non-independent and identically distributed (non-IID) data: Non-IID data on the local devices (divergence in the data distribution) can significantly decrease learning performance. Many solutions have been proposed to solve this problem, such as model selection and clustering, as reported in [20,116].
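The aggregator-based setting discussed in the federated learning items above can be sketched with the standard federated averaging (FedAvg) step, in which the server combines client parameters weighted by local sample counts. The traffic estimate that follows it is a rough back-of-the-envelope assumption (every client uploads and downloads the full model each round, with no compression), and all names and numbers are hypothetical.

```python
def fedavg(client_updates):
    """Sample-weighted average of client model parameters.

    `client_updates` is a list of (weights, num_samples) pairs, where
    `weights` is a flat list of floats with the same length for every client.
    """
    total_samples = sum(n for _, n in client_updates)
    num_params = len(client_updates[0][0])
    global_weights = [0.0] * num_params
    for weights, n in client_updates:
        share = n / total_samples  # clients with more data count more
        for i, w in enumerate(weights):
            global_weights[i] += share * w
    return global_weights


def round_traffic_bytes(num_clients, num_params, bytes_per_param=4):
    """Rough per-round traffic: one upload plus one download per client."""
    return 2 * num_clients * num_params * bytes_per_param


# Two hypothetical clients holding 10 and 30 local samples.
updates = [([1.0, 2.0], 10), ([3.0, 4.0], 30)]
print(fedavg(updates))
print(round_traffic_bytes(num_clients=100, num_params=1_000_000))
```

The estimate makes the overhead argument tangible: traffic grows linearly with both model size and client count, which is what approaches such as sending scores instead of weights try to cut. The single `fedavg` call site is also the single point of failure that peer-to-peer variants aim to remove, and weighting by sample count alone does nothing to correct for non-IID client data.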

- With regard to DNN splitting, its advantage over model compression is that it does not lose accuracy. However, it incurs substantial caching and communication costs because tasks must be transferred between edge nodes to reach nodes with low delay and sufficient resources [148]. Early exit is used by [76] to overcome this limitation, but choosing the early-exit point remains difficult. Other problems are related to heterogeneous node failure, and several solutions have been proposed in the literature, such as RoofSplit [148], which is used to reduce the communication cost, and SplitPlace, which addresses mobility. Therefore, developing a heterogeneous, parallel, and collaborative architecture for edge data processing for various DL services will be helpful. Other solutions still need to be developed.
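The early-exit mechanism mentioned above can be sketched as follows: a model split into stages gets a small classifier head after each stage, and inference stops at the first head whose confidence passes a threshold. This is a generic toy illustration, not the design of [76]; the stage functions, the threshold value, and the inputs are hypothetical stand-ins for real network blocks.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def early_exit_predict(x, stages, threshold=0.9):
    """Run stages in order and stop at the first confident exit head.

    `stages` is a list of (block, head) callables: `block` transforms the
    features, `head` maps features to class logits. Returns
    (predicted_class, exit_index); the last stage always answers.
    """
    features = x
    for i, (block, head) in enumerate(stages):
        features = block(features)
        probs = softmax(head(features))
        confidence = max(probs)
        if confidence >= threshold or i == len(stages) - 1:
            return probs.index(confidence), i


def double(features):
    """Toy block standing in for a group of network layers."""
    return [2.0 * v for v in features]


def identity_head(features):
    """Toy exit head: use the features directly as logits."""
    return list(features)


stages = [(double, identity_head), (double, identity_head)]
easy_input = [3.0, 0.0]  # large margin between classes
hard_input = [0.2, 0.0]  # small margin between classes

print(early_exit_predict(easy_input, stages))
print(early_exit_predict(hard_input, stages))
```

In a split deployment, the first stage could run on the device and later stages on an edge server, so every early exit saves one feature transfer. The difficulty noted in the text shows up here as the choice of `threshold` and of where to attach heads: too low a threshold hurts accuracy, too high a threshold removes the savings.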

4.2. Scalability

4.3. Resource Management

4.4. Security and Privacy

4.5. Ultralow Latency Requirement

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Mohammadi, M.; Al-Fuqaha, A.; Sorour, S.; Guizani, M. Deep Learning for IoT Big Data and Streaming Analytics: A Survey. IEEE Commun. Surv. Tutor. 2018, 20, 2923–2960. [Google Scholar] [CrossRef]

- Zhou, Z.; Chen, X.; Li, E.; Zeng, L.; Luo, K.; Zhang, J. Edge Intelligence: Paving the Last Mile of Artificial Intelligence with Edge Computing. Proc. IEEE 2019, 107, 1738–1762. [Google Scholar] [CrossRef]

- Chang, Z.; Liu, S.; Xiong, X.; Cai, Z.; Tu, G. A Survey of Recent Advances in Edge-Computing-Powered Artificial Intelligence of Things. IEEE IoT J. 2021, 8, 13849–13875. [Google Scholar] [CrossRef]

- Osifeko, M.O.; Hancke, G.P. Artificial Intelligence Techniques for Cognitive Sensing in Future IoT: State-of-the-Art, Potentials, and Challenges. J. Sens. Actuator Netw. 2020, 9, 21. [Google Scholar] [CrossRef]

- Zhang, W.; Wang, J.; Han, G.; Huang, S.; Feng, Y.; Shu, L. A Data Set Accuracy Weighted Random Forest Algorithm for IoT Fault Detection Based on Edge Computing and Blockchain. IEEE IoT J. 2021, 8, 2354–2363. [Google Scholar] [CrossRef]

- Rafique, H.; Shah, M.A.; Islam, S.U.; Maqsood, T.; Khan, S.; Maple, C. A Novel Bio-Inspired Hybrid Algorithm (NBIHA) for Efficient Resource Management in Fog Computing. IEEE Access 2019, 7, 115760–115773. [Google Scholar] [CrossRef]

- Li, W.; Chai, Y.; Khan, F.; Jan, S.R.U.; Verma, S.; Menon, V.G.; Kavita; Li, X. A Comprehensive Survey on Machine Learning-Based Big Data Analytics for IoT-Enabled Smart Healthcare System. Mob. Netw. Appl. 2021, 26, 234–252. [Google Scholar] [CrossRef]

- Liao, R.F.; Wen, H.; Wu, J.; Pan, F.; Xu, A.; Song, H.; Xie, F.; Jiang, Y.; Cao, M. Security enhancement for mobile edge computing through physical layer authentication. IEEE Access 2019, 7, 116390–116401. [Google Scholar] [CrossRef]

- Torre-Bastida, A.I.; Díaz-de Arcaya, J.; Osaba, E.; Muhammad, K.; Camacho, D.; Del Ser, J. Bio-inspired computation for big data fusion, storage, processing, learning and visualization: State of the art and future directions. Neural Comput. Appl. 2021, 9, 1–31. [Google Scholar] [CrossRef]

- Ren, J.; Zhang, D.; He, S.; Zhang, Y.; Li, T. A survey on end-edge-cloud orchestrated network computing paradigms: Transparent computing, mobile edge computing, fog computing, and cloudlet. ACM Comput. Surv. (CSUR) 2019, 52, 1–36. [Google Scholar] [CrossRef]

- Bajaj, K.; Sharma, B.; Singh, R. Implementation analysis of IoT-based offloading frameworks on cloud/edge computing for sensor generated big data. Complex Intell. Syst. 2022, 8, 3641–3658. [Google Scholar] [CrossRef]

- Saeik, F.; Avgeris, M.; Spatharakis, D.; Santi, N.; Dechouniotis, D.; Violos, J.; Leivadeas, A.; Athanasopoulos, N.; Mitton, N.; Papavassiliou, S. Task offloading in Edge and Cloud Computing: A survey on mathematical, artificial intelligence and control theory solutions. Comput. Netw. 2021, 195, 108177. [Google Scholar] [CrossRef]

- Haddaji, A.; Ayed, S.; Fourati, L.C. Artificial Intelligence techniques to mitigate cyber-attacks within vehicular networks: Survey. Comput. Electr. Eng. 2022, 104, 108460. [Google Scholar] [CrossRef]

- Laroui, M.; Nour, B.; Moungla, H.; Cherif, M.A.; Afifi, H.; Guizani, M. Edge and fog computing for IoT: A survey on current research activities & future directions. Comput. Commun. 2021, 180, 210–231. [Google Scholar] [CrossRef]

- Deng, S.; Member, S.; Zhao, H.; Fang, W.; Yin, J. Edge Intelligence: The Confluence of Edge Computing and Artificial Intelligence. IEEE Internet Things J. 2020, 7, 7457–7469. [Google Scholar] [CrossRef]

- Xu, D.; Li, T.; Li, Y.; Su, X.; Tarkoma, S.; Jiang, T.; Crowcroft, J.; Hui, P. Edge Intelligence: Architectures, Challenges, and Applications. arXiv 2020, arXiv:2003.12172. [Google Scholar]

- Abdulkareem, K.H.; Mohammed, M.A.; Gunasekaran, S.S.; Al-Mhiqani, M.N.; Mutlag, A.A.; Mostafa, S.A.; Ali, N.S.; Ibrahim, D.A. A review of fog computing and machine learning: Concepts, applications, challenges, and open issues. IEEE Access 2019, 7, 153123–153140. [Google Scholar] [CrossRef]

- Iftikhar, S.; Gill, S.S.; Song, C.; Xu, M.; Aslanpour, M.S.; Toosi, A.N.; Du, J.; Wu, H.; Ghosh, S.; Chowdhury, D.; et al. AI-based fog and edge computing: A systematic review, taxonomy and future directions. Internet Things 2023, 21, 100674. [Google Scholar] [CrossRef]

- Asim, M.; Wang, Y.; Wang, K.; Huang, P.Q. A Review on Computational Intelligence Techniques in Cloud and Edge Computing. IEEE Trans. Emerg. Top. Comput. Intell. 2020, 4, 742–763. [Google Scholar] [CrossRef]

- Moon, J.; Kum, S.; Lee, S. A heterogeneous IoT data analysis framework with collaboration of edge-cloud computing: Focusing on indoor PM10 and PM2.5 status prediction. Sensors 2019, 19, 3038. [Google Scholar] [CrossRef] [PubMed]

- Putra, K.T.; Chen, H.-C.; Prayitno; Ogiela, M.R.; Chou, C.-L.; Weng, C.-E.; Shae, Z.-Y. Federated Compressed Learning Edge Computing Framework with Ensuring Data Privacy for PM2.5 Prediction in Smart City Sensing Applications. Sensors 2021, 21, 4586. [Google Scholar] [CrossRef] [PubMed]

- Gao, Y.; Liu, L.; Hu, B.; Lei, T.; Ma, H. Federated Region-Learning for Environment Sensing in Edge Computing System. IEEE Trans. Netw. Sci. Eng. 2020, 7, 2192–2204. [Google Scholar] [CrossRef]

- Ahmed, M.; Mumtaz, R.; Zaidi, S.M.H.; Hafeez, M.; Zaidi, S.A.R.; Ahmad, M. Distributed fog computing for internet of things (Iot) based ambient data processing and analysis. Electronics 2020, 9, 1756. [Google Scholar] [CrossRef]

- Wardana, I.N.K.; Gardner, J.W.; Fahmy, S.A. Optimising deep learning at the edge for accurate hourly air quality prediction. Sensors 2021, 21, 1064. [Google Scholar] [CrossRef]

- De Stefano, C.; Ferrigno, L.; Fontanella, F.; Gerevini, L.; Scotto di Freca, A. A novel PCA-based approach for building on-board sensor classifiers for water contaminant detection. Pattern Recogn. Lett. 2020, 135, 375–381. [Google Scholar] [CrossRef]

- Pattnaik, B.S.; Pattanayak, A.S.; Udgata, S.K.; Panda, A.K. Machine learning based soft sensor model for BOD estimation using intelligence at edge. Complex Intell. Syst. 2021, 7, 961–976. [Google Scholar] [CrossRef]

- Ren, J.; Zhu, Q.; Wang, C. Edge Computing for Water Quality Monitoring Systems. Mob. Inf. Syst. 2022, 2022, 5056606. [Google Scholar] [CrossRef]

- Thakur, T.; Mehra, A.; Hassija, V.; Chamola, V.; Srinivas, R.; Gupta, K.K.; Singh, A.P. Smart water conservation through a machine learning and blockchain-enabled decentralized edge computing network. Appl. Soft Comput. 2021, 106, 107274. [Google Scholar] [CrossRef]

- Yang, J.; Wen, J.; Wang, Y.; Jiang, B.; Wang, H.; Song, H. Fog-Based Marine Environmental Information Monitoring Toward Ocean of Things. IEEE IoT J. 2020, 7, 4238–4247. [Google Scholar] [CrossRef]

- Lu, H.; Wang, D.; Li, Y.; Li, J.; Li, X.; Kim, H.; Serikawa, S.; Humar, I. CONet: A Cognitive Ocean Network. IEEE Wirel. Commun. 2019, 26, 90–96. [Google Scholar] [CrossRef]

- Sun, X.; Wang, X.; Cai, D.; Li, Z.; Gao, Y.; Wang, X. Multivariate Seawater Quality Prediction Based on PCA-RVM Supported by Edge Computing towards Smart Ocean. IEEE Access 2020, 8, 54506–54513. [Google Scholar] [CrossRef]

- Kwon, D.; Jeon, J.; Park, S.; Kim, J.; Cho, S. Multiagent DDPG-Based Deep Learning for Smart Ocean Federated Learning IoT Networks. IEEE IoT J. 2020, 7, 9895–9903. [Google Scholar] [CrossRef]

- Taik, A.; Cherkaoui, S. Electrical Load Forecasting Using Edge Computing and Federated Learning. In Proceedings of the ICC 2020—2020 IEEE International Conference on Communications (ICC), Dublin, Ireland, 7–11 June 2020. [Google Scholar] [CrossRef]

- Savi, M.; Olivadese, F. Short-Term Energy Consumption Forecasting at the Edge: A Federated Learning Approach. IEEE Access 2021, 9, 95949–95969. [Google Scholar] [CrossRef]

- Li, T.; Fong, S.; Li, X.; Lu, Z.; Gandomi, A.H. Swarm Decision Table and Ensemble Search Methods in Fog Computing Environment: Case of Day-Ahead Prediction of Building Energy Demands Using IoT Sensors. IEEE IoT J. 2020, 7, 2321–2342. [Google Scholar] [CrossRef]

- Li, C.; Zheng, X.; Yang, Z.; Kuang, L. Predicting Short-Term Electricity Demand by Combining the Advantages of ARMA and XGBoost in Fog Computing Environment. Wirel. Commun. Mob. Comput. 2018, 2018, 5018053. [Google Scholar] [CrossRef]

- Rabie, A.H.; Ali, S.H.; Saleh, A.I.; Ali, H.A. A fog based load forecasting strategy based on multi-ensemble classification for smart grids. J. Ambient Intell. Hum. Comput. 2020, 11, 209–236. [Google Scholar] [CrossRef]

- Luo, H.; Cai, H.; Yu, H.; Sun, Y.; Bi, Z.; Jiang, L. A short-term energy prediction system based on edge computing for smart city. Future Gener. Comput. Syst. 2019, 101, 444–457. [Google Scholar] [CrossRef]

- Abdel-Basset, M.; Hawash, H.; Chakrabortty, R.K.; Ryan, M. Energy-Net: A Deep Learning Approach for Smart Energy Management in IoT-Based Smart Cities. IEEE IoT J. 2021, 8, 12422–12435. [Google Scholar] [CrossRef]

- Fujimoto, Y.; Fujita, M.; Hayashi, Y. Deep reservoir architecture for short-term residential load forecasting: An online learning scheme for edge computing. Appl. Energy 2021, 298, 117176. [Google Scholar] [CrossRef]

- Cicirelli, F.; Gentile, A.F.; Greco, E.; Guerrieri, A.; Spezzano, G.; Vinci, A. An Energy Management System at the Edge based on Reinforcement Learning. In Proceedings of the 2020 IEEE/ACM 24th International Symposium on Distributed Simulation and Real Time Applications (DS-RT), Prague, Czech Republic, 14–16 September 2020. [Google Scholar] [CrossRef]

- Tom, R.J.; Sankaranarayanan, S.; Rodrigues, J.J. Smart Energy Management and Demand Reduction by Consumers and Utilities in an IoT-Fog-Based Power Distribution System. IEEE IoT J. 2019, 6, 7386–7394. [Google Scholar] [CrossRef]

- Taïk, A.; Member, S.; Nour, B.; Cherkaoui, S.; Member, S. Empowering Prosumer Communities in Smart Grid with Wireless Communications and Federated Edge Learning. IEEE Wirel. Commun. 2021, 28, 26–33. [Google Scholar] [CrossRef]

- Jaiswal, R.; Chakravorty, A.; Rong, C. Distributed Fog Computing Architecture for Real-Time Anomaly Detection in Smart Meter Data. In Proceedings of the 2020 IEEE Sixth International Conference on Big Data Computing Service and Applications (BigDataService), Oxford, UK, 3–6 August 2020; pp. 1–8. [Google Scholar] [CrossRef]

- Scarpiniti, M.; Baccarelli, E.; Momenzadeh, A.; Uncini, A. SmartFog: Training the Fog for the energy-saving analytics of Smart-Meter data. Appl. Sci. 2019, 9, 4193. [Google Scholar] [CrossRef]

- Olivares-Rojas, J.C.; Reyes-Archundia, E.; Rodriiguez-Maya, N.E.; Gutierrez-Gnecchi, J.A.; Molina-Moreno, I.; Cerda-Jacobo, J. Machine Learning Model for the Detection of Electric Energy Fraud using an Edge-Fog Computing Architecture. In Proceedings of the 2020 IEEE International Conference on Engineering Veracruz (ICEV), Boca del Rio, Mexico, 26–29 October 2020; pp. 1–6. [Google Scholar] [CrossRef]

- Utomo, D.; Hsiung, P.A. A multitiered solution for anomaly detection in edge computing for smart meters. Sensors 2020, 20, 5159. [Google Scholar] [CrossRef]

- Zhang, Y.; Ai, Q.; Wang, H.; Li, Z.; Zhou, X. Energy theft detection in an edge data center using threshold-based abnormality detector. Int. J. Electr. Power Energy Syst. 2020, 121, 106162. [Google Scholar] [CrossRef]

- Ashraf, M.M.; Waqas, M.; Abbas, G.; Baker, T.; Abbas, Z.H.; Alasmary, H. FedDP: A Privacy-Protecting Theft Detection Scheme in Smart Grids Using Federated Learning. Energies 2022, 15, 6241. [Google Scholar] [CrossRef]

- Guillén, M.A.; Llanes, A.; Imbernón, B.; Martínez-España, R.; Bueno-Crespo, A.; Cano, J.C.; Cecilia, J.M. Performance evaluation of edge-computing platforms for the prediction of low temperatures in agriculture using deep learning. J. Supercomput. 2021, 77, 818–840. [Google Scholar] [CrossRef]

- Kaur, A.; Sood, S.K. Cloud-Fog based framework for drought prediction and forecasting using artificial neural network and genetic algorithm. J. Exp. Theor. Artif. Intell. 2020, 32, 273–289. [Google Scholar] [CrossRef]

- Lee, K.; Silva, B.N.; Han, K. Deep learning entrusted to fog nodes (DLEFN) based smart agriculture. Appl. Sci. 2020, 10, 1544. [Google Scholar] [CrossRef]

- Taneja, M.; Byabazaire, J.; Jalodia, N.; Davy, A.; Olariu, C.; Malone, P. Machine learning based fog computing assisted data-driven approach for early lameness detection in dairy cattle. Comput. Electron. Agric. 2020, 171, 105286. [Google Scholar] [CrossRef]

- Cordeiro, M.; Markert, C.; Araújo, S.S.; Campos, N.G.; Gondim, R.S.; da Silva, T.L.C.; da Rocha, A.R. Towards Smart Farming: Fog-enabled intelligent irrigation system using deep neural networks. Future Gener. Comput. Syst. 2022, 129, 115–124. [Google Scholar] [CrossRef]

- Dahane, A.; Benameur, R.; Kechar, B. An IoT low-cost smart farming for enhancing irrigation efficiency of smallholders farmers. Wirel. Pers. Commun. 2022, 127, 3173–3210. [Google Scholar] [CrossRef]

- De Vita, F.; Nocera, G.; Bruneo, D.; Tomaselli, V.; Giacalone, D.; Das, S.K. Porting deep neural networks on the edge via dynamic K-means compression: A case study of plant disease detection. Pervasive Mob. Comput. 2021, 75, 101437. [Google Scholar] [CrossRef]

- Gu, M.; Li, K.C.; Li, Z.; Han, Q.; Fan, W. Recognition of crop diseases based on depthwise separable convolution in edge computing. Sensors 2020, 20, 4091. [Google Scholar] [CrossRef]

- Zhang, R.; Li, X. Edge Computing Driven Data Sensing Strategy in the Entire Crop Lifecycle for Smart Agriculture. Sensors 2021, 21, 7502. [Google Scholar] [CrossRef] [PubMed]

- Gupta, N.; Khosravy, M.; Patel, N.; Dey, N.; Gupta, S.; Darbari, H.; Crespo, R.G. Economic data analytic AI technique on IoT edge devices for health monitoring of agriculture machines. Appl. Intell. 2020, 50, 3990–4016. [Google Scholar] [CrossRef]

- Rajakumar, M.P.; Ramya, J.; Maheswari, B.U. Health monitoring and fault prediction using a lightweight deep convolutional neural network optimized by Levy flight optimization algorithm. Neural Comput. Appl. 2021, 33, 12513–12534. [Google Scholar] [CrossRef]

- Umarale, D.; Sodhani, S.; Akhelikar, A.; Koshy, R. Attention Detection of Participants during Digital Learning Sessions using Edge Computing. SSRN Electron. J. 2021, 575–584. [Google Scholar] [CrossRef]

- Hou, C.; Hua, L.; Lin, Y.; Zhang, J.; Liu, G.; Xiao, Y. Application and exploration of artificial intelligence and edge computing in long-distance education on mobile network. Mob. Netw. Appl. 2021, 26, 2164–2175. [Google Scholar] [CrossRef]

- Li, J.; Shi, D.; Tumnark, P.; Xu, H. A system for real-time intervention in negative emotional contagion in a smart classroom deployed under edge computing service infrastructure. Peer-to-Peer Netw. Appl. 2020, 13, 1706–1719. [Google Scholar] [CrossRef]

- Preuveneers, D.; Garofalo, G.; Joosen, W. Cloud and edge based data analytics for privacy-preserving multi-modal engagement monitoring in the classroom. Inf. Syst. Front. 2021, 23, 151–164. [Google Scholar] [CrossRef]

- Singh, M.; Bharti, S.; Kaur, H.; Arora, V.; Saini, M.; Kaur, M.; Singh, J. A facial and vocal expression based comprehensive framework for real-time student stress monitoring in an IoT-Fog-Cloud environment. IEEE Access 2022, 10, 63177–63188. [Google Scholar] [CrossRef]

- Sood, S.K.; Singh, K.D. Optical fog-assisted smart learning framework to enhance students’ employability in engineering education. Comput. Appl. Eng. Educ. 2019, 27, 1030–1042. [Google Scholar] [CrossRef]

- Ahanger, T.A.; Tariq, U.; Ibrahim, A.; Ullah, I.; Bouteraa, Y. ANFIS-inspired smart framework for education quality assessment. IEEE Access 2020, 8, 175306–175318. [Google Scholar] [CrossRef]

- Ma, R.; Chen, X. Intelligent education evaluation mechanism on ideology and politics with 5G: PSO-driven edge computing approach. Wirel. Netw. 2022, 29, 685–696. [Google Scholar] [CrossRef]

- Munusamy, A.; Adhikari, M.; Balasubramanian, V.; Khan, M.A.; Menon, V.G.; Rawat, D.; Srirama, S.N. Service Deployment Strategy for Predictive Analysis of FinTech IoT Applications in Edge Networks. IEEE IoT J. 2021, 10, 2131–2140. [Google Scholar] [CrossRef]

- Zeng, H. Influences of mobile edge computing-based service preloading on the early-warning of financial risks. J. Supercomput. 2022, 78, 11621–11639. [Google Scholar] [CrossRef]

- Neelakantam, G.; Onthoni, D.D.; Sahoo, P.K. Fog computing enabled locality based product demand prediction and decision making using reinforcement learning. Electronics 2021, 10, 227. [Google Scholar] [CrossRef]

- Bv, N.; Guddeti, R.M.R. Fog-based Intelligent Machine Malfunction Monitoring System for Industry 4.0. IEEE Trans. Ind. Inform. 2021, 17, 7923–7932. [Google Scholar] [CrossRef]

- Syafrudin, M.; Fitriyani, N.L.; Alfian, G.; Rhee, J. An affordable fast early warning system for edge computing in assembly line. Appl. Sci. 2018, 9, 84. [Google Scholar] [CrossRef]

- Fawwaz, D.Z.; Chung, S.H. Real-time and robust hydraulic system fault detection via edge computing. Appl. Sci. 2020, 10, 5933. [Google Scholar] [CrossRef]

- Park, D.; Kim, S.; An, Y.; Jung, J.Y. LiReD: A light-weight real-time fault detection system for edge computing using LSTM recurrent neural networks. Sensors 2018, 18, 2110. [Google Scholar] [CrossRef] [PubMed]

- Li, L.; Ota, K.; Dong, M. Deep Learning for Smart Industry: Efficient Manufacture Inspection System with Fog Computing. IEEE Trans. Ind. Inform. 2018, 14, 4665–4673. [Google Scholar] [CrossRef]

- Feng, Y.; Wang, T.; Hu, B.; Yang, C.; Tan, J. An integrated method for high-dimensional imbalanced assembly quality prediction supported by edge computing. IEEE Access 2020, 8, 71279–71290. [Google Scholar] [CrossRef]

- Qiao, H.; Wang, T.; Wang, P. A tool wear monitoring and prediction system based on multiscale deep learning models and fog computing. Int. J. Adv. Manuf. Technol. 2020, 108, 2367–2384. [Google Scholar] [CrossRef]

- Wang, J.; Li, D. Task scheduling based on a hybrid heuristic algorithm for smart production line with fog computing. Sensors 2019, 19, 1023. [Google Scholar] [CrossRef] [PubMed]

- Tan, R.E.N.Z.; Chew, X.; Khaw, K.W.A.H. Quantized Deep Residual Convolutional Neural Network for Image-Based Dietary Assessment. IEEE Access 2020, 8, 111875–111888. [Google Scholar] [CrossRef]

- Liu, C.; Cao, Y.; Luo, Y.; Chen, G. A New Deep Learning-Based Food Recognition System for Dietary Assessment on an Edge Computing Service Infrastructure. IEEE Trans. Serv. Comput. 2018, 11, 249–261. [Google Scholar] [CrossRef]

- Sarabia-Jácome, D.; Usach, R.; Esteve, M. Highly-efficient fog-based deep learning AAL fall detection system. Internet Things 2020, 11, 100185. [Google Scholar] [CrossRef]

- Hassan, M.K.; El, A.I.; Mahmoud, D.; Amany, M.B.; Mohamed, M.S.; Gunasekaran, M. EoT-driven hybrid ambient assisted living framework with naïve Bayes–firefly algorithm. Neural Comput. Appl. 2018, 31, 1275–1300. [Google Scholar] [CrossRef]

- Attaoui, A.E.; Largo, S.; Kaissari, S.; Benba, A.; Jilbab, A.; Bourouhou, A. Machine learning-based edge-computing on a multi-level architecture of WSN and IoT for real-time fall detection. IET Wirel. Sens. Syst. 2020, 10, 320–332. [Google Scholar] [CrossRef]

- Divya, V.; Sri, R.L. Intelligent Real-Time Multimodal Fall Detection in Fog Infrastructure Using Ensemble Learning. In Challenges and Trends in Multimodal Fall Detection for Healthcare; Springer: Cham, Switzerland, 2020. [Google Scholar] [CrossRef]

- Wu, Q.; Chen, X.; Zhou, Z.; Zhang, J. FedHome: Cloud-Edge based Personalized Federated Learning for In-Home Health Monitoring. arXiv 2020, arXiv:2012.07450v1. [Google Scholar] [CrossRef]

- Zhou, Y.; Han, M.; He, J.S.; Liu, L.; Xu, X.; Gao, X. Abnormal Activity Detection in Edge Computing: A Transfer Learning Approach. In Proceedings of the 2020 International Conference on Computing, Networking and Communications (ICNC), Big Island, HI, USA, 17–20 February 2020; pp. 107–111. [Google Scholar]

- Gumaei, A.; Al-Rakhami, M.; Alsalman, H.; Mizanur, S. DL-HAR: Deep Learning-Based Human Activity Recognition Framework for Edge Computing. CMC-Comput. Mater. Contin. 2020, 65, 1033–1057. [Google Scholar] [CrossRef]

- Rashid, N.; Demirel, B.U. AHAR: Adaptive CNN for Energy-efficient Human Activity Recognition in Low-power Edge Devices. arXiv 2021, arXiv:2102.01875v1. [Google Scholar] [CrossRef]

- Islam, N.; Faheem, Y.; Ud, I.; Talha, M.; Guizani, M. A blockchain-based fog computing framework for activity recognition as an application to e-Healthcare services. Future Gener. Comput. Syst. 2019, 100, 569–578. [Google Scholar] [CrossRef]

- Manogaran, G.; Shakeel, P.M.; Fouad, H.; Nam, Y.; Baskar, S. Wearable IoT Smart-Log Patch: An Edge Computing-Based Bayesian Deep Learning Network System for Multi Access Physical Monitoring System. Sensors 2019, 19, 3030. [Google Scholar] [CrossRef]

- Manocha, A.; Kumar, G.; Bhatia, M.; Sharma, A. Video-assisted smart health monitoring for affliction determination based on fog analytics. J. Biomed. Inform. 2020, 109, 103513. [Google Scholar] [CrossRef]

- Ahanger, T.A.; Tariq, U.; Nusir, M.; Aldaej, A.; Ullah, I.; Sulman, A. A novel IoT–fog–cloud-based healthcare system for monitoring and predicting COVID-19 outspread. J. Supercomput. 2021, 78, 1783–1806. [Google Scholar] [CrossRef] [PubMed]

- Bhatia, M.; Kumari, S. A Novel IoT-Fog-Cloud-based Healthcare System for Monitoring and Preventing Encephalitis. Cogn. Comput. 2021, 14, 1609–1626. [Google Scholar] [CrossRef]

- Majumdar, A.; Debnath, T.; Sood, S.K.; Baishnab, K.L. Kyasanur Forest Disease Classification Framework Using Novel Extremal Optimization Tuned Neural Network in Fog Computing Environment. J. Med. Syst. 2018, 42, 187. [Google Scholar] [CrossRef]

- Vijayakumar, V.; Malathi, D.; Subramaniyaswamy, V.; Saravanan, P.; Logesh, R. Fog computing-based intelligent healthcare system for the detection and prevention of mosquito-borne diseases. Comput. Hum. Behav. 2019, 100, 275–285. [Google Scholar] [CrossRef]

- Qayyum, A.; Ahmad, K.; Ahsan, M.A.; Al-Fuqaha, A.; Qadir, J. Collaborative Federated Learning for Healthcare: Multi-Modal COVID-19 Diagnosis at the Edge. arXiv 2021, arXiv:2101.07511v1. [Google Scholar] [CrossRef]

- Singh, P.; Kaur, R. An integrated fog and Artificial Intelligence smart health framework to predict and prevent COVID-19. Glob. Transit. 2020, 2, 283–292. [Google Scholar] [CrossRef]

- Singh, V.K.; Kolekar, M.H. Deep learning empowered COVID-19 diagnosis using chest CT scan images for collaborative edge-cloud computing platform. Multimed. Tools Appl. 2021, 81, 3–30. [Google Scholar] [CrossRef] [PubMed]

- Adhikari, M.; Munusamy, A. iCovidCare: Intelligent health monitoring framework for COVID-19 using ensemble random forest in edge networks. Internet Things 2021, 14, 100385. [Google Scholar] [CrossRef]

- Prabukumar, M.; Agilandeeswari, L.; Ganesan, K. An intelligent lung cancer diagnosis system using cuckoo search optimization and support vector machine classifier. J. Ambient Intell. Hum. Comput. 2019, 10, 267–293. [Google Scholar] [CrossRef]

- Ding, W.; Abdel-Basset, M.; Eldrandaly, K.A.; Abdel-Fatah, L.; Albuquerque, V.H.C.D. Smart Supervision of Cardiomyopathy Based on Fuzzy Harris Hawks Optimizer and Wearable Sensing Data Optimization: A New Model. IEEE Trans. Cybern. 2020, 51, 4944–4958. [Google Scholar] [CrossRef]

- Hassan, M.K.; Desouky, A.I.E.; Elghamrawy, S.M.; Sarhan, A.M. A Hybrid Real-time remote monitoring framework with NB-WOA algorithm for patients with chronic diseases. Future Gener. Comput. Syst. 2018, 93, 77–95. [Google Scholar] [CrossRef]

- Muzammal, M.; Talat, R.; Hassan, A.; Pirbhulal, S. A multi-sensor data fusion enabled ensemble approach for medical data from body sensor networks. Inf. Fusion 2020, 53, 155–164. [Google Scholar] [CrossRef]

- El-Hasnony, I.M.; Barakat, S.I.; Mostafa, R.R. Optimized ANFIS model using hybrid metaheuristic algorithms for Parkinson’s disease prediction in IoT environment. IEEE Access 2020, 8, 119252–119270. [Google Scholar] [CrossRef]

- Shynu, P.G.; Menon, V.G. Blockchain-Based Secure Healthcare Application for Diabetic-Cardio Disease Prediction in Fog Computing. IEEE Access 2021, 9, 45706–45720. [Google Scholar] [CrossRef]

- Cheikhrouhou, O. One-Dimensional CNN Approach for ECG Arrhythmia Analysis in Fog-Cloud Environments. IEEE Access 2021, 9, 103513–103523. [Google Scholar] [CrossRef]

- Pustokhina, I.V.; Pustokhin, D.A.; Gupta, D.; Khanna, A.; Shankar, K. An Effective Training Scheme for Deep Neural Network in Edge Computing Enabled Internet of Medical Things (IoMT) Systems. IEEE Access 2020, 8, 107112–107123. [Google Scholar] [CrossRef]

- Ijaz, M.; Li, G.; Wang, H.; El-Sherbeeny, A.M.; Moro Awelisah, Y.; Lin, L.; Koubaa, A.; Noor, A. Intelligent fog-enabled smart healthcare system for wearable physiological parameter detection. Electronics 2020, 9, 2015. [Google Scholar] [CrossRef]

- Zhu, T.; Kuang, L.; Daniels, J.; Herrero, P.; Li, K.; Georgiou, P. IoMT-Enabled Real-time Blood Glucose Prediction with Deep Learning and Edge Computing. IEEE Internet Things J. 2022; Early Access. [Google Scholar] [CrossRef]

- Xu, T.; Han, G.; Qi, X.; Du, J.; Lin, C.; Shu, L. A Hybrid Machine Learning Model for Demand Prediction of Edge-Computing-Based Bike-Sharing System Using Internet of Things. IEEE Internet Things J. 2020, 7, 7345–7356. [Google Scholar] [CrossRef]

- Ke, R.; Zhuang, Y.; Pu, Z.; Wang, Y. A Smart, Efficient, and Reliable Parking Surveillance System with Edge Artificial Intelligence on IoT Devices. IEEE Trans. Intell. Transp. Syst. 2021, 22, 4962–4974. [Google Scholar] [CrossRef]

- Huang, X.; Li, P.; Yu, R.; Wu, Y.; Xie, K.; Xie, S. FedParking: A Federated Learning Based Parking Space Estimation with Parked Vehicle Assisted Edge Computing. IEEE Trans. Veh. Technol. 2021, 70, 9355–9368. [Google Scholar] [CrossRef]

- Yan, G.; Qin, Q. The Application of Edge Computing Technology in the Collaborative Optimization of Intelligent Transportation System Based on Information Physical Fusion. IEEE Access 2020, 8, 153264–153272. [Google Scholar] [CrossRef]

- Ali, A.; Zhu, Y.; Zakarya, M. A data aggregation based approach to exploit dynamic spatio-temporal correlations for citywide crowd flows prediction in fog computing. Multimed. Tools Appl. 2021, 80, 31401–31433. [Google Scholar] [CrossRef]

- Liu, Y.; Yu, J.J.Q.; Kang, J.; Niyato, D.; Zhang, S. Privacy-Preserving Traffic Flow Prediction: A Federated Learning Approach. IEEE Internet Things J. 2020, 7, 7751–7763. [Google Scholar] [CrossRef]

- Liu, F.; Ma, X.; An, X.; Liang, G. Urban Traffic Flow Prediction Model with CPSO/SSVM Algorithm under the Edge Computing Framework. Wirel. Commun. Mob. Comput. 2020, 2020, 8871998. [Google Scholar] [CrossRef]

- Yuan, X.; Chen, J.; Yang, J.; Zhang, N.; Yang, T.; Han, T.; Taherkordi, A. FedSTN: Graph Representation Driven Federated Learning for Edge Computing Enabled Urban Traffic Flow Prediction. IEEE Trans. Intell. Transp. Syst. 2022; Early Access. [Google Scholar] [CrossRef]

- Xun, Y.; Qin, J.; Liu, J. Deep Learning Enhanced Driving Behavior Evaluation Based on Vehicle-Edge-Cloud Architecture. IEEE Trans. Veh. Technol. 2021, 70, 6172–6177. [Google Scholar] [CrossRef]

- Zhang, K.; Huang, W.; Hou, X.; Xu, J.; Su, R. A Fault Diagnosis and Visualization Method for High-Speed Train Based on Edge and Cloud Collaboration. Appl. Sci. 2021, 11, 1251. [Google Scholar] [CrossRef]

- Gumaei, A.; Al-Rakhami, M.; Hassan, M.M.; Alamri, A.; Alhussein, M.; Razzaque, M.A.; Fortino, G. A deep learning-based driver distraction identification framework over edge cloud. Neural Comput. Appl. 2020, 1, 1–16. [Google Scholar] [CrossRef]

- Kumar, R.; Kumar, P.; Tripathi, R.; Gupta, G.P.; Kumar, N.; Hassan, M.M. A Privacy-Preserving-Based Secure Framework Using Blockchain-Enabled Deep-Learning in Cooperative Intelligent Transport System. IEEE Trans. Intell. Transp. Syst. 2021, 23, 16492–16503. [Google Scholar] [CrossRef]

- Kumar, R.; Kumar, P.; Tripathi, R.; Gupta, G.P.; Gadekallu, T.R.; Srivastava, G. SP2F: A secured privacy-preserving framework for smart agricultural Unmanned Aerial Vehicles. Comput. Netw. 2021, 187, 107819. [Google Scholar] [CrossRef]

- Sharma, J.; Kim, D.; Lee, A.; Seo, D. On differential privacy-based framework for enhancing user data privacy in mobile edge computing environment. IEEE Access 2021, 9, 38107–38118. [Google Scholar] [CrossRef]

- Zeng, X.; Zhang, X.; Yang, S.; Shi, Z.; Chi, C. Gait-Based Implicit Authentication Using Edge Computing and Deep Learning for Mobile Devices. Sensors 2021, 21, 4592. [Google Scholar] [CrossRef]

- Samy, A. Fog-Based Attack Detection Framework for Internet of Things Using Deep Learning. IEEE Access 2020, 8, 74571–74585. [Google Scholar] [CrossRef]

- Ullah, I.; Raza, B.; Ali, S.; Abbasi, I.A.; Baseer, S.; Irshad, A. Software Defined Network Enabled Fog-to-Things Hybrid Deep Learning Driven Cyber Threat Detection System. Secur. Commun. Netw. 2021, 2021, 6136670. [Google Scholar] [CrossRef]

- Sadaf, K.; Sultana, J. Intrusion Detection Based on Autoencoder and Isolation Forest in Fog Computing. IEEE Access 2020, 8, 167059–167068. [Google Scholar] [CrossRef]

- Lee, S.J.; Yoo, P.D.; Asyhari, A.T.; Jhi, Y.; Chermak, L.; Yeun, C.Y. IMPACT: Impersonation attack detection via edge computing using deep autoencoder and feature abstraction. IEEE Access 2020. [Google Scholar] [CrossRef]

- Huong, T.T.; Bac, T.P.; Long, D.M.; Thang, B.D.; Luong, T.D.; Binh, N.T.; Phuc, T.K. LocKedge: Low-Complexity Cyberattack Detection in IoT Edge Computing. IEEE Access 2021, 9, 29696–29710. [Google Scholar] [CrossRef]

- Gavel, S.; Raghuvanshi, A.S.; Tiwari, S. Distributed intrusion detection scheme using dual-axis dimensionality reduction for Internet of things (IoT). J. Supercomput. 2021, 77, 10488–10511. [Google Scholar] [CrossRef]

- Hwaitat, A.K.; Manaseer, S.; Al-Sayyed, R.M.; Almaiah, M.A.; Almomani, O. An investigator digital forensics frequencies particle swarm optimization for detection and classification of APT attack in fog computing environment (IDF-FPSO). J. Theor. Appl. Inf. Technol. 2020, 98, 937–952. [Google Scholar]

- Haddadpajouh, H.; Mohtadi, A.; Dehghantanha, A.; Karimipour, H.; Lin, X.; Choo, K.K.R. A Multikernel and Metaheuristic Feature Selection Approach for IoT Malware Threat Hunting in the Edge Layer. IEEE Internet Things J. 2021, 8, 4540–4547. [Google Scholar] [CrossRef]

- Adel, A. Utilizing technologies of fog computing in educational IoT systems: Privacy, security, and agility perspective. J. Big Data 2020, 7, 99. [Google Scholar] [CrossRef]

- Liu, H.; Zhang, S.; Zhang, P.; Zhou, X.; Shao, X.; Pu, G.; Zhang, Y. Blockchain and Federated Learning for Collaborative Intrusion Detection in Vehicular Edge Computing. IEEE Trans. Veh. Technol. 2021, 70, 6073–6084. [Google Scholar] [CrossRef]

- Gupta, R.; Reebadiya, D.; Tanwar, S.; Kumar, N.; Guizani, M. When blockchain meets edge intelligence: Trusted and security solutions for consumers. IEEE Netw. 2021, 35, 272–278. [Google Scholar] [CrossRef]

- Nguyen, D.C.; Ding, M.; Pham, Q.V.; Pathirana, P.N.; Le, L.B.; Seneviratne, A.; Li, J.; Niyato, D.; Poor, H.V. Federated learning meets blockchain in edge computing: Opportunities and challenges. IEEE Internet Things J. 2021, 8, 12806–12825. [Google Scholar] [CrossRef]

- Alwateer, M.; Almars, A.M.; Areed, K.N.; Elhosseini, M.A.; Haikal, A.Y.; Badawy, M. Ambient Healthcare Approach with Hybrid Whale Optimization Algorithm and Naïve Bayes Classifier. Sensors 2021, 21, 4579. [Google Scholar] [CrossRef] [PubMed]

- Li, Y.; Xie, S.; Wan, Z.; Lv, H.; Song, H.; Lv, Z. Graph-powered learning methods in the Internet of Things: A survey. Mach. Learn. Appl. 2023, 11, 100441. [Google Scholar] [CrossRef]

- Jiang, W. Graph-based deep learning for communication networks: A survey. Comput. Commun. 2021, 185, 40–54. [Google Scholar] [CrossRef]

- Mahmud, R.; Pallewatta, S.; Goudarzi, M.; Buyya, R. iFogSim2: An extended iFogSim simulator for mobility, clustering, and microservice management in edge and fog computing environments. J. Syst. Softw. 2022, 190, 111351. [Google Scholar] [CrossRef]

- Qayyum, T.; Trabelsi, Z.; Waqar Malik, A.; Hayawi, K. Mobility-aware hierarchical fog computing framework for Industrial Internet of Things (IIoT). J. Cloud Comput. 2022, 11, 72. [Google Scholar] [CrossRef]

- Li, C.; Zhang, K.; Li, Y.; Shang, J.; Zhang, X.; Qian, L. ANNA: Accelerating Neural Network Accelerator through software-hardware co-design for vertical applications in edge systems. Future Gener. Comput. Syst. 2023, 140, 91–103. [Google Scholar] [CrossRef]

- Qolomany, B.; Ahmad, K.; Al-Fuqaha, A.; Qadir, J. Particle Swarm Optimized Federated Learning for Industrial IoT and Smart City Services. In Proceedings of the GLOBECOM 2020—2020 IEEE Global Communications Conference, Taipei, Taiwan, 7–11 December 2020. [Google Scholar] [CrossRef]

- Cho, Y.J.; Wang, J.; Chirvolu, T.; Joshi, G. Communication-Efficient and Model-Heterogeneous Personalized Federated Learning via Clustered Knowledge Transfer. IEEE J. Sel. Top. Signal Process. 2023; Early Access. [Google Scholar] [CrossRef]

- Grover, J.; Garimella, R.M. Reliable and fault-tolerant IoT-edge architecture. In Proceedings of the 2018 IEEE SENSORS, New Delhi, India, 28–31 October 2018; pp. 1–4. [Google Scholar]

- Mertens, J.; Galluccio, L.; Morabito, G. Federated learning through model gossiping in wireless sensor networks. In Proceedings of the 2021 IEEE International Black Sea Conference on Communications and Networking (BlackSeaCom), Bucharest, Romania, 24–28 May 2021; pp. 1–6. [Google Scholar]

- Huang, Y.; Zhang, H.; Shao, X.; Li, X.; Ji, H. RoofSplit: An edge computing framework with heterogeneous nodes collaboration considering optimal CNN model splitting. Future Gener. Comput. Syst. 2023, 140, 79–90. [Google Scholar] [CrossRef]

- Babar, M.; Khan, M.S. ScalEdge: A framework for scalable edge computing in Internet of things–based smart systems. Int. J. Distrib. Sens. Netw. 2021, 17, 15501477211035332. [Google Scholar] [CrossRef]

- da Silva, T.P.; Neto, A.R.; Batista, T.V.; Delicato, F.C.; Pires, P.F.; Lopes, F. Online machine learning for auto-scaling in the edge computing. Pervasive Mob. Comput. 2022, 87, 101722. [Google Scholar] [CrossRef]

- Agrawal, N. Dynamic load balancing assisted optimized access control mechanism for edge-fog-cloud network in Internet of Things environment. Concurr. Comput. Pract. Exp. 2021, 33, e6440. [Google Scholar] [CrossRef]

- Adhikari, M.; Ambigavathi, M.; Menon, V.G.; Hammoudeh, M. Random Forest for Data Aggregation to Monitor and Predict COVID-19 Using Edge Networks. IEEE Internet Things Mag. 2021, 4, 40–44. [Google Scholar] [CrossRef]

- Domeke, A.; Cimoli, B.; Monroy, I.T. Integration of Network Slicing and Machine Learning into Edge Networks for Low-Latency Services in 5G and beyond Systems. Appl. Sci. 2022, 12, 6617. [Google Scholar] [CrossRef]

| Year | Reference | AI Category | Big Data Analytics | Resource Management | Key Enabling Technologies | Application Domains |
|---|---|---|---|---|---|---|
| 2021 | [14] | No | No | Yes | No | Yes |
| 2022 | [13] | Yes | No | No | No | IoV |
| 2021 | [3] | Yes | No | Yes | Yes | Yes |
| 2020 | [15] | Yes | No | Yes | Yes | No |
| 2020 | [16] | Yes | Yes | Yes | Yes | No |
| 2019 | [17] | Yes | Yes | Yes | No | Yes |
| 2020 | [19] | Yes | No | Yes | No | No |
| 2022 | [18] | Yes | No | Yes | No | Yes |
| 2023 | Our paper | Yes | Yes | Yes | Yes | Yes |

| Application area (AI-edge based applications) | Use case | References |
|---|---|---|
| Smart environment | AQM | [20,21,22,23,24] |
| Smart environment | WQM | [25,26,27] |
| Smart environment | SWM | [28] |
| Smart environment | UM | [29,30,31,32] |
| Smart grid | LDF | [33,34,35,36,37,38,39,40] |
| Smart grid | DSM | [41,42,43] |
| Smart grid | LAD | [44,45,46,47,48,49] |
| Smart agriculture | WP | [50,51] |
| Smart agriculture | LM | [52,53] |
| Smart agriculture | SI | [54,55] |
| Smart agriculture | CMDD | [56,57,58] |
| Smart agriculture | MHSAM | [59,60] |
| Smart education | SEM | [61,62,63,64,65] |
| Smart education | SA | [66,67,68] |
| Smart industry | FI | [69,70] |
| Smart industry | CI | [71] |
| Smart industry | MMM | [72,73,74,75,76] |
| Smart industry | PQMP | [77,78,79] |
| Smart healthcare | DHM | [80,81] |
| Smart healthcare | AAL | [82,83,84,85,86] |
| Smart healthcare | HAR | [87,88,89,90,91,92] |
| Smart healthcare | LDP | [93,94,95,96,97,98,99,100] |
| Smart healthcare | DD | [101,102,103,104,105,106,107,108,109,110] |
| Smart transport | SPM | [111,112,113] |
| Smart transport | TMP | [114,115,116,117,118] |
| Smart transport | ITM | [119,120,121] |
| Security and privacy | PP | [122,123,124] |
| Security and privacy | AA | [8,125] |
| Security and privacy | ID | [126,127,128,129,130,131,132,133] |

| Application Area | Use Case | Ref | Contribution | AI Role (at the Edge) | AI Algorithm | Dataset | AI Placement | Employed Technology | Platform | Metrics | Benefits of AI-Edge | Drawbacks |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Smart environment | AQM | [20] | Prediction of future indoor PM10 and PM2.5 status | Prediction | LSTM | Data from Seoul, Korea | Edge device, cloud | Federated learning | TensorFlow, Keras | RMSE | Reduced load, high accuracy | Does not consider all factors in prediction |
| Smart environment | AQM | [21] | Green energy-based wireless sensing network for air-quality monitoring | Prediction | LSTM | Airbox system dataset | Edge device, cloud | Federated learning | Not mentioned | MAE loss, RMSE, energy threshold, saving ratio, error rate | Communication efficiency, data privacy preservation, low computational complexity | Slightly lower accuracy |
| Smart environment | AQM | [22] | Location-aware environment sensing | Prediction | k-means, LSTM, CNN (ResNet) | WA dataset, outdoor image datasets | Edge device, cloud | Distributed computing cluster | Federated learning | Accuracy, avg. sum of squared errors, silhouette coefficient | High accuracy | Only homogeneous nodes considered |
| Smart environment | AQM | [23] | Distributed data analysis for air-quality prediction | Preprocessing | K-means, SVM, MLP, DT, KNN, NB | U.S. Pollution Data (Kaggle) | Edge devices, cloud | Distributed computing | iFogSim toolkit, YAFS | Accuracy, precision, recall, F1-score | Data reduction, response time reduction | Does not consider node mobility |
| Smart environment | AQM | [24] | On-device air-quality prediction | Prediction | CNN, LSTM | Dataset from the University of California, Irvine (UCI) Machine Learning Repository | Edge devices (RPi 3B+, RPi 4B) | Post-training quantization, hardware accelerator | TensorFlow Lite | RMSE, MAE, execution time | Low-complexity model, low latency | Accuracy degradation |
| Smart environment | WQM | [25] | Onboard sensor classifier for the detection of contaminants in water | Classification | EA, PCA | Real-world dataset | Edge device (sensors) | Low-cost model | Not mentioned | Accuracy, F-score, TP, TN, FP, FN | High accuracy | Low accuracy for unlabeled data |
| Smart environment | WQM | [27] | Online water-quality monitoring | Prediction | BPNN | Real-world dataset | Edge gateway | Low-cost model | Not mentioned | Data transmission, response time | Low-complexity model, accuracy, data transmission reduction | Accuracy needs to be improved |
| Smart environment | WQM | [26] | Real-time water-quality monitoring | Preprocessing, prediction | PCA, LR, MLP, SVM, SMO, Lazy-IBK, KStar, RF, RT | Data from the institute's sewage water-treatment plant; data collected from the river Ganga | Edge device (Raspberry Pi) | Transfer learning | Python, Weka | Correlation coefficient, MAE, RMSE, RAE, RRSE, edge response time | Less response time | Communication cost not considered |
| Smart environment | SWM | [28] | Smart water saving and distribution | Prediction, decision-making | FFN, MDN | Real-world dataset | Edge server | Soft computing, blockchain | Python | MSE, accuracy | Effective decision-making | Accuracy needs to be enhanced |
| Smart environment | UM | [29] | Reduce data volume and improve data quality underwater | Data (fusion, reduction) | BPNN, evidence theory | Western Pacific measurement information | Fog gateway, cloud | Edge preprocessing | Not mentioned | Time consumption, redundant data volume, R, MAE, MSE, SMAPE | Low communication cost, high accuracy | High delay |
| Smart environment | UM | [30] | Real anomaly detection errors in underwater vehicles | Network management, data reduction, classification, decision-making | YOLO (CNN), RL | Real-world dataset | Edge device (Raspberry Pi), fog gateway | Hardware accelerator, pretrained CNN | Not mentioned | Accuracy, latency, recall | High accuracy, less latency | Accuracy degraded |
| Smart environment | UM | [31] | Low-delay seawater quality prediction | Data reduction, prediction | PCA, RVM | Real-world dataset | Mobile edge computing | Low-cost model | Not mentioned | CD, MAE, RMSE | Higher prediction accuracy, low time consumption | High-cost model |
| Smart environment | UM | [32] | Downlink throughput performance enhancement | Resource allocation, classification | DRL, DNN | Real-world dataset | Edge device (IoUT devices) | Federated learning | Not mentioned | Downlink throughput, channel usage, convergence rate | Low complexity | – |

| Application Area | Use Case | Ref | Contribution | AI Role (at the Edge) | AI Algorithm | Dataset | AI Placement | Employed Technology | Platform | Metrics | Benefits of AI-Edge | Drawbacks |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Smart agriculture | WP | [50] | Timely prediction of frost in crops | Prediction | LSTM | Real-world dataset | Edge device (Nvidia Jetson) | Hardware accelerator | TensorFlow 1.10.1, Keras 2.2.4 | Power consumption, execution time, RMSE, MAE, memory usage, PCC, R2 | Less execution time | Limited scalability; model complexity causes overfitting and slightly increased error |
| Smart agriculture | WP | [51] | Drought prediction | Feature extraction | ANN, PCA, GA | Drought attribute dataset | Fog gateway, cloud | Edge preprocessing | MATLAB, Amazon EC2 | Accuracy, sensitivity, specificity, precision, F-measure | Reduced cloud load, high accuracy | High communication cost |
| Smart agriculture | LM | [52] | Livestock surveillance | Feature extraction | CNN | Google ImageNet, Pixabay | Edge device (Nvidia Tegra), cloud | DNN splitting | Caffe | Accuracy, reduction rate | Load reduction, high accuracy | High communication cost |
| Smart agriculture | LM | [53] | Early lameness detection in dairy cattle | Feature extraction | K-means, KNN | Real-world dataset | Fog gateway (PC), cloud | Edge preprocessing | Python | Reduction rate, accuracy | High accuracy | High communication cost |
| Smart agriculture | SI | [54] | Prediction models of soil moisture | Missing-data imputation, prediction | GDR, LSTM, BiLSTM | Coconut, Cashew datasets | Single-board computer (Raspberry Pi 4 Model B) | Hardware accelerator | TensorFlow | CPU and RAM usage, MAE | Data quality improvement, high accuracy | Accuracy must be improved |
| Smart agriculture | SI | [55] | Intelligent irrigation system | Prediction | LSTM, GRU | Historical Hourly Weather Data 2012–2017 | Edge devices | Hardware accelerator/software | PyTorch, TensorFlow, TensorFlow Lite | RMSE, MSE, MAE | Reliability | Computational overhead |
| Smart agriculture | CMDD | [56] | Timely diagnosis of crop disease | Prediction | CNN | Real-world dataset | Edge device (STM32F746G-disco board) | Quantization | TensorFlow Lite | Accuracy, memory usage, inference time, energy consumption | High accuracy, low memory usage | Accuracy may degrade |
| Smart agriculture | CMDD | [57] | Timely recognition of crop diseases | Classification | CNN | Real-world dataset | Mobile edge device | Transfer learning | Python | Accuracy | High accuracy, less recognition time | High computational cost |
| Smart agriculture | CMDD | [58] | Intelligent sensing in the entire crop life cycle | Preprocessing, network management | Fuzzy Gath–Geva clustering, Takagi–Sugeno fuzzy neural network, KNN, BPNN | Real-world dataset | Edge server | – | Not mentioned | AFE, CC, accuracy, sensing time, communication rate | Fewer data collection rounds, less energy consumption, improved sensed-data quality, high accuracy | – |
| Smart agriculture | MHSM | [59] | Timely vehicle health monitoring | Prediction | ANN, GA | Not mentioned | Smartphone | Lightweight model | MATLAB 2019b | Accuracy, ROC curve, misclassification rate, MSE | High accuracy | Complexity reduction still recommended |
| Smart agriculture | MHSM | [60] | Vehicle health recognition | Classification | DCNN, Lévy flight | Real-world dataset | Smartphone | Lightweight DL | Not mentioned | Accuracy, ROC, precision, recall, F1-score | Low complexity | High training time |

| Application Area | Use Case | Ref | Contribution | AI Role (at the Edge) | AI Algorithm | Dataset | AI Placement | Employed Technology | Platform | Metrics | Benefits of AI-Edge | Drawbacks |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Smart education | Student engagement monitoring | [61] | Attention detection of participants | Prediction | CNN | DAiSEE | Edge (PC) | Pretrained model | Python | Accuracy | – | Accuracy needs to improve |
| Smart education | Student engagement monitoring | [62] | Improve long-distance education | Classification | ResNet-50 | FER2013 emotion dataset | Mobile edge computing | Hardware accelerator | – | Confusion matrix, accuracy | High accuracy | Accuracy needs to improve |
| Smart education | Student engagement monitoring | [63] | Real-time intervention in negative emotional contagion in a smart classroom | Classification | CNN | FER2013 emotion dataset | Edge preprocessing | Hardware accelerator | JavaScript, TensorFlow, OpenCV | Accuracy | Less response time | Accuracy needs to improve |
| Smart education | Student engagement monitoring | [64] | Multimodal engagement analysis | Prediction | DL | Real-world data | Edge server (PC) | – | JIFF (JavaScript library), TensorFlow | Average performance impact on edge device/server | Scalability | Computational overhead |
| Smart education | Student engagement monitoring | [65] | Student stress monitoring and real-time alert generation | Prediction | VGG16, BiLSTM, NB | Real-world data, Kaggle dataset | Fog, cloud | Cloud training | Not mentioned | Specificity, sensitivity, accuracy, F-measure | High accuracy | Eliminates historical records |
| Smart education | Skill assessment | [66] | Monitors students' academic performance and skills for timely employability classification at graduation | Resource management | K-means, PCA, KNN | Real-world dataset | Fog nodes | – | iFogSim toolkit | Mean absolute percentage error (MAPE) | Scalability | Processing overhead |
| Smart education | Skill assessment | [67] | Education quality evaluation | | ANFIS, Bayesian belief network (BBN) | Environmental datasets, staff-related dataset, physical dataset, students' academic-related historical dataset | Raspberry Pi v3 | – | Weka | Precision, specificity, sensitivity, BBM, accuracy, RMSE, MAS | Stability, reliability | Accuracy needs to be improved |
| Smart education | Skill assessment | [68] | Ideology and politics education evaluation in 5G | Resource management, data caching | PSO | Not mentioned | Edge devices | – | – | Energy consumption, latency | Scalability, low energy consumption, low latency | – |

| Use Case | Ref | Contribution | AI Role (At the Edge) | AI Algorithm | Dataset | AI Placement | Employed Technology | Platform | Metrics | Benefits AI-Edge | Drawbacks | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Smart Industry | FI | [69] | Financial data analysis | Prediction | SVM | (Credit card fraud, credit card risk, Customer Churn, Insurance Claim) dataset | Edge devices, cloud | Low-cost model task offloading | Simulator (Not mentioned ) | Task assignment over delay power consumption precision recall F1-score | High accuracy | Communication overhead |

| [70] | Early-warning of financial risks | Prediction | BPNN | Real-world dataset | MEC | Quantization HARDWARE-CPU | Matlab | Accuracy, hit rate | Less response time | Accuracy needs improvement | ||

| C.I | [71] | Locality-based product demand prediction and decision making | Feature selection, classification, decision-making | RL, PCA, K-means | Kaggle open data | Edge device (GPU NVIDIA-SMI) | Low-cost model | Scikit-learn Python | Clustering score maximum/average cumulative reward execution time | Outperform others existing methods | Stability not tested | |

| MMM | [72] | Machine malfunction monitoring | RF SVM Adab LR MlP | (MIMII dataset | Fog (controller unit (ICU)/Microdata center) | Hardware accelerator | Lightweight model | Not mentioned | Time complexity, accuracy, precision, FScore | Response time reduction | – | |

| [73] | Abnormal events detection during assembly line production | Outlier detection prediction | RF, DBSCAN | Real-world dataset | Edge devices (Raspberry Pi) | Low-cost model | MongoDB Python | Accuracy recall F1-score precision | High accuracy | Dynamic of IoT data not addressed | ||

| [74] | Fault detection in a hydraulic system | Data reduction, classification | LSTM, AE, GA | Real-world dataset | Edge server | Transfer learning | TensorFlow | Complexity, DL accuracy, detection time, data reduction | Reduction of load to cloud, low detection time, robust to noisy data | Communication overhead | ||

| Smart Industry | MM | [75] | Machine fault detection | Classification | LSTM | Real-world dataset | Edge device (Raspberry Pi) | Lightweight model | Keras, Python | Accuracy | Low-cost model, short fault detection | Memory usage overhead |

| [76] | Fast manufacture inspection | Feature extraction classification | CNN | Real-world dataset | Fog gateway | Early exit-DNN splitting | Not mentioned | ROC curve running efficiency | High accuracy | High communication cost | ||

| PQMP | [77] | Fast prediction of assembly quality | Feature selection, prediction | RF, AdaBoost | Real-world dataset | Edge server (PC) | Transfer learning | Python | Accuracy | Efficacy, flexibility, complexity reduction | Online learning not improved | |

| [78] | Fast tool wear monitoring and prediction | Feature extraction, classification | CNN, LSTM, BiLSTM | Real-world dataset | Edge server (PC) | Transfer learning | Python, TensorFlow | Response time, network bandwidth, data transmission, RMSE, MAPE | High monitoring accuracy, low-cost model, low response latency | Accuracy loss | ||

| [79] | Scheduling tasks production for smart production line | Task scheduling, resource allocation | PSO, ACO | Not mentioned | Fog gateway | - | Matlab | Completion time, energy consumption, reliability | Solves the problem of limited computing resources, high energy consumption, real-time/efficient processing | Does not consider heterogeneity of IoT devices. |

| Use Case | Ref | Contribution | AI Role (At the Edge) | AI Algorithm | Dataset | AI Placement | Employed Technology | Platform | Metrics | Benefits AI-Edge | Drawbacks | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Smart healthcare | DHM | [80] | Food recognition | Classification, storage | DRCNN | Food-101 images | Smartphone | Quantization, GPU accelerator | TensorFlow Lite | Accuracy loss values, computational power | Low response time | Loss of accuracy over time |

| [81] | Food recognition | Classification, preprocessing | GoogLeNet | UEC-256, UEC-100, Food-101 | Smartphone | Pretrained CNN | Caffe | Response time, accuracy, computational power | Low response time | Loss of accuracy over time | ||

| AAL | [82] | Accurate and timely fall detection | Classification | LSTM/GRU | SisFall dataset | IoT, gateway (fog) | Virtualization | Docker, HDFS, Apache Kafka, MongoDB, TensorFlow | Accuracy, sensitivity, precision, inference | Scalability, flexibility | Memory consumption needs to be optimized, mobility not considered | |

| [83] | Online/offline monitoring of elderly patients suffering from chronic disease | Prediction | NB-FA | Vital signs, behavioral data, environmental data | Cloud, edge | Transfer learning | Weka, classifier, Spark job | Accuracy, sensitivity, precision, inference time | Accurate, fault-tolerant, fast decisions | High computational cost | ||

| [84] | Real-time fall detection | Preprocessing, prediction | LDA, KNN, SVM | SisFall dataset | Raspberry Pi 3 B+ | Real-time test | Low-cost model | Response time | High accuracy, low response time | Accuracy and generalization still need improvement | ||

| [85] | Multimodal fall detection | Prediction | PCA, linear regression, MLP | SisFall dataset | Mist, fog, cloud, edge | Not mentioned | Low-cost model | CC, MAE, RMSE, RAE, RRSE, response time | High accuracy, less inference time | Generalization needs to be solved | ||

| [86] | Real-time in-home health monitoring | Prediction | GCAE | MobiAct dataset | Cloud, edge | Federated learning | Not mentioned | Accuracy, communication rounds, scalability | Heterogeneity of data and communication cost solved | Data privacy issues | ||

| Smart healthcare | HAR | [87] | Real-time abnormal human activities | Prediction | PCA-CNN | UniMiB dataset | Edge device | Transfer learning | Python 3.6 | Process time | Low energy consumption, less computational cost | Lack of security |

| [88] | Real-time human activity recognition | Prediction | DRNN | WISDM dataset | Raspberry Pi 3 (edge devices) | Virtualization | TensorFlow | Accuracy, F1-score, recognition time | Less recognition time, high accuracy | High computational cost | ||

| [89] | Energy-efficient human activity recognition | Training, prediction | CNN | Opportunity dataset, w-HAR dataset | Edge devices | Transfer learning | Not mentioned | Accuracy, precision, recall, weighted F1-score | Less memory overhead, high accuracy | Stability not tested | ||

| [90] | Human activity recognition | Classification | SVM | KTH dataset, Hollywood2 action dataset | Edge/cloud | Transfer learning, blockchain | TensorFlow | Accuracy | High accuracy, multiclass classification | Less scalability | ||

| [91] | Multiaccess physical monitoring system | Classification | BDN | Real-world dataset | Wearable IoT | Transfer learning | Not mentioned | Accuracy, data transmission time, RMSE | Less energy consumption, high accuracy | Lack of data privacy, less scalability | ||

| [92] | Physical instance-based irregularity recognition | Classification | CNN, LSTM | NTU RGB dataset | Fog nodes | Transfer learning | Python (Pillow, OpenCV, NumPy libraries) | Rate of latency analysis | High accuracy, less latency | Environmental changes and model generalization not considered | ||

| Smart healthcare | LDP | [93] | Monitoring and predicting COVID-19 outspread | Prediction, visualisation | FCM, T-RNN, SOM | - | Fog nodes | Preprocessing | MATLAB, iFogSim | Latency time, response delay, accuracy, precision | Reliability, high accuracy | Lack of security |

| [94] | Location-aware monitoring and preventing encephalitis | Prediction, visualisation | FCM, T-RNN, SOM | UCI-repository data | Cloud, edge | Preprocessing | MATLAB | Latency time, response delay, accuracy, precision | Reliability, high accuracy, location aware, data management | Lack of security | ||

| [95] | Early detection of Kyasanur forest disease and control of the disease outbreak | Classification | ANN | KFD dataset | Fog/cloud | Lightweight model | Not mentioned | Accuracy, sensitivity, specificity, RMSE, MAE | High accuracy | High computational cost | ||

| [96] | Continuous monitoring and early detection of mosquito-borne disease | Classification | FNN, SNA graph | UCI-repository data | Fog node | Lightweight model | Not mentioned | Accuracy, sensitivity, specificity | High accuracy | Data integrity and security not considered | ||

| [97] | Automatic diagnosis of COVID-19 | Classification | K-means-VGG16 | X-ray, ultrasound datasets | Edge devices | Pretrained model | TensorFlow | RMSE, MAE | Cope with data heterogeneity | Less accuracy, lack of security | ||

| [99] | Remote COVID-19 diagnosis | Classification | RF, GAN, GNB | Generated dataset | Fog nodes | Open-source language R, iFogSim | Accuracy, response time, recall | High accuracy | High energy consumption, lack of security | |||

| Smart healthcare | LDP | [99] | Remote COVID-19 diagnosis | Classification | MobileNetV2 | Chest CT scan image dataset | Edge devices | Transfer learning | TensorFlow | Sensitivity, specificity, precision, F1-score | High accuracy, less response | Not tested for large datasets, accuracy needs to be improved |

| [100] | Low-delay prediction of health status of COVID-19 patients | Preprocessing, prediction | eRF | COVID-19 dataset | Edge devices | Lightweight model | TensorFlow | Training time, accuracy, precision, recall, MAE, RMSE | High accuracy | High computational cost | ||

| DD | [101] | Early lung cancer diagnosis | Preprocessing, feature selection, classification | FCM, CS, SVM | ELCAP dataset | Fog nodes | Lightweight model | MATLAB 2013a | Accuracy, sensitivity, specificity, MCC, F-measure, ROC curves, computational cost | Less training time, high accuracy | High cost of model for fog implementation | |

| [102] | Intelligent monitoring of cardiomyopathy patients | Intelligent sensing | FHHO, FL | Real-world dataset | Fog nodes | – | Not mentioned | Execution time, accuracy, precision, recall, F-measure | High accuracy, low time cost | Lack of security, high energy consumption | ||

| [103] | Real-time monitoring patients with chronic diseases | Classification | NB-WOA | Clinical dataset, Physio Bank-MIMIC II database | Fog nodes, cloud | Transfer learning | Weka, Spark | Accuracy, recall, precision | Higher accuracy, high response time | High complexity of model, lack of security | ||

| [104] | Early heart disease prediction | Data fusion, prediction | CFS, KRF | UCI repository data | Fog nodes | Lightweight model | – | Accuracy, training time, scalability | Scalability, accuracy | Quality of the data depends on the number of sensors, improved accuracy is required | ||

| Smart healthcare | DD | [105] | Early detection of Parkinson’s Disease | Prediction | ANFIS GWO PSO | UCI University of California | Fog nodes | Distributed computing | TensorFlow | RMSE, MAE | High accuracy | Lack of security |

| [106] | Diabetic cardio disease prediction | Prediction | Rule-based clustering, CRA, ANFIS | Heart disease and diabetes datasets | Edge devices | Blockchain | Java | Purity, NMI, accuracy, execution time | Efficient grouping of medical data, high accuracy, secure data sharing, good training with uncertainty | Low accuracy | ||

| [107] | Remote cardiac patient monitoring | Classification | 1D-CNN | MIT-BIH Arrhythmia | Fog nodes (single-board computer), cloud | Transfer learning | Not mentioned | RMSE MAE CPU usage accuracy loss recall precision F1-score | High accuracy, low computational overhead, low resource usage, low response time | Scalability not considered | ||

| [108] | Timely disease diagnosis of health conditions | Data preprocessing, classification | AE, HMWWO | UCI-repository data | Edge devices | Lightweight model | Not mentioned | Latency, F-measure, time complexity, sensitivity | High sensitivity, improved accuracy, minimum time complexity and latency, scalability | Small dataset used for evaluation, lack of data protection | ||

| [109] | Real-time physiological parameter detection | Preprocessing, prediction, load balancing | RK-PCA, HMM, MoSHO, SpikQ-Net | UCI repository data | Edge devices, fog nodes | Lightweight model | iFogSim | Execution time, accuracy, latency | Stability, scalability, low execution time, low latency, low complexity | Lack of security | ||

| [110] | Real-time blood glucose | Prediction | GRU | OhioT1DM, ABC4D, and ARISES datasets | Edge device (smartphone) | Hardware accelerator | TensorFlow Lite | RMSE, MSE | Low energy consumption, good training with uncertainty | Less sensitivity |

| Use Case | Ref | Contribution | AI Role (At the Edge) | AI Algorithm | Dataset | AI Placement | Employed Technology | Platform | Metrics | Benefits AI-Edge | Drawbacks | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Smart transportation | SPM | [111] | Real-time prediction of bike charging at each station; reduce load to cloud | Prediction | RT, SOM | Kaggle competition, London shared bike data | MEC | Lightweight model (ML) | Not mentioned | RMSE, RMSLE | High accuracy, generalization | Multivariate data not supported, security |

| [112] | Real-time parking occupancy surveillance; reduce load to cloud | Classification | MobileNet SSD, BG, SORT | MIO-TCD | Edge device (Raspberry Pi 3B) | Transfer learning | TensorFlow Lite | Accuracy | Flexibility, reliability, online and high accuracy | Accuracy needs to be enhanced (=95), security | ||

| [113] | Privacy-preserving parking space estimation | Prediction, decision making | LSTM, DRL, game theory | Birmingham parking dataset | Fog nodes | Federated learning | Not mentioned | MSE | Computation offloading in nonstatic environment, improved security, flexibility, high accuracy | Less convergence speed | ||

| T.M.P | [115] | Timely citywide traffic prediction, context data management | Data aggregation | CNN, LSTM | Beijing taxicabs data, NYC bike data | Fog nodes | Transfer learning | iFogSim | Complexity, training time, prediction time, accuracy | Reduce network congestion, increase energy efficiency, less training/prediction times | Cloud inference, non-real-time prediction | |

| [114] | Forecast the overall traffic, adjust the redirected flow | Prediction | DBN-SVR | Caltrans PeMS | Fog nodes | / | MATLAB | Scalability, processing time, accuracy | Scalability, security | Accuracy needs to be enhanced | ||

| [116] | Privacy-preserving traffic flow prediction | Prediction | GRU, k-means | PeMS database | Edge nodes | Federated learning | Not mentioned | MAE, MSE, RMSE, MAPE | Low communication overhead, statistical heterogeneity solved, high accuracy | Spatiotemporal correlation not solved | ||

| [117] | Timely traffic flow prediction | Prediction | SVM, PSO | Guiyang City dataset | Fog nodes | Lightweight ML | Matlab 2014a | MSE | Low time overhead, faster processing, adaptability, good prediction | High model complexity | ||

| [118] | Spatial traffic flow prediction | Prediction | GCNs | TaxiBJ, TaxiNYC datasets | Edge nodes | Federated learning | Not mentioned | RMSE, MSE, MAPE | High accuracy | Less scalability | ||

| ITM | [88] | Driver distraction identification | Prediction | VGG1-CNN-k-means | Kaggle’s State Farm distracted driver challenge | Edge device (Raspberry Pi) | Transfer learning | Keras | Accuracy, precision, recall, F1-score | High accuracy | Security, less scalability | |

| [119] | Driving behavior evaluation | Prediction | CNN-LSTM | ToN UCI knowledge discovery, archive database | Fog nodes | Transfer learning | TensorFlow | Accuracy-loss curves | High accuracy, generalization | Less scalability, security | ||