SD-HRNet: Slimming and Distilling High-Resolution Network for Efficient Face Alignment

Abstract

1. Introduction

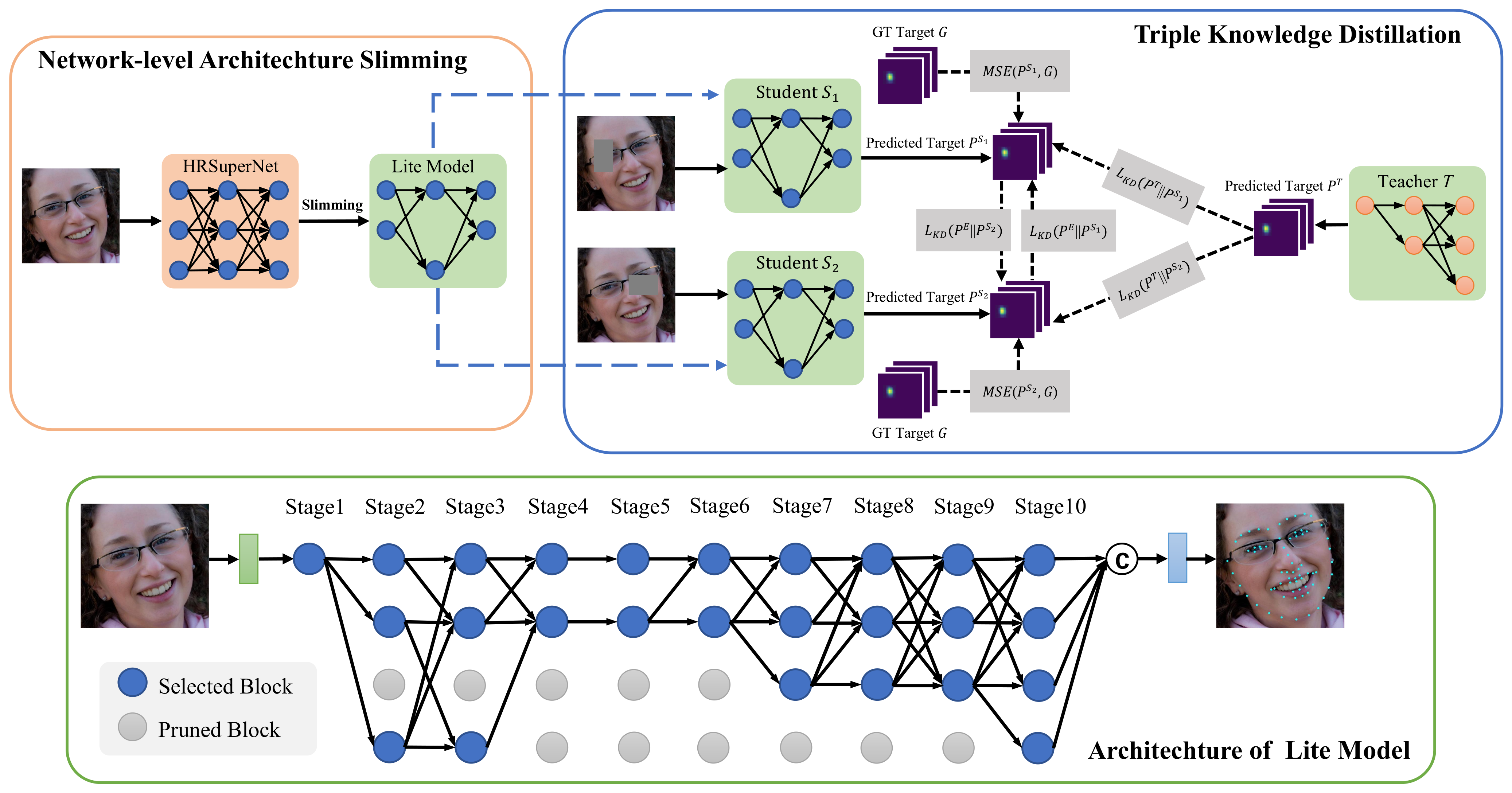

- We propose a flexible network-level architecture slimming method that can quantify and reduce the redundancy of the network structure to obtain a lightweight facial landmark detector adapted to different computing resources.

- We design a triple knowledge distillation scheme in which a slimmed network can be improved, without additional complexity, by jointly learning the implicit landmark distribution from a teacher network and two peer student networks.

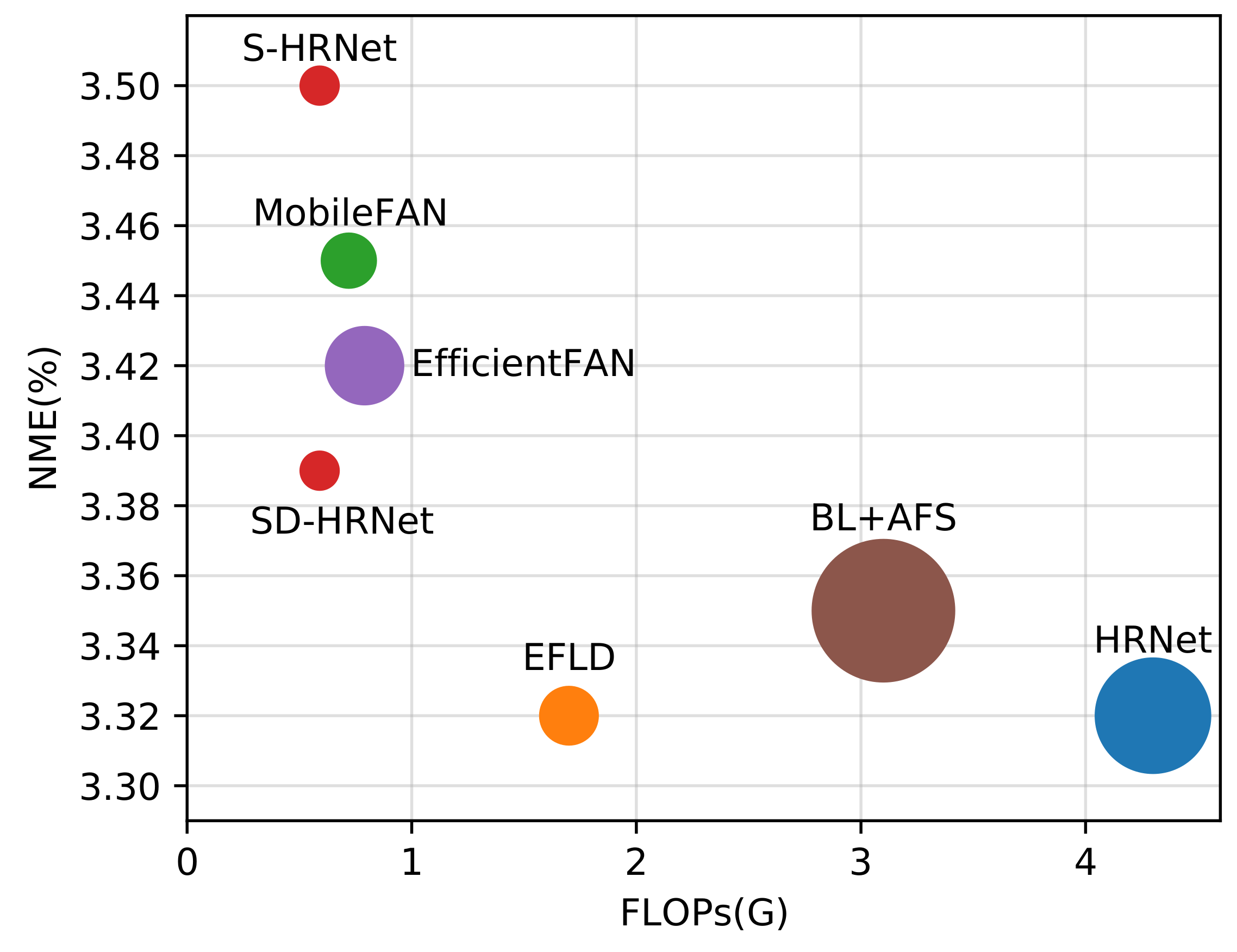

- Extensive experimental results on challenging benchmarks demonstrate that our approach achieves a better trade-off between accuracy and efficiency than recent state-of-the-art methods (see Figure 1).
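The triple distillation idea in the second contribution can be illustrated with a minimal NumPy sketch. It assumes that each landmark heatmap is turned into a spatial distribution with a softmax, that the student is pulled toward the teacher and toward each of its two peers with a Kullback–Leibler (KL) term, and that the supervised term is a plain mean-squared error. The function names, the temperature, and the weights `alpha` and `beta` are hypothetical; this is not the authors' implementation.

```python
import numpy as np

def spatial_softmax(heatmaps, temperature=1.0):
    """Turn (K, H, W) heatmaps into K probability distributions over H*W pixels."""
    k = heatmaps.shape[0]
    logits = heatmaps.reshape(k, -1) / temperature
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    exp = np.exp(logits)
    return exp / exp.sum(axis=1, keepdims=True)

def kl_divergence(p, q, eps=1e-12):
    """Mean over landmarks of KL(p || q); p and q have shape (K, H*W)."""
    return float(np.mean(np.sum(p * (np.log(p + eps) - np.log(q + eps)), axis=1)))

def triple_kd_loss(student, teacher, peer_a, peer_b, target,
                   alpha=1.0, beta=1.0, temperature=1.0):
    """Hypothetical combined loss for one student network (weights are assumptions)."""
    # Supervised term: MSE to the ground-truth heatmaps (assumed loss choice).
    gt_loss = float(np.mean((student - target) ** 2))
    p_student = spatial_softmax(student, temperature)
    # Teacher term: match the teacher's per-landmark spatial distribution.
    kd_teacher = kl_divergence(spatial_softmax(teacher, temperature), p_student)
    # Peer term: match each of the two peer students, averaged.
    kd_peers = 0.5 * (kl_divergence(spatial_softmax(peer_a, temperature), p_student)
                      + kl_divergence(spatial_softmax(peer_b, temperature), p_student))
    return gt_loss + alpha * kd_teacher + beta * kd_peers

# Usage with random stand-in heatmaps for 68 landmarks on a 64x64 grid.
rng = np.random.default_rng(0)
maps = [rng.standard_normal((68, 64, 64)) for _ in range(5)]
print(triple_kd_loss(*maps))
```

Setting `beta=0` reduces the sketch to a conventional teacher–student loss; the peer term resembles deep mutual learning [41], where students of equal capacity supervise each other.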

2. Related Work

2.1. Conventional Face Alignment

2.2. Large CNN-Based Face Alignment

2.3. Lightweight CNN-Based Face Alignment

3. Methods

3.1. Network-Level Architecture Slimming

3.2. Triple Knowledge Distillation

4. Experiments

4.1. Datasets

4.2. Evaluation Metrics

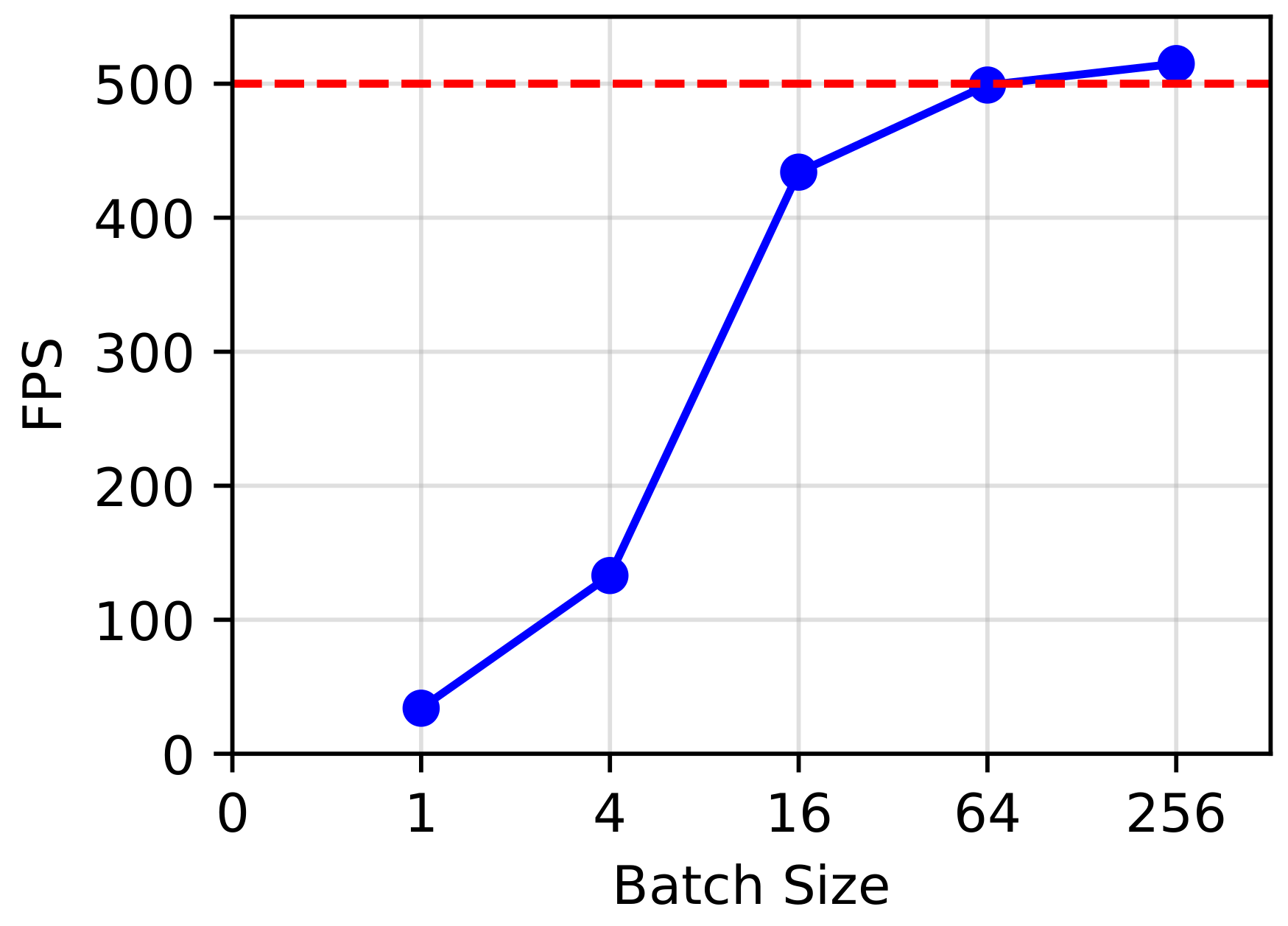
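The NME (%) and failure-rate (%) columns in the result tables can be computed along the lines of the following sketch. The normalizing landmark pair is left to the caller because it depends on the benchmark protocol. Using the ground-truth outer-eye-corner distance (inter-ocular; indices 36 and 45 in the common 68-point 300W scheme) and a 10% failure threshold are assumptions here, not settings stated in this section.

```python
import numpy as np

def nme(pred, gt, left_idx, right_idx):
    """Per-image normalized mean error.

    pred, gt: arrays of shape (N, K, 2) with N images and K landmarks.
    The mean point-to-point error is divided by the ground-truth distance
    between landmarks left_idx and right_idx (e.g. the outer eye corners).
    """
    point_errors = np.linalg.norm(pred - gt, axis=2)                # (N, K)
    norm = np.linalg.norm(gt[:, left_idx] - gt[:, right_idx], axis=1)  # (N,)
    return point_errors.mean(axis=1) / norm                         # (N,)

def failure_rate(per_image_nme, threshold=0.10):
    """Fraction of images whose NME exceeds the (assumed) threshold."""
    return float(np.mean(per_image_nme > threshold))

# Usage: the tables report both quantities in percent.
# per_img = nme(pred, gt, 36, 45)
# print(100 * per_img.mean(), 100 * failure_rate(per_img))
```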

4.3. Implementation Details

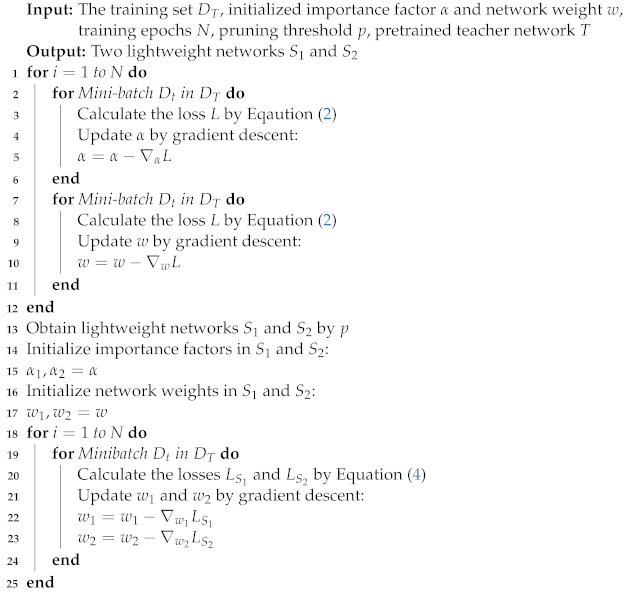

Algorithm 1: SD-HRNet Algorithm

4.4. Comparison with State-of-the-Art Methods

4.4.1. Results on 300W

4.4.2. Results on COFW

4.4.3. Results on WFLW

4.5. Ablation Study

4.5.1. HRNet vs. HRSuperNet

4.5.2. KD Components

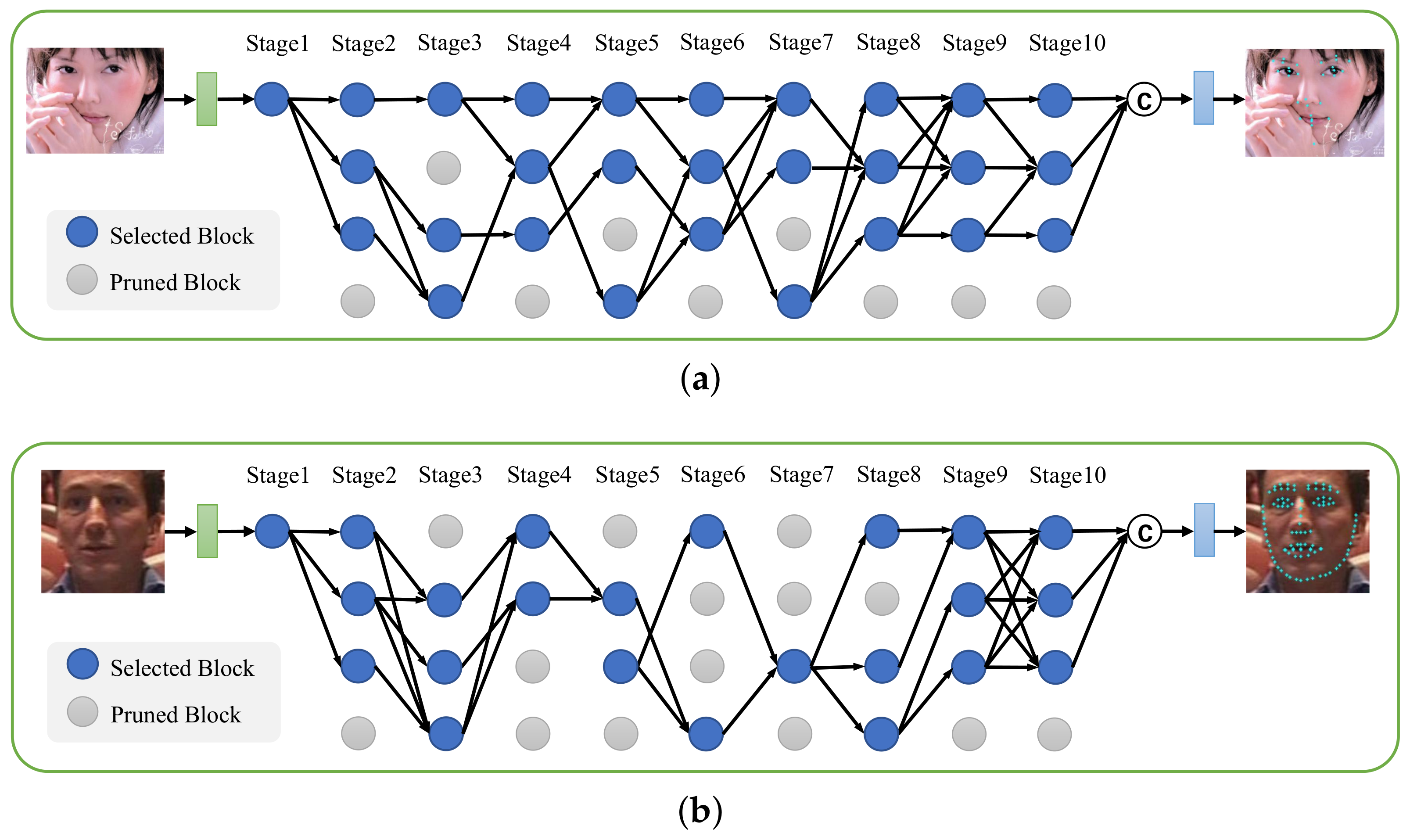

4.6. Visualization of the Architectures

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional neural network |
| HRSuperNet | High-resolution super network |
| S-HRNet | Slimmed network from HRSuperNet |
| SD-HRNet | Refined S-HRNet using triple knowledge distillation |
| LSFF | Lightweight selective feature fusion |
| TA | Transformation and aggregation |
| MBConvs | Mobile inverted bottleneck convolutions |
| KD | Knowledge distillation |
| KL | Kullback–Leibler |
| 2D | Two-dimensional |
| FLOPs | Number of floating-point operations |
| NME | Normalized mean error |
| #Params | Number of parameters |
| FPS | Frames per second |
| SIFT | Scale-invariant feature transform |
| M | Mega |
| G | Giga |

References

1. Taigman, Y.; Yang, M.; Ranzato, M.; Wolf, L. DeepFace: Closing the gap to human-level performance in face verification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 1701–1708.

2. Pantic, M.; Rothkrantz, L.J.M. Automatic analysis of facial expressions: The state of the art. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1424–1445.

3. Jabbar, R.; Shinoy, M.; Kharbeche, M.; Al-Khalifa, K.; Krichen, M.; Barkaoui, K. Driver drowsiness detection model using convolutional neural networks techniques for Android application. In Proceedings of the 2020 IEEE International Conference on Informatics, IoT and Enabling Technologies (ICIoT), Doha, Qatar, 2–5 February 2020; pp. 237–242.

4. Taskiran, M.; Kahraman, N.; Erdem, C.E. Face recognition: Past, present and future (a review). Digital Signal Process. 2020, 106, 102809.

5. Adjabi, I.; Ouahabi, A.; Benzaoui, A.; Taleb-Ahmed, A. Past, present, and future of face recognition: A review. Electronics 2020, 9, 1188.

6. Xiong, X.; De la Torre, F. Supervised descent method and its applications to face alignment. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Portland, OR, USA, 23–28 June 2013; pp. 532–539.

7. Cao, X.; Wei, Y.; Wen, F.; Sun, J. Face alignment by explicit shape regression. Int. J. Comput. Vision 2014, 107, 177–190.

8. Ren, S.; Cao, X.; Wei, Y.; Sun, J. Face alignment at 3000 fps via regressing local binary features. In Proceedings of the 27th IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 24–27 June 2014; pp. 1685–1692.

9. Zhu, S.; Li, C.; Loy, C.; Tang, X. Face alignment by coarse-to-fine shape searching. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4998–5006.

10. Wu, W.; Yang, S. Leveraging intra and inter-dataset variations for robust face alignment. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2096–2105.

11. Lin, X.; Wan, J.; Xie, Y.; Zhang, S.; Lin, C.; Liang, Y.; Guo, G.; Li, S. Task-Oriented Feature-Fused Network With Multivariate Dataset for Joint Face Analysis. IEEE Trans. Cybern. 2019, 50, 1292–1305.

12. Lin, X.; Liang, Y.; Wan, J.; Lin, C.; Li, S.Z. Region-based Context Enhanced Network for Robust Multiple Face Alignment. IEEE Trans. Multimed. 2019, 21, 3053–3067.

13. Feng, Z.H.; Kittler, J.; Awais, M.; Huber, P.; Wu, X.J. Wing loss for robust facial landmark localisation with convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 2235–2245.

14. Dong, X.; Yan, Y.; Ouyang, W.; Yang, Y. Style aggregated network for facial landmark detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 379–388.

15. Wu, W.; Qian, C.; Yang, S.; Wang, Q.; Cai, Y.; Zhou, Q. Look at Boundary: A Boundary-Aware Face Alignment Algorithm. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 2129–2138.

16. Wang, X.; Bo, L.; Fuxin, L. Adaptive wing loss for robust face alignment via heatmap regression. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6971–6981.

17. Wang, T.; Tong, X.; Cai, W. Attention-based face alignment: A solution to speed/accuracy trade-off. Neurocomputing 2020, 400, 86–96.

18. Gao, P.; Lu, K.; Xue, J.; Shao, L.; Lyu, J. A coarse-to-fine facial landmark detection method based on self-attention mechanism. IEEE Trans. Multimed. 2021, 23, 926–938.

19. Xia, J.; Qu, W.; Huang, W.; Zhang, J.; Wang, X.; Xu, M. Sparse Local Patch Transformer for Robust Face Alignment and Landmarks Inherent Relation Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 4052–4061.

20. Sagonas, C.; Tzimiropoulos, G.; Zafeiriou, S.; Pantic, M. 300 faces in-the-wild challenge: The first facial landmark localization challenge. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Sydney, NSW, Australia, 2–8 December 2013; pp. 397–403.

21. Burgos-Artizzu, X.P.; Perona, P.; Dollár, P. Robust face landmark estimation under occlusion. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013; pp. 1513–1520.

22. Ghiasi, G.; Fowlkes, C.C. Occlusion coherence: Detecting and localizing occluded faces. arXiv 2015, arXiv:1506.08347.

23. Chatfield, K.; Simonyan, K.; Vedaldi, A.; Zisserman, A. Return of the devil in the details: Delving deep into convolutional nets. In Proceedings of the British Machine Vision Conference 2014, Lenton, Nottingham, UK, 1–5 September 2014.

24. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

25. Newell, A.; Yang, K.; Deng, J. Stacked hourglass networks for human pose estimation. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 483–499.

26. Bulat, A.; Tzimiropoulos, G. Binarized convolutional landmark localizers for human pose estimation and face alignment with limited resources. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 3706–3714.

27. Guo, X.; Li, S.; Yu, J.; Zhang, J.; Ma, J.; Ma, L.; Liu, W.; Ling, H. PFLD: A practical facial landmark detector. arXiv 2019, arXiv:1902.10859v2.

28. Zhao, Y.; Liu, Y.; Shen, C.; Gao, Y.; Xiong, S. MobileFAN: Transferring deep hidden representation for face alignment. Pattern Recognit. 2020, 100, 107114.

29. Gao, P.; Lu, K.; Xue, J.; Lyu, J.; Shao, L. A facial landmark detection method based on deep knowledge transfer. IEEE Trans. Neural Netw. Learn. Syst. 2021.

30. Sha, Y. Efficient Facial Landmark Detector by Knowledge Distillation. In Proceedings of the 16th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2021), Jodhpur, India, 15–18 December 2021; pp. 1–8.

31. Fard, A.P.; Mahoor, M.H. Facial landmark points detection using knowledge distillation-based neural networks. Comput. Vis. Image Underst. 2022, 215, 103316.

32. Liu, H.; Simonyan, K.; Yang, Y. DARTS: Differentiable architecture search. arXiv 2018, arXiv:1806.09055.

33. Yu, J.; Yang, L.; Xu, N.; Yang, J.; Huang, T. Slimmable Neural Networks. In Proceedings of the International Conference on Learning Representations (ICLR), New Orleans, LA, USA, 6–9 May 2019.

34. Sun, K.; Zhao, Y.; Jiang, B.; Cheng, T.; Xiao, B.; Liu, D.; Mu, Y.; Wang, X.; Liu, W.; Wang, J. High-resolution representations for labeling pixels and regions. arXiv 2019, arXiv:1904.04514.

35. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 91–99.

36. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

37. Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning (PMLR), Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

38. Dong, X.; Yu, S.; Weng, X.; Wei, S.; Yang, Y.; Sheikh, Y. Supervision-by-registration: An unsupervised approach to improve the precision of facial landmark detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 360–368.

39. Zhu, C.; Liu, H.; Yu, Z.; Sun, X. Towards omni-supervised face alignment for large scale unlabeled videos. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 13090–13097.

40. Zhu, C.; Li, X.; Li, J.; Dai, S.; Tong, W. Multi-sourced Knowledge Integration for Robust Self-Supervised Facial Landmark Tracking. IEEE Trans. Multimed. 2022.

41. Zhang, Y.; Xiang, T.; Hospedales, T.M.; Lu, H. Deep mutual learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4320–4328.

42. Le, V.; Brandt, J.; Lin, Z.; Bourdev, L.; Huang, T.S. Interactive facial feature localization. In Proceedings of the 12th European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 679–692.

43. Belhumeur, P.N.; Jacobs, D.W.; Kriegman, D.J.; Kumar, N. Localizing parts of faces using a consensus of exemplars. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2930–2940.

44. Zhu, X.; Ramanan, D. Face detection, pose estimation, and landmark localization in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 2879–2886.

45. Messer, K.; Matas, J.; Kittler, J.; Luettin, J.; Maitre, G. XM2VTSDB: The extended M2VTS database. In Proceedings of the International Conference on Audio- and Video-Based Biometric Person Authentication (AVBPA), Washington, DC, USA, 22–23 March 1999; Volume 964, pp. 965–966.

46. Zhu, C.; Li, X.; Li, J.; Dai, S. Improving robustness of facial landmark detection by defending against adversarial attacks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 11751–11760.

47. Zhu, C.; Li, X.; Li, J.; Dai, S.; Tong, W. Reasoning structural relation for occlusion-robust facial landmark localization. Pattern Recognit. 2022, 122, 108325.

48. Zhu, C.; Wan, X.; Xie, S.; Li, X.; Gu, Y. Occlusion-Robust Face Alignment Using a Viewpoint-Invariant Hierarchical Network Architecture. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11112–11121.

49. Zhu, M.; Shi, D.; Zheng, M.; Sadiq, M. Robust facial landmark detection via occlusion-adaptive deep networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3486–3496.

50. Zhu, H.; Liu, H.; Zhu, C.; Deng, Z.; Sun, X. Learning spatial-temporal deformable networks for unconstrained face alignment and tracking in videos. Pattern Recognit. 2020, 107, 107354.

51. Trigeorgis, G.; Snape, P.; Nicolaou, M.A.; Antonakos, E.; Zafeiriou, S. Mnemonic descent method: A recurrent process applied for end-to-end face alignment. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 4177–4187.

52. Tai, Y.; Liang, Y.; Liu, X.; Duan, L.; Li, J.; Wang, C.; Huang, F.; Chen, Y. Towards highly accurate and stable face alignment for high-resolution videos. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 8893–8900.

53. Ghiasi, G.; Fowlkes, C. Occlusion coherence: Localizing occluded faces with a hierarchical deformable part model. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2385–2392.

54. Feng, Z.; Hu, G.; Kittler, J.; Christmas, W.; Wu, X. Cascaded collaborative regression for robust facial landmark detection trained using a mixture of synthetic and real images with dynamic weighting. IEEE Trans. Image Process. 2015, 24, 3425–3440.

55. Zhang, J.; Kan, M.; Shan, S.; Chen, X. Occlusion-free face alignment: Deep regression networks coupled with de-corrupt autoencoders. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 3428–3437.

56. Xiao, S.; Feng, J.; Xing, J.; Lai, H.; Yan, S.; Kassim, A. Robust facial landmark detection via recurrent attentive-refinement networks. In Proceedings of the 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 57–72.

57. Feng, Z.; Kittler, J.; Christmas, W.; Huber, P.; Wu, X. Dynamic attention-controlled cascaded shape regression exploiting training data augmentation and fuzzy-set sample weighting. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3681–3690.

58. Cao, X.; Wei, Y.; Wen, F.; Sun, J. Face alignment by explicit shape regression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 2887–2894.

59. Kumar, A.; Marks, T.K.; Mou, W.; Wang, Y.; Jones, M.; Cherian, A.; Koike-Akino, T.; Liu, X.; Feng, C. LUVLi face alignment: Estimating landmarks’ location, uncertainty, and visibility likelihood. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 8236–8246.

| Method | Backbone | #Params (M) | FLOPs (G) |
|---|---|---|---|
| DVLN [10] | VGG-16 [23] | 132.0 | 14.4 |
| Wing+PDB [13] | ResNet-50 [24] | 25 | 3.8 |
| SAN [14] | ResNet-152 [24] | 57.4 | 10.7 |
| LAB [15] | Hourglass [25] | 25.1 | 19.1 |
| HRNet [34] | HRNetV2-W18 [34] | 9.3 | 4.3 |
| AWing [16] | Hourglass [25] | 24.15 | 26.79 |
| BL+AFS [17] | - | 14.29 | 3.10 |
| LGSA [18] | Hourglass [25] | 18.64 | 15.69 |
| SLPT [19] | HRNetV2-W18 [34] | 13.18 | 5.17 |
| SRN [47] | Hourglass [25] | 19.89 | - |
| GlomFace [48] | - | - | 13.48 |
| MobileFAN [28] | MobileNetV2 [36] | 2.02 | 0.72 |
| EfficientFAN [29] | EfficientNet-B0 [37] | 4.19 | 0.79 |
| EFLD [30] | HRNetV2-W9 [34] | 2.3 | 1.7 |
| mnv2 [31] | MobileNetV2 [36] | 2.4 | 0.6 |
| HRSuperNet | HRSuperNet | 3.27 | 1.25 |
| SD-HRNet (300W) | S-HRNet | 0.98 | 0.59 |
| SD-HRNet (COFW) | S-HRNet | 1.05 | 0.60 |
| SD-HRNet (WFLW) | S-HRNet | 1.32 | 0.60 |
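To make the efficiency gap in the complexity table concrete, the short snippet below divides a baseline's cost by the slimmed model's cost, using only numbers copied from that table. The dictionary and function names are illustrative. The ratios say nothing about accuracy, which has to be read from the NME tables.

```python
# (#Params in M, FLOPs in G), copied from the complexity table.
MODELS = {
    "HRNet [34]": (9.3, 4.3),
    "HRSuperNet": (3.27, 1.25),
    "SD-HRNet (300W)": (0.98, 0.59),
    "SD-HRNet (COFW)": (1.05, 0.60),
    "SD-HRNet (WFLW)": (1.32, 0.60),
}

def reduction(baseline, model):
    """Return how many times fewer parameters and FLOPs `model` needs than `baseline`."""
    (params_base, flops_base) = MODELS[baseline]
    (params_model, flops_model) = MODELS[model]
    return params_base / params_model, flops_base / flops_model

for base in ("HRNet [34]", "HRSuperNet"):
    p, f = reduction(base, "SD-HRNet (300W)")
    print(f"{base} -> SD-HRNet (300W): {p:.1f}x fewer params, {f:.1f}x fewer FLOPs")
# HRNet [34] -> SD-HRNet (300W): 9.5x fewer params, 7.3x fewer FLOPs
# HRSuperNet -> SD-HRNet (300W): 3.3x fewer params, 2.1x fewer FLOPs
```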

| Method | Common | Challenge | Full |
|---|---|---|---|
| ODN [49] | 3.56 | 6.67 | 4.17 |
| SAN [14] | 3.34 | 6.60 | 3.98 |
| LAB [15] | 2.98 | 5.19 | 3.49 |
| HRNet [34] | 2.87 | 5.15 | 3.32 |
| AWing [16] | 2.72 | 4.52 | 3.07 |
| BL+AFS [17] | 2.89 | 5.23 | 3.35 |
| LGSA [18] | 2.92 | 5.16 | 3.36 |
| SAAT [46] | 2.87 | 5.03 | 3.29 |
| SRN [47] | 3.08 | 5.86 | 3.62 |
| GlomFace [48] | 2.79 | 4.87 | 3.20 |
| SLPT [19] | 2.75 | 4.90 | 3.17 |
| MobileFAN [28] | 2.98 | 5.34 | 3.45 |
| EfficientFAN [29] | 2.98 | 5.21 | 3.42 |
| EFLD [30] | 2.88 | 5.03 | 3.32 |
| mnv2 [31] | 3.56 | 6.13 | 4.06 |
| HRSuperNet | 3.00 | 5.28 | 3.45 |
| S-HRNet | 3.02 | 5.44 | 3.50 |
| SD-HRNet | 2.93 ± 0.01 | 5.32 ± 0.05 | 3.40 ± 0.01 |
| SD-HRNet | 2.94 ± 0.01 | 5.33 ± 0.02 | 3.41 ± 0.01 |

| Method | Common | Challenge | Full |
|---|---|---|---|
| CFSS [9] | 11.73 | 19.98 | 13.35 |
| DHGN [50] | 8.98 | 12.19 | 9.61 |
| SBR [38] | 8.72 | 13.28 | 9.6 |
| SHG [25] | 8.17 | 13.52 | 9.22 |
| MDM [51] | 7.66 | 11.67 | 8.44 |
| FHR [52] | 7.02 | 11.28 | 7.85 |
| LAB [15] | 6.07 | 9.59 | 6.76 |
| SAAT [46] | 5.42 | 11.36 | 6.58 |
| SRN [47] | 5.78 | 9.28 | 6.46 |
| GlomFace [48] | 5.29 | 8.81 | 5.98 |
| HRSuperNet | 14.03 | 20.52 | 15.30 |
| S-HRNet | 19.18 | 29.37 | 21.17 |
| SD-HRNet | 6.43 ± 0.25 | 11.05 ± 0.51 | 7.34 ± 0.28 |
| SD-HRNet | 6.23 ± 0.23 | 10.72 ± 0.25 | 7.11 ± 0.20 |

| Method | NME (%) | Failure Rate (%) |
|---|---|---|
| HPM [53] | 7.50 | 13.00 |
| CCR [54] | 7.03 | 10.9 |
| DRDA [55] | 6.46 | 6.00 |
| RAR [56] | 6.03 | 4.14 |
| DAC-CSR [57] | 6.03 | 4.73 |
| Wing+PDB [13] | 5.07 | 3.16 |
| LAB [15] | 3.92 | 0.39 |
| HRNet [34] | 3.45 | 0.19 |
| LGSA [18] | 3.13 | 0.002 |
| SLPT [19] | 3.32 | 0.00 |
| MobileFAN [28] | 3.66 | 0.59 |
| EfficientFAN [29] | 3.40 | 0.00 |
| EFLD [30] | 3.50 | 0.00 |
| mnv2 [31] | 4.11 | 2.36 |
| HRSuperNet | 3.74 | 0.59 |
| S-HRNet | 3.69 | 0.20 |
| SD-HRNet | 3.61 ± 0.02 | 0.12 ± 0.16 |
| SD-HRNet | 3.63 ± 0.03 | 0.20 ± 0.17 |

| Method | Test | Pose | Expression | Illumination | Make-Up | Occlusion | Blur |
|---|---|---|---|---|---|---|---|
| ESR [58] | 11.13 | 25.88 | 11.47 | 10.49 | 11.05 | 13.75 | 12.20 |
| SDM [6] | 10.29 | 24.10 | 11.45 | 9.32 | 9.38 | 13.03 | 11.28 |
| CFSS [9] | 9.07 | 21.36 | 10.09 | 8.30 | 8.74 | 11.76 | 9.96 |
| DVLN [10] | 6.08 | 11.54 | 6.78 | 5.73 | 5.98 | 7.33 | 6.88 |
| LAB [15] | 5.27 | 10.24 | 5.51 | 5.23 | 5.15 | 6.79 | 6.32 |
| Wing+PDB [13] | 5.11 | 8.75 | 5.36 | 4.93 | 5.41 | 6.37 | 5.81 |
| HRNet [34] | 4.60 | 7.94 | 4.85 | 4.55 | 4.29 | 5.44 | 5.42 |
| AWing [16] | 4.36 | 7.38 | 4.58 | 4.32 | 4.27 | 5.19 | 4.96 |
| LUVLi [59] | 4.37 | 7.56 | 4.77 | 4.30 | 4.33 | 5.29 | 4.94 |
| LGSA [18] | 4.28 | 7.63 | 4.33 | 4.16 | 4.27 | 5.33 | 4.95 |
| mnv2 [31] | 8.57 | 15.06 | 8.81 | 8.15 | 8.75 | 9.92 | 9.40 |
| MobileFAN [28] | 4.93 | 8.72 | 5.27 | 4.93 | 4.70 | 5.94 | 5.73 |
| EFLD [30] | 4.74 | 8.41 | 5.01 | 4.71 | 4.57 | 5.70 | 5.45 |
| EfficientFAN [29] | 4.54 | 8.20 | 4.87 | 4.39 | 4.54 | 5.42 | 5.04 |
| HRSuperNet | 4.83 | 8.45 | 5.10 | 4.80 | 4.85 | 5.79 | 5.53 |
| S-HRNet | 4.98 | 8.68 | 5.33 | 4.86 | 4.88 | 5.87 | 5.70 |
| SD-HRNet | 4.93 ± 0.01 | 8.63 ± 0.03 | 5.31 ± 0.05 | 4.81 ± 0.03 | 4.76 ± 0.02 | 5.73 ± 0.02 | 5.56 ± 0.03 |
| SD-HRNet | 4.96 ± 0.03 | 8.66 ± 0.10 | 5.35 ± 0.04 | 4.82 ± 0.05 | 4.81 ± 0.04 | 5.76 ± 0.04 | 5.61 ± 0.06 |

| Teacher | Peer Student | Masked Inputs | NME (%) |
|---|---|---|---|
| ✖ | ✖ | ✖ | 3.50 |
| ✓ | ✖ | ✖ | 3.46 |
| ✓ | ✓ | ✖ | 3.44 |
| ✓ | ✓ | ✓ | 3.39 |
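Reading the knowledge-distillation ablation row by row, each component's contribution is the NME drop relative to the row before it, since every row adds one component to the previous configuration. The snippet below makes this explicit using only the four values in the table; the row labels are paraphrased and the variable names are illustrative.

```python
# NME (%) from the KD ablation table; each row adds one component.
ROWS = [
    ("no distillation", 3.50),
    ("+ teacher", 3.46),
    ("+ peer students", 3.44),
    ("+ masked inputs", 3.39),
]

def incremental_gains(rows):
    """NME drop (percentage points) contributed by each row over the previous one."""
    return [(name, round(prev - cur, 2))
            for (_, prev), (name, cur) in zip(rows, rows[1:])]

gains = incremental_gains(ROWS)
total = ROWS[0][1] - ROWS[-1][1]
relative = total / ROWS[0][1]
print(gains)  # [('+ teacher', 0.04), ('+ peer students', 0.02), ('+ masked inputs', 0.05)]
print(f"total drop {total:.2f} points, {relative:.1%} relative")  # total drop 0.11 points, 3.1% relative
```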

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lin, X.; Zheng, H.; Zhao, P.; Liang, Y. SD-HRNet: Slimming and Distilling High-Resolution Network for Efficient Face Alignment. Sensors 2023, 23, 1532. https://doi.org/10.3390/s23031532

Lin X, Zheng H, Zhao P, Liang Y. SD-HRNet: Slimming and Distilling High-Resolution Network for Efficient Face Alignment. Sensors. 2023; 23(3):1532. https://doi.org/10.3390/s23031532

Chicago/Turabian Style: Lin, Xuxin, Haowen Zheng, Penghui Zhao, and Yanyan Liang. 2023. "SD-HRNet: Slimming and Distilling High-Resolution Network for Efficient Face Alignment" Sensors 23, no. 3: 1532. https://doi.org/10.3390/s23031532

APA Style: Lin, X., Zheng, H., Zhao, P., & Liang, Y. (2023). SD-HRNet: Slimming and Distilling High-Resolution Network for Efficient Face Alignment. Sensors, 23(3), 1532. https://doi.org/10.3390/s23031532