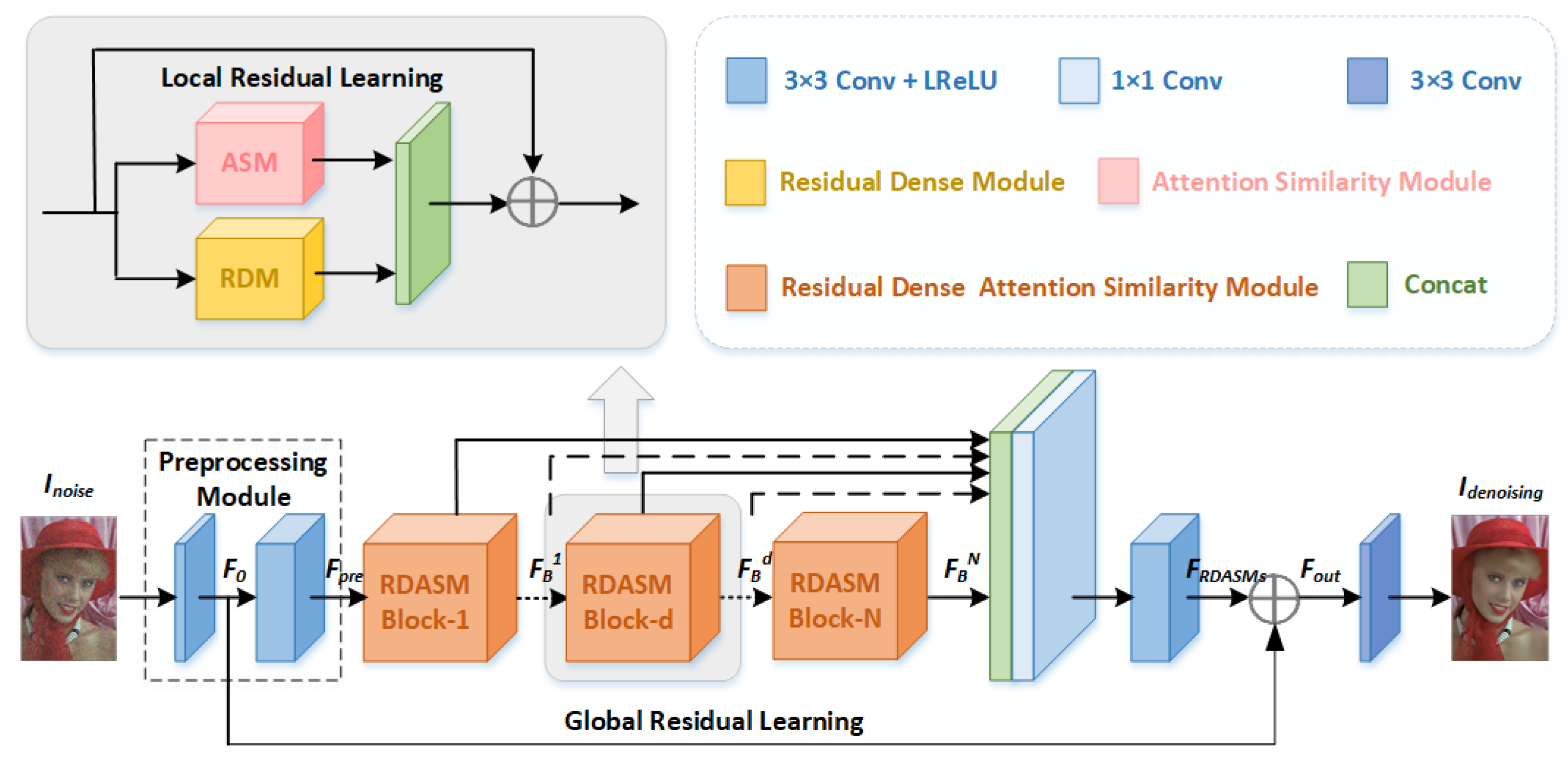

Figure 1.

Network architecture of the proposed RDASNet.

Figure 2.

Residual dense attention similarity module. CASM and SASM represent the channel attention similarity module and spatial attention similarity module, respectively.

Figure 3.

Channel attention similarity module. Channels with similar pixels have similar weights, and key channel features have larger weights. The two dark red channels in the figure are similar and therefore have similar weights.

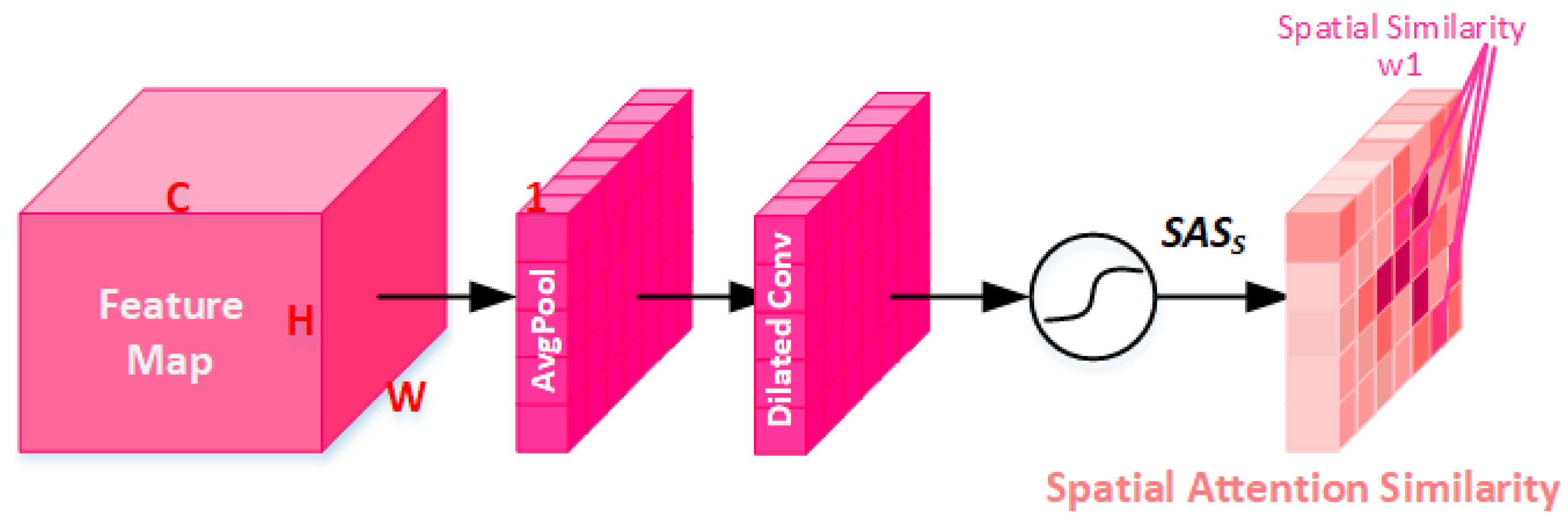

Figure 4.

Spatial attention similarity module. Spatially similar regions have similar weights, and key spatial features have larger weights.

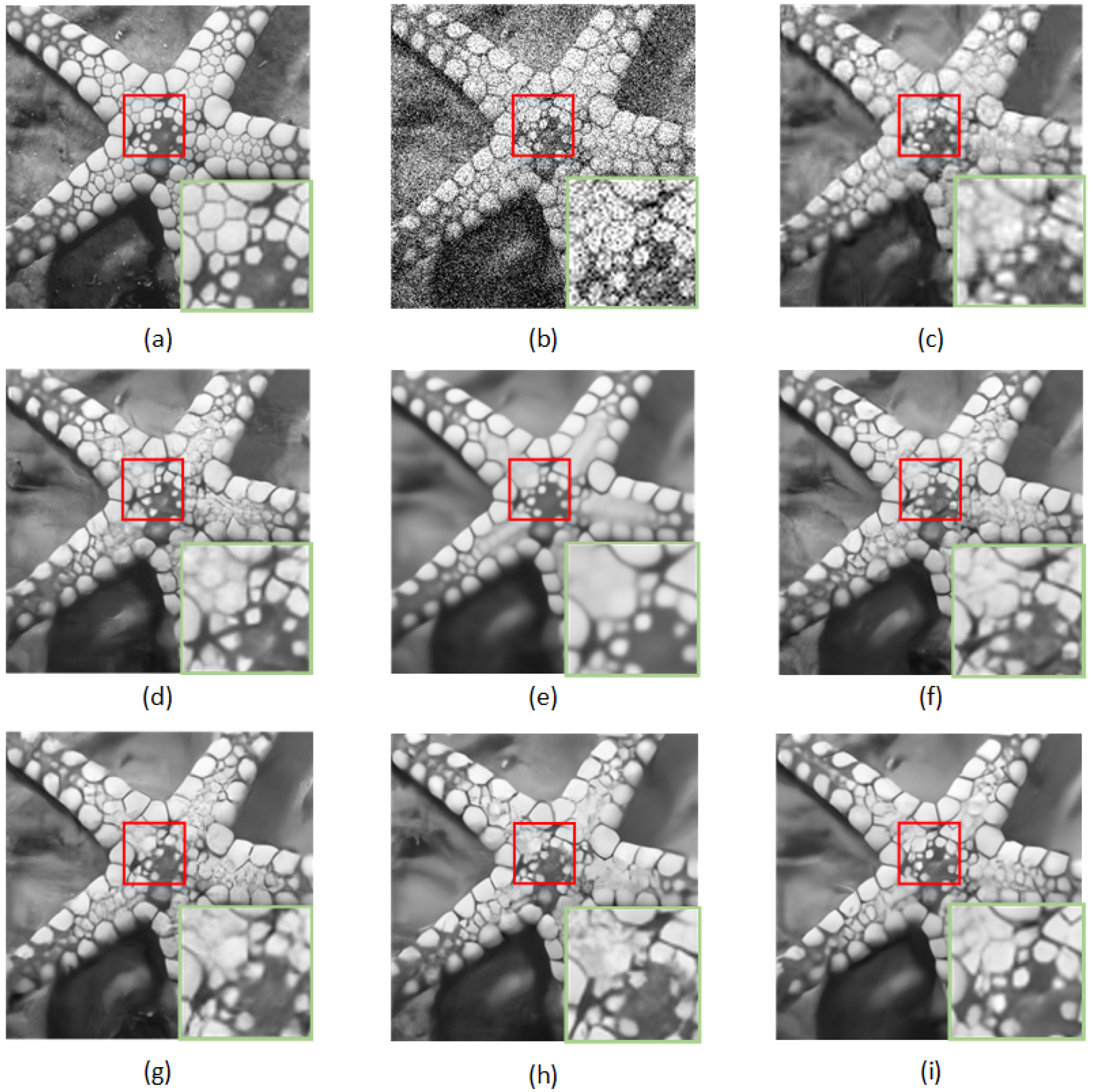

Figure 5.

Denoising results of different methods on one image from Set12 with noise level σ = 50: (a) original image, (b) noisy image, (c) BM3D/25.04 dB, (d) DnCNN/25.70 dB, (e) FFDNet/25.75 dB, (f) BRDNet/25.77 dB, (g) ADNet/25.70 dB, (h) RDN/25.65 dB, and (i) RDASNet/25.94 dB.

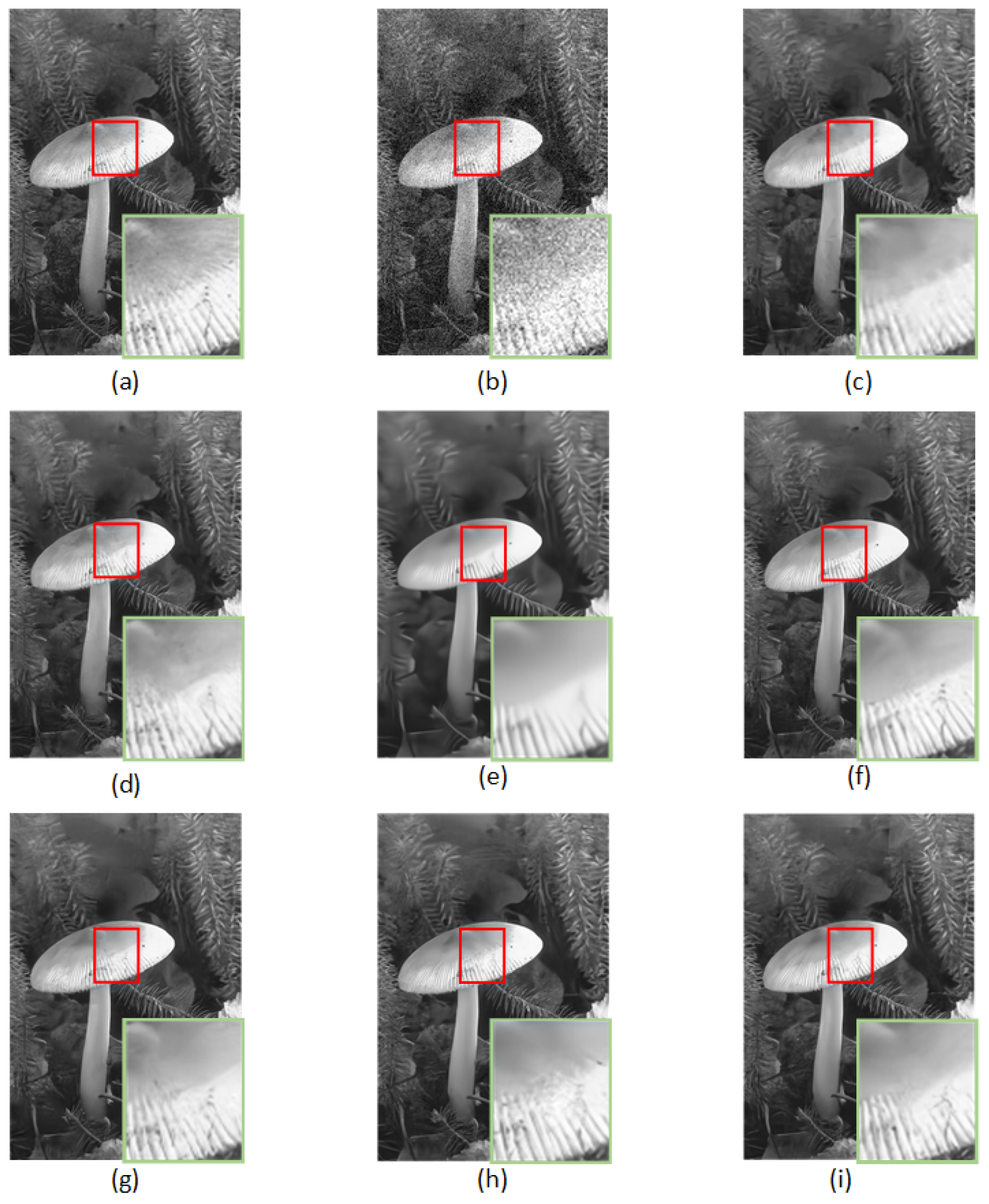

Figure 6.

Denoising results of different methods on one image from BSD68 with noise level : (a) original image, (b) noisy image, (c) BM3D/28.42 dB, (d) DnCNN/29.11 dB, (e) FFDNet/29.16 dB, (f) BRDNet/29.26 dB, (g) ADNet/29.11 dB, (h) RDN/29.17 dB, and (i) RDASNet/29.21 dB.

Figure 7.

Denoising results of different methods on one image from Kodak24 with noise level : (a) original image, (b) noisy image, (c) CBM3D/27.33 dB, (d) DnCNN/28.43 dB, (e) FFDNet/28.60 dB, (f) BRDNet/28.88 dB, (g) ADNet/28.68 dB, (h) RDN/28.94 dB, and (i) RDASNet/29.11 dB.

Figure 8.

Denoising results of different methods on one image from McMaster with noise level : (a) original image, (b) noisy image, (c) CBM3D/35.49 dB, (d) DnCNN/35.63 dB, (e) FFDNet/36.64 dB, (f) BRDNet/37.20 dB, (g) ADNet/36.59 dB, (h) RDN/37.20 dB, and (i) RDASNet/37.39 dB.

Figure 9.

Heatmaps of the proposed RDASM. (a1–a3) are the noisy images, (b1–b3) are the corresponding heatmaps, and (c1–c3) are the corresponding denoised images. In figure (a2), the details of (a1–a3) are similar, and the weights w1, w2, and w3 are similar. Red indicates high weight, and the attention assigns more weight to key features.

Figure 10.

A comparison of avg-pooling and max-pooling. (The noise level ).

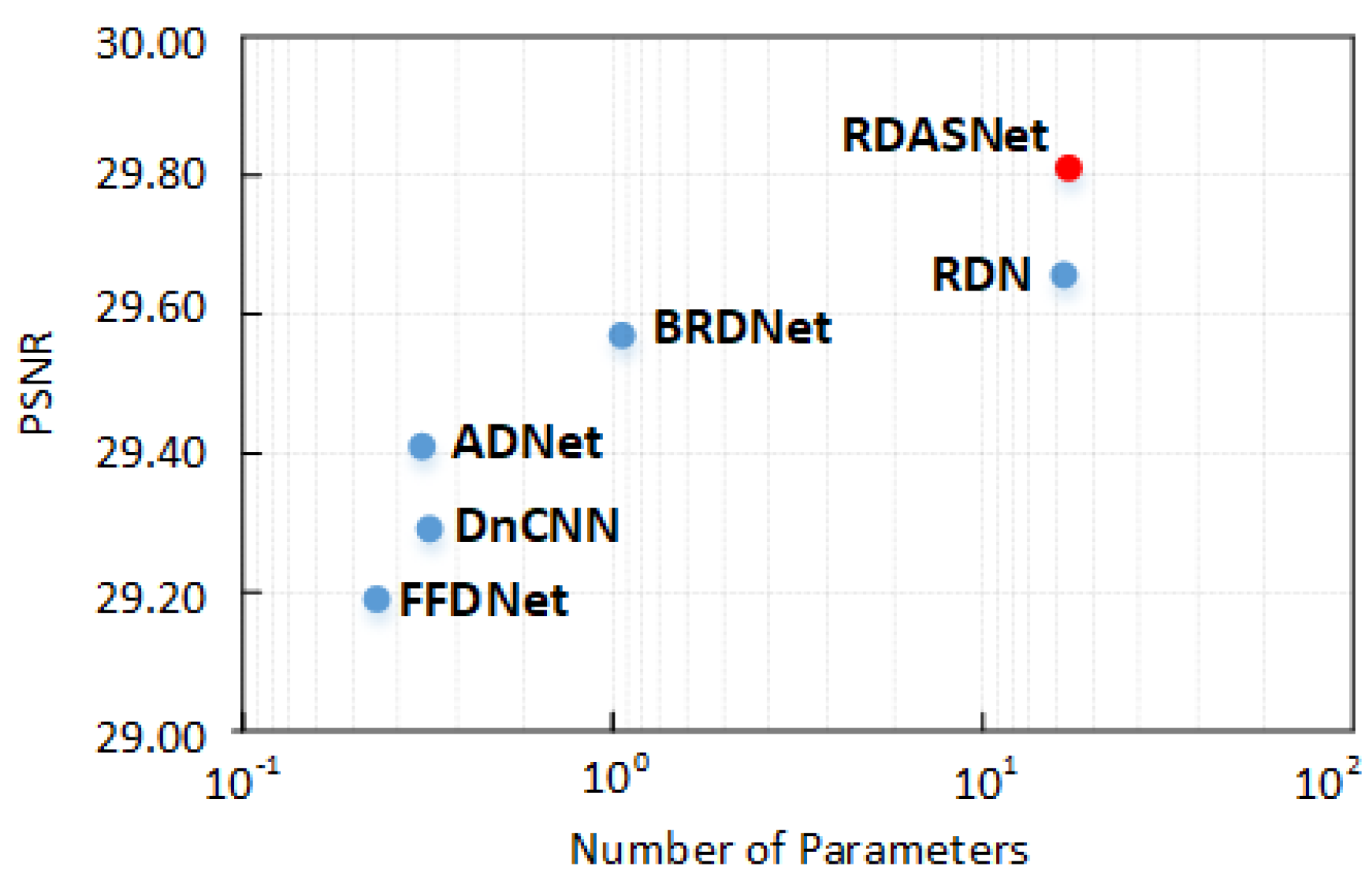

Figure 11.

PSNR results on McMaster vs. the number of parameters of different methods.

Table 1.

Main parameters of RDASNet.

| RDASNet | Blind Range | Patch Size | Batch Size | Epochs |
|---|---|---|---|---|
| gray | [0, 75] | 80 × 80 | 4 | 400 |
| color | [0, 75] | 80 × 80 | 4 | 400 |
| real | - | 64 × 64 | 4 | 65 |

Table 2.

PSNR (dB) and SSIM of different methods on Set12 for different noise levels (i.e., 15, 25, and 50). The two best PSNR results are shown in bold and underlined, respectively.

| Images | C.man | House | Peppers | Starfish | Monarch | Airplane | Parrot | Lena | Barbara | Boat | Man | Couple | Average (PSNR/SSIM) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Noise level σ = 15 | | | | | | | | | | | | | |
| BM3D | 31.91 | 34.93 | 32.69 | 31.14 | 31.85 | 31.07 | 31.37 | 34.26 | 33.10 | 32.13 | 31.92 | 32.10 | 32.37/- |
| DnCNN | 32.61 | 34.97 | 33.30 | 32.20 | 33.09 | 31.70 | 31.83 | 34.62 | 32.64 | 32.42 | 32.46 | 32.47 | 32.86/0.9020 |
| FFDNet | 32.43 | 35.07 | 33.25 | 31.99 | 32.66 | 31.57 | 31.81 | 34.62 | 32.54 | 32.38 | 32.41 | 32.46 | 32.77/0.9055 |
| BRDNet | 32.80 | 35.27 | 33.47 | 32.24 | 33.35 | 31.85 | 32.00 | 34.75 | 32.93 | 32.55 | 32.50 | 32.62 | 33.03/0.9076 |
| ADNet | 32.81 | 35.22 | 33.49 | 32.17 | 33.17 | 31.86 | 31.96 | 34.71 | 32.80 | 32.57 | 32.47 | 32.58 | 32.98/0.9031 |
| RDN | 32.33 | 35.18 | 33.41 | 32.14 | 33.00 | 31.70 | 31.87 | 34.69 | 32.34 | 32.42 | 32.41 | 32.58 | 32.84/0.9045 |
| RDASNet | 32.70 | 35.28 | 33.41 | 32.26 | 33.18 | 31.85 | 31.96 | 34.75 | 32.76 | 32.48 | 32.49 | 32.63 | 32.99/0.9069 |
| Noise level σ = 25 | | | | | | | | | | | | | |
| BM3D | 29.45 | 32.85 | 30.16 | 28.56 | 29.25 | 28.42 | 28.93 | 32.07 | 30.71 | 29.90 | 29.61 | 29.71 | 29.97/- |
| DnCNN | 30.18 | 33.06 | 30.87 | 29.41 | 30.28 | 29.13 | 29.43 | 32.44 | 30.00 | 30.21 | 30.10 | 30.12 | 30.43/0.8605 |
| FFDNet | 30.10 | 33.28 | 30.93 | 29.32 | 30.08 | 29.04 | 29.44 | 32.57 | 30.01 | 30.25 | 30.11 | 30.20 | 30.44/0.8662 |
| BRDNet | 31.39 | 33.41 | 31.04 | 29.46 | 30.50 | 29.20 | 29.55 | 32.65 | 30.34 | 30.33 | 30.14 | 30.28 | 30.61/0.8669 |
| ADNet | 30.34 | 33.41 | 31.14 | 29.41 | 30.39 | 29.17 | 29.49 | 32.61 | 30.25 | 30.37 | 30.08 | 30.24 | 30.58/0.8634 |
| RDN | 29.97 | 33.53 | 31.11 | 29.25 | 30.23 | 29.14 | 29.43 | 32.62 | 29.72 | 30.21 | 30.09 | 30.31 | 30.47/0.8644 |
| RDASNet | 30.29 | 33.86 | 31.12 | 29.42 | 30.51 | 29.21 | 29.61 | 32.80 | 30.31 | 30.35 | 30.18 | 30.36 | 30.67/0.8679 |
| Noise level σ = 50 | | | | | | | | | | | | | |
| BM3D | 26.13 | 29.69 | 26.68 | 25.04 | 25.82 | 25.10 | 25.90 | 29.05 | 27.22 | 26.78 | 26.81 | 26.46 | 26.72/- |
| DnCNN | 27.03 | 30.00 | 27.32 | 25.70 | 26.78 | 25.87 | 26.48 | 29.39 | 26.22 | 27.20 | 27.24 | 26.90 | 27.18/0.7810 |
| FFDNet | 27.05 | 30.37 | 27.54 | 25.75 | 26.81 | 25.89 | 26.57 | 29.66 | 26.45 | 27.33 | 27.29 | 27.08 | 27.32/0.7893 |
| BRDNet | 27.44 | 30.53 | 27.67 | 25.77 | 26.97 | 25.93 | 26.66 | 29.73 | 26.85 | 27.38 | 27.27 | 27.17 | 27.45/0.7915 |
| ADNet | 27.31 | 30.59 | 27.69 | 25.70 | 26.90 | 25.88 | 25.56 | 29.59 | 26.64 | 27.35 | 27.17 | 27.07 | 27.37/0.7862 |
| RDN | 27.16 | 30.77 | 27.51 | 25.65 | 26.93 | 25.80 | 26.53 | 29.75 | 26.08 | 27.37 | 27.25 | 27.14 | 27.33/0.7876 |
| RDASNet | 27.34 | 31.03 | 27.69 | 25.94 | 26.99 | 26.12 | 26.53 | 29.80 | 26.89 | 27.41 | 27.28 | 27.29 | 27.53/0.7940 |
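For readers who want to reproduce the kind of numbers reported in Table 2, the following is a minimal sketch of the standard PSNR definition for 8-bit images, together with a check that the Average column is the plain mean of the twelve per-image values. The function name `psnr` and the flat-list image representation are illustrative assumptions, not the authors' evaluation code.

```python
import math

def psnr(reference, estimate, peak=255.0):
    """Standard PSNR in dB between two equally sized pixel sequences.

    `peak` is the maximum possible pixel value (255 for 8-bit images).
    Images are passed as flat lists of numbers; this is a hypothetical
    helper for illustration only.
    """
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixels.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)

# Per-image PSNR values of RDASNet at noise level 50, copied from Table 2.
rdasnet_sigma50 = [27.34, 31.03, 27.69, 25.94, 26.99, 26.12,
                   26.53, 29.80, 26.89, 27.41, 27.28, 27.29]

# The reported average is the arithmetic mean of the twelve images.
average_psnr = sum(rdasnet_sigma50) / len(rdasnet_sigma50)
print(f"{average_psnr:.2f}")
```

The mean rounds to the 27.53 dB listed in the Average column for RDASNet at noise level 50.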

Table 3.

PSNR (dB) and SSIM of different methods on BSD68 for different noise levels (i.e., 15, 25, and 50). The two best PSNR results are shown in bold and underlined, respectively.

| Methods | σ = 15 | σ = 25 | σ = 50 |
|---|---|---|---|
| BM3D | 31.07/- | 28.57/- | 25.62/- |
| DnCNN | 31.72/0.8901 | 29.23/0.8276 | 26.23/0.7170 |
| FFDNet | 31.62/0.8952 | 29.19/0.8345 | 26.30/0.7278 |
| BRDNet | 31.79/0.8966 | 29.29/0.8346 | 26.26/0.7284 |
| ADNet | 31.74/0.8882 | 29.25/0.8260 | 26.29/0.7169 |
| RDN | 31.62/0.8943 | 29.16/0.8314 | 26.27/0.7223 |
| RDASNet | 31.77/0.8960 | 29.30/0.8347 | 26.38/0.7294 |

Table 4.

PSNR (dB) and SSIM of different methods on CBSD68, Kodak24, and McMaster for different noise levels (i.e., 15, 25, 35, 50, and 75). The two best PSNR results are shown in bold and underlined, respectively.

| Datasets | Methods | σ = 15 | σ = 25 | σ = 35 | σ = 50 | σ = 75 |
|---|---|---|---|---|---|---|
| CBSD68 | CBM3D | 33.52/- | 30.71/- | 28.89/- | 27.38/- | 25.74/- |
| | DnCNN | 33.98/0.9290 | 31.31/0.8830 | 29.65/0.8421 | 28.01/0.7896 | - |
| | FFDNet | 33.80/0.9310 | 31.18/0.8864 | 29.58/0.8473 | - | 26.57/0.7285 |
| | BRDNet | 34.10/- | 31.43/0.8912 | 29.77/0.8500 | 28.16/0.8005 | 26.43/0.7342 |
| | ADNet | 33.99/0.9325 | 31.31/0.8878 | 29.66/0.8479 | 28.04/0.7961 | 26.33/0.7288 |
| | RDN | 34.06/0.9342 | 31.42/0.8907 | 29.79/0.8522 | 28.18/0.8016 | 26.50/0.7376 |
| | RDASNet | 34.17/0.9354 | 31.53/0.8925 | 29.91/0.8547 | 28.31/0.8055 | 26.63/0.7421 |
| Kodak24 | CBM3D | 34.28/- | 31.68/- | 29.90/- | 28.46/- | 26.82/- |
| | DnCNN | 34.73/0.9209 | 32.23/0.8775 | 30.64/0.8398 | 29.02/0.7917 | - |
| | FFDNet | 34.55/0.9234 | 32.11/0.8818 | 30.56/0.8455 | 28.99/0.7993 | 27.25/0.7373 |
| | BRDNet | 34.88/- | 32.41/0.8869 | 30.80/0.8485 | 29.22/0.8029 | 27.49/0.7442 |
| | ADNet | 34.76/0.9250 | 32.26/0.8830 | 30.68/0.8461 | 29.10/0.7990 | 27.40/0.7637 |
| | RDN | 35.02/0.9272 | 32.55/0.8869 | 31.00/0.8515 | 29.45/0.8061 | 27.80/0.7498 |
| | RDASNet | 35.16/0.9290 | 32.69/0.8893 | 31.16/0.8548 | 29.60/0.8111 | 27.95/0.7546 |
| McMaster | CBM3D | 34.06/- | 31.66/- | 29.92/- | 28.51/- | 26.79/- |
| | DnCNN | 34.80/- | 32.47/- | 30.91/- | 29.21/- | - |
| | FFDNet | 34.47/0.9224 | 32.25/0.8894 | 30.76/0.8599 | 29.14/0.8201 | 27.29/0.7635 |
| | BRDNet | 35.08/- | 32.75/0.8965 | 31.15/0.8647 | 29.52/0.8260 | 27.72/0.7734 |
| | ADNet | 34.93/0.9256 | 32.56/0.8899 | 31.00/0.8598 | 29.36/0.8190 | 27.53/0.7637 |
| | RDN | 35.04/0.9285 | 32.74/0.8961 | 31.22/0.8672 | 29.60/0.8300 | 27.82/0.7800 |
| | RDASNet | 35.21/0.9301 | 32.91/0.8988 | 31.41/0.8710 | 29.81/0.8360 | 28.04/0.7884 |

Table 5.

PSNR (dB) of different methods using the cc dataset. The best two results are shown in bold and underlined, respectively.

| Camera Setting | CBM3D | WNNM | DnCNN | BRDNet | ADNet | RDN | RDASNet |
|---|---|---|---|---|---|---|---|
| Canon 5D, ISO = 3200 | 39.76 | 37.51 | 37.26 | 37.63 | 35.96 | 35.85 | 37.05 |
| | 36.40 | 33.86 | 34.13 | 37.28 | 36.11 | 36.07 | 37.14 |
| | 36.37 | 31.43 | 34.09 | 37.75 | 34.49 | 36.42 | 36.85 |
| Nikon D600, ISO = 3200 | 34.18 | 33.46 | 33.62 | 34.55 | 33.94 | 38.00 | 38.09 |
| | 35.07 | 36.09 | 34.48 | 35.99 | 34.33 | 37.41 | 38.14 |
| | 37.13 | 39.86 | 35.41 | 38.62 | 38.87 | 35.87 | 34.47 |
| Nikon D800, ISO = 1600 | 36.81 | 36.35 | 35.79 | 39.22 | 37.61 | 39.66 | 39.96 |
| | 37.76 | 39.99 | 36.08 | 39.67 | 38.24 | 39.06 | 39.36 |
| | 37.51 | 37.15 | 35.48 | 39.04 | 36.89 | 37.91 | 38.40 |
| Nikon D800, ISO = 3200 | 35.05 | 38.60 | 34.08 | 38.28 | 37.20 | 37.93 | 38.33 |
| | 34.07 | 36.04 | 33.70 | 37.18 | 35.67 | 37.73 | 37.86 |
| | 34.42 | 39.73 | 33.31 | 38.85 | 38.09 | 37.31 | 38.30 |
| Nikon D800, ISO = 6400 | 31.13 | 33.29 | 29.83 | 32.75 | 32.24 | 35.47 | 36.25 |
| | 31.22 | 31.16 | 30.55 | 33.24 | 32.59 | 30.61 | 28.68 |
| | 30.97 | 31.98 | 30.09 | 32.89 | 33.14 | 36.49 | 37.27 |
| Average | 35.19 | 35.77 | 33.86 | 36.73 | 35.69 | 36.79 | 37.01 |

Table 6.

PSNR (dB) of RDASM and RDM using the Set12 and BSD68 datasets for different noise levels (i.e., 15, 25, 35, 50, and 75).

| Methods | σ = 15 | σ = 25 | σ = 35 | σ = 50 | σ = 75 |
|---|---|---|---|---|---|
| Set12 | | | | | |
| RDM | 32.84 | 30.47 | 28.96 | 27.33 | 25.54 |
| RDASM | 32.99 | 30.67 | 29.17 | 27.53 | 25.73 |
| BSD68 | | | | | |
| RDM | 31.62 | 29.16 | 27.70 | 26.27 | 24.79 |
| RDASM | 31.76 | 29.30 | 29.82 | 26.37 | 24.90 |

Table 7.

PSNR (dB) on Set12, BSD68, CBSD68, Kodak24, and McMaster using different pooling methods.

| Methods | Avg-Pooling | Max-Pooling | CBAM |
|---|---|---|---|
| Noise Level | | | |
| Set12 | 27.4941 | 27.4028 | 27.4138 |
| BSD68 | 26.3455 | 26.2740 | 26.2614 |
| CBSD68 | 28.3153 | 28.2431 | 28.2083 |
| Kodak24 | 29.5174 | 29.5124 | 29.4744 |
| McMaster | 29.6908 | 29.6906 | 29.6834 |
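Table 7 compares avg-pooling and max-pooling. As a rough illustration of how the two behave, the sketch below reduces each feature channel to a single descriptor, assuming the pooling is global over the spatial extent of a channel (the caption does not specify this). The function names and the toy feature maps are hypothetical and do not reproduce the authors' module.

```python
def global_avg_pool(feature_maps):
    """Reduce each channel (a 2D list of numbers) to its mean value."""
    descriptors = []
    for channel in feature_maps:
        total = sum(sum(row) for row in channel)
        count = sum(len(row) for row in channel)
        descriptors.append(total / count)
    return descriptors

def global_max_pool(feature_maps):
    """Reduce each channel (a 2D list of numbers) to its maximum value."""
    return [max(max(row) for row in channel) for channel in feature_maps]

# Two toy 2 x 2 channels: the second equals the first except for one
# outlier pixel, standing in for a noise spike (illustrative data only).
features = [
    [[1.0, 1.0], [1.0, 1.0]],
    [[1.0, 1.0], [1.0, 9.0]],
]

avg_descriptors = global_avg_pool(features)
max_descriptors = global_max_pool(features)
print(avg_descriptors, max_descriptors)
```

In this toy case the single outlier fully determines the max-pooled descriptor of the second channel, while the avg-pooled descriptor moves only part of the way. Whether this accounts for the small differences in Table 7 is not established here.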

Table 8.

Comparison of parameters and run times.

| Methods | Device | PSNR (dB)/Run Time, 256 × 256 | PSNR (dB)/Run Time, 512 × 512 | Parameters |
|---|---|---|---|---|
| BM3D | CPU | 25.04/0.59 | 29.05/2.52 | - |
| WNNM | CPU | 25.44/203.1 | 29.25/773.2 | - |
| DnCNN | GPU | 25.70/0.0061 | 29.39/0.0089 | 556 K |
| FFDNet | GPU | 25.75/0.0113 | 29.66/0.0168 | 490 K |
| BRDNet | GPU | 25.77/0.0631 | 29.73/0.2018 | 1115 K |
| ADNet | GPU | 25.70/0.0086 | 29.59/0.0109 | 519 K |
| RDN | GPU | 25.65/0.0203 | 29.75/0.0335 | 21.97 M |
| RDASNet | GPU | 25.94/0.0194 | 29.80/0.0201 | 22.05 M |