Research and Evaluation on an Optical Automatic Detection System for the Defects of the Manufactured Paper Cups

Abstract

1. Introduction

2. Research Methods

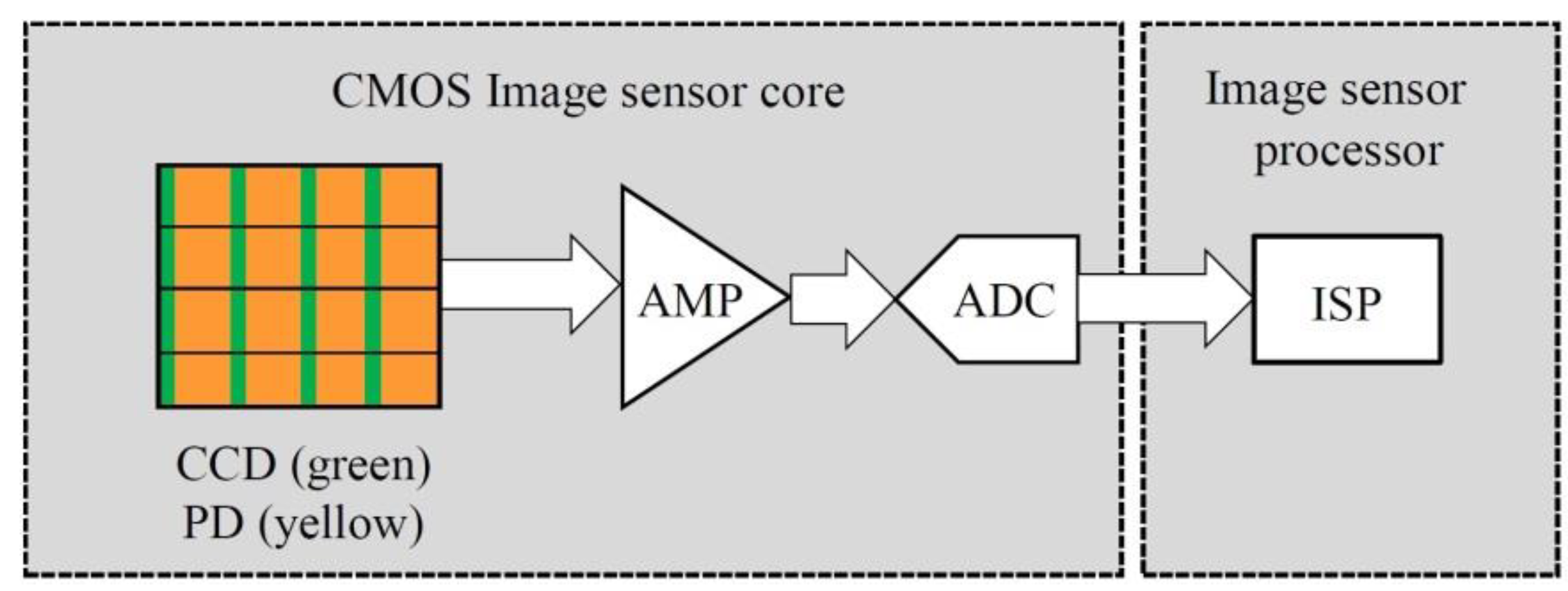

2.1. System Architecture

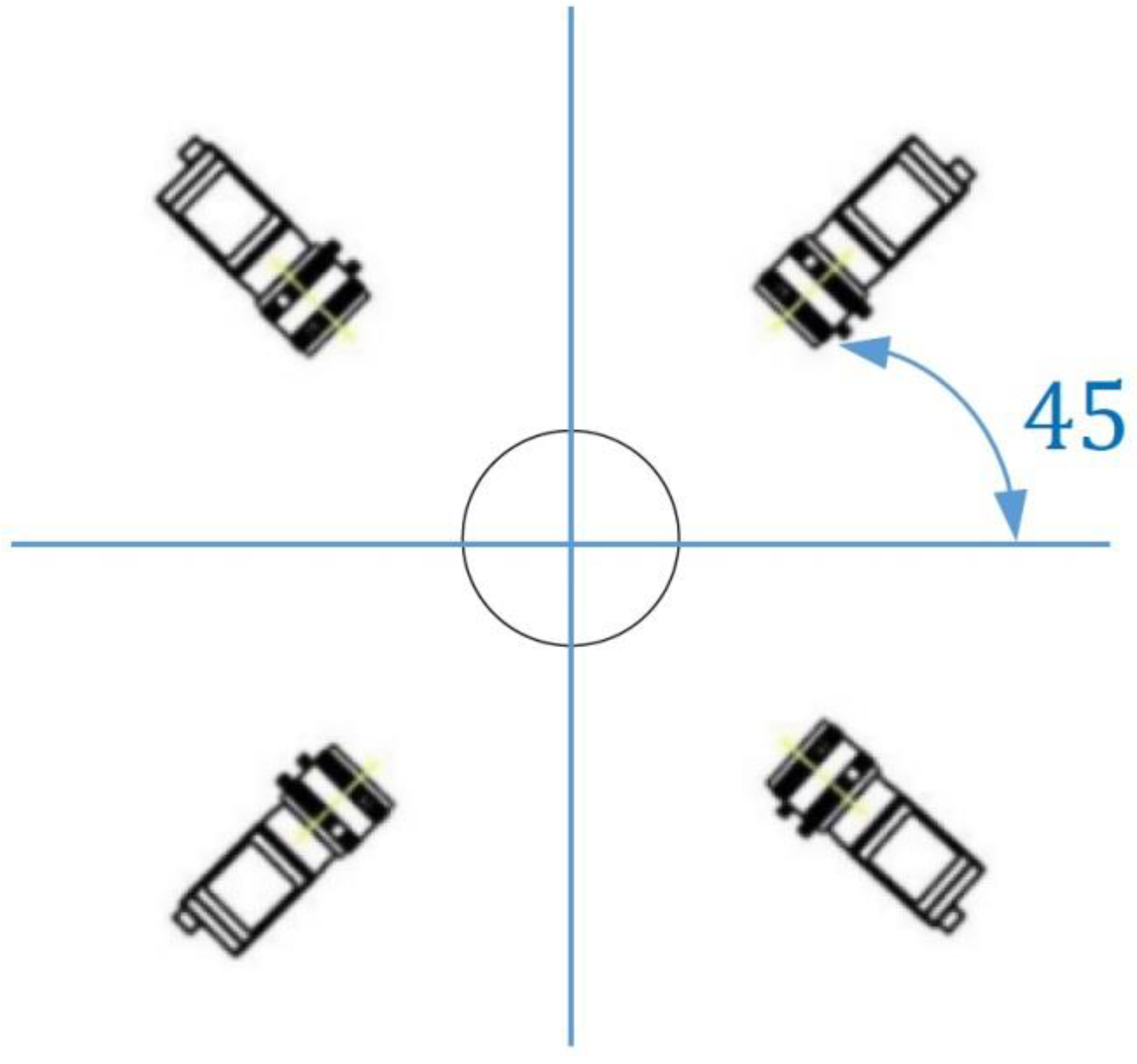

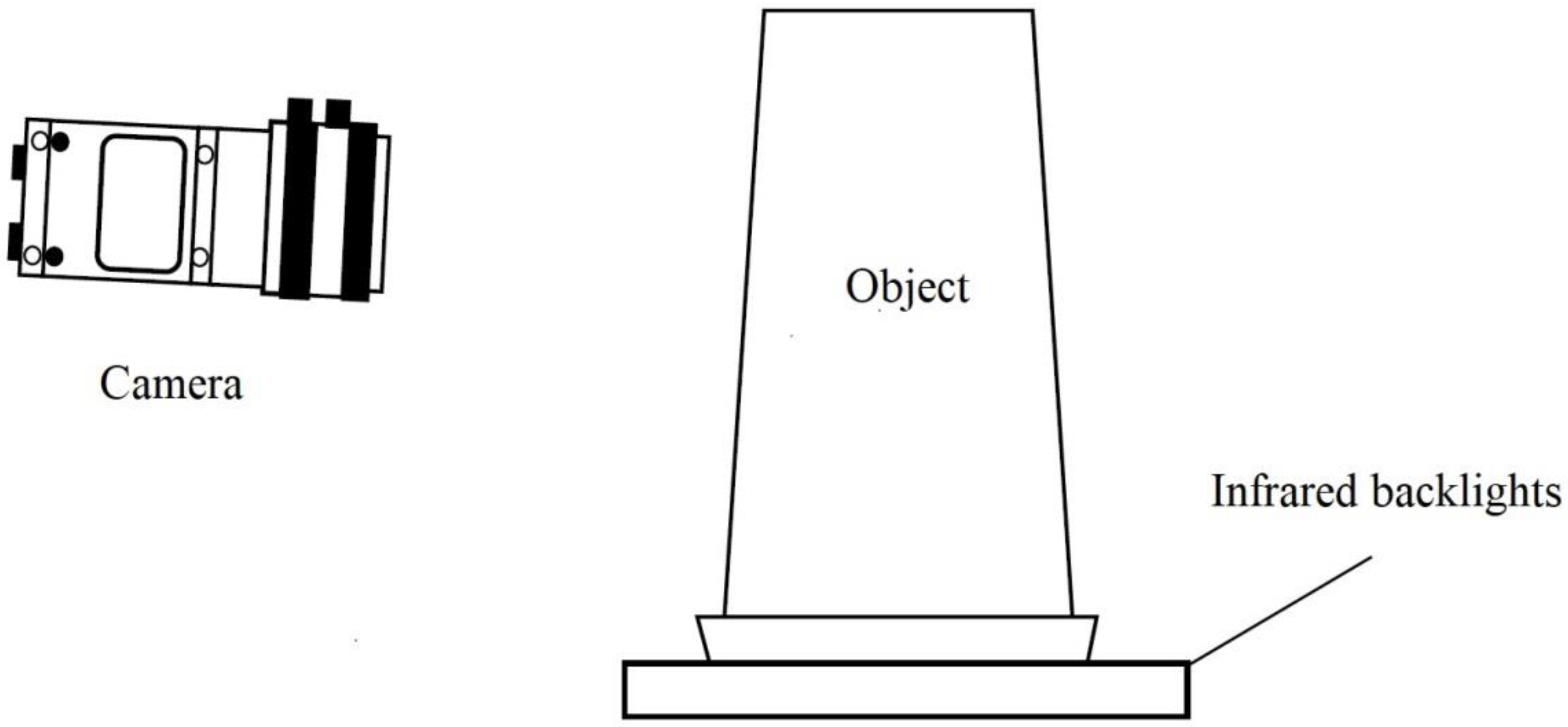

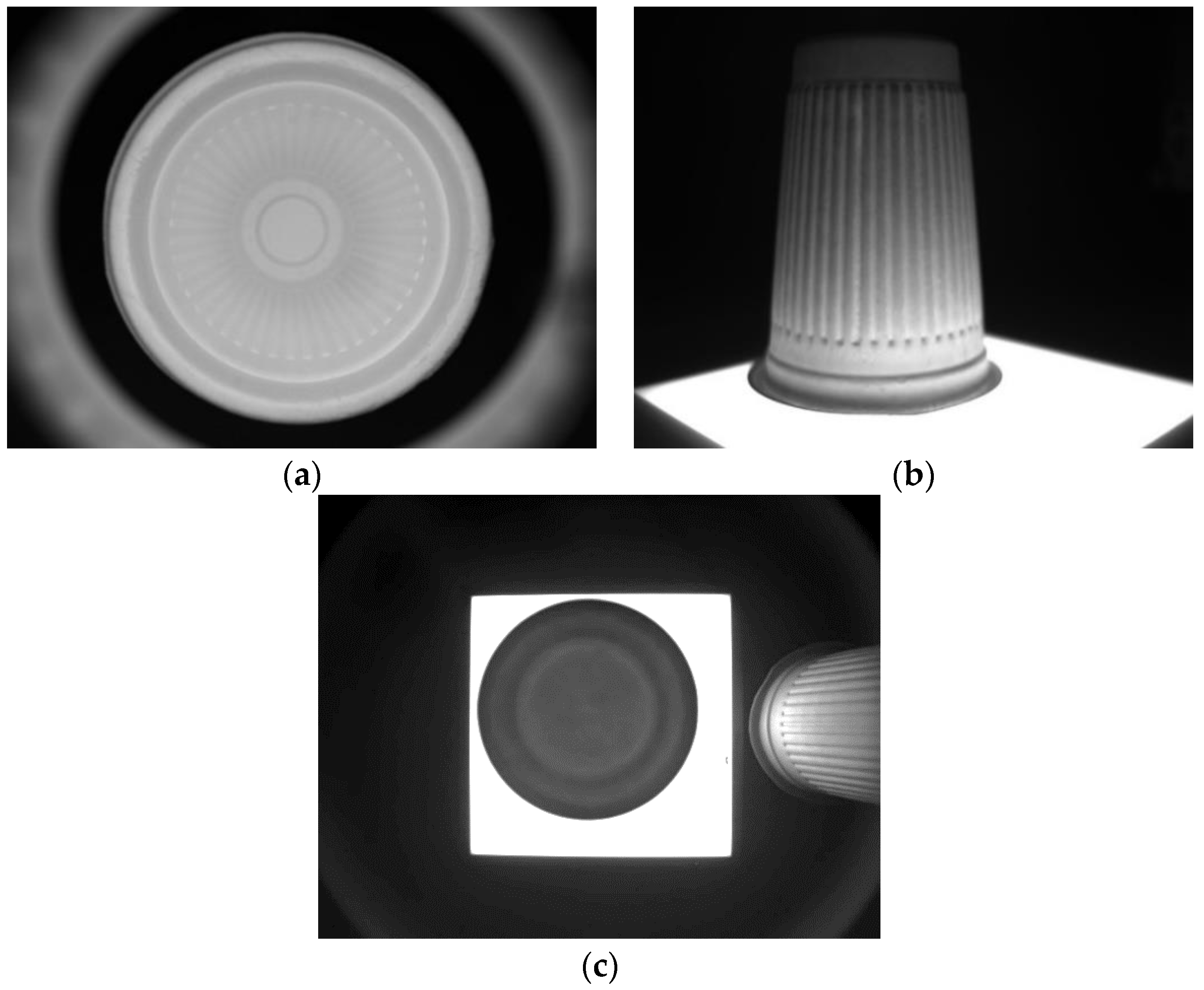

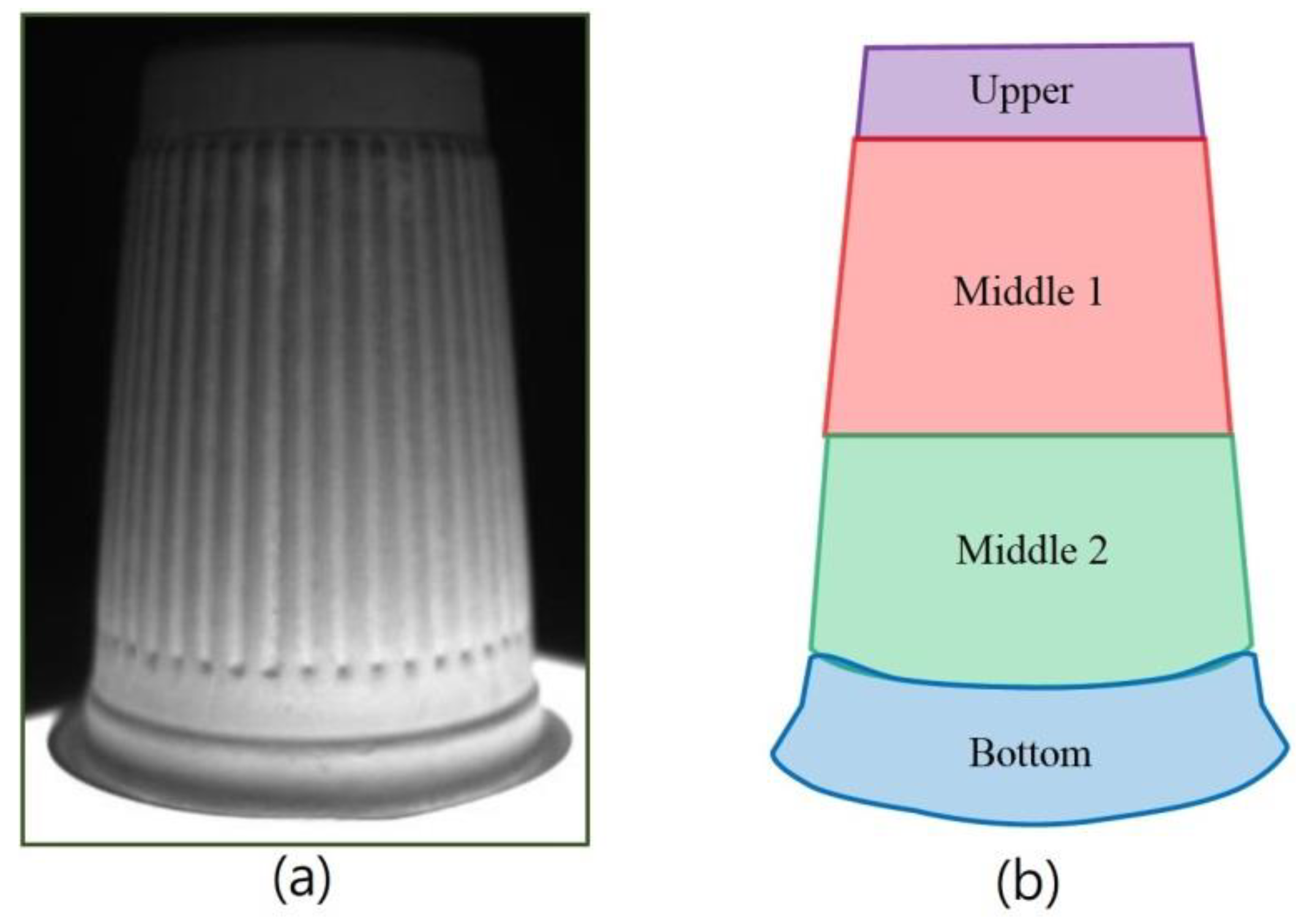

2.2. Methods to Capture the Images

3. Image Processing Results and Discussion

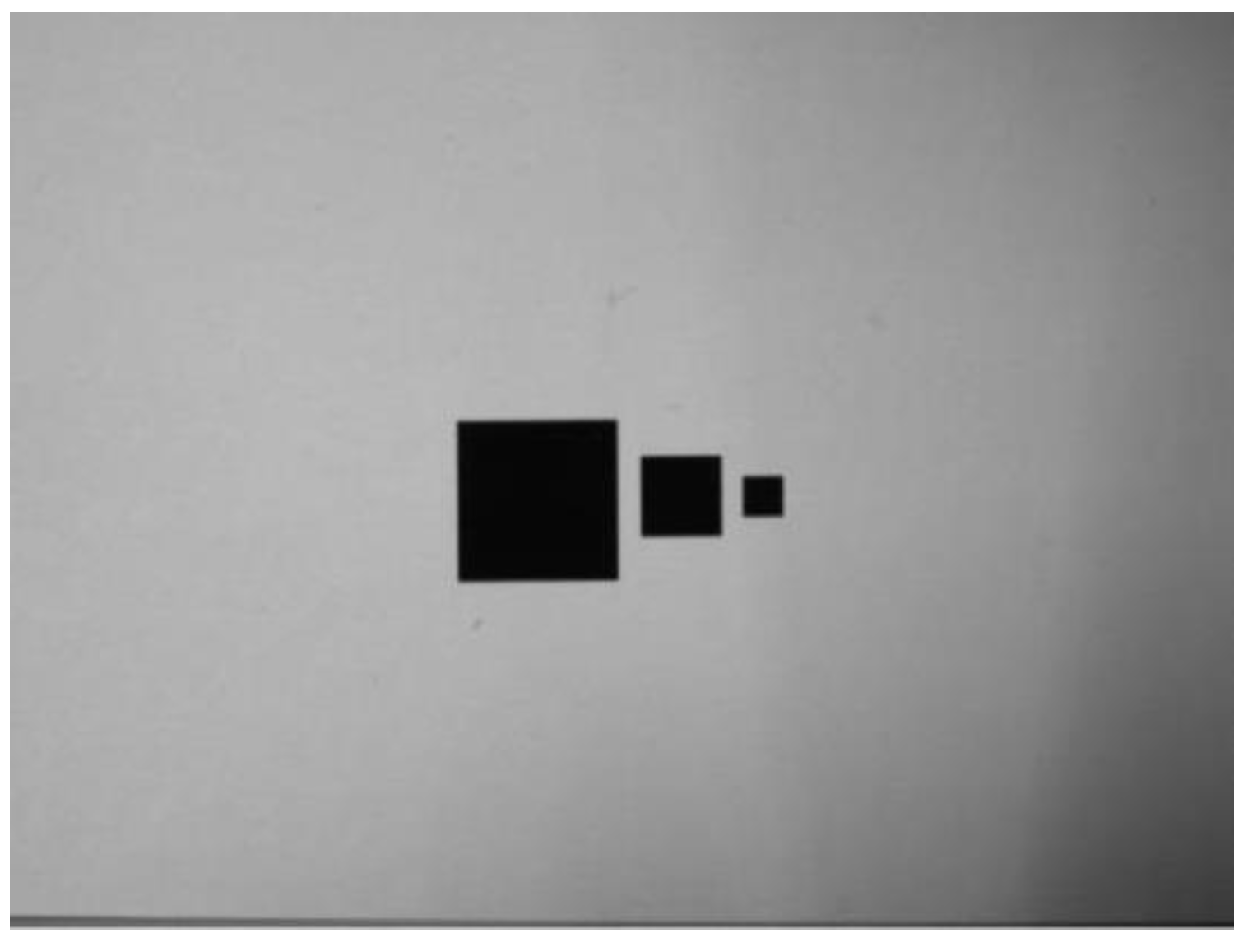

3.1. Measurement of the Defect Areas

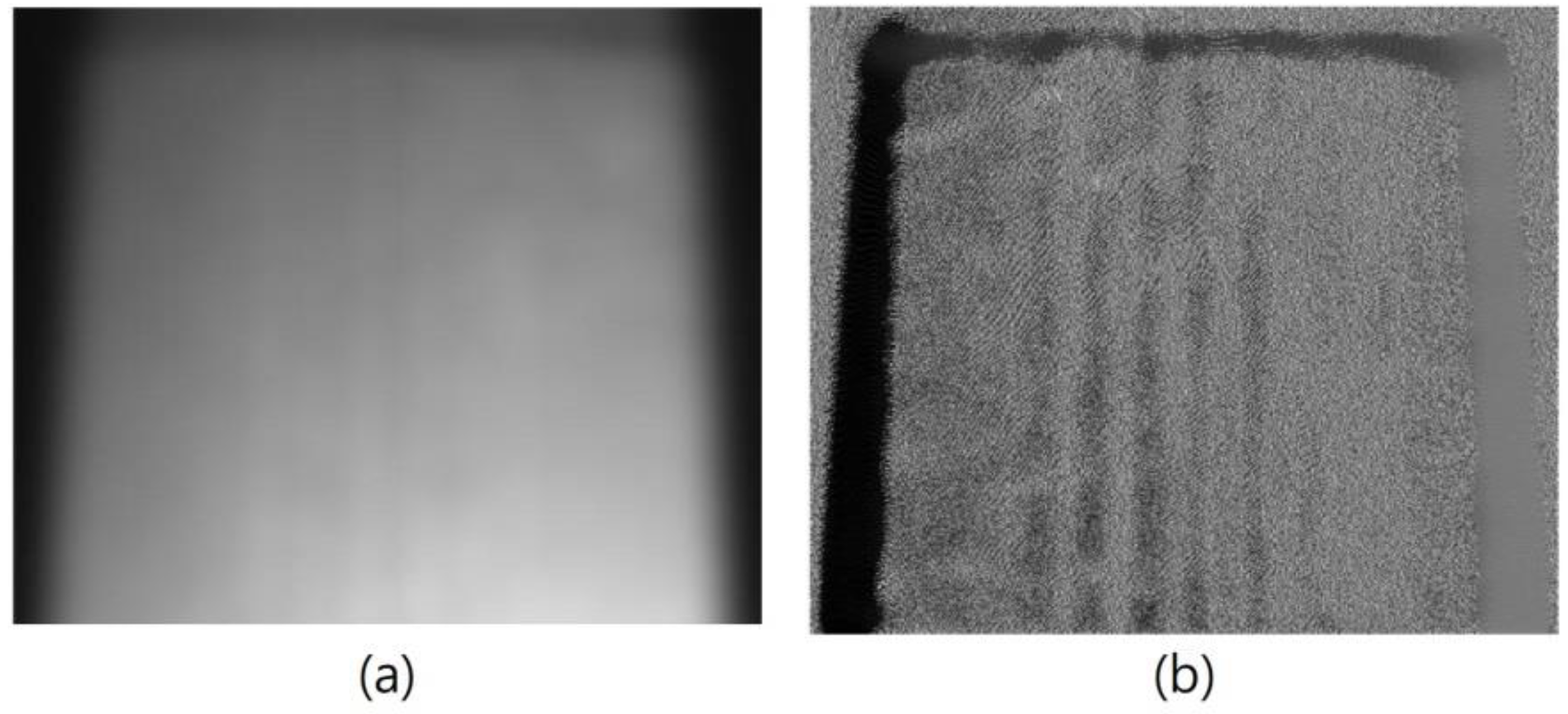

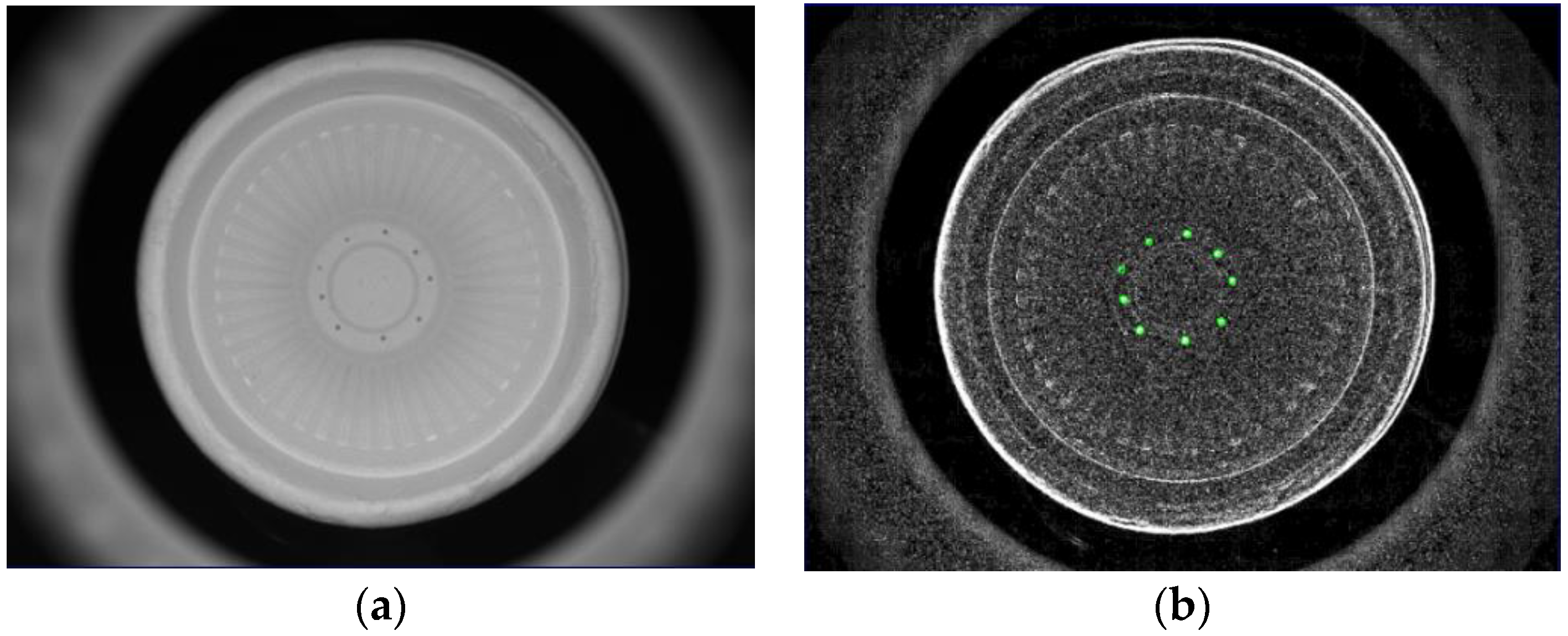

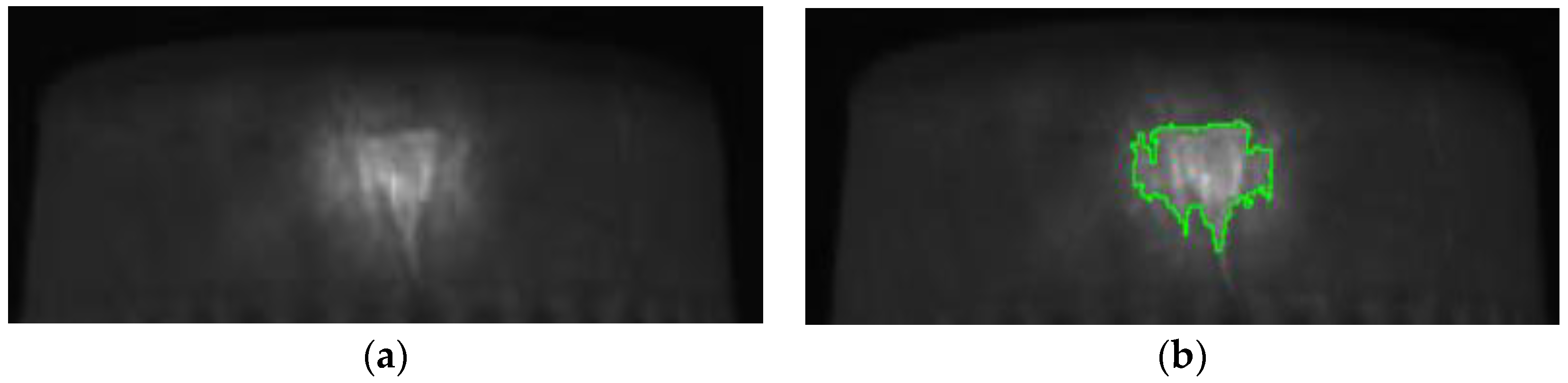

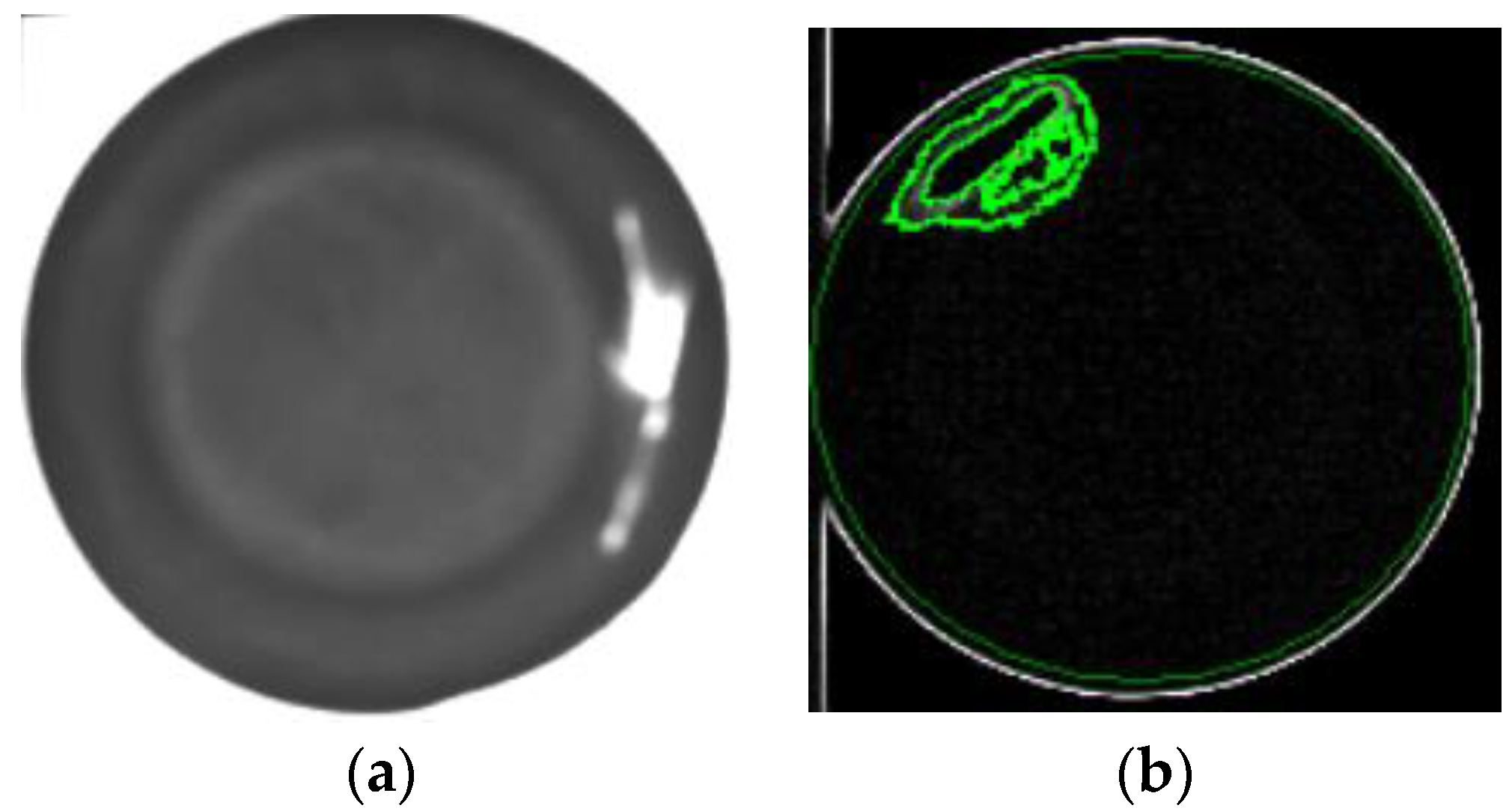

3.2. Image Processing Method

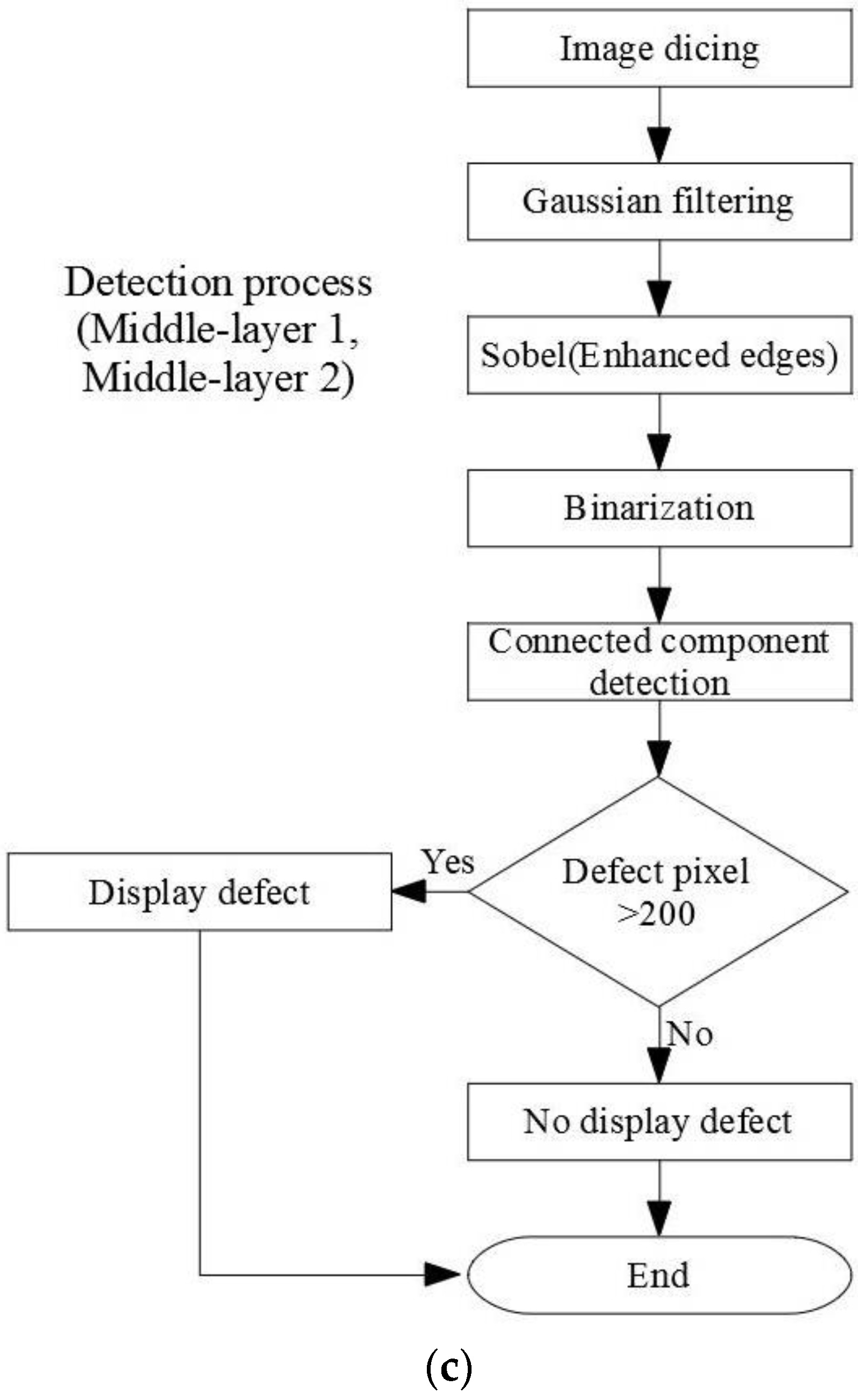

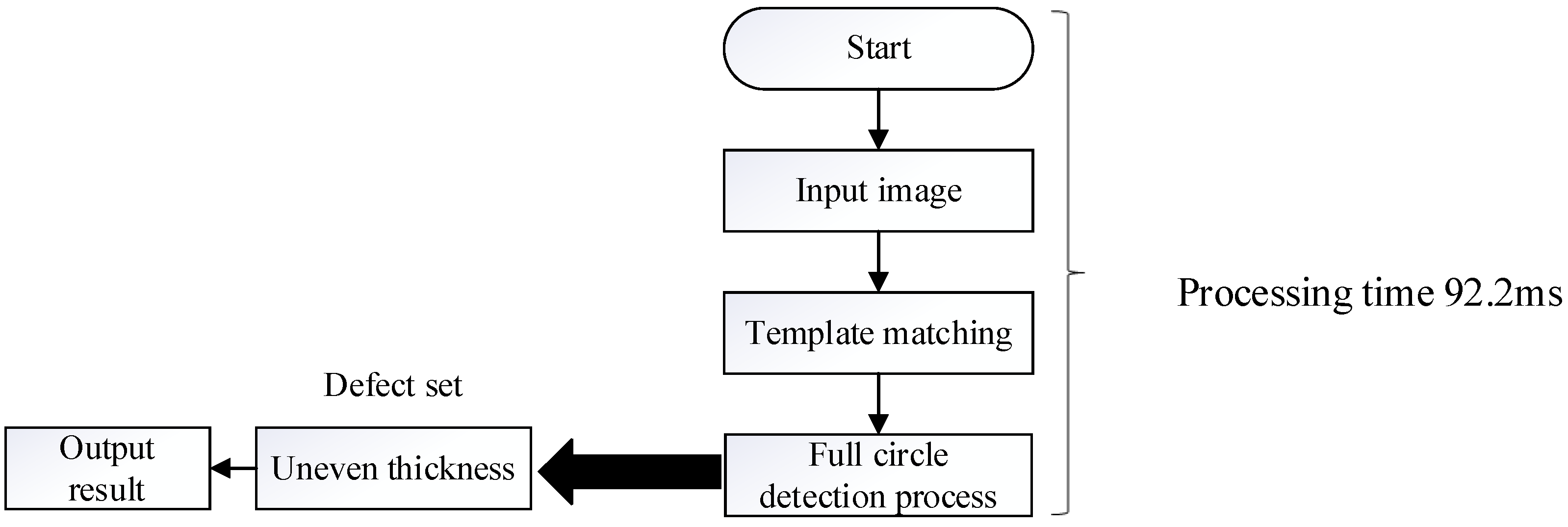

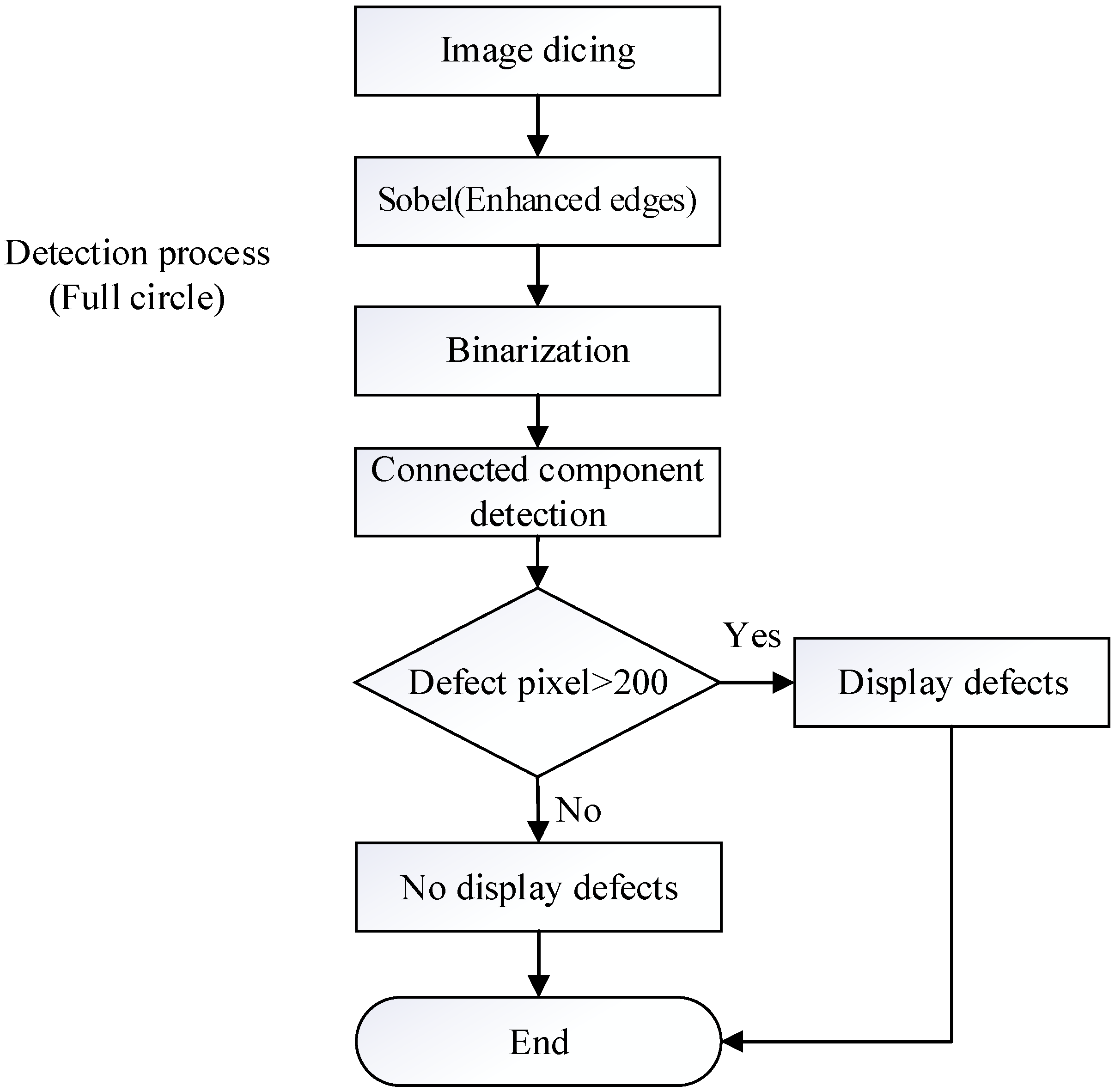

3.3. Image Processing Procedure

3.4. Detection Results of Defects in Paper Cups

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| WD (mm) | Pixel Area (pixels) | Identified Defect Area (mm²) | Actual Defect Area (mm²) | Error (%) |
|---|---|---|---|---|
| 310 | 2959 | 22.5 | 25 | 10.0 |
| 320 | 2803 | 22.7 | 25 | 9.2 |
| 330 | 2620 | 22.6 | 25 | 9.7 |
| 340 | 2472 | 22.6 | 25 | 9.6 |
| 350 | 2302 | 22.3 | 25 | 10.8 |
| 360 | 2218 | 22.7 | 25 | 9.0 |
| 370 | 2087 | 22.6 | 25 | 9.6 |
| 380 | 1977 | 22.6 | 25 | 9.7 |
| 390 | 1878 | 22.6 | 25 | 9.6 |
| 400 | 1764 | 22.3 | 25 | 10.7 |
| Average | | | | 9.8 |
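
The Error (%) column is the relative deviation of the identified area from the actual area, (actual − identified) / actual × 100, and the Average row is the mean of the ten per-distance errors. A minimal Python sketch that recomputes this for the 25 mm² defect is shown below; the helper name `percent_error` is illustrative rather than taken from the authors' software. Because the identified areas are tabulated to one decimal place, a few recomputed per-row errors differ from the printed ones by a few tenths of a percent (for example, 9.2% instead of 9.0% at WD = 360 mm), but the mean still rounds to 9.8%.

```python
# Identified areas (mm^2) for the 25 mm^2 defect, copied from the table above,
# in order of working distance WD = 310, 320, ..., 400 mm.
identified = [22.5, 22.7, 22.6, 22.6, 22.3, 22.7, 22.6, 22.6, 22.6, 22.3]
actual = 25.0  # actual defect area in mm^2


def percent_error(measured: float, reference: float) -> float:
    """Relative deviation of a measured area from the reference area, in percent."""
    return abs(reference - measured) / reference * 100.0


# Per-distance errors and their mean (the table's Average row).
errors = [percent_error(a, actual) for a in identified]
mean_error = sum(errors) / len(errors)

print([round(e, 1) for e in errors])
print(f"average error: {mean_error:.1f} %")
```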

| WD (mm) | Pixel Area (pixels) | Identified Defect Area (mm²) | Actual Defect Area (mm²) | Error (%) |
|---|---|---|---|---|
| 310 | 11,972 | 91.0 | 100 | 9.0 |
| 320 | 11,322 | 91.7 | 100 | 8.3 |
| 330 | 10,571 | 91.1 | 100 | 8.9 |
| 340 | 10,069 | 92.1 | 100 | 7.9 |
| 350 | 9435 | 91.4 | 100 | 8.6 |
| 360 | 9037 | 92.6 | 100 | 7.4 |
| 370 | 8508 | 92.1 | 100 | 7.9 |
| 380 | 8060 | 92.1 | 100 | 7.9 |
| 390 | 7675 | 92.3 | 100 | 7.7 |
| 400 | 7256 | 91.8 | 100 | 8.2 |
| Average | | | | 8.2 |

| WD (mm) | Pixel Area (pixels) | Identified Defect Area (mm²) | Actual Defect Area (mm²) | Error (%) |
|---|---|---|---|---|
| 310 | 48,034 | 365.1 | 400 | 8.7 |
| 320 | 45,295 | 366.9 | 400 | 8.3 |
| 330 | 42,427.3 | 365.5 | 400 | 8.6 |
| 340 | 40,361.6 | 369.1 | 400 | 7.7 |
| 350 | 37,906.8 | 367.3 | 400 | 8.2 |
| 360 | 36,136.6 | 370.5 | 400 | 7.4 |
| 370 | 34,123.4 | 369.5 | 400 | 7.6 |
| 380 | 32,402.2 | 370.1 | 400 | 7.5 |
| 390 | 30,946.8 | 372.3 | 400 | 6.9 |
| 400 | 29,343.4 | 371.4 | 400 | 7.2 |
| Average | | | | 7.8 |
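
The three area tables imply an almost constant object-plane area per pixel at a given working distance, regardless of defect size: about 0.0076 mm² per pixel at WD = 310 mm and about 0.0126 mm² per pixel at WD = 400 mm. Their ratio, roughly 1.66, matches (400/310)², which is what a simple pinhole-camera model predicts if the footprint of one pixel grows with the square of WD. The sketch below assumes that model; the constant `K` and the helper `pixel_area_to_mm2` are hypothetical, with `K` back-computed from the first row of the 25 mm² table rather than taken from a calibration reported by the authors.

```python
# Assumed pinhole-camera model: the object-plane area covered by one pixel
# grows with the square of the working distance WD.
# K is an illustrative constant back-computed from the first row of the
# 25 mm^2 table (22.5 mm^2 measured from 2959 pixels at WD = 310 mm).
K = 22.5 / (2959 * 310 ** 2)  # (mm^2 per pixel) per mm^2 of WD^2


def pixel_area_to_mm2(pixel_count: float, wd_mm: float) -> float:
    """Convert a defect's pixel count to an object-plane area in mm^2."""
    return pixel_count * K * wd_mm ** 2


# (WD in mm, pixel area, identified area in mm^2) taken from the three tables above,
# at both ends of the working-distance range.
rows = [
    (310, 2959, 22.5), (400, 1764, 22.3),
    (310, 11972, 91.0), (400, 7256, 91.8),
    (310, 48034, 365.1), (400, 29343.4, 371.4),
]

for wd, px, reported in rows:
    predicted = pixel_area_to_mm2(px, wd)
    print(f"WD={wd} mm  pixels={px}  predicted={predicted:.1f} mm^2  table={reported} mm^2")
```

Under this assumption the predicted areas agree with the tabulated identified areas to within about 0.2 mm² at both ends of the WD range, so the conversion behind the tables appears consistent with WD² scaling.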

| Sample | Internal (ms) | External (ms) | Bottom (ms) |
|---|---|---|---|
| 1 | 105 | 72 | 95 |
| 2 | 112 | 74 | 94 |
| 3 | 102 | 70 | 93 |
| 4 | 102 | 69 | 92 |
| 5 | 106 | 74 | 91 |
| 6 | 144 | 69 | 90 |
| 7 | 114 | 71 | 91 |
| 8 | 130 | 70 | 90 |
| 9 | 106 | 76 | 92 |
| 10 | 110 | 66 | 89 |
| 11 | 110 | 52 | 91 |
| 12 | 108 | 71 | 92 |
| 13 | 114 | 71 | 93 |
| 14 | 104 | 71 | 95 |
| 15 | 101 | 70 | 93 |
| 16 | 151 | 69 | 90 |
| 17 | 105 | 68 | 94 |
| 18 | 105 | 69 | 99 |
| 19 | 144 | 73 | 92 |
| 20 | 147 | 66 | 88 |
| Average | 116 | 69.6 | 92.2 |
| Total of averages | 277.8 | | |
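
The Average row and the Total can be checked directly from the twenty samples: each average is the mean of its column, and the Total is the sum of the three per-view averages. The short Python sketch below recomputes them; unrounded, the external average is 69.55 ms and the total is 277.75 ms, which the table reports as 69.6 ms and 277.8 ms.

```python
# Processing times (ms) for samples 1-20, copied from the timing table above.
times_ms = {
    "internal": [105, 112, 102, 102, 106, 144, 114, 130, 106, 110,
                 110, 108, 114, 104, 101, 151, 105, 105, 144, 147],
    "external": [72, 74, 70, 69, 74, 69, 71, 70, 76, 66,
                 52, 71, 71, 71, 70, 69, 68, 69, 73, 66],
    "bottom": [95, 94, 93, 92, 91, 90, 91, 90, 92, 89,
               91, 92, 93, 95, 93, 90, 94, 99, 92, 88],
}

# Mean processing time for each inspected view (the table's Average row).
averages = {view: sum(values) / len(values) for view, values in times_ms.items()}

# The table's Total is the sum of the three per-view averages.
total_ms = sum(averages.values())

for view, avg in averages.items():
    print(f"{view}: {avg:.2f} ms")
print(f"total: {total_ms:.2f} ms")
```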

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, P.; Lee, Y.-H.; Tseng, H.-W.; Yang, C.-F. Research and Evaluation on an Optical Automatic Detection System for the Defects of the Manufactured Paper Cups. Sensors 2023, 23, 1452. https://doi.org/10.3390/s23031452
