Active and Low-Cost Hyperspectral Imaging for the Spectral Analysis of a Low-Light Environment

Abstract

1. Introduction

1.1. Background

1.2. Related Works

1.3. Contributions

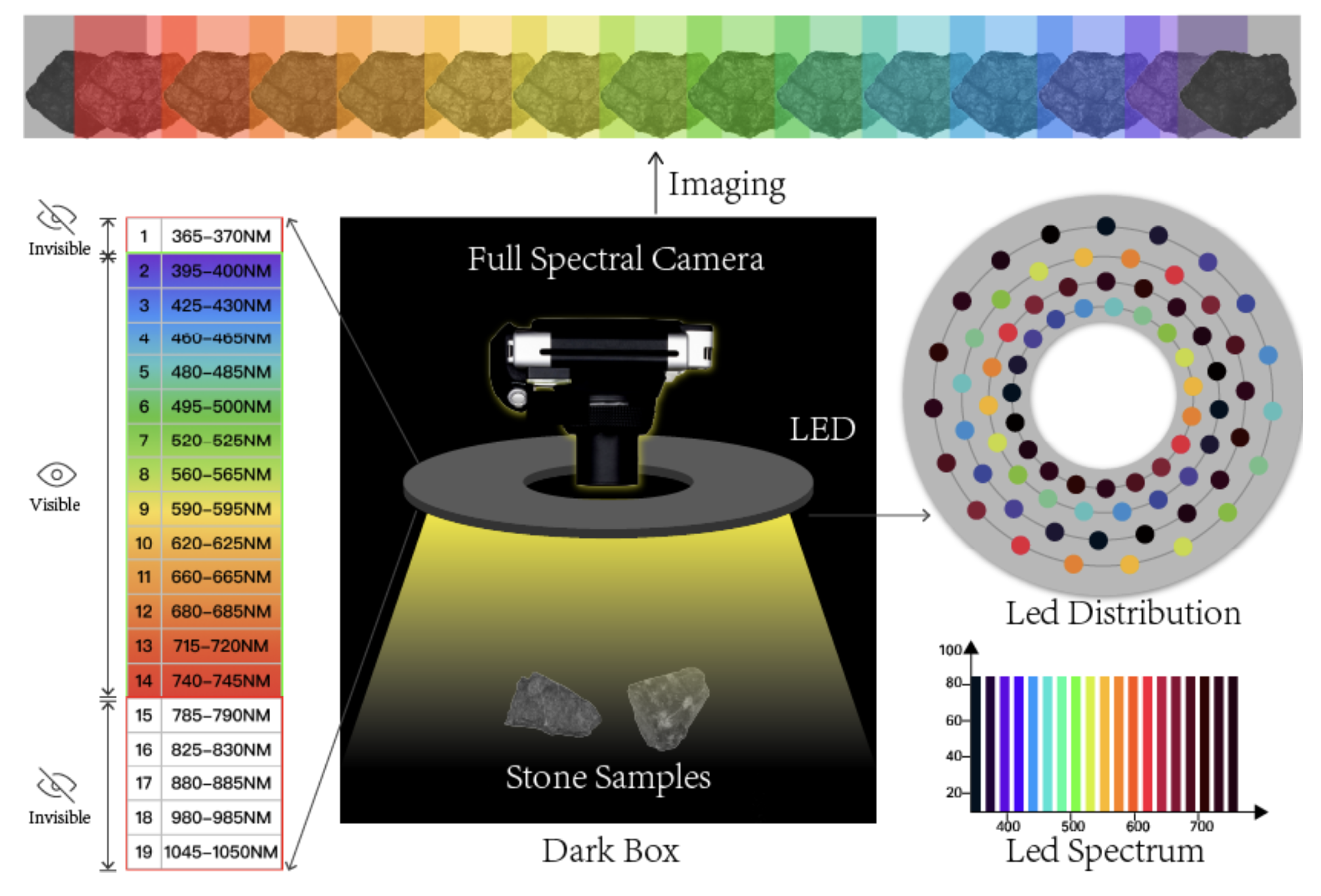

2. Methods and Materials

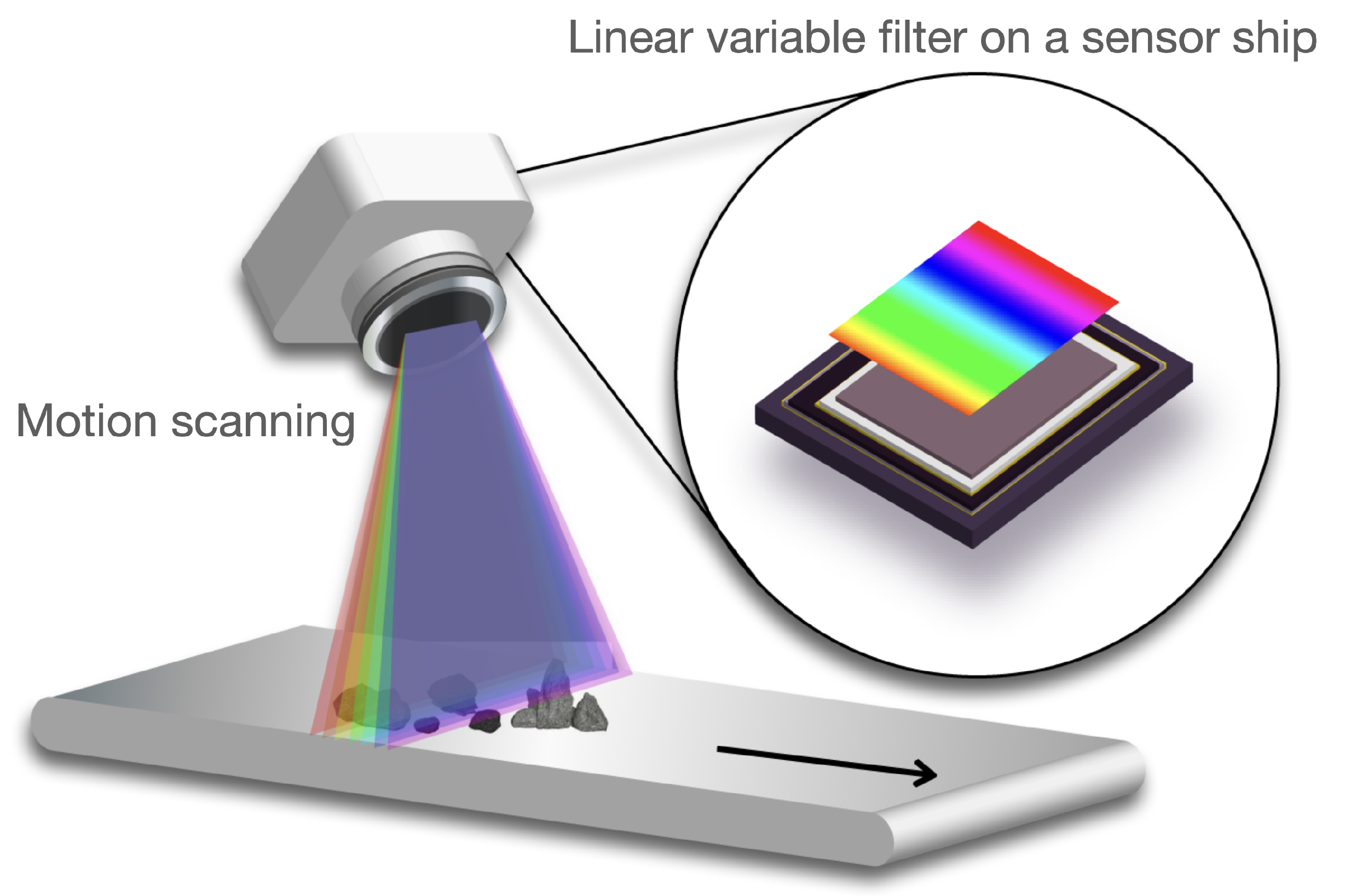

2.1. An Overview of the Proposed System

2.2. The LED Illuminator

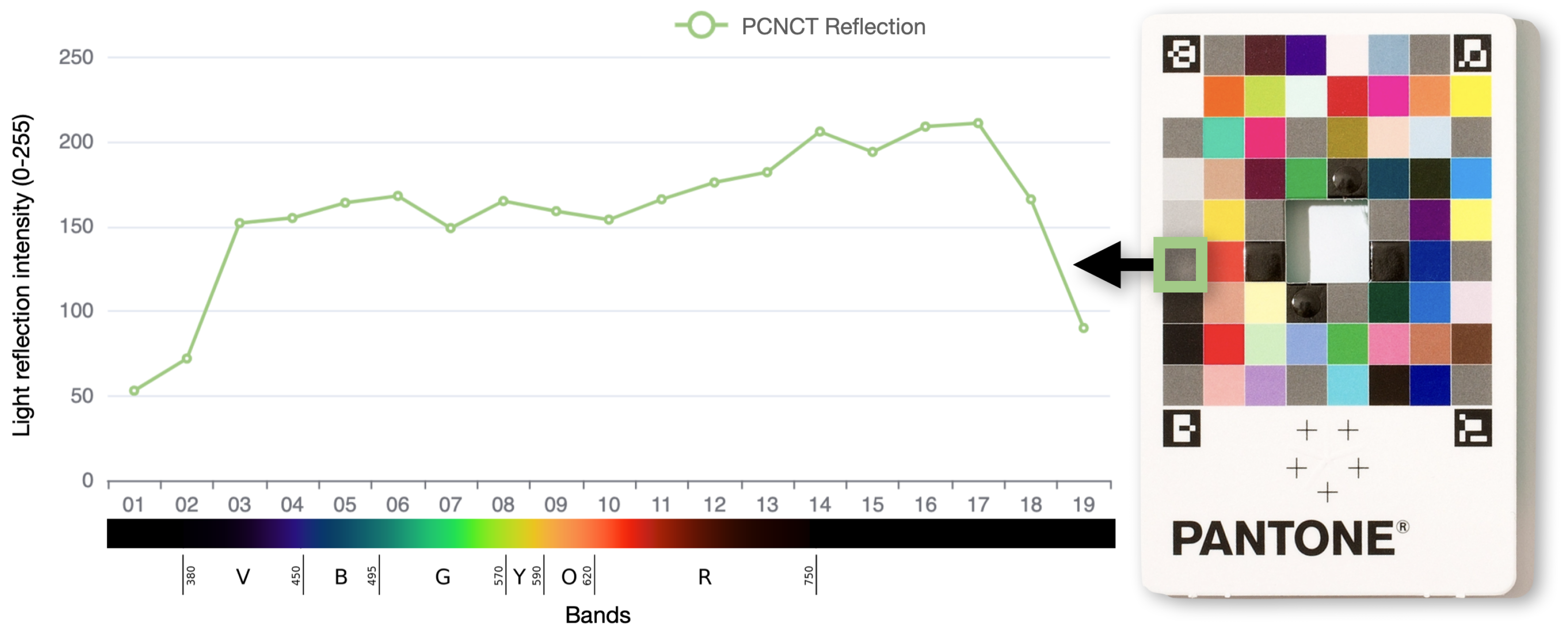

2.3. Camera and Illuminator Control

2.4. Image Collection

3. Experiment

3.1. Experiment I—Identifying Fresh and Wilted Strawberry

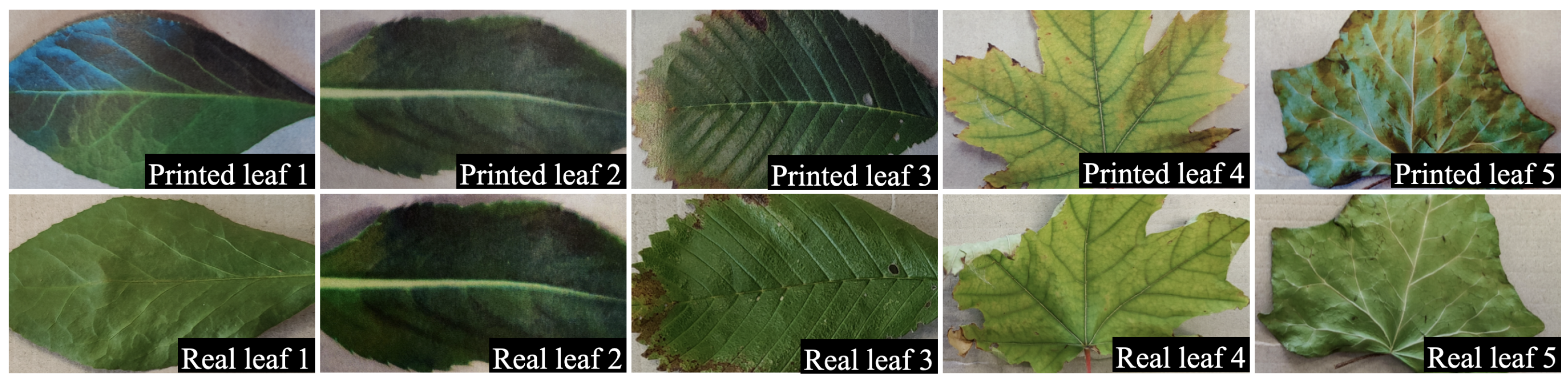

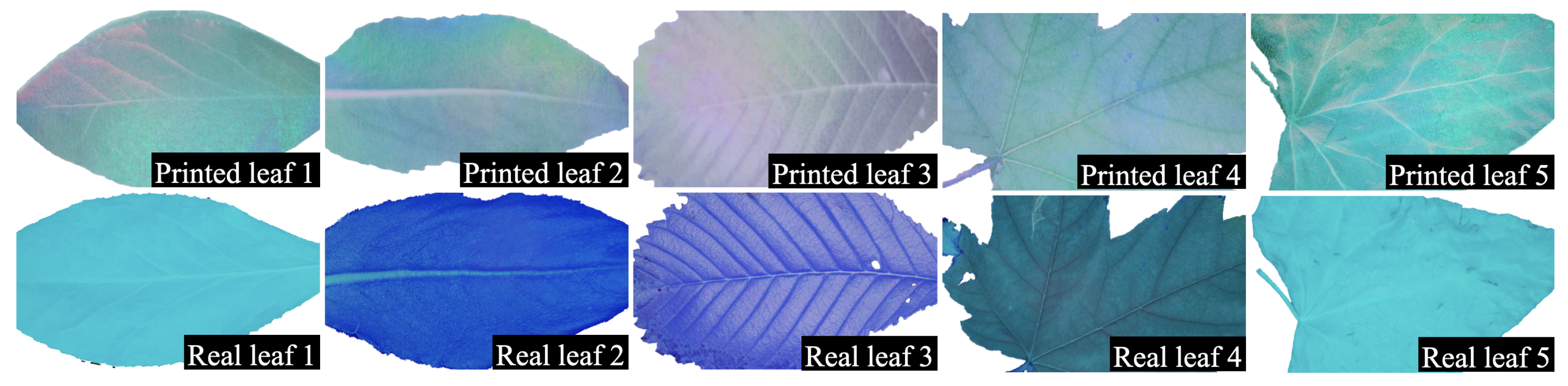

3.2. Experiment II—Leaf Experiment

3.3. Experiment III—Stone Specimen Experiment

4. Discussion

5. Conclusions

6. Disclaimer

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| LED | Light-Emitting Diode |
| CNN | Convolutional Neural Network |
| PCB | Printed Circuit Board |
| DC | Direct Current |
| PCNCT | Pantone Color Match Card |
| CMOS | Complementary Metal-Oxide-Semiconductor |
| UV | Ultraviolet |
| IR | Infrared |
| HDR | High Dynamic Range |

| Band (#) | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| Wavelength (nm) | 365–370 | 395–400 | 425–430 | 460–465 | 480–485 | 495–500 | 520–525 |
| Band (#) | 8 | 9 | 10 | 11 | 12 | 13 | 14 |
| Wavelength (nm) | 560–565 | 590–595 | 620–625 | 660–665 | 680–685 | 715–720 | 740–745 |
| Band (#) | 15 | 16 | 17 | 18 | 19 | | |
| Wavelength (nm) | 785–790 | 825–830 | 880–885 | 980–985 | 1045–1050 | | |
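The band list above can also be held as data, for example to drive acquisition or to look up which LED band lies closest to a wavelength of interest. The following is a minimal sketch, assuming the 19 bands are lit one at a time and one frame is captured per band. The names `LED_BANDS`, `band_center`, `nearest_band` and `acquire_cube`, and the `capture` callback, are hypothetical and are not taken from the paper.

```python
from typing import Callable, Dict, List, Tuple

# LED bands as listed in the table: band number -> (lower nm, upper nm)
LED_BANDS: Dict[int, Tuple[int, int]] = {
    1: (365, 370), 2: (395, 400), 3: (425, 430), 4: (460, 465),
    5: (480, 485), 6: (495, 500), 7: (520, 525), 8: (560, 565),
    9: (590, 595), 10: (620, 625), 11: (660, 665), 12: (680, 685),
    13: (715, 720), 14: (740, 745), 15: (785, 790), 16: (825, 830),
    17: (880, 885), 18: (980, 985), 19: (1045, 1050),
}


def band_center(band: int) -> float:
    """Midpoint of a band's nominal wavelength range, in nm."""
    low, high = LED_BANDS[band]
    return (low + high) / 2


def nearest_band(wavelength_nm: float) -> int:
    """Band whose nominal center is closest to the requested wavelength."""
    return min(LED_BANDS, key=lambda b: abs(band_center(b) - wavelength_nm))


def acquire_cube(capture: Callable[[int], object]) -> List[object]:
    """Light one band at a time (assumed) and collect one frame per band.

    `capture` is a hypothetical callback that switches on the LEDs of the
    given band, exposes the camera and returns the resulting frame.
    """
    return [capture(band) for band in sorted(LED_BANDS)]
```

For instance, `nearest_band(650)` selects band 11 (center 662.5 nm) rather than band 10 (center 622.5 nm). Passing a stub such as `lambda b: b` to `acquire_cube` returns the band order `1` through `19`, which is a convenient way to test the sequencing before real hardware is attached.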

| Data | Mean Value | Median Value | Gaussian-Weighted Mean Value |
|---|---|---|---|
| RGB image | 74.4% | 75.1% | 68.8% |
| Hyperspectral image | 90.2% | 91.6% | 91.0% |
| Difference (percentage points) | +15.8 | +16.5 | +22.2 |
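The table does not state which pixels the three statistics summarize or how the Gaussian weights are defined. As a hedged sketch, assume each statistic summarizes the pixel values of one region of interest and that the Gaussian weights are centered on that region with an illustrative width `sigma`. Under those assumptions, the three summaries and the Difference row (hyperspectral value minus RGB value) can be computed as below. `region_statistics`, `sigma` and the normalized-coordinate weighting are hypothetical choices, not the authors' implementation.

```python
import numpy as np


def region_statistics(patch: np.ndarray, sigma: float = 0.5) -> dict:
    """Summarize a 2D region of pixel values three ways.

    sigma is the Gaussian width in normalized coordinates, where the patch
    spans [-1, 1] on each axis (an assumed parameterization).
    """
    h, w = patch.shape
    # Normalized coordinates relative to the patch center
    ys = np.linspace(-1.0, 1.0, h)
    xs = np.linspace(-1.0, 1.0, w)
    yy, xx = np.meshgrid(ys, xs, indexing="ij")
    # Isotropic Gaussian weights: largest at the center, smaller at the edges
    weights = np.exp(-(xx**2 + yy**2) / (2 * sigma**2))
    return {
        "mean": float(patch.mean()),
        "median": float(np.median(patch)),
        "gaussian_weighted_mean": float(np.sum(weights * patch) / np.sum(weights)),
    }


# Difference row: hyperspectral value minus RGB value, in percentage points
rgb = {"mean": 74.4, "median": 75.1, "gaussian_weighted_mean": 68.8}
hsi = {"mean": 90.2, "median": 91.6, "gaussian_weighted_mean": 91.0}
diff = {k: round(hsi[k] - rgb[k], 1) for k in rgb}
```

Evaluating `diff` reproduces the Difference row: 15.8, 16.5 and 22.2 percentage points. Center weighting makes the Gaussian-weighted mean less sensitive to pixels near a region's boundary than the plain mean; whether that was the authors' motivation is an assumption.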

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tang, Y.; Song, S.; Gui, S.; Chao, W.; Cheng, C.; Qin, R. Active and Low-Cost Hyperspectral Imaging for the Spectral Analysis of a Low-Light Environment. Sensors 2023, 23, 1437. https://doi.org/10.3390/s23031437
