Intraprocedure Artificial Intelligence Alert System for Colonoscopy Examination

Abstract

1. Introduction

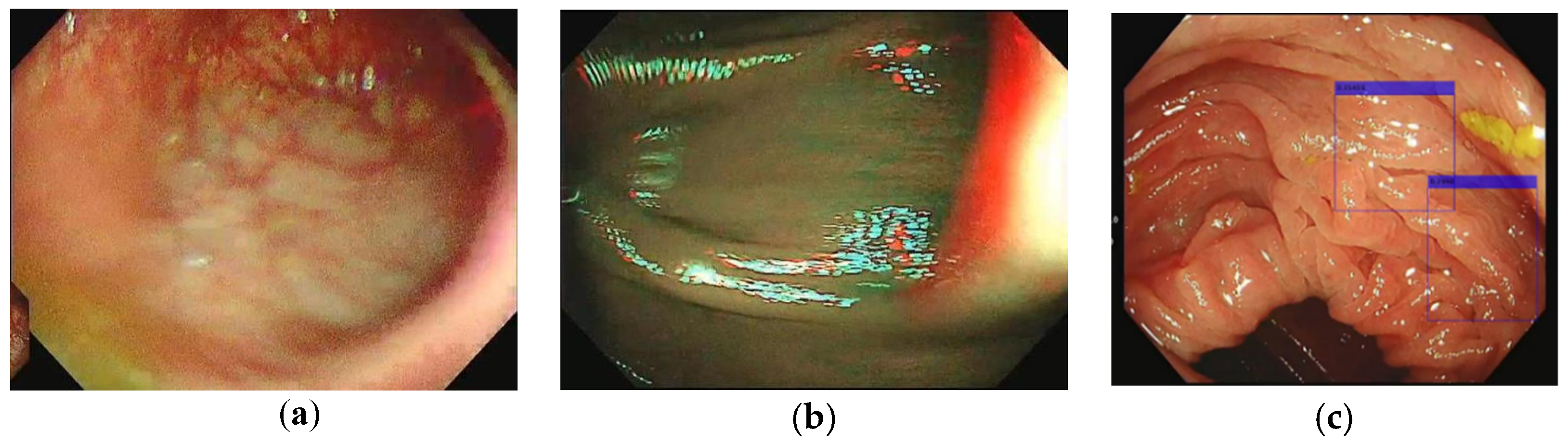

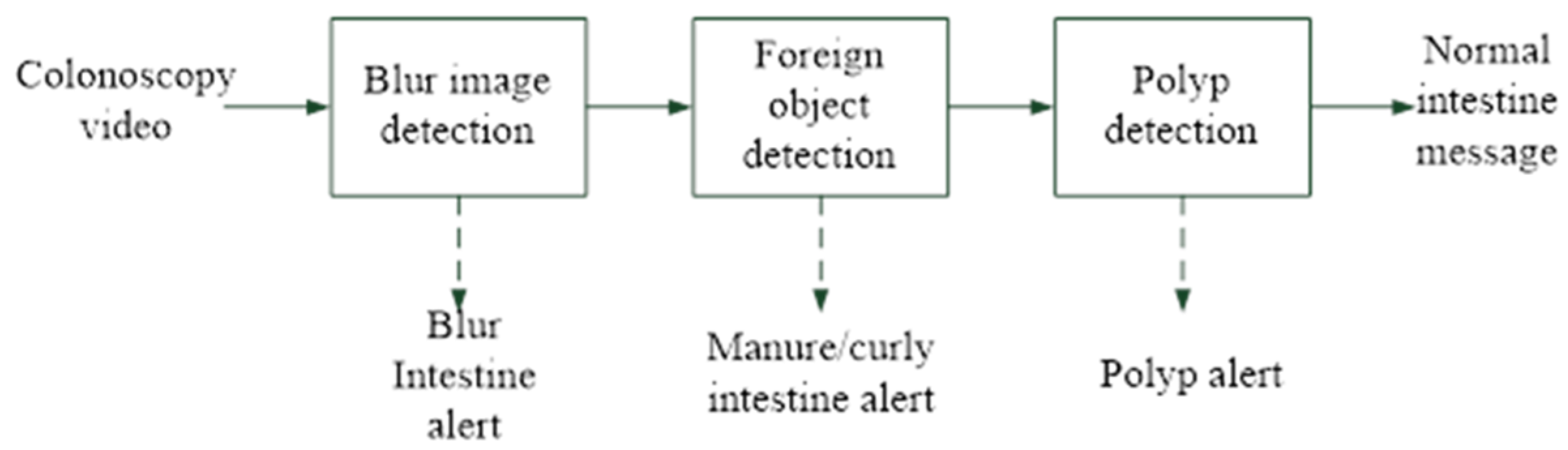

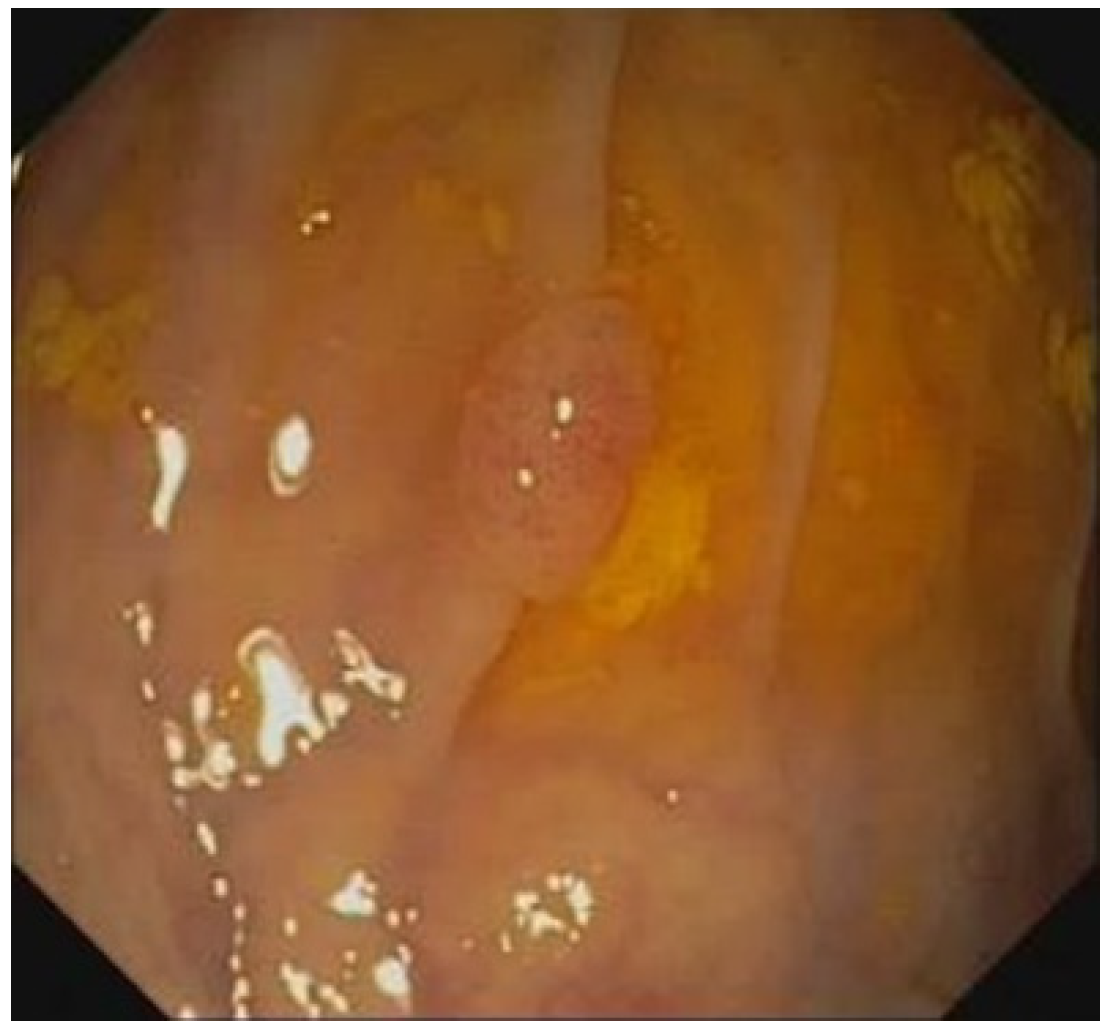

2. Materials and Methods

3. Experimental Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

| Image Type | Image# |
|---|---|
| Blurred image | 2500 |
| Folds/fecal matter and water | 1250 |
| Good quality image (polyp) | 1250 |
| Good quality image (normal) | 1250 |
| Total | 6250 |

| Image Type | Image# |
|---|---|
| Blurred image | 8716 |
| Folds/fecal matter and water | 1967 |
| Good quality image (polyp) | 50 |
| Good quality image (normal) | 399 |
| Total | 11132 |

| Case# | Polyp# |
|---|---|
| 1 | 2 |
| 2 | 1 |
| 3 | 1 |
| 4 | 1 |
| 5 | 1 |
| 6 | 1 |

| Dataset | Image# | Size |
|---|---|---|
| CVC-ClinicDB-training | 612 | 384 × 288 |
| PolypsSet-training | 500 | 640 × 480 |
| Total | 1112 | |

| Layers | Filters (N) | Size/Stride | Output (W × H) |
|---|---|---|---|
| Image Input | | | 480 × 640 |
| Convolution | 16 | (3 × 3) | 480 × 640 |
| Batch Normalization | | | 480 × 640 |
| ReLU | | | 480 × 640 |
| Max Pooling | | 2 × 2/2 | 240 × 320 |
| Convolution | 16 | (3 × 3) | 240 × 320 |
| Batch Normalization | | | 240 × 320 |
| ReLU | | | 240 × 320 |
| Max Pooling | | 2 × 2/2 | 480 × 640 |
| Convolution | 32 | (3 × 3) | 480 × 640 |
| Batch Normalization | | | 480 × 640 |
| ReLU | | | 480 × 640 |
| Max Pooling | | 2 × 2/2 | 120 × 160 |
| Convolution | 32 | (3 × 3) | 120 × 160 |
| Batch Normalization | | | 120 × 160 |
| ReLU | | | 120 × 160 |
| Max Pooling | | 2 × 2/2 | 60 × 80 |
| Convolution | 32 | (3 × 3) | 60 × 80 |
| Batch Normalization | | | 60 × 80 |
| ReLU | | | 60 × 80 |
| Max Pooling | | 2 × 2/2 | 30 × 40 |
| Convolution | 32 | (3 × 3) | 30 × 40 |
| Batch Normalization | | | 30 × 40 |
| ReLU | | | 30 × 40 |
| Max Pooling | | 2 × 2/2 | 15 × 20 |
| Convolution | 32 | (3 × 3) | 15 × 20 |
| Batch Normalization | | | 15 × 20 |
| ReLU | | | 15 × 20 |
| Max Pooling | | 2 × 2/2 | 7 × 10 |
| Fully Connected | | | 7 × 10 |
| Softmax | | | 7 × 10 |
| Classification Output | | | 7 × 10 |

| Layers | Filters (N) | Size/Stride | Output (W × H) |
|---|---|---|---|
| Image Input | | | 128 × 128 |
| Convolution | 32 | (3 × 3 + 3 × 1 + 1 × 3) | 128 × 128 |
| Batch Normalization | | | 128 × 128 |
| ReLU | | | 128 × 128 |
| Max Pooling | | 2 × 2/2 | 64 × 64 |
| Convolution | 64 | (3 × 3 + 3 × 1 + 1 × 3) | 64 × 64 |
| Batch Normalization | | | 64 × 64 |
| ReLU | | | 64 × 64 |
| Max Pooling | | 2 × 2/2 | 32 × 32 |
| Convolution | 128 | (3 × 3 + 3 × 1 + 1 × 3) | 32 × 32 |
| Batch Normalization | | | 32 × 32 |
| ReLU | | | 32 × 32 |
| Max Pooling | | 2 × 2/2 | 16 × 16 |
| Convolution | 256 | (3 × 3 + 3 × 1 + 1 × 3) | 16 × 16 |
| Batch Normalization | | | 16 × 16 |
| ReLU | | | 16 × 16 |
| Max Pooling | | 2 × 2/2 | 8 × 8 |
| Convolution | 256 | (3 × 3 + 3 × 1 + 1 × 3) | 8 × 8 |
| Batch Normalization | | | 8 × 8 |
| ReLU | | | 8 × 8 |
| Convolution | 256 | (3 × 3 + 3 × 1 + 1 × 3) | 8 × 8 |
| Batch Normalization | | | 8 × 8 |
| ReLU | | | 8 × 8 |
| Convolution | 256 | (3 × 3 + 3 × 1 + 1 × 3) | 8 × 8 |
| Batch Normalization | | | 8 × 8 |
| ReLU | | | 8 × 8 |
| Convolution | 24 | 1 × 1/1 | 8 × 8 |
| Transform | | | 8 × 8 |
| Output | | | |
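
The spatial sizes in the 128 × 128 layer table above can be checked with a short trace. This is a minimal sketch, not the authors' code: it assumes every convolution preserves the feature-map size (stride 1 with "same" padding, which the table's unchanged output sizes suggest) and that each 2 × 2/2 max pooling halves the side length. The function name `trace_spatial_sizes` and the `LAYERS` list are illustrative only.

```python
def trace_spatial_sizes(input_size: int, layers: list[str]) -> list[tuple[str, int]]:
    """Follow the feature-map side length through a sequence of layers.

    Assumptions (not stated in the table): convolutions keep the spatial
    size, and pooling is 2 x 2 with stride 2 using floor division.
    """
    size = input_size
    trace = [("input", size)]
    for layer in layers:
        if layer == "pool":
            size //= 2  # 2 x 2 / 2 max pooling halves the side length
        elif layer != "conv":
            raise ValueError(f"unknown layer type: {layer}")
        trace.append((layer, size))
    return trace


# Layer order read from the table. Batch normalization and ReLU are
# omitted because they do not change the spatial size.
LAYERS = [
    "conv", "pool",                  # 32 filters
    "conv", "pool",                  # 64 filters
    "conv", "pool",                  # 128 filters
    "conv", "pool",                  # 256 filters
    "conv", "conv", "conv", "conv",  # 3 x 256 filters, then 24 filters (1 x 1)
]

trace = trace_spatial_sizes(128, LAYERS)
for name, side in trace:
    print(f"{name:5s} {side} x {side}")
```

Under these assumptions the four pooling stages reduce 128 × 128 to 8 × 8, matching the table, so the final 1 × 1 convolution with 24 filters yields an 8 × 8 × 24 output.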

| Image Type | Training Set# | Validation Set# | Total# |
|---|---|---|---|
| Blurred image | 2000 | 500 | 2500 |
| Good quality image (polyp) | 1000 | 250 | 1250 |
| Good quality image (normal) | 1000 | 250 | 1250 |
| Subtotal | 4000 | 1000 | 5000 |

| Image Type | Training Set# | Validation Set# | Total# |
|---|---|---|---|
| Folds/fecal matter and water image | 1000 | 250 | 1250 |
| Good quality image (polyp) | 500 | 125 | 625 |
| Good quality image (normal) | 500 | 125 | 625 |
| Subtotal | 2000 | 500 | 2500 |

| | Blurred Image (Predicted) | Good Quality Image (Predicted) |
|---|---|---|
| Blurred image (Actual) | 468 (TP) | 32 (FN) |
| Good quality image (Actual) | 6 (FP) | 494 (TN) |

| | Folds/Fecal Matter and Water Image (Predicted) | Good Quality Image (Predicted) |
|---|---|---|
| Folds/fecal matter and water image (Actual) | 237 (TP) | 13 (FN) |
| Good quality image (Actual) | 6 (FP) | 244 (TN) |

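| Metric | Definition |
|---|---|
| Accuracy (Acc) | $\frac{TP + TN}{TP + TN + FP + FN}$ |
| Precision (Prec) | $\frac{TP}{TP + FP}$ |
| Recall (Rec) | $\frac{TP}{TP + FN}$ |
| F1-measure (F1) | $\frac{2 \cdot Prec \cdot Rec}{Prec + Rec}$ |
| F2-measure (F2) | $\frac{5 \cdot Prec \cdot Rec}{4 \cdot Prec + Rec}$ |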

| | Acc% | Prec% | Rec% | F1% | F2% |
|---|---|---|---|---|---|
| Blurred image detection | 96.2 | 98.8 | 93.6 | 96.1 | 94.6 |
| Foreign body detection | 96.2 | 97.5 | 94.8 | 96.1 | 95.3 |
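
As a worked check, the metrics in the table above can be recomputed from the TP/FN/FP/TN counts in the two confusion matrices. The sketch below assumes the standard definitions of accuracy, precision, recall, and the F-beta score (with beta = 1 and beta = 2). The class `Confusion` and the helper names are illustrative, not taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Binary confusion-matrix counts; the defect class is the positive class."""
    tp: int
    fn: int
    fp: int
    tn: int


def f_beta(precision: float, recall: float, beta: float) -> float:
    """Standard F-beta score; beta > 1 weights recall more than precision."""
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


def metrics(c: Confusion) -> dict[str, float]:
    """Return accuracy, precision, recall, F1 and F2 as percentages."""
    precision = c.tp / (c.tp + c.fp)
    recall = c.tp / (c.tp + c.fn)
    accuracy = (c.tp + c.tn) / (c.tp + c.tn + c.fp + c.fn)
    return {
        "Acc": 100 * accuracy,
        "Prec": 100 * precision,
        "Rec": 100 * recall,
        "F1": 100 * f_beta(precision, recall, 1.0),
        "F2": 100 * f_beta(precision, recall, 2.0),
    }


# Counts copied from the two confusion matrices.
blurred = metrics(Confusion(tp=468, fn=32, fp=6, tn=494))
foreign = metrics(Confusion(tp=237, fn=13, fp=6, tn=244))

for name, m in (("Blurred image detection", blurred),
                ("Foreign body detection", foreign)):
    print(name, {k: round(v, 1) for k, v in m.items()})
```

With these definitions the recomputed values agree with the reported ones to within 0.1 percentage point.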

| Case# | Actual Polyp# | Predicted Polyp# |
|---|---|---|
| 1 | 2 | 2 |
| 2 | 1 | 1 |
| 3 | 1 | 1 |
| 4 | 1 | 1 |
| 5 | 1 | 1 |
| 6 | 1 | 1 |
| Total polyp | 7 | 7 |

| | Good Quality Image | Polyp Image | Total |
|---|---|---|---|
| Image# | 399 | 50 | 449 |
| Predicted# | 382 | 46 | 428 |
| Recall (%) | 95.7 | 92 | 95.3 |
| False alarm rate | 21/11132 = 0.0018 = 0.18% | | |
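
The recall row of the table above follows directly from the image counts. A minimal sketch, assuming recall here means the number of correctly predicted images divided by the number of actual images in each column; the helper `recall_percent` and the `counts` dictionary are illustrative names.

```python
def recall_percent(predicted: int, actual: int) -> float:
    """Recall as a percentage: correctly predicted images over actual images."""
    return 100.0 * predicted / actual


# (predicted, actual) pairs copied from the table.
counts = {
    "Good quality image": (382, 399),
    "Polyp image": (46, 50),
}

recalls = {name: recall_percent(p, a) for name, (p, a) in counts.items()}

# The "Total" column pools both categories before dividing.
total_predicted = sum(p for p, _ in counts.values())
total_actual = sum(a for _, a in counts.values())
recalls["Total"] = recall_percent(total_predicted, total_actual)

for name, value in recalls.items():
    print(f"{name}: {value:.1f}%")
```

Note that the total recall is computed from the pooled counts (428 of 449), not as the average of the two per-category recalls.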

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hsu, C.-M.; Hsu, C.-C.; Hsu, Z.-M.; Chen, T.-H.; Kuo, T. Intraprocedure Artificial Intelligence Alert System for Colonoscopy Examination. Sensors 2023, 23, 1211. https://doi.org/10.3390/s23031211
