A Review on Immune-Inspired Node Fault Detection in Wireless Sensor Networks with a Focus on the Danger Theory

Abstract

1. Introduction

1.1. Immune-Inspired Fault Detection

1.2. Related Work

1.3. Article Outline

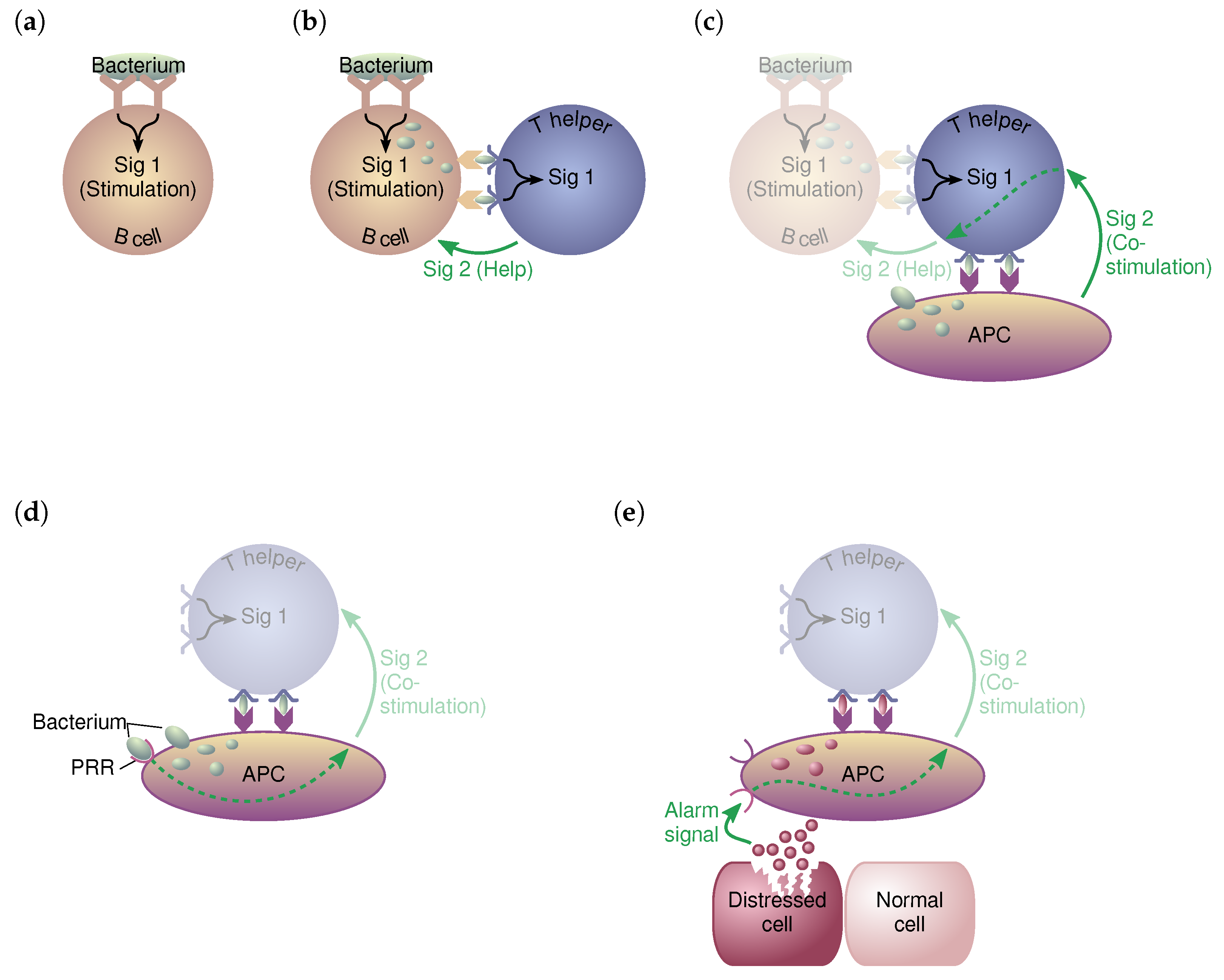

2. History and Immunological Theories

- Antigen recognition (i.e., the affinity between T cell receptors and certain antigens);

- Co-stimulation by T helper cells.

3. Unique Properties of the Immune System

- The nervous system.

- The endocrine system.

- The immune system.

3.1. Nervous, Endocrine, and Immune Systems

3.2. Innate and Adaptive Immunity

- Innate (non-specific) immunity.

- Adaptive (specific) immunity.

3.2.1. Innate Immunity

- Anatomic barriers;

- Physiologic barriers;

- Endocytic and phagocytic barriers;

- Inflammatory barriers.

3.2.2. Adaptive Immunity

- The detection of intrusions by T helper cells (Th).

- The attraction of cytotoxic T cells (Tc) for the disposal of infected cells [68].

3.3. White Blood Cells

- Dendritic cells (DCs) are a particular class of APCs that move through the blood and process information about the antigens and dead cells they encounter along the way.

- T cells are produced by the bone marrow and are responsible for destroying infected cells.

- B cells are also produced by the bone marrow and stimulate the production of antibodies.

4. The Danger Theory

4.1. Basic Concept

- Defending the host in the early stages of infection.

- Initiating adaptive immune responses.

- Determining the actual type of adaptive response through APCs (i.e., DCs).

- The tissue and the signals it contains.

- The alignment of innate and adaptive immunity by DCs.

4.2. Immunological Signals

- Apoptosis: natural death of cells (the “safe signals”).

- Necrosis: unnatural death of cells (the “danger signals”).

- PAMP: biological signatures of potential intrusions (e.g., foreign bacteria).

4.3. The Role of Dendritic Cells

4.4. Impact on Computational Problems

5. Classical AIS Theories and Their Applications

- Immune modeling.

- Theoretical AISs.

- Applied AISs.

- Negative/positive selection (mainly based on T cells; see Section 5.1)

- Clonal selection (mainly based on B cells; see Section 5.2)

- Immune network theories (i.e., idiotypic network theory; see Section 5.3)

- Danger theory (i.e., dendritic cell-based algorithms; see Section 5.4)

5.1. Negative and Positive Selection
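
A minimal Python sketch of the negative selection idea, for illustration only: candidate detectors are generated at random and discarded if they match any self sample, so the surviving detectors can only flag non-self patterns. The bit-string encoding, the Hamming-distance matching rule, and all names and parameters (`train_detectors`, `is_anomalous`, `radius`, `max_tries`) are assumptions made for this example and are not taken from the reviewed works.

```python
import random


def hamming(a, b):
    """Number of positions in which two equal-length bit tuples differ."""
    return sum(x != y for x, y in zip(a, b))


def matches(detector, sample, radius):
    """Assumed matching rule: a detector matches within a Hamming radius."""
    return hamming(detector, sample) <= radius


def train_detectors(self_set, n_detectors, length, radius, rng, max_tries=10000):
    """Generate random candidates and keep those that match no self sample."""
    detectors = []
    for _ in range(max_tries):
        if len(detectors) == n_detectors:
            break
        candidate = tuple(rng.randint(0, 1) for _ in range(length))
        # Censoring step: drop candidates that react to "self".
        if not any(matches(candidate, s, radius) for s in self_set):
            detectors.append(candidate)
    return detectors


def is_anomalous(sample, detectors, radius):
    """A sample is flagged when at least one detector matches it."""
    return any(matches(d, sample, radius) for d in detectors)


if __name__ == "__main__":
    self_set = [(0,) * 8, (0, 0, 0, 0, 0, 0, 0, 1)]
    detectors = train_detectors(self_set, 5, 8, 1, random.Random(0))
    print(len(detectors), [is_anomalous(s, detectors, 1) for s in self_set])
```

Because training and detection use the same matching rule and radius, a sample from the training self set can never be flagged; coverage of the non-self space depends on the number of detectors and the radius.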

5.2. Clonal Selection

5.3. Artificial Immune Networks

5.4. Danger-Theory-Based Approaches

6. The Dendritic Cell Algorithm

6.1. Working Principle

- Initialization (setting of various parameters);

- Cell update (event-driven update of variables);

- Data aggregation.

6.1.1. Cell Update

- The sampling of antigens;

- The update of the input signals;

- The calculation of the cell’s interim output signals.

- PAMP (P) — signals that are known to be pathogenic.

- Safe (S) — signals that are known to be normal.

- Danger (D) — signals that indicate changes in behavior.

- Inflammatory (I) — signals that amplify the other signals.

- Co-stimulatory molecule (CSM): expresses the cell’s maturation status.

- Semi-mature value: response to a safe environment.

- Mature value: response to a dangerous environment.
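
The three update steps and the signal categories listed above can be sketched in Python as follows. This is a simplified illustration, not the formulation of any particular DCA variant: the weight values, the way the inflammatory signal scales the other signals, and the names `DendriticCell`, `WEIGHTS`, and `migration_threshold` are all assumptions made for this example.

```python
from dataclasses import dataclass, field

# Illustrative weights (assumed, not taken from the article). One entry per
# output signal; the tuple holds the weights for (PAMP, danger, safe).
WEIGHTS = {
    "csm": (2.0, 1.0, 2.0),
    "semi": (0.0, 0.0, 3.0),
    "mature": (2.0, 1.0, -3.0),
}


@dataclass
class DendriticCell:
    migration_threshold: float          # CSM level at which the cell migrates
    antigens: list = field(default_factory=list)
    csm: float = 0.0                    # co-stimulatory molecule value
    semi: float = 0.0                   # semi-mature (safe context) value
    mature: float = 0.0                 # mature (dangerous context) value

    def update(self, antigen, pamp, danger, safe, inflammation=0.0):
        """Sample one antigen, process the input signals, update the outputs.

        Returns True once the accumulated CSM reaches the migration threshold.
        """
        self.antigens.append(antigen)   # step 1: antigen sampling
        gain = 1.0 + inflammation       # inflammation amplifies the other signals
        for name, (wp, wd, ws) in WEIGHTS.items():
            # steps 2 and 3: weighted sum of inputs added to each interim output
            value = (wp * pamp + wd * danger + ws * safe) * gain
            setattr(self, name, getattr(self, name) + value)
        return self.csm >= self.migration_threshold

    def context(self):
        """Context assigned to all sampled antigens when the cell migrates."""
        return "mature" if self.mature > self.semi else "semi-mature"
```

A cell that mostly sees safe signals accumulates a larger semi-mature value and presents its antigens in a safe context; PAMP and danger signals push it towards the mature context.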

6.1.2. Data Aggregation
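
In the DCA literature, aggregation is commonly expressed through the mature context antigen value (MCAV): for each antigen type, the fraction of presentations that occurred in a mature context. The Python sketch below illustrates that calculation under stated assumptions; the input format (pairs of antigen and context), the function names `mcav` and `classify`, and the default threshold of 0.5 are hypothetical choices for this example.

```python
from collections import Counter


def mcav(presentations):
    """Compute the mature context antigen value per antigen type.

    `presentations` is an iterable of (antigen, context) pairs, where
    context is either "mature" or "semi-mature".
    """
    total = Counter()
    mature = Counter()
    for antigen, context in presentations:
        total[antigen] += 1
        if context == "mature":
            mature[antigen] += 1
    # Counter returns 0 for antigens never presented in a mature context.
    return {antigen: mature[antigen] / count for antigen, count in total.items()}


def classify(scores, threshold=0.5):
    """Flag antigens whose MCAV exceeds the (assumed) anomaly threshold."""
    return {antigen: score > threshold for antigen, score in scores.items()}


if __name__ == "__main__":
    log = [("a", "mature"), ("a", "mature"), ("a", "semi-mature"), ("b", "semi-mature")]
    print(classify(mcav(log)))
```

The threshold trades false alarms against missed anomalies and would normally be tuned to the ratio of normal to anomalous items in the data.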

6.1.3. Algorithmic Properties

6.2. Variants and Further Developments

- The lifetime of the dendritic cells.

- The way antigens are sampled and stored.

- The processing of the input signals.

- The input signals (danger and safe).

- The dendritic cell population size.

- The lifetime of the single cells.

7. Literature Review on Immune-Inspired Approaches

- “AIS” OR “immune” OR “immunity”

- “anomaly” OR “abnormal” OR “fault”

- “wireless sensor network” OR “WSN” OR “sensor node”

7.1. Anomaly Detection

| Authors | Year | Scope: Data Anomaly | Scope: Network Intrusion | Scope: Fault Diagnosis | Locus: Centralized | Locus: Distributed | Immune Concept: Negative Selection | Immune Concept: Clonal Selection | Immune Concept: Immune Network | Immune Concept: Danger Theory | Target System: Sensor Networks | Target System: Computer Networks | Target System: Other | Adaptability | Learning | Notes |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Harmer et al. [124] | 2002 | ○ | ● | ○ | ○ | ● | ● | ○ | ○ | ○ | ● | ○ | ○ | ● | ◐ | |

| Sarafijanović and Le Boudec [15] | 2005 | ○ | ● | ○ | ○ | ● | ● | ● | ○ | ○ | ○ | ● | ○ | ◐ | ◐ | Initial concept confirmed by simulations |

| Boukerche et al. [173] | 2007 | ○ | ● | ○ | ● | ○ | ● | ○ | ○ | ○ | ○ | ● | ○ | ◐ | ◐ | |

| Drozda et al. [174] | 2007 | ○ | ● | ○ | ● | ○ | ● | ○ | ○ | ○ | ● | ○ | ○ | ● | ◐ | Apply random-generate-and-test process |

| Powers and He [175] | 2008 | ○ | ● | ○ | ● | ○ | ● | ○ | ○ | ○ | ○ | ● | ○ | ● | ● | Negative selection with GA |

| Liu et al. [176] | 2008 | ○ | ● | ○ | ○ | ● | ● | ● | ○ | ○ | ● | ○ | ○ | ● | ◐ | Concept simulated with TOSSIM |

| Yang et al. [177] | 2010 | ○ | ○ | ● | ○ | ● | ● | ● | ○ | ○ | ○ | ● | ○ | ◐ | ◐ | |

| Greensmith et al. [66] | 2010 | ○ | ● | ○ | ● | ○ | ○ | ○ | ○ | ● | ○ | ● | ○ | ● | ○ | Summary of seminal works on the DCA |

| Chen [111] | 2010 | ○ | ○ | ● | ○ | ● | ● | ● | ○ | ○ | ● | ○ | ○ | ◐ | ◐ | Applied to structural health monitoring |

| Laurentys et al. [178] | 2011 | ○ | ○ | ● | ● | ○ | ● | ● | ○ | ○ | ○ | ○ | ● | ◐ | ◐ | |

| Ou et al. [168] | 2013 | ○ | ● | ○ | ○ | ● | ○ | ○ | ○ | ● | ○ | ● | ○ | ● | ○ | Utilizes an adapted DCA |

| Shamshirband et al. [135] | 2014 | ○ | ● | ○ | ● | ○ | ○ | ○ | ○ | ● | ● | ○ | ○ | ◐ | ● | |

| Salvato et al. [169] | 2015 | ○ | ○ | ● | ● | ○ | ● | ● | ○ | ○ | ● | ○ | ○ | ● | ◐ | |

| Xiao et al. [179] | 2015 | ● | ○ | ● | ○ | ● | ○ | ○ | ○ | ● | ● | ○ | ○ | ● | ○ | |

| Rizwan et al. [180] | 2015 | ○ | ● | ○ | ○ | ● | ● | ○ | ○ | ○ | ● | ○ | ○ | ○ | ○ | Applies a form of artificial vaccination |

| Cui et al. [181] | 2015 | ○ | ○ | ● | ● | ○ | ○ | ○ | ○ | ● | ● | ○ | ○ | ● | ○ | DCA-based fault diagnosis |

| Mohapatra and Khilar [182] | 2017 | ○ | ○ | ● | ● | ○ | ● | ● | ○ | ○ | ● | ○ | ○ | ◐ | ◐ | |

| Sun et al. [183] | 2018 | ○ | ● | ○ | ○ | ● | ● | ○ | ○ | ○ | ● | ○ | ○ | ○ | ○ | Offline & computation-intense training |

| Alaparthy and Morgera [73] | 2018 | ○ | ● | ○ | ● | ○ | ○ | ○ | ○ | ● | ● | ○ | ○ | ● | ○ | |

| Li and Cai [184] | 2018 | ◐ | ○ | ● | ● | ○ | ○ | ○ | ○ | ● | ● | ○ | ○ | ● | ○ | |

| Alizadeh et al. [185] | 2018 | ○ | ○ | ● | ● | ○ | ○ | ○ | ○ | ● | ● | ○ | ○ | ● | ○ | DCA-based fault diagnosis |

| Akram and Raza [186] | 2018 | ○ | ○ | ● | ● | ○ | ○ | ○ | ○ | ● | ○ | ○ | ● | ● | ○ | DCA-based fault diagnosis |

| Aldhaheri et al. [187] | 2020 | ○ | ● | ○ | ● | ○ | ○ | ○ | ○ | ● | ○ | ○ | ● | ● | ● | DCA-based IDS |

| Bejoy et al. [188] | 2022 | ○ | ● | ○ | ● | ○ | ● | ● | ○ | ○ | ○ | ● | ○ | ● | ● | |

7.2. Intrusion Detection Systems (IDS)

7.3. Fault Detection

7.4. DCA-Based Fault Detection

8. Open Problems and Research Directions

8.1. Entity Mapping

8.2. Feature Selection

8.3. Learning Capabilities

8.4. Data Correlation

9. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| AIN | artificial immune network |

| AIRS | artificial immune recognition system |

| AIS | artificial immune system |

| ANN | artificial neural network |

| APC | antigen-presenting cell |

| CLONALG | clonal selection algorithm |

| CPS | cyber-physical system |

| CSM | co-stimulatory molecule |

| CSPRA | conserved self pattern recognition algorithm |

| DC | dendritic cell |

| DCA | dendritic cell algorithm |

| dDCA | deterministic dendritic cell algorithm |

| FAR | false alarm rate |

| FNR | false negative rate |

| FPR | false positive rate |

| GA | genetic algorithm |

| HIS | human immune system |

| IDS | intrusion detection system |

| INS | infectious non-self |

| IoT | Internet of Things |

| min-dDCA | minimized dDCA |

| NSA | negative selection algorithm |

| OPC UA | Open Platform Communications Unified Architecture |

| OS | operating system |

| PAMP | pathogen-associated molecular pattern |

| PCA | principal component analysis |

| PRR | pattern recognition receptor |

| SHM | structural health monitoring |

| SOM | self-organizing map |

| SNS | self/non-self |

| TLR | Toll-like receptor |

| TNR | true negative rate |

| TPR | true positive rate |

| WSN | wireless sensor network |

References

- Mahmoud, H.; Fahmy, A. WSN Applications. In Concepts, Applications, Experimentation and Analysis of Wireless Sensor Networks; Springer International Publishing: Berlin/Heidelberg, Germany, 2020.

- Widhalm, D. Sensor Node Fault Detection in Wireless Sensor Networks: An Immune-inspired Approach. Ph.D. Dissertation, Vienna University of Technology, Vienna, Austria, 2022.

- Widhalm, D.; Goeschka, K.M.; Kastner, W. An Open-Source Wireless Sensor Node Platform with Active Node-Level Reliability for Monitoring Applications. Sensors 2021, 21, 7613.

- Jurdak, R.; Wang, X.R.; Obst, O.; Valencia, P. Wireless Sensor Network Anomalies: Diagnosis and Detection Strategies. In Intelligence-Based Systems Engineering; Springer: Berlin/Heidelberg, Germany, 2011; pp. 309–325.

- Burgess, M. Computer Immunology. In Proceedings of the 12th USENIX Conference on System Administration, LISA ’98, Boston, MA, USA, 6–11 December 1998; USENIX Association: Berkeley, CA, USA, 1998; pp. 283–298.

- Somayaji, A.; Hofmeyr, S.; Forrest, S. Principles of a Computer Immune System. In Proceedings of the 1997 Workshop on New Security Paradigms, NSPW ’97, Langdale, UK, 23–26 September 1997; ACM: New York, NY, USA, 1997; pp. 75–82.

- Hong, L.; Yang, J. Danger theory of immune systems and intrusion detection systems. In Proceedings of the 2009 International Conference on Industrial Mechatronics and Automation, Changchun, China, 9–12 August 2009; pp. 208–211.

- Kim, J.; Bentley, P.; Wallenta, C.; Ahmed, M.; Hailes, S. Danger Is Ubiquitous: Detecting Malicious Activities in Sensor Networks Using the Dendritic Cell Algorithm. In Artificial Immune Systems, Proceedings of the 5th International Conference, ICARIS 2006, Oeiras, Portugal, 4–6 September 2006; Bersini, H., Carneiro, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; pp. 390–403.

- Twycross, J.; Aickelin, U. Information fusion in the immune system. Inf. Fusion 2010, 11, 35–44.

- Burgess, M.; Haugerud, H.; Straumsnes, S.; Reitan, T. Measuring System Normality. ACM Trans. Comput. Syst. 2002, 20, 125–160.

- D’haeseleer, P.; Forrest, S.; Helman, P. An immunological approach to change detection: Algorithms, analysis and implications. In Proceedings of the 1996 IEEE Symposium on Security and Privacy, Oakland, CA, USA, 6–8 May 1996; pp. 110–119.

- Greensmith, J.; Aickelin, U.; Twycross, J. Articulation and Clarification of the Dendritic Cell Algorithm. In Artificial Immune Systems, Proceedings of the 5th International Conference, ICARIS 2006, Oeiras, Portugal, 4–6 September 2006; Bersini, H., Carneiro, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; pp. 404–417.

- Aickelin, U.; Greensmith, J.; Twycross, J. Immune System Approaches to Intrusion Detection – A Review. In Artificial Immune Systems, Proceedings of the 3rd International Conference, ICARIS 2004, Catania, Italy, 13–16 September 2004; Nicosia, G., Cutello, V., Bentley, P.J., Timmis, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2004; pp. 316–329.

- Hofmeyr, S.A.; Forrest, S. Immunity by Design: An Artificial Immune System. In Proceedings of the 1st Annual Conference on Genetic and Evolutionary Computation, GECCO’99, Orlando, FL, USA, 13–17 July 1999; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 1999; Volume 2, pp. 1289–1296.

- Sarafijanović, S.; Le Boudec, J. An artificial immune system approach with secondary response for misbehavior detection in mobile ad hoc networks. IEEE Trans. Neural Netw. 2005, 16, 1076–1087.

- Kim, J.; Bentley, P.J.; Aickelin, U.; Greensmith, J.; Tedesco, G.; Twycross, J. Immune system approaches to intrusion detection – A review. Nat. Comput. 2007, 6, 413–466.

- Hart, E.; Timmis, J. Application areas of AIS: The past, the present and the future. Appl. Soft Comput. 2008, 8, 191–201.

- Anchor, K.P.; Williams, P.D.; Gunsch, G.H.; Lamont, G.B. The computer defense immune system: Current and future research in intrusion detection. In Proceedings of the 2002 Congress on Evolutionary Computation (CEC’02), Honolulu, HI, USA, 12–17 May 2002; Volume 2, pp. 1027–1032.

- Ramotsoela, D.; Abu-Mahfouz, A.; Hancke, G. A Survey of Anomaly Detection in Industrial Wireless Sensor Networks with Critical Water System Infrastructure as a Case Study. Sensors 2018, 18, 2491.

- Khalastchi, E.; Kalech, M. Fault Detection and Diagnosis in Multi-Robot Systems: A Survey. Sensors 2019, 19, 4019.

- Malhotra, N.; Bala, M. Fault Diagnosis in Wireless Sensor Networks – A Survey. In Proceedings of the 2018 4th International Conference on Computing Sciences (ICCS), Phagwara, India, 30–31 August 2018; pp. 28–34.

- Zhang, Z.; Mehmood, A.; Shu, L.; Huo, Z.; Zhang, Y.; Mukherjee, M. A Survey on Fault Diagnosis in Wireless Sensor Networks. IEEE Access 2018, 6, 11349–11364.

- Widhalm, D.; Goeschka, K.M.; Kastner, W. SoK: A Taxonomy for Anomaly Detection in Wireless Sensor Networks Focused on Node-Level Techniques. In Proceedings of the 15th International Conference on Availability, Reliability and Security (ARES ’20), online, 25–28 August 2020.

- Eichmann, K. (Ed.) The idiotypic network theory. In The Network Collective: Rise and Fall of a Scientific Paradigm; Birkhäuser Basel: Basel, Switzerland, 2008; pp. 82–94.

- Matzinger, P. The danger model: A renewed sense of self. Science 2002, 296, 301–305.

- Aickelin, U.; Cayzer, S. The Danger Theory and Its Application to Artificial Immune Systems. CoRR 2002, 2002, abs/0801.3549.

- Burnet, F.M. A Modification of Jerne’s Theory of Antibody Production using the Concept of Clonal Selection. CA Cancer J. Clin. 1976, 26, 119–121.

- Fekety, F.R. The Clonal Selection Theory of Acquired Immunity. Yale J. Biol. Med. 1960, 32, 480.

- Oudin, J.; Cazenave, P.A. Similar Idiotypic Specificities in Immunoglobulin Fractions with Different Antibody Functions or Even without Detectable Antibody Function. Proc. Natl. Acad. Sci. USA 1971, 68, 2616–2620.

- Bretscher, P.; Cohn, M. A Theory of Self-Nonself Discrimination: Paralysis and induction involve the recognition of one and two determinants on an antigen, respectively. Science 1970, 169, 1042–1049.

- Jerne, N. Towards a network theory of the immune system. Ann. Immunol. 1974, 125C, 373–389.

- Langman, R.; Cohn, M. The ‘complete’ idiotype network is an absurd immune system. Immunol. Today 1986, 7, 100–101.

- Lafferty, K.; Cunningham, A. A new analysis of allogeneic interactions. Aust. J. Exp. Biol. Med. Sci. 1975, 53, 27–42.

- Janeway, C. Approaching the Asymptote? Evolution and Revolution in Immunology. Cold Spring Harb. Symp. Quant. Biol. 1989, 54, 1–13.

- Matzinger, P. Tolerance, danger, and the extended family. Annu. Rev. Immunol. 1994, 12, 991–1045.

- Mosmann, T.R.; Livingstone, A.M. Dendritic cells: The immune information management experts. Nat. Immunol. 2004, 5, 564–566.

- Greensmith, J.; Aickelin, U.; Cayzer, S. Introducing Dendritic Cells as a Novel Immune-Inspired Algorithm for Anomaly Detection. In Artificial Immune Systems, Proceedings of the 4th International Conference, ICARIS 2005, Banff, AB, Canada, 14–17 August 2005; Jacob, C., Pilat, M.L., Bentley, P.J., Timmis, J.I., Eds.; Springer: Berlin/Heidelberg, Germany, 2005.

- Xu, Q.Z.; Wang, L. Recent advances in the artificial endocrine system. J. Zhejiang Univ. Sci. C 2011, 12, 171–183.

- Sherwood, L. Human Physiology: From Cells to Systems; Cengage Learning: Boston, MA, USA, 2015.

- Neal, J. How the Endocrine System Works; The How it Works Series; Wiley: Hoboken, NJ, USA, 2016.

- Sinha, S.; Chaczko, Z. Concepts and Observations in Artificial Endocrine Systems for IoT Infrastructure. In Proceedings of the 2017 25th International Conference on Systems Engineering (ICSEng), Las Vegas, NV, USA, 22–24 August 2017; pp. 427–430.

- Ihara, H.; Mori, K. Autonomous Decentralized Computer Control Systems. Computer 1984, 17, 57–66.

- Miyamoto, S.; Mori, K.; Ihara, H.; Matsumaru, H.; Ohshima, H. Autonomous decentralized control and its application to the rapid transit system. Comput. Ind. 1984, 5, 115–124.

- Mori, K. Autonomous Decentralized Systems Technologies and Their Application to a Train Transport Operation System. In The Kluwer International Series in Engineering and Computer Science; Springer: New York, NY, USA, 2001; pp. 89–111.

- Shen, W.M.; Chuong, C.M.; Will, P. Digital Hormone Models for Self-Organization. In Artificial Life VIII; Standish, Abbass, Bedau, Eds.; MIT Press: Cambridge, MA, USA, 2002; pp. 116–120.

- Shen, W.M.; Chuong, C.M.; Will, P. Simulating self-organization for multi-robot systems. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Lausanne, Switzerland, 30 September–4 October 2002; Volume 3, pp. 2776–2781.

- Heylighen, F.; Gershenson, C.; Staab, S.; Flake, G.W.; Pennock, D.M.; Fain, D.C.; De Roure, D.; Aberer, K.; Shen, W.M.; Dousse, O. Neurons, viscose fluids, freshwater polyp hydra-and self-organizing information systems. IEEE Intell. Syst. 2003, 18, 72–86.

- Kravitz, E. Hormonal control of behavior: Amines and the biasing of behavioral output in lobsters. Science 1988, 241, 1775–1781.

- Brooks, R.A. Integrated Systems Based on Behaviors. SIGART Bull. 1991, 2, 46–50.

- Avila-Garcia, O.; Canamero, L. Using hormonal feedback to modulate action selection in a competitive scenario. In From Animals to Animats, Proceedings of the 8th International Conference of Adaptive Behavior (SAB’04), Santa Monica, LA, USA, 24 August 2004; MIT Press: Cambridge, MA, USA, 2004; pp. 243–252.

- Avila-Garcia, O.; Canamero, L. Hormonal modulation of perception in motivation-based action selection architectures. In Proceedings of the Symposium on Agents that Want and Like; University of Hertfordshire: Hertfordshire, UK, 2005.

- Brinkschulte, U.; Pacher, M.; von Renteln, A. An Artificial Hormone System for Self-Organizing Real-Time Task Allocation in Organic Middleware. In Organic Computing; Springer: Berlin/Heidelberg, Germany, 2009; pp. 261–283.

- von Renteln, A.; Brinkschulte, U.; Pacher, M. The Artificial Hormone System – An Organic Middleware for Self-organising Real-Time Task Allocation. In Organic Computing – A Paradigm Shift for Complex Systems; Springer: Basel, Switzerland, 2011; pp. 369–384.

- de Castro, L.N.; Timmis, J.I. Artificial immune systems as a novel soft computing paradigm. Soft Comput. – Fusion Found. Methodol. Appl. 2003, 7, 526–544.

- Dasgupta, D. Artificial Immune Systems and Their Applications; Springer: Berlin/Heidelberg, Germany, 2012.

- Aickelin, U.; Bentley, P.; Cayzer, S.; Kim, J.; McLeod, J. Danger Theory: The Link between AIS and IDS? In Proceedings of the Artificial Immune Systems; Timmis, J., Bentley, P.J., Hart, E., Eds.; Springer: Berlin/Heidelberg, Germany, 2003; pp. 147–155.

- Ki-Won, Y.; Ji-Hyung, P. An Artificial Immune System Model for Multi Agents based Resource Discovery in Distributed Environments. In Proceedings of the 1st International Conference on Innovative Computing, Information and Control - Volume I (ICICIC’06), Beijing, China, 30 August–1 September 2006; Volume 1, pp. 234–239.

- Goldsby, R.A.; Goldsby, R.A.K.i. Immunology, 5th ed.; W.H. Freeman: New York, NY, USA, 2003.

- Janeway, C.A. How the Immune System Recognizes Invaders. Sci. Am. 1993, 269, 72–79.

- Janeway, C.A.; Medzhitov, R. Innate Immune Recognition. Annu. Rev. Immunol. 2002, 20, 197–216.

- Alberts, B.; Johnson, A.; Lewis, J.; Raff, M.; Roberts, K.; Walter, P. Molecular Biology of the Cell, 4th ed.; Garland Science: New York, NY, USA, 2002.

- Dasgupta, D.; Yu, S.; Majumdar, N.S. MILA – Multilevel immune learning algorithm and its application to anomaly detection. Soft Comput. 2005, 9, 172–184.

- Twycross, J.; Aickelin, U. Towards a Conceptual Framework for Innate Immunity. In Artificial Immune Systems, Proceedings of the ICARIS 2008, Phuket, Thailand, 10–13 August 2008; Jacob, C., Pilat, M.L., Bentley, P.J., Timmis, J.I., Eds.; Springer: Berlin/Heidelberg, Germany, 2005; pp. 112–125.

- Vivier, E.; Malissen, B. Innate and adaptive immunity: Specificities and signaling hierarchies revisited. Nat. Immunol. 2005, 6, 17–21.

- Kim, J.; Bentley, P. Immune Memory and Gene Library Evolution in the Dynamic Clonal Selection Algorithm. Genet. Program. Evolvable Mach. 2004, 5, 361–391.

- Greensmith, J.; Aickelin, U.; Tedesco, G. Information fusion for anomaly detection with the dendritic cell algorithm. Inf. Fusion 2010, 11, 21–34.

- Aickelin, U.; Dasgupta, D. Artificial Immune Systems. In Search Methodologies: Introductory Tutorials in Optimization and Decision Support Techniques; Springer US: Boston, MA, USA, 2005; pp. 375–399.

- Vidal, J.M.; Orozco, A.L.S.; Villalba, L.J.G. Adaptive artificial immune networks for mitigating DoS flooding attacks. Swarm Evol. Comput. 2018, 38, 94–108.

- Delves, P.J.; Martin, S.J.; Burton, D.R.; Roitt, I.M. Roitt’s Essential Immunology, 13th ed.; Essentials, Wiley-Blackwell: Hoboken, NJ, USA, 2017.

- Venkatesan, S.; Baskaran, R.; Chellappan, C.; Vaish, A.; Dhavachelvan, P. Artificial immune system based mobile agent platform protection. Comput. Stand. Interfaces 2013, 35, 365–373.

- Coico, R.; Sunshine, G. Immunology: A Short Course, 7th ed.; Coico, Immunology; Wiley-Blackwell: Hoboken, NJ, USA, 2015.

- Green, D.R.; Droin, N.; Pinkoski, M. Activation-induced cell death in T cells. Immunol. Rev. 2003, 193, 70–81.

- Alaparthy, V.T.; Morgera, S.D. A Multi-Level Intrusion Detection System for Wireless Sensor Networks Based on Immune Theory. IEEE Access 2018, 6, 47364–47373.

- Jacob, C.; Steil, S.; Bergmann, K. The Swarming Body: Simulating the Decentralized Defenses of Immunity. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2006; pp. 52–65.

- Punt, J. Kuby Immunology; W. H. Freeman: New York, NY, USA, 2018.

- Greensmith, J.; Aickelin, U. The Deterministic Dendritic Cell Algorithm. In Artificial Immune Systems, Proceedings of the ICARIS 2008, Phuket, Thailand, 10–13 August 2008; Springer: Berlin/Heidelberg, Germany, 2008.

- Bentley, P.J.; Greensmith, J.; Ujjin, S. Two Ways to Grow Tissue for Artificial Immune Systems. In Artificial Immune Systems, Proceedings of the 4th International Conference, ICARIS 2005, Banff, AB, Canada, 14–17 August 2005; Pilat, M.L., Bentley, P.J., Timmis, J.I., Eds.; Springer: Berlin/Heidelberg, Germany, 2005; pp. 139–152.

- Pradeu, T.; Cooper, E.L. The danger theory: 20 years later. Front. Immunol. 2012, 3, 287.

- Matzinger, P. An innate sense of danger. Semin. Immunol. 1998, 10, 399–415.

- Sompayrac, L.M. How the Immune System Works; Wiley-Blackwell: Hoboken, NJ, USA, 2019.

- Gallucci, S.; Matzinger, P. Danger signals: SOS to the immune system. Curr. Opin. Immunol. 2001, 13, 114–119.

- Kerr, J.F.R.; Winterford, C.M.; Harmon, B.V. Apoptosis. Its significance in cancer and cancer therapy. Cancer 1994, 73, 2013–2026.

- Steinman, R.M. Identification of a novel cell type in peripheral lymphoid organs of mice: I. morphology, quantitation, tissue distribution. J. Exp. Med. 1973, 137, 1142–1162.

- Greensmith, J.; Aickelin, U.; Cayzer, S. Detecting Danger: The Dendritic Cell Algorithm. In Robust Intelligent Systems; Springer: London, UK, 2008; pp. 89–112.

- Kapsenberg, M.L. Dendritic-cell control of pathogen-driven T-cell polarization. Nat. Rev. Immunol. 2003, 3, 984–993.

- Kim, J.; Greensmith, J.; Twycross, J.; Aickelin, U. Malicious Code Execution Detection and Response Immune System inspired by the Danger Theory. arXiv 2010, arXiv:1003.4142.

- Medzhitov, R. Decoding the Patterns of Self and Nonself by the Innate Immune System. Science 2002, 296, 298–300.

- Greensmith, J. The Dendritic Cell Algorithm. Ph.D. Thesis, University of Nottingham, Nottingham, UK, 2007.

- Del Ser, J.; Osaba, E.; Molina, D.; Yang, X.S.; Salcedo-Sanz, S.; Camacho, D.; Das, S.; Suganthan, P.N.; Coello Coello, C.A.; Herrera, F. Bio-inspired computation: Where we stand and what’s next. Swarm Evol. Comput. 2019, 48, 220–250.

- Dasgupta, D.; Yu, S.; Nino, F. Recent Advances in Artificial Immune Systems: Models and Applications. Appl. Soft Comput. 2011, 11, 1574–1587.

- Le Boudec, J.Y.; Sarafijanović, S. An Artificial Immune System Approach to Misbehavior Detection in Mobile Ad Hoc Networks. In Proceedings of the Biologically Inspired Approaches to Advanced Information Technology, Lausanne, Switzerland, 29–30 January 2004; Ijspeert, A.J., Murata, M., Wakamiya, N., Eds.; Springer: Berlin/Heidelberg, Germany, 2004; pp. 396–411.

- Timmis, J.; Hone, A.; Stibor, T.; Clark, E. Theoretical advances in artificial immune systems. Theor. Comput. Sci. 2008, 403, 11–32.

- Mak, T.W. Order from disorder sprung: Recognition and regulation in the immune system. Philos. Trans. R. Soc. Lond. Ser. A Math. Phys. Eng. Sci. 2003, 361, 1235–1250.

- Read, M.; Andrews, P.S.; Timmis, J. An Introduction to Artificial Immune Systems. In Handbook of Natural Computing; Springer: Berlin/Heidelberg, Germany, 2012; pp. 1575–1597.

- Becker, M.; Drozda, M.; Jaschke, S.; Schaust, S. Comparing performance of misbehavior detection based on Neural Networks and AIS. In Proceedings of the 2008 IEEE International Conference on Systems, Man and Cybernetics, Singapore, 12–15 October 2008; pp. 757–762.

- Yu, S.; Dasgupta, D. Conserved Self Pattern Recognition Algorithm. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2008; pp. 279–290.

- Timmis, J.; Andrews, P.; Owens, N.; Clark, E. An interdisciplinary perspective on artificial immune systems. Evol. Intell. 2008, 1, 5–26.

- Forrest, S.; Beauchemin, C. Computer immunology. Immunol. Rev. 2007, 216, 176–197.

- Dasgupta, D. Advances in artificial immune systems. IEEE Comput. Intell. Mag. 2006, 1, 40–49.

- Chang, P.C.; Huang, W.H.; Ting, C.J. A hybrid genetic-immune algorithm with improved lifespan and elite antigen for flow-shop scheduling problems. Int. J. Prod. Res. 2011, 49, 5207–5230.

- Coello, C.A.C.; Cortes, N.C. Solving Multiobjective Optimization Problems Using an Artificial Immune System. Genet. Program. Evolvable Mach. 2005, 6, 163–190.

- Luo, X.; Wei, W. A New Immune Genetic Algorithm and Its Application in Redundant Manipulator Path Planning. J. Robot. Syst. 2004, 21, 141–151.

- Graaff, A.; Engelbrecht, A. Optimised Coverage of Non-self with Evolved Lymphocytes in an Artificial Immune System. Int. J. Comput. Intell. Res. Res. India Publ. 2006, 2, 973–1873.

- Kim, J.; Bentley, P. Negative Selection and Niching by an Artificial Immune System for Network Intrusion Detection. In Proceedings of the Late Breaking Papers at the 1999 Genetic and Evolutionary Computation Conference, Orlando, FL, USA, 13 July 1999; pp. 149–158.

- Forrest, S.; Perelson, A.S.; Allen, L.; Cherukuri, R. Self-nonself discrimination in a computer. In Proceedings of the 1994 IEEE Computer Society Symposium on Research in Security and Privacy, Oakland, CA, USA, 16–18 May 1994; pp. 202–212.

- Cohn, M.; Mitchison, N.A.; Paul, W.E.; Silverstein, A.M.; Talmage, D.W.; Weigert, M. Reflections on the clonal-selection theory. Nat. Rev. Immunol. 2007, 7, 823–830.

- Costa Silva, G.; Dasgupta, D. A Survey of Recent Works in Artificial Immune Systems. In Handbook on Computational Intelligence; World Scientific: Singapore, 2016; Volume 2, pp. 547–586.

- Dasgupta, D.; Gonzalez, F. An immunity-based technique to characterize intrusions in computer networks. IEEE Trans. Evol. Comput. 2002, 6, 281–291.

- Mostardinha, P.; Faria, B.F.; Zúquete, A.; de Abreu, F.V. A Negative Selection Approach to Intrusion Detection. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2012; pp. 178–190. [Google Scholar] [CrossRef]

- Greensmith, J.; Feyereisl, J.; Aickelin, U. The DCA: SOMe Comparison: A comparative study between two biologically-inspired algorithms. arXiv 2008, arXiv:1006.1518. [Google Scholar] [CrossRef]

- Chen, B. Agent-based artificial immune system approach for adaptive damage detection in monitoring networks. J. Netw. Comput. Appl. 2010, 33, 633–645. [Google Scholar] [CrossRef]

- Ayara, M.; Timmis, J.; Lemos, R.; De Castro, L.; Duncan, R. Negative selection: How to generate detectors. In Proceedings of the 1st International Conference on Artificial Immune Systems (ICARIS), Canterbury, UK, 9–11 September 2002. [Google Scholar]

- Lu, H. Artificial Immune System for Anomaly Detection. In Proceedings of the 2008 IEEE International Symposium on Knowledge Acquisition and Modeling Workshop, Wuhan, China, 21–22 December 2008; pp. 340–343. [Google Scholar] [CrossRef]

- Balthrop, J.; Esponda, F.; Forrest, S.; Glickman, M. Coverage and Generalization in an Artificial Immune System. In Proceedings of the 4th Annual Conference on Genetic and Evolutionary Computation, GECCO’02, New York, NY, USA, 9–13 July 2002; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 2002; pp. 3–10. [Google Scholar]

- Shapiro, J.M.; Lamont, G.B.; Peterson, G.L. An Evolutionary Algorithm to Generate Hyper-ellipsoid Detectors for Negative Selection. In Proceedings of the 7th Annual Conference on Genetic and Evolutionary Computation, GECCO ’05, Washington, DC, USA, 25–29 June 2005; ACM: New York, NY, USA, 2005; pp. 337–344. [Google Scholar] [CrossRef]

- Kim, J.; Bentley, P.J. Towards an artificial immune system for network intrusion detection: An investigation of clonal selection with a negative selection operator. In Proceedings of the 2001 Congress on Evolutionary Computation (IEEE Cat. No.01TH8546), Seoul, Korea, 27–30 May 2001; Volume 2, pp. 1244–1252. [Google Scholar] [CrossRef]

- Ji, Z.; Dasgupta, D. Augmented negative selection algorithm with variable-coverage detectors. In Proceedings of the Congress on Evolutionary Computation, Portland, OR, USA, 19–23 June 2004; Volume 1, pp. 1081–1088. [Google Scholar] [CrossRef]

- Ji, Z.; Dasgupta, D. Real-Valued Negative Selection Algorithm with Variable-Sized Detectors. In Genetic and Evolutionary Computation—GECCO 2004; Springer: Berlin/Heidelberg, Germany, 2004; pp. 287–298. [Google Scholar] [CrossRef]

- González, F. A Study of Artificial Immune Systems Applied to Anomaly Detection. Ph.D. Thesis, The University of Memphis, Memphis, TN, USA, 2003. [Google Scholar]

- Timmis, J.; Andrews, P.; Hart, E. On artificial immune systems and swarm intelligence. Swarm Intell. 2010, 4, 247–273. [Google Scholar] [CrossRef]

- Nanas, N.; Uren, V.S.; de Roeck, A. Nootropia: A User Profiling Model Based on a Self-Organising Term Network. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2004; pp. 146–160. [Google Scholar] [CrossRef]

- McEwan, C.; Hart, E. Representation in the (Artificial) Immune System. J. Math. Model. Algorithms 2009, 8, 125–149. [Google Scholar] [CrossRef]

- González, F.; Dasgupta, D.; Gómez, J. The Effect of Binary Matching Rules in Negative Selection. In Genetic and Evolutionary Computation—GECCO 2003; Springer: Berlin/Heidelberg, Germany, 2003; pp. 195–206. [Google Scholar] [CrossRef]

- Harmer, P.K.; Williams, P.D.; Gunsch, G.H.; Lamont, G.B. An artificial immune system architecture for computer security applications. IEEE Trans. Evol. Comput. 2002, 6, 252–280. [Google Scholar] [CrossRef]

- Farmer, J.; Packard, N.H.; Perelson, A.S. The immune system, adaptation, and machine learning. Phys. D Nonlinear Phenom. 1986, 22, 187–204. [Google Scholar] [CrossRef]

- Kim, J.; Bentley, P.J. An Evaluation of Negative Selection in an Artificial Immune System for Network Intrusion Detection. In Proceedings of the Genetic and Evolutionary Computation Conference GECCO ’01, San Francisco, CA, USA, 7–11 July 2001; Morgan Kaufmann: San Francisco, CA, USA, 2001; pp. 1330–1337. [Google Scholar]

- Balthrop, J.; Forrest, S.; Glickman, M. Revisiting LISYS: Parameters and normal behavior. In Proceedings of the 2002 Congress on Evolutionary Computation (CEC’02), Honolulu, HI, USA, 12–17 May 2002. [Google Scholar] [CrossRef]

- Gao, X.Z.; Ovaska, S.J.; Wang, X. Genetic Algorithms-based Detector Generation in Negative Selection Algorithm. In Proceedings of the 2006 IEEE Mountain Workshop on Adaptive and Learning Systems, Logan, UT, USA, 24–26 July 2006; pp. 133–137. [Google Scholar] [CrossRef]

- Gao, X.Z.; Ovaska, S.J.; Wang, X.; Chow, M. Clonal Optimization of Negative Selection Algorithm with Applications in Motor Fault Detection. In Proceedings of the 2006 IEEE International Conference on Systems, Man and Cybernetics, Taipei, Taiwan, 8–11 October 2006; Volume 6, pp. 5118–5123. [Google Scholar] [CrossRef]

- Cayzer, S.; Smith, J. Gene Libraries: Coverage, Efficiency and Diversity. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2006; pp. 136–149. [Google Scholar] [CrossRef]

- Gomez, J.; Gonzalez, F.; Dasgupta, D. An immuno-fuzzy approach to anomaly detection. In Proceedings of the 12th IEEE International Conference on Fuzzy Systems, 2003, FUZZ ’03, St. Louis, MO, USA, 25–28 May 2003; Volume 2, pp. 1219–1224. [Google Scholar] [CrossRef]

- Gonzalez, F.; Gomez, J.; Kaniganti, M.; Dasgupta, D. An evolutionary approach to generate fuzzy anomaly (attack) signatures. In Proceedings of the IEEE Systems, Man and Cybernetics Society Information Assurance Workshop, New York, NY, USA, 18–20 June 2003; pp. 251–259. [Google Scholar] [CrossRef]

- Esponda, F.; Forrest, S.; Helman, P. A Formal Framework for Positive and Negative Detection Schemes. Trans. Sys. Man Cybern. Part B 2004, 34, 357–373. [Google Scholar] [CrossRef]

- Hang, X.; Dai, H. Applying Both Positive and Negative Selection to Supervised Learning for Anomaly Detection. In Proceedings of the 7th Annual Conference on Genetic and Evolutionary Computation, GECCO ’05, Boston, MA, USA, 9–13 July 2005; ACM: New York, NY, USA, 2005; pp. 345–352. [Google Scholar] [CrossRef]

- Shamshirband, S.; Anuar, N.B.; Kiah, M.L.M.; Rohani, V.A.; Petković, D.; Misra, S.; Khan, A.N. Co-FAIS: Cooperative fuzzy artificial immune system for detecting intrusion in wireless sensor networks. J. Netw. Comput. Appl. 2014, 42, 102–117. [Google Scholar] [CrossRef]

- de Castro, P.A.D.; Zuben, F.J.V. BAIS: A Bayesian Artificial Immune System for the effective handling of building blocks. Inf. Sci. 2009, 179, 1426–1440. [Google Scholar] [CrossRef]

- Castro, P.A.D.; Zuben, F.J.V. MOBAIS: A Bayesian Artificial Immune System for Multi-Objective Optimization. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2008; pp. 48–59. [Google Scholar] [CrossRef]

- Wang, W.; Gao, S.; Tang, Z. A Complex Artificial Immune System. In Proceedings of the 2008 Fourth International Conference on Natural Computation, Jinan, China, 18–20 October 2008; Volume 6, pp. 597–601. [Google Scholar] [CrossRef]

- Dasgupta, D.; Forrest, S. An Anomaly Detection Algorithm Inspired by the Immune System. In Artificial Immune Systems and Their Applications; Springer: Berlin/Heidelberg, Germany, 1999; pp. 262–277. [Google Scholar] [CrossRef]

- Tyrrell, A.M. Computer know thy self!: A biological way to look at fault-tolerance. In Proceedings of the 25th EUROMICRO Conference, Informatics: Theory and Practice for the New Millennium, Milan, Italy, 8–10 September 1999; Volume 2, pp. 129–135. [Google Scholar] [CrossRef]

- Coello Coello, C.A.; Cruz Cortes, N. A parallel implementation of an artificial immune system to handle constraints in genetic algorithms: Preliminary results. In Proceedings of the 2002 Congress on Evolutionary Computation. CEC’02 (Cat. No.02TH8600), Honolulu, HI, USA, 12–17 May 2002; Volume 1, pp. 819–824. [Google Scholar] [CrossRef]

- Liu, S.; Li, T.; Wang, D.; Zhao, K.; Gong, X.; Hu, X.; Xu, C.; Liang, G. Immune Multi-agent Active Defense Model for Network Intrusion. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2006; pp. 104–111. [Google Scholar] [CrossRef]

- Dasgupta, D. Immunity-Based Intrusion Detection System: A General Framework; Technical Report; The University of Memphis: Memphis, TN, USA, 1999. [Google Scholar]

- Ji, Z.; Dasgupta, D. Revisiting Negative Selection Algorithms. Evol. Comput. 2007, 15, 223–251. [Google Scholar] [CrossRef]

- Castro, L.N.D.; Zuben, F.J.V. The Clonal Selection Algorithm with Engineering Applications. In Proceedings of the GECCO 2002, Workshop, New York, NY, USA, 9–13 July 2002; Morgan Kaufmann: New York, NY, USA, 2002; pp. 36–37. [Google Scholar]

- Watkins, A.; Timmis, J. Exploiting Parallelism Inherent in AIRS, an Artificial Immune Classifier. In Artificial Immune Systems; Nicosia, G., Cutello, V., Bentley, P.J., Timmis, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2004; pp. 427–438. [Google Scholar]

- Alaparthy, V.T.; Amouri, A.; Morgera, S.D. A Study on the Adaptability of Immune models for Wireless Sensor Network Security. Procedia Comput. Sci. 2018, 145, 13–19. [Google Scholar] [CrossRef]

- Ciccazzo, A.; Conca, P.; Nicosia, G.; Stracquadanio, G. An Advanced Clonal Selection Algorithm with Ad-Hoc Network-Based Hypermutation Operators for Synthesis of Topology and Sizing of Analog Electrical Circuits. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2008; pp. 60–70. [Google Scholar] [CrossRef]

- Timmis, J.; Neal, M. A resource limited artificial immune system for data analysis. Knowl.-Based Syst. 2001, 14, 121–130. [Google Scholar] [CrossRef]

- Watkins, A.; Timmis, J.; Boggess, L. Artificial Immune Recognition System (AIRS): An Immune-Inspired Supervised Learning Algorithm. Genet. Program. Evolvable Mach. 2004, 5, 291–317. [Google Scholar] [CrossRef]

- Goodman, D.E.; Boggess, L.; Watkins, A. An investigation into the source of power for AIRS, an artificial immune classification system. In Proceedings of the International Joint Conference on Neural Networks, Portland, OR, USA, 20–24 July 2003; Volume 3, pp. 1678–1683. [Google Scholar] [CrossRef]

- Fang, L.; Bo, Q.; Rongsheng, C. Intrusion Detection Based on Immune Clonal Selection Algorithms. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2004; pp. 1226–1232. [Google Scholar] [CrossRef]

- Ishida, Y. Fully distributed diagnosis by PDP learning algorithm: Towards immune network PDP model. In Proceedings of the 1990 IJCNN International Joint Conference on Neural Networks, San Diego, CA, USA, 17–21 June 1990; pp. 777–782. [Google Scholar] [CrossRef]

- Hunt, J.E.; Cooke, D.E. Learning using an artificial immune system. J. Netw. Comput. Appl. 1996, 19, 189–212. [Google Scholar] [CrossRef]

- de Castro, L.N.; Zuben, F.J.V. aiNet: An Artificial Immune Network for Data Analysis. In Data Mining; IGI Global: Hershey, PA, USA, 2001; pp. 231–260. [Google Scholar] [CrossRef]

- Zhang, C.; Yi, Z. An Artificial Immune Network Model Applied to Data Clustering and Classification. In Advances in Neural Networks—ISNN 2007; Springer: Berlin/Heidelberg, Germany, 2007; pp. 526–533. [Google Scholar] [CrossRef]

- Timmis, J.; Neal, M.; Hunt, J. An artificial immune system for data analysis. Biosystems 2000, 55, 143–150. [Google Scholar] [CrossRef]

- Greensmith, J.; Twycross, J.; Aickelin, U. Dendritic Cells for Anomaly Detection. In Proceedings of the 2006 IEEE International Conference on Evolutionary Computation, Vancouver, BC, Canada, 16–21 July 2006. [Google Scholar] [CrossRef]

- Twycross, J. Integrated Innate and Adaptive Artificial Immune Systems Applied to Process Anomaly Detection. Ph.D. Thesis, University of Nottingham, Nottingham, UK, 2007. [Google Scholar]

- Aickelin, U.; Greensmith, J. Sensing danger: Innate immunology for intrusion detection. Inf. Secur. Tech. Rep. 2007, 12, 218–227. [Google Scholar] [CrossRef]

- Greensmith, J.; Aickelin, U. Dendritic Cells for Real-Time Anomaly Detection. SSRN Electr. J. 2006. [Google Scholar] [CrossRef]

- Greensmith, J. Migration Threshold Tuning in the Deterministic Dendritic Cell Algorithm. In Proceedings of the 8th International Conference on the Theory and Practice of Natural Computing (TPNC’19), Kingston, ON, Canada, 9–11 December 2019; Volume 2. [Google Scholar]

- Pinto, R.; Gonçalves, G.; Tovar, E.; Delsing, J. Attack Detection in Cyber-Physical Production Systems using the Deterministic Dendritic Cell Algorithm. In Proceedings of the 25th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Vienna, Austria, 8–11 September 2020. [Google Scholar] [CrossRef]

- Oates, R.; Kendall, G.; Garibaldi, J.M. Frequency analysis for dendritic cell population tuning. Evol. Intell. 2008, 1, 145–157. [Google Scholar] [CrossRef]

- Gu, F.; Greensmith, J.; Aickelin, U. Integrating Real-Time Analysis with the Dendritic Cell Algorithm through Segmentation. In Proceedings of the 11th Annual Conference on Genetic and Evolutionary Computation, GECCO ’09, Montreal, QC, Canada, 8–12 July 2009; Association for Computing Machinery: New York, NY, USA, 2009. [Google Scholar] [CrossRef]

- Musselle, C.J. Insights into the Antigen Sampling Component of the Dendritic Cell Algorithm. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2010. [Google Scholar] [CrossRef]

- Aldhaheri, S.; Alghazzawi, D.; Cheng, L.; Barnawi, A.; Alzahrani, B.A. Artificial Immune Systems approaches to secure the internet of things: A systematic review of the literature and recommendations for future research. J. Netw. Comput. Appl. 2020, 157. [Google Scholar] [CrossRef]

- Ou, C.M.; Ou, C.R.; Wang, Y.T. Agent-Based Artificial Immune Systems (ABAIS) for Intrusion Detections: Inspiration from Danger Theory. In Agent and Multi-Agent Systems in Distributed Systems—Digital Economy and E-Commerce; Springer: Berlin/Heidelberg, Germany, 2013; pp. 67–94. [Google Scholar] [CrossRef]

- Salvato, M.; De Vito, S.; Guerra, S.; Buonanno, A.; Fattoruso, G.; Di Francia, G. An adaptive immune based anomaly detection algorithm for smart WSN deployments. In Proceedings of the 2015 XVIII AISEM Annual Conference, Trento, Italy, 3–5 February 2015; pp. 1–5. [Google Scholar] [CrossRef]

- Chao, R.; Tan, Y. A Virus Detection System Based on Artificial Immune System. In Proceedings of the 2009 International Conference on Computational Intelligence and Security, Beijing, China, 11–14 December 2009; Volume 1, pp. 6–10. [Google Scholar] [CrossRef]

- Tan, Y.; Mi, G.; Zhu, Y.; Deng, C. Artificial immune system based methods for spam filtering. In Proceedings of the 2013 IEEE International Symposium on Circuits and Systems (ISCAS), Beijing, China, 19–23 May 2013; pp. 2484–2488. [Google Scholar] [CrossRef]

- Gadi, M.F.A.; Wang, X.; do Lago, A.P. Credit Card Fraud Detection with Artificial Immune System. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2008; pp. 119–131. [Google Scholar] [CrossRef]

- Boukerche, A.; Machado, R.B.; Jucá, K.R.; Sobral, J.B.M.; Notare, M.S. An agent based and biological inspired real-time intrusion detection and security model for computer network operations. Comput. Commun. 2007, 30, 2649–2660, Sensor-Actuated Networks. [Google Scholar] [CrossRef]

- Drozda, M.; Schaust, S.; Szczerbicka, H. AIS for misbehavior detection in wireless sensor networks: Performance and design principles. In Proceedings of the 2007 IEEE Congress on Evolutionary Computation, Singapore, 25–28 September 2007; pp. 3719–3726. [Google Scholar] [CrossRef]

- Powers, S.T.; He, J. A hybrid artificial immune system and Self Organising Map for network intrusion detection. Inf. Sci. 2008, 178, 3024–3042. [Google Scholar] [CrossRef]

- Yang, L.; Yang, L.; Fengqi, Y. Immunity-based intrusion detection for wireless sensor networks. In Proceedings of the 2008 IEEE World Congress on Computational Intelligence, Hong Kong, China, 1–6 June 2008; pp. 439–444. [Google Scholar] [CrossRef]

- Yang, H.; Elhadef, M.; Nayak, A.; Yang, X. Network Fault Diagnosis: An Artificial Immune System Approach. In Proceedings of the 2008 14th IEEE International Conference on Parallel and Distributed Systems, Melbourne, VIC, Australia, 8–10 December 2008; pp. 463–469. [Google Scholar] [CrossRef]

- Laurentys, C.; Palhares, R.; Caminhas, W. A novel Artificial Immune System for fault behavior detection. Expert Syst. Appl. 2011, 38, 6957–6966. [Google Scholar] [CrossRef]

- Xiao, Y.; Wang, W.; Fang, D.; Gao, H.; Chen, X.; Zeng, Y.; Liu, B. A survival condition model of earthen sites based on the danger theory. In Proceedings of the 11th International Conference on Natural Computation (ICNC), Zhangjiajie, China, 15–17 August 2015; pp. 354–362. [Google Scholar] [CrossRef]

- Rizwan, R.; Khan, F.A.; Abbas, H.; Chauhdary, S.H. Anomaly Detection in Wireless Sensor Networks Using Immune-Based Bioinspired Mechanism. Int. J. Distrib. Sens. Netw. 2015, 11, 84952. [Google Scholar] [CrossRef]

- Cui, D.; Zhang, Q.; Xiong, J.; Li, Q.; Liu, M. Fault diagnosis research of rotating machinery based on Dendritic Cell Algorithm. In Proceedings of the IEEE International Conference on Information and Automation, Lijiang, China, 8–10 August 2015. [Google Scholar] [CrossRef]

- Mohapatra, S.; Khilar, P.M. Artificial immune system based fault diagnosis in large wireless sensor network topology. In Proceedings of the TENCON 2017—2017 IEEE Region 10 Conference, Penang, Malaysia, 5–8 November 2017; pp. 2687–2692. [Google Scholar] [CrossRef]

- Sun, Z.; Xu, Y.; Liang, G.; Zhou, Z. An Intrusion Detection Model for Wireless Sensor Networks With an Improved V-Detector Algorithm. IEEE Sens. J. 2018, 18, 1971–1984. [Google Scholar] [CrossRef]

- Li, W.; Cai, X. Intelligent Immune System for Sustainable Manufacturing. In Proceedings of the 2018 IEEE 22nd International Conference on Computer Supported Cooperative Work in Design (CSCWD), Nanjing, China, 9–11 May 2018; pp. 190–195. [Google Scholar] [CrossRef]

- Alizadeh, E.; Meskin, N.; Khorasani, K. A Dendritic Cell Immune System Inspired Scheme for Sensor Fault Detection and Isolation of Wind Turbines. IEEE Trans. Ind. Inform. 2018, 14, 545–555. [Google Scholar] [CrossRef]

- Akram, M.; Raza, A. Towards the development of robot immune system: A combined approach involving innate immune cells and T-lymphocytes. Biosystems 2018, 172, 52–67. [Google Scholar] [CrossRef] [PubMed]

- Aldhaheri, S.; Alghazzawi, D.; Cheng, L.; Alzahrani, B.; Al-Barakati, A. DeepDCA: Novel Network-Based Detection of IoT Attacks Using Artificial Immune System. Appl. Sci. 2020, 10, 1909. [Google Scholar] [CrossRef]

- Bejoy, B.; Raju, G.; Swain, D.; Acharya, B.; Hu, Y.C. A generic cyber immune framework for anomaly detection using artificial immune systems. Appl. Soft Comput. 2022, 130, 109680. [Google Scholar] [CrossRef]

- Dasgupta, D. An Overview of Artificial Immune Systems and Their Applications. In Artificial Immune Systems and Their Applications; Springer: Berlin/Heidelberg, Germany, 1999; pp. 3–21. [Google Scholar] [CrossRef]

- Kim, J.; Bentley, P. An Artificial Immune Model for Network Intrusion Detection. In Proceedings of the Conference on Intelligent Techniques and Soft Computing (EUFIT’99), Aachen, Germany, 13–16 September 1999. [Google Scholar]

- Shafi, K.; Abbass, H.A. Biologically-inspired Complex Adaptive Systems approaches to Network Intrusion Detection. Inf. Secur. Tech. Rep. 2007, 12, 209–217. [Google Scholar] [CrossRef]

- Fernandes, D.A.; Freire, M.M.; Fazendeiro, P.A.; Inácio, P.R. Applications of artificial immune systems to computer security: A survey. J. Inf. Secur. Appl. 2017, 35, 138–159. [Google Scholar] [CrossRef]

- Naik, B.; Mehta, A.; Yagnik, H.; Shah, M. The impacts of artificial intelligence techniques in augmentation of cybersecurity: A comprehensive review. Complex Intell. Syst. 2021, 8, 1763–1780. [Google Scholar] [CrossRef]

- Fasanotti, L.; Dovere, E.; Cagnoni, E.; Cavalieri, S. An Application of Artificial Immune System in a Wastewater Treatment Plant. IFAC-PapersOnLine 2016, 49, 55–60. [Google Scholar] [CrossRef]

- Gong, M.; Jiao, L.; Ma, W.; Ma, J. Intelligent multi-user detection using an artificial immune system. Sci. China Ser. F Inf. Sci. 2009, 52, 2342–2353. [Google Scholar] [CrossRef]

- Zuccolotto, M.; Pereira, C.E.; Fasanotti, L.; Cavalieri, S.; Lee, J. Designing an Artificial Immune Systems for Intelligent Maintenance Systems. IFAC-PapersOnLine 2015, 48, 1451–1456. [Google Scholar] [CrossRef]

- Bradley, D.W.; Tyrrell, A.M. The architecture for a hardware immune system. In Proceedings of the 3rd NASA/DoD Workshop on Evolvable Hardware, EH-2001, Long Beach, CA, USA, 12–14 July 2001; pp. 193–200. [Google Scholar] [CrossRef]

- Kayama, M.; Sugita, Y.; Morooka, Y.; Fukuoka, S. Distributed diagnosis system combining the immune network and learning vector quantization. In Proceedings of the IECON ’95—21st Annual Conference on IEEE Industrial Electronics, Orlando, FL, USA, 6–10 November 1995; Volume 2, pp. 1531–1536. [Google Scholar] [CrossRef]

- Liu, W.; Chen, B. Optimal control of mobile monitoring agents in immune-inspired wireless monitoring networks. J. Netw. Comput. Appl. 2011, 34, 1818–1826. [Google Scholar] [CrossRef]

- Mohapatra, S.; Khilar, P.M. Immune Inspired Fault Diagnosis in Wireless Sensor Network. In Nature Inspired Computing for Wireless Sensor Networks; Springer: Singapore, 2020; Chapter 5. [Google Scholar] [CrossRef]

- Chelly, Z.; Elouedi, Z. A survey of the dendritic cell algorithm. Knowl. Inf. Syst. 2015, 48, 505–535. [Google Scholar] [CrossRef]

- Sarafijanović, S.; Le Boudec, J.Y. An Artificial Immune System for Misbehavior Detection in Mobile Ad-Hoc Networks with Virtual Thymus, Clustering, Danger Signal, and Memory Detectors. In Artificial Immune Systems, Proceedings of the Third International Conference, ICARIS 2004, Catania, Sicily, Italy, 13–16 September 2004; Nicosia, G., Cutello, V., Bentley, P.J., Timmis, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2004; pp. 342–356. [Google Scholar]

- Widhalm, D.; Goeschka, K.M.; Kastner, W. Node-level indicators of soft faults in wireless sensor networks. In Proceedings of the 40th International Symposium on Reliable Distributed Systems (SRDS ’21), Chicago, IL, USA, 21–24 September 2021; pp. 13–22. [Google Scholar] [CrossRef]

- Elisa, N.; Yang, L.; Fu, X.; Naik, N. Dendritic Cell Algorithm Enhancement Using Fuzzy Inference System for Network Intrusion Detection. In Proceedings of the IEEE International Conference on Fuzzy Systems (FUZZ-IEEE), New Orleans, LA, USA, 23–26 June 2019. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Widhalm, D.; Goeschka, K.M.; Kastner, W. A Review on Immune-Inspired Node Fault Detection in Wireless Sensor Networks with a Focus on the Danger Theory. Sensors 2023, 23, 1166. https://doi.org/10.3390/s23031166
