Disentangled Dynamic Deviation Transformer Networks for Multivariate Time Series Anomaly Detection

Abstract

1. Introduction

1. Graph convolution-based models cannot accurately describe changing dynamics in multivariate time series when modeling inter-sensor correlations. Spatial dependencies are highly dynamic because of unknown topologies, changing real-world conditions, and multiple interacting factors, so the sensors correlated with a given sensor vary from one time step to the next. The studies [12,13] model the dependencies between sensors through a learnable embedding of each node in the graph. Although these models improve on earlier deep learning models, their performance is still far from satisfactory, because the learned dependencies remain fixed after training, and a fixed graph structure alone cannot represent correlations that change over time. Moreover, for robust time series prediction, the ideal algorithm should go beyond local connectivity and extract multiscale structural features and long-range dependencies, since sensors that are far apart in the learned sensor relationship graph can still have hidden correlations. It is therefore necessary to capture these dynamic spatial dependencies and hidden correlations efficiently to improve time series anomaly detection.

2. Long-term temporal dependence has often been overlooked in previous work. It refers to the fact that the current state of a system may be influenced by its state long ago. Gated recurrent neural networks, including gated recurrent units (GRU) and long short-term memory (LSTM), are among the most effective sequential models in practical applications. The papers [7,15] learn temporal dependence with LSTM to capture anomalous patterns in multivariate time series. However, because an LSTM cannot adequately encode long sequences into intermediate vectors, it struggles to capture temporal correlations that do not match its structure. In addition, sequential propagation makes these models computationally expensive and limits their scalability. Modeling long-term temporal dependence in multivariate time series therefore remains highly challenging.
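To make the "fixed after training" limitation concrete, the following minimal sketch builds a sensor graph from node embeddings by linking each sensor to its most similar neighbors under cosine similarity. It is an illustration in the spirit of embedding-based graph learning such as [12,13], not a reproduction of either method; the embedding values, the function names, and the choice of `k` are all hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm

def top_k_neighbors(embeddings, k):
    """For each sensor, return the indices of its k most similar other sensors."""
    graph = {}
    for i, e_i in enumerate(embeddings):
        scored = [(cosine(e_i, e_j), j) for j, e_j in enumerate(embeddings) if j != i]
        scored.sort(reverse=True)
        graph[i] = [j for _, j in scored[:k]]
    return graph

# Hypothetical embeddings for four sensors, as they might look after training.
embeddings = [
    [1.0, 0.0],
    [0.9, 0.1],
    [0.0, 1.0],
    [0.1, 0.9],
]

# The graph depends only on the embeddings, never on the current input window,
# so every time step at inference sees the same neighbors.
graph = top_k_neighbors(embeddings, k=1)
print(graph)
```

Because `graph` is a function of the trained embeddings alone, two windows with very different inter-sensor behavior are still processed with identical edges, which is the gap that dynamic correlation modeling is meant to close.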

1. We design a novel method that eliminates redundant dependencies in the sensor relationship graph by combining a disentangled method with graph convolution, enabling a powerful multiscale aggregator to capture fixed correlations between sensors effectively. The method also models highly dynamic inter-sensor correlations and captures hidden feature patterns of multivariate time series, alleviating the difficulty that graph convolution models have in modeling inter-sensor correlations and in accurately describing changes in temporal dynamics.

2. We propose a method for capturing long-term temporal dependencies. It learns hidden long-term dependencies by considering multiscale correlations at different time steps, and it extends easily to long sequences because long-range dependencies are processed in parallel.

3. Combining inter-sensor dependencies with long-term temporal dependencies yields a robust anomaly detection model with multiscale receptive fields across the sensor and time dimensions. The disentangled method further improves the model’s performance, and the model remains interpretable.
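The parallel treatment of long-range dependencies mentioned in the second contribution can be illustrated with plain scaled dot-product self-attention [17]. The sketch below is not the paper's temporal block: it assumes a single head, uses the inputs directly as queries, keys, and values (no learned projections), and all names are hypothetical.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Scaled dot-product self-attention over T time-step vectors of size d.

    Assumes Q = K = V = x. Each query is handled independently of the
    others, so no step waits on a hidden state from the previous step.
    """
    d = len(x[0])
    outputs, weights = [], []
    for q in x:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in x]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(w_t * v[j] for w_t, v in zip(w, x)) for j in range(d)])
    return outputs, weights

# Hypothetical window of four time steps; the first and last steps are identical.
window = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [1.0, 0.0]]
outputs, weights = self_attention(window)
print(weights[0])
```

In this toy window the first step attends to the last step exactly as strongly as to itself, regardless of the distance between them, whereas a recurrent model would have to carry that information through every intermediate state.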

2. Related Work

2.1. Anomaly Detection in Time Series

2.2. Disentangled Representation Learning

2.3. Transformer

3. The Proposed Model

3.1. Problem Formulation

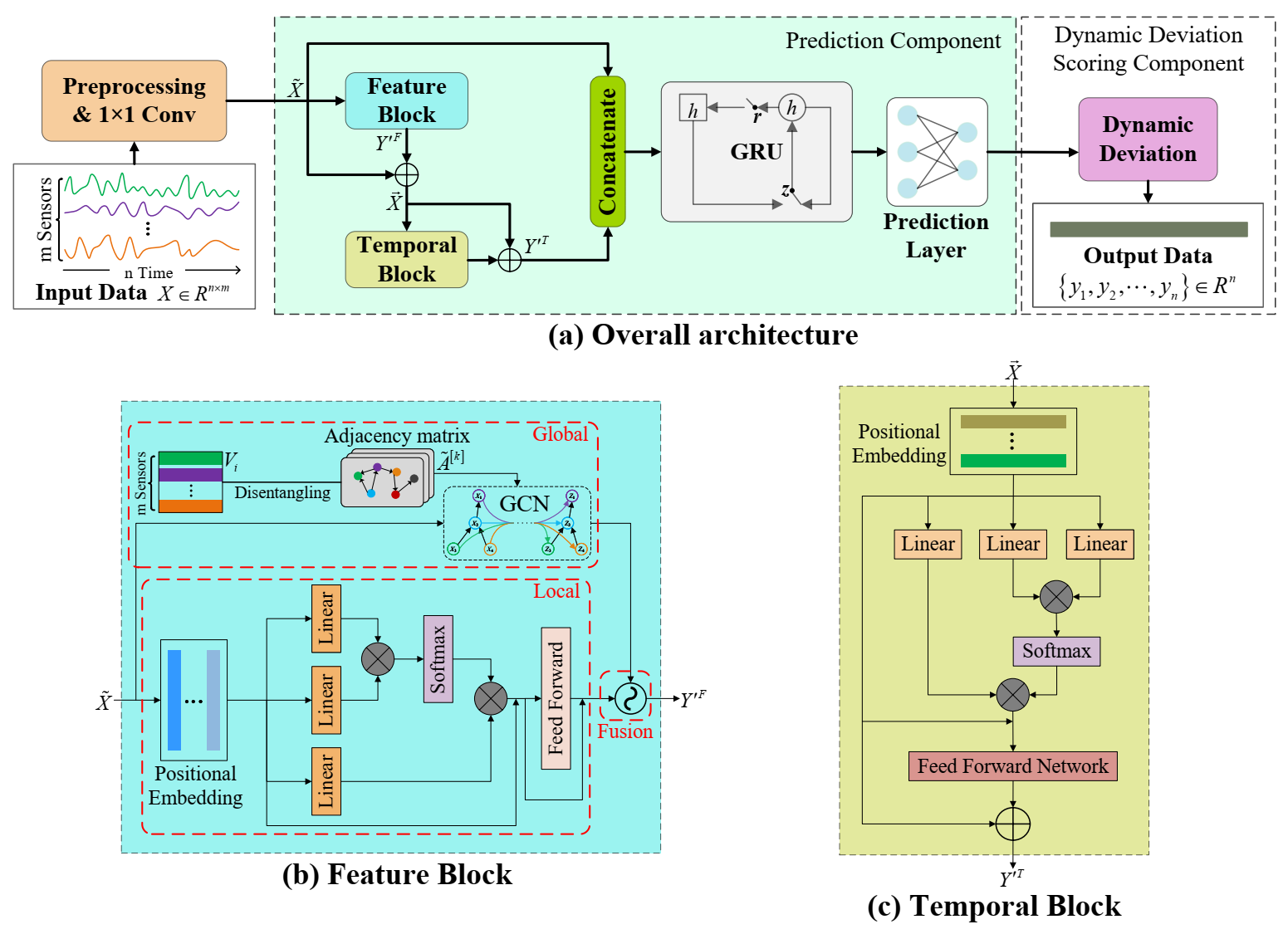

3.2. Overall Architecture

3.3. Feature Block

3.3.1. Disentangled Global Graph Convolution Layer

3.3.2. Local Attention Layer

3.3.3. Feature Fusion Layer

3.4. Temporal Block

3.5. Prediction and Model Training

3.6. Dynamic Deviation Scoring

Algorithm 1 POT-MoM
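The body of Algorithm 1 is not reproduced in this section. As a rough guide, the sketch below shows how a peaks-over-threshold (POT) threshold is commonly computed when the generalized Pareto parameters are fitted by the method of moments (MoM), following the extreme value theory formulation of Siffer et al. and the MoM fitting used in FluxEV, both listed in the references. The function name, the `init_quantile` and `risk` parameters, and the synthetic scores are assumptions made for illustration and may differ from the paper's algorithm.

```python
import math
import random

def pot_mom_threshold(scores, init_quantile=0.98, risk=1e-3):
    """Return (initial threshold t, final threshold z) for anomaly scores.

    Excesses over t are modeled with a generalized Pareto distribution whose
    shape (gamma) and scale (sigma) come from the sample mean and variance.
    """
    n = len(scores)
    ordered = sorted(scores)
    t = ordered[min(int(init_quantile * n), n - 1)]
    excesses = [s - t for s in scores if s > t]
    n_t = len(excesses)
    if n_t < 2:
        raise ValueError("too few peaks above the initial threshold")
    mean = sum(excesses) / n_t
    var = sum((e - mean) ** 2 for e in excesses) / (n_t - 1)
    if var == 0.0:
        raise ValueError("peaks have zero variance")
    # Method of moments: mean^2 / var = 1 - 2*gamma and mean = sigma / (1 - gamma).
    ratio = mean * mean / var
    gamma = 0.5 * (1.0 - ratio)
    sigma = 0.5 * mean * (1.0 + ratio)
    r = risk * n / n_t
    if abs(gamma) < 1e-9:
        z = t - sigma * math.log(r)  # limiting exponential-tail case
    else:
        z = t + (sigma / gamma) * (r ** (-gamma) - 1.0)
    return t, z

# Synthetic, exponentially distributed scores stand in for real deviation scores.
random.seed(0)
scores = [random.expovariate(1.0) for _ in range(5000)]
t, z = pot_mom_threshold(scores)
flagged = [s for s in scores if s > z]
print(t, z, len(flagged))
```

Here `risk` plays the role of the target probability of a normal score exceeding `z`; lowering it pushes the threshold further into the tail. MoM replaces iterative maximum likelihood fitting with two closed-form estimates, which is why it is attractive for streaming use.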

4. Performance Analysis

4.1. Experiment Setup

4.1.1. Datasets

4.1.2. Evaluation Metrics

4.1.3. Experimental Scheme

- 1.

- Comparison with existing state-of-the-art methods. We validate the effectiveness of the proposed model by comparing it with six state-of-the-art anomaly detection models.

- 2.

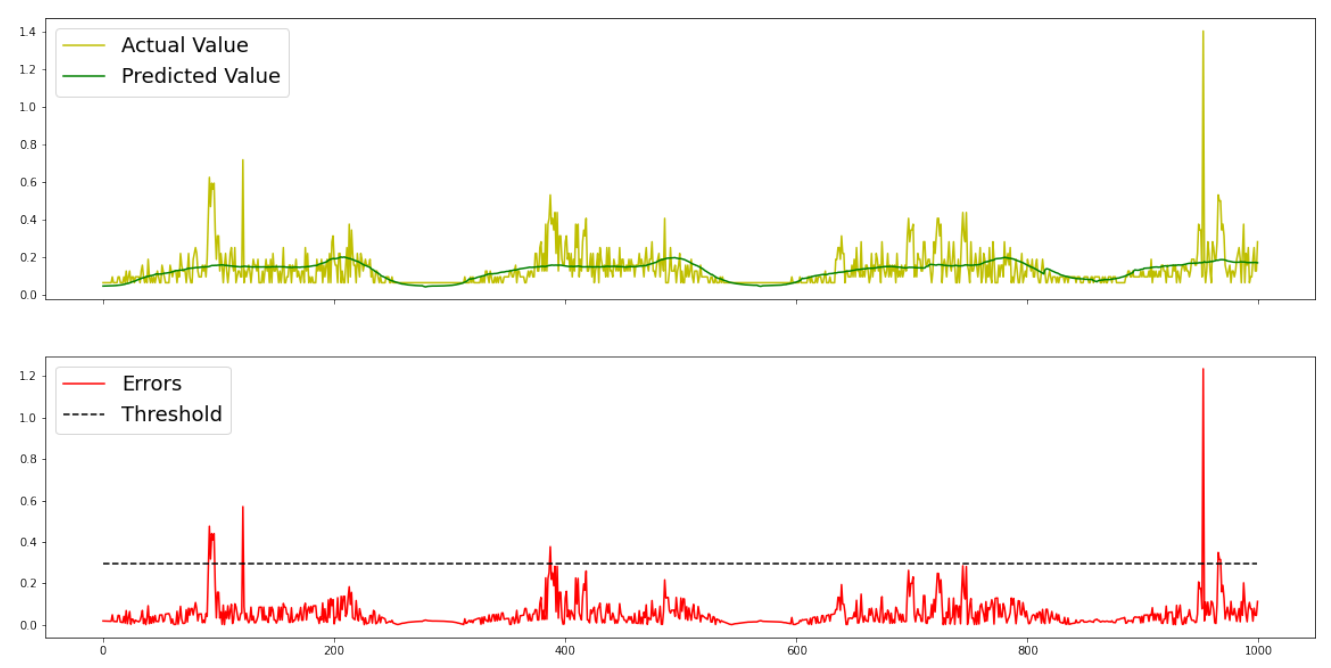

- Visualize model training results. We select some results from single server monitoring data on the SMD dataset to highlight the model’s excellent performance and provide support for model comparison analysis.

- 3.

- Ablation experiments. In order to verify the validity of the components that make up the model, we design ablation experiments to remove components one by one and keep all experimental environments consistent.

- 4.

- Anomaly explanation. The ability of the model to accurately provide valuable insights for anomaly detection is an important metric. These insights can help operators troubleshoot quickly and save problem-solving effort.

4.1.4. Baselines

1. LSTM-NDT [7]: Proposes an unsupervised threshold determination method for LSTM-based anomaly detection in multivariate time series.

2. LSTM-VAE [10]: Replaces the feedforward network in a VAE with LSTM, models the underlying distribution of the multidimensional signal, and reconstructs the signal with the expected distribution information. The negative log-likelihood of an observation under the reconstructed distribution serves as the anomaly score.

3. OmniAnomaly [9]: Captures the normal patterns of multivariate time series by learning robust representations of them, then reconstructs the input data and uses the reconstruction probabilities as anomaly scores.

4. MAD-GAN [8]: Uses LSTM-RNN as the base model of a GAN to capture temporal dependence. Both the discriminator and the generator are used to detect anomalies: the discrimination results and the reconstruction error of the test samples are combined to compute the anomaly score.

5. MTAD-GAT [14]: Jointly optimizes a prediction-based model and a reconstruction-based model, using two parallel GAT layers that dynamically learn the relationships between different time series and between timestamps.

6. USAD [24]: Builds an encoder–decoder architecture from autoencoders and uses an adversarial training strategy to learn to amplify the reconstruction error of anomalous inputs, which is more stable than traditional GAN-based approaches.

4.1.5. Parameter Settings

4.2. Performance Comparison

1. LSTM-NDT performs the worst on the MSL and SMD datasets, while LSTM-VAE performs the worst on the SMAP dataset. This is reasonable because LSTM-NDT considers only the temporal patterns of univariate time series and ignores inter-sensor dependencies, so it cannot make accurate predictions when the dataset has many sensors.

2. In contrast, OmniAnomaly models inter-sensor dependencies with stochastic methods, and MAD-GAN and USAD learn them through adversarial training; all three achieve better performance. However, they ignore the low-dimensional representation of the temporal dimension and model temporal dependencies poorly. All three are reconstruction-based: during training they reconstruct the data within each window as closely as possible, even though real training data contains anomalies. USAD performs the best among the three, and we speculate that it compensates for this shortcoming by combining the advantages of autoencoders and adversarial training.

3. Unlike the above methods, MTAD-GAT integrates prediction-based and reconstruction-based models and uses graph attention networks to learn dependencies in the temporal and feature dimensions, respectively. However, it assumes that all sensors in the dataset are interdependent. This assumption oversimplifies the complex, partly directional dependencies between sensors and introduces a large amount of irrelevant information, which increases model complexity and reduces performance.

4.3. Performance Visualization

4.4. Ablation Experiments

1. w/o Feature: Remove the feature block; the pre-processed data is fed straight into the temporal block to capture the temporal dependence of the time series.

2. w/o Temporal: Remove the temporal block; the output of the feature block is fed directly into the GRU.

3. w/o GRU: Remove the GRU; the learned inter-sensor and temporal dependencies are sent directly to the prediction layer.

4.5. Anomaly Explanation

4.6. Engineering Applications

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Huang, S.; Liu, A.; Zhang, S.; Wang, T.; Xiong, N.N. BD-VTE: A novel baseline data based verifiable trust evaluation scheme for smart network systems. IEEE Trans. Netw. Sci. Eng. 2020, 8, 2087–2105.

- Cirstea, R.G.; Kieu, T.; Guo, C.; Yang, B.; Pan, S.J. EnhanceNet: Plugin neural networks for enhancing correlated time series forecasting. In Proceedings of the 2021 IEEE 37th International Conference on Data Engineering (ICDE), Chania, Greece, 19–22 April 2021; pp. 1739–1750.

- Wu, M.; Tan, L.; Xiong, N. A Structure Fidelity Approach for Big Data Collection in Wireless Sensor Networks. Sensors 2015, 15, 248–273.

- Ma, J.; Perkins, S. Time-series novelty detection using one-class support vector machines. In Proceedings of the International Joint Conference on Neural Networks, Portland, OR, USA, 20–24 July 2003; Volume 3, pp. 1741–1745.

- Chaovalitwongse, W.A.; Fan, Y.J.; Sachdeo, R.C. On the time series k-nearest neighbor classification of abnormal brain activity. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2007, 37, 1005–1016.

- Kiss, I.; Genge, B.; Haller, P.; Sebestyén, G. Data clustering-based anomaly detection in industrial control systems. In Proceedings of the 2014 IEEE 10th International Conference on Intelligent Computer Communication and Processing (ICCP), Cluj, Romania, 4–6 September 2014; pp. 275–281.

- Hundman, K.; Constantinou, V.; Laporte, C.; Colwell, I.; Soderstrom, T. Detecting spacecraft anomalies using LSTMs and nonparametric dynamic thresholding. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 387–395.

- Li, D.; Chen, D.; Jin, B.; Shi, L.; Goh, J.; Ng, S.K. MAD-GAN: Multivariate anomaly detection for time series data with generative adversarial networks. In Proceedings of the International Conference on Artificial Neural Networks, Bristol, UK, 14–17 September 2019; pp. 703–716.

- Su, Y.; Zhao, Y.; Niu, C.; Liu, R.; Sun, W.; Pei, D. Robust anomaly detection for multivariate time series through stochastic recurrent neural network. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 2828–2837.

- Park, D.; Hoshi, Y.; Kemp, C.C. A multimodal anomaly detector for robot-assisted feeding using an LSTM-based variational autoencoder. IEEE Robot. Autom. Lett. 2018, 3, 1544–1551.

- Munir, M.; Siddiqui, S.A.; Dengel, A.; Ahmed, S. DeepAnT: A deep learning approach for unsupervised anomaly detection in time series. IEEE Access 2018, 7, 1991–2005.

- Wu, Z.; Pan, S.; Long, G.; Jiang, J.; Chang, X.; Zhang, C. Connecting the dots: Multivariate time series forecasting with graph neural networks. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, San Francisco, CA, USA, 6–10 July 2020; pp. 753–763.

- Deng, A.; Hooi, B. Graph neural network-based anomaly detection in multivariate time series. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual Event, 2–9 February 2021; Volume 35, pp. 4027–4035.

- Zhao, H.; Wang, Y.; Duan, J.; Huang, C.; Cao, D.; Tong, Y.; Xu, B.; Bai, J.; Tong, J.; Zhang, Q. Multivariate time-series anomaly detection via graph attention network. In Proceedings of the 2020 IEEE International Conference on Data Mining (ICDM), Sorrento, Italy, 17–20 November 2020; pp. 841–850.

- Phiboonbanakit, T.; Huynh, V.N.; Horanont, T.; Supnithi, T. Detecting abnormal behavior in the transportation planning using long short term memories and a contextualized dynamic threshold. In Proceedings of the Adjunct 2019 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2019 ACM International Symposium on Wearable Computers, London, UK, 9–13 September 2019; pp. 996–1007.

- Liu, Z.; Zhang, H.; Chen, Z.; Wang, Z.; Ouyang, W. Disentangling and unifying graph convolutions for skeleton-based action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2020; pp. 143–152.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

- Baragona, R.; Battaglia, F. Outliers detection in multivariate time series by independent component analysis. Neural Comput. 2007, 19, 1962–1984.

- Yao, Y.; Xiong, N.; Park, J.H.; Ma, L.; Liu, J. Privacy-preserving max/min query in two-tiered wireless sensor networks. Comput. Math. Appl. 2013, 65, 1318–1325.

- Xia, F.; Hao, R.; Li, J.; Xiong, N.; Yang, L.T.; Zhang, Y. Adaptive GTS allocation in IEEE 802.15.4 for real-time wireless sensor networks. J. Syst. Archit. 2013, 59, 1231–1242.

- Wu, Z.; Pan, S.; Chen, F.; Long, G.; Zhang, C.; Yu, P.S. A comprehensive survey on graph neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 4–24.

- Gao, K.; Han, F.; Dong, P.; Xiong, N.; Du, R. Connected Vehicle as a Mobile Sensor for Real Time Queue Length at Signalized Intersections. Sensors 2019, 19, 2059.

- Jiang, Y.; Tong, G.; Yin, H.; Xiong, N. A pedestrian detection method based on genetic algorithm for optimize XGBoost training parameters. IEEE Access 2019, 7, 118310–118321.

- Audibert, J.; Michiardi, P.; Guyard, F.; Marti, S.; Zuluaga, M.A. USAD: Unsupervised anomaly detection on multivariate time series. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, San Francisco, CA, USA, 6–10 July 2020; pp. 3395–3404.

- Li, H.; Liu, J.; Wu, K.; Yang, Z.; Liu, R.W.; Xiong, N. Spatio-temporal vessel trajectory clustering based on data mapping and density. IEEE Access 2018, 6, 58939–58954.

- Muralidhara, S.; Hashmi, K.A.; Pagani, A.; Liwicki, M.; Stricker, D.; Afzal, M.Z. Attention-Guided Disentangled Feature Aggregation for Video Object Detection. Sensors 2022, 22, 8583.

- Ma, J.; Zhou, C.; Yang, H.; Cui, P.; Wang, X.; Zhu, W. Disentangled self-supervision in sequential recommenders. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, San Francisco, CA, USA, 6–10 July 2020; pp. 483–491.

- Wang, Y.; Tang, S.; Lei, Y.; Song, W.; Wang, S.; Zhang, M. DisenHAN: Disentangled heterogeneous graph attention network for recommendation. In Proceedings of the 29th ACM International Conference on Information & Knowledge Management, Virtual Event, 19–23 October 2020; pp. 1605–1614.

- Hamaguchi, R.; Sakurada, K.; Nakamura, R. Rare event detection using disentangled representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9327–9335.

- Wang, X.; Chen, H.; Zhu, W. Multimodal disentangled representation for recommendation. In Proceedings of the 2021 IEEE International Conference on Multimedia and Expo (ICME), Virtual Event, 5–9 July 2021; pp. 1–6.

- Yamada, M.; Kim, H.; Miyoshi, K.; Iwata, T.; Yamakawa, H. Disentangled representations for sequence data using information bottleneck principle. In Proceedings of the Asian Conference on Machine Learning, PMLR, Bangkok, Thailand, 18–20 November 2020; pp. 305–320.

- Liang, J.; Cao, J.; Sun, G.; Zhang, K.; Van Gool, L.; Timofte, R. SwinIR: Image restoration using Swin Transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 1833–1844.

- Chen, Q.; Zhao, H.; Li, W.; Huang, P.; Ou, W. Behavior sequence transformer for e-commerce recommendation in Alibaba. In Proceedings of the 1st International Workshop on Deep Learning Practice for High-Dimensional Sparse Data, Anchorage, AK, USA, 5 August 2019; pp. 1–4.

- Lim, B.; Arık, S.Ö.; Loeff, N.; Pfister, T. Temporal fusion transformers for interpretable multi-horizon time series forecasting. Int. J. Forecast. 2021, 37, 1748–1764.

- Liu, S.; Yu, H.; Liao, C.; Li, J.; Lin, W.; Liu, A.X.; Dustdar, S. Pyraformer: Low-complexity pyramidal attention for long-range time series modeling and forecasting. In Proceedings of the International Conference on Learning Representations, Virtual Event, 3–7 May 2021.

- Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv 2014, arXiv:1412.3555.

- Li, Z.; Zhao, Y.; Han, J.; Su, Y.; Jiao, R.; Wen, X.; Pei, D. Multivariate time series anomaly detection and interpretation using hierarchical inter-metric and temporal embedding. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, Singapore, 14–18 August 2021; pp. 3220–3230.

- Wan, R.; Xiong, N. An energy-efficient sleep scheduling mechanism with similarity measure for wireless sensor networks. Hum. Centric Comput. Inf. Sci. 2018, 8, 18.

- Coifman, R.R.; Lafon, S.; Lee, A.B.; Maggioni, M.; Nadler, B.; Warner, F.; Zucker, S.W. Geometric diffusions as a tool for harmonic analysis and structure definition of data: Diffusion maps. Proc. Natl. Acad. Sci. USA 2005, 102, 7426–7431.

- Li, M.; Chen, S.; Chen, X.; Zhang, Y.; Wang, Y.; Tian, Q. Actional-structural graph convolutional networks for skeleton-based action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–19 June 2019; pp. 3595–3603.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Siffer, A.; Fouque, P.A.; Termier, A.; Largouet, C. Anomaly detection in streams with extreme value theory. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, 13–17 August 2017; pp. 1067–1075.

- Li, J.; Di, S.; Shen, Y.; Chen, L. FluxEV: A fast and effective unsupervised framework for time-series anomaly detection. In Proceedings of the 14th ACM International Conference on Web Search and Data Mining, Jerusalem, Israel, 8–12 March 2021; pp. 824–832.

- Ren, H.; Xu, B.; Wang, Y.; Yi, C.; Huang, C.; Kou, X.; Xing, T.; Yang, M.; Tong, J.; Zhang, Q. Time-series anomaly detection service at Microsoft. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 3009–3017.

- Entekhabi, D.; Njoku, E.G.; O’Neill, P.E.; Kellogg, K.H.; Crow, W.T.; Edelstein, W.N.; Entin, J.K.; Goodman, S.D.; Jackson, T.J.; Johnson, J.; et al. The soil moisture active passive (SMAP) mission. Proc. IEEE 2010, 98, 704–716.

- Xu, H.; Chen, W.; Zhao, N.; Li, Z.; Bu, J.; Li, Z.; Liu, Y.; Zhao, Y.; Pei, D.; Feng, Y.; et al. Unsupervised anomaly detection via variational auto-encoder for seasonal KPIs in web applications. In Proceedings of the 2018 World Wide Web Conference, Lyon, France, 23–27 April 2018; pp. 187–196.

- Chen, X.; Deng, L.; Huang, F.; Zhang, C.; Zheng, K. DAEMON: Unsupervised Anomaly Detection and Interpretation for Multivariate Time Series. In Proceedings of the 2021 IEEE 37th International Conference on Data Engineering (ICDE), Chania, Greece, 19–22 April 2021.

- Yang, P.; Xiong, N.; Ren, J. Data security and privacy protection for cloud storage: A survey. IEEE Access 2020, 8, 131723–131740.

- Lu, Y.; Wu, S.; Fang, Z.; Xiong, N.; Yoon, S.; Park, D.S. Exploring finger vein based personal authentication for secure IoT. Future Gener. Comput. Syst. 2017, 77, 149–160.

- Lu, C.; Huang, J.; Huang, L. Detecting Urban Anomalies Using Factor Analysis and One Class Support Vector Machine. Comput. J. 2021.

| Dataset | #Entities | #Features | Train | Test | Anomaly ratio |
|---|---|---|---|---|---|
| MSL | 55 | 25 | 58317 | 73729 | 10.72% |
| SMAP | 27 | 55 | 135183 | 427617 | 13.13% |
| SMD | 28 | 38 | 708405 | 708420 | 4.16% |

| Methods | MSL P | MSL R | MSL F1 | SMAP P | SMAP R | SMAP F1 | SMD P | SMD R | SMD F1 |
|---|---|---|---|---|---|---|---|---|---|
| LSTM-NDT | 0.5934 | 0.5374 | 0.5640 | 0.8965 | 0.8846 | 0.8905 | 0.5684 | 0.6438 | 0.6037 |
| LSTM-VAE | 0.5257 | 0.9546 | 0.6780 | 0.8551 | 0.6366 | 0.7298 | 0.8698 | 0.7879 | 0.8268 |
| OmniAnomaly | 0.8867 | 0.9117 | 0.8989 | 0.7416 | 0.9776 | 0.8434 | 0.8334 | 0.9449 | 0.8857 |
| MAD-GAN | 0.8517 | 0.8991 | 0.8747 | 0.8049 | 0.8214 | 0.8131 | 0.9230 | 0.8694 | 0.8982 |
| MTAD-GAT | 0.8754 | 0.9440 | 0.9084 | 0.8906 | 0.9123 | 0.9013 | 0.9396 | 0.9283 | 0.9339 |
| USAD | 0.8810 | 0.9786 | 0.9272 | 0.7697 | 0.9831 | 0.8634 | 0.9314 | 0.9617 | 0.9463 |
| Proposed model | 0.9454 | 0.9673 | 0.9562 | 0.9534 | 0.9350 | 0.9441 | 0.9356 | 0.9709 | 0.9529 |
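The F1 values in the comparison table above are the harmonic mean of precision (P) and recall (R), F1 = 2PR / (P + R). As a quick sanity check, the sketch below recomputes F1 for a few rows copied from the table; the function and variable names are hypothetical.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# (P, R, reported F1) copied from the comparison table.
rows = {
    "LSTM-NDT on MSL": (0.5934, 0.5374, 0.5640),
    "USAD on MSL": (0.8810, 0.9786, 0.9272),
    "MTAD-GAT on SMD": (0.9396, 0.9283, 0.9339),
}

for name, (p, r, reported) in rows.items():
    computed = f1_score(p, r)
    # Reported values are rounded to four decimals, so allow a small tolerance.
    print(f"{name}: computed={computed:.4f} reported={reported:.4f}")
```

The harmonic mean penalizes imbalance: LSTM-VAE on MSL has a recall of 0.9546 but an F1 of only 0.6780 because its precision is 0.5257.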

| Dataset | IPS@100% | IPS@150% |
|---|---|---|
| SMD | 0.7984 | 0.8923 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, C.; Xing, S.; Gao, R.; Yan, L.; Xiong, N.; Wang, R. Disentangled Dynamic Deviation Transformer Networks for Multivariate Time Series Anomaly Detection. Sensors 2023, 23, 1104. https://doi.org/10.3390/s23031104