Robust Point Cloud Registration Network for Complex Conditions

Abstract

1. Introduction

- We propose CCRNet, a new point cloud registration network that aligns two point clouds in a coarse-to-fine manner. Unlike previous methods, it registers point clouds robustly and accurately under complex conditions without iteration.

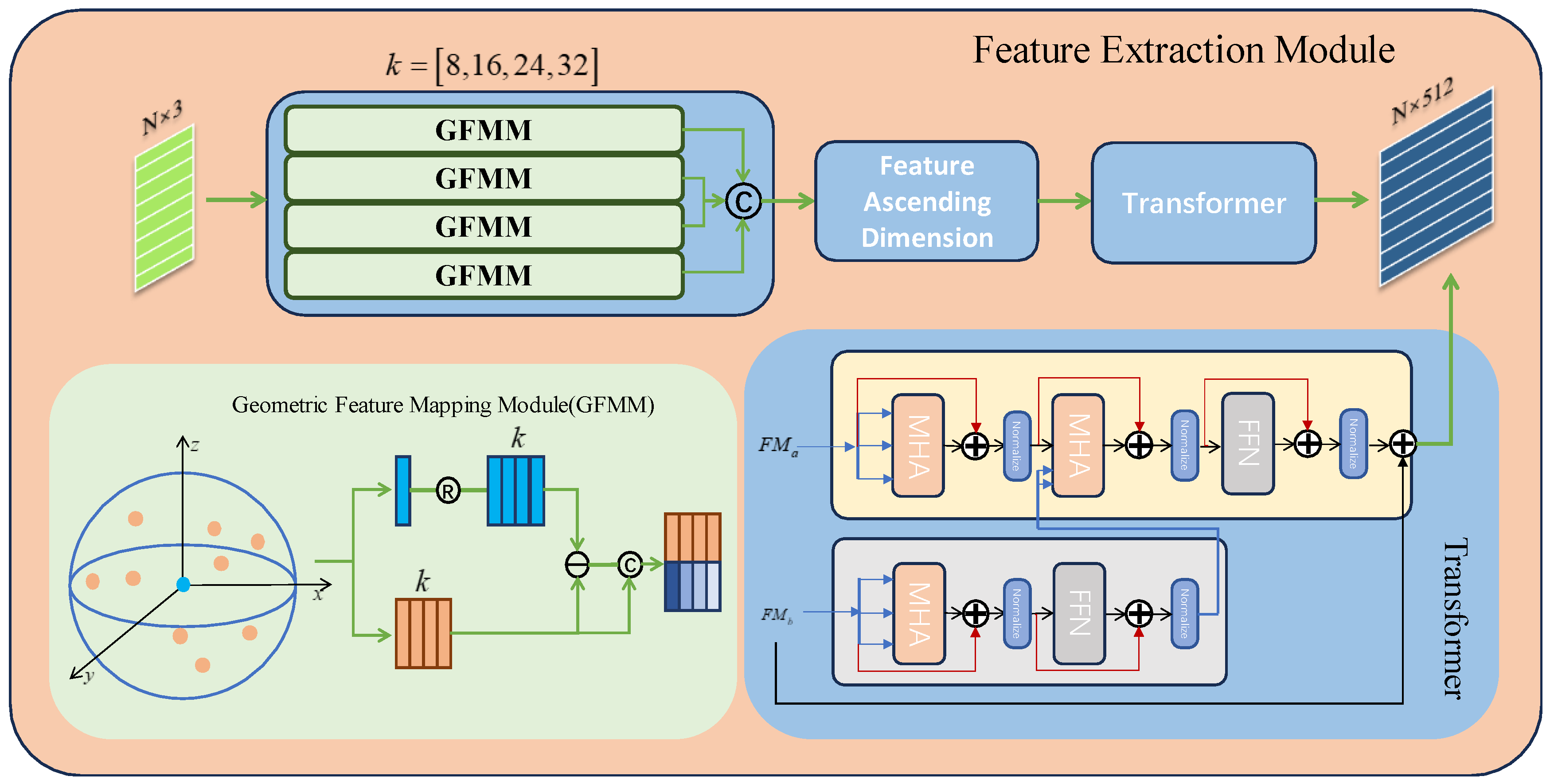

- We propose a multi-scale feature extraction module that combines a point cloud neighborhood graph with a Transformer structure. The module captures features of each point cloud at different scales together with the relationship between the two point clouds, which improves registration accuracy.

- The soft correspondence step in the fine registration module incorporates a CBAM structure adapted to point clouds. This improves the accuracy of the point correspondences and makes registration more robust when the two point clouds only partially overlap.

- Experimental results on a commonly used dataset show that CCRNet outperforms currently popular point cloud registration algorithms, which demonstrates its effectiveness.
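To make the soft correspondence idea in the contributions more concrete, the following is a minimal NumPy sketch of a generic pipeline: per-point features produce a softmax matching matrix, each source point is paired with a weighted average of target points, and a rigid transform is then recovered in closed form with an SVD (the Kabsch solution). This is not the CCRNet implementation. The function names `soft_correspondence` and `rigid_from_pairs`, the dot-product similarity, and the scaling by the square root of the feature dimension are all assumptions made for illustration, and the CBAM and Transformer components are omitted.

```python
import numpy as np


def soft_correspondence(feat_src, feat_tgt, tgt_points):
    """Pair each source point with a softmax-weighted average of target points.

    feat_src: (N, C) source features, feat_tgt: (M, C) target features,
    tgt_points: (M, 3) target coordinates. Returns an (N, 3) array.
    """
    # Scaled dot-product similarity between every source/target feature pair.
    scores = feat_src @ feat_tgt.T / np.sqrt(feat_src.shape[1])
    # Subtract the row maximum before exponentiating for numerical stability.
    scores = scores - scores.max(axis=1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ tgt_points


def rigid_from_pairs(src, tgt):
    """Least-squares R, t such that R @ src[i] + t approximates tgt[i]."""
    src_centroid = src.mean(axis=0)
    tgt_centroid = tgt.mean(axis=0)
    # 3x3 cross-covariance of the centered point sets.
    H = (src - src_centroid).T @ (tgt - tgt_centroid)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_centroid - R @ src_centroid
    return R, t
```

With features that identify each point unambiguously, the soft matches collapse to the true correspondences and `rigid_from_pairs` recovers the applied rotation and translation; with noisy or partially overlapping inputs the quality of the features and weights determines how good the estimate is.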

Related Work

2. Methods

2.1. Overview

2.2. Point Cloud Feature Extraction Module

2.3. Coarse Registration Module

2.4. Fine Registration Module

2.5. Training Loss

3. Experiments

3.1. Dataset

3.2. Metrics

3.3. Implementation Details

3.4. Experimental Results

3.4.1. Results on Clean Data of a Large Initial Attitude Difference

3.4.2. Results on Noise Data

3.4.3. Results on Incomplete Overlapping Data

4. Discussion

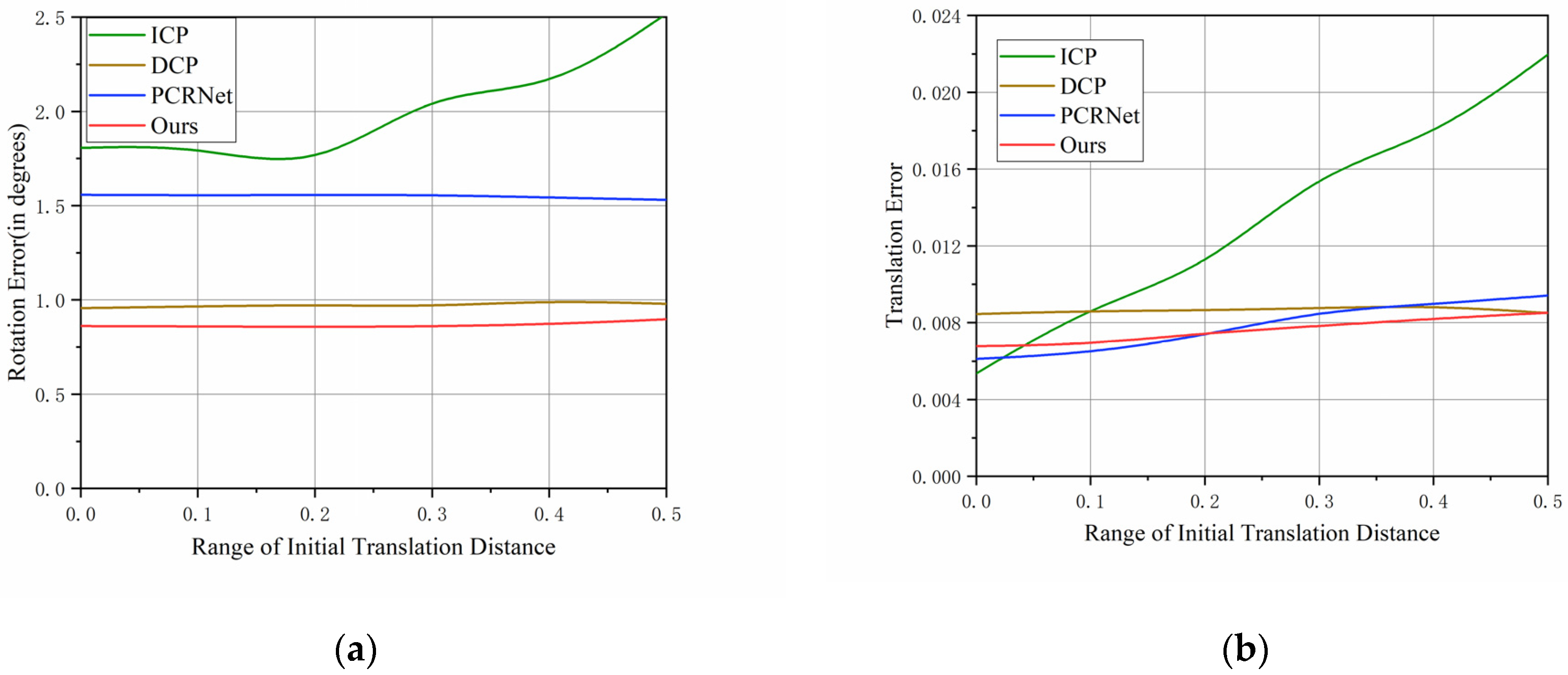

4.1. The Effect of the Initial Rotation Angle on the Results

4.2. The Effect of the Initial Rotation Angle on the Results

4.3. The Effect of Different Overlapping Area Regions on the Results

4.4. Ablation Experiments

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Huang, X.; Mei, G.; Zhang, J.; Abbas, R. A comprehensive survey on point cloud registration. arXiv 2021, arXiv:2103.02690.

- Pomerleau, F.; Colas, F.; Siegwart, R. A review of point cloud registration algorithms for mobile robotics. Found. Trends® Robot. 2015, 4, 1–104.

- Pomerleau, F.; Liu, M.; Colas, F.; Siegwart, R. Challenging data sets for point cloud registration algorithms. Int. J. Robot. Res. 2012, 31, 1705–1711.

- Chen, J.; Kira, Z.; Cho, Y.K. Deep learning approach to point cloud scene understanding for automated scan to 3D reconstruction. J. Comput. Civ. Eng. 2019, 33, 04019027.

- Yang, L.; Liu, Y.; Peng, J.; Liang, Z. A novel system for off-line 3D seam extraction and path planning based on point cloud segmentation for arc welding robot. Robot. Comput.-Integr. Manuf. 2020, 64, 101929.

- Alexiou, E.; Yang, N.; Ebrahimi, T. PointXR: A toolbox for visualization and subjective evaluation of point clouds in virtual reality. In Proceedings of the 2020 Twelfth International Conference on Quality of Multimedia Experience (QoMEX), Athlone, Ireland, 26–28 May 2020; pp. 1–6.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

- Aoki, Y.; Goforth, H.; Srivatsan, R.A.; Lucey, S. Pointnetlk: Robust & efficient point cloud registration using pointnet. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Sarode, V.; Li, X.; Goforth, H.; Aoki, Y.; Srivatsan, R.A.; Lucey, S.; Choset, H. Pcrnet: Point cloud registration network using pointnet encoding. arXiv 2019, arXiv:1908.07906.

- Wang, Y.; Solomon, J.M. Deep closest point: Learning representations for point cloud registration. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019.

- Besl, P.J.; Mckay, H.D. A method for registration of 3-D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256.

- Chen, Y.; Medioni, G. Object modelling by registration of multiple range images. Image Vis. Comput. 1992, 10, 145–155.

- Estépar, R.S.J.; Brun, A.; Westin, C.-F. Robust generalized total least squares iterative closest point registration. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2004: 7th International Conference, Saint-Malo, France, 26–29 September 2004; Springer: Berlin/Heidelberg, Germany, 2004.

- Gold, S.; Rangarajan, A.; Lu, C.-P.; Pappu, S.; Mjolsness, E. New algorithms for 2D and 3D point matching: Pose estimation and correspondence. Pattern Recognit. 1998, 31, 1019–1031.

- Pavlov, A.; Ovchinnikov, G.W.; Derbyshev, D.; Oseledets, I. AA-ICP: Iterative Closest Point with Anderson Acceleration. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018.

- Yang, J.; Li, H.; Campbell, D.; Jia, Y. Go-ICP: A globally optimal solution to 3D ICP point-set registration. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 2241–2254.

- Mellado, N.; Aiger, D.; Mitra, N.J. Super 4pcs fast global pointcloud registration via smart indexing. In Computer Graphics Forum; Wiley Online Library: Hoboken, NJ, USA, 2014.

- Biber, P. The normal distributions transform: A new approach to laser scan matching. In Proceedings of the 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2003) (Cat. No.03CH37453), Las Vegas, NV, USA, 27–31 October 2003.

- Masuda, T.; Sakaue, A.; Yokoya, N. Registration and integration of multiple range images for 3-D model construction. In Proceedings of the 13th International Conference on Pattern Recognition, Vienna, Austria, 25–29 August 1996; Volume 871, pp. 879–883.

- Sipiran, I.; Bustos, B. Harris 3D: A robust extension of the Harris operator for interest point detection on 3D meshes. Vis. Comput. 2011, 27, 963.

- Stamos, I.; Allen, P.K. Geometry and Texture Recovery of Scenes of Large Scale. Comput. Vis. Image Underst. 2002, 88, 94–118.

- Prokop, M.; Shaikh, S.A.; Kim, K.S. Low Overlapping Point Cloud Registration Using Line Features Detection. Remote Sens. 2019, 12, 61.

- Brenner, C.; Dold, C.; Ripperda, N. Coarse orientation of terrestrial laser scans in urban environments. ISPRS J. Photogramm. Remote Sens. 2008, 63, 4–18.

- Chen, C.S.; Hung, Y.P.; Cheng, J.B. RANSAC-Based DARCES: A new approach to fast automatic registration of partially overlapping range images. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 21, 1229–1234.

- Benyue, S.; Wei, H.; Yusheng, P.; Min, S. 4D-ICP point cloud registration method for RGB-D data. J. Nanjing Univ. (Nat. Sci.) 2018, 54, 829–837.

- Ni, P.; Zhang, W.; Zhu, X.; Cao, Q. Pointnet++ grasping: Learning an end-to-end spatial grasp generation algorithm from sparse point clouds. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020.

- Anestis, Z.; Li, S.; Tom, D.; Grzegorz, C. Integrating Deep Semantic Segmentation Into 3-D Point Cloud Registration. IEEE Robot. Autom. Lett. 2018, 3, 2942–2949.

- Zeng, A.; Xiao, J. 3DMatch: Learning Local Geometric Descriptors from RGB-D Reconstructions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Yew, Z.J.; Lee, G.H. 3DFeat-Net: Weakly supervised local 3D features for point cloud registration. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

- Li, J.; Lee, G.H. USIP: Unsupervised Stable Interest Point Detection from 3D Point Clouds. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

- Du, A.; Huang, X.; Zhang, J.; Yao, L.; Wu, Q. Kpsnet: Keypoint detection and feature extraction for point cloud registration. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019.

- Deng, H.; Birdal, T.; Ilic, S. Ppfnet: Global context aware local features for robust 3D point matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

- Deng, H.; Birdal, T.; Ilic, S. Ppf-foldnet: Unsupervised learning of rotation invariant 3d local descriptors. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

- Lucas, B.D.; Kanade, T. An iterative image registration technique with an application to stereo vision. In Proceedings of the IJCAI’81: 7th International Joint Conference on Artificial Intelligence, Vancouver, BC, Canada, 24–28 August 1981.

- Lu, W.; Wan, G.; Zhou, Y.; Fu, X.; Yuan, P.; Song, S. Deepvcp: An end-to-end deep neural network for point cloud registration. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. Adv. Neural Inf. Process. Syst. 2017, 30.

- Wang, Y.; Sun, Y.; Liu, Z.; Sarma, S.E.; Bronstein, M.M.; Solomon, J.M. Dynamic graph cnn for learning on point clouds. ACM Trans. Graph. 2019, 38, 146.

- Yew, Z.J.; Lee, G.H. Rpm-net: Robust point matching using learned features. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

- Qiu, S.; Wu, Y.; Anwar, S.; Li, C. Investigating attention mechanism in 3D point cloud object detection. In Proceedings of the 2021 International Conference on 3D Vision (3DV), London, UK, 1–3 December 2021.

- Rubner, Y.; Tomasi, C.; Guibas, L.J. The earth mover’s distance as a metric for image retrieval. Int. J. Comput. Vis. 2000, 40, 99–121.

- Wu, Z.; Song, S.; Khosla, A.; Yu, F.; Zhang, L.; Tang, X.; Xiao, J. 3D shapenets: A deep representation for volumetric shapes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015.

- Segal, A.; Haehnel, D.; Thrun, S. Generalized-icp. In Proceedings of the Robotics: Science and Systems, Seattle, WA, USA, 28 June–1 July 2009.

- Lei, H.; Jiang, G.; Quan, L. Fast descriptors and correspondence propagation for robust global point cloud registration. IEEE Trans. Image Process. 2017, 26, 3614–3623.

| Methods | MSE(R) | RMSE(R) | MAE(R) | MSE(t) | RMSE(t) | MAE(t) | TIME(s) |
|---|---|---|---|---|---|---|---|
| ICP(P2P) [11] | 10.947370 | 3.308681 | 2.517660 | 0.069160 | 0.262983 | 0.021960 | 0.031 |
| Generalized-ICP [44] | 10.600160 | 3.255789 | 2.022742 | 0.073520 | 0.271146 | 0.015420 | 0.082 |
| PointNet-LK [8] | 11.531400 | 3.395792 | 2.656400 | 0.082380 | 0.287019 | 0.020400 | 0.064 |
| PCRNet [9] | 4.629300 | 2.151581 | 1.531110 | 0.025940 | 0.161059 | 0.009410 | 0.057 |
| DCPv1 [10] | 7.624540 | 2.761257 | 1.689656 | 0.000205 | 0.014318 | 0.010835 | 0.027 |
| DCPv2 [10] | 2.717369 | 1.648444 | 0.979240 | 0.000134 | 0.011580 | 0.008505 | 0.035 |
| FDCP [45] | 0.000124 | 0.011135 | 0.000640 | 0.000001 | 0.003160 | 0.001810 | 12.15 |
| Ours (CCRNet) | 2.185389 | 1.478306 | 0.900615 | 0.000125 | 0.011180 | 0.008482 | 0.040 |
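As a reading aid for the metric columns, here is a small Python sketch of how MSE, RMSE and MAE relate, plus a check that each RMSE(R) entry in the table above is the square root of its MSE(R) entry. The helper names `error_metrics` and `rmse_consistent` are hypothetical, and the tolerance of 1e-4 is an assumption chosen to absorb rounding in the printed values. This section does not state which quantities the errors are computed over (for example, which rotation parametrization or units), so the helper works on generic scalar predictions.

```python
import math

# (MSE(R), RMSE(R)) pairs copied from the table above.
rotation_errors = {
    "ICP(P2P)": (10.947370, 3.308681),
    "Generalized-ICP": (10.600160, 3.255789),
    "PointNet-LK": (11.531400, 3.395792),
    "PCRNet": (4.629300, 2.151581),
    "DCPv1": (7.624540, 2.761257),
    "DCPv2": (2.717369, 1.648444),
    "FDCP": (0.000124, 0.011135),
    "Ours (CCRNet)": (2.185389, 1.478306),
}


def error_metrics(pred, truth):
    """Return (MSE, RMSE, MAE) for paired scalar predictions and targets."""
    diffs = [p - t for p, t in zip(pred, truth)]
    mse = sum(d * d for d in diffs) / len(diffs)
    mae = sum(abs(d) for d in diffs) / len(diffs)
    return mse, math.sqrt(mse), mae


def rmse_consistent(mse, rmse, tol=1e-4):
    """True if the reported RMSE equals sqrt(MSE) up to rounding."""
    return abs(math.sqrt(mse) - rmse) <= tol


# Map each method to whether its printed RMSE(R) matches its printed MSE(R).
consistent = {
    name: rmse_consistent(mse, rmse)
    for name, (mse, rmse) in rotation_errors.items()
}
```

Because RMSE is a monotone function of MSE, the two columns always rank methods identically; MAE can rank them differently because it is less sensitive to a few large errors.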

| Methods | MSE(R) | RMSE(R) | MAE(R) | MSE(t) | RMSE(t) | MAE(t) |
|---|---|---|---|---|---|---|
| ICP [11] | 9.417890 | 3.068860 | 2.640210 | 0.088700 | 0.297825 | 0.027700 |
| Generalized-ICP [44] | 8.150900 | 2.854980 | 1.881210 | 0.091270 | 0.302109 | 0.023260 |
| PCRNet [9] | 4.214110 | 2.052830 | 0.944300 | 0.027180 | 0.164864 | 0.009410 |
| DCPv1 [10] | 7.263767 | 2.695138 | 1.691554 | 0.000232 | 0.015232 | 0.011358 |
| DCPv2 [10] | 2.694659 | 1.641542 | 0.918025 | 0.000105 | 0.010247 | 0.007796 |
| Ours (CCRNet) | 2.251652 | 1.500551 | 0.856361 | 0.000099 | 0.009925 | 0.007579 |

| Sigma | ICP MAE(R) | ICP TIME(s) | FDCP MAE(R) | FDCP TIME(s) | Ours (CCRNet) MAE(R) | Ours (CCRNet) TIME(s) |
|---|---|---|---|---|---|---|
| 0.01 | 2.64021 | 0.02993 | 2.14 | 384 | 0.858245 | 0.03974 |
| 0.02 | 2.79802 | 0.03024 | 2.232 | 664 | 0.885979 | 0.03992 |
| 0.03 | 3.05914 | 0.03012 | 4.57 | 873 | 0.981902 | 0.03904 |
| 0.05 | 3.59124 | 0.02981 | 6.73 | 877 | 1.009078 | 0.03967 |
| 0.1 | 4.39743 | 0.03007 | 13.62 | 865 | 1.596119 | 0.04012 |
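The Sigma column above is the standard deviation of the Gaussian noise added to the point clouds. A minimal sketch of such a perturbation is shown below, assuming independent zero-mean noise on every coordinate. The function name `add_gaussian_noise`, the optional `clip` bound and the `seed` argument are hypothetical and not taken from the source, which does not say here whether the noise is clipped or how it is sampled.

```python
import numpy as np


def add_gaussian_noise(points, sigma, clip=None, seed=None):
    """Return a copy of `points` (N, 3) with i.i.d. N(0, sigma^2) noise added.

    clip: optional bound; if given, each noise component is limited to
          [-clip, clip] (an assumption, not stated in the source).
    seed: optional seed for reproducible sampling.
    """
    rng = np.random.default_rng(seed)
    noise = rng.normal(loc=0.0, scale=sigma, size=points.shape)
    if clip is not None:
        noise = np.clip(noise, -clip, clip)
    return points + noise


# Example: perturb a random cloud at each noise level listed in the table.
cloud = np.random.default_rng(0).uniform(-1.0, 1.0, size=(1024, 3))
noisy_clouds = {
    sigma: add_gaussian_noise(cloud, sigma, seed=1)
    for sigma in (0.01, 0.02, 0.03, 0.05, 0.1)
}
```

If the two clouds in a pair are perturbed independently, exact point-to-point correspondences no longer exist, which is one reason methods that rely on hard nearest-neighbor matches tend to degrade as sigma grows.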

| Methods | MSE(R) | RMSE(R) | MAE(R) | MSE(t) | RMSE(t) | MAE(t) |
|---|---|---|---|---|---|---|
| ICP [11] | 12.51042 | 3.536948 | 3.3844 | 0.1243 | 0.352562 | 0.03584 |
| Generalized-ICP [44] | 12.87378 | 3.588005 | 5.06372 | 0.12722 | 0.356679 | 0.05631 |
| PCRNet [9] | 15.7834 | 3.972833 | 4.45132 | 0.00291 | 0.053944 | 0.04642 |
| DCPv1 [10] | 25.78643 | 5.078034 | 3.578882 | 0.005004 | 0.070739 | 0.058286 |
| DCPv2 [10] | 16.25156 | 4.031323 | 2.709049 | 0.002836 | 0.053254 | 0.043401 |
| Ours (CCRNet) | 10.30937 | 3.21082 | 2.202929 | 0.002258 | 0.047518 | 0.038064 |

| Overlap | 0.5 | 0.6 | 0.7 | 0.8 |
|---|---|---|---|---|
| MAE(R) | 7.29 | 3.63 | 2.41 | 2.20 |
| MAE(t) | 0.084 | 0.051 | 0.042 | 0.038 |

|  | CCRNet w/o CFRM + SVD | CCRNet w/o MSFEM + PointNet | CCRNet w/o Transformer | CCRNet w/o CBAM |
|---|---|---|---|---|
| MSE(R) | 2.63187 | 3.54291 | 2.93541 | 2.4345 |
| Enhance Percent | 20.43% | 62.11% | 34.31% | 11.39% |
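The "Enhance Percent" row is not defined in this section. One reading that reproduces it is the relative increase in MSE(R) of each ablated variant over the full CCRNet, taking the full model's MSE(R) as 2.185389 from the clean-data results table. The sketch below works through that arithmetic; the baseline choice, the interpretation, and the observation that the published figures match truncation (rather than rounding) to two decimals are inferences from the numbers, not statements from the source.

```python
import math

# Assumption: MSE(R) of the full CCRNet, taken from the clean-data table.
FULL_MSE_R = 2.185389

# MSE(R) of each ablated variant, copied from the ablation table above.
ablated_mse_r = {
    "w/o CFRM + SVD": 2.63187,
    "w/o MSFEM + PointNet": 3.54291,
    "w/o Transformer": 2.93541,
    "w/o CBAM": 2.4345,
}


def relative_increase_percent(ablated, full=FULL_MSE_R):
    """Percent by which the ablated MSE(R) exceeds the full model's MSE(R)."""
    return (ablated - full) / full * 100.0


def truncate(value, decimals=2):
    """Drop digits beyond `decimals` places without rounding up."""
    factor = 10 ** decimals
    return math.floor(value * factor) / factor


# Truncated percentages per variant, to compare with the published row.
percentages = {
    name: truncate(relative_increase_percent(mse))
    for name, mse in ablated_mse_r.items()
}
```

Under this reading, replacing the multi-scale feature extraction module with PointNet raises the rotation error the most, and removing CBAM raises it the least.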

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hao, R.; Wei, Z.; He, X.; Zhu, K.; He, J.; Wang, J.; Li, M.; Zhang, L.; Lv, Z.; Zhang, X.; Zhang, Q. Robust Point Cloud Registration Network for Complex Conditions. Sensors 2023, 23, 9837. https://doi.org/10.3390/s23249837