Abstract

In recent times, pollution has emerged as a significant global concern, with European regulations stipulating limits on PM 2.5 particle levels. Addressing this challenge necessitates innovative approaches. Smart low-cost sensors suffer from imprecision, and can not replace legal stations in terms of accuracy, however, their potential to amplify the capillarity of air quality evaluation on the territory is not under discussion. In this paper, we propose an AI system to correct PM 2.5 levels in low-cost sensor data. Our research focuses on data from Turin, Italy, emphasizing the impact of humidity on low-cost sensor accuracy. In this study, different Neural Network architectures that vary the number of neurons per layer, consecutive records and batch sizes were used and compared to gain a deeper understanding of the network’s performance under various conditions. The AirMLP7-1500 model, with an impressive R-squared score of 0.932, stands out for its ability to correct PM 2.5 measurements. While our approach is tailored to the city of Turin, it offers a systematic methodology for the definition of those models and holds the promise to significantly improve the accuracy of air quality data collected from low-cost sensors, increasing the awareness of citizens and municipalities about this critical environmental information.

1. Introduction

In recent times, the issue of pollution has become a significant concern for humanity [1,2]. Exposure to particulate matter (PM) poses significant health risks, with strong associations established between PM exposure and cardiopulmonary morbidity and mortality, and lung cancer [3]. Fine particles, specifically those with a diameter of 2.5 m or smaller, raise specific concerns because of their heightened toxicity, ability to penetrate the lungs deeply, and prolonged presence within the respiratory system [4,5]. Beyond health impacts, PM 2.5 also contributes to environmental problems such as reduced visibility, ecological damage such as soil nutrient depletion and acid rain effects, as well as material deterioration, exemplified by the discolouration of cultural landmarks [6,7,8]. In response to this mounting concern, European regulations and U.S. National Ambient Air Quality Standards (NAAQS) [9,10] have implemented stringent measures. These actions involve the establishment of rigorous standards that limit the number of days in a year when PM 2.5 and 10 levels can exceed defined thresholds. Furthermore, they have enforced restrictions on particulate matter emissions from a wide range of sources in various industries.

The concentrations of PM exhibit inherent spatial and temporal variations, leading to differing personal exposure levels [11]. To tackle this challenge and expand the monitored areas, various initiatives have been undertaken to create affordable PM sensor networks [12,13,14,15]. Usually, these networks employ low-cost sensors that offer advantages such as scalability, real-time data, and citizen science participation; while these widely distributed sensors cannot completely replace reference stations in terms of accuracy, they play a vital role in advancing air quality research [16,17,18].

There are various types of low-cost (LC) sensors designed for measuring pollutants, broadly classified into gases and particulate matter [19,20,21]. In the context of our paper, we focus on PM low-cost sensors that employ laser scattering techniques to estimate particle size and number concentrations, which are subsequently converted into mass concentrations using proprietary algorithms.

Laser scattering methods have limitations in accuracy compared to more expensive reference instruments. Moreover, low-cost sensors may be sensitive to environmental factors such as humidity, temperature, and atmospheric pressure, which can influence sensor performance and be susceptible to interference from other pollutants or sources, leading to erroneous readings. Artificial intelligence methods have demonstrated their effectiveness in enhancing the precision of low-cost sensor measurements. Many of these approaches involve a calibration procedure that requires positioning the low-cost sensors close to reference stations [22,23] and then aligning the data exploiting machine-learning techniques or neural networks.

In this paper, we address the issue of a calibration of low-cost PM sensors using Multilayer Perceptron (MLP) networks, which have a track record of outperforming other algorithms in similar scenarios, as highlighted in [24]. Our objective is to establish a basis for future research initiatives, starting with Turin’s city, Italy. We aim to introduce a systematic methodology applicable to training neural networks in diverse settings beyond our initial study area.

Our research encompasses several key components:

- 1.

- Data Understanding and Assembling: Our first step involves the assembly of a comprehensive dataset. We collect data from multiple sources, including five low-cost sensors and one reference station. The resulting dataset is designed to capture the temporal patterns inherent in the data and to consider the influence of various meteorological factors on the detected concentrations;

- 2.

- Neural Network Training: We train various neural network models, using the assembled dataset. These models are designed to minimise the error introduced by the low-cost sensor in estimating PM 2.5 concentrations. Different hyperparameters to identify the optimal model configuration were explored and compared.

- 3.

- Results Comparison: A comparative analysis of the best-performing model has been conducted, and additional insights and considerations derived from our findings are provided.

The rest of the paper is organised as follows. In Section 2, we describe the devices used in the study, elucidate the process of dataset creation, and introduce the MLP architectures employed. Section 3 delves into the tests carried out and provides an analysis of the results achieved through a comparison of different architectures. Section 4 and Section 5 are dedicated to the discussion and conclusions, respectively, drawing upon our discoveries and providing valuable insights for potential avenues of future research.

2. Materials and Methods

This section delves into the air quality sensor characteristics employed in the study and the main challenges related to their deployment, the creation of a dataset designed to capture the temporal nature of the sensor data and the neural network architecture used in our research.

2.1. Air Quality Sensors

In the context of our research, we have access to observations obtained from five low-cost devices, named Arianna and produced by Wiseair [25], located in Turin; positioned near a Tecora Sequential Unit, a reference station that allows the automated and sequential collections of the atmospherical PM; and installed and managed by Arpa Piemonte [26,27]. These devices were placed in a green area, distanced from the most congested streets. The dataset covers four months, with three devices deployed for two months between March and April and the remaining two devices collecting data for two months from October to November.

Each Arianna device includes an SPS30 sensor [28,29] and sensors for measuring relative humidity (RH) and temperature. SPS30 employs laser scattering technology (see Section 2.1.1) to measure PM 1, PM 2.5, PM 4, and PM 10 concentrations, giving in output both number and mass concentration. Unfortunately, the number concentration was not available at the time of this study. Specifications relative to PM 2.5 are reported in Table 1.

Table 1.

SPS30 specifications related to PM 2.5.

In summary, this study incorporates a range of features, including PM 2.5 concentrations (g/m), RH and temperature (%), and temperature (C), and integrates these with additional meteorological factors such as atmospheric pressure (hPa), cloud coverage (%), and wind speed (m/s). These features are the most significant in the context of air quality monitoring. Pressure affects air density, which, in turn, impacts the dispersion of particulate matter in the air. Cloud coverage is related to RH [30]. Temperature plays a main role in influencing RH, which is a major concern in this study. Wind speed affects the dispersion of particles. All of these factors have the potential to influence the state of air quality over time.

An important aspect to consider is that when Arpa station records raw data below the detection limit, the result is assumed to be equal to half of the detection limit. This approach is referred to as a medium bound technique (when the concentration of a target substance is below the detection limit, it is typically considered to be estimated as half of the detection limit). As a result, the Agency introduces a lower limit in the ground truth data, which is set at 4 g/m in this case.

2.1.1. Laser-Scattering Technology

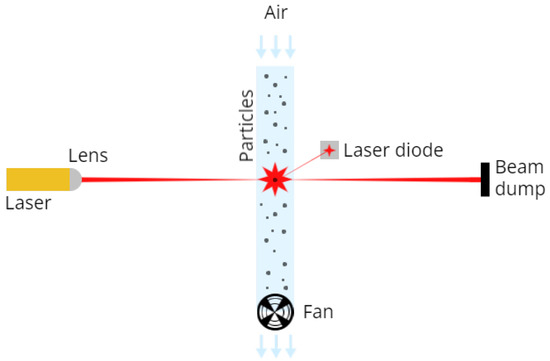

In the realm of air quality monitoring, various types of low-cost sensors have been employed to measure pollutants, particularly PM. We categorise the instruments used as optical particle sensors (OPS), which can be further classified into two primary types: nephelometers and optical particle counters (OPC) [31]. Nephelometers operate by measuring particles collectively, capturing light scattered by all particles across a wide range of angles. In contrast, OPC detects particles individually. A simple laser scattering example is given in Figure 1. From the light scattered, by applying the Mie theory, it is possible to calculate the equivalent particle diameter and the number of particles with different diameters per unit volume. The data collected are then converted into particle mass concentration, expressed in units of micrograms per cubic meter (g/m).

Figure 1.

Simple diagram of laser scattering technology.

The term low-cost sensor encompasses a wide range of technologies, ranging from sensors that cost tens of dollars to those with higher price tags, sometimes reaching a few thousand dollars. In contrast, reference stations are significantly more expensive, often exceeding tens of thousands of dollars in cost.

Current state-of-the-art research primarily focuses on data collected from sensors priced below a few hundred dollars. These sensors are affordable for citizens, making them accessible, but they also come with a level of simplicity. The straightforward technology used in these sensors presents challenges in accurately detecting PM concentrations.

Table 2 compares various low-cost PM sensors frequently discussed in the literature [31,32,33,34,35,36,37]. These sensors are recognised for their simplicity and affordability but lack additional technology to counteract the impact of external factors, including meteorological conditions.

Table 2.

A comparison of PM low-cost laser-scattering sensors.

In contrast, Table 3 highlights devices that combine low-cost sensors with supplementary technologies. For instance, the device employed in this study, named Arianna, features an SPS30 sensor, RH and temperature sensors, and a filter to exclude larger external objects, such as insects, from entering the device. It is worth noting that Arianna does not include an air conditioning system, as it relies on solar panels for power, which limits its energy resources. In contrast, the other devices in Table 3 use such technology to reduce humidity, a critical factor that will be discussed further in Section 2.1.2.

Table 3.

A comparison of PM devices.

Although the inclusion of a drying system is essential for accuracy, it increases the cost, making these devices less accessible to the general public. In fact, as shown in Table 2, low-cost sensors are remarkably affordable. In contrast, the devices presented in Table 3 are significantly more expensive, often exceeding thousands of dollars due to their heightened complexity. The cost of these devices may vary depending on factors such as the quantity sold and whether they are available for purchase or exclusively for rental.

2.1.2. Hygroscopicity Issue

One significant challenge faced by these low-cost sensors is their susceptibility to various influencing factors, particularly the impact of humidity [46,47].

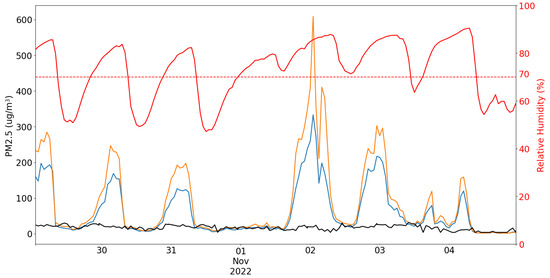

When humidity levels increase, the low-cost sensor reports higher PM 2.5 values than the actual concentration. This stems from the hygroscopic nature of airborne particles [48,49]. As illustrated in Figure 2, when RH, indicated by the red line, exceeds a certain threshold, there is a noticeable increase in the reported PM 2.5 values, represented by the blue and orange lines. This increase in PM 2.5 concentration, as observed in the low-cost sensor data, deviates significantly from the measurements obtained from the reference station, shown in black. This discrepancy arises from the humidity being attracted and held via either absorption or adsorption from the surrounding environment, leading to particle size expansion. Consequently, the low-cost sensor detects an elevated number of PM 2.5 particles, resulting in inaccurate measurements.

Figure 2.

Comparison of PM levels measured by two low-cost sensors (represented by blue and orange lines) with data from the Arpa reference station (black line), accompanied by RH measurements (red) and an RH threshold of 70% (dotted red line).

Simply eliminating or truncating these humidity-induced spikes is an inadequate solution, particularly in areas with elevated humidity levels, as it would lead to a substantial loss of data. When referring to elevated humidity levels, we mean RH exceeding 70% [48]. However, it is essential to emphasise that this threshold may vary based on the specific sensor in use. Experiments have demonstrated that low-cost devices equipped with dryers at their inlets measured an average of 64% lower PM 2.5 concentrations with elevated humidity levels compared to sensors without these dryers [50]. This underscores the critical importance of mitigating fluctuations in sensor readings induced by humidity [51]. However, it is important to note that the addition of a dryer increases the cost of the sensor and may not always be a feasible solution (see Table 2 and Table 3).

More comprehensive approaches involve calibration or mitigation of the hygroscopicity effect, which involves developing models capable of effectively addressing this issue while potentially addressing other external factors contributing to erratic sensor readings.

2.1.3. Calibration Challenges

Previous research has extensively explored the influence of variables such as RH and temperature on sensor performance [46,52,53]. These studies have underscored the pressing need for correction models to rectify inaccuracies in sensor data induced by humidity.

Nevertheless, it is important to be aware that calibration challenges may arise when models are trained in one location but are later deployed in different places [54]. This can be attributed to variations in pollutant sources and environmental contexts, which can result in significant spatiotemporal differences. To tackle this issue, methods like corrective functions have been proposed [11,55,56]. Corrective functions are particularly valuable when only humidity and PM data are available. They derive a correction coefficient based on humidity levels to reduce detected PM concentrations. While they can be fine-tuned by calibrating against a reference station, this step is not always mandatory. Alternatively, an approach involves optimizing function parameters minimizing the correlation between humidity and PM concentration.

Another challenge includes the need for sensor maintenance, typically involving recalibration approximately twice a year [57]. Additionally, many of these techniques address mass concentration, aligning with the focus of our study. However, it is worth noting that directly considerate particle counts could offer advantages [58,59], as it sidesteps potential complexities associated with the conversion from counts to mass, and because a larger particle size translates into a shift of particles into a smaller diameter bin and not in a real diminishing of particle mass. Data frequency is another factor to consider, as reference stations often provide data daily or hourly, limiting the potential for real-time monitoring and fine-grain frequency accuracy.

Our study leverages neural networks as superior models for improving the accuracy of PM concentrations [24], particularly for sensor data affected by the hygroscopicity issue in high humidity regions (see Section 2.1.2). In these conditions, traditional linear correction methods often prove inadequate. In contrast to simpler methodologies, however, MLPs introduce certain limitations. These encompass reduced interpretability of the model’s decision-making process, potentially constraining their utility in quantitative applications. Furthermore, the intricacies associated with model design and the meticulous fine-tuning of hyperparameters contribute to the challenges posed by this approach.

Our research aims to develop a systematic methodology for future research in this field. However, like other calibration techniques, this approach is dependent on the specific location for which it is trained. To address this local aspect, a potential solution could involve collecting data from the same sensor model deployed in different locations. Currently, this poses a challenge due to the scarcity of resources for co-locating low-cost sensors with reference stations.

2.2. Dataset Assembly

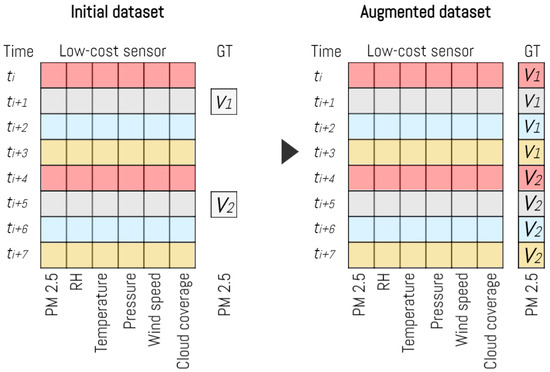

To handle the dissimilarity in data frequencies between the low-cost devices (generating four data entries per hour) and the reference station (providing one data entry per hour), we implemented a data augmentation strategy. Instead of averaging the low-cost device data on an hourly basis, and downsizing the dataset, we opted for upsizing the dataset, as reported in Figure 3. We replicated the ground truth values for each input vector originating from the low-cost devices based on the timestamp proximity.

Figure 3.

Dataset augmentation phase.

This approach allows you to maintain a consistent and synchronised dataset, even though the low-cost devices generate data more frequently than the reference station. By generating four corresponding input vectors for each hour of data collected by the reference station, we ensure that the data from the low-cost devices is still represented accurately in relation to the hourly measurements from the reference station.

This technique can be useful when working with data sources with varying sampling frequencies and can help ensure that all data sources are comparable and synchronised for further analysis or modelling.

As previously mentioned, each data record from low-cost devices comprised six distinct features (PM 2.5, RH, temperature, pressure, wind speed, and cloud coverage), and a timestamp indicating the temporal aspect.

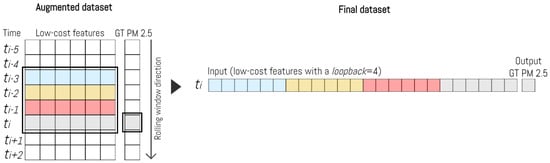

To improve our ability to account for the temporal context, we developed a dataset structure that connects multiple consecutive historical records, which we refer to as a loopback, associated with the same device, as illustrated in Figure 4. The ground truth value for each input vector was determined based on the PM 2.5 reading from Arpa corresponding to the final observation within the interval. This process was systematically executed for every entry in the dataset.

Figure 4.

Dataset construction schema with a loopback of 4.

We consistently applied this approach to all the devices under investigation and integrated the results into a unified dataset. As a result, each entry was enriched with valuable temporal insights.

Then, the dataset was partitioned into training (75%) and test sets (25%), and a shuffling process was applied to the data during this segmentation. It is worth noting that a sensible precaution, which was not integrated into this research, would involve taking into account the data’s temporal characteristics to prevent closely related data points from ending up in both the training and test sets.

The dataset in our study remains unprocessed, and no additional steps have been taken. It is worth mentioning that potential future steps could involve outlier removal, for which we may utilise techniques described in [60,61]. Furthermore, the dataset has not been normalised, and it is noteworthy that, in our results, normalization led to inferior performance.

2.3. MLP Architectures

Our approach involves a systematic exploration of various neural network architectures, each characterised by a unique set of hyperparameters. The primary evaluation metric for their performance is the coefficient of determination, denoted as .

All these architectures have a consistent input size, which is six times the length of the loopback, as there are six features involved. They generate a single neuron output, given the regression nature of the task. The architectural diversity primarily revolves around variations in depth and width.

We conducted experiments using architectures with depths ranging from six to eight layers, maintaining uniform layer widths throughout. Each architecture consisted of a linear layer followed by a Rectified Linear Unit (ReLU) activation function.

In the training process, we employed the L1 Error Loss. Additionally, we introduced a variant of the architecture in which the last hidden layer contained half the number of neurons, specifically when working with seven or eight layers. In total, we considered five distinct architectures, collectively referred to as AirMLP. The nomenclature is based on the layer count, the presence of an “h” denoting half neurons in the last layer, and the number of neurons per layer. For example, an architecture with seven layers and 500 neurons per layer is labelled as AirMLP7-500.

Furthermore, we investigated the potential advantages of the dataset normalization and the inclusion of a batch normalization layer, which normalises data across batches while learning affine parameters. Our experimentation revealed significant findings, indicating that the inclusion of a batch normalization layer, without prior dataset normalization, yielded superior results.

No other regularization techniques were applied.

3. Results

Since we utilised multilayer perceptron architectures, a key consideration was finding the right network size that achieves a harmonious blend of excellent performance, preventing overfitting, and handling computational demands. This prompted us to investigate a range of architectures with different combinations of hyperparameters.

We established a systematic and comprehensive pipeline that facilitated the training of each network with a unique combination of hyperparameters. Specifically, we focused on two key hyperparameters: the number of consecutive old records used to create the new dataset, the loopback, and the number of neurons per layer. These parameters collectively defined the specific architecture of the model being trained. Furthermore, we conducted experiments using three different batch sizes to assess their impact during training.

The hyperparameter values under consideration were as follows: the number of neurons per layer spanned from [300, 500, 700], the number of consecutive records fluctuated between [6, 12, 20], and batch sizes covered [64, 256, 512]. We assessed these hyperparameter combinations across five distinct architectures, resulting in a total of 135 potential configurations, each requiring training and evaluation. This thorough method enabled us to acquire a more profound insight into the network’s performance across a range of conditions.

In Table 4, the R2 scores corresponding to each architecture evaluated on the test set, alongside different sets of hyperparameters, is provided. Each column in the table represents the results obtained for various combinations of batch size and consecutive records, with the column name structured as batch size : loopback number. It is important to note that the values presented are not averages of multiple test results; instead, they represent a single measurement for each configuration.

Table 4.

R2 score on models trained differently. The meaning of the first row is [batch size]:[num records].

The data in the table highlights some noteworthy trends. First and foremost, increasing the loopback consistently results in better performance. This is a valuable insight as it suggests that capturing longer-term temporal dependencies enhances the network’s ability to correct PM 2.5 values. Conversely, increasing the batch size tends to lead to a decrease in the value. This implies that smaller batch sizes might be more effective in training the neural network.

Furthermore, the number of neurons per layer and the depth of the network, indicated by the number of layers, impacts performance. Increasing the number of neurons per layer within the network often results in better values. Keeping a constant number of neurons, deeper networks tend to yield higher values. This suggests that having a more complex model with a higher capacity for learning intricate patterns is beneficial.

As highlighted in the results, the optimal configuration for each model consists of a higher number of neurons per layer and a batch size of 64. However, to gain a more comprehensive understanding, we should investigate the effects of further increasing the number of neurons per layer and assess whether this leads to the onset of overfitting. This analysis will help us strike a balance between model complexity and performance, ensuring that we do not compromise generalization capabilities while seeking optimal performance.

Analyzing the loss graph reveals several valuable insights. Firstly, during the initial training attempts, it becomes evident that overfitting is not a prevalent issue, as demonstrated in Figure 5, Figure 6 and Figure 7. This observation suggests that there is room to consider increasing the network size without immediate concerns about overfitting.

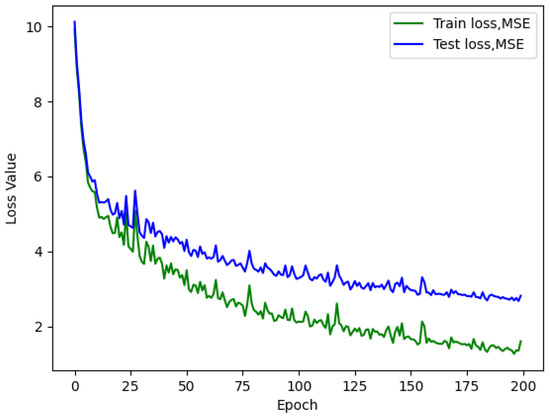

Figure 5.

AirMLP8-700 loss with a batch size of 64, test L1 value of 2.732.

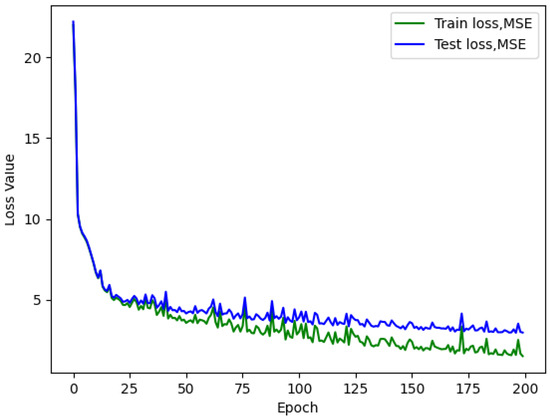

Figure 6.

AirMLP8-700 loss with a batch size of 256, test L1 value of 2.976.

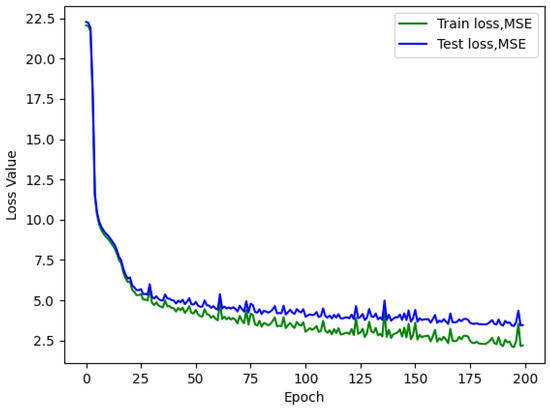

Figure 7.

AirMLP8-700 loss with a batch size of 512; test L1 value of 3.461.

Furthermore, the loss graph unveils two significant insights. When a larger batch size is employed, the network converges more rapidly and smoothly, but at the cost of a higher loss value, ultimately leading to poorer performance. Conversely, when a smaller batch size is used, the network achieves convergence at a lower loss value, even with an identical number of neurons.

The continuous descent of the loss curves implies that there is potential for further enhancement in the model’s performance. Rather than employing a fixed number of epochs for training, as demonstrated in this study, the implementation of an early stopping technique warrants consideration and could yield substantial benefits.

Following our initial assessment, which identified the most effective hyperparameter set for each model as a batch size of 64, a loopback of 20 consecutive records (indicating the inclusion of 19 past observations in addition to the current one being corrected), and 700 neurons per layer, it becomes imperative to investigate the potential benefits of further increasing the number of neurons per layer.

In pursuit of this objective, we carried out additional training sessions for all five models, using varying neuron counts of 900, 1100, and 1500 per layer, while maintaining a consistent 20 consecutive records and a batch size of 64. As depicted in Table 5, it is evident that the model achieves optimal performance with seven layers and 1500 neurons in each layer. This observation holds for different neuron counts as well, signifying that employing seven layers seems adequate for representing this regression task effectively.

Table 5.

Performance with a batch size of 64, and a loopback of 20, increasing the number of neurons per layer.

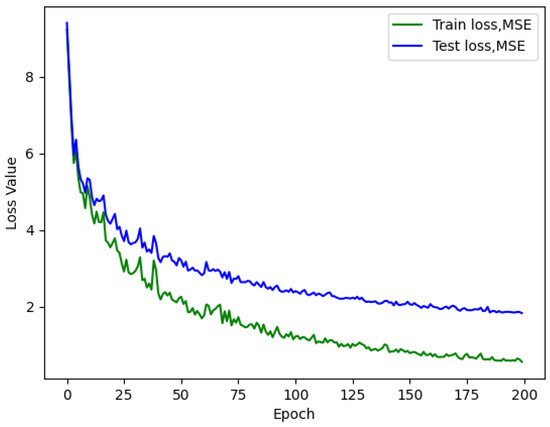

The outcomes depicted in Figure 8 are rather remarkable, as they reveal not only enhancements in terms of the R2 metrics but also significant improvements in the loss reduction process during training. The loss curves now exhibit a smoother descent, and they converge to lower values compared to the previous configurations. These findings strongly indicate that augmenting the number of neurons per layer has exerted a positive influence on both the model’s predictive performance and its training stability.

Figure 8.

AirMLP7-1500 loss. Batch size of 64 and a loopback of 20.

Upon inspecting the figures, it becomes evident that there is still no indication of overfitting throughout the training process. Notably, the AirMLP7-1500 model performs well, potentially serving as a solid foundation for further exploration.

While it is tempting to increase the network’s dimensions even further, it is imperative to carefully weigh the trade-off between potential performance enhancements, which may be modest, and the accompanying escalation in computational requirements.

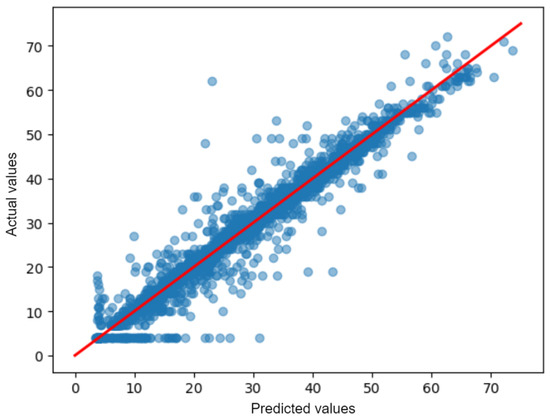

Evaluating the AirMLP7-1500 model’s performance on the test dataset involves contrasting its predicted results with the actual values. To highlight this, we drew the predicted test scatter plot in Figure 9. The ideal scenario is when these predicted values (blue dots) perfectly align along the diagonal line (the red line in Figure 9), indicating that the model’s predictions match the reference station’s actual values. Any deviations from this diagonal line signify, instead, the magnitude of prediction errors and provide insights into the model’s performance. The Figure shows a strong correlation between the predicted values generated by the model and the original values (ground truth); indeed, the predicted values are closely clustered around the diagonal line. This suggests that the neural network AirMLP7-1500 is effective in accurately predicting PM 2.5 concentrations.

Figure 9.

Comparison between predicted results and actual values from the AirMLP7-1500 model on the test dataset (blue dots).

The figure also shows a negative consequence of the lower threshold applied to the data by the Arpa Agency (described in Section 2.1) is visible in the left-bottom part of the plot. The data equal to such threshold are horizontally aligned at , and the NN reproduce this threshold, visible at .

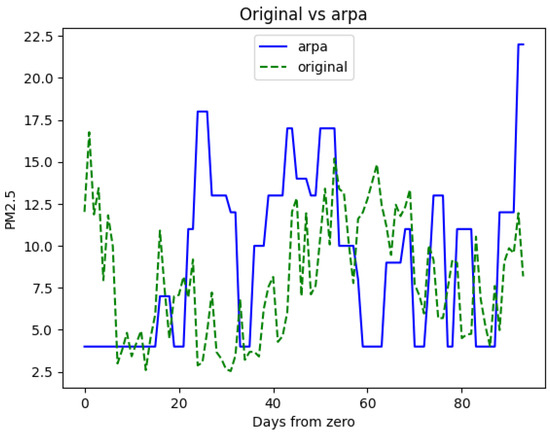

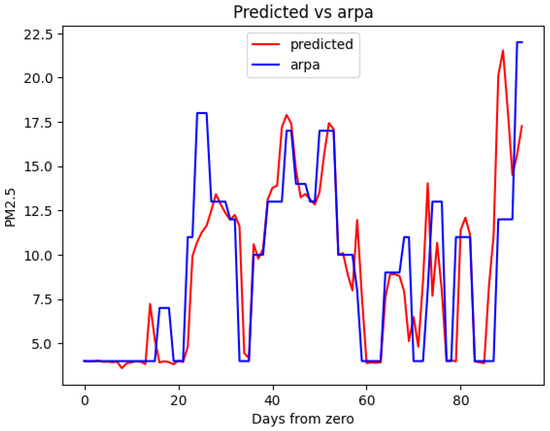

Now, let us examine a secondary test dataset comprising consecutive data from a few days, which is not part of the training set. The PM 2.5 values originally recorded and the PM 2.5 values generated by the AirMLP7-1500 model are contrasted with the ground truth (Arpa), as illustrated in Figure 10 and Figure 11, respectively. This analysis will offer insights into the model’s ability to adapt to novel, unobserved days and its performance in a real-world context beyond the training data.

Figure 10.

Original vs. Arpa ground truth data.

Figure 11.

AirMLP7-1500’s predicted output vs. Arpa ground truth data.

The first thing you may notice is the notable improvement in sensor data alignment compared to Arpa when introducing the predictions from the AirMLP7-1500 model. Moreover, the predictions do not exhibit any unusual behaviour, indicating a smooth correction of the data.

Some minor observations are that in both Figure 10 and Figure 11, the Arpa line displays plateaus due to the replication of data to match the 15-min low-cost granularity frequency, and in Figure 11, there are instances where the model does not precisely reconstruct certain peaks. For instance, around day 25, the neural network entirely misses a peak, and near day 90, it anticipates one. These discrepancies may be attributed to the data augmentation method employed, which might lack access to the exact PM concentration values.

A particular aspect that can be noticed is the lower threshold imposed by Arpa through the medium bound technique, as discussed in Section 2.1, which is learned by the neural network. This lower threshold is visible in Figure 10 and Figure 11 in the line representing Arpa. It does not reach a PM concentration of 0 but is imposed at 4 g/m.

These outcomes are indicators of the model’s generalization capabilities and provide further evidence that the network does not suffer from overfitting. They highlight the robustness of the model and its ability to provide accurate PM 2.5 estimates, reinforcing its potential for real-world applications in air quality monitoring.

4. Discussion

In this Section, we explore significant discoveries, potential implications, and future directions.

The results, provided in Section 3, underscored the capability of MLPs to effectively represent and address the problem. These networks yield commendable scores. It is important to note that overfitting is not observed in this context, affirming the robustness of the models. Furthermore, the batch size significantly influenced performance, with larger batch sizes leading to a decrease in performance. On the other hand, employing a wider network with 1500 neurons per layer proved to be the optimal choice. Additionally, networks with seven or eight layers demonstrated a better performance. The inclusion of a loopback mechanism, allowing the network to consider a longer history of data, proved to be advantageous. The performance results indicate that leveraging more historical data leads to improved model accuracy.

Regarding the influence of RH, our study emphasizes the significant impact of humidity on the accuracy of low-cost PM 2.5 measurements. When RH surpasses a threshold of 70%, low-cost sensors tend to overestimate PM 2.5 levels. This distortion can undermine the reliability of air quality assessments, highlighting the limitations of such data in pollution-related decision-making.

Among the models, the AirMLP7-1500 model stood out, achieving an impressive R2 score of 0.932. The achieved R2 result aligns with the performance of neural networks reported in the existing literature, which typically surpasses 0.9 [24]. This again underscores the potential for AI-based correction methods to enhance the accuracy of low-cost sensor data.

The proposed method is not without limitations, particularly in terms of dataset shuffling and augmentation techniques. The specular pattern between training loss and test loss is shown in all the loss figures. This phenomenon could likely be attributed to the initial shuffling of data, which may not have adequately accounted for the presence of very similar data points in both the training and test sets. Further investigation and refinement of the data splitting and shuffling procedures could potentially mitigate this issue and provide a more accurate representation of the model’s performance on unseen data.

Additionally, in the context of handling Arpa reference station data, rather than the current approach of oversampling and repeating values to achieve finer granularity from 1 h to 15 min frequency, it is crucial to explore alternative strategies. One such strategy involves developing a weighted mean between the value at a given time point and the subsequent hour’s value. This exploratory approach holds the potential to not only enhance the quality of the ground truth data but also mitigate the erroneous reconstruction of peaks.

An important limitation to acknowledge is that the proposed correction models are tailored to a specific location and a particular set of low-cost devices. As previously highlighted, applying these models to different sensors or locations may yield sub-optimal results.

Numerous promising avenues for future research emerge from this study. One immediate direction is to investigate the impact of further increasing the model’s complexity, with a focus on balancing improved performance with computational resources. Regularization techniques like dropout could also be explored to enhance model robustness.

Considering seasonality when training PM 2.5 correction models is another promising direction. Seasonal variations in air quality are a well-known phenomenon [62,63]. By training separate models tailored to different seasons, it is possible to capture and correct seasonal variations in PM 2.5 measurements. Moreover, incorporating temporal information into the dataset is a strategy that can further enhance the network’s ability to differentiate between diverse environmental contexts. This could involve including features such as day of the week, time of day, or even specific holidays or events that might impact air quality. By accounting for both seasonality and temporal dynamics, the models can become more adaptive and provide accurate corrections across various environmental scenarios. This approach aligns with the idea of developing context-aware models that tailor their corrections based on the prevailing conditions [64], ultimately advancing the effectiveness of low-cost sensor data in air quality monitoring.

In addition, extending the models’ evaluation to new, unseen data from diverse geographical locations is essential to assess their generalization capabilities [65]. This would help determine the models’ adaptability to varying environmental conditions. Incorporating data from low-cost laser scattering sensors manufactured by different companies is also an important consideration. Different sensor models may exhibit unique characteristics and behaviours. Testing the models with data from various sensor manufacturers can help validate their robustness and ensure that they perform effectively across a spectrum of sensor types.

Beyond correction, the models developed here could be adapted for anomaly detection, helping to identify unusual PM 2.5 readings that may indicate pollution events or sensor malfunctions. Additionally, transitioning from correction to prediction could enable the forecasting of future PM 2.5 levels based on atmospheric conditions, contributing to proactive pollution management at the installation site.

Overall, the findings of this research provide valuable insights into the potential of neural networks, specifically MLPs, in improving the accuracy of low-cost sensor measurements. The study’s results contribute to the broader understanding of machine learning techniques in air quality monitoring and provide a foundation for further exploration and application in this field.

5. Conclusions

In this paper, we have addressed a critical challenge, which is improving the accuracy of PM 2.5 measurements acquired from low-cost devices equipped with an SPS30 sensor using MLP networks.

Our research employed data collected from five distinct low-cost devices for five months. These devices were located near a Tecora Arpa reference station in Turin. The data underwent augmentation to align with the low-cost device granularity, and it was provided to the neural networks in its raw form.

Remarkably, the AirMLP7-1500 model, which consists of seven layers, each containing 1500 neurons, and includes a loopback of 20, achieved an impressive R2 score of 0.932, effectively mitigating the impact of hygroscopicity.

The significance of this study provides the foundation for a systematic methodology that can be adapted for training similar models in various environmental contexts. This level of customization is a key strength, as it enables the model to effectively capture the complex relationships between local atmospheric parameters and the accurate detection of PM 2.5 concentration levels.

Looking ahead, our work opens the door to further investigations. These avenues include exploring the impact of increasing network complexity while guarding against overfitting, the application of regularization techniques, and enhanced data preprocessing before the training phase. Considerations such as seasonality, improved data preprocessing, and extending the applicability of the models to various geographical locations and sensor types are also promising directions for future research.

We argue that this study serves as a solid foundation for broader and more versatile inquiries within the realm of air quality monitoring and correction, setting the stage for further advancements in this critical field.

Author Contributions

Conceptualization, L.P. and M.C.; methodology, L.P. and M.C.; software, L.Z. and M.C.; validation, L.Z. and M.C. All authors have read and agreed to the published version of the manuscript.

Funding

Parts of the research published in this paper were carried out within the Ph.D. research project of Martina Casari titled “Artificial intelligence techniques to tackle urban air pollution”, supported by the Italian Ministry of Education, University and Research under the PON R&I 2014-2020 programme (FSE React-EU) DM 1061, 2021.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The source code and dataset can be accessed on Zenodo via the following links: [66,67].

Acknowledgments

We would like to express our appreciation to Wiseair and Arpa Torino for their valuable contributions to this study. The data collected and shared by both organizations have played a pivotal role in advancing our research and enhancing our understanding of air quality.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Sharma, S.; Chandra, M.; Kota, S.H. Health effects associated with PM 2.5: A systematic review. Curr. Pollut. Rep. 2020, 6, 345–367. [Google Scholar] [CrossRef]

- Fuller, R.; Landrigan, P.J.; Balakrishnan, K.; Bathan, G.; Bose-O’Reilly, S.; Brauer, M.; Caravanos, J.; Chiles, T.; Cohen, A.; Corra, L.; et al. Pollution and health: A progress update. Lancet Planet. Health 2022, 6, e535–e547. [Google Scholar] [CrossRef]

- Pope, C.A.; Coleman, N.; Pond, Z.A.; Burnett, R.T. Fine particulate air pollution and human mortality: 25+ years of cohort studies. Environ. Res. 2020, 183, 108924. [Google Scholar] [CrossRef] [PubMed]

- Pope, C.A., III; Dockery, D.W. Health effects of fine particulate air pollution: Lines that connect. EM Air Waste Manag. Assoc. Mag. Environ. Manag. 2006, 56, 709–742. [Google Scholar] [CrossRef] [PubMed]

- Thangavel, P.; Park, D.; Lee, Y.C. Recent insights into particulate matter (PM 2.5)-mediated toxicity in humans: An overview. Int. J. Environ. Res. Public Health 2022, 19, 7511. [Google Scholar] [CrossRef] [PubMed]

- Luan, T.; Guo, X.; Guo, L.; Zhang, T. Quantifying the relationship between PM2.5 concentration, visibility and planetary boundary layer height for long-lasting haze and fog–haze mixed events in Beijing. Atmos. Chem. Phys. 2018, 18, 203–225. [Google Scholar] [CrossRef]

- Wu, W.; Zhang, Y. Effects of particulate matter (PM2.5) and associated acidity on ecosystem functioning: Response of leaf litter breakdown. Environ. Sci. Pollut. Res. 2018, 25, 30720–30727. [Google Scholar] [CrossRef]

- Zheng, T.; Bergin, M.H.; Johnson, K.K.; Tripathi, S.N.; Shirodkar, S.; Landis, M.S.; Sutaria, R.; Carlson, D.E. Field evaluation of low-cost particulate matter sensors in high-and low-concentration environments. Atmos. Meas. Tech. 2018, 11, 4823–4846. [Google Scholar] [CrossRef]

- US EPA NAAQS Table. 2016. Available online: https://www.epa.gov/criteria-air-pollutants/naaqs-table (accessed on 20 September 2023).

- World Health Organization Regional Office for Europe. Air Quality Guidelines for Europe; World Health Organization, Regional Office for Europe: Copenhagen, Denmark, 2000. [Google Scholar]

- Chen, T.; He, J.; Lu, X.; She, J.; Guan, Z. Spatial and Temporal Variations of PM2.5 and Its Relation to Meteorological Factors in the Urban Area of Nanjing, China. Int. J. Environ. Res. Public Health 2016, 13, 921. [Google Scholar] [CrossRef]

- Chen, M.; Yuan, W.; Cao, C.; Buehler, C.; Gentner, D.R.; Lee, X. Development and Performance Evaluation of a Low-Cost Portable PM2.5 Monitor for Mobile Deployment. Sensors 2022, 22, 2767. [Google Scholar] [CrossRef]

- Hart, R.; Liang, L.; Dong, P. Monitoring, mapping, and modeling spatial–temporal patterns of PM2.5 for improved understanding of air pollution dynamics using portable sensing technologies. Int. J. Environ. Res. Public Health 2020, 17, 4914. [Google Scholar] [CrossRef]

- Schilt, U.; Barahona, B.; Buck, R.; Meyer, P.; Kappani, P.; Möckli, Y.; Meyer, M.; Schuetz, P. Low-Cost Sensor Node for Air Quality Monitoring: Field Tests and Validation of Particulate Matter Measurements. Sensors 2023, 23, 794. [Google Scholar] [CrossRef]

- Munir, S.; Mayfield, M.; Coca, D.; Jubb, S.A.; Osammor, O. Analysing the performance of low-cost air quality sensors, their drivers, relative benefits and calibration in cities—A case study in Sheffield. Environ. Monit. Assess. 2019, 191, 94. [Google Scholar] [CrossRef] [PubMed]

- Danek, T.; Zaręba, M. The use of public data from low-cost sensors for the geospatial analysis of air pollution from solid fuel heating during the COVID-19 pandemic spring period in Krakow, Poland. Sensors 2021, 21, 5208. [Google Scholar] [CrossRef] [PubMed]

- Zareba, M.; Dlugosz, H.; Danek, T.; Weglinska, E. Big-Data-Driven Machine Learning for Enhancing Spatiotemporal Air Pollution Pattern Analysis. Atmosphere 2023, 14, 760. [Google Scholar] [CrossRef]

- Peltier, R.E.; Castell, N.; Clements, A.L.; Dye, T.; Hüglin, C.; Kroll, J.H.; Lung, S.C.C.; Ning, Z.; Parsons, M.; Penza, M.; et al. An Update on Low-Cost Sensors for the Measurement of Atmospheric Composition, December 2020; WMO: Geneva, Switzerland, 2021. [Google Scholar]

- Ahn, K.H.; Lee, H.; Lee, H.D.; Kim, S.C. Extensive evaluation and classification of low-cost dust sensors in laboratory using a newly developed test method. Indoor Air 2020, 30, 137–146. [Google Scholar] [CrossRef] [PubMed]

- Raysoni, A.U.; Pinakana, S.D.; Mendez, E.; Wladyka, D.; Sepielak, K.; Temby, O. A Review of Literature on the Usage of Low-Cost Sensors to Measure Particulate Matter. Earth 2023, 4, 168–186. [Google Scholar] [CrossRef]

- Dhall, S.; Mehta, B.; Tyagi, A.; Sood, K. A review on environmental gas sensors: Materials and technologies. Sensors Int. 2021, 2, 100116. [Google Scholar] [CrossRef]

- Li, J.; Biswas, P. Calibration and applications of low-cost particle sensors: A review of recent advances. In Aerosols; De Gruyter: Berlin, Germany, 2022; pp. 91–114. [Google Scholar] [CrossRef]

- Liang, L. Calibrating low-cost sensors for ambient air monitoring: Techniques, trends, and challenges. Environ. Res. 2021, 197, 111163. [Google Scholar] [CrossRef]

- Liang, L.; Daniels, J. What Influences Low-cost Sensor Data Calibration?—A Systematic Assessment of Algorithms, Duration, and Predictor Selection. Aerosol Air Qual. Res. 2022, 22, 220076. [Google Scholar] [CrossRef]

- Wiseair Site. Available online: https://wiseair.vision/ (accessed on 20 September 2023).

- Turin Air Quality Monitoring Station. Available online: http://www.cittametropolitana.torino.it/cms/ambiente/qualita-aria/rete-monitoraggio/stazioni-monitoraggio (accessed on 13 September 2023).

- Turin Air Quality Monitoring Station Characteristics. Available online: http://www.sistemapiemonte.it/ambiente/srqa/stazioni/pdf/226.pdf (accessed on 13 September 2023).

- Sensirion PM2.5 Sensor for HVAC and Air Quality Applications SPS30. Available online: https://sensirion.com/products/catalog/SPS30/ (accessed on 13 September 2023).

- Kuula, J.; Mäkelä, T.; Aurela, M.; Teinilä, K.; Varjonen, S.; González, Ó.; Timonen, H. Laboratory evaluation of particle-size selectivity of optical low-cost particulate matter sensors. Atmos. Meas. Tech. 2020, 13, 2413–2423. [Google Scholar] [CrossRef]

- Giorgio, N. Relation between Cloud Cover and Relative Humidity. B.S. Thesis, Universiteit Utrecht, Utrecht, The Netherlands, 2017. [Google Scholar]

- Hagan, D.H.; Kroll, J.H. Assessing the accuracy of low-cost optical particle sensors using a physics-based approach. Atmos. Meas. Tech. 2020, 13, 6343–6355. [Google Scholar] [CrossRef]

- Alfano, B.; Barretta, L.; Giudice, A.D.; De Vito, S.; Francia, G.D.; Esposito, E.; Formisano, F.; Massera, E.; Miglietta, M.L.; Polichetti, T. A review of low-cost particulate matter sensors from the developers’ perspectives. Sensors 2020, 20, 6819. [Google Scholar] [CrossRef] [PubMed]

- Giordano, M.R.; Malings, C.; Pandis, S.N.; Presto, A.A.; McNeill, V.; Westervelt, D.M.; Beekmann, M.; Subramanian, R. From low-cost sensors to high-quality data: A summary of challenges and best practices for effectively calibrating low-cost particulate matter mass sensors. J. Aerosol Sci. 2021, 158, 105833. [Google Scholar] [CrossRef]

- Malings, C.; Tanzer, R.; Hauryliuk, A.; Saha, P.K.; Robinson, A.L.; Presto, A.A.; Subramanian, R. Fine particle mass monitoring with low-cost sensors: Corrections and long-term performance evaluation. Aerosol Sci. Technol. 2020, 54, 160–174. [Google Scholar] [CrossRef]

- Jayaratne, R.; Liu, X.; Ahn, K.H.; Asumadu-Sakyi, A.; Fisher, G.; Gao, J.; Mabon, A.; Mazaheri, M.; Mullins, B.; Nyaku, M.; et al. Low-cost PM2.5 sensors: An assessment of their suitability for various applications. Aerosol Air Qual. Res. 2020, 20, 520–532. [Google Scholar] [CrossRef]

- Ueda, S.; Osada, K.; Yamagami, M.; Ikemori, F.; Hisatsune, K. Estimating mass concentration using a low-cost portable particle counter based on full-year observations: Issues to obtain reliable atmospheric PM2.5 data. Asian J. Atmos. Environ. 2020, 14, 155–169. [Google Scholar] [CrossRef]

- Gao, R.; Telg, H.; McLaughlin, R.; Ciciora, S.; Watts, L.; Richardson, M.; Schwarz, J.; Perring, A.; Thornberry, T.; Rollins, A.; et al. A light-weight, high-sensitivity particle spectrometer for PM2.5 aerosol measurements. Aerosol Sci. Technol. 2016, 50, 88–99. [Google Scholar] [CrossRef]

- SDS011 Datasheet. Available online: https://cdn-reichelt.de/documents/datenblatt/X200/SDS011-DATASHEET.pdf (accessed on 20 September 2023).

- SPS30 Datasheet. Available online: https://sensirion.com/media/documents/8600FF88/616542B5/Sensirion_PM_Sensors_Datasheet_SPS30.pdf (accessed on 20 September 2023).

- HPMA115C0 Datasheet. Available online: https://media.distrelec.com/Web/Downloads/_t/ds/HPMA115C0-003_eng_tds.pdf (accessed on 20 September 2023).

- OPC-N2 Datasheet. Available online: https://parmex.com.mx/show_catalogue_pdf/142183/1 (accessed on 20 September 2023).

- 10000 Ambient Air Monitor Datasheet. Available online: https://particlesplus.com/wp-content/datasheets/10000/Particles%20Plus%2010000%20Datasheet.pdf (accessed on 20 September 2023).

- 12000 Ambient Air Monitor Datasheet. Available online: https://particlesplus.com/wp-content/datasheets/12000/Particles%20Plus%2012000%20Datasheet.pdf (accessed on 20 September 2023).

- AM520 Datasheet. Available online: https://tsi.com/getmedia/3b6a2fdc-b348-466f-b6f6-b2014be9a0d5/SidePak_AM520-AM520i_A4_5001738_RevC_Web?ext=.pdf (accessed on 20 September 2023).

- AQMESH Technical Documentation. Available online: https://d3pcsg2wjq9izr.cloudfront.net/files/84570/download/667711/10reasonswhyyoushouldchooseAQMesh.pdf (accessed on 20 September 2023).

- Jayaratne, R.; Liu, X.; Thai, P.; Dunbabin, M.; Morawska, L. The influence of humidity on the performance of a low-cost air particle mass sensor and the effect of atmospheric fog. Atmos. Meas. Tech. 2018, 11, 4883–4890. [Google Scholar] [CrossRef]

- Wang, P.; Xu, F.; Gui, H.; Wang, H.; Chen, D.R. Effect of relative humidity on the performance of five cost-effective PM sensors. Aerosol Sci. Technol. 2021, 55, 957–974. [Google Scholar] [CrossRef]

- Won, W.S.; Oh, R.; Lee, W.; Ku, S.; Su, P.C.; Yoon, Y.J. Hygroscopic properties of particulate matter and effects of their interactions with weather on visibility. Sci. Rep. 2021, 11, 16401. [Google Scholar] [CrossRef]

- Carslaw, K.S. Chapter 5—Aerosol processes. In Aerosols and Climate; Carslaw, K.S., Ed.; Elsevier: Amsterdam, The Netherlands, 2022; pp. 135–185. [Google Scholar] [CrossRef]

- Chacón-Mateos, M.; Laquai, B.; Vogt, U.; Stubenrauch, C. Evaluation of a low-cost dryer for a low-cost optical particle counter. Atmos. Meas. Tech. 2022, 15, 7395–7410. [Google Scholar] [CrossRef]

- Samad, A.; Melchor Mimiaga, F.E.; Laquai, B.; Vogt, U. Investigating a low-cost dryer designed for low-cost PM sensors measuring ambient air quality. Sensors 2021, 21, 804. [Google Scholar] [CrossRef]

- Kim, H.; Kim, J.; Roh, S. Effects of Gas and Steam Humidity on Particulate Matter Measurements Obtained Using Light-Scattering Sensors. Sensors 2023, 23, 6199. [Google Scholar] [CrossRef] [PubMed]

- Samad, A.; Obando Nuñez, D.R.; Solis Castillo, G.C.; Laquai, B.; Vogt, U. Effect of relative humidity and air temperature on the results obtained from low-cost gas sensors for ambient air quality measurements. Sensors 2020, 20, 5175. [Google Scholar] [CrossRef]

- DeSouza, P.; Kahn, R.; Stockman, T.; Obermann, W.; Crawford, B.; Wang, A.; Crooks, J.; Li, J.; Kinney, P. Calibrating networks of low-cost air quality sensors. Atmos. Meas. Tech. 2022, 15, 6309–6328. [Google Scholar] [CrossRef]

- Crilley, L.R.; Shaw, M.; Pound, R.; Kramer, L.J.; Price, R.; Young, S.; Lewis, A.C.; Pope, F.D. Evaluation of a low-cost optical particle counter (Alphasense OPC-N2) for ambient air monitoring. Atmos. Meas. Tech. 2018, 11, 709–720. [Google Scholar] [CrossRef]

- Casari, M.; Po, L. Mitigating the Impact of Humidity on Low-Cost PM Sensors. In Proceedings of the 3rd National Conference on Artificial Intelligence, Organized by CINI, Pisa, Italy, 29–31 May 2023; Volume 3486, pp. 599–604. [Google Scholar]

- Bulot, F.M.; Ossont, S.J.; Morris, A.K.; Basford, P.J.; Easton, N.H.; Mitchell, H.L.; Foster, G.L.; Cox, S.J.; Loxham, M. Characterisation and calibration of low-cost PM sensors at high temporal resolution to reference-grade performance. Heliyon 2023, 9, e15943. [Google Scholar] [CrossRef] [PubMed]

- Brattich, E.; Bracci, A.; Zappi, A.; Morozzi, P.; Di Sabatino, S.; Porcù, F.; Di Nicola, F.; Tositti, L. How to Get the Best from Low-Cost Particulate Matter Sensors: Guidelines and Practical Recommendations. Sensors 2020, 20, 3073. [Google Scholar] [CrossRef] [PubMed]

- Kosmopoulos, G.; Salamalikis, V.; Wilbert, S.; Zarzalejo, L.F.; Hanrieder, N.; Karatzas, S.; Kazantzidis, A. Investigating the Sensitivity of Low-Cost Sensors in Measuring Particle Number Concentrations across Diverse Atmospheric Conditions in Greece and Spain. Sensors 2023, 23, 6541. [Google Scholar] [CrossRef]

- Rollo, F.; Sudharsan, B.; Po, L.; Breslin, J.G. Air Quality Sensor Network Data Acquisition, Cleaning, Visualization, and Analytics: A Real-world IoT Use Case. In Proceedings of the UbiComp/ISWC ’21: 2021 ACM International Joint Conference on Pervasive and Ubiquitous Computing and 2021 ACM International Symposium on Wearable Computers, Virtual Event, 21–25 September 2021; Doryab, A., Lv, Q., Beigl, M., Eds.; ACM: New York, NY, USA, 2021; pp. 67–68. [Google Scholar] [CrossRef]

- Rollo, F.; Bachechi, C.; Po, L. Anomaly Detection and Repairing for Improving Air Quality Monitoring. Sensors 2023, 23, 640. [Google Scholar] [CrossRef]

- Bodor, Z.; Bodor, K.; Keresztesi, Á.; Szép, R. Major air pollutants seasonal variation analysis and long-range transport of PM 10 in an urban environment with specific climate condition in Transylvania (Romania). Environ. Sci. Pollut. Res. 2020, 27, 38181–38199. [Google Scholar] [CrossRef] [PubMed]

- Tian, Y.; Zhang, L.; Wang, Y.; Song, J.; Sun, H. Temporal and Spatial Trends in Particulate Matter and the Responses to Meteorological Conditions and Environmental Management in Xi’an, China. Atmosphere 2021, 12, 1112. [Google Scholar] [CrossRef]

- Dejchanchaiwong, R.; Tekasakul, P.; Saejio, A.; Limna, T.; Le, T.C.; Tsai, C.J.; Lin, G.Y.; Morris, J. Seasonal Field Calibration of Low-Cost PM2.5 Sensors in Different Locations with Different Sources in Thailand. Atmosphere 2023, 14, 496. [Google Scholar] [CrossRef]

- Nowack, P.; Konstantinovskiy, L.; Gardiner, H.; Cant, J. Machine learning calibration of low-cost NO2 and PM 10 sensors: Non-linear algorithms and their impact on site transferability. Atmos. Meas. Tech. 2021, 14, 5637–5655. [Google Scholar] [CrossRef]

- Martina Casari, L.P.; Zini, L. AirMLP—Source Code. 2023. Available online: https://zenodo.org/records/10044375 (accessed on 1 November 2023).

- Martina Casari, L.P.; Zini, L. AirMLP—Data. 2023. Available online: https://zenodo.org/records/10037781 (accessed on 1 November 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).