2.3. ROS/ROS 2

In robotic systems with divided architectures, the two most popular current frameworks are the Robot Operating System (ROS) and Robot Operating System 2 (ROS 2). Although similar in name, their internal publish/subscribe models have fundamentally different protocols. ROS uses a custom message queuing protocol similar to Advanced Message Queuing Protocol (AMQP), in which two types of nodes are used: ROS nodes and a ROS Master. A roscore is a computational entity that can communicate with other nodes and can publish and subscribe to receive and transmit data. The ROS Master acts as central hub for the ROS ecosystem, keeping a registry of active nodes, services, and topics to ease communication between nodes, i.e., discovery registration. Multiple ROS nodes can be executed at the same time and be registered to a roscore; however, cooperation between multiple roscores is not natively supported by ROS [

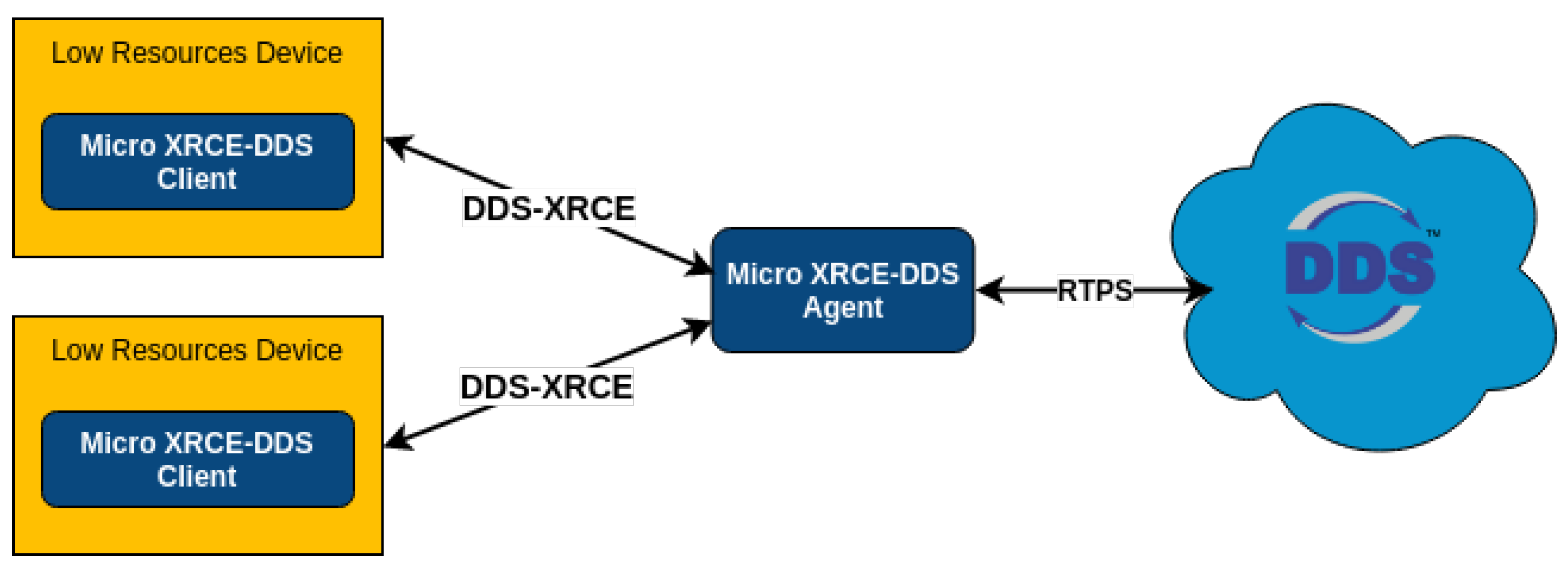

9]. In contrast, ROS 2 implements a decentralized architecture using a Data Distribution Service (DDS), which eliminates the need for a ROS Master and automates the discovery registration. ROS 2 nodes can transmit and receive data without central coordination, with the direct result that ROS2 incurs less latency than ROS. ROS 2 enables the use of several node types (regular, real-time, intra-process, composable, and lifecycle nodes), enabling cooperation between different layers of a system as long as the ROS 2 nodes of interest are under the same DDS domain [

10,

11].

2.9. Latency Assessment

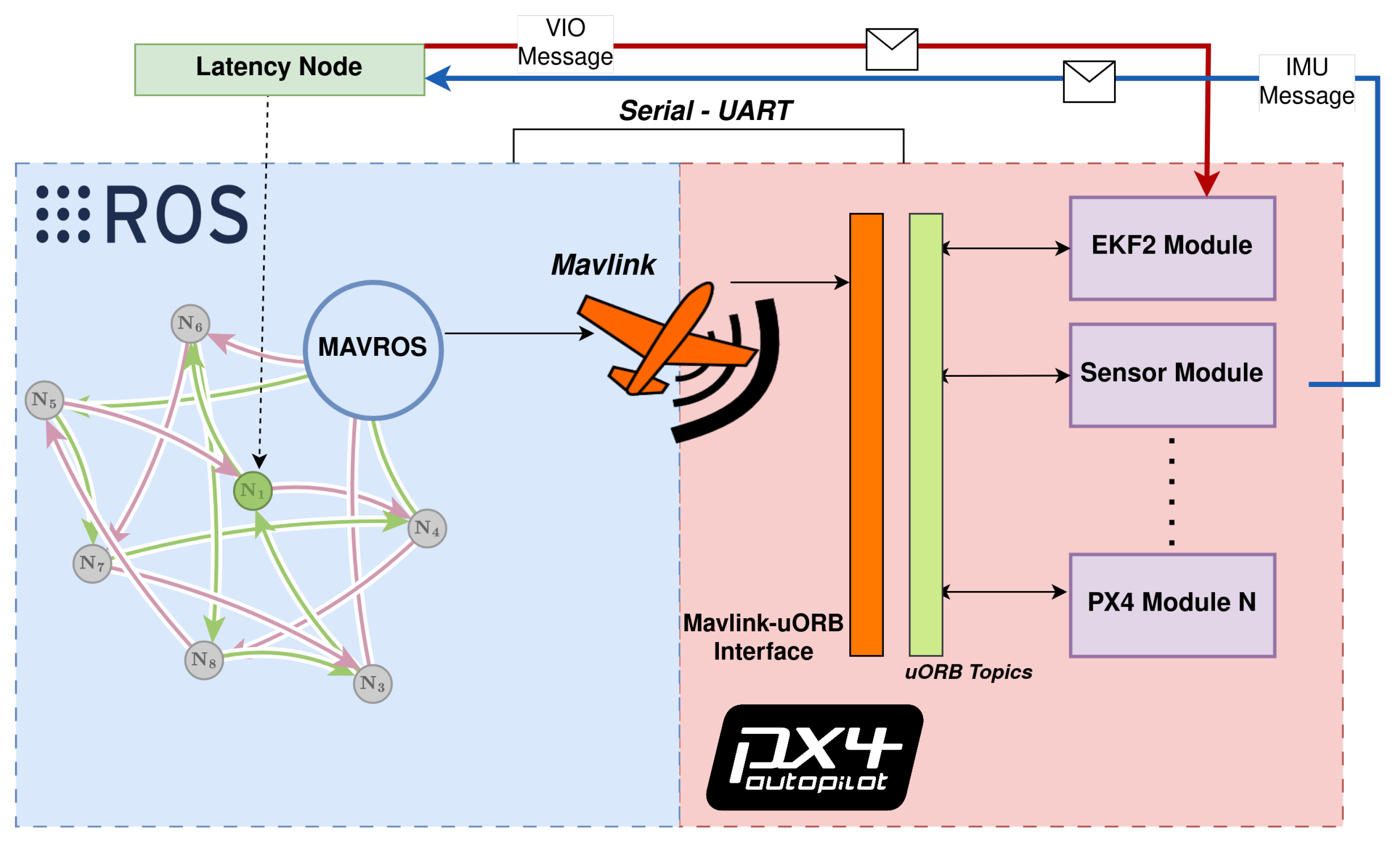

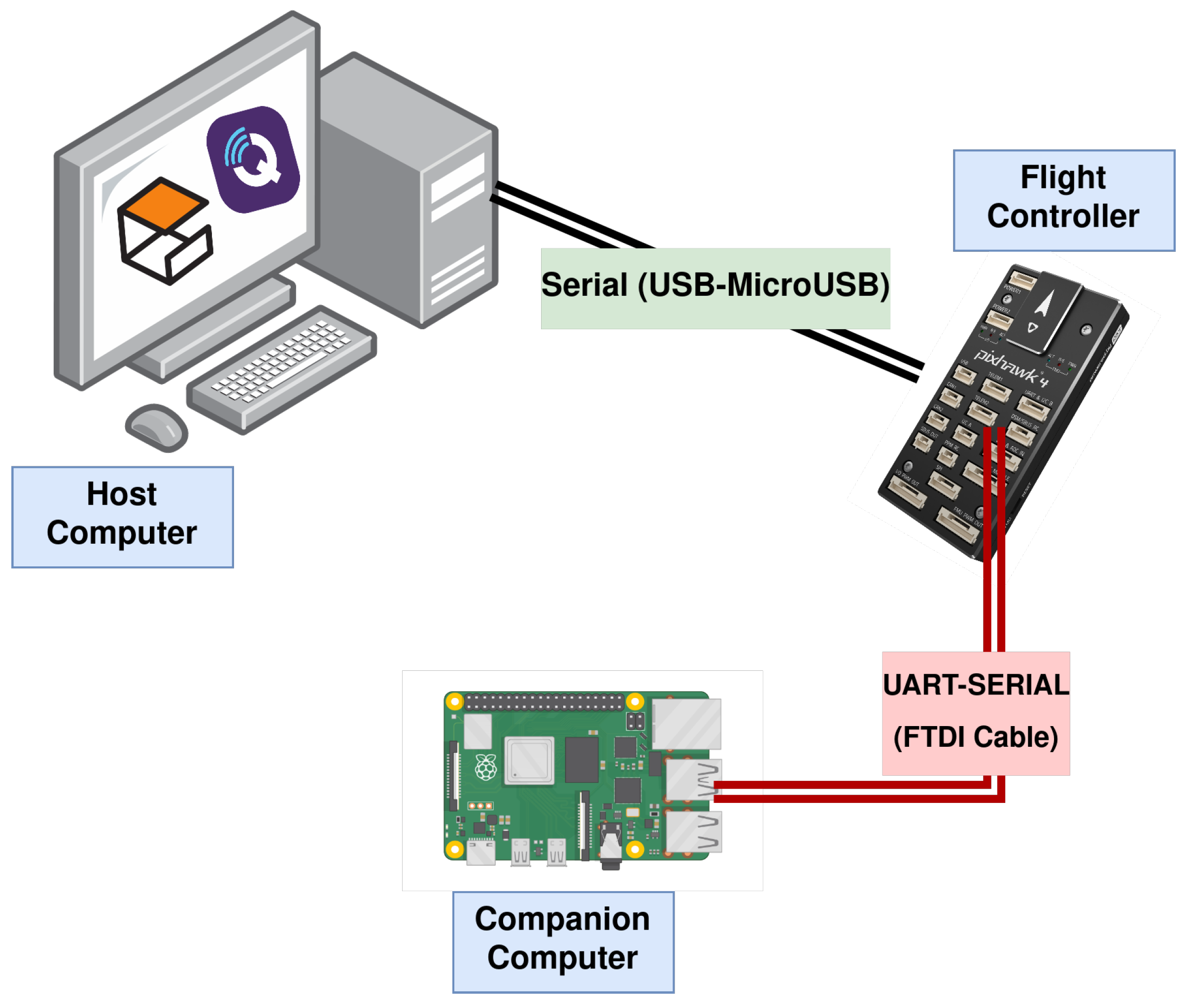

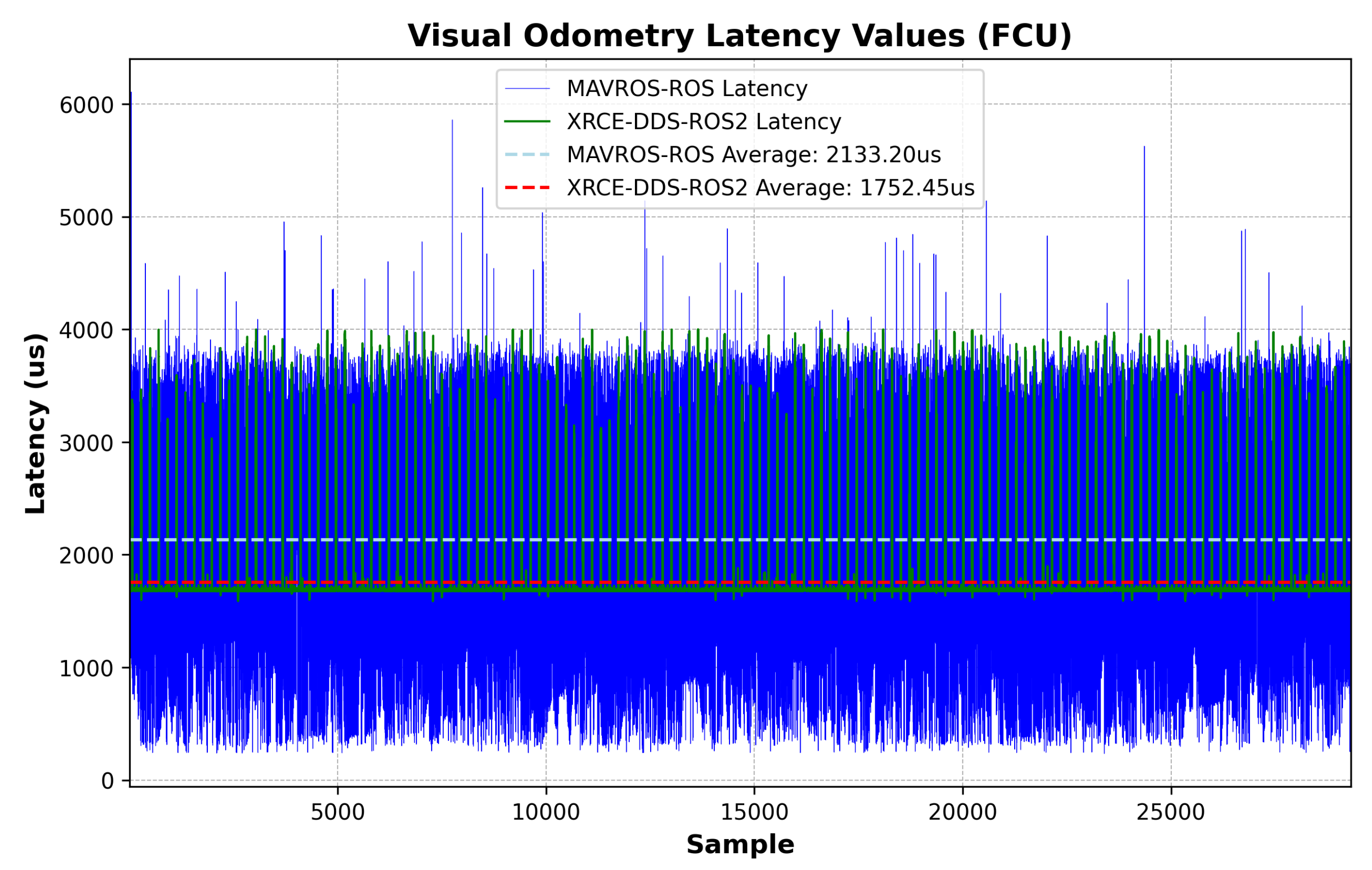

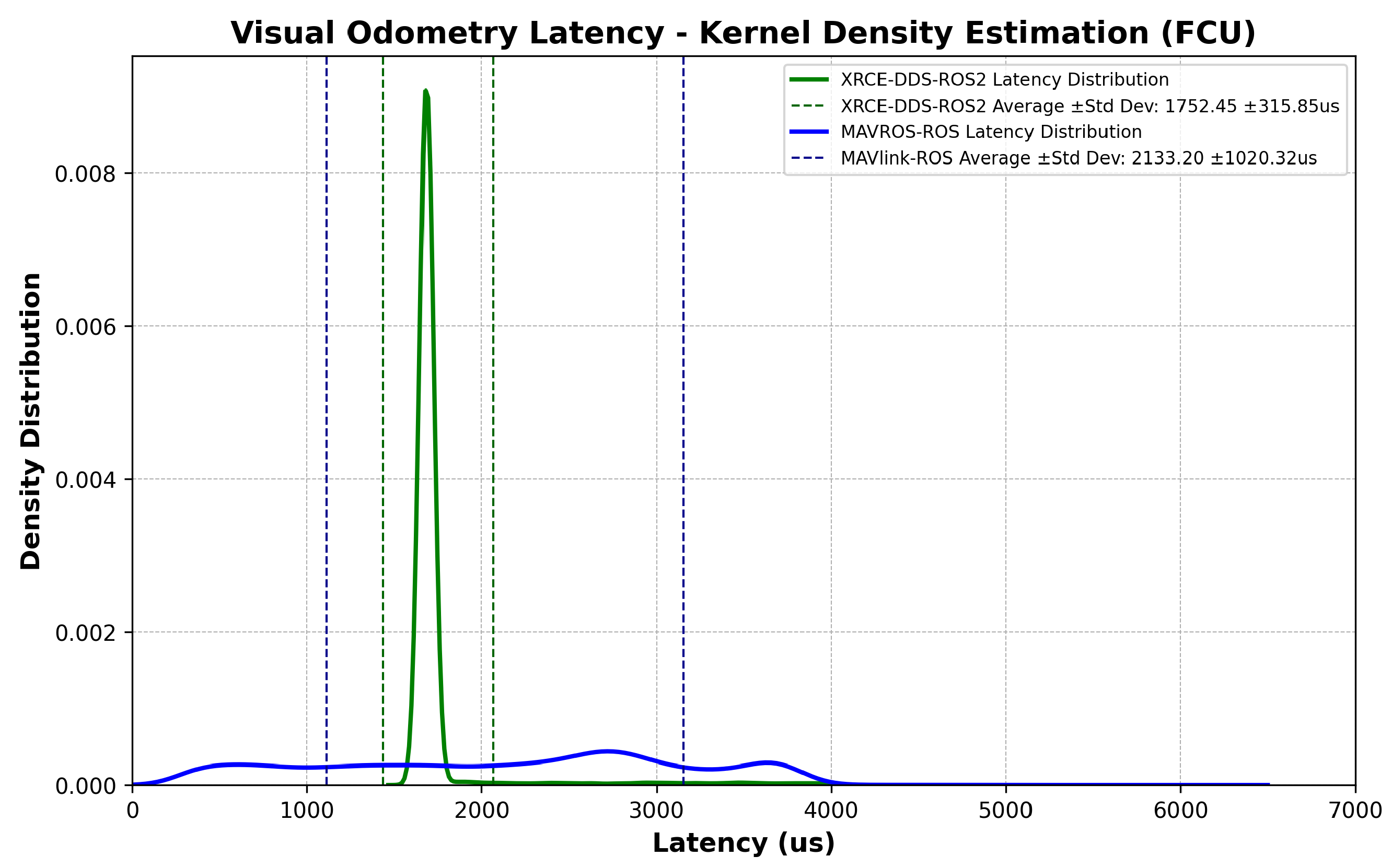

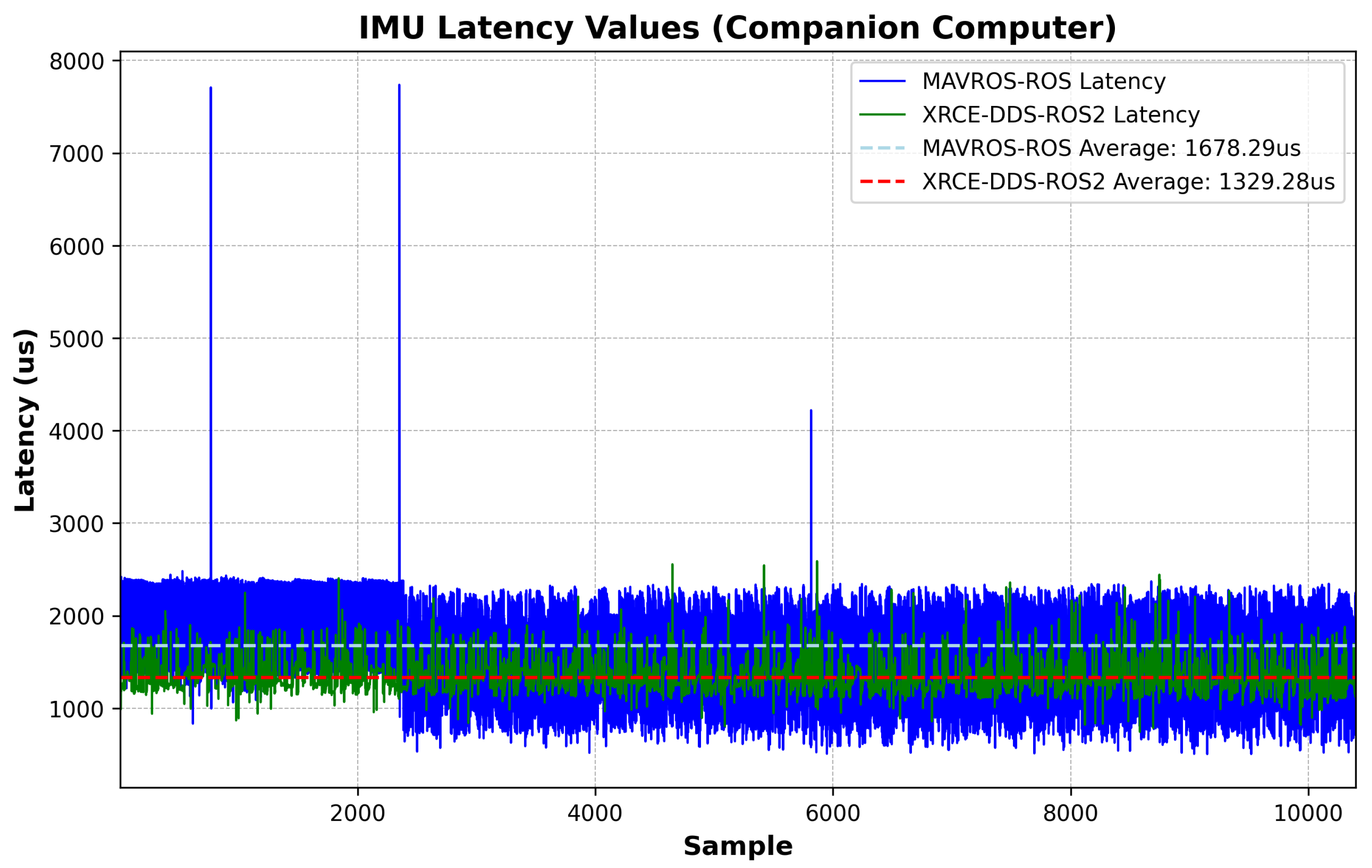

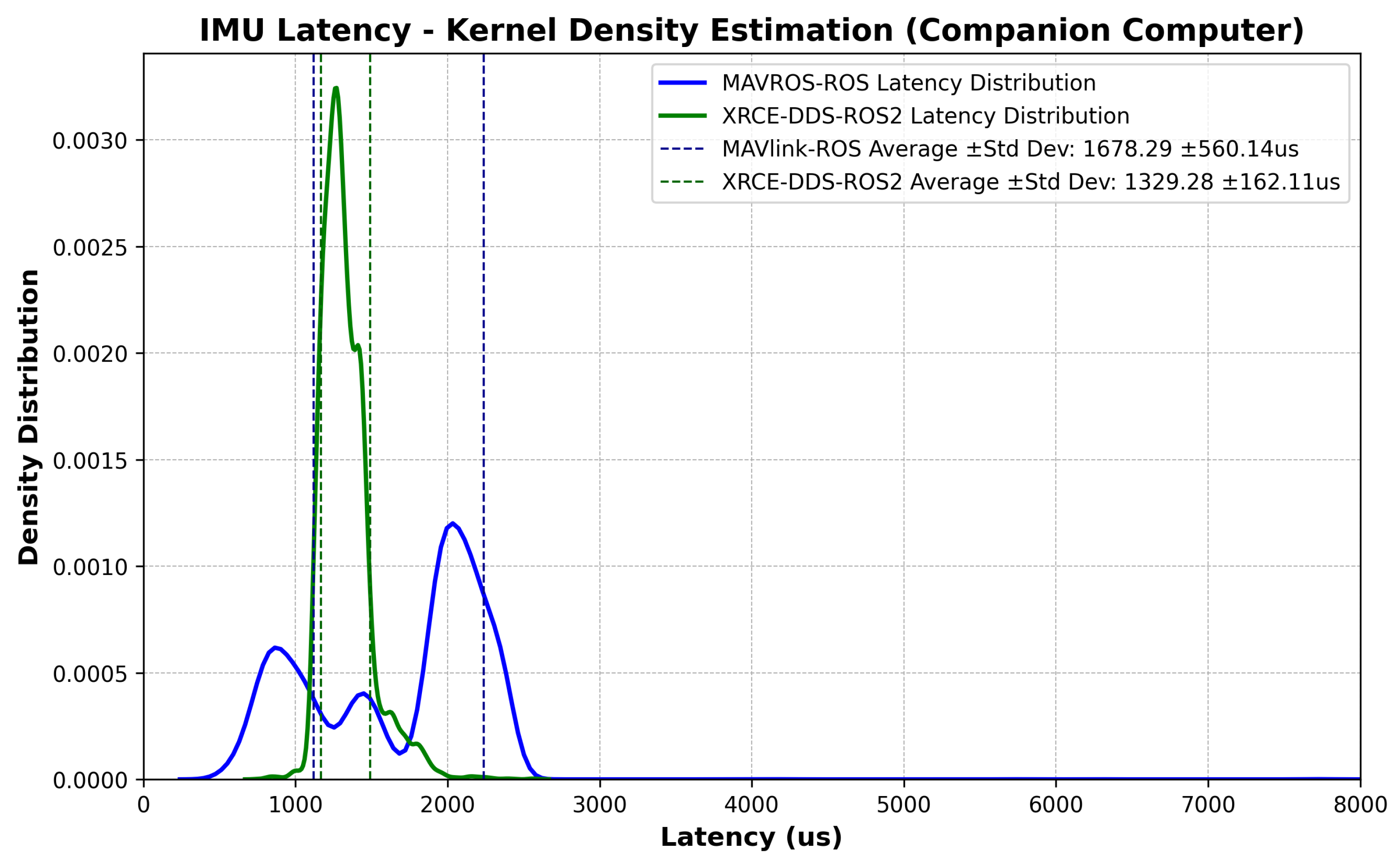

Latency assessment was implemented via hardware-in-the-loop simulation, and consisted of measuring the time offset between transmitted and received messages on both the FCU side and a high-frequency ROS or ROS 2 node. The forward path consisted of messages sent from the companion computer, simulating data from an external vision sensor to be fused at the PX4’s Extended Kalman Filter (EKF2); latencies were collected when the message was parsed into the estimator module in the FCU. The reverse path consisted of messages sent from the FCU to the ROS or ROS 2 node containing raw measurements from the FCU’s IMU. The choice of messages relates to typical GNC applications such as SLAM or Visual Inertia Odometry, in which rapid IMU feedback and fast state estimate transmission are crucial for mission performance. The messages were custom-modified to carry the same number of 115 bytes.

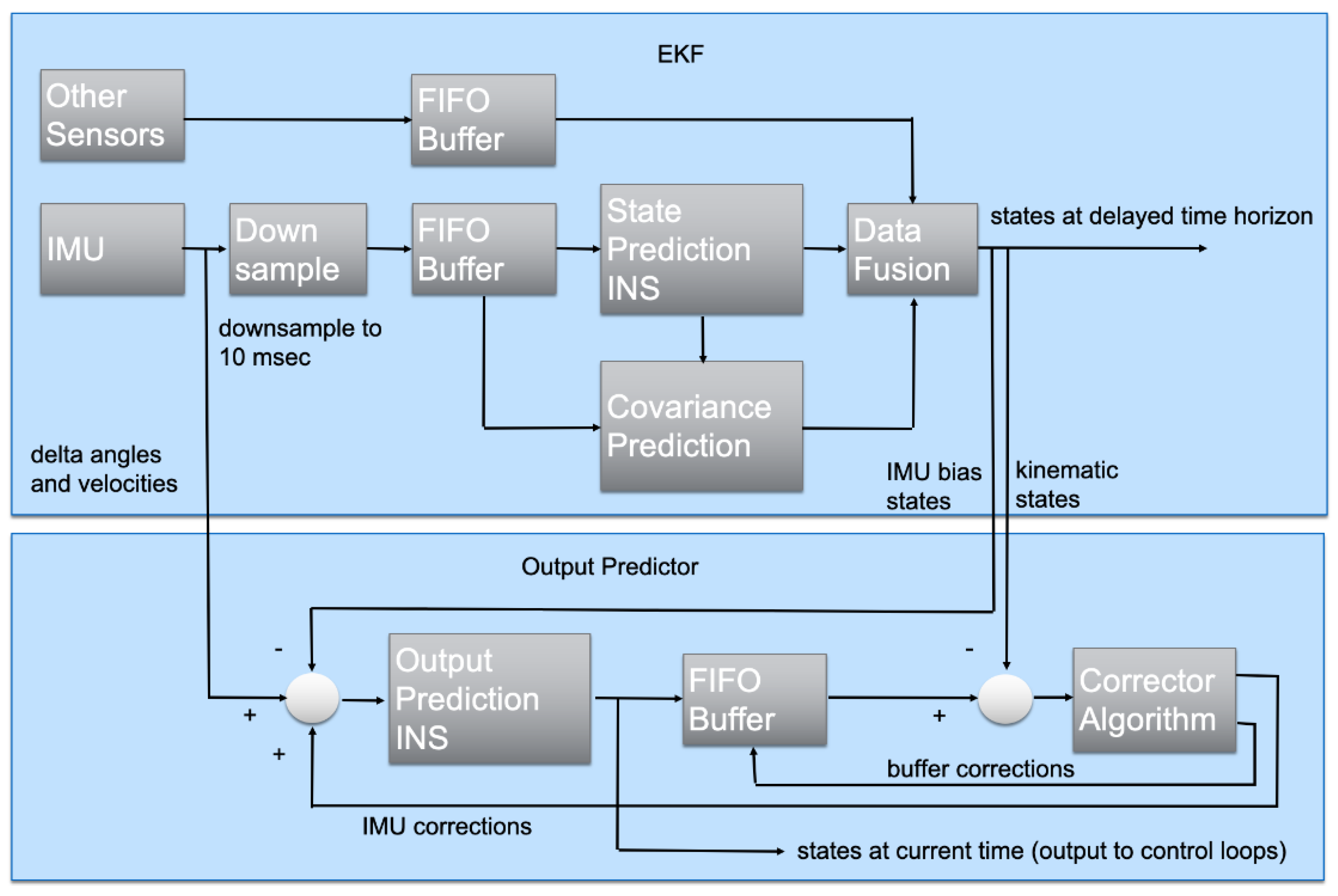

Figure 2 outlines the PX4’s EKF2 algorithm [

18]. The top block shows the main estimator, which uses a ring buffer to account for different sensor sampling frequencies and predict the states in a delayed horizon. The second block is the output predictor, which uses corrected high-frequency IMU measurements for quick state prediction and UAV rate control. Pseudocode and diagrams outlining communications between higher-level and lower-level processors are presented for both scenarios in Algorithms 1 and 2, and in

Figure 3 and

Figure 4.

| Algorithm 1 Latency Test Node in rospy (ROS) |

Class LatencyTest: Initialize variables Initialize publisher and subscriber Initialize timer function Initialize variables empty deque of max length 2 function Initialize publisher and subscriber Subscribe to ’mavros/imu/data_raw’ Publish to ’mavros/vision_pose/pose’ function Initialize timer Set timer frequency to 200 Hz function Sensor callback(msg) Calculate Obtain Update and counters based on and Write data to log.txt function Update offset and counters(rtt, imu_time_offset_observed) if 10,000 then Update , and using else function Cmdloop callback(event) Create and publish Append to function reset filter

|

| Algorithm 2 Latency Test Node in RCLPY (ROS 2) |

Class LatencyTest extends Node: Initialize variables Initialize publisher and subscriber Initialize timer function Initialize variables empty deque of max length 2 function Initialize publisher and subscriber Create a subscription to ‘/fmu/out/vehicle_imu’ Create a publisher to ‘/fmu/in/vehicle_visual_odometry’ function Initialize timer Set timer frequency to 200 Hz function Sensor callback(msg) Calculate Calculate Update and counters based on and Write data to “time_offsets.txt” function Update offset and counters(rtt, imu_time_offset_observed) if 10,000 then Update , and using else function Cmdloop callback Create and publish Append to function reset filter

|

The ROS messages in

Table 1 directly translate into the uORB messages shown in

Table 2. The red and blue lines in

Figure 3 and

Figure 4 show the message flow (IMU and VIO) and modules involved. Interpreting the time offset results requires understanding how the FCU firmware and the ROS/ROS 2 nodes estimate the time offset in each message, including how both lower-level and higher-level system time bases can be aligned and how the communication delay is quantified. The time offset is defined as the difference between two clock readings:

where

is the time when the packet containing the uORB or MAVlink messages was created, that is, the time when information originated from the ROS/ROS 2 node or FCU was serialized and sent,

is the time when the message was received and sent back from the remote level (either the higher-level or lower-level system layer), and

is the current system time. Clock skew is defined as the difference in the register update rate (loop rate) at both the FCU and companion computer. The offset and clock skew are estimated using an exponential moving average filter, as described in [

19,

20]:

where

and

are the filter gains for the offset and skew, respectively. To check convergence of the estimated offset, the message round-trip time is obtained and to determine whether it falls within a maximum threshold:

If Equation (

4) is true, the deviation between the estimated offset and the latest observed offset is compared against a maximum threshold:

If Equation (

5) holds, the statistical quality of

and

is assessed for each estimated offset by counting the number of times the expressions used to determine

and

are called in the code:

If Equations (

4) and (

5) hold while Equation (

6) fails (i.e., the number of calls is smaller than the convergence window), then

and

are corrected by interpolation:

with

and

set as 0.05 and

and

set as 0.003 for convergence of the moving average filter under the tested conditions. The one-way time-synchronized latency is as follows.

The respective bounds of 10 ms and 100 ms in Equations (4) and (5) are specific to the implementation in the PX4 platform, and are MAVlink defaults [

12,

18]. While they can be fine-tuned, this could affect the number of filter resets, in turn impacting estimation of the offset.