From Pulses to Sleep Stages: Towards Optimized Sleep Classification Using Heart-Rate Variability

Abstract

1. Introduction

2. Methods

2.1. Participants

2.2. Materials

2.3. Missing Data Handling and Synchronization

2.4. Model Optimization

2.5. Sleep Parameters

2.6. Model Performance and Statistical Analysis

3. Results

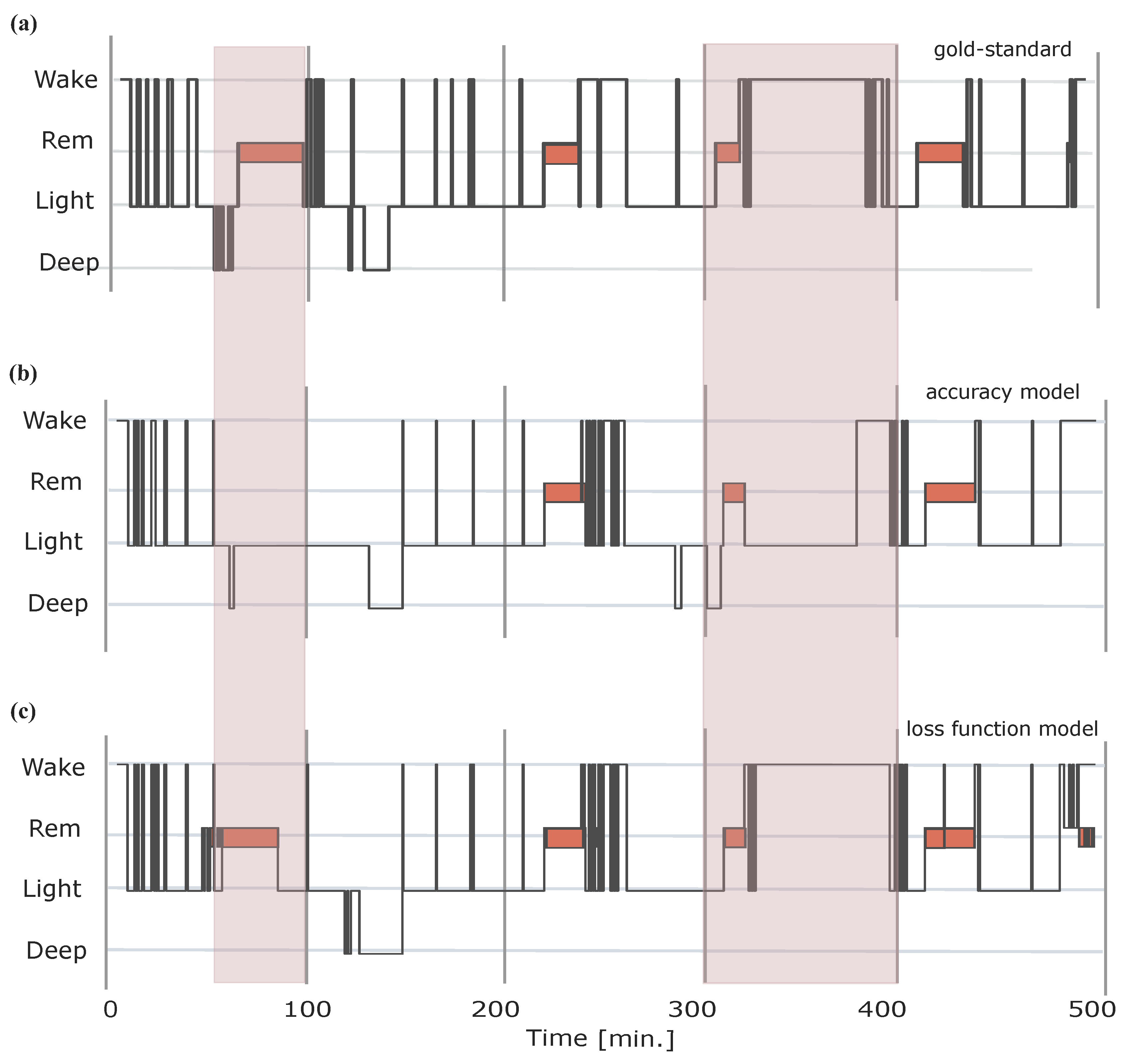

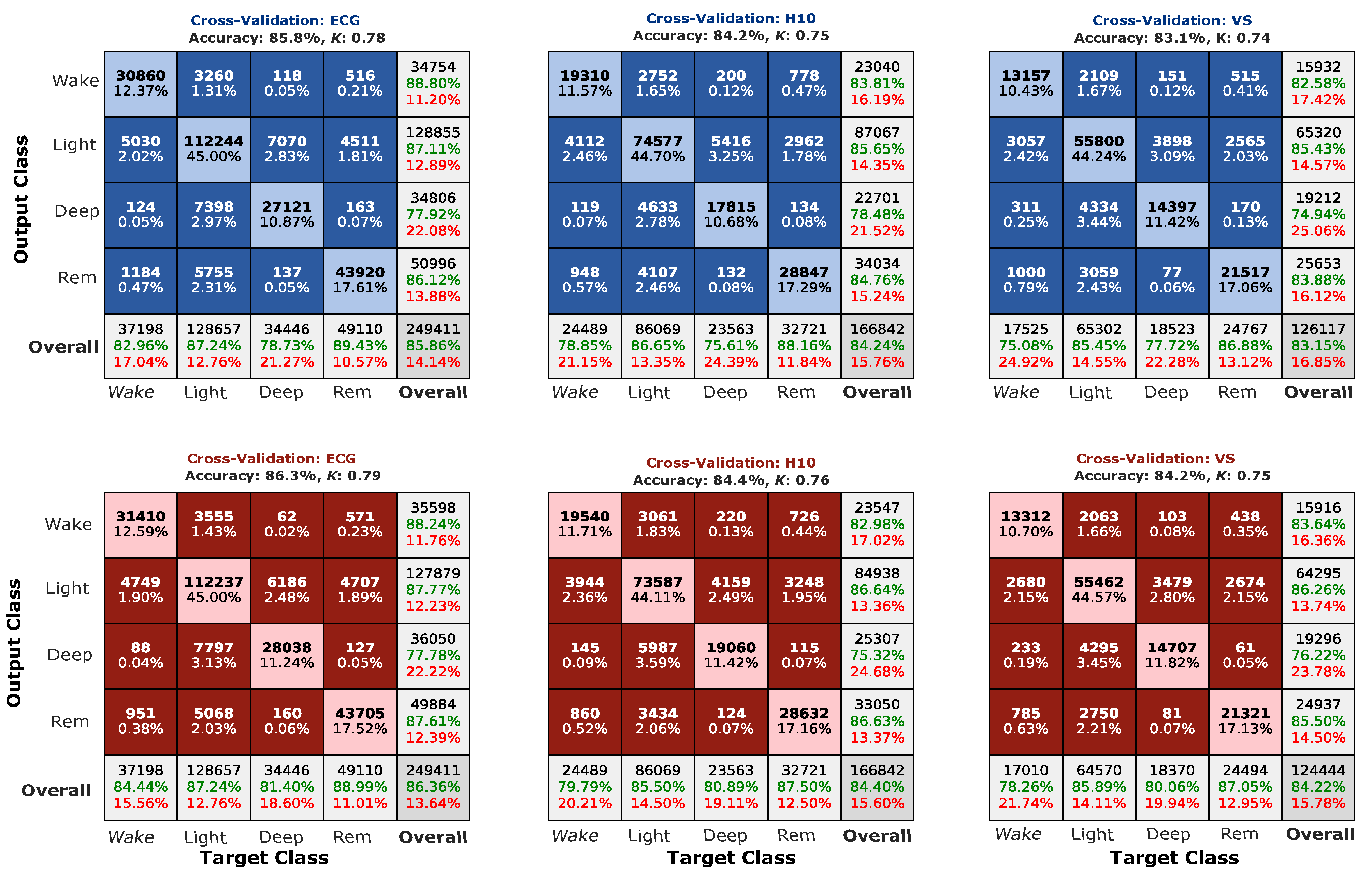

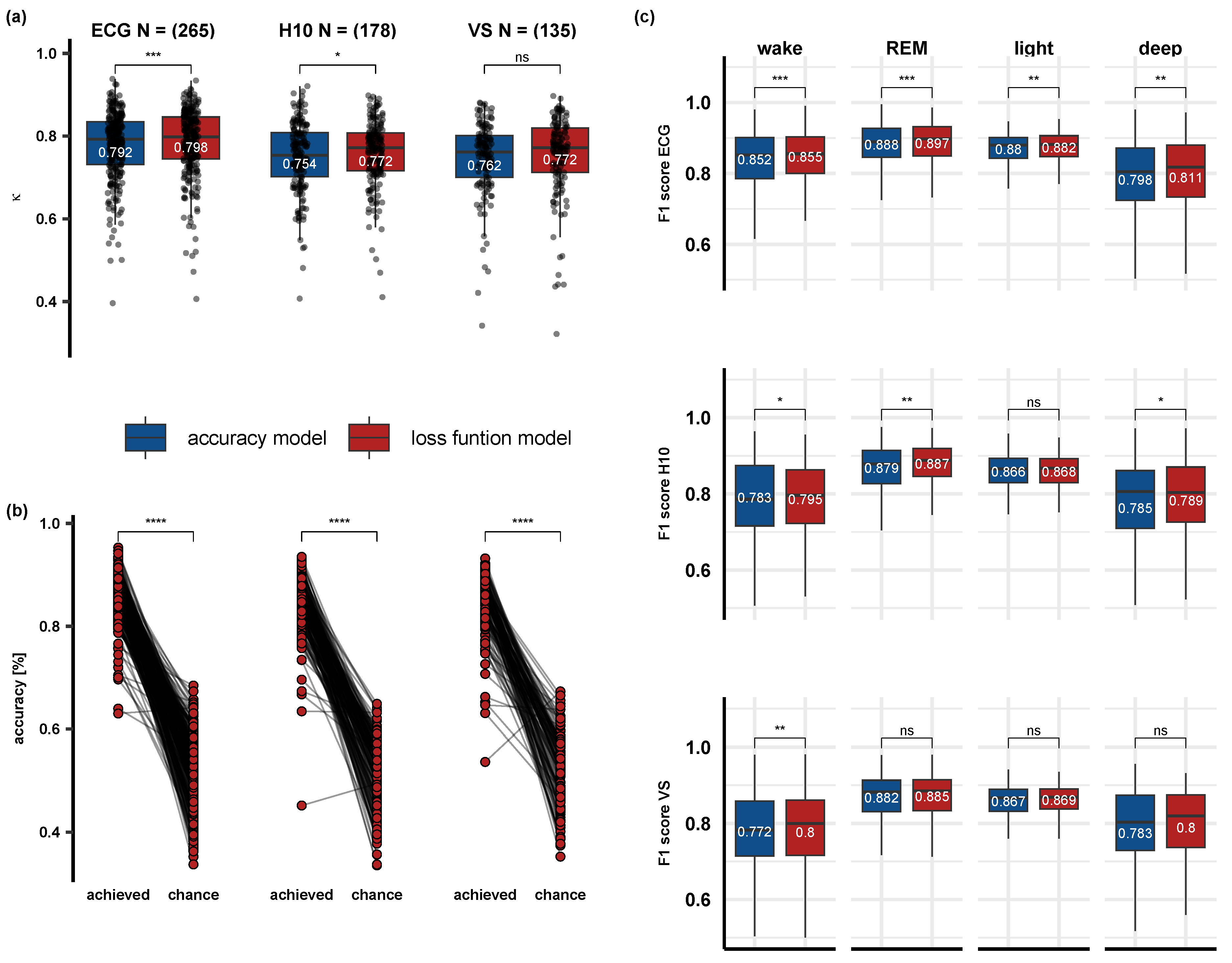

3.1. Comparison of the Accuracy and Loss Function Models and Performance after Optimization

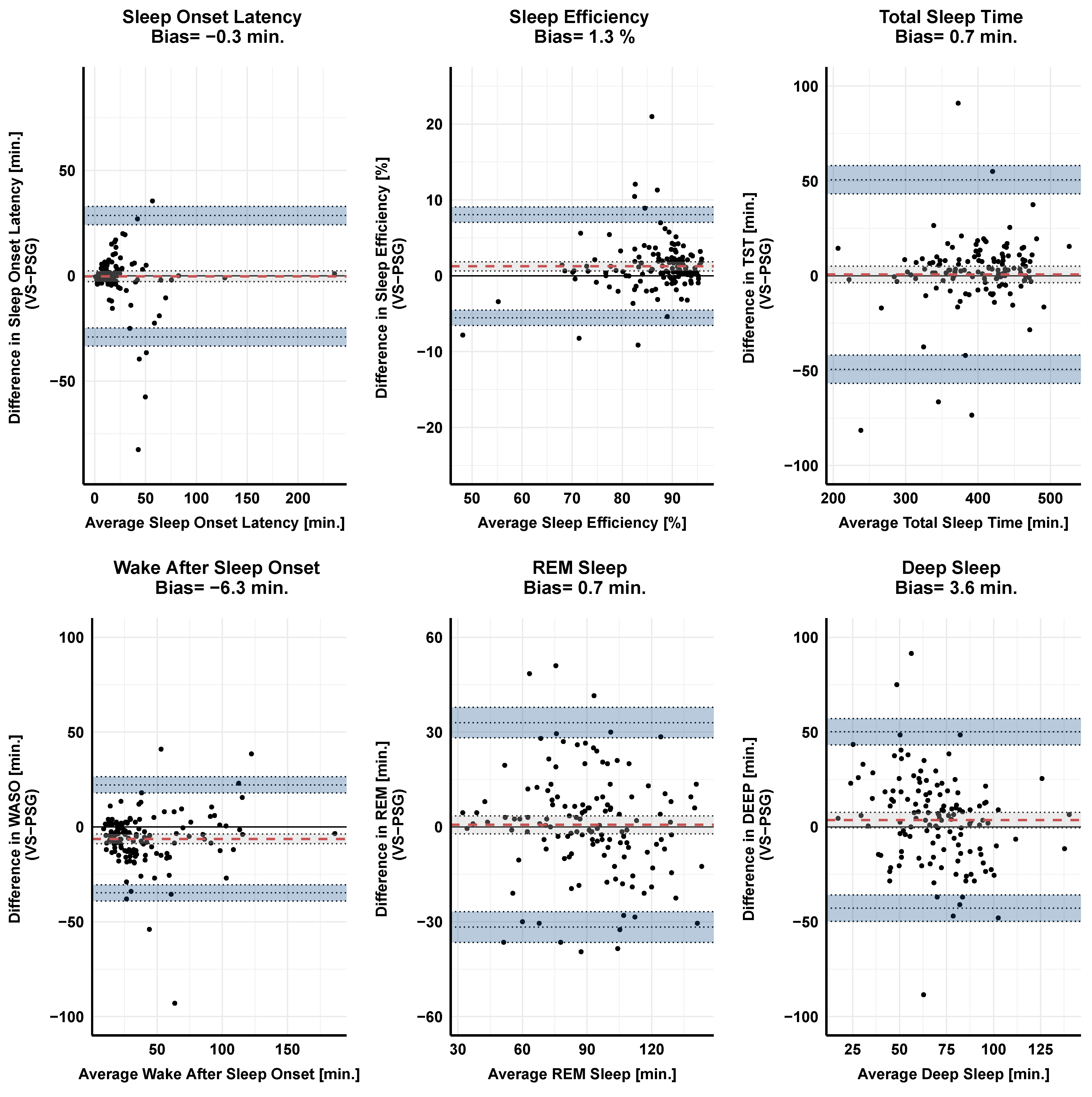

3.2. Correlation and Agreement between the Gold-Standard PSG and the Two Wearable Devices on Primary Sleep Parameters

3.3. Effects of Psychoactive and/or Heart-Affecting Medication on Classification Performance

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| IBI | Inter-Beat-Interval |
| HRV | Heart Rate Variability |
| PSG | Polysomnography |
| ECG | Electrocardiography |


| Parameter | Mean PSG (SD) | Mean H10 (SD) | LOA (upper, lower) | Bias | SME | MDC | ICC |
|---|---|---|---|---|---|---|---|
| SO [min.] | 18.4 (18) | 18.3 (21.6) | 31.8, −31.9 | −0.1 | 1.2 | 3.4 | 0.7 |
| SE [%] | 85.5 (8.8) | 86.3 (8.8) | 10, −8.5 | 0.8 | 0.4 | 1.0 | 0.9 |
| TST [min.] | 400.4 (59) | 402.5 (60.6) | 49.2, −45 | 2.1 | 1.8 | 5.0 | 0.9 |
| WASO [min.] | 51.3 (42.3) | 47.4 (40.9) | 25.1, −32.9 | −3.9 | 1.1 | 3.1 | 0.9 |
| REM [min.] | 92.2 (27.9) | 92.9 (26.7) | 34.9, −33.5 | 0.7 | 1.3 | 3.6 | 0.8 |
| Deep [min.] | 66.6 (28.3) | 71.4 (23.4) | 48.3, −38.8 | 4.8 | 1.7 | 4.6 | 0.6 |

| Parameter | Mean PSG (SD) | Mean VS (SD) | LOA (upper, lower) | Bias | SME | MDC | ICC |
|---|---|---|---|---|---|---|---|
| SO [min.] | 22 (27.8) | 21.8 (27.3) | 28.6, −29.1 | −0.3 | 1.3 | 3.5 | 0.9 |
| SE [%] | 86 (7.8) | 87.3 (8.3) | 8.1, −5.5 | 1.3 | 0.3 | 0.8 | 0.9 |
| TST [min.] | 397.6 (56) | 398.3 (62) | 50.6, −49.3 | 0.7 | 2.2 | 6.1 | 0.9 |
| WASO [min.] | 43.9 (30.5) | 37.6 (32) | 22.2, −34.8 | −6.3 | 1.3 | 3.5 | 0.9 |
| REM [min.] | 90.7 (26.6) | 91.4 (24.5) | 33, −31.7 | 0.7 | 1.4 | 4.0 | 0.8 |
| Deep [min.] | 67.4 (27.3) | 71.1 (21.6) | 50.2, −42.9 | 3.6 | 2.1 | 5.7 | 0.5 |
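The agreement columns in the tables above (Bias, LOA, MDC) can be illustrated with a minimal Python sketch. The formulas are not stated in this excerpt, so the sketch assumes the conventional Bland-Altman definitions (bias as the mean device-minus-PSG difference, LOA as bias ± 1.96 SD of the differences) and the common relation MDC = 1.96 × √2 × SME. The function names and sample values are hypothetical and are not the authors' code or data.

```python
import math
import statistics


def agreement_stats(reference, device):
    """Bias and 95% limits of agreement for paired measurements.

    Assumes the conventional Bland-Altman definitions; differences are
    taken as device minus reference (e.g. wearable minus PSG).
    """
    if len(reference) != len(device) or len(reference) < 2:
        raise ValueError("need two equally long series with at least 2 pairs")
    diffs = [d - r for r, d in zip(reference, device)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation of the differences
    return {
        "bias": bias,
        "loa_upper": bias + 1.96 * sd,
        "loa_lower": bias - 1.96 * sd,
    }


def minimal_detectable_change(sme):
    """MDC at the 95% level from a standard measurement error (assumed formula)."""
    return 1.96 * math.sqrt(2) * sme


# Hypothetical total-sleep-time values in minutes, for illustration only.
psg_tst = [400, 420, 380, 410]
wearable_tst = [402, 421, 383, 410]
stats = agreement_stats(psg_tst, wearable_tst)
mdc = minimal_detectable_change(1.8)
```

Under the assumed relation, an SME of 1.8 gives an MDC of roughly 5.0, which is consistent with the TST row of the H10 table, although the rounding used in the tables is unknown.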

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Topalidis, P.I.; Baron, S.; Heib, D.P.J.; Eigl, E.-S.; Hinterberger, A.; Schabus, M. From Pulses to Sleep Stages: Towards Optimized Sleep Classification Using Heart-Rate Variability. Sensors 2023, 23, 9077. https://doi.org/10.3390/s23229077
